AI in B2B: Why 2026 Is When the Real Transformation Begins

Introduction: The False Start Is Over

You've heard it before. AI is transforming B2B. AI will replace your entire sales team. AI will revolutionize customer support. AI will do everything.

Your LinkedIn feed has been screaming about it for two years. Every vendor slapped "AI-powered" on their homepage and called it innovation. You probably bought an AI SDR tool in early 2024, watched it send embarrassing emails to your best prospects, and turned it off after two weeks.

I get it. You're skeptical. You should be.

But here's the thing that most people miss: we're actually just now getting to the real starting line. The foundational technology didn't actually work at production scale until early 2025. Not before. Not in 2024. Not in 2023. The models simply weren't good enough.

So why am I confident that 2026 is different?

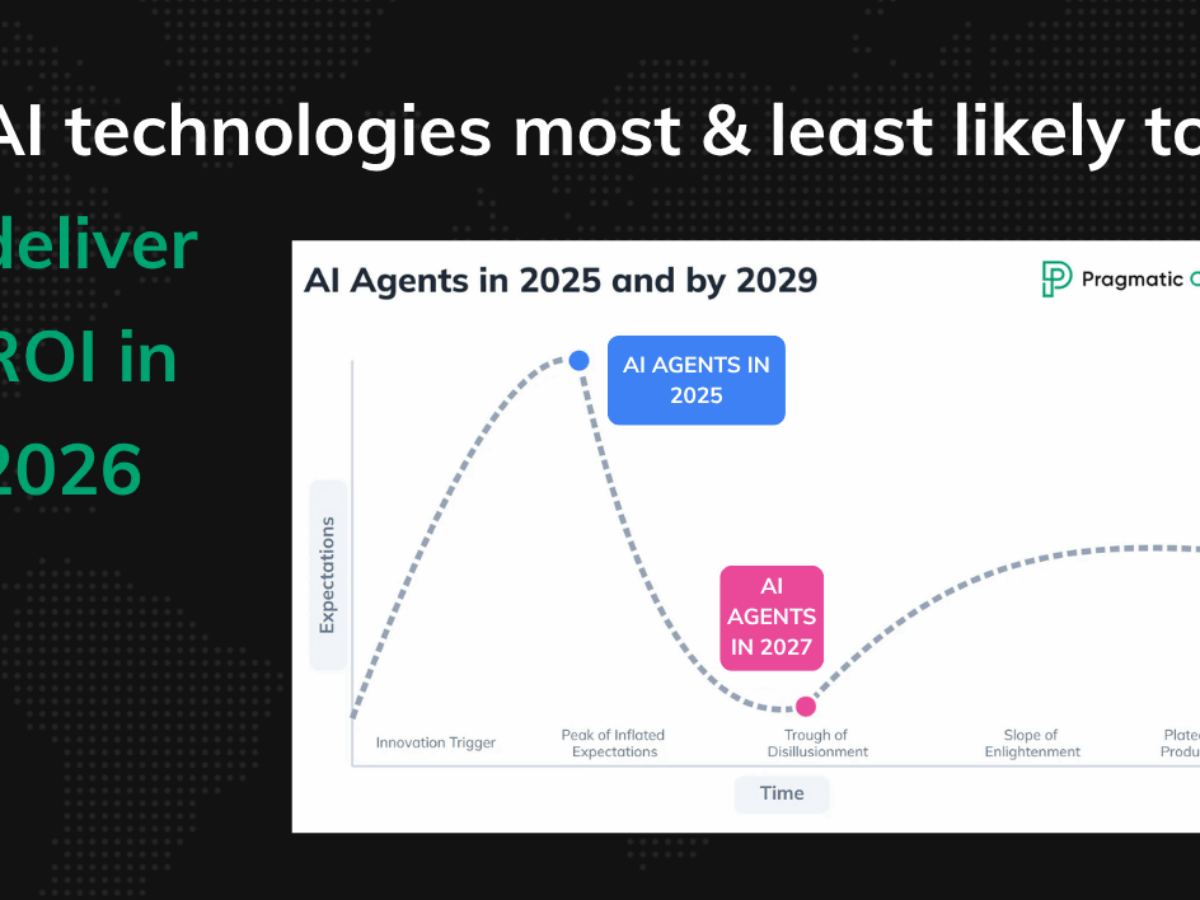

Because we just went through four sequential capability unlocks in the past twelve months. Each one built on the previous one. And we're now at the stage where AI can manage other AIs, orchestrating complex workflows without human intervention. That's not hype. That's a genuine inflection point.

The real transformation in B2B AI isn't coming. It's starting right now. And if you're not paying attention, you're going to miss the biggest productivity opportunity since cloud computing.

Let me walk you through exactly what changed, when it changed, and why 2026 is when things get real.

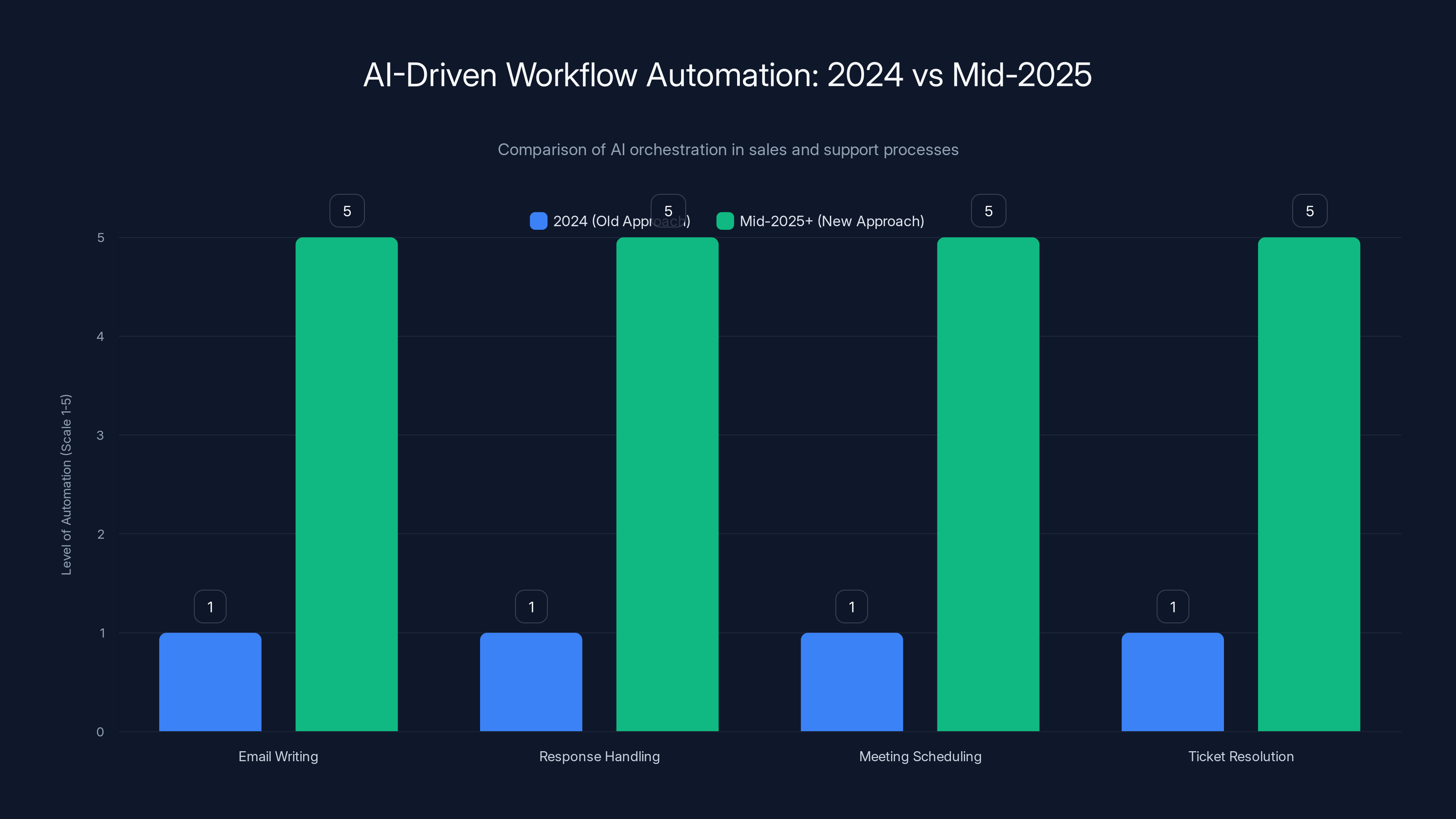

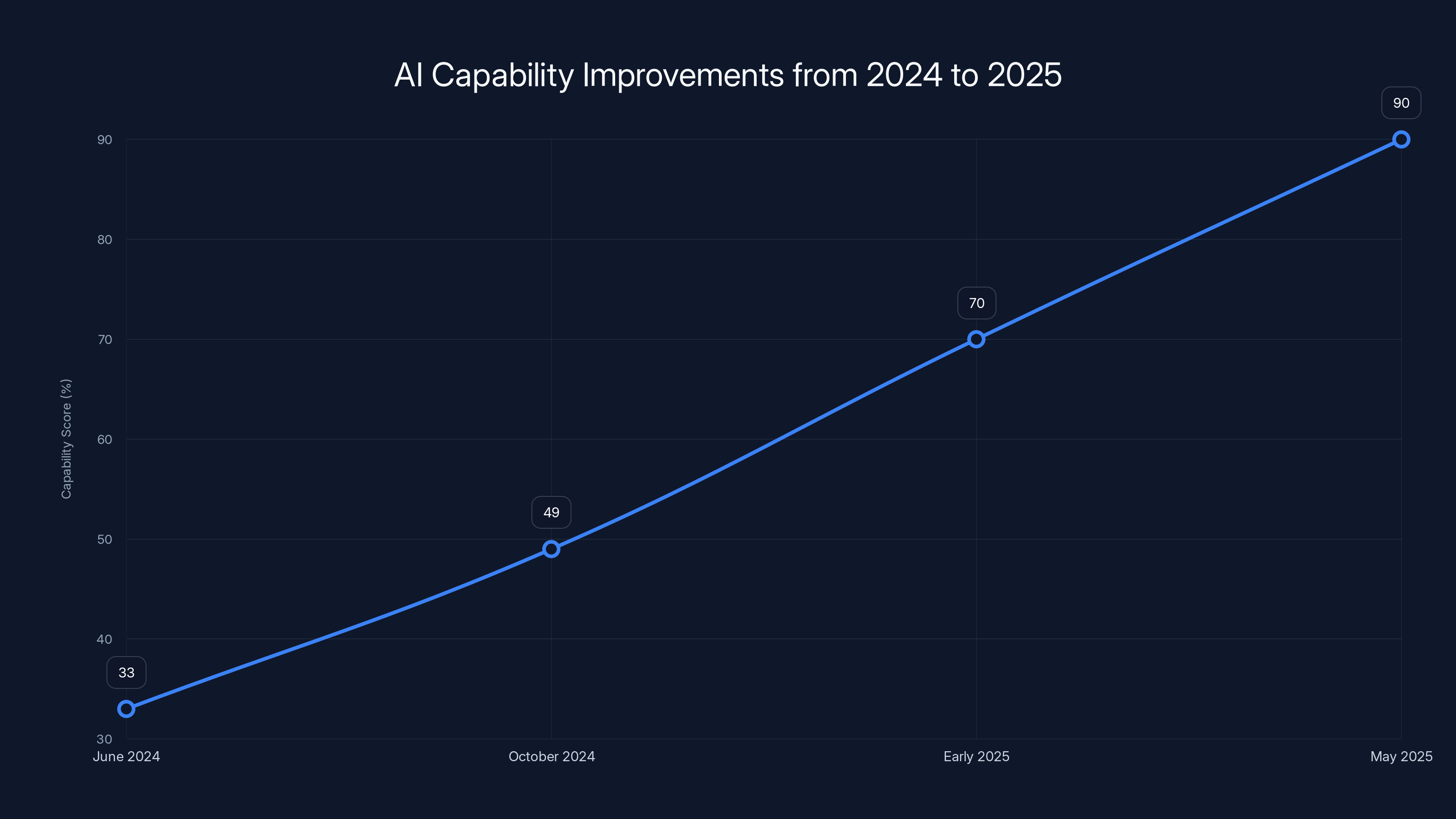

The shift from 2024 to mid-2025 marks a significant increase in AI-driven automation, transforming task-based assistance into full process automation. Estimated data.

TL;DR

- Four capability unlocks happened between June 2024 and May 2025 that finally made production-grade AI work in B2B

- Agentic orchestration (AIs managing other AIs) represents the real breakthrough, not single-task AI assistants

- 2024 was the false start: models weren't good enough, companies tried and abandoned AI tools, hype exceeded reality

- Early 2025 was the inflection: Claude 3.5 Sonnet, GPT-4o, and other models hit capability thresholds that actually worked

- 2026 will be transformation year: when most companies have working AI agents across sales, support, marketing, and operations

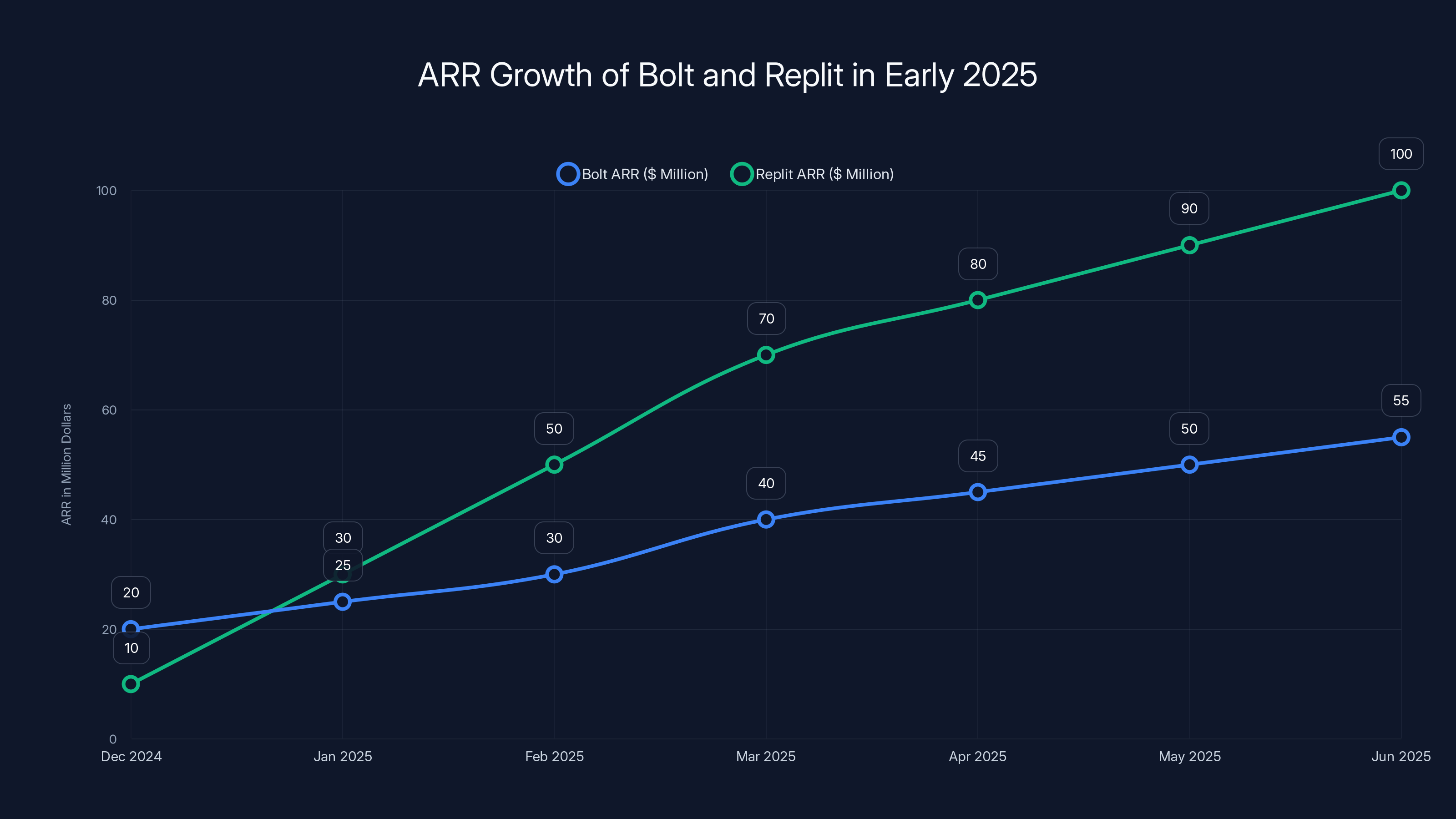

Bolt and Replit saw significant ARR growth in early 2025, with Bolt doubling and Replit increasing tenfold within six months. Estimated data based on reported figures.

Why 2024 Was the False Start (And Why That Matters)

Let's be honest about what happened in 2024. It was a year of ambitious launches and frustrated teams.

Every company working on AI SDRs, AI coding tools, and AI support systems ran into the same wall: the models simply weren't capable enough yet. Not even close. An AI that can write a mediocre email is not the same as an AI that can write a personalized, compelling, contextually appropriate email that actually gets responses. The gap between those two things is enormous.

I've talked to founders who launched AI SDR products in early 2024 with genuine excitement. By mid-year, they were seeing adoption fall off a cliff. Customers loved the idea. They hated the output. The emails were generic. The prospect research was shallow. The follow-up logic was predictable and obvious. The conversion rates were not there.

Why? Because the underlying models had hit a capability ceiling. They could generate plausible text, but they couldn't reason about context. They could pull data from APIs, but they couldn't synthesize it into actionable insights. They could follow rules, but they couldn't adapt to novel situations.

This wasn't a marketing problem. This wasn't a UX problem. This was a raw capability problem.

But here's what's important: everyone learned something from this false start. The market learned what AI actually needs to be useful at production scale. Product teams learned what features matter and what's just theater. Enterprise buyers learned to ask harder questions instead of being dazzled by hype.

That foundation of skepticism and hard lessons? That's actually what makes 2026 real. The companies that build on top of 2025's genuine breakthroughs won't have the hype problem. They'll have the evidence problem instead. They'll have production data proving it works.

Stage One: Claude 3.5 Sonnet (June 2024) - The First Real Unlock

On June 20, 2024, Anthropic released Claude 3.5 Sonnet. This wasn't a minor iteration. This was the moment when AI coding became theoretically possible for the first time.

I say "theoretically" because it didn't immediately solve everything. But it was the first time a model could write code that actually worked with some consistency.

The story of Bolt.new illustrates this perfectly. They had tried building an AI app builder in January 2024. They used the state-of-the-art models available at the time. It didn't work. The models simply couldn't generate coherent, runnable code at scale. By spring, they were weeks away from shutting the entire project down.

Then Claude 3.5 Sonnet dropped. The CEO described the improvement in agentic coding skills as "leaps-and-bounds better." Not incrementally better. Not marginally better. Fundamentally, qualitatively better. They immediately hard-pivoted back to their prototype. By July 1st, they had a product again.

This matters because it shows the difference between incremental model improvements and capability thresholds. You can improve a model by 10% and get nothing. You improve it by the right 10% and suddenly you unlock entire product categories that weren't viable before.

But even this unlock was limited. Claude 3.5 Sonnet could write code. It couldn't consistently reason about complex architecture. It couldn't manage multi-step projects. It was good at tasks. It wasn't good at processes.

That limitation mattered. Because code generation by itself is useful, but it's not transformative. What makes it transformative is when the AI can manage the entire process: breaking down requirements, generating code, testing it, fixing failures, and iterating. That was still not possible in June 2024.

The AI capability score improved significantly from June 2024 to May 2025, with major upgrades in model accuracy and agentic reasoning. Estimated data.

Stage Two: The October 2024 Upgrade - When "Actually Works" Became Real

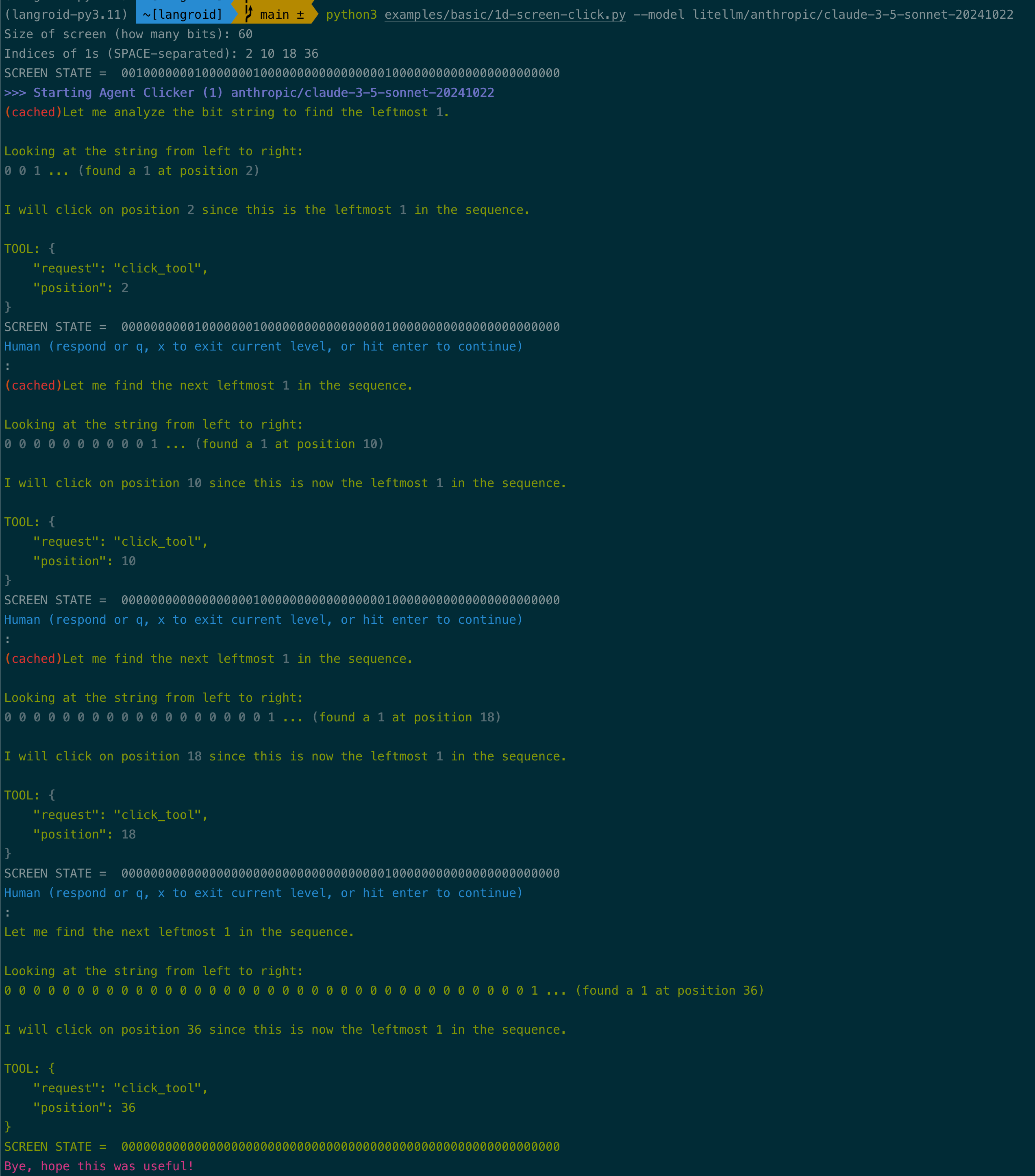

If June 2024 was "theoretically possible," October 22, 2024 was "actually works."

Anthropic shipped a major upgrade to Claude 3.5 Sonnet that month. This wasn't a new model. This was a significant improvement to an existing model. And the improvements were massive.

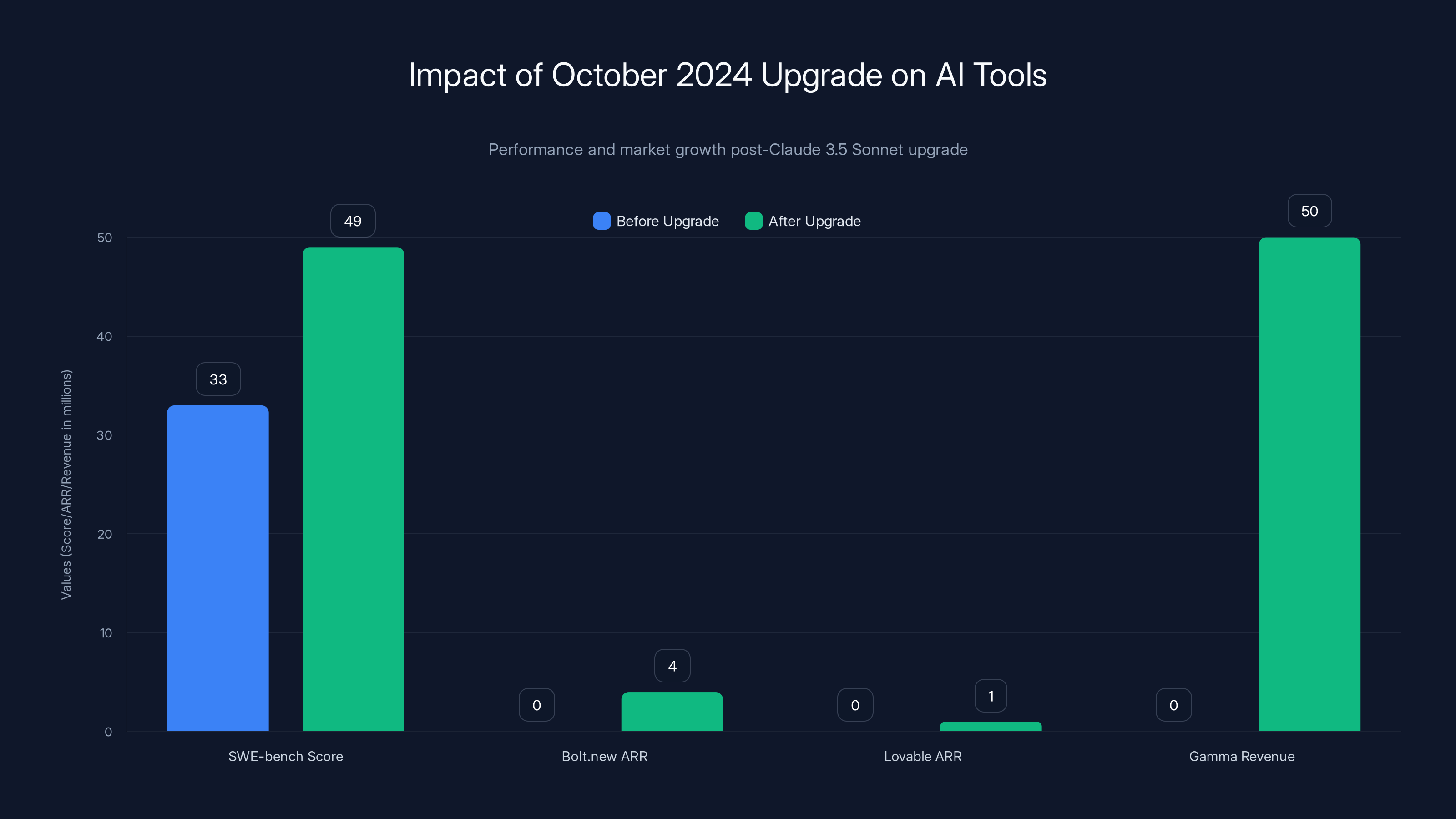

SWE-bench Verified scores jumped from 33% to 49%. That's a 16-point gain, or roughly a 48% relative improvement. Tool use improved dramatically. Computer use launched in beta, allowing the AI to interact with systems the way humans do. This wasn't just incremental progress. This was crossing a threshold.
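If you want to check the arithmetic behind that relative-improvement figure, here is a minimal sketch. It uses the rounded scores quoted above; the function name is my own, not from any benchmark tooling.

```python
def relative_improvement(before: float, after: float) -> float:
    """Return the relative change from `before` to `after` as a fraction."""
    if before <= 0:
        raise ValueError("before must be positive")
    return (after - before) / before

# Rounded scores quoted in the text, in percent.
old_score, new_score = 33.0, 49.0

absolute_gain = new_score - old_score  # percentage points
relative_gain = relative_improvement(old_score, new_score)

print(f"absolute gain: {absolute_gain:.0f} points")
print(f"relative gain: {relative_gain:.0%}")
```

The distinction matters when you read vendor claims: a 16-point gain and a 48% relative gain describe the same change.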

The market reaction tells you everything. Companies that had shelved AI projects six months earlier suddenly had working prototypes again.

Bolt.new launched their AI app builder on October 3, 2024 (before the major Claude upgrade, but riding the momentum) and went from zero to $4 million ARR in 30 days. Thirty days. That's not a gradual adoption curve. That's hockey stick growth that only happens when you hit product-market fit.

Lovable relaunched their AI coding platform on November 21, 2024 (post-Claude upgrade) and hit $1 million ARR in two weeks. Two weeks. That's not a slow build. That's explosive market validation.

Cursor, which had been building a better IDE for developers, suddenly became the place where developers actually wanted to use AI for daily work. It went viral because the model could finally do what people actually needed.

Replit launched their Agent product in September 2024, powered by Claude 3.5 Sonnet, and it became their main growth driver.

Gamma, the AI presentation tool, became profitable and started its run toward $50 million in annual revenue.

All of this happened because one thing changed: the underlying model crossed a capability threshold.

But even this wasn't the full story. These tools were working for technical users (developers). What about non-technical B2B use cases like sales, support, marketing, and operations? Those require different kinds of reasoning. Those require understanding business context, not just technical context.

Those were still hard. Still uncertain. Still not reliably working at scale.

Stage Three: Early 2025 - The Mainstream Explosion

Here's what's wild: the real explosion didn't happen in October or November 2024. It happened in early 2025.

There was a lag. A notable, observable lag. Why would that be?

Partly it was timing. October and November are always slower in B2B because everyone's focused on year-end priorities, budget decisions, and planning for the next year. The holiday slowdown is real.

But there was something else too: time for the news to spread. Time for people to actually try these tools. Time for the viral loop to kick in. Time for people to believe it actually worked.

In February 2025, Andrej Karpathy (former Tesla AI director) coined the term "vibe coding." He described it as a style of coding where you "fully give in to the vibes, embrace exponentials, and forget that the code even exists." That's how new this all was. The terminology didn't exist yet. The concept was so recent that someone had to invent a catchy name for it.

Bolt went from

Replit went from

Y Combinator reported that 25% of the companies in its Winter 2025 batch had codebases that were 95% AI-generated. Zero companies had this ratio in 2024. That's not incremental progress. That's a new normal.

Why the lag between the October upgrade and the early 2025 explosion? Partly holidays. Partly word of mouth. Partly skepticism wearing off as people got evidence. Partly because the tools needed time to mature from "technically works" to "actually reliable."

But the core reason was simpler: people needed to experience it themselves. One viral thread from a developer or one convincing case study from a founder is worth a thousand marketing claims. The market had to see proof that it actually worked before going all-in.

But even this explosion was primarily in technical domains: coding, design, software development. What about the broader B2B world? Sales, support, marketing, operations?

Those required a different kind of breakthrough.

The October 2024 upgrade of Claude 3.5 Sonnet led to a significant performance boost in AI tools, with SWE-bench scores increasing by 48% and companies like Bolt.new and Lovable experiencing explosive ARR growth. Estimated data for Gamma revenue.

Stage Four: Claude 4 (May 2025) - Production-Grade AI Becomes Real

The real inflection point for broad B2B adoption came on May 22, 2025, when Anthropic released Claude Opus 4 and Claude Sonnet 4.

This wasn't just another model upgrade. This was the jump from "works for prototypes and technical tasks" to "works for enterprise production systems."

Claude Opus 4 could work autonomously for up to seven hours. Seven hours of sustained, unsupervised reasoning on complex tasks. That's the difference between an AI that needs human oversight for every step and an AI that can manage multi-hour projects independently.

It scored 72.5% on SWE-bench Verified. That's high enough that you're no longer manually fixing half the code. By my rough estimate, you're fixing maybe 10-15% of it.

More importantly, it could handle the kinds of tasks that B2B companies actually needed:

- Complex sales workflows that require research, analysis, and contextual reasoning

- Support tickets that need to understand customer history and business context

- Marketing campaigns that need to synthesize data and create variations

- Operations processes that involve multiple steps and exception handling

This is the difference between "AI that assists with tasks" and "AI that runs processes."

And suddenly, AI SDRs weren't embarrassing anymore. AI support agents weren't getting refunded. AI coding tools weren't just for technical projects.

They actually worked.

The Agentic Orchestration Breakthrough - AIs Managing AIs

But the real breakthrough—the one that changes everything for 2026—happened around mid-2025.

For the first time, we could have agents manage sub-agents. One AI breaking down a complex workflow into components, delegating pieces to specialized sub-agents, coordinating results, and handling exceptions.

This is not a technical detail. This is the difference between automating tasks and automating processes.

Previously, you could have an AI do one thing: write emails, answer support questions, generate code, create presentations. You could chain these together with some workflow automation, but you still needed humans to oversee the handoffs, fix mistakes, and handle edge cases.

Now, you can have an AI manage the entire process end-to-end. Autonomously. Without human intervention except at decision points.

Let me make this concrete with real B2B examples.

Sales: The Full Prospecting Workflow

Old approach (2024):

- AI writes an email

- Human reviews it

- AI sends it

- Human reads response

- AI suggests next steps

- Human decides on follow-up

New approach (mid-2025+):

- AI orchestrates the entire prospecting process

- Breaks it down: research → personalization → email writing → sending → response handling → meeting scheduling

- Delegates research to a data-gathering sub-agent

- Delegates email writing to a copywriting sub-agent

- Delegates response analysis to an intent-detection sub-agent

- Delegates meeting scheduling to a calendar-integration sub-agent

- Coordinates all of it. Handles exceptions. Escalates only when necessary.

- Human reviews metrics and makes strategic decisions

The difference isn't marginal. It's transformational. One AI agent running an entire sales development process with specialized sub-agents handling specific functions. That's not assistance. That's automation.
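To make the coordinator pattern concrete, here is a minimal sketch of the control flow described above. Everything in it is an assumption for illustration: the sub-agents are deterministic stubs rather than model calls, and names like `ProspectState` and `coordinate` are hypothetical, not taken from any specific framework.

```python
from dataclasses import dataclass, field
from typing import Callable

@dataclass
class ProspectState:
    """Shared state the coordinator passes between sub-agents."""
    company: str
    notes: dict[str, str] = field(default_factory=dict)
    escalated: bool = False
    log: list[str] = field(default_factory=list)

# Hypothetical sub-agents. In a real system each would wrap a model call;
# here they are stubs so the control flow is easy to follow.
def research_agent(state: ProspectState) -> None:
    state.notes["research"] = f"{state.company} recently expanded its sales team"

def copywriting_agent(state: ProspectState) -> None:
    state.notes["email"] = f"Hi, I saw that {state.notes['research']}."

def intent_agent(state: ProspectState) -> None:
    # Stub: pretend the prospect replied with interest.
    state.notes["intent"] = "interested"

def scheduling_agent(state: ProspectState) -> None:
    if state.notes.get("intent") != "interested":
        raise RuntimeError("no meeting to schedule")
    state.notes["meeting"] = "proposed"

PIPELINE: list[tuple[str, Callable[[ProspectState], None]]] = [
    ("research", research_agent),
    ("copywriting", copywriting_agent),
    ("intent", intent_agent),
    ("scheduling", scheduling_agent),
]

def coordinate(state: ProspectState) -> ProspectState:
    """Run each sub-agent in order; escalate to a human on the first failure."""
    for name, agent in PIPELINE:
        try:
            agent(state)
            state.log.append(f"{name}: ok")
        except Exception as exc:
            state.escalated = True
            state.log.append(f"{name}: escalated ({exc})")
            break
    return state

result = coordinate(ProspectState(company="Acme Corp"))
print(result.log)
print("needs human:", result.escalated)
```

The point of the sketch is the shape, not the stubs: one coordinator owns the sequence and the exception path, so humans only see the cases that get flagged.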

Support: Intelligent Triage and Resolution

Old approach (2024):

- AI reads ticket

- AI suggests category

- Human assigns to team

- AI drafts response

- Human approves

- AI sends response

- Human checks if resolved

New approach (mid-2025+):

- AI orchestrates the entire support workflow

- Breaks it down: triage → routing → resolution → escalation → follow-up → pattern analysis

- Delegates triage to a classification sub-agent

- Delegates routine resolution to a knowledge-base sub-agent

- Delegates complex issues to an escalation sub-agent

- Delegates follow-ups to a continuity sub-agent

- Analyzes patterns to identify systemic issues

- Handles 90% of tickets end-to-end. Escalates the 10% that need human judgment

The impact: support response time drops from hours to minutes. Ticket volume scales without hiring more support staff. Customer satisfaction goes up because problems get solved faster.
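The "handle most tickets, escalate the rest" split usually comes down to a confidence cutoff. Here is a rough sketch under stated assumptions: the classifier is a keyword stub standing in for a model, and the knowledge base and the 0.8 threshold are hypothetical values you would tune against real outcomes.

```python
from dataclasses import dataclass

@dataclass
class Ticket:
    id: int
    text: str

# Hypothetical knowledge base mapping a keyword to a canned resolution.
KNOWLEDGE_BASE = {
    "password": "Sent password reset link.",
    "invoice": "Re-sent the latest invoice.",
}

CONFIDENCE_THRESHOLD = 0.8  # assumed cutoff, not a recommended value

def classify(ticket: Ticket) -> tuple[str, float]:
    """Stub classifier returning (category, confidence).
    A real system would call a model here."""
    text = ticket.text.lower()
    for keyword in KNOWLEDGE_BASE:
        if keyword in text:
            return keyword, 0.95
    return "unknown", 0.30

def handle(ticket: Ticket) -> str:
    """Resolve confidently classified tickets; escalate everything else."""
    category, confidence = classify(ticket)
    if confidence >= CONFIDENCE_THRESHOLD and category in KNOWLEDGE_BASE:
        return f"resolved: {KNOWLEDGE_BASE[category]}"
    return "escalated: needs human judgment"

tickets = [
    Ticket(1, "I forgot my password"),
    Ticket(2, "Please resend my invoice"),
    Ticket(3, "Your API returns inconsistent totals for our EU entity"),
]
outcomes = {t.id: handle(t) for t in tickets}
automated = sum(o.startswith("resolved") for o in outcomes.values())
print(outcomes)
print(f"handled end-to-end: {automated}/{len(tickets)}")
```

Raising the threshold trades automation rate for fewer wrong answers reaching customers, which is the dial most teams end up adjusting during a pilot.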

Marketing: Campaign Management

Old approach (2024):

- AI researches audience

- Human plans campaign

- AI generates copy variations

- Human selects best ones

- AI creates graphics

- Human approves

- Humans monitor and optimize

New approach (mid-2025+):

- AI orchestrates the entire campaign lifecycle

- Breaks it down: research → planning → asset creation → testing → optimization → reporting

- Delegates audience research to a data sub-agent

- Delegates copy generation to a copywriting sub-agent

- Delegates design to a creative sub-agent

- Delegates A/B testing to an experimentation sub-agent

- Delegates optimization to a performance sub-agent

- Automatically runs variations, analyzes results, optimizes allocation

- Reports on performance and recommends next quarter strategy

The human's role shifts from "do the work" to "provide strategy and judgment."

This is why the agentic orchestration breakthrough matters. It's not just about having better models. It's about having the architecture to deploy those models in production B2B workflows without constant human oversight.

And we're only in the first inning of this capability.

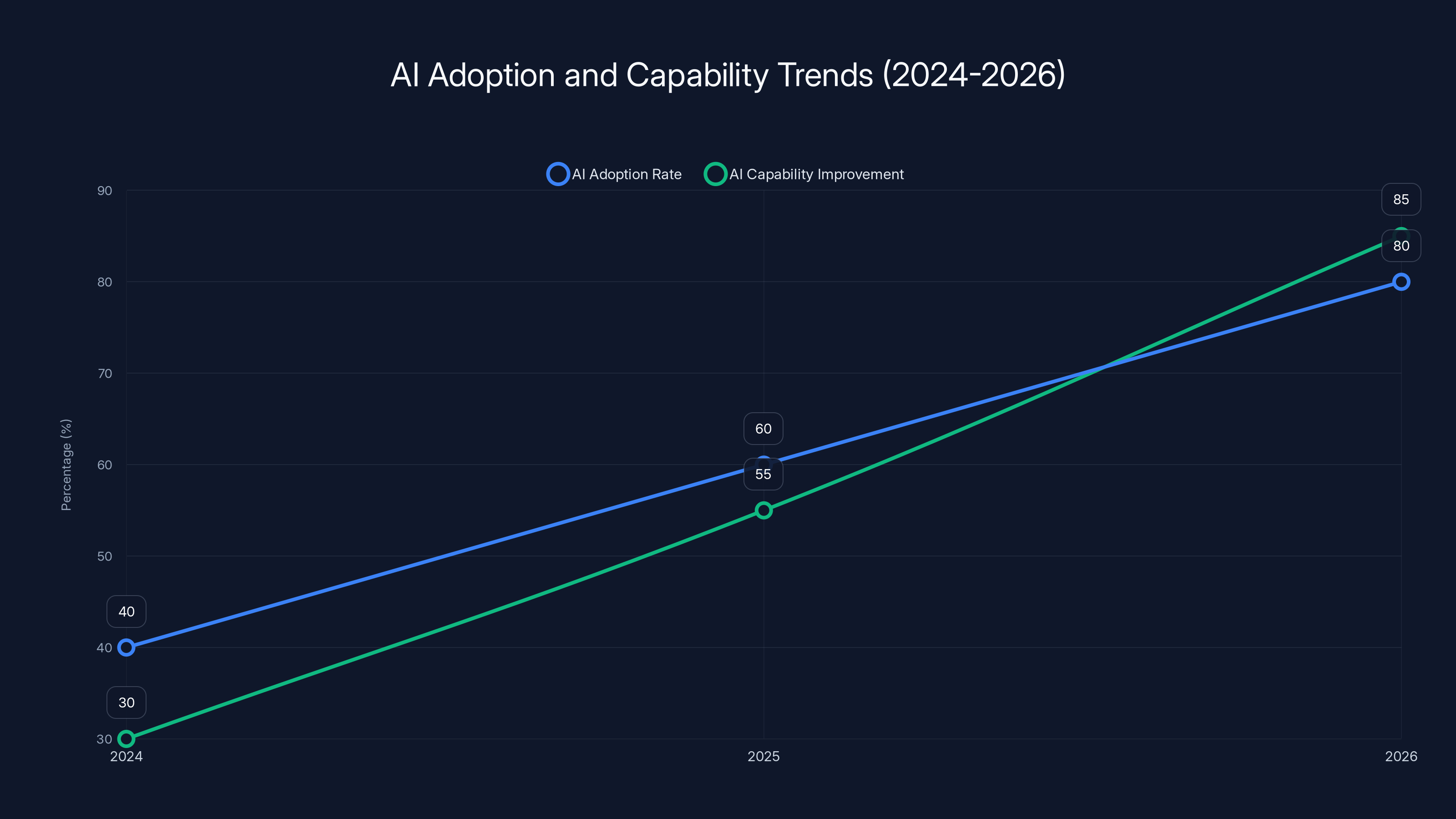

The year 2024 marked a false start for AI, with adoption and capabilities below expectations. However, significant improvements are projected for 2025 and 2026, driven by lessons learned and technological advancements. Estimated data.

What Changed Between 2024 and 2025: The Real Breakthrough

Let me be clear about what actually changed. It's not magic. It's not AGI. It's three concrete things:

1. Raw Model Capability Crossed Critical Thresholds

Models went from "can't consistently generate correct outputs" to "can consistently generate correct outputs." That sounds simple, but the implications are enormous.

When your model is right 60% of the time, you can't use it in production. You'd need to manually validate every output. When your model is right 85% of the time, you still need validation on a random sample. When your model is right 95% of the time, you can deploy to production with confidence.

We crossed those thresholds in late 2024 and early 2025. Not for every task, but for the important ones.
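One way to see why those tiers matter is to turn them into a review policy and count the errors each one lets through. This is an illustrative sketch only: the cutoffs mirror the 60% / 85% / 95% figures above and are not an industry standard, and the function names are my own.

```python
def review_policy(accuracy: float) -> str:
    """Map a measured accuracy (0..1) to a review policy.
    Cutoffs follow the tiers in the text and are illustrative only."""
    if not 0.0 <= accuracy <= 1.0:
        raise ValueError("accuracy must be between 0 and 1")
    if accuracy >= 0.95:
        return "deploy to production"
    if accuracy >= 0.85:
        return "review a random sample"
    return "review every output"

def expected_errors(accuracy: float, volume: int) -> int:
    """Expected number of wrong outputs out of `volume`."""
    return round((1.0 - accuracy) * volume)

for acc in (0.60, 0.85, 0.95):
    print(f"{acc:.0%}: {review_policy(acc)}, "
          f"~{expected_errors(acc, 1000)} errors per 1,000 outputs")
```

Going from 60% to 95% cuts expected errors per 1,000 outputs from about 400 to about 50, which is the difference between a full-time review queue and spot checks.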

2. Tool Use and Agentic Reasoning Became Reliable

Early AI models could call APIs in theory. In practice, they were terrible at it. They'd misformat the request. They'd fail to parse the response. They'd lose context.

By mid-2025, models could reliably call APIs, integrate the responses, and reason about the results. This is what made agentic workflows possible.
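The failure modes above (misformatted requests, unparsed responses) are why production systems validate a tool call before running it. Here is a minimal sketch, assuming the model emits tool calls as JSON with `tool` and `args` keys. That format, the `lookup_account` tool, and the retry loop are all hypothetical, not any vendor's actual API.

```python
import json

# Hypothetical tool with a stubbed response.
def lookup_account(account_id: str) -> dict:
    return {"account_id": account_id, "plan": "enterprise"}

# Tool registry: name -> (required argument names, function).
TOOLS = {"lookup_account": ({"account_id"}, lookup_account)}

def parse_tool_call(raw: str) -> tuple[str, dict]:
    """Validate a model-emitted tool call; raise ValueError if malformed."""
    try:
        call = json.loads(raw)
    except json.JSONDecodeError as exc:
        raise ValueError(f"not valid JSON: {exc}") from exc
    if not isinstance(call, dict):
        raise ValueError("tool call must be a JSON object")
    name, args = call.get("tool"), call.get("args")
    if not isinstance(name, str) or name not in TOOLS:
        raise ValueError(f"unknown tool: {name!r}")
    if not isinstance(args, dict):
        raise ValueError("args must be an object")
    required = TOOLS[name][0]
    if set(args) != required:
        raise ValueError(f"expected arguments {sorted(required)}, got {sorted(args)}")
    return name, args

def run_with_retries(attempts: list[str]) -> dict:
    """Try each candidate output in turn, as if re-prompting after an error."""
    errors = []
    for raw in attempts:
        try:
            name, args = parse_tool_call(raw)
            return TOOLS[name][1](**args)
        except ValueError as exc:
            errors.append(str(exc))
    raise RuntimeError(f"all attempts failed: {errors}")

# Stubbed model outputs: the first omits a required argument, the second is valid.
model_outputs = [
    '{"tool": "lookup_account", "args": {}}',
    '{"tool": "lookup_account", "args": {"account_id": "A-42"}}',
]
result = run_with_retries(model_outputs)
print(result)
```

With weaker models, a loop like this burned through retries constantly. The change described above is that the first attempt now usually passes validation.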

Computer use—the ability to interact with systems the way humans do—went from research project to production feature. That matters because not everything has an API. Sometimes you need to actually use the interface.

3. Infrastructure and Deployment Got Better

It's not just the models. It's the tooling around them. By 2025, you had:

- Better frameworks for building agent systems

- Better ways to manage context windows and memory

- Better safety and monitoring tools

- Better integration with existing B2B systems

- Better cost models that made production deployments economical

A brilliant model doesn't matter if you can't deploy it reliably or you go bankrupt running it.

2026: When AI Moves From Novelty to Default

Here's my prediction for 2026. It's bold, but I think the evidence supports it.

In 2026, AI in B2B stops being optional and becomes the default.

Not because vendors force it on you. Not because hype builds to a crescendo. But because the economics are so compelling that every company that doesn't adopt it is leaving money on the table.

The Sales Organization Gets Rebuilt

By the end of 2026, most software companies will have split their sales organizations into two buckets:

- AI-Driven Sales Development: Prospecting, initial outreach, qualification, meeting scheduling. All managed by AI agents with human oversight. No humans doing repetitive outreach anymore.

- Human-Focused Account Management: Building relationships, complex negotiations, strategic partnerships, executive engagement. All the stuff that requires trust and judgment.

The middle layer—the part that's been growing but not necessarily adding value—either gets absorbed into the AI system or gets eliminated.

Result: sales organizations that are leaner, higher leverage, and higher-quality.

Support Becomes Intelligent and Invisible

In 2026, going to a chatbot for support will be invisible and fast. You describe your problem. It gets solved. Or it doesn't, and you get a human. The AI will have solved it properly the first time often enough that you don't even realize you talked to an AI.

Support ticket volume won't correlate with hiring anymore. You can 5x support coverage without adding support staff.

Marketing Runs on Automation

Good marketers in 2026 won't be executing campaigns. They'll be strategizing and optimizing. Creating the brief. Setting the goals. Choosing the channels. The actual work—research, copy, graphics, testing, optimization—gets handled by AI agents.

The bottleneck moves from "can we do this?" to "what should we do?" And that's a good bottleneck. That's where judgment lives.

Coding Becomes Different

Developers won't disappear. But the job changes. Instead of writing every line, they'll write the architecture and logic. AI agents will handle the implementation. They'll write 80% of the code. Humans will write the 20% that requires creativity or novel reasoning.

Productivity goes up. Tedium goes down. Better code gets written because developers focus on design instead of syntax.

Operations Stop Fighting Chaos

Operations teams will have AI agents handling routine processes. Invoice processing. Vendor management. Expense reporting. Onboarding workflows. All automated. All auditable. All exception-escalating.

Operations stops being a cost center trying to avoid mistakes and becomes a value center designing better processes.

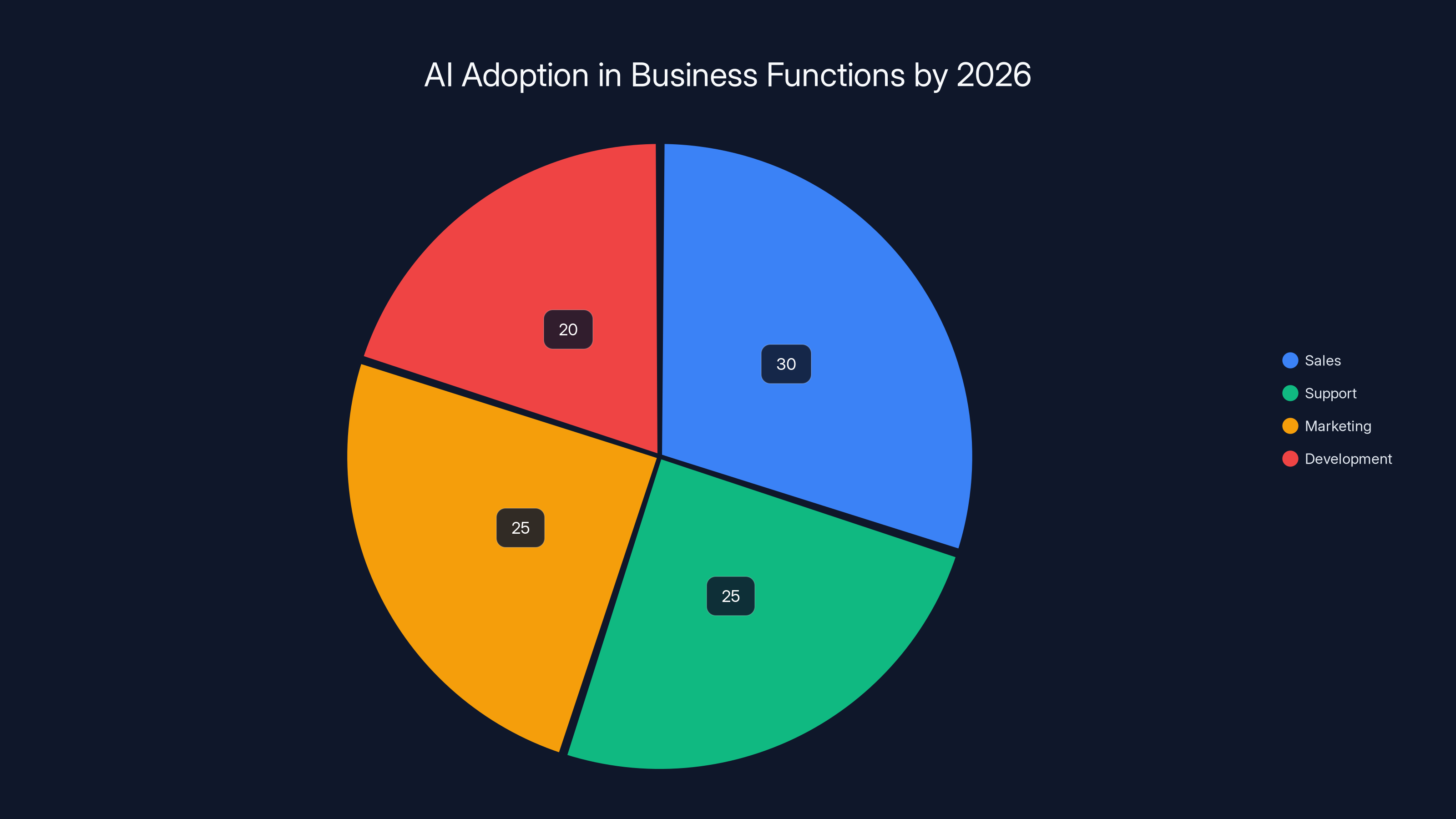

By 2026, AI is expected to be integrated into sales (30%), support (25%), marketing (25%), and development (20%) functions, becoming the default rather than an option. (Estimated data)

Why 2026 Is Different From 2024's False Start

You might be thinking, "Wait, didn't you say people tried this in 2024 and it didn't work?"

Yes. And it didn't.

But 2026 is different because:

1. The Foundation Is Proven

In 2024, companies tried AI tools based on hype. In 2026, they'll adopt based on evidence. We'll have a year of production data showing:

- How much time AI saves

- What quality levels it achieves

- What edge cases require human intervention

- What the actual ROI is

That evidence is powerful. It's harder to dismiss than hype.

2. The Playbook Exists

In 2024, every company was experimenting. In 2026, we'll have clear playbooks for implementation:

- Which workflow to automate first

- How to integrate with existing systems

- How to monitor quality

- How to handle edge cases

- How to measure impact

The first companies to do this will have documented what works. Others will copy.

3. The Skepticism Is Gone

In 2024, skepticism was reasonable. The tools didn't work. By 2026, the skepticism flips: you'll have to be skeptical of companies that claim AI doesn't apply to their business. The evidence will be overwhelming.

4. The Economics Are Undeniable

In 2024, cost per unit of AI-generated work was still high. By 2026, the cost will have dropped so low that not using AI becomes a competitive disadvantage.

If your competitor can process customer support tickets at

The Honest Challenges That 2026 Will Face

I don't want to oversell this. 2026 will bring real challenges that companies need to prepare for.

Integration Complexity

Your B2B stack is a mess. You've got Salesforce, Slack, HubSpot, Zapier, custom tools, legacy systems. Getting AI agents to work across all of this will require serious engineering.

Some companies will nail this. Others will struggle. This is an opportunity for platforms that can abstract away integration complexity.

Data Quality and Bias

AI agents are only as good as the data they learn from, whether that's training data or the historical records you give them as context. If your historical data is biased (you're disproportionately ignoring certain types of prospects or customers), your AI agent will amplify that bias.

2026 will bring the first real wave of companies discovering that their "objective" AI system is actually amplifying bad decision patterns from their past.

Compliance and Legal

If an AI agent makes a mistake that harms a customer or exposes data, who's responsible? Your company? The AI vendor? Nobody yet has a clear answer to this.

2026 will be the year companies start dealing with these questions in production, not just in legal theory.

Talent Transition

When you eliminate the middle layer of routine work, those people don't just disappear. They need to be retrained, moved to higher-value work, or respectfully managed out. Companies that handle this well will retain their best people and institutional knowledge. Companies that don't will lose both.

What You Should Do Now to Prepare

If you accept that 2026 is the inflection year, you should be doing these things right now.

1. Audit Your Workflows for AI Opportunity

Walk through your major business processes and identify which ones are:

- Repetitive (could run 24/7 instead of 9-5)

- Rule-based (deterministic outcomes based on inputs)

- Data-driven (decisions based on research and analysis)

- Scalable (the same process repeats for hundreds or thousands of instances)

Those are your candidates for AI automation in 2026.
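A simple way to run this audit is to score each workflow against the four criteria and rank the results. Here is a rough sketch. The workflow entries and their true/false ratings are hypothetical examples, and the equal weighting is an assumption you may want to change.

```python
from dataclasses import dataclass

@dataclass
class Workflow:
    name: str
    repetitive: bool   # could run 24/7 instead of 9-5
    rule_based: bool   # deterministic outcomes based on inputs
    data_driven: bool  # decisions rest on research and analysis
    scalable: bool     # repeats for hundreds or thousands of instances

    def score(self) -> int:
        """Count how many of the four criteria the workflow meets (0-4)."""
        return sum([self.repetitive, self.rule_based,
                    self.data_driven, self.scalable])

# Hypothetical audit entries; replace with your own processes and ratings.
workflows = [
    Workflow("Outbound prospecting", True, False, True, True),
    Workflow("Invoice processing", True, True, True, True),
    Workflow("Enterprise contract negotiation", False, False, True, False),
]

ranked = sorted(workflows, key=lambda w: w.score(), reverse=True)
for w in ranked:
    print(f"{w.score()}/4  {w.name}")
```

Workflows that meet all four criteria are the natural first pilots. Those that meet only one or two are usually where human judgment still carries the work.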

2. Start Small Pilots

Don't try to automate your entire sales organization in Q1 2026. Pick one small workflow. One team. One process. Run it for 30 days. Measure the impact. Learn what breaks.

Then expand.

Companies that have already run pilots in Q4 2025 will have the playbook ready to scale in 2026. Companies starting from scratch in January will be three months behind.

3. Build Your Data Foundation

AI agents need good data. Historical customer interactions. Sales call recordings. Support chat logs. Successful outcomes and failed outcomes. Clean, labeled, accessible data.

If your data is a mess, start fixing it now. You won't have clean data by 2026, but you'll have cleaner data than you do today.

4. Get Your Team Onboard

The biggest risk to AI adoption in 2026 isn't technical. It's organizational. Your sales team thinks AI will take their jobs. Your support team doesn't trust it to handle customers. Your operations team doesn't want to depend on black boxes.

Start the conversations now. Show them what AI will actually do (supplement, not replace). Show them where they'll have more impact (strategy, judgment, relationships). Start building buy-in.

5. Partner With the Right Vendors

Not every vendor will make it through 2026. Some of the companies hyping AI today will quietly shut down when they realize their models don't work at scale. Others will get acquired. Others will pivot to something that actually works.

Choose vendors based on:

- Evidence, not hype (do they have production customers willing to talk?)

- Integration capability (can they connect to your existing systems?)

- Transparency (will they show you how it works and when it fails?)

- Honesty (are they bullish without being delusional?)

The Companies That Will Win in 2026

Let me make a prediction about company categories.

The Winners

Companies that will thrive in 2026:

- AI-native B2B startups that can offer better solutions than bolting AI onto legacy platforms

- Platforms that abstract integration complexity (making it easy to connect AI to your existing stack)

- Vertical SaaS companies that understand specific industries deeply enough to build AI agents tailored to that vertical

- Vendors that focus on specific workflows instead of trying to be "AI for everything"

- Companies that prioritize data quality and ethics over pure feature velocity

The Losers

Companies that will struggle in 2026:

- Legacy platforms that bolted on AI as an afterthought (it'll feel clunky and poorly integrated)

- Companies overhyping AI capabilities when the actual product doesn't deliver (trust gets destroyed)

- Vendors ignoring integration complexity (your data's in Salesforce, but the AI only understands your custom system)

- Companies treating AI as a feature, not a product philosophy (it needs to be baked in, not added on)

- Vendors without a clear business model (cheap AI at scale still requires monetization)

The Real Opportunity Isn't in the Hype, It's in Execution

Here's the thing that separates 2026 from 2024: the opportunity moves from "could this work?" to "how do we make this work?"

2024 was about proof of concept. 2025 was about seeing that proof at scale. 2026 will be about execution at every company in your market.

The question isn't whether AI will transform your business. It will. The question is whether you'll lead that transformation or follow it.

That's determined by what you do in the next 12 months.

Test now. Learn now. Build your playbook now. Get your team aligned now.

Because 2026 isn't coming. It's already here. And the companies that treat it like a novelty will be the ones that suddenly find themselves disrupted by competitors who treated it like table stakes.


FAQ

What exactly changed between 2024 and 2025 that makes AI actually work now?

Four major capability unlocks happened. First, Claude 3.5 Sonnet (June 2024) made code generation viable. Second, an October 2024 upgrade to that model improved accuracy dramatically—SWE-bench scores jumped from 33% to 49%. Third, by early 2025, agentic reasoning became reliable enough for multi-step workflows. Fourth, Claude Opus 4 (May 2025) hit production-grade performance where autonomous AI could work for hours without human intervention. Each unlock built on the previous one, crossing thresholds where AI went from "interesting but unusable" to "actually productive."

Why did AI SDRs and support tools fail in early 2024 if the models are so good now?

The models simply weren't good enough. An AI that's right 60% of the time looks impressive in a demo but generates terrible prospects and angry customers at scale. Models had to cross capability thresholds where they're right 90%+ of the time for routine tasks before companies would actually deploy them to customer-facing workflows. They crossed that threshold around late 2024/early 2025. The other factor: 2024 tools tried to do too much with a single AI. 2025 tools use agent orchestration, where specialized sub-agents handle specific functions under a coordinator agent. That architecture matters more than raw model capability.

What is "agentic orchestration" and why does it matter so much?

Agentic orchestration means one AI agent breaking down a complex workflow into components, delegating to specialized sub-agents, and coordinating the results. Instead of a single AI doing sales research, writing emails, analyzing responses, and scheduling meetings, you have a coordinator agent that manages research agents, copywriting agents, intent-detection agents, and calendar-integration agents. This matters because it transforms AI from "task assistant" to "process automation." A single AI might write one email. An orchestration system can run your entire prospecting workflow end-to-end, 24/7, with humans only reviewing results and making strategic decisions.

When you say 2026 is the inflection year, what specifically happens?

By end of 2026, AI stops being optional and becomes the default in B2B workflows. Sales organizations restructure with AI-driven prospecting and human account management. Support scales without hiring. Marketing strategy matters more than execution. Developers write less repetitive code. Operations stops fighting chaos and designs better processes. This happens because the economics become undeniable: competitors using AI can operate at lower cost with higher throughput, forcing everyone else to adopt or lose market share. The playbook for implementation also becomes clear based on 2025 pilots.

What's the biggest risk or challenge companies will face with AI in 2026?

Integration complexity, hands down. Your B2B stack has Salesforce, Slack, HubSpot, legacy systems, custom tools. Getting AI agents to work across all of this requires serious engineering work. The companies that nail integration will create enormous competitive advantage. The ones that don't will get frustrated with siloed AI systems that don't talk to each other. Secondary challenges include data quality (biased historical data creates biased AI agents), compliance liability (who's responsible when AI makes a mistake?), and talent transition (how do you meaningfully redeploy people whose routine work gets automated?).

Should we start investing in AI now or wait until the playbooks are clearer?

Start now, but do it strategically. Don't try to automate your entire business in Q1 2026. Pick one small workflow, run a 30-day pilot, measure impact, document what breaks, then scale. Companies running pilots in Q4 2025 will have playbooks ready to scale in 2026. Companies starting from scratch in January will be three months behind. This isn't about being early to hype. It's about learning now so you can execute faster in 2026, when this becomes a competitive necessity.

How do I know which AI vendors will actually survive and which are just hype?

Look for three things. First, production customers willing to talk about real results (not anonymous case studies). Second, clear integration paths to your existing stack (a generic "we work with everything" is a red flag). Third, honesty about limitations (black-box hype is a tell that they don't have product-market fit). Also, look at their business model. Cheap AI at scale still needs real monetization. A vendor that can't explain how it makes money probably won't exist in 2026.

Is there any role left for humans in these AI-automated workflows?

Absolutely. Humans move from execution to judgment. In sales, they move from doing outreach to coaching, strategy, and complex negotiations. In support, they handle escalations and judgment calls. In marketing, they set strategy and decide what campaigns to run. In development, they design architecture and handle creative problems. The tedium gets automated. The high-judgment, high-trust work becomes where humans spend all of their time. That's actually more valuable than execution work anyway.

What happens to companies that don't adopt AI by end of 2026?

They become uncompetitive. If your competitors can operate sales dev at 1/10 the cost with better coverage, you can't win that game. If they can scale support without hiring, you can't. This doesn't mean non-adopters fail immediately. It means they lose the structural efficiency game. Over 2-3 years, that compounds into loss of market share, lower profitability, or a forced, compressed adoption that's more painful than gradual implementation starting now.

Final Thoughts: The Real Transformation Starts Now

Let me be clear about what I'm saying. I'm not saying AI will solve everything. I'm not saying you should rip out your entire tech stack and rebuild around AI. I'm not saying humans become irrelevant.

What I'm saying is: we finally have technology that works well enough at production scale to automate entire B2B workflows without constant human oversight. That's new as of early 2025. It wasn't true in 2024. It is true now.

The companies that accept this and start planning accordingly will have a tremendous advantage in 2026. The ones that treat it as hype will be caught flat-footed when competitors suddenly operate at dramatically lower cost with higher throughput.

The false start is over. The real transformation begins now.

2026 isn't coming. You have about one year to prepare for it.

What you do with that year determines how you look back on it from 2027.

Key Takeaways

- Four capability unlocks between June 2024 and May 2025 crossed critical thresholds where AI actually works in production B2B workflows

- Agentic orchestration (AIs managing other AIs) is the real breakthrough, not single-task AI assistants

- Companies that started pilots in Q4 2025 will have playbooks ready to scale in 2026, when AI becomes the competitive default

- The 2026 inflection point moves human work in organizations from execution to judgment

- Integration complexity and data quality are the biggest challenges, not model capability
