OpenAI Frontier: The Complete Guide to the AI Agent Management Platform [2025]

Managing AI agents is becoming just as complicated as managing people. And that's not a metaphor.

Think about it. Your enterprise probably has AI tools scattered everywhere. ChatGPT in one department. Custom models in another. APIs firing off data in silos. Nobody talking to anybody. Workflows breaking because agents can't access context from other systems. It's chaos.

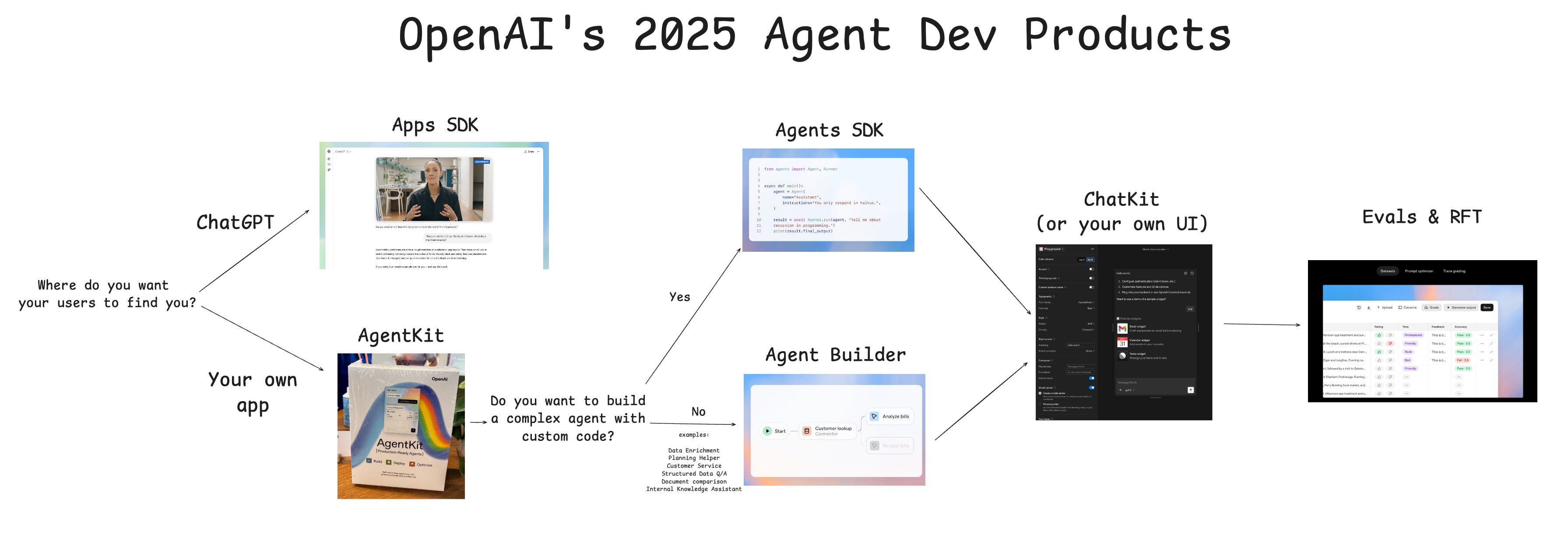

OpenAI just launched something called Frontier to fix this. It's a platform designed to let you build, deploy, and manage AI agents, and not just OpenAI's: agents from anywhere. It's basically HR for your AI workforce.

The market is watching. Intuit, State Farm, Thermo Fisher, and Uber are already using it. That's not a coincidence. These are billion-dollar companies betting that unified agent management matters.

Here's what you need to know about Frontier, why it exists, what it actually does, and whether it's worth your attention.

TL;DR

- What it is: OpenAI's unified platform for managing AI agents from any source, inspired by HR practices for humans

- Who's using it: Intuit, State Farm, Thermo Fisher, Uber, and dozens of other enterprises in early access

- Key features: Shared context, onboarding, agent memory, permission boundaries, hands-on learning with feedback

- Availability: Limited access now, broader rollout within months

- Pricing: Not yet disclosed, but enterprise-focused

- Bottom line: Frontier addresses a real problem—fragmented agent workflows—but enterprise adoption timelines will determine its actual impact

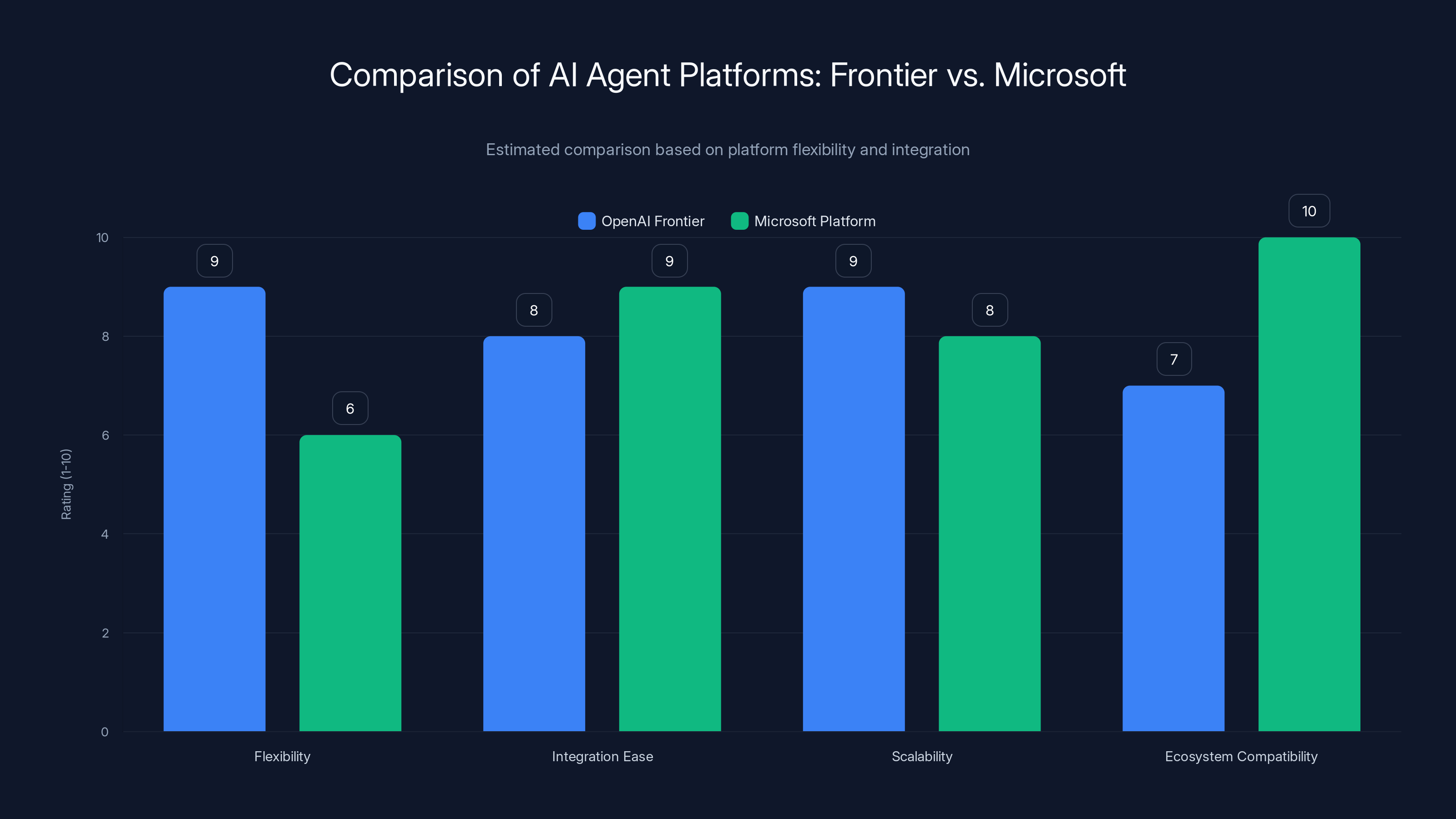

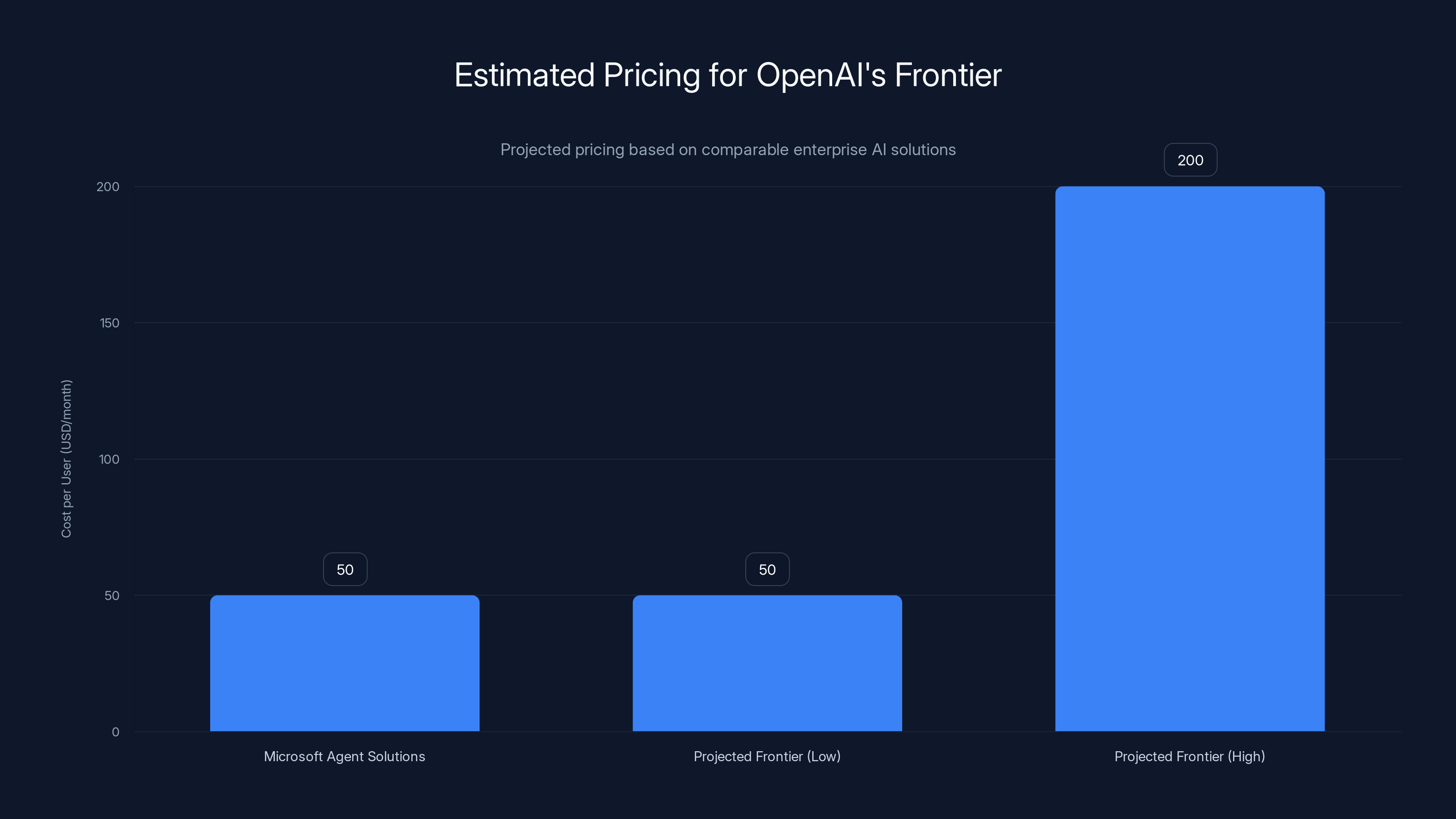

OpenAI Frontier offers greater flexibility and scalability, while Microsoft's platform excels in integration ease and ecosystem compatibility. Estimated data based on typical platform characteristics.

Why OpenAI Built Frontier: The Agent Management Problem

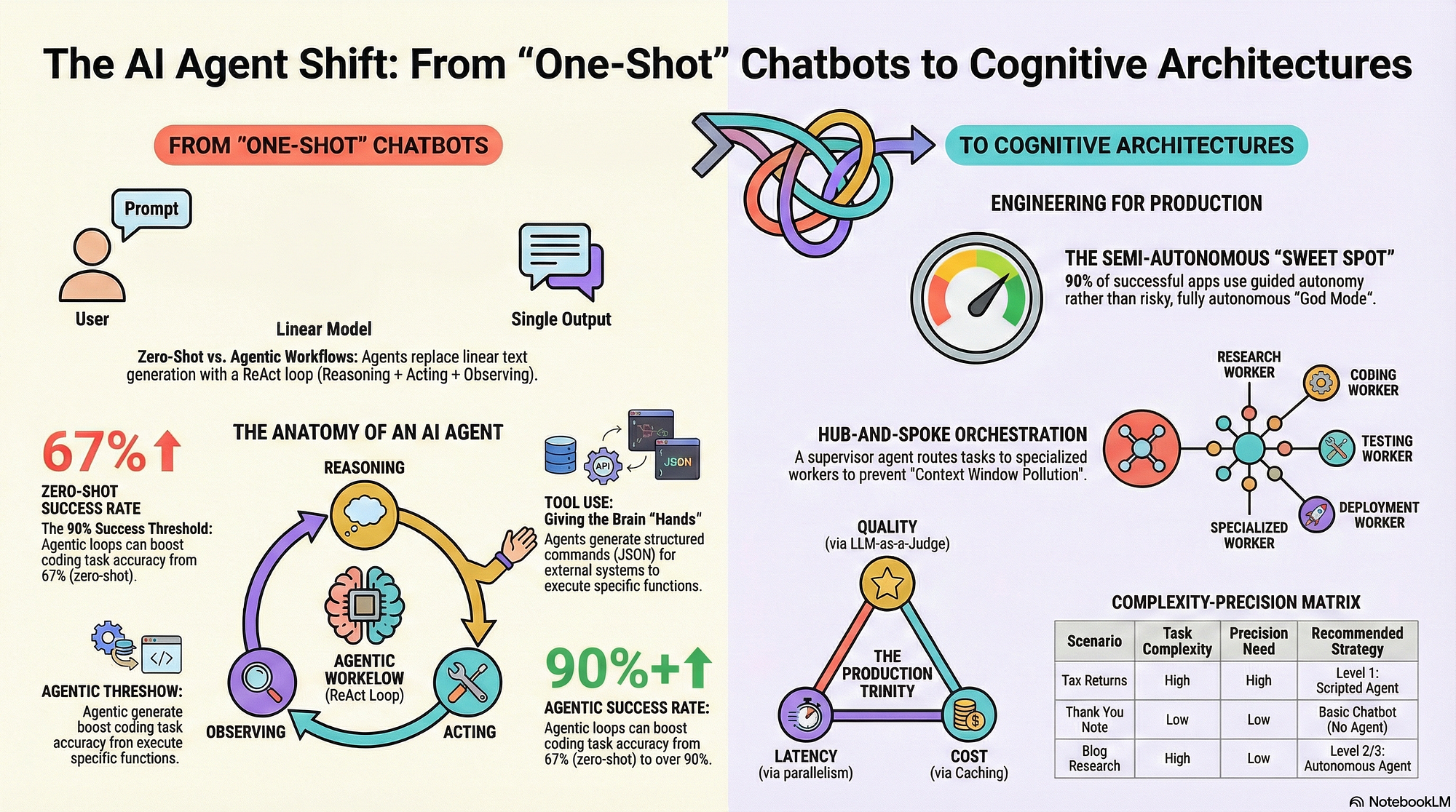

Let's start with the problem Frontier actually solves, because the problem is more interesting than the solution.

Right now, when enterprises deploy AI agents, they're doing it wrong. Not intentionally—they just don't have better options yet.

Company A runs an AI agent on top of Slack. Company B uses it on Salesforce. Company C builds a custom API wrapper. Company D tries to use three agents simultaneously but they don't know about each other. The agent running customer service doesn't know the context from the sales agent. The compliance agent can't talk to the operations agent.

Data silos multiply. Workflows fragment. Security gets messy because nobody's managing permissions consistently.

OpenAI's Frontier tackles this by creating what Barret Zoph, the company's general manager for B2B, called an "agent interface." It's a single place where all your AI agents, regardless of origin, can access shared context, collaborate, and operate within defined boundaries.

The inspiration? How companies actually scale human workforces.

Fidji Simo, OpenAI's CEO of Applications, put it plainly: "We looked at how enterprises already scale people. We applied those same principles to agents."

That's why Frontier includes features like onboarding (getting agents up to speed), shared context (agents understanding organizational information), learning with feedback (agents improving over time), and clear permissions (agents knowing what they can and can't do).

It's not revolutionary. It's just... sensible.

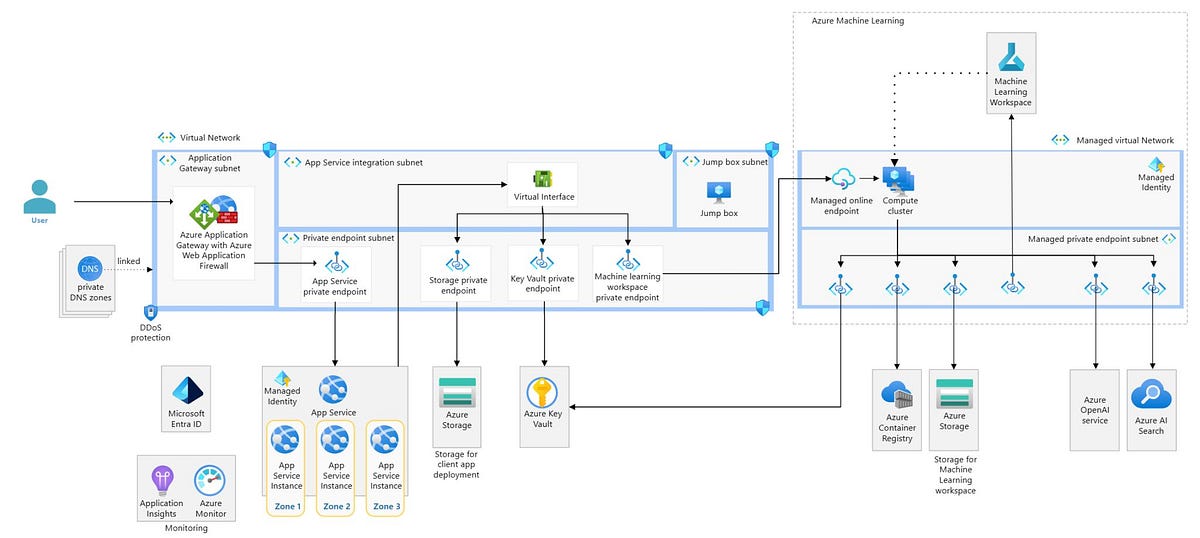

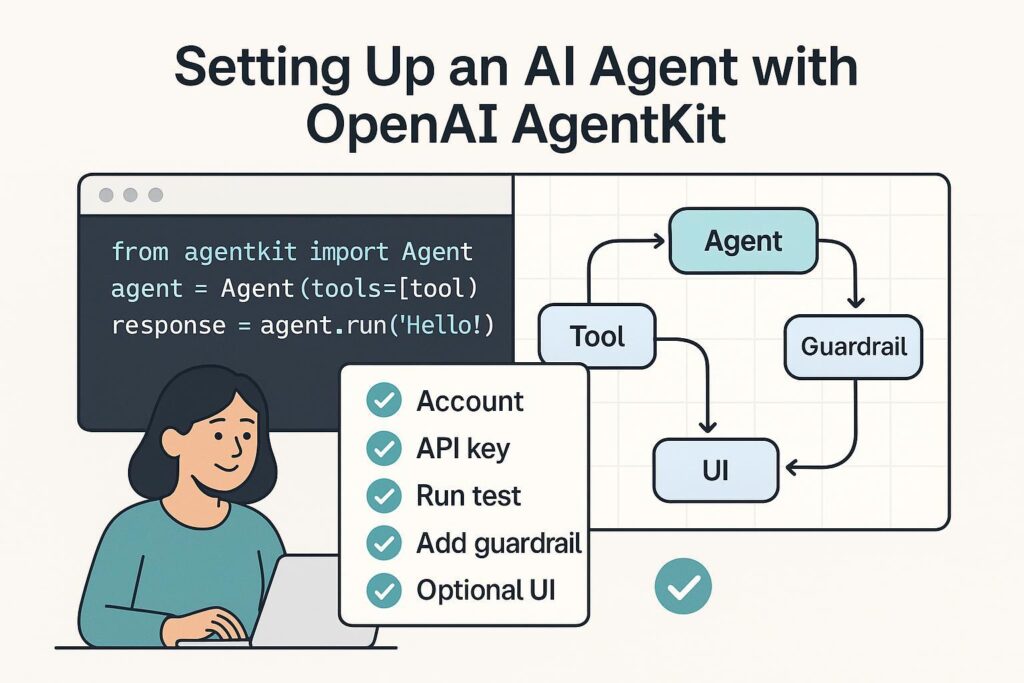

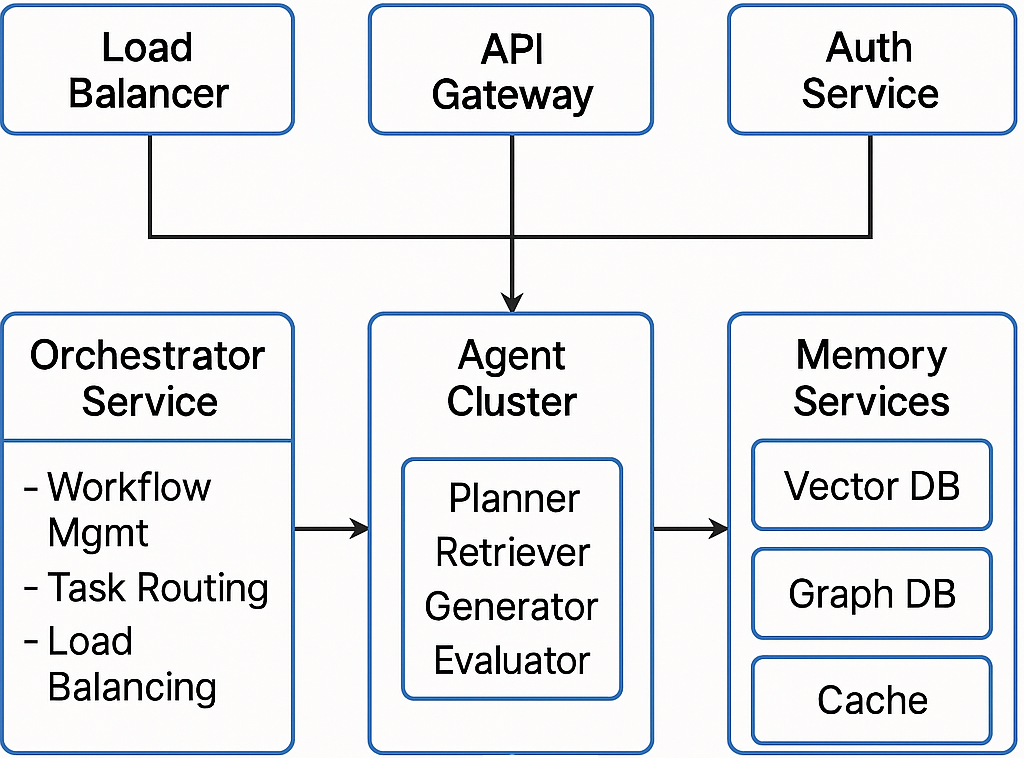

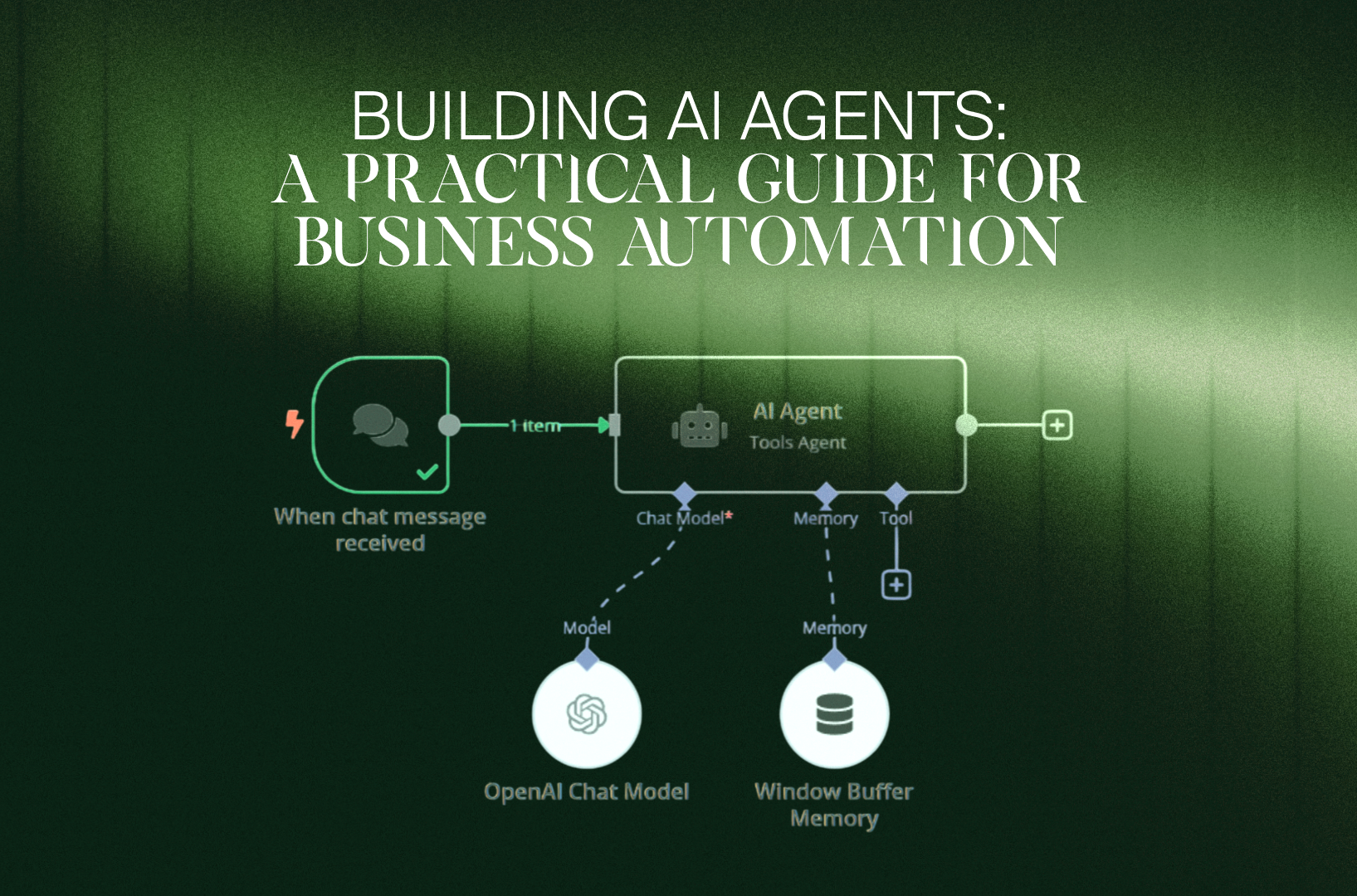

How Frontier Works: The Architecture

Frontier isn't a tool for building agents from scratch. It's middleware. A nervous system for agent orchestration.

Here's the architecture:

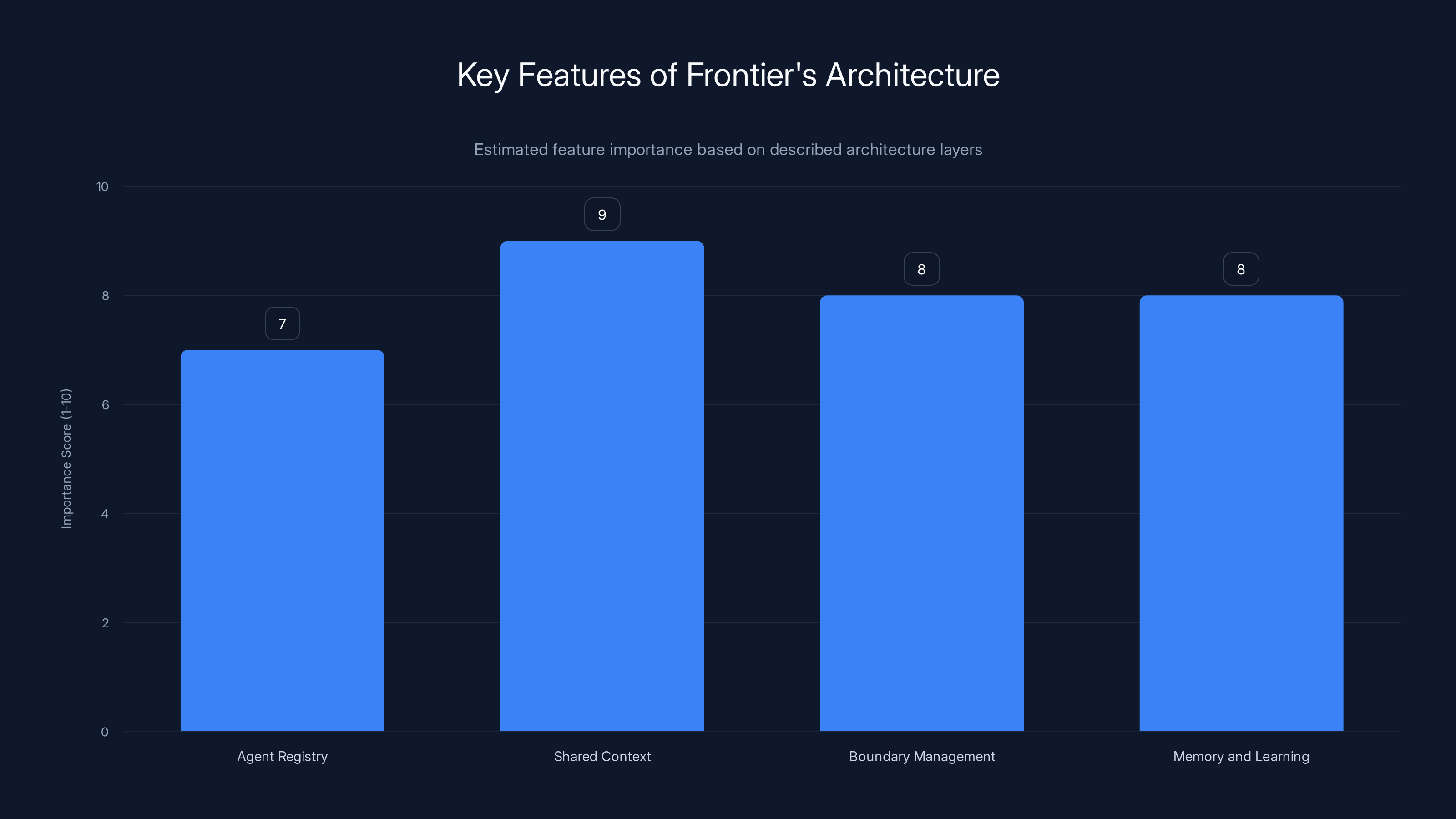

Layer 1: Agent Registry

First, you inventory your agents. These can come from OpenAI, from Anthropic, from your own team, or anywhere else. Frontier doesn't care about origin. It just needs to know the agent exists, what it can do, and how to call it.
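To make the registry idea concrete, here is a minimal sketch in Python. It is not Frontier's API, which has not been published in this article; `AgentRecord`, `AgentRegistry`, and the example.com endpoints are all hypothetical names chosen for illustration. It only captures the three things the registry needs to know: that the agent exists, what it can do, and how to call it.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AgentRecord:
    """One registry entry: the agent exists, what it can do, how to call it."""
    name: str
    vendor: str                   # e.g. "openai", "anthropic", "in-house"
    capabilities: frozenset[str]  # what the agent can do
    endpoint: str                 # hypothetical URL used to invoke the agent


class AgentRegistry:
    """Hypothetical in-memory inventory of agents, keyed by name."""

    def __init__(self) -> None:
        self._agents: dict[str, AgentRecord] = {}

    def register(self, record: AgentRecord) -> None:
        if record.name in self._agents:
            raise ValueError(f"agent already registered: {record.name}")
        self._agents[record.name] = record

    def find_by_capability(self, capability: str) -> list[AgentRecord]:
        """Return every agent that declares the capability, whatever its vendor."""
        return [a for a in self._agents.values() if capability in a.capabilities]


registry = AgentRegistry()
registry.register(AgentRecord(
    "support-bot", "openai", frozenset({"answer_billing"}),
    "https://agents.example.com/support"))
registry.register(AgentRecord(
    "policy-checker", "anthropic", frozenset({"check_policy"}),
    "https://agents.example.com/policy"))

matches = registry.find_by_capability("check_policy")
```

Lookup is by capability rather than by vendor, which is the point of an origin-agnostic registry.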

Layer 2: Shared Context Layer

This is where the magic starts. Frontier maintains a shared business context that all agents can access. Think of it like this: without Frontier, each agent is an isolated worker. With Frontier, all your agents have access to the same organizational knowledge—customer history, product specs, policy guidelines, previous decisions.

Imagine an HR agent running onboarding and a compliance agent checking regulatory requirements. Both need the same company policies. Instead of storing policy documents in fifteen different places, Frontier maintains one source of truth. Both agents hit the same endpoint.

This isn't just efficiency. It's consistency and safety.
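The "one source of truth" idea can be sketched as a small versioned store. This is an assumption-laden illustration, not Frontier's context model: `SharedContext`, its methods, and the policy text are hypothetical.

```python
class SharedContext:
    """Hypothetical single source of truth that every agent reads from."""

    def __init__(self) -> None:
        self._documents: dict[str, str] = {}
        self._versions: dict[str, int] = {}

    def publish(self, key: str, text: str) -> int:
        """Store or update a document and return its new version number."""
        self._documents[key] = text
        self._versions[key] = self._versions.get(key, 0) + 1
        return self._versions[key]

    def read(self, key: str) -> tuple[str, int]:
        """Return the current text and version for a key."""
        return self._documents[key], self._versions[key]


context = SharedContext()
context.publish("policy/remote-work", "Remote work is allowed up to 3 days per week.")

# The HR agent and the compliance agent read the same key,
# so they always see the same text and version.
hr_view = context.read("policy/remote-work")
compliance_view = context.read("policy/remote-work")

# A single update reaches every agent on its next read.
context.publish("policy/remote-work", "Remote work is allowed up to 2 days per week.")
updated_view = context.read("policy/remote-work")
```

Because there is only one copy, a policy change is made once and no agent keeps working from a stale duplicate.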

Layer 3: Boundary Management

Here's something critical: in regulated industries, you can't just let agents roam free. A healthcare AI agent shouldn't access patient data without explicit permission. A financial agent shouldn't move money without authorization.

Frontier lets you set precise boundaries. Agents operate within defined scope. You can say: "This agent can read customer records but not delete them. That agent can execute trades up to $100K but needs approval beyond." It's fine-grained permission management.
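The two example rules above can be sketched as a single boundary check. The scope names, the `Decision` enum, and `check_action` are hypothetical; the $100K threshold comes from the example in the text.

```python
from enum import Enum


class Decision(Enum):
    ALLOW = "allow"
    NEEDS_APPROVAL = "needs_approval"
    DENY = "deny"


TRADE_LIMIT_USD = 100_000  # threshold taken from the example in the text


def check_action(agent_scopes: set[str], action: str, amount_usd: float = 0.0) -> Decision:
    """Decide whether an agent may perform an action within its boundaries."""
    if action not in agent_scopes:
        return Decision.DENY  # outside the agent's scope entirely
    if action == "execute_trade" and amount_usd > TRADE_LIMIT_USD:
        return Decision.NEEDS_APPROVAL  # in scope, but above the limit
    return Decision.ALLOW


records_agent = {"read_customer_records"}  # read but not delete
trading_agent = {"execute_trade"}          # trades, capped at the limit

read_ok = check_action(records_agent, "read_customer_records")
delete_blocked = check_action(records_agent, "delete_customer_records")
small_trade = check_action(trading_agent, "execute_trade", 50_000)
large_trade = check_action(trading_agent, "execute_trade", 250_000)
```

The check returns three outcomes rather than a boolean, because "needs approval" is a distinct state from both "allowed" and "denied".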

Layer 4: Memory and Learning

Agents in Frontier can build memories. This means they learn from interactions. An agent that handled a specific customer issue learns to recognize similar issues. It improves over time without explicit retraining.

OpenAI says humans evaluate agents, provide feedback, and the agents incorporate that feedback. In effect, it's a human-in-the-loop feedback cycle built into the platform.


Key Features That Matter

Let's break down what Frontier actually gives you.

Shared Business Context

Agents need information to be useful. But right now, agents usually need you to feed that information to them. Every. Single. Time.

Frontier changes this. It maintains persistent business context that every agent can access. Your customer service agent knows the customer's full history. Your operations agent knows current inventory levels. Your compliance agent knows all active regulations.

This isn't magic. It's just good API design applied at scale. But the impact is massive. Response quality improves. Context hallucinations drop. Agents make better decisions.

Onboarding and Knowledge Transfer

When you hire a human, you onboard them. You explain how the company works. You introduce them to other employees. You give them tools and access.

Frontier does this for agents. New agents get context about the organization, connections to other agents, and clear role definition. This means new agents are productive faster. No more weeks of "learning the systems."

Hands-On Learning with Feedback

Agents get better with feedback. Frontier builds this in. Humans review agent outputs, provide corrections, and those corrections feed back into the system. The agent learns.

This is massive for enterprises. You're not stuck with the agent's original training. You're actively improving it based on your specific business needs.

Clear Permissions and Boundaries

Frontier lets you define what each agent can and cannot do. Read-only access to this database. Write access to that queue. API calls capped at this rate. Delete operations forbidden entirely.

For regulated industries, this is non-negotiable. You need audit trails showing what agents did, when, and why. Frontier provides that.

Cross-System Integration

Here's what's genuinely useful: agents can operate across different environments. An agent can query your ERP system, process the data, post results to Slack, and update your CRM—all in one workflow.

Without Frontier, you'd need custom integration code. With Frontier, agents handle it natively.

Real Enterprise Use Cases

Understanding how Frontier works in theory is one thing. Real deployments show where it actually adds value.

Use Case 1: Multi-Departmental Workflows

Intuit's accounting software touches multiple user departments. Imagine this workflow:

- A customer service agent receives a billing question

- It routes the query to the finance agent

- The finance agent accesses shared customer context (payment history, contract terms)

- It calculates the correct response

- The customer service agent delivers the answer

- Both agents log the interaction for compliance

Without Frontier, you'd need custom integration logic. With Frontier, agents collaborate natively because they share context.
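The billing workflow above can be sketched in a few lines. Everything here is illustrative: the customer record, the two agent functions, and the log format are hypothetical stand-ins, with plain Python functions in place of real agents.

```python
# Hypothetical shared customer context that both agents can read.
shared_context = {
    "cust-42": {"plan": "annual", "monthly_price": 30.0, "months_paid": 12},
}
audit_log: list[dict] = []


def finance_agent(customer_id: str) -> str:
    """Compute the billing answer from shared context and log the step."""
    record = shared_context[customer_id]
    total = record["monthly_price"] * record["months_paid"]
    audit_log.append({"agent": "finance", "customer": customer_id,
                      "action": "computed_total"})
    return f"You have paid ${total:.2f} on your {record['plan']} plan."


def customer_service_agent(customer_id: str, question: str) -> str:
    """Route billing questions to the finance agent, then deliver the answer."""
    if "bill" in question.lower():
        answer = finance_agent(customer_id)
    else:
        answer = "Let me connect you with a specialist."
    audit_log.append({"agent": "customer_service", "customer": customer_id,
                      "action": "delivered_answer"})
    return answer


reply = customer_service_agent("cust-42", "Why is my bill this amount?")
```

Neither agent is handed the customer's history as an argument. Both resolve it from the same shared record, and both leave a log entry for compliance.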

Use Case 2: Regulated Industry Compliance

Thermo Fisher works in highly regulated biotech. Compliance isn't optional—it's existential.

With Frontier:

- Every agent action is logged and auditable

- Agents can't exceed their permission scope

- Context includes current regulatory requirements

- Compliance agents monitor other agents in real time

This matters. It's the difference between "Can we use AI here?" being a compliance nightmare versus a solved problem.

Use Case 3: Service Delivery at Scale

Uber operates globally. Different regions, different regulations, different operational requirements.

Frontier lets Uber:

- Deploy agents consistently across regions

- Customize agent behavior per region while maintaining shared logic

- Ensure all agents follow the same security and compliance policies

- Scale from hundreds to thousands of agents without fragmenting operations

How Frontier Compares to Competitive Solutions

Frontier isn't operating in a vacuum. Microsoft's Copilot Studio and Anthropic's Claude Cowork are doing similar things.

Let's see how Frontier actually stacks up.

Frontier vs. Microsoft Copilot Agents

Microsoft's agent platform is tightly integrated with Microsoft 365—Outlook, Teams, Excel, etc. It's powerful if you're all-in on Microsoft.

Frontier's advantage: it's platform-agnostic. You can use OpenAI models, Anthropic's Claude, your own custom models, anything. You're not locked into one vendor.

Microsoft's advantage: deep integration with enterprise software that most large companies already use heavily. The switching cost is lower if you're already Microsoft-dependent.

Frontier vs. Anthropic's Claude Cowork

Claude Cowork is deeply integrated with Claude models. It's excellent for teams using Claude as their primary AI.

Frontier's advantage: you're not locked into Claude. You can mix and match models based on use case. Customer service might use Claude. Code analysis might use GPT-4. Frontier orchestrates both.

Claude Cowork's advantage: tighter integration with Claude's capabilities. If Claude is your standard, Cowork is simpler to implement.

Frontier vs. Custom Integration Solutions

Large enterprises sometimes build custom agent orchestration. It's expensive, slow, and brittle.

Frontier replaces that entire engineering effort. No custom code. No maintenance burden. Updates and security patches happen automatically.

Enterprises face significant challenges with AI agent deployment, including data silos, fragmented workflows, and inconsistent security. Manual data handoffs consume 30% of work time. (Estimated data)

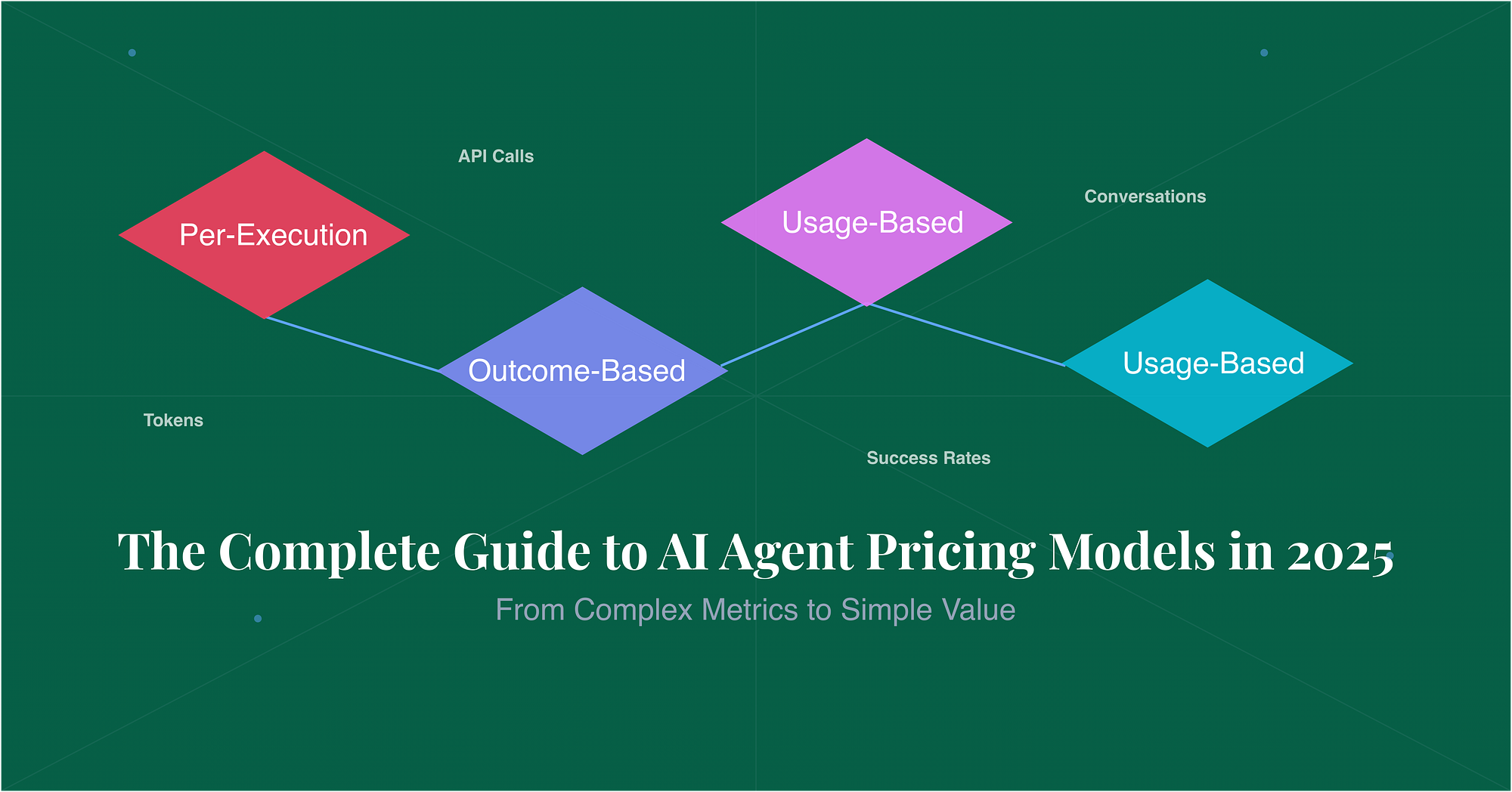

Pricing: What We Know (And Don't)

OpenAI hasn't published Frontier pricing yet. Denise Dresser, OpenAI's chief revenue officer, declined to disclose pricing during the announcement.

But we can make educated guesses based on:

- Target market: Enterprises with significant AI deployment (Intuit, State Farm, Uber aren't small)

- Comparable products: Microsoft's agent solutions typically run $15-50/month per user for enterprise tiers

- Value proposition: Frontier is preventing millions in integration costs and security incidents

Expect:

- Tiered pricing: Likely freemium or paid tiers based on agent count, API calls, or storage

- Per-agent pricing: Maybe $50-200/month per active agent for enterprises

- Custom enterprise deals: Large deployments (100+ agents) likely negotiate custom contracts

What matters more than price is ROI. If Frontier saves your team 5 engineers' worth of integration work, it pays for itself immediately.
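A quick back-of-the-envelope version of that ROI argument, using the speculative $50-200 per-agent range guessed above. The 100-agent fleet and the fully loaded engineer cost are hypothetical inputs, not figures from OpenAI; substitute your own.

```python
def annual_platform_cost(agents: int, price_per_agent_month: float) -> float:
    """Yearly cost under a per-agent, per-month pricing model."""
    return agents * price_per_agent_month * 12


def break_even_engineers(platform_cost: float, engineer_cost_per_year: float) -> float:
    """How many engineers' worth of work the platform must replace to pay for itself."""
    return platform_cost / engineer_cost_per_year


AGENTS = 100              # hypothetical fleet size
ENGINEER_COST = 200_000   # hypothetical fully loaded annual cost per engineer, USD

low = annual_platform_cost(AGENTS, 50)    # bottom of the guessed range
high = annual_platform_cost(AGENTS, 200)  # top of the guessed range

engineers_needed_low = break_even_engineers(low, ENGINEER_COST)
engineers_needed_high = break_even_engineers(high, ENGINEER_COST)
```

Under these assumptions, the platform would cost between $60,000 and $240,000 a year, so it breaks even once it replaces roughly 0.3 to 1.2 engineers' worth of integration work. The conclusion is only as good as the inputs.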

The OpenAI Open Standards Play

Here's something important nobody's talking about enough: OpenAI's commitment to open standards.

Sam Altman and his team have repeatedly said Frontier will support open standards. Agents from Anthropic, open-source models, custom deployments—all welcome.

This is a strategic move. It signals that Frontier is the orchestration layer, not a vendor lock-in tool. You can build on Frontier confident that you're not betting the farm on OpenAI's models.

Is this entirely altruistic? No. OpenAI benefits from a larger Frontier ecosystem. More agents using Frontier means more data, more use cases, more learning opportunities.

But it also means Frontier could become genuinely important infrastructure. Like how Kubernetes became the container orchestration standard, Frontier could become the agent orchestration standard.

Implementation Considerations

Frontier looks great in theory. Implementation is harder.

Data Migration and Integration

Getting Frontier working means connecting it to your existing systems. Your CRM. Your ERP. Your data warehouse. Your APIs.

This is engineering work. You're mapping your data architecture to Frontier's context model. You're defining how information flows between systems.

Estimate: 2-4 weeks for basic integration. 8-12 weeks for complex enterprise environments with legacy systems.

Agent Inventory and Strategy

You need to know what agents you're actually deploying. What problem does each solve? What data does it need? What are its boundaries?

Most enterprises don't have this clarity yet. They're still experimenting.

Before implementing Frontier, run an audit. Map your current AI deployments. Prioritize which ones benefit most from unified management.

Change Management

Frontier changes how teams work. Your engineers now think in terms of agents and orchestration instead of point solutions.

Budget time for training. Budget time for cultural shift. Early adopters will champion it. Skeptics will resist.

Compliance and Auditing

In regulated industries, every agent action must be auditable. Frontier handles this, but you need to set it up correctly.

Work with compliance early. Define what audit trails you need. Ensure Frontier's logging meets your requirements.

This chart compares various agent management platforms based on integration, flexibility, and vendor lock-in. Open-source solutions offer the most flexibility but require significant engineering effort. (Estimated data)

The Competitive Landscape: Who Else Is Building This?

Frontier isn't alone. The agent management space is getting crowded.

Microsoft's Approach

Microsoft is building agent capabilities into Microsoft 365 directly. Your Office suite is becoming an agent platform.

Advantage: Deep integration, familiar tools, existing relationships. Disadvantage: Locked into Microsoft's stack.

Anthropic's Claude Cowork

Anthropic is pushing Claude-native agent orchestration. If you're all-in on Claude, it's excellent.

Advantage: Best-in-class for Claude workflows. Disadvantage: Less flexible for multi-model scenarios.

Google's Vertex AI

Google Cloud's Vertex AI includes agent orchestration as part of its broader ML platform.

Advantage: Google's data and ML infrastructure is best-in-class. Disadvantage: Requires heavy Google Cloud commitment.

Open-Source Alternatives

Projects like LangChain and MetaGPT let you build agent orchestration yourself.

Advantage: Complete control, no vendor lock-in. Disadvantage: Massive engineering effort, ongoing maintenance.

Security and Compliance Deep Dive

Frontier is targeting enterprises. Enterprise means security matters obsessively.

Permission Boundaries

Frontier's permission model works like this:

Each agent gets a role. That role defines capabilities:

Agent: Customer Service Bot

Role: customer_service

Permissions:

- read: customer_profiles

- read: order_history

- write: support_tickets

- call: payment_api (read-only)

Restrictions:

- cannot delete anything

- cannot access: employee_data

- rate_limit: 100 calls/minute

This isn't theoretical. It's enforced at the API level. The agent can't exceed its boundaries even if it tries.
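What "enforced at the API level" might look like can be sketched as a gateway that every agent call passes through. This is an illustration under assumptions, not Frontier's implementation: `Gateway`, `ROLE`, and both exception classes are hypothetical, and the role mirrors the example above.

```python
import time
from collections import deque

# The customer_service role from the example, expressed as data.
ROLE = {
    "read": {"customer_profiles", "order_history"},
    "write": {"support_tickets"},
    "call": {"payment_api"},
    "rate_limit_per_minute": 100,
}


class PermissionDenied(Exception):
    pass


class RateLimited(Exception):
    pass


class Gateway:
    """Hypothetical choke point that checks every call against the role."""

    def __init__(self, role: dict, clock=time.monotonic) -> None:
        self._role = role
        self._clock = clock
        self._calls: deque = deque()  # timestamps of calls in the last minute

    def authorize(self, verb: str, resource: str) -> None:
        now = self._clock()
        # Drop timestamps that fell out of the 60-second sliding window.
        while self._calls and now - self._calls[0] >= 60:
            self._calls.popleft()
        if len(self._calls) >= self._role["rate_limit_per_minute"]:
            raise RateLimited("rate limit exceeded for this role")
        self._calls.append(now)  # denied attempts also count toward the limit
        # Verbs the role never lists (such as "delete") match nothing.
        if resource not in self._role.get(verb, set()):
            raise PermissionDenied(f"{verb} on {resource} is outside this role")


gateway = Gateway(ROLE)
gateway.authorize("read", "order_history")  # allowed, returns None
```

Because the role is deny-by-default, "cannot delete anything" and "cannot access employee_data" need no explicit rules: neither appears in the role, so both are rejected.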

Audit Logging

Every agent action logs:

- What the agent did

- When it happened

- What data it accessed

- What changes it made

- Who reviewed/approved it (if applicable)

For compliance audits, this is gold. You can show regulators exactly what your agents did and why.
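The five fields listed above map naturally onto a structured log record. The sketch below is one plausible shape, not Frontier's log format: `AuditRecord`, its field names, and the sample values are hypothetical.

```python
import json
from dataclasses import asdict, dataclass
from datetime import datetime, timezone
from typing import Optional


@dataclass(frozen=True)
class AuditRecord:
    agent: str
    action: str                      # what the agent did
    timestamp: str                   # when it happened (ISO 8601, UTC)
    data_accessed: tuple[str, ...]   # what data it accessed
    changes: tuple[str, ...]         # what changes it made
    approved_by: Optional[str] = None  # reviewer, if applicable


def to_log_line(record: AuditRecord) -> str:
    """Serialize one record as a single JSON line for append-only storage."""
    return json.dumps(asdict(record), sort_keys=True)


record = AuditRecord(
    agent="customer-service-bot",
    action="update_ticket",
    timestamp=datetime(2025, 1, 15, 9, 30, tzinfo=timezone.utc).isoformat(),
    data_accessed=("order_history",),
    changes=("support_tickets/1234: status open -> resolved",),
)
line = to_log_line(record)
```

One JSON object per line keeps the log easy to append to and easy to filter by agent, action, or time range during an audit.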

Data Isolation

In multi-tenant scenarios, data isolation is critical. Your customer data can't accidentally leak to another customer's agent.

Frontier handles this through context partitioning. Each agent's context is isolated. Cross-contamination is architecturally impossible.
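How Frontier partitions context internally is not described here, so the following is only a sketch of the general technique. `PartitionedContext` and `TenantView` are hypothetical. The idea is that an agent receives a handle bound to one partition, and that handle offers no way to name another.

```python
from typing import Optional


class PartitionedContext:
    """Hypothetical store that keeps each tenant's context in its own partition."""

    def __init__(self) -> None:
        self._partitions: dict[str, dict[str, str]] = {}

    def put(self, tenant: str, key: str, value: str) -> None:
        self._partitions.setdefault(tenant, {})[key] = value

    def view_for(self, tenant: str) -> "TenantView":
        """Hand an agent a view that is bound to exactly one tenant."""
        return TenantView(self, tenant)

    def _get(self, tenant: str, key: str) -> Optional[str]:
        return self._partitions.get(tenant, {}).get(key)


class TenantView:
    """Read handle for one tenant; its API has no tenant parameter at all."""

    def __init__(self, store: PartitionedContext, tenant: str) -> None:
        self._store = store
        self._tenant = tenant

    def get(self, key: str) -> Optional[str]:
        return self._store._get(self._tenant, key)


store = PartitionedContext()
store.put("acme", "contact", "ops@acme.example")
store.put("acme", "contract_tier", "gold")
store.put("globex", "contact", "it@globex.example")

acme_agent_view = store.view_for("acme")
globex_agent_view = store.view_for("globex")
```

In plain Python this is a convention rather than a hard guarantee, since private attributes can still be reached. A real platform would have to enforce the same boundary server-side.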

Encryption and Data Protection

All data in transit is encrypted (TLS 1.3). Data at rest is encrypted with customer-provided keys.

OpenAI doesn't store agent-specific data longer than necessary. Context is ephemeral unless you configure otherwise.

The Future of Agent Management

Where is this all going?

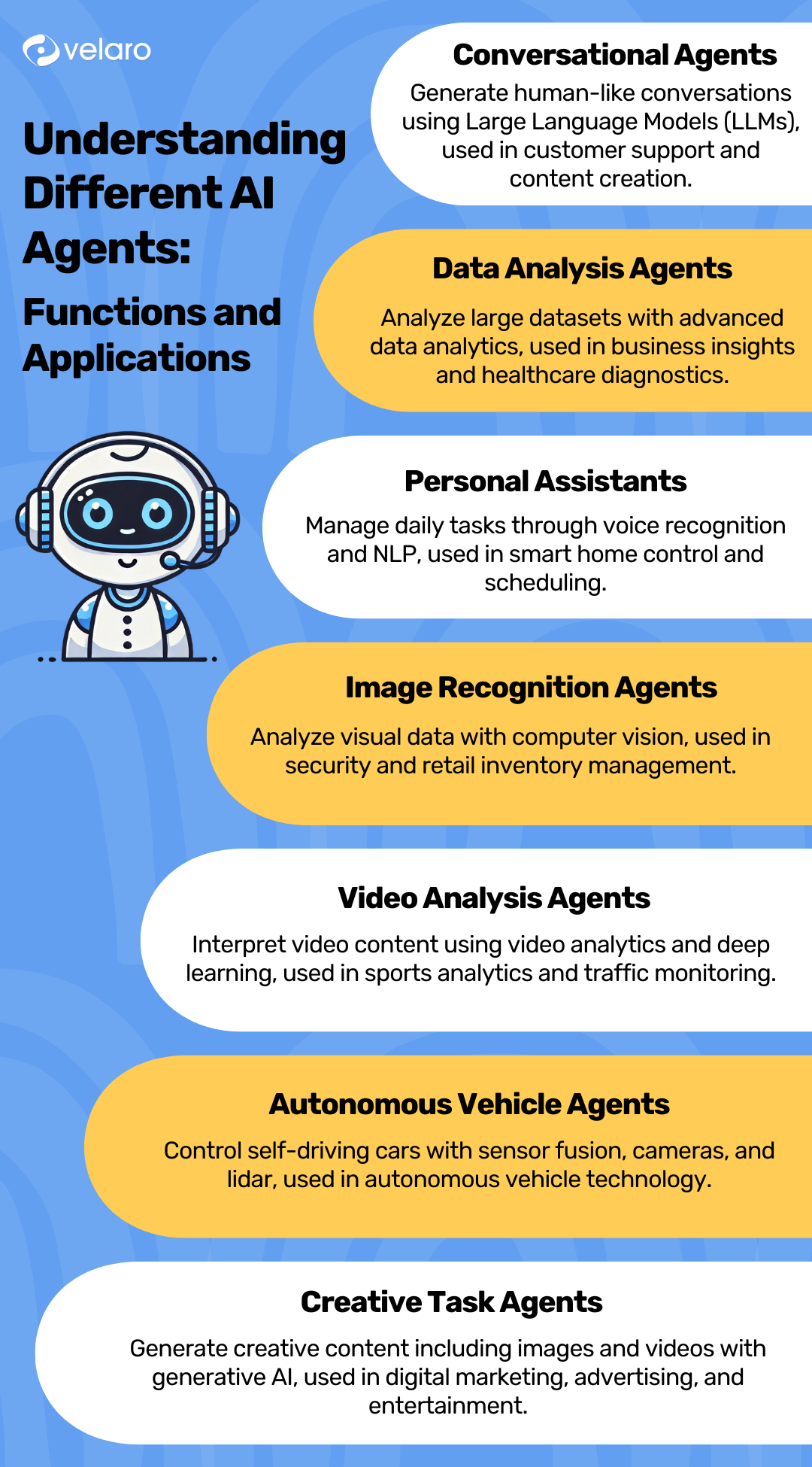

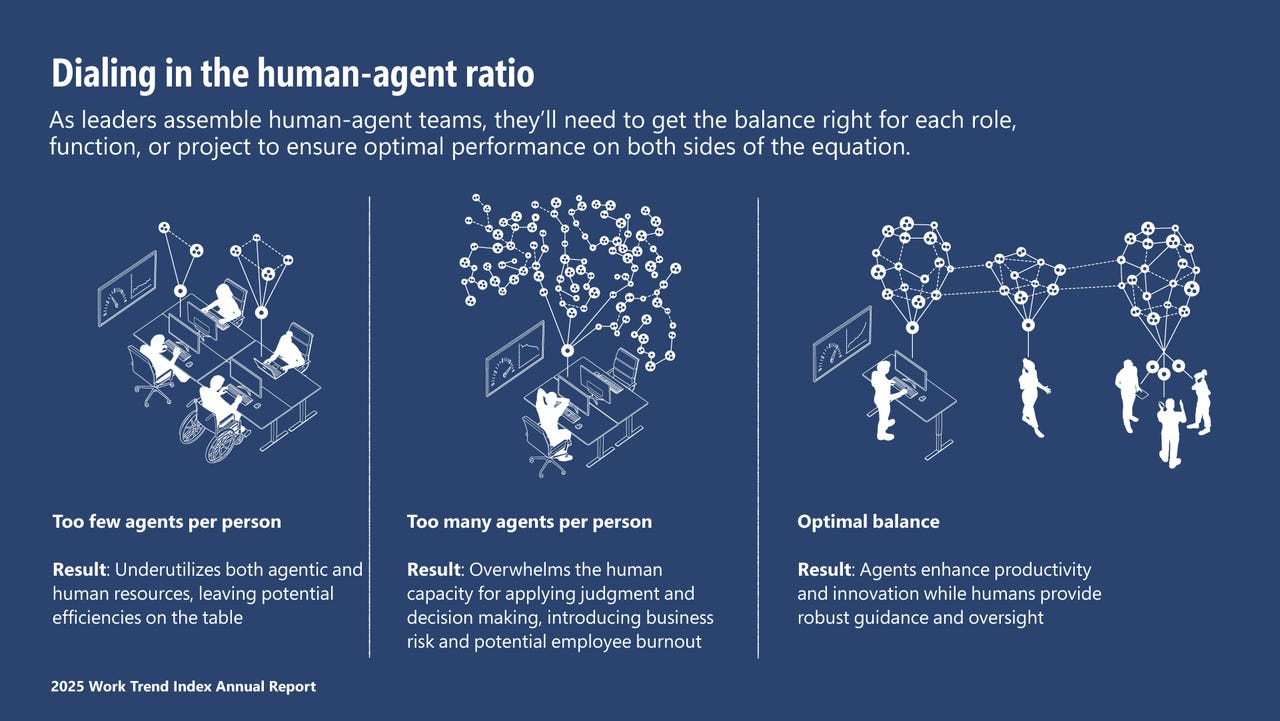

Agentic Workflows Becoming Standard

Within 12-18 months, major enterprises will run significant portions of their business through agent workflows. Not 100%, but maybe 40-60% of routine operations.

Frontier is betting it will be the orchestration layer for that shift.

Multi-Model Becoming Default

Companies will stop thinking in terms of "we use ChatGPT" or "we use Claude." They'll use whatever model is best for each specific task.

Frontier enables this. One agent runs on GPT-4 Turbo for reasoning. Another uses Claude for code generation. A third uses a fine-tuned model for domain-specific tasks.
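At its simplest, per-task model selection is a routing table. The sketch below uses descriptive labels rather than real API model identifiers. The task types, labels, and `pick_model` function are hypothetical.

```python
# Task type -> model label. Labels are descriptive placeholders,
# not identifiers accepted by any vendor's API.
ROUTES = {
    "reasoning": "gpt-4-turbo",
    "code_generation": "claude",
    "domain_specific": "in-house-finetuned",
}
DEFAULT_MODEL = "gpt-4-turbo"  # hypothetical fallback for unknown task types


def pick_model(task_type: str) -> str:
    """Choose a model for a task, falling back to the default."""
    return ROUTES.get(task_type, DEFAULT_MODEL)


chosen = [pick_model(t) for t in ("reasoning", "code_generation", "summarization")]
```

Keeping the mapping in data rather than in code means a model can be swapped for one task type without touching the agents that depend on it.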

Autonomous Business Processes

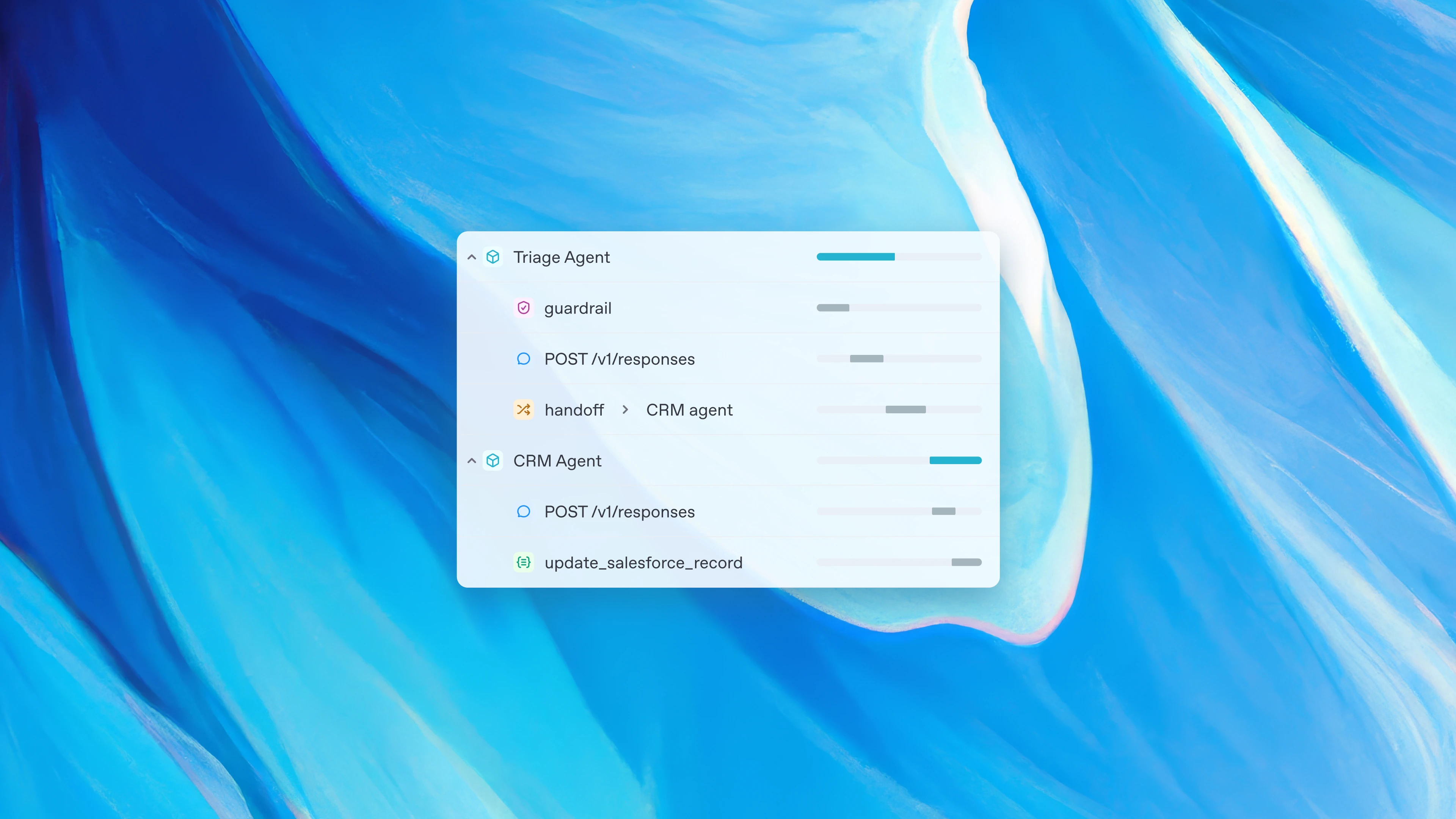

Eventually, entire business processes run autonomously. A customer inquiry triggers a chain of agents:

- Routing agent categorizes the request

- Specialist agent researches the issue

- Solution agent generates a response

- Compliance agent validates it

- Customer service agent delivers it

No human involvement unless something's unusual.
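The chain above, including the "unless something's unusual" escape hatch, can be sketched as a staged pipeline. The stage functions below are toy stand-ins for real agents, and the rule that legal matters count as unusual is an assumption made for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class Ticket:
    text: str
    category: str = ""
    findings: str = ""
    draft: str = ""
    approved: bool = False
    trail: list[str] = field(default_factory=list)  # which stages ran, in order


def routing_agent(ticket: Ticket) -> None:
    text = ticket.text.lower()
    if "lawsuit" in text:
        ticket.category = "legal"
    elif "refund" in text:
        ticket.category = "billing"
    else:
        ticket.category = "general"


def specialist_agent(ticket: Ticket) -> None:
    ticket.findings = f"Looked up the {ticket.category} playbook."


def solution_agent(ticket: Ticket) -> None:
    ticket.draft = f"Thanks for reaching out. {ticket.findings}"


def compliance_agent(ticket: Ticket) -> None:
    # Toy rule: anything legal is "unusual" and must go to a person.
    ticket.approved = ticket.category != "legal"


STAGES = (routing_agent, specialist_agent, solution_agent, compliance_agent)


def run_pipeline(text: str) -> tuple[str, Ticket]:
    """Run every stage, then deliver the draft or escalate to a human."""
    ticket = Ticket(text)
    for stage in STAGES:
        stage(ticket)
        ticket.trail.append(stage.__name__)
    status = "delivered" if ticket.approved else "escalated_to_human"
    return status, ticket


status, ticket = run_pipeline("I would like a refund for my last order.")
```

The delivery step only happens when the compliance stage approves. Everything else falls through to a human, which is the safer default.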

Frontier is the platform that makes this orchestration straightforward.

The Skills Gap

Managing agents well is a new skill. Enterprises need people who understand:

- How to define agent boundaries and permissions

- How to set up feedback loops and learning

- How to monitor agent behavior for drift and hallucination

- How to handle agent failures gracefully

A new job title is emerging: Agent Manager. Similar to engineering managers, but for AI agents.

The Shared Context layer is estimated to be the most critical feature of Frontier's architecture, providing consistency and safety across agent operations. Estimated data.

Potential Limitations and Concerns

Frontier isn't a silver bullet. Let's talk about the things that could go wrong.

Model Hallucinations Aren't Solved

Frontier provides context and boundaries, but it doesn't fix the underlying issue: LLMs hallucinate.

An agent with perfect context and clear boundaries can still make confident, incorrect statements. Frontier helps reduce this, but doesn't eliminate it.

You still need human review loops. You still need guardrails.

Complexity of Large Deployments

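When you go from 3 agents to 30, complexity grows far faster than the agent count, because the number of possible agent-to-agent interactions grows roughly with the square of the fleet size. Managing interactions, debugging failures, and ensuring consistency all become hard.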

Frontier tries to help, but there's no magic solution. Large agent fleets require sophisticated monitoring and management.
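One way to put a number on that growth is to count the possible agent-to-agent pairs. This is a simplifying assumption that treats every pair as a potential interaction to monitor and debug.

```python
from math import comb


def pairwise_links(agent_count: int) -> int:
    """Number of distinct agent pairs: n choose 2 = n * (n - 1) / 2."""
    return comb(agent_count, 2)


small_fleet = pairwise_links(3)    # 3 possible pairs
large_fleet = pairwise_links(30)   # 435 possible pairs
growth = large_fleet / small_fleet
```

Ten times as many agents yields 145 times as many potential pairs (3 versus 435), which is why monitoring that works for a pilot often stops working for a fleet.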

Cost of Agent Inference at Scale

Running a single agent costs pennies per interaction. Running 50 agents across millions of daily interactions costs significant money.

If Frontier doesn't dramatically improve agent efficiency, cost can spiral. You need ROI analysis before committing.
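To see how "pennies per interaction" adds up, here is the arithmetic with placeholder inputs. The 2 cents per interaction and 2 million interactions per day are hypothetical figures, not measured costs; plug in your own.

```python
def monthly_inference_cost_usd(interactions_per_day: int,
                               cents_per_interaction: int,
                               days: int = 30) -> float:
    """Monthly spend in dollars; cents are kept as integers until the final division."""
    return interactions_per_day * cents_per_interaction * days / 100


# Hypothetical fleet-wide volume and unit cost.
monthly = monthly_inference_cost_usd(interactions_per_day=2_000_000,
                                     cents_per_interaction=2)
yearly = monthly * 12
```

With these inputs the fleet costs $1.2 million per month, or $14.4 million per year. At that volume, a saving of even a fraction of a cent per interaction changes the ROI picture.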

Vendor Dependency

Frontier is built by OpenAI. OpenAI will shape its evolution. If OpenAI's strategy shifts, Frontier's roadmap shifts with it.

For risk-averse enterprises, this is a concern.

Getting Started with Frontier

If you're interested in Frontier, here's the practical path:

Phase 1: Research and Planning (Weeks 1-2)

- Inventory your current AI deployments

- Map workflows that would benefit from agent orchestration

- Identify business unit leaders who champion agents

- Document your compliance and security requirements

Phase 2: Pilot Program (Weeks 3-8)

- Apply for early access to Frontier

- Pick one workflow as your pilot (start simple)

- Integrate Frontier with your core systems

- Deploy one or two agents

- Measure: time saved, quality improved, costs reduced

Phase 3: Proof of Concept (Weeks 9-16)

- Expand to 3-5 agents if pilot succeeds

- Test compliance and auditing thoroughly

- Run this through your compliance team

- Quantify ROI carefully

Phase 4: Production Rollout (Weeks 17+)

- Define rollout sequence

- Train team members

- Set up monitoring and alerting

- Deploy gradually, not all at once

Integration with Runable for Enhanced Automation

While Frontier handles agent orchestration and management, Runable complements it by providing AI-powered automation for creating the documentation, reports, and presentations that agents often need to generate.

Imagine this workflow: Your Frontier agents process data and generate insights. Then Runable automatically transforms those insights into polished reports, executive summaries, or presentation slides.

It's a natural pairing. Frontier orchestrates agent workflows. Runable automates content generation from agent outputs. Together, they create end-to-end automation for complex business processes.

Use Case: Your Frontier agents run weekly analysis. Runable automatically generates executive reports with charts, insights, and visualizations—no manual work required.


The Bottom Line

OpenAI Frontier addresses a real problem: fragmented AI agent deployments.

It won't solve everything. Hallucinations, cost management, and skill gaps remain. But for enterprises ready to go all-in on agents, Frontier provides the infrastructure to do it safely, consistently, and at scale.

The early adopters (Intuit, State Farm, Thermo Fisher, Uber) are betting it will become essential. Their success will determine whether Frontier becomes an industry standard or remains a nice-to-have.

If you're managing AI deployments at an enterprise, Frontier deserves serious evaluation. The problem it solves—orchestrating multiple agents across complex organizations—is genuinely important.

The only question is whether Frontier's solution is better than alternatives. That depends on your specific situation, existing infrastructure, and model preferences.

Start with a small pilot. Measure results. Then decide.

FAQ

What is OpenAI Frontier?

OpenAI Frontier is a unified platform for building, deploying, and managing AI agents. It provides shared context, permission boundaries, agent memory, and orchestration capabilities so that multiple agents from any source can work together effectively within enterprises. Think of it as HR infrastructure for your AI workforce.

How does Frontier handle agents from different sources?

Frontier is vendor-agnostic. You can deploy agents built by OpenAI, Anthropic, your own team, or any other source. Frontier connects them to shared business context, enforces permission boundaries, and enables cross-agent collaboration through a unified interface and API.

What are the main benefits of Frontier?

The primary benefits include reduced integration complexity (no more custom orchestration code), improved agent collaboration through shared context, enforced security and compliance through permission boundaries, and the ability to scale from a few agents to hundreds without fragmenting your operations. McKinsey research shows that unified systems reduce operational overhead by 25-40% compared to fragmented approaches.

How does Frontier compare to Microsoft's agent platform?

Microsoft's platform is deeply integrated with Microsoft 365 and works best if you're already invested in the Microsoft ecosystem. Frontier is platform-agnostic: you can use OpenAI models, Claude, custom models, or anything else. Choose Frontier if you want flexibility across multiple AI providers. Choose Microsoft if you're all-in on Microsoft 365.

What does Frontier cost?

OpenAI hasn't announced official pricing yet. Based on comparable enterprise solutions and the target market, expect tiered, enterprise-focused pricing.

Who is currently using Frontier?

Early adopters include Intuit, State Farm, Thermo Fisher, and Uber, with dozens more in beta. Broader availability is rolling out over the next few months as OpenAI expands from limited early access.

How long does it take to implement Frontier?

A basic implementation takes 2-4 weeks, but enterprise integrations with legacy systems typically require 8-12 weeks. The timeline depends on the complexity of your existing systems, the number of agents you're deploying, and your compliance requirements. Start with a single simple workflow to validate the approach before expanding.

Can Frontier eliminate hallucinations?

No. Frontier provides context and boundaries that reduce hallucinations, but underlying LLM models still hallucinate occasionally. You still need human review loops, guardrails, and monitoring. Frontier helps control the impact, but it's not a complete solution.

Is Frontier suitable for regulated industries?

Yes. Frontier was specifically designed with regulated industries in mind. It provides comprehensive audit logging, permission boundaries, data isolation, and compliance controls. Start with a single low-risk agent to prove compliance, then expand.

What happens if an agent goes wrong?

Frontier enforces permission boundaries, so agents can't exceed their scope. Every action is logged. You can review what the agent did, when, and why. For serious issues, you can immediately disable the agent, and all actions remain auditable. This is far better than agents operating without boundaries.

Related Trends and Future Outlook

Frontier is riding a wave of broader enterprise AI adoption. Here's what's changing:

Enterprise AI spending is accelerating. IDC projects that enterprise AI spending will reach $154 billion by 2026. Most of that is going toward operational AI—agents that actually run parts of the business.

Agent reliability is improving. As LLMs get better and techniques like retrieval-augmented generation (RAG) and fine-tuning mature, agents become more trustworthy.

Compliance frameworks are solidifying. Regulators are developing clearer guidelines for AI in business. Frontier's compliance-first approach aligns with where regulation is heading.

Skills gaps are widening. There aren't enough people who know how to manage agent deployments at scale. This creates opportunity for early platforms.

Frontier's success depends on whether these trends continue—and they likely will.

Key Takeaways

- Frontier solves a real problem: Fragmented AI agent deployments are costing enterprises millions in integration work and operational complexity.

- It's enterprise-focused: The price point, feature set, and early customers all signal this is for large organizations with serious AI commitments.

- Vendor flexibility matters: Frontier's support for agents from any source (not just OpenAI's) is a genuine competitive advantage.

- Compliance is built-in: For regulated industries, Frontier's permission boundaries and audit logging are non-negotiable capabilities.

- Timing is strategic: Agent management is becoming essential just as enterprises are scaling AI. Frontier arrives when demand is highest.

- Competition is real: Microsoft, Anthropic, and Google are all building similar capabilities. Frontier isn't unique, but it's well-positioned.

- Measurement matters: Before committing, run a pilot and measure ROI carefully. Agent management is valuable, but only if it delivers measurable results.

- Skills are the real bottleneck: Technology is easy. Training teams to manage agents effectively is the actual challenge.

Conclusion

OpenAI Frontier represents a meaningful step forward in enterprise AI operations. It's not revolutionary: agent orchestration is a logical evolution of what developers have been building ad hoc for years.

But it's the first time a major vendor is offering it as a coherent platform.

For enterprises with multiple AI agents, fragmented workflows, or complex compliance requirements, Frontier deserves serious evaluation. The problem it solves is real. The solution is solid. The timing is right.

The only question is execution. Can OpenAI maintain momentum? Will competitors catch up? Will enterprises actually shift to agent-centric operations?

If the answer to those questions is yes, Frontier could become as essential to enterprise AI as Kubernetes became to container orchestration.

Time will tell. But for early movers like Intuit, State Farm, and Uber, the bet is clear: unified agent management is worth building around.

The era of scattered point-solution AI deployments is ending. The era of orchestrated, managed agent fleets is beginning.

Frontier is OpenAI's move for that future. Whether it wins will depend on execution, not positioning.
