OpenAI's 2026 'Practical Adoption' Strategy: Closing the AI Gap

OpenAI just made a quiet but significant announcement. While the industry obsesses over frontier models and raw capability, the company's leadership is publicly pivoting toward something less glamorous but arguably more important: practical adoption.

CFO Sarah Friar laid it out plainly in a recent blog post titled "A business that scales with the value of intelligence." The message is clear. You can build the most powerful AI model ever created, but if nobody uses it in ways that matter, you've got an expensive research project, not a business.

This shift matters because it signals a maturation in how OpenAI thinks about its role. The company isn't abandoning frontier research, but it is acknowledging a fundamental reality: there's a massive gap between what AI can theoretically do and what people actually deploy in their workflows, their businesses, and their lives.

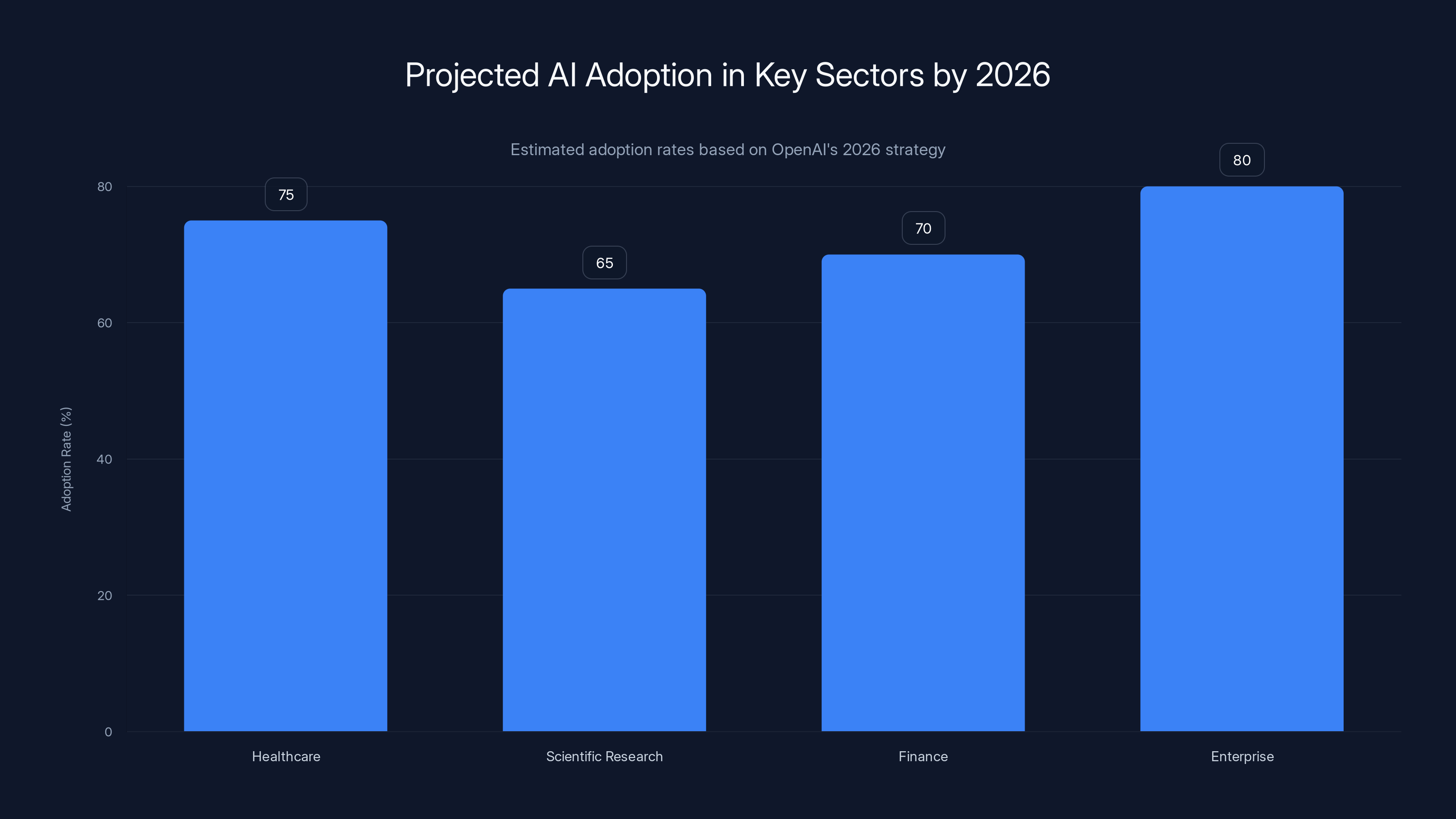

The question isn't whether AI is powerful anymore. It's whether that power translates into tangible value for healthcare systems, scientific researchers, financial institutions, and enterprises wrestling with legacy infrastructure and skeptical stakeholders.

OpenAI is betting that 2026 will be the year those doubts start dissolving. And they're backing that bet with infrastructure commitments that border on staggering. The company has committed roughly $1.4 trillion to data centers and compute capacity. That's not theoretical infrastructure. That's shovels in the ground, equipment orders locked in, power contracts negotiated years in advance.

Consider the economics for a second. When you commit $1.4 trillion to compute infrastructure, you're not doing that on a hunch. You're doing that because you believe demand will follow. You're betting that 2026 won't be another year of incremental feature releases and social media hype. You're betting it'll be the year that doctors start using AI to read scans faster and more accurately. That researchers start using it to accelerate drug discovery timelines. That enterprises stop running pilots and actually deploy AI into production.

That's the bet. And Friar's blog post is essentially a manifesto explaining why OpenAI thinks it'll pay off.

The Infrastructure Gamble That Underpins Everything

Let's ground this in reality. OpenAI's infrastructure commitments are extraordinary by any standard. As of late 2025, the company had lined up approximately $1.4 trillion in compute, data center, and related infrastructure commitments, spread over multiple years. To put that in context, the total is on the order of the entire annual GDP of a developed economy like Spain.

Why make that commitment? Because training frontier models and running inference at scale requires guaranteed access to compute capacity. You can't go to a data center on Tuesday and say, "Hey, I need 100,000 GPUs for the next three months." The supply chain doesn't work that way. You negotiate contracts years in advance. You reserve capacity. You make commitments.

OpenAI is doing exactly that. But here's what's interesting: they don't own all of it. In fact, Friar explicitly states that OpenAI keeps "the balance sheet light, partnering rather than owning." This is crucial. It means OpenAI is structuring deals where they commit to usage levels and pay for compute as they go, rather than building a proprietary data center empire.

Why? Flexibility. If you own the infrastructure, you're locked into specific hardware types, specific locations, specific power contracts. If you partner, you can diversify across multiple providers. You can add capacity from whoever's cost-effective in any given region. You can negotiate terms that include flexibility clauses.

Friar frames this as keeping "contracts with flexibility across providers and hardware types." That's code for: we're not betting the company on any single vendor or hardware generation. We're hedging. And we're structuring deals so that capital is "committed in tranches against real demand signals."

This is important infrastructure strategy, not flashy headline material. But it's how companies survive tech cycles. You build capacity incrementally as demand materializes. You don't over-commit to infrastructure that might become obsolete if a rival discovers a more efficient architecture.

The underlying logic is about preserving options. OpenAI is positioning itself to scale aggressively if practical adoption takes off. But if adoption stalls, they're not locked into massive fixed costs with nowhere to go.
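To make "capital committed in tranches against real demand signals" concrete, here is a toy sketch. It assumes a single utilization threshold as the demand signal and fixed-size tranches; the class name, threshold, tranche size, and demand figures are all hypothetical illustrations, not terms from OpenAI's actual contracts.

```python
from dataclasses import dataclass


@dataclass
class TranchePlan:
    """Toy model of committing capacity in tranches (hypothetical units and rules)."""
    tranche_size: float          # capacity added each time a tranche is released
    utilization_trigger: float   # assumed demand signal: release a tranche at or above this utilization
    committed: float             # capacity already committed (the first tranche)

    def __post_init__(self) -> None:
        if self.committed <= 0:
            raise ValueError("start with at least one committed tranche")

    def step(self, observed_demand: float) -> bool:
        """Release one more tranche only if demand is pressing on committed capacity.

        Utilization above 1.0 means usage is leading capacity; below the trigger,
        no new capital is committed and the plan simply waits.
        """
        utilization = observed_demand / self.committed
        if utilization >= self.utilization_trigger:
            self.committed += self.tranche_size
            return True
        return False


def simulate(demand_by_period: list[float], plan: TranchePlan) -> list[float]:
    """Return committed capacity after each period for a given demand path."""
    history = []
    for demand in demand_by_period:
        plan.step(demand)
        history.append(plan.committed)
    return history


# Adoption takes off: tranches keep being released as demand catches up.
print(simulate([9, 14, 19, 25], TranchePlan(10, 0.8, 10)))  # [20, 20, 30, 40]
# Adoption stalls: no further tranches are committed.
print(simulate([6, 6, 6, 6], TranchePlan(10, 0.8, 10)))     # [10, 10, 10, 10]
```

The point of the design is that when demand stalls, exposure stays capped at the tranches already committed rather than at the full planned build-out.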

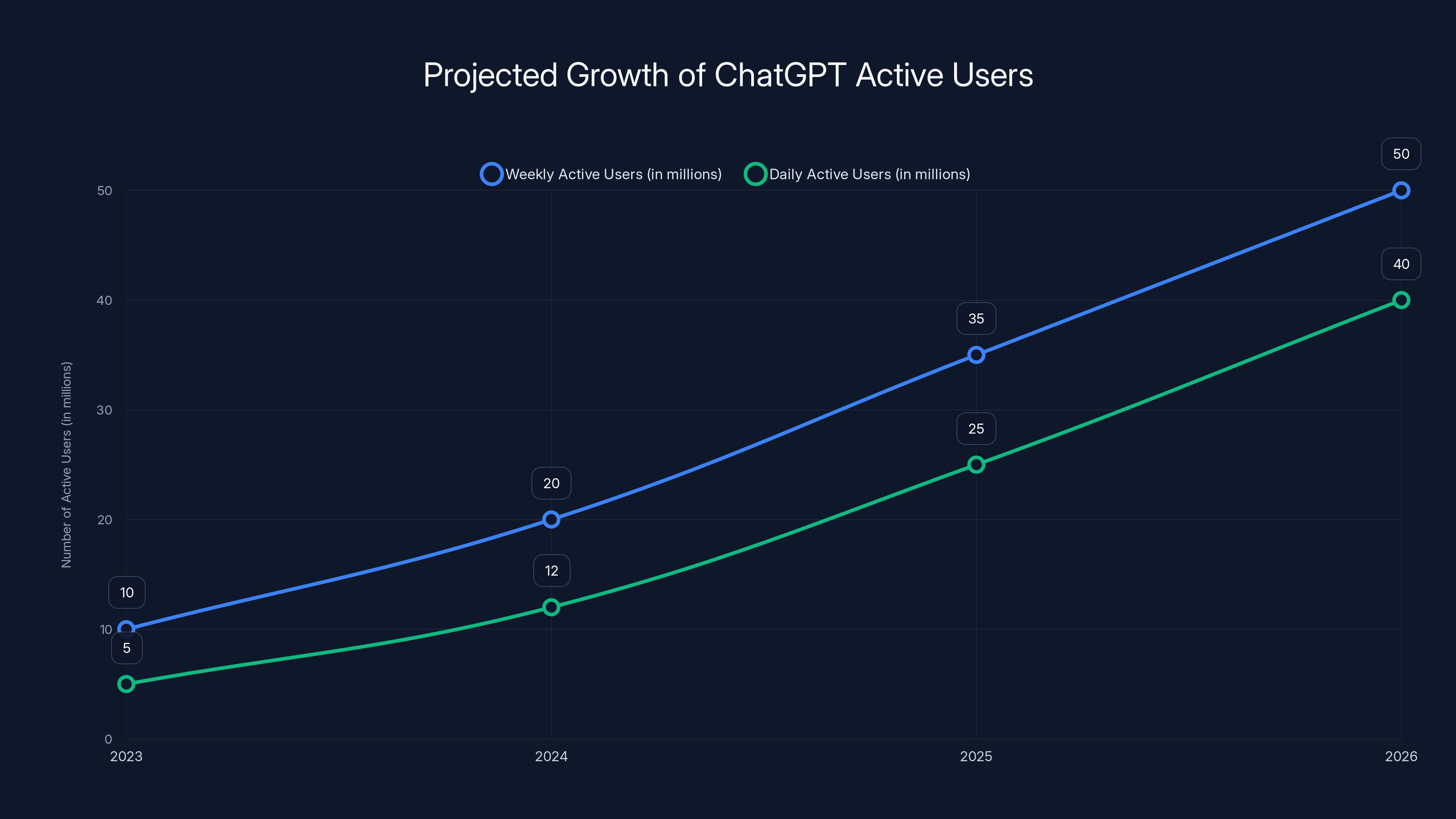

Estimated data suggests that both weekly and daily active users of ChatGPT will continue to grow significantly through 2026, driven by increased practical adoption and dependency-building.

What "Practical Adoption" Actually Means in OpenAI's Vocabulary

When Friar writes about "closing the gap" between what AI can do and how people use it, she's identifying a specific problem: capability-usage disconnect.

Right now, AI models can do extraordinary things. They can analyze medical imaging. They can propose new chemical compounds. They can generate complex code. They can model financial scenarios with nuance. They can write grant proposals. They can synthesize research across thousands of papers.

But adoption remains frustratingly slow in most sectors. Why? Because deploying AI in production is actually hard. It requires integrating with existing systems. It requires retraining staff. It requires regulatory compliance. It requires proving ROI in contexts where the metrics aren't obvious.

A hospital CEO doesn't wake up thinking, "Wouldn't it be cool if our radiologists used AI?" They think, "Can we get faster diagnoses while reducing malpractice liability? Will our staff support this? What's the actual dollar impact?"

A pharma researcher doesn't think, "AI is cool." They think, "Will this shorten our pipeline enough to matter, given how long regulatory review takes?"

An enterprise CTO doesn't think, "We should use this in a chatbot." They think, "Can this integrate with our data warehouse? Does it handle our data governance requirements? What's the security model?"

These aren't technical questions about AI. They're business questions. Practical adoption means solving those problems.

OpenAI is signaling that 2026 will focus on exactly those problems. Not just building more capable models (that work continues), but building products, partnerships, and integrations that make deployment actually possible.

Friar specifically mentions three sectors where "better intelligence translates directly into better outcomes": health, science, and enterprise. These are deliberately chosen. They're the sectors where AI adoption matters most, where ROI is measurable, where stakes are highest.

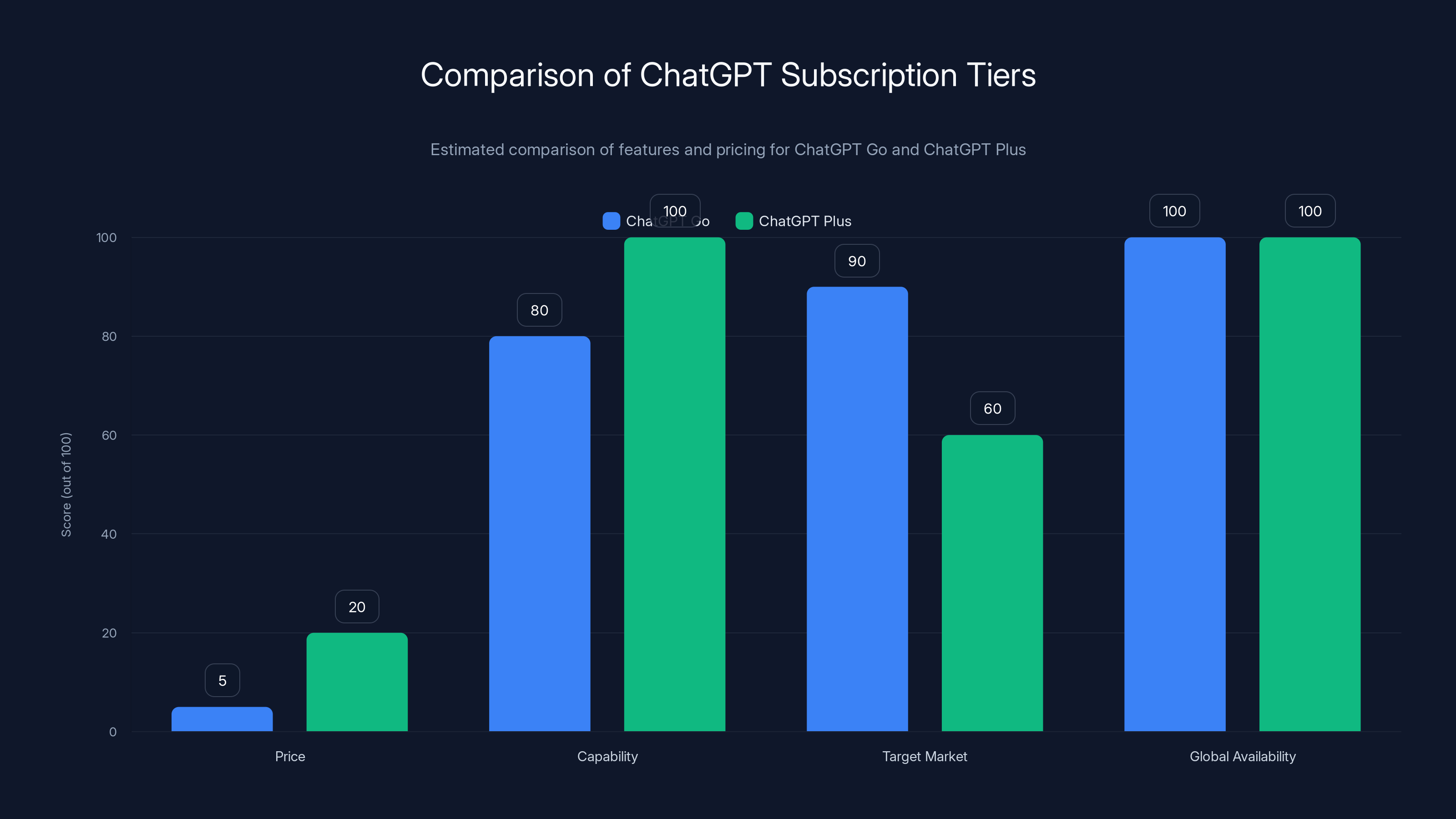

ChatGPT Go offers a lower price and slightly reduced capability compared to ChatGPT Plus, targeting a broader global market with practical adoption in mind. Estimated data.

The Shift in Business Models: From Subscription to Outcome-Based Pricing

Here's where things get really interesting. Friar drops a hint about how Open AI's business model will evolve: "As intelligence moves into scientific research, drug discovery, energy systems, and financial modeling, new economic models will emerge. Licensing, IP-based agreements, and outcome-based pricing will share in the value created."

Stop there. This is a fundamental shift from how OpenAI currently makes money.

Right now, OpenAI mostly operates on a usage-based model (pay per token) or a subscription model (ChatGPT Plus, ChatGPT Pro, business accounts). You pay for access or for consumption, whether or not it produces results.

But that model breaks down in certain contexts. A pharmaceutical company doesn't want to pay per API call for a drug discovery system. They want to pay based on how many viable drug candidates the system identifies. A financial institution doesn't want to pay per token for a risk modeling system. They want to pay based on how much risk the system helps them avoid.

These are outcome-based deals. They exist in enterprise software and services, but they're complex. You have to instrument everything. You have to track outcomes. You have to build customer relationships with transparency and aligned incentives.

But the payoff can be enormous. Outcome-based pricing aligns incentives: both parties win when the AI system drives better results. It also opens the door to partnerships and licensing that go beyond what OpenAI currently does.
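The difference between the two billing approaches is easiest to see side by side. The sketch below bills the same hypothetical period two ways. The per-token rate, base fee, per-outcome fee, and the notion of a "qualified outcome" (for example, a drug candidate that passes an agreed validation step) are illustrative assumptions, not OpenAI prices or contract terms.

```python
from dataclasses import dataclass


@dataclass
class PeriodMetrics:
    """Instrumented results for one billing period (all figures hypothetical)."""
    tokens_processed: int      # consumption, which usage-based billing tracks
    qualified_outcomes: int    # results meeting an agreed definition, which outcome-based billing tracks


def usage_based_bill(m: PeriodMetrics, usd_per_million_tokens: float) -> float:
    """Charge in proportion to consumption, regardless of what it produced."""
    return m.tokens_processed / 1_000_000 * usd_per_million_tokens


def outcome_based_bill(m: PeriodMetrics, base_fee_usd: float, usd_per_outcome: float) -> float:
    """Charge a small platform fee plus a fee for each measured outcome."""
    return base_fee_usd + m.qualified_outcomes * usd_per_outcome


# Same consumption in both quarters, very different results.
quiet_quarter = PeriodMetrics(tokens_processed=2_000_000_000, qualified_outcomes=0)
strong_quarter = PeriodMetrics(tokens_processed=2_000_000_000, qualified_outcomes=3)

for label, m in [("quiet", quiet_quarter), ("strong", strong_quarter)]:
    print(label, usage_based_bill(m, 5.0), outcome_based_bill(m, 2_000.0, 50_000.0))
# quiet 10000.0 2000.0
# strong 10000.0 152000.0
```

The usage-based bill is identical in both quarters, while the outcome-based bill moves with results. That alignment only works if `qualified_outcomes` is measured in a way both parties trust, which is why instrumentation is the hard part of these deals.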

Friar draws an analogy to how the internet evolved. Early consumer internet access was sold mostly as subscriptions (AOL, early ISPs). Over time, the economy built on top of it layered in other models: usage-based pricing (CDNs billing for bandwidth, cloud providers billing for computation), licensing, and infrastructure partnerships. Intelligence, Friar argues, will follow the same trajectory.

This isn't just philosophy. It's a roadmap. OpenAI is telegraphing that they'll start moving enterprise customers toward outcome-based pricing models. That means deeper integration with customer businesses and more complex contracts. It likely means more revenue per deal, but fewer total deals.

It's a maturation strategy: from selling commodity tokens to selling intelligence outcomes to enterprises.

Weekly and Daily Active Users: The Flywheel That's Actually Working

But none of this infrastructure and strategy matters if users aren't coming. So let's look at the growth signal Friar does highlight: user activity.

Friar states that ChatGPT's weekly and daily active user metrics "continue to produce all-time highs." She doesn't provide specific numbers in the blog post, which is notable. But the pattern is consistent with figures OpenAI has shared elsewhere, such as the 800 million weekly active users it announced in October 2025.

ChatGPT has sustained user growth even as the novelty has worn off. That's actually remarkable. Most applications spike at launch, then see flat or declining usage as the initial excitement fades. ChatGPT is doing the opposite: users keep increasing even after the initial hype cycle.

Why? Because people are actually finding uses for it. Not just playing with it. Actually using it in their workflows.

Friar describes this as a "flywheel" driven by four components: compute, frontier research, products, and monetization. Let's unpack that.

Compute is the raw infrastructure we discussed. The $1.4 trillion commitment.

Frontier research is the modeling work. The papers. The new architectures and training approaches. The fundamental breakthroughs that improve capability.

Products are the applications built on top of frontier research: ChatGPT itself, the API, ChatGPT for business, custom GPTs. All the interfaces that humans interact with.

Monetization is converting product usage into revenue.

The flywheel works like this: better frontier research improves capability. Better capability enables better products. Better products drive more user adoption. More usage generates more revenue, which funds more compute and frontier research.

It's elegant. And Friar's arguing that 2026 is when this flywheel actually accelerates. Not because of some technological breakthrough, but because practical adoption finally tips the scale.

Users aren't novelty-seeking anymore. They're building dependency, integrating AI into their daily workflows and getting real value from it. And when that happens, growth tends to compound.

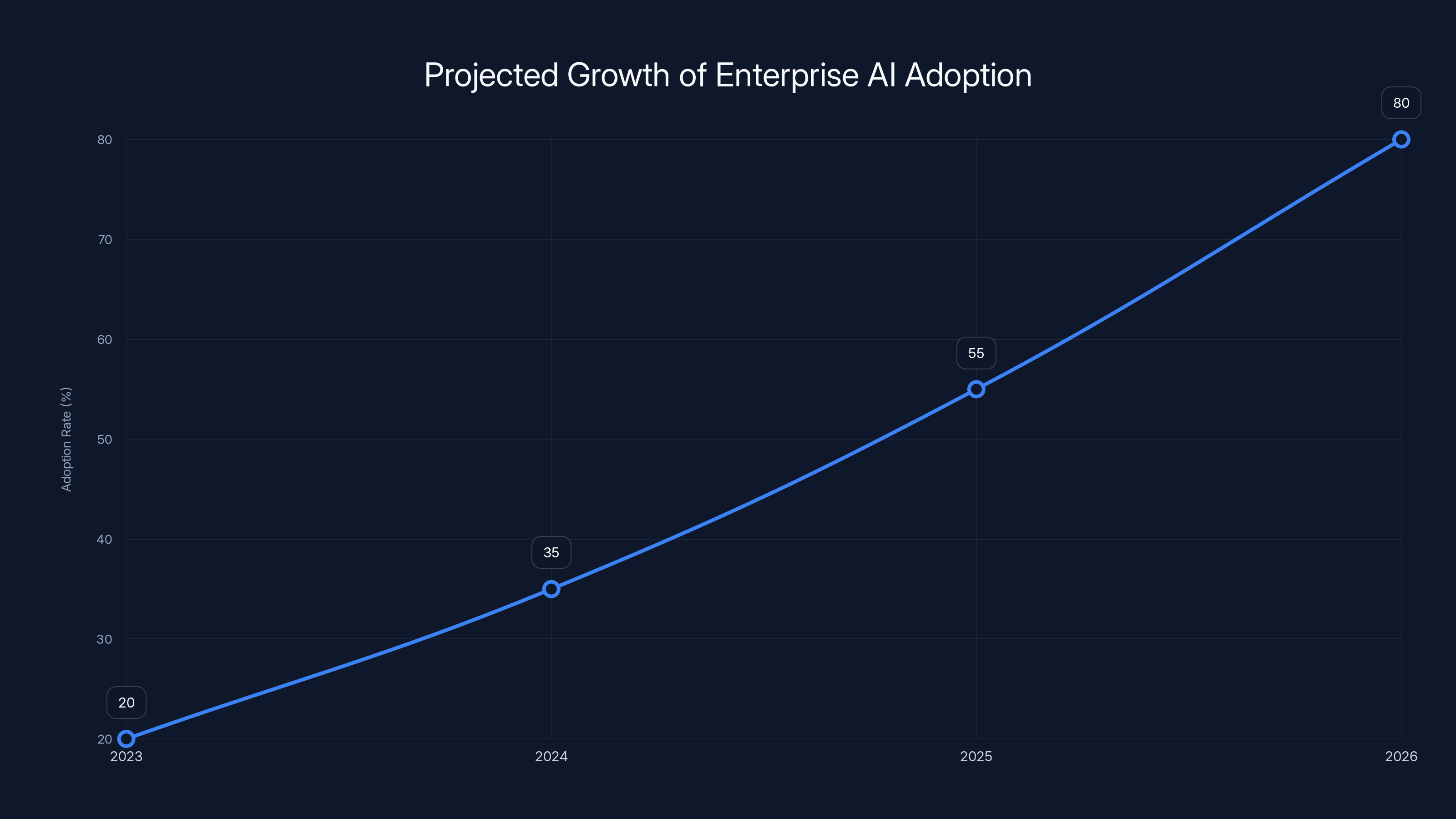

Enterprise AI adoption is projected to grow significantly, reaching 80% by 2026 as enterprises move from pilots to full-scale deployments. Estimated data.

The ChatGPT Go Launch: Democratizing Access as a Practical Adoption Play

OpenAI recently announced ChatGPT Go, a subscription tier priced below ChatGPT Plus. In context, that's significant.

On the surface, it looks like a pricing move. Capture more users at a lower price point. Classic SaaS playbook.

But in the context of the "practical adoption" strategy, it's different. ChatGPT Go is explicitly targeted at people and organizations that want AI capability without paying for a premium service. It's a deliberate narrowing of the capability-price gap.

This matters because practical adoption often stalls on price. Picture a small business owner who wants to use AI to draft customer emails. She may not be willing to pay premium-tier prices for that.

ChatGPT Go captures that market. It's an adoption strategy packaged as a product.

The same logic applies to availability. Friar notes that ChatGPT Go launched globally. That's deliberate. Practical adoption is multinational: you don't build for a single market, you build infrastructure and products for global deployment.

Advertising: The Hidden Revenue Stream

OpenAI also announced that advertising is coming to the platform. Friar doesn't elaborate in the blog post, but this is worth examining.

Adding ads is controversial in AI because it changes the user experience. People who've grown accustomed to ad-free ChatGPT won't appreciate banner ads or sponsored content.

But advertising is also a massive revenue lever. Think about it: if you have 100 million weekly active users, even modest CPM rates (cost per thousand impressions) generate substantial revenue. Google makes $200+ billion per year in advertising revenue. If OpenAI captures even a fraction of that, it's transformative.

Advertising also enables a freemium model at scale. Free users see ads. Premium users don't. This dramatically lowers the barrier to entry for practical adoption. More people can access the product at no cost. Some portion converts to premium.
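The CPM arithmetic behind that argument is simple: revenue equals impressions divided by 1,000, times the CPM. The sketch below runs it for the 100 million weekly active users used above. The share of users on the ad-supported free tier, the impressions per user, and the CPM itself are hypothetical placeholders, not OpenAI figures.

```python
def annual_ad_revenue_usd(
    weekly_active_users: int,
    ad_supported_share: float,             # fraction of users on the free, ad-supported tier (hypothetical)
    impressions_per_user_per_week: float,  # hypothetical
    cpm_usd: float,                        # revenue per 1,000 impressions (hypothetical)
    weeks_per_year: int = 52,
) -> float:
    """Estimate yearly ad revenue using the standard CPM formula."""
    ad_users = weekly_active_users * ad_supported_share
    weekly_impressions = ad_users * impressions_per_user_per_week
    weekly_revenue = weekly_impressions / 1_000 * cpm_usd
    return weekly_revenue * weeks_per_year


# 100M weekly users, 90% on the free tier, 10 impressions each per week, $5 CPM.
estimate = annual_ad_revenue_usd(100_000_000, 0.9, 10, 5.0)
print(f"${estimate:,.0f} per year")  # $234,000,000 per year
```

Even under these modest placeholder assumptions the figure lands in the hundreds of millions per year, and it scales linearly with every input. Raising the paid share shrinks ad inventory but adds subscription revenue, which is the freemium trade-off in a single parameter.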

The broader direction is diversification: moving from pure usage-based pricing toward a revenue model that includes subscription, usage-based, licensing, and advertising revenue.

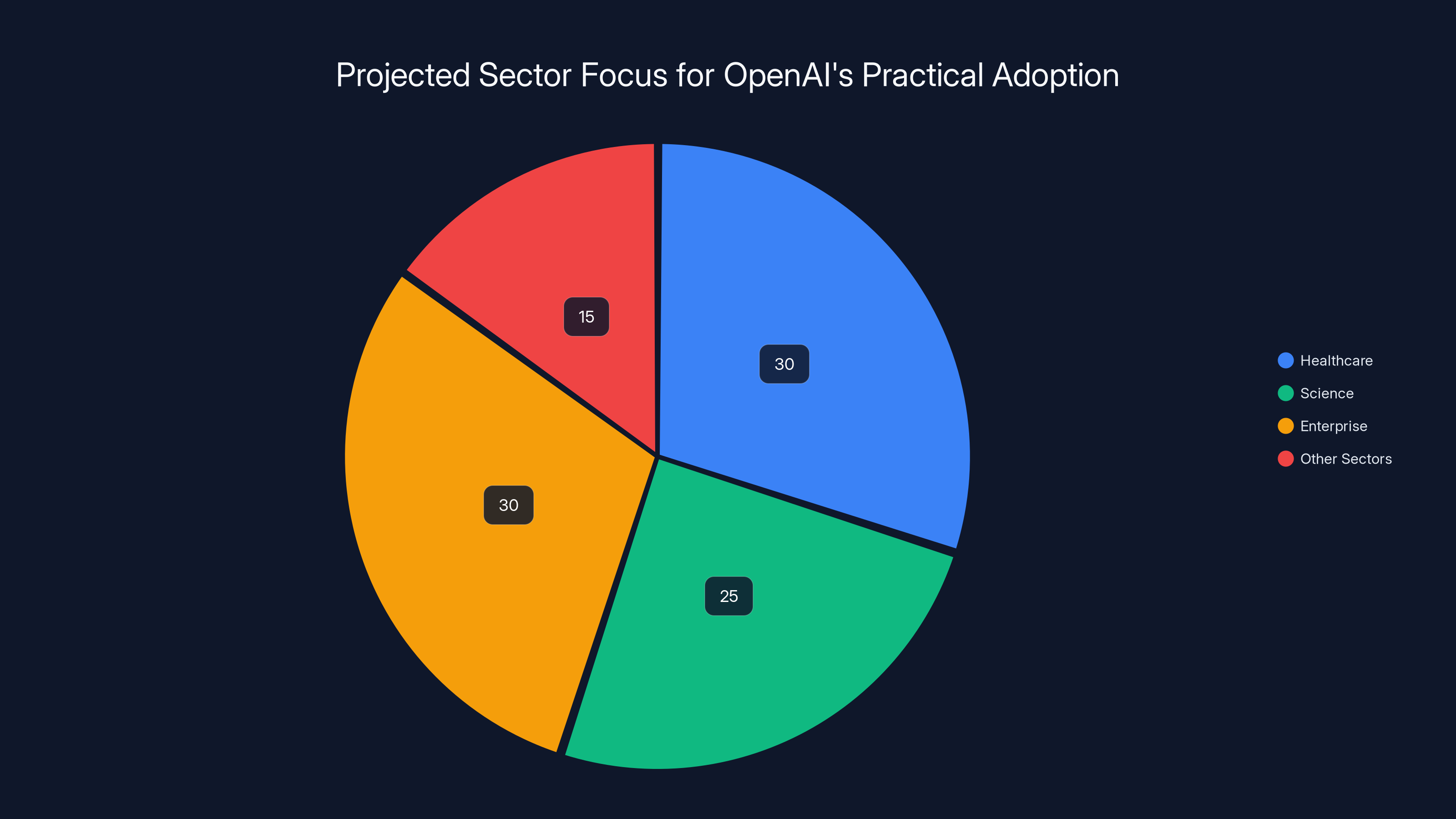

OpenAI is estimated to focus 30% on healthcare, 25% on science, and 30% on enterprise sectors for practical adoption, with 15% on other sectors. Estimated data based on strategic priorities.

The Jony Ive Hardware Partnership: Practical Adoption in Physical Form

Friar mentions that OpenAI is building hardware devices in partnership with design legend Jony Ive, with prototypes potentially shown in late 2026.

This is where "practical adoption" gets concrete. Hardware is the ultimate adoption enabler. Because it's not about whether you'll use AI "someday." It's about whether AI is present in your day-to-day environment, ready to use, integrated into your workflow.

Imagine a device that sits on your desk. You can voice-interact with it. It understands context about your work and your industry. It integrates with your calendar, your documents, your communications. It proactively surfaces insights relevant to what you're doing.

That's not science fiction. That's what smart hardware in partnership with frontier AI could actually be.

Jony Ive's involvement is the signal that matters. Ive has spent decades optimizing for human-centered design. When he designs a product around AI, he's not building a processor showcase. He's building something that's actually pleasant to use.

Hardware is also a practical adoption moat. It's much harder to compete in hardware than in software. Apple's lock-in comes partly from iOS being good software, but arguably more from the iPhone being a physical device that's hard to replicate. If OpenAI ships a hardware device that integrates elegantly with their AI, that's a massive advantage.

Health and Science: Where Practical Adoption Actually Saves Lives

Friar specifically calls out health and science as priority sectors for practical adoption. This is deliberate.

In health, AI has concrete, measurable value. A radiologist can review imaging meaningfully faster with AI assistance. A pathologist can prioritize slide analysis based on AI flagging suspicious areas. A clinician can access research summaries on drug interactions for a specific patient profile in seconds instead of hours.

These aren't incremental improvements. They're practice-transforming.

Similarly in science. A researcher can run thousands of simulations exploring molecular structures. A team can accelerate literature reviews by months. A lab can identify promising research directions that would take human researchers weeks to spot.

But there's a catch. Health and science adoption requires more than capability. It requires validation. Clinical trials for AI in medical imaging. Regulatory approval. Published research establishing efficacy. Integration with hospital IT systems. Training for staff.

OpenAI is betting that 2026 is when they'll start closing that gap, less through product releases than through partnerships. Expect announcements with hospital systems. Expect collaborations with research institutions. Expect published outcomes demonstrating AI's value.

This is what practical adoption looks like in high-stakes sectors. It's slower than consumer adoption. It's more complex. But it's more valuable and more sustainable.

OpenAI's $1.4 trillion investment in infrastructure is expected to significantly boost AI adoption across various sectors by 2026. Estimated data.

Enterprise Deployment: The Real Revenue Driver

Friar names "enterprise" as a key vertical where better intelligence drives better outcomes. This is arguably OpenAI's most important market.

Enterprise adoption is different from consumer adoption. A consumer uses ChatGPT because it's fun or convenient. An enterprise buys AI solutions because they drive measurable business value: faster time-to-market, reduced operational costs, improved decision-making, revenue uplift.

Enterprise also means contracts, support, integration, customization. It means higher contract values but more sales complexity. It means longer sales cycles but higher customer lifetime value.

OpenAI has been gradually building enterprise infrastructure. API prices have come down. They've released enterprise-grade models, added security and compliance features, and launched dedicated support.

But there's still a gap between enterprise customers running pilots and enterprise customers deploying AI at scale. That gap is what "practical adoption" in the enterprise context means.

Expect to see 2026 announcements featuring enterprise customers who've deployed OpenAI technology broadly. Not proof-of-concept pilots, but actual production deployments across multiple teams, handling real business processes.

That's when the flywheel accelerates. When enterprise customers prove ROI publicly, other enterprises start asking, "Why aren't we doing this?"

The Capacity vs. Usage Dynamics

Friar notes something interesting about the infrastructure strategy: "At times, capacity leads usage. At other times, usage leads capacity."

This is the infrastructure version of supply and demand dynamics. Sometimes you build capacity in anticipation of demand. Sometimes demand spikes and you scramble to add capacity.

OpenAI's $1.4 trillion commitment is a "capacity leads usage" move. They're building infrastructure in anticipation of accelerating adoption. It's a bullish bet.

But it's also a risk. If practical adoption doesn't materialize the way OpenAI expects, they're stuck with expensive infrastructure they don't have usage for. That's why they're structuring deals with flexibility rather than building owned data centers.

The strategy is: build capacity aggressively, but structure it so you're not locked in if adoption stalls. Classic risk management for a company betting on an uncertain outcome.

Monetization Models of the Future

Friar paints a picture of how OpenAI's monetization will evolve. Beyond the current usage-based and subscription models, she describes three emerging models:

Licensing agreements are about OpenAI providing access to their models or technology for a flat fee or revenue share. Think of a hospital licensing OpenAI's imaging analysis technology, or a pharmaceutical company licensing OpenAI's molecular modeling capability.

IP-based agreements are about OpenAI providing technology or know-how that customers build on. This might mean providing training data, algorithms, or architectural innovations that customers integrate into their own products.

Outcome-based pricing is about aligning payment with results. A customer pays based on the business outcome AI generates. Better results, higher payment. Weaker results, lower payment.

Each model suits different customer segments and use cases. Together, they diversify OpenAI's revenue sources, so the company isn't dependent on any single monetization lever.

This also helps explain why the infrastructure commitment is so massive. If OpenAI expects to offer licensing, IP partnerships, and outcome-based deals at scale, they need capacity to serve all those customers simultaneously.

Balancing Growth and Discipline

Friar keeps emphasizing "discipline" in managing growth. This is revealing. It suggests OpenAI has learned lessons from other fast-growing tech companies that overextended and collapsed.

Specifically, she notes that OpenAI is "structuring contracts with flexibility across providers and hardware types" and committing "capital in tranches against real demand signals."

This is not a company trying to dominate through sheer scale and winner-take-all dynamics. This is a company trying to scale sustainably. Scaling capital-efficiently. Scaling in ways that don't create hidden liabilities.

This matters psychologically because it signals confidence without recklessness. OpenAI isn't panicking. They're not desperate to prove something. They're executing a long-term strategy with measured risk.

That's actually reassuring to enterprise customers considering adoption. If you're betting your critical processes on OpenAI's technology, you want to know the company isn't going to collapse under the weight of its own capital commitments.

The Path Forward: 2026 and Beyond

Pull back and look at the big picture. OpenAI is making a strategic shift from "frontier research and capability" to "frontier research and practical adoption."

It's not either-or. OpenAI will continue pushing the boundary of what's possible. New models will be more capable. New techniques will emerge. But success in 2026 will be measured by adoption, not by capability alone.

This shift makes sense. The industry has spent three years proving that large language models are powerful. That's established. The next frontier is proving that this power translates into value for real institutions solving real problems.

OpenAI is positioning itself to lead that shift. They have the technology. They have the infrastructure commitment. They have the partnerships (Microsoft, enterprise customers, healthcare systems, research institutions). They have the capital.

The question is whether they can execute. Whether they can take internal research and convert it into products enterprises actually deploy. Whether they can build hardware that drives adoption. Whether they can navigate the regulatory landscape and build trust with institutions like hospitals and pharma companies.

Those are execution questions. And execution is always harder than strategy.

But if they pull it off, 2026 becomes the year AI stopped being theoretical and started being business-critical infrastructure. That's the practical adoption play.

FAQ

What does "practical adoption" mean in OpenAI's 2026 strategy?

Practical adoption refers to closing the gap between AI's technical capabilities and its real-world implementation in enterprises, healthcare, science, and other sectors. Rather than focusing solely on building more powerful models, OpenAI is shifting its strategy toward AI technology that actually integrates into existing workflows, generates measurable business outcomes, and solves tangible problems for organizations at scale.

Why is OpenAI committing $1.4 trillion to infrastructure if adoption rates are uncertain?

OpenAI's infrastructure commitment is a bullish bet that practical adoption will accelerate in 2026 and beyond. The company has structured these commitments carefully through partnerships rather than ownership, building in flexibility across providers and hardware types. This allows them to scale aggressively if adoption materializes while avoiding massive fixed costs if usage doesn't meet expectations. The infrastructure supports both compute capacity and the ability to serve enterprise customers with outcome-based or licensing arrangements.

How will OpenAI's business model change with practical adoption?

OpenAI is shifting from primarily usage-based and subscription pricing toward a diversified model including licensing agreements, IP-based partnerships, outcome-based pricing where companies pay based on AI-generated results, and advertising revenue. This evolution mirrors how the internet evolved from subscription access to multiple monetization models, allowing OpenAI to capture value across different customer segments and use cases.

What sectors is OpenAI prioritizing for practical adoption?

OpenAI specifically highlighted health, science, and enterprise as sectors where better intelligence translates directly to better outcomes. In healthcare, AI can improve diagnostic speed and accuracy. In scientific research, it can accelerate drug discovery and literature analysis. In enterprise, it can improve decision-making, reduce operational costs, and increase efficiency. These sectors have measurable ROI and high-stakes outcomes that justify the implementation complexity.

How does ChatGPT Go support the practical adoption strategy?

ChatGPT Go is a lower-priced subscription tier that lowers the price barrier to AI adoption for small businesses, freelancers, and individuals who don't need the higher usage limits and extra features of premium tiers. By launching it globally, OpenAI broadens access and grows weekly and daily active users, feeding the flywheel that practical adoption depends on. It positions AI as an accessible tool rather than a premium service.

What role will hardware play in OpenAI's practical adoption efforts?

OpenAI's partnership with Jony Ive to develop hardware devices represents a move to make AI adoption more seamless and integrated into daily workflows. Physical devices with voice interaction, context awareness, and integration with calendars and documents can drive adoption more effectively than software alone. Hardware also creates a stronger competitive moat and deeper customer lock-in, making it a strategic enabler for long-term practical adoption.

Key Takeaways

- OpenAI's 2026 strategy focuses on 'practical adoption,' closing the gap between AI capability and real-world implementation across healthcare, science, and enterprise sectors

- The company has committed approximately $1.4 trillion to infrastructure, strategically structured through partnerships to maintain flexibility across providers rather than vertical integration

- ChatGPT continues achieving all-time-high weekly and daily active user metrics through a 'flywheel' driven by compute, frontier research, product development, and monetization

- OpenAI is diversifying revenue models beyond usage-based and subscription pricing toward licensing, IP-based agreements, and outcome-based pricing aligned with customer business results

- Strategic initiatives including ChatGPT Go, advertising integration, and Jony Ive hardware partnership represent practical adoption enablers for different market segments

- Infrastructure strategy emphasizes discipline and flexibility, committing capital in tranches against real demand signals rather than betting the company on uncertain adoption
