OpenAI Staff Exodus: Why Senior Researchers Are Leaving in 2025

Introduction: The Transformation of OpenAI's Research Culture

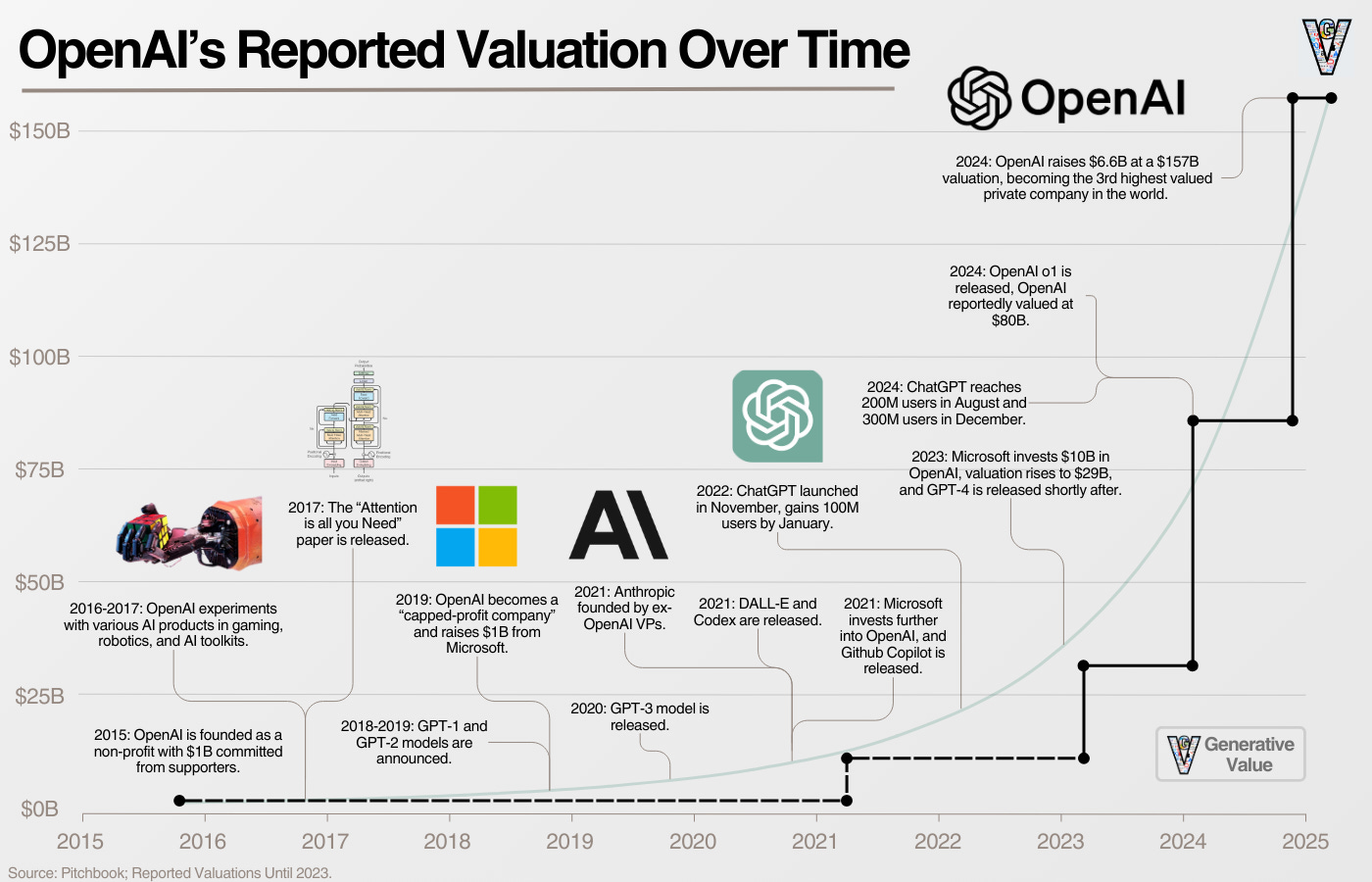

OpenAI, once celebrated as a beacon of open-source artificial intelligence research and long-term scientific inquiry, is experiencing a significant transformation. The $500 billion company is undergoing a fundamental strategic pivot that has prompted the departure of some of its most accomplished researchers and thinkers. This shift, from prioritizing experimental, foundational research to focusing resources almost exclusively on improving ChatGPT, represents a watershed moment in the company's evolution and raises critical questions about the future trajectory of AI development.

The departures are not merely personnel changes within a large technology company. They signal a deeper philosophical recalibration of what OpenAI values, which problems it wants to solve, and how it allocates the extraordinary computational and financial resources at its disposal. When an organization as influential as OpenAI decides where to invest billions in research and development, those choices ripple throughout the entire artificial intelligence ecosystem. They influence which research directions get funding, which talent gets developed, and ultimately which technological breakthroughs will or won't happen in the coming years.

The company's founding vision emphasized responsible development of artificial general intelligence, paired with rigorous, exploratory research that wasn't necessarily tied to immediate commercial applications. That ethos, in which researchers could pursue "blue-sky" questions about how AI systems work, how they learn, and how they might be improved, appears to be giving way to a more focused, product-driven approach. The company has begun redirecting computational resources, personnel, and executive attention toward a single objective: making ChatGPT better, faster, and more capable than competing offerings from Google, Anthropic, and other well-funded rivals.

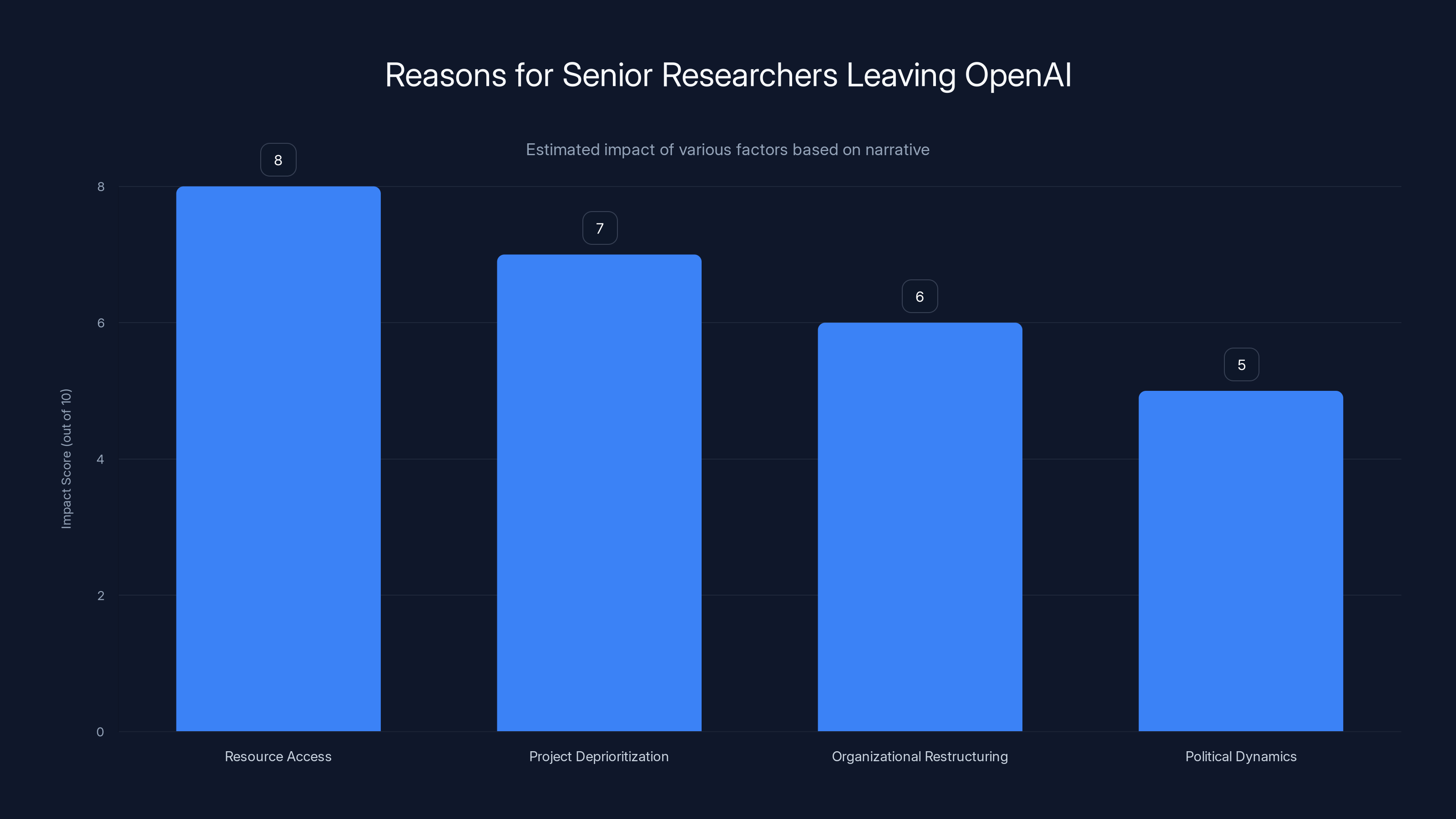

This transformation has concrete consequences. Multiple senior researchers have decided to leave the organization over recent months, citing frustration with the new resource allocation dynamics, the reduced opportunity to pursue independent research directions, and what some describe as an increasingly political environment for those not working on core language models. Understanding why these departures are happening, what they reveal about strategic priorities in AI development, and what they might portend for the future of AI research requires careful examination of the forces driving this shift.

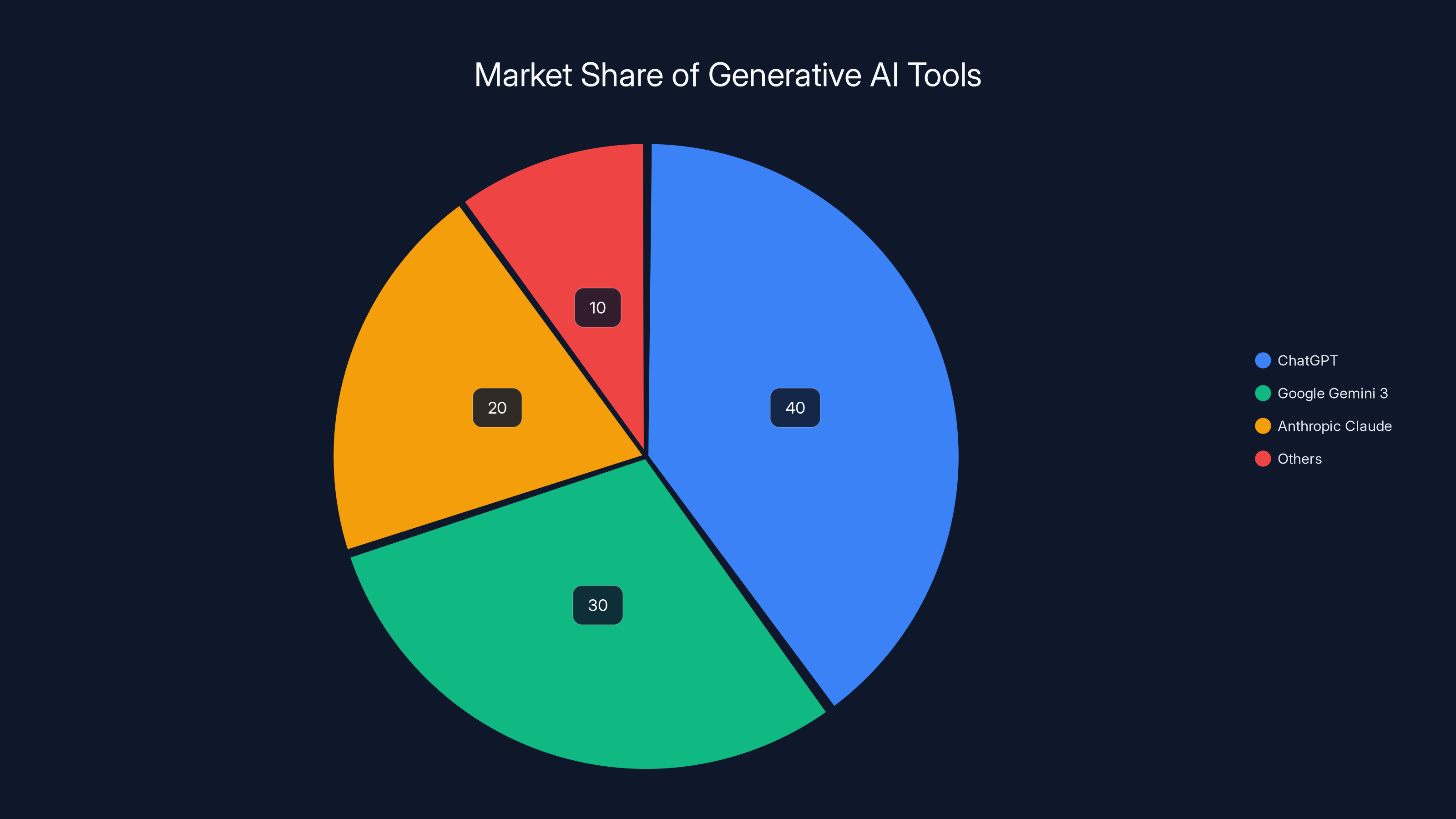

ChatGPT holds an estimated 40% share of the generative AI market, ahead of competitors such as Google Gemini 3 and Anthropic Claude. Estimated data based on user adoption and performance benchmarks.

The Strategic Pivot: From Research Lab to Product Company

Chat GPT as the Defining Priority

OpenAI's transformation from research laboratory to product-driven company crystallized around a single application: ChatGPT. Launched as a research preview in late 2022, ChatGPT unexpectedly ignited the generative AI boom. It reached 800 million users and became arguably the fastest-adopted consumer application in technology history. This success created an unprecedented opportunity for OpenAI, but it also created an inescapable pressure: the company had to prove to investors that it could generate enough revenue to justify the astronomical valuations placed on it.

When Sam Altman declared a "code red" in December over improving ChatGPT, he was responding to a specific competitive threat. Google had released Gemini 3, which outperformed OpenAI's models on several independent benchmarks, and Anthropic's Claude was making significant strides in code generation. In a field where technological superiority can rapidly translate into market advantage, falling behind on benchmarks, even temporarily, threatens a company's ability to hold its market position and attract top talent.

The strategic logic is straightforward: resources are finite, even for a company as well capitalized as OpenAI. The computational infrastructure required to train state-of-the-art large language models costs billions of dollars. The engineering talent needed to optimize, scale, and reliably deploy these models is scarce. When leadership decides that maintaining leadership in language models is the paramount priority, that decision necessarily reduces the resources available for other research directions.

The Engineering Problem Framing

OpenAI's internal perspective on this shift reflects a particular view of where AI development stands and where the highest-value innovations lie. According to people familiar with the company's research strategy, OpenAI increasingly treats language model development as primarily an engineering problem rather than a research problem. This framing assumes that the fundamental insights into how large language models work have been established. What remains is optimization: scaling up compute, refining algorithms, acquiring better training data, and engineering these systems for real-world deployment.

This perspective has profound implications for resource allocation. If language model advancement is fundamentally about engineering and scaling, then the researchers most valuable to the organization are those who can contribute to these optimization efforts. Experimental research aimed at understanding novel properties of AI systems, exploring alternative architectures, or investigating how learning actually occurs in neural networks becomes less urgent. Research that doesn't directly support ChatGPT development gets characterized as interesting but not critical to the company's core mission.

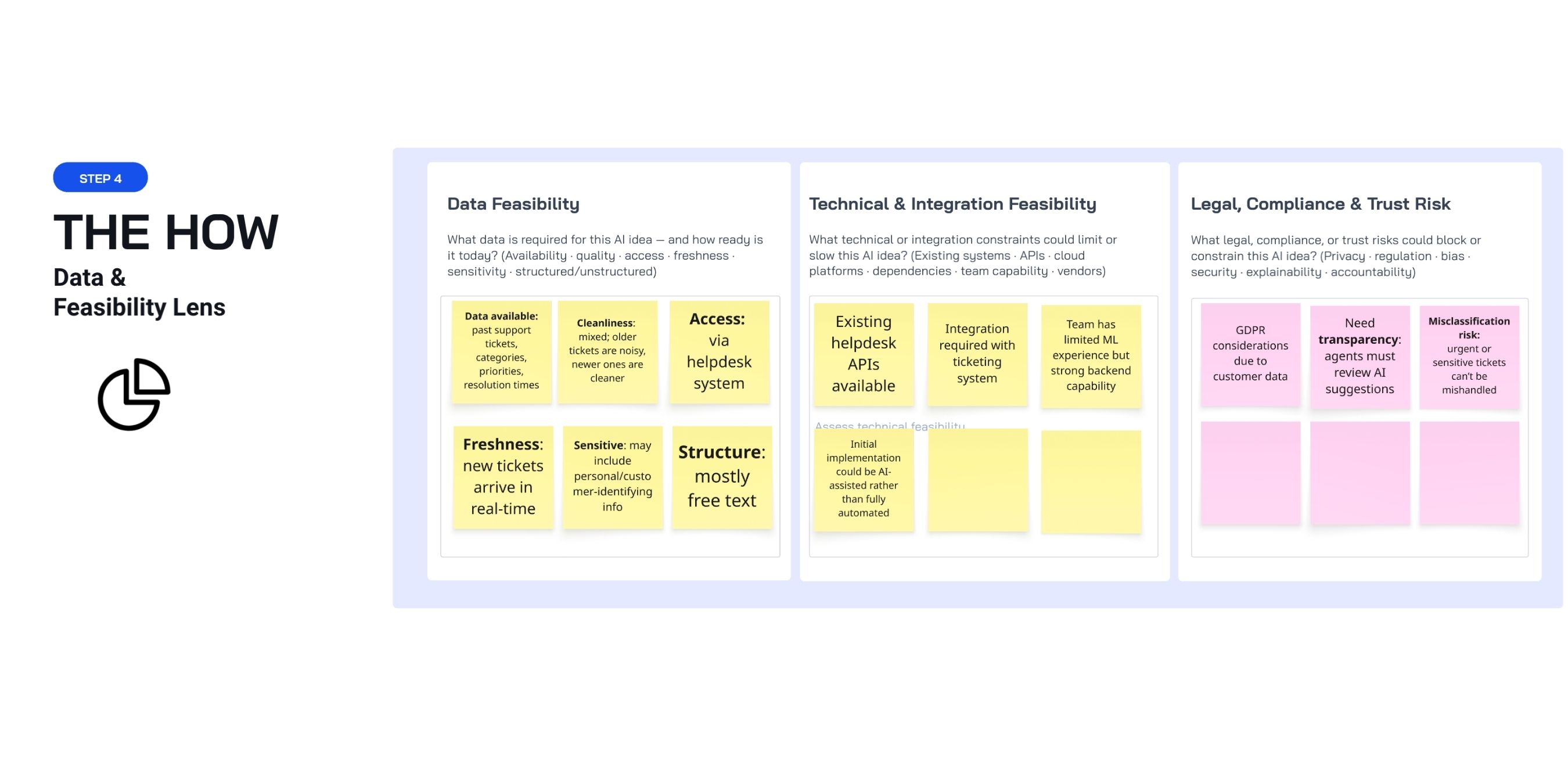

Resource access and project deprioritization are the most significant factors driving senior researchers to leave OpenAI. (Estimated data)

Key Departures: Understanding Who Left and Why

Jerry Tworek: The Reasoning Researcher

Jerry Tworek served as Vice President of Research at OpenAI, leading the company's efforts to develop more sophisticated reasoning capabilities in AI models. After seven years at the company, Tworek departed in January, publicly stating that he wanted to explore "types of research that are hard to do at OpenAI." Specifically, Tworek wanted to focus on continuous learning: the ability of AI models to learn from new data over time while preserving previously acquired knowledge. This capability represents a different frontier in AI research from the static, pre-trained models that power ChatGPT.

Tworek's departure wasn't simply a matter of career opportunism or a desire to explore new pastures. According to people close to him, Tworek had repeatedly requested additional resources for his research agenda: more computing power, more engineering support, and more personnel dedicated to his team. Leadership rejected these requests. The situation escalated into a significant dispute with Jakub Pachocki, OpenAI's Chief Scientist. Pachocki disagreed both with the specific scientific approach Tworek was pursuing and with Tworek's view that OpenAI's existing foundation of large language models might not be the most promising path forward.

This conflict illuminates a crucial dynamic: when an organization commits to a particular technological path, researchers pursuing different approaches inevitably find themselves at odds with leadership. Pachocki held that LLM-based architectures represent the most promising direction and should receive the vast majority of resources. That position necessarily means that alternative approaches receive minimal support. Tworek concluded that pursuing his research agenda was incompatible with remaining at OpenAI under these constraints.

Andrea Vallone: The Model Policy Challenge

Andrea Vallone led OpenAI's model policy research team, focusing on crucial questions about how AI systems are used in society, how they affect users, and what safeguards are necessary. In early 2025, Vallone joined Anthropic, a rival AI safety and research company. Her move represents both a talent loss and the symbolic departure of someone focused on research beyond pure product optimization.

According to people familiar with the circumstances of her departure, Vallone had effectively been given an impossible mission: protecting the mental health of users who were becoming emotionally attached to ChatGPT. This task encapsulates the challenge of maintaining robust research and policy work within a product-driven organization. The mental health implications of increasingly sophisticated AI companions represent a genuinely important research question. Even so, framing it as Vallone's responsibility to "solve" suggests a mismatch between the complexity of the problem and the organizational support available to address it.

Vallone's move to Anthropic signals that the talent market for AI researchers focused on safety, alignment, and societal implications remains strong. Anthropic, founded explicitly to prioritize AI safety alongside capability development, offers an environment where policy research is not subordinate to product development but integrated as a core organizational value.

Tom Cunningham: The Economics Perspective

Tom Cunningham, who led OpenAI's economic research team, departed last year with a significant public statement: he suggested that OpenAI was increasingly prioritizing research that promoted the company's commercial interests over impartial scientific inquiry. His departure and public comments raise important questions about whether an organization can maintain truly independent research capability once it depends on those findings being commercially advantageous.

Economic research questions are important and technically challenging. Examples include how AI affects labor markets, how it should be regulated, and what distributional consequences different policy approaches would have. These questions are also politically sensitive and commercially significant. When an organization becomes dominant in a space, sustaining research that might suggest it should be regulated differently, or that its products have negative externalities, becomes organizationally difficult. Cunningham apparently concluded that genuinely independent research was incompatible with his position at OpenAI.

The Resource Allocation Crisis: Computing Credits and Project Viability

Computing Credits as Power Currency

At OpenAI, as at most large AI research organizations, access to computing infrastructure is the ultimate constraint. Running experiments with large language models requires enormous computational resources, often costing tens or hundreds of thousands of dollars per experiment. The company allocates access through a system of "computing credits," which researchers must request from top executives. This creates a gate through which leadership can control which research directions proceed and which are starved of resources.

Multiple researchers who spoke about the situation reported that, in recent months, computing-credit requests from researchers not working on core language model capabilities faced increasing skepticism. Requests were either denied outright or approved in amounts too small to adequately test the research hypotheses. For researchers accustomed to pursuing ambitious experimental programs with appropriate computational resources, this was a dramatic downgrade in their ability to do meaningful work.

This isn't simply a matter of slight budget reductions. In the AI research context, computing resources determine whether research is even possible. A researcher who can't access sufficient compute can't run the experiments necessary to test their hypothesis. They can't generate the results necessary to publish in competitive venues. They can't train the models necessary to validate their ideas. Effectively denying computing access is equivalent to telling researchers they cannot pursue their research agenda.

Neglected Projects: Video, Images, and Beyond

Teams working on video generation (Sora) and image generation (DALL-E) felt the effects of this recalibration particularly acutely. These projects, while commercially valuable and technically sophisticated, came to be viewed as less central to the ChatGPT mission. People familiar with the situation reported that the Sora and DALL-E teams felt systematically under-resourced compared with language model teams, even as those projects continued to deliver commercially relevant products.

Over the past year, other projects unrelated to language models were wound down entirely. This was not a gradual shift in priorities but a deliberate termination of research directions deemed incompatible with the ChatGPT-first focus. For researchers who had invested years in these directions, the message was clear: the organization's leadership no longer valued their work.

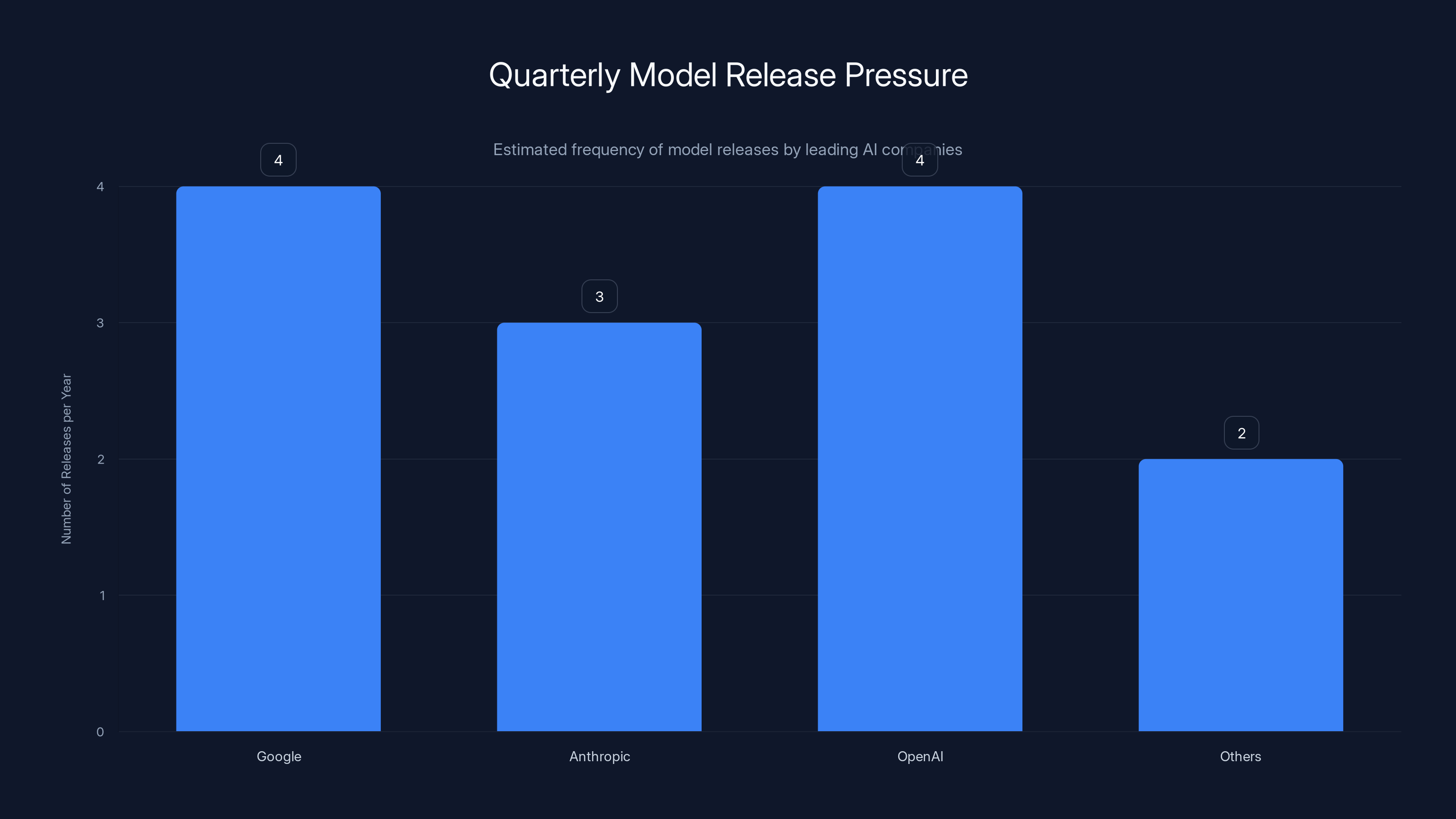

Estimated data shows Google and OpenAI releasing models quarterly, reflecting intense competitive pressure. Anthropic follows closely, emphasizing the race mentality in AI development.

Organizational Restructuring: Consolidating Power Around Language Models

The Reorganization's Real Purpose

OpenAI underwent significant organizational restructuring aimed at streamlining the company around the objective of improving ChatGPT. Restructuring is a normal part of company management, but in this context it represented a consolidation of resources and authority around specific strategic priorities. Teams were reorganized, reporting lines were adjusted, and decision-making authority was concentrated among the executives most focused on language model development.

This kind of restructuring sends powerful cultural signals beyond just the formal organizational chart. It tells employees which research directions are central to the company's future and which are peripheral. It determines whose work will be celebrated in all-hands meetings and whose will be acknowledged politely but without particular emphasis. It influences which junior researchers will be hired, which will be promoted, and which will find themselves gradually sidelined as less valuable to the organization's core mission.

The Political Dynamics of Resource Scarcity

One former senior employee described the emerging culture as increasingly political: researchers not working on core language models felt like "second-class citizens" compared to those in the main bets. In organizations with abundant resources, this kind of hierarchy might be invisible or inconsequential. But in organizations facing resource constraints, hierarchical differences become visceral. Meetings about budget allocation become contentious. Decisions about which projects to pursue become zero-sum competitions between research directions.

This political dimension helps explain why talented researchers choose to leave. Brilliant scientists are often drawn to organizations where they can pursue their best ideas and where the organizational culture values their contributions. When organizational culture shifts to one where you're systematically disadvantaged relative to colleagues working on different problems, the environment becomes professionally unsatisfying regardless of compensation or prestige.

Competitive Pressures: The Race Mentality

The Quarterly Model Race

When companies compete on technological capability, they face powerful incentives to move as quickly as possible. Releasing superior models on a regular cadence creates the perception of relentless technological advancement. When competitors are releasing new capabilities quarterly, the pressure to match that pace becomes overwhelming. One former employee described this as a "crazy, cut-throat race" with "tons of competitive pressures," especially for scaling companies that want to have the best model every quarter.

This dynamic favors focus and specialization over exploratory breadth. It favors incremental improvements to proven approaches over risky bets on novel directions. It favors product-facing research that delivers immediately measurable improvements over foundational work that might pay off in three to five years. Once you're in this race, changing your approach becomes difficult because doing so means potentially falling behind while your competitors continue improving.

The Google and Anthropic Threat

Google and Anthropic are spending enormous amounts of capital on language model development. Google, arguably the most capable company in the world at scaling machine learning systems, posed an existential threat when Gemini 3 demonstrated superior performance on key benchmarks. Anthropic was founded by former OpenAI researchers and is explicitly focused on building increasingly capable and safe AI systems. It represents a credible technical competitor backed by significant capital. Falling behind either competitor could mean losing market share, talent, and investor confidence.

From the perspective of leadership managing these competitive dynamics, the choice to prioritize ChatGPT development is a rational response to external competitive pressure. The organization cannot afford to pursue multiple equally ambitious research directions while competitors concentrate their resources on the single goal of building better language models.

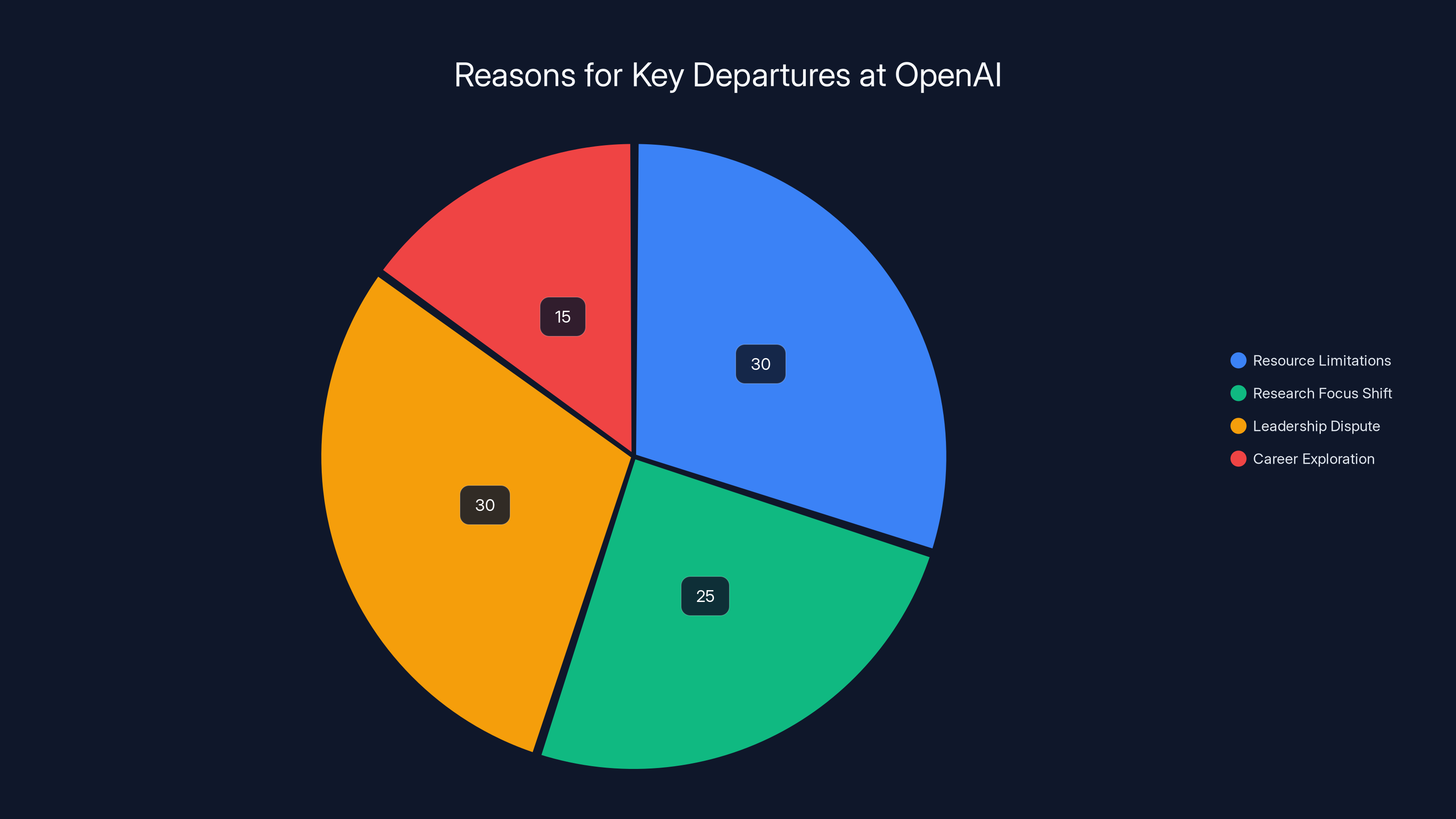

Estimated data shows that resource limitations and leadership disputes are major reasons for departures, each accounting for about 30%.

The Counterargument: OpenAI's Defense of Its Research Commitment

Mark Chen's Position on Foundational Research

When presented with characterizations of the research pivot, Mark Chen, OpenAI's Chief Research Officer, rejected the framing. He stated that "long-term, foundational research remains central to OpenAI and continues to account for the majority of our compute and investment, with hundreds of bottom-up projects exploring long-horizon questions beyond any single product."

This statement presents an important counterpoint to the narrative of research abandonment. If Chen is correct, then the departures might represent not a fundamental shift in organizational values but rather growing pains as the company scales and resource allocation becomes more competitive. Perhaps the researchers who departed simply wanted resources at scales that even a research-focused organization cannot sustainably provide to every team.

Chen also emphasized that "pairing that research with real-world deployment strengthens our science by accelerating feedback, learning loops and rigour." This reflects a sophisticated view of research methodology: basic research is most productive when informed by real-world applications, and applications are most robust when informed by rigorous research. From this perspective, the focus on ChatGPT development isn't a departure from research but research conducted in productive connection with deployed systems.

The Majority of Compute Claim

Chen's assertion that "the majority" of compute remains allocated to foundational research is a factual claim that could be verified or disputed. Suppose OpenAI dedicated 55% of its compute to foundational research across many projects and 45% to ChatGPT optimization. Chen's statement would then be technically accurate even while researchers felt their specific projects were inadequately resourced. Spread across the "hundreds of bottom-up projects" Chen describes, 55% would average well under 1% of total compute per project, while 45% concentrated on a single product looks dominant. The aggregate numbers could mask the lived experience of researchers in specific domains.

The Researcher's Perspective: Conditions That Drive Departures

Blue-Sky Research in Product-Driven Organizations

One consistent theme among departing researchers is frustration with the difficulty of conducting "blue-sky" research: exploratory work aimed at understanding fundamental questions without immediate commercial application. Blue-sky research is expensive and time-consuming. It produces negative results and dead ends alongside breakthroughs. It is difficult to justify to investors and boards of directors who want to understand how each research dollar generates value.

Yet historically, blue-sky research has been the source of many transformative discoveries. The transistor emerged from fundamental solid-state physics research at Bell Labs. The Internet, GPS, and the algorithms underlying modern search engines all grew out of research that wouldn't have passed a near-term commercial ROI test. Researchers value environments where they can pursue these kinds of fundamental questions.

The Visibility and Career Problem

For researchers at OpenAI, another concern involves career development and professional recognition. In a research-focused organization, a researcher's career trajectory and reputation are shaped by publishing papers in top venues, presenting at major conferences, and being recognized as a thought leader in the field. Researchers contributing to ChatGPT improvements might add significant value, but they often cannot publish about their work because of competitive sensitivity. As a result, their most impactful work is invisible to the broader research community and does little for their professional standing.

Researchers working on foundational questions in AI reasoning, continuous learning, or policy implications can publish their findings, present them at conferences, and build reputations as leading thinkers in their areas. When an organization makes clear that these kinds of publications are less valued than confidential work on proprietary products, researchers face a career incentive to look elsewhere.

The Autonomy and Agency Factor

Talented researchers, particularly senior researchers with track records of impactful work, have strong preferences for autonomy and agency in their work. They want to identify important problems, pursue their own approaches to solving them, and have the authority to follow evidence wherever it leads. When decisions about resource allocation become increasingly centralized and criteria for funding become narrowly focused on a single product's improvement, researchers lose that autonomy.

Needing executive approval for every marginal computing resource, seeing requests consistently denied, being told that your research direction isn't a priority: these experiences accumulate and create an environment of constraint rather than freedom. For researchers accustomed to designing their own experiments and pursuing their own ideas, this becomes intolerable.

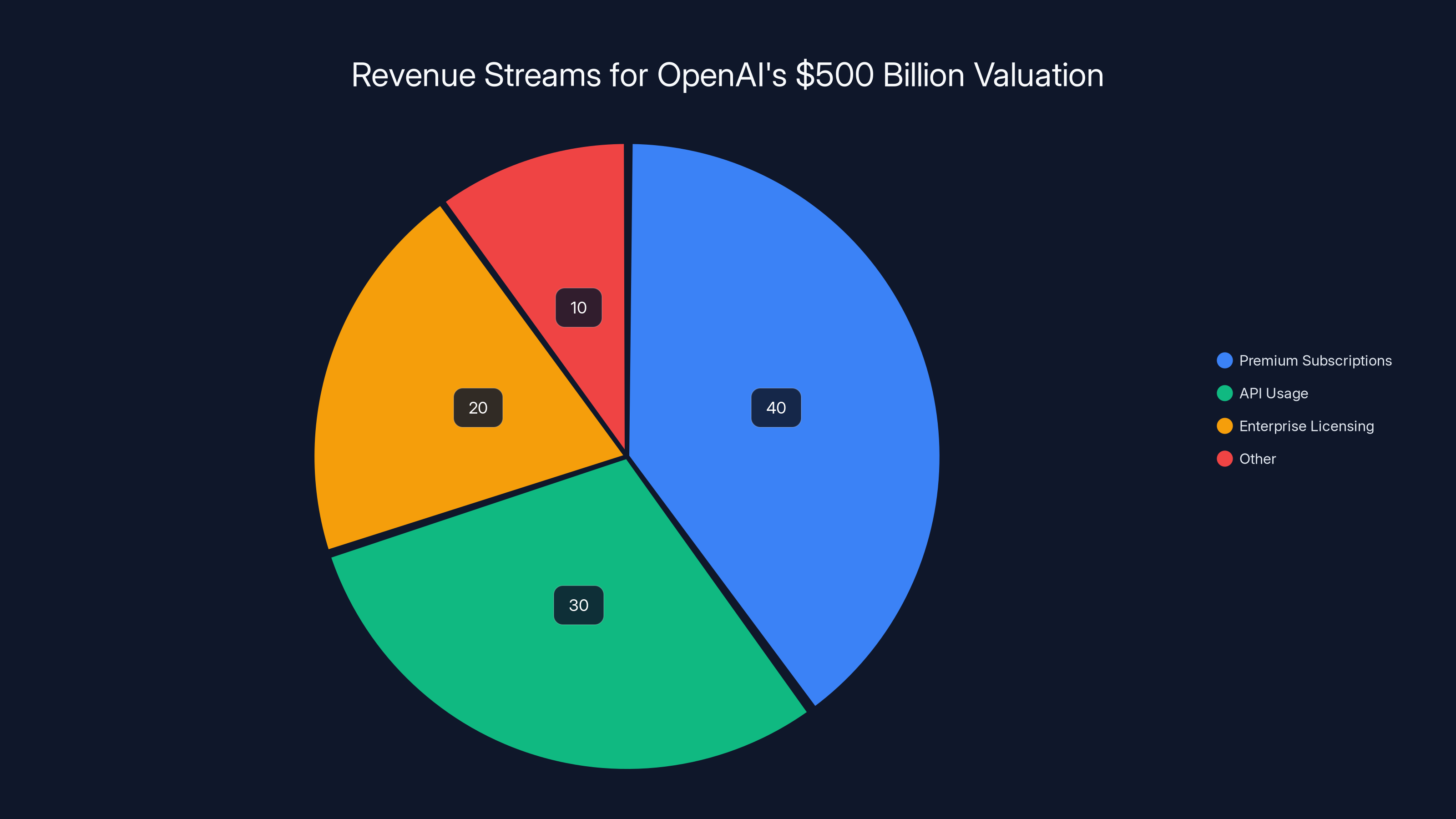

Estimated data shows that premium subscriptions could be the largest revenue source for OpenAI, followed by API usage and enterprise licensing, supporting its $500 billion valuation.

Market Dynamics: Why the Pivot Makes Investor Sense

Justifying Billion-Dollar Valuations

OpenAI is valued at $500 billion, a figure that must be justified by extraordinary revenue potential. The company has to demonstrate either that it will generate massive revenues or that it will develop technology so transformative that it enables entirely new business models and value creation at unprecedented scale. Both narratives require focusing organizational resources on the most likely path to these outcomes.

Fundamental AI research, while potentially valuable in the long term, creates uncertainty. You cannot promise investors that your reasoning research will definitively lead to better AI systems within any particular timeframe. You cannot guarantee that your continuous learning research will work, or that it will be valuable if it does. But you can promise that optimizing ChatGPT will likely improve a product used by 800 million people, which will likely generate additional revenue through premium subscriptions, API usage, and enterprise licensing.

The Paradox of Research at Scale

There's a paradoxical tension in large commercial organizations that want to maintain serious research capability. Research requires uncertainty, experimentation, and willingness to pursue directions that might not pan out. But public companies and venture-backed companies valued in the hundreds of billions face pressure to minimize uncertainty and maximize predictable value generation. These pressures are fundamentally in tension.

OpenAI's transformation from research laboratory to product company reflects this tension being resolved in favor of predictability and product value. This is not unique to OpenAI. It is the typical pattern as research-focused startups scale into large commercial organizations. The question is not whether this pivot will happen, but whether an organization can maintain meaningful research capability alongside its product-driven business.

The Talent Implications: Where Researchers Are Going

Brain Drain to Rivals

Andrea Vallone's move to Anthropic exemplifies a broader pattern: departing OpenAI researchers have credible alternatives, and some are choosing organizations that maintain stronger commitments to research diversity and autonomy. Anthropic explicitly positions itself around dual commitments to capability development and safety research. It was also founded by researchers who left OpenAI in part over disagreements about safety prioritization.

When an organization loses senior researchers to its most serious competitors, it loses not just human capital but also institutional knowledge and research direction. Vallone understands OpenAI's approach to model policy, which safety considerations might be neglected, and what OpenAI's technical capabilities are. Anthropic gains all of this understanding by hiring her.

The Venture/Academic Alternative

Other departing researchers might choose to start companies or join academic institutions. Jerry Tworek's stated interest in pursuing research that is "hard to do at OpenAI" suggests he might found a company or join an academic institution focused on continuous learning and reasoning in AI systems. Researchers with Tworek's track record might start companies that go on to develop important capabilities. In that case, OpenAI loses the chance to develop those capabilities first and to incorporate that researcher's expertise into its own development process.

Talent Market Signals

The departures send signals to the broader talent market. Junior researchers considering OpenAI as a destination now see evidence that the organization is shifting away from research diversity and autonomy toward product focus. This may discourage some talented researchers from joining, particularly those most interested in fundamental research questions. Over time, the quality of research talent entering the organization might decline. That decline could eventually affect its ability to maintain technological leadership even in its core areas of focus.

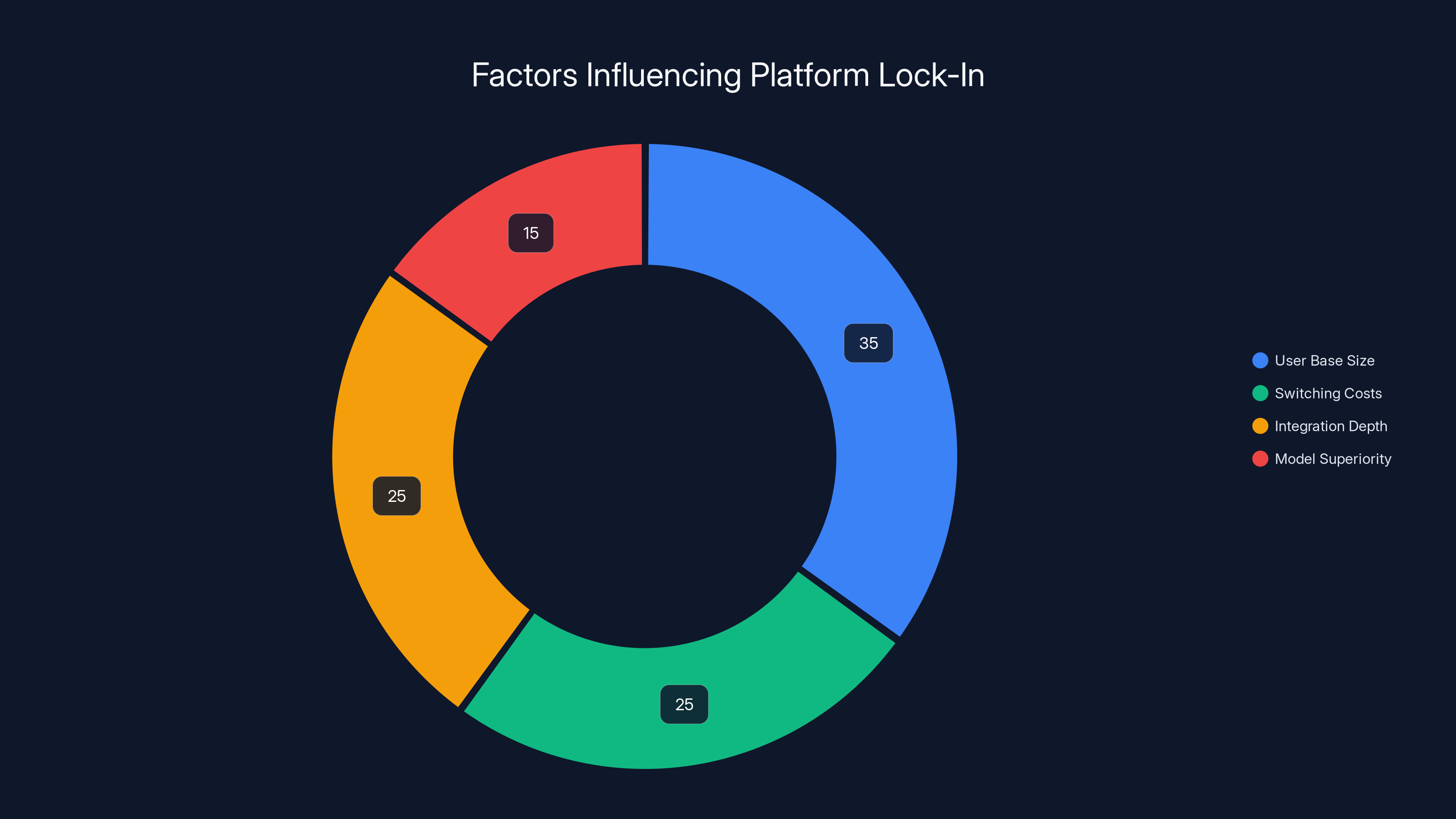

User base size and integration depth are major factors in platform lock-in, outweighing model superiority. Estimated data.

Sora and DALL-E: When Core Products Get Deprioritized

The Case of Multimodal Research

Sora, OpenAI's video generation model, and DALL-E, its image generation model, are both commercially valuable products used by thousands of organizations. Yet both teams reportedly felt systematically under-resourced under the new prioritization regime. This illustrates how even genuine product successes can be deprioritized when leadership decides that one product, ChatGPT, deserves disproportionate resources.

From a business strategy perspective, this makes sense if leadership believes that language models represent the primary competitive frontier and that defending leadership in language models requires dedicating the vast majority of incremental resources there. But from the perspective of researchers working on Sora and DALL-E, the signal is clear: your work is valuable, but it's less valuable than the work being done on language models. This creates frustration and motivation to seek opportunities elsewhere.

Competitive Risk in Multimodal AI

Deprioritizing multimodal research (video, image, and potentially audio generation) carries competitive risk. Google and other rivals are advancing capabilities in these domains. By dedicating fewer resources to Sora and DALL-E, OpenAI risks falling behind in multimodal capabilities even as it races ahead in language-only models. The long-term market success of AI assistants might depend on multimodal capabilities more than current focus levels suggest.

Strategic Rationale: The Case for Focus

Specialization as Strength

There are genuine strategic arguments for focusing organizational resources on the most promising direction. In competitive markets, organizations that do one thing exceptionally well often outcompete organizations that do many things reasonably well. By dedicating maximum resources to language model development, OpenAI could potentially build an insurmountable lead in this domain.

Furthermore, from a scaling perspective, large language models represent the most resource-intensive frontier in AI development. The computational and financial requirements to build and train state-of-the-art models grow steeply with scale. Realistically, an organization can maintain only one or two research teams working on cutting-edge frontier models, and doing so consumes most of its resources. Supporting multiple equally ambitious research directions becomes structurally impossible.

The Empirical Question

Ultimately, whether OpenAI's strategy is optimal depends on empirical outcomes. The strategic pivot will be vindicated if the company maintains technological leadership in language models while continuing to deliver valuable products in other domains, such as video and image generation. The strategy will be questioned if competitors develop superior language models while OpenAI's multimodal research lags. It is still early enough that the long-term consequences remain uncertain.

Implications for AI Research and Development Broadly

The Consolidation Around Product

OpenAI's transformation reflects broader patterns in the AI industry. As AI capabilities move from research curiosities to valuable commercial products, resources naturally concentrate on product development. This creates systematic pressure toward particular research directions (those that improve deployed products) and away from others (those that might yield useful knowledge but not immediate product improvements).

This dynamic isn't unique to AI. The pharmaceutical industry, for example, conducts relatively little basic research into the fundamental chemistry of disease processes but focuses heavily on drug development. The defense industry focuses on building weapons systems rather than fundamental physics research. Product-driven industries naturally tend toward product-focused research.

But the shift in OpenAI's case is notable because the organization was explicitly founded with a research focus and a commitment to long-term AI safety and understanding. That even an organization with explicit research commitments finds itself converging toward product focus suggests that these dynamics are very powerful.

What Might Get Neglected

If OpenAI and other large AI labs shift toward product-focused research, certain research directions might receive inadequate resources across the entire AI ecosystem. Safety research, for example, is difficult to commercialize directly but potentially crucial for responsible AI development. Research into the mechanisms of learning in neural networks is intellectually interesting but might not improve any particular product. Research into alternative architectures might be valuable but threatens the current focus on language models.

If these research directions are deprioritized across the industry, progress in these areas might slow. Safety research might not keep pace with capability development. Alternative approaches to AI might not be explored thoroughly. This could concentrate risk and reduce the diversity of technical approaches being pursued.

Talent Pool Fragmentation

As large AI labs shift toward product focus, researchers with different interests face difficult choices. Some will accept product-focused research as legitimate and valuable. Others will seek out organizations that maintain stronger research commitments. This might result in a fragmentation where pure research talent concentrates at academic institutions, organizations like Anthropic that maintain explicit research commitments, and newly formed companies pursuing particular research directions.

This fragmentation could be healthy—diversity of approaches is valuable. Or it could be problematic if it means that the most well-funded and resource-rich organizations are pursuing research in narrower directions while more exploratory work happens in under-resourced environments.

The Investor Perspective: Platform Lock-In vs. Model Superiority

From Capability Moat to User Moat

Jenny Xiao, a partner at the venture capital firm Leonis Capital and a former OpenAI researcher, offers a perspective on why investors might be unconcerned about the research pivot. She argues that the competitive question is less about which organization has the technically best model and more about which has captured the largest user base and created lock-in through behavioral and platform effects.

From this perspective, OpenAI's advantage isn't a fragile research lead but the embedded position of ChatGPT in the workflows of millions of users. Users have invested time in learning how ChatGPT works, have customized it for their needs, and have built workflows around it. Switching costs, while not prohibitive, are high enough that many users will stick with ChatGPT even if a competitor releases a technically superior model.

This suggests that the research pivot might be strategically sound even if competitors develop superior models in narrow domains. As long as ChatGPT remains good enough and retains its user base, OpenAI's business might thrive whether or not it has the best language model.

The Network Effects Argument

Platforms with large user bases become more valuable as they grow, because more users mean more data, more use cases, more developers building on the platform, and more integrations with other systems. These network effects could protect OpenAI's position even if its technological lead narrows. A competitor with a 5% better model might struggle to displace ChatGPT if switching costs are high and ChatGPT is deeply integrated with many other tools and services.

Future Outlook: Will the Research Exodus Accelerate?

The Precedent Problem

Once an organization establishes a pattern of deprioritizing certain research directions and redistributing resources toward core products, researchers in those domains face a clear signal about their professional future. The question isn't whether a particular researcher might get resources for their project, but whether the organization values their research direction at all. This creates cascading effects: as some researchers depart, others working in similar domains become more likely to depart as well.

If this pattern continues, we might expect continued departures from researchers working in domains like video generation, image generation, policy research, and foundational research not directly tied to language models. Some of these researchers might form startups, others might join academic institutions, and some might join competitors like Anthropic.

The Leadership Question

Much depends on how leadership's thinking about research priorities evolves. If Mark Chen's assertion that foundational research remains central is genuine, and if that commitment translates into actual resource allocation and cultural signals, the organization might stabilize its researcher base. The departures could then be read as natural turnover rather than signs of organizational drift.

Alternatively, if the trend toward product focus accelerates, we might see a clearer bifurcation: OpenAI would become primarily a product and deployment organization that conducts research more selectively, while other organizations focus more explicitly on research.

The Competitive Consequence

One open question is where research leadership will end up. If Google, with its enormous resources, decides to prioritize transformative AI research alongside product development, it might develop capabilities that OpenAI misses by focusing too narrowly. If Anthropic, with its explicit research and safety commitments, gains important capabilities or insights from that focus, it might build advantages that product focus cannot offset. The long-term competitive landscape will depend on which approach, focused product development or broader research, ultimately proves more effective.

Parallels in Tech History: When Research Labs Transform

Bell Labs and the Commercial Imperative

Bell Labs, historically one of the world's most productive research institutions, generated innovations ranging from the transistor to information theory to Unix. Yet as telephone companies became more competitive and more focused on quarterly performance, Bell Labs gradually shifted from pure research toward product development. Resources for exploratory research declined, and many foundational researchers departed to academic institutions or startups.

The lesson from Bell Labs is that organizational structure and resource incentives shape research direction. Once an organization becomes focused on commercial products and quarterly performance, maintaining parallel research capability becomes difficult. Researchers who loved the open-ended exploration at Bell Labs found that environment increasingly constrained.

Xerox PARC and Strategic Myopia

Xerox PARC generated extraordinary research in personal computing, graphical user interfaces, object-oriented programming, and networking, yet Xerox as a company failed to capitalize on these innovations. That failure isn't typically attributed to PARC being too research-focused, but to Xerox's product organization not seeing the commercial value in PARC's work. PARC suggests a different lesson: organizations can fail not by deprioritizing research, but by failing to integrate research insights into products.

The Alternatives Perspective: Where Departing Researchers Might Thrive

Building at Anthropic

Anthropic represents an alternative model: a company founded explicitly to balance capability development with safety and alignment research. Researchers such as Andrea Vallone have found at Anthropic an environment where safety research is central to the organizational mission rather than peripheral to it. This option is open to researchers with a sufficient track record and visibility, which creates competition between OpenAI and Anthropic for senior research talent.

Academic Opportunities

Leading universities maintain research groups focused on AI. Researchers departing OpenAI can return to academic environments where research direction is determined more by scientific interest than by commercial pressure. Academic positions offer different tradeoffs: potentially less access to computational resources, but more autonomy over research direction and more opportunity to publish and build a public reputation.


Startup Formation

Researchers departing OpenAI have expertise and track records that make them attractive to venture capital. Jerry Tworek's interest in continuous learning could translate into a company focused on that problem. Other researchers might build companies around technical insights or approaches they developed at OpenAI but couldn't pursue there because of resource constraints. This path offers both autonomy and the chance to build products around their own ideas.

Conclusion: The AI Research Crossroads

OpenAI's strategic pivot from a research-focused organization to a product-focused company marks a turning point for artificial intelligence research and development. The departures of senior researchers such as Jerry Tworek, Andrea Vallone, and Tom Cunningham signal genuine tensions between research autonomy and product focus, tensions that likely extend beyond OpenAI to other organizations navigating similar transitions.

The underlying cause of these departures is clear: when organizations allocate resources competitively and favor product development over exploratory research, researchers in non-core domains experience systematic disadvantage. Computing resources become harder to access, career advancement appears to favor product teams, and organizational culture shifts to value commercial contribution over scientific discovery. These conditions naturally motivate talented researchers to seek opportunities elsewhere.

OpenAI's leadership offers a counternarrative: that foundational research remains central to the organization, that product focus and research can be complementary rather than competing, and that real-world deployment actually strengthens scientific rigor. This perspective isn't unreasonable, but it sits in tension with researchers' lived experience of resource constraints and deprioritization. The question isn't whether either perspective is correct in the abstract, but whether organizational structures and resource allocation turn the stated commitment to research into daily reality for researchers.

The broader implications are significant. If leading AI organizations increasingly prioritize product development over exploratory research, the pace of fundamental advances in understanding how AI systems work might slow. Safety research, alignment research, and investigation of alternative architectures might receive inadequate resources relative to their potential importance. The diversity of technical approaches being pursued might decline as resources concentrate on the most commercially promising directions.

At the same time, there are legitimate arguments for focus and specialization. Large language models represent an extraordinary technical frontier requiring vast resources. Organizations cannot realistically maintain equally ambitious research programs in multiple directions. Focus on proven approaches might be more efficient than pursuing uncertain explorations.

The resolution to these tensions likely involves multiple models coexisting: some organizations, like OpenAI, focused heavily on product and capability development; others, like Anthropic, maintaining an explicit balance between research and products; academic institutions conducting fundamental research with less commercial pressure; and startups formed by departing researchers pursuing particular technical directions. This ecosystem diversity might ultimately prove beneficial, ensuring that multiple approaches are explored and that research progress doesn't stagnate regardless of any individual organization's strategic choices.

For teams and organizations watching these dynamics, the lesson is that talent retention in research-oriented fields requires more than compensation. Researchers want autonomy, want to pursue interesting problems, want recognition for their contributions, and want working environments that value exploration alongside optimization. Organizations that fail to provide these elements will see talented researchers depart, particularly when alternatives like Anthropic, academic institutions, or startup opportunities exist.

The challenge OpenAI faces is whether an organization valued at $500 billion can maintain meaningful research capability while also justifying that valuation through product revenue. History suggests this balance is genuinely difficult, and the current departures reflect that difficulty playing out in real time. How OpenAI and other AI organizations navigate it will shape which research directions get pursued, which capabilities get developed, and ultimately which futures become possible for artificial intelligence.

FAQ

What drove senior researchers to leave OpenAI?

Senior researchers departed because of OpenAI's strategic shift toward prioritizing ChatGPT development over foundational research. Key reasons include reduced access to computing resources, deprioritization of research projects outside core language models, and organizational restructuring that concentrated decision-making authority around ChatGPT optimization. Researchers such as Jerry Tworek reported having research funding requests denied and experiencing increasingly political dynamics in resource allocation.

Why is OpenAI prioritizing ChatGPT over other research?

OpenAI's prioritization reflects competitive pressure from Google and Anthropic, investor demands to justify a $500 billion valuation through revenue, and the strategic view that large language models are the highest-value frontier in AI development. The computing resources required for frontier-model development are finite, even for well-capitalized organizations, which makes concentrating them on core products a rational choice in competitive markets.

What are the implications of deprioritizing research like Sora and DALL-E?

Deprioritizing multimodal research like video and image generation creates tactical risk in domains where competitors are actively developing capabilities, and it sends cultural signals that discourage talented researchers from joining or remaining in multimodal research teams. While it allows greater resource concentration on language models, it potentially creates competitive vulnerabilities in domains that might become strategically important longer-term.

How does this affect the broader AI research ecosystem?

OpenAI's pivot influences which research directions receive funding and attention across the AI industry. If major well-resourced organizations converge on product-focused research, exploratory research in safety, alignment, alternative architectures, and fundamental questions might receive inadequate resources. This could slow progress in these domains and reduce the diversity of technical approaches being pursued industry-wide.

Where are departing researchers going?

Departing researchers are pursuing multiple paths: some are joining competitors like Anthropic that maintain stronger research commitments, others are moving to academic institutions where research direction is determined by scientific interest rather than commercial pressure, and some are forming startups to pursue technical directions they couldn't pursue at OpenAI. These departures amount to a brain drain toward organizations with different research models.

Is OpenAI's approach to research genuinely changing?

OpenAI's leadership maintains that foundational research remains central to the organization and continues to receive the majority of compute resources. However, researchers' experiences suggest that actual resource allocation increasingly favors product development, with computing credit requests from non-core teams being denied or underfunded. The gap between stated commitment and lived experience appears to be driving departures and cultural strain.

What is the difference between research-focused and product-focused AI organizations?

Research-focused organizations prioritize exploratory work on fundamental questions, often with uncertain commercial timelines, and give researchers significant autonomy in directing their work. Product-focused organizations concentrate resources on deployed products and improvements to existing systems, and evaluate research by its near-term commercial contribution. OpenAI appears to be shifting from the former model toward the latter.

How does this compare to other organizations' approaches?

Anthropic explicitly balances capability development with safety and alignment research, Google maintains parallel research groups alongside product development, academic institutions prioritize fundamental research with less commercial pressure, and startups typically focus on particular technical directions or market opportunities. OpenAI's shift toward product focus is one point on a spectrum of organizational approaches to research.

What should teams consider when choosing between product development and research focus?

Organizations navigating this balance should recognize that talent in research-intensive fields requires autonomy, career development opportunity, and alignment between stated values and actual resource allocation. Deprioritizing entire research domains without clear communication creates frustration and departures. Organizations can benefit from maintaining some research capability even in a primarily product-focused structure, while being honest about resource constraints and competitive pressures.

Will this trend continue across the AI industry?

Unless competitive pressures ease or organizational structures evolve to better integrate research and products, the trend toward product focus in large commercial AI organizations is likely to continue. This might result in a bifurcated ecosystem where dedicated research-focused organizations, academic institutions, and well-funded startups pursue fundamental research while large product companies focus on optimization and deployment of proven approaches.

Key Takeaways

- OpenAI is shifting from a research-focused organization to a product-driven company that prioritizes ChatGPT over foundational research

- Senior researchers including VP of Research Jerry Tworek, policy researcher Andrea Vallone, and economist Tom Cunningham have departed due to resource constraints

- Computing resources are being concentrated on language models while projects like Sora and DALL-E face reduced funding and staffing

- Leadership maintains research remains central to the organization, but researchers report increasingly political dynamics and denied funding requests

- Departing researchers are joining competitors like Anthropic or pursuing academic and startup opportunities with different research models

- The shift reflects a rational response to competitive pressure from Google and Anthropic but risks neglecting important research domains such as AI safety and alternative architectures

- This pattern mirrors the historical transformation of research-focused organizations such as Bell Labs toward product development as commercial pressures increased

- Multiple organizational models coexist: OpenAI's product focus, Anthropic's research-balanced approach, academic research, and research-focused startups

- Long-term implications include potential consolidation around particular technological approaches and reduced diversity in pursued research directions

- Organizations seeking to retain research talent must align stated research commitments with actual resource allocation and provide autonomy in research direction
