Physical AI in Automobiles: How the Industry Is Redefining What Vehicles Can Do

You've probably heard the term "Physical AI" thrown around in tech circles lately, especially if you've been paying attention to CES announcements or automotive industry coverage. It sounds like a contradiction, right? Artificial intelligence is supposed to be... artificial. Intangible. Living in servers and data centers. How does software suddenly get a body?

Here's the thing: "Physical AI" isn't really a technological breakthrough hiding under a fancy name. It's more of a marketing umbrella term that encompasses something genuinely transformative. The buzzword points toward a fundamental shift in how the automotive industry—and the chipmakers supplying it—think about themselves and their role in the future.

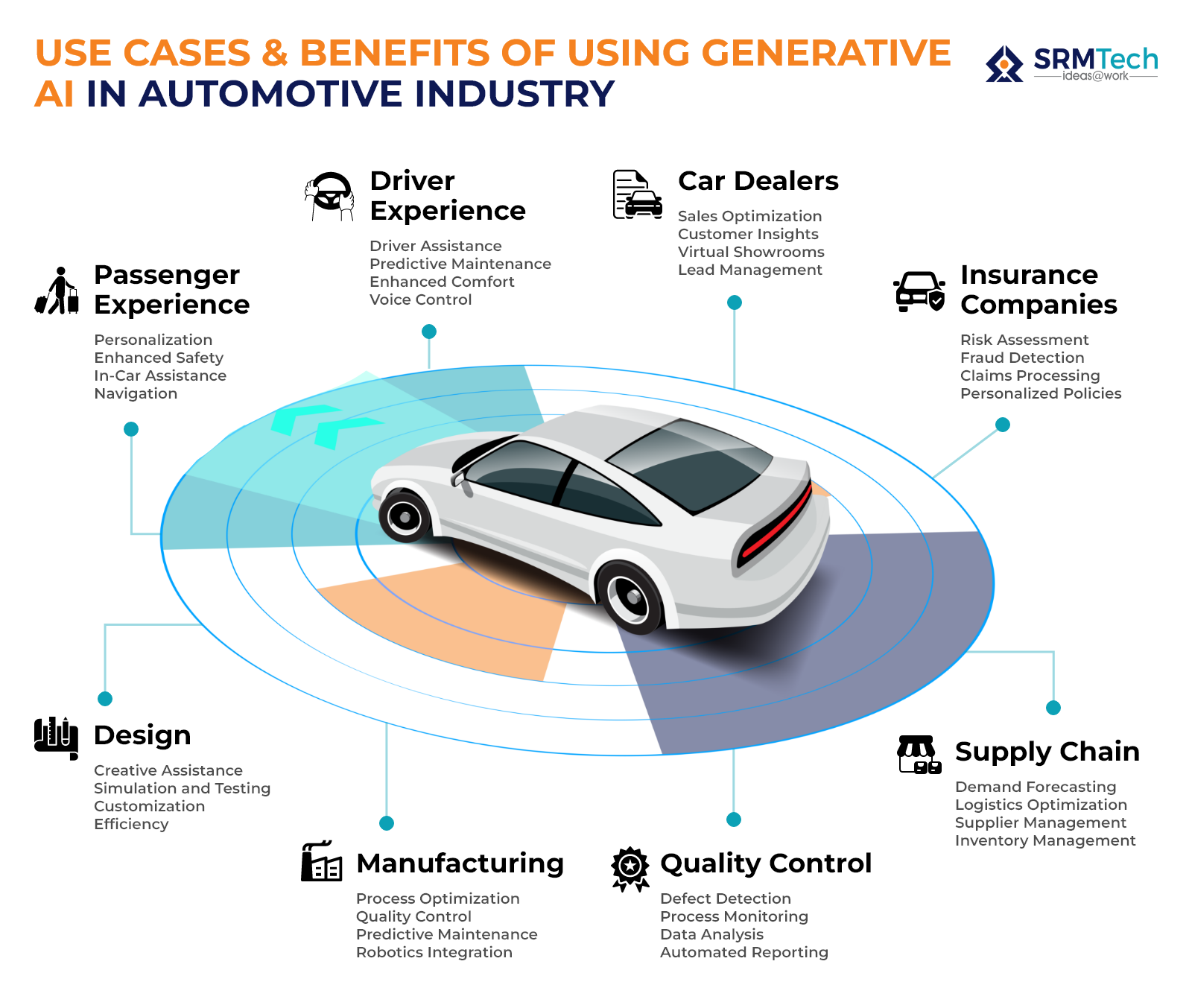

The automotive sector is undergoing one of its most dramatic technological transitions since the introduction of electronic fuel injection. We're not just talking about electric batteries or better infotainment systems. We're talking about vehicles that can perceive their environment, make decisions, and act autonomously in ways that were science fiction just a decade ago. And the financial stakes are enormous.

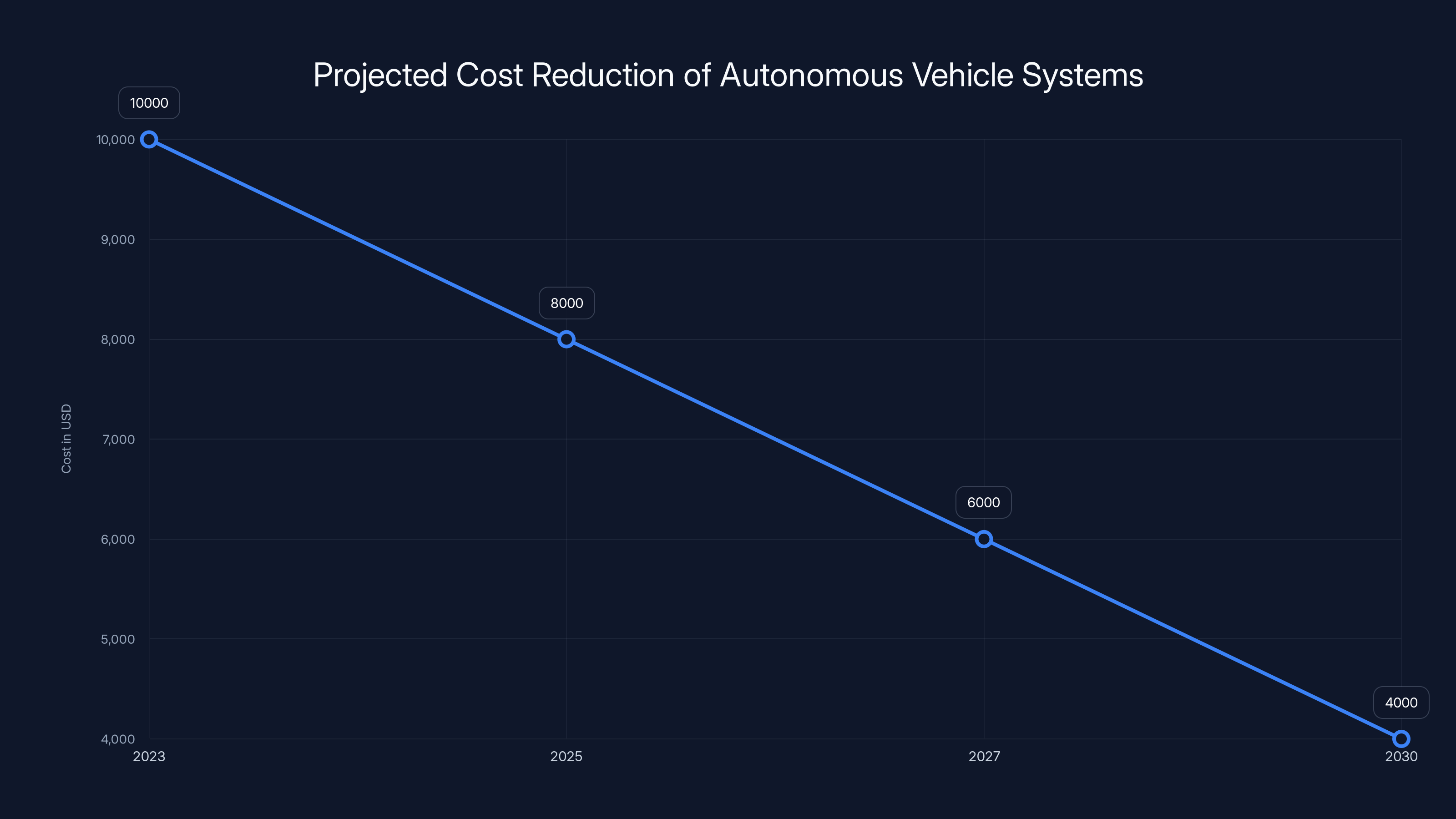

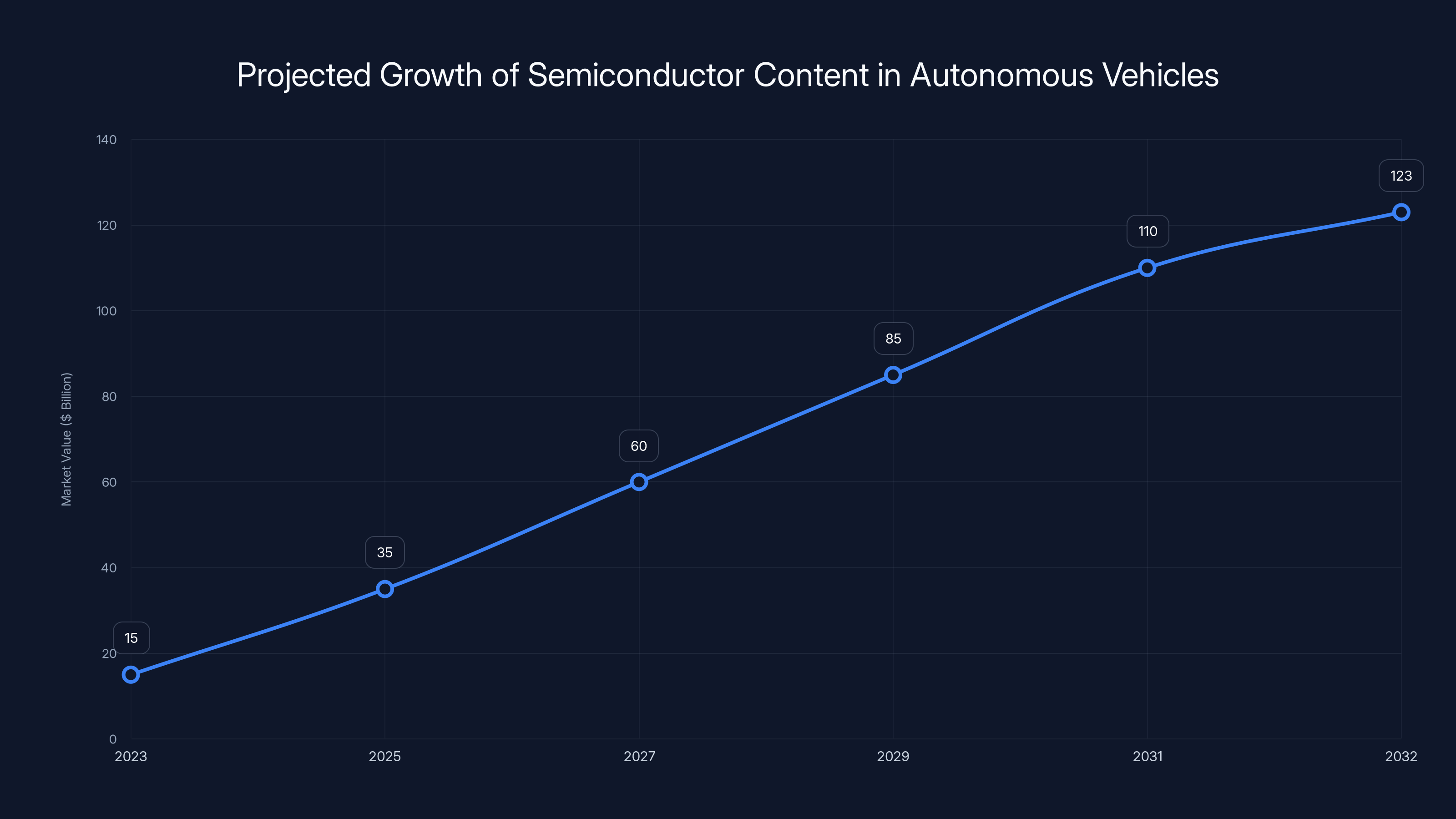

According to market analysts, the semiconductor content in autonomous vehicles could represent a $123 billion opportunity by 2032, up roughly 85 percent from 2023 levels. That's the kind of number that makes chipmakers like Nvidia and ARM sit up and pay attention. It's also the kind of number that makes them invest in marketing departments to explain why their products matter.

The term "Physical AI" is really their way of saying: "Here's what we're building toward, and here's why you should care." It's the shorthand for a future where autonomous systems don't just process information—they interact with the real world in meaningful, complex ways. They perceive what's happening around them through cameras and sensors. They reason through what they're seeing. They make decisions. And they act on those decisions.

Let's dig into what Physical AI actually means, how it's changing the automotive landscape, and whether the hype matches reality.

What Exactly Is Physical AI? Defining the Marketing Term That's Reshaping Transportation

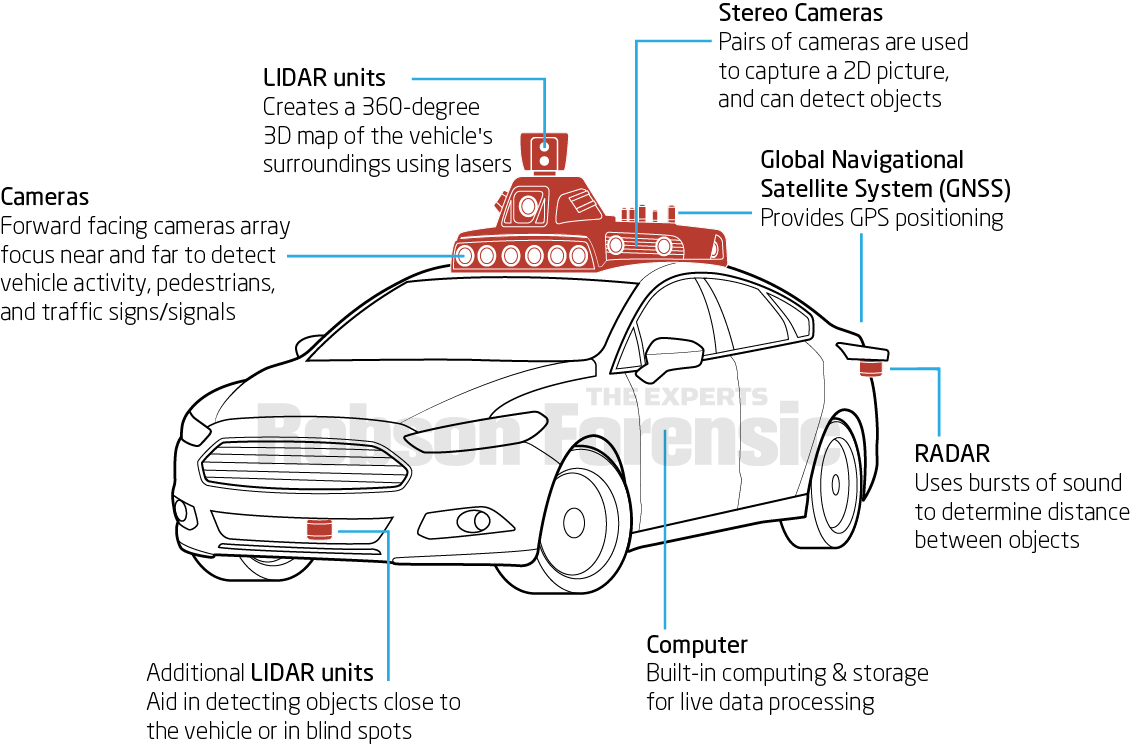

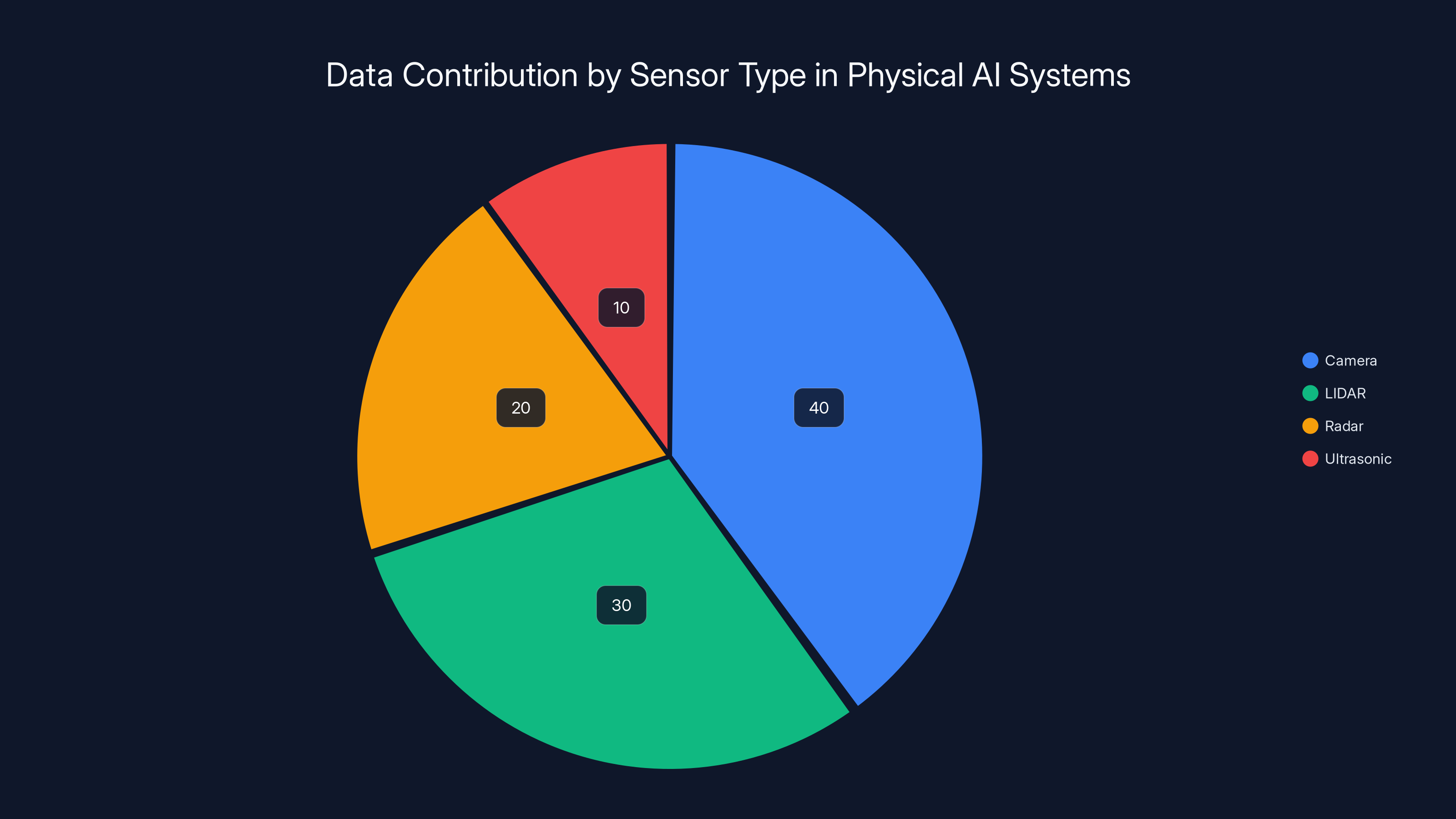

At its core, Physical AI refers to autonomous systems that can understand and interact with physical environments in real time. We're talking about vehicles that use camera feeds, LIDAR sensors, radar, and other data streams to build a comprehensive picture of their surroundings. But it's not just perception. True Physical AI systems need to reason about what they're seeing, predict how situations might evolve, and make decisions about how to respond.

Let's break this down into components, because the term is genuinely useful even if it does sound like marketing speak.

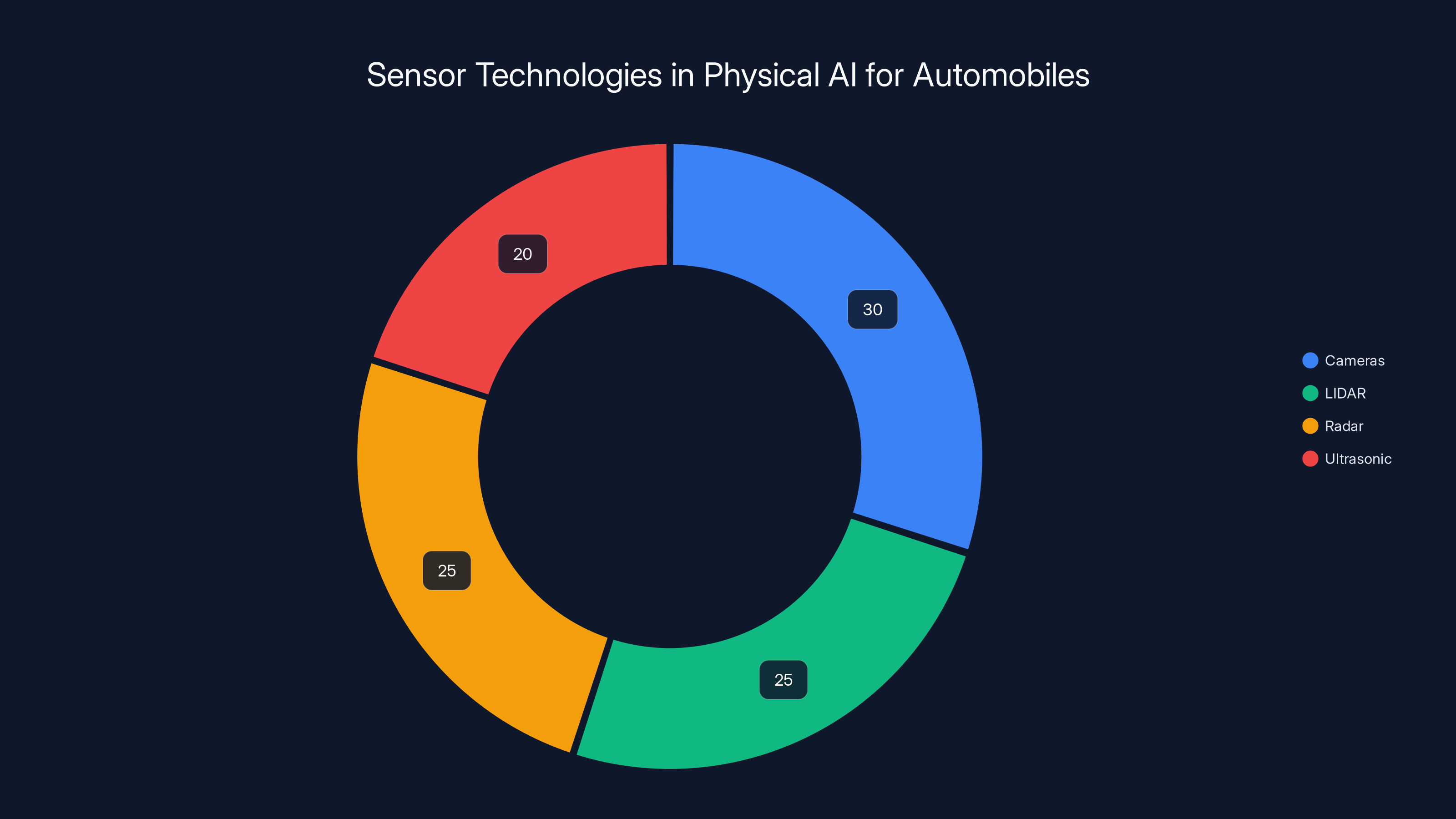

Perception: This is where it starts. Your car has sensors pointing in every direction. Cameras capture visual information. LIDAR creates a three-dimensional point cloud of everything nearby. Radar measures the velocity of moving objects. Ultrasonic sensors provide close-range coverage. All of this data streams into the vehicle's computing system at enormous rates. A single modern autonomous vehicle prototype can generate 4 to 5 terabytes of data per day, roughly equivalent to 1,500 hours of high-definition video.

Understanding: Raw sensor data is meaningless without interpretation. Physical AI systems use deep learning models to process this data. They identify objects: cars, pedestrians, cyclists, road signs, lane markings, debris. They classify situations: "This is a four-way intersection," "There's a pedestrian jaywalking," "Traffic is backed up." This understanding phase transforms streams of numbers into actionable information.

Reasoning: This is where things get genuinely difficult. Once the system understands what's happening, it needs to think about what might happen next and what it should do. If a pedestrian is looking at their phone and walking toward the road, the system needs to predict they might step into traffic and adjust accordingly. If another vehicle suddenly changes lanes, the system needs to react. These are split-second decisions that require something approximating reasoning.

Action: Finally, the system needs to act. It adjusts acceleration, applies braking, steers the vehicle, signals intentions. All of this needs to happen flawlessly, because the stakes are human lives.
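The four stages above can be sketched as a tiny pipeline. This is a toy illustration, not how any production stack is built: the `Detection` type, the two-second horizon, and the "brake"/"maintain_speed" commands are all hypothetical, and the understanding step is a stub standing in for the deep learning models a real system would run.

```python
from dataclasses import dataclass


@dataclass
class Detection:
    label: str                 # e.g. "pedestrian" or "vehicle"
    distance_m: float          # range to the object
    closing_speed_mps: float   # positive when the gap is shrinking


def understand(raw_objects):
    """Understanding: turn raw (label, distance, closing speed) tuples into detections.

    A real system would run neural networks here; this stub only wraps the data.
    """
    return [Detection(label, dist, speed) for label, dist, speed in raw_objects]


def reason(detections, horizon_s=2.0):
    """Reasoning: keep objects predicted to reach the vehicle within the horizon."""
    threats = []
    for d in detections:
        if d.closing_speed_mps > 0 and d.distance_m / d.closing_speed_mps < horizon_s:
            threats.append(d)
    return threats


def act(threats):
    """Action: pick a command based on the predicted threats."""
    return "brake" if threats else "maintain_speed"


def drive_step(raw_objects):
    """One pass through understanding -> reasoning -> action on perceived objects."""
    return act(reason(understand(raw_objects)))


if __name__ == "__main__":
    # Perception output is assumed to arrive as simple tuples.
    print(drive_step([("pedestrian", 10.0, 8.0)]))
    print(drive_step([("vehicle", 80.0, 5.0)]))
```

The point of the sketch is the shape of the loop: each stage consumes the previous stage's output, and a mistake anywhere propagates to the final command.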

This is what "Physical AI" actually describes, stripped of marketing language. It's AI that doesn't just exist in abstract digital space. It's AI with real-world consequences, operating under real-world constraints, with real penalties for failure.

Why did the term emerge now, and why is it catching on? Partly because the technology is maturing. Partly because it's genuinely useful as a concept. But honestly? Partly because chipmakers needed a way to explain to investors why autonomous vehicles represent such a massive opportunity. The phrase "Physical AI" sounds more compelling than "we're selling more expensive processors to automakers."

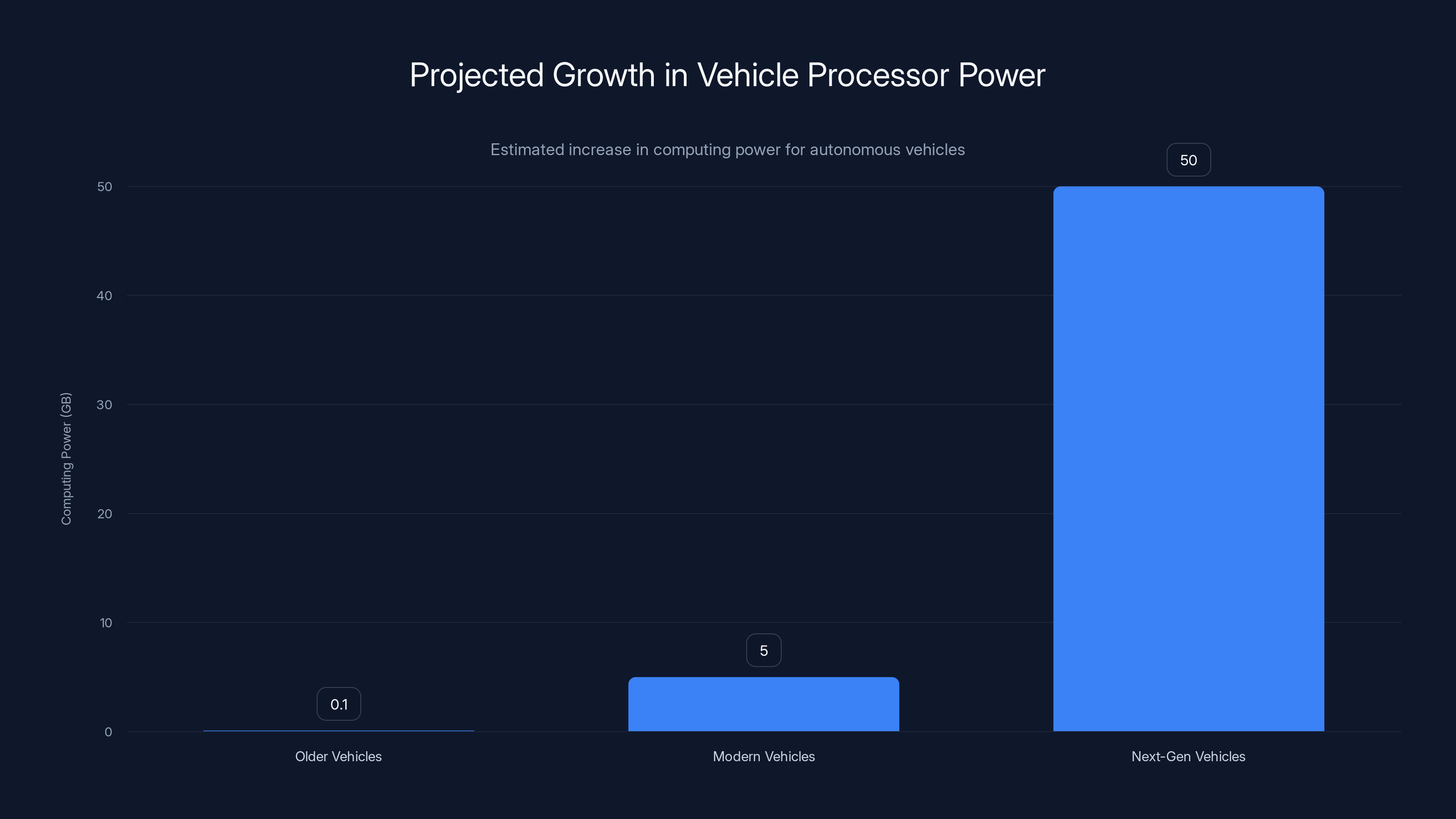

Estimated data shows a significant increase in computing power requirements from older to next-gen autonomous vehicles, highlighting the growing demand for advanced processors.

The Chipmakers' Perspective: Why Nvidia and ARM Are Betting Big

Understand this first: chipmakers don't make cars. They make the brains that go inside cars. And those brains are becoming exponentially more powerful.

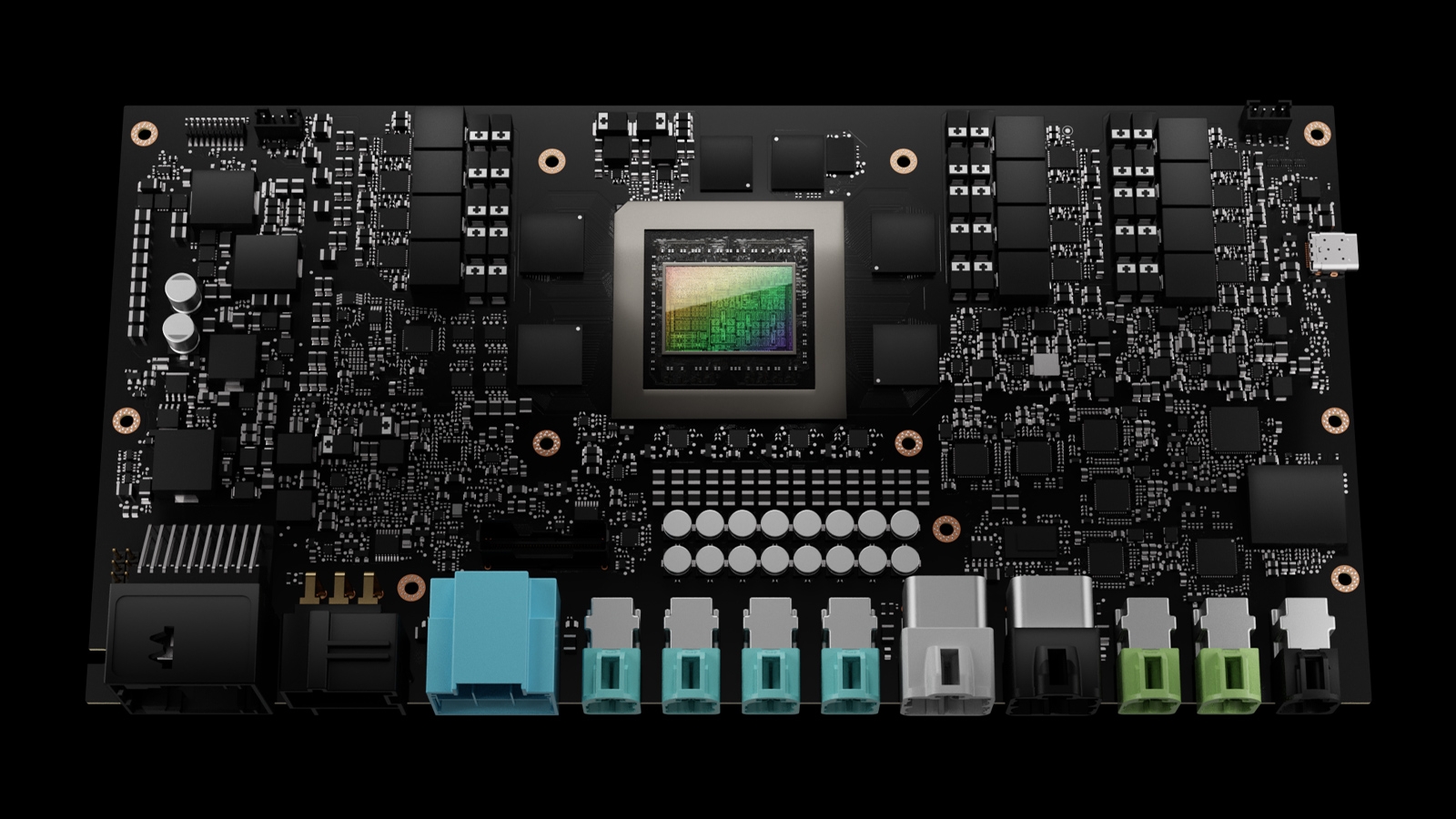

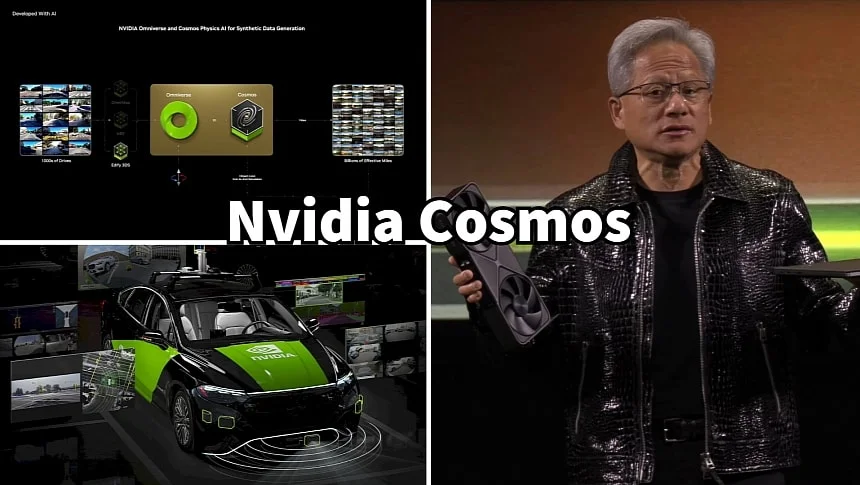

Nvidia, the chipmaker best known for graphics processors and AI acceleration, has emerged as the dominant supplier for autonomous vehicle systems. Their DRIVE platform is used by automakers ranging from Mercedes-Benz to Geely to startups building their first autonomous prototypes.

During his CES 2025 keynote address, Nvidia CEO Jensen Huang made a telling statement: "This is already a giant business for us. The central brain of the vehicle will now be quantum leaps bigger, hundreds of times as big, and that's what we're selling into." That's the financial reality behind the marketing. Older vehicles dedicated only a sliver of compute to driver-assistance features. Modern autonomous vehicles need orders of magnitude more processing throughput and memory, and the next generation will need more still.

Why? Because modern AI systems—particularly large language models and vision transformers—are computationally expensive. A single inference pass through a modern AI model can require billions of mathematical operations. Do that 10 times per second across multiple models running simultaneously, and suddenly you understand why vehicle processors are becoming exponentially more powerful and more expensive.
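To make that arithmetic concrete, here is a back-of-the-envelope sketch. The 10 passes per second comes from the paragraph above; the 5 billion operations per pass and the count of three concurrent models are illustrative assumptions, not measured figures for any real vehicle.

```python
def required_ops_per_second(ops_per_inference, inferences_per_second, num_models):
    """Sustained compute needed when several models each run at a fixed rate."""
    return ops_per_inference * inferences_per_second * num_models


if __name__ == "__main__":
    # Assumed: 5e9 operations per pass and 3 models; 10 passes/second is from the text.
    total = required_ops_per_second(5e9, 10, 3)
    print(f"{total / 1e12:.2f} trillion operations per second")
```

Even with these modest assumptions the requirement lands in the hundreds of billions of operations per second, and larger models or higher frame rates scale it linearly.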

The competitive advantage: Nvidia's advantage isn't just raw performance. It's the software stack. Their CUDA programming framework has become the standard for AI workloads. Automakers who choose Nvidia aren't just buying chips, they're integrating into an entire ecosystem. Switching costs are high. Once you've optimized your software for CUDA, switching to a competing architecture means rewriting everything.

ARM, the company that designs the processor instruction sets used in virtually every smartphone, is positioning itself as the alternative. Rather than competing directly with Nvidia's specialized AI accelerators, ARM is building a broader ecosystem around Physical AI. Their approach emphasizes efficiency and the heterogeneous computing architectures that will be necessary for autonomous vehicles. A self-driving car needs to run multiple AI models simultaneously—one for object detection, one for trajectory prediction, one for traffic sign recognition—and they need to run efficiently.

ARM's strategy is to position itself as the integrator, the company that brings together perception processors, reasoning accelerators, and real-time operating systems into a cohesive platform. It's a different play than Nvidia's, but it recognizes the same fundamental truth: the future of automotive is the future of semiconductor dominance.

Market dynamics: Other chipmakers are watching closely. Qualcomm has automotive ambitions. Intel has made relevant acquisitions. But Nvidia's first-mover advantage is substantial. They've had years to develop relationships with automakers, to build out the software ecosystem, to demonstrate reliability.


Cameras, LIDAR, and radar are the primary sensors in Physical AI systems, each contributing significantly to the vehicle's environmental understanding. Estimated data.

Ford's Hands-Free Driving: What 2028 Actually Means

Ford made a significant announcement: by 2028, the company plans to sell a system that allows drivers to operate vehicles without looking at the road in front of them.

That's provocative language, and it deserves scrutiny. Ford isn't claiming full autonomy. They're claiming "eyes-off" capability in specific conditions.

What does that mean in practice? The system would need to handle highway driving without driver visual attention. Imagine you're on a straight highway with moderate traffic. You're not looking at the road; you're reading an email or watching a video on your center display. The vehicle maintains lane position, manages speed, makes lane changes, and handles typical highway scenarios. If something unexpected happens—a pedestrian crosses the road, a serious traffic incident occurs—the system alerts you to take over.

This is a meaningful milestone, but it's crucial to understand what it isn't. It isn't full autonomy. It isn't urban driving with complex intersections. It's a narrow set of scenarios where the vehicle can operate without continuous driver attention.

The implementation challenge: Eyes-off driving requires redundancy. If the primary perception system fails, you need a secondary system ready to alert the driver. If the primary computing system crashes, you need a backup. This redundancy is expensive and complex to integrate.

Ford's timeline is credible because they're not claiming full autonomy—that would be incredible. They're claiming something more modest: safe operation in specific, well-understood scenarios. Highway driving is one of the easier autonomous driving problems. The environment is more predictable than urban streets. The stakes are still high, but the complexity is lower.

What it requires: Advanced sensor fusion across cameras, LIDAR, and radar. Robust object detection and tracking. Reliable road segmentation. Accurate vehicle dynamics models. All running with sub-second latency on hardware that's small enough to fit in a car and power-efficient enough to not devastate range on electric vehicles.

Ford's actual implementation will likely involve significant geofencing. The system might work on specific highway routes the company has tested and validated. You won't be able to use it on every road. But as a concrete, near-term milestone, it's significant.

The Afeela: Sony and Honda's Bet on Consumer Acceptance

When Sony and Honda announced their partnership on the Afeela vehicle, it signaled something important: established electronics companies are willing to invest in automotive. Sony isn't naturally an automotive company. They make cameras, displays, sensors, entertainment systems. Honda is obviously an automotive company, but their strength is in mechanical engineering and manufacturing, not in AI-powered perception systems.

Their partnership combines strengths. Sony brings perception expertise—they make excellent image sensors, and they have deep software experience with AI. Honda brings automotive expertise—they understand manufacturing, regulatory compliance, and consumer expectations.

The Afeela is planned as a luxury vehicle that will eventually have autonomous driving capability. Here's the critical phrase: "at some point, date TBD." This is honest. Sony and Honda aren't claiming they'll have full autonomous driving ready at launch. They're saying it's coming, but they're not committing to a specific timeline.

Why this matters: The automotive industry is learning from years of overpromises. Remember when Elon Musk claimed Tesla would have "full self-driving" ready in 2015? It's now 2025, and Tesla still doesn't have true full self-driving, though they've made progress with driver assistance features. The industry has become more cautious.

Sony and Honda's approach is more credible partly because it's less ambitious in its timeline claims. They're essentially saying: "We're developing this technology. We'll deploy it when it's ready. We won't rush." That's refreshingly honest.

The consumer angle: Sony's involvement suggests a focus on the in-cabin experience. Autonomous driving is useless if the vehicle is boring. A car that can drive itself but has a terrible entertainment system misses the point. Sony understands entertainment, user interface design, and creating immersive experiences. The Afeela will likely emphasize these aspects.

The battery-electric platform is also telling. Every major automotive manufacturer is building autonomous capability into electric vehicles, not traditional combustion vehicles. This makes sense: electric drivetrains are simpler to control electronically, the battery management systems already require sophisticated computing, and the total lifecycle cost economics favor EVs.

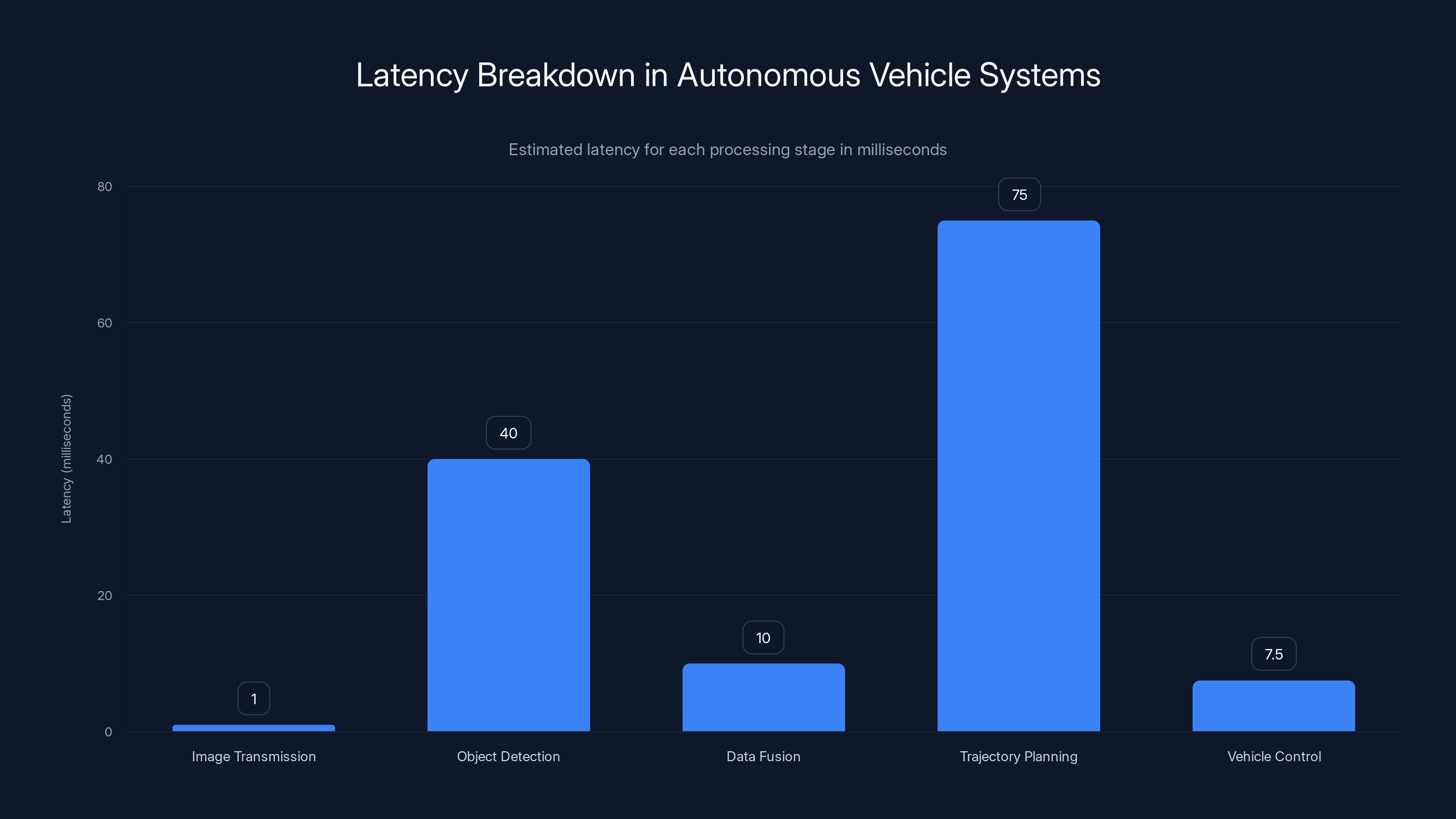

The total latency from image capture to vehicle response is approximately 100-150 milliseconds. This latency can introduce a 3-meter delay in response at 100 km/h. Estimated data.

Mercedes-Benz and Nvidia: Hands-Off Driving Coming to the US Market

Mercedes-Benz made a concrete announcement: they'll deploy a hands-off driving system in the US market in 2025, using Nvidia technology.

This is significant because Mercedes is a premium manufacturer with high standards for reliability and user experience. They won't deploy something that doesn't work reliably. Mercedes also has strong regulatory relationships, particularly in Germany and Europe, which makes US regulatory approval more likely.

The hands-off system is planned to eventually enable autonomous commuting—driving between home and work without driver intervention. Again, this is geofenced capability. It won't work everywhere. But for specific routes the car has been trained on, the car can handle the driving.

Technical requirements: Hands-off driving requires absolute reliability in certain scenarios. You need redundant sensor coverage. You need backup computing. You need to be able to reliably detect situations where the driver needs to take over. Mercedes has the engineering resources to build this correctly.

Using Nvidia's processors and software gives Mercedes access to proven technology. They're not reinventing autonomous driving from scratch. They're integrating Nvidia's DRIVE platform, training it on their specific routes and driving scenarios, and then deploying it.

The market signal: When Mercedes—a company obsessed with reliability and luxury—deploys an autonomous feature, it tells consumers this stuff is real. Not science fiction. Real technology with real limitations, but actual capability.

Geely, a Chinese automaker, is also using Nvidia technology for its "intelligent driving system." The architecture is similar: perception systems built on Nvidia processors, machine learning models optimized for their hardware, deployment on specific routes initially, and plans to expand over time.

Humanoid Robots on Factory Floors: Physical AI in Manufacturing

The CES 2025 announcements weren't limited to cars. Google DeepMind, Boston Dynamics, and Hyundai announced plans to deploy humanoid robots on Hyundai factory floors.

This is Physical AI applied to manufacturing. These robots need to perceive the environment (where are the parts? where are my hands relative to the assembly line?), understand tasks (how do I install this component?), reason about execution (if I approach at this angle, I'll collide with the conveyor belt), and act precisely.

Manufacturing is actually an ideal testing ground for Physical AI. The environments are somewhat controlled—you can standardize the lighting, the background, the layout. The tasks are repetitive enough that you can collect training data, but complex enough that you can't use simple programmed rules. It's a Goldilocks scenario for physical AI development.

Why automakers care: Manufacturing is expensive. Labor accounts for a substantial portion of automotive production costs. If robots can handle welding, painting, assembly, and quality inspection—tasks that are tedious, repetitive, and sometimes dangerous for humans—the economics become compelling.

But here's the subtlety: these robots aren't replacing workers entirely (at least not yet). They're handling specific tasks within a broader assembly process. Human workers remain essential for quality control, for handling exceptions, for flexible problem-solving. The robots handle the routine repetitive work.

This is actually a more realistic near-term application of Physical AI than fully autonomous vehicles. The environments are more controlled, the tasks are more defined, the error tolerance is different.

Cameras contribute the most to data generation in Physical AI systems, followed by LIDAR, radar, and ultrasonic sensors. Estimated data based on typical usage.

Computer Vision as the Foundation: How Cameras Enable Autonomy

Here's something important: all of Physical AI ultimately depends on computer vision. The sensors can capture information, but making sense of that information requires understanding images and video.

Modern computer vision relies on deep convolutional neural networks—AI systems trained on millions of images to recognize objects, detect patterns, and understand spatial relationships. A well-trained vision model can identify objects with accuracy exceeding 99 percent under ideal conditions. Under real-world conditions—nighttime, rain, snow, unusual angles—accuracy drops to 85-95 percent depending on the object class and conditions.

This is good enough for many tasks. It's not good enough for driving a car in dense urban traffic. That's why autonomous vehicles use multiple cameras with overlapping fields of view, plus LIDAR and radar providing additional information.

The redundancy principle: No single sensor or AI system is reliable enough. You need multiple independent systems providing information about the same situation. If camera-based object detection says "there's a pedestrian ahead," but LIDAR doesn't see anything, the system should assume the pedestrian exists and act cautiously. If three separate systems agree, confidence is higher.

This redundancy is expensive and computationally intensive, but it's essential. A failure mode where the system confidently identifies something that doesn't exist is different from a failure mode where the system fails to identify something real. The former can cause swerving into oncoming traffic. The latter can cause accidents by not stopping in time.
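A minimal sketch of the redundancy principle follows. It makes two loud assumptions: sensor misses are treated as statistically independent (real failures are often correlated, for example in heavy rain), and the "confidence" value is just the fraction of sensors that agree, a crude stand-in for whatever scoring a production system uses. The function names are hypothetical.

```python
from math import prod


def combined_miss_probability(miss_probs):
    """Chance that every sensor misses a real object, ASSUMING independent failures."""
    return prod(miss_probs)


def fused_decision(sensor_reports):
    """Conservative fusion: any single pedestrian report triggers caution.

    sensor_reports maps a sensor name to True if that sensor reports a pedestrian.
    Returns (decision, agreement), where agreement is the fraction of sensors
    that reported the pedestrian.
    """
    votes = sum(sensor_reports.values())
    if votes == 0:
        return "clear", 0.0
    return "caution", votes / len(sensor_reports)


if __name__ == "__main__":
    # Camera sees a pedestrian, LIDAR and radar do not: still act cautiously.
    print(fused_decision({"camera": True, "lidar": False, "radar": False}))
    # Three sensors that each miss 10% of the time (assumed figure).
    print(combined_miss_probability([0.1, 0.1, 0.1]))
```

Under the independence assumption, three sensors that each miss one object in ten jointly miss about one in a thousand, which is the quantitative case for paying the cost of redundancy.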

Training the models: Modern computer vision models are trained using supervised learning on massive datasets. Autonomous vehicle companies have collected billions of miles of driving data. They use that data to train models to recognize everything relevant to driving: pedestrians, cyclists, vehicles, road signs, lane markings, potholes, debris, weather conditions.

The challenge is generalization. A model trained primarily on sunny California driving might fail in snowy Wisconsin conditions. Models trained on urban streets might not generalize to rural roads. Companies spend enormous effort on dataset diversity to address this.

LIDAR, Radar, and Sensor Fusion: Building a Complete Picture

LIDAR creates three-dimensional maps by firing laser pulses and measuring how long they take to return. It's like sonar, but using light instead of sound. LIDAR is extraordinarily useful for autonomous vehicles because it provides precise distance measurements to every object in the environment. A LIDAR sensor can generate a point cloud with hundreds of thousands of points per second, each with accurate three-dimensional coordinates.
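The time-of-flight idea reduces to one formula: the pulse travels out and back, so range is the speed of light times the round-trip time, divided by two. The sketch below also converts a single return into a 3D point; the angle convention (azimuth in the horizontal plane, elevation above it) is an assumption chosen for illustration, since real sensors define their own frames.

```python
from math import cos, sin

SPEED_OF_LIGHT_MPS = 299_792_458


def lidar_range_m(round_trip_time_s):
    """Range to the reflecting surface: the pulse covers the distance twice."""
    return SPEED_OF_LIGHT_MPS * round_trip_time_s / 2


def point_from_return(round_trip_time_s, azimuth_rad, elevation_rad):
    """Convert one laser return into (x, y, z) in the sensor frame (assumed convention)."""
    r = lidar_range_m(round_trip_time_s)
    x = r * cos(elevation_rad) * cos(azimuth_rad)
    y = r * cos(elevation_rad) * sin(azimuth_rad)
    z = r * sin(elevation_rad)
    return x, y, z


if __name__ == "__main__":
    # A 200-nanosecond round trip corresponds to an object about 30 meters away.
    print(f"{lidar_range_m(200e-9):.2f} m")
```

The nanosecond timescale is why LIDAR needs precise timing electronics: an error of one nanosecond in the round trip shifts the measured range by about 15 centimeters.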

The downside? LIDAR systems are expensive. High-quality automotive LIDAR costs several thousand dollars. As volumes increase and manufacturing scales, costs will drop. But today, LIDAR is one of the reasons autonomous vehicle prototypes are so expensive.

Radar complements LIDAR. Radar measures not just distance, but velocity. It tells you that not only is there an object 50 meters ahead, but that object is approaching at 30 kilometers per hour. Radar also works better than LIDAR in heavy rain or snow—conditions where LIDAR performance degrades.
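Radar gets velocity from the Doppler effect: a reflection from an approaching object comes back at a slightly higher frequency, and the shift is proportional to the closing speed. A rough sketch, assuming a 77 GHz carrier (automotive radars commonly operate around that band, but the exact value here is illustrative):

```python
SPEED_OF_LIGHT_MPS = 299_792_458


def doppler_shift_hz(speed_mps, carrier_hz=77e9):
    """Frequency shift of the echo for a target closing at speed_mps."""
    return 2 * speed_mps * carrier_hz / SPEED_OF_LIGHT_MPS


def radial_speed_mps(shift_hz, carrier_hz=77e9):
    """Invert the Doppler relation to recover closing speed from the shift."""
    return shift_hz * SPEED_OF_LIGHT_MPS / (2 * carrier_hz)


if __name__ == "__main__":
    closing_speed = 30 / 3.6  # the 30 km/h example from the text, in m/s
    shift = doppler_shift_hz(closing_speed)
    print(f"Doppler shift: {shift:.0f} Hz")
    print(f"Recovered speed: {radial_speed_mps(shift) * 3.6:.1f} km/h")
```

Note that this only measures the radial component, the speed along the line between radar and target; motion across the field of view has to be inferred from tracking over time.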

Together, LIDAR, radar, and cameras provide complementary information. Cameras understand what things are (that's a pedestrian, that's a bicycle, that's a dog). LIDAR provides precise distance information. Radar provides velocity information. The autonomous vehicle system fuses all three information sources into a unified world model.

Sensor fusion algorithms: Combining information from multiple sensors requires sophisticated algorithms. You need to determine which sensor observations correspond to the same physical object, account for latency differences between sensors, handle sensor failures or degradation gracefully, and update the world model in real time.
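One small piece of this can be sketched directly: combining several range estimates for the same object while tolerating a failed sensor. The example uses inverse-variance weighting, a standard textbook technique and not necessarily what any particular automaker ships. It assumes the association step is already done (all readings refer to one object) and ignores latency alignment; the variance figures are made up for illustration.

```python
def fuse_range_estimates(readings):
    """Inverse-variance weighted fusion of range readings for a single object.

    readings maps sensor name -> (range_m or None, variance).
    A sensor that has failed or dropped out reports None and is skipped.
    Returns (fused_range_m, fused_variance).
    """
    valid = [(value, var) for value, var in readings.values() if value is not None]
    if not valid:
        raise ValueError("no usable sensor readings")
    weights = [1.0 / var for _, var in valid]
    total_weight = sum(weights)
    fused = sum(w * value for w, (value, _) in zip(weights, valid)) / total_weight
    return fused, 1.0 / total_weight


if __name__ == "__main__":
    # Hypothetical variances: LIDAR is precise, the camera's range estimate is not.
    readings = {"camera": (52.0, 4.0), "lidar": (50.0, 0.01), "radar": (50.5, 0.25)}
    print(fuse_range_estimates(readings))

    # LIDAR drops out (say, heavy snow): the estimate degrades but still exists.
    readings["lidar"] = (None, 0.01)
    print(fuse_range_estimates(readings))
```

The design choice worth noticing is that a sensor failure widens the uncertainty instead of crashing the pipeline, which is the "graceful degradation" the text describes.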

This is a hard problem in computer science and engineering. The algorithms require low-latency processing, they need to run on automotive-grade computing hardware, they need to be robust to sensor failures, and they need to produce accurate, reliable output repeatedly in diverse driving conditions.

Companies have been working on sensor fusion for years. The technology is mature enough for commercial deployment, but it's not trivial. Mistakes in sensor fusion can cause serious accidents.

Estimated data shows a significant reduction in autonomous vehicle hardware costs over the next decade, potentially dropping below $5,000 by 2030, making it economically viable for commercial use.

Real-Time Processing and Latency: Why Milliseconds Matter

Autonomous vehicle systems need to make decisions quickly. If a pedestrian steps into the road 30 meters ahead, the vehicle has roughly 1-2 seconds before impact (depending on speed). The entire perception, reasoning, and action cycle needs to complete in milliseconds.
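The 1-2 second figure follows from simple kinematics, assuming the vehicle holds its speed and the pedestrian stays put. The helper name below is hypothetical.

```python
def time_to_impact_s(distance_m, speed_kmh):
    """Seconds until a vehicle at constant speed covers distance_m."""
    speed_mps = speed_kmh / 3.6
    return distance_m / speed_mps


if __name__ == "__main__":
    for speed in (50, 100):
        print(f"{speed} km/h: {time_to_impact_s(30, speed):.2f} s to cover 30 m")
```

At 100 km/h the 30-meter gap closes in just over one second; at 50 km/h it takes a little over two, which brackets the range quoted above.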

Let's think about the latency budget. A camera captures an image. That image is transmitted to the computing system (latency: ~1 millisecond). The image is processed through the neural network for object detection (latency: ~30-50 milliseconds). The detected objects are tracked and fused with data from other sensors (latency: ~10 milliseconds). The planning algorithm computes the optimal trajectory (latency: ~50-100 milliseconds). The vehicle control system translates the desired trajectory into steering, acceleration, and braking commands (latency: ~5-10 milliseconds).

Total: roughly 100-150 milliseconds from image capture to vehicle response in the typical case, and closer to 170 milliseconds if every stage hits the top of its range.

At 100 kilometers per hour, a vehicle travels roughly 2.8 meters every 100 milliseconds. So the latency means the car covers about 3 to 4 meters before it even begins to respond to an obstacle. For higher speeds or more complex scenarios, you need even lower latency.
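The budget can be tallied directly from the per-stage figures quoted above. Summing the low and high ends of each stage gives 96 to 171 milliseconds, which at 100 km/h corresponds to somewhere between roughly 2.7 and 4.75 meters traveled before the vehicle responds. The stage names and dictionary layout are just one convenient way to organize the numbers.

```python
# (low, high) latency per stage in milliseconds, taken from the figures in the text.
LATENCY_BUDGET_MS = {
    "image transfer": (1, 1),
    "object detection": (30, 50),
    "tracking and fusion": (10, 10),
    "trajectory planning": (50, 100),
    "vehicle control": (5, 10),
}


def total_latency_ms(budget):
    """Best-case and worst-case end-to-end latency across all stages."""
    low = sum(lo for lo, _ in budget.values())
    high = sum(hi for _, hi in budget.values())
    return low, high


def distance_during_latency_m(latency_ms, speed_kmh):
    """Distance the vehicle covers while the pipeline is still computing."""
    return (speed_kmh / 3.6) * (latency_ms / 1000.0)


if __name__ == "__main__":
    low_ms, high_ms = total_latency_ms(LATENCY_BUDGET_MS)
    for ms in (low_ms, high_ms):
        meters = distance_during_latency_m(ms, 100)
        print(f"{ms} ms -> {meters:.2f} m at 100 km/h")
```

Laying the budget out this way also shows where optimization pays off: trajectory planning and object detection dominate, while transfer and control are close to negligible.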

This is why automotive computing hardware is specialized. General-purpose processors like conventional CPUs aren't optimized for this workload. Automotive computing platforms include dedicated hardware accelerators for machine learning inference, real-time processing kernels for vehicle control, and redundant systems for critical functions.

The cost of reliability: All of this specialized hardware is expensive. A single autonomous vehicle computing platform might cost $5,000-10,000 in component costs. Manufacturing, integration, validation, and support add more costs. When autonomous vehicles finally scale to millions of units annually, these costs will decrease through manufacturing economies of scale, but today they're high.

This is why Physical AI is generating so much interest from chipmakers. Every autonomous vehicle needs sophisticated computing hardware. If you're supplying the chips for millions of vehicles, the revenue opportunity is enormous.

The Regulatory Landscape: Safety Standards and Approval Processes

Autonomous vehicles don't just need to work technically. They need to be approved by regulators who care deeply about safety.

In the United States, the National Highway Traffic Safety Administration (NHTSA) oversees vehicle safety. In Europe, the European Commission sets standards. Different jurisdictions have different requirements, and they're still being finalized.

Regulators are fundamentally asking: "Is this technology safer than human driving?" This seems like an obvious bar to meet—human drivers cause ~40,000 deaths annually in the US alone. But it's actually a harder bar than it seems, because you need to prove safety through data.

Random accidents happen unpredictably. To prove that autonomous vehicles are safer, companies need to collect data demonstrating that autonomous vehicles have fewer accidents per mile driven than human drivers. This requires enormous amounts of real-world testing data.
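A rough sense of "enormous" comes from a standard statistical bound: if fatal crashes are modeled as a Poisson process and a fleet drives n miles with zero fatalities, the true rate can be bounded below r with a given confidence once n reaches -ln(1 - confidence) / r. The sketch below applies that bound. The 40,000 deaths figure is from this article; the 3 trillion annual US vehicle miles is an approximate assumption used only to get a per-mile rate.

```python
from math import log


def miles_to_demonstrate(rate_per_mile, confidence=0.95):
    """Failure-free miles needed to bound the true rate below rate_per_mile.

    Assumes events follow a Poisson process and that zero events are observed.
    """
    return -log(1.0 - confidence) / rate_per_mile


if __name__ == "__main__":
    annual_deaths = 40_000        # from the text
    annual_vehicle_miles = 3e12   # assumed, approximate US total
    human_rate = annual_deaths / annual_vehicle_miles
    miles = miles_to_demonstrate(human_rate)
    print(f"About {miles / 1e6:.0f} million fatality-free miles")
```

Under these assumptions the answer is on the order of a couple hundred million miles just to match the human fatality rate, and showing a meaningful improvement over it takes considerably more.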

Testing and validation: This is why companies like Tesla, Waymo, and others have conducted hundreds of millions of miles of autonomous driving. They're collecting data to demonstrate safety. It also helps that every mile of testing produces more data to improve the systems.

But there's a slower, more careful approach as well. Rather than requesting blanket approval for full autonomy, companies are requesting approval for specific features in specific conditions. Ford's eyes-off driving for 2028 is exactly this approach: approval for highway driving, not urban driving. Mercedes's hands-off system is similar.

Regulators are comfortable with this incremental approach because it allows them to observe real-world performance before expanding the scope of permitted autonomy.

Liability questions: Who's responsible if an autonomous vehicle causes an accident? The manufacturer? The software company? The vehicle owner? These questions remain unsettled. Insurance companies, regulators, and legal systems are all working through these issues.

Most proposals converge on manufacturer liability, at least for the autonomous system itself. If the system fails dangerously, the manufacturer bears responsibility. This creates strong incentives for quality and thorough testing before deployment.

The semiconductor content in autonomous vehicles is projected to grow significantly, reaching $123 billion by 2032. Estimated data based on industry trends.

Cybersecurity and Connected Vehicle Threats

Autonomous vehicles are computers on wheels. They have network connectivity, they run AI systems, they accept over-the-air updates. All of this creates security challenges.

A successful cyberattack on an autonomous vehicle could cause catastrophic harm. Imagine remote attackers disabling the braking system, or causing the vehicle to accelerate unexpectedly, or disabling the steering system. It's theoretically possible and genuinely terrifying.

This is why automotive cybersecurity is becoming a major industry focus. Vehicles need encrypted communication with backend servers. They need secure boot processes that prevent unauthorized firmware modifications. They need intrusion detection systems that identify suspicious behavior. They need rapid patching mechanisms that can deploy security updates without requiring drivers to visit dealerships.
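The core idea behind secure boot and authenticated updates is refusing to run an image whose integrity tag does not check out. The sketch below shows that idea with an HMAC over the firmware bytes, using only Python's standard library. This is a deliberate simplification: production vehicles generally rely on asymmetric signatures anchored in a hardware root of trust, not a shared secret, and the key and image values here are placeholders.

```python
import hashlib
import hmac


def sign_image(key: bytes, image: bytes) -> bytes:
    """Compute an authentication tag over the firmware image (HMAC-SHA256)."""
    return hmac.new(key, image, hashlib.sha256).digest()


def verify_image(key: bytes, image: bytes, tag: bytes) -> bool:
    """Accept the image only if its tag matches, using a constant-time comparison."""
    return hmac.compare_digest(sign_image(key, image), tag)


if __name__ == "__main__":
    key = b"placeholder-shared-secret"      # illustrative only
    firmware = b"brake-controller-firmware"  # stand-in for real image bytes
    tag = sign_image(key, firmware)

    print(verify_image(key, firmware, tag))                # untouched image
    print(verify_image(key, firmware + b"-patched", tag))  # tampered image
```

The constant-time comparison matters: a naive byte-by-byte equality check can leak how many leading bytes of a forged tag were correct.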

Standards and requirements: ISO 27001 and related standards provide general frameworks for information security. The automotive industry has adapted these ideas into vehicle-specific standards such as ISO/SAE 21434, which covers cybersecurity engineering for road vehicles.

European regulators have been particularly rigorous on this. The EU's proposed regulations for autonomous vehicles include specific cybersecurity requirements. Manufacturers need to demonstrate that they can prevent and respond to cyberattacks.

This is expensive and complex, but it's necessary. A security breach that causes accidents is unacceptable. The bar for automotive cybersecurity is appropriately higher than for most software systems.

Data Collection and Privacy: The Information Economy of Autonomous Vehicles

Autonomous vehicles collect enormous amounts of data. They record everything: video from cameras, LIDAR point clouds, radar data, GPS location, vehicle speed, steering angle, acceleration. All of this data is useful for training and improving systems.

But it's also private information. When you drive in an autonomous vehicle, you're revealing where you go, when you go there, how fast you drive, where you stop. This data could be used to build detailed profiles of your movement patterns, your habits, your relationships.

Privacy vs. improvement: The tension is real. Better autonomous vehicles require better training data. Better training data requires collecting real-world driving information. Protecting privacy requires limiting data collection or anonymizing data, which makes it less useful for training.

Companies are exploring various solutions: collecting data locally on the vehicle and only sending anonymized summaries to servers; using federated learning where models are trained on-device rather than on centralized servers; differential privacy techniques that add noise to datasets to prevent identifying individuals while preserving overall patterns.
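Of the techniques listed, differential privacy is the easiest to show in a few lines. A minimal sketch of the Laplace mechanism follows: noise scaled to sensitivity / epsilon is added to an aggregate count before it leaves the vehicle or the server. The function names, the epsilon value, and the "trips past a location" example are illustrative assumptions.

```python
import random


def laplace_noise(scale, rng=random):
    """Sample Laplace(0, scale) as the difference of two exponential draws."""
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def private_count(true_count, epsilon, sensitivity=1.0, rng=random):
    """Release a count with Laplace noise calibrated to sensitivity / epsilon.

    Smaller epsilon means stronger privacy and a noisier answer.
    """
    return true_count + laplace_noise(sensitivity / epsilon, rng)


if __name__ == "__main__":
    rng = random.Random(42)
    # Hypothetical aggregate: how many trips passed a given intersection today.
    for epsilon in (0.1, 1.0):
        noisy = private_count(1000, epsilon, rng=rng)
        print(f"epsilon={epsilon}: reported {noisy:.1f}")
```

The trade-off in the text shows up directly in the `epsilon` parameter: the noise that hides any one driver's contribution is the same noise that makes the data less precise for training and analysis.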

But these solutions have trade-offs. Local processing is more computationally expensive. Federated learning is more complex to implement. Differential privacy can reduce data quality.

Regulators are paying attention. The EU's GDPR applies to vehicle data collection. California's privacy laws will likely apply to autonomous vehicles operating there. Consumers should expect future regulations around how autonomous vehicle data can be collected, stored, and used.

The Economics of Autonomy: Cost Reduction and Adoption Timeline

Autonomous vehicles currently are expensive because they require expensive computing hardware, expensive sensors, and expensive development. The question is: when do they become economically competitive with human drivers?

Let's do the math. A truck driver with benefits costs roughly

An autonomous vehicle system needs to cost less than that to be economically attractive to fleet operators. The computing hardware alone might cost $10,000 today, but that will decrease with manufacturing scale. LIDAR costs will decrease. Sensor costs will decrease. Development costs will be amortized across millions of vehicles.

Within 5-10 years, the hardware costs for autonomous vehicle systems will likely drop below $5,000. At that price point, the economics become compelling for commercial applications—trucking, delivery, taxi services.
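It helps to see what that projection implies as a yearly rate. Going from the roughly $10,000 quoted above to $5,000 is a halving, and the sketch below computes the constant annual decline needed to get there over 5 or 10 years. The assumption of a steady compound decline is a simplification; real cost curves tend to move in steps.

```python
def required_annual_decline(start_cost, target_cost, years):
    """Constant yearly fractional decline that takes start_cost to target_cost."""
    return 1.0 - (target_cost / start_cost) ** (1.0 / years)


def projected_cost(start_cost, annual_decline, years):
    """Cost after compounding a fixed yearly decline."""
    return start_cost * (1.0 - annual_decline) ** years


if __name__ == "__main__":
    for years in (5, 10):
        rate = required_annual_decline(10_000, 5_000, years)
        print(f"{years} years: costs must fall about {rate:.1%} per year")
```

Halving in five years requires costs to fall about 13 percent a year; stretching to ten years needs under 7 percent, so the projection depends heavily on which end of the 5-10 year window turns out to be right.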

Consumer vehicles are a different story. Consumers won't pay $15,000 for an autonomous system that only works on highways and in good weather. They'll want full autonomy that works everywhere. That's a harder problem technologically and economically.

Timeline reality check: Near-term adoption (next 5 years): specialized applications like highway trucking, shuttle services in controlled environments, robotaxis in specific cities. Mid-term (5-10 years): broader geographic expansion, more weather conditions, more scenarios. Long-term (10+ years): possibly full autonomy in all conditions, but this is genuinely uncertain.

The companies making near-term timeline claims are being optimistic. The companies saying "we don't know when full autonomy will arrive" are probably being honest. Autonomous driving is hard. Harder than many in the industry initially expected.

Unexpected Challenges: Why Physical AI Is Harder Than Predicted

Despite years of development and enormous investment, autonomous vehicles remain challenging. Why?

The long tail problem: Most driving scenarios are straightforward. Detecting pedestrians, reading traffic signs, maintaining lane position—these problems are solved or nearly solved. But the edge cases remain difficult. A pedestrian with an umbrella obscuring their face. A traffic sign covered in snow. A vehicle swerving unexpectedly. An animal in the road. A road surface with unusual markings. An unusual intersection design.

These scenarios account for a tiny percentage of all driving, but across the millions of miles a fleet covers they come up often enough to cause problems. Each scenario requires specific training data and careful testing.
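A rough way to see why rare scenarios still matter at fleet scale is to multiply a small per-mile rate by a large mileage. The sketch below does that in Python. The rate of one occurrence per million miles, the mileage figures, and the function names are all invented for illustration, and the Poisson model is a simplifying assumption.

```python
import math


def expected_encounters(rate_per_mile, miles):
    """Expected number of times a rare scenario shows up over the given miles."""
    return rate_per_mile * miles


def prob_at_least_one(rate_per_mile, miles):
    """Chance of meeting the scenario at least once, modeling encounters
    as a Poisson process with a constant per-mile rate."""
    return 1.0 - math.exp(-rate_per_mile * miles)


# Hypothetical numbers, chosen only to show the scale effect.
RATE = 1e-6                      # one occurrence per million miles
ONE_DRIVER_MILES = 12_000        # a single driver's year
FLEET_MILES = 1_000_000_000      # a large fleet's year

print(f"Single driver, chance per year: {prob_at_least_one(RATE, ONE_DRIVER_MILES):.2%}")
print(f"Fleet, expected encounters per year: {expected_encounters(RATE, FLEET_MILES):,.0f}")
```

An event that an individual driver will almost never see becomes a routine occurrence for a fleet, which is why each edge case needs its own data and testing.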

Weather challenges: LIDAR performance degrades significantly in heavy rain or snow. Camera-based systems struggle with snow covering lane markings. Sensor fusion algorithms need to gracefully degrade when some sensors are unreliable.

Companies have been testing in diverse weather conditions, but there's always another edge case. The combination of heavy rain, darkness, unexpected road geometry, and other road users is genuinely challenging.
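One common way to express graceful degradation is to weight each sensor by how much it can currently be trusted and to drop sensors that fall below a trust threshold. The Python sketch below uses inverse-variance weighting for a single distance estimate. It is an illustration under stated assumptions, not any vendor's actual fusion pipeline: the function name `fuse_range`, the variance values, and the `max_variance` cutoff are all hypothetical.

```python
def fuse_range(readings, max_variance=4.0):
    """Fuse distance estimates from several sensors.

    readings maps a sensor name to (distance_m, variance_m2), or to None
    when the sensor reports no usable measurement.
    Returns (fused_distance, fused_variance, sensors_used), or
    (None, None, []) if no sensor is trustworthy enough.
    """
    # Keep only sensors that reported a value with acceptable uncertainty.
    usable = {name: r for name, r in readings.items()
              if r is not None and 0 < r[1] <= max_variance}
    if not usable:
        return None, None, []
    # Inverse-variance weights: noisier sensors count for less.
    weights = {name: 1.0 / var for name, (_, var) in usable.items()}
    total = sum(weights.values())
    fused = sum(weights[name] * dist for name, (dist, _) in usable.items()) / total
    return fused, 1.0 / total, sorted(usable)


# Hypothetical readings: clear weather versus heavy rain.
clear = {"lidar": (50.0, 0.01), "radar": (50.4, 0.25), "camera": (49.0, 1.0)}
rain = {"lidar": (47.0, 9.0), "radar": (50.4, 0.25), "camera": None}

print(fuse_range(clear))
print(fuse_range(rain))
```

In the rain case the LIDAR reading is discarded for being too noisy and the camera contributes nothing, so the estimate falls back to radar alone with a larger variance. A planner downstream could respond to that larger variance by slowing down or widening its safety margins.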

Human behavior unpredictability: Humans do strange things on the road. They make unexpected gestures. They ignore traffic rules. They behave inconsistently. Training AI systems to predict and respond to human behavior requires capturing that variability in training data.

Some of this unpredictability is genuinely irreducible. You can't completely eliminate the possibility that someone will do something unexpected.

Regulatory uncertainty: Regulators are still defining what's acceptable for autonomous vehicles. Requirements change. Standards evolve. Companies working toward one regulatory framework might find the framework has shifted.

Future Roadmap: Where Physical AI in Automotive Is Heading

Let's look forward. What's the trajectory for Physical AI in automobiles?

Near-term (2025-2027):

- Eyes-off and hands-off driving systems in specific conditions (highways, good weather)

- Expanded geographic deployment for these systems

- Introduction of humanoid robots in automotive manufacturing

- Continued increase in semiconductor content per vehicle

- Early commercial deployment of robotaxis in controlled environments

Medium-term (2027-2032):

- Full autonomy in specific geographic areas (particular cities, routes)

- Significant expansion of commercial autonomous trucking

- Consumer vehicles with advanced driver assistance systems that approach autonomy in specific scenarios

- Integration of AI systems that handle increasingly complex scenarios

- Regulatory frameworks becoming more settled

Long-term (2032+):

- Uncertain. Will technology advances enable true full autonomy? Or will practical limitations prove insurmountable? Will consumer acceptance reach the point where autonomous vehicles are mainstream?

The honest answer is: nobody knows for certain. The industry is making progress, but the problems remaining are genuinely hard.

The Real Story: Hype Versus Reality

Cutting through the marketing language, here's what's actually happening:

Chipmakers like Nvidia and ARM see a massive market opportunity. Autonomous vehicles require powerful computing hardware, and that hardware is becoming more important every year. They're using "Physical AI" as a term that describes this reality and makes it sound exciting.

Automakers are making real progress on autonomous systems. They're deploying specific features in specific conditions. The technology works, but only in constrained scenarios.

Regulators are proceeding cautiously, which is appropriate. Safety is paramount.

Consumers will benefit from gradual introduction of autonomous features over years and decades. The near-term won't bring flying cars or cars that handle any scenario. But it will bring better driver assistance, reduced accident rates, and slowly expanding autonomy in specific conditions.

The term "Physical AI" is useful because it captures something real: autonomous systems interacting with the physical world. But like all marketing terms, it's a simplification. The reality is messier, more complex, and more interesting than the buzzword suggests.

TL;DR

- Physical AI describes autonomous systems that perceive, reason about, and act in physical environments using cameras, sensors, and AI models

- The market opportunity is genuine: semiconductor content in autonomous vehicles could reach $123 billion by 2032, driving major investment from chipmakers

- Specific timelines are credible: Ford's eyes-off driving by 2028, Mercedes's hands-off system in 2025, but only for highway conditions and good weather

- Technology challenges remain significant: edge cases, weather conditions, unpredictable human behavior, and regulatory uncertainty all pose ongoing challenges

- Near-term adoption favors commercial applications: trucking, delivery, shuttles in controlled environments are more feasible than consumer autonomous vehicles

- Security and privacy are critical concerns: autonomous vehicles collect massive amounts of data and face cybersecurity threats that require sophisticated defensive measures

FAQ

What is Physical AI in the context of automobiles?

Physical AI refers to autonomous systems that use cameras, sensors, and AI models to perceive their environment, understand what's happening, reason about appropriate responses, and take actions in the real world. In automobiles, it means vehicles that can detect pedestrians, read traffic signs, predict behavior, make driving decisions, and control steering, acceleration, and braking without human intervention in specific scenarios.

How does Physical AI differ from traditional autonomous driving systems?

Physical AI emphasizes the complete cycle of perception, understanding, reasoning, and action, while traditional autonomous driving might focus primarily on perception and basic control. Physical AI systems need to understand complex scenarios, predict future events, reason about multiple possible outcomes, and select appropriate actions. This requires more sophisticated AI models and more computational power than earlier autonomous driving approaches.

What are the main sensor technologies used in Physical AI automotive systems?

Autonomous vehicles typically use multiple complementary sensors: cameras for visual understanding, LIDAR for precise three-dimensional distance measurements, radar for velocity detection and performance in adverse weather, and ultrasonic sensors for close-range obstacle detection. Nvidia's DRIVE platform coordinates data from these sensors to build a unified understanding of the vehicle's environment.

Why are chipmakers like Nvidia investing so heavily in automotive AI?

Chipmakers are investing heavily because autonomous vehicles require significantly more computing power than traditional vehicles. As vehicles transition from simple driver assistance to more sophisticated autonomous systems, semiconductor content per vehicle increases dramatically. This is a multibillion-dollar market opportunity, with projections suggesting semiconductor content in autonomous vehicles could represent a $123 billion opportunity by 2032.

When will fully autonomous vehicles be available for consumers?

Full autonomy across all driving conditions and scenarios remains years or decades away. Near-term deployments (2025-2028) focus on specific scenarios like highway driving or controlled environments. Commercial applications like autonomous trucking and robotaxis in limited geographic areas are likely to arrive first. Consumer vehicles with full autonomy are further out and depend on continued progress in AI, regulatory approval, and cost reduction in autonomous systems.

What are the main challenges preventing faster adoption of Physical AI in vehicles?

Key challenges include handling edge cases and unusual driving scenarios that rarely occur but need careful handling, maintaining performance in adverse weather conditions where sensor reliability degrades, unpredictability of human driver behavior, high costs of autonomous system components, unresolved regulatory frameworks, cybersecurity vulnerabilities, and consumer acceptance concerns. These problems are being addressed gradually, but progress is slower than early optimists predicted.

How does sensor fusion improve autonomous vehicle safety?

Sensor fusion combines information from multiple independent sensors to create a more complete and reliable understanding of the environment. If cameras detect an object but LIDAR doesn't, the system can make conservative assumptions about uncertainty. If multiple sensors agree on an observation, confidence is higher. This redundancy prevents catastrophic failures from single sensor malfunctions and improves reliability in diverse conditions where individual sensors might perform poorly.
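The agree-or-disagree logic in this answer can be sketched in a few lines of Python. This is a simplified illustration, not how any production stack is known to work: the noisy-OR combination assumes sensors err independently, and the function names, labels, and 0.5 threshold are hypothetical.

```python
def combined_detection_probability(per_sensor_probs):
    """Noisy-OR: probability that at least one detection is real,
    assuming the sensors make errors independently."""
    miss = 1.0
    for p in per_sensor_probs:
        miss *= (1.0 - p)
    return 1.0 - miss


def classify(per_sensor_probs, detect_threshold=0.5):
    """Label an observation by how many sensors report a detection.

    per_sensor_probs maps a sensor name to its detection probability.
    """
    votes = sum(p >= detect_threshold for p in per_sensor_probs.values())
    if votes == 0:
        return "clear"
    if votes == len(per_sensor_probs):
        return "confirmed"
    return "uncertain"   # sensors disagree, so plan conservatively


agree = {"camera": 0.9, "lidar": 0.8}
disagree = {"camera": 0.9, "lidar": 0.1}

print(classify(agree), combined_detection_probability(agree.values()))
print(classify(disagree), combined_detection_probability(disagree.values()))
```

When both sensors fire, the combined probability is higher than either alone. When only the camera fires, the observation is labeled uncertain rather than dismissed, which is the conservative behavior the answer describes.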

What role do large language models play in Physical AI for automobiles?

Large language models can help with natural language understanding for voice interfaces, dialogue systems that allow passengers to interact conversationally with vehicles, and potentially reasoning about complex driving scenarios described in text. However, the core autonomous driving problem—perception, decision-making, and control—primarily relies on computer vision models and reinforcement learning rather than large language models.

How are automakers addressing cybersecurity in autonomous vehicles?

Automakers are implementing encrypted communication with backend servers, secure boot processes that prevent unauthorized firmware modifications, intrusion detection systems that identify suspicious behavior, and over-the-air update mechanisms for rapid security patches. Regulatory requirements, particularly in Europe, mandate specific cybersecurity measures. The automotive industry is adapting cybersecurity standards from other domains while addressing vehicle-specific security challenges.

What data privacy concerns arise from autonomous vehicles?

Autonomous vehicles collect continuous data about location, movement patterns, routes, stops, and timing. This detailed information could reveal sensitive personal information about where people work, socialize, and spend time. Privacy concerns have prompted development of techniques like on-device processing, federated learning, and differential privacy to protect individual privacy while maintaining the data quality needed to improve autonomous systems. Regulations like GDPR and emerging vehicle-specific privacy laws will govern how autonomous vehicle data can be collected and used.
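Of the techniques named here, differential privacy is the easiest to show in a few lines. The Python sketch below applies the Laplace mechanism, one standard way to achieve it, to a simple count. The scenario (how many vehicles stopped at a location), the function name `private_count`, and the epsilon values are assumptions for illustration. Nothing here describes what a specific automaker actually deploys.

```python
import random


def private_count(true_count, epsilon, rng, sensitivity=1.0):
    """Release a count with epsilon-differential privacy (Laplace mechanism).

    sensitivity is how much one vehicle can change the count (1 for a
    simple count). Smaller epsilon means more noise and more privacy.
    """
    scale = sensitivity / epsilon
    # The difference of two independent exponential draws with mean `scale`
    # is Laplace-distributed with location 0 and that scale.
    noise = rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)
    return true_count + noise


rng = random.Random(42)        # seeded only to make the demo repeatable
true_stops = 120               # hypothetical: vehicles that stopped at one location

for epsilon in (0.1, 1.0, 10.0):
    released = private_count(true_stops, epsilon, rng)
    print(f"epsilon={epsilon}: released count is about {released:.1f}")
```

The released value stays useful in aggregate because the noise averages out across many queries or locations, while any single vehicle's presence or absence is masked by the noise.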

Key Takeaways

- Physical AI enables autonomous systems to perceive environments through multiple sensors, reason about complex scenarios, and take real-world actions—but success depends on robust computing hardware and extensive real-world testing

- The semiconductor opportunity in autonomous vehicles is genuine: computing content per vehicle is projected to increase dramatically, potentially reaching a $123 billion market by 2032

- Near-term autonomous driving deployments (2025-2028) focus on specific, well-defined scenarios like highway driving—full autonomy across all conditions remains years away despite industry optimism

- Technical challenges remain substantial: edge case handling, weather robustness, human behavior unpredictability, and regulatory uncertainty all complicate autonomous vehicle deployment

- Commercial applications like robotaxis in controlled areas and autonomous trucking will likely arrive before consumer autonomous vehicles, following the incremental regulatory approval strategy
