TikTok Data Center Outage Sparks Censorship Fears: What Really Happened

Last week, something happened on TikTok that shouldn't have made headlines but absolutely did. The app went down across the United States, and millions of users couldn't post content, couldn't view videos properly, and couldn't engage with the platform in ways they'd grown accustomed to. Sounds like a normal technical glitch, right? Except the timing was catastrophic.

This outage occurred just days after TikTok officially transferred ownership of its American operations to a group of majority-US investors in what's being called the TikTok US Data Security entity, or USDS. The timing alone was enough to send shockwaves through the internet. Users weren't just frustrated about a broken app; they were questioning whether something more sinister was happening behind the scenes.

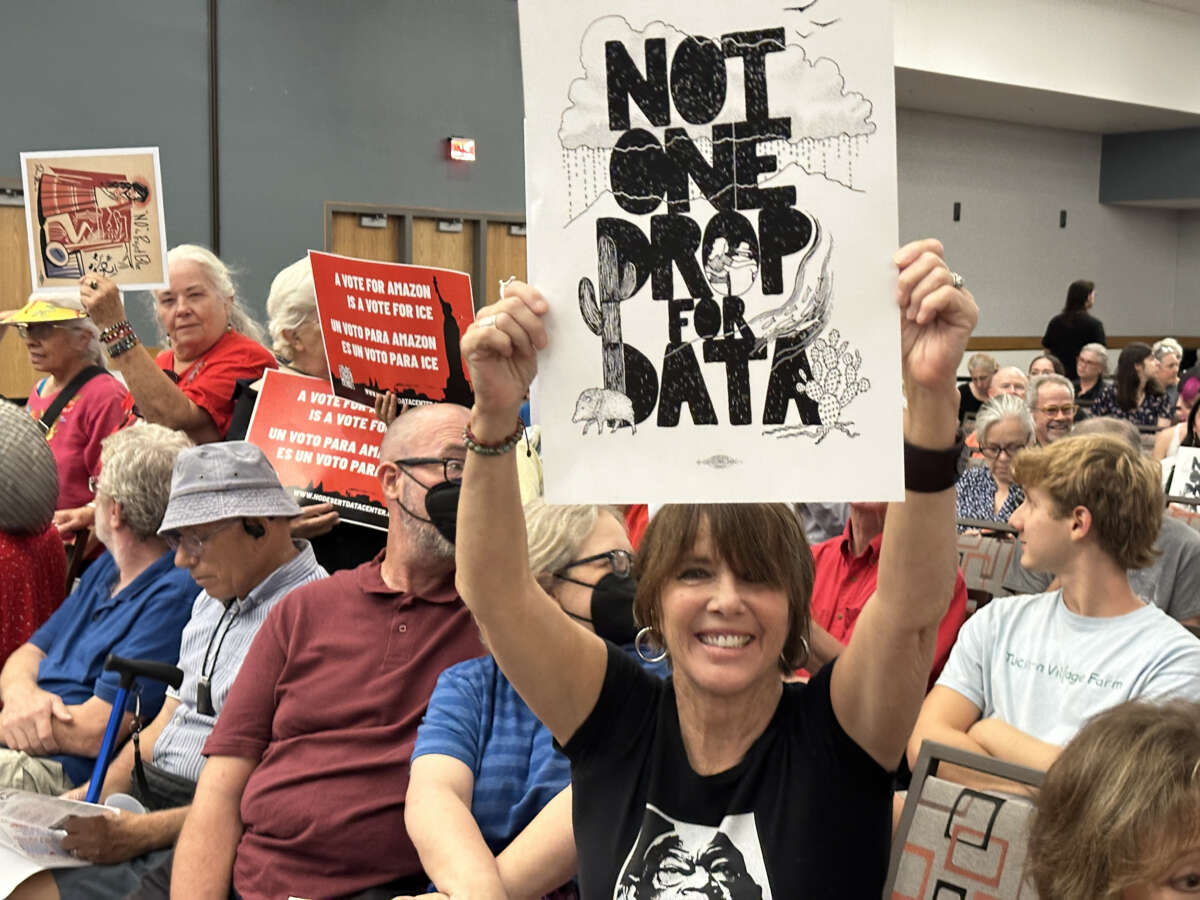

The conspiracy theory that emerged was simple but potent: the new American owners were using this "outage" as cover to suppress videos critical of ICE (Immigration and Customs Enforcement) operations happening in Minneapolis. Videos documenting immigration protests, ICE raids, and civil rights concerns were being blocked or buried. At least, that's what many users believed. The problem is separating what actually happened from what people feared might happen.

This incident sits at the intersection of three major issues: technical infrastructure failures, platform trust in the social media era, and the politically charged debates surrounding content moderation and government power. Understanding what really occurred requires looking at the technical facts, the political context, and the very real concerns about algorithmic manipulation that have become central to how we think about modern social media.

Let's break down what happened, why people thought what they thought, and what it tells us about the future of one of the world's most influential social media platforms.

TL;DR

- The Outage Was Real: A genuine power outage at a US data center disrupted TikTok service across the country for hours, leaving millions of users unable to upload or view content properly.

- Timing Made It Explosive: The outage occurred days after new US ownership took control, creating a perfect storm of skepticism about whether the new owners were intentionally suppressing content.

- The Specific Concern: Videos related to ICE operations in Minneapolis became the focal point, with users unable to post or share content about immigration enforcement and protests.

- Oracle Connection Fueled Suspicion: The data center partner, Oracle, was co-founded by Larry Ellison, a Trump ally who remains its chairman and largest shareholder, raising fears about algorithmic favoritism toward certain political viewpoints.

- The Reality Was Messy: While TikTok denied censorship allegations, the outage exposed deeper anxieties about corporate ownership changes, algorithmic transparency, and who actually controls what billions of people see.

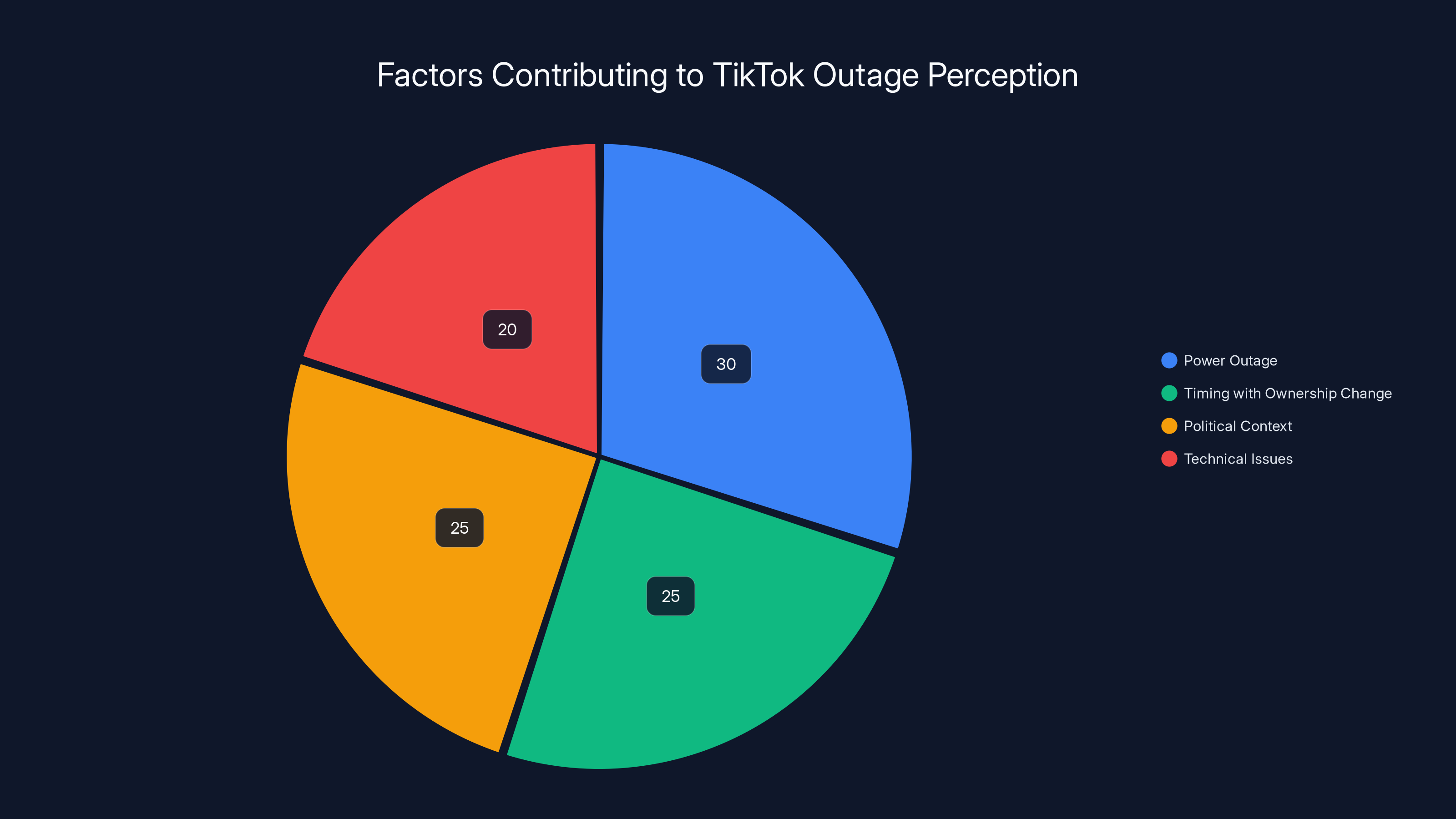

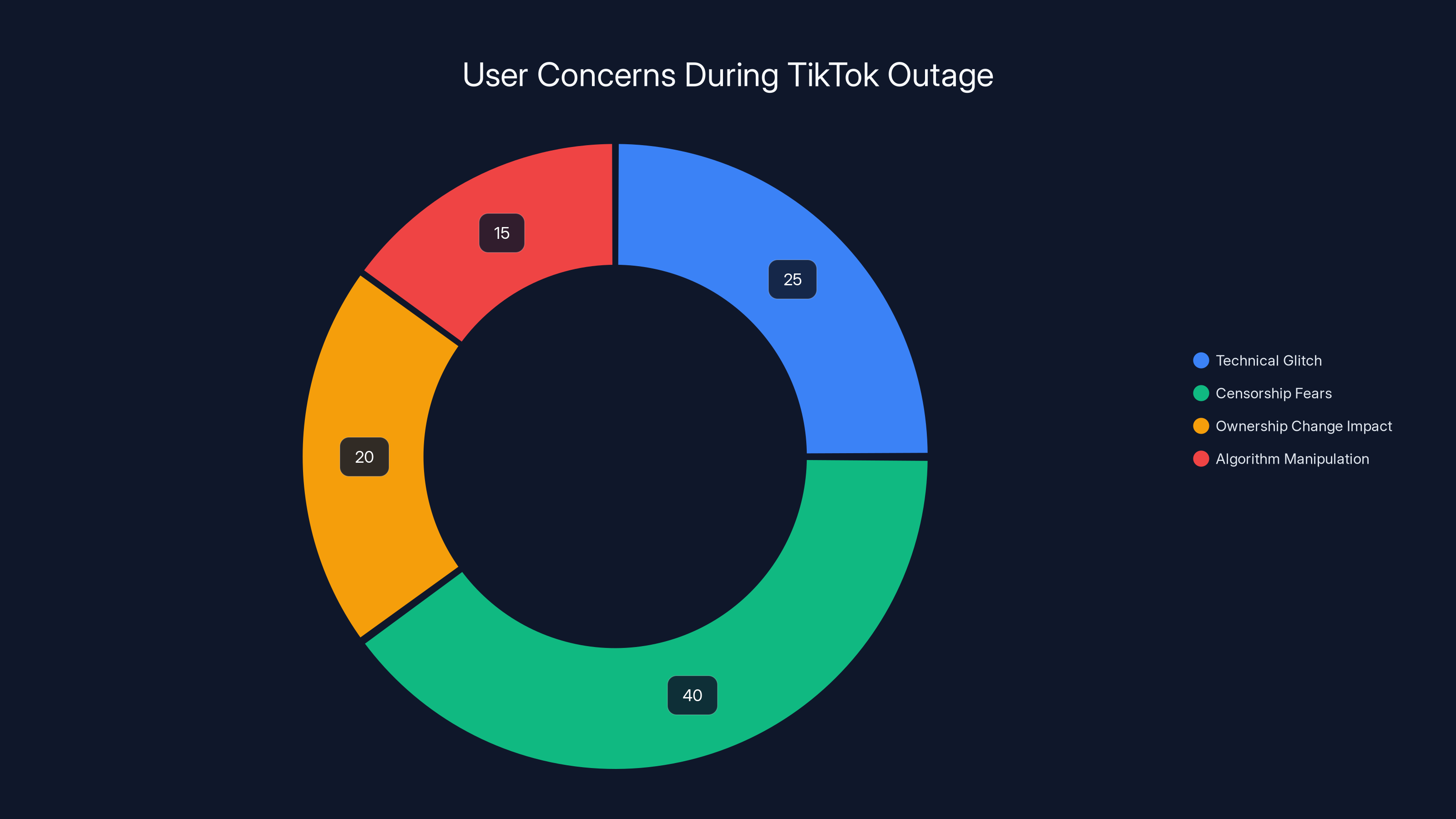

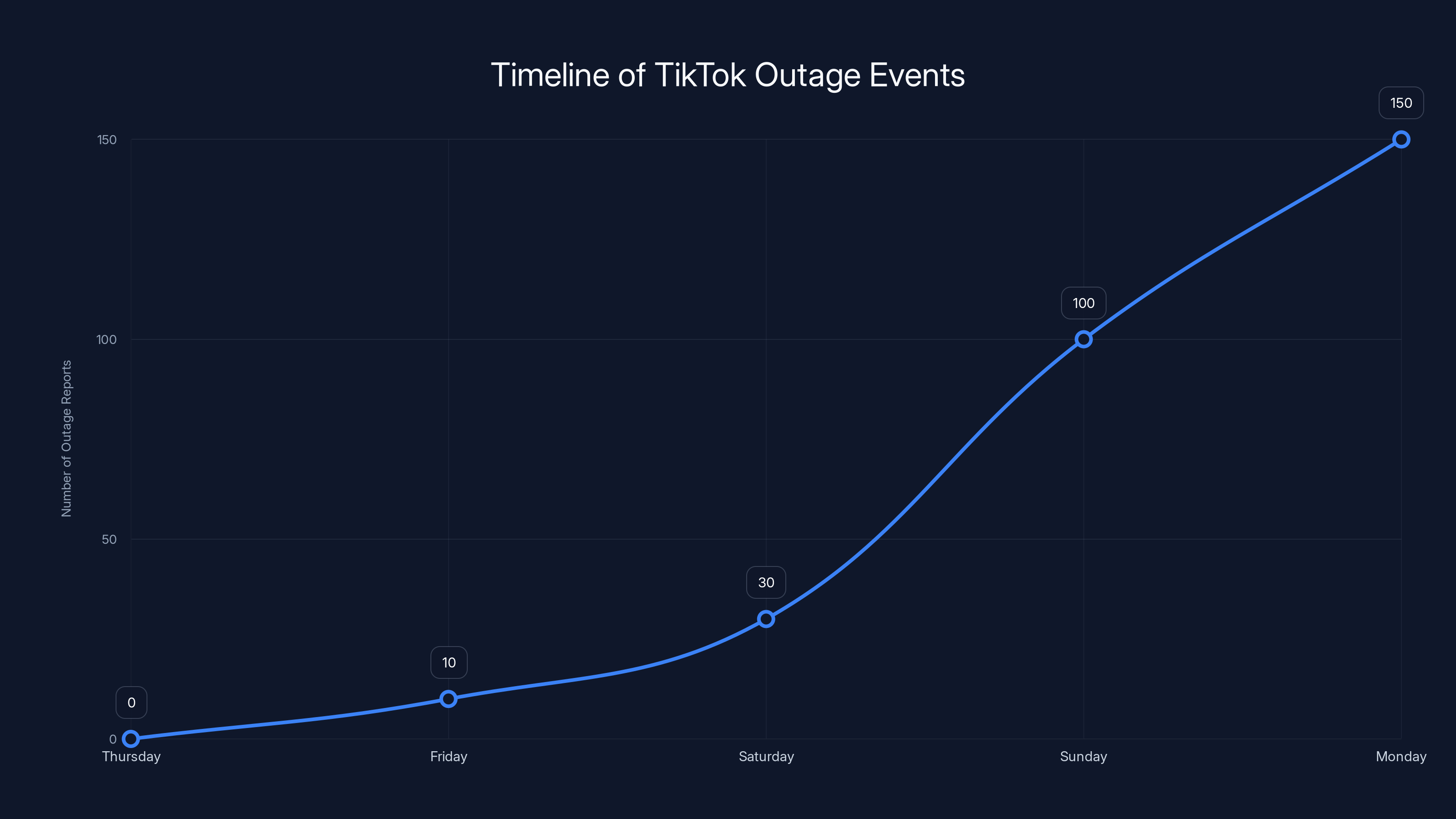

Estimated data shows that while the power outage was a significant factor, the timing with ownership change and political context also heavily influenced public perception of the TikTok outage.

The Timeline: When Everything Started Falling Apart

Understanding the TikTok outage requires understanding the timeline of events leading up to it. This wasn't just a random technical failure that happened to occur at an inconvenient moment. The sequence of events created a narrative that practically wrote itself.

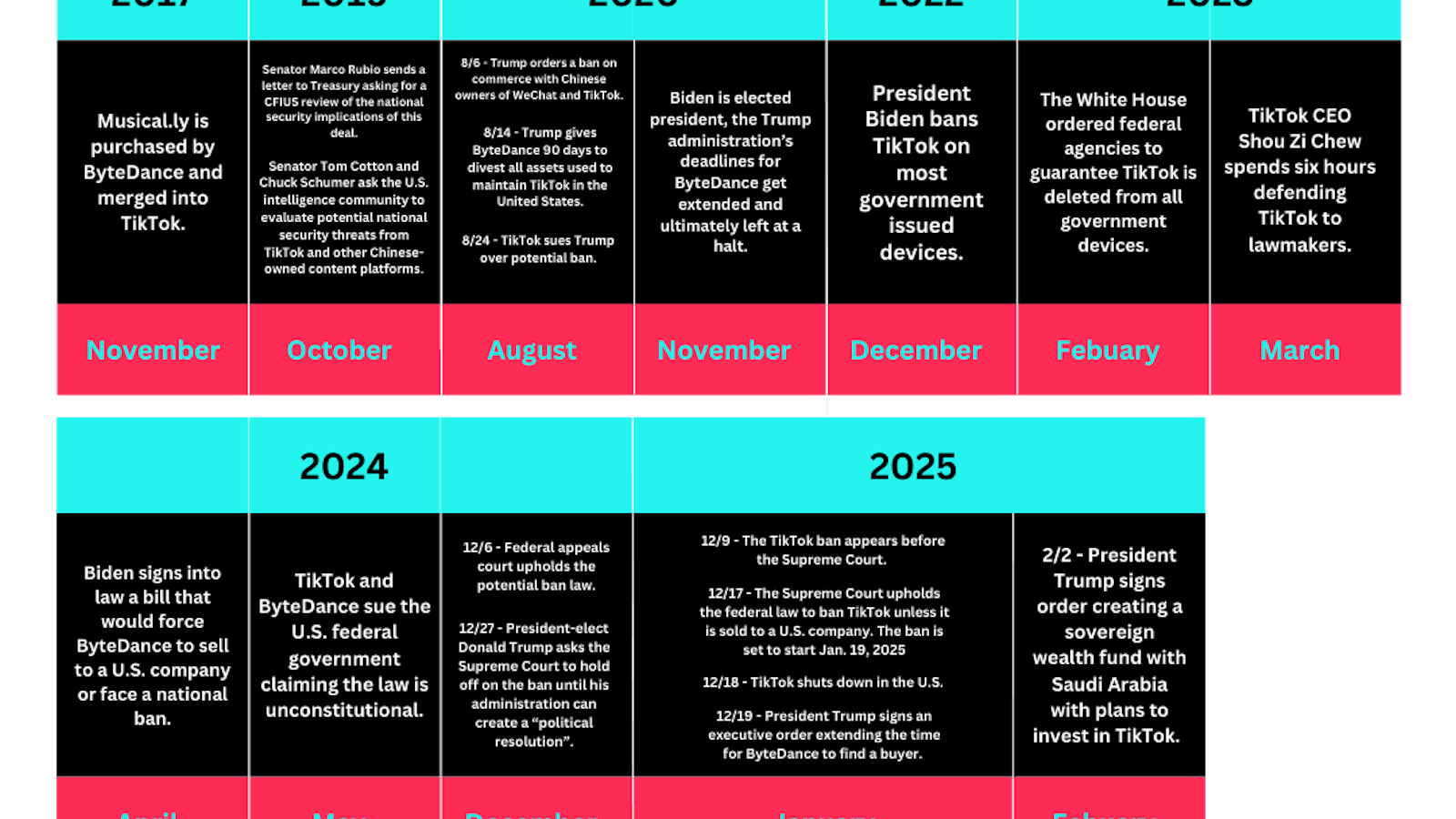

On Thursday of the previous week, TikTok announced it had completed the transfer of its US operations to TikTok USDS Joint Venture. This wasn't a secret process: it had been mandated by legislation passed in 2024 that required TikTok to be divested from its Chinese parent company, ByteDance, or face a ban in the United States. The Supreme Court had upheld this law, leaving little room for further legal challenge.

But here's where timing becomes everything. Just days after this ownership transfer was completed and announced, the app started having problems. Early Sunday morning, users began reporting issues uploading videos. Others said their videos were uploading fine, but they were getting mysteriously low engagement—far fewer views and shares than they'd normally expect.

Downdetector, which tracks service disruptions in near real time based largely on user-submitted problem reports, began showing a massive spike in TikTok outage reports that morning. By Monday, it was clear this wasn't just a few users experiencing isolated problems. This was a widespread, platform-level issue affecting millions of people simultaneously.
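To make "a massive spike" concrete, here is a minimal Python sketch of one common way to flag a spike: compare recent report counts against a quiet baseline. The function name `find_spikes`, the three-standard-deviation threshold, and the hourly counts are all hypothetical illustrations. This is not Downdetector's actual method or data.

```python
from statistics import mean, stdev


def find_spikes(baseline, recent, threshold=3.0):
    """Return indices in `recent` whose report count exceeds the
    baseline mean by more than `threshold` standard deviations."""
    mu = mean(baseline)
    sigma = stdev(baseline)
    return [i for i, count in enumerate(recent) if count > mu + threshold * sigma]


# Hypothetical hourly report counts, invented for illustration only.
baseline = [12, 9, 15, 11, 10, 14, 13, 12]   # a normal, quiet period
recent = [11, 14, 480, 2200, 1900]           # the morning things break

print(find_spikes(baseline, recent))  # → [2, 3, 4]
```

A check like this can say that something broke and roughly when. It cannot say why, which is the question the rest of this story turns on.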

Meanwhile, a real-world event was happening in Minneapolis. Federal immigration enforcement had escalated, resulting in ICE raids and confrontations with communities. Some of these encounters had turned deadly—ICE agents had fatally shot two US citizens during enforcement operations. The Minneapolis situation was already a flashpoint for national conversation about immigration policy, law enforcement accountability, and federal power.

For many TikTok users, especially younger ones who depend on the platform for news and activism, this was exactly the moment when TikTok's reach and audience should have been amplifying these stories. Instead, the app was broken. The combination of the ownership transfer and the service outage created a combustible mix of suspicion and concern.

A particularly influential moment came when Steve Vladeck, a Georgetown University law professor who teaches about constitutional law and government power, posted on Bluesky about his own experience. He'd recorded a video explaining why the Department of Homeland Security's legal arguments for entering homes without warrants during immigration cases were unconstitutional. Nine hours after uploading it, his video was still stuck in review and couldn't be shared. For many people, this became the smoking gun evidence that something was wrong.

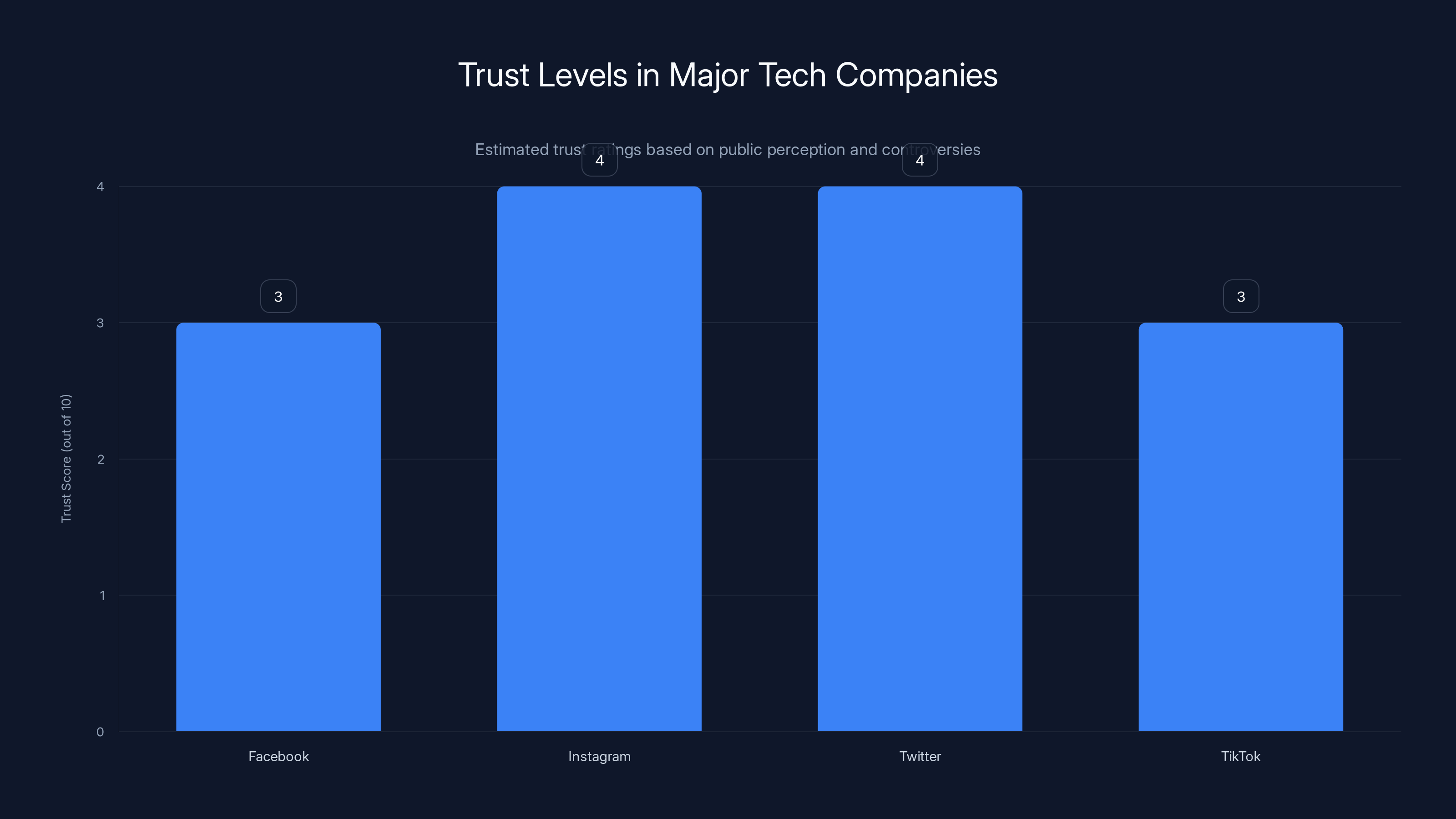

Estimated data shows low trust levels in major tech companies due to repeated controversies and algorithm manipulation.

What Actually Caused the Outage

Let's talk about what TikTok said happened, because understanding the company's explanation is crucial to evaluating whether the censorship concerns had any merit.

TikTok released a statement through a new X account created for its US-controlled entity, explaining that the service disruption was caused by "a power outage at a US data center." A company spokesperson confirmed this account was authentic when contacted by media outlets. It wasn't some cryptic non-answer: TikTok was being surprisingly direct about the root cause.

Now, here's the technical reality. TikTok's US user data has been hosted by Oracle since 2022. This arrangement was part of TikTok's earlier effort to address national security concerns by keeping American user data separated from its Chinese parent company. Oracle, as the infrastructure partner, would be the entity responsible for data center operations, power management, redundancy systems, and ensuring continuous service.

A power outage at a data center is one of the most common causes of widespread service disruptions. It's also one of the least glamorous failure scenarios from a conspiracy theory perspective, which is probably why many people found it hard to believe. When you're looking for evidence of sinister corporate manipulation, a literal power outage feels too mundane to be the real explanation.

However, there was a contextual factor worth noting. A powerful winter storm was sweeping across large parts of the United States at the time, knocking out electricity for hundreds of thousands of people. This made the power outage explanation more plausible—it wasn't some inexplicable failure of Oracle's systems, it was a result of severe weather affecting infrastructure across broad regions.

But here's where the plot thickens. Oracle declined to comment on the outage. The company said nothing publicly about whether the power outage was related to the winter storm, how long backup power systems took to activate, whether redundancy systems failed, or any technical details about what happened. That silence created a vacuum that speculation rushed in to fill.

The Oracle-Ellison Connection and Why It Matters

To understand why the TikTok outage triggered such widespread concern about censorship, you have to understand the broader context of who now owns stakes in TikTok's US operations, and what that might mean for the platform's future.

Oracle, the company hosting TikTok's US data, was co-founded by Larry Ellison, who remains its chairman and largest shareholder. This alone probably wouldn't matter much if Ellison were a politically neutral tech entrepreneur, but he's not. Ellison is known as a close ally of President Trump. This connection created immediate concerns in the minds of many people who worry about political interference in major social media platforms.

The concern deepened when you look at what happened at other media properties connected to the Ellison family. In August 2025, David Ellison (Larry Ellison's son) became CEO of Paramount through its merger with Skydance. Shortly after taking control, David Ellison installed Bari Weiss as editor-in-chief of CBS News. Weiss is a well-known conservative pundit and commentator.

What followed at CBS News was a series of internal changes and restructurings that critics alleged made the news organization friendlier to the Trump administration and more skeptical of progressive coverage. Whether these changes were actually attributable to political ideology or were simply standard business restructuring is debated, but the perception was unmistakable: the Ellison family seemed willing to reshape media properties to align with certain political viewpoints.

This is where it becomes clear why so many people were worried about what might happen to TikTok under its new ownership structure. If the Ellison family had influence over both Oracle (which controls TikTok's data infrastructure) and Paramount (a major news organization), couldn't they potentially exert similar influence over TikTok's algorithm? Couldn't they shape what content gets promoted, what gets buried, and what viewers see?

The ownership structure of the new TikTok USDS entity adds another layer of complexity. When TikTok announced the transfer, it said the new entity would be structured to "retrain, test, and update the content recommendation algorithm on US user data." This sounds reasonable: of course a new ownership structure would involve reviewing and updating systems. But for many users, the word "retrain" raised an immediate red flag. Retraining a recommendation algorithm is one way to change what content gets promoted and what gets suppressed.

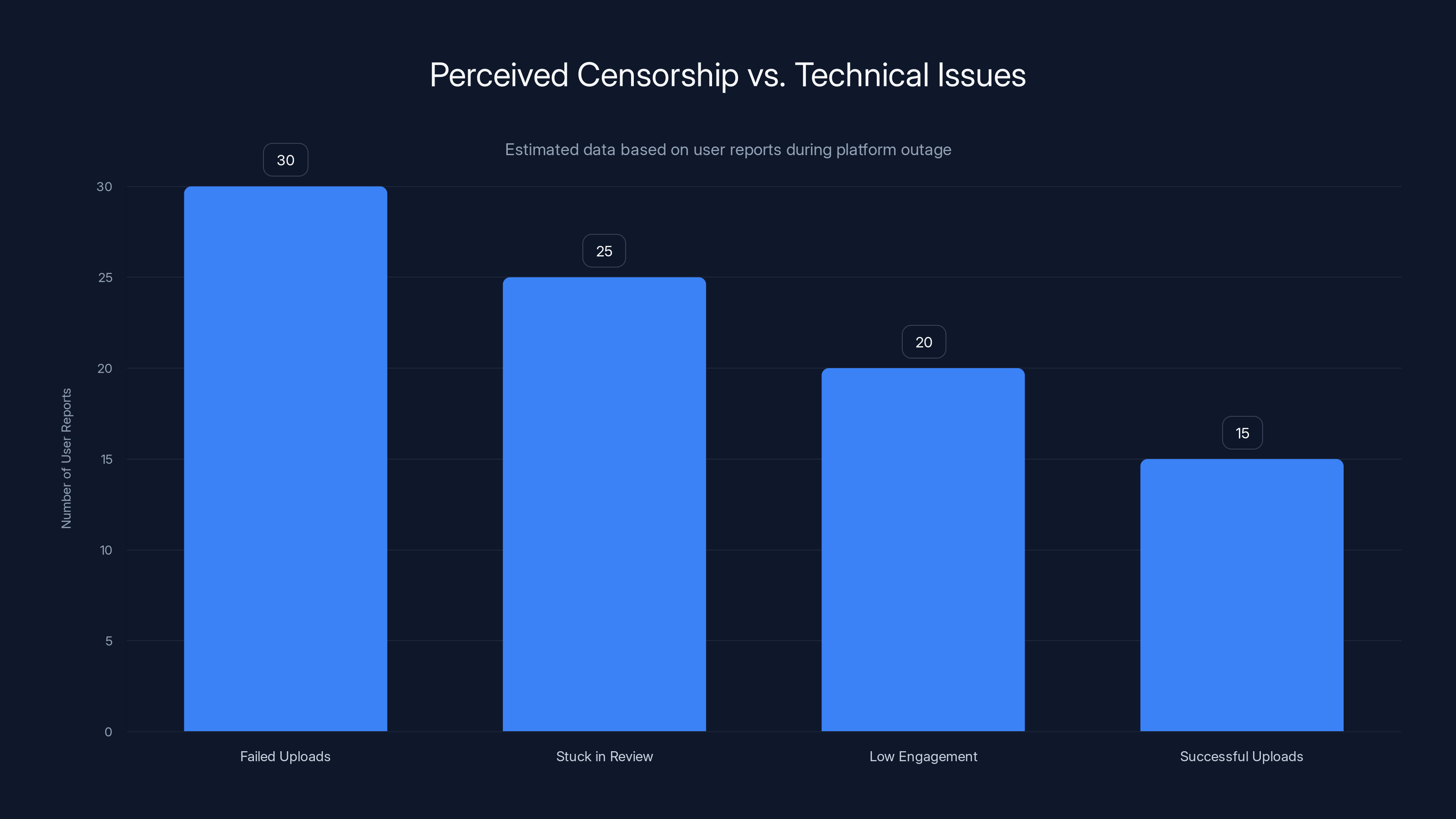

Estimated data suggests a mix of failed uploads, prolonged reviews, and low engagement during the outage, which could indicate either technical issues or perceived censorship.

Censorship Allegations and the Content Moderation Question

Once the outage occurred, allegations of censorship spread rapidly across social media. But were they based on anything concrete, or were they pure speculation?

The evidence users pointed to was anecdotal but compelling. People reported trying to upload videos about ICE operations and immigration protests, only to have their videos either fail to upload at all, or languish in review status for hours while technically not being available for public viewing. Some users said their videos uploaded successfully but received far fewer views than similar content normally would get.

Steve Vladeck's experience became the most cited example. To many, a constitutional law professor's video about DHS legal arguments and warrantless home entry sitting in review for nine hours looked like proof of deliberate suppression. If the platform was just having general technical problems, why would only certain videos be affected?

Other notable figures and creators added their voices. Actress Megan Stalter announced she was permanently deleting her TikTok account because she couldn't upload a video about ICE, and she urged others to do the same. Several other creators and activists reported similar experiences.

But here's the complication: during a widespread outage, you absolutely would expect that some videos upload successfully while others fail, some get stuck in review, and some receive artificially low engagement. That's literally what happens when infrastructure fails. A broken system doesn't uniformly prevent all uploads—it creates inconsistent behavior.

The key question becomes: were these users experiencing the normal, expected behavior of a system under technical stress, or were they experiencing intentional suppression? Without access to TikTok's internal logs, incident reports, and system status information, there's no way to definitively answer that question.

TikTok's official response was straightforward: the company denied any intentional censorship. A TikTok spokesperson said the service disruption was purely technical, caused by the power outage, and that any delays in content moderation or visibility were side effects of the infrastructure failure, not deliberate policy. The company also noted that new posts might temporarily take longer to publish and circulate through the algorithm while systems were being restored.

But here's what's important to understand about content moderation: TikTok, like all major social media platforms, uses a combination of automated systems and human review to moderate content. The automated systems flag content as potentially violating guidelines, and then human reviewers (or more sophisticated AI systems) make final decisions about whether to allow, restrict, or remove content.

During a data center outage, these systems can absolutely get backed up. Videos submitted during the outage might get stuck in queue waiting to be reviewed. Recommendations might not update properly. The algorithm might not function normally. All of this would happen without anyone deliberately "censoring" anything.
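The backlog effect described above can be sketched with a toy first-in, first-out review queue. This is an illustrative model only: the function `simulate_review_queue`, the upload rate, and the capacity figures are hypothetical, and nothing here reflects how TikTok's moderation pipeline is actually built.

```python
from collections import deque


def simulate_review_queue(arrivals_per_hour, capacity_per_hour):
    """Toy FIFO review queue. Returns (waits, still_queued), where waits
    holds the hours each reviewed video spent waiting, in review order."""
    queue = deque()  # submission hour of each video still waiting
    waits = []
    for hour, (arrivals, capacity) in enumerate(
        zip(arrivals_per_hour, capacity_per_hour)
    ):
        queue.extend([hour] * arrivals)
        # Review as many videos as this hour's capacity allows.
        for _ in range(min(capacity, len(queue))):
            waits.append(hour - queue.popleft())
    return waits, len(queue)


# Hypothetical numbers: 10 uploads per hour for a day. Reviewers clear
# 12 per hour, except during hours 2-5, when an outage cuts that to 2.
arrivals = [10] * 24
capacity = [12] * 24
for h in range(2, 6):
    capacity[h] = 2

waits, still_queued = simulate_review_queue(arrivals, capacity)
print("longest wait (hours):", max(waits))
print("videos delayed at least one hour:", sum(w > 0 for w in waits))
print("still waiting at end of day:", still_queued)
```

Every video in this sketch is treated identically, yet waits differ depending on when a video was submitted relative to the outage, and the backlog outlasts the outage itself. Uneven delays alone therefore cannot distinguish a strained system from a selective one.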

The problem is that in the social media age, people are primed to assume intentional suppression. We've seen so many documented cases of platforms modifying algorithms, prioritizing content, and making editorial decisions that affect what millions of people see. The idea that a platform would use a technical outage as cover for censorship isn't crazy—it's a reasonable hypothesis given the history of social media companies.

Trust, Timing, and the Perception Problem

Regardless of what actually happened during the outage, the incident exposed something important about how people now think about major social media platforms: trust is gone.

When Facebook experienced an outage in 2021, people weren't immediately suspicious of censorship. Sure, there was frustration that the app wasn't working, but the leap to "they're suppressing content about immigration" didn't happen. But TikTok is different. TikTok is a platform where control has just changed hands, where the new owners' intentions are unclear, where the company is controlled through US ownership structures that people don't fully understand, and where there are real concerns about how algorithmic recommendations might be influenced by political or commercial interests.

The timing of the outage—occurring just days after a major ownership transfer and during a moment of intense national discussion about immigration enforcement—created a perfect storm. It's not that people are paranoid. It's that they have legitimate reasons to be cautious about how social media platforms operate when the stakes are high.

Senator Chris Murphy of Connecticut, no stranger to tech regulation, spoke up about the incident, calling it "at the top of the list" of threats to democracy. He didn't necessarily claim that TikTok had censored content. He was expressing concern about the broader situation: a major social media platform with unclear ownership structures at a moment when that platform's reliability became questionable.

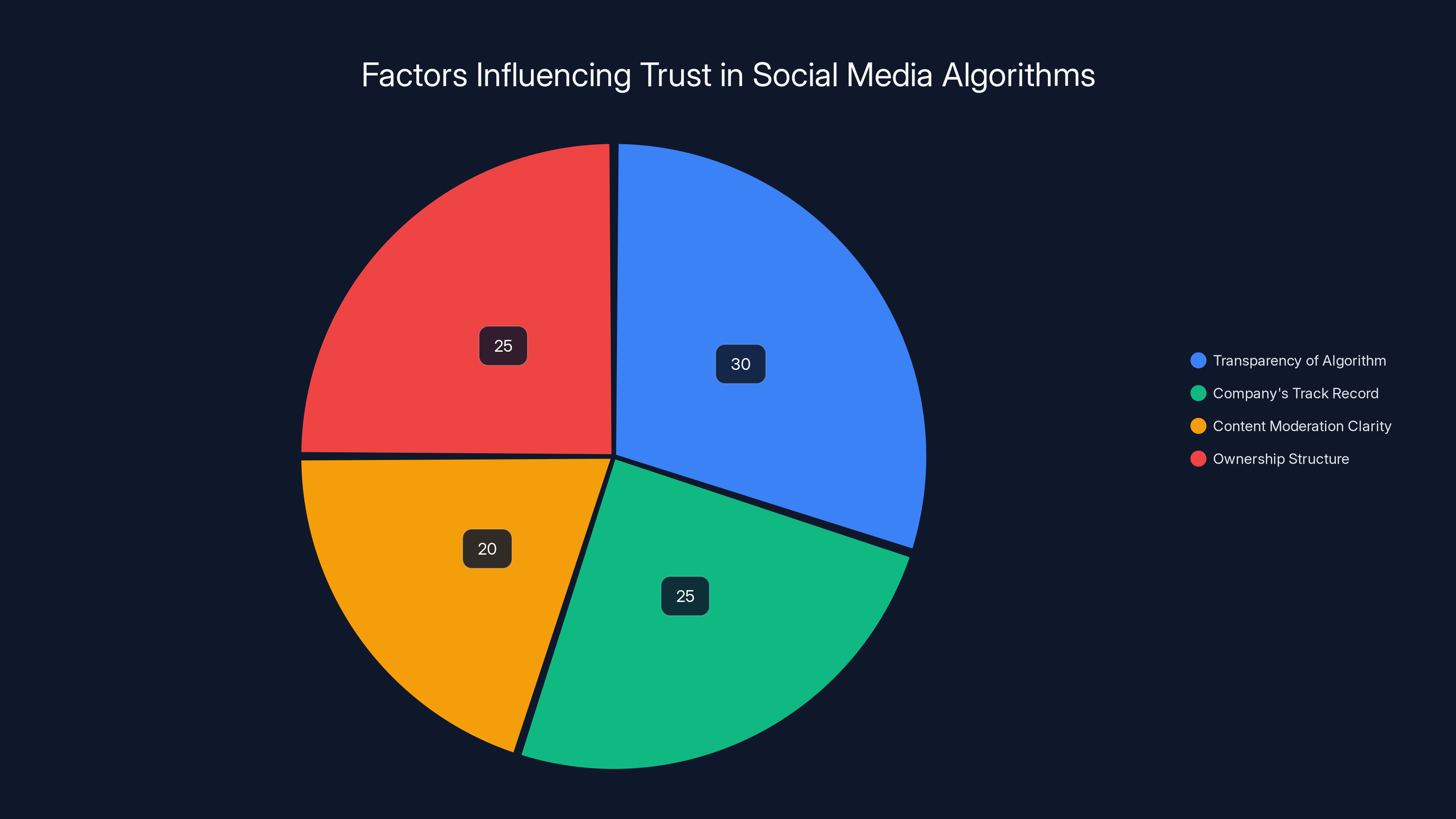

Transparency of algorithms and company track records are key factors influencing user trust. Estimated data.

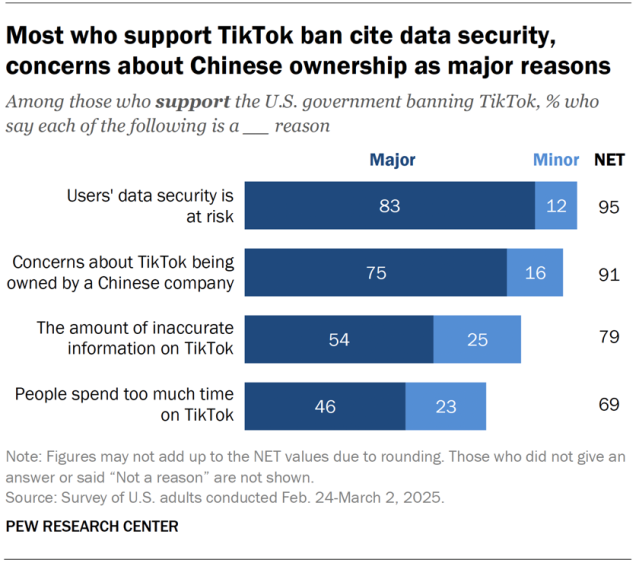

The Broader Context: Why People Don't Trust Tech Companies Anymore

To really understand the panic that spread during the Tik Tok outage, you have to understand the history of social media companies demonstrating that they absolutely will manipulate platforms for various reasons—sometimes political, sometimes commercial, sometimes just to serve their business interests.

Facebook has been caught multiple times modifying its News Feed algorithm in ways that prioritized certain political viewpoints or spread engagement-driving content regardless of whether it was accurate. Instagram's algorithm has been shown to recommend increasingly extreme content to users, a behavior that researchers have repeatedly documented. Twitter, after being taken over by Elon Musk, has allegedly changed its algorithm to favor accounts with certain political views, though exactly how much of that is true versus perception is debated.

TikTok itself has history here. The platform has faced criticism for deprioritizing content from users who are elderly, disabled, or considered less conventionally attractive. TikTok's algorithm has been accused of promoting eating disorder content and other harmful material in ways that seem deliberate. Whether these are conscious decisions or emergent behaviors from the algorithm is sometimes unclear, but the pattern exists.

Against this backdrop, when TikTok experiences an outage right after an ownership transfer, and when videos about immigration rights seem to be getting stuck in review, people's skepticism makes sense. We're not dealing with a group of users making wild accusations out of nowhere. We're dealing with users who have watched major tech platforms behave badly repeatedly and who are now cautious about how a platform operates when the stakes are high.

The question isn't really "Did TikTok intentionally censor immigration content?" The real question is "Can we even know whether TikTok is being transparent about how it operates?" And the answer, honestly, is no. We can't know. The company isn't required to publish algorithmic transparency reports or detailed outage logs. We have to take TikTok's word for what happened, and millions of people have decided that's not good enough.

Oracle's Role and Data Center Operations

Understanding what actually happened during the outage requires understanding how data center infrastructure works and why Oracle's role is important.

Data centers aren't simple storage facilities. They're incredibly complex environments where thousands of computers are coordinated to serve millions of concurrent users. Everything in a data center is redundant—power systems, network connections, cooling equipment. If the primary power source fails, backup generators are supposed to kick in automatically. If one server fails, others are supposed to take over its workload. If one network path is interrupted, traffic should route around it.

The fact that an outage occurred at all suggests that multiple redundancies failed simultaneously or that the failure was so severe that backup systems couldn't compensate. A winter storm hitting a region can affect multiple infrastructure components at once, potentially causing cascading failures that take time to recover from.
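A little arithmetic shows why a shared cause such as a storm matters so much for redundant systems. The sketch below compares the chance that every backup fails when failures are independent with the chance when one common event can disable all of them at once. The function names and every probability are hypothetical, chosen only to illustrate the reasoning. Nothing here describes Oracle's actual facilities.

```python
def p_all_fail_independent(p_single, n):
    """Probability that n independent components all fail."""
    return p_single ** n


def p_all_fail_common_cause(p_single, n, p_event):
    """Probability that all n components fail when a shared event
    (with probability p_event) disables every one of them at once,
    and they otherwise fail independently."""
    return p_event + (1 - p_event) * p_single ** n


# Hypothetical numbers, chosen only for illustration.
p_single = 0.01   # chance any one power source fails on a given day
n = 3             # e.g. utility feed, generator, battery backup
p_storm = 0.001   # chance of a storm severe enough to disable all three

print(f"independent:  {p_all_fail_independent(p_single, n):.6f}")
print(f"common cause: {p_all_fail_common_cause(p_single, n, p_storm):.6f}")
```

With these made-up inputs, a total failure is about a thousand times more likely once a common cause is in play. Redundancy protects well against unrelated faults and much less well against a single event that hits everything together.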

Oracle, being one of the world's largest software and infrastructure companies, has experience running massive data centers. The company provides cloud infrastructure services to thousands of enterprise customers. When Oracle's data center experiences an outage affecting TikTok, it's not because Oracle doesn't know how to run data centers. It's because something went wrong that was bigger than the normal redundancies could handle.

But here's the thing: Oracle declined to comment on the outage. The company didn't release a statement about what happened, how long it took to restore service, whether all backup systems worked as expected, or what they're doing to prevent similar failures in the future. That silence is notable.

In other major cloud outages—when Amazon Web Services has had problems, or Google Cloud, or Microsoft Azure—those companies typically release detailed post-mortems explaining exactly what went wrong and how they're fixing it. It's good public relations and good practice. Oracle's silence stood out, and that silence fed speculation.

During the TikTok outage, 40% of users were concerned about potential censorship, while 25% attributed it to a technical glitch. Estimated data based on topic context.

The Algorithm Retraining Question and Future Control

When TikTok announced the new ownership structure, it specifically mentioned that the new USDS entity would "retrain, test, and update the content recommendation algorithm on US user data." This phrasing became a focal point for concerns about future algorithmic manipulation.

Retraining an algorithm is a neutral, technical process. Machine-learned recommendation systems require periodic retraining to adapt to changing data, user behavior, and business objectives. It's not inherently suspicious. But the question becomes: what criteria will the new owners use when they retrain the algorithm?

Will the algorithm prioritize engagement above all else, as TikTok's algorithm currently does? Will it be adjusted to promote a particular political viewpoint? Will it be optimized to make the platform profitable, which might mean reducing reach for controversial political content? Will it be changed to make the US government happier with how the platform operates?

These are legitimate questions, and they get at something fundamental about how TikTok works. TikTok's algorithm is famously effective at predicting what users want to see and showing it to them. This is why TikTok has grown so fast and why young people are increasingly using it as their primary source of news and information. The algorithm is so good that it's somewhat mysterious: even TikTok employees don't fully understand how all of its decision-making works.

When control of that algorithm transfers to new ownership, and when the new ownership has opaque structures and unclear intentions, it's reasonable for users to worry about what might change. The difference between TikTok under ByteDance ownership and TikTok under Oracle-connected ownership might not be huge in terms of direct censorship. But it could manifest in subtle algorithmic changes that affect which creators succeed, which issues get amplified, and what kind of information reaches millions of people.

What Happened to Users Who Left TikTok

The outage and surrounding controversy triggered a documented exodus of some users from TikTok, though the scale is debated. Some creators and activists announced they were permanently deleting the app and encouraging others to do so. The movement gained enough momentum that it became a trending conversation across social media.

Social media migrations are interesting phenomena. They rarely succeed in replacing the original platform. People tried to move from Twitter to Bluesky after the Elon Musk takeover. Some did, but most kept their Twitter accounts active while also participating on Bluesky. It's hard to leave a platform where your entire social graph exists, where your audience is built, and where all your content is stored.

With TikTok, the barrier to leaving is even higher. The app is designed to be addictive: the algorithm is specifically engineered to keep people watching. TikTok has 100+ million US users, and many of them have built careers, audiences, and communities on the platform. Leaving TikTok isn't like deleting a rarely-used social media account. It's like moving away from a city where you've built a life.

But some people did leave, and their departures were meaningful. They were saying something: we don't trust this platform anymore under the new ownership, and we're willing to sacrifice our audience and our presence to make that statement. That's a powerful signal about the erosion of trust in social media platforms.

The timeline shows a significant spike in TikTok outage reports starting Sunday, following the ownership transfer announcement on Thursday. Estimated data.

The Regulation Question: Should Government Be Involved?

The TikTok outage also highlighted a deeper question about how governments should oversee social media platforms, particularly when those platforms are central to how people get information and organize politically.

The original law requiring TikTok to be divested from its Chinese parent company was based on national security concerns. The worry was that ByteDance, as a Chinese company, might be compelled by Chinese law to hand over data about American users or to modify the platform in ways that served Chinese interests. That concern, whether valid or not, led to legislation and regulatory action.

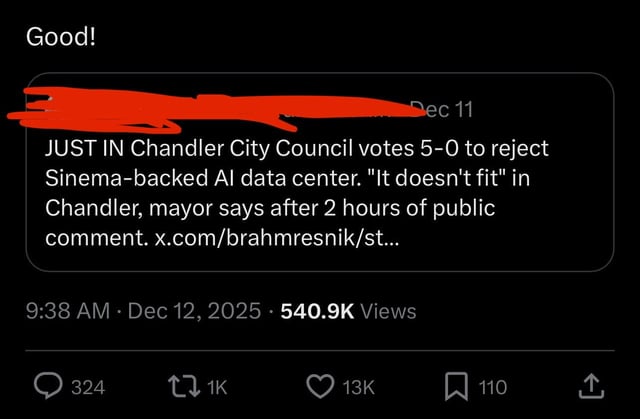

But the divestment created its own set of problems. Now TikTok is controlled by a corporate structure that includes Oracle and other American investors. But Oracle's chairman and largest shareholder is Larry Ellison, a Trump ally. Does that make TikTok more trustworthy from a national security perspective, or does it just shift the problem from "What if China controls TikTok?" to "What if the Trump administration controls TikTok through Oracle?"

This is where it gets complicated. You can regulate foreign companies, but you can't easily regulate American companies in a way that doesn't raise free speech concerns. You can pass laws requiring divestment, but the new owners might have their own agendas that are equally concerning from a platform trust perspective. There might not be a solution that satisfies both national security concerns and concerns about political interference.

How Other Platforms Handle Outages and Transparency

When you compare TikTok's response to the outage with how other major platforms have handled similar incidents, you get a clearer picture of where expectations for transparency stand.

Amazon Web Services maintains a real-time status page where AWS customers can see detailed information about ongoing outages and estimated time to recovery. After an outage, AWS publishes a detailed post-mortem explaining exactly what went wrong, why it happened, how long it took to fix, and what AWS is doing to prevent it from happening again. These post-mortems are valuable for customers and also for the public understanding of how infrastructure works.

Google Cloud and Microsoft Azure follow similar practices. They publish transparency reports about their operations, maintain status pages, and communicate clearly with customers and the public about what's happening when things go wrong.

TikTok's communication during the outage was minimal. The company said it was a power outage at a data center. That's it. No detailed explanation, no timeline for recovery, no post-mortem afterward about what happened and how it will be prevented. This lack of transparency is understandable from one perspective: companies don't want to share detailed information about security vulnerabilities or operational details that competitors could exploit. But it also leaves room for speculation and suspicion.

The Perception-Reality Gap and Social Media Trust

One of the most important things the TikTok outage revealed is the gap between technical reality and public perception when it comes to social media platforms. Even if TikTok's explanation is completely truthful, and there really was just a power outage and nothing else, the outage still damaged trust in the platform. The damage happens not because people are irrational, but because the history of social media companies has taught people to be skeptical.

This is a real problem for platforms, advertisers, creators, and policymakers. When public trust erodes, you can't fix it just by saying "Nothing bad happened." You have to rebuild confidence through transparency, clear communication, and consistent trustworthy behavior over time.

TikTok's situation is complicated because the company inherited a significant trust deficit from its previous status as a Chinese-owned company. Many Americans were skeptical of TikTok because of national security concerns. The law requiring divestment was itself a statement that TikTok couldn't be trusted in its previous form. Now the company has been divested and new American owners are in place, but something immediately goes wrong and it looks suspicious. That's not just bad luck. That's TikTok starting from a position of low trust and then experiencing an event that seemed to confirm people's worst fears.

What Comes Next: Regulatory Scrutiny and Platform Accountability

After the outage, there was increased discussion about whether social media platforms should face more regulatory oversight, particularly around outages, content moderation, and algorithmic transparency.

The European Union has been moving in this direction with the Digital Services Act, which requires platforms to provide more transparency about how they operate, how they moderate content, and how their algorithms work. The US hasn't implemented similar comprehensive regulation, though there are various proposals in Congress.

The TikTok situation makes the case for more regulatory oversight. If platforms are going to be central to how people access information and organize politically, shouldn't there be requirements for transparency about outages, content moderation decisions, and algorithmic changes? Shouldn't regulators and the public have access to post-mortems explaining what went wrong and how it will be fixed?

But regulation also comes with risks. If governments require platforms to be more transparent about their operations, that's good for the public. But if governments use that requirement as a way to pressure platforms into suppressing certain kinds of content or favoring certain viewpoints, that's bad for free expression.

The TikTok outage highlighted this tension. On one hand, we need more transparency from platforms. On the other hand, we don't want governments using transparency requirements as a tool for controlling what people see.

Algorithmic Transparency and the Future of Trust

One of the most important takeaways from the TikTok outage is that the public increasingly wants to understand how algorithms work and how they're controlled. This is a reasonable desire, but it's at odds with how tech companies operate.

Algorithms are competitive advantages. Companies don't want to publish the exact parameters of their algorithms because competitors could use that information to build better competing systems. Algorithms are also increasingly complex and difficult to explain—even the engineers who build them sometimes can't articulate why a particular decision was made. The field of "explainable AI" exists precisely because algorithms have become black boxes.

But for social media platforms, this complexity and secrecy creates a trust problem. When you can't see how an algorithm works, you have to trust the company operating it. And if that company has shown a pattern of putting profit before user wellbeing, or if there are legitimate concerns about who controls the company, that trust erodes.

TikTok faces this problem acutely. The algorithm is so effective that it's mysterious. The new ownership structure is so opaque that it raises questions. The combination creates an environment where even a normal technical failure becomes suspicious.

Moving forward, platforms that want to maintain user trust might need to find ways to be more transparent about how algorithms work. This doesn't necessarily mean publishing exact algorithmic parameters. It could mean publishing reports about what kinds of content are being promoted or suppressed, what kinds of creators are succeeding or failing, what geographic or demographic patterns emerge. It could mean allowing researchers more access to study how platforms operate. It could mean being clearer about outages, content moderation decisions, and algorithmic changes.

The Immigration Politics and Why This Moment Matters

The TikTok outage occurred during a specific moment when immigration was a central political issue. Federal enforcement operations in Minneapolis had escalated, ICE agents had fatally shot two US citizens, and communities were organizing and protesting. For many young people, TikTok is where this story would be told and shared.

When TikTok becomes unavailable or unreliable at exactly the moment when people are trying to share information about immigration enforcement, it raises obvious questions about whose interests are being served. Is it a coincidence that a platform suspected of favoring Trump-administration interests goes down during an immigration crisis? Or is something more going on?

The political nature of the incident is important. This isn't about a platform failing to deliver entertaining videos. This is about a platform that increasingly serves as a primary news source for millions of Americans, and that suffered technical problems at the very moment people needed it for organizing and activism.

That's why Senator Chris Murphy called it a threat to democracy. He wasn't necessarily claiming that TikTok intentionally censored immigration content. He was saying that social media platforms have become essential infrastructure for political participation and information access, and that infrastructure failing or being unreliable at critical moments is a serious problem.

What We Can Learn from the TikTok Outage

The TikTok data center outage, while ultimately appearing to be a technical failure caused by a power outage, revealed important truths about how we think about social media platforms in 2026.

First, trust in social media companies has eroded significantly. People are primed to assume the worst because they've seen companies behave badly. Transparency and clear communication aren't optional niceties anymore—they're essential for maintaining any credibility.

Second, platform control matters. Who owns a platform, who has influence over how it operates, and what their incentives are—these things matter enormously. When control changes hands, users have legitimate reasons to worry about how the platform might change.

Third, outages during politically sensitive moments look suspicious regardless of whether anything suspicious is actually happening. That's not user paranoia—that's a rational response to uncertainty in a system where the stakes are high.

Fourth, algorithmic transparency remains an unsolved problem. We don't have good ways for the public to understand how algorithms work or to verify that they're operating fairly. This creates an information asymmetry where platforms have enormous power and users have almost no visibility.

Fifth, infrastructure matters. Data centers are critical infrastructure for the modern internet. When they fail, it affects millions of people and can undermine trust in entire sectors of the economy and society.

The TikTok outage is ultimately a story about trust, control, infrastructure, and information access in the digital age. It's a story that will continue to unfold as TikTok's new ownership structures become clearer and as users decide whether they're willing to stay on a platform whose future is uncertain.

FAQ

What caused the TikTok data center outage?

TikTok attributed the disruption to a power outage at a US data center operated by its infrastructure partner Oracle. A severe winter storm sweeping across the United States at the time likely contributed to the broader power infrastructure failures. Millions of users were affected, with problems uploading videos, viewing content, and getting normal levels of engagement on their posts.

Why did people think the outage was intentional censorship?

The timing of the outage created suspicion. It occurred just days after TikTok completed its transfer to new US-majority ownership, and it coincided with intense national discussions about ICE operations and immigration enforcement in Minneapolis. Some users reported difficulty uploading immigration-related videos, leading to speculation that the new owners were suppressing certain types of political content. While these problems turned out to be consistent with how systems behave during outages, the combination of timing, political context, and the new ownership structure fueled concerns.

How does Oracle's ownership stake affect TikTok's operations?

Oracle has hosted TikTok's US user data since 2022 and currently operates the data center infrastructure supporting the platform. Oracle's co-founder Larry Ellison is a close ally of President Trump, which raised concerns about potential political influence. Additionally, the Ellison family has been involved in changes at other media properties like CBS News, adding to concerns about algorithmic manipulation. However, Oracle's primary role is technical infrastructure management rather than direct control over content or algorithms.

What exactly is the TikTok USDS Joint Venture?

TikTok USDS (US Data Security) is a corporate entity created to comply with the 2024 divestment law that required TikTok to separate from its Chinese parent company ByteDance. The new structure is majority-owned by US investors, though the exact ownership percentages and decision-making structures are not entirely transparent. The entity is responsible for running TikTok's US operations, managing user data separately from ByteDance, and retraining and updating the content recommendation algorithm using US user data.

Could a power outage really explain why some videos got stuck in moderation review?

Yes. During a data center outage, content moderation systems can easily become backed up. Videos submitted during the outage might queue up waiting for human or AI review. The recommendation algorithm might not update content visibility properly. Some uploads might fail while others succeed, depending on which systems are affected and how far along the platform is in its recovery. From a user's perspective, however, this normal outage behavior is indistinguishable from intentional suppression.
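To see why a short outage can leave uploads stuck long after the power comes back, here is a minimal sketch of a review queue in Python. Everything in it is a hypothetical illustration: the function name `simulate_review_queue`, the upload rate, and the review capacities are assumptions chosen for the example, not figures from TikTok or Oracle.

```python
from collections import deque


def simulate_review_queue(arrivals_per_min, normal_capacity, outage_capacity,
                          outage_start, outage_end, total_minutes):
    """Return the number of videos still waiting for review after each minute.

    All parameters are hypothetical; this is a toy model, not a description
    of any real platform's moderation pipeline.
    """
    queue = deque()
    backlog = []
    for minute in range(total_minutes):
        # New uploads join the back of the review queue.
        queue.extend([minute] * arrivals_per_min)

        # Review capacity drops while the outage is in progress.
        in_outage = outage_start <= minute < outage_end
        capacity = outage_capacity if in_outage else normal_capacity

        # Oldest uploads are reviewed first, up to this minute's capacity.
        for _ in range(min(capacity, len(queue))):
            queue.popleft()

        backlog.append(len(queue))
    return backlog


# Assumed numbers: 100 uploads per minute, 120 reviews per minute normally,
# 20 reviews per minute during a 30-minute outage (minutes 10 through 39).
backlog = simulate_review_queue(100, 120, 20, 10, 40, 240)
peak = max(backlog)
cleared_at = next(m for m in range(40, 240) if backlog[m] == 0)
print(f"peak backlog: {peak} videos")
print(f"queue clears {cleared_at - 40 + 1} minutes after the outage ends")
```

In this toy setup the system has only a little spare capacity, so a 30-minute outage leaves a backlog that takes about two more hours to work through. A creator whose video sits in that backlog sees exactly what a suppressed creator would see: a post that goes nowhere.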

What does TikTok's new ownership mean for content moderation and algorithmic recommendation?

TikTok's announcement indicated that the new USDS entity would "retrain, test, and update the content recommendation algorithm." This is a normal technical process, but it raises questions about what criteria will be used during retraining. Whether the algorithm will prioritize engagement (as it currently does), be adjusted for political preferences, be optimized for profitability, or be modified to satisfy regulatory concerns remains unclear. Without transparency requirements, users have no way to verify that algorithmic changes are fair.

Why is algorithmic transparency important for social media platforms?

Algorithmic transparency matters because algorithms determine what billions of people see, what information reaches them, and how they understand the world. When algorithms are opaque and controlled by companies with incentives that might not align with user interests, there's potential for manipulation—whether intentional or emergent from how the algorithm is designed. Understanding what content is being promoted or suppressed helps users critically evaluate the information they receive and helps societies hold platforms accountable.

Has TikTok been caught moderating content unfairly in the past?

Yes, TikTok has faced criticism for algorithmic decisions that appeared to deprioritize content from elderly, disabled, and less conventionally attractive users. The platform has also been accused of promoting content related to eating disorders and other harmful behavior. Whether these are intentional decisions or emergent properties of the algorithm is debated, but they demonstrate that algorithmic systems can produce outcomes that seem biased against certain groups.

What's the difference between technical outages and algorithmic suppression?

A technical outage is when infrastructure fails and prevents the platform from working normally. Everyone experiences reduced functionality. Algorithmic suppression is when a platform intentionally reduces the visibility or discoverability of certain types of content. During a technical outage, you might see behavior that looks like suppression (some content uploads failing, some videos not being recommended) but is actually just normal system dysfunction under stress. Without access to internal logs, it's nearly impossible for users to distinguish between the two.

What regulatory changes might result from the TikTok outage incident?

Potential changes could include requirements for platforms to maintain public status pages showing real-time outage information, publish detailed post-mortems explaining what went wrong, provide greater transparency about algorithmic changes, and submit to more rigorous oversight of content moderation practices. The European Union's Digital Services Act moves in this direction, while the US has various proposals in Congress but no comprehensive regulation yet. However, regulatory oversight comes with risks if governments use transparency requirements as tools to control what people see on platforms.

Conclusion

The TikTok data center outage of early 2026 will likely be remembered not as a major technical failure but as a revealing moment about the state of trust in social media platforms. A power outage, while frustrating, is a relatively routine infrastructure problem. But its timing, combined with TikTok's newly transferred ownership and the political context of immigration enforcement, transformed it into a crisis of confidence.

What the incident reveals is that we've entered a new era where technical failures and political concerns are inseparable. When a platform is central to how people access information and organize politically, any failure feels potentially suspicious. This isn't paranoia. It's a rational response to a history of social media companies demonstrating that they're willing to manipulate their platforms for profit or other interests.

The fundamental problem is one of trust and transparency. TikTok's users want to know: Who controls this platform? How does the algorithm work? Will it be modified to favor certain political viewpoints? What safeguards exist against abuse? These are reasonable questions, but the company has limited incentives to answer them thoroughly. Sharing algorithmic details is competitively risky. Admitting to controversial content moderation decisions opens the company to criticism.

Moving forward, several things need to happen. First, social media platforms need to invest in transparency and communication, especially around outages and algorithmic changes. Second, policymakers need to decide what level of oversight is necessary and how to implement it without enabling government censorship. Third, users need to understand that no platform is inherently trustworthy. What matters is having visibility into how platforms operate and having alternatives available if they behave badly.

TikTok's new ownership structure was supposed to address national security concerns about Chinese control. Instead, it's raised new concerns about American control, particularly by parties with clear political alignments. The platform faces a credibility challenge that can't be solved by simply denying censorship allegations. It will require demonstrable, verifiable commitment to fair and transparent operations.

The TikTok outage wasn't an unusually significant technical event. But it was a significant moment for understanding how we think about technology, trust, and power in 2026. In an era where social media platforms are essential infrastructure for information and political participation, their reliability and trustworthiness aren't optional. They're fundamental to whether democracy can function.

Key Takeaways

- The TikTok outage was caused by a genuine power failure at Oracle's data center, likely exacerbated by severe winter weather affecting infrastructure.

- The timing of the outage—days after ownership transfer and during ICE enforcement operations—fueled widespread censorship allegations despite no concrete evidence.

- Trust in social media platforms has eroded significantly, making users suspicious of any failure occurring during politically sensitive moments.

- Oracle's role as TikTok's infrastructure provider and Larry Ellison's political alignments created legitimate concerns about future algorithmic bias.

- The incident exposed a fundamental gap between technical reality and public perception in how we evaluate platform reliability and trustworthiness.

- Algorithmic transparency remains an unsolved problem—without visibility into how platforms operate, distinguishing between technical failures and intentional suppression is nearly impossible.

- Regulatory frameworks like the EU's Digital Services Act may be necessary to require platforms to maintain transparency about outages and algorithmic changes.
