Introduction: The $5.3 Billion Bet on AI Video and Beyond

When Runway announced its Series E funding round in early 2026, the AI industry took notice. The company raised $315 million at a $5.3 billion valuation.

This isn't your typical "AI hype" story. Runway has spent the last few years building actual technology that works. Their Gen 4.5 model outperformed offerings from OpenAI and Google DeepMind on critical benchmarks. They've shipped products used by real companies—from Adobe to studios creating content at scale. And now, with fresh capital backing them, they're making a bold pivot that could reshape how AI systems understand the world.

The real story here isn't just about more money flowing into AI. It's about what comes next. Runway is betting billions that world models, not just language models, are the path to more capable AI. A world model is an AI system that builds an internal representation of how environments work, allowing it to predict what happens next. Think of it like this: a language model reads text and predicts the next word. A world model watches a video and predicts what the scene looks like in the next frame. It understands physics, causality, and consequence.

This distinction matters enormously. Yann LeCun and other leading researchers have argued that large language models face a fundamental ceiling: they're pattern-matching engines, not reasoning engines. In this view, getting to AGI, or even just to significantly more capable systems, requires AI that understands how the world actually works.

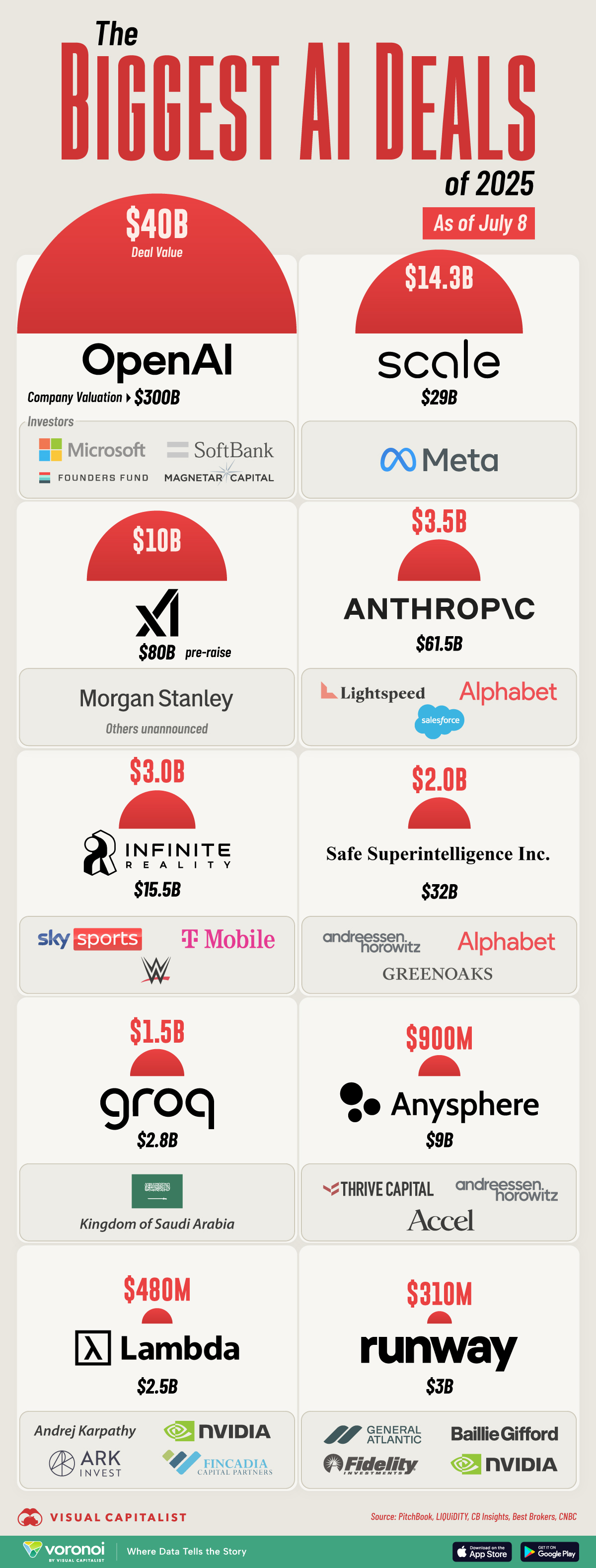

Runway's funding round brings together an impressive roster of backers: General Atlantic led the round, with participation from Nvidia, Fidelity Management & Research, AllianceBernstein, Adobe Ventures, Mirae Asset, and AMD Ventures. That lineup tells you something crucial: this isn't just venture capital chasing trends. This is strategic capital from companies that manufacture chips, manage massive portfolios, and actually understand the infrastructure layer.

Let's dig into what Runway is actually building, why this matters, and what the implications are for AI, creativity, and the future of software itself.

TL;DR

- Runway raised $315M at a $5.3B valuation to develop next-generation AI world models beyond video generation

- World models simulate environments and predict future states, addressing fundamental limitations of language models

- Gen 4.5 outperforms competitors from OpenAI and Google DeepMind on multiple video generation benchmarks

- Applications are expanding beyond entertainment into medicine, climate, energy, robotics, and gaming

- Scaling infrastructure to meet training demands requires strategic partnerships like the CoreWeave compute deal

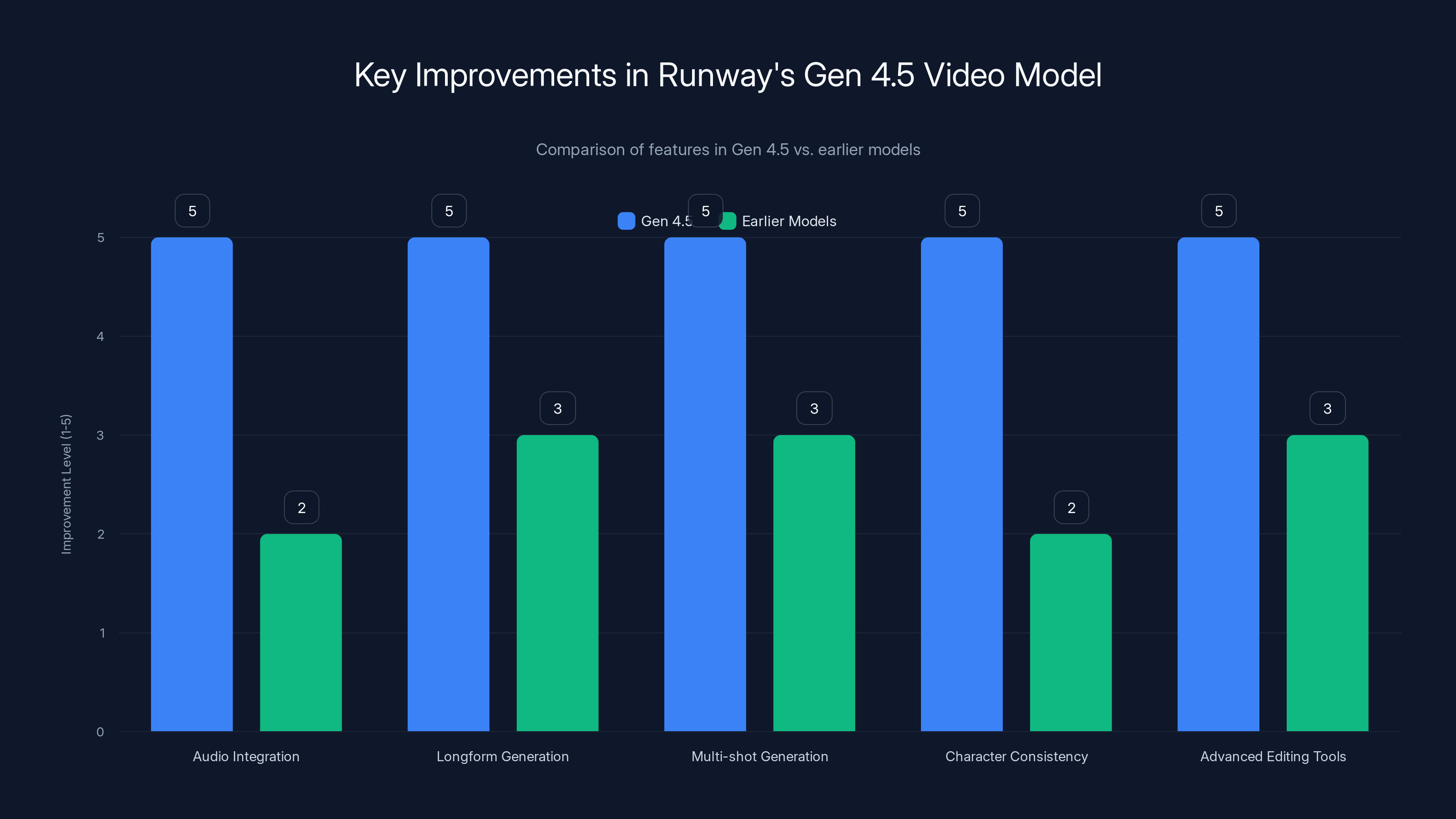

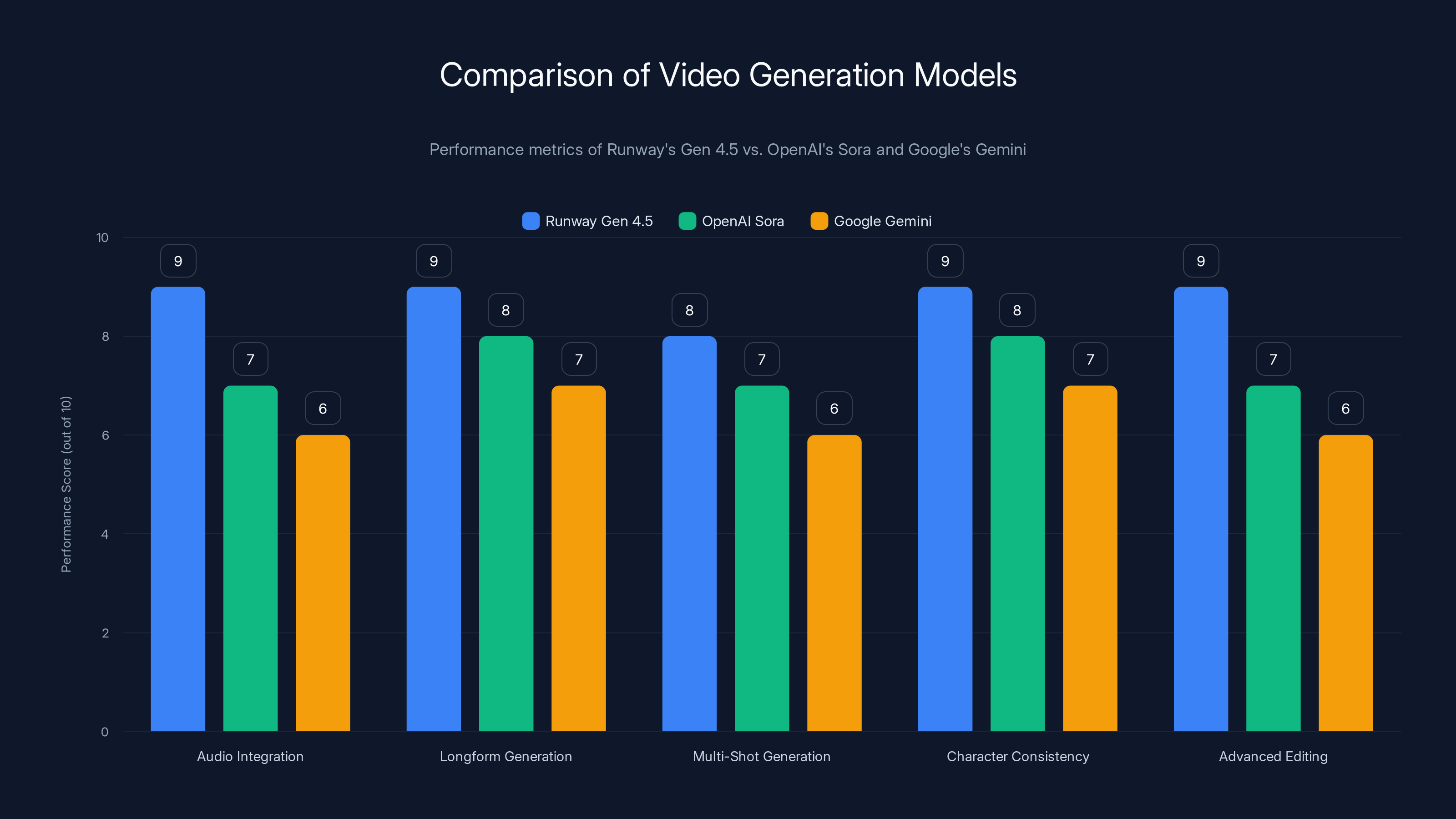

Gen 4.5 significantly enhances video generation capabilities with improvements in audio integration, video length, narrative complexity, character consistency, and editing tools.

What Is Runway, and Why Should You Care?

Runway started as a creative tool. If you've worked in video production, design, or digital media in the last few years, you've either used Runway or seen its output. The company made it possible to generate video from text prompts, edit video with AI, and extend scenes beyond their original boundaries. These aren't minor features—they represent a fundamental shift in how creative work gets done.

But the company has always been more ambitious than its product line suggested. The founding team, led by Cristobal Valenzuela, came from backgrounds in machine learning and media technology. They understood early that video generation was solving a real problem, but it was also a beachhead into something much larger.

Video data is fundamentally different from text data. When you read a sentence, you're engaging with a symbolic system. Language is abstract. Video is grounded in physical reality. Every frame contains information about how objects move, how light behaves, how materials respond to forces. If you could train an AI system to understand video deeply enough, you'd have an AI system that understands physics, causality, and consequence. You'd have a model of how the world works.

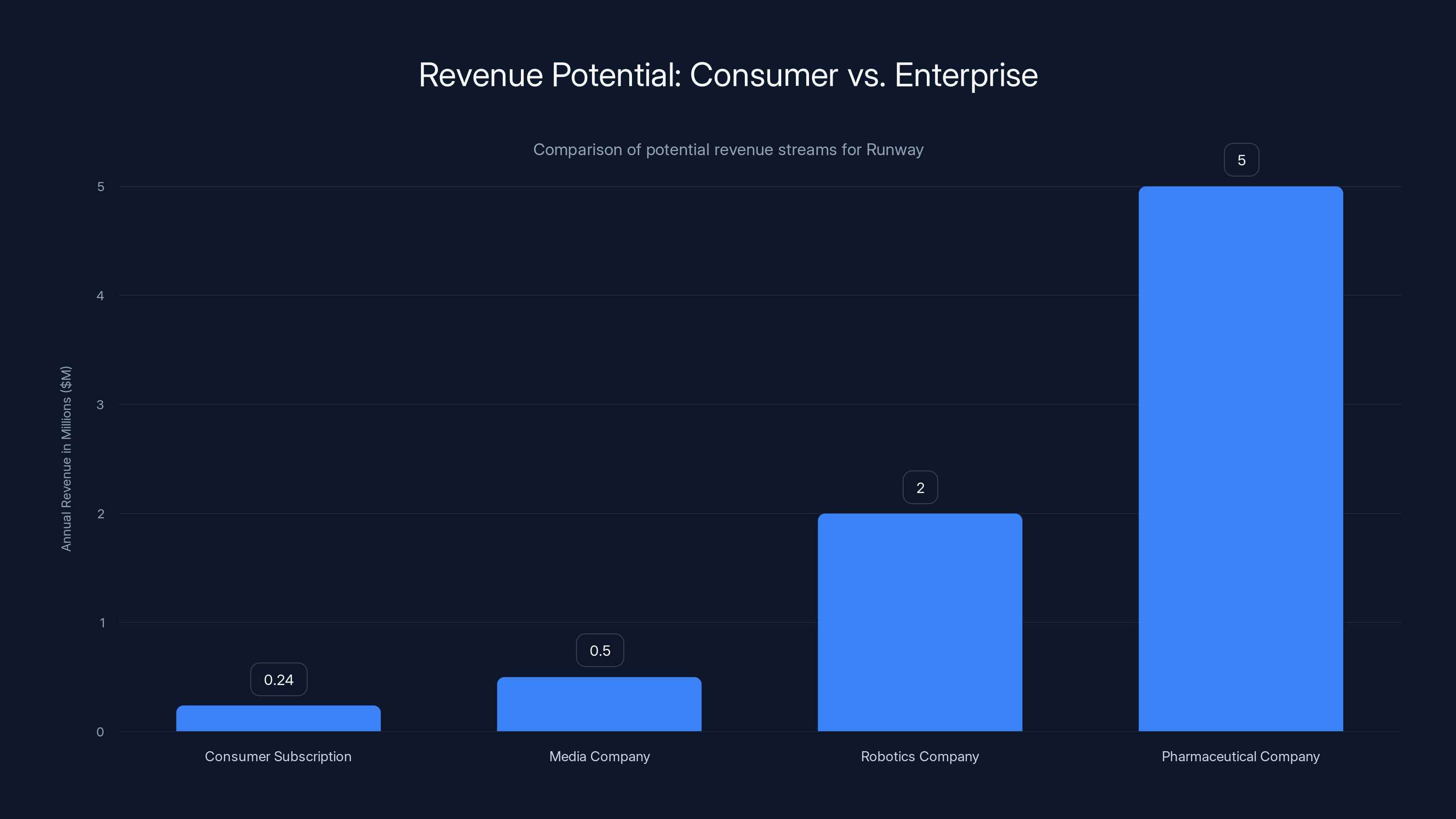

Runway's revenue model has been subscription-based. Pro users pay monthly for access to tools. But as the company scaled, it became clear that the real value wasn't in the consumer market. It was in enterprise. Adobe adopted Runway's technology. Studios used it to accelerate production. VFX studios integrated it into their pipelines.

The funding round validates this shift. A $5.3 billion valuation isn't predicated on creatives paying monthly subscriptions. It's predicated on something bigger: the belief that Runway is building the infrastructure layer for a new era of AI applications.

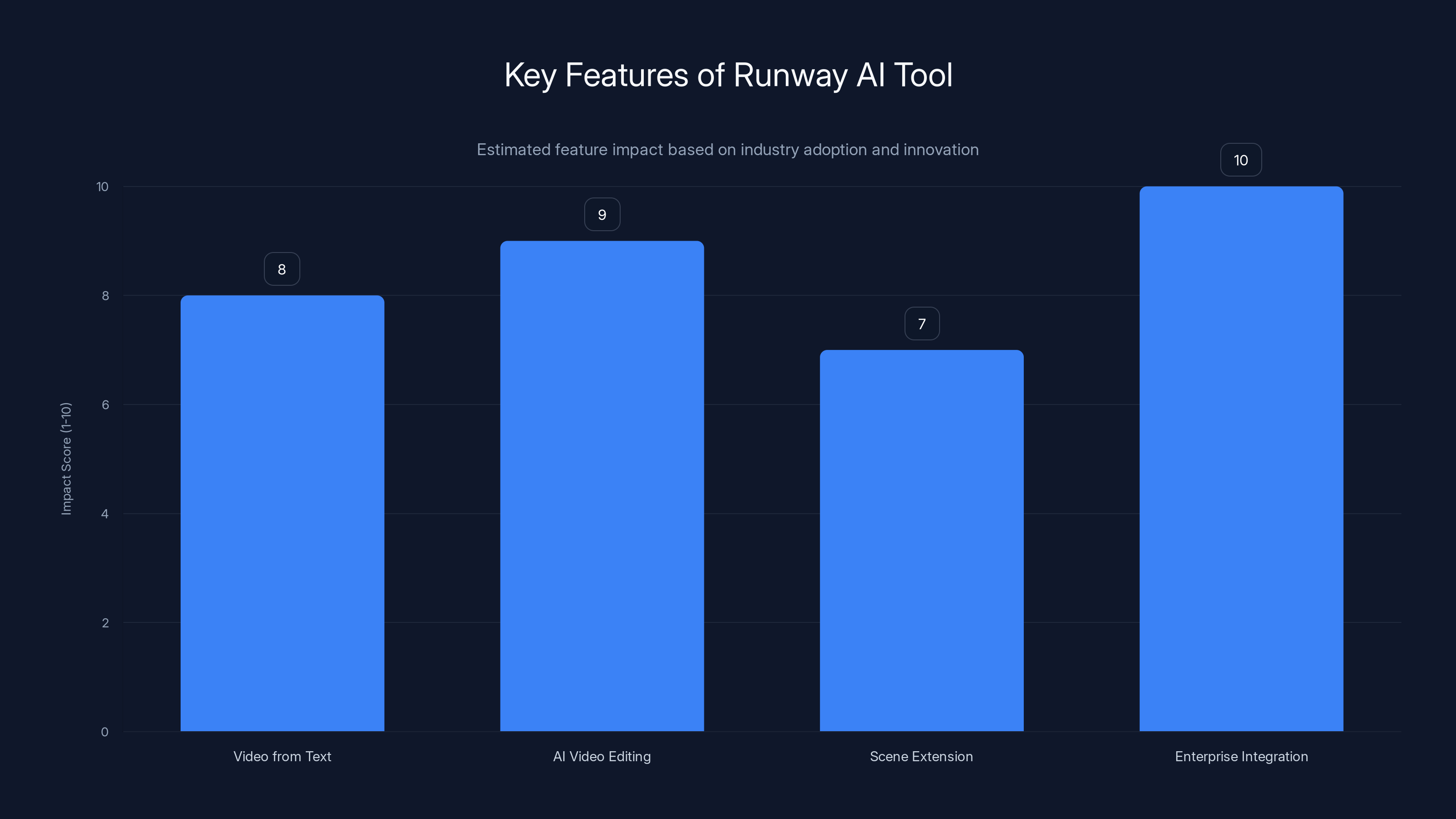

Runway's AI video editing and enterprise integration features have the highest impact, revolutionizing creative workflows. (Estimated data)

Understanding World Models: The Next Layer of AI

Let's talk about world models directly, because this is where the real technical innovation lives.

A traditional machine learning model learns patterns in data. A neural network trained on images learns to recognize cats because it's seen thousands of cat images and learned which pixel patterns correlate with "cat." It's powerful, but fundamentally reactive. You show it data, it classifies or predicts based on that data.

A world model does something different. It builds an internal representation of an environment's structure and dynamics. If you show it a video of a ball rolling across a table, it doesn't just learn "this is a video of a ball." It learns the implicit physics: the ball keeps rolling until friction slows it, and when it reaches the edge, gravity pulls it down. It builds a generative model that can predict what happens next.

Why does this matter? Because prediction requires understanding causality. If you can predict what happens in the next frame of a video, you're not just generating plausible pixels. You're simulating physics.

Runway's approach builds on work from Demis Hassabis and the team at DeepMind, as well as from Fei-Fei Li's World Labs. But Runway's advantage is distribution and product. They already have millions of creators familiar with their tools. They already have enterprise relationships. They can build world models and immediately put them in the hands of people who can use them.

The technical architecture involves several components:

Representation Learning: The model learns a compressed representation of visual information. Instead of operating on raw pixels, it works with a learned latent space that captures the essential information about what's happening.

Dynamics Modeling: The model learns how states evolve over time. Given a current state, what's the next state? This requires understanding physics, object interactions, and environmental properties.

Generative Capability: Once trained, the model can generate plausible continuations of videos. It can extend a clip, fill in missing frames, or simulate what would happen if you changed initial conditions.
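To make the three components concrete, here is a deliberately tiny Python sketch, not Runway's architecture. It assumes a toy "video" of 1-D frames containing a single bright pixel (the ball). The encoder is hand-coded rather than learned, and the "dynamics model" just estimates an average velocity; a real world model would learn both with neural networks over a learned latent space. The names `encode`, `decode`, `Dynamics`, and `extend_clip` are hypothetical.

```python
from dataclasses import dataclass

WIDTH = 10  # a toy "frame" is a 1-D strip of WIDTH pixels


def encode(frame: list[float]) -> int:
    """Representation (hand-coded stand-in for a learned encoder):
    compress a frame to one latent number, the index of the brightest pixel."""
    return max(range(len(frame)), key=lambda i: frame[i])


def decode(position: int) -> list[float]:
    """Map a latent state back to a frame; an off-screen ball renders as empty."""
    frame = [0.0] * WIDTH
    if 0 <= position < WIDTH:
        frame[position] = 1.0
    return frame


@dataclass
class Dynamics:
    """Dynamics modeling: learn how the latent state changes from frame to frame."""
    velocity: float = 0.0

    def fit(self, latents: list[int]) -> None:
        # Average per-frame displacement; needs at least two observed states.
        steps = [b - a for a, b in zip(latents, latents[1:])]
        self.velocity = sum(steps) / len(steps)

    def step(self, position: float) -> float:
        return position + self.velocity


def extend_clip(frames: list[list[float]], n_future: int) -> list[list[float]]:
    """Generative capability: predict n_future frames that continue the clip."""
    latents = [encode(f) for f in frames]
    model = Dynamics()
    model.fit(latents)
    position = float(latents[-1])
    future = []
    for _ in range(n_future):
        position = model.step(position)
        future.append(decode(round(position)))
    return future


clip = [decode(0), decode(2), decode(4)]   # ball moving right 2 px per frame
predicted = extend_clip(clip, 3)
print([encode(f) for f in predicted[:2]])  # [6, 8]
```

Because this dynamics model only knows constant velocity, it happily predicts the ball leaving the frame on the third step; learning richer dynamics such as collisions, gravity, and friction is what makes real world models expensive to train.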

This is computationally expensive. Training world models requires massive amounts of video data and significant compute. Which brings us to the infrastructure question.

The Compute Challenge: Why CoreWeave Matters

Runway's recent deal with CoreWeave to expand compute capacity is more significant than it might initially seem. This isn't just a vendor relationship. It's a signal about the company's priorities and constraints.

Training large AI models is expensive. Training large vision models is even more expensive. Video data is high-dimensional and information-dense. A single high-resolution video frame contains millions of pixels. A second of video at 30fps contains 30 frames. An hour of video contains 108,000 frames. For world model training, you don't just want duration—you want diversity. Thousands of hours of video, all different environments, all different scenarios.
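A quick back-of-envelope calculation shows why video is so data-heavy. This sketch assumes uncompressed 1080p frames with 8-bit RGB color; real training pipelines store compressed video and typically work on downsampled frames or learned latents, so treat these as upper-bound illustrations rather than Runway's actual storage figures.

```python
def frames_per_hour(fps: int) -> int:
    """Frames in one hour of footage at the given frame rate."""
    return fps * 60 * 60


def raw_frame_bytes(width: int, height: int, channels: int = 3, bytes_per_channel: int = 1) -> int:
    """Size of one uncompressed frame (8-bit RGB by default)."""
    return width * height * channels * bytes_per_channel


def raw_hour_bytes(width: int, height: int, fps: int) -> int:
    """Size of one hour of uncompressed footage."""
    return raw_frame_bytes(width, height) * frames_per_hour(fps)


print(frames_per_hour(30))                   # 108000
print(1920 * 1080)                           # 2073600 pixels per 1080p frame
print(raw_hour_bytes(1920, 1080, 30) / 1e9)  # 671.8464 (GB per uncompressed hour)
```

At roughly 672 GB per uncompressed hour, a thousand hours of 1080p footage would approach 672 TB before compression.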

Processing this requires GPUs. Lots of them. Nvidia's H100 and newer H200 GPUs are the current standard for training, but they're expensive and scarce. CoreWeave is an infrastructure provider that specializes in GPU cloud services. They've built data centers optimized for AI workloads.

Runway's deal with CoreWeave suggests a few things:

- Scale is accelerating: The company is planning to train larger models on more data

- In-house infrastructure isn't enough: Even with capital, it's faster to partner with a specialized provider

- Cost is still a constraint: Efficient infrastructure management is critical to unit economics

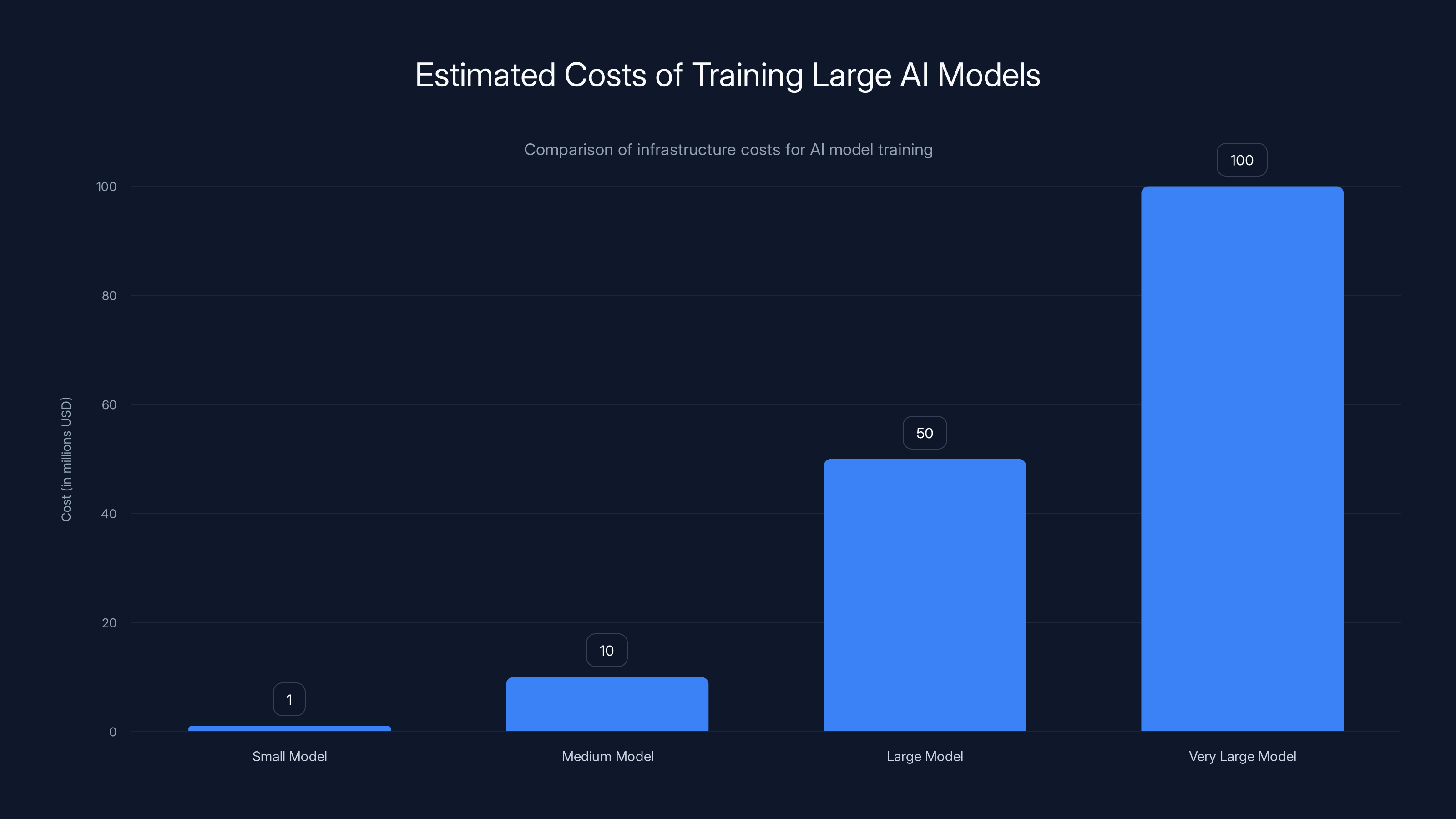

The math here is important. If you're training a world model on thousands of hours of video data, and training takes weeks or months on modern hardware, infrastructure costs can reach millions of dollars per training run. Industry estimates put the cost of training a single frontier-scale model, at companies like OpenAI and Anthropic, at $10-100 million or more. Runway's models are probably on the lower end of that spectrum, but they're still expensive.
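The "millions per run" figure follows from simple arithmetic: GPU count times wall-clock hours times the hourly rate. The inputs below (cluster size, duration, and a cloud price of a few dollars per GPU-hour) are hypothetical illustrations, not Runway's or CoreWeave's actual figures.

```python
def training_run_cost(num_gpus: int, days: float, usd_per_gpu_hour: float) -> float:
    """Rough cloud cost of one training run: total GPU-hours times the hourly rate."""
    gpu_hours = num_gpus * days * 24
    return gpu_hours * usd_per_gpu_hour


# Hypothetical inputs, chosen only to show the order of magnitude
print(training_run_cost(2048, 30, 2.50))  # 3686400.0   (about $3.7M)
print(training_run_cost(8192, 60, 3.00))  # 35389440.0  (about $35M)
```

Doubling either the cluster size or the run length doubles the bill, which is why infrastructure efficiency feeds directly into unit economics.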

This infrastructure investment also signals something about Runway's timeline. They're not planning to release world models once and call it a day. They're planning to iterate continuously. That requires ongoing compute, which requires a partnership with a provider that can scale elastically.

Estimated data suggests that training very large AI models can cost up to $100 million, highlighting the financial challenge and importance of efficient infrastructure like CoreWeave's services.

Gen 4.5: The Model That Changed the Conversation

Runway's Gen 4.5 video generation model was released shortly before the funding announcement, and it's worth understanding in detail because it demonstrates the company's technical leadership.

Previous generations of Runway's models were good, but they had clear limitations. Character consistency was difficult. Longer videos would drift or lose coherence. Audio wasn't well integrated. Editing capabilities were limited.

Gen 4.5 addresses most of these problems:

Native Audio Integration: Videos can now be generated with synchronized audio. This isn't post-hoc—the model understands the relationship between visual content and sound, which is a non-trivial challenge in multimodal AI.

Longform Generation: The model can now generate much longer video sequences—up to 10 minutes—without significant drift or loss of coherence. This required advances in how the model maintains state over long sequences.

Multi-Shot Generation: Complex videos with multiple scenes and transitions can be generated from a single prompt. The model understands narrative flow and scene structure.

Character Consistency: Characters maintain consistent appearance and behavior across a generated video. This is harder than it sounds—it requires the model to maintain a consistent representation of character identity while generating novel poses, expressions, and actions.

Advanced Editing: Users can edit generated videos at a detailed level. Want to change what a character is wearing? Edit the prompt and regenerate just that section. Want to slow down motion? Adjust the speed parameter.
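Character consistency can be made measurable. One approach, used in research on identity-preserving generation, is to run each frame through an identity encoder (for example, a face-recognition model) and compare the resulting embeddings with the first frame's. The sketch below assumes those embeddings already exist; the vectors are made-up three-dimensional examples, whereas real encoders produce hundreds of dimensions. This is an illustrative metric, not Runway's benchmark methodology.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two embedding vectors (1.0 = same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def consistency_score(embeddings: list[list[float]]) -> float:
    """Worst-case similarity between any later frame and the first frame.
    Requires at least two frames; lower values flag frames where identity drifted."""
    reference = embeddings[0]
    return min(cosine_similarity(reference, e) for e in embeddings[1:])


# Hypothetical identity embeddings for four frames of one character
stable = [[0.9, 0.1, 0.4], [0.88, 0.12, 0.41], [0.91, 0.09, 0.39], [0.9, 0.1, 0.4]]
drifting = [[0.9, 0.1, 0.4], [0.7, 0.4, 0.4], [0.3, 0.8, 0.5], [0.1, 0.9, 0.4]]
print(round(consistency_score(stable), 3), round(consistency_score(drifting), 3))
```

Taking the minimum rather than the average is a deliberate choice: a single frame where the character changes is what a viewer notices.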

On benchmarks, Gen 4.5 outperformed OpenAI's Sora and Google's Veo on several metrics. This is significant because Sora in particular has been considered the gold standard for video generation quality.

Why does this matter for the funding round? Because it shows that Runway can compete with well-resourced competitors. OpenAI has more capital. Google has more GPUs. But Runway has arguably shown sharper product instincts and faster execution. That's attractive to investors.

The World Model Shift: From Creative Tools to Scientific Instruments

Here's where Runway's strategy gets interesting. They're not positioning world models as an incremental improvement on Gen 4.5. They're positioning them as a fundamentally different category of tool.

The applications are vast:

Medicine: Simulate drug interactions, protein folding, cell behavior. A world model trained on biological video data could help researchers understand disease progression and test interventions in simulation before clinical trials.

Climate: Model weather systems, ocean currents, atmospheric dynamics. World models could improve climate prediction and help test interventions like carbon capture or cloud seeding.

Robotics: Train robots in simulation, then transfer that training to physical systems. A world model understands object interactions and physics, making it invaluable for robotics development. Companies like Tesla and Boston Dynamics are heavily invested in this area.

Energy: Model power grids, optimize energy distribution, simulate renewable energy systems. World models could help predict demand and reduce waste.

Manufacturing: Simulate production lines, optimize workflows, predict failures. A factory is a complex system with many interacting components. World models could optimize throughput and reduce downtime.

This diversification matters for two reasons. First, it reduces Runway's dependence on the entertainment and creative markets, which are interesting but not massive. Second, it positions Runway as infrastructure for AI development itself.

If Runway can build world models that accurately simulate physical systems, then every company training AI agents will want access to those models. They'll use them to generate training data, test scenarios, and validate behavior before deploying to the real world.
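The "test scenarios and validate behavior" idea can be sketched with a toy simulator standing in for a learned world model. Here a hand-written physics loop plays that role: a robot approaches a wall, a policy decides how hard to brake, and the policy is scored across many randomized starting conditions before it would ever touch real hardware. The braking limit, the distance and speed ranges, and the policy names are all hypothetical.

```python
import random
from typing import Callable

# A policy maps (distance to wall in m, speed in m/s) to a braking deceleration in m/s^2.
Policy = Callable[[float, float], float]
MAX_DECEL = 2.0  # assumed braking limit, m/s^2


def simulate(policy: Policy, distance: float, speed: float, dt: float = 0.1) -> bool:
    """Roll one scenario forward; True if the robot stops before reaching the wall."""
    for _ in range(10_000):
        if distance <= 0:
            return False  # collision
        if speed <= 0:
            return True   # stopped safely
        decel = min(policy(distance, speed), MAX_DECEL)
        speed = max(0.0, speed - decel * dt)
        distance -= speed * dt
    return False  # a scenario that never resolves counts as a failure


def success_rate(policy: Policy, n: int = 1_000, seed: int = 0) -> float:
    """Validate a policy across randomized initial conditions (seeded for repeatability)."""
    rng = random.Random(seed)
    wins = 0
    for _ in range(n):
        if simulate(policy, rng.uniform(10.0, 20.0), rng.uniform(1.0, 5.0)):
            wins += 1
    return wins / n


def never_brake(distance: float, speed: float) -> float:
    return 0.0


def brake_late(distance: float, speed: float) -> float:
    return MAX_DECEL if distance < 4.0 else 0.0


def always_brake(distance: float, speed: float) -> float:
    return MAX_DECEL


for policy in (never_brake, brake_late, always_brake):
    print(policy.__name__, success_rate(policy))
```

With a learned world model, `simulate` would be replaced by model rollouts; the validation loop around it would stay the same.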

Runway's Gen 4.5 model outperforms OpenAI's Sora and Google's Veo across key video generation features, notably in audio integration and character consistency. Estimated data based on feature descriptions.

Competitive Landscape: Runway vs. World Labs vs. Google DeepMind

Runway isn't alone in this space. There's real competition, and understanding it matters for assessing whether the valuation is justified.

World Labs, founded by Fei-Fei Li, is specifically focused on world models. The company was founded in 2024 with the explicit mission of building large-scale world models. They've released a model called Open World Model that anyone can use. The advantage: legitimacy from Fei-Fei Li's reputation and research credentials. The disadvantage: limited product distribution and no revenue stream yet.

Google DeepMind is pursuing world models as part of their broader AI research. They've released models like Genie, which can generate interactive video environments from images. The advantage: Google's compute resources and research talent. The disadvantage: DeepMind tends to release research, not products. There's no clear path to commercialization.

Other players include OpenAI (via Sora research), Stability AI (via their video and 3D efforts), and various academic labs.

Runway's competitive advantages:

- Product distribution: Millions of creators already know the product

- Revenue: Runway is already generating significant revenue from Gen 4.5 and earlier models

- Enterprise relationships: Adobe partnership is massive—built-in distribution channel

- Team: The engineering team has proven they can ship competitive models

- Capital efficiency: Runway has achieved a $5.3B valuation with less capital than some competitors

The risks:

- Execution complexity: Building and shipping world models is harder than Gen 4.5

- Infrastructure costs: Scaling compute might become a bottleneck

- Regulatory uncertainty: Training on copyrighted or proprietary video at scale could face legal challenges

- Moat questions: What prevents a well-funded competitor from building equally good models?

Overall, Runway appears positioned better than most, but this is still a high-risk bet on the company's ability to execute at scale.

Adobe Partnership: What It Means

The mention of an Adobe partnership deserves deeper analysis. Adobe is arguably the most important software company in creative workflows. They own the market through Photoshop, Premiere Pro, After Effects, and other tools. If Runway's technology is integrated into Adobe's workflow, it becomes indispensable.

What does integration look like? Realistically, it means:

- Video editing inside Premiere Pro can leverage Runway's models for extending clips, removing objects, or generating missing frames

- Photoshop gets AI video capabilities for manipulating video like still images

- Adobe's subscription model monetizes Runway's capabilities to Adobe's existing customer base

An Adobe partnership is worth potentially hundreds of millions in revenue. It's also a massive credibility boost. If the world's largest creative software company trusts Runway enough to integrate into core products, other enterprises will too.

This also explains part of the valuation. Adobe Ventures participated in the round, which suggests Adobe is doubling down on the partnership. They're not just integrating existing tech—they're investing in the company's future development.

Enterprise clients offer significantly higher revenue potential compared to consumer subscriptions, with contracts ranging from $500K to several million annually. Estimated data.

Enterprise vs. Consumer: Why Runway is Betting Big on B2B

Early in Runway's history, the company focused on consumers. Individual creators could buy a subscription and generate videos. This was a smart move for validation and product-market fit. Consumer users tell you quickly what's broken.

But the business model doesn't scale in the same way. A few million consumers at

Enterprise is different. A large media company using Runway to accelerate production might pay $500K/year. A robotics company using world models to train agents might pay millions. A pharmaceutical company using world models for drug simulation might pay substantial licensing fees.

Moreover, enterprise customers have different requirements:

- Customization: They want models trained on their data, tuned for their specific use cases

- Integration: They want APIs, webhooks, integration with existing workflows

- Support: They want technical support, SLAs, and dedicated engineering

- Security: They have data governance requirements and need on-premises or VPC deployment options

- Scale: They have volume needs that far exceed consumer usage

The funding round positions Runway to address all of this. They're raising capital to expand engineering and go-to-market. That's explicitly enterprise-focused investment.

Team Expansion: From 140 to Scale

The company has about 140 people. They're planning to expand significantly with the new capital. Where are they investing?

Research: More PhDs. More people working on core model architecture, world model development, and long-term R&D. This is the foundation layer.

Engineering: More infrastructure engineers to manage compute. More software engineers to build products and APIs. More ML engineers to ship models and improve training efficiency.

Go-to-Market: Sales, marketing, customer success. The company has mostly relied on product-led growth. Enterprise sales requires different muscles.

This mix is interesting. A company focused on consumer products might hire more go-to-market. A company focused on research might hire more researchers. Runway's balanced approach suggests they understand they need to excel at both: good research that ships, and enterprise sales that scales.

The timeline implied here is aggressive. If they hire 50-100 people in the next year, they're growing the team by roughly 35-70%. That's fast, and it increases risk. Large-scale hiring can dilute culture and reduce execution velocity.

But it's the bet Runway is making. They believe the market opportunity is large enough to justify doubling down.

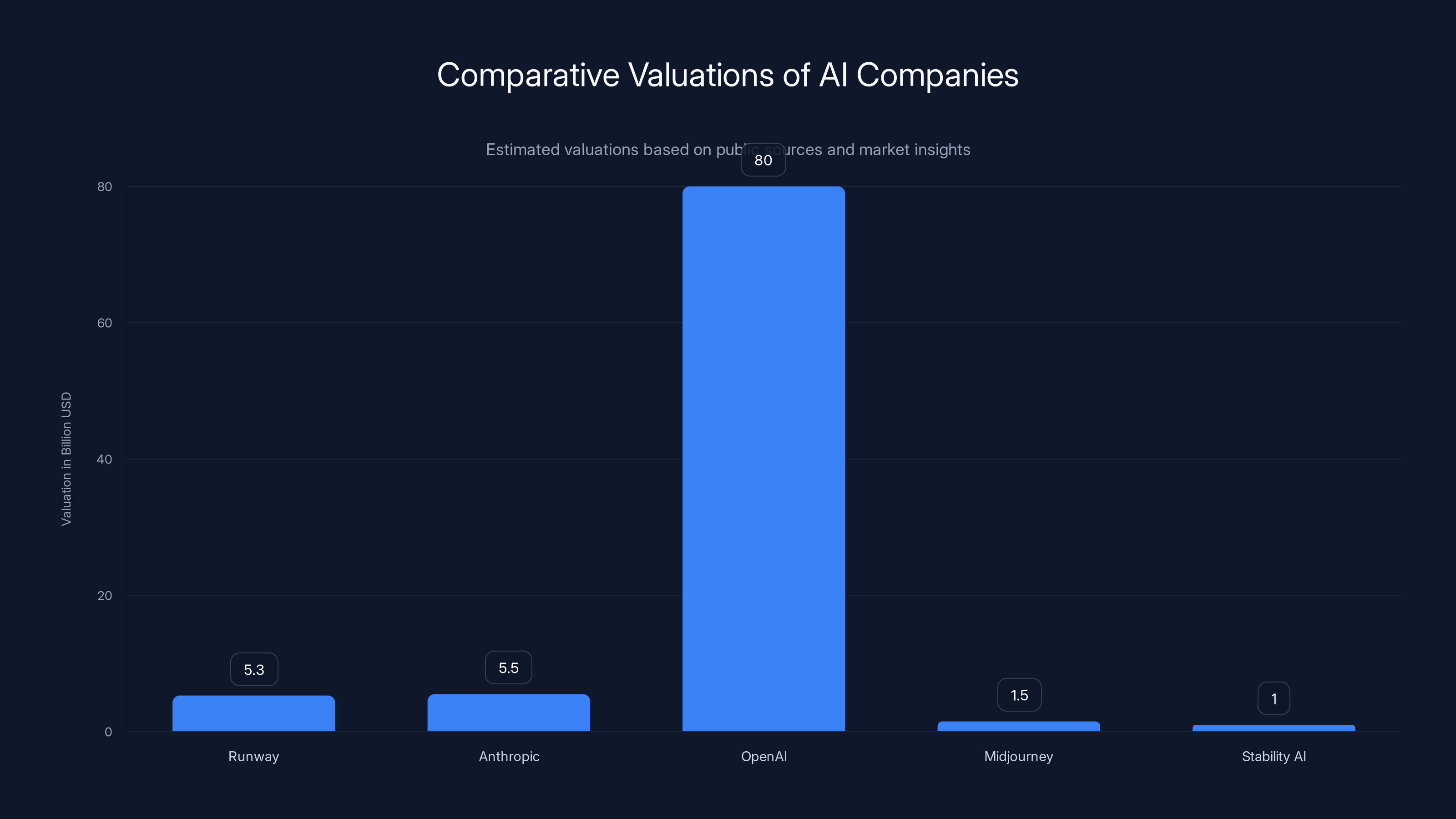

Runway's $5.3B valuation is higher than Midjourney's and Stability AI's, but far below those of Anthropic and OpenAI. Estimated data based on market insights.

The Broader AI Landscape: Why World Models Matter Now

There's a reason multiple companies are pursuing world models simultaneously. There's a genuine belief in the research community that they represent a path forward for more capable AI.

Yann LeCun, Meta's longtime chief AI scientist, has been vocal about this. He argues that large language models, while powerful, are fundamentally limited. They're next-token predictors. They're optimized for generating text that looks right, not text that's true. To get to real reasoning and planning, he argues, you need models that understand causality and environment dynamics: exactly what world models aim to provide.

The theory goes like this:

Large language models work because language has a lot of structure and redundancy. If you predict enough tokens correctly, you implicitly learn something about how language works. But the models aren't actually reasoning. They're statistical pattern matching at scale.

World models are different. They're forced to learn something about physical causality because predicting the next frame of video requires understanding physics. Drop a ball, it falls. Throw it, it flies. These rules aren't about language—they're about the world.
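The "drop a ball" intuition can be checked numerically. The sketch below assumes a ball dropped from rest at 10 m and filmed at 30 fps, and compares three hand-written next-frame predictors. Only the one that encodes constant acceleration (gravity) predicts the fourth frame exactly; a world model would have to discover that rule from pixels rather than being handed it.

```python
G = 9.81     # gravitational acceleration, m/s^2
DT = 1 / 30  # seconds between frames at 30 fps


def height(y0: float, t: float) -> float:
    """Ground truth: height of a ball dropped from rest at y0 after t seconds."""
    return y0 - 0.5 * G * t * t


def predict_copy_last(h: list[float]) -> float:
    """Naive baseline: assume nothing changes."""
    return h[-1]


def predict_constant_velocity(h: list[float]) -> float:
    """Linear extrapolation: captures motion but not acceleration."""
    return h[-1] + (h[-1] - h[-2])


def predict_constant_acceleration(h: list[float]) -> float:
    """Second-order extrapolation: exact for motion under constant gravity."""
    return 3 * h[-1] - 3 * h[-2] + h[-3]


observed = [height(10.0, i * DT) for i in range(3)]
actual = height(10.0, 3 * DT)
for predict in (predict_copy_last, predict_constant_velocity, predict_constant_acceleration):
    print(f"{predict.__name__}: error = {abs(predict(observed) - actual):.6f} m")
```

On the very first predicted frame, the two naive predictors miss by roughly 2.7 cm and 1.1 cm, and those errors compound over longer rollouts.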

This is the promise: If you train systems on video data and task them with prediction, you'll get systems that understand causality. Those systems will generalize better to new problems. They'll be more capable.

Is this true? It's still an open question. But there's enough theoretical justification that smart people are willing to bet billions on it.

Valuation Analysis: Is $5.3B Justified?

Let's do some back-of-envelope math on the valuation.

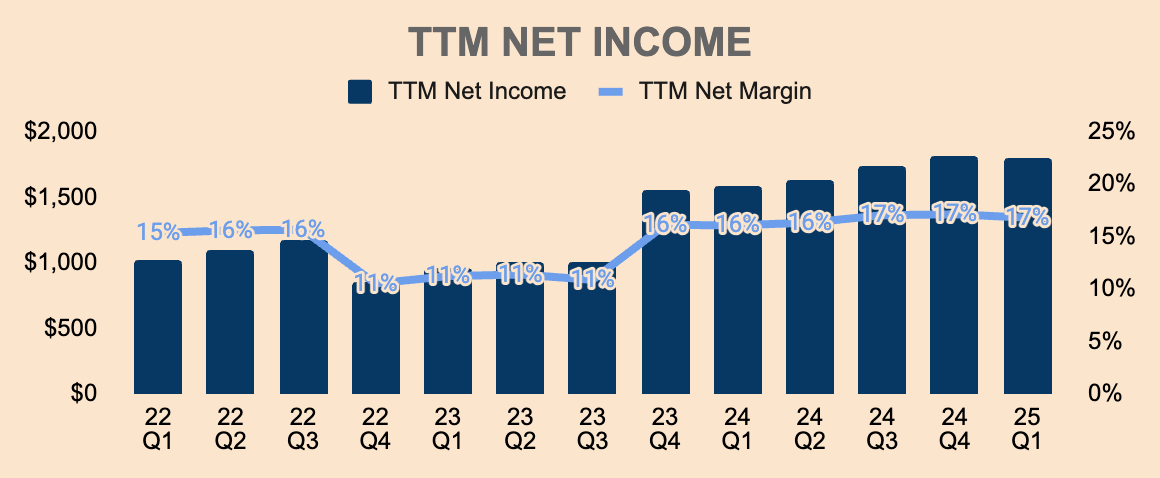

Runway likely has meaningful revenue from existing products. Let's estimate conservatively: maybe

But we're talking about a $5.3B valuation. That implies investors are betting on:

- Dramatic revenue growth: From world models and expanded enterprise adoption

- Margin improvement: AI models might be expensive to run, but if monetized efficiently, they're high-margin

- Strategic value: To larger companies (Adobe, Nvidia, etc.) that benefit from Runway's success

- Market expansion: Into robotics, medicine, climate, energy—new markets worth billions
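One way to frame the back-of-envelope math is to invert the valuation into the annual revenue it implies at a given revenue multiple. The multiples below are hypothetical round numbers, not figures from the round or from Runway.

```python
VALUATION_USD = 5.3e9  # valuation from the funding round


def implied_annual_revenue(valuation: float, revenue_multiple: float) -> float:
    """Annual revenue that would justify the valuation at a given revenue multiple."""
    return valuation / revenue_multiple


for multiple in (20, 30, 50):  # hypothetical multiples
    revenue = implied_annual_revenue(VALUATION_USD, multiple)
    print(f"{multiple}x -> ${revenue / 1e6:,.0f}M")
# 20x -> $265M
# 30x -> $177M
# 50x -> $106M
```

The higher the multiple investors accept, the more of the valuation rests on future growth rather than today's revenue.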

Comparable companies:

- Anthropic has been valued at well over $100B on rapidly growing revenue

- OpenAI has been valued at $300B+ on massive revenue and broad applications

- Midjourney is likely worth $1-2B on image generation alone

- Stability AI has been valued at $1B despite burning through capital

In that context, $5.3B for Runway seems defensible but not cheap. It's betting on execution. If Runway ships competitive world models and lands enterprise deals, the valuation makes sense. If they fail to differentiate or execution stalls, investors overpaid.

Implications for Creators and Enterprises

What does this funding round mean for actual users?

For creators: More features, faster iteration, better tools. Runway will invest in product. They'll add capabilities that make creative work faster and easier. The question is pricing—will they stay consumer-friendly or shift to enterprise pricing?

For enterprises: More investment in customization, integration, and support. Runway will build APIs and integrations. They'll offer enterprise contracts. They'll provide technical support.

For investors: This is a bet on world models being as important as transformers were for language. If true, early investors could see massive returns. If false, money gets written off.

For the industry: Signal that world models are real and serious. Other companies will accelerate their own efforts. Investment will flow into this space. Talent will concentrate here.

Future Roadmap: What's Next?

Based on the funding round and stated priorities, here's what we can expect:

Near-term (6-12 months):

- Improved world model performance

- More domain-specific models (medicine, robotics, climate)

- Better integration with Adobe

- Continued Gen 4.5 improvements

Medium-term (1-2 years):

- Commercial world model products

- Enterprise contracts with pharma, robotics, climate companies

- Potential acquisition or partnership with larger tech company

- Team expansion completing

Long-term (2+ years):

- Runway as infrastructure layer for AI development

- Multiple revenue streams (B2B, B2C, licensing)

- Potential IPO or acquisition by Google, Meta, or another tech giant

The company is clearly thinking long-term. They're building infrastructure, not just products. That's how you build a multi-billion dollar company.

Challenges Ahead: What Could Go Wrong

Despite the positive momentum, there are real risks:

Regulatory: Using video data for training could face privacy and copyright challenges. If major creators or companies challenge Runway's data usage, it could slow development.

Execution: Building world models is genuinely hard. It's possible Runway's first generation is impressive but doesn't actually solve real problems well enough to justify enterprise pricing.

Competition: OpenAI and Google have more capital and more compute. If they prioritize world models, they could outcompete Runway through sheer resource advantage.

Unit economics: Generating video is expensive. If Runway can't figure out how to monetize efficiently, costs could exceed revenue at scale.

Technological shifts: What if world models don't actually lead to more capable AI? What if language models continue to improve and render world models unnecessary? This is unlikely but possible.

Talent: Expanding to 200+ people means managing growth. Fast-growing companies often struggle with culture and retention.

The Broader Context: AI Investment in 2025

This funding round exists in context. AI investment is at record levels. Billions of dollars are flowing into AI companies. But not all of it is rational. Some is hype. Some is fear of missing out.

Runway's round suggests we're in a phase where the market is differentiating. Not all AI companies get $5B valuations. The ones that do have proven business models (or very credible paths to them) and demonstrated product excellence.

Runway has both. They're making a credible play for infrastructure-level importance. They're executing well. The money makes sense.

But this also means the AI market is consolidating expectations. The days of every generative AI startup getting funded are ending. Now investors are backing companies with real advantages and clear paths to sustainable business.

FAQ

What is a world model in AI?

A world model is an AI system that learns to simulate the dynamics of physical environments by building internal representations of how things work. Unlike language models that predict the next word, world models predict how scenes evolve over time, learning implicit understanding of physics, causality, and object interactions. This capability makes them valuable for applications like robotics training, drug simulation, climate modeling, and scientific research where understanding environmental dynamics is critical.

How does Runway's Gen 4.5 differ from earlier video generation models?

Gen 4.5 introduces several major improvements: native audio integration that synchronizes sound with video, longform generation capability supporting videos up to 10 minutes without coherence loss, multi-shot generation that handles complex narratives with multiple scenes, character consistency across entire videos, and advanced editing tools that allow users to modify specific elements. These advances address previous limitations where character appearance would drift, longer videos would degrade, and audio had to be added separately.

Why is the $5.3 billion valuation significant?

The valuation reflects investor confidence that Runway has achieved technical leadership in video generation and is positioned to dominate world model applications across robotics, medicine, climate, and energy sectors. The investor composition—including Nvidia, Adobe, and AMD Ventures—indicates strategic validation. The capital enables rapid team expansion, compute infrastructure scaling, and enterprise product development to capture emerging markets beyond consumer creative tools.

What are world models used for in practical applications?

World models have diverse applications: in medicine, they simulate protein folding and drug interactions before clinical trials; in robotics, they provide training environments that reduce real-world deployment costs and risks; in climate science, they improve weather prediction and model intervention effectiveness; in manufacturing, they optimize production lines and predict equipment failures; in energy, they improve power grid efficiency and renewable energy forecasting. These applications represent multi-billion dollar market opportunities where Runway could license or provide access to specialized models.

How does Runway's approach differ from competitors like World Labs and Google DeepMind?

Runway combines strong research capabilities with proven product distribution and existing enterprise relationships through the Adobe partnership. World Labs has credibility through founder Fei-Fei Li but limited commercial infrastructure. Google DeepMind has superior compute resources but tends to release research rather than commercialized products. Among companies focused on world models, Runway stands out for already having significant revenue, enterprise adoption, and the operational maturity to scale commercially.

What infrastructure partnerships are critical for world model development?

The CoreWeave partnership reveals that training world models requires specialized compute infrastructure. Video data processing demands GPUs operating continuously for weeks or months, making cloud partnerships more efficient than building proprietary data centers. CoreWeave provides GPU-optimized infrastructure, enabling Runway to scale training elastically without massive capital expenditure in hardware that might become obsolete rapidly.

How does Runway plan to monetize world models?

Runway is transitioning from consumer subscription (

What's the competitive advantage of video-based training for AI systems?

Video contains rich information about physics, causality, and environmental dynamics because changes from one frame to the next are largely governed by physical laws. Training on video pushes AI systems to learn underlying rules rather than surface-level patterns. This is theoretically more aligned with how humans learn about the world: through observing cause and effect. Systems trained on video should generalize better to novel scenarios because they've learned genuine principles rather than dataset-specific correlations.

What regulatory challenges could affect Runway's growth?

Runway faces potential challenges around copyright of training data (did creators consent to their videos being used for model training?), privacy of people appearing in videos, and data residency requirements for enterprise customers in regulated industries. The entertainment industry has already shown willingness to litigate over AI training data. Regulatory changes in EU, UK, and California could require consent for training data or limit model capabilities. These challenges could slow development or increase costs substantially.

Is the $5.3 billion valuation sustainable, or is it speculative?

The valuation is justified if Runway executes on world models and lands significant enterprise contracts in robotics, medicine, climate, and manufacturing. Like many AI companies, Runway is valued on long-term potential rather than current revenue. However, if execution stalls, if competitors with more resources capture key markets, or if world models fail to deliver expected value, investors will have overpaid. Success depends on the next 18-24 months of product delivery and customer acquisition.

Conclusion: The AI Inflection Point

Runway's

The company has earned this position through actual execution. Gen 4.5 is a genuinely impressive model. It competes favorably with offerings from companies with 100x more capital. The Adobe partnership is real, providing distribution that most startups only dream of. The team is talented and has proven they can ship.

But this is still a high-stakes bet. World models are theoretically promising, but practically unproven at scale. Runway is betting that the next generation of AI breakthroughs will come from systems trained to understand and predict video, not systems trained only on text. They're betting they can commercialize this technology faster than competitors with more resources. They're betting they can hire and integrate 50+ new people while maintaining execution quality.

These are big bets. But they're bets worth making at a company of Runway's caliber.

For the AI industry, this round signals that we're past the "all generative AI is good" phase. We're entering a phase of specialization and maturation. Companies that can combine research excellence with product execution and sustainable business models will win. Runway appears to be one of those companies.

For creators and enterprises, Runway's momentum is good news. It means better tools, more features, faster iteration. It means world models will become more accessible and more capable. It means the infrastructure layer for the next generation of AI applications is being built.

The next 18 months will tell us whether this bet was genius or hubris. My money's on genius, but the test is real.

Key Takeaways

- Runway raised $315M at a $5.3B valuation, signaling investor confidence in world models as next-generation AI infrastructure

- Gen 4.5 outperforms OpenAI Sora and Google video models on multiple benchmarks with 10-minute generation and character consistency

- World models enable applications beyond entertainment—medicine, robotics, climate, energy, and manufacturing—creating multi-billion dollar markets

- Adobe partnership provides built-in distribution to millions of creative professionals and enterprise customers

- Competitive advantages are execution, product distribution, and enterprise relationships—but competitors with more capital could catch up


![Runway's $315M Funding Round and the Future of AI World Models [2025]](https://tryrunable.com/blog/runway-s-315m-funding-round-and-the-future-of-ai-world-model/image-1-1770734315686.jpg)