Introduction: The Moderation Reckoning

Something broke in early 2026. A social network that barely existed 12 months earlier hit 2.5 million users. Growth like that feels like a victory, right? Until you realize that infrastructure designed for hundreds of thousands of people just got hit with tens of millions of pieces of content a day.

The platform in question faced a brutal reality that every fast-growing social network eventually hits: your systems can't keep up. And when they can't, the worst content bubbles to the surface. According to TechCrunch, racial slurs in usernames, hate speech in hashtags, and content glorifying extremist figures were all documented, reported, and still live on the platform days later. This wasn't some fringe problem buried in obscure corners. This was visible, searchable, and widespread.

Here's what matters about this story: it's not unique. This is the third or fourth time we've watched a social platform explode in growth and immediately struggle with moderation at scale. It happened to Bluesky. It happened to Mastodon. It happened to every platform that saw a sudden influx of users looking for an alternative to an established network.

But there's something deeper here. This isn't just about throwing more moderators at the problem. This is about the fundamental tension between two things platforms claim to want: open speech and safe spaces. And when you grow fast, you can't have both. You have to choose.

The platform's founder claimed they were "offering everyone the freedom to express and share their opinions in a healthy and respectful digital environment." By February 2026, those two goals were already in direct conflict. And the platform was losing on both fronts.

This article breaks down what actually happens when a social network grows too fast for its moderation systems. We'll look at the technical challenges, the human costs, the policy failures, and what actually works when done right. Not the stuff platforms say works. The stuff that actually, measurably prevents harm.

TL;DR

- Moderation scales exponentially, not linearly: Doubling users doesn't require 2x moderators—it requires 4-5x due to increased content volume and complexity

- Growth and safety are a real trade-off: Platforms that claim you can have both are lying. Every data point shows you have to choose which one matters more

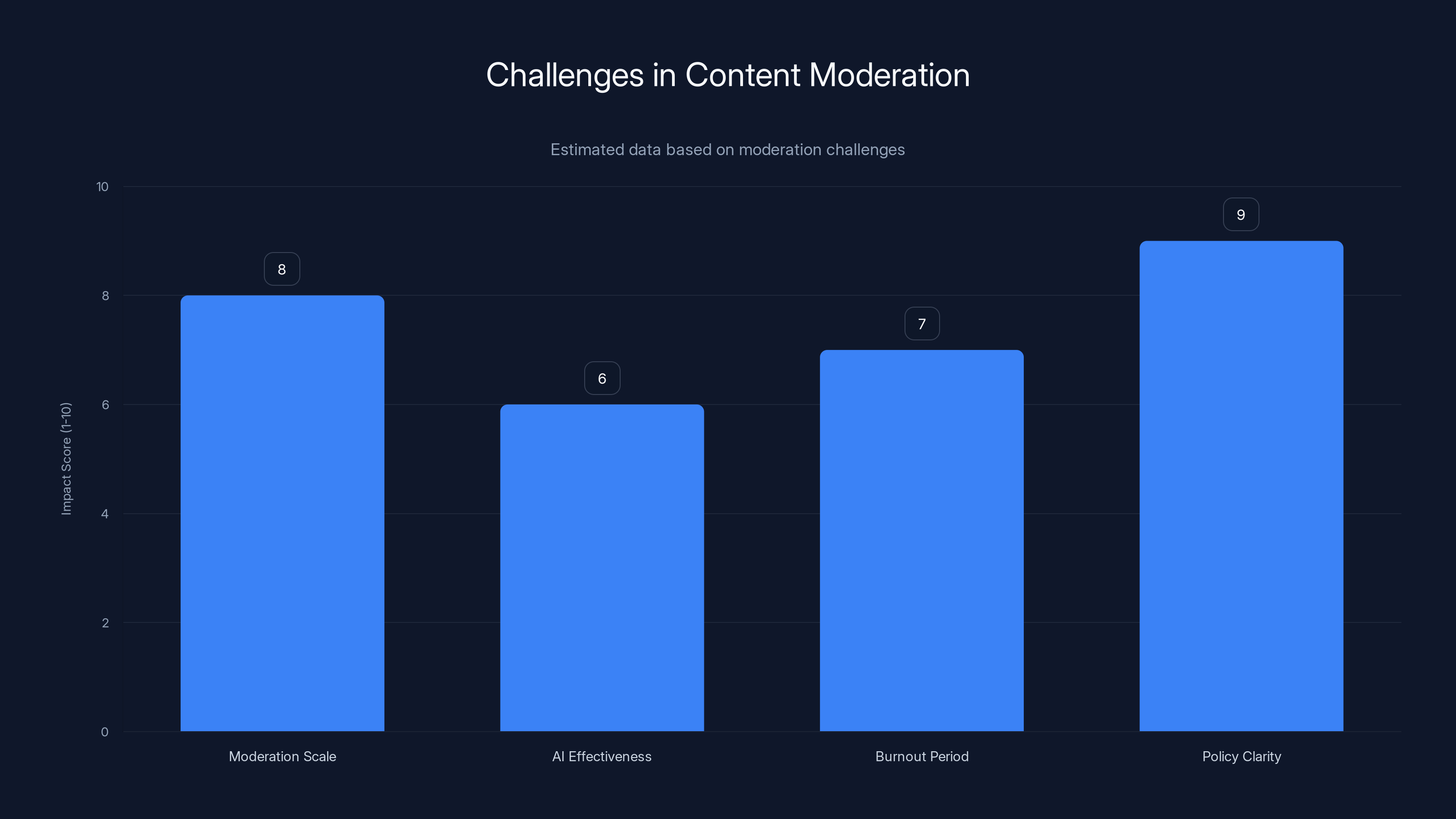

- Technology can't do this alone: AI catches maybe 60-70% of harmful content, and false positives create their own problems

- First-responder burnout is real: Human moderators reviewing hate speech and extremist content burn out after 6-18 months on average

- Policy clarity matters more than policy strictness: Clear, consistently-enforced rules work better than strict but unenforced policies

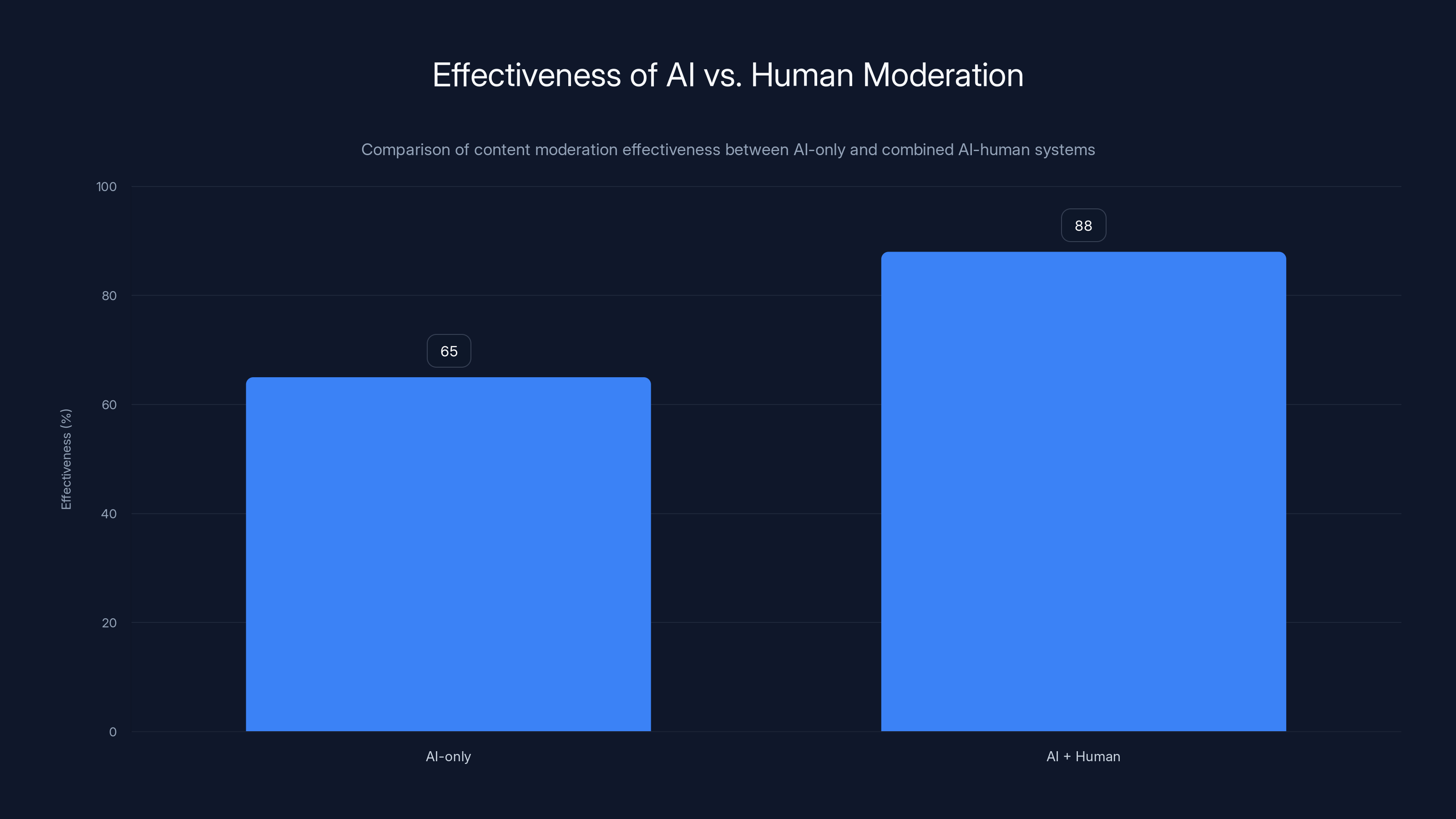

AI-only moderation systems catch about 65% of harmful content, while combining AI with human oversight increases effectiveness to approximately 88%.

The Growth Trap: Why Fast Growth Breaks Moderation

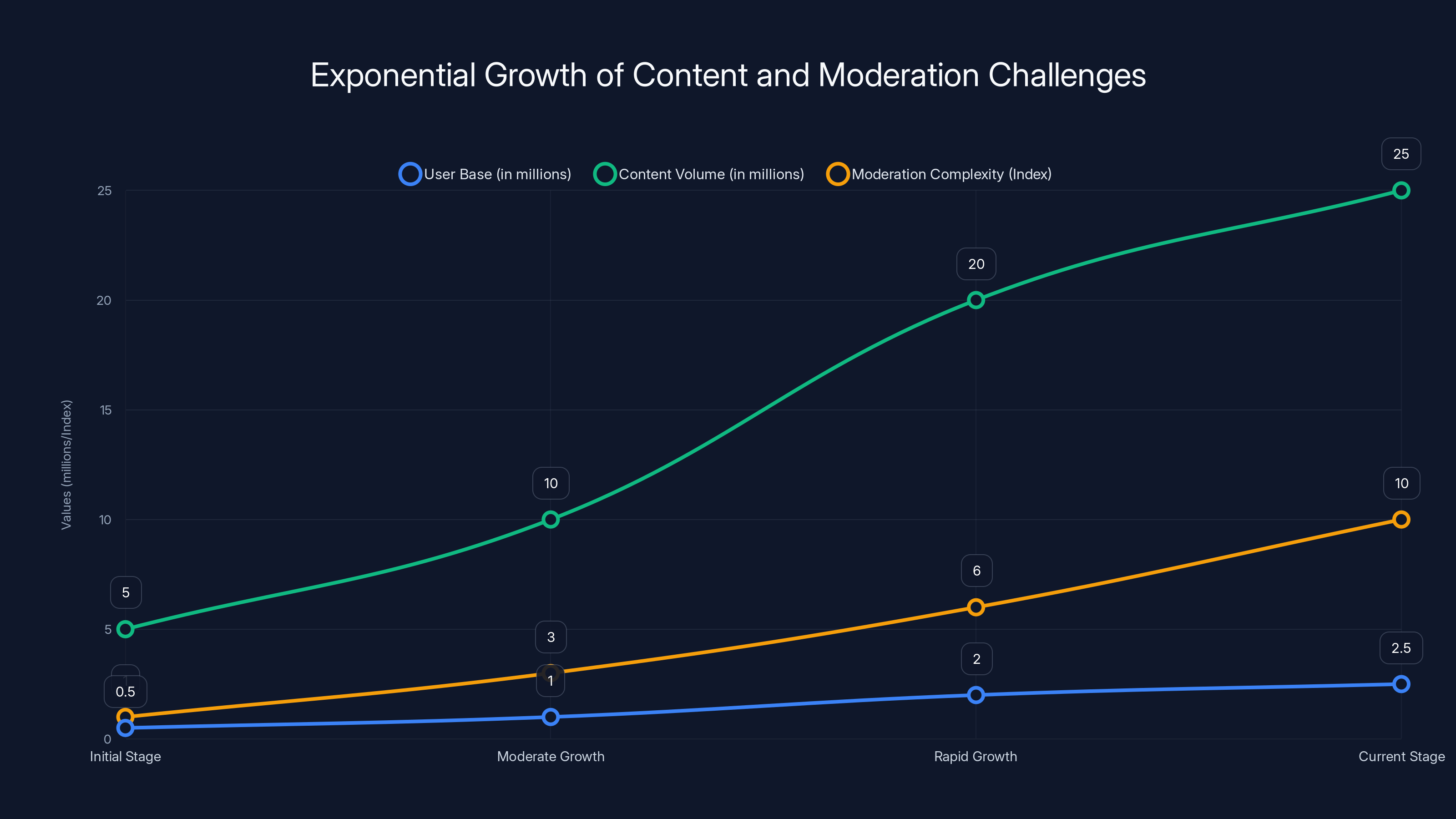

Let's talk about the math first, because math doesn't lie. When a social network goes from 500,000 users to 2.5 million users, the moderation problem doesn't scale linearly. It scales exponentially.

Here's why. If your platform generates an average of 10 pieces of content per user per day, then 500,000 users equals 5 million pieces of content daily. At 2.5 million users, that's 25 million pieces of content daily. That's a 5x increase in content volume.
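To make that arithmetic concrete, here is a minimal Python sketch. The function name is made up for illustration, and the 10-items-per-user figure is the same assumption used above, not a measured number.

```python
def daily_content_volume(users: int, items_per_user_per_day: int) -> int:
    """Expected number of content items produced per day."""
    return users * items_per_user_per_day


# Figures from the paragraph above; 10 items per user per day is an assumption.
before = daily_content_volume(500_000, 10)
after = daily_content_volume(2_500_000, 10)
growth_factor = after / before

print(f"{before:,} -> {after:,} items per day ({growth_factor:.0f}x)")
```

On its own, volume grows in direct proportion to users. The extra pain comes from everything this simple model leaves out.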

But here's where it gets worse. Content moderation doesn't scale with volume alone. It scales with complexity. New users bring new languages, new cultural references, new slurs, new ways to disguise harmful content. Your moderators have to learn these patterns. That learning curve adds time per review.

Additionally, bad actors migrate to new platforms specifically because moderation is weak. They're not waiting around. The moment they see a platform gaining users and moving away from established competitors, they move in. It's like how a new neighborhood gets vandalized—not because the neighborhood is bad, but because vandals know the police don't patrol there yet.

This is what happened here. The platform caught fire after a major competitor faced regulatory pressure. Users flooded in. The moderation team didn't scale at the same rate. And the window opened for every bad actor looking for a new home.

The founder's response was telling: the company is "rapidly expanding our content moderation team." Not expanded. Rapidly expanding. Which means it wasn't prepared. They were building the plane while flying it, and the plane was already flying through a storm.

The Policy vs. Enforcement Gap

Here's the thing that actually infuriates me about this: the platform's stated policies are solid. They claim to restrict content involving illegal activity, hate speech, bullying, harassment, and content intended to cause harm. That's a reasonable policy. Most social networks have essentially the same policy.

The gap isn't in the policy. It's in enforcement.

The platform advertised zero tolerance for the exact content that was running rampant on their service. Users reported specific violations with screenshots. The platform received these reports and said they were "actively reviewing and removing inappropriate content." Then days passed, and the content was still there.

This creates a trust problem that's almost impossible to recover from. When a platform says one thing and does another, users stop believing them. Not because they think the policy is wrong, but because they think the policy is theater.

The Anti-Defamation League (ADL) published their own research flagging the platform for antisemitic and extremist content, including content tied to designated foreign terrorist organizations. That's not a small problem. That's a credibility-destroying problem.

When major civil rights organizations have to publish reports documenting your moderation failures, you've already lost the narrative. And you should have.

But here's where policy clarity actually matters: the platform needs to be brutally honest about what it prioritizes. If the platform's real priority is "maximum speech with minimal restrictions," then say that. Let users choose to participate or not based on that honest statement.

Instead, the platform claimed to prioritize both open speech AND a healthy, respectful environment. You can't have both at scale. Every large social network has had to choose. And the choice made here—or rather, the choice avoided here—created an environment where the worst content thrived.

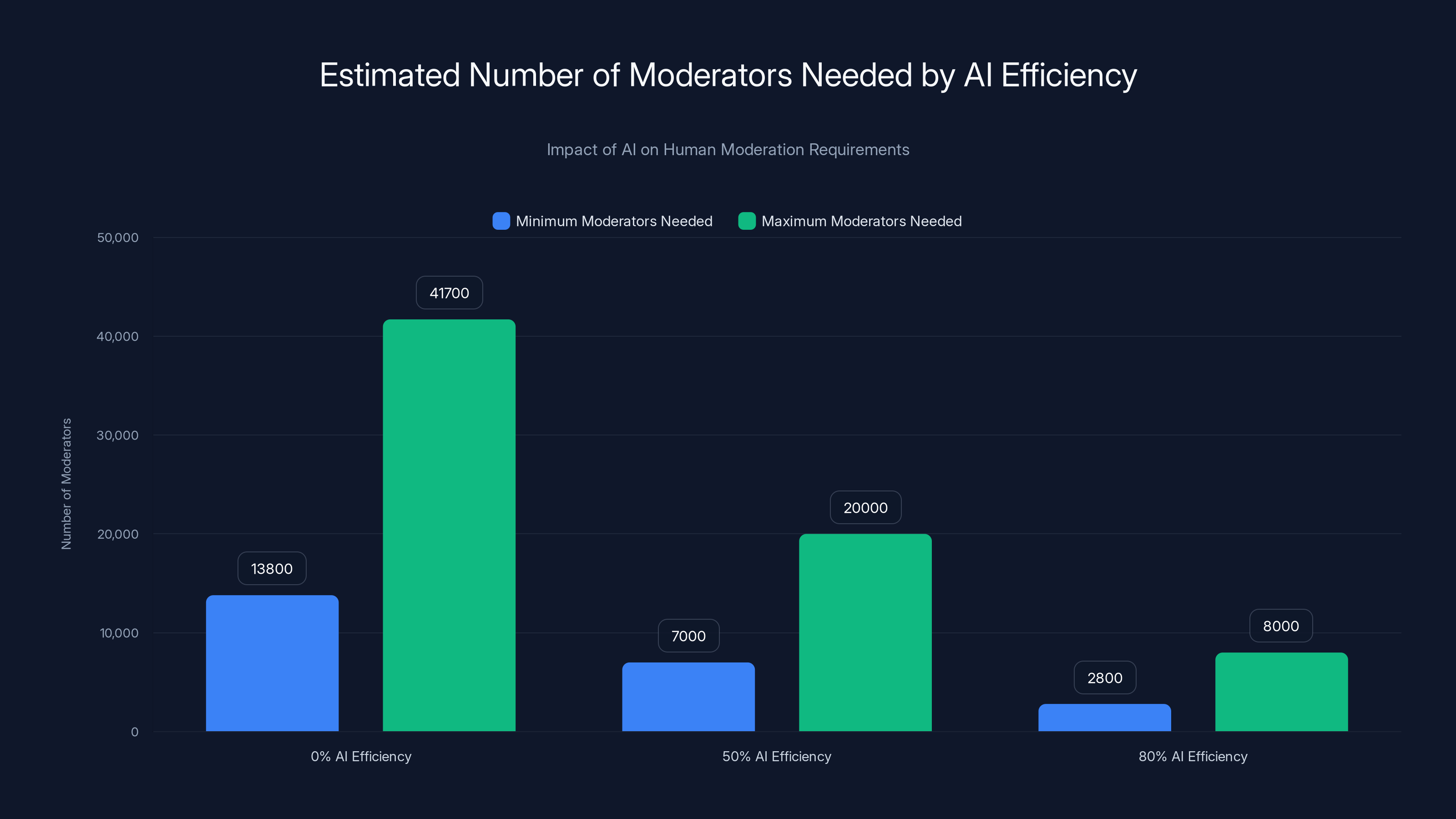

As AI efficiency increases, the number of human moderators required decreases significantly. Estimated data shows a reduction from 13,800-41,700 moderators to 2,800-8,000 when AI efficiency reaches 80%.

The Moderation Workforce Crisis

Here's something that doesn't get enough attention: content moderation work is traumatic.

Moderators review content every day that includes child exploitation, graphic violence, detailed instructions for suicide and self-harm, extreme bigotry, and everything in between. They do this for 6-8 hours per day, often in outsourced call centers where they're paid $15-20 per hour. Studies have reported that content moderators develop PTSD symptoms at rates comparable to soldiers in combat zones.

The average moderator lasts 6-18 months before they burn out, quit, or transfer to less traumatic roles. That's your retention window. After that, you need to hire and train new people, which means you start the cycle over.

When a platform suddenly grows 5x, you need to hire and train 5x as many moderators. But you don't have 5x experienced moderators sitting around waiting for a job. You're hiring people who've never done this work. They're learning on the job while processing the worst content the internet produces.

New moderators make more mistakes. They miss context. They over-remove legitimate content. They under-remove harmful content. They need supervision. That supervision requires experienced moderators, which further stretches your experienced workforce.

This is the workforce crisis that nobody talks about. You can't just throw bodies at the problem. You need experienced, trained, trauma-informed people managing increasingly traumatic content.

The platform's response was to say they're "upgrading our technology infrastructure so we can catch and remove harmful content more effectively." Technology helps. But technology is a multiplier, not a solution. If you have bad systems and throw better technology at them, you get bad systems that run faster.

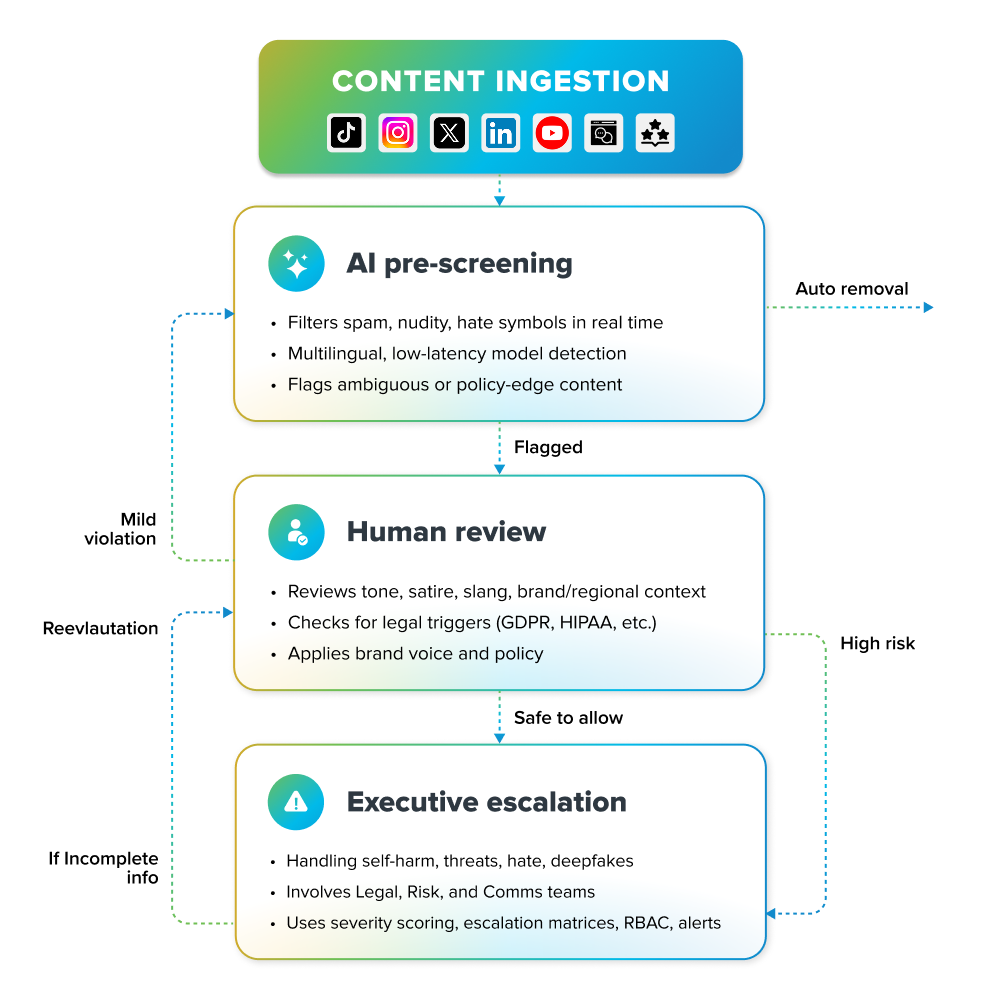

Artificial Intelligence and Its Real Limitations

Let's be clear about something: AI is absolutely part of any working moderation system at scale. You cannot moderate billions of pieces of content with humans alone. It's mathematically impossible.

But AI has real limitations that matter.

First, AI is good at pattern matching. It can learn to identify known slurs, known hate symbols, known extremist propaganda. It can do this at scale quickly. That's genuinely valuable.

Second, AI struggles with context. A word that's a slur in one context is a historical reference in another. A quote that's obviously hateful in English might be meaningless in Japanese. A meme that's a joke to one group is a threat to another. Context is where AI fails.

Third, AI struggles with ambiguity. Is someone genuinely sharing a dangerous idea or are they criticizing the idea? Is someone quoting something they disagree with or endorsing it? Humans understand intent. AI guesses.

For the specific problem this platform faced—slurs in usernames—AI should theoretically be effective. Usernames don't have much context. A username is a slur or it's not. But apparently the platform wasn't running usernames through basic slur-filtering systems. Which suggests either the AI wasn't trained on contemporary slur lists, or the platform hadn't prioritized implementing even basic filtering.
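As a rough illustration of what "basic slur filtering" for usernames could look like, here is a minimal Python sketch. It is not a description of the platform's actual system. The blocklist entries are harmless placeholders, and the substitution table and function names are assumptions chosen for the example.

```python
import re
import unicodedata

# Placeholder terms only. A real deployment would load a maintained,
# regularly updated list (hypothetical here).
BLOCKLIST = {"badword", "slurterm"}

# Common character swaps used to dodge naive filters (an assumed, partial set).
SUBSTITUTIONS = str.maketrans(
    {"0": "o", "1": "i", "3": "e", "4": "a", "5": "s", "7": "t", "@": "a", "$": "s"}
)


def normalize(username: str) -> str:
    """Lowercase, strip accents, undo simple swaps, and drop separators."""
    text = unicodedata.normalize("NFKD", username)
    text = "".join(ch for ch in text if not unicodedata.combining(ch))
    text = text.lower().translate(SUBSTITUTIONS)
    return re.sub(r"[^a-z]", "", text)


def is_blocked(username: str) -> bool:
    """True if any blocklisted term appears in the normalized username."""
    cleaned = normalize(username)
    return any(term in cleaned for term in BLOCKLIST)


print(is_blocked("B4d_W0rd_99"))  # obfuscated placeholder term
print(is_blocked("gardener"))     # ordinary username
```

Substring matching like this will also flag innocent names that happen to contain a blocked term, so a hit would more plausibly send the account to human review than trigger an automatic ban.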

The real problem isn't that AI can't help. It's that AI alone isn't enough, and building good moderation systems takes months or years of training, testing, and iteration. The platform didn't have months or years. They had weeks.

So they ended up with systems that catch some obvious stuff but miss the rest. Which is almost worse than having no systems at all, because it gives users (and the company) false confidence that the moderation is working. "We have an AI system" becomes a public relations statement, not a functioning safety mechanism.

Case Study: Bluesky's Slur Problem in 2023

We've actually seen this movie before. In July 2023, Bluesky—another rapidly growing alternative to a major social network—faced almost exactly this problem.

Users discovered that the platform had accounts with slurs in their usernames and profile text. The issue was visible, documented, and similar to what happened here: users reported it, the platform acknowledged it, and then the content remained live.

Bluesky's response eventually involved implementing better username filtering and empowering users to report accounts more effectively. But it took weeks. And in those weeks, people with slurs in their names were publicly visible on a platform that was marketing itself as the safe alternative.

The difference with Bluesky is that they eventually learned. They implemented actual filters. They hired moderation staff. They made hard decisions about trade-offs between open speech and safety. They chose safety.

The platform in this case was still in the middle of that learning process. And the learning process was happening in public. Journalists were documenting it. Civil rights organizations were publishing reports. And the platform's response was still framed as "we're aware and working on it" rather than "we failed and here's what we're doing differently."

That matters. Accountability matters. Admitting failure and actually fixing things matters more than claiming there's no failure.

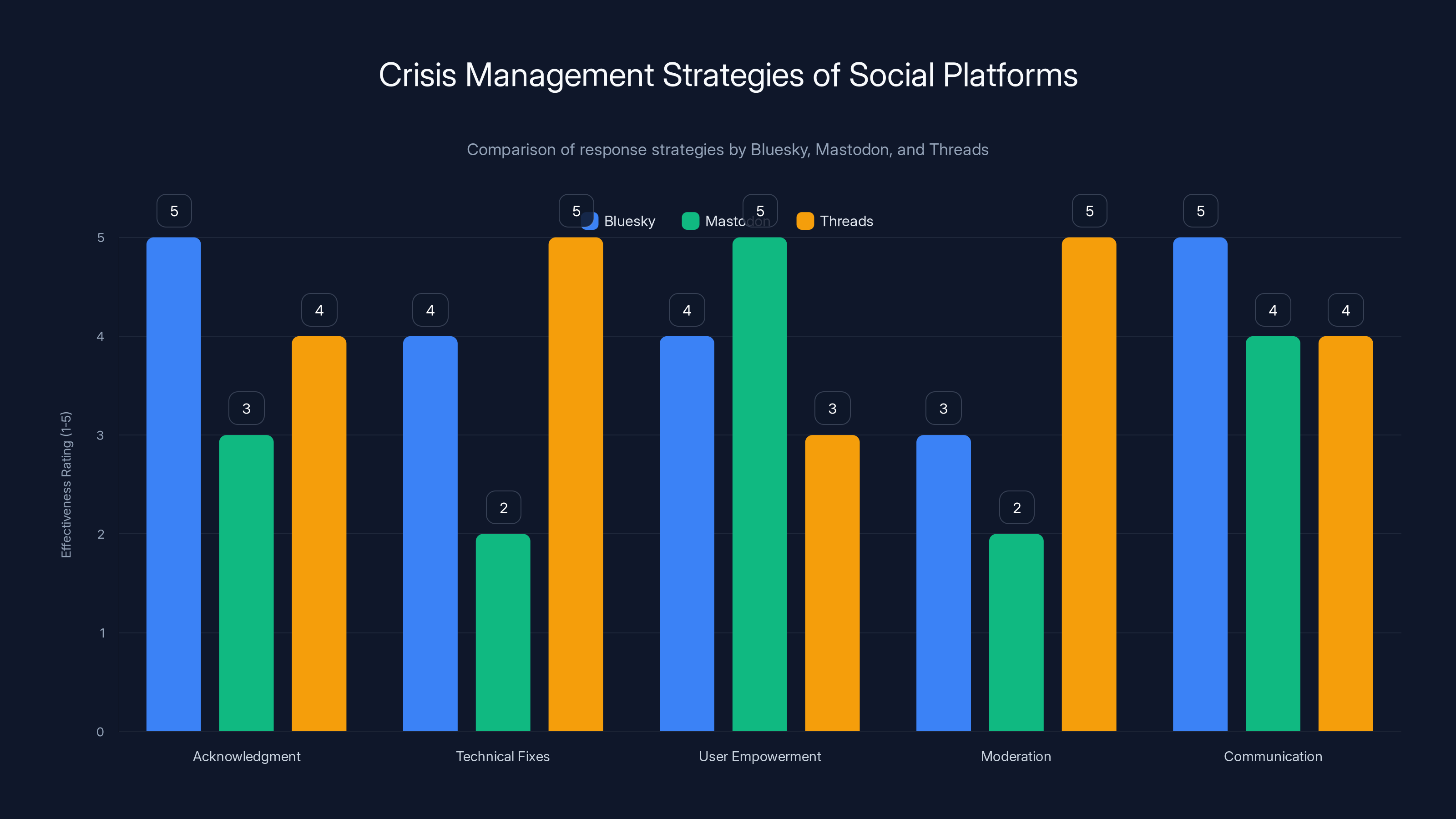

Bluesky, Mastodon, and Threads each used different crisis management strategies. Bluesky excelled in acknowledgment and communication, Mastodon in user empowerment, and Threads in technical fixes and moderation. Estimated data.

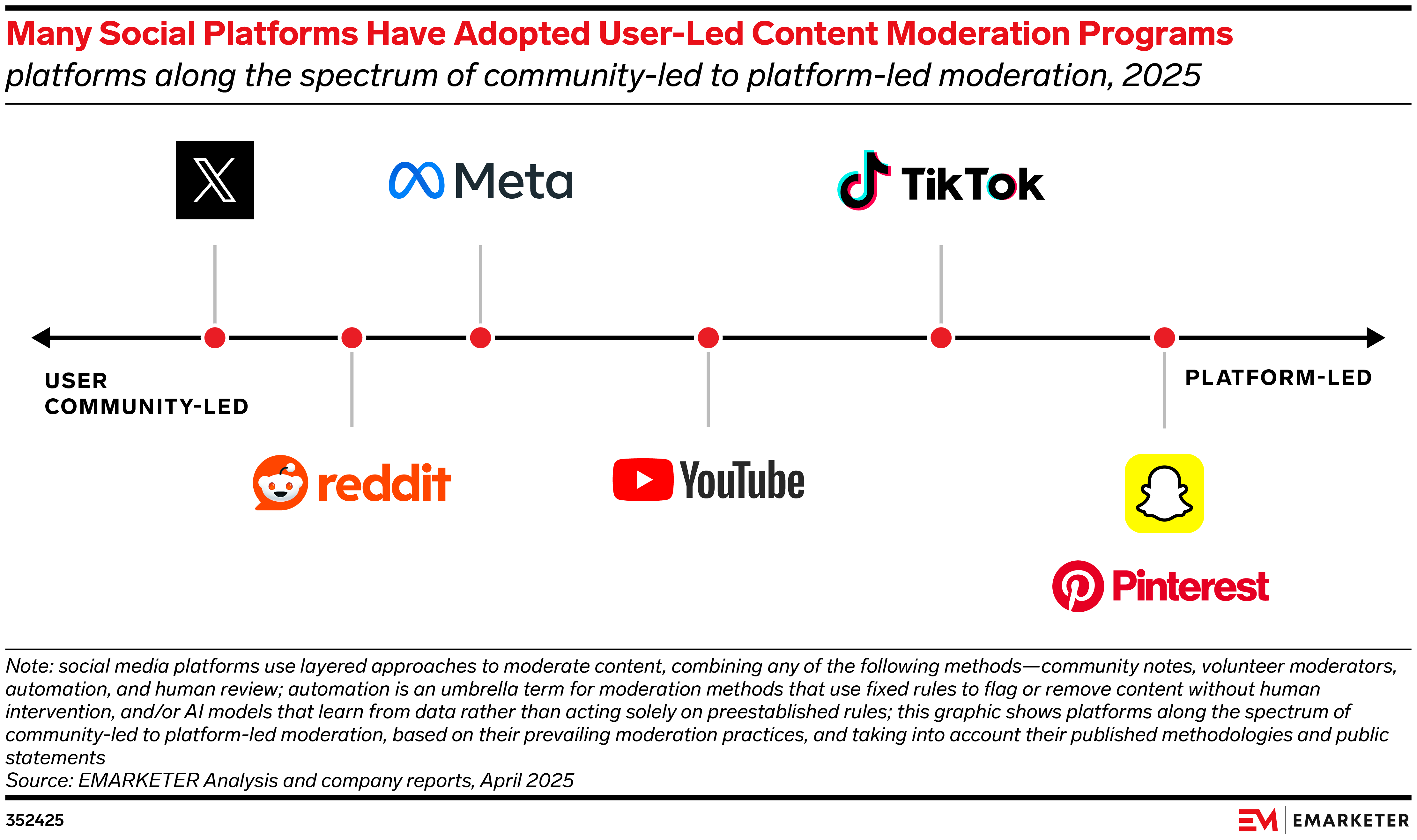

The Policy Choice Problem: Growth Versus Safety

Here's the core issue that nobody wants to address directly: you cannot maximize growth while minimizing harm. These are trade-offs.

If your primary goal is growth, you accept that moderation will be loose. You let more content through, knowing some of it will be harmful. The advantage is that you grow fast. Users flock to a platform with minimal content restrictions. Bad actors and good actors both show up, but you grow.

If your primary goal is safety, you implement strict moderation. You remove content aggressively. You require verification. You moderate comments and hashtags and usernames. You grow slowly, but the environment is cleaner. Users feel safer. Bad actors feel less welcome.

Every platform CEO claims they're optimizing for both. None of them actually are, and the data shows it.

Reddit explicitly chose growth over safety. They wanted user-generated content and community moderation. They grew massively. They also became a haven for some of the worst content online.

Discord initially chose growth over safety in their approach to server moderation. They grew massively. They also had to deal with constant scandals about servers dedicated to hate speech and illegal content.

Twitter's choice changed over time. In the early days, Dorsey prioritized speech. Later leadership prioritized safety. Different eras, different trade-offs, different communities.

Facebook's choice was complexity: they tried to optimize for both. The result is a platform with massive AI systems, massive human moderation teams, and still constant failures. They spend billions on safety and still get it wrong regularly. That's what "trying to have both" actually looks like: massive resource consumption, constant failures, diminishing returns.

This platform claimed to offer everyone equal voice in a healthy and respectful environment. Those two goals are in direct conflict above a certain scale. And at 2.5 million users, they'd clearly passed that scale.

The Role of Bad Actors and Coordinated Migration

When a new platform grows suddenly, it attracts two groups: people looking for an alternative to whatever they were using, and bad actors looking for a space where moderation is weak.

The timing here is important. The platform launched and grew during a period when another major social network faced regulatory and legal pressure. Users who felt that platform was censorious migrated. That included legitimate users and bad actors. The bad actors saw a platform with millions of users and negligible moderation. That's an invitation.

They didn't accidentally end up with slurs in usernames. Bad actors specifically created accounts with slurs in the names because they knew the platform wouldn't remove them fast. They were testing boundaries. And the boundaries failed the test immediately.

This is documented behavior. When 4chan users migrate to a new platform, they explicitly coordinate to test moderation response time. When extremist communities get kicked off one platform, they explicitly migrate to newer platforms with weaker moderation. This isn't accidental. It's intentional.

The platform didn't have any defense against coordinated bad-actor migration because they hadn't anticipated the need for such a defense. You don't anticipate that until you've been burned by it. And this platform got burned immediately.

Bad actors are not stupid. They're strategic. They move fast, they test boundaries, they exploit gaps. A platform with weak moderation looks like opportunity to them.

The platform could have anticipated this by looking at exactly what other platforms experienced. The information exists. It's public. Everyone who's studied moderation at scale knows that coordinated bad-actor migration is a predictable consequence of rapid growth on a new platform.

But apparently that learning didn't happen here.

Moderator Training and Onboarding at Speed

When you need to hire moderation staff rapidly, training becomes almost impossible.

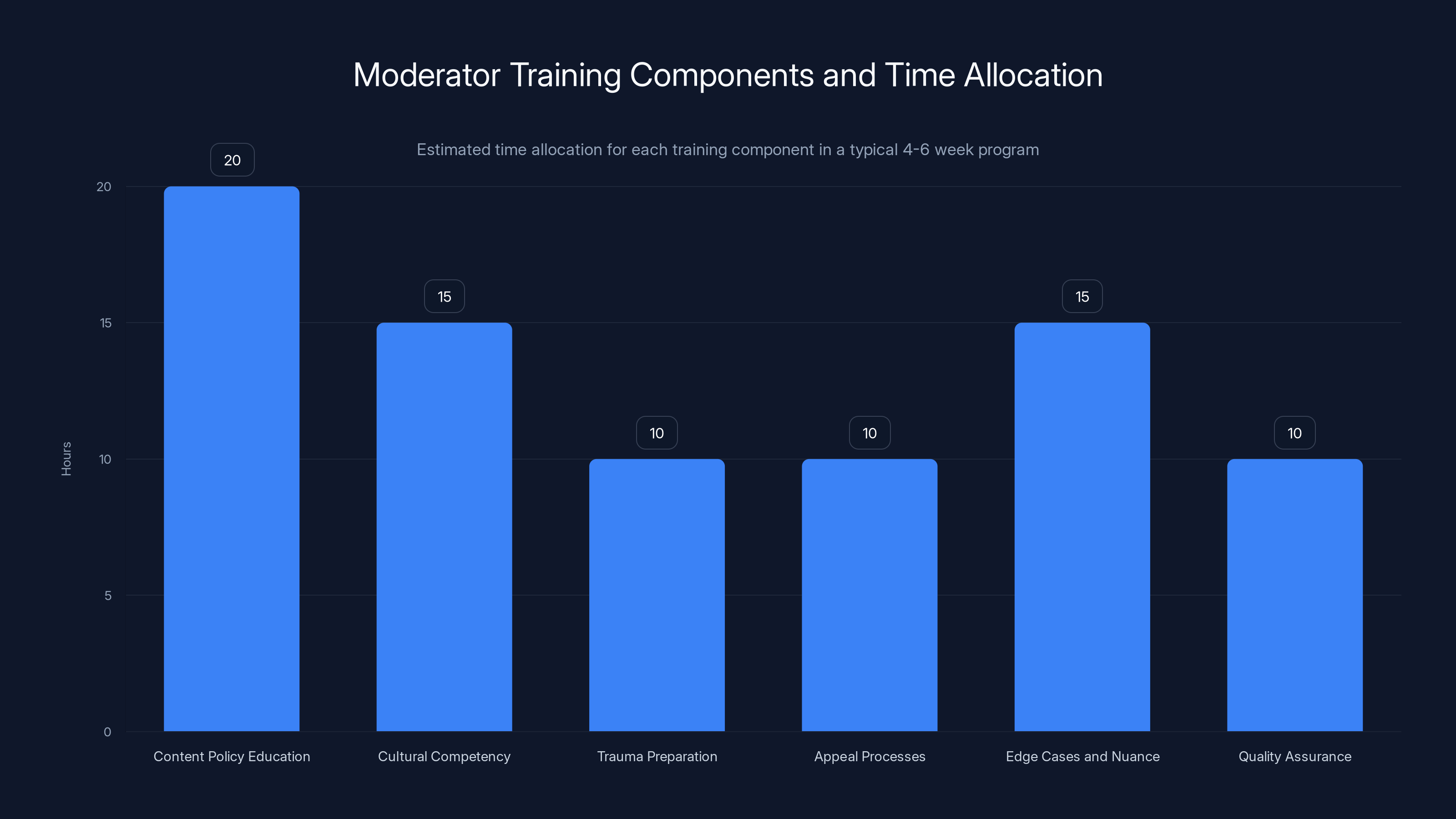

Proper moderation training includes:

- Content policy education: Hours spent learning what violates policy and what doesn't

- Cultural competency: Learning how different cultures interpret symbols, language, and references

- Trauma preparation: Training on how to handle the psychological impact of viewing harmful content

- Appeal processes: Understanding how decisions can be appealed and how to document reasoning

- Edge cases and nuance: Real examples of content that's almost-but-not-quite violations

- Quality assurance: Ongoing review of moderator decisions with feedback

All of this takes time. Usually 4-6 weeks minimum before a moderator is effective. And you need ongoing supervision and feedback.

When you're hiring 100 moderators at once to keep up with growth, you're not giving each one 4-6 weeks of training. You're giving them 1-2 weeks and hoping they figure out the rest on the job.

This leads to inconsistent enforcement. One moderator removes content that another moderator lets through. Users report inconsistent decisions. Moderators miss obvious violations because they haven't learned the patterns yet. And the quality of moderation degrades.

When you combine rapid hiring with inadequate training, you get a moderation team that's overwhelmed, undertrained, and rapidly burning out. That's exactly the situation here.

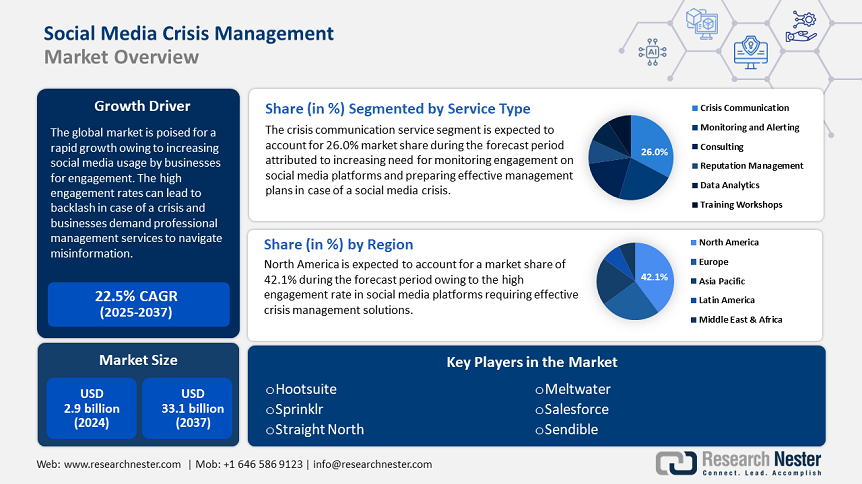

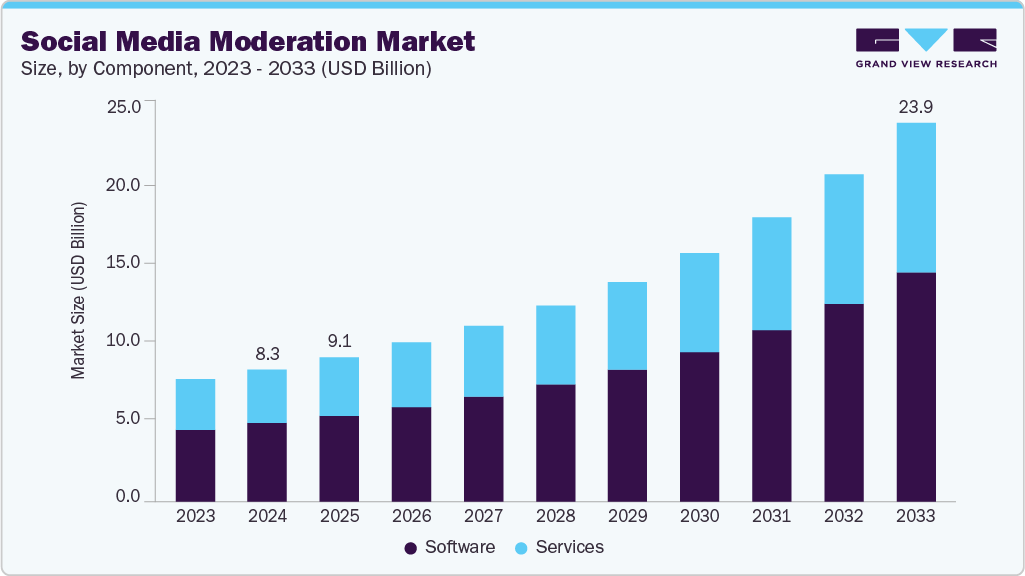

Moderation scales exponentially, AI effectiveness is limited, burnout is common, and clear policies are crucial. Estimated data.

The Data on Moderation Effectiveness

Let's look at what the data actually says about moderation effectiveness.

Meta (formerly Facebook) reportedly removes over 20 million pieces of child exploitation content per month. That's a staggering number. It's also meaningless without context. How many pieces did they miss? If they remove 20 million and miss 100,000, that's a different story than if they remove 20 million and miss 2 million.
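Here is that comparison as a quick Python sketch. The function name is illustrative, and the two "missed" figures are the hypothetical ones from the paragraph above, not reported data.

```python
def catch_rate(removed: int, missed: int) -> float:
    """Share of all violating items that were actually removed."""
    return removed / (removed + missed)


removed = 20_000_000  # monthly removals cited above

# Hypothetical miss counts from the text, to show how much the denominator matters.
for missed in (100_000, 2_000_000):
    print(f"missed {missed:,}: catch rate {catch_rate(removed, missed):.1%}")
```

The same 20 million removals work out to a catch rate of roughly 99.5% in the first case and roughly 90.9% in the second.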

The research suggests that Meta's systems catch 90%+ of known illegal content (child exploitation, etc.) because that's highly automated. But for content like hate speech, the numbers are murkier. Estimates range from 60-80% effectiveness depending on language, country, and content type.

That means platforms consistently miss 20-40% of hate speech. And that's the platforms with the most resources.

This platform likely had much lower effectiveness because they had much less mature systems. Early-stage moderation systems catch maybe 40-60% of obvious violations. Everything else gets through.

If we assume 50% catch rate on hate speech in usernames—which is probably generous—then for every slur-filled account removed, one slipped through. And that's only if the system was actively checking usernames, which apparently it wasn't.

The real data shows that moderation at scale requires accepting that you'll miss some percentage of violations. The question isn't whether you'll miss anything. The question is whether the miss rate is acceptable to your users and your values.

This platform hadn't publicly discussed their acceptable miss rate. Which suggests they hadn't thought about it. Which suggests they were just hoping the problem would go away.

The ADL Report and External Accountability

When the Anti-Defamation League publishes a report documenting your platform's failures, you've failed in a way that matters.

The ADL has been documenting hate speech online since the 1990s. They have credibility. When they say a platform has a problem, media outlets listen. Civil rights organizations listen. Users listen.

The report documented antisemitic content, extremist content, and designated terrorist organization content. That's not just "we have some bad stuff." That's "your platform is actively hosting extremist propaganda."

This creates accountability pressure that can't be ignored. You can't just say "we're working on it." You have to actually do something measurable.

For this platform, the ADL report meant that users looking for an alternative to extremism had evidence that this platform wasn't that alternative. It meant advertisers had a reason to avoid the platform. It meant that major media outlets would continue covering the story.

The silver lining is that external accountability actually works. When organizations like the ADL, the Tracking Inequality Tech Lab, or journalists document failures, platforms often respond because they have to. Public pressure is real.

But the platform would have been far better off catching these problems internally and fixing them before external organizations had to document them. That would have cost them time and resources. Instead, they lost time, resources, credibility, and user trust.

Comparison: How Other Platforms Handled Similar Crises

Bluesky, as mentioned, faced this issue in 2023. Their response involved:

- Acknowledging the problem directly

- Implementing technical fixes (username filtering)

- Empowering user reporting and moderation

- Hiring moderation staff

- Transparent communication about the process

Did Bluesky lose trust? With some users, yes. But they recovered because they showed they took the problem seriously and actually fixed it.

Mastodon, a federated platform, took a different approach. Instead of centralized moderation, they distributed it to individual servers. This meant moderation was community-controlled. The downside was inconsistency. The upside was that responsibility fell on each community, and bad communities got defederated by others.

Threads, Meta's alternative to Twitter, took a heavy-handed approach. They implemented strict moderation from day one, even when it meant over-removing content. They chose safety and slow growth over rapid growth and mess. That decision meant Threads grew slower, but it also meant they didn't face the kind of crisis this platform did.

There's no perfect solution. But the data is clear: platforms that acknowledge failures, implement fixes quickly, and communicate transparently recover trust faster than platforms that claim everything is fine while evidence says otherwise.

As the user base grows from 0.5 to 2.5 million, content volume increases 5x, while moderation complexity grows exponentially. Estimated data highlights the disparity between user growth and moderation capacity.

The Technical Infrastructure Challenge

Here's something that's easy to underestimate: moderation infrastructure is complex as hell.

You need systems to:

- Ingest content at massive scale (billions of pieces per day)

- Flag suspicious content using AI and rules-based systems

- Route content to human moderators with appropriate context

- Store decisions and maintain consistency

- Handle appeals and allow users to challenge decisions

- Generate reports for transparency and auditing

- Integrate with external services for verification and reference

- Scale globally across different languages, regions, and cultural contexts

Each of those is a substantial engineering challenge. Building all of them in parallel while growing 5x is almost impossible without a massive engineering team.
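To give a feel for just three of those pieces (flagging, routing, and storing decisions), here is a deliberately tiny Python sketch. Every name and threshold in it is hypothetical. It assumes some classifier has already assigned each item a violation score between 0 and 1.

```python
from collections import deque
from dataclasses import dataclass, field


@dataclass
class Item:
    item_id: str
    text: str
    score: float  # assumed classifier output: estimated probability of a violation


@dataclass
class Router:
    remove_at: float = 0.95  # hypothetical threshold for automatic removal
    review_at: float = 0.50  # hypothetical threshold for human review
    review_queue: deque = field(default_factory=deque)
    decisions: dict = field(default_factory=dict)  # audit log: item_id -> action

    def route(self, item: Item) -> str:
        """Pick an action from the score, queue borderline items, log the decision."""
        if item.score >= self.remove_at:
            action = "removed"
        elif item.score >= self.review_at:
            action = "needs_review"
            self.review_queue.append(item)
        else:
            action = "allowed"
        self.decisions[item.item_id] = action
        return action


router = Router()
for item in (Item("a1", "...", 0.99), Item("b2", "...", 0.70), Item("c3", "...", 0.05)):
    print(item.item_id, router.route(item))
```

Even this toy version hints at the real work: appeals, multilingual context, and consistency checks all have to plug into the same decision log.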

This platform apparently didn't have that team. Or didn't prioritize building this infrastructure before they needed it.

The founder said they're "upgrading our technology infrastructure," which is a polite way of saying "we didn't prepare for this and now we're scrambling." That's understandable for a young company. But it's not acceptable to users who are being harmed by the lack of infrastructure.

The technical problem is solvable. You can hire engineers, build systems, implement AI, and create processes. It takes money and time, both of which this platform presumably has access to.

The question is whether they'll do it fast enough to recover trust.

Cultural Context and Global Moderation Challenges

Here's a complication that gets underestimated: moderation decisions change based on culture and context.

A word that's a racial slur in English might be a normal word in another language. A symbol that's hate speech in one country might be a historical symbol in another. Satire in one culture looks like endorsement in another.

When you're moderating globally, you need moderators from different cultural backgrounds who understand these nuances. You need them for every language. You need them for every country.

This platform apparently handles English-language moderation relatively well, or at least can process reports in English. But what about French? German? Spanish? Japanese? With each language your platform supports, moderation complexity increases exponentially.

This is why platforms like Facebook employ thousands of moderators across dozens of countries. It's not because they're inefficient. It's because you literally need people from each region who understand the cultural context.

A young platform probably doesn't have this capability. They probably have English-language moderation and promise to expand later. Which means non-English hate speech and harmful content probably faces almost zero moderation.

This creates a two-tier system where English-language users get some protection and speakers of other languages get almost none. That's unfair. It's also unsustainable if you want to be a global platform.

The Economics of Moderation: True Costs

Let's talk money. Moderation is expensive.

Meta spends an estimated $5 billion per year on safety and security. That's not a rounding error. That's a massive line item. And they still face constant criticism about moderation failures.

For a young platform, $5 billion is probably their entire valuation. So they can't spend that much. But they also can't build a safe platform for free.

Human moderation costs roughly

If your platform generates 25 million pieces of content per day, you need roughly 83,000 to 250,000 moderator-reviews per day (about 0.3% to 1% of that content). At one review per moderator-hour, that's 83,000 to 250,000 moderator-hours per day. At 6 hours per moderator per day, that's 13,800 to 41,700 moderators.

That's not sustainable for most companies. So you need AI to reduce the human load. If AI catches 50% of violations, you need roughly 7,000 to 20,000 human moderators. If AI catches 80%, you need 2,800 to 8,000 moderators.

Even with AI assistance, a platform this size needs thousands of moderators.
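The headcount math above can be sketched in a few lines of Python. The function and parameter names are illustrative, and treating "AI catches X%" as "X% fewer human reviews" is a simplifying assumption, as are the one-review-per-hour and six-hour-shift figures carried over from the text.

```python
import math


def moderators_needed(
    reviews_per_day: float,
    reviews_per_hour: float = 1.0,  # assumption from the text
    hours_per_shift: float = 6.0,   # assumption from the text
    ai_share: float = 0.0,          # fraction of reviews handled by AI (assumed model)
) -> int:
    """Human moderators needed to cover the reviews left over after AI."""
    human_reviews = reviews_per_day * (1.0 - ai_share)
    return math.ceil(human_reviews / (reviews_per_hour * hours_per_shift))


for ai_share in (0.0, 0.5, 0.8):
    low = moderators_needed(83_000, ai_share=ai_share)
    high = moderators_needed(250_000, ai_share=ai_share)
    print(f"AI share {ai_share:.0%}: {low:,} to {high:,} moderators")
```

Run as written, this lands close to the rounded figures above. Changing either assumption moves the totals a lot, which is the real takeaway: the staffing bill is extremely sensitive to how fast a review can be done.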

For a young platform, this is probably outside their budget. Which means they're trying to do it on the cheap. And cheap moderation is bad moderation.

The founder's response about "rapidly expanding" the team suggests they're aware of this need now. But the financial commitment is real. Building a moderation team takes months. Funding it takes ongoing budget. And there's no path to profitability that includes spending millions on moderation.

This is a structural problem with platform economics that nobody's solved. You need moderation to have a safe community. Safe communities attract users. But moderation is expensive. And user growth doesn't generate revenue fast enough to cover moderation costs.

Most platforms have accepted that moderation is a cost of doing business. They build it in. But young platforms often think they can delay this cost and build it later. By the time they realize they can't, they've already harmed users.

Estimated data shows that content policy education and edge cases require the most training time, highlighting the complexity and importance of these areas in effective moderation.

Transparency Reports and What They Actually Show

Many platforms publish transparency reports showing how much content they remove. These reports are simultaneously useful and misleading.

Useful: You can see trends. If a platform is removing more hate speech, that's something. If they're removing less, that's a warning sign.

Misleading: The numbers don't tell you what they're missing. If a platform reports removing 10,000 pieces of hate speech per month, how many did they miss? 100? 10,000? 100,000?

Without the denominator, the numerator is meaningless.

This platform apparently hadn't published any transparency reports. Which means there's no public baseline for what they're removing or missing. That opacity makes it hard for users, researchers, or civil rights organizations to assess the real problem.

Transparency is a bare minimum for being a responsible platform. It's not a guarantee of quality. But opacity guarantees that nobody has a reason to trust you.

For this platform, publishing a transparency report would actually help them. It would show they're catching content. It would provide accountability. It would let researchers track their improvement over time.

The fact that they haven't published one suggests they either don't have the data infrastructure to track it (bad sign) or they don't want to reveal how much they're missing (also a bad sign).

User Safety and Community Moderation

One approach some platforms have adopted is delegating moderation to communities themselves. Users can create content guidelines. Users can vote on what violates them. Users can appeal decisions.

This works for community-focused platforms like Discord or Reddit where people join specific communities with specific norms. It works less well for platform-wide spaces because platform-wide spaces have users with radically different values.

But user-empowered moderation tools do help. When users can report content easily, block other users, mute hashtags, and see what decisions were made about reported content, they feel more agency.

This platform apparently has reporting functionality (since a journalist reported content and got a response). But it's not clear if they have user-facing moderation tools like blocking, muting, or community guidelines.

User-focused moderation tools are relatively cheap to build and incredibly valuable. They don't solve the problem, but they make users feel heard.

The Future: What Happens Next

For this platform, there are a few possible futures:

Scenario 1: Genuine Fix. The platform acknowledges the failure, invests seriously in moderation, rebuilds trust over 6-12 months. Growth slows but stabilizes. They become a credible alternative to existing platforms.

Scenario 2: Slow Decline. The platform makes token efforts at moderation. Users who care about safety leave. Bad actors stay. The platform becomes increasingly hostile. They either shut down or become a haven for extremist content.

Scenario 3: Acquisition. An existing platform (or a parent company) buys them for the user base and integrates their moderation systems. The acquiring company fixes the moderation using their existing infrastructure.

Scenario 4: Pivot. The platform decides "hey, maybe moderation is hard" and pivots to a different model: anonymous content, encrypted messaging, or something else that's harder to moderate but also smaller in scope.

The most likely scenario is 2 or 3. Genuine fixes take years and billions of dollars. Most young platforms don't stay independent long enough to do that.

Lessons for Future Platforms

If you're building a social platform, here's what this story teaches:

- Build moderation infrastructure before you need it. Not after.

- Hire experienced people for this role. Moderation is hard. Don't treat it as entry-level work.

- Be honest about trade-offs. Choose safety or speed, don't claim both.

- Plan for bad actors. They will come. Have a strategy.

- Invest in user tools. Reporting, blocking, muting—give users agency.

- Publish transparency reports. Show what you're doing, even if it's imperfect.

- Move fast on problems. Days matter. Weeks are too long.

- Treat moderators as professionals. Trauma support, training, reasonable hours.

- Get external review. Let researchers, civil rights organizations, and journalists evaluate you.

- Be willing to say no to growth. Slow growth with safety beats fast growth with chaos.

None of these are revolutionary. Every platform that's handled moderation well has learned these lessons. But they have to be learned. And this platform is learning them in public.

The Bigger Picture: Platform Responsibility

This story matters because it shows something important: platforms are responsible for the content they host.

Not responsible in a "governments should regulate" way necessarily. But responsible in a "you built this system, you have some control over it, and you chose to let harm happen" way.

This platform didn't accidentally end up with slurs in usernames. They built a system where slurs could be usernames. They didn't implement basic filtering. They didn't plan for rapid growth. They didn't prioritize moderation infrastructure.

Those were choices. And when those choices led to harm, the responsible thing to do is acknowledge it and fix it. Not claim you were "actively reviewing" while content remained live for days.
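To make "basic filtering" concrete, here is a minimal sketch of a signup-time username check. It is an illustration under stated assumptions, not a description of what this platform runs: the blocked terms are harmless placeholders, the substitution map is deliberately small, and the function names are hypothetical.

```python
import unicodedata

# Hypothetical placeholder terms. A real deployment would load a maintained,
# regularly reviewed list instead of hard-coding one.
BLOCKED_TERMS = {"badword", "hateterm"}

# A few common character swaps used to dodge naive filters (illustrative, not exhaustive).
SUBSTITUTIONS = str.maketrans(
    {"0": "o", "1": "i", "3": "e", "4": "a", "5": "s", "7": "t", "@": "a", "$": "s"}
)


def normalize(username: str) -> str:
    """Strip accents, fold case, undo simple substitutions, drop separators."""
    decomposed = unicodedata.normalize("NFKD", username)
    without_accents = "".join(ch for ch in decomposed if not unicodedata.combining(ch))
    folded = without_accents.casefold().translate(SUBSTITUTIONS)
    return "".join(ch for ch in folded if ch.isalnum())


def is_username_allowed(username: str) -> bool:
    """Reject a username if any blocked term appears in its normalized form."""
    normalized = normalize(username)
    return not any(term in normalized for term in BLOCKED_TERMS)
```

Substring matching like this will also reject innocent names that happen to contain a blocked term, so a check of this kind works best as a first gate with a human appeal path behind it rather than as the whole policy.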

The broader question this raises is: what responsibility do platform creators have for the systems they build? If a platform enables hate speech, does that matter? If a platform fails to protect vulnerable users, who pays the cost?

These questions don't have easy answers. But it is actually good that this platform is struggling with them in public. It keeps the conversation alive.

FAQ

What is content moderation at scale?

Content moderation at scale is the process of reviewing and removing harmful user-generated content on large social platforms. At scale, this involves AI systems that flag potentially problematic content, human moderators who review flagged items, and appeal processes. The challenge grows faster than the user count because it is not just the quantity of content that increases: the difficulty of understanding context, intent, and cultural references increases as well.

Why does moderation fail on fast-growing platforms?

Moderation fails on fast-growing platforms because the systems, policies, and trained staff don't scale proportionally with user growth. When a platform goes from 500,000 to 2.5 million users, content volume increases 5x, but moderation capacity doesn't grow at the same rate. New moderators require extensive training and supervision, bad actors specifically migrate to platforms with weak moderation, and infrastructure takes time to build properly.
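The gap between content growth and review capacity is easy to see with back-of-the-envelope arithmetic. The sketch below uses the user counts from the answer above; every other input (posts per user, flag rate, team size, reviews per moderator) is a hypothetical number chosen only to make the calculation visible.

```python
def daily_backlog_change(
    users: int,
    posts_per_user_per_day: int,
    flagged_per_1000_posts: int,
    moderators: int,
    reviews_per_moderator_per_day: int,
) -> int:
    """Return flagged items arriving per day minus items the team can review.

    A positive result means the review queue grows by that many items each day.
    """
    posts = users * posts_per_user_per_day
    flagged = posts * flagged_per_1000_posts // 1000
    capacity = moderators * reviews_per_moderator_per_day
    return flagged - capacity


# Hypothetical inputs: 8 posts per user per day, 10 flags per 1,000 posts,
# 500 reviews per moderator per day.
before = daily_backlog_change(500_000, 8, 10, moderators=80, reviews_per_moderator_per_day=500)
after = daily_backlog_change(2_500_000, 8, 10, moderators=120, reviews_per_moderator_per_day=500)
print(before, after)
```

Under these assumptions the team keeps pace at 500,000 users, but when users grow 5x and the team grows only 1.5x, the queue gains 140,000 unreviewed items every day.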

What's the difference between AI moderation and human moderation?

AI moderation can quickly identify known slurs, banned symbols, and duplicate spam at massive scale. However, AI struggles with context—understanding whether something is a joke, satire, historical reference, or genuine hate speech. Effective moderation combines AI systems (which flag suspicious content) with human judgment (which makes the final decision). Studies show that AI-only moderation catches 60-70% of harmful content, while AI plus humans can reach 85-90%, but never 100%.
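The division of labor described above, where the model flags and a person decides, can be sketched as a priority queue. This is a minimal illustration: the threshold value, the class names, and the idea of a single classifier score between 0 and 1 are all assumptions, not details from any particular platform.

```python
import heapq
from dataclasses import dataclass, field
from typing import List, Optional

# Hypothetical cutoff: content scoring below this is never queued.
FLAG_THRESHOLD = 0.5


@dataclass(order=True)
class QueuedItem:
    priority: float                      # negated score, so the highest score pops first
    content_id: str = field(compare=False)


class ReviewQueue:
    """Holds AI-flagged content until a human moderator makes the final call."""

    def __init__(self) -> None:
        self._heap: List[QueuedItem] = []

    def submit(self, content_id: str, score: float) -> bool:
        """Queue content for human review if the classifier score is high enough."""
        if score < FLAG_THRESHOLD:
            return False
        heapq.heappush(self._heap, QueuedItem(-score, content_id))
        return True

    def next_for_review(self) -> Optional[str]:
        """Return the most suspicious queued item, or None if the queue is empty."""
        if not self._heap:
            return None
        return heapq.heappop(self._heap).content_id
```

The model never removes anything on its own in this sketch; it only decides what a moderator sees and in what order, which keeps contextual judgment with the human.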

How much does platform moderation cost?

Moderation costs vary widely but are generally substantial. Human moderators cost

What happens to content moderators who review harmful content all day?

Content moderators who review harmful content daily experience high rates of trauma, PTSD, depression, and anxiety, at levels that research has compared to those of soldiers in combat zones. Average moderator tenure is 6-18 months before burnout or resignation. Proper support requires trauma-informed care, mental health resources, reasonable hours (typically 6 hours maximum per day), and clear pathways for career advancement out of content review roles.

What's the most effective way to prevent harmful content on social platforms?

The most effective approaches combine multiple layers: clear policies that are actually enforced, user-facing tools (blocking, reporting, muting), AI systems that flag suspicious content, trained human moderators who make final decisions, external oversight and research, and transparency about what's working and what's not. Platforms that succeed prioritize moderation infrastructure from day one rather than trying to build it after problems appear.

Can a platform have both open speech and safety?

No. Every major platform has learned that open speech and maximum safety are directly opposed at scale. All platforms make trade-offs. Early Twitter under Jack Dorsey prioritized speech over safety. Facebook tries to optimize for both and spends billions doing it, but still fails regularly. Honest platforms clearly state which they prioritize. Platforms that claim to optimize for both without stating the trade-offs are usually failing at both.

What should users look for when evaluating a platform's moderation?

Users should evaluate platforms based on: published transparency reports showing what content is being removed, clear policy statements with specific examples, user-facing moderation tools like reporting and blocking, speed of response to reported violations (days, not weeks), and external reviews from civil rights organizations or researchers. Be skeptical of platforms claiming perfect moderation or zero content errors. Perfect doesn't exist. Accountability and responsiveness matter more than perfection.

Conclusion: The Cost of Ignoring the Hard Parts

This story is important not because it's unique, but because it's common.

Every social platform that's grown fast has faced this problem. Every one. The difference is whether they've been honest about it and fixed it, or claimed it wasn't happening while evidence accumulated otherwise.

This platform chose poorly. They got a report. They said they were actively reviewing. They didn't review. Users had to go to journalists and civil rights organizations to get attention.

That's not how you build trust.

But here's the thing that matters: it's fixable. The problems aren't unsolvable. Moderation at scale is hard, and nobody gets it perfect, but it's not a mystery. Other platforms have done it. The solutions are known. It takes money, time, competent people, and actual commitment.

The question is whether this platform has those things.

Based on the response so far, there's not a lot of confidence. Saying you're "rapidly expanding" your team is not the same as having a team that's expanded. Saying you're "upgrading infrastructure" is not the same as having upgraded infrastructure. And days of inaction while documented hate speech remains live is not the same as actively reviewing and removing.

Words are cheap. Action is expensive. This platform will only rebuild trust if they choose to spend the resources and time to actually fix the problem.

The good news: it's possible. Bluesky did it. Other platforms have done it. If this platform takes it seriously, they can fix this.

The bad news: right now, there's no evidence they're taking it seriously. They're treating it as a PR problem that will go away if they say the right things. It won't. The problem will persist and grow until they actually address it.

For users looking for an alternative to existing platforms: platforms that acknowledge failures and fix them quickly are worth considering. Platforms that deny problems while evidence accumulates are worth leaving. That's the lesson here.

For people working in moderation, policy, or platform safety: this story is a reminder that the infrastructure you build (or don't build) determines what content flourishes. Build with intention. Plan for growth. Invest in people. And be honest about what you can and can't protect.

For anyone building a new platform: start with moderation, not as an afterthought. It's not a feature you add later. It's a foundation you build first. Every platform that's tried to do it backward has regretted it.

The hard work of moderation—the real, expensive, thoughtful work—is what separates platforms that are genuinely safe from platforms that just claim to be. This story is evidence of what happens when you try to take a shortcut on that hard work.

Don't take that shortcut.

Key Takeaways

- Content moderation load grows faster than the user base: doubling users can require 4-5x the moderation capacity once volume and complexity are both counted

- Every platform makes trade-offs between growth and safety; platforms claiming both are either lying or failing

- AI alone catches roughly 60-70% of harmful content and struggles with context; human moderators are irreplaceable for edge cases

- Moderators experience PTSD-like trauma; average tenure is 6-18 months before burnout forces turnover

- Platforms that acknowledge failures and fix them quickly (like Bluesky) recover trust; platforms that deny problems (like this one) lose credibility until they change course
