The AI Monetization Crisis: When Trust Meets Revenue

Last year, an unnamed Perplexity executive said something that cuts to the heart of AI's biggest problem right now: "A user would just start doubting everything."

They were talking about ads. Specifically, why Perplexity decided to completely walk away from them.

This isn't some small pivot in a startup's strategy. This is the entire AI industry facing a fundamental question about how it's going to make money, and the answers people are giving couldn't be more different. Some companies believe ads destroy trust. Others think subscriptions alone won't cut it. And nobody's actually sure who's right.

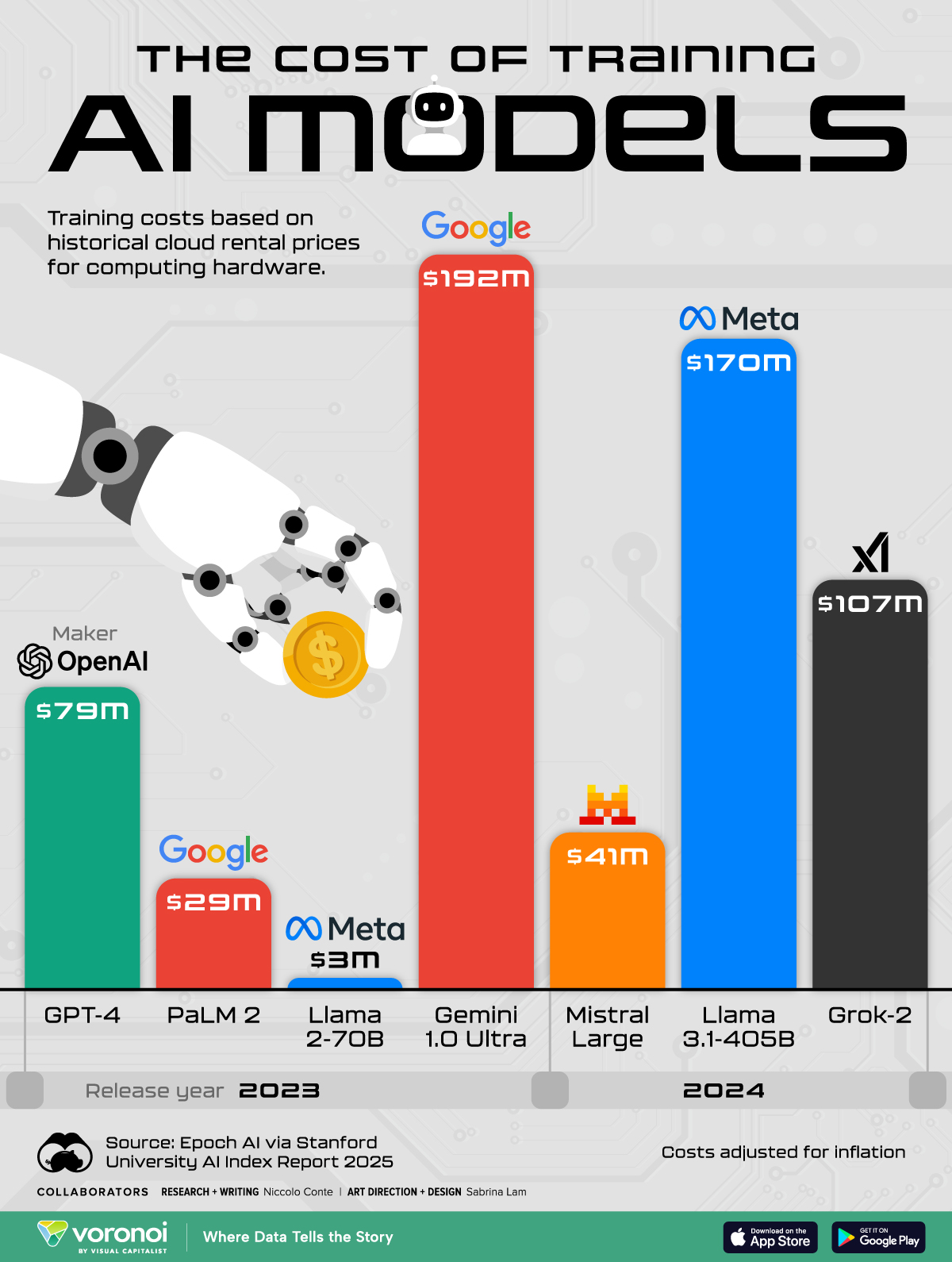

The irony is brutal: AI companies need massive amounts of money to build AI systems. Training a modern large language model costs tens of millions of dollars. Running inference at scale costs millions more every single day. But the moment you try to monetize that through ads, your core product gets worse. Users stop trusting what they're reading. The entire value proposition collapses.

Perplexity experienced this firsthand. In 2024, they tested ads on their platform. Users hated it. Not in an "I wish ads were less prominent" way, but in a fundamental "I no longer trust this service" way. So last year, the company quietly began phasing ads out. No announcement. No press release. They just stopped.

Meanwhile, OpenAI is doing the exact opposite. Last week, they started testing ads in ChatGPT. Google's doing it with their AI products. Meta's eyeing it. The gap between "we will never show ads" and "ads are how we survive" is widening by the week.

This isn't about personal preference anymore. This is about the economics of AI actually working or completely breaking. And right now, we're watching the industry decide its entire future in real time.

The Economics Don't Add Up

Let me be direct: training and running AI at scale is absurdly expensive. As noted above, a single training run for a competitive large language model costs tens of millions of dollars.

The inference side—actually running these models for users—also costs a ton. Every time you ask ChatGPT a question, OpenAI's servers process tokens. That costs money. The bigger the model, the more tokens it needs to process to answer you properly, and the more the infrastructure bill climbs.

Subscription revenue helps, but it doesn't close the gap.

Ads, theoretically, fix this. Display a relevant ad alongside search results and you generate revenue from users who aren't paying. Google makes $230 billion a year this way. The ad model is proven, predictable, and scales with traffic: more users means more ad impressions, and revenue grows with them.

But here's what Perplexity realized: that math works for Google because Google's business model doesn't rely on users believing every single result they see. If you click an ad and it's misleading, you just go back and search again. The consequences are limited.

With AI, the stakes are different. If an AI search engine shows you an ad-influenced answer presented as fact, and you make a decision based on it, the liability is massive. A lawyer using Perplexity for research. A doctor using it for diagnosis support. A finance professional using it to understand regulations. If they get an answer shaped by advertiser incentives and don't realize it, people could get hurt.

The executive wasn't exaggerating. Users would doubt everything.

Why Perplexity Changed Course

Perplexity's pivot away from ads happened quietly, but it represents something important: the company's founders realized early that they'd chosen the wrong path.

In 2024, they tested ads on their platform. The product was already working—it was faster than Google, the answers were better cited, and users actually preferred it. Then they added ads. And the user experience got worse in a way that went beyond "ads are annoying."

Users started questioning whether search results were biased. Whether Perplexity was showing certain sources more because they paid for placement. Whether the company's promise of factual, well-sourced answers actually meant anything anymore.

It's the ultimate product poison. You've built trust over time, and ads destroy it in a single quarter.

Perplexity's executives realized this and made a choice: compete on the subscription model instead. Focus on premium features, business tiers for professionals, API access for enterprises. Build a product so good that users willingly pay for it. It's a fundamentally different strategy than "get users for free, monetize with ads."

This requires different assumptions about your market. You're betting that there's a large enough segment of power users—lawyers, doctors, finance professionals, researchers, engineers—who value accuracy enough to pay for it. That's a more limited market than "everyone," but it's also a market where willingness to pay is much higher.

The company's unnamed executive said it clearly: "We are in the accuracy business, and the business is giving the truth, the right answers." That's a subscription strategy, not an ad strategy. You can't sell both simultaneously.

Another executive noted that they weren't ruling out ads forever, just for now. But the tone was clear: ads weren't strategic anymore. They were a distraction from what Perplexity actually wanted to build.

The Anthropic Counterargument

Perplexity isn't alone in this thinking. Anthropic, one of the few AI companies with genuine credibility in the safety space, has gone further: they're committing to never show ads in Claude, period.

This isn't a temporary decision. It's a core part of their brand promise. When you use Claude, you don't need to worry about whether the company has incentives to bias the output. The business model is subscriptions or API access. That's it.

Anthropic put this philosophy front and center at the Super Bowl, with attack ads directly targeting ChatGPT. The message was simple: we're ad-free, they're not, and that matters. OpenAI's CEO Sam Altman called the campaign "dishonest," which is probably the most interesting part of this whole story. He felt attacked, and he was.

What makes Anthropic's position interesting is that they're betting their entire company on it. They've raised billions of dollars to build AI systems that will never be monetized through ads. That's either brilliant or delusional, and you won't know which until the finances play out.

But here's the thing: if Anthropic's model works, if they can build a sustainable, profitable AI company without ads, then every other AI company will have to follow. Because once users know it's possible to use AI without ads, going back to an ad-supported model becomes a voluntary downgrade.

Anthropic seems to believe they can win on quality and trust: that Claude will be so good, and so trusted, that people pay for it. The Super Bowl ad was them saying: we think we can beat OpenAI by being the company that refused to compromise on this one thing.

It's a bold move. It's also the kind of move that usually only works if you're actually right about the underlying product being better.

OpenAI's Calculated Bet

OpenAI is taking the opposite approach, and they're doing it with full transparency. Last week, they began testing ads in ChatGPT for free users.

Before you think this is some kind of corporate evil, understand the math from OpenAI's perspective. They're burning through billions in compute every year. Their subscriptions generate revenue, but it's not enough. They need more money to:

- Keep building bigger models (the next generation will cost even more)

- Keep running inference at the scale they've promised users

- Actually achieve profitability (they're still operating at a loss)

- Compete with Google, Microsoft, and other companies entering the space

From a pure business standpoint, ads make sense. They've got 200 million weekly active users. Even a small amount of ad revenue per user scales to billions of dollars. That's not corruption. That's math.
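To make the "that's math" point concrete, here is a minimal back-of-the-envelope sketch. The 200 million weekly active users figure comes from the paragraph above; the per-user revenue values are purely hypothetical placeholders, not reported numbers, and the function name is made up for illustration.

```python
def annual_ad_revenue(weekly_active_users: int, revenue_per_user_per_year: float) -> float:
    """Rough annual ad revenue: users multiplied by average yearly ad revenue per user."""
    return weekly_active_users * revenue_per_user_per_year


# From the article: roughly 200 million weekly active users.
WEEKLY_ACTIVE_USERS = 200_000_000

# Hypothetical per-user yearly ad revenue values (assumptions, not real data).
HYPOTHETICAL_REVENUE_PER_USER = [1.0, 5.0, 10.0, 25.0]

for per_user in HYPOTHETICAL_REVENUE_PER_USER:
    total = annual_ad_revenue(WEEKLY_ACTIVE_USERS, per_user)
    print(f"${per_user:.2f} per user per year -> ${total / 1e9:.1f}B per year")
```

Under these assumed inputs, even single-digit dollars per user per year lands in the billions, which is the scaling argument the paragraph is making. The real per-user figure is unknown here, so treat the output as an illustration of the shape of the math rather than a forecast.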

Sam Altman's response to Anthropic's Super Bowl ad was telling. He didn't defend ads as good for users. He defended them as necessary for the business. "Ads are how we can make this accessible while maintaining the quality users expect."

There's truth in that. The more revenue OpenAI generates, the more they can afford to run their infrastructure at optimal capacity. More revenue means better models, faster iteration, and the ability to keep services free or cheap for users who can't pay.

But there's also a corporate interest at play. OpenAI is trying to go public. Public companies have shareholders. Shareholders want growth. Ads generate growth. The math points in one direction.

The Trust Problem: Why Ads Might Actually Break AI

All of this comes down to a single fundamental problem: AI search and research tools require absolute trust in a way that traditional search engines don't.

Google can show ads because you know Google isn't the source. You're searching Google for sources. If you get an ad in the results, you know to be skeptical. You know it's paid placement. The entire interaction is predicated on "I'm searching for things, some of which are ads."

But AI is different. When ChatGPT gives you an answer, or when Perplexity synthesizes information, you're not searching. You're asking the AI to synthesize information for you. You're delegating judgment.

That's where ads become catastrophic. If there's any possibility that the AI's answer was influenced by whether companies paid to appear in training data, or whether advertisers have stakes in certain outcomes, then the answer becomes suspect.

This is even worse for professional use cases. A lawyer using an AI to understand case precedents. A doctor using it to understand treatment options. An accountant using it to understand tax regulations. In all of these cases, the liability and consequences of getting biased information are massive.

Perplexity's executives understood this viscerally. They said: "The challenge with ads is that a user would just start doubting everything." That's not marketing speak. That's a description of what actually happened when they tested ads.

Users could sense the conflict of interest, even if they couldn't articulate it. The product felt different when ads were involved. And once that trust is gone, it's almost impossible to get back.

The Business Models Battle

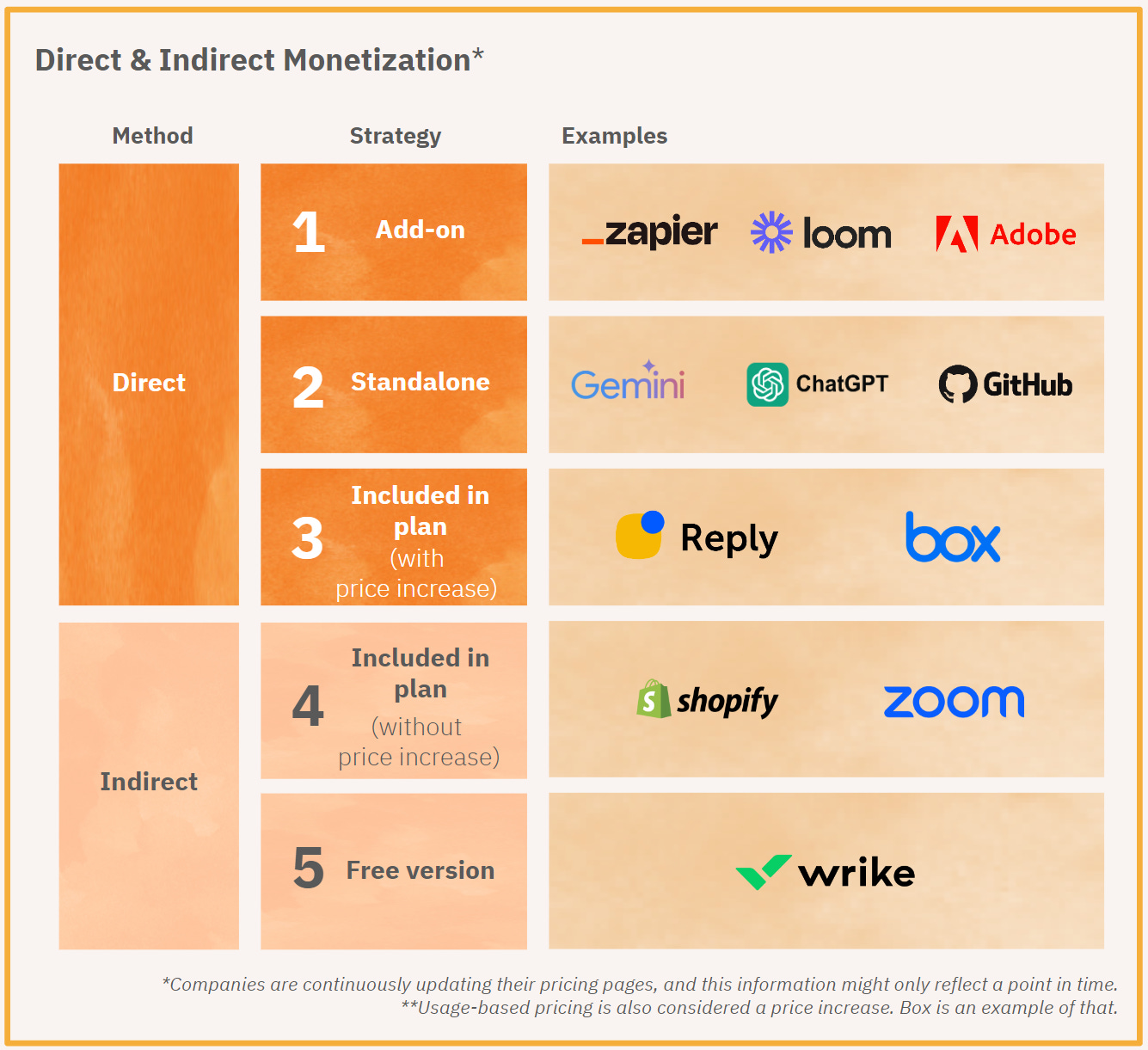

Right now, we're watching three different monetization strategies play out in real time, and they're incompatible.

Strategy One: Premium Subscriptions (Perplexity, Anthropic)

The idea: Build such a good product that users willingly pay for it. This works if you can:

- Build features that free users don't get

- Attract power users who value quality over cost

- Create a network effect where paid users get value from other paid users

The advantage: No ads means full control over user experience and trust. The disadvantage: Limits your market. Not everyone can afford subscriptions. You're competing for money, not attention.

Strategy Two: Free with Ads (OpenAI, Google)

The idea: Give the service away free, monetize through advertising. This works if you can:

- Show relevant ads without destroying product quality

- Keep users engaged enough that ads don't cause them to leave

- Scale the business infinitely (more users = more ad revenue)

The advantage: Unlimited market. Everyone can use the service. The disadvantage: Trust degradation. Users start questioning whether they're getting unbiased information. For professional use cases, it becomes unusable.

Strategy Three: Enterprise + Premium (Most AI Companies)

The idea: Run multiple revenue streams simultaneously. Free tier for consumers, premium for individuals, enterprise for businesses.

The advantage: Maximum revenue capture across different segments. The disadvantage: Requires different products for different tiers. Complex to manage. Can lead to resentment ("free users get ads, paying users don't").

Perplexity's move toward subscriptions and enterprise is essentially saying: "We're not going to try to monetize casual users. We're going to build something so good that professionals pay." It's a more focused strategy. It's also a riskier one.

OpenAI's move toward ads is saying: "We need revenue at scale right now. We're willing to risk some user trust to keep the lights on." It's a more pragmatic strategy in the short term. It's also potentially destructive long-term.

The market will decide which is right. And the answer will probably surprise everyone.

The Super Bowl Attack: When AI Companies Fight Publicly

Anthropic's Super Bowl campaign was unusual for several reasons. Most notably: AI companies don't usually go after each other in public advertising. The space is too nascent. Everyone's trying not to attract regulatory attention.

But Anthropic broke that rule. They spent millions on a Super Bowl spot to attack ChatGPT's move toward ads. The message was blunt: Anthropic uses AI for good, OpenAI is compromising its principles for money.

Sam Altman's response was interesting. He didn't defend ads as good. He defended them as necessary. "The ad model allows us to keep ChatGPT accessible while funding the compute required to actually deliver on our mission."

There's a real philosophical divide here that goes beyond business strategy. Anthropic fundamentally believes that AI companies shouldn't compromise on certain principles, even if it costs them money. OpenAI believes that pragmatism sometimes requires compromise.

These aren't abstract philosophical differences. They have real consequences:

- If Anthropic's right, then OpenAI is slowly destroying the thing that made ChatGPT special (it was trustworthy)

- If OpenAI's right, then Anthropic is being naive about the capital requirements of competitive AI development

The Super Bowl ad was Anthropic essentially saying: "We bet OpenAI is wrong. And we're willing to spend money to prove it."

It's the kind of move that only works if you've got the product to back it up. Anthropic does. Claude is legitimately competitive with ChatGPT on most tasks. So Anthropic can make that claim with some credibility.

What This Means for the Broader AI Ecosystem

This isn't just about individual company strategies. This is about what kind of AI industry we end up with.

If the ad-supported model wins (OpenAI's bet), then we get an AI ecosystem that looks like the web today: free services, monetized through ads and data. Powerful companies control the default tools. Users get free access but their attention is monetized.

If the subscription model wins (Perplexity and Anthropic's bet), then we get an AI ecosystem that's more fragmented. Power users and professionals pay for quality. Mass market users either use cheaper alternatives or get lower-quality service.

If hybrid models win (most likely), then we get both. Free tiers with ads. Premium tiers without ads. Enterprise solutions customized for specific industries. Everyone's happy except users trying to figure out which tier they're supposed to be on.

From a regulatory standpoint, this matters too. If AI companies are seen as trustworthy—not inserting ads or commercial interests into critical information—then regulation will probably be lighter. If they're seen as self-interested—doing whatever makes them money—then regulation gets heavier.

Anthropic's Super Bowl ad wasn't just about beating OpenAI in the market. It was about shaping the narrative around what AI companies "should" do. If they can win that narrative war, then regulation becomes easier for them and harder for OpenAI.

It's smart strategy. It's also potentially manipulative. Anthropic is essentially saying: "We chose the noble path." But they chose it because their capital situation allowed them to. They're not more principled than OpenAI. They're just differently constrained.

The Professional User Wildcard

One crucial factor in Perplexity's strategy is something that's been almost entirely overlooked: professional users.

Perplexity's executives specifically mentioned focusing on "finance professionals, lawyers, doctors, and CEOs." This is the key to their entire strategy. These aren't casual users. These are people for whom getting wrong answers has real consequences.

A lawyer who uses an AI tool to research case law and gets misled could lose a case. A doctor who uses an AI tool to understand treatment options and gets biased information could make a wrong medical decision. A finance professional who uses an AI tool to understand regulations and gets influenced by advertiser interests could cost their company millions.

In these professional contexts, the liability alone makes ads impossible. You can't have a tool that professionals rely on also showing ads. The conflict of interest is unworkable.

This is where Perplexity's strategy actually makes sense at a fundamental level. They're not trying to build the most-used AI tool in the world. They're trying to build the most-trusted tool for people who make important decisions.

And they're betting that professionals will pay for that trust. Not casually, but genuinely—with corporate budgets, team licenses, and premium tiers.

OpenAI's approach is different. They're betting that they can show ads without destroying trust. That users will understand the difference between ads and organic results. That professionals will continue using ChatGPT even as free-tier users see ads.

One of these bets will probably be wrong. And the winner will define the entire future of AI monetization.

The Technical Challenge: Can You Even Hide Ad Influence?

Here's something nobody talks about: if you decide to show ads, can you actually prevent those ads from influencing the AI's outputs?

The answer is probably no, and here's why. Large language models are trained on vast amounts of internet text. If advertisers pay to have more favorable content about their products in the training data, then the model will naturally be biased toward them. It's not intentional. It's just how training works.

You can't separate out "show ads" from "don't let ads influence the model." The influence is baked in. If you're accepting payment from advertisers, and you're training on data, then bias is inevitable.

This is the fundamental problem Perplexity realized. Even if they wanted to keep ads completely separated from search results—show ads on the side, keep answers pure—they can't actually do that at the model level. The model itself would be biased.

OpenAI's solution is probably to just... accept it. Show ads, acknowledge that there might be some bias, and hope users don't care enough to leave.

But that's a bet against human psychology. And history suggests it's a bad bet. People care about bias. They care about whether information is trustworthy. Once they suspect bias, they leave.

Subscription Models: Can They Actually Work?

Okay, so subscriptions are Perplexity and Anthropic's solution. But can subscription models actually sustain the kind of infrastructure AI requires?

The math is tight. A subscription AI tool probably needs to charge $15-30/month to be sustainable. That's what Anthropic charges for Claude. That's what OpenAI charges for ChatGPT Plus.
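One way to see why the math is tight is to ask how many paying subscribers it takes to cover a given yearly cost. The sketch below assumes a hypothetical annual cost of $1 billion (the article only says costs run into the billions) and uses price points from the $15-30/month range mentioned above; the function name and cost figure are illustrative assumptions.

```python
import math


def subscribers_to_break_even(annual_cost: float, monthly_price: float) -> int:
    """Smallest number of full-year subscribers whose fees cover the annual cost."""
    annual_revenue_per_subscriber = monthly_price * 12
    return math.ceil(annual_cost / annual_revenue_per_subscriber)


# Hypothetical annual infrastructure cost (an assumption for illustration only).
HYPOTHETICAL_ANNUAL_COST = 1_000_000_000

# Price points taken from the $15-30/month range discussed in the article.
for price in (15, 20, 30):
    needed = subscribers_to_break_even(HYPOTHETICAL_ANNUAL_COST, price)
    print(f"${price}/month -> {needed:,} paying subscribers to break even")
```

This ignores churn, payment fees, free-tier costs, and every other expense, so real break-even numbers would be higher. It is only meant to show that at these price points, each billion dollars of cost needs millions of subscribers paying all year.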

But if you're trying to build a search engine with subscriptions, you run into a different problem: search engines have massive network effects. The more people use Google, the more useful Google becomes (better results, better training data, more advertising). If you only have paying users, your network is smaller. Your training data is sparser. Your results are worse.

Perplexity's solution is to differentiate on quality, not scale. Be better than Google for specific use cases (research, learning, professional work) even if you have fewer users. It's a valid strategy, but it's a smaller market.

Anthropic's solution is similar: be better at specific things (safety, helpfulness, honesty) even if you don't reach as many people.

Both of these are viable strategies. But they're not strategies that will replace Google-scale search. They're strategies for winning specific niches while someone else wins the mass market.

The Regulatory Wild Card

Everyone's making these bets without really knowing how regulation will play out. And regulation could change everything.

If regulators decide that AI companies showing ads in search results is deceptive, they could ban it. If they decide that AI tools need to be certified as unbiased for professional use, they could require subscription-only models with heavy compliance. If they decide that training data needs to be ethically sourced, they could make the whole ad-supported model more expensive.

Honestly, we don't know yet. AI regulation is still being written. But the regulatory environment will probably determine which monetization strategy wins.

If regulation favors transparency and trust (Anthropic's position), then ads-heavy models lose. If regulation favors consumer access (OpenAI's position), then subscription-only models lose.

What's interesting is that both companies are essentially lobbying through their business strategies. Anthropic's Super Bowl ad was partly regulation messaging: "Look, you can build AI without ads. You don't have to compromise." OpenAI's move to ads is partly regulation messaging: "Look, we need ads to sustain this. Restrict ads and we can't build the best models."

The regulatory battle is happening right now, and both sides are using their business models as ammunition.

International Considerations: Europe's a Different Game

All of this is mostly US-centric thinking. In Europe, the calculus is completely different.

Europe's regulatory framework (GDPR, Digital Services Act, AI Act) is much stricter about what companies can do with user data. Showing ads means collecting data. Collecting data means complying with GDPR. GDPR compliance is expensive and restrictive.

For Perplexity and Anthropic, this is actually a strength. Their subscription model doesn't require extensive data collection. They can operate in Europe fairly easily.

For OpenAI and Google, ads mean data collection means complexity and cost. It's not impossible, but it makes the ad model less attractive in Europe specifically.

This could lead to a weird situation where US-based companies use ads (because they're optimized for the US market) while international competitors use subscriptions (because they're optimized for European regulation).

Long term, whoever can build a model that works globally wins. Whoever has to maintain two different business models (ads in the US, subscriptions in the EU) loses.

What Users Actually Want: The Forgotten Variable

In all of this discussion about business models and monetization, we keep forgetting the most important factor: what do users actually want?

Perplexity tested ads and users hated it. That's a data point. When users have a choice between two tools and one has ads while the other doesn't, they pick the one without ads. Even if it costs money.

This is what OpenAI is gambling against. They think that ChatGPT's dominance is strong enough that users will tolerate ads. That switching costs are high enough that users won't leave. That free access is valuable enough that ads aren't a dealbreaker.

They might be right. But Perplexity's experience suggests otherwise.

What we'll actually see is users voting with their feet. Some will use ChatGPT and tolerate ads. Some will pay for Anthropic. Some will use Perplexity. Some will use Google's AI tools.

The market will fragment, and each company will end up in the niche they deserve. OpenAI will be the default for casual users and mass market. Anthropic will own the safety-conscious professionals. Perplexity will own the researchers and power users who demand both quality and privacy.

Nobody will have won. But everyone will have survived.

The Real Cost of Free: Why Someone Has to Pay

Here's the thing people don't want to admit: building and running competitive AI models is expensive. Someone has to pay. It's either users (subscriptions), advertisers (ads), or investors (hoping for future returns).

Right now, investors are paying. But that money isn't infinite. Every AI company is burning through billions of dollars in VC funding while trying to figure out how to actually make money.

That's why this moment matters. In the next 2-3 years, these companies have to transition from "investor funded" to "revenue positive." They have to choose a business model that actually works.

Perplexity's choice: get revenue from professionals and power users who pay. OpenAI's choice: get revenue from casual users through ads and subscriptions. Anthropic's choice: get revenue from subscriptions and enterprise, and take the hard road of not maximizing revenue.

All three are valid choices. All three have tradeoffs. And in 5 years, we'll know which one was right.

The company that gets this wrong goes out of business. The company that gets it right dominates the market. There's no third option.

Looking Forward: What Comes Next

In the next year, we're going to see all three models playing out simultaneously. OpenAI will push ads further. Perplexity will launch enterprise tiers. Anthropic will fight to stay ahead on product quality while maintaining their ad-free promise.

We'll probably see more Super Bowl ads from AI companies attacking each other. We'll see regulatory proposals about ads in AI. We'll see user migration patterns that show which monetization models actually work.

And we'll probably see at least one company completely fail because they chose the wrong business model. That failure will teach everyone else what doesn't work.

My guess? The future looks like this: Ads in consumer AI become standard, but professional AI stays ad-free. OpenAI wins the mass market. Anthropic or Perplexity wins the professional market. Google wins by doing both.

But I could be wrong. This space moves fast, and sentiment can shift in weeks.

The Trust Equation: Trust = Revenue in AI

Maybe the most important insight here is the simplest one: in AI, trust is revenue.

If users trust you, they'll pay for your product. If users don't trust you, they'll leave even if you're free. It's that simple.

Perplexity learned this. OpenAI is betting against it. Anthropic is betting hard on it.

Whoever's right about the relationship between trust and revenue determines everything about AI's future. Not AI safety. Not alignment. Not capabilities. Revenue and trust.

Because AI companies need money to survive. If they can't monetize trust, they'll have to monetize something else. And if what they monetize destroys trust, they're in a doom loop.

Perplexity broke out of that loop by choosing subscriptions. OpenAI is entering it by choosing ads. Anthropic is avoiding it by choosing profitability-be-damned principles.

All three strategies are rational given different assumptions. But they're betting on different futures. And we're about to find out who was right.

FAQ

What is the AI monetization debate about?

The AI monetization debate centers on how companies should generate revenue from AI tools. The core disagreement is between those who believe ads destroy user trust in AI (Perplexity, Anthropic) and those who believe ads are necessary to sustain the massive infrastructure costs (OpenAI, Google). Since users are asking AI for factual information and making decisions based on answers, introducing financial incentives through ads creates potential conflicts of interest that could bias outputs.

Why did Perplexity stop using ads?

Perplexity phased out ads after testing them in 2024 and discovering that users immediately stopped trusting the platform's results. Executives realized that showing ads made users doubt whether answers were biased toward advertisers' interests. For a search tool built on trust and accuracy, this trust erosion was unacceptable, so they pivoted entirely to a subscription-based model targeting professionals and power users.

How does the subscription model work for AI companies?

The subscription model charges users a recurring monthly fee (typically $15-30) for premium access to AI tools. This works best for professional users—lawyers, doctors, finance professionals—who need unbiased, accurate information and have budgets to pay. Anthropic uses this model with Claude Pro. Perplexity offers premium tiers. The advantage is no conflicts of interest. The disadvantage is a smaller addressable market compared to free ad-supported models.

Why is OpenAI testing ads if they have successful subscriptions?

OpenAI is testing ads because the company believes subscriptions alone can't generate enough revenue to sustain competitive AI development. Training and running large language models costs billions annually. Subscriptions generate substantial revenue but create a ceiling—not everyone can or will pay. Ads unlock a different revenue stream from the free or casual-user tier, potentially generating billions more without increasing subscription costs.

What's the trust problem with AI ads?

Unlike traditional search engines where ads are clearly separated from organic results, AI assistants synthesize information into answers. If an AI's training data includes paid promotional content, or if advertisers have stakes in certain outputs, bias becomes inevitable at the model level. Users trusting an AI for critical decisions (legal research, medical information, financial advice) can't distinguish between unbiased answers and advertiser-influenced answers. This conflict makes ads fundamentally problematic for professional use cases.

Could AI companies show ads without biasing their outputs?

Probably not at the model level. Language models are trained on vast text corpora. If more promotional content exists for certain products or viewpoints in that data, models will naturally learn those biases. Companies can separate ads from answers visually, but they can't prevent training data bias. The only way to guarantee no ad influence is to refuse ads entirely, which is Anthropic's strategy.

How did Anthropic's Super Bowl ad challenge OpenAI?

Anthropic spent millions on a Super Bowl commercial attacking ChatGPT for embracing ads, positioning Claude as the ethical alternative. Sam Altman responded that ads were necessary for sustainability, not corporate greed. The ad was both a competitive strike and a regulatory message: "AI companies don't need to compromise principles for money. We're proof."

Which monetization strategy will win?

The most likely outcome is market segmentation: OpenAI dominates mass consumer AI (free with ads, premium without), Anthropic owns the safety-conscious professional segment (subscriptions only), and Perplexity captures researchers and power users. Each company occupies a different part of the market based on user values and budget constraints. The absolute winner depends on regulatory decisions about AI transparency and bias.

How does regulation affect these different models?

If regulators prioritize transparency and bias prevention, ad-supported models face restrictions and subscription models gain an advantage. If regulators prioritize consumer access, subscription-only models become infeasible and companies must use ads to stay free. Europe's GDPR makes ads more expensive to run there than in the US, so we might see different models succeed in different regions.

What about international competition in AI monetization?

European regulations (GDPR, Digital Services Act, AI Act) make data collection for ads more expensive and complex. This advantages companies using subscription models in Europe while disadvantaging ad-based companies. Companies that can sustain a single global business model win over those maintaining separate US and EU strategies.

What should AI companies prioritize: trust or revenue?

In AI specifically, trust and revenue are correlated, not opposed. Users who trust a tool will pay for it willingly. Users who don't trust it will leave even if free. Building trust is the foundation for revenue. The companies betting that they can sacrifice trust for immediate ad revenue are likely making a strategic error. Long-term revenue depends on maintaining user confidence in answers.

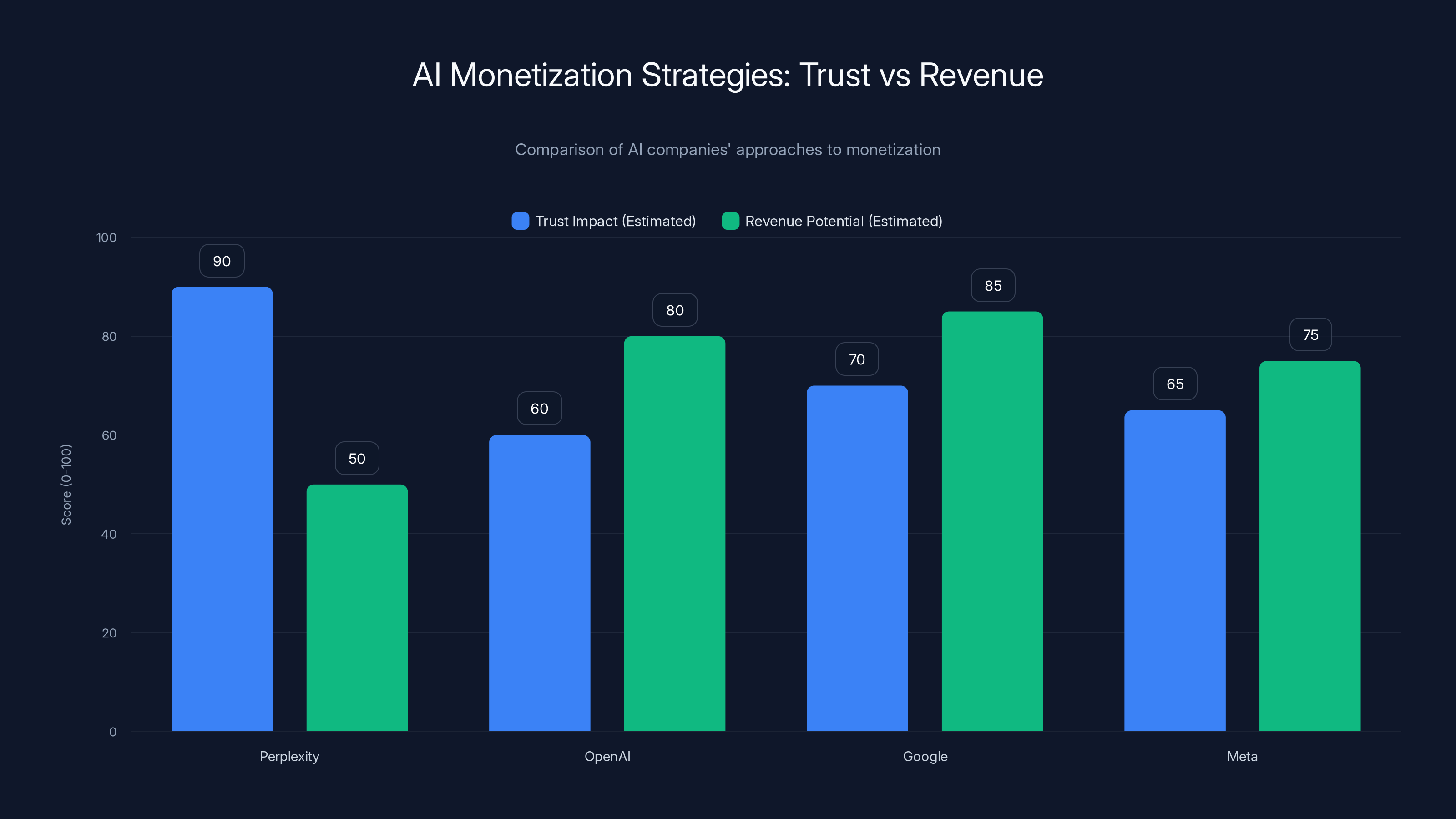

Estimated data shows a trade-off between trust and revenue potential in AI monetization strategies. Companies like Perplexity prioritize trust, while others like OpenAI focus on revenue through ads.

Building Your Own AI Strategy: Key Takeaways

If you're evaluating AI tools for your organization or building one yourself, the monetization model matters more than people realize. Here's what to think about:

For Consumers: Free with ads means the product owner has incentives misaligned with your interests. Premium subscriptions mean you're paying to remove those conflicts. Both are valid, but understand what each costs you.

For Professionals: Ads are probably disqualifying. If your work involves making decisions based on information accuracy, choose tools with subscription models. The extra cost is insurance against bias.

For Builders: Choose your monetization strategy early. It shapes your product, your culture, and ultimately your survival. Subscriptions require a small number of users willing to pay a lot. Ads require a large number of users you can monetize cheaply. You can't do both well simultaneously.

For Regulators: The rules you write now determine which business models survive. Restrict ads and companies need subscriptions. Require transparency on ad influence and they'll abandon ads anyway. Enable data collection for ads and subscription models lose competitiveness. You're not just regulating companies. You're choosing the industry structure.

The AI monetization wars are really just getting started. We're watching the industry make bets about its own future. Some of these bets will pay off. Others will fail spectacularly.

The only certainty is that someone will be wrong. And when they are, we'll all learn something important about how AI actually needs to work.

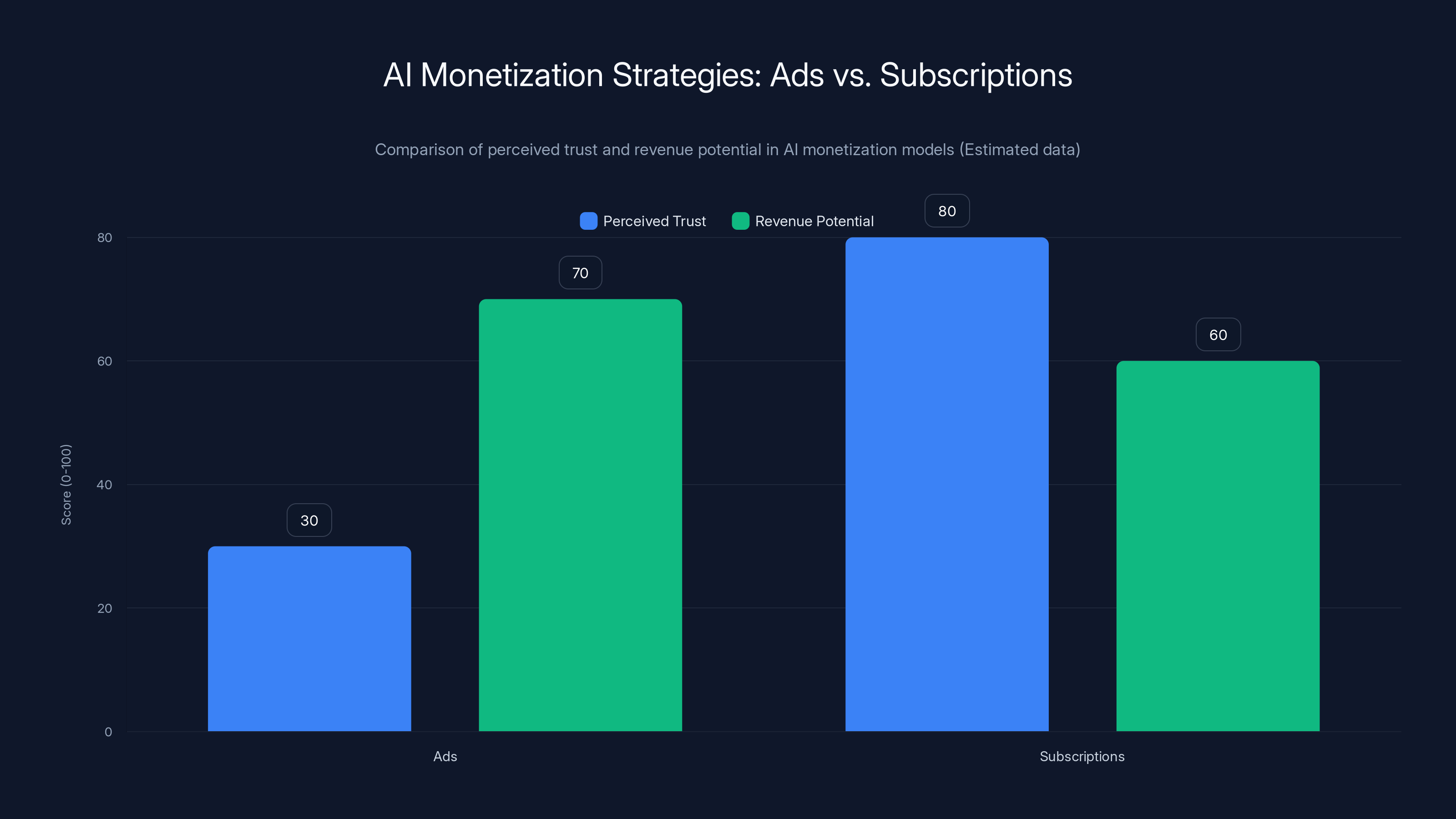

Ads are perceived to lower user trust significantly compared to subscriptions, but they offer higher revenue potential. Estimated data reflects general industry sentiment.

Key Takeaways

- Perplexity abandoned ads after discovering users immediately stopped trusting the platform when ads appeared

- OpenAI is testing ads because infrastructure costs are so high that subscriptions alone can't sustain the company

- Anthropic is betting that AI companies can be profitable on subscriptions alone and win users through trust

- Professional users need ad-free AI because ads create conflicts of interest that could bias critical information

- The market will likely segment with different AI companies winning different user segments based on monetization choice

