Social Media Addiction Lawsuits: TikTok, Snap Settlements & What's Next [2026]

You've probably noticed something weird about social media. You open TikTok for 30 seconds. Suddenly it's 90 minutes later and you've watched 300 videos. Your thumb just kept swiping. Your brain kept hitting that dopamine reward button.

This isn't accidental design. It's intentional.

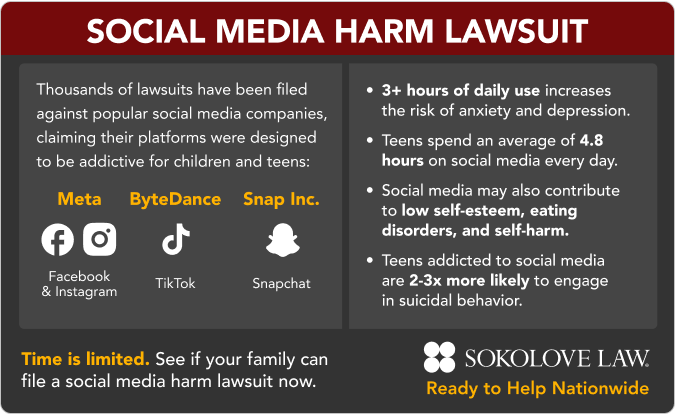

And now the courts are finally confronting what many users have felt for years: that these platforms are deliberately engineered to be addictive and are causing real psychological harm, especially to young people. TikTok and Snap just settled a landmark lawsuit alleging exactly this. Meta and YouTube are going to trial instead, with jury selection beginning in early 2026, as reported by Reuters.

This isn't just legal theater. These settlements and trials could fundamentally reshape how social media companies build products, what they're liable for, and how regulators approach tech accountability. The outcome of this case could ripple across the entire industry, affecting hundreds of millions of users worldwide.

Let's dig into what's actually happening here, why it matters, and what comes next.

TL;DR

- TikTok and Snap settled in January 2026, avoiding trial without admitting wrongdoing, according to NPR.

- Meta and YouTube are heading to trial, with jury selection beginning right away; the outcome could set a precedent for the many similar cases still pending, as noted by Al Jazeera.

- Core claim: Social media companies intentionally designed addictive features targeting minors' developing brains.

- Lead plaintiff: K. G. M., a 19-year-old, alleges that addiction to these platforms caused psychological harm.

- Industry impact: The litigation could force algorithm changes, content moderation overhauls, and new safeguards across platforms.

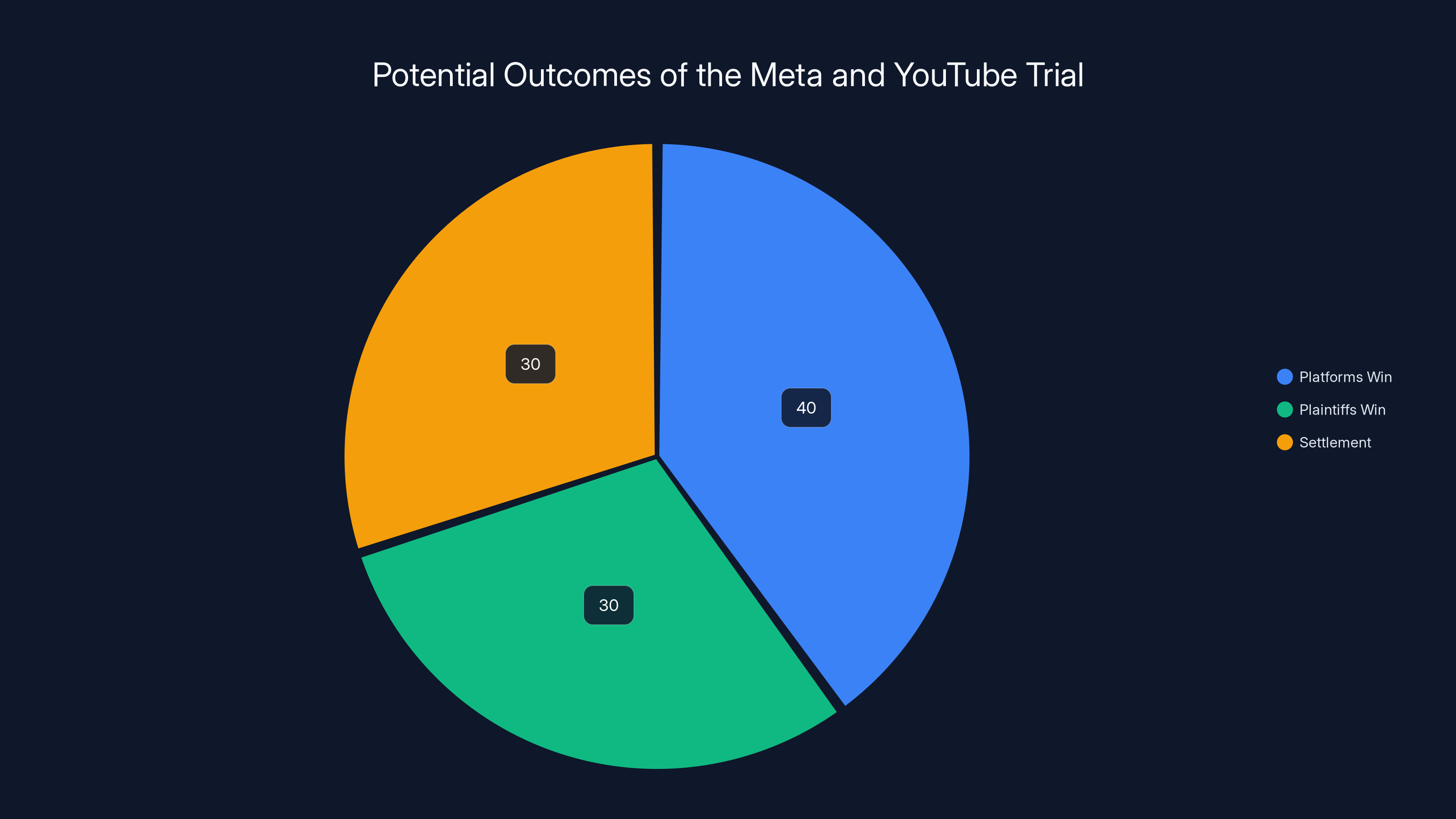

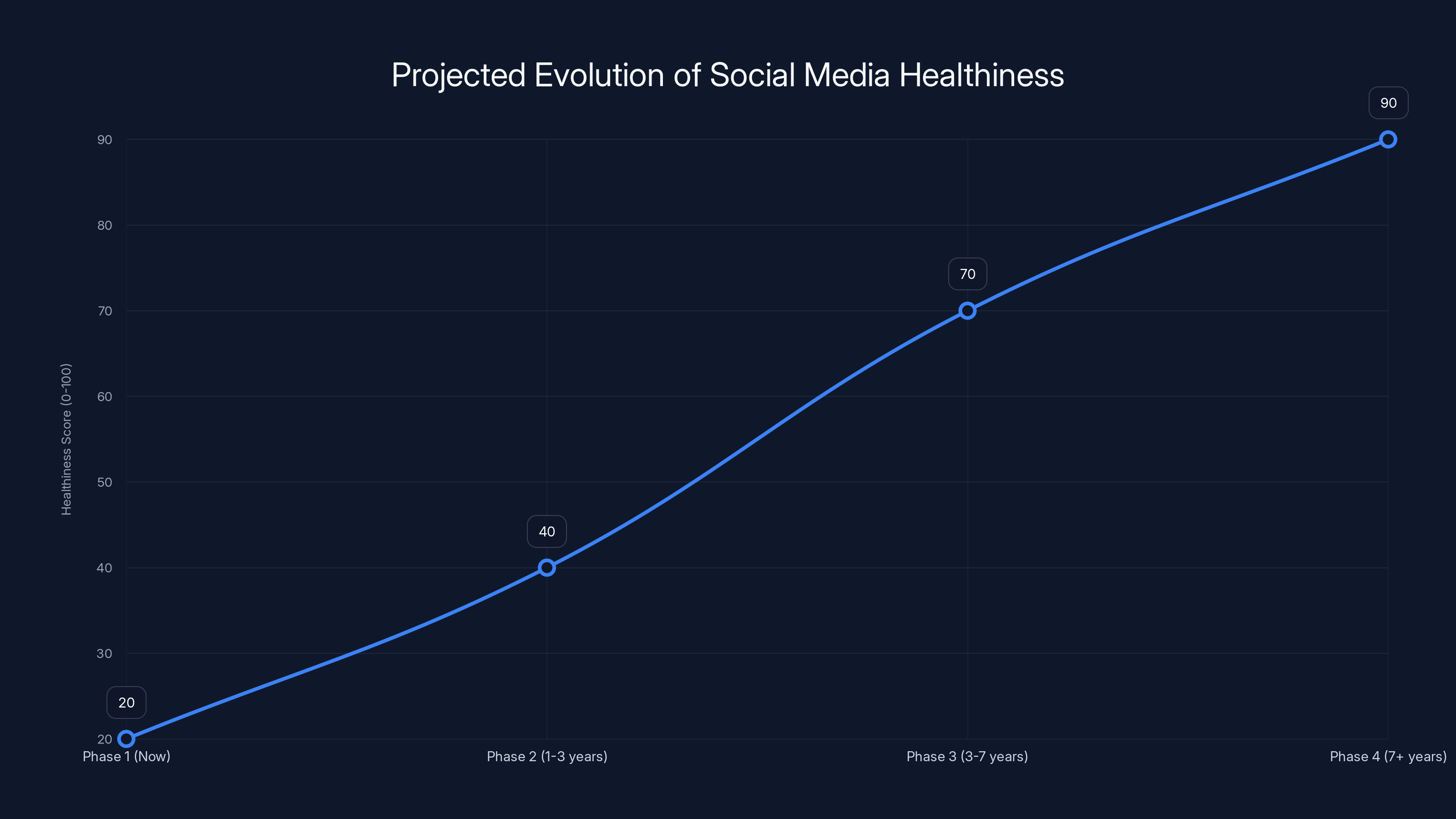

Estimated data shows a roughly balanced probability across trial wins and settlements, highlighting the trial's uncertainty.

The Lawsuit That's Reshaping Social Media Accountability

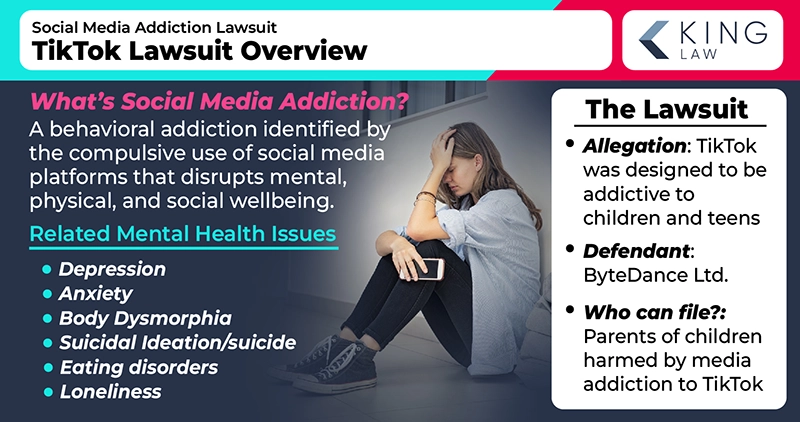

This isn't your typical corporate settlement. The lawsuit alleges something most users feel intuitively but can't quite articulate: social media companies have engineered their platforms to be addictive, knowingly targeting young people whose brains are still developing.

The case centers around a 19-year-old plaintiff identified as K. G. M. (initials used for privacy). Their story is representative of millions: excessive social media use that spiraled into dependency, affecting mental health, sleep patterns, academic performance, and real-world relationships. The legal argument is that this isn't a personal failing. It's the result of deliberate product design choices made by engineers and product managers who understood exactly what they were building.

TikTok and Snap both settled in late January 2026, choosing to pay settlements rather than gamble on jury verdicts. The settlement amounts weren't disclosed, which is frustrating for transparency but typical in these cases. By settling, they avoided admitting wrongdoing (crucial from a legal and PR perspective) while essentially acknowledging enough risk to make the lawsuit go away, as detailed by BBC.

Meta and YouTube did the opposite. They're rolling the dice on trial. Meta CEO Mark Zuckerberg and YouTube head Neal Mohan are expected to testify, which means live cross-examination under oath and internal documents entering the public record as evidence. That's a significant bet.

Understanding the Addiction Design Argument

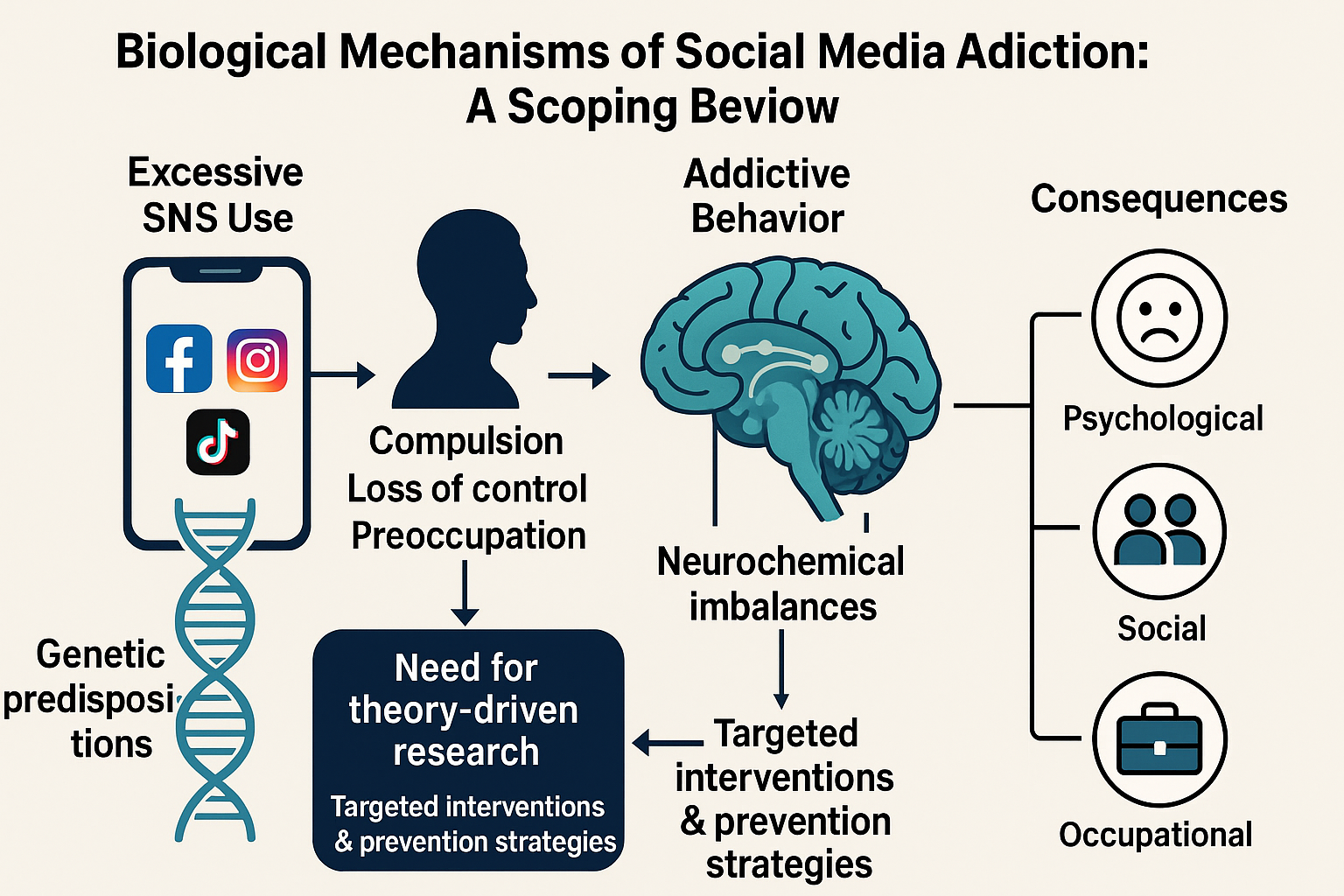

This lawsuit isn't alleging that social media is addictive in some abstract sense. It's arguing that platforms deliberately engineered addiction into their products.

Plaintiffs point to evidence they say supports this claim. Internal documents from Meta (disclosed by whistleblower Frances Haugen in 2021) showed that the company's own research, which had reached senior executives, found Instagram harmful for "a sizable percentage" of teens, most notably teenage girls. Plaintiffs argue that features like infinite scroll and algorithmic feeds were built to maximize engagement despite that knowledge.

TikTok's algorithm is arguably even more aggressive. The app learns what content keeps you scrolling and serves more of it. Unlike feeds built mainly around accounts you choose to follow, TikTok's For You feed is designed to find the exact content that will keep you swiping, no matter who posted it. Ex-employees have described this as deliberately optimizing for addiction, as noted by The Washington Post.

Research lends weight to this. Studies have found that social media rewards such as likes activate the brain's dopamine-driven reward circuitry, the same system involved in gambling and substance use. Your brain isn't overreacting when it feels compulsive urges to check notifications; plaintiffs argue the apps are designed to exploit exactly these reward systems.

Key design mechanisms identified in litigation:

- Infinite scroll: No natural stopping point, unlike pagination

- Algorithmic feeds: Content selected to maximize engagement rather than shown in chronological order

- Notifications and badges: Red dots triggering psychological urgency

- Variable rewards: Unpredictable likes and comments create gambling-like reward patterns

- Social validation metrics: Public like counts and follower numbers triggering status anxiety

- Watch time optimization: Autoplay and content suggestions designed to extend sessions
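To make the "algorithmic feeds" mechanism above concrete, here is a deliberately simplified sketch contrasting a chronological feed with an engagement-ranked one. It is a hypothetical illustration, not how TikTok, Snap, Meta, or YouTube actually rank content: the `Post` type, its `predicted_watch_seconds` score, and both ranking functions are assumptions invented for this example.

```python
from dataclasses import dataclass


@dataclass
class Post:
    post_id: str
    posted_at: int  # hypothetical timestamp; a larger value means newer
    predicted_watch_seconds: float  # hypothetical model estimate of how long this user will watch


def chronological_feed(posts: list[Post]) -> list[str]:
    """Newest first: ordering depends only on when something was posted."""
    return [p.post_id for p in sorted(posts, key=lambda p: p.posted_at, reverse=True)]


def engagement_ranked_feed(posts: list[Post]) -> list[str]:
    """Hypothetical engagement ranking: whatever is predicted to hold attention longest goes first."""
    return [p.post_id for p in sorted(posts, key=lambda p: p.predicted_watch_seconds, reverse=True)]


posts = [
    Post("friend_update", posted_at=300, predicted_watch_seconds=4.0),
    Post("viral_clip", posted_at=100, predicted_watch_seconds=42.0),
    Post("news_item", posted_at=200, predicted_watch_seconds=11.0),
]

print(chronological_feed(posts))      # ['friend_update', 'news_item', 'viral_clip']
print(engagement_ranked_feed(posts))  # ['viral_clip', 'news_item', 'friend_update']
```

The only difference between the two functions is the sort key. In this toy example the oldest post, the viral clip, jumps to the top of the engagement-ranked feed because it is predicted to hold attention longest, which is the kind of design choice the lawsuits put under scrutiny.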

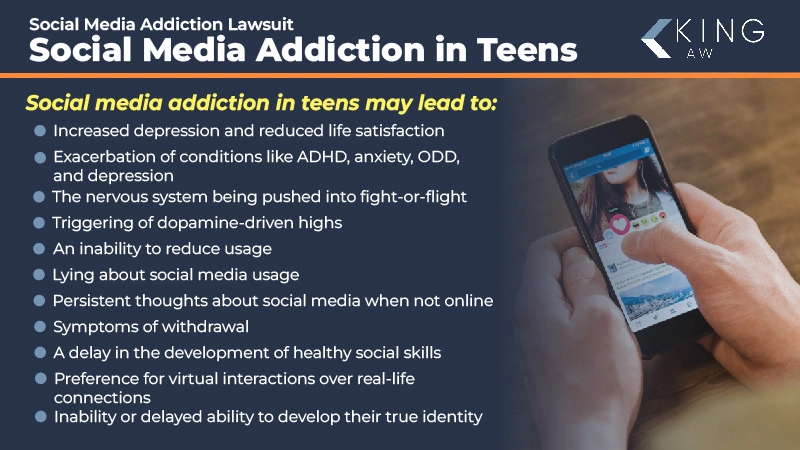

Young people are particularly vulnerable because the prefrontal cortex (responsible for impulse control and long-term planning) isn't fully developed until the mid-20s. They have less neurological capacity than adults to resist these techniques, as highlighted by the American Academy of Pediatrics.

The lawsuit argues this crosses a legal line. It's not just that social media is engaging. It's that companies knowingly designed it to be addictive, suppressed internal research showing harms, and misled parents and regulators about safety.

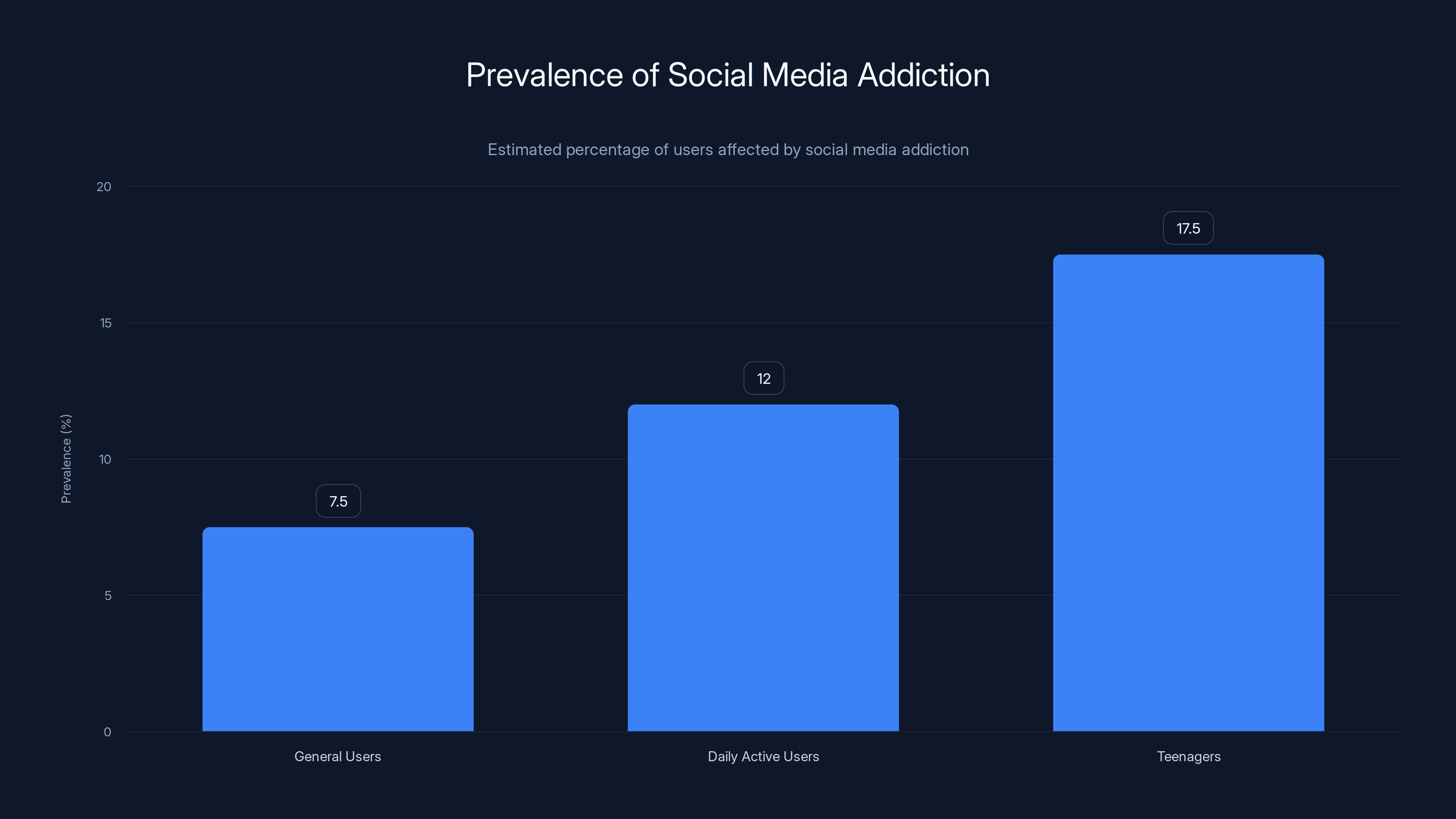

Estimated data shows that teenagers have the highest prevalence of social media addiction, with 15-20% affected, compared to 5-10% of general users.

Why TikTok and Snap Chose to Settle

Settlement versus trial is a strategic calculus. Both companies had to weigh the certainty of settlement costs against the uncertainty and potential catastrophe of losing at trial.

For TikTok, there's an additional pressure: regulatory scrutiny. The U.S. has been threatening to ban TikTok entirely over national security concerns. Simultaneously fighting addiction lawsuits and potential bans is a nightmare scenario. Settling removes one legal liability, freeing up executive attention and legal resources for the existential threat, as reported by ABC7 Chicago.

Snap's reasoning was probably similar, though with less existential regulatory pressure. Snap is much smaller than Meta or TikTok and less able to absorb a large jury award, and a verdict against it would hand plaintiffs in other pending cases a powerful precedent. By settling early, Snap gets a controlled outcome rather than risking a damaging verdict at trial.

Timing mattered too. Settling before any verdict meant TikTok and Snap never had to find out how a jury would react. A verdict against Meta or YouTube would likely have made their own defense harder. A verdict for the platforms might make the settlements look unnecessary in hindsight, but that is the price of certainty: settling now trades an unpredictable outcome for a known cost.

The non-disclosure of settlement amounts is also strategic. If the settlements were publicly known to be massive, it would look as if the companies were admitting guilt and paying damages. Keeping the amounts confidential lets the companies avoid that impression while plaintiffs can still claim victory, as explained by Sokolove Law.

The Meta and YouTube Trial: A Precedent-Setting Battle

Meta and YouTube's decision to fight is bolder and riskier. But if they win, it could provide a shield against hundreds of similar lawsuits pending across the country.

Jury selection began immediately after TikTok and Snap settled. The trial could take weeks or even months. The evidence will include:

- Internal company documents discussing engagement optimization and user retention

- Expert testimony from neuroscientists and psychologists about addiction mechanisms

- Data showing how features affect user behavior, especially in minors

- Testimony from engineers and product managers about design choices

- Marketing materials showing how companies promote to advertisers (often highlighting addiction-adjacent metrics like "time spent")

Mark Zuckerberg taking the stand is particularly significant, and his testimony could be explosive. Plaintiffs' attorneys will want to establish that he knew about harms and chose engagement metrics over safety. The defense will argue that Meta has invested billions in safety features and that personal responsibility matters too, as noted by CBS News.

YouTube faces similar pressures. Neal Mohan will have to explain design choices, algorithmic recommendation systems, and what YouTube knew about effects on young users.

The trial outcome will heavily influence whether addiction lawsuits against these companies are viable. If the plaintiff wins, expect a wave of new lawsuits, more state-level litigation, and potentially settlements in the billions. If the platforms win, they gain a powerful legal defense, and settlements like TikTok's become harder to justify.

Either way, the trial will expose internal company knowledge about harms. Even if platforms win on liability, the public will learn uncomfortable truths about how and why these apps are designed the way they are.

What These Lawsuits Mean for Platform Design

Regardless of trial outcomes, these lawsuits are already forcing platforms to rethink product design. The lawsuit itself, the settlements, and the threat of trial are creating pressure for change.

Some platforms have already made adjustments, often in response to public criticism and mounting legal pressure:

Time management features: Most platforms now include screen time tracking, break reminders, and the ability to limit notifications. These weren't standard just a few years ago. TikTok added "well-being" features in response to addiction criticism. Instagram introduced time limits after pressure. These aren't perfect solutions, but they acknowledge the problem.

Algorithm transparency: There's growing pressure to let users see why they're seeing specific content. Some platforms are testing chronological feeds as alternatives to algorithmic recommendations. This addresses one of the core addiction mechanisms.

Protections for minors: Stricter account verification for users under 18, reduced notifications for minors, and content moderation specific to young users are becoming standard. It's not comprehensive, but it's progress.

Notification and metric changes: Some platforms now hide like counts in certain contexts or disable notification badges in others. These seem small, but they directly target the social validation feedback loops that trigger compulsive checking.

The challenge is that these changes often hurt engagement metrics. A less-addictive social media platform is a less-profitable social media platform (at least by traditional metrics). This creates tension: legal pressure to reduce addiction versus shareholder pressure to maximize engagement and ad revenue.

The settlements and trials are essentially forcing platforms to accept lower engagement in exchange for legal protection. Some executives recognize this is necessary for long-term sustainability. Others are fighting it, as discussed by Levin Law.

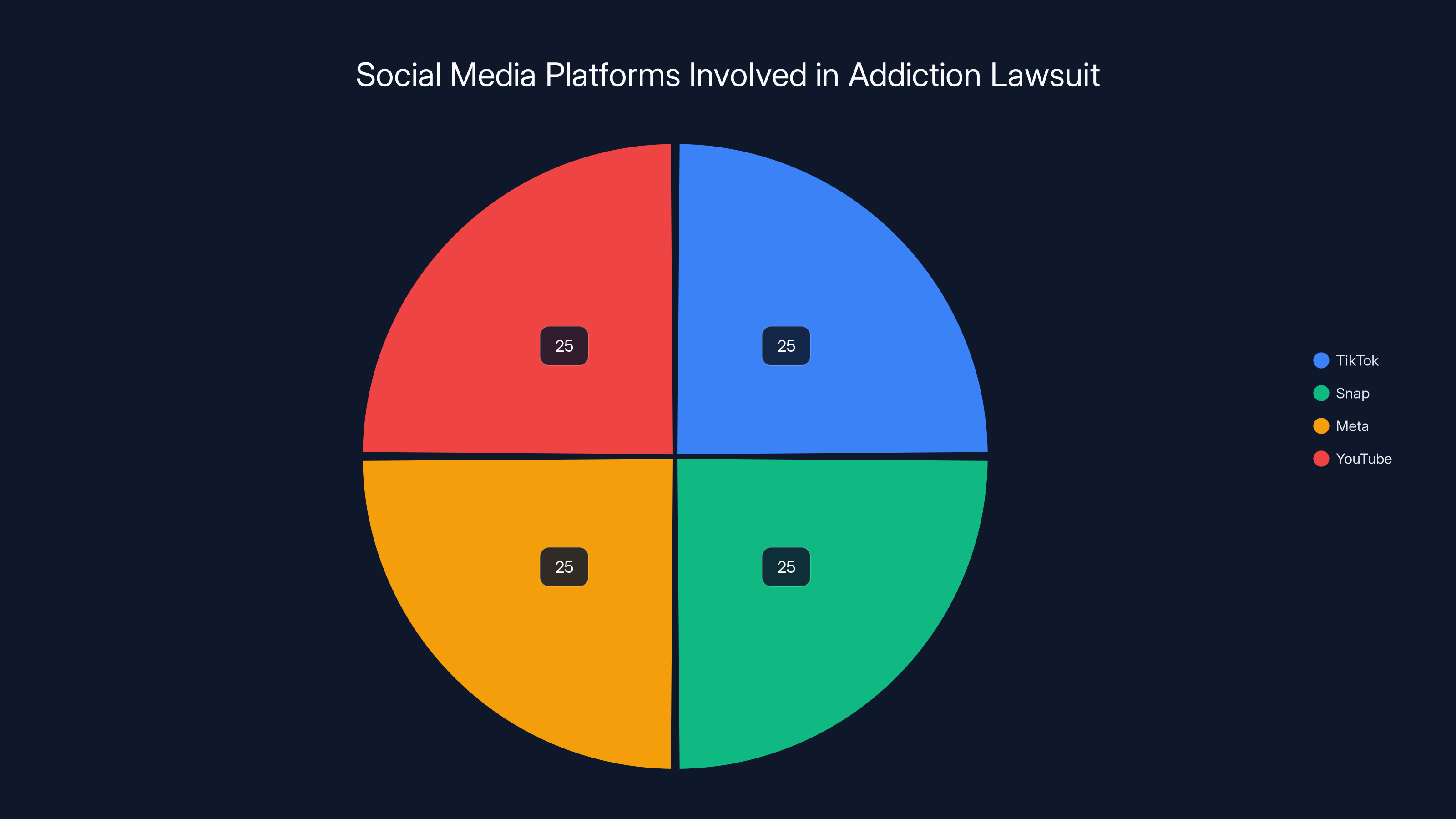

All four major platforms (TikTok, Snap, Meta, YouTube) are equally implicated in the lawsuit, highlighting widespread concerns over addictive design features. Estimated data.

The Role of the K. G. M. Plaintiff and Individual Harm Claims

Lawsuits need a face. That's why K. G. M., a 19-year-old, became the lead plaintiff in this case.

Their story represents millions of teenagers whose social media use spiraled beyond their control. Excessive screen time affecting sleep. Mental health deterioration correlating with platform use. Anxiety about social validation metrics. Academic performance suffering. Real-world relationships sidelined for online engagement.

The legal argument is that this isn't unfortunate happenstance. It's predictable harm resulting from deliberate platform design. K. G. M. didn't lack willpower or make bad choices in a vacuum. They were targeted by sophisticated psychological engineering designed to exploit their brain chemistry.

This framing is important because it shifts responsibility from the individual to the platform. Traditionally, courts have been skeptical of addiction claims that don't involve substances. But plaintiffs argue that compulsive social media use resembles gambling disorder, a behavioral addiction that the DSM-5 formally recognizes.

K. G. M.'s specific harms will be documented through:

- Mental health evaluations and diagnosis

- School records showing academic decline

- Medical records documenting sleep disruption

- Timeline analysis of app usage versus symptom onset

- Expert testimony connecting platform design to documented harms

- Communications showing conscious design choices by platforms

The power of this approach is that it individualizes the harm. Rather than abstract arguments about social media effects, the jury sees one young person whose life was concretely damaged by deliberate platform engineering.

If K. G. M. and similar plaintiffs win, it would establish that social media companies can be held liable for foreseeable harms to minors, which could open the floodgates for similar claims.

The Broader Addiction Epidemic: How Many Users Are Actually Affected?

These lawsuits involve individual plaintiffs, but the underlying issue affects hundreds of millions of people.

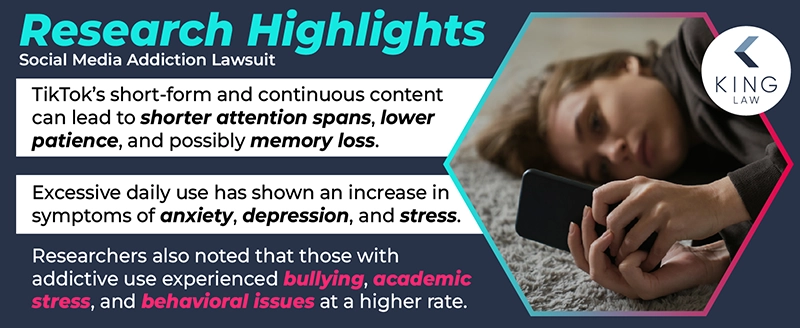

Research on social media addiction is still emerging, but troubling patterns are evident:

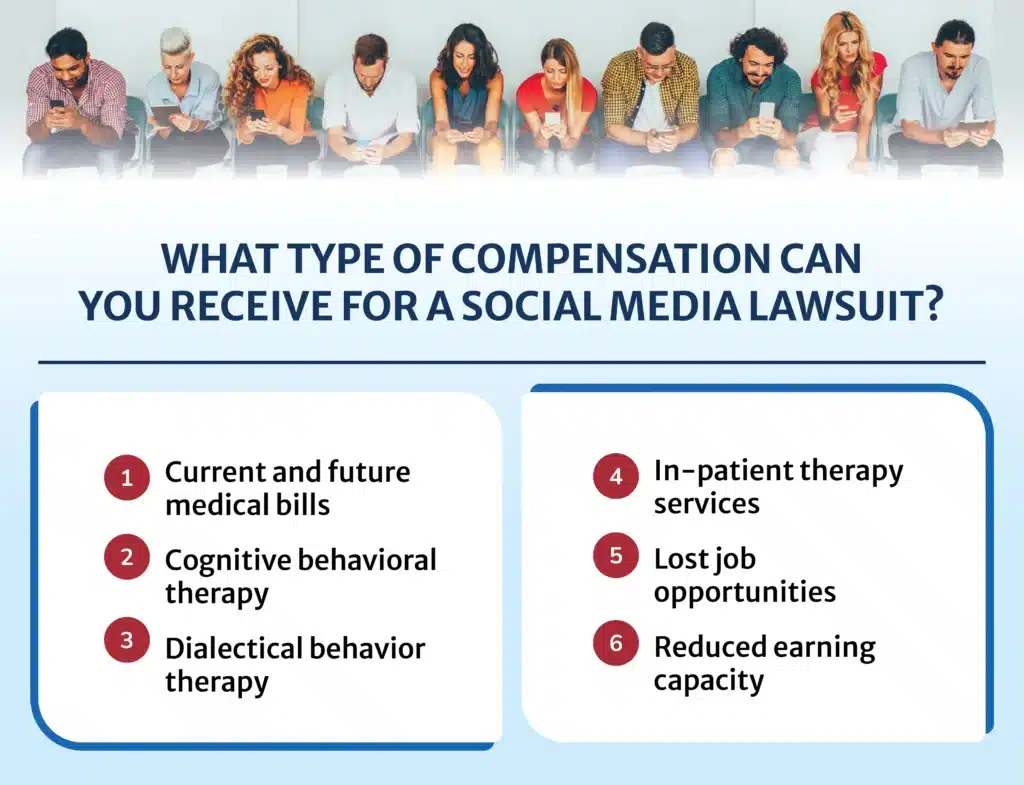

Prevalence estimates: The DSM-5 does not list social media addiction as a formal diagnosis, so researchers often use scales adapted from its criteria for behavioral addictions. Estimates vary with methodology, but studies suggest roughly 5-10% of social media users show addiction-like patterns, with higher rates among daily active users and estimates of 15-20% for teenagers.

Mental health correlation: Multiple peer-reviewed studies show a correlation between heavy social media use and increased depression, anxiety, and suicidal ideation, especially in adolescents. Some research suggests the link is strongest for apps built around comparison and social validation (Instagram, TikTok).

Sleep disruption: Heavy social media use, especially late at night, is linked to later bedtimes and shorter sleep; by some estimates, teenagers with unrestricted access get 1-2 fewer hours of sleep per night. Sleep deprivation itself causes psychological and physical harms independent of the social media content.

Attention span effects: Some longitudinal studies report an association between heavy social media use (particularly short-form video like TikTok's) and reduced ability to focus on longer-form content. If that link holds, it has implications for education, work, and reading comprehension.

Economic factors: Social media is free because users are the product: advertisers pay for access to your attention. This creates an economic incentive for platforms to maximize time spent, which directly drives addiction engineering.

What makes these lawsuits significant is that they might finally force companies to confront what researchers and critics have long argued: social media is deliberately engineered to be addictive, and that engineering disproportionately harms developing brains, as highlighted by Minnesota Lawyer.

Regulatory Pressure: How Lawsuits Interact with Government Action

These lawsuits don't exist in a vacuum. They're part of a broader regulatory pushback against social media companies.

In the U.S., there's bipartisan concern about social media harms. Both Democrats and Republicans see social media as problematic, though for different reasons (Republicans focus on perceived bias and political influence; Democrats focus on mental health and exploitation). This rare consensus creates political pressure for regulation.

Key regulatory moves happening simultaneously:

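Section 230 reform: The legal shield that largely protects platforms from liability for user-generated content is increasingly controversial. Modifying or removing Section 230 protections would dramatically change how platforms operate and their legal exposure. The addiction cases work around this shield in part by targeting product design features rather than specific user posts.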

Kids Online Safety Act (KOSA) and similar legislation: Multiple bills pending in Congress would establish safety standards specifically for minors. These include age verification, data minimization, algorithm transparency, and explicit addiction safeguards.

State-level regulation: Florida, Utah, and other states are passing laws restricting social media for minors, banning algorithmic feeds for users under 18, and requiring parental notification. European regulation (Digital Services Act) is even stricter.

International precedent: Australia recently passed laws restricting social media for under-16s. This creates global regulatory pressure and gives U.S. regulators precedent to point to.

The addiction lawsuits accelerate this regulatory momentum. Every settlement or verdict that acknowledges platform responsibility strengthens arguments for regulation. Regulators can point to judicial findings as evidence that voluntary platform changes are insufficient.

Platforms face pressure from three directions: lawsuits from individuals, regulation from government, and public opinion from users and parents. These forces compound. A lawsuit that establishes liability makes regulation easier to justify. Regulation that passes creates liability risk that makes settlements more likely.

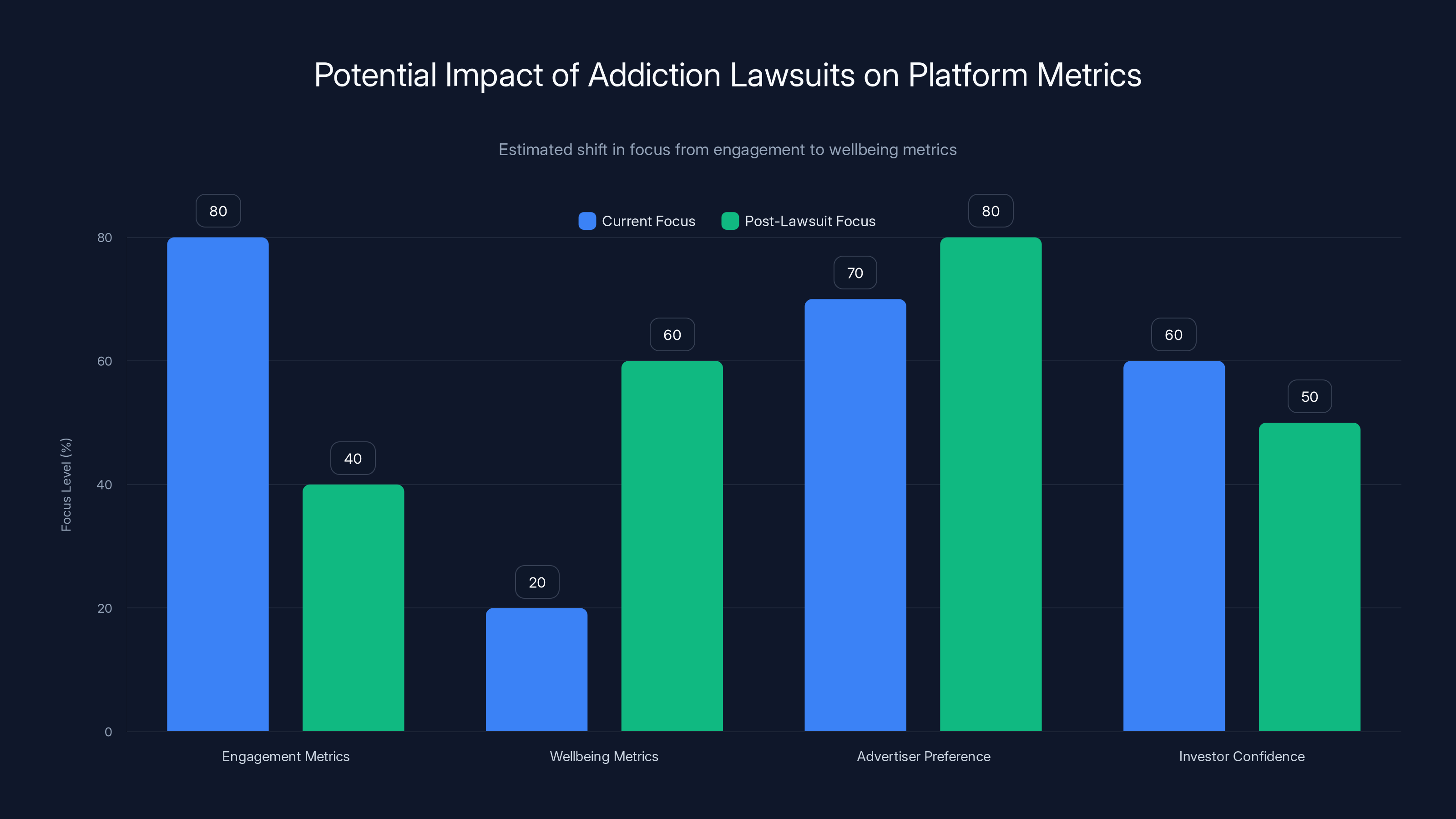

Estimated data suggests a significant shift in platform focus from engagement metrics to wellbeing metrics and safety practices, potentially altering advertiser and investor priorities.

How Addiction Lawsuits Could Reshape Platform Economics

If settlements and trials establish that platforms are liable for addiction harms, the business model implications are significant.

Currently, social media platforms optimize for engagement metrics because engagement drives ad revenue. More time spent equals more ads shown equals more revenue. It's a direct line. Product teams are measured on daily active users, session length, and return frequency.

If platforms become legally liable for addiction, this changes. Designing features that reduce addiction becomes good business (liability reduction) rather than bad business (engagement reduction). Companies might prioritize wellbeing metrics over engagement metrics because avoiding lawsuits is more valuable than marginal ad revenue gains.

This doesn't mean social media gets less engaging. It means platforms start competing on different metrics. Instead of "most addictive," the value proposition becomes "healthy engagement" or "meaningful connection." Different platforms could specialize: some optimizing for brief, casual use; others for community building; others for professional networking.

Advertisers might also shift. If platforms are liable for addiction harms, advertisers might face reputational risk for advertising on addiction-optimized platforms. This could drive advertiser preference toward platforms with better safety practices, creating market incentive for less addictive design.

The economic model could shift from "maximize engagement" to "maximize sustainable engagement," which is a fundamental change. It's similar to how tobacco regulation shifted from maximizing cigarette consumption to managing liability.

For investors, this creates uncertainty. Companies that built their value on engagement growth now face pressure to trade engagement for liability reduction. Stock prices might react negatively to announced safety features that reduce engagement. But long-term, companies that proactively reduce addiction might avoid catastrophic litigation costs.

Smaller platforms and newcomers might actually benefit. They could build from the start with harm-reduction in mind, positioning themselves as healthier alternatives to established platforms. This creates competitive differentiation based on safety rather than addiction mechanics.

International Precedent: How Other Countries Are Addressing Social Media Harms

The U.S. isn't alone in facing social media addiction lawsuits and regulation. Understanding global precedent helps predict where U.S. law might head.

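European Union: The Digital Services Act (DSA) requires the largest platforms to assess and mitigate systemic risks, including risks to minors and to users' mental well-being, a duty the European Commission has already invoked in investigations of addictive design. Very large platforms must also offer at least one feed option not based on profiling, and platforms may not show minors ads based on profiling. Fines for non-compliance can reach 6% of global annual turnover. These are massive, structural changes being implemented now.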

Australia: Recently passed a law, in effect since December 2025, requiring platforms to take reasonable steps to keep under-16s from holding social media accounts. This is one of the most restrictive approaches globally. While there's debate about effectiveness, it signals regulatory seriousness.

United Kingdom: The Online Safety Act, passed in 2023, imposes safety duties on platforms, including duties to protect children and to assess how platform design and features contribute to risk. Online safety is becoming a core regulatory principle, not an afterthought.

China: Notably, TikTok's Chinese sister app, Douyin (also owned by ByteDance), faces domestic rules on minors' use: young users get daily time limits and functionality restrictions under a "youth mode."

Canada: Lawsuits against platforms, including suits filed by Ontario school boards in 2024, mirror U.S. efforts to hold platforms accountable for addictive design.

This international activity matters because it points to a growing global consensus that social media addiction is a serious problem requiring regulatory intervention. U.S. courts don't follow foreign law, but plaintiffs can point to international approaches as evidence that addiction liability is reasonable.

The convergence is remarkable: Europe, Australia, Canada, and the U.S. are all simultaneously addressing social media addiction through litigation, regulation, or both. Platforms that built their model on optimizing for addiction are now facing headwinds globally.

What Expert Evidence Will Look Like at Trial

Meta and YouTube's trial will feature expert witnesses from neuroscience, psychology, and tech. Understanding what evidence will be presented helps clarify the scientific case for addiction liability.

Neuroscience experts will testify about dopamine, reward circuits, and how social media triggers these systems. They'll explain why adolescent brains are particularly vulnerable due to ongoing prefrontal cortex development. They'll present neuroimaging data showing social media activating the same brain regions as gambling or substance use.

Child development psychologists will explain how adolescent psychology makes teenagers more susceptible to social comparison, status anxiety, and reward-seeking behavior. They'll discuss why teenagers, with less impulse control than adults, are more easily captured by addictive mechanisms.

Tech experts and former engineers might testify about specific design choices. What were teams trying to optimize for? Did they have metrics for addictive behavior? Were alternative designs considered and rejected? Did they know their changes would increase addiction risk?

Epidemiologists will present data on correlations between social media use and mental health outcomes. While correlation doesn't prove causation, consistent epidemiological evidence makes causation more plausible.

Social media usage researchers will present data on how platform features (algorithmic feeds, infinite scroll, notifications) affect user behavior. This documents the mechanism through which design choices cause addiction.

Economists might testify about incentive structures. If platforms are economically motivated to maximize engagement, and engagement maximization requires addiction engineering, then addiction is a predictable business outcome, not an accident.

Platforms will counter with their own experts arguing that:

- Correlation between social media and mental health doesn't prove causation (other factors matter)

- Users have agency and choice

- Platforms have implemented safety features

- Social media provides genuine value for connection and expression

- Addiction is a complex phenomenon with multiple causes

The jury will have to weigh competing expert testimony and make judgments about causation, intent, and liability. This is inherently uncertain, which is why both sides are investing heavily in trial preparation.

Estimated data suggests neuroscience and tech design will be the areas of most intense focus at trial, highlighting their critical role in understanding social media addiction.

Potential Trial Outcomes and Their Implications

There are several possible outcomes from the Meta and YouTube trial, each with different implications.

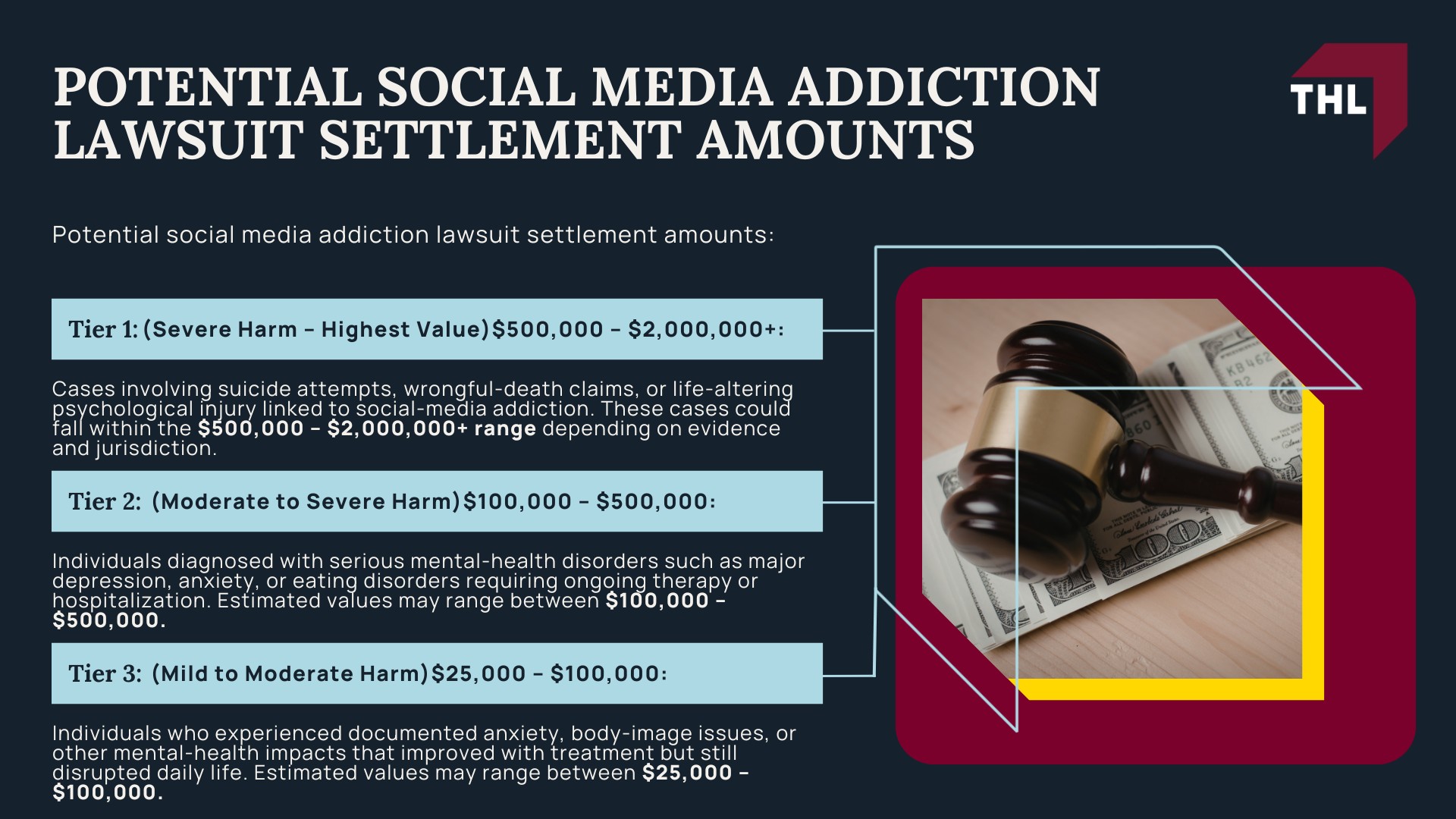

Plaintiff victory: If the jury finds that Meta and YouTube deliberately designed addictive products that harmed K. G. M., this would set a powerful example for similar claims. Hundreds of pending cases would suddenly look more viable, and settlement pressure, regulatory confidence, and business model pressure would all increase. Stock prices would likely fall, and platforms would accelerate addiction-reduction features to limit further liability.

Platform victory: If the jury finds that the platforms aren't liable (either because their design wasn't deliberately addictive, or because individual responsibility outweighs company responsibility), this provides a legal shield. Pending cases become harder to pursue, and settlements like TikTok's become harder to justify publicly. But regulatory pressure would likely intensify and shift toward legislative solutions, since the courts would not have addressed the issue. Business model pressure eases, but regulatory risk increases.

Partial verdict: Mixed outcomes are possible. The jury might find one platform liable but not the other, or find that addictive design occurred but that causation to K. G. M.'s specific harms is insufficient. It might also establish narrow liability (e.g., for under-16 users only). Partial verdicts create uncertainty and multiple appeal opportunities.

Settlement mid-trial: Jury selection and early testimony could shift settlement calculations. If early testimony damages one side's position, settling becomes more attractive than rolling the dice on a verdict. Mid-trial settlements are common when trial reality doesn't match pre-trial expectations.

Many observers expect either a platform victory or a mid-trial settlement; a complete plaintiff victory is possible but faces demanding legal standards for liability and causation. Even a platform victory wouldn't end the issue, since regulatory pressure continues regardless of the trial outcome.

The settlement amounts also matter. If TikTok's and Snap's settlements are later revealed to be in the hundreds of millions, it will look as though they paid for substantial liability even while avoiding trial. That would shape market perception and regulatory response regardless of the trial outcome.

How This Affects Everyday Users: What Changes Are Coming

Whether through settlements, trial verdicts, or regulatory pressure, social media will change in coming years. Understanding what changes are coming helps users navigate the shifting landscape.

Algorithm changes: The most likely change is a move away from pure engagement optimization toward mixed algorithms that weigh content quality, user wellbeing, and diversity. That means less content chosen simply to keep you scrolling and more content relevant to your interests, in feeds that respect reasonable time limits.

Notification changes: Expect further reduction in notifications that exploit urgency and social validation. Many platforms are already disabling like counts in certain contexts. This trend will accelerate.

Content moderation changes: Platforms will face pressure to better moderate content that specifically exploits mental health vulnerabilities or promotes comparisons and status anxiety. This could mean algorithmic suppression of comparison-triggering content.

Default protections for minors: Expect age-based restrictions becoming standard. Minors might get different algorithm types, different notification settings, daily time limits, and different content recommendations by default. This might sound paternalistic, but it's coming.

Data practices changes: Pressure will increase to limit data collection from minors and restrict how that data is used for ad targeting. This would reduce the incentive for platforms to understand and optimize for youth psychology.

Transparency requirements: More visible disclosure about why you're seeing content, how algorithms work, and what the platform knows about you. This is already happening in Europe and will spread.

Subscription alternatives: Expect more platforms to offer ad-free, less engagement-driven options for paying users. The pattern loosely echoes the tobacco industry's response to liability: rather than eliminating the core product, companies offer a version marketed as "healthier."

For individual users, this means more control, more transparency, and more responsibility. You'll have better tools to limit usage, better visibility into why content appears, and more agency in choosing how addictive your feed is. But you'll also have more responsibility to use these tools rather than defaulting to maximized engagement.

The Future of Tech Regulation: Where This Leads

These addiction lawsuits are part of a larger pattern: technology regulation is moving from "hands-off" to "active oversight."

Tech companies spent 15+ years operating with minimal regulation, assuming that innovation and market forces would correct problems on their own. That assumption hasn't held up. Market forces don't reward safety when engagement drives revenue, and innovation won't fix addiction when addiction is the business model.

We're entering an era where tech regulation is becoming normal. Social media is just the first domain. You can expect similar litigation and regulation for:

- AI safety and bias

- Data privacy and surveillance

- Algorithmic discrimination

- Content moderation and free speech

- Cryptocurrency and financial stability

The addiction lawsuit precedent matters because it establishes that tech companies can be held liable for harms caused by deliberately engineered products. This principle will extend to other domains.

For companies, this means compliance costs and liability risk become business-critical. Building safe products isn't just ethically right; it's economically necessary. This shifts incentives across the industry.

For users, this means technology will be more regulated, safer, less addictive, but also potentially less exciting and innovative. There are real trade-offs. Limiting addictive features might also limit features that are genuinely helpful and engaging. Regulation could stifle innovation or impose one-size-fits-all requirements that don't work for diverse use cases.

The question isn't whether regulation is coming. It is. The question is whether it's thoughtful, proportionate regulation that acknowledges trade-offs, or blunt regulation that imposes unnecessary costs. The addiction lawsuits will shape which path we take.

Estimated data suggests social media platforms will become significantly healthier over the next decade, driven by regulation and new business models.

What Parents and Guardians Should Know Right Now

If you have teenagers or young children, these lawsuits and settlements have immediate practical implications.

The concern is real: Plaintiffs, regulators, and a growing body of research argue that social media is deliberately designed to be addictive. The settlements admit no wrongdoing, but companies' willingness to pay rather than face a jury shows they take the risk seriously. You're not being paranoid by limiting screen time.

Your parental instincts are correct: If it feels like your kid can't put their phone down, it's not weakness or bad parenting. The platform literally engineered it to be hard to put down. Your kid's struggle against the addiction is a struggle against sophisticated engineering.

Technical solutions exist but aren't sufficient:

- Screen time limits (built into phones) are helpful but easily circumvented

- Content filters can reduce some harms but don't address core addiction mechanics

- Device-free zones and times (no phones at dinner, in bedrooms, etc.) are more effective

- Parental controls can help but aren't foolproof

None of these are magic solutions because the fundamental issue is product design, not user discipline.

Have honest conversations: Instead of treating social media as forbidden or shameful, talk about how it works, why it's designed the way it is, and what addiction mechanisms to watch for. This builds the critical awareness that tech literacy requires.

Model healthy behavior: If you're constantly checking your phone, it's harder to convince kids they should limit usage. Your own relationship with technology sends a message about whether it's controllable.

Expect improvement, not perfection: As these lawsuits pressure platforms to reduce addiction, you'll see some genuine improvements. But platforms won't eliminate engagement optimization entirely. Vigilance will remain necessary.

Advocate for regulation: Parent groups are becoming powerful voices in regulatory discussions. If you care about this issue, contact your representatives. Even modest action (like age verification or improvements to parental controls) helps.

The litigation trajectory suggests significant platform changes are coming. But change takes time. In the interim, the responsibility falls on parents to manage exposure and conversations.

Key Stakeholders and Their Interests in These Lawsuits

Understanding who benefits and who loses from these lawsuits helps predict how they'll play out and what changes actually happen.

Plaintiffs and their lawyers: Obviously benefit from settlements and trial victories. Larger settlements mean more compensation and larger attorney fees. But they also care about precedent: a win establishes a template for future cases.

Social media platforms: Want to minimize liability, avoid a precedent that enables hundreds of lawsuits, and protect a business model built on engagement. A confidential settlement is better than a trial verdict that establishes a dangerous precedent. But they're also internally split: some executives want to innovate toward safer designs and get ahead of liability; others want to fight everything.

Advertisers: Currently benefit from highly targeted, engagement-optimized ads. Reduced addiction and less data collection hurt their ability to reach audiences. But some advertisers (especially brands marketed to families) might want to avoid association with addiction-optimized platforms.

Regulators and legislators: Want to appear responsive to public concerns about social media harms. Addiction lawsuits create political pressure to "do something." Whether that leads to good regulation or bad regulation varies, but regulation is coming.

Parents and child advocates: Obviously want platforms to be less harmful. They've been central to driving these lawsuits and regulatory pressure. A winning case validates their concerns and can help fund further advocacy.

Employees of tech companies: Mixed impacts. Some believe products should be made safer and support changes. Others fear changes will erode their companies' competitive advantage. Many worry about compliance costs and potential layoffs.

Other tech platforms: TikTok and Snap settling may hurt other platforms because it can signal that the claims have merit. Meta and YouTube fighting could help others, since a favorable verdict would benefit the whole industry. But if Meta and YouTube lose, other platforms are more likely to get dragged into litigation.

Public health organizations: Support these cases because they align with public health goals of reducing addictive product exposure, especially for minors.

This complexity of interests shapes outcomes. It's not simple good guys versus bad guys. It's multiple stakeholders with legitimate but conflicting interests navigating uncertainty.

The Long View: How Social Media Becomes Healthier

Eventually, social media will be less addictive than it is today. These lawsuits are accelerating that transition, but the direction is already set. Here's a plausible long-term trajectory:

Phase 1 (now): Litigation and regulatory pressure force platforms to acknowledge harms and make voluntary changes. Settlements become more common. Platforms experiment with safer features but don't completely abandon engagement optimization.

Phase 2 (1-3 years): Regulation passes in multiple jurisdictions. The Digital Services Act is already law in Europe. Similar bills pass in the U.S. and other countries. Regulation creates minimum standards that platforms must meet. Some platforms comply better than others, creating competitive differentiation around safety.

Phase 3 (3-7 years): New platforms emerge optimized for safety rather than addiction. These might be smaller, have different business models (subscriptions rather than advertising), or be non-profit/public alternatives. They compete against incumbent platforms on safety rather than features.

Phase 4 (7+ years): Industry consolidates around different models. Some platforms remain highly addictive but operate in gray legal areas (like tobacco). Others become explicitly health-conscious and compete on that basis. Regulation clarifies boundaries.

This is speculative, but the direction is fairly predictable. Addiction-optimized products face legal and regulatory pressure they can't indefinitely evade. Some platforms adapt. Some face constraints. New competitors emerge. Industry evolves.

The wild card is whether regulation is thoughtful or ham-handed. Good regulation acknowledges trade-offs and innovation incentives. Bad regulation crushes innovation or imposes impossible compliance costs. The lawsuits matter because they shape which kind of regulation emerges.

What We Still Don't Know and Why It Matters

For all the clarity these lawsuits provide, major questions remain unanswered.

How much causation is required? Does a platform need to cause someone's addiction, or just contribute to it? If someone has vulnerability factors (depression, ADHD, family addiction history), do platforms bear liability for exploiting those vulnerabilities? The law isn't clear.

Can users consent to addictive products? If someone knowingly uses an addictive app and agrees to terms of service, are they legally consenting to that addictiveness? Or is consent invalid when addiction erodes judgment? This is philosophically and legally unsettled.

Do minors need special protection, or do all users? These cases focus on minors, but the addiction mechanisms affect adults too. The legal standard is almost certainly different for minors, but this hasn't been clearly established.

What counts as "addiction" legally? Clinical addiction involves serious impairment. But social media creates compulsive engagement without necessarily meeting clinical addiction criteria. How much harm is required for legal liability?

How much responsibility do platforms bear versus individual users? Everyone who uses social media has some responsibility for their own usage. Where does company responsibility end and personal responsibility begin? This is fundamental to liability.

These unresolved questions will be tested through trials and appeals. Each case clarifies boundaries. Over time, legal standards emerge. But right now, there's genuine uncertainty about the scope of liability.

This uncertainty is why platforms both want to fight some cases (establish favorable precedent) and settle others (avoid unfavorable precedent). The outcome shapes the legal landscape for years.

FAQ

What is the social media addiction lawsuit about?

The lawsuit alleges that social media companies like TikTok, Snap, Meta, and YouTube deliberately engineered their platforms to be addictive, particularly targeting young users whose developing brains are vulnerable to such manipulation. The case centers on a 19-year-old plaintiff (K. G. M.) who alleges psychological and physical harm from excessive platform use driven by intentional addictive design features like infinite scroll, algorithmic feeds, and notification systems designed to trigger compulsive engagement.

Why did TikTok and Snap choose to settle instead of fighting the case?

TikTok and Snap settled in January 2026 to avoid the uncertainty and potential costs of trial. Settlement gave them a controlled outcome and avoided a public jury verdict that could establish dangerous legal precedent affecting hundreds of similar pending lawsuits. For TikTok specifically, removing one source of liability may have eased some of the pressure it already faces from regulators and potential platform bans. Both companies also settled before the Meta and YouTube trial produced a verdict, avoiding the risk that a plaintiff win would strengthen the other side's hand and drive up settlement costs.

What evidence shows that platforms deliberately design addictive features?

Evidence includes internal company documents (such as the Meta documents disclosed by whistleblower Frances Haugen) showing executives knowingly discussed engagement optimization and harm; testimony from former employees describing deliberate addiction mechanics; expert neuroscience evidence on how features trigger dopamine reward systems in ways similar to gambling; and product design choices specifically targeting psychological vulnerabilities. Additionally, Meta's own research documented harmful effects while the company continued to optimize for engagement metrics that exploited those harms.

How do social media platforms make products addictive?

Platforms use multiple psychological mechanisms, including infinite scroll (no natural stopping point), algorithmic feeds (showing content most likely to capture attention), variable rewards (unpredictable likes and comments creating gambling-like patterns), notifications and badges triggering urgency, public metrics (likes and followers) creating status anxiety, and watch-time optimization (autoplay and recommendations extending sessions). These features exploit normal brain chemistry, particularly dopamine reward systems, creating compulsive usage patterns. Research suggests these mechanisms are especially effective on adolescents, whose impulse control is still developing.

What does the trial involve and why does it matter?

Meta and YouTube's trial asks a jury to decide whether the platforms deliberately designed addictive products that caused foreseeable harms. The trial features expert testimony on neuroscience, psychology, product design, and epidemiology, along with internal company documents and executive testimony. It matters because the verdict will set an influential precedent on whether platforms can be held liable for addiction harms. A plaintiff victory could open the floodgates for similar cases and strengthen regulatory arguments. A platform victory would provide a legal shield against pending cases but could shift pressure toward legislators and regulators.

What changes are platforms making in response to these lawsuits?

Platforms are implementing screen time limits, break reminders, algorithm transparency features, reduced notifications, removal of like counts in certain contexts, stricter age verification, content moderation improvements, and chronological feed options as alternatives to algorithmic feeds. However, these changes are primarily harm reduction rather than fundamental business model shifts. Platforms continue optimizing for engagement but try to do so more responsibly. Meaningful change has been driven more by regulatory pressure and the threat of litigation than by genuinely voluntary initiatives.

How does adolescent brain development make teenagers more vulnerable to social media addiction?

The prefrontal cortex, responsible for impulse control, risk assessment, and long-term planning, doesn't fully develop until the mid-20s. Teenagers have highly responsive reward-seeking systems (driving the desire for engagement) but immature impulse-control systems (making it harder to stop). This neurological mismatch makes teenagers particularly vulnerable to platforms engineered around dopamine rewards and compulsive engagement. Additionally, adolescents are naturally more susceptible to social comparison and status anxiety, which social media platforms deliberately exploit through visible follower counts and like metrics.

What are the broader regulatory implications of these lawsuits?

These lawsuits create political and legal momentum for regulation globally. Europe's Digital Services Act already requires the largest platforms to assess systemic risks, including risks to minors and to users' mental well-being, and to offer at least one recommendation feed not based on profiling. The U.S. has multiple bills pending (KOSA and others) that would establish safety standards. Australia recently passed restrictive laws for under-16 users. These lawsuits demonstrate that courts recognize addiction as a legitimate concern, strengthening regulatory arguments and making platforms more likely to accept regulation voluntarily. In short, litigation accelerates regulation by establishing that the issue is serious enough for legal attention.

What does this mean for social media platforms' business models?

Liability exposure and regulatory pressure are forcing platforms to decouple business success from maximum engagement optimization. Rather than purely maximizing time spent (which drives ad revenue), platforms now balance engagement with liability reduction. This could lead to business model shifts toward subscription services (which don't require engagement optimization for revenue), mixed algorithms (balancing engagement with other values), or stronger separation between addictive and non-addictive features. The change is incremental but directional: pure engagement maximization is becoming legally and economically riskier.

Conclusion

The social media addiction lawsuits represent a fundamental shift in how courts and regulators approach technology accountability. For the first time, platforms face a serious prospect of liability for deliberately engineered harms, specifically the exploitation of psychological vulnerabilities to create addictive products.

TikTok and Snap's settlements resolve their exposure in this case without any admission of wrongdoing. Meta and YouTube's decision to fight sets up a trial that will test whether addiction claims are viable against tech companies, potentially opening the floodgates for similar litigation.

Regardless of trial outcomes, change is coming. Regulatory pressure from governments worldwide is intensifying. Consumer and parental pressure is mounting. Employees within companies increasingly recognize that business models based on pure engagement optimization are ethically indefensible and legally risky. Investors are starting to price in liability and regulatory risk.

The trajectory is clear: social media will become less addictive, more transparent about how algorithms work, and more protective of minors. This transition will take years, involve significant litigation and regulation, and create winners and losers. But the direction is set.

For individual users, the lesson is clear: these platforms were deliberately engineered to be addictive. Your struggle to limit usage isn't a personal failing. It's predictable resistance to sophisticated psychological engineering. Using the growing number of harm-reduction tools (screen time limits, notification disabling, feed customization) isn't weakness. It's rational self-defense against deliberately addictive product design.

The real question isn't whether social media addiction is real. These lawsuits confirm it is. The question is how quickly platforms change and how much damage occurs in the interim. Litigation, regulation, and user pressure are the mechanisms that force that change. Each lawsuit that settles or goes to trial brings accountability closer.

In the end, social media will remain part of how humans connect. But it will be less addictive, less exploitative, and more transparent about the psychological mechanisms it uses. These lawsuits are making that future inevitable.

Key Takeaways

- TikTok and Snap settled addiction lawsuits in January 2026, avoiding trial while Meta and YouTube proceed to jury trial with potential precedent-setting impact.

- Social media platforms deliberately engineered addictive features that target adolescents, whose impulse control is not yet fully developed.

- Trial outcomes will determine whether platforms bear liability for addiction harms, potentially opening floodgates for hundreds of similar cases.

- Global regulatory momentum is accelerating with Europe's Digital Services Act, Australia's age restrictions, and pending U.S. legislation.

- Platform business models will shift from pure engagement maximization toward balancing engagement with liability reduction and user wellbeing.
