Why Agentic AI Pilots Stall & How to Fix Them: A Practical Playbook for Enterprise Success

Here's what keeps me up at night: I talk to enterprise leaders almost every week who've spent millions on agentic AI pilots, only to watch them stall out. Not crash. Not fail spectacularly. Just... stall. The project gets stuck in neutral, costs keep climbing, and suddenly that autonomous agent that was supposed to transform operations becomes another line item in the failed IT budget.

The frustrating part? It's almost always avoidable.

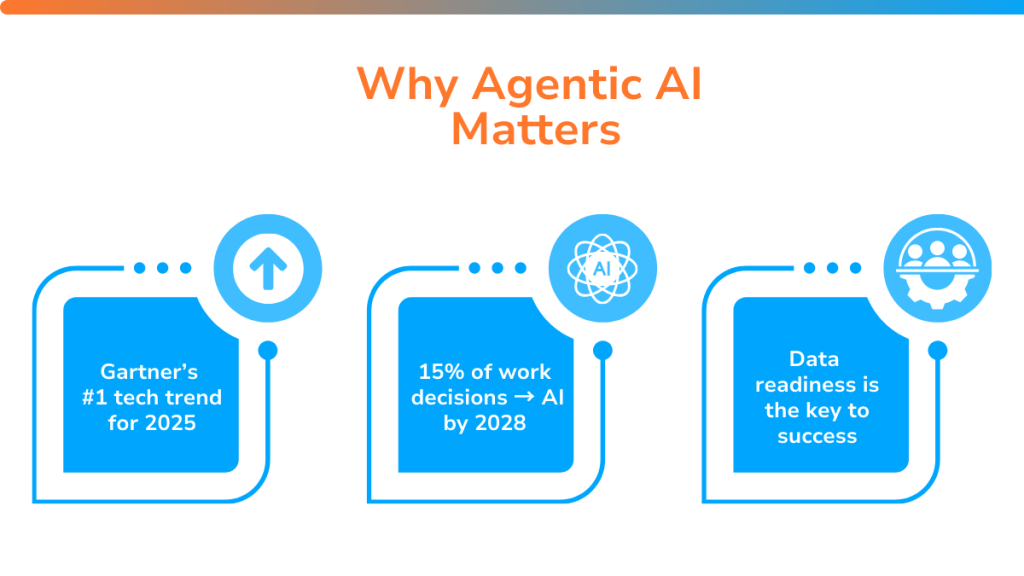

Agentic AI isn't vaporware. It's real, it's powerful, and it's already delivering value in pockets of the market. But there's a massive gap between the hype in boardrooms and what actually works in production. That gap is costing enterprises billions in wasted spend. And the worst part is that most of these failures follow the same predictable pattern.

I've spent the last eighteen months studying why agentic AI pilots stall, talking to CTOs who've been through the trenches, and analyzing what separates the companies that are genuinely succeeding from those stuck in perpetual "proof of concept" mode. The pattern is clear, repeatable, and fixable.

The problem isn't that agentic AI is overhyped. It's that businesses are moving too fast without the strategy, infrastructure, and data foundations required to make autonomous agents actually work. Most organizations are treating agentic AI like it's just another software upgrade, when in reality it demands a fundamentally different approach to how enterprises think about data, governance, and decision-making authority.

This guide walks you through exactly why pilots fail, what needs to change, and how to deploy agentic AI safely at scale without burning through budget and credibility.

TL;DR

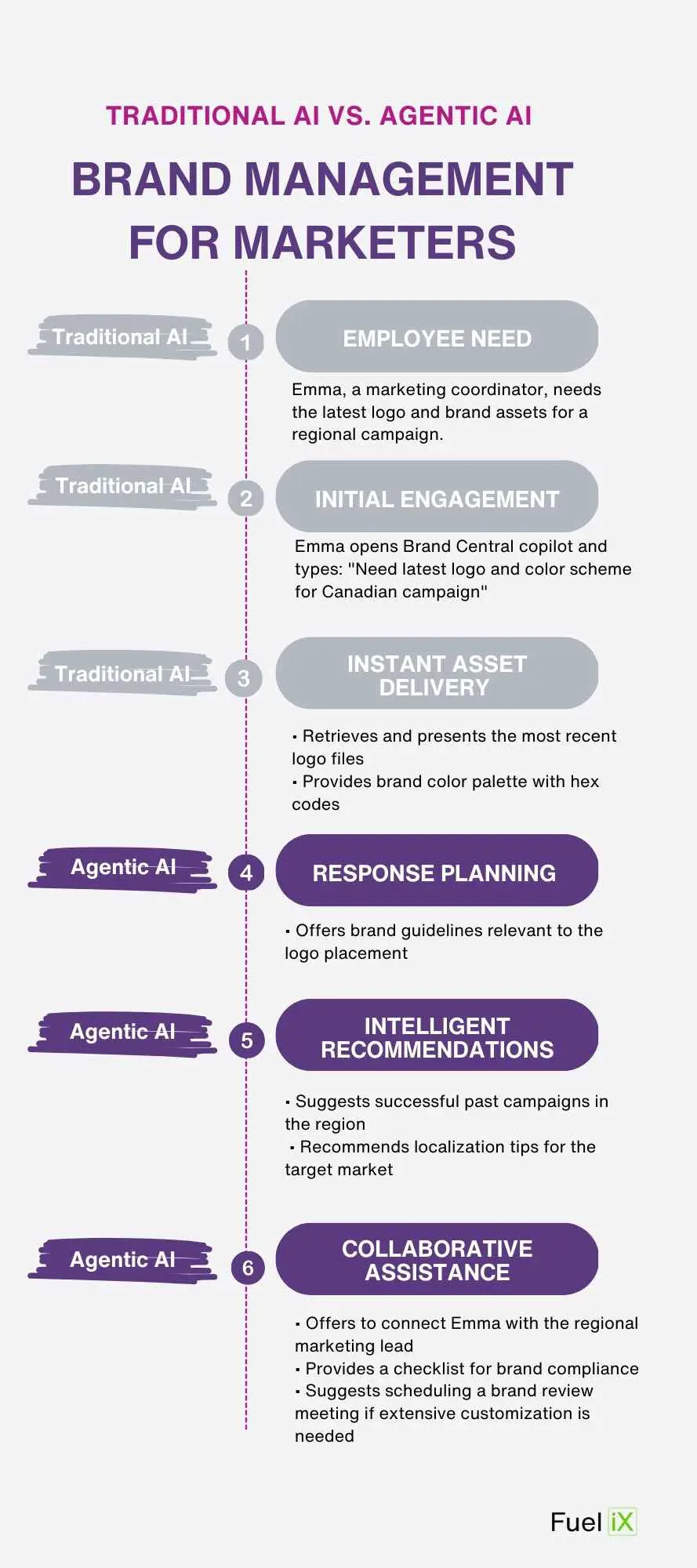

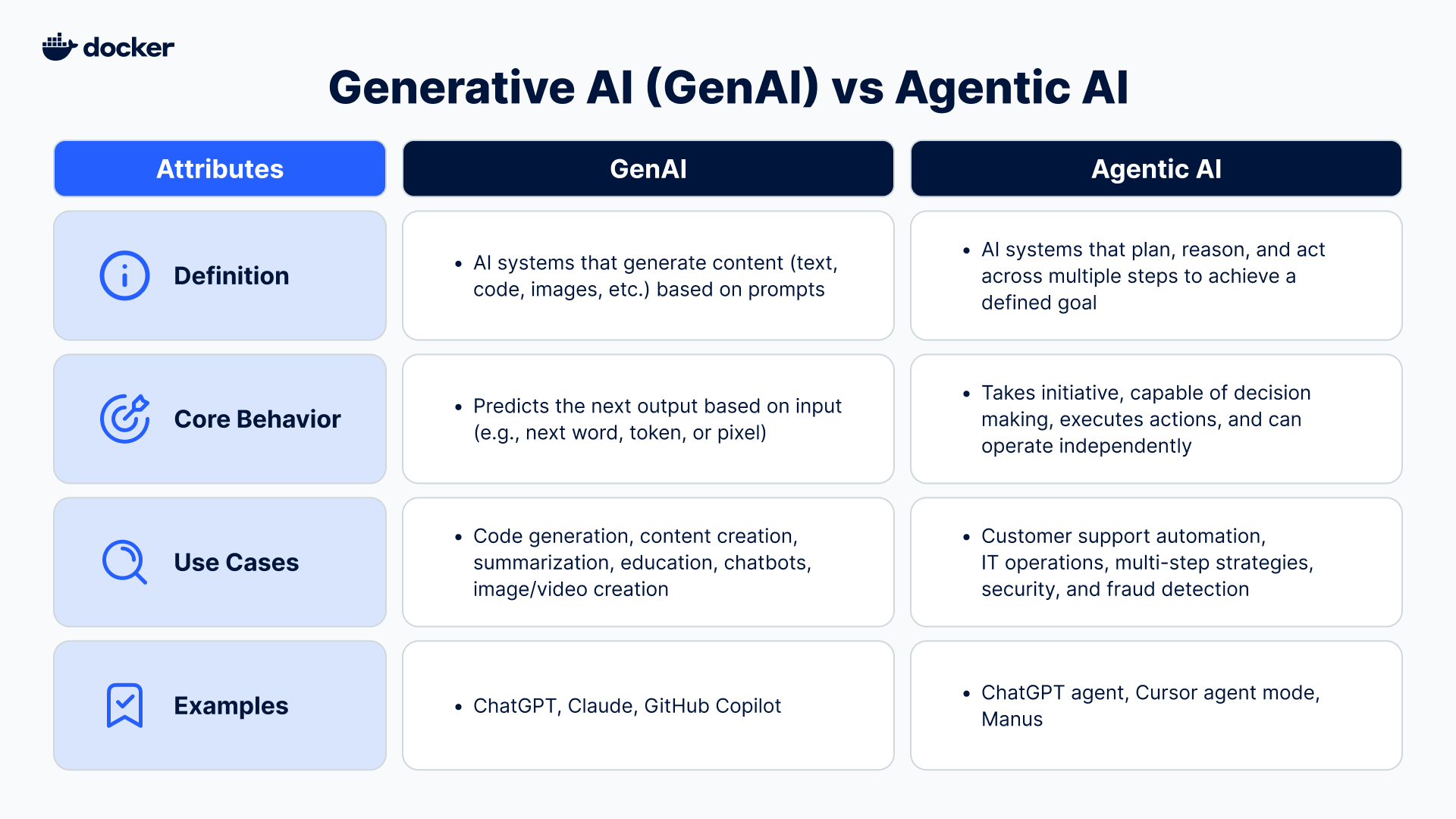

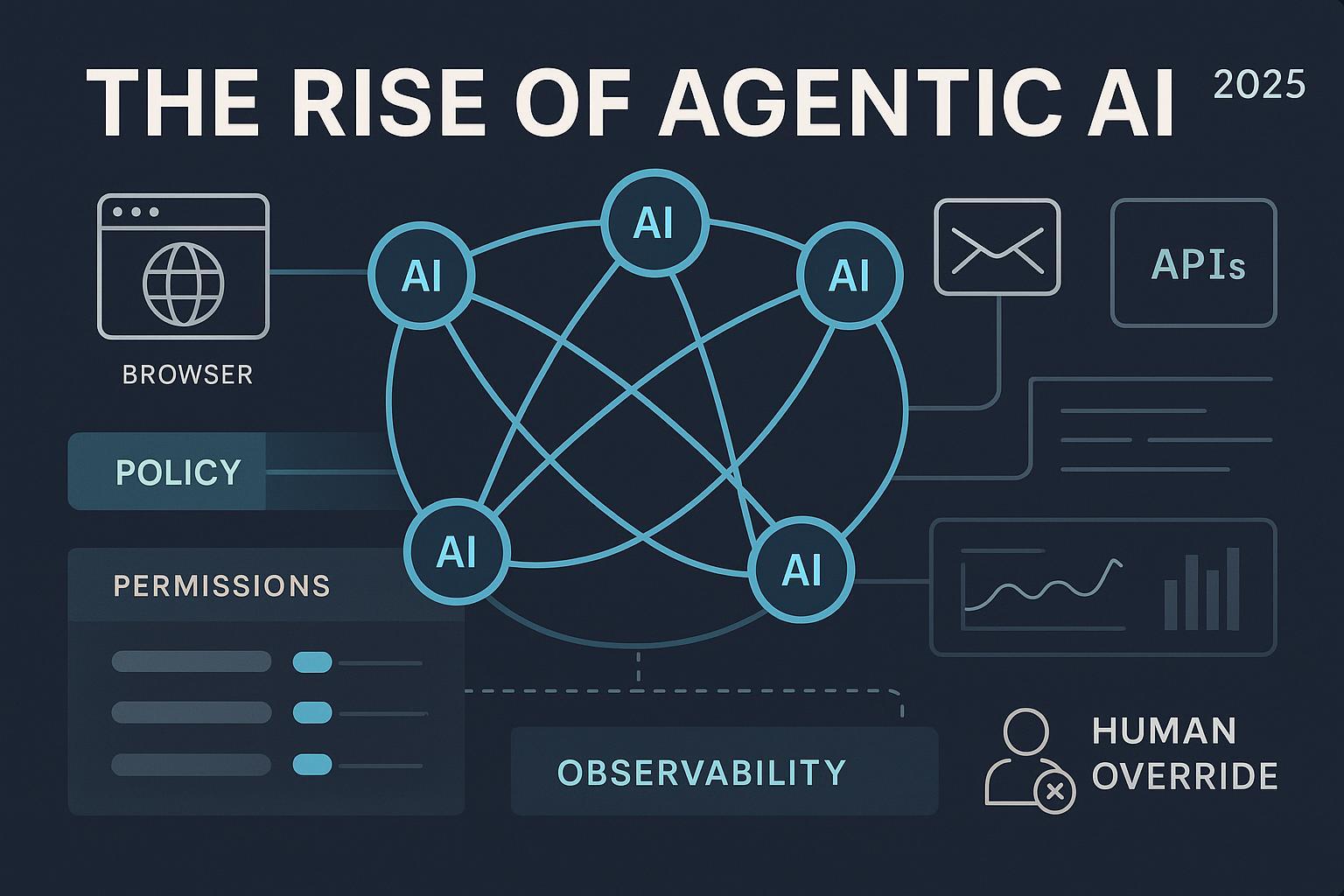

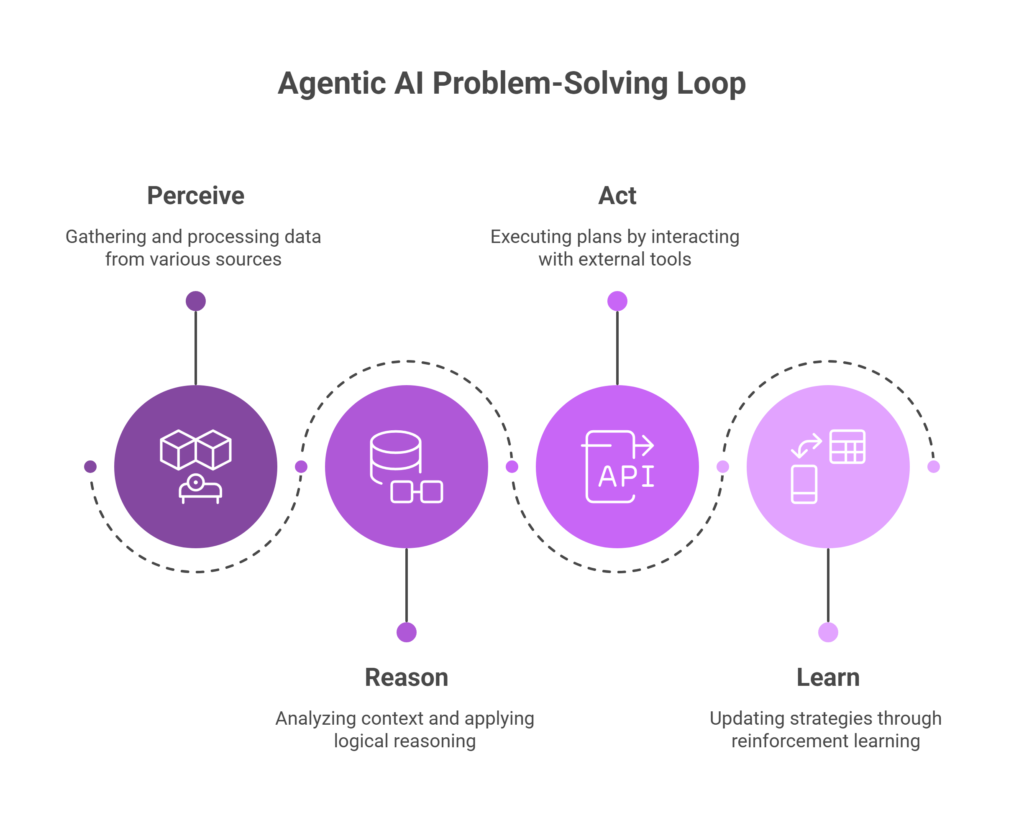

- Agentic AI differs fundamentally from chatbots: Unlike generative AI, agentic systems reason, make autonomous decisions, and act across workflows to achieve specific business outcomes.

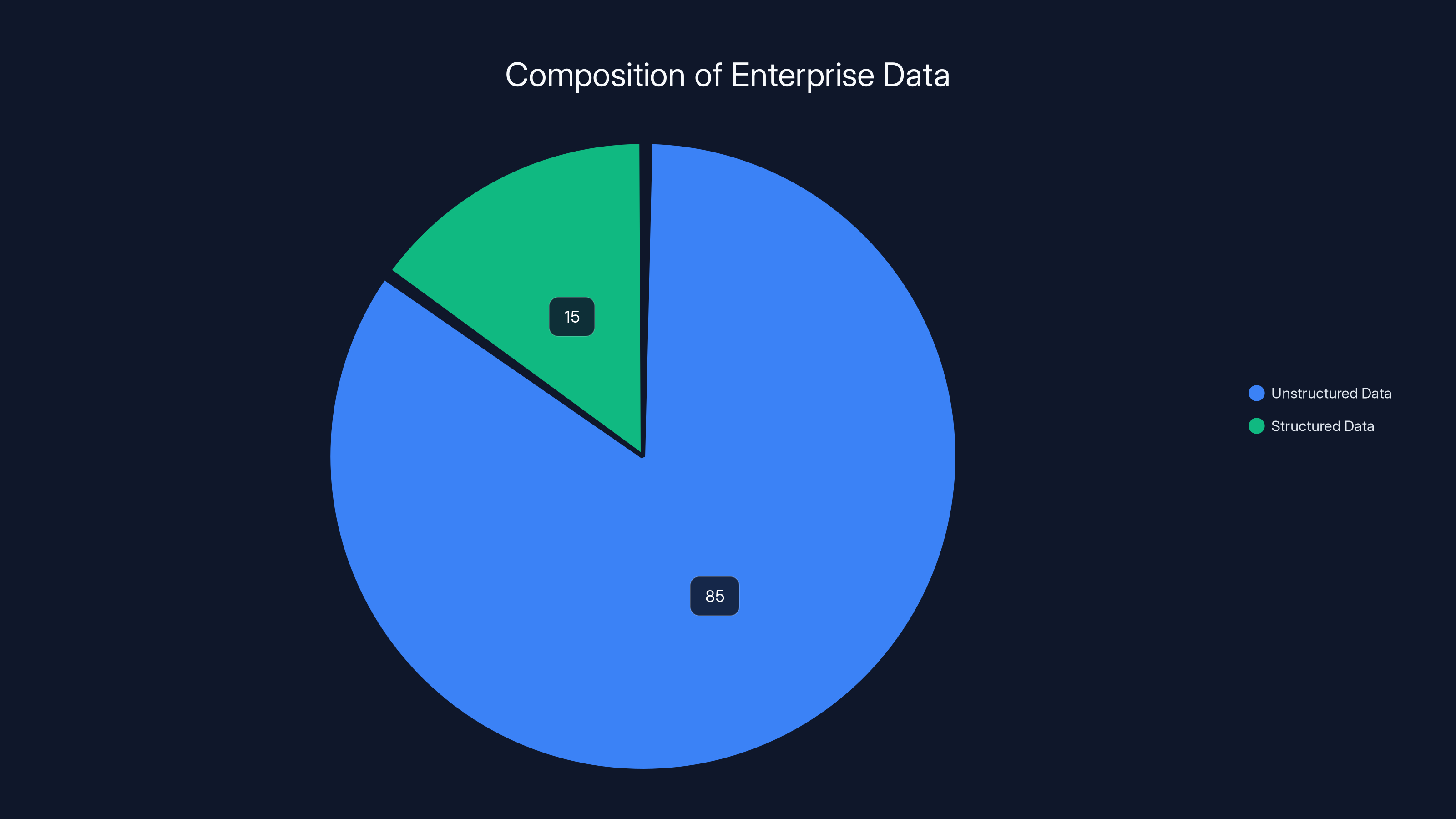

- 80-90% of enterprise data is unstructured: This creates a critical foundation problem that derails most pilots before they begin.

- Infrastructure and data governance precede autonomy: The biggest stalls happen when organizations attempt autonomous systems without unified data platforms.

- Human oversight remains essential: The most successful implementations use human-in-the-loop models, not fully autonomous systems.

- Business outcomes must drive technology choices: Pilots that start with clear process improvement goals succeed; those chasing technology trends fail.

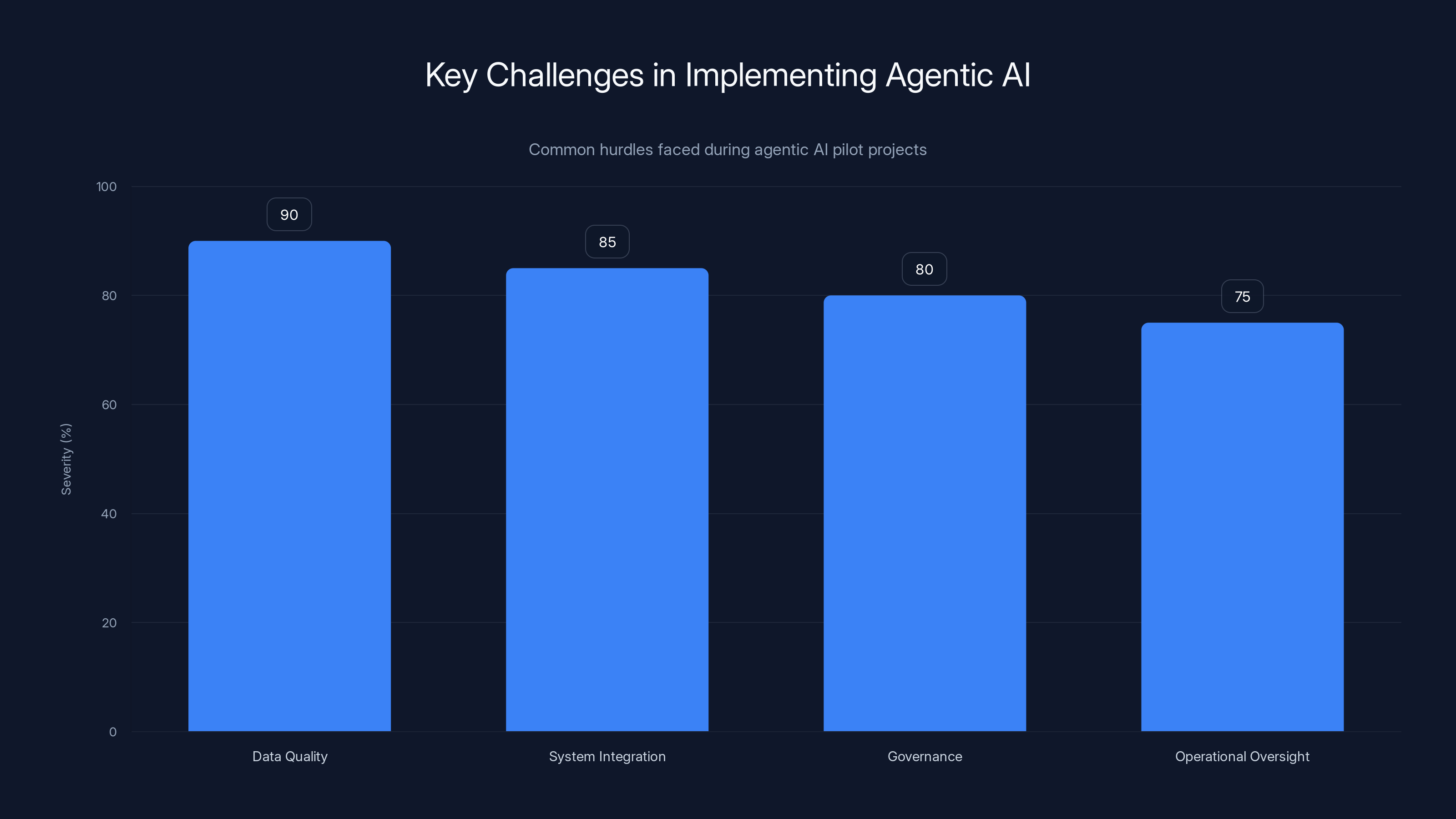

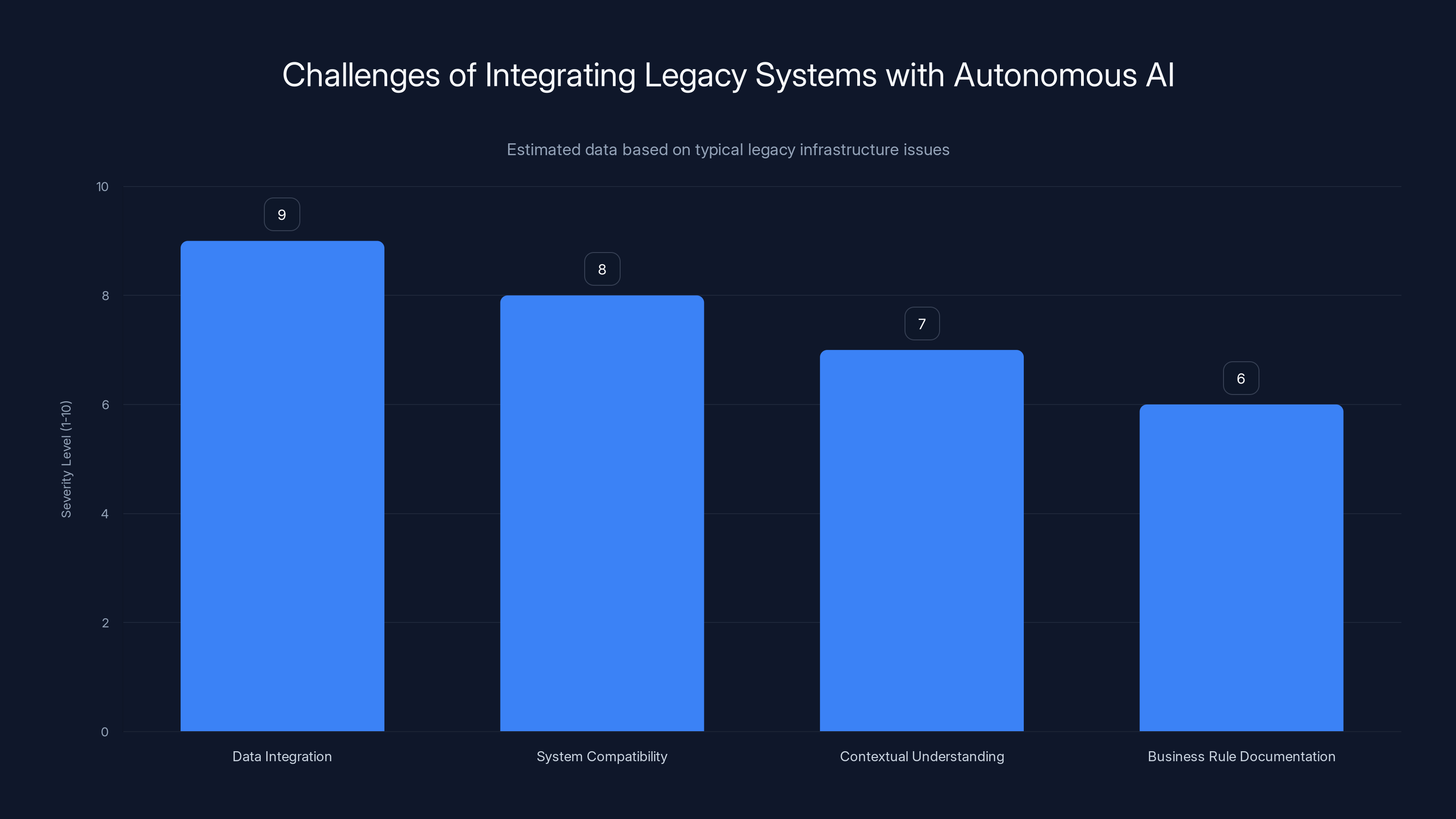

Data quality, system integration, and governance are critical challenges in agentic AI projects, with data quality being the most severe. Estimated data based on typical project feedback.

What Agentic AI Actually Is (And Why It's Different From Everything Else)

Let's clear something up first: agentic AI is not a chatbot. It's not a smarter version of ChatGPT that answers whatever you ask it. That's generative AI doing what it's designed for, and it's useful in specific scenarios. But agentic AI operates on a fundamentally different principle.

Agentic AI systems are autonomous agents that combine reasoning, decision-making, and action across connected workflows. Think of it as the difference between asking someone for advice versus hiring someone to execute on that advice while making their own decisions along the way.

Here's the practical difference: A conventional AI tool might analyze invoice images and extract data. An agentic AI could approve payments based on extracted data, flag compliance anomalies, update accounting records in real time, and notify relevant departments—all without human intervention. It's reasoning about the context, understanding the rules and thresholds, and taking action.

That leap from analysis to autonomous decision-making is where things get complicated. Because now you're asking the system to understand not just data, but context. Not just process steps, but business rules. Not just syntax, but meaning.

Why does this matter for understanding why pilots stall? Because enterprises are still building the organizational and technical infrastructure for systems that need far more than previous generations of software. Agentic AI requires clarity on three things that most organizations have never formally addressed: data governance, decision authority boundaries, and system integration depth.

When you're running a report generator, it doesn't matter much if your data lives in three different places. When you're building an autonomous agent that needs to make decisions affecting financial transactions or compliance, suddenly that fragmentation becomes a critical problem.

The organizations that understand this distinction upfront are the ones whose pilots don't stall. They're not trying to bolt agentic AI onto existing infrastructure like it's just another tool. They're rethinking what autonomy actually requires, and building from there.

An estimated 85% of enterprise data is unstructured, highlighting the challenge of managing and utilizing this data effectively. Estimated data.

The Unstructured Data Problem That Kills Half of All Pilots

Here's a statistic that should shock your finance team: 80 to 90% of all enterprise data is unstructured. That's emails, documents, PDFs, images, contracts, medical scans, handwritten notes, video recordings, and thousands of other non-tabular formats.

Your database is maybe 10% of your actual data. Everything else is scattered across file shares, content repositories, email inboxes, and legacy systems that nobody really understands anymore.

Now imagine telling an agentic AI system to make decisions that depend on understanding this unstructured mess. An AI agent in healthcare needs to pull patient histories (unstructured clinical notes), lab results (structured but fragmented), imaging data (visual), and medication records (structured but in different systems). If even one piece is missing, delayed, or inconsistent with the others, the agent's recommendations become unreliable.

This is where most pilots hit their first major wall. The data audit reveals that critical information the business assumed was centralized and clean is actually scattered, duplicated, inconsistent, and often outdated.

I watched one financial services company spend eight months on a pilot for autonomous document verification. The agent was working perfectly in development. In production, it consistently missed fraud patterns because its training data didn't include examples from one subsidiary's unusual document formats. The agent "learned" that certain formatting variations meant legitimate documents, when they actually indicated forgery patterns. The agent was reasoning with incomplete information, and nobody had caught it until real money was on the line.

The lesson was brutal: you can't separate agentic AI capability from data quality and completeness. It's not a technical limitation that engineering can solve with better algorithms. It's a fundamental constraint on what any autonomous system can reliably do.

So what does fixing this actually look like? It starts with what I call a "brutal data audit." Not the kind where IT people nod knowingly while generating reports. A real audit where you:

Step 1: Map every data source that touches the workflow you're trying to automate. Include every system, every repository, every email folder, every legacy database. Get specific about volume and frequency.

Step 2: Identify data gaps by asking operations teams what information they wish they had but currently can't access. These gaps often reveal critical context that systems are missing.

Step 3: Assess consistency rules across sources. If one system shows a customer name as "John Smith" and another shows "SMITH, J," that inconsistency will confuse agents. Map these variations and their frequency.

Step 4: Determine completeness percentages for critical fields. What percentage of records have addresses? Phone numbers? Complete data lineage? Where do gaps exist?

Step 5: Establish update frequencies for each source. Real-time data? Daily? Monthly? An agent that decides on outdated information is just doing offline decision-making with extra steps.
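To make Steps 4 and 5 concrete, here is a minimal sketch of what the measurement side of the audit might look like. Everything in it is an illustrative assumption: the `completeness` and `stale_sources` helpers, the field names, the sample records, and the seven-day freshness limit are hypothetical, not taken from any specific tool.

```python
from datetime import datetime, timedelta, timezone

def completeness(records, fields):
    """Return the percentage of records with a non-empty value for each field."""
    total = len(records)
    report = {}
    for field in fields:
        filled = sum(1 for r in records if r.get(field) not in (None, ""))
        report[field] = round(100.0 * filled / total, 1) if total else 0.0
    return report

def stale_sources(last_updated, max_age, now=None):
    """Return the names of sources whose last update is older than max_age."""
    now = now or datetime.now(timezone.utc)
    return sorted(name for name, ts in last_updated.items() if now - ts > max_age)

# Hypothetical sample data, for illustration only.
records = [
    {"name": "John Smith", "address": "1 Main St", "phone": ""},
    {"name": "SMITH, J", "address": None, "phone": "555-0100"},
    {"name": "Ana Diaz", "address": "9 Oak Ave", "phone": "555-0101"},
    {"name": "Lee Wong", "address": "", "phone": None},
]
report = completeness(records, ["name", "address", "phone"])

last_updated = {
    "erp": datetime(2024, 1, 9, tzinfo=timezone.utc),
    "crm": datetime(2023, 12, 1, tzinfo=timezone.utc),
}
stale = stale_sources(
    last_updated,
    max_age=timedelta(days=7),  # assumed freshness requirement
    now=datetime(2024, 1, 10, tzinfo=timezone.utc),
)
print(report, stale)
```

The point isn't the code itself. It's that completeness and freshness become numbers you can put in front of the business sponsor before anyone writes agent logic.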

Here's the thing that separates successful pilots from stalled ones: the successful companies run this audit, and act on it, before they build the agent. They invest in data consolidation, governance, and quality frameworks first. They don't try to build autonomy on top of chaos and hope the agent figures it out.

The companies with stalled pilots? They did the audit in month four, after the agent had already been built and deployed into a mess. They discovered inconsistencies the hard way—when the agent made decisions based on conflicting information.

Why Legacy Infrastructure Is Incompatible With Autonomous AI

Most enterprises run on siloed systems. You've got an ERP system from 1997 that handles core financial transactions. A CRM from 2008 that nobody really integrates with the ERP. A document management system from 2015 that stores contracts but doesn't talk to the ERP or CRM. And about forty-three other point solutions that someone bought because a department had a specific problem and nobody bothered to ask if it should connect to anything else.

This fragmentation is fine when humans are making decisions. A human can manually look something up in system A, check system B, apply judgment, and move forward. It takes time, but it works. Scaling this with more humans is expensive, but it's straightforward.

Agentic AI breaks in this environment. Here's why: autonomous decision-making requires context. When a human looks at a scenario and says "this is unusual, it needs review," they're pulling from mental models built over years of working with the entire system. They inherently understand which systems are authoritative, how to reconcile conflicts, and what questions to ask when something doesn't line up.

An agent doesn't have that context. It only knows what you've explicitly taught it. If you haven't explicitly defined which system is authoritative when there are conflicts, the agent will pick one arbitrarily or stall. If you haven't connected the systems, the agent can't see the full picture. If you haven't documented the business rules for applying judgment, the agent can't apply judgment.

I watched a government agency spend $3.2 million on an agentic AI system to process benefits applications. The application workflow involves pulling data from four legacy systems that were never designed to talk to each other. The agent needed to reason across all four systems, but they'd been built in completely different eras with different data models, different naming conventions, and different authoritative sources for things like address verification.

After six months, the pilot had delivered maybe 18% of the expected throughput improvement. The agent was spending most of its processing time resolving contradictions between systems. An application might list the applicant's address as one thing in system A and another in system B, with no clear rule for which to trust, so the agent would flag it for human review. The whole point of autonomous processing evaporated.

The fix required investing in modern integration infrastructure: an API layer and data mapping services that presented the four siloed systems as a single, coherent data source. It was a separate six-month project, but once it was done the agent was finally productive.

This is a pattern I see repeatedly. The infrastructure requirement becomes clear in month four or five of the pilot, at which point the project is already over budget and timeline expectations have shifted. Organizations have to choose: invest in infrastructure now and delay agent deployment, or accept that the agent will be constrained to scenarios where single-system context is sufficient.

The infrastructure modernization work breaks down into four categories:

1. Integration Layer: APIs and middleware that let agentic systems query multiple sources in real time and reconcile responses. This isn't just about connectivity; it's about presenting fragmented data as coherent context.

2. Data Mapping Services: Systems that understand how the same concept is represented across different databases. Customer ID in system A might be completely different from customer ID in system B, but you need the agent to know they refer to the same entity.

3. Content Platform Modernization: Many unstructured data sources live in outdated content management systems that can't be queried efficiently at scale. Modern cloud-native platforms that index and search unstructured content become essential.

4. API Standardization: Legacy systems weren't built to be queried programmatically at scale. Wrapping them with APIs that abstract the underlying complexity lets agents work with them without understanding every historical quirk.
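The data mapping idea in item 2 can be sketched in a few lines. This is a minimal illustration, assuming a hand-maintained crosswalk table; the `Crosswalk` class, the system names, and the IDs are all hypothetical, and a real mapping service would also need matching logic, versioning, and persistence.

```python
class Crosswalk:
    """Maps (system, local_id) pairs to one canonical entity id."""

    def __init__(self):
        self._to_canonical = {}

    def link(self, canonical_id, system, local_id):
        """Record that local_id in system refers to canonical_id."""
        self._to_canonical[(system, local_id)] = canonical_id

    def resolve(self, system, local_id):
        """Return the canonical id, or None so the caller can escalate instead of guessing."""
        return self._to_canonical.get((system, local_id))

    def same_entity(self, a, b):
        """True only if both (system, local_id) pairs map to the same known entity."""
        ca, cb = self.resolve(*a), self.resolve(*b)
        return ca is not None and ca == cb

# Hypothetical identifiers, for illustration only.
xw = Crosswalk()
xw.link("cust-001", "erp", "000417")
xw.link("cust-001", "crm", "C-88231")

print(xw.same_entity(("erp", "000417"), ("crm", "C-88231")))
print(xw.resolve("dms", "unknown-id"))
```

Returning `None` for an unknown ID is a deliberate choice in this sketch: an agent that can't place a record should escalate, not infer a match.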

This isn't sexy infrastructure. Nobody gets excited about discussing API standards at the board meeting. But it's what actually determines whether your agentic AI works in production or becomes an elaborate paperweight.

The chart compares performance scores across five key metric categories before and after AI implementation. Notably, quality and efficiency metrics show the most significant improvement, highlighting the AI's impact on reducing errors and processing time. (Estimated data)

Data Governance: The Unsexy Foundation That Makes Everything Work

Data governance might be the least exciting thing to discuss about agentic AI, and yet it's the most critical. Here's why it matters so much: agentic systems are only as good as the rules they follow.

When you're building an autonomous agent that will approve a payment, deny a loan application, schedule medical procedures, or flag compliance violations, you're essentially codifying decision authority. The rules need to be clear, documented, auditable, and maintainable.

I spent an afternoon last year with the CTO of a mid-size healthcare organization who had built what should have been a textbook agentic AI implementation. They had good data, modern infrastructure, and a clear business problem: emergency room triage was taking too long. They built an agent that could process incoming patient data, assess urgency, and route to appropriate departments.

It worked flawlessly for six weeks. Then the hospital had a single patient outcome where the agent's risk assessment turned out to have missed something important. The patient sued, the legal team got involved, and suddenly they realized something critical: nobody had formally documented what decision rules the agent was using.

The machine learning model was effectively a black box. The team knew it worked on test data, but they couldn't explain the specific combination of factors that led to a particular triage decision. They couldn't prove to a court that the rules complied with medical standards. They couldn't trace a specific decision back to the underlying logic.

The entire project got shut down for six months while lawyers and compliance officers forced a complete documentation of the decision logic, validation rules, and audit trails.

Data governance is what prevents this. It's the structure that says: this is how decisions get made, this is who approves changes to rules, this is how we audit what the system did, and this is how we maintain compliance.

Core data governance requirements for agentic AI include:

1. Decision Authority Mapping: Explicitly define who can approve what decisions, what authority levels are required for different scenarios, and what escalation procedures exist. This isn't optional when autonomy is involved.

2. Rule Documentation: Every business rule the agent uses must be documented in human-readable form, with version history, approval status, and change logs. This is non-negotiable for compliance.

3. Audit Trail Requirements: Systems must capture what data the agent considered, what rules it applied, and what decision it made. This becomes critical when decisions affect people or money.

4. Exception Handling Protocols: What happens when the agent encounters a scenario it doesn't have rules for? How does it escalate? Who gets notified? This prevents agents from silently failing or making decisions outside their authority.

5. Bias and Fairness Testing: Formal processes for evaluating whether agent decisions discriminate against protected classes. This needs to be built in from the start, not bolted on later.

6. Regular Revalidation: Business rules change. Markets change. The rules that made sense last year might not make sense today. Governance includes regular reviews and revalidation.
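The audit trail requirement in item 3 is easier to reason about with a concrete record shape in mind. Below is a minimal sketch, assuming each decision is written as one JSON line; the `DecisionRecord` fields, the `record_decision` helper, and the rule identifier format are hypothetical choices for illustration, not a compliance standard.

```python
import json
from dataclasses import dataclass, asdict
from datetime import datetime, timezone
from typing import Optional

@dataclass(frozen=True)
class DecisionRecord:
    decision_id: str
    inputs: dict                 # the data the agent considered
    rules_applied: list          # versioned rule ids, e.g. "PAY-LIMIT@v3" (assumed format)
    outcome: str                 # the decision the agent made
    escalated_to: Optional[str]  # who was notified, if anyone
    decided_at: str              # ISO 8601 timestamp

def record_decision(decision_id, inputs, rules_applied, outcome,
                    escalated_to=None, now=None):
    """Serialize one decision as a JSON line suitable for an append-only log."""
    now = now or datetime.now(timezone.utc)
    record = DecisionRecord(decision_id, inputs, list(rules_applied),
                            outcome, escalated_to, now.isoformat())
    return json.dumps(asdict(record), sort_keys=True)

# Hypothetical decision, for illustration only.
line = record_decision(
    decision_id="d-0001",
    inputs={"invoice_total": 1250.0, "vendor_id": "V-17"},
    rules_applied=["PAY-LIMIT@v3"],
    outcome="approved",
    now=datetime(2024, 1, 10, tzinfo=timezone.utc),
)
print(line)
```

Storing the rule version alongside the inputs is what lets you answer "why did the agent decide this?" months later, after the rules themselves have changed.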

The companies whose agentic AI pilots are actually running in production are the ones that treated governance as foundational, not as a box-checking exercise. They integrated it into the development process. They had legal, compliance, and operations people involved from the beginning, not brought in at the end.

The companies with stalled pilots usually did the opposite. They built the agent, got it working, and then hit governance issues that required significant rework.

The Human-in-the-Loop Model: Why Full Autonomy Fails

Here's a misconception that kills a lot of pilots: the idea that agentic AI means removing humans from the process.

Completely removing human oversight is rarely the right answer. In fact, the most successful agentic AI implementations I've studied are the ones that embrace a human-in-the-loop model where AI handles routine decisions autonomously, but edge cases, high-value decisions, or scenarios that don't fit the rules get escalated to humans.

This might sound like a limitation. In practice, it's what makes agentic AI actually valuable.

Consider a financial services example: an agentic system might autonomously approve a loan application for a borrower with excellent credit history, strong income verification, and employment stability. The rules are clear, the risk is low, and the decision is routine. Processing it autonomously saves time and cost.

But a borrower with a recent bankruptcy, unusual income sources, and limited employment history? That needs a human review. The agent can prepare the analysis, flag the risk factors, and present the case. A human credit officer makes the actual decision.

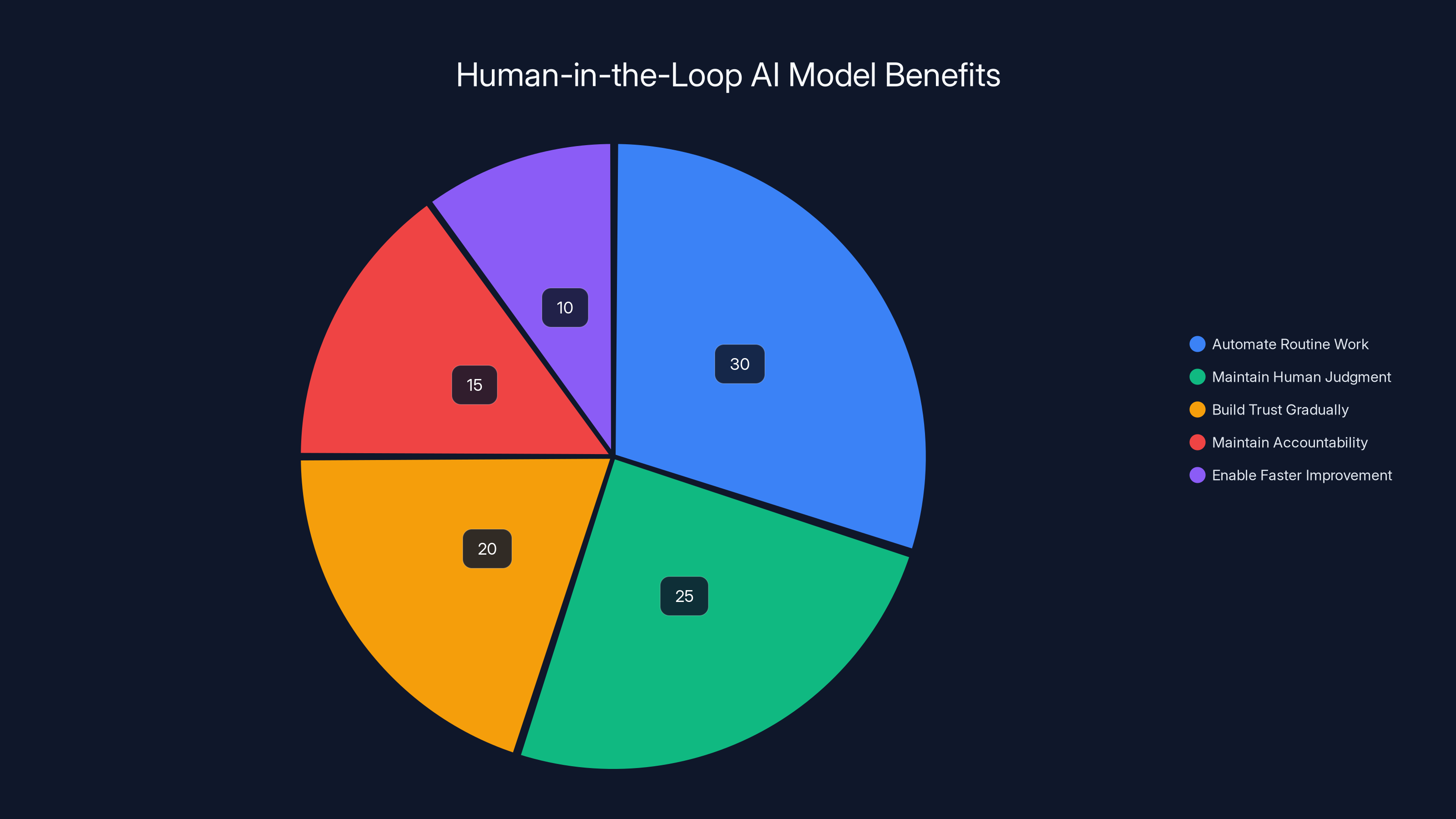

This hybrid approach is what actually scales. Full autonomy requires rules so bulletproof that they rarely exist in practice. Any business rule complex enough to matter is complex enough to have edge cases. Human-in-the-loop models let you:

1. Automate the high-volume routine work where rules are clear and risk is predictable. This is where you get efficiency gains.

2. Maintain human judgment on complex scenarios where rules are ambiguous or outcomes are high-stakes.

3. Build trust gradually by starting with oversight on everything and expanding autonomy as confidence grows.

4. Maintain accountability by ensuring that decisions affecting people or money still have human sign-off at critical points.

5. Enable faster improvement because humans can provide feedback on edge cases that let you refine rules over time.

I watched a manufacturing company build an agentic system for supply chain decisions. Initial plans were ambitious: fully autonomous ordering, supplier selection, and inventory management. But once they started testing, they realized that market disruptions, supplier issues, and demand fluctuations created edge cases constantly.

Instead of trying to encode all possible rules, they built a system where the agent could autonomously handle routine reorders from vetted suppliers but needed human approval for new suppliers, unusual order volumes, or changes to inventory thresholds. This actually reduced decision time because the agent was doing the analysis and presenting options, but humans weren't blocked on every decision.
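A routing rule like that one is small enough to sketch. This is a hedged illustration of the split described above, not the company's actual system: the `ReorderRequest` fields, the `route` function, and the 1.5x volume tolerance are assumptions made up for the example.

```python
from dataclasses import dataclass

@dataclass
class ReorderRequest:
    supplier_id: str
    quantity: int
    typical_quantity: int
    changes_inventory_threshold: bool = False

def route(request, vetted_suppliers, volume_tolerance=1.5):
    """Return ("auto_approve", []) for routine reorders, else ("human_approval", reasons)."""
    reasons = []
    if request.supplier_id not in vetted_suppliers:
        reasons.append("new supplier")
    # Assumed definition of "unusual": more than volume_tolerance times the typical order.
    if request.quantity > request.typical_quantity * volume_tolerance:
        reasons.append("unusual order volume")
    if request.changes_inventory_threshold:
        reasons.append("inventory threshold change")
    return ("human_approval", reasons) if reasons else ("auto_approve", [])

vetted = {"S1", "S2"}  # hypothetical vetted supplier ids
routine = route(ReorderRequest("S1", quantity=100, typical_quantity=100), vetted)
unusual = route(ReorderRequest("S9", quantity=400, typical_quantity=100), vetted)
print(routine)
print(unusual)
```

Note that the escalation carries its reasons with it. The human reviewer sees why the agent stopped, which is what keeps review fast.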

The shift in thinking is important: don't ask "how autonomous can we make this?" Ask "what's the right balance of automation and human judgment for this specific decision?" For some decisions, you might find that 80% autonomy is ideal. For others, 20% autonomy is perfect because human judgment matters too much.

Data integration and system compatibility are major challenges when integrating legacy systems with autonomous AI. Estimated data highlights the severity of these issues.

Why Most Pilots Fail: The Three Fatal Missteps

I've analyzed failures across different industries, company sizes, and AI use cases. The pattern is shockingly consistent. Most agentic AI pilots stall because of one, two, or all three of these missteps:

Misstep 1: Technology First, Business Problem Second

This is the most common error I see. Leadership gets excited about agentic AI as a concept, allocates budget, and brings in engineers who immediately ask: "What do we automate?" Instead of starting with a specific process pain point and understanding it deeply, they start with the technology and try to find problems it might solve.

This leads to pilots that are technically impressive but business-irrelevant. You end up automating tasks that save minimal time, don't reduce costs meaningfully, or don't actually solve customer problems. By month four, the business sponsor isn't excited anymore. Budget gets reallocated. The project quietly dies.

The successful pilots start differently. Someone identifies a specific process that's slow, expensive, or error-prone. They measure the current state. They define what improvement would look like. Then they ask: could agentic AI help here? If the answer is yes, the problem is well-understood, well-scoped, and defensible. Even if the project takes longer than expected, stakeholders remain committed because they understand the value.

Misstep 2: Underestimating Infrastructure Debt

Engineers are optimistic by nature. They look at a business process, estimate the agent logic, and think "three months to build." What they underestimate is infrastructure. If the data isn't accessible, the systems don't talk to each other, or the data quality is poor, you're not building an agent for three months. You're spending months on infrastructure before you even start building the agent.

The successful pilots budget for this. They do infrastructure and data assessment first. They might spend weeks understanding data quality, system connectivity, and governance requirements before the first line of agent code is written. This feels slow upfront, but it prevents the classic stall in month four when everyone realizes the data story is more complicated than expected.

Misstep 3: Treating Governance as an Afterthought

Most failing pilots build the agent, get it working on test data, and then suddenly realize: "Wait, how do we audit this? How do we prove compliance? What happens if the agent makes a mistake?" The answers require rework, documentation, testing, and potentially architectural changes.

The successful pilots involve compliance, legal, and operations from the beginning. These folks can be annoying because they ask questions and slow things down. But they prevent ending up with an agent that technically works but can't run in production because governance requirements aren't met.

The Operational Reality: What Actually Gets Deployed in Production

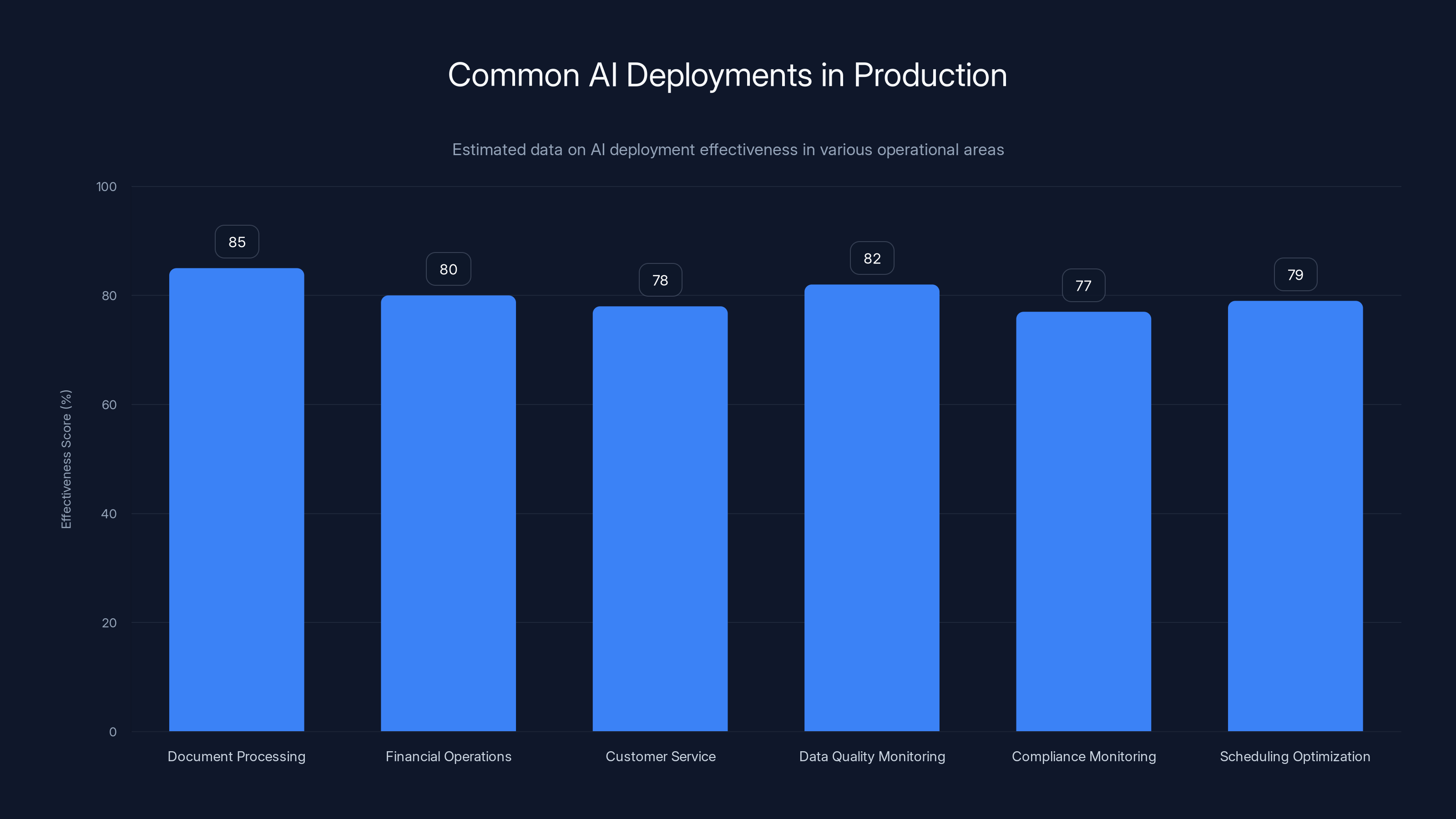

I want to be honest about something: most successful agentic AI deployments aren't doing anything particularly exotic. They're automating routine, high-volume, well-understood work that humans currently do manually.

They're not replacing junior analysts or consultants. They're replacing the repetitive parts of those jobs: the data gathering, the routine decision-making, the documentation, the system updates. The junior analyst now spends time on judgment calls and complex scenarios instead of copying data between spreadsheets.

Here's what's actually working in production right now:

1. Document Processing and Verification: Agents that can read documents, extract relevant information, verify completeness, and flag issues for review. This is high volume, relatively low complexity, and the rules are usually clear.

2. Routine Financial Operations: Expense categorization, invoice matching, payment processing for standard scenarios. Anything unusual gets escalated, but the routine stuff gets handled autonomously.

3. Customer Service Escalation: Agents that triage customer issues, gather context, and route to appropriate departments with complete background. This improves routing quality and reduces time to first action.

4. Data Quality Monitoring: Continuous agents that watch data streams, flag anomalies, and alert operations teams. This is less about decision-making and more about intelligent monitoring.

5. Compliance and Risk Monitoring: Agents that continuously check transactions, activities, and communications against policy and regulatory requirements. Flag violations for review.

6. Scheduling and Resource Optimization: Agents that handle calendar management, meeting scheduling, and resource allocation within defined constraints. Pure rule-based autonomy with human escalation for conflicts.

Notice a theme? Successful deployments are solving real business problems that have clear rules and high volume. They're not trying to solve ambiguous, judgment-heavy, low-volume decisions. Those need humans.

The failed pilots are often trying to automate complex judgment calls. "Build an agent that makes staffing decisions." "Create an agent that approves strategic business deals." These require more nuance than current agentic systems can reliably handle.

Estimated data shows that automating routine work is the largest benefit of the human-in-the-loop model, followed by maintaining human judgment in complex scenarios.

Building a Maturity Model: From Pilot to Scale

Here's what I've learned from watching dozens of pilot programs: the organizations that successfully move from pilots to scaled deployments don't try to automate everything at once. They build systematically using a maturity model.

Level 1: Prove the Concept (Months 1-3)

This is the pilot phase, but constrained. Pick one specific, well-understood workflow. Keep scope small. Your goal is to answer: "Does agentic AI actually solve our problem?" Not to prove the full vision, just to validate the core concept.

Success at this level means the agent can autonomously handle 70% of routine scenarios, escalates edge cases appropriately, and executes without critical errors over weeks of continuous operation.

Level 2: Build Governance and Audit (Months 3-6)

Once the concept works, invest in making it operationally sound. Build the audit trail requirements, document decision rules, establish governance processes, and get compliance sign-off. This feels like overhead, but it's actually a prerequisite for production scale.

Success here means you can explain how the agent made any specific decision, why it made that decision, what policies governed it, and who's accountable if something goes wrong.

Level 3: Expand to Production Scale (Months 6-12)

Now that the first workflow is working, proven, and governed, you can scale it. Deploy it to production with monitoring and alert systems. Set up escalation workflows and SLAs. Train the teams who'll manage it operationally.

Success means the system is processing production workload at expected efficiency levels, errors are being caught appropriately, and stakeholders are happy with outcomes.

Level 4: Multi-Workflow Coordination (Months 12+)

Once you have one working agent, the next frontier is networks of agents working across multiple workflows. One agent might handle customer inquiry triage while another manages follow-up scheduling. They share context and coordinate.

This is where the real transformational benefits emerge, but it's also where things get organizationally complex. You need the other three levels working perfectly before attempting this.

The reason this maturity model matters: it prevents the classic pilot overreach. You're not trying to do everything in the first sprint. You're building confidence and capabilities systematically.

Organizations that try to skip levels—"Let's automate five workflows simultaneously from the start"—are the ones that stall. They end up with half-finished projects, insufficient governance, and scalability problems that prevent actual production deployment.

Avoiding the Scope Creep Trap

Scope creep is the silent killer of agentic AI pilots. It starts innocently enough.

"While we're building the agent for invoice processing, we could also add purchase order matching."

"And then why not include vendor validation?"

"And then supplier risk assessment."

Six weeks in, your "build an invoice agent" project has expanded into a complete accounts payable transformation. You're over budget, behind schedule, and the agent is significantly more complex than initially planned.

I watched this happen at a healthcare organization. The initial pilot was supposed to automate patient record routing. A month in, someone asked "could it also validate insurance information?" Then someone else asked "could it check pre-authorization requirements?" Then "what about scheduling follow-ups?"

By month four, the project scope had tripled. The team was stressed, quality was suffering, and the executive sponsor was frustrated because nothing was delivering tangible value yet.

Scope control requires discipline and governance at a project level:

1. Define the MVP (Minimum Viable Product) clearly: Write down exactly what the agent will do. Get stakeholder sign-off. This becomes the scope baseline.

2. Establish a change control process: Any expansion to scope requires formal review, priority assessment, and timeline/budget adjustment. This isn't bureaucracy; it's the only thing preventing runaway scope.

3. Time-box the pilot phase: Set a fixed end date. At that date, you evaluate and decide to either move to production, extend the pilot with defined scope additions, or pivot. You don't just keep expanding indefinitely.

4. Separate pilot and production features: Features that make sense to validate in a pilot (complex error handling, advanced monitoring) are different from features you need in production. Don't confuse them.

5. Create a backlog for future phases: If someone has a great idea but it's not in the current scope, add it to the backlog for phase 2, 3, or 4. Don't kill great ideas, but don't let them derail the current phase.

AI deployments in production are most effective in document processing and data quality monitoring, with effectiveness scores above 80%. Estimated data.

Data Quality as a Competitive Advantage

We've talked about data quality as a foundation. Let me be more specific about why it matters and how to fix it.

Agentic AI is a forcing function. It makes data quality problems impossible to ignore. When humans are processing documents, they can compensate for inconsistency. They can infer that "Dr. Smith" and "DR SMITH" refer to the same person. They can figure out that when one system says "pending" and another says "in progress," those probably mean the same thing.

Agents can't compensate. They either have explicit rules for handling inconsistency, or they fail.
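Here is roughly what "explicit rules for handling inconsistency" can look like. It's a minimal sketch under stated assumptions: the `STATUS_SYNONYMS` table, the canonical value `"open"`, and the `UnmappedValueError` exception are hypothetical, and whether "pending" and "in progress" really mean the same thing is a decision the business has to confirm.

```python
import re

class UnmappedValueError(ValueError):
    """Raised when a value has no explicit rule, so the caller can escalate."""

# Assumed mapping: the business has confirmed these two statuses are equivalent.
STATUS_SYNONYMS = {"pending": "open", "in progress": "open"}

def normalize_name(raw):
    """Lowercase, drop punctuation, and collapse whitespace."""
    cleaned = re.sub(r"[^\w\s]", "", raw).lower()
    return " ".join(cleaned.split())

def normalize_status(raw):
    """Map a source-specific status to its canonical value, or fail loudly."""
    key = raw.strip().lower()
    if key not in STATUS_SYNONYMS:
        raise UnmappedValueError(f"no rule for status {raw!r}")
    return STATUS_SYNONYMS[key]

print(normalize_name("Dr. Smith") == normalize_name("DR SMITH"))
print(normalize_status("In Progress") == normalize_status("pending"))
```

The failure path matters as much as the happy path here: an unmapped value raises instead of passing through, so a gap in the rules surfaces as an escalation rather than a silent bad decision.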

This is actually valuable information. The organizations treating data quality work seriously—not as a side project, but as core infrastructure—are the ones whose agents are reliable in production.

Here's what data quality work actually involves:

Profiling: Understanding what data you actually have. Distribution of values, completeness percentages, data type consistency, outliers. This is foundational.

Cleaning: Correcting obvious errors. Standardizing format variations. Filling in critical missing values. This is manual and tedious but necessary.

Governance: Establishing rules for maintaining quality going forward. Who can change data? What approval is required? What audit trails exist? This prevents quality degradation over time.

Monitoring: Continuous checking of data quality metrics. Early warning systems when quality drops. This prevents slow degradation that goes unnoticed.
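As a rough illustration of the profiling and monitoring steps, the sketch below computes completeness and type consistency for one field and turns them into alerts. The function names and the 95% completeness threshold are assumptions, not a standard; real deployments would likely use a dedicated data quality tool.

```python
from collections import Counter
from typing import Any


def profile_field(records: list[dict[str, Any]], field: str) -> dict[str, Any]:
    """Report completeness, type mix, and most common values for one field.

    Assumes field values are simple scalars (strings, numbers).
    """
    values = [r.get(field) for r in records]
    present = [v for v in values if v not in (None, "")]
    return {
        "completeness": len(present) / len(values) if values else 0.0,
        "types": Counter(type(v).__name__ for v in present),
        "top_values": Counter(present).most_common(3),
    }


def quality_alerts(profile: dict[str, Any], min_completeness: float = 0.95) -> list[str]:
    """Turn a profile into human-readable warnings (threshold is illustrative)."""
    alerts = []
    if profile["completeness"] < min_completeness:
        alerts.append(f"completeness {profile['completeness']:.0%} is below target")
    if len(profile["types"]) > 1:
        alerts.append(f"mixed types found: {sorted(profile['types'])}")
    return alerts
```

Run on a schedule against each critical field, a check like this is one simple way to get the early warning described above before an agent starts acting on degraded data.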

The investment here is real. Data quality work for a major agentic AI deployment might be 20-30% of the total project effort. But it's what separates pilots that work from pilots that stall.

The Integration Challenge: Making Siloed Systems Act Like One

Most enterprise environments are collections of point solutions, legacy platforms, and custom-built systems that were never designed to work together. They work well in isolation but terribly in combination.

Agentic AI requires making them work as if they were a single system. From the agent's perspective, it needs to be able to query customer information, check inventory, verify compliance, and update records as if they're all part of one coherent system.

This integration work is what's most often underestimated.

The integration layers that typically need to be built:

API Abstraction: Many legacy systems don't expose APIs suitable for programmatic access at scale. You might need to build wrapper APIs that abstract the underlying complexity.

Data Mapping Services: Systems use different identifiers, terminology, and structures for the same concepts. You need services that map between them intelligently.

Real-time Sync Mechanisms: For the agent to make good decisions, data from different systems needs to be reasonably current. This often requires event-based sync mechanisms or scheduled sync services.

Conflict Resolution: When the same information exists in multiple systems (customer address, phone number, account status), there needs to be a clear resolution protocol. Which system is authoritative? How are conflicts detected and resolved?

Transactional Consistency: When the agent makes a decision that affects multiple systems (approve payment, update inventory, record transaction), those changes need to be coordinated. Partial failures aren't acceptable.
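The conflict resolution layer is the easiest of these to picture in code. Here is a minimal sketch, assuming a per-field authority order with "most recently updated" as the tie-breaker. The system names, the `AUTHORITY` table, and the `resolve` function are all hypothetical.

```python
from dataclasses import dataclass
from datetime import datetime


@dataclass(frozen=True)
class FieldValue:
    system: str
    value: str
    updated_at: datetime


# Hypothetical authority order per field: systems listed earlier win.
AUTHORITY = {
    "address": ["crm", "billing", "support_desk"],
    "account_status": ["billing", "crm"],
}


def resolve(field: str, candidates: list[FieldValue]) -> tuple[FieldValue, bool]:
    """Return the winning value and a flag saying whether systems disagreed."""
    if not candidates:
        raise ValueError(f"No values supplied for field {field!r}")
    order = AUTHORITY.get(field, [])

    def rank(candidate: FieldValue) -> tuple[int, float]:
        # Authoritative systems first (by list position), then newest first.
        position = order.index(candidate.system) if candidate.system in order else len(order)
        return (position, -candidate.updated_at.timestamp())

    winner = min(candidates, key=rank)
    conflict = len({c.value for c in candidates}) > 1
    return winner, conflict
```

Returning the conflict flag alongside the winner matters: the agent can proceed with the authoritative value while the disagreement is still logged for someone to clean up at the source.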

Building this infrastructure is less glamorous than building the agent itself, but it's absolutely essential. The pilots that succeed have teams that understand integration requirements upfront. The pilots that stall usually discover these requirements partway through.
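For the transactional consistency requirement, one common approach when the systems involved don't share a transaction is a saga-style sequence with compensating actions. That technique is my choice for illustration, not the only option, and the sketch below is deliberately simplified: `run_with_compensation` is a hypothetical helper, and it assumes every undo step succeeds.

```python
from typing import Callable

# Each step is (name, action, compensation). Compensation undoes the action.
Step = tuple[str, Callable[[], None], Callable[[], None]]


def run_with_compensation(steps: list[Step]) -> tuple[bool, list[str]]:
    """Run steps in order; on failure, undo completed steps in reverse order.

    Returns (succeeded, log). A production version would also need
    idempotent steps and a durable log, which this sketch omits.
    """
    completed: list[Step] = []
    log: list[str] = []
    for step in steps:
        name, action, _ = step
        try:
            action()
        except Exception:
            log.append(f"failed:{name}")
            for done_name, _, undo in reversed(completed):
                undo()
                log.append(f"undone:{done_name}")
            return False, log
        completed.append(step)
        log.append(f"done:{name}")
    return True, log
```

With steps for "approve payment", "update inventory", and "record transaction", a failure in the third step would reverse the first two, so the systems never stay in a half-updated state.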

Measuring Success: Metrics That Actually Matter

Here's something I see constantly: organizations measure the wrong things when evaluating agentic AI pilots.

Wrong metrics: "The agent processed X documents." "It made Y decisions." "It saved Z hours." These tell you activity, not value.

Right metrics: "We reduced processing time from 3 days to 8 hours." "We reduced error rate from 3.2% to 0.4%." "We freed up two FTEs to work on higher-value tasks." "We improved compliance audit scores." These tell you actual business impact.

The distinction matters because it affects how you interpret pilot results. A pilot might look successful from an activity perspective (lots of decisions made) while being unsuccessful from a business perspective (minimal cost savings, no error reduction, no compliance improvement).

The metrics framework I recommend:

1. Efficiency Metrics: Time taken for a complete process cycle (before and after). Labor hours required per unit processed. Cost per transaction. These show whether you're actually saving time and money.

2. Quality Metrics: Error rate or accuracy on routine decisions. Escalation rate (percentage of decisions requiring human review). Compliance violations. These show whether the agent is making good decisions.

3. Operational Metrics: System reliability and uptime. Latency of decisions. Throughput improvements. These show whether it's operationally viable.

4. Adoption Metrics: Percentage of eligible work actually being processed by the agent versus manual. User satisfaction from teams using the system. These show whether it's actually being used.

5. Financial Metrics: Total cost of ownership for the solution. Return on investment in terms of hard savings. Cost per unit of output. These show the business case.

You should measure all five categories. If any one is weak, the solution is weak. A system that's fast but inaccurate isn't valuable. A system that's accurate but unreliable isn't viable. A system that's efficient but that nobody actually uses is just expensive infrastructure.
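The before-and-after framing is simple arithmetic, and it helps to write it down. The sketch below uses the example figures from earlier in this section (3 days, or 72 hours, down to 8 hours; a 3.2% error rate down to 0.4%). The category scores passed to `weakest_category` are assumed to be on a 0-to-1 scale that you would define yourself; both function names are hypothetical.

```python
def percent_reduction(before: float, after: float) -> float:
    """Percentage drop from a baseline value to a post-deployment value."""
    if before <= 0:
        raise ValueError("baseline must be positive")
    return (before - after) / before * 100


def weakest_category(scores: dict[str, float]) -> tuple[str, float]:
    """Return the lowest-scoring category, since the weakest one limits the solution."""
    if not scores:
        raise ValueError("no category scores supplied")
    name = min(scores, key=lambda category: scores[category])
    return name, scores[name]


# Figures from the examples above: 72 hours -> 8 hours, 3.2% -> 0.4% errors.
cycle_time_gain = percent_reduction(72, 8)      # roughly 89%
error_rate_gain = percent_reduction(3.2, 0.4)   # roughly 87.5%
```

Reporting the weakest category rather than an average is a deliberate choice here: averaging would let strong efficiency numbers hide poor adoption, which is exactly the failure mode described above.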

The Role of Change Management: Why Technology Alone Fails

Here's something that catches a lot of organizations off guard: agentic AI isn't just a technology implementation. It's an organizational change.

When you introduce an autonomous agent into a workflow, you're fundamentally changing how people work. Tasks that humans used to do are now handled by the agent. That's unsettling for some people. There are genuine concerns about job security, changing roles, and the new skills required.

The pilots that stall often don't account for this. The technology team builds a perfect agent, gets it working, and then is surprised when business users resist using it.

The successful implementations treat change management as seriously as technology work:

Clear Communication: People need to understand what the agent does, why it's being implemented, and how it affects their roles. Transparency prevents rumors and resistance.

Skills Transition: If people's roles are changing, they need training for new responsibilities. Maybe they're moving from data entry to exception handling and judgment calls. They need preparation for that.

Change Champions: Identify influential people in the organization who embrace the change. Let them help others understand the benefits and drive adoption.

Feedback Loops: Ask the teams actually using the system what's working, what's not, and what needs adjustment. Implement their suggestions where reasonable. This builds buy-in.

Gradual Adoption: Rather than switching from 100% manual to 100% agent overnight, ramp adoption gradually. This builds confidence and allows adjustment.
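On the technical side, gradual adoption is often implemented as a percentage rollout. Here is one possible sketch, assuming each work item has a stable string ID. The `routed_to_agent` function is hypothetical, and hashing the ID is just one way to get a stable assignment.

```python
import hashlib


def routed_to_agent(work_item_id: str, rollout_percent: int) -> bool:
    """Deterministically decide whether the agent handles this work item.

    The same ID always lands in the same bucket, so raising the
    percentage only adds items to the agent's share; none move back.
    """
    if not 0 <= rollout_percent <= 100:
        raise ValueError("rollout_percent must be between 0 and 100")
    digest = hashlib.sha256(work_item_id.encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:8], "big") % 100
    return bucket < rollout_percent
```

Starting at a low percentage and raising it as confidence grows gives teams time to compare agent output against manual output on the same kind of work.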

Organizations that treat this seriously see smooth adoption and strong results. Organizations that treat it as "just deploy the technology and people will figure it out" struggle with adoption and see higher failure rates.

Future Trends: Where Agentic AI Is Going

The current generation of agentic AI systems is impressive in narrow domains but limited in broader applicability. What's coming next?

Multi-Agent Networks: The next breakthrough is agents that coordinate with each other. One agent handles customer inquiry triage, another manages follow-up scheduling, another tracks satisfaction. These agents share context and coordinate their actions. This enables more sophisticated business process transformation.

Learned Adaptation: Right now, agents follow rules you explicitly define. The next generation will learn from their experiences, gradually improving decision-making as they process more examples. This requires better feedback mechanisms and governance frameworks that allow safe learning.

Cross-Domain Reasoning: Current agents are typically domain-specific (this agent handles insurance claims, that agent handles loan approvals). The evolution is toward agents that understand multiple domains and can reason across them.

Predictive Proactivity: Current agents are reactive (something happens, they respond). The evolution is toward predictive agents that anticipate problems before they occur and take preventive action.

Distributed Autonomy: Right now, autonomous decision-making is centralized in the agent system. The evolution is toward pushing autonomy to the edge, where local systems make local decisions and coordinate upward.

These evolutions are coming, but they're still years out for most enterprises. Right now, the opportunity is in executing well with current agentic capabilities.

Lessons From Companies Getting It Right

I've had the privilege of talking to organizations that have moved beyond pilots and into productive agentic AI deployment. Here's what they're doing differently:

Starting Small, Thinking Big: They pick one specific workflow, prove the concept thoroughly, then expand methodically. They're not trying to transform the entire enterprise in year one.

Data as Infrastructure: They treat data quality, governance, and integration as foundational work, not optional nice-to-haves. They budget for it. They schedule it before agent development.

Business Sponsorship: They have strong executive support from the business, not just IT. The sponsor understands the problem being solved and cares about the outcome.

Governance Built In: They involve compliance, legal, and operations from the start. They don't see governance as overhead; they see it as prerequisite for production systems.

Human-in-the-Loop: They're not trying to remove humans from workflows. They're automating routine work and freeing humans for judgment and complexity.

Measurement Discipline: They establish metrics before deployment and measure actual results. They track not just activity but business impact.

Patience: They understand that agentic AI deployment is a multi-year journey, not a quick fix. They're building capabilities and learning continuously.

These aren't revolutionary practices. They're fundamentals of good technology implementation applied with clarity and discipline. The organizations doing this successfully aren't necessarily the most sophisticated technically. They're the ones being most systematic and realistic about requirements.

Your Action Plan: From Understanding to Implementation

If you're evaluating agentic AI for your organization, here's what to do next:

Week 1-2: Problem Definition

Identify the specific process you're considering automating. Get specific about pain points. Measure the current state: how long does it take, how many errors occur, how much does it cost? Define what success looks like if you improved this process.

Week 3-4: Data Assessment

Do a thorough audit of the data sources required for this workflow. Where does the data live? How complete is it? How consistent is it? Get honest assessments from the people who currently do this work.

Week 5-6: Infrastructure Analysis

Map the systems involved. Understand how they currently connect, or don't connect. Identify integration work that would be required. Talk to infrastructure and integration teams about realistic timelines.

Week 7-8: Governance Baseline

Involve compliance, legal, and operations. Ask what decision authority frameworks, audit requirements, and risk controls are necessary for autonomous decisions in this domain. Understand the constraints.

Week 9-10: Pilot Scoping

Based on all the above, define a focused pilot: narrow problem, clear success criteria, defined timeline and budget, committed stakeholders. Avoid scope creep by writing this down and getting formal approval.

Week 11+: Execution

Build and deploy the pilot with realistic expectations. Treat it as a learning exercise first, a production system second. Establish feedback loops. Plan for iteration.

If you rush any of these steps, you're setting up for the classic pilot stall. If you work through them systematically, you're building a foundation for actual success.

FAQ

What is agentic AI, and how is it different from generative AI?

Agentic AI systems autonomously reason, make decisions, and take action across workflows to achieve specific business outcomes, while generative AI typically responds to prompts by generating text, images, or code. Think of it as the difference between asking someone for advice (generative) and hiring someone to execute on that advice while making their own judgment calls (agentic). Agentic AI requires far more foundational work around data, infrastructure, and governance because the stakes are higher when systems are making autonomous decisions.

Why do most agentic AI pilots stall?

Pilots typically stall because organizations move too fast without addressing foundational requirements: clean, unified data infrastructure, clear governance frameworks, and proper system integration. Many companies treat agentic AI as a bolt-on technology upgrade rather than as a change that requires rethinking data architecture, decision authority, and operational oversight. Poor data quality, fragmented systems, and incomplete governance usually surface in month three or four, derailing projects that were already behind schedule.

How important is data quality to agentic AI success?

Data quality is absolutely critical. With 80-90% of enterprise data being unstructured and fragmented across systems, most agents operate with incomplete or inconsistent information. If an agent's decision-making depends on data that's scattered across multiple systems with different formats and update frequencies, it will make flawed decisions. The companies succeeding with agentic AI invest heavily in data audits, quality remediation, and governance before building their agents.

What role should humans play in agentic AI systems?

The most successful implementations use a human-in-the-loop model where agents handle routine, well-understood decisions autonomously while humans review edge cases, high-value decisions, and scenarios that don't fit established rules. This approach maintains accountability, builds trust, and enables better outcomes than either full automation or complete human processing. It's not a limitation; it's actually what makes agentic AI practical and defensible in most business contexts.
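A human-in-the-loop policy usually comes down to a small routing function. The sketch below is one way to express it; the `Decision` fields, the 10,000 amount limit, and the 0.9 confidence floor are illustrative assumptions that each organization would set with its risk and compliance teams.

```python
from dataclasses import dataclass
from enum import Enum


class Route(Enum):
    AUTO = "auto"
    HUMAN_REVIEW = "human_review"


@dataclass(frozen=True)
class Decision:
    amount: float        # monetary value at stake
    confidence: float    # agent's confidence, 0.0 to 1.0
    matched_rule: bool   # whether an established business rule applied


def route(
    decision: Decision,
    max_auto_amount: float = 10_000.0,
    min_confidence: float = 0.9,
) -> tuple[Route, str]:
    """Send routine decisions to the agent and everything else to a person."""
    if not decision.matched_rule:
        return Route.HUMAN_REVIEW, "no established rule applies"
    if decision.amount > max_auto_amount:
        return Route.HUMAN_REVIEW, "high-value decision"
    if decision.confidence < min_confidence:
        return Route.HUMAN_REVIEW, "low confidence"
    return Route.AUTO, "routine decision"
```

Returning a reason with every route keeps the escalation explainable, which helps both the reviewers and any later audit.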

What kind of infrastructure work is required before deploying agentic AI?

Typical infrastructure work includes building or modernizing integration layers (APIs and middleware), establishing data mapping services that unify information across systems, modernizing content management platforms for efficient unstructured data access, and standardizing APIs so legacy systems can be queried programmatically. This work is often underestimated and is a primary reason pilots stall. Organizations should budget 20-30% of project effort for infrastructure and data foundation work.

How long does an agentic AI pilot typically take?

A well-scoped pilot with good foundational work should take 3-6 months to reach a "production-ready" state. This assumes 1-2 months of upfront data and infrastructure assessment, 1-2 months of development, and 1-2 months of hardening, governance, and testing. Organizations that try to complete pilots in 4-8 weeks typically either cut corners on governance, which risks problems in production, or underestimate complexity and end up extending timelines.

What metrics should we use to evaluate agentic AI success?

Successful evaluations track five categories: efficiency metrics (time and cost per transaction), quality metrics (error rates and escalation rates), operational metrics (system reliability and latency), adoption metrics (actual usage and user satisfaction), and financial metrics (ROI and total cost of ownership). Measuring only one category (like volume of decisions made) can give a misleading picture of whether the system is actually valuable.

How do we ensure governance and compliance for autonomous decisions?

Governing autonomous systems requires involving legal, compliance, and operations teams from the project start, documenting all business rules explicitly with version control, establishing audit trails that capture what data drove each decision, defining escalation procedures for edge cases, implementing regular testing for bias and fairness, and scheduling periodic review and revalidation of rules. Treating governance as an afterthought leads to significant rework and is a major source of project delays.
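To show what "an audit trail that captures what data drove each decision" could look like, here is a minimal sketch of an audit record. The `AuditRecord` class and its field names are hypothetical; real requirements would come from your compliance and legal teams.

```python
import hashlib
import json
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone
from typing import Any


@dataclass(frozen=True)
class AuditRecord:
    decision_id: str
    rule_version: str        # version of the business rules that were applied
    inputs: dict[str, Any]   # the data the agent used for this decision
    outcome: str
    escalated: bool
    recorded_at: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat()
    )

    def to_json(self) -> str:
        """Serialize with sorted keys so identical records produce identical text."""
        return json.dumps(asdict(self), sort_keys=True)

    def fingerprint(self) -> str:
        """SHA-256 of the serialized record, usable for later tamper checks."""
        return hashlib.sha256(self.to_json().encode("utf-8")).hexdigest()
```

Storing the rule version next to the inputs is the important part: when a rule changes later, you can still explain why an older decision came out the way it did.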

What should be in scope for an agentic AI pilot?

Successful pilots focus on a single, well-understood workflow with clear rules, high volume of repetitive decisions, and defined success metrics. Good starting points include document processing, routine financial operations, customer service triage, and compliance monitoring. Avoid trying to automate complex judgment calls, low-volume strategic decisions, or ambiguous business rules in a pilot phase. Start narrow and expand once you've proven the concept.

How do we avoid scope creep during an agentic AI pilot?

Establish a clear MVP (Minimum Viable Product) definition with stakeholder sign-off before starting, implement a change control process requiring formal review and timeline adjustment for any scope expansion, set a fixed pilot timeline with a defined end date and go/no-go decision, maintain a separate backlog for features that extend beyond the pilot scope, and separate pilot features (needed for learning) from production features (needed for operations). This discipline prevents the classic expansion that derails timelines.

Key Takeaways: What Actually Drives Agentic AI Success

Let me be direct about what I've learned from studying dozens of pilot programs, both successful and stalled:

Agentic AI isn't failing because the technology is immature. It's failing because organizations are treating it as a software deployment problem when it's actually a data and organization problem dressed up in AI clothing.

The companies whose agentic AI pilots are delivering value are doing three things differently:

First, they're starting with a specific, well-understood business problem. Not "implement agentic AI," but "reduce payment processing time from three days to eight hours." This clarity prevents technology for technology's sake.

Second, they're investing in data foundations before building agents. They're doing unglamorous work: data audits, quality remediation, governance frameworks, system integration. This is the infrastructure that actually enables autonomy.

Third, they're realistic about autonomy. They're not trying to remove humans from workflows. They're automating routine parts of workflows and freeing humans for judgment and complexity. They're building human-in-the-loop systems that scale effectively.

If your organization is considering agentic AI, don't start with an RFP for AI vendors. Start with a clear problem definition, a thorough data assessment, and an honest conversation about what infrastructure and governance actually need to happen before autonomous decisions are operationally sound.

The organizations that do this work upfront move from pilots to productive deployments. The organizations that skip it are the ones stalling in month four.

The future of agentic AI isn't about better algorithms. It's about better fundamentals: cleaner data, clearer governance, more thoughtful integration, and more realistic expectations about what autonomy actually requires.

Get those right, and agentic AI delivers genuine value. Rush them, and you get an expensive proof of concept that never quite goes live.

The choice is yours.
