The $4 Billion Club: How AI Chip Startups Are Redefining Silicon Valley

Something strange is happening in Silicon Valley. Startups that didn't exist six months ago are suddenly valued in the billions. We're talking about AI chip design companies raising

This isn't hype. This is capital allocation at scale, and it tells us something fundamental about where the AI industry thinks the real bottleneck exists. Forget software. Forget applications. The constraint right now is silicon. The chips that power AI models. And a handful of researchers who figured out how to automate chip design are suddenly the most valuable people in technology.

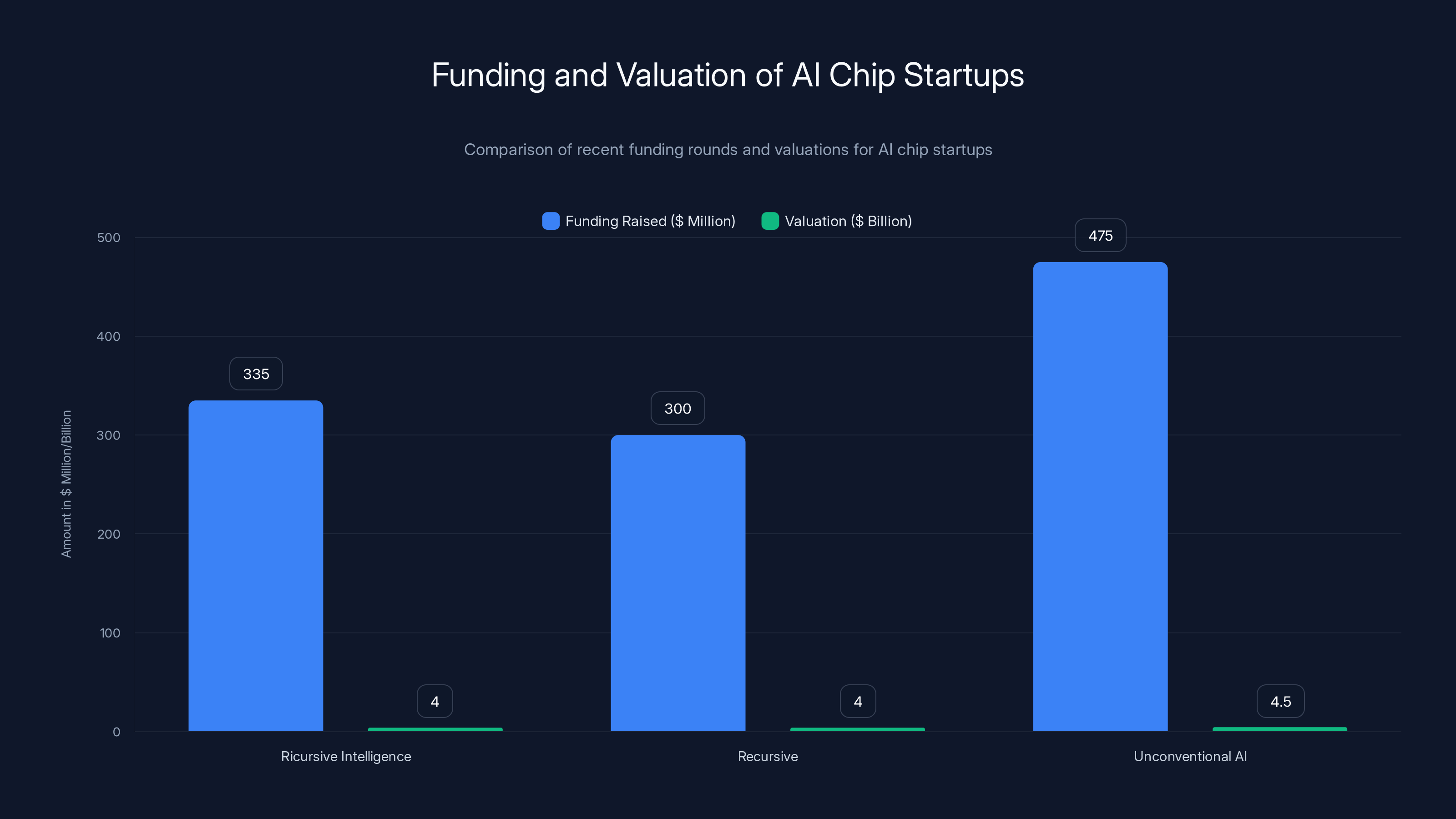

Ricursive Intelligence just raised

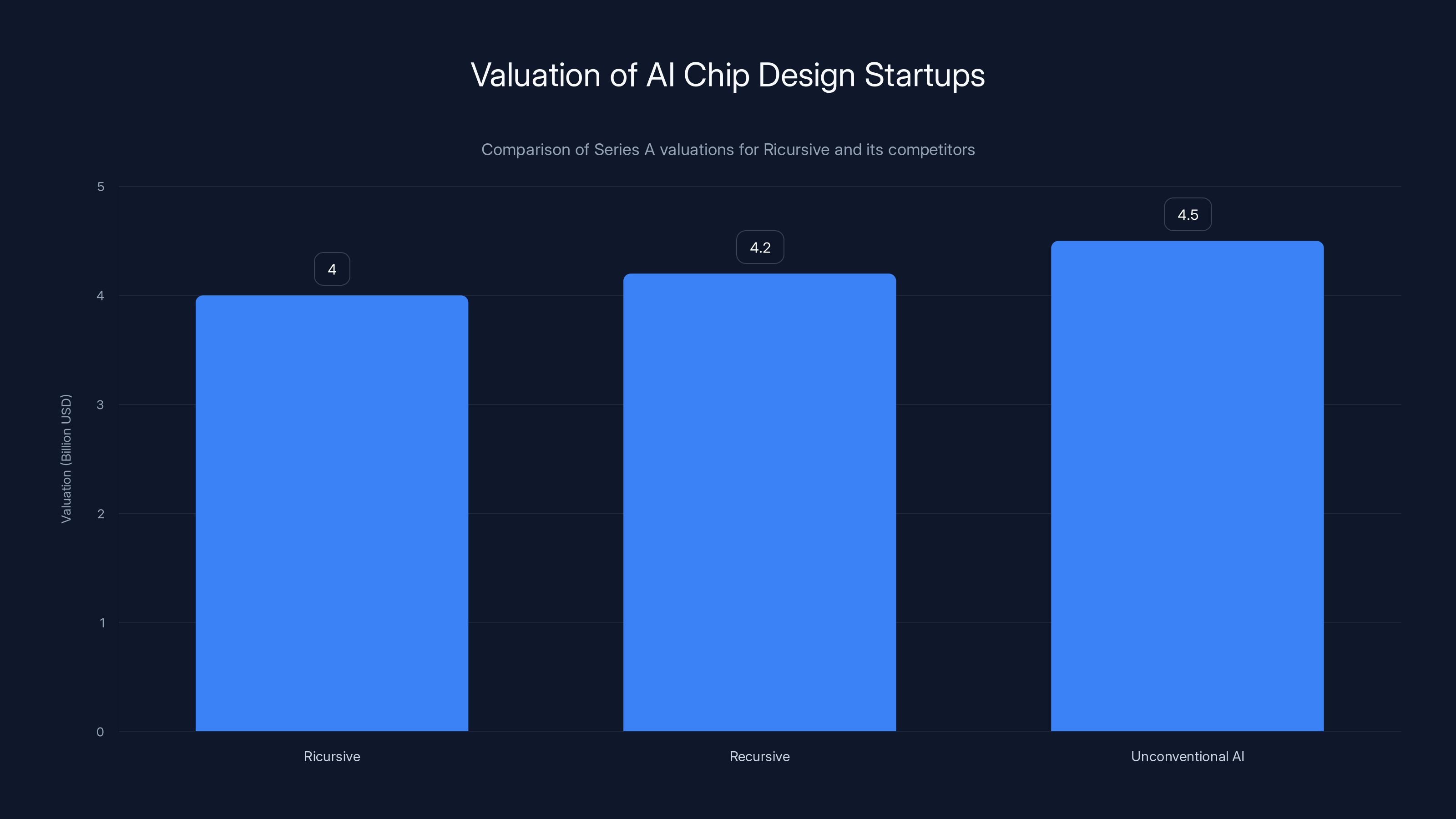

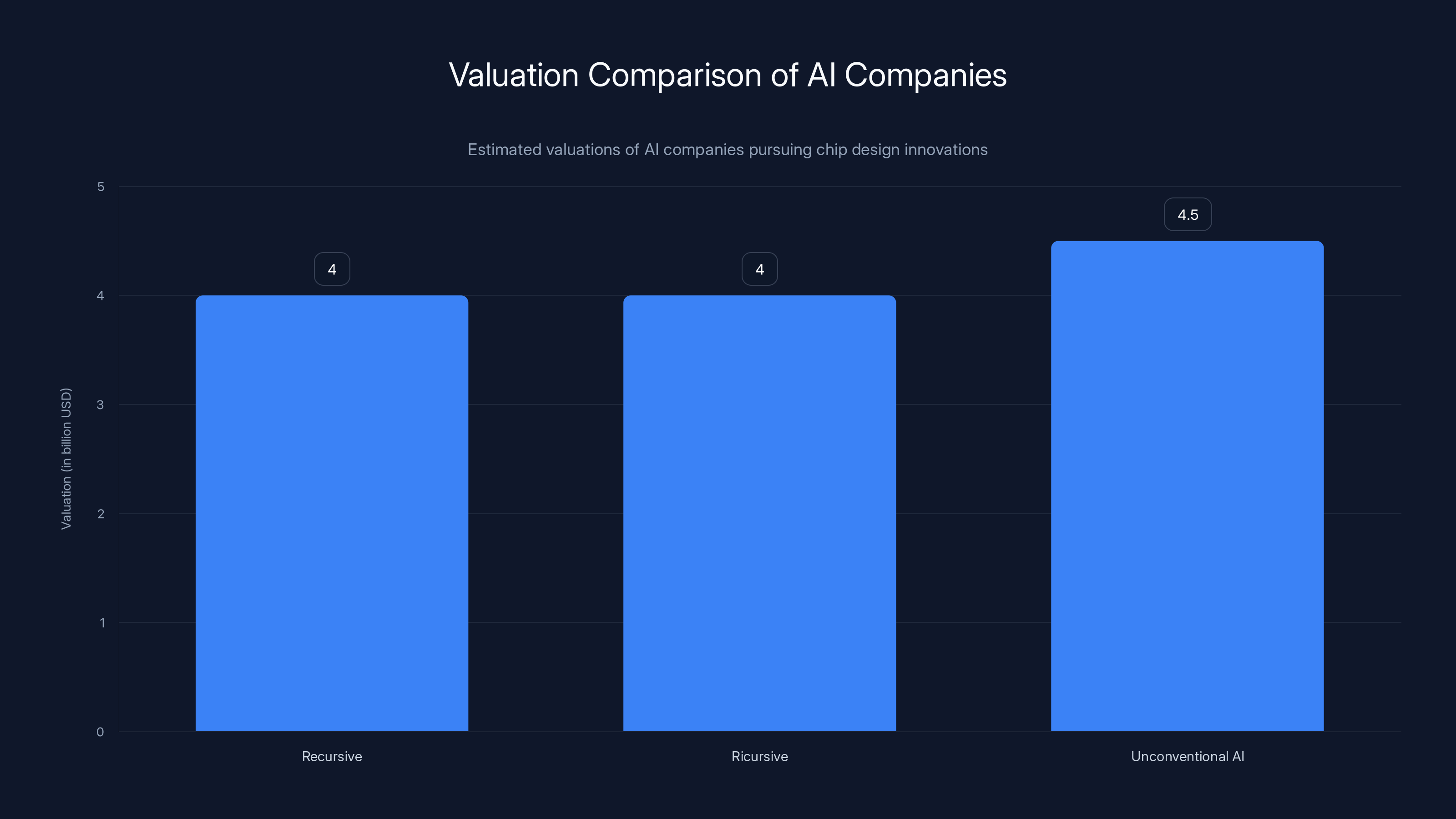

But here's what makes this moment interesting: Ricursive isn't alone. In fact, it's not even the only "recursive"-named startup raising at this valuation. There's also Recursive, founded by former Salesforce chief scientist Richard Socher, working on similar AI chip design problems and reportedly in talks for a comparable round. And then there's Unconventional AI, founded by Naveen Rao and backed by Andreessen Horowitz, which raised

Three companies. Three different teams. Three different approaches. All working on the same fundamental problem: using AI to design chips better, faster, and cheaper than humans can. And all pitching this as a path toward AGI (Artificial General Intelligence).

What's driving this? Why are investors suddenly writing nine-figure checks for chip design startups? And what does this tell us about the future of AI infrastructure?

That's what we're unpacking here. The story of Ricursive isn't just about one company hitting an insane valuation. It's about a structural shift in how technology gets built. It's about the recognition that the software AI revolution runs on top of a hardware foundation that's fragile, expensive, and full of inefficiencies. And it's about teams of researchers who spent years inside Google, Meta, and other AI labs finally getting a chance to fix it.

The Ricursive Story: From Google Labs to $4B Valuation

How Former Google Researchers Built a Chip Design AI

Let's start with the founders, because their background explains everything. Anna Goldie and Azalia Mirhoseini spent years at Google Research working on a specific problem: chip layout design. Not a sexy problem. Not something that gets venture capitalists excited at parties. But a critical one.

Chip design involves thousands of human decisions. Where do you place transistors? How do you route connections? What's the optimal balance between power consumption, heat dissipation, and performance? These decisions cascade. A bad choice early on means wasted silicon, overheating, or performance bottlenecks that destroy the whole chip.

For decades, teams of specialized engineers called chip designers have made these decisions manually. It's part art, part science, part institutional knowledge. You learn from veterans who learned from other veterans. The expertise is concentrated. The process is slow. And the results, while impressive, are probably not optimal.

Goldie and Mirhoseini didn't accept that. They developed a reinforcement learning method, later named AlphaChip (the name echoes DeepMind's AlphaGo), that could learn to design chip layouts automatically. Not perfectly. But really well. The system could explore the design space at a speed no human could match, testing millions of configurations and learning which ones worked best.

Google used this method in four generations of TPU chips (Tensor Processing Units), the custom silicon that powers Google's AI infrastructure. Those TPUs run everything from search to Maps to Gmail to YouTube recommendations. They're also part of the reason Google can afford to spend billions on AI development while maintaining profit margins that would make most companies jealous.

So Goldie and Mirhoseini proved the concept works at scale, inside one of the world's most demanding hardware environments. But they were doing it at Google, with Google's resources, for Google's benefit. The AI chip revolution was generating billions in value, but they were collecting salaries while their insights powered someone else's empire.

From Research to Company: The Founding Thesis

When they left Google to start Ricursive, their thesis was straightforward: if we can automate chip layout design, we can compress years of iteration into months. We can make chip design accessible to teams that previously couldn't afford the expertise. And we can break the hardware bottleneck that's currently limiting AI development.

Ricursive's pitch to investors had several layers. The immediate problem is practical: chip design takes years and costs hundreds of millions of dollars. NVIDIA spends massive resources designing GPUs. Apple designs chips for iPhones and Macs. These are multi-year, multi-hundred-million-dollar efforts. If you can compress that timeline or reduce the cost, you've created enormous value.

But the deeper bet is more philosophical. The founders believe that the path to AGI runs through better hardware. Not just better software. Not just better training. Better hardware. And the way to get better hardware is to automate the part of the process that humans currently bottleneck: the design itself.

Think about it this way: software engineers compile their code. They don't hand-optimize every binary instruction. But chip designers still hand-design chips. Ricursive wants to be the compiler for hardware. You describe what you want the chip to do. The AI system designs the layout. You iterate. You improve. You do it in months instead of years.

It's a compelling vision. And investors clearly believed it, because Sequoia led a

That's the kind of speed and conviction usually reserved for founders who've already achieved market dominance. Not founders who launched a company two months prior.

The Valuation Question: Why $4 Billion?

Let's be blunt: a

That requires multiple things to be true simultaneously. The technology has to work at scale (unproven at production). The market adoption has to materialize (no customers yet). The competitive moat has to hold (other companies are building similar solutions). And none of it gets displaced by someone else solving the problem differently.

But here's why investors are comfortable with that bet: the alternative is worse. Right now, the constraint on AI development is compute. More specifically, it's the cost of compute. A single training run of a frontier model can cost tens to hundreds of millions of dollars. The compute cost is eating into margins. It's slowing down iteration. It's creating a moat for the companies that can afford it (OpenAI, Google, Meta, Anthropic).

If Ricursive (or Recursive, or Unconventional AI) can reduce the cost of compute by 10%, that's worth billions. If they can reduce it by 30%, that's worth tens of billions. And that's before you even consider the applications: better chips for phones, for data centers, for autonomous vehicles, for robotics.

Investors aren't just betting on Ricursive. They're betting on the category. They're betting that the AI chip design tools are real. And they're betting that whoever gets there first and builds the most powerful system will own an enormous market.

Ricursive's valuation grew from

The Competitive Landscape: Three Teams, One Problem

Recursive: The Richard Socher Play

Ricursive has company, and it's literally just one letter different. Recursive, founded by Richard Socher, is pursuing a very similar mission. Socher has serious credentials. He founded MetaMind, which Salesforce acquired, and went on to serve as Salesforce's chief scientist, with a research background in neural networks and natural language processing. He's written papers, published research, and built a reputation in AI.

When Socher started Recursive, the initial story was about using AI systems that could improve themselves. The idea is intriguing: instead of humans designing chips or writing code, you build an AI system that can modify and optimize itself automatically. You point it at a problem (design a more efficient chip), and it iterates toward a solution while learning better strategies with each iteration.

This is philosophically different from Ricursive's approach, though the practical outcome is similar. Ricursive uses reinforcement learning to optimize chip layouts. Recursive seems to be betting on self-modifying AI systems that can iterate across multiple domains. Both arrive at the same place: chips designed faster and better than humans can manage.

Bloomberg reported that Recursive is in talks to raise at roughly the same $4 billion valuation. That suggests investors view the two companies as comparable in potential, even if their technical approaches differ. In competitive startup markets, that's a signal that the category is hot and that multiple approaches are being validated simultaneously.

Unconventional AI: Naveen Rao's Hardware Play

Then there's Unconventional AI, founded by Naveen Rao. Rao is a well-known figure in the AI hardware space. He previously founded Nervana Systems, which Intel acquired for a reported $350 million. He was VP of Artificial Intelligence at Intel for years. He understands the chip manufacturing ecosystem as well as anyone.

Unconventional AI raised

Rao's approach seems to focus on the "intelligent substrate" concept: essentially, building custom silicon that's designed from the ground up with AI in mind, rather than adapting general-purpose chips for AI workloads. This is similar to what Apple does with A-series chips for iPhones or what Google does with TPUs. Custom hardware built for a specific job.

The logic is compelling: GPUs were originally designed for graphics, not large language models. They're being co-opted for that purpose. What if you designed chips specifically for the workloads that matter? What if you optimized for transformer architectures, not general-purpose computation? What if you integrated memory and compute more efficiently?

Unconventional AI is betting that the future of AI isn't companies like NVIDIA selling generic GPUs. It's a new layer of chip design innovation where specialized silicon becomes the competitive advantage.

Why Three Companies? Why Now?

This is the puzzling part. Why would investors back three companies doing essentially the same thing? Normally, VC capital concentrates behind the winner. You see a category emerge (smartphones, cloud computing, AI apps), and within a few years, one or two companies dominate. The others get acquihired or fizzle.

But in this case, investors are deliberately diversifying. They're betting that all three might succeed. Or more likely, they recognize that the problem is big enough that multiple solutions can co-exist. Chip design isn't a zero-sum game. There's room for different techniques, different tools, different approaches.

Also, timing matters. All three companies appeared within a few months of each other, in late 2025. That suggests there was a trigger event. Something that made clear to founders and investors that now was the moment. That trigger was almost certainly the explosion in AI compute demand.

In 2024 and 2025, AI models got bigger and more expensive. Training costs for state-of-the-art models crossed the billion-dollar mark. The compute bottleneck became undeniable. Companies started asking: what if there's a better way? What if we could design chips specifically for these workloads? What if we could reduce costs by 20%, 30%, 50%?

Once founders started asking those questions, investors started listening. And once investors started listening, capital started flowing. By late 2025, it was clear that AI chip design tools were going to be real. So multiple teams made their moves simultaneously.

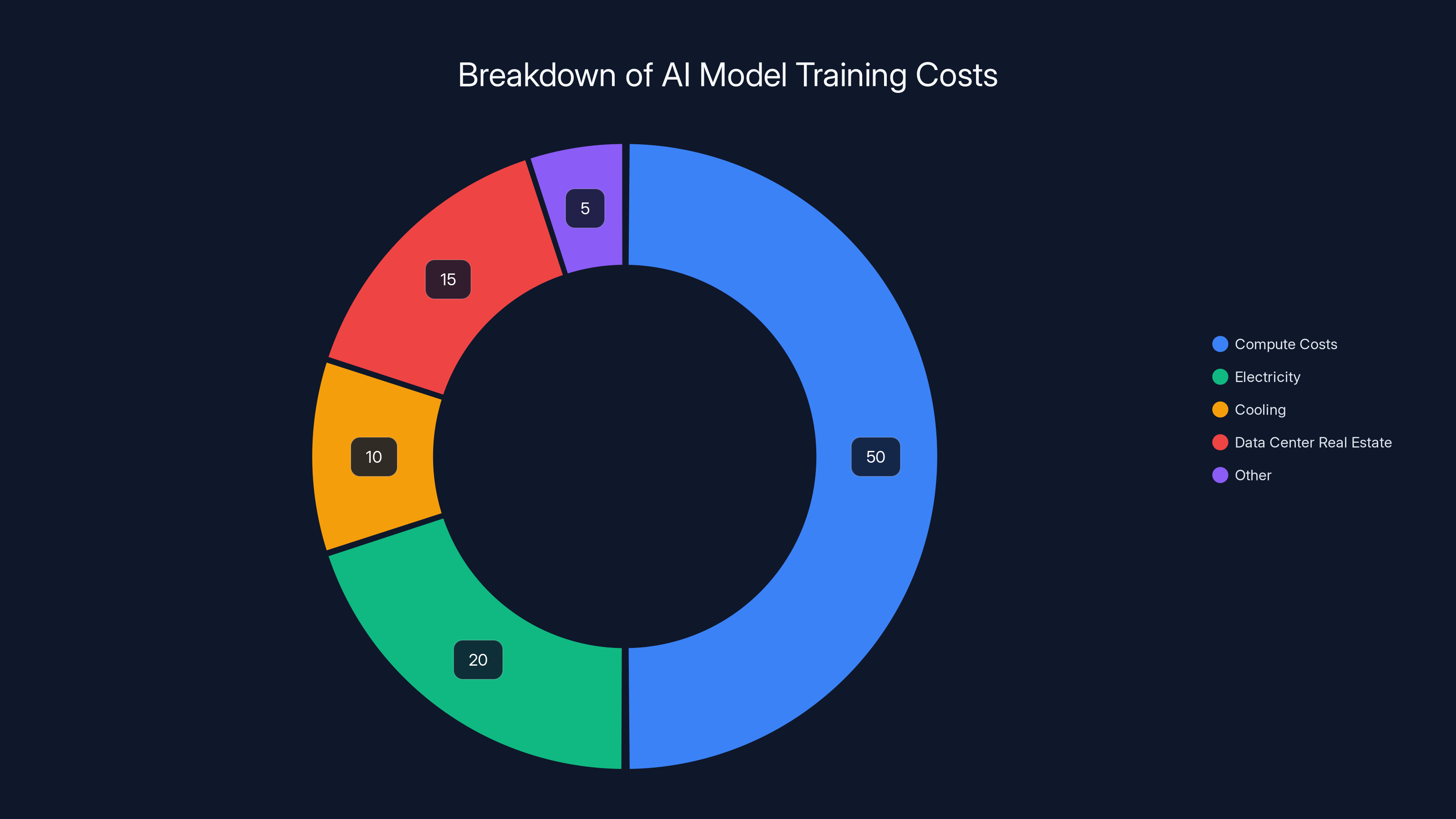

Compute costs constitute the largest portion of AI model training expenses, highlighting the potential savings from optimized hardware. Estimated data.

The Technology: How AI Designs Better Chips

Reinforcement Learning for Layout Optimization

Ricursive's core technology is reinforcement learning, specifically the AlphaChip method developed by Goldie and Mirhoseini at Google. Here's how it works, at a high level:

You have a chip design problem. You need to place huge numbers of components (memory blocks, logic cells) on a piece of silicon, connect them with wires, manage heat dissipation, minimize power consumption, and maximize performance. This is a combinatorial optimization problem. The number of possible configurations is astronomically large. You can't check all of them.
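To put a rough number on "astronomically large," here is a minimal Python sketch that counts the ways to drop a handful of distinct blocks onto a grid of candidate sites, one block per site. The grid and block counts are hypothetical toy sizes; real placement problems are far larger and must also respect overlap, routing, and timing rules that this count ignores.

```python
import math


def placement_count(num_sites: int, num_blocks: int) -> int:
    """Number of ways to assign num_blocks distinct blocks to num_sites
    distinct sites with at most one block per site (an ordered selection)."""
    return math.perm(num_sites, num_blocks)


# Hypothetical toy problem: a 10 x 10 grid of sites and just 20 blocks.
count = placement_count(100, 20)
print(f"about {count:.2e} legal placements")
print(f"{len(str(count))} decimal digits")
```

Even this toy space is far too large to score exhaustively, which is why every practical method, human or machine, has to search selectively.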

Traditional approaches use heuristics. Human experts use intuition and experience to make good decisions. Simulated annealing and other optimization techniques can explore the space locally. But they're slow and often get stuck in local optima.
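Simulated annealing, one of the classic techniques mentioned above, fits in a few lines of Python. This is a deliberately tiny, hypothetical stand-in for real placement: blocks sit in a single row of slots, the only cost is total wire length between connected blocks, and each move swaps two blocks. Occasionally accepting a worse layout (more often while the "temperature" is high) lets it escape some local optima; the price is a very large number of evaluations, which is part of why these methods get slow on real chips.

```python
import math
import random


def wirelength(order, nets):
    """Total wirelength of a 1-D placement: order[i] is the block sitting in slot i."""
    slot = {block: i for i, block in enumerate(order)}
    return sum(abs(slot[a] - slot[b]) for a, b in nets)


def anneal(blocks, nets, steps=20_000, t_start=5.0, t_end=0.01, seed=0):
    """Simulated annealing over placements, using pairwise swaps as moves."""
    rng = random.Random(seed)
    order = list(blocks)
    rng.shuffle(order)
    cost = wirelength(order, nets)
    best, best_cost = order[:], cost
    for step in range(steps):
        # Geometric cooling: the temperature falls from t_start toward t_end.
        t = t_start * (t_end / t_start) ** (step / steps)
        i, j = rng.sample(range(len(order)), 2)
        order[i], order[j] = order[j], order[i]  # propose swapping two blocks
        new_cost = wirelength(order, nets)
        delta = new_cost - cost
        # Always accept improvements; accept regressions with probability exp(-delta/t).
        if delta <= 0 or rng.random() < math.exp(-delta / t):
            cost = new_cost
            if cost < best_cost:
                best, best_cost = order[:], cost
        else:
            order[i], order[j] = order[j], order[i]  # reject: undo the swap
    return best, best_cost


# Hypothetical netlist: eight blocks wired in a chain (0-1, 1-2, ..., 6-7).
blocks = list(range(8))
nets = [(k, k + 1) for k in range(7)]
best, cost = anneal(blocks, nets)
print(best, cost)  # 7 is the optimum for a chain; annealing usually gets there
```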

Reinforcement learning takes a different approach. You define the problem as a game: each decision is a move in the game, and the outcome (performance, power, heat) is the score. You train an AI system to play this game well. It starts randomly. It plays millions of games. It learns which decisions lead to good outcomes.
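The "placement as a game" framing maps onto textbook reinforcement learning. The Python sketch below is a toy, not AlphaChip: it uses a hypothetical one-row placement, places blocks one at a time (each placement is a move), scores the finished layout by negative wire length, and updates a simple table of preferences with the REINFORCE policy-gradient rule. AlphaChip's published approach uses a neural network policy and a richer reward (including proxies such as congestion and density), none of which is modeled here.

```python
import math
import random


def wirelength(slot_of, nets):
    """Total wire length for a 1-D placement given as {block: slot}."""
    return sum(abs(slot_of[a] - slot_of[b]) for a, b in nets)


def train_policy(n, nets, episodes=3000, lr=0.1, seed=0):
    """REINFORCE with a tabular softmax policy: one logit per (block, slot)."""
    rng = random.Random(seed)
    logits = [[0.0] * n for _ in range(n)]
    baseline = None
    for _ in range(episodes):
        free, slot_of, moves = list(range(n)), {}, []
        for block in range(n):  # one "move" per block, in a fixed order
            top = max(logits[block][s] for s in free)
            weights = [math.exp(logits[block][s] - top) for s in free]
            total = sum(weights)
            probs = [w / total for w in weights]
            k = rng.choices(range(len(free)), weights=probs)[0]
            moves.append((block, list(free), probs, k))
            slot_of[block] = free.pop(k)
        reward = -wirelength(slot_of, nets)  # the game's final score
        # A running-average baseline reduces the variance of the update.
        baseline = reward if baseline is None else 0.9 * baseline + 0.1 * reward
        advantage = reward - baseline
        for block, free_slots, probs, k in moves:
            for idx, slot in enumerate(free_slots):
                # Gradient of log-softmax w.r.t. each logit: 1[idx == k] - p_idx
                grad = (1.0 if idx == k else 0.0) - probs[idx]
                logits[block][slot] += lr * advantage * grad
    return logits


def greedy_placement(logits, n):
    """Play one game using the most likely move at every step."""
    free, slot_of = list(range(n)), {}
    for block in range(n):
        row = logits[block]
        slot = max(free, key=row.__getitem__)
        slot_of[block] = slot
        free.remove(slot)
    return slot_of


nets = [(k, k + 1) for k in range(5)]  # hypothetical six-block chain netlist
policy = train_policy(6, nets)
layout = greedy_placement(policy, 6)
print(layout, wirelength(layout, nets))
```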

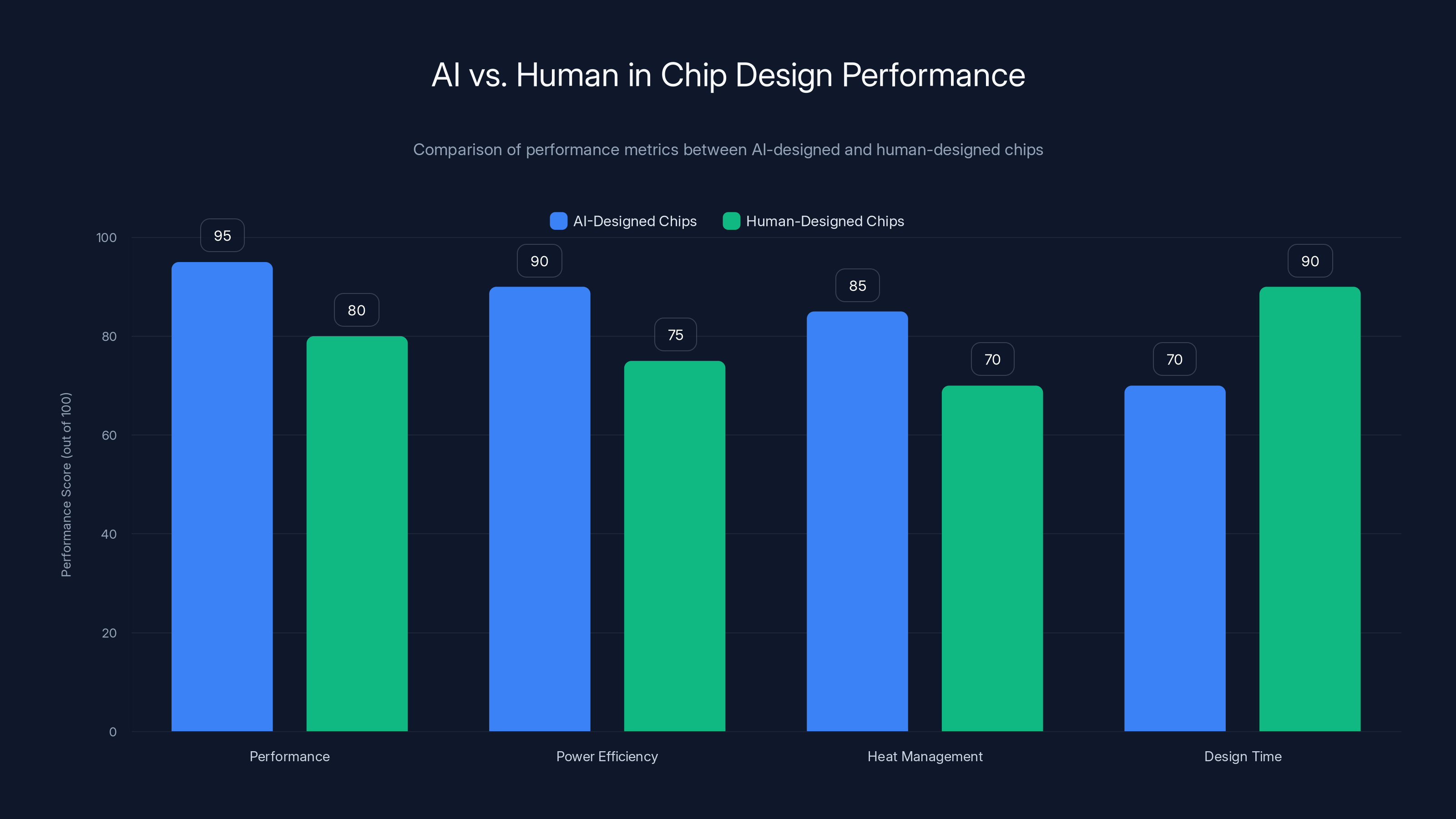

With enough training, the system learns to make decisions that can match or beat human experts. Google reported that AlphaChip produced layouts comparable or superior to those of human experts on metrics such as wirelength, in hours rather than the weeks or months a human team might need, and those layouts went into its TPU chips.

The beauty of this approach is that it's domain-agnostic. You're not hand-coding rules about chip design. You're training a learning system that discovers optimal patterns automatically. That same learning system could potentially be applied to different chip designs, different architectures, different constraints.

Graph Neural Networks and Design Space Exploration

Both Ricursive and Recursive likely use graph neural networks (GNNs) to represent chip designs. A chip is essentially a graph: nodes represent components (transistors, logic gates, memory), and edges represent connections. GNNs are excellent at learning on graph-structured data. They can discover patterns about which connection topologies work better than others.
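As a rough illustration of the graph view, the Python sketch below encodes a made-up four-component netlist as an adjacency list and runs mean-aggregation message passing, the basic operation GNNs build on. A real GNN applies learned weight matrices and nonlinearities at each round; those are omitted here, so this only shows how information spreads along wires.

```python
from collections import defaultdict

# Hypothetical toy netlist. Features are a made-up one-hot: [is_memory, is_logic].
features = {
    "sram0": [1.0, 0.0],
    "alu0": [0.0, 1.0],
    "alu1": [0.0, 1.0],
    "io0": [0.0, 0.0],
}
wires = [("sram0", "alu0"), ("alu0", "alu1"), ("alu1", "io0"), ("sram0", "alu1")]


def adjacency(edges):
    """Undirected adjacency list: each wire connects both endpoints."""
    adj = defaultdict(list)
    for a, b in edges:
        adj[a].append(b)
        adj[b].append(a)
    return adj


def message_pass(feats, edges, self_weight=0.5):
    """One round: each node mixes its own vector with the mean of its neighbors'."""
    adj = adjacency(edges)
    updated = {}
    for node, own in feats.items():
        nbrs = adj.get(node, [])
        if nbrs:
            mean = [sum(feats[n][i] for n in nbrs) / len(nbrs) for i in range(len(own))]
        else:
            mean = [0.0] * len(own)
        updated[node] = [self_weight * o + (1 - self_weight) * m for o, m in zip(own, mean)]
    return updated


h1 = message_pass(features, wires)  # each node now reflects its direct neighbors
h2 = message_pass(h1, wires)        # after two rounds, components two wires away
print(h1["io0"], h2["io0"])
```

After two rounds, `io0` carries a trace of the memory block even though no wire connects them directly, which is the kind of multi-hop context a placement model can exploit.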

Design space exploration is the process of trying different designs and comparing them. Traditional approaches sample the design space sparsely. You try 100 designs, pick the best, refine, try another 100. That's serial. That's slow.

AI systems can parallelize design space exploration. They can simulate thousands of designs in parallel. They can rank them. They can identify patterns. And critically, they can learn to focus the search on the regions of design space that are most likely to yield good results.
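One common way to "focus the search" is the cross-entropy method: sample a batch of candidate designs, evaluate them concurrently, keep the best few, and re-center the sampling distribution on them. In this Python sketch the "design" is three hypothetical tuning knobs and `simulate` is a made-up, cheap stand-in for a real and expensive simulator. A thread pool keeps the example short; because CPython threads don't execute Python code in parallel, real CPU-bound simulations would typically be farmed out to separate processes or machines.

```python
import random
import statistics
from concurrent.futures import ThreadPoolExecutor


def simulate(design):
    """Hypothetical stand-in for an expensive simulator (lower is better).
    Its made-up optimum sits at knobs (0.3, -1.2, 2.0)."""
    target = (0.3, -1.2, 2.0)
    return sum((d - t) ** 2 for d, t in zip(design, target))


def explore(rounds=15, population=200, elite=20, dims=3, seed=0):
    """Cross-entropy method: sample, evaluate in a batch, refit to the elite."""
    rng = random.Random(seed)
    mean, std = [0.0] * dims, [3.0] * dims
    with ThreadPoolExecutor(max_workers=8) as pool:
        for _ in range(rounds):
            designs = [[rng.gauss(m, s) for m, s in zip(mean, std)] for _ in range(population)]
            scores = list(pool.map(simulate, designs))  # evaluate the whole batch
            ranked = sorted(zip(scores, designs), key=lambda pair: pair[0])
            top = [design for _, design in ranked[:elite]]
            # Refocus: the next round samples around the best designs found so far.
            mean = [statistics.fmean(column) for column in zip(*top)]
            std = [max(statistics.pstdev(column), 1e-3) for column in zip(*top)]
    return mean, simulate(mean)


best_design, best_cost = explore()
print([round(x, 3) for x in best_design], best_cost)
```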

This is where the time compression comes in. A human-led chip design process might iterate on 50 designs over two years. An AI system might iterate on 10,000 designs in two months. And because it explores so much more of the space, it can surface optimizations that a human-paced process might never reach.

Self-Improving Systems and the AGI Bet

Recursive's thesis about self-improving systems is more speculative but conceptually interesting. The idea is that you build an AI system that can modify its own design process. It runs a chip design, evaluates the results, and then updates its own algorithms based on what worked.

This is closer to what you might call self-improvement or meta-learning: learning how to learn. Instead of humans improving the chip design process, the AI system improves the process iteratively. Each version is better at designing chips than the previous version. Eventually, the humans are just supervising.
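A very small-scale analogy for a process that improves its own procedure: an inner loop does the "design work" (here, plain hill climbing on a made-up one-dimensional objective), and an outer loop tries variants of the inner loop's step size and adopts whichever performed best. This Python sketch is a hypothetical illustration of the general idea, often called self-adaptation or hyperparameter meta-optimization, and makes no claim about how Recursive actually builds its systems.

```python
import random


def objective(x):
    """Hypothetical design-quality score to minimize; the optimum is x = 3."""
    return (x - 3.0) ** 2


def inner_search(step, budget, rng, start=-10.0):
    """The 'design process': hill climbing with a fixed step size."""
    x = start
    for _ in range(budget):
        candidate = x + rng.gauss(0.0, step)
        if objective(candidate) < objective(x):
            x = candidate
    return objective(x)


def self_improve(generations=10, budget=50, trials=5, seed=0):
    """Outer loop: try variants of the process and keep the best-performing one."""
    rng = random.Random(seed)
    step = 0.01  # deliberately poor starting setting
    history = []
    for _ in range(generations):
        candidates = [step * 0.5, step, step * 2.0, step * 4.0]
        scored = []
        for s in candidates:
            avg = sum(inner_search(s, budget, rng) for _ in range(trials)) / trials
            scored.append((avg, s))
        best_score, step = min(scored)  # the process adopts its best variant
        history.append((step, best_score))
    return history


for generation, (step, score) in enumerate(self_improve(), start=1):
    print(f"generation {generation}: step={step:.3g}, avg objective={score:.4f}")
```

The outer loop never sees the objective's formula; it only observes which version of the process did better and keeps it.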

This is also the narrative path to AGI that some researchers find compelling. You start with a narrow domain (chip design). You build a system that can improve itself in that domain. You extend it to related domains. Eventually, you have a system that can improve itself across many domains. Maybe eventually, across all domains.

It's speculative. But it's the kind of idea that gets billionaires and institutional investors excited. If there's even a 5% chance that a chip design company is actually on the path to AGI, the expected value of that investment is enormous.

Validation at Production Scale

The fact that AlphaChip has been used in Google's TPU chips is the strongest proof point this category has. It's not just a research paper. It's not a simulation. It's production silicon running real AI workloads at massive scale.

Google is unlikely to put unproven technology into production silicon. If the chip design process were fundamentally flawed, it would show up in performance metrics. It would show up in thermal issues. It would show up in power consumption. But it doesn't. The TPUs work. They're fast. They're reliable. And they were designed (at least in part) using AI-driven optimization.

That validation is why investors trust the technology enough to write $300 million checks. It's not faith. It's evidence.

The Market Opportunity: Why This Matters

The Compute Cost Squeeze

Let's ground this in numbers. Training a state-of-the-art large language model costs hundreds of millions of dollars. OpenAI likely spent over $100 million training GPT-4. Google spent similar amounts on Gemini. These aren't small research projects. These are capital-intensive exercises.

A significant portion of that cost is the compute: the server time, the electricity, the cooling, the data center real estate. If you could reduce compute costs by 30%, you'd save tens of millions on a single training run. Multiply that across thousands of companies running AI workloads, and you're talking about tens of billions in aggregate costs that could be avoided.
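The arithmetic behind "tens of millions" fits in a few lines. In this Python sketch the $100 million run cost comes from the GPT-4 figure above, while the 80% compute share is a hypothetical assumption, chosen only to show how a 30% compute saving flows through to a single run.

```python
def run_savings(run_cost, compute_share, compute_reduction):
    """Dollars saved on one training run when only the compute portion gets cheaper."""
    return run_cost * compute_share * compute_reduction


# $100M run (from the GPT-4 estimate); the 80% compute share is a hypothetical assumption.
saved = run_savings(run_cost=100e6, compute_share=0.80, compute_reduction=0.30)
print(f"${saved / 1e6:.0f}M saved on a single run")
```

Under these assumptions the saving is about $24 million per run, before multiplying across repeated runs and many companies.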

But it goes beyond cost. Compute costs also translate to time-to-market. If your model takes 6 months to train instead of 3 months because compute is expensive (and therefore you can't throw as much at it), you're losing competitive advantage. You're slower to iterate. You're slower to deploy new capabilities.

Ricursive and the other chip design companies are essentially promising to compress that timeline. Better chips mean faster training. Faster training means quicker iteration. Quicker iteration means winning in the AI race.

The Hardware Bottleneck

For years, the joke in AI was that software engineers were waiting for hardware engineers to catch up. GPUs were designed for graphics, not AI. TPUs helped (Google designed them specifically for AI), but they were expensive and not accessible to most companies. NVIDIA GPUs became the default, but they're not optimized for transformers or large language models. They're general-purpose compute with a lot of wasted potential.

Custom silicon designed specifically for AI workloads could change the equation. Imagine chips where:

- Memory bandwidth is optimized for attention operations (the bottleneck in large language models)

- Power efficiency is tuned for inference, not just training

- Heat dissipation is managed for 24/7 operation in data centers

- The architecture natively supports distributed computing across multiple chips
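Why memory bandwidth heads that list can be made concrete with the standard roofline model: achievable throughput is the lower of a chip's peak compute and its memory bandwidth multiplied by the workload's arithmetic intensity (FLOPs per byte moved). The Python sketch below uses made-up accelerator specs (1 PFLOP/s peak, 3 TB/s bandwidth) and a simplified count that ignores activation traffic. Generating one token at a time behaves like multiplying a weight matrix by a single vector, which performs about one FLOP per byte of 16-bit weights read, so the chip ends up waiting on memory rather than compute.

```python
def attainable_flops(peak_flops, mem_bandwidth, intensity):
    """Roofline model: throughput is capped by compute or by memory traffic."""
    return min(peak_flops, mem_bandwidth * intensity)


def matvec_intensity(d, batch=1, bytes_per_value=2):
    """FLOPs per byte when a d x d weight matrix multiplies `batch` vectors,
    counting only weight reads (a simplification that ignores activations)."""
    flops = 2 * d * d * batch  # one multiply and one add per weight per vector
    bytes_moved = d * d * bytes_per_value
    return flops / bytes_moved


PEAK = 1e15  # hypothetical peak FLOP/s
BANDWIDTH = 3e12  # hypothetical memory bandwidth, bytes/s

for batch in (1, 64, 512):
    achieved = attainable_flops(PEAK, BANDWIDTH, matvec_intensity(8192, batch))
    print(f"batch {batch:>3}: {achieved / PEAK:.1%} of peak")
```

Batching raises arithmetic intensity, which is one reason serving systems batch requests; a chip designed around higher bandwidth attacks the same limit from the hardware side.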

Few chips deliver all of that at production scale. Today's leading accelerators (NVIDIA H100 and A100, Google TPU v5) are impressive, but they're built to serve a broad range of workloads rather than tuned for the specific ones that matter most in 2025.

If Ricursive or Recursive or Unconventional AI can deliver chips that are even 20% better at AI workloads, they unlock enormous value. And if they can do it on a faster iteration cycle, they create a sustainable competitive advantage.

The TAM: Total Addressable Market

How big could this market be? That's the question investors are trying to answer.

Today, NVIDIA dominates AI chip sales, with reported revenues of

But the real market might be bigger. The market isn't just GPUs sold today. It's the total value of AI compute over the next 5-10 years. PwC has estimated that AI could add up to $15.7 trillion to the global economy by 2030. A meaningful portion of that value depends on compute costs. If better chip design reduces those costs by 30%, that's value creation on a staggering scale.

Venture investors use a rule of thumb: target companies that could become multi-billion-dollar businesses. A team that can reduce AI compute costs by 30% across the industry could easily create a $10-20 billion business in chip design, software licenses, and services.

That's the math that justifies a $4 billion valuation for a company with no revenue. Investors aren't valuing today. They're valuing the potential market, discounted by probability and execution risk.
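A minimal sketch of "valuing the potential market, discounted by probability and execution risk": weight each exit scenario by its probability, then discount the expected exit back to today. Every number here is a hypothetical placeholder, not an estimate for Ricursive or any other company; the scenario values loosely echo figures elsewhere in this piece (a $10-20 billion business, a $50 billion outcome), and the output is only as good as those guesses.

```python
def risk_adjusted_value(scenarios, years, discount_rate):
    """scenarios: (probability, exit_value) pairs whose probabilities sum to 1.
    Returns the probability-weighted exit value discounted back to today."""
    total_p = sum(p for p, _ in scenarios)
    if abs(total_p - 1.0) > 1e-9:
        raise ValueError(f"probabilities sum to {total_p}, expected 1")
    expected_exit = sum(p * value for p, value in scenarios)
    return expected_exit / (1 + discount_rate) ** years


# Hypothetical scenarios, values in billions of dollars.
scenarios = [
    (0.10, 50.0),  # becomes the category standard
    (0.20, 15.0),  # a solid $10-20B tools business
    (0.70, 0.0),   # technology or business model fails
]
value_today = risk_adjusted_value(scenarios, years=5, discount_rate=0.12)
print(f"${value_today:.1f}B")
```

Nudging the success probabilities by a few points moves the answer by billions, which is why valuations like this are so contested.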

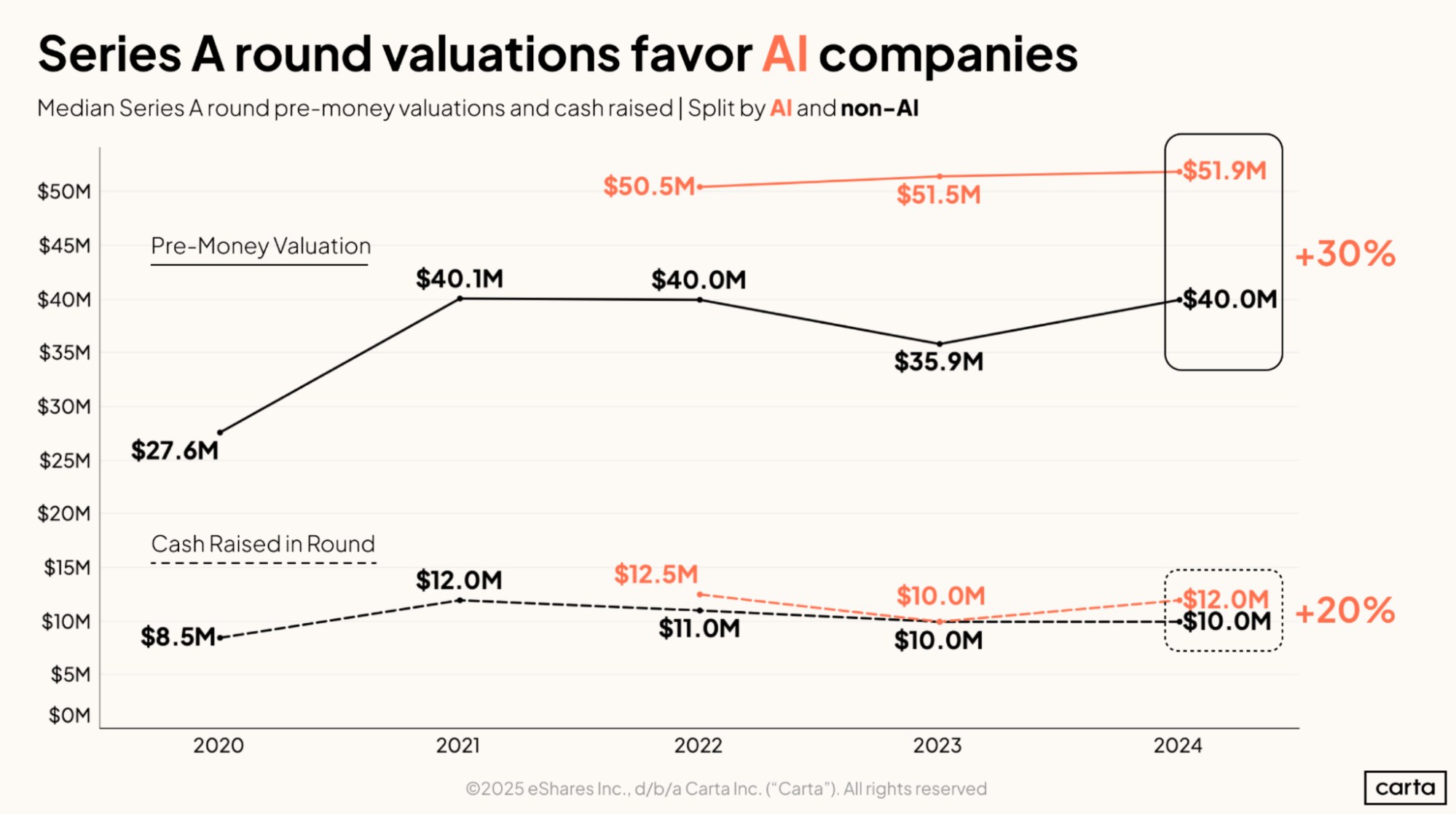

Ricursive, Recursive, and Unconventional AI all raised Series A funding at valuations between

The Technical Challenges: What Could Go Wrong

The Simulation-to-Silicon Gap

Here's the hard truth: every chip design tool is only as good as its simulations. Ricursive's AI system learns from simulations of chip designs. But simulations are approximations. They model the physics of silicon, but they're simplified models. Real silicon is messier.

Temperature variations, voltage fluctuations, manufacturing tolerances, radiation interference, and a thousand other factors affect real chips. A design that looks perfect in simulation might fail in the real world. Or it might be inefficient in ways the simulation didn't predict.
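A minimal Monte Carlo sketch of that gap: a hypothetical 20-stage timing path that exactly meets its clock in a nominal simulation, re-evaluated under random per-stage delay variation. The 5% variation, independent Gaussian stages, and stage delays are all simplifying assumptions (real process variation is partly correlated across a die). The point is only that zero simulated margin can mean roughly half of manufactured parts fail timing, which is why designs carry guard bands.

```python
import random


def timing_yield(stage_delays_ps, clock_period_ps, sigma_frac=0.05, samples=20_000, seed=0):
    """Estimate the fraction of manufactured chips whose critical path meets timing,
    assuming each stage's delay varies independently (a simplifying assumption)."""
    rng = random.Random(seed)
    passing = 0
    for _ in range(samples):
        path = sum(rng.gauss(d, sigma_frac * d) for d in stage_delays_ps)
        if path <= clock_period_ps:
            passing += 1
    return passing / samples


stages = [50.0] * 20  # hypothetical 20-stage path, 50 ps per stage nominal
nominal = sum(stages)  # 1000 ps: "perfect" in a nominal simulation
tight = timing_yield(stages, clock_period_ps=nominal)  # zero margin
padded = timing_yield(stages, clock_period_ps=nominal * 1.05)  # 5% guard band
print(f"zero margin: {tight:.1%} pass, 5% margin: {padded:.1%} pass")
```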

The gap between simulation and reality is the oldest problem in engineering. And it's particularly acute in chip design, where the physics is complex and the manufacturing tolerances are tight.

Ricursive and Recursive will need to validate their designs in actual silicon. And that's expensive. A single chip tape-out (manufacturing run) can cost

Manufacturing Constraints and Yield

Another challenge is manufacturing. Chip design is only half the battle. You also need to actually manufacture the chips. And modern chip manufacturing is incredibly complex.

There are only a handful of companies that can manufacture advanced chips: TSMC, Samsung, and Intel. These foundries have waiting lists. They charge premium prices for cutting-edge process nodes. And they have their own proprietary tools and constraints.

When you design a chip using AI, you need to design it in a way that's manufacturable on available foundries. You can't just design the perfect chip and then wish into existence the manufacturing capacity to build it. The best design that can't be manufactured is worthless.

Ricursive and the others will need to develop deep relationships with foundries, learn their constraints, and optimize designs for manufacturing reality, not just theoretical optimality. That's doable, but it's another layer of complexity.

Competitive Response from Incumbents

NVIDIA, Intel, AMD, and other established chip makers are watching this trend closely. They have their own research teams, their own AI expertise, and their own manufacturing relationships. If they decide to adopt AI-driven chip design tools, they can do it faster and cheaper than startups can.

The existential question for Ricursive and Recursive is: can they move faster than the incumbents? Can they build better tools, deploy them to more customers, and create defensible advantages before NVIDIA or Intel copy the approach?

Historically, startups have an advantage in tools and software. They're not burdened by legacy systems or existing business models. But established chip makers have advantages in manufacturing, customer relationships, and capital. The outcome isn't obvious.

The Path from Tool to Product

Ricursive's positioning as a platform raises a question: who are the customers? Are they companies designing their own chips? If so, that's a small market. There are maybe 50-100 companies in the world with the expertise and resources to design custom chips.

Or are the customers foundries like TSMC? Do they license Ricursive's technology and use it as part of their design services? That could be bigger. TSMC could offer "AI-designed chips" as a premium service, and Ricursive gets licensing fees.

Or are the customers AI companies (OpenAI, Google, Meta) that want to design their own chips faster? That's the most likely scenario. But it limits the market to a handful of players.

The path from having great technology to having a viable business model is not always clear. Ricursive needs to figure out how to monetize their capability. And that might look very different from what investors are imagining.

The Infrastructure Layer: Why This Matters for AI

The Stack Gets Rebuilt

AI infrastructure is rebuilding itself in real time. Five years ago, the stack was simple: buy GPUs from NVIDIA, rent servers from AWS, build your AI application on top. Customers lived on top, AWS owned the layer below them, and NVIDIA owned the layer below that.

That's breaking down. Google is increasingly using TPUs for inference, to avoid NVIDIA pricing. Meta is designing custom chips. Microsoft is partnering with chip makers. OpenAI is rumored to be designing chips. The big AI companies are all climbing down the stack.

Ricursive and Recursive and Unconventional AI are tools that accelerate that climb. They make it easier for companies to design custom chips. They compress the timeline from years to months. They reduce the capital barrier to entry.

If these tools succeed, we'll see an explosion of custom chip designs. Not from NVIDIA or Intel. From the companies actually using the chips. From Microsoft for its Azure data centers. From Google for its search and recommendation systems. From Tesla for autonomous driving. From Meta for recommendation engines.

That's a massive structural shift in the chip industry. And it's only possible because AI makes chip design faster and cheaper.

The Virtuous Cycle

Here's where it gets interesting. Better chip design tools lead to better chips. Better chips make AI training cheaper and faster. Cheaper and faster AI training enables more AI companies to exist. More AI companies generate more demand for better chips. Which creates more incentive for chip design innovation.

That's a virtuous cycle. And positive feedback loops in technology tend to create winners very quickly.

The company that wins in chip design tools could find itself with an accelerating advantage. More customers using its tools. More data about what designs work well. Better feedback for improving the tools. Faster iteration. Better designs. And the cycle repeats.

That's why investors think a $4 billion valuation could undervalue the winner. If Ricursive becomes the standard tool for chip design across the industry, the value capture could be enormous.

AI-designed chips significantly outperform human-designed chips in performance, power efficiency, and heat management, though they require more design time. Estimated data based on typical improvements.

The Funding Question: Where Does the Money Go?

Burn Rate and Path to Sustainability

A

Chip design startups have high burn rates. They need:

- Top-tier AI/ML engineers: $500K+ per year each

- Chip design engineers with deep expertise: $400K+ per year each

- Access to expensive design tools and simulations

- Manufacturing partnerships and tape-out costs

- Sales and business development

A burn rate of

That's actually not much time, given the timeline of chip development. But it's enough time to ship some products, demonstrate traction, and raise again at a higher valuation before running out of money.
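The runway arithmetic is easy to sketch. This Python example builds a burn rate from hypothetical inputs: invented headcounts at the salary floors listed above, plus a made-up annual budget for tools, tape-outs, and everything else. Swap in real numbers and the same two functions apply.

```python
def monthly_burn(ml_engineers, chip_engineers, other_annual_costs,
                 ml_comp=500_000, chip_comp=400_000):
    """Monthly spend from headcount at assumed annual compensation plus other costs."""
    annual = ml_engineers * ml_comp + chip_engineers * chip_comp + other_annual_costs
    return annual / 12


def runway_months(cash, burn_per_month):
    """Months until the money runs out at a constant burn rate."""
    return cash / burn_per_month


# Hypothetical team and budget; the $300M is the round size discussed in this piece.
burn = monthly_burn(ml_engineers=60, chip_engineers=40, other_annual_costs=40_000_000)
months = runway_months(300_000_000, burn)
print(f"burn ${burn / 1e6:.1f}M/month, runway {months:.0f} months")
```

A constant burn rate is a simplification: headcount usually grows over time, and tape-outs land as lumpy one-time costs that can shorten the runway quickly.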

The Series B Question

Ricursive will need to raise again. Likely in 18-24 months, after shipping initial products and demonstrating customer traction. At that point, the question will be different. It won't be "is this technology promising?" It'll be "does this technology work at production scale, and do customers actually want it?"

If the answer is yes, Series B becomes much easier. And the valuation could be anywhere from $8-20 billion depending on traction and competitive position.

If the answer is no (the technology doesn't work, or customers don't care), Ricursive's runway becomes a problem. $300 million isn't enough to pivot to a different business model. It's enough to get to a reckoning.

Competitive Dynamics: The Game Theory

Multiple Winners Scenario

One possibility is that multiple chip design startups succeed. Ricursive dominates in AI training chips. Recursive dominates in inference chips. Unconventional AI dominates in custom substrate designs. They're solving slightly different problems, serving slightly different customers, using slightly different techniques.

In that world, all three could be billion-dollar companies. Not mutually exclusive. The market is big enough. And different approaches have different strengths.

Winner-Take-Most Scenario

Another possibility is that one company's approach proves superior. Maybe the reinforcement learning method works better than self-improving systems. Maybe the optimization focus on substrates becomes the key differentiator. Maybe a fourth company we haven't heard about has a better idea.

In winner-take-most scenarios, one company gets 60-80% of the value, and the others are acquihired or become niche players. That's more typical of startup markets. But it would be a harsh outcome for two of the three companies that just raised at $4 billion valuations.

Incumbent Absorption

A third possibility is that established companies (NVIDIA, Intel, TSMC) quickly adopt the best ideas from these startups. Either by acquihiring the teams or by licensing the technology. In that scenario, the startup's value gets absorbed into the larger company. The founders and early investors do well, but the company doesn't independently become a $50 billion business.

This might actually be the most likely outcome. NVIDIA could acquire Ricursive for

Recursive and Ricursive are both valued at

The Broader Implications: What This Means

The Future of Hardware Innovation

Ricursive and the other chip design startups represent a shift in how hardware gets innovated. Hardware used to be the domain of large, well-resourced teams. Moore's Law, the observation that the number of transistors on a chip doubles roughly every two years, required constant improvement. Only companies with billions in R&D could keep up.

But if AI can automate chip design, hardware innovation becomes more like software innovation. Faster iteration. Smaller teams. More experimentation. More disruption.

That's a big deal. It means startup companies (not just NVIDIA and Intel) can design chips. It means companies can optimize for their specific use case instead of using generic GPUs. It means the hardware landscape gets more diverse and more competitive.

That's good for the ecosystem. But it's bad for companies (like NVIDIA) whose dominance depends on hardware moats.

The AI Training Efficiency Inflection

For the last few years, the AI industry has been defined by scale. Bigger models. More data. More compute. And companies that could afford the most compute would win.

If Ricursive and Recursive and Unconventional AI deliver on their promises, that changes. Efficiency becomes as important as scale. You don't need the most compute if your compute is twice as efficient.

That democratizes AI. It means smaller companies can compete. It means companies in regions with limited compute access can still build powerful models. It means the cost curve for AI flattens instead of continuing to climb exponentially.

Again, that's good for the ecosystem. It's bad for the companies whose advantage was "we have the most compute."

The AGI Question

Finally, there's the biggest question: does any of this matter for AGI? Some of these founders genuinely believe that chip design is on the critical path to AGI. That you can't get to AGI without orders-of-magnitude improvements in hardware efficiency.

There's an argument for that. Current AI models are inefficient. They require massive amounts of compute. If you could 10x the efficiency of compute, maybe you unlock qualitatively different capabilities. Maybe the models that become possible with better hardware are the ones that develop real reasoning, planning, and agency.

Or maybe that's wishful thinking. Maybe AGI is about software and algorithms, not hardware efficiency. Maybe the hardware improvements just make the existing models faster and cheaper, but don't qualitatively change what's possible.

Time will tell. But it's the kind of belief that drives billion-dollar valuations. Investors don't just think Ricursive will be a good business. They think it might be on the critical path to transforming AI as fundamentally as the software layer did.

The Timing: Why Now?

The Perfect Storm

Why did Ricursive raise when it did? Four factors came together.

First, the AI training cost crisis became undeniable. By late 2024 and into 2025, the cost of training cutting-edge models had reached absurd levels, which created urgency around efficiency.

Second, Google's TPU work provided proof of concept. Google publicly discussed AlphaChip's role in TPU design, which gave credibility to the idea that AI could design chips better than humans.

Third, the right talent became available. Goldie and Mirhoseini had been working on this problem at Google. Maybe they got tired of working inside a large corporation. Maybe they wanted to build something of their own. Maybe the equity upside of a startup became more compelling than a Google salary.

Fourth, venture firms had money to deploy. 2025 was a year of capital abundance in AI: firms raised massive funds and needed places to invest them. Chip design wasn't on the radar five years ago, but by 2025 it looked like a critical infrastructure play.

All of those factors aligned in late 2025. Suddenly, a chip design company founded at the right moment could raise $300+ million in a Series A.

The Venture Capital Narrative

VC is narrative-driven. Investors invest in stories as much as they invest in technology. And the story around chip design was compelling in 2025.

The story was: AI is constrained by compute cost. Compute cost is constrained by chip design efficiency. Chip design is constrained by human expertise. If you automate chip design, you unlock orders of magnitude of value. And the people who can do that automation just happened to start companies.

Once that narrative takes hold, capital flows. It doesn't matter whether the narrative is 100% correct. What matters is whether it's compelling enough to make big institutions write checks.

Ricursive benefited from that narrative. So did Recursive. So did Unconventional AI. All three companies got funded on the strength of the story, not yet on the strength of shipping products or acquiring customers.


Lessons for Founders and Investors

The Infrastructure Play Never Dies

One lesson here is that infrastructure plays can move as fast as consumer apps. We often think of infrastructure as boring, slow, unsexy. But infrastructure innovations can create enormous value quickly if they unlock a bottleneck.

Ricursive isn't Uber. It doesn't need to acquire millions of users. It needs to win with a handful of enterprise customers (or foundries or AI labs). That's faster. That's more capital-efficient. That's more defensible.

For founders working on tools, platforms, and infrastructure, this is encouraging. You don't need consumer scale to be valuable. You need to solve a real, expensive problem for companies that can afford to pay for the solution. And you need timing.

Timing Is Not Luck

Ricursive's timing wasn't luck. The founders worked on this problem for years at Google. They waited until the moment when everyone in the industry understood the problem. When the bottleneck was undeniable. When capital was looking to deploy. Then they moved.

That's the lesson: timing isn't luck. It's understanding when the world is ready for your solution. And that requires deep domain knowledge and patience.

Boldness Gets Funded

Final lesson: boldness gets funded. Ricursive's ask was audacious.

That could have been ignored. Instead, it was funded. Multiple investors participated. Because the ask matched the vision. The vision was: this is the future of chip design. And the ask was: give us enough money to build it.

Founders often under-ask. They think conservative funding rounds are safer. But that leaves money on the table. And more importantly, it signals a lack of conviction. If you really believe in your idea, you should ask for the capital required to move at speed.

Ricursive's investors clearly believed the founders were thinking big enough.

Conclusion: The Inflection Point

We're at an inflection point in how AI infrastructure gets built. For years, the story was about software: better algorithms, larger models, smarter systems. Those stories are still important. But increasingly, the bottleneck is physical: the hardware that runs the software.

Ricursive, Recursive, and Unconventional AI are betting that the next wave of AI value creation comes from solving the hardware bottleneck. Not by inventing new physics or discovering new materials. But by using AI itself to optimize the design of silicon.

It's a clever recursive loop. Use AI to design chips. Use better chips to train more capable AI. Use that AI to design even better chips.

Whether these three companies individually succeed is an open question. The technology is unproven at production scale. The business models are uncertain. The competitive dynamics favor the incumbents.

But the category feels real. The problem they're solving is real. The potential value is enormous. And the founders have legitimate expertise and validation.

So the $4 billion valuations, while aggressive, aren't irrational. They're bets on a future where chip design becomes faster, cheaper, and more accessible. A future where hardware innovation happens as quickly as software innovation. A future where custom silicon is as common as custom code.

That future might take 5-10 years to arrive. Or it might happen in 2-3 years if execution is flawless. But it feels like one of those rare moments where a structural shift in technology is becoming visible. And the investors writing massive checks at sky-high valuations are betting on being first.

Time will tell if they're right. But for now, the inflection point is here. The chip design revolution is starting, and three companies, each valued at $4-4.5 billion, are positioned to lead it.

FAQ

What is Ricursive and why did it raise $300 million in Series A?

Ricursive Intelligence is an AI chip design startup founded by former Google researchers Anna Goldie and Azalia Mirhoseini. The company raised

How does AI chip design work, and what makes it different from traditional chip design?

Traditional chip design relies on specialized engineers making manual decisions about the placement of circuit blocks, wire routing, and optimization. Ricursive's AI system uses reinforcement learning to explore millions of design configurations automatically and learn which ones produce the best performance, power efficiency, and thermal characteristics. This approach compresses years of iteration into months and can surface optimizations that human designers might take far longer to find, without hand-coded rules or domain-specific heuristics.
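The core loop behind automated placement is: propose a layout, score it, keep what improves the score. The sketch below is not reinforcement learning and is not Ricursive's method. It swaps in simulated annealing, a classic placement heuristic, because it fits in a few lines. The block names, nets, 4x4 grid, and the half-perimeter wirelength (HPWL) cost are all hypothetical simplifications; real placers also weigh congestion, timing, and power.

```python
import math
import random

# Hypothetical toy floorplan: each block occupies one distinct grid cell.
Position = tuple[int, int]


def hpwl(placement: dict[str, Position], nets: list[list[str]]) -> int:
    """Sum over nets of the half-perimeter of the bounding box around its blocks."""
    total = 0
    for net in nets:
        xs = [placement[b][0] for b in net]
        ys = [placement[b][1] for b in net]
        total += (max(xs) - min(xs)) + (max(ys) - min(ys))
    return total


def anneal(blocks, nets, grid=4, steps=5000, t0=5.0, seed=0):
    """Simulated annealing over placements; returns (best, best_cost, start_cost)."""
    rng = random.Random(seed)
    cells = [(x, y) for x in range(grid) for y in range(grid)]
    placement = dict(zip(blocks, rng.sample(cells, len(blocks))))  # random start
    cost = start_cost = hpwl(placement, nets)
    best, best_cost = dict(placement), cost
    for step in range(steps):
        temp = t0 * (1 - step / steps) + 1e-6  # linear cooling schedule
        block, target = rng.choice(blocks), rng.choice(cells)
        occupant = next((b for b, p in placement.items() if p == target), None)
        old = placement[block]
        placement[block] = target  # move the block; swap if the cell is taken
        if occupant is not None:
            placement[occupant] = old
        delta = hpwl(placement, nets) - cost
        if delta <= 0 or rng.random() < math.exp(-delta / temp):
            cost += delta  # accept: occasionally uphill, to escape local minima
            if cost < best_cost:
                best, best_cost = dict(placement), cost
        else:  # reject: restore the previous placement
            placement[block] = old
            if occupant is not None:
                placement[occupant] = target
    return best, best_cost, start_cost


blocks = ["cpu", "cache", "npu", "dram_ctl", "io", "pll"]
nets = [["cpu", "cache"], ["cache", "dram_ctl"], ["cpu", "npu"],
        ["npu", "cache"], ["io", "pll", "cpu"]]
best, best_cost, start_cost = anneal(blocks, nets)
print(f"HPWL: {start_cost} -> {best_cost}")
```

An RL approach replaces the random proposals with a learned policy that places blocks one at a time and is rewarded for low final cost, so it can reuse what it learned across many chips instead of searching each one from scratch.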

What are Ricursive's competitors, and why are three chip design startups raising at similar valuations simultaneously?

Ricursive faces competition from Recursive (founded by Richard Socher, formerly chief scientist at Salesforce) and Unconventional AI (founded by Naveen Rao, who previously founded Nervana Systems, which Intel acquired). All three companies raised, or were reported to be raising, at $4-4.5 billion valuations in late 2025. Investors are backing multiple teams because they believe the chip design problem is large enough for multiple approaches to coexist, and because the urgency of solving AI's compute bottleneck validates the entire category, not just individual companies.

What is the market opportunity for AI chip design tools, and why is it so large?

The market opportunity is massive because compute costs represent tens of billions in annual expenses for AI companies, and the infrastructure drives trillions in downstream value creation. If AI chip design tools can reduce compute costs by even 20-30%, that unlocks billions in savings while accelerating AI innovation timelines. Additionally, these tools make custom chip design accessible to more companies (AI startups, cloud providers, hardware makers), creating a new category of specialized silicon designed for specific workloads rather than general-purpose computation.
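A back-of-the-envelope sketch of how a 20-30% cost reduction becomes "billions in savings". The $20B annual compute spend is a hypothetical figure chosen to sit inside the "tens of billions" range above, not a reported number:

```python
def annual_savings(compute_spend: float, reduction: float) -> float:
    """Dollars saved per year if compute costs fall by `reduction` (a 0-1 fraction)."""
    if not 0.0 <= reduction <= 1.0:
        raise ValueError("reduction must be a fraction between 0 and 1")
    return compute_spend * reduction


# Hypothetical industry-wide compute spend, in billions of dollars per year.
SPEND_BN = 20.0
for reduction in (0.20, 0.30):
    saved = annual_savings(SPEND_BN, reduction)
    print(f"{reduction:.0%} reduction -> ${saved:.1f}B saved per year")
```

The savings scale linearly with spend, so the same percentages applied to a larger budget yield proportionally larger numbers.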

What are the biggest technical and business challenges that Ricursive and its competitors face?

The simulation-to-silicon gap is the primary technical challenge: chip designs that look optimal in simulations may underperform or fail in real manufacturing due to temperature variations, voltage fluctuations, and manufacturing tolerances. Business challenges include limited addressable market (only 50-100 companies design their own chips), path to monetization (licensing to foundries? selling tools? designing chips directly?), and competition from established semiconductor companies like NVIDIA and Intel that could adopt similar techniques. Manufacturing scalability and foundry relationships are also critical constraints.
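The simulation-to-silicon gap can be illustrated with a small Monte Carlo experiment. This is a hypothetical sketch, not how any of these companies model manufacturing: it treats each die's critical-path delay as its simulated (nominal) delay scaled by a random Gaussian variation factor, then counts how many dies still meet an assumed 1 ns clock. Both designs and their sensitivity values are invented for illustration:

```python
import random

CLOCK_PERIOD_NS = 1.0  # hypothetical timing target


def timing_yield(nominal_ns: float, sensitivity: float,
                 samples: int = 100_000, seed: int = 0) -> float:
    """Fraction of simulated dies whose critical-path delay meets the clock.

    Each die's delay is nominal_ns * (1 + sensitivity * z) with z ~ N(0, 1),
    a crude stand-in for combined process, voltage, and temperature variation.
    """
    rng = random.Random(seed)
    passing = sum(
        nominal_ns * (1 + sensitivity * rng.gauss(0.0, 1.0)) <= CLOCK_PERIOD_NS
        for _ in range(samples)
    )
    return passing / samples


# Design A is fastest in nominal simulation but sensitive to variation;
# design B is slightly slower on paper but far more robust.
designs = {"A": (0.95, 0.05), "B": (0.97, 0.01)}
for name, (nominal, sens) in designs.items():
    print(f"Design {name}: nominal {nominal} ns, "
          f"yield {timing_yield(nominal, sens):.1%}")
```

The design that wins in nominal simulation loses on yield, which is why an optimizer that only sees nominal numbers can mislead. Production flows rely on much richer variation models, such as process corners and statistical timing analysis.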

How does this trend toward AI-designed chips impact the future of AI development and innovation?

If successful, these tools will democratize hardware innovation by making chip design faster and cheaper, enabling smaller companies and startups to design custom silicon rather than relying solely on NVIDIA GPUs. This shift creates a virtuous cycle: better chip design tools lead to better chips, which make AI training more efficient, which enables more AI companies to exist, which creates more demand for chip innovation. Additionally, it suggests a future where efficiency becomes as important as scale in AI competition, potentially reducing the capital barriers for new entrants to the AI market.

Why is the timing of these companies significant, and what triggered this wave of funding?

The timing converged around several factors in late 2024 and 2025: AI training costs reached unsustainable levels (billion-dollar models), Google's public validation of AlphaChip in production TPUs provided credibility, and venture capital had massive funds to deploy for infrastructure plays. Additionally, top AI researchers like Goldie and Mirhoseini left established companies to start ventures at the exact moment when the industry recognized compute efficiency as the critical bottleneck. This alignment of urgent problem, proven technology, experienced founders, and available capital created the perfect environment for rapid fundraising and high valuations.

Could Ricursive or its competitors become the next trillion-dollar company?

While unlikely in the immediate term, the mathematical framework exists: if a company captures even 10-20% of the AI chip design market, combined with software licensing and services, the total addressable market could support a

The Next Steps: What to Watch

Ricursive's next critical milestones are shipping its first chip design platform (12-18 months), acquiring initial customers (foundries or AI companies designing custom silicon), and demonstrating measurable improvements in time-to-market and design efficiency. Series B funding will depend heavily on whether the gap between simulation and production silicon can be closed, and whether the company can show a clear path to $100M+ ARR (annual recurring revenue).
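Tying the $100M ARR milestone to the FAQ's estimate that only 50-100 companies design their own chips gives a rough sense of the deal sizes required. A back-of-the-envelope sketch, assuming (hypothetically) that Ricursive signs 20% of that market and that all revenue comes from flat annual contracts:

```python
TARGET_ARR = 100_000_000  # the $100M+ ARR milestone, in dollars


def required_acv(target_arr: float, customers: int) -> float:
    """Average annual contract value each customer must pay to hit target_arr."""
    if customers <= 0:
        raise ValueError("need at least one paying customer")
    return target_arr / customers


# Market size range from the FAQ; the 20% capture rate is a hypothetical assumption.
for market_size in (50, 100):
    customers = market_size // 5  # 20% of the market, rounded down
    acv = required_acv(TARGET_ARR, customers)
    print(f"{customers} customers -> ${acv / 1e6:.1f}M per customer per year")
```

With a customer base this small, each contract has to be worth millions of dollars a year, which is why enterprise deals with foundries and AI labs matter more than user growth.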

For the broader industry, the next 24-36 months will determine whether AI-driven chip design becomes standard practice or remains a niche capability. Watch for announcements about TSMC, Samsung, or Intel adopting these tools. Watch for traditional chip makers shipping AI-designed products. Watch for new startups copying this approach. And watch for failures: if the first generation of AI-designed chips underperform in production, that could set back the entire category.

The chip design revolution is starting. The outcome is uncertain. But the fact that three teams have attracted multibillion-dollar valuations to pursue it suggests that something real is happening underneath the hype. The infrastructure that powers AI is about to get a lot more interesting.

Key Takeaways

- Three AI chip design startups raised funding at $4-4.5B valuations in late 2025, signaling that investors believe chip design automation is real

- Ricursive's founders developed AlphaChip at Google, where it was used on production TPU chips, evidence that AI can design chips better than humans

- AI compute costs became the critical bottleneck for AI development, driving urgent demand for design efficiency solutions

- Multiple investors bet that better chip design tools could democratize hardware innovation and reduce capital barriers for new AI companies

- Technical challenges like simulation-to-silicon gaps and manufacturing constraints remain significant risks to the category
