AI Data Centers Hit Power Limits: How C2i is Solving the Energy Crisis [2025]

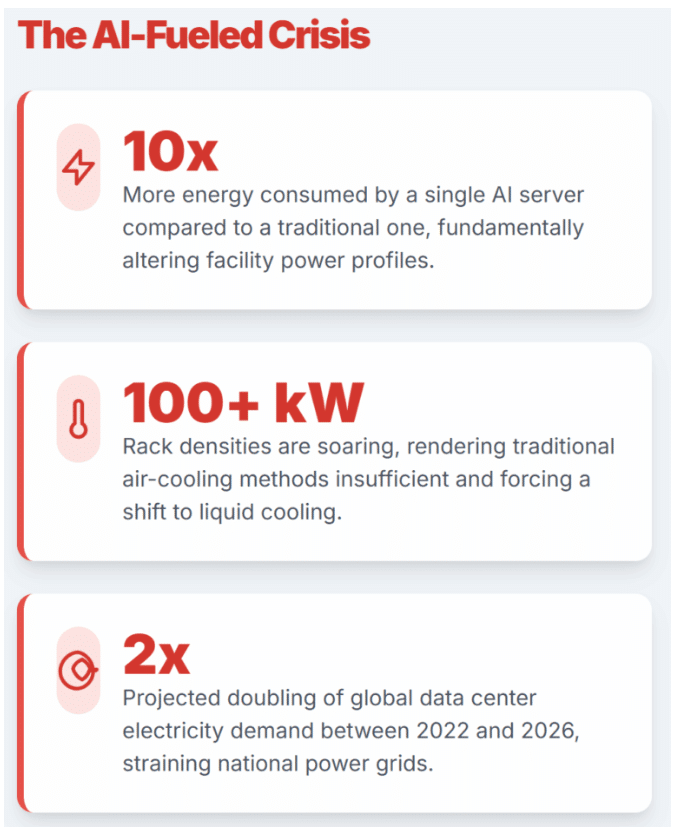

Power isn't sexy. Nobody wakes up excited about voltage converters or power delivery systems. But right now, power is the biggest constraint choking AI's growth.

You can build the fastest GPU on Earth. You can design chips that rival anything from Nvidia. But if you can't efficiently deliver electricity to those chips, you've got a very expensive paperweight.

That's the crisis hitting data-center operators worldwide, and it's about to reshape how we build AI infrastructure. A Bengaluru-based startup called C2i Semiconductors just raised $15 million to fix one of the most expensive, overlooked problems in the entire AI stack: the sheer waste of power conversion inside data centers.

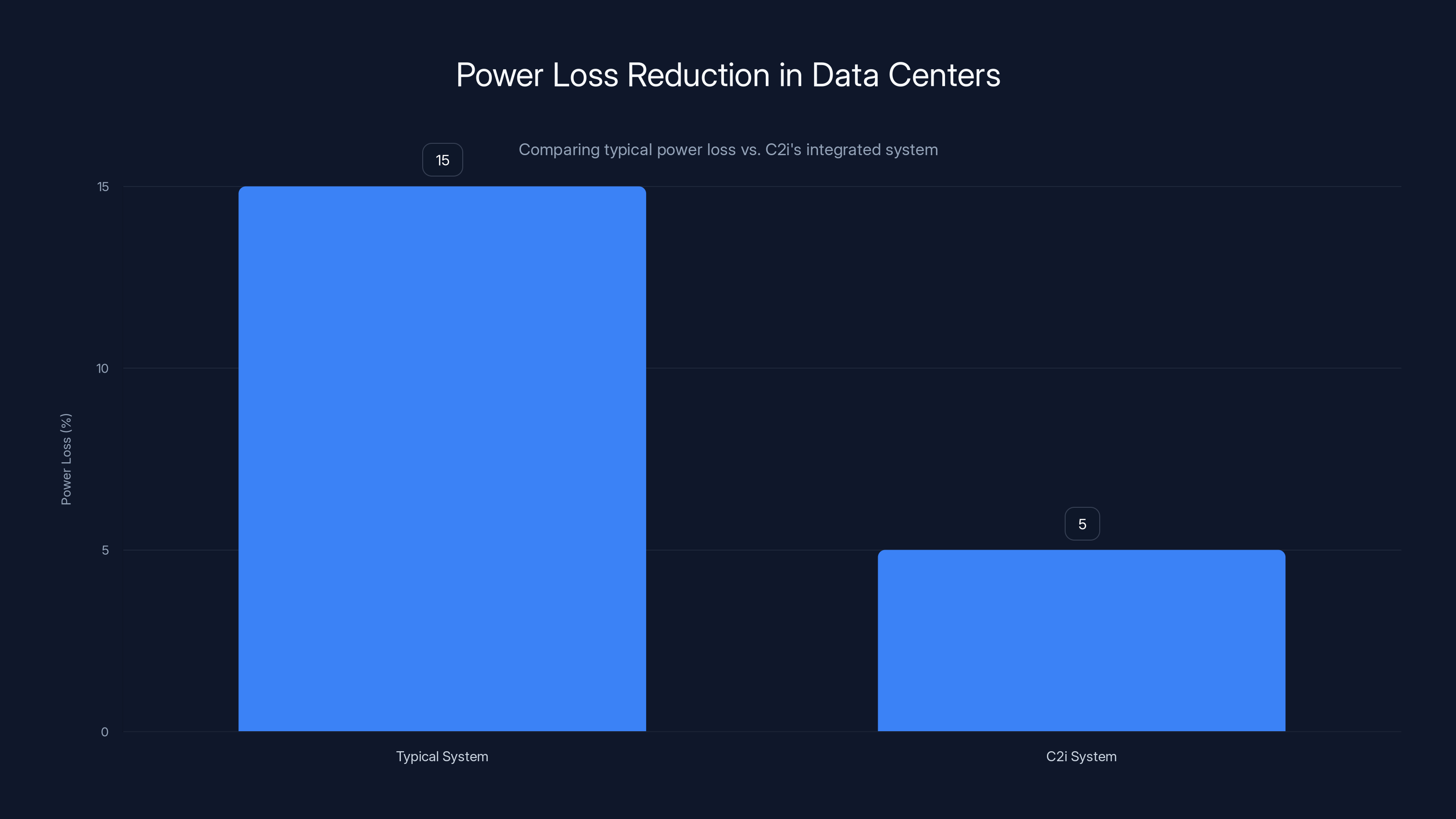

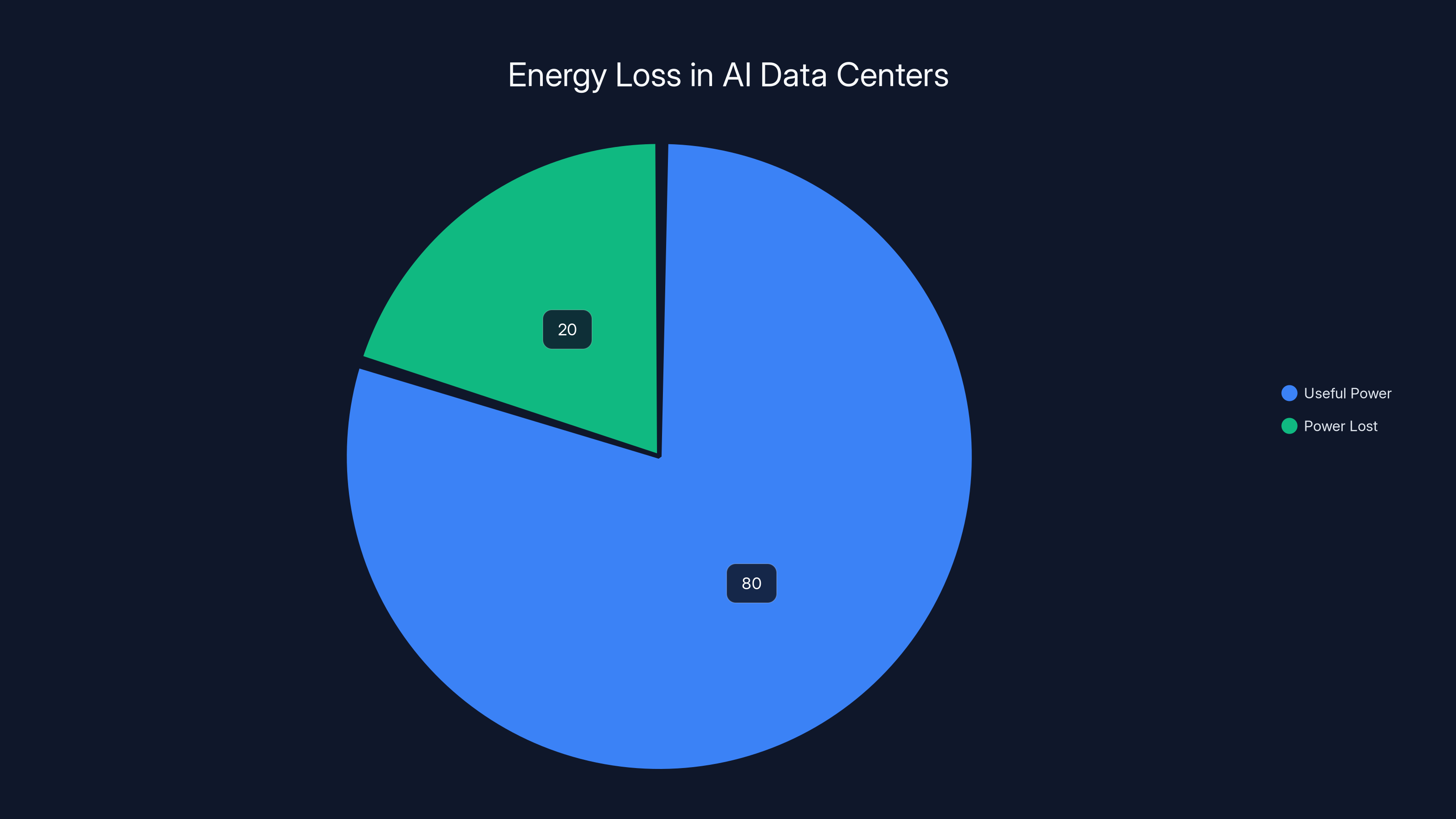

Here's what's happening: electricity travels from the grid into data centers at high voltage. Then it gets converted, stepped down, and converted again, several times over, before it reaches the GPU that actually uses it. Each conversion loses energy. We're talking 15% to 20% of power just vanishing as heat. In a 1-megawatt data center, that's 150 to 200 kilowatts lost continuously, every hour of every day.
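Every stage passes along only a fraction of what it receives, so the losses multiply rather than simply add. Here's a minimal Python sketch of that effect. The four stages follow the voltage steps described later in this article, but the per-stage efficiencies are hypothetical round numbers chosen for illustration, not measurements from C2i or any operator.

```python
from math import prod

# Hypothetical per-stage efficiencies (illustrative assumptions, not measured data).
STAGES = {
    "grid -> 400 V": 0.98,
    "400 V -> 48 V": 0.96,
    "48 V -> 12 V": 0.97,
    "12 V -> GPU core": 0.90,
}


def end_to_end_efficiency(stage_efficiencies):
    """Chained conversion: overall efficiency is the product of each stage's efficiency."""
    return prod(stage_efficiencies)


def loss_kw(input_kw, stage_efficiencies):
    """Power (a continuous rate, in kW) dissipated as heat before it reaches the load."""
    return input_kw * (1.0 - end_to_end_efficiency(stage_efficiencies))


eta = end_to_end_efficiency(STAGES.values())
print(f"End-to-end efficiency: {eta:.1%}")
print(f"Lost in a 1 MW (1,000 kW) facility: {loss_kw(1_000, STAGES.values()):.0f} kW")
```

With these assumed numbers no single stage looks bad (the worst is 90%), yet the chain delivers only about 82% of its input and loses roughly 179 kW per megawatt, inside the 15-20% range quoted above.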

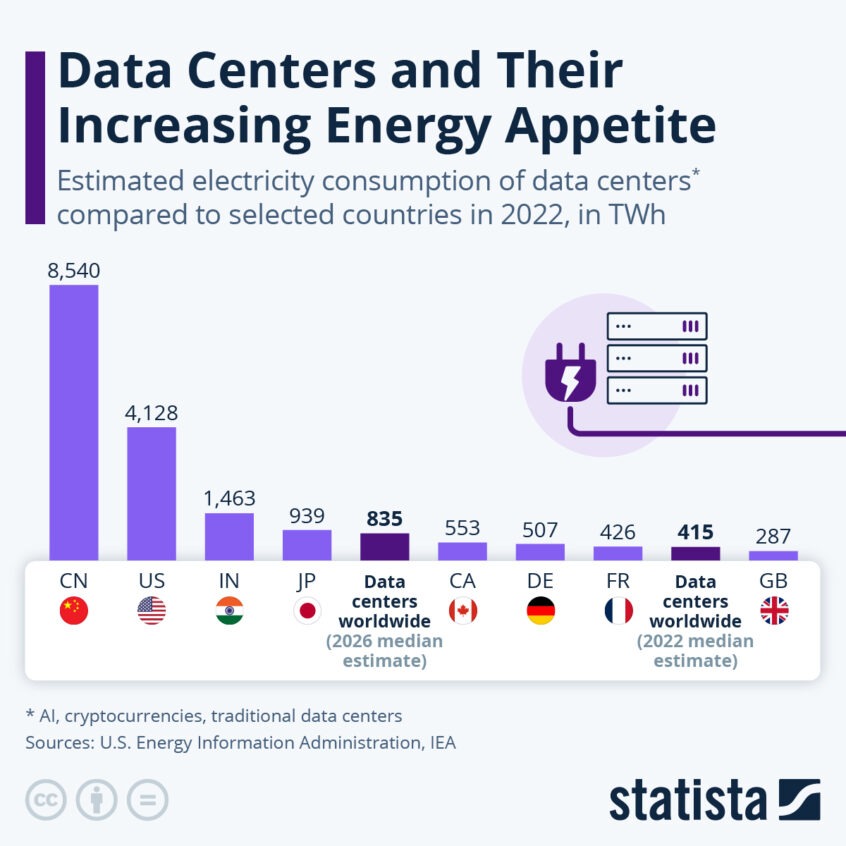

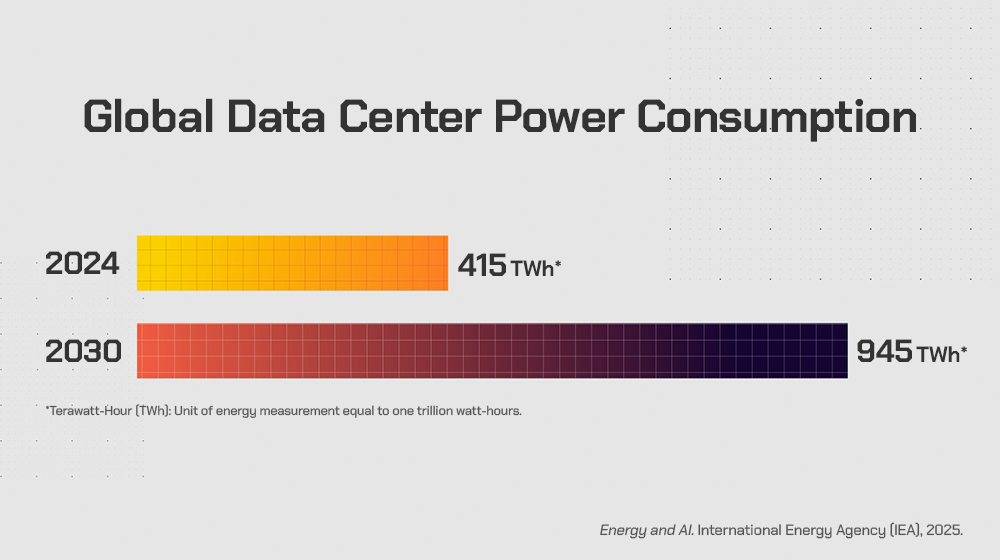

Scale that across the thousands of data centers powering AI training, inference, and everything in between, and you're looking at enough wasted energy to power entire countries.

TL;DR

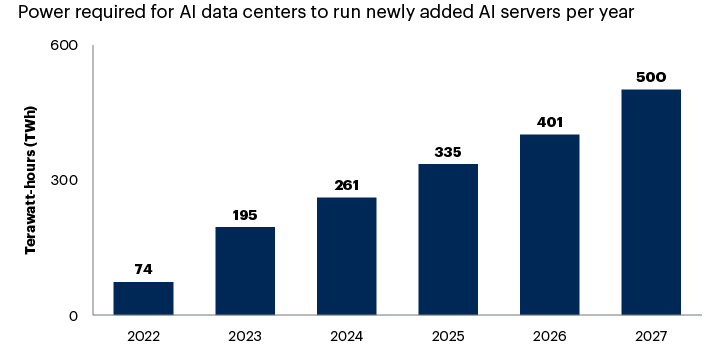

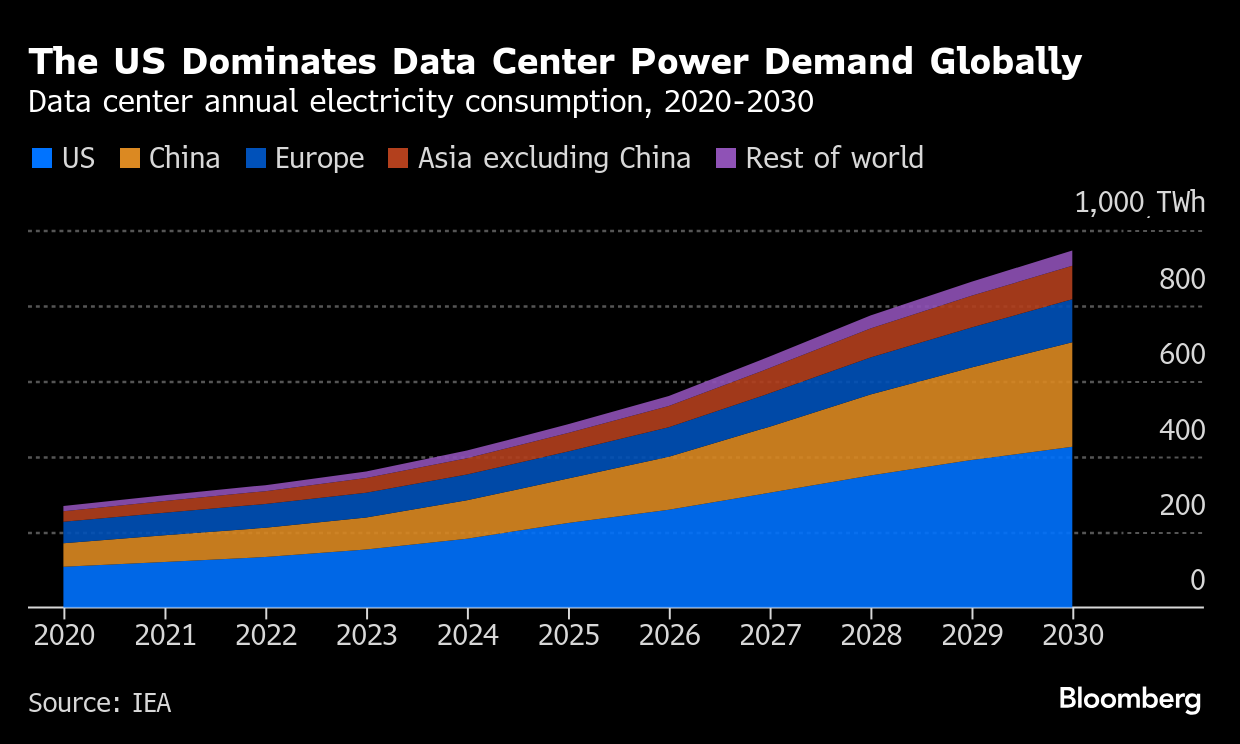

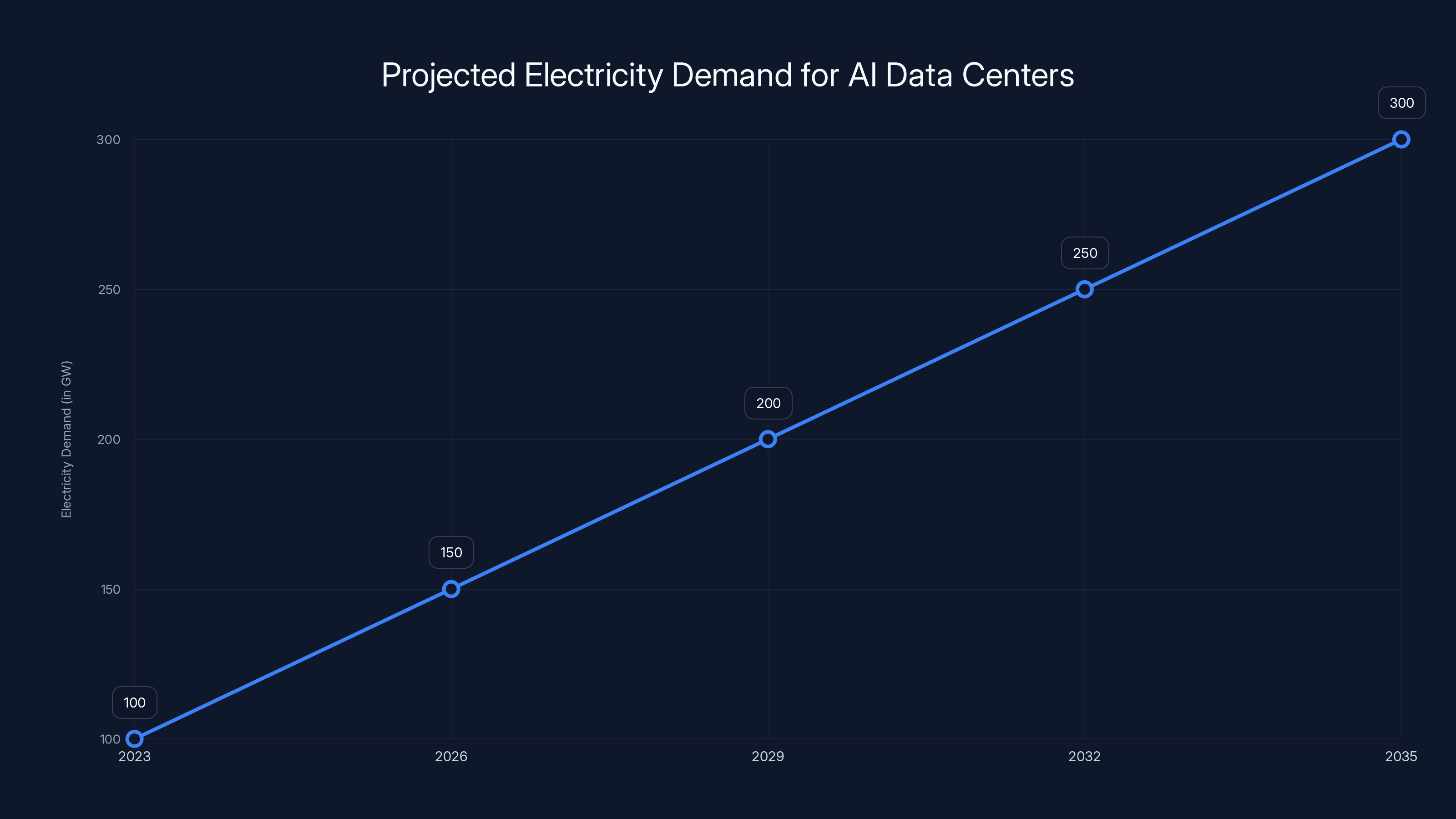

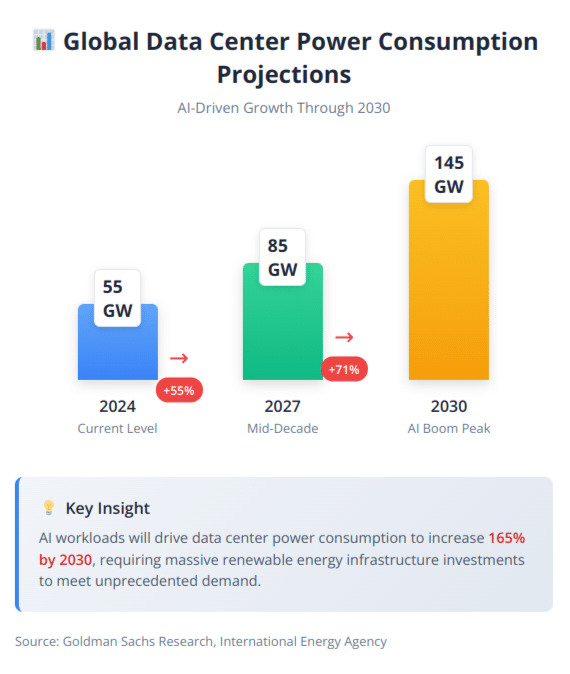

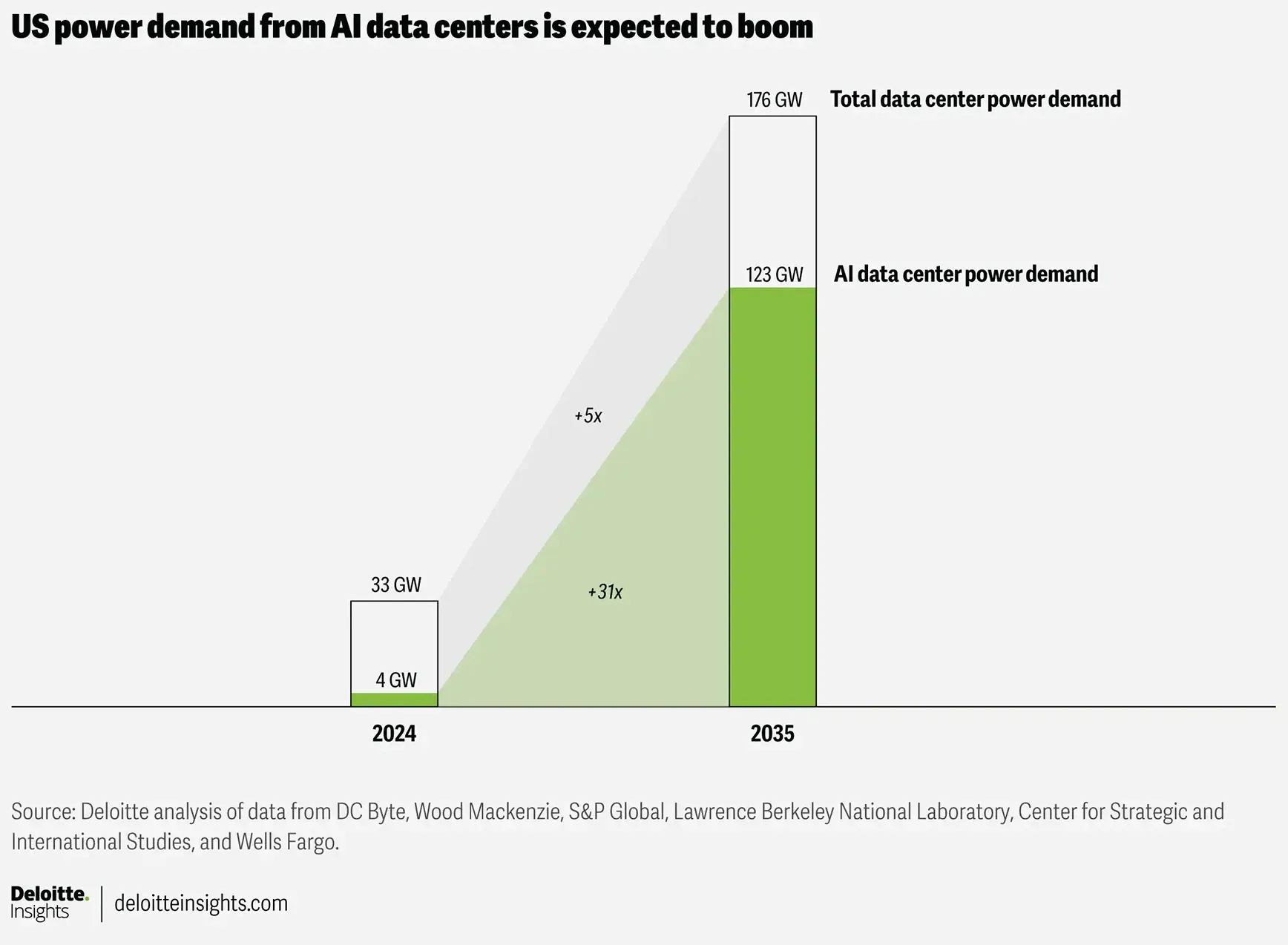

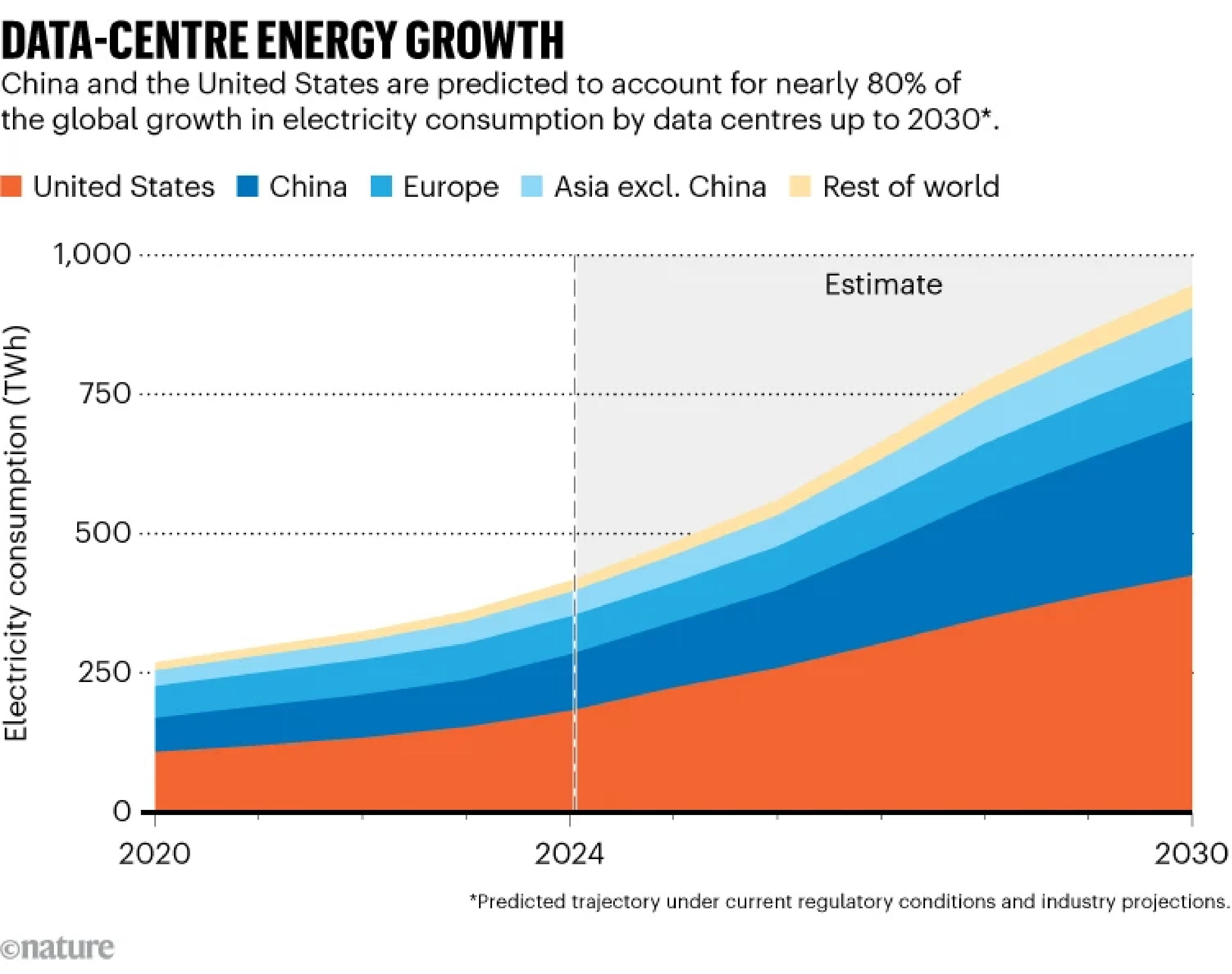

- Power, not compute, is now the limiting factor for AI data center scaling, with electricity demand projected to nearly triple by 2035

- C2i says its grid-to-GPU approach cuts end-to-end power losses by roughly 10 percentage points, translating to about 100 kilowatts saved per megawatt consumed

- Energy waste costs real money: a single percentage point of efficiency improvement can save billions across global hyperscaler infrastructure

- The semiconductor design ecosystem in India is maturing rapidly, making India-based startups viable competitors in specialized chip markets

- Timeline is compressed: C2i expects silicon samples by mid-2025 and customer validation within months, putting a potential fix in the near term rather than years away

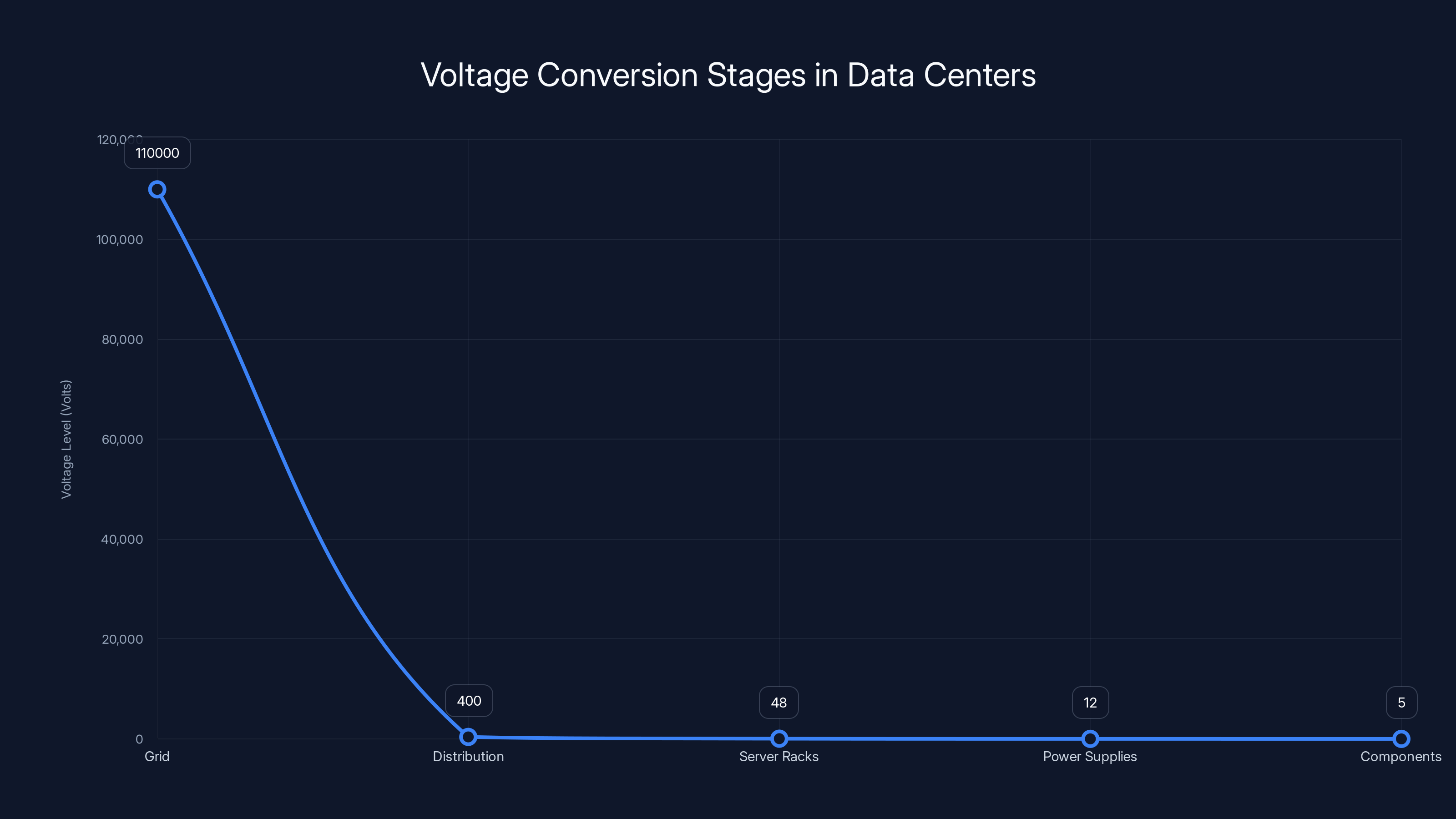

The chart illustrates the voltage conversion process in data centers, highlighting the significant drop from grid to component level, which involves multiple conversion stages.

Why Power Became the New Bottleneck in AI Infrastructure

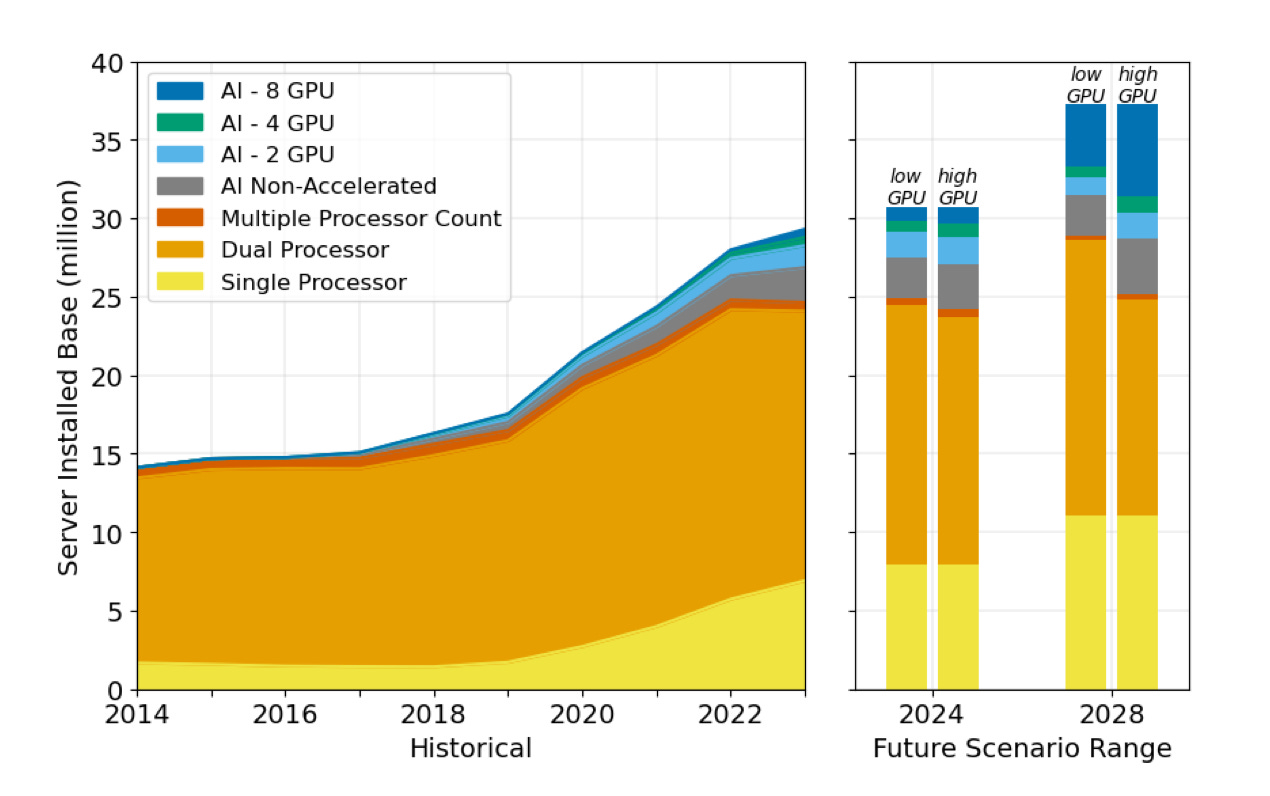

For years, the constraint was compute. How do we get enough transistors? How do we make them faster? The entire semiconductor industry optimized for one goal: more computing power per dollar.

But compute is only half the equation.

Think about it like a water system. You can build the world's most efficient pumps, but if your pipes are leaking 20% of the water before it reaches your faucet, the pumps' efficiency can only do so much. You need both the pump and the pipes.

AI data centers are hitting that limit right now. Compute is becoming abundant. Nvidia is producing record volumes of GPUs. AMD is competing aggressively. Custom chips from hyperscalers like Google, Meta, and Amazon are coming online. The constraint has shifted.

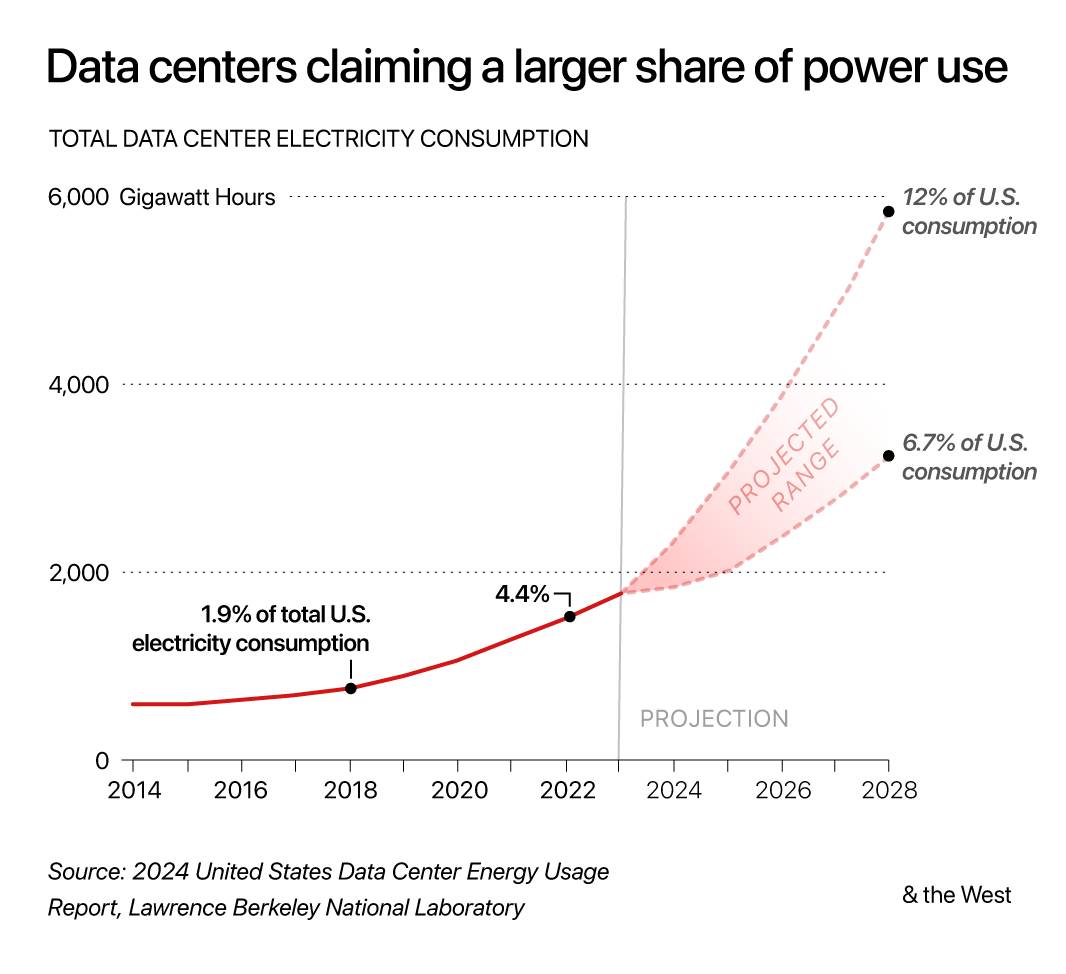

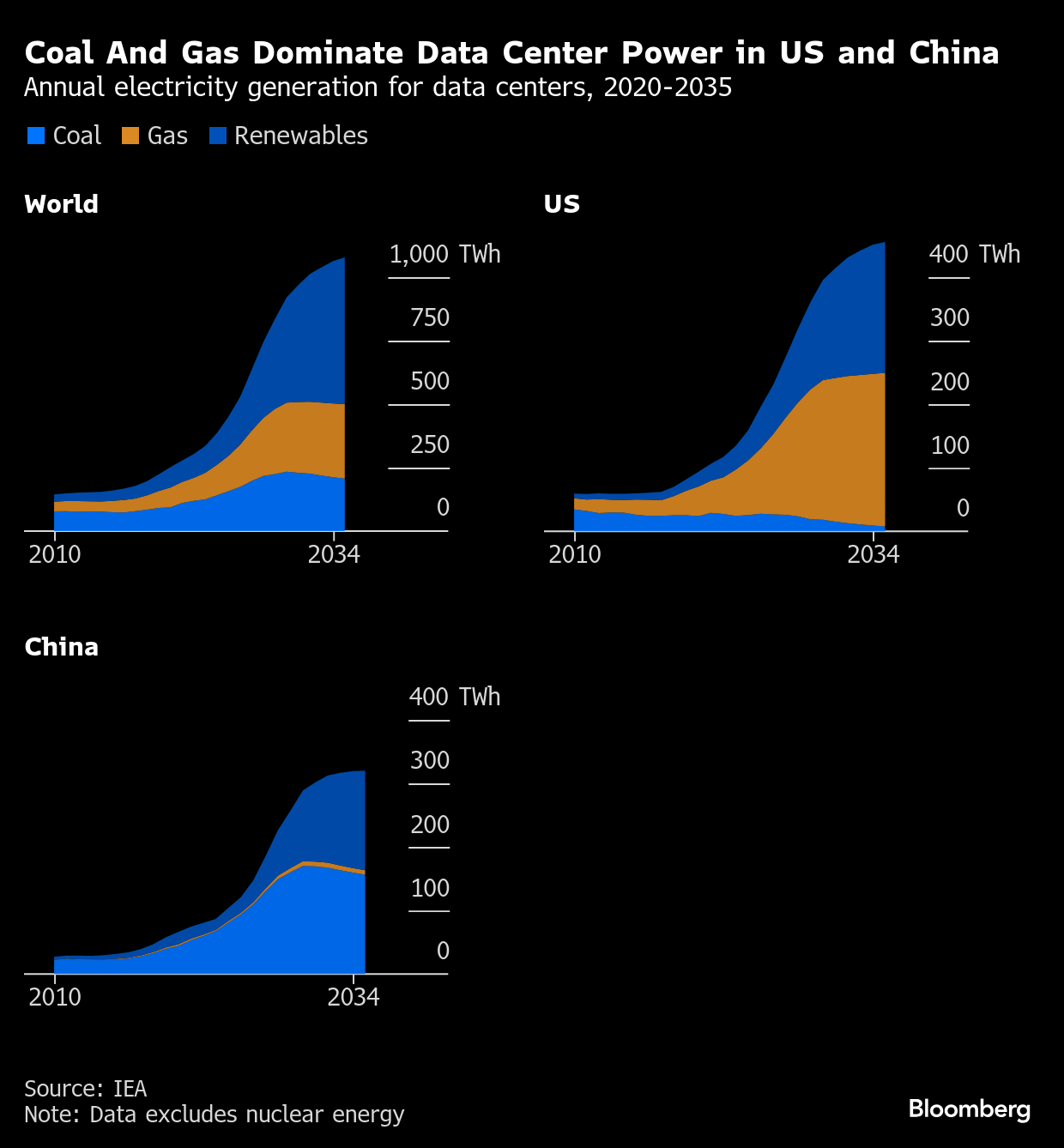

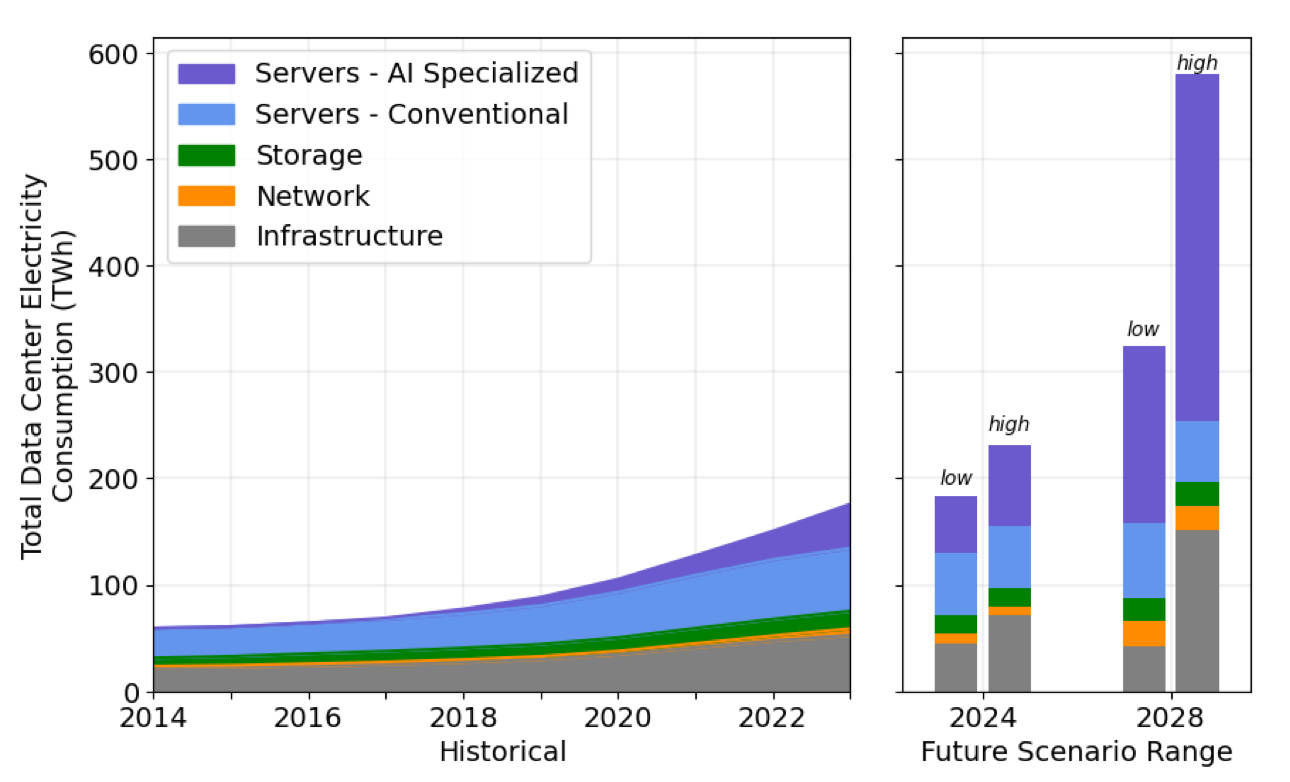

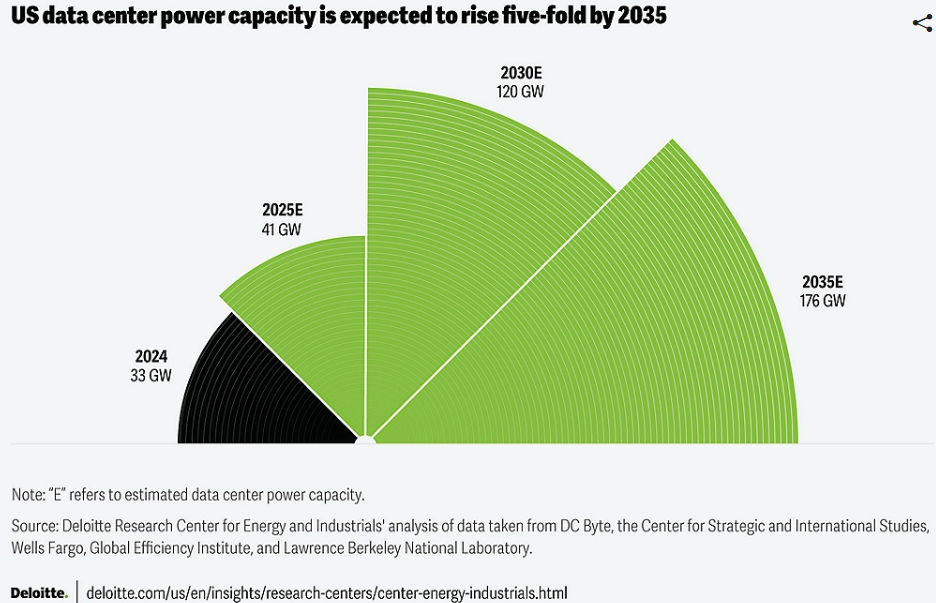

Power demand from data centers is projected to nearly triple by 2035, according to BloombergNEF analysis from December 2025. That's not hyperbole. Goldman Sachs Research estimates data-center power demand could surge 175% by 2030 from 2023 levels. To put that in perspective, that's equivalent to plugging another top-10 power-consuming country into the grid.

Hyperscalers are already feeling the pressure. Facility planning now starts with a power budget, not a square footage budget. Regional electrical grids can't keep up. Some data centers are being built in locations chosen primarily for cheap electricity access, not for proximity to users or network hubs.

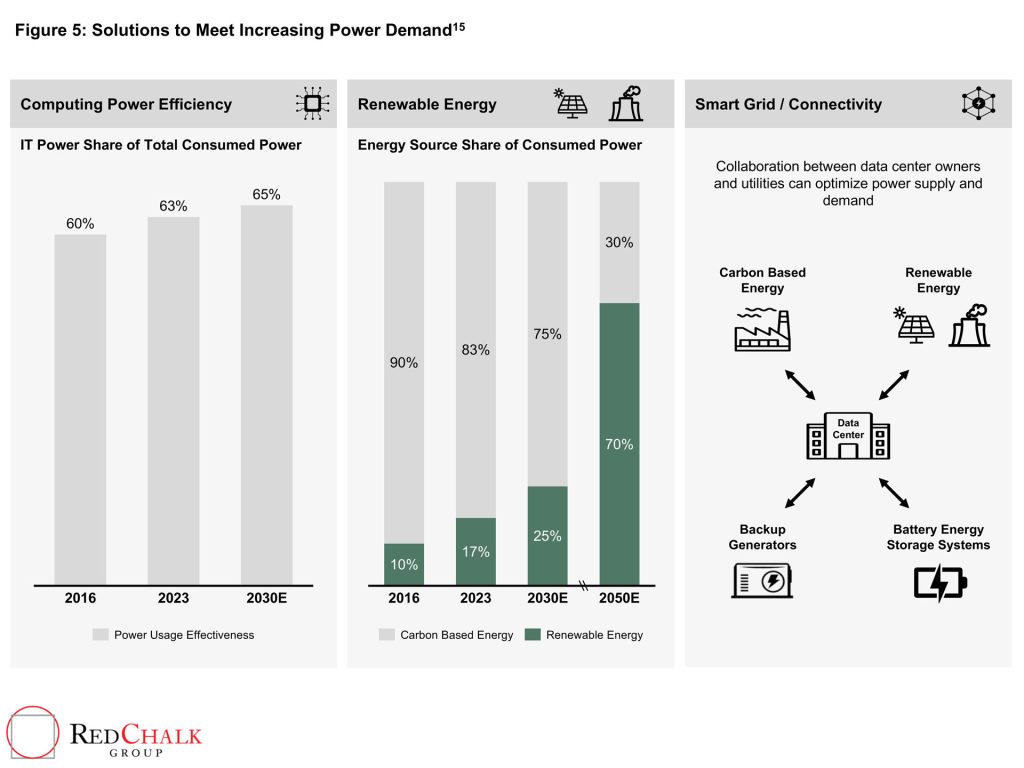

But here's the deeper problem that almost nobody talks about: a meaningful share of that projected demand never does useful work in the chips at all. It's lost as waste heat along the way.

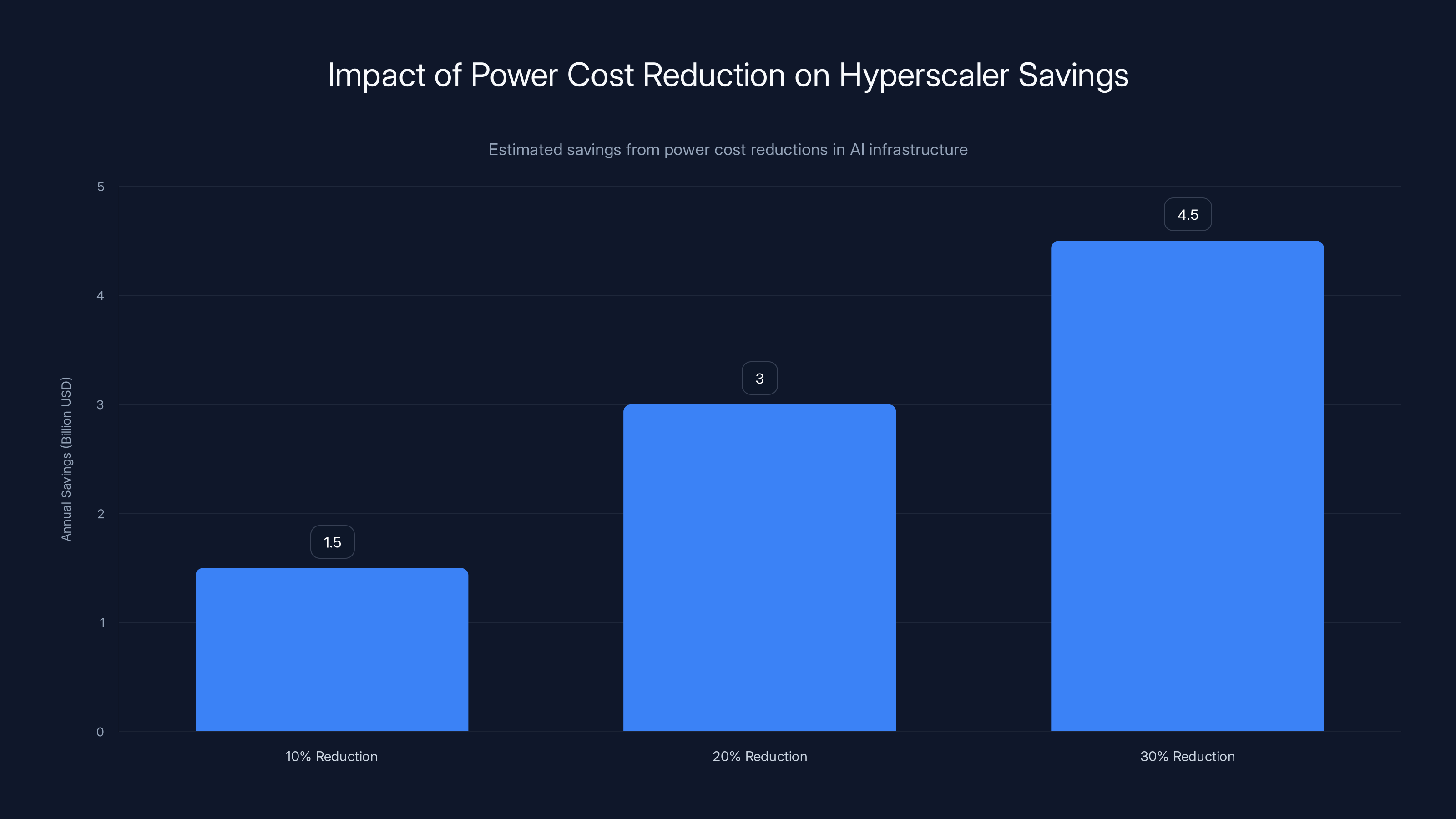

Estimated data shows that a 10% reduction in power costs can save

The Hidden Cost of Voltage Conversion in Data Centers

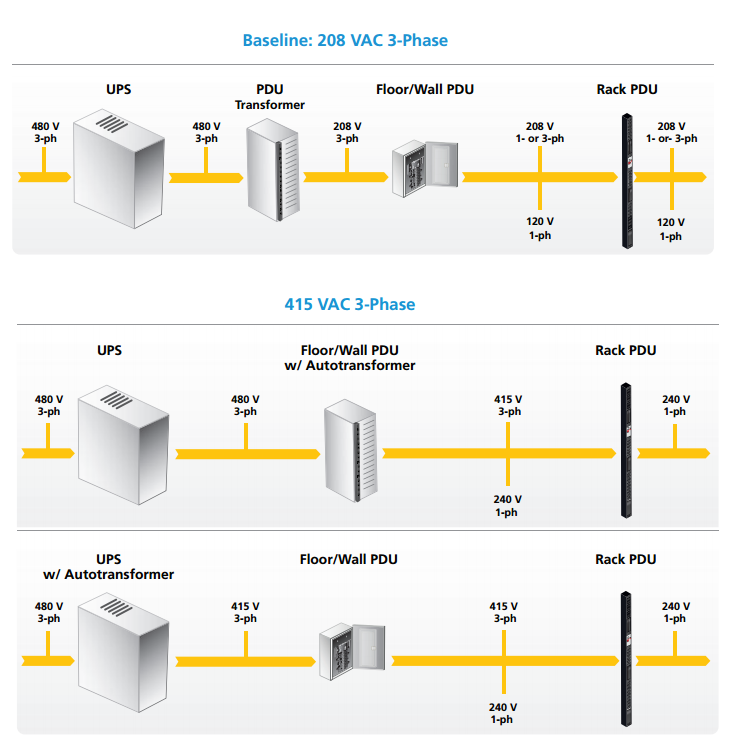

Power delivery in a data center is deceptively complex. Electricity doesn't flow in a straight line from the grid to the GPU. It gets transformed, regulated, and converted multiple times.

Start at the grid: 110 kilovolts or higher, depending on your region. That gets converted to 400 volts for distribution across the data center. Then 48 volts for the server racks. Then 12 volts on the server boards. Then, finally, the sub-1-volt levels that GPU cores actually run on. Each conversion involves inductors, capacitors, and semiconductors, all of which dissipate energy as heat.
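The last few steps are so punishing because of current. For a fixed amount of power, current rises as voltage falls (I = P / V). A quick sketch, assuming a hypothetical 1 kW accelerator and a 0.8 V core voltage, and ignoring conversion losses for simplicity:

```python
# Voltage levels from the ladder above; 0.8 V is an assumed GPU core voltage.
LADDER_VOLTS = [400.0, 48.0, 12.0, 0.8]
LOAD_WATTS = 1_000.0  # hypothetical 1 kW accelerator; conversion losses ignored


def current_amps(power_watts: float, volts: float) -> float:
    """Current needed to deliver `power_watts` at `volts` (I = P / V)."""
    return power_watts / volts


for volts in LADDER_VOLTS:
    print(f"{volts:>6.1f} V -> {current_amps(LOAD_WATTS, volts):>7.1f} A")
```

The same kilowatt that needs 2.5 A at 400 V needs 1,250 A at the core, which is why the final conversion stage sits as close to the chip as possible.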

C2i's co-founder Preetam Tadeparthy explained it clearly: "What used to be 400 volts has already moved to 800 volts, and will likely go higher." In theory, higher voltages mean lower losses: the same power at a higher voltage needs less current, and resistive losses scale with the square of the current. But implementation matters. Design matters. Efficiency matters.
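The current-squared relationship is easy to check. This sketch compares resistive (I²R) losses for the same power carried at 400 V and at 800 V. The 100 kW load and 5-milliohm path resistance are made-up values, and the model covers only conduction loss in the wiring, not switching losses inside converters.

```python
def conduction_loss_watts(power_watts: float, volts: float, resistance_ohms: float) -> float:
    """Resistive loss (I^2 * R) in a path carrying `power_watts` at `volts`."""
    current = power_watts / volts
    return current ** 2 * resistance_ohms


# Hypothetical values for illustration only.
POWER_W = 100_000.0   # 100 kW
RESISTANCE = 0.005    # 5 milliohms of path resistance

loss_400 = conduction_loss_watts(POWER_W, 400.0, RESISTANCE)
loss_800 = conduction_loss_watts(POWER_W, 800.0, RESISTANCE)
print(f"400 V: {loss_400:.1f} W lost")
print(f"800 V: {loss_800:.1f} W lost ({loss_400 / loss_800:.0f}x lower)")
```

Doubling the voltage halves the current and cuts this loss to a quarter, which is the physics behind the move from 400 V to 800 V.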

The current approach uses what's called a "point-of-load" converter philosophy. Each component or subsystem gets its own power conversion circuitry. The server gets a converter. The GPU carrier gets another converter. The memory gets its own supply. The storage gets another one. It's modular and flexible but inherently inefficient because you're converting and reconverting the same power multiple times.

C2i's insight is architectural. Instead of treating power delivery as separate components scattered throughout the data center, they're designing it as one integrated system. Imagine redesigning your plumbing so every pipe from the main line to every faucet was optimized for minimal pressure loss—that's conceptually what they're doing with electrical power.

The math on losses is compelling. If C2i's grid-to-GPU system cuts end-to-end losses from the current 15-20% down to 5-10%, that's not a marginal improvement. That's foundational.

For every megawatt a data center consumes, they save roughly 100 kilowatts. A single 10-megawatt AI cluster saves 1 megawatt. For reference, 1 megawatt of power consumption running 24/7 for a year costs somewhere between
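Whatever the price of electricity, the energy side of this arithmetic is straightforward. The sketch below reproduces the simple rule used in this article (savings equal facility power times the drop in loss fraction) and, for comparison, a stricter version that holds the power delivered to the GPUs constant. The 10 MW cluster and the 15%-to-5% loss figures mirror the scenario above; the function names are illustrative.

```python
HOURS_PER_YEAR = 24 * 365  # 8,760 hours (ignores leap years)


def simple_savings_kw(input_kw: float, loss_before: float, loss_after: float) -> float:
    """Rule of thumb used in the text: input power times the drop in loss fraction."""
    return input_kw * (loss_before - loss_after)


def same_load_savings_kw(input_kw: float, loss_before: float, loss_after: float) -> float:
    """Input power saved while delivering the same useful power to the load."""
    delivered_kw = input_kw * (1.0 - loss_before)
    return input_kw - delivered_kw / (1.0 - loss_after)


def annual_mwh(kw: float) -> float:
    """Energy used by a constant load running 24/7 for a year, in MWh."""
    return kw * HOURS_PER_YEAR / 1_000


cluster_kw = 10_000  # 10 MW cluster
rough = simple_savings_kw(cluster_kw, 0.15, 0.05)
strict = same_load_savings_kw(cluster_kw, 0.15, 0.05)
print(f"Rule of thumb: {rough:,.0f} kW saved, {annual_mwh(rough):,.0f} MWh/year")
print(f"Same delivered load: {strict:,.0f} kW saved, {annual_mwh(strict):,.0f} MWh/year")
```

The two agree closely: roughly 1 MW of continuous savings, or on the order of 8,760 to 9,200 MWh a year. Multiply by your own electricity price to get a dollar figure.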

The Economics That Make This Investment Worth Billions

Peak XV Partners didn't back C2i just because power is interesting technically. They backed it because the unit economics are brutal.

After you build a data center—the real estate, the buildings, the cooling systems, the network hardware—the dominant operating expense becomes power. It's not close. For a hyperscaler running large-scale AI infrastructure, power often represents 30-40% of total operating costs. Sometimes higher.

That means even tiny efficiency improvements compound into massive savings. A 10% reduction in power losses doesn't just save 10% on electricity bills. It cascades. Less heat means lower cooling costs. Lower cooling costs mean you need less physical space for cooling equipment. Less space means smaller buildings or more compute in the same footprint. More compute capacity means better amortization of fixed costs.
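One common way to approximate that cascade is through PUE (power usage effectiveness: total facility power divided by IT power). Under the simplifying assumption that cooling and other overhead scale in proportion to IT load, every kilowatt of conversion loss removed inside the IT equipment saves about PUE kilowatts at the utility meter. The PUE of 1.3 below is an assumed value, not a figure from the source.

```python
def facility_savings_kw(it_savings_kw: float, pue: float) -> float:
    """Facility-level saving when IT-side losses fall by `it_savings_kw`.

    Assumes overhead (mostly cooling) scales proportionally with IT power,
    so total facility power = IT power * PUE.
    """
    return it_savings_kw * pue


def overhead_savings_kw(it_savings_kw: float, pue: float) -> float:
    """The part of the facility saving that comes from reduced overhead such as cooling."""
    return it_savings_kw * (pue - 1.0)


ASSUMED_PUE = 1.3  # hypothetical facility
it_saved = 100.0   # kW of conversion loss removed per MW, per the article's estimate
print(f"Facility saves ~{facility_savings_kw(it_saved, ASSUMED_PUE):.0f} kW, "
      f"of which ~{overhead_savings_kw(it_saved, ASSUMED_PUE):.0f} kW is overhead")
```

Real cooling plants don't scale perfectly linearly with load, so treat this as a first-order estimate.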

Rajan Anandan, managing director at Peak XV Partners, put it plainly: "If you can reduce energy costs by 10 to 30%, that's like a huge number. You're talking about tens of billions of dollars."

That's not marketing speak. That's literally the math. If hyperscalers globally run approximately 15-20 gigawatts of dedicated AI compute infrastructure (a conservative estimate), and C2i's solution saves 10% of power costs at scale, that's
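Headline figures like "tens of billions" depend entirely on the inputs, so it helps to see the formula. This sketch computes annual savings from a fleet size, the fraction of power saved, and an electricity price. All three inputs in the example are hypothetical placeholders: plug in real fleet sizes and tariffs to get a meaningful figure.

```python
HOURS_PER_YEAR = 24 * 365


def annual_savings_usd(fleet_mw: float, fraction_saved: float, usd_per_mwh: float) -> float:
    """Yearly electricity savings for a fleet running continuously at `fleet_mw`."""
    saved_mw = fleet_mw * fraction_saved
    return saved_mw * HOURS_PER_YEAR * usd_per_mwh


ASSUMED_PRICE = 80.0  # hypothetical $/MWh, not a quoted tariff

for fleet_mw in (20, 20_000):  # a single 20 MW site vs. a hypothetical 20 GW fleet
    usd = annual_savings_usd(fleet_mw, 0.10, ASSUMED_PRICE)
    print(f"{fleet_mw:>6,} MW fleet: ~${usd:,.0f} per year")
```

Scale is everything: under the assumed $80/MWh price, the same 10% saving is worth about $1.4 million a year at one 20 MW site and about $1.4 billion a year across 20 GW.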

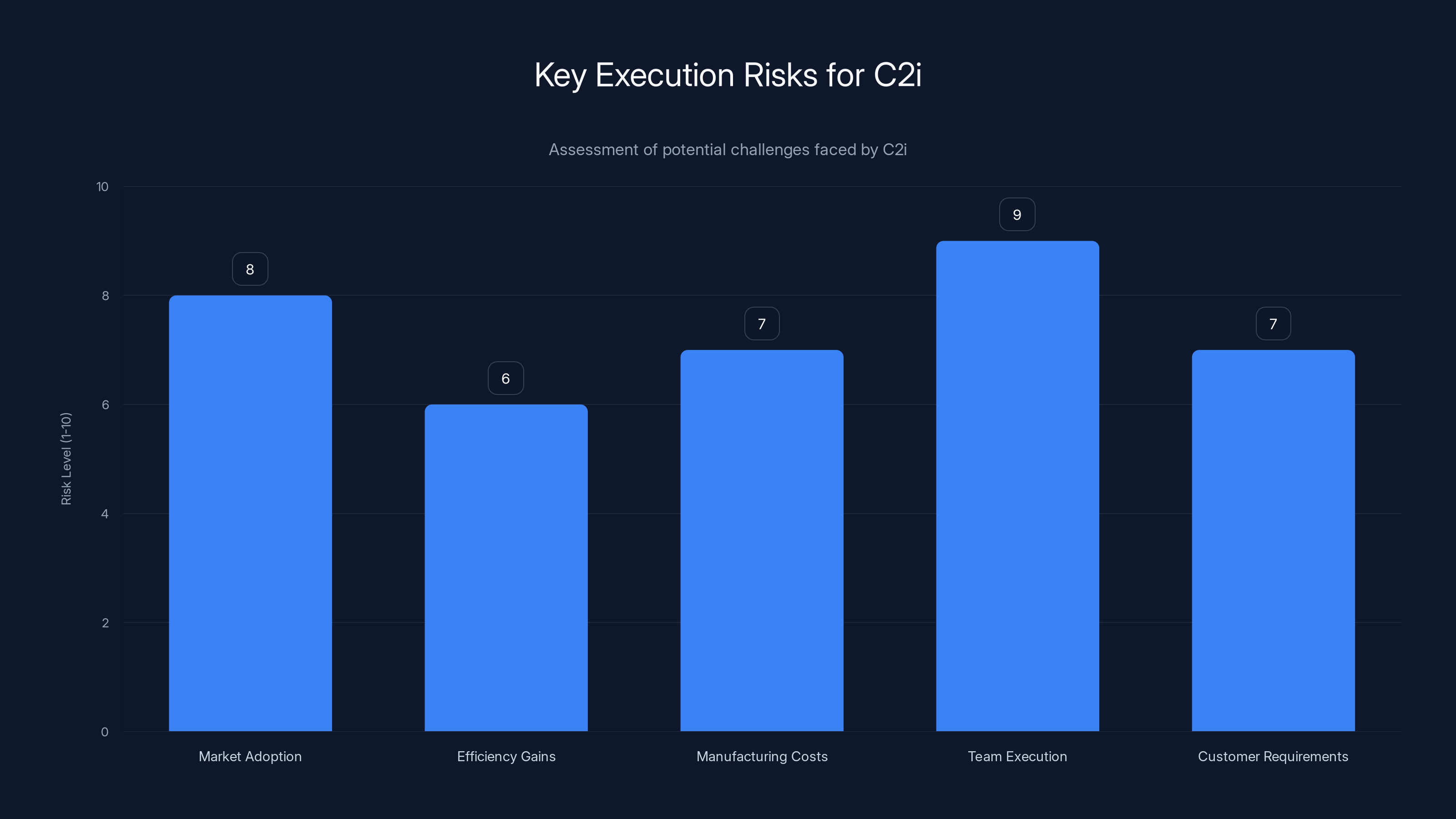

But unit economics alone don't explain the investment. Execution risk is enormous.

C2i's integrated power delivery system reduces power conversion losses from 15% to 5%, significantly saving energy and cooling costs in data centers.

Why Power Delivery is One of the Hardest Problems in Semiconductors

Power delivery systems are the forgotten infrastructure of computing. They work invisibly until they don't. When they fail, entire data centers go dark.

That invisibility creates a qualification problem. Data center operators don't swap in new power solutions on a whim. They need years of proven reliability, multiple redundancies, thermal validation, failure analysis, and long-term supply commitments. A new power delivery solution from an unknown startup? That's not a casual evaluation. That's a multi-year qualification process.

C2i's founders understand this because they came from Texas Instruments, one of the few semiconductor companies that have dominated power delivery for decades. Ram Anant, Vikram Gakhar, Preetam Tadeparthy, and Dattatreya Suryanarayana all have backgrounds in power systems design. They know the entrenched players, the regulatory hurdles, and the technical complexity.

They also know why nobody's solved this problem yet. Power delivery requires simultaneous optimization across multiple domains: silicon design, packaging, thermal management, control algorithms, and system integration. Each domain has its own tradeoffs. Do you prioritize efficiency or response time? Cost or reliability? Density or serviceability?

Most semiconductor startups solve one problem. Maybe they design a more efficient buck converter, or a better voltage regulator controller, or optimized packaging. But end-to-end system redesign? That requires coordinating silicon, packaging, and architecture simultaneously. That's capital-intensive. That takes years. That has massive execution risk.

Which is exactly why there aren't many companies doing it. It's the hardest problem, which means the biggest opportunity for whoever solves it.

C2i's Grid-to-GPU Architecture: What's Different

Most power systems are designed locally. Each component optimizes its own conversion without considering the broader system. The GPU power supply doesn't know about the memory supply. The memory supply doesn't know about the SSD supply. It's like having separate water systems for different rooms in a building instead of one main line.

C2i's approach is to treat power delivery as a single, integrated system spanning from the incoming grid connection all the way to the GPU itself. They call it grid-to-GPU architecture.

What does that actually mean in practice? It means:

Single-stage conversion where possible: Instead of converting 400V to 48V, then 48V to 12V, then 12V to the final voltage the GPU needs, you reduce intermediate steps. Fewer conversions mean fewer losses.

Integrated control systems: Rather than independent voltage regulators scattered throughout, you have coordinated control that manages power distribution across the entire system. When one component needs more power, the system redistributes from lower-demand areas instead of inefficiently converting extra power.

Optimized packaging: Power delivery systems include passive components (inductors, capacitors) and semiconductors. C2i's packaging approach minimizes parasitic resistance and inductance, reducing losses in the physical layout itself.

Thermal optimization: Power losses in traditional systems manifest as heat. Better efficiency means less heat, which means lower cooling requirements. C2i's design considers cooling from the ground up.

Custom silicon: Some of the losses in existing systems come from using general-purpose power management ICs designed for broad applications. C2i is designing silicon specifically for the grid-to-GPU use case, optimizing every transistor for this specific application.
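The single-stage idea comes with a condition worth making explicit: removing an intermediate step only helps if the merged converter beats the combined efficiency of the two stages it replaces. A minimal sketch, where every efficiency (including the direct 48 V-to-core converter) is a hypothetical value, not a C2i specification:

```python
from math import prod


def chain_loss(stage_efficiencies: dict[str, float]) -> float:
    """Fraction of input power lost across a chain of conversion stages."""
    return 1.0 - prod(stage_efficiencies.values())


# Hypothetical stage efficiencies for illustration only.
three_stage = {"400V->48V": 0.96, "48V->12V": 0.97, "12V->core": 0.90}
two_stage = {"400V->48V": 0.96, "48V->core (direct)": 0.91}

for name, chain in (("three-stage", three_stage), ("two-stage", two_stage)):
    print(f"{name}: {chain_loss(chain):.1%} lost")

# Break-even: the direct stage must beat 0.97 * 0.90 = 0.873 to come out ahead.
print(f"break-even direct-stage efficiency: {0.97 * 0.90:.3f}")
```

Here the direct stage's assumed 91% edges out the 87.3% the two stages achieve together, trimming losses by about 3.6 points. Had it landed at 86%, the "simpler" chain would lose more.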

The claimed improvement is a cut of roughly 10 percentage points in end-to-end losses. That might sound like a rounding error, but in power systems, it's transformational. Each additional percentage point of efficiency is harder to win than the last: going from 80% to 90% efficiency means halving losses, which is far harder than going from 70% to 80%. Cutting losses from 15% to 5%, for example, removes two-thirds of the waste, and that takes architectural thinking, not incremental optimization.
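Absolute and relative reductions are easy to mix up here, so a short sketch helps. It also shows why each efficiency point gets harder: the same 10-point jump has to remove a larger share of the remaining waste the closer you get to 100%.

```python
def loss_reduction(loss_before: float, loss_after: float) -> tuple[float, float]:
    """Return (absolute cut in percentage points, relative cut as a fraction of the old loss)."""
    absolute_pp = (loss_before - loss_after) * 100
    relative = (loss_before - loss_after) / loss_before
    return absolute_pp, relative


for before, after in ((0.15, 0.05), (0.30, 0.20), (0.20, 0.10)):
    pp, rel = loss_reduction(before, after)
    print(f"losses {before:.0%} -> {after:.0%}: {pp:.0f} points, {rel:.0%} of the waste removed")
```

Going from 70% to 80% efficiency removes a third of the waste; going from 80% to 90% has to remove half of it.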

In AI data centers, approximately 20% of power is lost during conversion processes, highlighting a significant inefficiency in current systems. Estimated data.

The Timeline: When This Actually Impacts Data Centers

C2i's first silicon designs are expected back from fabrication between April and June 2025. That's fast for semiconductor development, which speaks to the maturity of their design team and the leverage they're getting from India's semiconductor ecosystem.

Once they have silicon, the company plans to validate performance with data-center operators and hyperscalers. Multiple large operators have apparently asked to review the data, which is encouraging. It suggests genuine interest rather than polite listening.

Why the fast validation cycle? Because the problem is so urgent that customers are motivated to move quickly. Data centers aren't waiting patiently for solutions. They're actively seeking efficiency improvements. C2i's timing coincides with when this need became critical.

Deployment will still take time. Early adopters might have C2i systems running in pilot configurations by late 2025 or early 2026. Real scale—retrofitting existing data centers or standardizing in new builds—probably takes until 2026-2027. But in the fast-moving world of AI infrastructure, that's rapid.

Peak XV Partners: Why This Specific Investor Believed

Peak XV Partners is the continuation of Sequoia Capital's India and Southeast Asia operations after a 2023 separation. They're not a new fund. They're one of the most sophisticated tech investors globally, just with a regional focus.

Their decision to back C2i signals something important: they believe the India semiconductor ecosystem is mature enough to develop globally competitive products. Not design centers for foreign companies. Not offshore development. Actual products designed and owned in India.

For years, India's semiconductor contributions were mostly software, services, and design outsourcing. Increasingly, Indian engineers are founding semiconductor companies that own their IP and compete globally.

Rajan Anandan's quote on this is telling: "The way you should look at semiconductors in India is, this is like 2008 e-commerce. It's just getting started."

E-commerce in 2008 was wildly fragmented. Lots of small players building rapidly. High execution risk but enormous potential. That's where Anandan sees semiconductors in India now.

What's changed to make this possible?

Talent pool: Indian engineers have been designing semiconductors for decades, but mostly for companies like Qualcomm, Intel, or TI. Now those engineers are starting companies. The talent is there. The experience is there. The confidence is there.

Foundry access: Foundries like TSMC, Samsung, and Intel run multi-project wafer (shuttle) programs that let startups share the cost of a fabrication run. Design services have become commoditized. You can tape out (send a chip design to manufacturing) without being a billion-dollar company.

Design-linked incentives: Indian government incentives, particularly the Design Linked Incentive (DLI) scheme under the Semicon India programme, have lowered the cost and risk of early-stage tape-outs. This de-risks the company's path to silicon.

Specialized market gaps: Rather than trying to compete with Nvidia in GPUs or Intel in processors, Indian semiconductor startups are attacking specialized markets where they can be best-in-class. Power delivery for AI infrastructure is exactly that kind of market.

C2i faces significant execution risks, with team execution and market adoption being the highest. Estimated data based on narrative insights.

The Entrenched Opposition: Why Incumbents Are Vulnerable

Power delivery has been dominated by the same companies for decades. Texas Instruments, Infineon, Vicor, and a few others basically own the market. They have existing relationships with hyperscalers, proven track records, and massive R&D budgets.

This sounds like C2i is doomed, right? The incumbents can just copy them.

But there's a structural reason that might not happen fast enough to matter.

Large semiconductor companies are good at incremental improvements. They make power conversion 2% more efficient this year, 2% next year. That's profitable. Their design teams are optimized for that cadence. Their qualification processes are set up for minor revisions.

Complete architectural redesign? That's disruptive to their own business. It obsoletes their existing IP portfolio. It requires retooling manufacturing partners. It disrupts customer relationships built on the old architecture. Large companies do this slowly or not at all.

For a startup like C2i, there's no installed base to cannibalize. They can redesign from scratch. They're motivated by the possibility of massive market share in a critical area. They move fast because they have to.

Incumbents will eventually compete. But by then, C2i might be entrenched in the largest hyperscaler infrastructure, with validation data that's hard to beat. In power systems, switching costs are high. Once you qualify a vendor, you're locked in for years.

The Technical Challenges Ahead: What Could Go Wrong

C2i's success isn't guaranteed. There are real technical risks that could derail the company.

Thermal management: More efficient conversion reduces heat, but it doesn't eliminate it. In a dense GPU cluster, you're still dealing with megawatts of power in compact spaces. Managing that heat without introducing new cooling costs is non-trivial. If C2i's solution looks great on paper but requires exotic cooling, it loses value.

Control algorithm complexity: Integrated power delivery means coordinated control across the entire system. Get that control algorithm wrong, and you have oscillations, instability, or worse, cascading failures. Power system stability is a mature field with well-understood failure modes. But implementing it at the scale and speed C2i needs is challenging.

Manufacturing variability: Power conversion losses are sensitive to component tolerances. A small variation in a capacitor or inductor can shift efficiency by a measurable amount. Scaling from a few prototypes to volume production requires managing variation across suppliers and manufacturing partners. This is an execution detail that kills many hardware startups.

Compatibility with existing infrastructure: Data centers have invested heavily in existing power distribution architectures. C2i's solution needs to work within those constraints or drive adoption of entirely new infrastructure. The latter is much harder and slower.

Competing solutions: C2i isn't working in a vacuum. Other teams are probably thinking about similar problems. If a larger incumbent, or a better-funded startup, solves this first, C2i becomes a footnote. The power delivery market is attractive enough that C2i won't be the only player pursuing this.
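The control-algorithm risk is easy to underestimate. The toy Python loop below is not C2i's controller (the source doesn't describe one); it's a textbook first-order feedback loop in which each step corrects a fraction `gain` of the remaining error. Gain alone decides whether the output settles, rings, or runs away.

```python
def simulate_loop(gain: float, setpoint: float = 1.0, start: float = 0.0, steps: int = 20) -> list[float]:
    """Toy regulator: x[k+1] = x[k] + gain * (setpoint - x[k]).

    The error obeys e[k+1] = (1 - gain) * e[k], so the loop settles smoothly
    for 0 < gain <= 1, overshoots and rings for 1 < gain < 2, and diverges for gain > 2.
    """
    x = start
    trace = [x]
    for _ in range(steps):
        x += gain * (setpoint - x)
        trace.append(x)
    return trace


for gain in (0.5, 1.8, 2.2):
    final_error = abs(simulate_loop(gain)[-1] - 1.0)
    print(f"gain {gain}: error after 20 steps = {final_error:.2g}")
```

A gain only slightly more aggressive (2.2 instead of 1.8) turns a ringing but stable loop into one whose error grows every cycle. Real converter control has far richer dynamics than this, which is exactly why coordinated, system-wide control is hard.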

Electricity demand for AI data centers is projected to nearly triple by 2035, highlighting power as the key limiting factor for scaling. Estimated data.

How Hyperscalers Will Evaluate C2i's Claims

Hyperscalers are smart buyers. They understand power systems intimately. When C2i claims 10% loss reduction, these customers will demand proof.

C2i's validation process will likely include:

Benchtop testing: Running C2i's silicon and power delivery systems in controlled laboratory environments. Measuring efficiency at various load conditions. Stress testing.

Simulation vs. reality: Comparing actual measured performance against computer simulations. If there's a gap, investigating why. Large efficiency gaps would be red flags.

Thermal testing: Operating the system under realistic power loads and measuring heat dissipation. Validating cooling requirements. Stress testing at elevated ambient temperatures.

Reliability testing: Accelerated life testing, thermal cycling, component failure injection. Hyperscalers need decades of reliable operation, not years.

Integration testing: Installing C2i hardware into a representative data center rack or small cluster. Testing interaction with real GPUs, memory, storage, and cooling systems. Validating that the claimed efficiency gains hold up in production environments.
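The "various load conditions" part of benchtop testing matters because converter efficiency isn't a single number. A common first-order model treats losses as a fixed part (switching, bias) plus a part that grows with the square of the load (conduction). The coefficients below are hypothetical, chosen only to show the typical shape of the curve:

```python
def efficiency(p_out_w: float, fixed_loss_w: float, quad_coeff: float) -> float:
    """Efficiency under a simple loss model: fixed loss + quad_coeff * P_out^2."""
    loss_w = fixed_loss_w + quad_coeff * p_out_w ** 2
    return p_out_w / (p_out_w + loss_w)


RATED_W = 1_000.0    # hypothetical converter rating
FIXED_LOSS_W = 10.0  # hypothetical fixed loss
QUAD_COEFF = 4e-5    # hypothetical conduction-loss coefficient (W per W^2)

for pct in (10, 25, 50, 75, 100):
    p_out = RATED_W * pct / 100
    print(f"{pct:>3}% load: {efficiency(p_out, FIXED_LOSS_W, QUAD_COEFF):.1%}")
```

In this model efficiency peaks where the two loss terms are equal (here at 50% load) and falls off on both sides, so a headline peak-efficiency number can overstate what a lightly or fully loaded system actually achieves. That's the kind of gap hyperscalers look for when they compare measurements against simulation.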

This validation won't finish overnight. Expect 6-12 months of customer testing before any real production deployments.

The Broader Context: Power as a Competitive Advantage

C2i's success matters beyond just the company. It signals that power efficiency is becoming a first-order competitive advantage in AI infrastructure.

For hyperscalers, a 10% improvement in power efficiency translates directly to the bottom line. It also translates to competitive advantage. If you operate AI infrastructure 10% more efficiently than your competitors, you can either charge customers less (increasing market share) or maintain higher margins. Either way, you win.

This creates pressure on all hyperscalers to adopt better power delivery. The best companies might work with C2i. Others might push their existing suppliers to improve. Some might develop in-house solutions.

But the overall industry shifts toward efficiency-first thinking. That's not a bad outcome. It means less wasted energy, lower carbon footprint, and more sustainable AI infrastructure.

It also means more opportunities for C2i if they execute. If they become the standard for grid-to-GPU power delivery, they're looking at potential multibillion-dollar markets. Supplying the power delivery for every new AI cluster built over the next decade would be an enormous addressable market.

India's Semiconductor Moment: Why This Matters Beyond C2i

C2i's funding is significant because it validates India as a source of semiconductor innovation, not just engineering services.

Historically, India's role in semiconductors was backfill. Design centers for foreign companies. Offshore development. Valuable work, but not ownership.

What's changing:

Government policy: Design-linked incentives make it economically viable for startups to tape out (send designs to manufacturing) multiple times without massive capital. This de-risks experimentation.

Venture capital: Indian VC funds now understand semiconductors. They're willing to back 10-year plays with high technical risk. That's a recent shift.

Talent repatriation: Senior engineers with global experience are returning to India to start companies. This accelerates execution and credibility.

Specialized markets: Rather than competing in broad markets (where incumbents dominate), Indian startups are picking specialized gaps. Power delivery for AI infrastructure is one example. Custom AI accelerators are another.

None of this means India will displace Silicon Valley in semiconductors. But it does mean India becomes a serious player in specific, high-value segments. C2i is a bet on this becoming a trend.

If the bet pays off, we'll see a wave of Indian semiconductor startups over the next 5-10 years, each attacking specialized markets where they can be best-in-class. That benefits global tech because it increases competition and innovation.

Real-World Impact: What Happens When C2i Succeeds

Assuming C2i executes and their technology gets adopted by major hyperscalers, what changes?

Data center economics improve: Hyperscalers can build AI infrastructure with lower total cost of ownership. Some deploy more capacity. Others improve profitability. Likely both.

Competitive pressure on power delivery: Incumbents like TI and Infineon will face pressure to improve. They'll invest in competing architectures. The entire industry gets better.

Faster AI scaling: Lower power costs mean economics favor more AI training and inference. This doesn't sound profound, but it actually drives down costs for AI services, making them more accessible to smaller companies and developing economies.

Regional infrastructure expansion: Currently, AI infrastructure concentrates in regions with cheap power (Iceland, Middle East, parts of Asia). Better efficiency enables AI infrastructure in other regions. You might see major AI data centers in places they couldn't justify before.

Renewable energy integration: Lower total demand, including less cooling overhead, makes it easier to match a data center's load to renewable sources with variable output. Energy efficiency and renewable energy both benefit.

None of this happens overnight. But if C2i's technology becomes standard in hyperscaler infrastructure within 3-5 years, the second-order effects are significant.

What C2i's Success Means for the Semiconductor Industry

C2i is attacking a problem that incumbents can solve but won't prioritize. That's the sweet spot for startup disruption.

It also demonstrates how the semiconductor industry is evolving. We're past the era where one company (Nvidia for GPUs, Intel for processors) dominates everything. Increasingly, specialized companies own specialized problems.

For power delivery, we might see a future where C2i owns grid-to-GPU systems, while other companies own high-frequency switch-mode power supplies, or dynamic voltage and frequency scaling, or thermal management. Each company is world-class in their segment.

This is healthier for the industry because it drives specialization and innovation. It's also better for customers because they can pick best-in-class solutions for each part of the stack.

C2i's funding validates that this model works. If they execute, expect more Indian (and global) semiconductor startups solving specialized infrastructure problems.

Execution Risks: The Honest Assessment

Let's be clear about what could go wrong:

Market adoption could be slower than expected: Hyperscalers might be interested but move slowly. Qualification cycles might extend. By the time C2i's technology reaches volume deployments, other competitors might emerge.

The efficiency gains might not hold up in real deployments: Laboratory results don't always translate to production. C2i's 10% loss reduction might become 6% or 7% when integrated into actual data center environments. Still valuable, but less compelling.

Manufacturing costs could be higher than expected: Custom silicon and integrated packaging are expensive to manufacture at scale. C2i's cost structure might not support aggressive pricing needed to win large contracts.

Team execution: C2i is a 65-person startup taking on a massive technical and commercial challenge. Team dynamics, hiring, or key personnel changes could derail progress. Execution risk is real.

Changing customer requirements: Data centers continuously evolve. By the time C2i's solution is ready for scale, customer needs might have shifted. Power might still matter, but different aspects of power delivery could become critical.

These risks don't mean C2i will fail. But they're real. The company needs to execute nearly flawlessly over the next 18-24 months.

The Broader Lesson: Infrastructure Innovation Requires Specific Conditions

C2i's story illustrates why infrastructure gets solved by specialized companies, not generalists.

Power delivery is unglamorous. It doesn't generate PR. Journalists don't cover power efficiency with the excitement they cover AI models. But it's foundational. It enables everything else.

For a founder team to attack power delivery, they need:

Deep domain expertise: You can't fake power systems knowledge. You need years of experience understanding loss mechanisms, control systems, and failure modes.

Patient capital: Power system development takes time. VCs need to understand that timelines are measured in years, not quarters.

Access to manufacturing: You need relationships with foundries, packaging companies, and test facilities. These relationships take time to build.

Clear customer pain point: The pain has to be acute enough that customers will work with you despite execution risk.

C2i has all four. That's why it has a shot.

More broadly, this suggests other infrastructure problems might be solvable by similar startup approaches. What other aspects of data center infrastructure are suboptimized? Cooling systems? Networking? Storage I/O? There are probably dozens of problems where architectural thinking could drive significant improvements.

Looking Forward: What Happens Next

Over the next 12-18 months, watch for:

First silicon results: When C2i announces that its first silicon is back from the fab, that's a major milestone. If it works as designed, momentum accelerates. If there are issues, confidence drops.

Customer validation announcements: When the first hyperscaler (or even a smaller data center operator) publicly validates C2i's efficiency claims, that's transformational. It removes skepticism.

Competitive response: Watch for announcements from TI, Infineon, or other incumbents developing competing solutions. A strong competitive response suggests they take C2i seriously.

Follow-on funding: If C2i raises more capital for its manufacturing ramp or customer support, and existing investors participate, that signals their confidence.

Hiring announcements: C2i will need to hire significantly to support customer deployments and product evolution. New hires in systems engineering or field applications engineering signal scaling.

The semiconductor industry moves slowly, but this is one of the most consequential problems in infrastructure right now. How C2i executes will matter beyond the company itself.

FAQ

What is grid-to-GPU power delivery architecture?

Grid-to-GPU architecture treats power delivery as a single, integrated system spanning from incoming electrical power to GPU consumption. Instead of multiple separate conversions (400V to 48V to 12V, etc.), the system minimizes conversion stages and coordinates power management across all components. C2i says this can cut cumulative power losses from the typical 15-20% to roughly 5-10%, saving significant energy and cooling costs in data centers.

Why do data centers waste 15-20% of power in conversion losses?

Data centers receive high-voltage power from the grid, then convert it multiple times for different components. Each conversion involves semiconductors, inductors, and capacitors that dissipate energy as heat. Current designs use independent point-of-load converters for different components, meaning power is converted multiple times inefficiently. C2i's approach integrates these conversions into one optimized system, dramatically reducing losses.

How much can hyperscalers save with C2i's technology?

For every megawatt of power consumed, a 10% loss reduction saves roughly 100 kilowatts. A single 10-megawatt AI cluster saves 1 megawatt, worth approximately

When will C2i's technology be available in production data centers?

C2i expects silicon samples by mid-2025 followed by customer validation over 6-12 months. Early production deployments could begin in late 2025 or early 2026, with significant scale likely by 2026-2027. The timeline is aggressive for semiconductors but achievable given the urgent market need and the team's experience.

Why is India becoming a semiconductor hub for startups like C2i?

Several factors converge: (1) Abundant engineering talent with global semiconductor experience, (2) Government design-linked incentives that reduce tape-out costs and risk, (3) Access to foundries and manufacturing partners becoming more accessible to startups, and (4) Specialized market opportunities where Indian startups can be world-class without competing directly with Silicon Valley incumbents. This creates an environment where experienced semiconductor teams can found companies with reasonable probability of success.

What risks could prevent C2i from succeeding?

Key execution risks include slower-than-expected market adoption, efficiency gains that don't hold up in real deployments, higher-than-expected manufacturing costs, team execution challenges, and shifts in customer requirements during the long development cycle. Semiconductor startups face 5-10 year timelines to revenue, making operational discipline critical. Even slight delays can cascade into missed market windows.

How will hyperscalers validate C2i's claims about power efficiency?

Customers will bench-test C2i's hardware, compare simulation predictions against real measurements, run thermal tests under realistic loads, assess reliability through accelerated life testing, and run integration tests in representative data center environments. This validation cycle typically takes 6-12 months before any production deployment, because hyperscalers require extensive proof before trusting a new infrastructure vendor.
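The step of comparing simulation predictions against real measurements comes down to two tasks. At each bench load point, compute efficiency as output power over input power (P = V × I). Then flag any point that misses the prediction. The sketch below uses hypothetical readings for a 48V-to-12V stage and an assumed 0.5-percentage-point tolerance. Real qualification plans define their own load points, instruments, and pass criteria.

```python
from dataclasses import dataclass


@dataclass
class BenchPoint:
    """One hypothetical bench reading at a given fraction of rated load."""
    load_fraction: float
    v_in: float   # input voltage (V)
    i_in: float   # input current (A)
    v_out: float  # output voltage (V)
    i_out: float  # output current (A)

    @property
    def efficiency(self) -> float:
        # Efficiency = output power / input power, with P = V * I.
        return (self.v_out * self.i_out) / (self.v_in * self.i_in)


def shortfalls(points, predicted, tolerance_pp=0.5):
    """Return (load_fraction, gap_pp) for points whose measured efficiency falls
    below the simulated value by more than tolerance_pp percentage points."""
    misses = []
    for p in points:
        gap_pp = (predicted[p.load_fraction] - p.efficiency) * 100
        if gap_pp > tolerance_pp:
            misses.append((p.load_fraction, round(gap_pp, 2)))
    return misses


# Hypothetical 48V -> 12V bench readings and simulation predictions.
measured = [
    BenchPoint(0.5, v_in=48.0, i_in=10.0, v_out=12.0, i_out=38.0),  # about 95.0% efficient
    BenchPoint(1.0, v_in=48.0, i_in=20.0, v_out=12.0, i_out=74.4),  # about 93.0% efficient
]
simulated = {0.5: 0.953, 1.0: 0.945}

print(shortfalls(measured, simulated))
```

Here only the full-load point is flagged, missing its prediction by about 1.5 percentage points. The same comparison can be repeated across the thermal test conditions described above.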

Could existing power delivery companies like Texas Instruments simply copy C2i's design?

Incumbents could eventually compete, but face structural barriers: their business models depend on incremental improvements to existing products, architectural redesign threatens their installed IP and customer relationships, and their qualification processes move slowly. C2i's advantage is moving fast without internal constraints. By the time incumbents respond, C2i could be entrenched through first-mover advantage and customer lock-in.

What is the broader impact of power efficiency in AI data centers?

Better power efficiency directly improves hyperscaler profitability and competitiveness. It also lowers AI infrastructure costs, which enables broader adoption. It makes AI deployments viable in regions where high power costs previously made them uneconomical. It integrates better with renewable energy sources, and it shrinks the overall carbon footprint of AI infrastructure. These second-order effects matter as much as the direct cost savings.

How does this relate to India's overall semiconductor ambitions?

C2i represents a shift in India's role from engineering services provider to IP owner and innovator. Government incentives, returning talent, and venture capital are creating the conditions for Indian semiconductor startups to compete globally in specialized segments. If successful, C2i could validate a model for other Indian semiconductor companies targeting specific infrastructure problems. This doesn't displace Silicon Valley, but it does help create a multipolar semiconductor ecosystem.

Conclusion

Power was always the unsexy part of data center infrastructure. Until it became the bottleneck.

C2i's $15 million funding round signals that the data center industry finally recognizes what engineers have known for years: power delivery is where the real optimization problems hide. It's not glamorous, but it's critical.

The company's path to success is narrow. They need to execute silicon design flawlessly. They need customers willing to take a chance on a startup vendor in mission-critical infrastructure. They need manufacturing partners who can scale efficiently. They need to keep pace with competitive responses from well-funded incumbents.

But the opportunity is massive. If they pull it off, they're looking at a multibillion-dollar market. More importantly, they could drive a foundational change in how hyperscalers build AI infrastructure.

The broader implication matters too. C2i's success would validate that India can produce semiconductor innovation that competes globally, not just support global companies. That opens doors for the next wave of specialized semiconductor startups.

For now, the company has 18 months to prove its architecture works in silicon. Everything else follows from that. Watch its tape-out and first-silicon results closely: that's when we'll know if this bet on architectural thinking pays off.

The infrastructure needed to power AI's growth is just beginning to get the attention it deserves. C2i's timing is perfect—or at least, perfectly aligned with when the problem became impossible to ignore.

Key Takeaways

- Power efficiency, not raw compute capacity, is now the primary constraint limiting AI data center scaling and hyperscaler economics

- C2i's grid-to-GPU architecture aims to cut power conversion losses roughly in half relative to current systems (about 10 percentage points), saving roughly 100 kW per megawatt consumed

- A single percentage point of power efficiency improvement translates to tens of millions in annual savings for individual hyperscalers, and tens of billions industry-wide

- India's semiconductor ecosystem has matured sufficiently to enable startups to develop globally competitive specialized chips, not just provide engineering services

- Power delivery remains entrenched with incumbents, but architectural innovation opportunities exist because large companies are disincentivized from disruptive redesigns

- C2i's validation timeline is compressed—silicon by mid-2025, customer validation within 12 months—making this a near-term infrastructure transformation
