AI Gross Margins Are Climbing, but R&D Costs Are Skyrocketing: What the Latest Data Reveals About AI Economics

If you're building AI products right now, you're living in two conflicting realities.

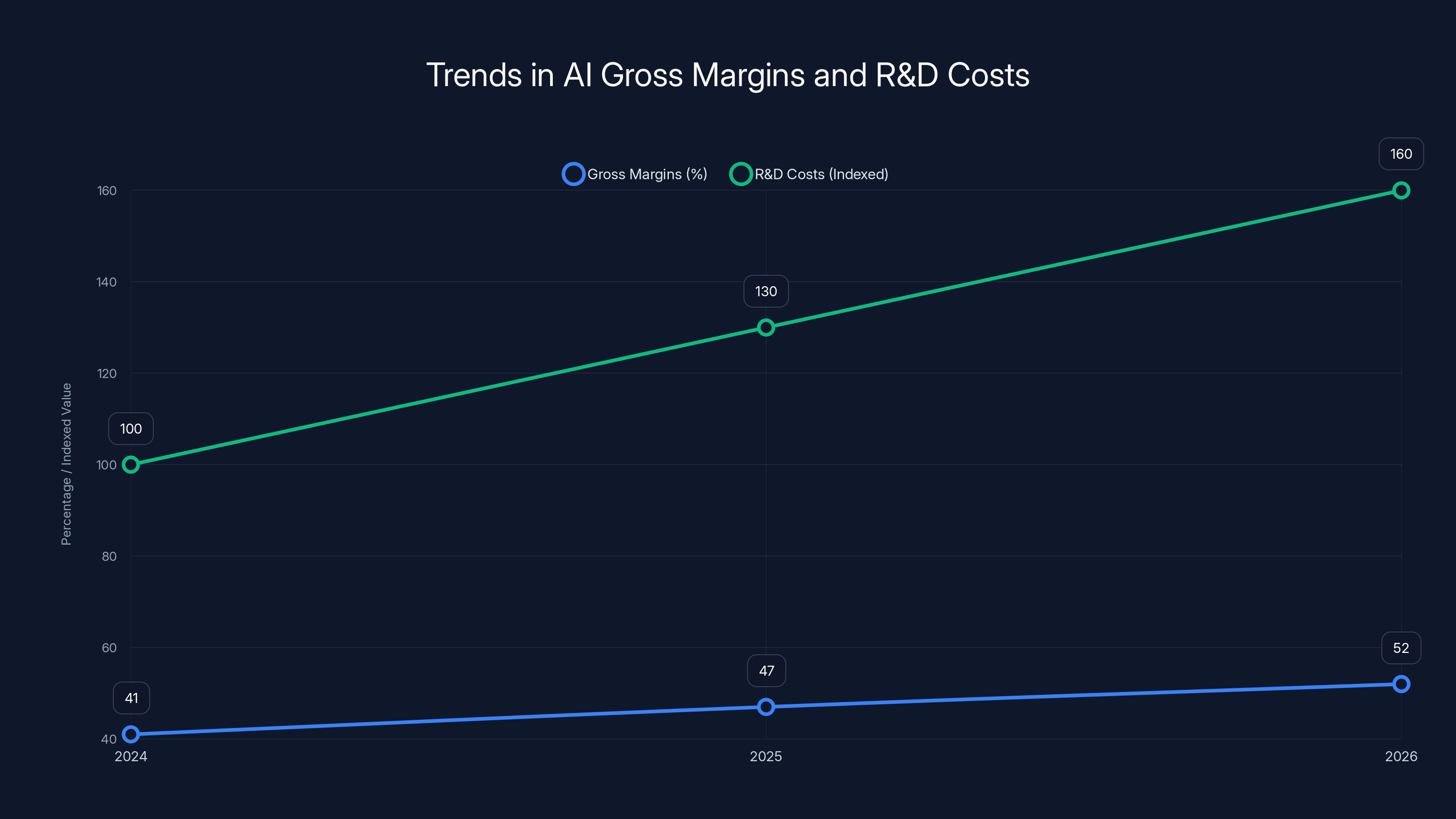

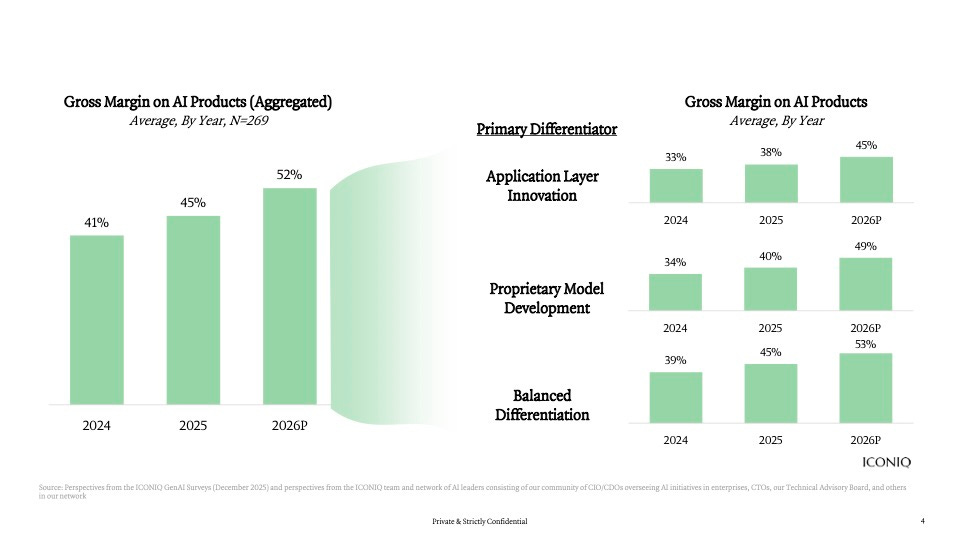

On one hand, gross margins for AI software are improving. Companies are getting smarter about infrastructure, optimizing model selection, and routing tasks efficiently. The best performers are seeing margins push toward 52% in 2026, compared to just 41% in 2024. That's real progress.

On the other hand, R&D spending is absolutely exploding. Companies are doubling down on AI talent, investing heavily in data infrastructure, and building more sophisticated product layers. The cost of staying competitive has never been higher.

And then there's pricing. It's a complete mess.

Recent industry research surveyed roughly 300 executives across AI-focused software companies, ranging from early-stage startups to billion-dollar enterprises. The results reveal a market in transition, where the easy wins are gone and the hard work of building durable, profitable AI businesses is just beginning.

This isn't theoretical anymore. We've moved past the "should we build AI features" question. The real question now is: how do we scale AI sustainably?

Here's what matters most for founders and executives building AI products in 2025 and beyond.

TL;DR

- Gross margins are projected to reach 52% by 2026, up from 41% in 2024, but only for disciplined operators

- R&D spending is surging as companies invest in AI talent and infrastructure to stay competitive

- Vertical AI applications are the primary value driver, with 70% of companies building industry-specific solutions

- Pricing models are unstable, with 37% of companies planning to change their approach within 12 months

- Model commoditization is accelerating, making application-layer differentiation essential for survival

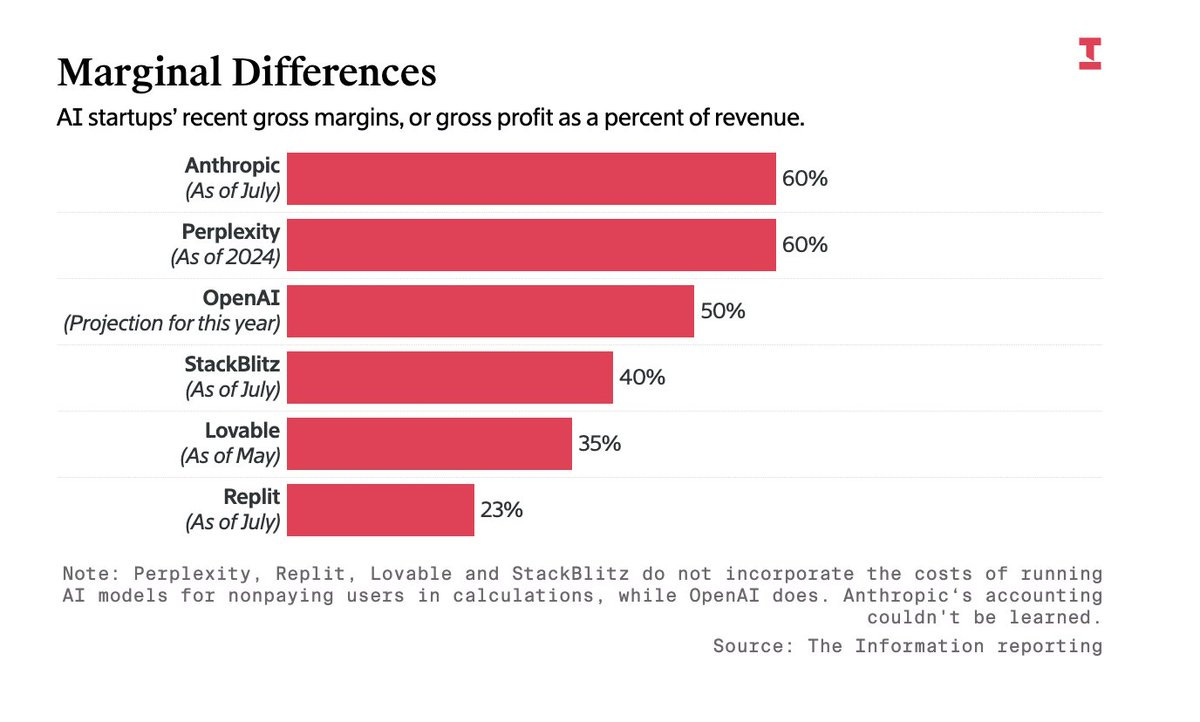

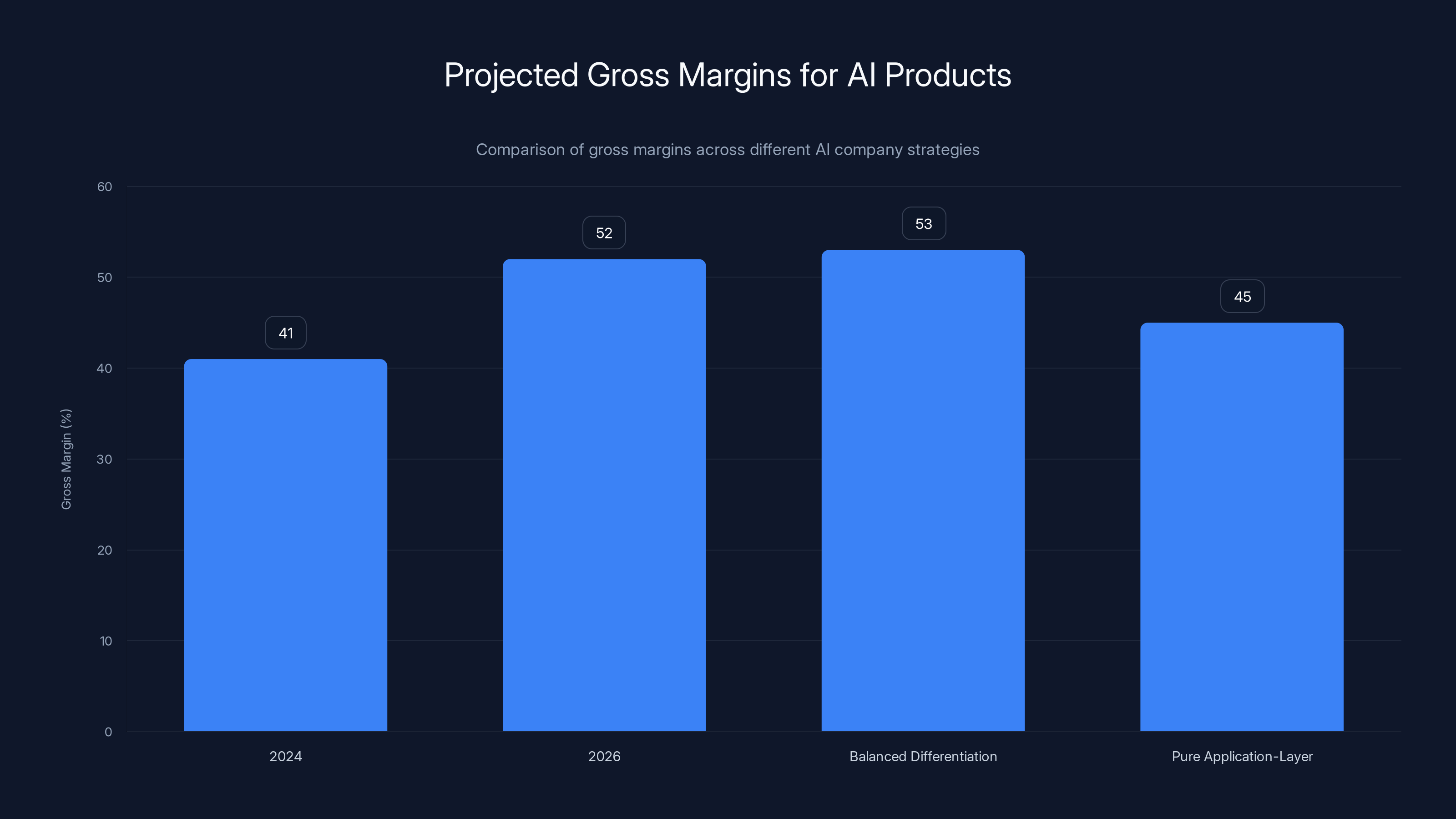

AI product gross margins are projected to improve from 41% in 2024 to 52% in 2026. Companies with balanced differentiation report the highest margins at 53%, while pure application-layer companies report 45%.

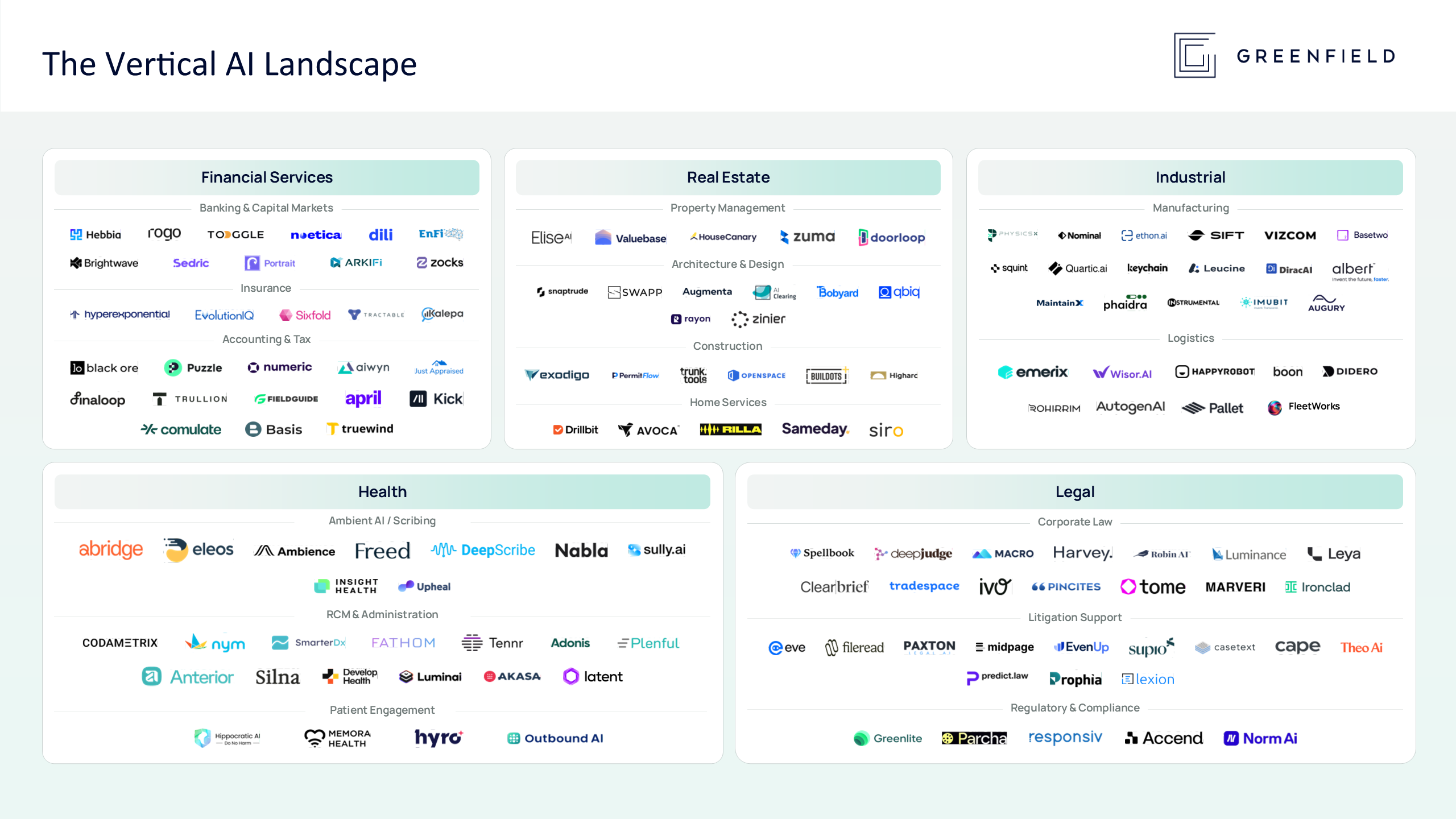

1. The Vertical AI Boom Is Reshaping How Companies Build

The shift from horizontal AI tools to vertical solutions is undeniable. Nearly 70% of companies are building vertical AI applications, up from 59% just six months earlier. This is no longer a gradual trend; it has become the default strategy.

Vertical AI applications solve specific problems for specific industries. A loan underwriting system built for banks operates completely differently than an AI tool for insurance claims processing, even though both use the same underlying language models. The vertical approach acknowledges a simple truth: generic AI features are commoditizing, but deeply specialized solutions remain valuable.

What's driving this shift? Companies are realizing that 49% of their differentiation now comes from application-layer innovation—unique user experiences, integrated workflows, and ecosystem integrations. The model layer has become interchangeable.

The Multi-Model Reality

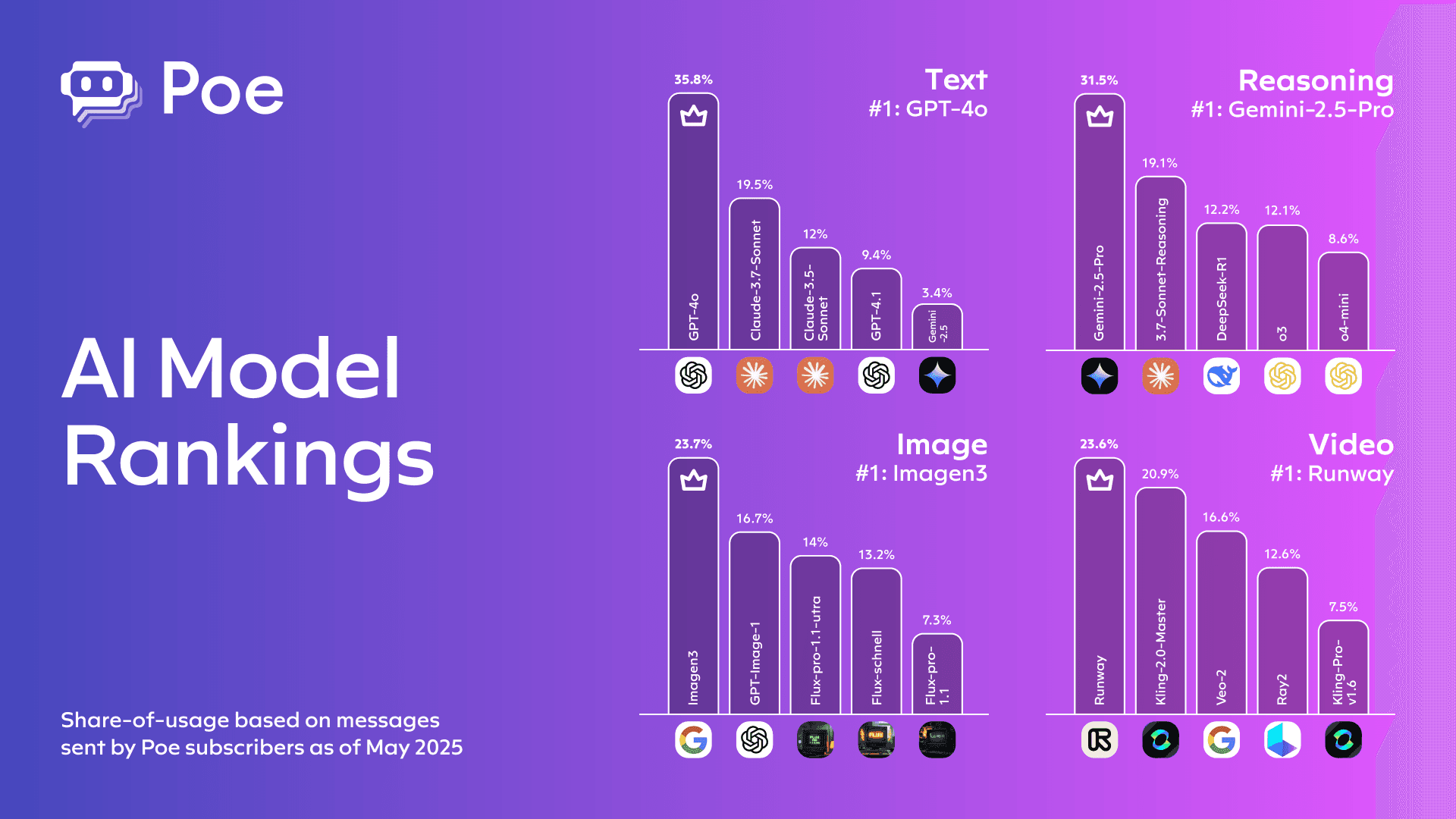

As proof of this commoditization, companies are now juggling an average of 3.1 different model providers, up from 2.8 six months ago. This is completely different from 2023, when picking one model provider felt like a strategic decision.

OpenAI remains the dominant choice at 77% adoption, but the competitive landscape is fragmenting rapidly. Google's Gemini surged to 55% adoption (from 43%), and Anthropic's Claude is now at 51%. Companies aren't replacing OpenAI; they're layering competitors on top.

Why? Because different models excel at different tasks. One model might be better at reasoning, another at coding, another at long-context understanding. Smart teams are building routing logic that sends each request to whichever model optimizes for latency, cost, or quality.
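To make the routing idea concrete, here is a minimal sketch of a cost-aware router. Everything in it is an assumption for illustration: the option names, prices, latencies, and quality scores are hypothetical placeholders rather than real provider data, and a production router would draw these values from its own evaluations.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class ModelOption:
    name: str             # hypothetical label, not a real model ID
    cost_per_call: float  # illustrative USD per request
    latency_ms: int       # illustrative typical latency
    quality: float        # 0..1 score from your own evaluations


def pick_model(options: List[ModelOption],
               min_quality: float,
               max_latency_ms: Optional[int] = None) -> ModelOption:
    """Return the cheapest option that meets the quality floor and latency cap."""
    eligible = [
        o for o in options
        if o.quality >= min_quality
        and (max_latency_ms is None or o.latency_ms <= max_latency_ms)
    ]
    if not eligible:
        # Nothing satisfies the constraints: fall back to the highest-quality option.
        return max(options, key=lambda o: o.quality)
    return min(eligible, key=lambda o: o.cost_per_call)


# Hypothetical catalog; replace with measured numbers.
OPTIONS = [
    ModelOption("small-fast", 0.001, 300, 0.70),
    ModelOption("mid-tier", 0.005, 800, 0.85),
    ModelOption("frontier", 0.030, 2000, 0.95),
]

routine = pick_model(OPTIONS, min_quality=0.6)   # cheap model is good enough
complex_ = pick_model(OPTIONS, min_quality=0.9)  # only the frontier model qualifies
```

The design choice here is to treat quality and latency as hard constraints and cost as the thing to minimize. Teams that care more about latency than cost could swap the `min` key accordingly.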

Where Competitive Advantage Actually Lives

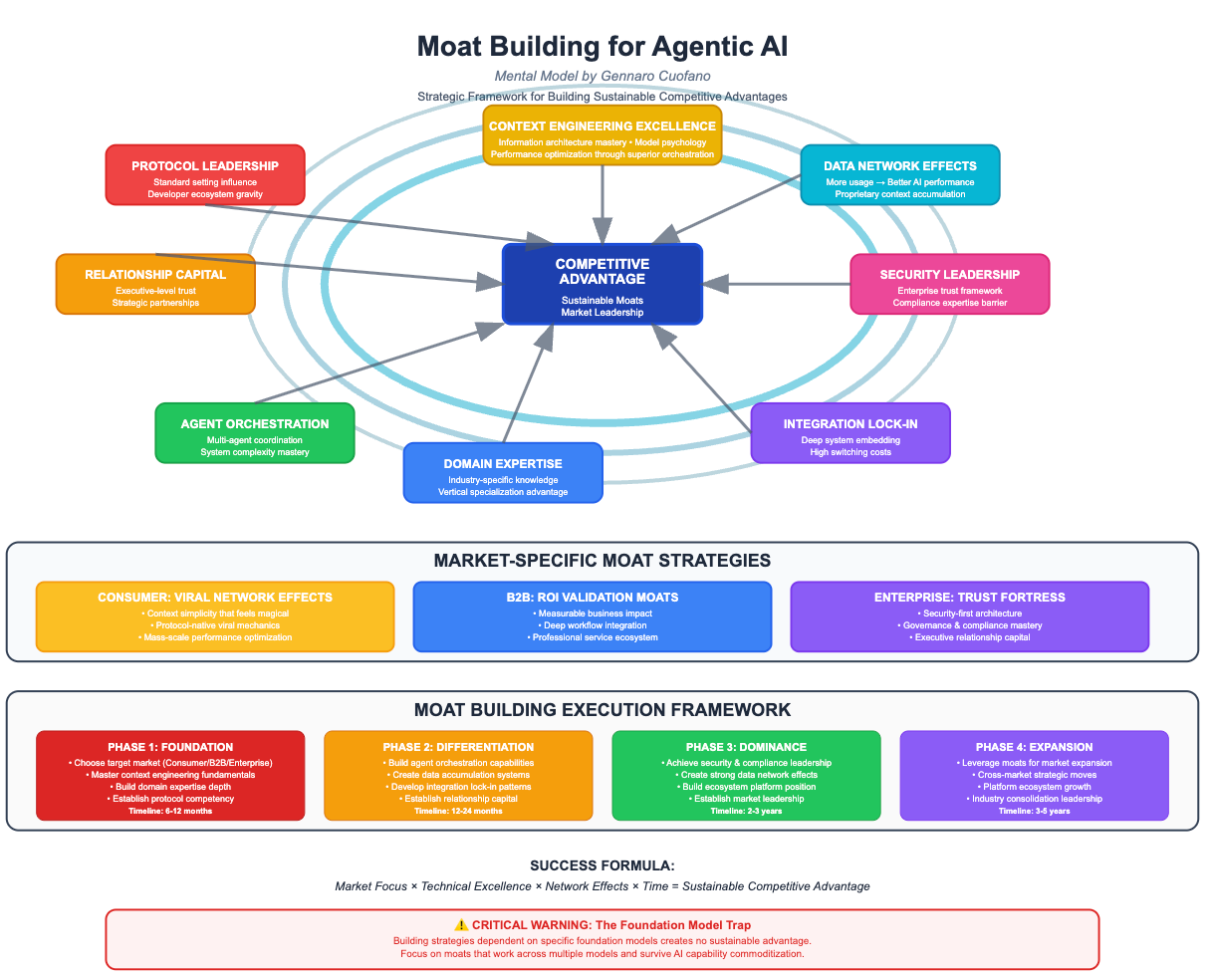

If models are becoming commodities, where do winners and losers actually diverge?

The answer is in the layers above the models. Companies that understand their customers' workflows deeply—that know the pain points, the edge cases, the regulatory requirements—are building products that can't easily be replicated. They're combining model intelligence with domain expertise, proprietary data, and integrations with adjacent tools.

For example, a vertical AI application for commercial real estate needs to understand property law, financing structures, market dynamics, and integration points with existing CRM systems. Building that requires domain knowledge that no general-purpose AI model possesses.

This explains why applications are becoming the value driver. The model itself is getting commoditized, but the surrounding product—the UX, the workflows, the ecosystem connections—creates real defensibility.

2. Gross Margins Are Improving, But the Path Is Unequal

Let's talk about the encouraging news first: AI product gross margins are heading in the right direction.

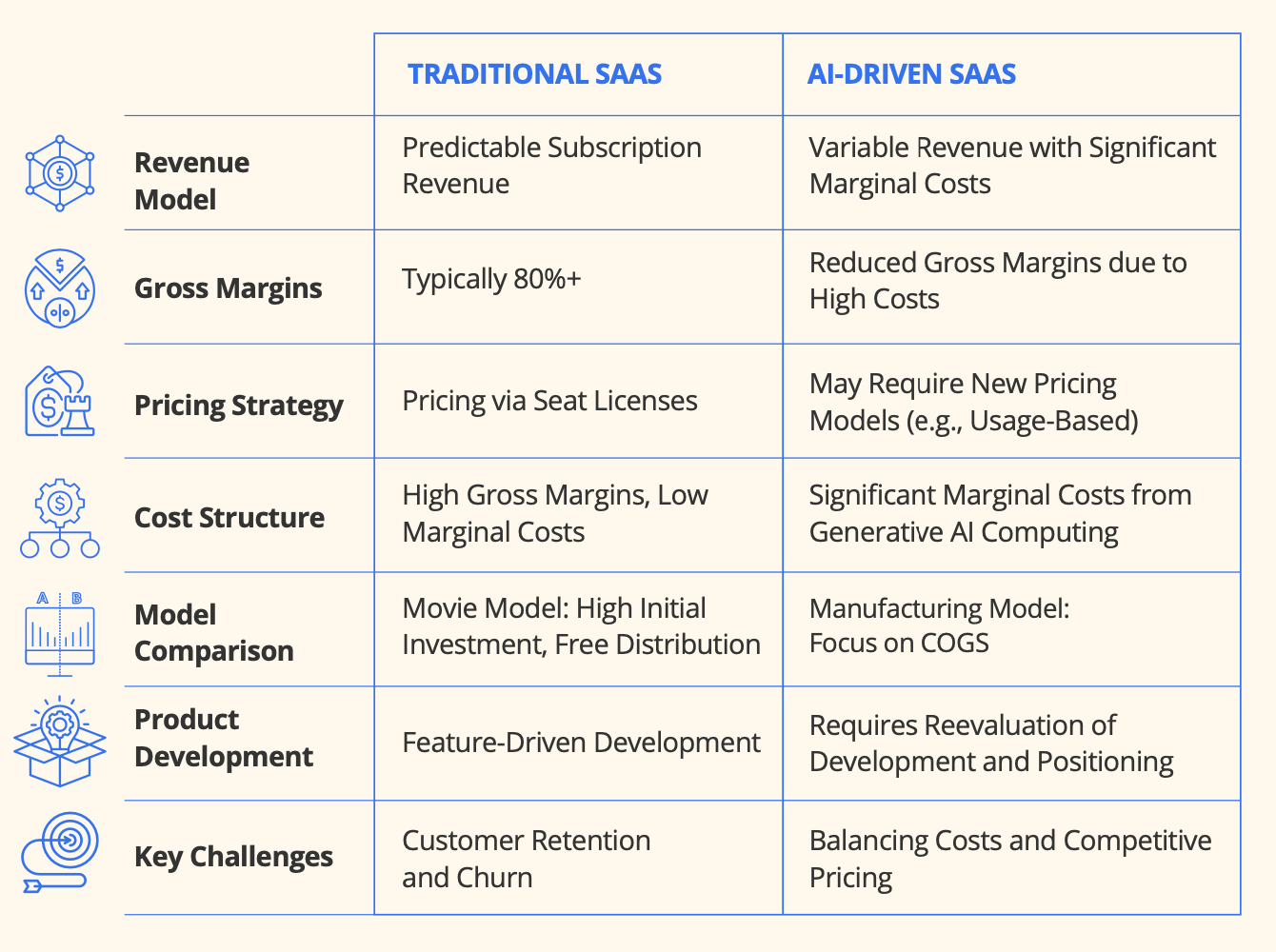

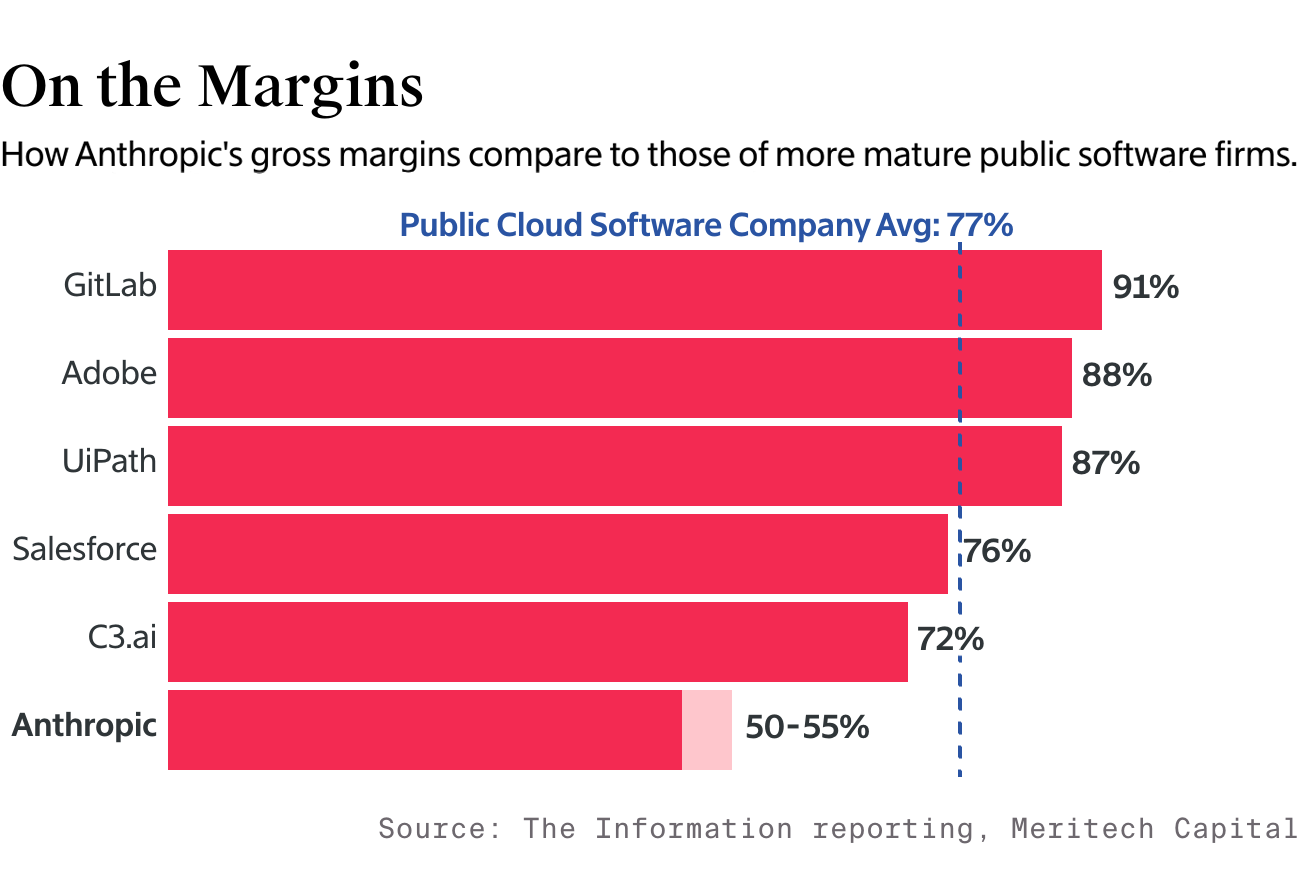

Projected gross margins for 2026 hit 52%, compared to 41% in 2024. That's an 11-point improvement in just two years. For context, typical SaaS applications hover in the 70-80% gross margin range, so AI products are still well short of that. But the trajectory matters.

Here's the catch: margins aren't improving evenly.

The Margin Hierarchy

Companies with balanced differentiation (combining both model innovation and product innovation) report the highest margins at 53%. Pure application-layer companies—those building entirely on top of existing models without any proprietary AI research—report 45% gross margins. That's an 8-point spread.

What's the difference? It comes down to cost structure and defensibility. When you're building on top of models you don't control, you're at the mercy of pricing changes. When you combine proprietary models or fine-tuning with strong product layers, you have more control over your margins.

This creates an interesting strategic question for founders: Is it worth investing in proprietary AI models if it means spending heavily on research and infrastructure?

For most companies, the answer is probably no. Building frontier models is expensive and competitive advantages are temporary. But for specialized use cases—where proprietary data or training approaches create real advantages—balanced differentiation can be worth the investment.

The Cost Structure Shift

As AI products scale, the cost composition changes dramatically. This is crucial to understand because it shapes your unit economics.

When companies are young and experimenting, talent costs dominate at 32% of total spend. Developers, ML engineers, and product managers are expensive, and you need a lot of them to figure out what customers actually want. Model inference costs are secondary at 20%.

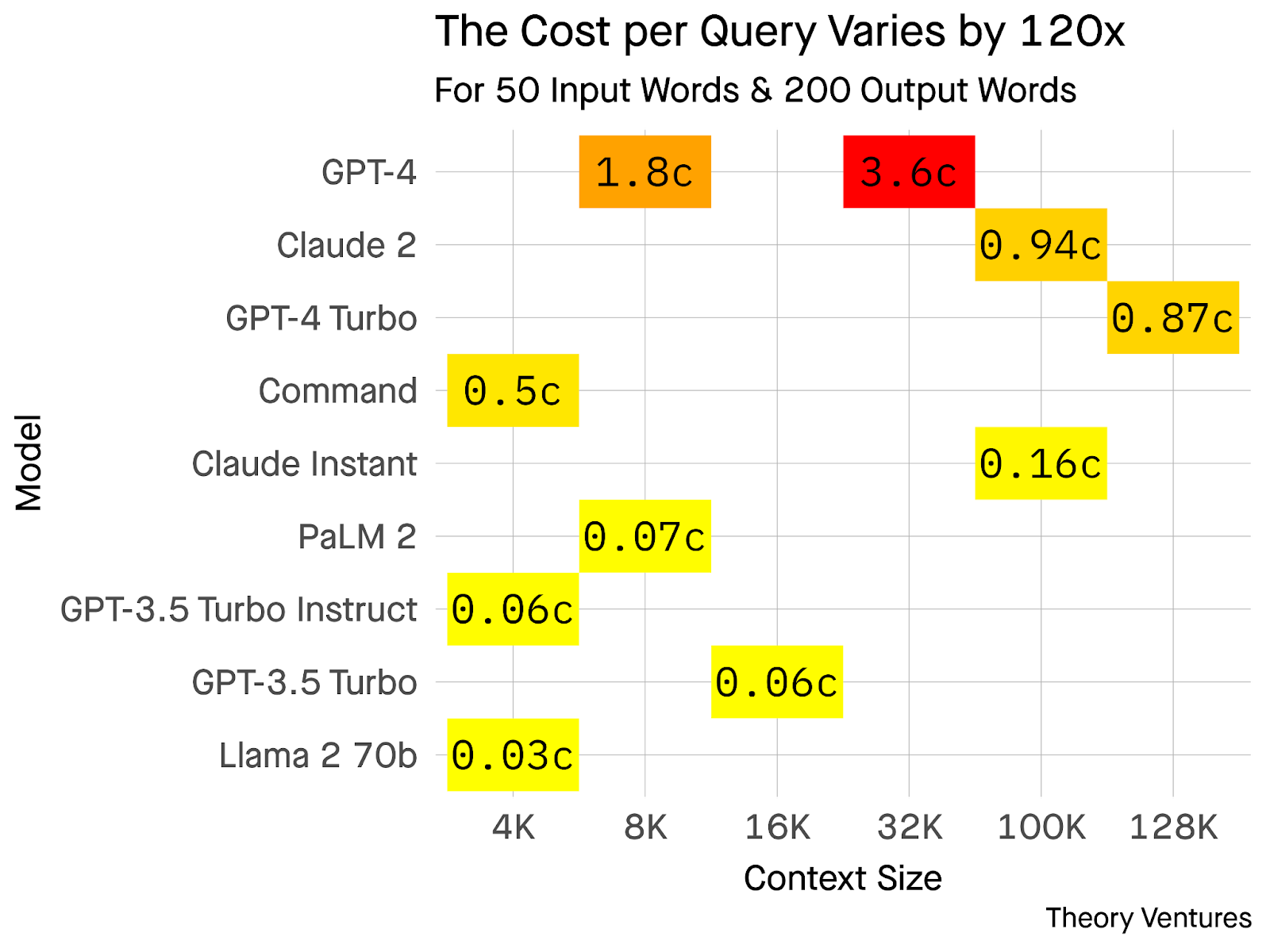

But as products mature and move to production, the balance shifts. Talent drops to 26% of spend (you're still hiring, but you're hiring more efficiently). Model inference climbs to 23% and keeps growing, as noted in SaaStr's analysis.

This means two things:

First, your margin optimization strategy changes over time. Early on, hiring disciplined engineers matters more than squeezing model costs. Later on, infrastructure efficiency becomes crucial.

Second, companies that don't address inference optimization at scale get crushed. If you're running millions of daily API calls and paying full price for frontier models on every single one, you're leaving money on the table. The winners are implementing sophisticated routing logic that reserves expensive models for complex queries and sends straightforward requests to cheaper alternatives.

Real Numbers on Model Routing

One company in the dataset ran approximately 1.6 million monthly calls to their AI system. Their first version used the same model for everything. They then implemented intelligent routing based on query complexity.

The result? Average call time dropped from 15 minutes to 4-5 minutes. Customer satisfaction improved 3x. Costs dropped dramatically because routine queries were routed to cheaper models.

At that scale, the difference between routing efficiently and routing naively is hundreds of thousands of dollars per year.
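A rough back-of-the-envelope sketch shows how that gap can arise. The call volume comes from the example above; the per-call prices and the share of traffic sent to the cheaper model are assumptions chosen purely for illustration, not figures from the survey.

```python
def monthly_cost(calls: int, frontier_price: float,
                 cheap_price: float, cheap_share: float) -> float:
    """Blended monthly spend when `cheap_share` of calls go to the cheaper model."""
    cheap_calls = calls * cheap_share
    frontier_calls = calls - cheap_calls
    return frontier_calls * frontier_price + cheap_calls * cheap_price


CALLS = 1_600_000        # monthly calls, from the example above
FRONTIER_PRICE = 0.03    # assumed USD per call
CHEAP_PRICE = 0.002      # assumed USD per call
CHEAP_SHARE = 0.7        # assumed share of routine queries

naive = monthly_cost(CALLS, FRONTIER_PRICE, CHEAP_PRICE, cheap_share=0.0)
routed = monthly_cost(CALLS, FRONTIER_PRICE, CHEAP_PRICE, cheap_share=CHEAP_SHARE)
annual_savings = (naive - routed) * 12  # about $376k per year under these assumptions
```

The result is sensitive to the assumed price gap and routing share, so it is worth rerunning with your own contract prices before drawing conclusions.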

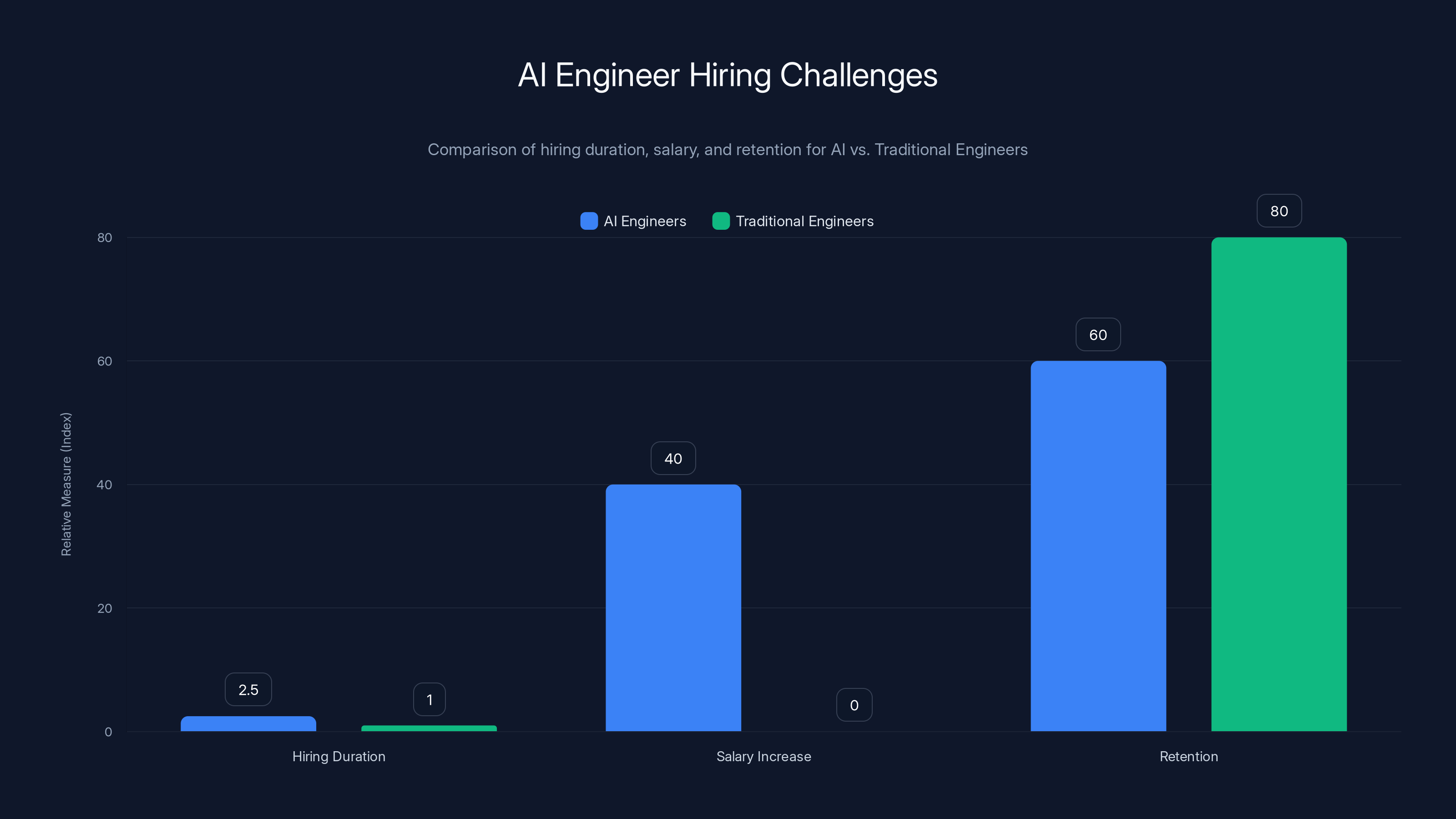

AI engineers take 2-3x longer to hire and command 30-50% higher salaries than traditional engineers, with lower retention rates. (Estimated data)

3. Pricing Models Are Breaking Down and Need Rebuilding

If gross margins are getting better and product-market fit is improving, why are companies so unhappy with their pricing?

Because current pricing models don't map to AI product value.

The Pricing Landscape Is Fractured

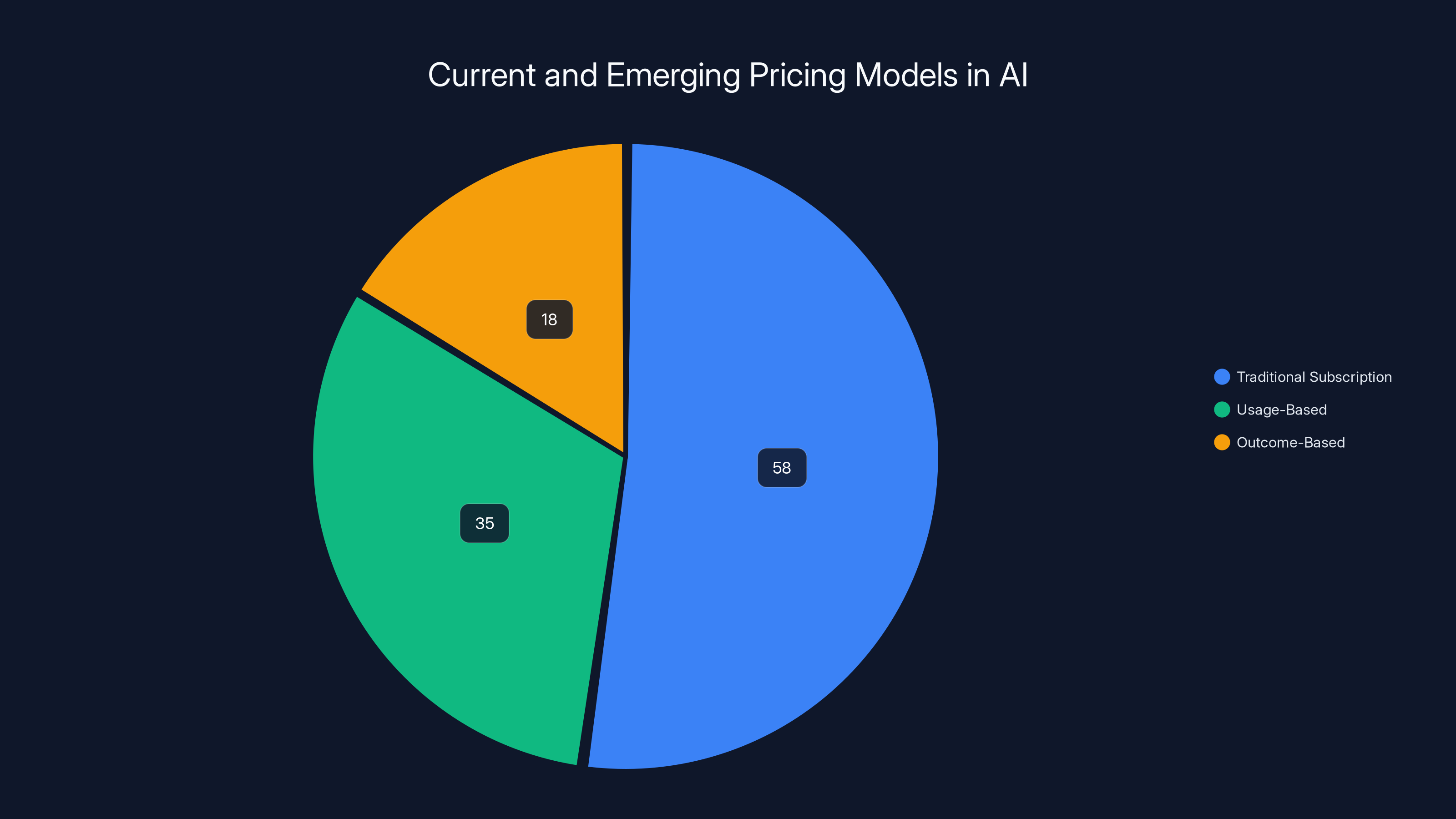

Still, 58% of companies are using traditional subscription or platform pricing. That makes sense as a starting point. You charge a monthly or annual fee, customers get access to the platform, everyone knows what to expect.

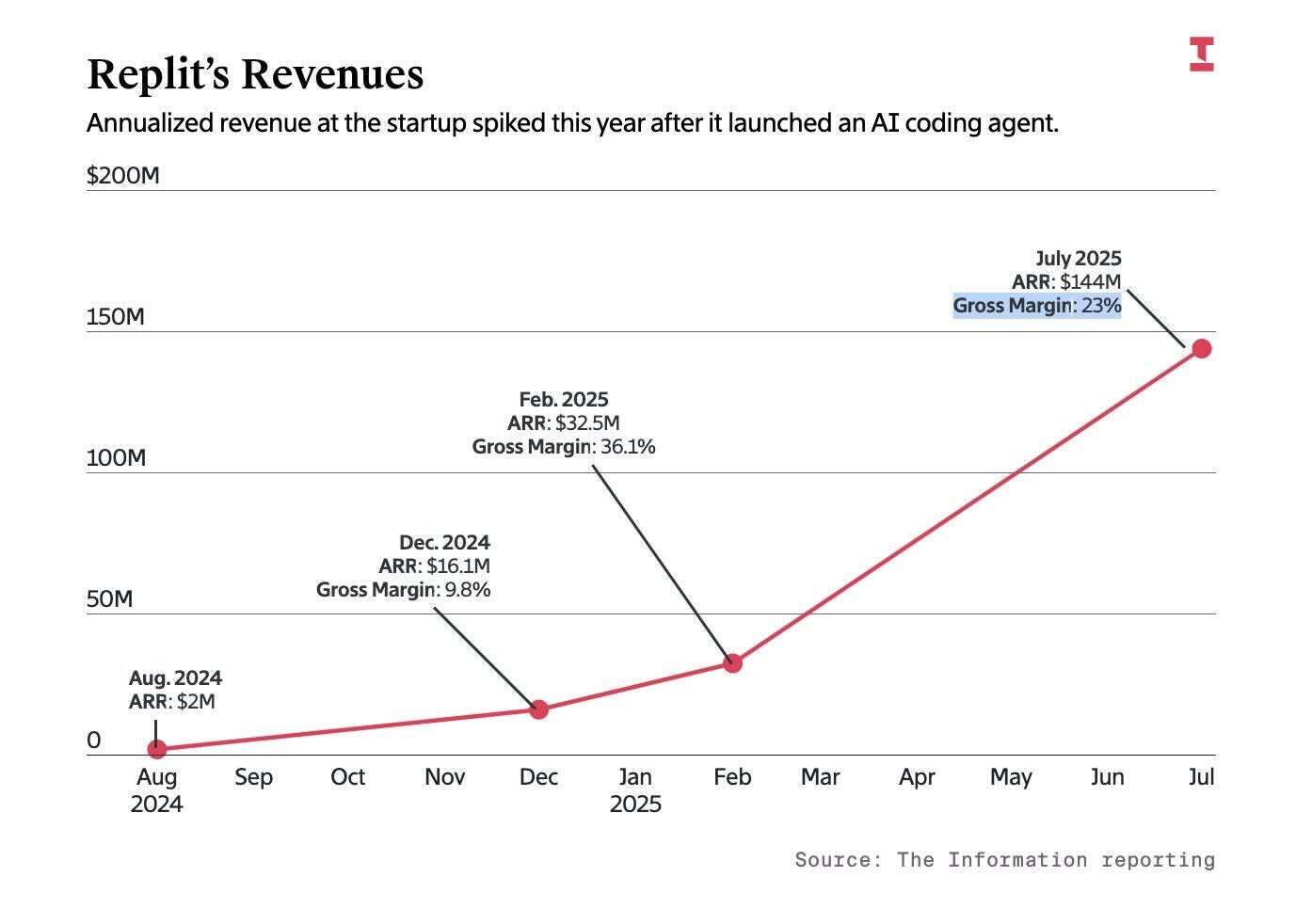

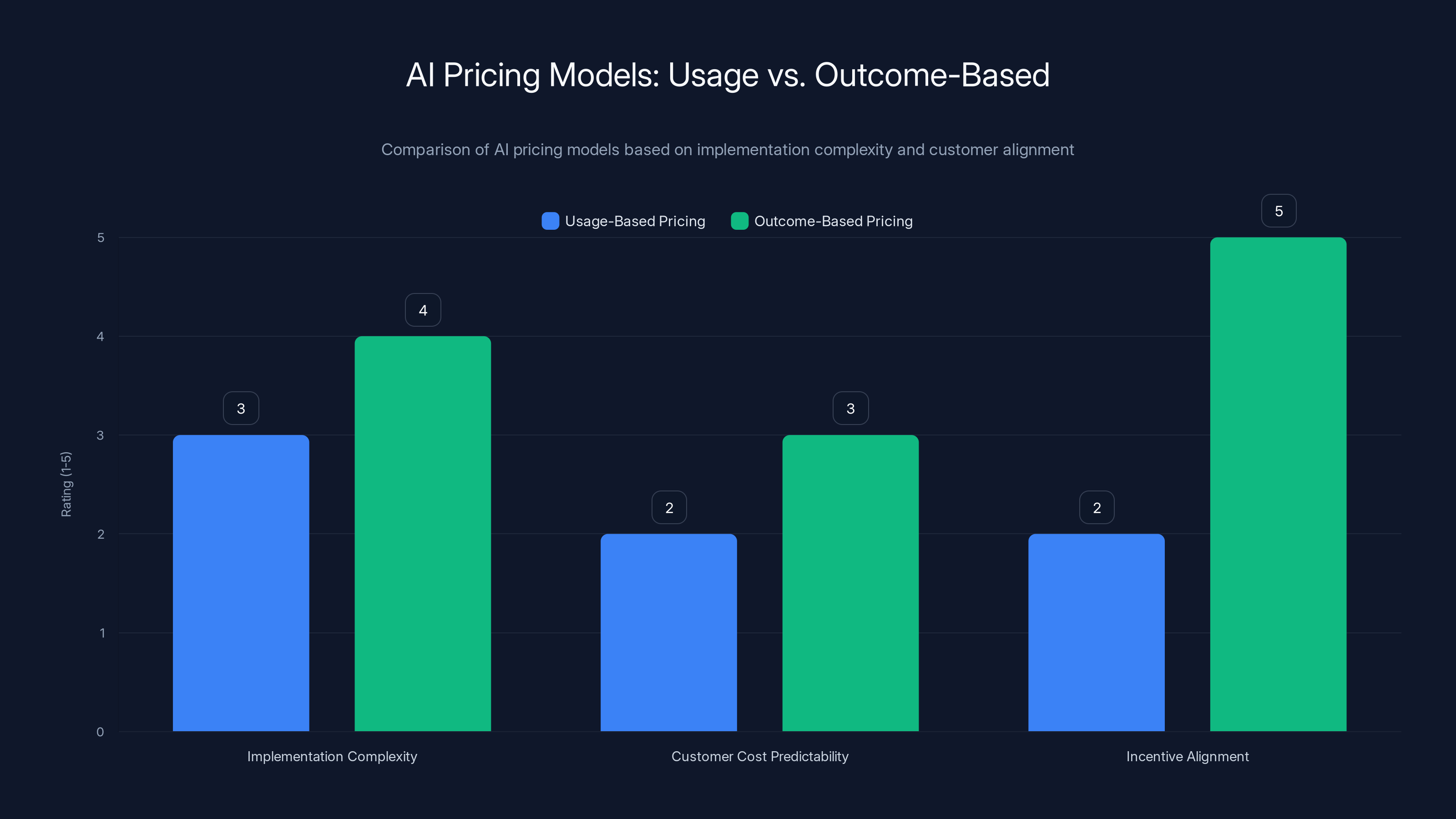

But that model is increasingly failing for AI products. Usage-based pricing is now at 35% (customers pay based on consumption), and outcome-based pricing jumped from 2% to 18% in just six months. That's a ninefold increase, according to Forbes.

The shift makes sense. With traditional SaaS, usage and value are loosely connected. With AI products, usage can vary wildly based on how customers configure the system. One customer might run 1,000 inferences per month. Another might run 1 million. Fixed pricing breaks down.

Outcome-based pricing is compelling but harder to execute. You're saying: "We charge based on the results we deliver." That requires deep insight into customer economics and the ability to quantify impact. It's powerful when it works, but it's also risky because your economics become dependent on customer success.

The 37% Who Are Changing

Here's the smoking gun: 37% of companies plan to change their pricing model within the next 12 months.

That's not a small minority tinkering at the edges. That's more than a third of the market saying their current approach isn't working.

What's driving the change?

- Customer demand for consumption-based pricing: 46% of companies cite this. Customers want to pay for what they use, not for platform access they might not fully utilize.

- Customer demand for predictable pricing: 40% cite this. The flip side of consumption-based pricing is uncertainty. Customers want to know their costs ahead of time.

- Competitive pressure: 39% cite this. Competitors are offering better pricing terms, so you have to match or lose deals.

The tension here is real. Customers want two conflicting things: consumption-based pricing AND predictable costs. And companies are caught trying to satisfy both.

Case Study: The Pricing That Broke

One company in the dataset ran on outcome-based pricing initially. They delivered exceptional results for customers, but their economics were misaligned.

They could prove that their AI system saved customers 20% on a specific process. So they charged 15% of the savings. Sounds fair, right?

But here's what happened: as their product got better, it got more efficient. Customers saw bigger savings. The company's revenue share went up, but cost stayed the same. It looked like a great business, but it turned out to be unstable because customer value and company margin were too tightly coupled.

They had to move to hybrid pricing: light subscription base plus outcome-based adjustments for exceptional performance. This aligned incentives better and stabilized the business.

The Pricing Roadmap Most Companies Are Considering

The data suggests a three-stage evolution:

Stage 1 (Experimental): Simple platform pricing. You're trying to find product-market fit, so you keep pricing simple and focus on usage patterns and customer feedback.

Stage 2 (Scaling): Subscription base plus usage. You've proven the model works, so you add a subscription tier (for predictability) and charge for overage (for fairness). Customers know their base costs. They pay extra if they push volume.

Stage 3 (Mature): Subscription plus outcome-based adjustments. Once your product is proven and you can quantify customer impact reliably, you shift to outcome-based pricing for upsell and retention.

Most companies in the dataset are somewhere between Stage 1 and Stage 2. Only the most mature are in Stage 3.
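The Stage 2 and Stage 3 structures can be sketched as simple invoice calculations. This is a minimal illustration, not a recommended pricing engine: the function names, the parameters, and the optional cap on the outcome fee are all assumptions introduced here.

```python
from typing import Optional


def stage2_invoice(base_fee: float, included_units: int,
                   used_units: int, overage_rate: float) -> float:
    """Subscription base plus a per-unit charge for usage above the included quota."""
    overage_units = max(0, used_units - included_units)
    return base_fee + overage_units * overage_rate


def stage3_invoice(base_fee: float, measured_savings: float,
                   outcome_share: float,
                   outcome_cap: Optional[float] = None) -> float:
    """Subscription base plus a share of measured customer savings.

    `outcome_cap` is a hypothetical guardrail that keeps the variable
    part of the bill predictable for the customer.
    """
    outcome_fee = max(0.0, measured_savings) * outcome_share
    if outcome_cap is not None:
        outcome_fee = min(outcome_fee, outcome_cap)
    return base_fee + outcome_fee


# Illustrative numbers only.
within_quota = stage2_invoice(500, included_units=10_000, used_units=8_000, overage_rate=0.02)
over_quota = stage2_invoice(500, included_units=10_000, used_units=15_000, overage_rate=0.02)
hybrid = stage3_invoice(500, measured_savings=20_000, outcome_share=0.15, outcome_cap=2_000)
```

The base fee gives customers the predictability they ask for, while the overage or outcome component keeps the bill tied to consumption or delivered value.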

4. R&D Spending Is Accelerating and Becoming Unsustainable

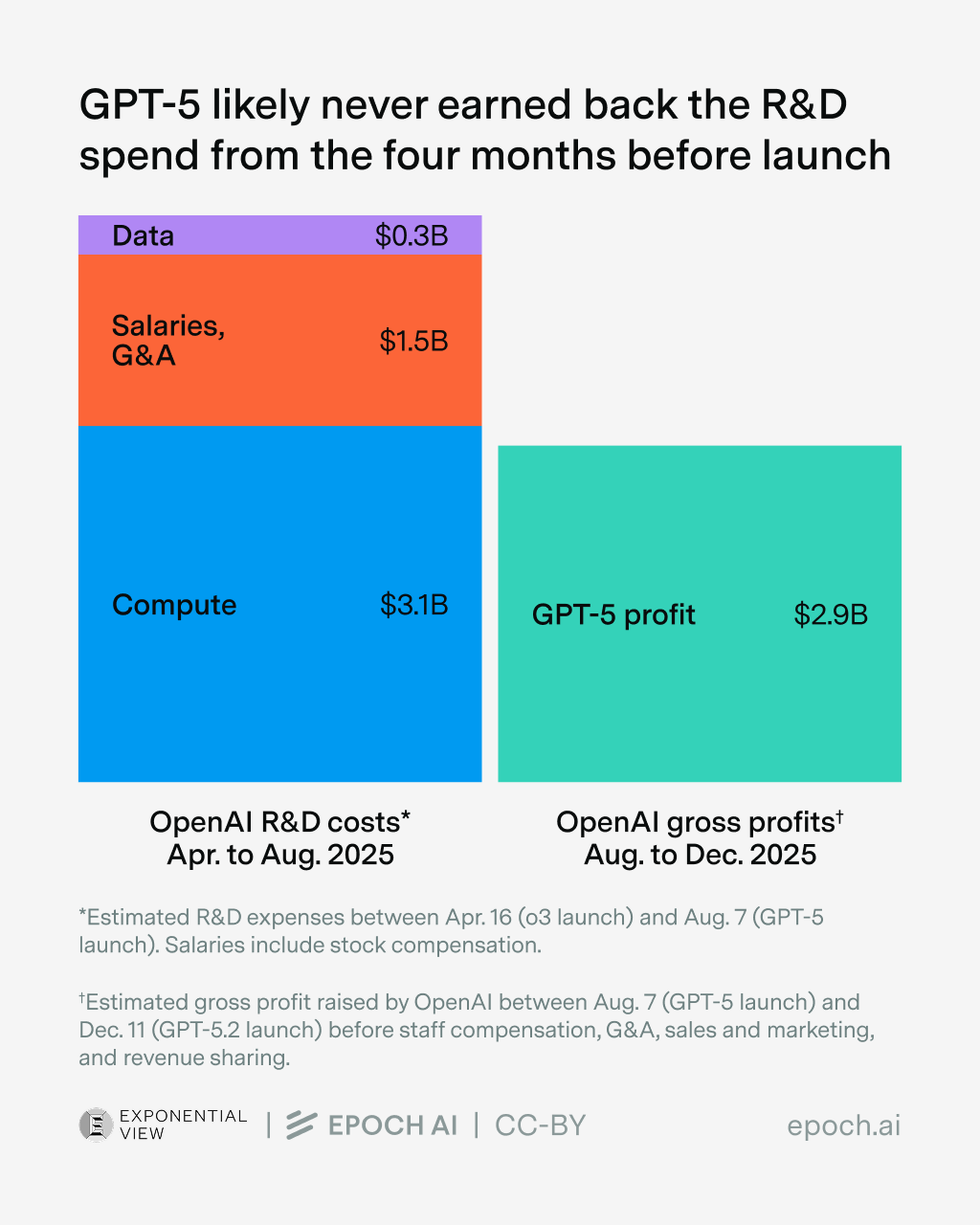

Here's the uncomfortable truth: R&D budgets are growing faster than revenue.

This makes sense on the surface. Building AI products is hard. You need strong engineers, ML expertise, and solid infrastructure. But there's a point where R&D growth outpaces commercial traction, and the numbers suggest many companies are approaching or passing that threshold.

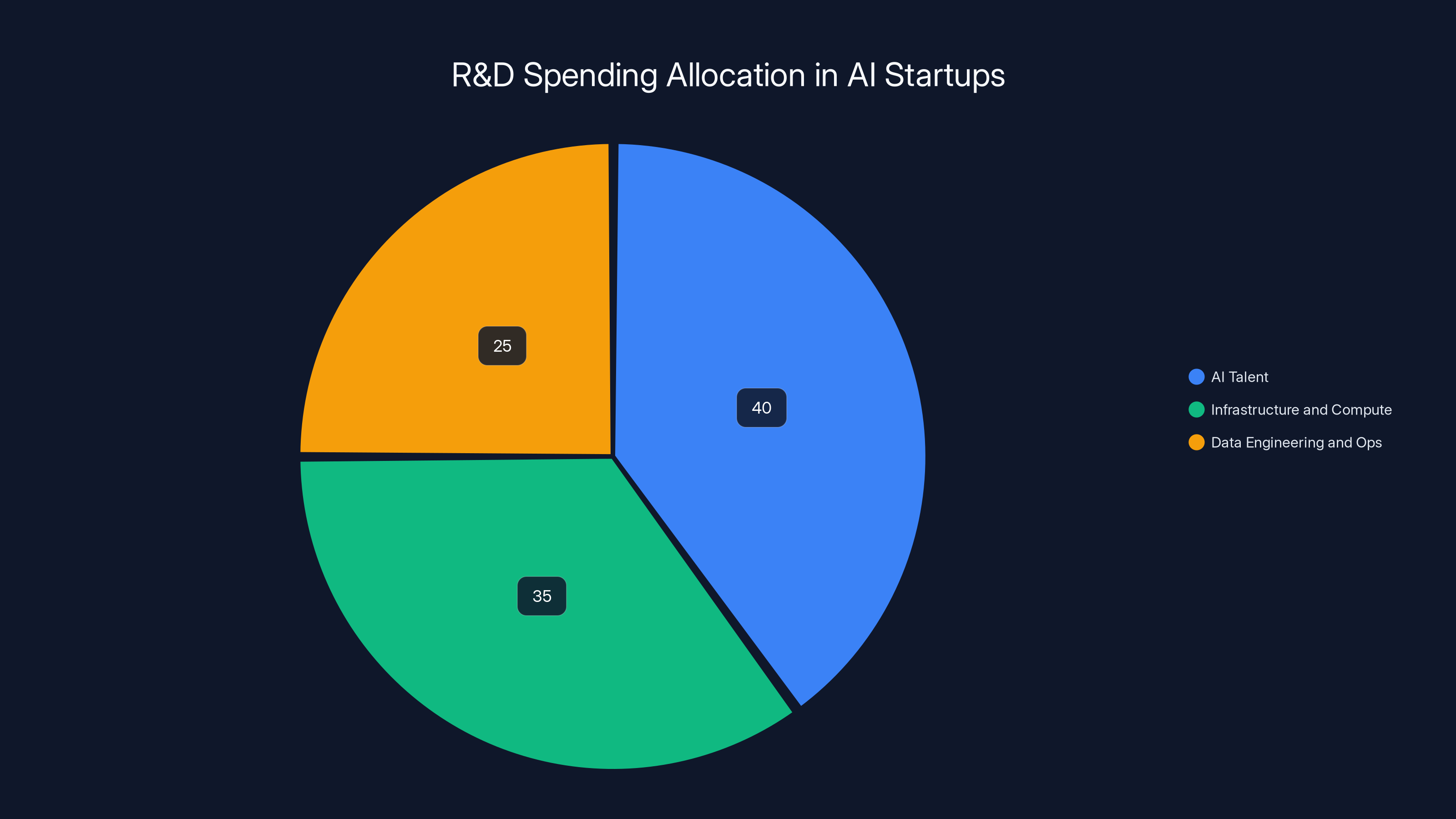

Where the R&D Money Is Going

Three categories dominate:

AI Talent: Hiring data scientists, ML engineers, and AI product specialists. These are expensive hires, often commanding 30-50% premiums compared to traditional software engineers.

Infrastructure and Compute: Building or renting the computational resources to train models, fine-tune existing models, and run inference at scale. This is increasingly expensive as you move beyond prototypes.

Data Engineering and Ops: Building pipelines, managing training data, setting up monitoring systems, and handling the operational burden of production AI systems.

Companies are investing heavily in all three, often simultaneously. And they're doing it before proving that the investments actually drive competitive advantage.

The Sustainability Question

R&D spending makes sense when it translates to defensibility. If you're building proprietary models, training on unique datasets, or developing novel approaches to hard problems, R&D investment can create lasting advantages.

But if you're building on top of commodity models with generic product features, R&D spending becomes a cost burden without a corresponding benefit. You're paying for the perception of innovation without creating real differentiation.

This is where the vertical AI trend becomes relevant. Companies building deeply specialized applications in specific industries can justify higher R&D because they're creating genuine expertise and proprietary workflows. Companies building generic AI features can't.

The data should make this clear: if your R&D is >30% of revenue and you're not seeing corresponding margin improvements or market share gains, something is wrong. Either your R&D is inefficient, or your product strategy isn't creating value.

The Talent Crisis Inside R&D

One specific issue worth highlighting: AI talent is expensive and concentrated.

There aren't enough qualified ML engineers and AI researchers to fill all the positions being created. This drives salaries up and causes companies to overpay for mediocre talent because they don't have better options.

This creates a vicious cycle. Higher salaries mean higher burn rates. Higher burn rates mean you need to raise more money. More money means more competition for talent. More competition means salaries keep climbing.

The companies that will win this game are the ones that figure out how to build and deliver AI products without needing an army of PhDs. That means building simpler, more focused products on top of existing models rather than trying to build everything from scratch.

5. Model Switching Is Becoming Standard Operating Procedure

The multi-model reality isn't just a trend. It's becoming fundamental to how AI companies operate.

The fact that companies are now using 3.1 model providers on average—and this number keeps growing—tells you something important: companies are treating models as commodities.

How Companies Are Thinking About Model Selection

Not long ago, choosing a model was a big decision. You picked one, you committed, and you built your whole system around it.

Now, companies are implementing:

- Model routing logic that sends different types of requests to different models based on cost, latency, and quality tradeoffs

- A/B testing at the model level where you test new models in production with a subset of users before rolling out fully

- Fallback chains where if one model is slow or fails, you automatically try another

- Cost optimization algorithms that continuously evaluate whether you're using the right model for each request type
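As one illustration of the practices in this list, a fallback chain can be sketched in a few lines. The providers here are plain callables standing in for real client code, which is an assumption of this sketch; it also assumes each callable raises an exception when the underlying model fails or times out.

```python
from typing import Callable, List, Sequence


def call_with_fallback(prompt: str,
                       providers: Sequence[Callable[[str], str]]) -> str:
    """Try each provider in order and return the first successful response."""
    errors: List[Exception] = []
    for provider in providers:
        try:
            return provider(prompt)
        except Exception as exc:  # a real system would catch narrower error types
            errors.append(exc)
    raise RuntimeError(f"all {len(providers)} providers failed: {errors}")


# Hypothetical stand-ins for real provider clients.
def primary(prompt: str) -> str:
    raise TimeoutError("primary provider timed out")


def secondary(prompt: str) -> str:
    return f"secondary answered: {prompt}"


answer = call_with_fallback("summarize this contract", [primary, secondary])
```

Ordering the list by preference (for example, cheapest acceptable model first) turns the same helper into a simple cost-aware chain.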

This is rational behavior when models are commodities. But it also means model providers can't rest on their laurels. If you're consistently more expensive or slower than a competitor, companies will route around you.

The Model Hierarchy Emerging

Based on the adoption data, a rough hierarchy is emerging:

Tier 1 (Default): OpenAI. Still the most widely adopted, still considered the best overall performer. But increasingly viewed as the default rather than the exclusive choice.

Tier 2 (Specialized): Google Gemini (coding, reasoning), Anthropic Claude (safety, long-context), and emerging players for specific use cases.

Tier 3 (Experimental): Open-source models and smaller providers that companies test but don't rely on for critical workloads.

The trend is clear: Tier 1 is slowly losing exclusivity as Tier 2 gets stronger and more specialized. Companies aren't abandoning OpenAI, but they're supplementing it with competitors.

What This Means for Model Providers

If you're a model provider watching this trend, it's concerning. You used to be the strategic choice. Now you're one option in a portfolio.

This is the model commoditization story playing out in real time. Providers will compete increasingly on:

- Cost efficiency: How cheap can you make inference while maintaining quality?

- Latency: How fast can you return results?

- Specialization: Are you best at coding? Math? Reasoning? Long-context retrieval?

- Integration: Do you integrate smoothly with the tools and workflows companies are already using?

The days of one dominant model for everything are ending.

Traditional subscription models are still dominant at 58%, but usage-based and outcome-based models are gaining traction, with outcome-based pricing seeing a ninefold increase to 18%.

6. Talent Concentration and AI Engineer Shortages Are Reshaping Economics

Building AI products requires different skills than traditional software engineering. And those skills are in short supply.

The talent crisis isn't theoretical. Companies report that hiring qualified AI engineers takes 2-3x longer than hiring traditional software engineers. Salaries are 30-50% higher. Retention is worse because top talent has multiple offers at any given time.

The Economics of AI Talent

When you have a scarce resource that multiple companies are competing for, prices rise. That's basic economics.

A senior ML engineer at a major tech company might earn

This creates a structural problem for startups trying to build sustainable businesses. Your cost structure assumes you can hire engineers at market rates. But if you're competing with Google, Meta, and OpenAI for talent, market rates don't apply.

The solution most companies are implementing: hire fewer PhDs and more engineers who can translate between product teams and AI infrastructure. Find people who understand both software engineering and machine learning, rather than pure researchers.

Geographic Concentration

The survey notes that 85% of companies are headquartered in North America, with 15% in Europe. This concentration matters for talent because it means competition for that talent is local.

If you're building an AI company in the San Francisco Bay Area, you're competing with every other tech company in the region. If you're in Europe or other geographies, you have fewer competitors but also a smaller talent pool.

Smart companies are starting to distribute teams geographically to access talent pools outside hotspots. But this brings its own challenges: timezone coordination, cultural differences, and the loss of in-person collaboration.

The Automation Inversion

Here's an interesting irony: AI companies are building AI products that automate work, but they can't automate their own product development because building AI is still fundamentally hard.

Eventually this changes. As AI tools get better, you'll be able to use AI to help build AI faster. But we're not there yet. Most AI teams are still doing a lot of manual work around data labeling, model evaluation, and infrastructure maintenance.

The companies that figure out how to automate these workflows internally will have a huge advantage over competitors who are still doing everything manually.

7. Infrastructure Efficiency Is Becoming the Secret Lever

Gross margins improve in two ways: raise prices or lower costs. Raising prices is hard in a competitive market. Lowering costs is mechanical and reliable.

For AI products, infrastructure cost is the biggest lever most companies can pull. And the best operators are pulling it hard.

Where Infrastructure Costs Hide

Most companies think of infrastructure costs as model inference fees. If you call GPT-4, you pay OpenAI. Simple.

But there's a lot of hidden cost elsewhere:

- Data storage and retrieval: Storing vectors, embeddings, and training data for fine-tuning. If you're doing semantic search or retrieval-augmented generation, you need a vector database. These aren't free.

- Compute for fine-tuning: If you're customizing models for specific use cases, you need GPU time for training. That's expensive.

- Monitoring and logging: Running production AI systems means logging lots of data for debugging and optimization. Storage and processing for that adds up.

- Testing and validation: Before deploying a new model or feature, you need to test it. That means running predictions on test data. It's small relative to production, but it accumulates.

Companies that optimize all of these, not just model inference, see margins improve substantially.

The Caching Revelation

One specific optimization worth highlighting: caching.

Most AI product queries aren't truly unique. Many customers ask similar questions or have similar workflows. If you implement smart caching, you can return cached responses for queries that are semantically similar to previous ones.

The math is simple: if 20% of your queries can be served from cache instead of hitting a model, you save 20% on model costs. With margin improvements of just a few percentage points making the difference between profit and loss, caching becomes strategically important.
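A minimal sketch of the idea follows. Note the simplification: this cache matches queries after normalizing case and whitespace, which is much cruder than the semantic matching described above (that would typically compare embeddings). The `call_model` callable is a hypothetical stand-in for whatever client you use.

```python
import re
from typing import Callable, Dict


class ResponseCache:
    """Serve repeated queries from memory instead of calling the model again."""

    def __init__(self, call_model: Callable[[str], str]) -> None:
        self._call_model = call_model  # hypothetical model client
        self._store: Dict[str, str] = {}
        self.hits = 0
        self.misses = 0

    @staticmethod
    def _key(query: str) -> str:
        # Normalize case and whitespace so trivially different queries share a key.
        return re.sub(r"\s+", " ", query.strip().lower())

    def ask(self, query: str) -> str:
        key = self._key(query)
        if key in self._store:
            self.hits += 1
            return self._store[key]
        self.misses += 1
        answer = self._call_model(query)
        self._store[key] = answer
        return answer

    @property
    def hit_rate(self) -> float:
        total = self.hits + self.misses
        return self.hits / total if total else 0.0
```

If every call costs roughly the same, the hit rate approximates the fraction of model spend avoided. A real deployment would also need expiry and invalidation rules so stale answers are not served.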

The Real Cost of Scale

Here's something that surprises founders: infrastructure costs don't scale linearly. At low volume, cost per call is high because fixed costs are spread across few requests. At high volume, cost per call falls because those fixed costs are amortized and you can negotiate better rates with providers.

But the inflection point where costs improve depends on volume. For model inference, you typically need millions of monthly API calls to get favorable pricing. Companies below that threshold are paying list prices.

This creates a valley of death around 100K-500K monthly API calls where you've outgrown hobby pricing but haven't reached scale pricing yet. The companies that survive this valley are the ones with the deepest pockets or the best cost optimization.

8. Customer Acquisition Costs and Unit Economics Are Diverging

Building a product is one thing. Selling it profitably is another.

AI product unit economics are starting to show warning signs. CAC (customer acquisition cost) is increasing faster than LTV (lifetime value) is growing, which is the opposite of what you want.

Why CAC Is Rising

A few factors combine:

Market saturation: Every software buyer knows about AI now. The free attention and hype cycle that early AI companies enjoyed is over. You have to pay for attention.

Longer sales cycles: For enterprise customers, AI is still new. Procurement teams are cautious. Security and compliance reviews take longer. Integration is more complex. Sales cycles have stretched from 3-4 months to 6-9 months, which increases CAC.

Lower conversion rates on freemium: Early AI companies had phenomenal conversion rates from free to paid because there was so little competition. Now there are dozens of companies in most verticals. Free trial conversion rates are dropping.

What's Happening to LTV

LTV is under pressure from different angles.

Churn is higher than expected: The initial cohorts of customers using AI products were early adopters who were sticky. As the market broadens, you're acquiring less engaged customers who churn faster.

Expansion revenue isn't materializing: Many companies assumed that as customers adopted AI features more widely, they'd upgrade to higher tiers or expand to larger teams. This is happening more slowly than expected.

Competitive displacement: Customers are evaluating competitors more frequently because the product category is still being defined. If a better alternative emerges, they switch faster than they would with mature SaaS products.

The Unit Economics Math

Let's say you have:

- CAC: $5,000

- Monthly ARPU: $1,000

- Monthly churn: 5% (customer lifetime 20 months)

- Gross margin: 50%

Your LTV is $1,000 × 20 months × 50% = $10,000, which gives an LTV:CAC ratio of 2:1.

Now imagine CAC rises to $7,500 (realistic for a maturing market). Your ratio drops to 1.33:1. You're barely breaking even on customer acquisition.
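The arithmetic in this example can be reproduced with a short script. It assumes the simple model implied above: customer lifetime is the reciprocal of monthly churn, and LTV is gross-margin-adjusted revenue over that lifetime. The function names are chosen here for illustration.

```python
def ltv(monthly_arpu: float, monthly_churn: float, gross_margin: float) -> float:
    """Gross-margin-adjusted lifetime value, with lifetime = 1 / monthly churn."""
    lifetime_months = 1 / monthly_churn
    return monthly_arpu * lifetime_months * gross_margin


def ltv_to_cac(monthly_arpu: float, monthly_churn: float,
               gross_margin: float, cac: float) -> float:
    """LTV:CAC ratio for the given inputs."""
    return ltv(monthly_arpu, monthly_churn, gross_margin) / cac


# Inputs from the example: $1,000 ARPU, 5% monthly churn, 50% gross margin.
baseline_ratio = ltv_to_cac(1_000, 0.05, 0.50, cac=5_000)  # about 2.0
stressed_ratio = ltv_to_cac(1_000, 0.05, 0.50, cac=7_500)  # about 1.33
```

Plugging in a lower churn rate or a higher ARPU shows why moving upmarket can restore the ratio even when CAC keeps rising.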

This is why so many AI companies are trying to move upmarket to higher-ARPU customers. Enterprise deals have higher CAC but also higher LTV and lower churn.

AI startups allocate significant portions of their R&D budgets to AI talent, infrastructure, and data engineering. Estimated data.

9. Data Quality and Fine-Tuning Are Becoming Competitive Necessities

You can't build a differentiated AI product on top of a commodity model without something proprietary to customize it.

For most companies, that something is data and fine-tuning.

The Fine-Tuning Opportunity

Using a base model out of the box gets you 80% of the way there. Getting the last 20% requires fine-tuning on data that's specific to your domain and use case.

The companies that are doing this well are seeing measurable improvements:

- Better accuracy: Fine-tuned models outperform base models on domain-specific tasks by 10-30% depending on the domain and amount of training data available.

- Lower latency: Smaller fine-tuned models can sometimes outperform larger base models on your specific task, which means faster inference and lower costs.

- Better cost efficiency: A fine-tuned smaller model might cost 70% less to run than a base frontier model while delivering better results.

But fine-tuning requires data. High-quality, labeled data. And that's expensive and time-consuming to build.

The Data Moat

Companies with access to proprietary data have a real advantage. A healthcare company with decades of patient records can build AI products that a competitor without that data can't match. A financial services company with transaction data can train models that competitors can't replicate.

The question is whether you can build these data moats fast enough to create defensibility before competitors catch up. Some industries have natural data advantages (healthcare, finance, law). Others don't.

The Operational Burden

Building and maintaining a fine-tuning pipeline is operationally complex. You need:

- Processes for collecting and labeling training data

- Quality control to ensure labels are accurate

- Version control for different fine-tuned model versions

- Evaluation frameworks to measure whether new versions are better

- Monitoring to detect when model performance degrades
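The evaluation step in this list can be sketched as a simple promotion gate: a new fine-tuned version replaces the current one only if it beats it on a held-out labeled set by some margin. The function names, the exact-match accuracy metric, and the 1-point default threshold are all assumptions for illustration; real evaluations usually need task-specific metrics.

```python
from typing import Callable, Sequence, Tuple

Example = Tuple[str, str]  # (input, expected label)


def accuracy(predict: Callable[[str], str], examples: Sequence[Example]) -> float:
    """Fraction of examples where the prediction exactly matches the label."""
    if not examples:
        raise ValueError("need at least one labeled example")
    correct = sum(1 for text, label in examples if predict(text) == label)
    return correct / len(examples)


def should_promote(candidate: Callable[[str], str],
                   baseline: Callable[[str], str],
                   examples: Sequence[Example],
                   min_gain: float = 0.01) -> bool:
    """Promote the candidate only if it beats the baseline by at least `min_gain`."""
    gain = accuracy(candidate, examples) - accuracy(baseline, examples)
    return gain >= min_gain
```

Running the same gate on every new model version, with the evaluation set kept under version control, is one way to turn fine-tuning from a one-time activity into a repeatable process.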

All of this requires engineering effort. And unlike model inference (which you can outsource to an API), this is mostly manual or requires custom tooling.

The companies that win are the ones that systematize this and make it efficient. The companies that treat fine-tuning as a one-time activity get surprised when they realize they need to do it again as their data or use cases evolve.

10. Regulatory and Compliance Overhead Is Growing

AI regulation is still emerging, but it's becoming real enough that companies need to account for it in their planning.

Regulations vary by geography and industry, but the general trend is clear: governments are paying attention and rules are coming.

Geographic Variations

EU: The AI Act is in effect. It classifies AI systems by risk level and applies different requirements to each. High-risk systems require extensive documentation, testing, and monitoring. This has real cost implications, as discussed in Euractiv.

USA: No comprehensive federal AI regulation yet, but sector-specific rules are emerging (healthcare, finance, etc.). The regulatory landscape is fractured and unclear, which creates uncertainty for companies.

Other regions: Australia, UK, Canada, and others are developing their own frameworks. The pattern suggests convergence around similar principles (transparency, accountability, fairness) but different implementation.

The Compliance Cost

For most AI companies, compliance isn't a product feature. It's overhead.

You need:

- Legal review of your systems

- Documentation of how models work

- Testing for bias and safety

- Audit trails and monitoring

- Processes for handling complaints and appeals

For early-stage companies, this can add 10-20% to engineering costs. For enterprise-focused companies selling to regulated industries, it can be much higher.

The Advantage of Scale

Here's where scale helps: compliance costs don't scale linearly. The cost of documenting one AI system is similar to documenting ten. Once you've built the compliance infrastructure, adding new products is cheaper.

This creates an advantage for larger companies and funding-rich startups over bootstrapped competitors. If you need to spend $500K on compliance before you can sell to enterprise customers, you need capital to fund that.

11. The Application Layer Is Where Products Differentiate

By now, the message is clear: building on top of commodity models requires something else to differentiate.

That something is application-layer innovation.

What Application-Layer Innovation Means

It's everything above the model:

- User experience: The interface, the workflow, the way users interact with the AI. A great UX can make an adequate model feel exceptional.

- Domain expertise: Understanding your customers' specific workflows, pain points, and regulatory requirements. This translates to better product decisions.

- Integrations: Connecting with the tools and systems customers already use. A standalone AI tool might be interesting, but an AI tool that integrates with your existing CRM or ERP is transformative.

- Outcomes focus: Designing your product to deliver measurable business outcomes, not just AI capabilities. This is the shift from "we have AI" to "we solve problems."

- Feedback loops: Building mechanisms for continuous improvement based on actual customer usage.

Case Studies in Application Innovation

Take vertical AI solutions for commercial real estate. A generic AI assistant that can answer questions is not valuable. But an AI system that understands commercial real estate law, financing structures, market dynamics, and integrates with your existing workflow tools? That's valuable.

Or consider AI for customer support. A generic chatbot can handle some percentage of inquiries. But an AI system trained on your company's product documentation, previous support tickets, and integrated with your CRM can handle much more. And it gets smarter as you feed more data into it.

The pattern is consistent: the valuable AI applications are the ones that combine model capability with deep product thinking and domain expertise.

Building Defensible Application Layers

The advantage of focusing on application innovation is that it's defensible. Models are commodities. Application layers aren't.

Once you've built a deeply integrated AI system for a specific industry, with strong UX, good integrations, and proven outcomes, it's expensive for customers to switch. They've built workflows around it. They've trained their teams on it. They're getting value from it.

That stickiness creates the moat that allows for sustainable margins and profitable growth.

Outcome-based pricing aligns incentives better but is harder to implement compared to usage-based pricing. Estimated data.

12. Open Source Models Are Disrupting Closed Models

There's a quiet revolution happening in open-source AI. Models like Llama 2, Mistral, and others are getting better faster than anyone expected.

Just 12-18 months ago, open-source models were clearly inferior to closed models like GPT-4. The gap is closing. For some specific tasks, open-source models now match or exceed closed models.

The Economics of Open Source

The appeal of open-source models is straightforward: no per-API-call fees. You can run them on your own infrastructure or rent GPU capacity as needed.

For use cases where latency isn't critical or where you're running very high volume, the economics can be significantly better than using commercial APIs.

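A company running 10 million monthly API calls might pay substantially less by self-hosting, but only if that volume keeps its GPUs busy enough to cover their fixed cost.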
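To make the comparison concrete, here is a rough break-even sketch. Every price in it is a hypothetical placeholder, not a figure from the research; substitute your own API rate, GPU rental cost, and engineering overhead.

```python
def monthly_api_cost(calls: int, price_per_call: float) -> float:
    """Cost of a commercial API billed purely per call."""
    return calls * price_per_call


def monthly_self_hosted_cost(gpu_hours: float, gpu_hourly_rate: float,
                             engineering_overhead: float) -> float:
    """Cost of self-hosting: rented GPU time plus a fixed people/ops cost."""
    return gpu_hours * gpu_hourly_rate + engineering_overhead


def break_even_calls(price_per_call: float, fixed_self_hosted_cost: float) -> float:
    """Monthly call volume above which self-hosting is cheaper.

    Assumes the self-hosted cost is roughly fixed for the month.
    """
    return fixed_self_hosted_cost / price_per_call


# Hypothetical inputs, for illustration only.
CALLS = 10_000_000
PRICE_PER_CALL = 0.002   # assumed API price in dollars per call
GPU_HOURS = 2 * 730      # assumed: two GPUs running all month
GPU_RATE = 2.50          # assumed rental price per GPU-hour
OVERHEAD = 8_000.0       # assumed monthly engineering/ops cost

api = monthly_api_cost(CALLS, PRICE_PER_CALL)
hosted = monthly_self_hosted_cost(GPU_HOURS, GPU_RATE, OVERHEAD)
print(f"API: ${api:,.0f}  self-hosted: ${hosted:,.0f}")
print(f"Break-even volume: {break_even_calls(PRICE_PER_CALL, hosted):,.0f} calls/month")
```

The useful output is the break-even volume rather than either total: below it, the fixed cost of GPUs and engineers outweighs the per-call savings.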

The Trade-offs

But open-source models require infrastructure investment. You need:

- Engineers to manage the infrastructure

- Monitoring and scaling to handle traffic

- Fine-tuning and customization to get good results

- Ongoing maintenance as new model versions emerge

For companies with sophisticated engineering teams, this is worthwhile. For companies without that infrastructure capability, it's a distraction.

This creates a clear split: well-funded AI companies with strong engineering teams are increasingly using open-source models and saving significantly. Smaller companies are sticking with APIs because the infrastructure cost isn't worth the savings.

The Long-term Trajectory

The trend is clear: open-source models are improving quickly and closing the gap on specific tasks, but closed frontier models still hold the lead in raw capability. Rather than displacing them, open-source is creating a "good enough" tier that's optimal for many use cases and far cheaper than frontier models.

The market is stratifying: frontier models for the hardest problems, open-source models for everything else. This is healthy for the ecosystem because it creates options and competition.

13. Customer Success and Outcomes Measurement Are Becoming Strategic

With pricing models shifting toward usage-based and outcome-based approaches, understanding and delivering customer outcomes becomes strategic, not just a nice-to-have.

The Outcomes Measurement Problem

Most AI products can't reliably measure whether they're delivering value to customers. This is a massive problem.

If you can't measure outcomes, you can't:

- Price based on outcomes

- Justify retention conversations

- Identify which customers are getting value and which aren't

- Improve the product based on what works

The companies that are building measurement into their products from day one have a huge advantage. They know what works, they can optimize, and they can charge based on proven value.

Building Measurement In

This means:

- Instrumentation: Tracking how customers use your product and what outcomes they achieve

- Benchmarking: Understanding what good looks like so you can measure against it

- Attribution: Connecting customer actions in your product to business outcomes

- Feedback loops: Showing customers their outcomes so they understand the value
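As a minimal sketch of how instrumentation, attribution, and benchmarking fit together, the snippet below records outcome events per customer and flags accounts that fall below a target success rate. The `OutcomeEvent` fields, the customer names, and the 75% benchmark are all hypothetical.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class OutcomeEvent:
    customer_id: str
    action: str     # what the customer did in the product
    outcome: bool   # whether the desired business result followed


def success_rates(events: list[OutcomeEvent]) -> dict[str, float]:
    """Instrumentation and attribution: share of actions that led to an outcome."""
    totals: dict[str, int] = defaultdict(int)
    wins: dict[str, int] = defaultdict(int)
    for e in events:
        totals[e.customer_id] += 1
        wins[e.customer_id] += int(e.outcome)
    return {cid: wins[cid] / totals[cid] for cid in totals}


def at_risk(rates: dict[str, float], benchmark: float) -> list[str]:
    """Benchmarking: customers whose success rate falls below the target."""
    return sorted(cid for cid, rate in rates.items() if rate < benchmark)


# Hypothetical events, for illustration only.
events = [
    OutcomeEvent("acme", "ticket_resolved_by_ai", True),
    OutcomeEvent("acme", "ticket_resolved_by_ai", True),
    OutcomeEvent("globex", "ticket_resolved_by_ai", False),
    OutcomeEvent("globex", "ticket_resolved_by_ai", True),
]
rates = success_rates(events)
print(rates, at_risk(rates, benchmark=0.75))
```

The same per-customer rates can feed a customer-facing dashboard, which covers the feedback-loop item on the list.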

It's not sexy work, but it's increasingly essential.

The Customer Success Burden

As products become more complex and outcomes less obvious, customer success (CS) becomes more important and more expensive.

Early AI product customers could see value immediately. Now you're selling to broader audiences who need help implementing and getting value. CS teams are growing faster than revenue.

Winning companies are automating CS wherever possible: self-service dashboards that show outcomes, automated alerts when value is at risk, AI-powered recommendations for how to use the product better.

14. The Role of Prompting vs. Fine-Tuning in Product Strategy

There's a spectrum of ways to customize models to your use case: from simple prompting to extensive fine-tuning.

Prompting: The Quick Win

Prompt engineering—writing sophisticated prompts that get models to do what you want—has gotten surprisingly powerful.

Simple example: instead of asking a model "Summarize this document," you can ask "You are a contract lawyer reviewing a commercial lease. Identify the key terms, liability clauses, and anything unusual. Here's the document: [document]. Your summary:"

The more specific your prompt, the better the output. Companies are building entire features on top of prompt engineering without any fine-tuning.

The advantage is speed: you can iterate on prompts in hours, not weeks. The disadvantage is cost: long, complex prompts add input tokens to every request, which raises both per-call cost and latency.
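One way to keep prompt iteration fast is to treat the prompt as a small template in code. The sketch below rebuilds the contract-lawyer example from reusable parts; the function name `build_prompt` and its parameters are illustrative, not part of any provider's API.

```python
def build_prompt(role: str, task: str, focus: list[str], document: str) -> str:
    """Assemble a role-specific prompt from reusable parts."""
    focus_text = ", ".join(focus)
    return (
        f"You are a {role} {task}. "
        f"Identify {focus_text}. "
        f"Here's the document:\n{document}\n\nYour summary:"
    )


prompt = build_prompt(
    role="contract lawyer",
    task="reviewing a commercial lease",
    focus=["the key terms", "liability clauses", "anything unusual"],
    document="<lease text goes here>",
)
print(prompt)
```

Changing the role or the focus list is then a one-line edit, which is what makes hours-not-weeks iteration realistic.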

Fine-Tuning: The Long Game

Fine-tuning means training a model on examples specific to your use case. It's slower and more expensive upfront, but it delivers:

- Better accuracy on your specific task

- Lower latency

- Lower cost per inference (you can use a smaller model)

The Hybrid Approach

Winning companies aren't choosing between prompting and fine-tuning. They're combining them.

Start with prompt engineering to get to decent quality fast. As you understand your use case better and collect more data, gradually shift to fine-tuning. The final state is a combination: highly optimized prompts combined with a fine-tuned model.

AI gross margins are projected to increase from 41% in 2024 to 52% in 2026, while R&D costs are expected to rise significantly, highlighting the dual challenge of profitability and investment. Estimated data.

15. The Future of AI Pricing: Toward Transparency and Predictability

The current chaos in AI pricing is unsustainable. Eventually, the market will settle on models that customers and vendors can both live with.

The Direction of Travel

Based on industry trends, the future likely includes:

- Transparent usage: Customers will have clear visibility into how much they're spending and why. No surprise bills.

- Tiered access to models: Different tiers for different models. Basic tier gets access to cheaper models. Premium tier gets frontier models. This gives customers choice and price points.

- Outcome-based components: As measurement improves, pricing will increasingly include outcome-based components. You'll pay a base subscription plus a percentage of value created.

- Bundled services: Rather than paying separately for models, infrastructure, and integration, companies will bundle these together and charge a single price.

The Pricing That Will Win

My bet is on a subscription base plus a light usage component plus an optional outcome-based kicker.

Subscription covers platform access and some monthly usage. Usage component covers overage. Outcome-based kicker lets you share in exceptional value when you deliver outsized results.

This balances predictability (base subscription), fairness (usage component), and upside sharing (outcome component).
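A minimal sketch of how such an invoice could be computed is shown below. The fee levels, the included-usage allowance, and the idea of applying the kicker only above an agreed value threshold are assumptions for illustration, not terms from the research.

```python
def monthly_invoice(base_fee: float, included_units: int, units_used: int,
                    overage_rate: float, measured_value: float = 0.0,
                    value_threshold: float = 0.0,
                    kicker_share: float = 0.0) -> dict[str, float]:
    """Subscription base + usage overage + optional outcome-based kicker."""
    overage_units = max(0, units_used - included_units)
    usage_charge = overage_units * overage_rate
    # Assumption: the kicker applies only to value above an agreed threshold.
    kicker = max(0.0, measured_value - value_threshold) * kicker_share
    return {
        "base": base_fee,
        "usage": usage_charge,
        "kicker": kicker,
        "total": base_fee + usage_charge + kicker,
    }


# Hypothetical contract terms, for illustration only.
invoice = monthly_invoice(
    base_fee=2_000.0, included_units=50_000, units_used=65_000,
    overage_rate=0.01, measured_value=40_000.0,
    value_threshold=30_000.0, kicker_share=0.05,
)
print(invoice)
```

Leaving `kicker_share` at its default of zero reduces this to plain subscription-plus-overage pricing, so the outcome component can be introduced later without changing the structure.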

16. Building Sustainable AI Businesses: The Path Forward

If you zoom out from all the trends and data, a clear picture emerges of what sustainable AI businesses look like:

First: Pick Your Vertical

Vertical > horizontal. Specialized > generic. Deep > broad.

Find an industry or workflow where you understand the problem deeply, where there's a clear ROI for solving it, and where your AI solution can integrate into existing workflows.

Second: Own the Application Layer

Don't bet the company on being better at building models. Bet on being better at building products that solve customer problems.

Use commodity models as your foundation. Differentiate through UX, integrations, domain expertise, and continuous improvement based on customer feedback.

Third: Optimize Economics Relentlessly

Build measurement into your product from day one. Track:

- Unit economics (CAC, LTV, payback period)

- Infrastructure costs per customer

- Gross margins by customer segment

- Customer outcomes and correlation to retention

Use this data to make decisions. Move fast where data supports it. Move slow where uncertainty is high.
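The metrics above reduce to a few lines of arithmetic. The sketch below uses simplified textbook formulas (for example, lifetime approximated as one over monthly churn) and made-up inputs; treat it as a starting point rather than a finance model.

```python
def segment_gross_margin(revenue: float, infrastructure_cost: float) -> float:
    """Gross margin, counting infrastructure (inference, hosting) as cost of revenue."""
    return (revenue - infrastructure_cost) / revenue


def ltv(monthly_revenue: float, margin: float, monthly_churn: float) -> float:
    """Lifetime value: monthly gross profit times expected lifetime (1 / churn)."""
    return monthly_revenue * margin / monthly_churn


def payback_months(cac: float, monthly_revenue: float, margin: float) -> float:
    """Months of gross profit needed to recover customer acquisition cost."""
    return cac / (monthly_revenue * margin)


# Hypothetical customer segment, for illustration only.
margin = segment_gross_margin(revenue=1_000.0, infrastructure_cost=480.0)
print(f"Gross margin: {margin:.0%}")
print(f"LTV: ${ltv(1_000.0, margin, monthly_churn=0.02):,.0f}")
print(f"CAC payback: {payback_months(6_000.0, 1_000.0, margin):.1f} months")
```

Running the same functions per customer segment shows which segments actually carry the margin.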

Fourth: Price for Sustainability

Don't chase growth at the expense of margins. Price in a way that aligns your success with customer success.

Start simple. Iterate based on feedback. Be willing to change your pricing model as you learn what actually drives value for customers.

Fifth: Invest Strategically in R&D

R&D spending should align with your differentiation strategy. If your differentiation is application-layer, heavy R&D in model training is a waste. If your differentiation requires fine-tuning and data, R&D makes sense.

Be ruthless about eliminating R&D that doesn't drive differentiation. Every dollar spent on R&D is a dollar not spent on growth.

17. Competitive Dynamics in 2025: Consolidation and Specialization

The AI software market is beginning to stratify. A few themes are emerging:

Horizontal Consolidation

We're starting to see AI features being built directly into existing platforms. Salesforce isn't waiting for a startup to build AI CRM features—they're building them. Microsoft isn't waiting for a startup to build AI Office features—they're building them.

This means startups have fewer opportunities to compete at the horizontal layer. The traditional playbook of building a horizontal tool and selling it to enterprises is becoming harder.

Vertical Consolidation

At the same time, we're seeing startups carving out defensible positions in vertical markets. A startup building AI specifically for healthcare, finance, or legal has a chance because it understands the domain and can build deeper integration than a horizontal player.

Distribution Partnerships

Some startups are competing not through direct distribution but through partnerships with existing platforms. Being the AI engine that powers another company's product can be lucrative if you pick the right partner.

The Survivor Profile

The AI startups that will thrive in 2025 likely share a few characteristics:

- Focused: Solving a specific problem for a specific customer segment, not trying to be all things to all people

- Operationally disciplined: Ruthless about unit economics and customer success

- Well-capitalized: Enough money to weather the current market conditions and competitive pressures

- Founder-led: Strong product intuition and deep customer understanding from the top

18. The Role of Integration and Ecosystem in Winning

AI products don't exist in isolation. They exist within ecosystems of other tools.

A customer using an AI product also uses a CRM, a project management tool, an ERP system, and a dozen other business applications. The AI product that integrates seamlessly with existing workflows wins. The one that requires customers to copy/paste between systems loses.

Building Integration Early

Winning companies are prioritizing integration from early stages:

- API-first architecture: Building in a way that makes it easy for customers to integrate

- Native integrations: Building direct connectors to popular platforms like Salesforce, HubSpot, and others

- Webhooks and automation: Making it easy for customers to trigger your AI system from other tools
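As an illustration of the webhook item, here is a minimal sketch of a receiver that verifies an HMAC-SHA256 signature before accepting an event. The payload shape, the status codes returned as a dict, and the shared-secret scheme are assumptions; real platforms each define their own signing conventions.

```python
import hashlib
import hmac
import json


def sign(payload: bytes, secret: bytes) -> str:
    """HMAC-SHA256 signature the sender attaches to each webhook."""
    return hmac.new(secret, payload, hashlib.sha256).hexdigest()


def handle_webhook(payload: bytes, signature: str, secret: bytes) -> dict:
    """Verify the sender, then hand the event to the AI workflow."""
    if not hmac.compare_digest(sign(payload, secret), signature):
        return {"status": 401, "error": "invalid signature"}
    event = json.loads(payload)
    # Hypothetical dispatch: a real system would enqueue a job here.
    return {"status": 202, "queued": event["type"]}


# Hypothetical event from another tool, for illustration only.
secret = b"shared-secret"
body = json.dumps({"type": "deal.updated", "id": 42}).encode()
print(handle_webhook(body, sign(body, secret), secret))
print(handle_webhook(body, "bad-signature", secret))
```

Using `hmac.compare_digest` rather than `==` avoids leaking timing information when comparing signatures.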

The Integration Moat

Once you're integrated into a customer's workflow, switching costs increase dramatically. If your AI system is wired into several of the customer's other tools, replacing it means reworking every one of those connections.

This is why integration is strategic, not just a feature request.

The Platform Risk

However, deep integration creates risk. If the platform you're integrating with changes its API, you have to change too. If it builds competing features, you're suddenly a feature in its product instead of a standalone offering.

This is why the most resilient AI companies are the ones with multiple integration points, not ones that are completely dependent on a single platform.

19. The Data Privacy and Security Implications of AI

AI systems are data hungry. The more data you feed them, the better they get. But data has regulatory and reputational implications.

The Privacy Tension

There's a fundamental tension: to build great AI products, you need to train on customer data. But customers are increasingly concerned about privacy and data security.

How do you reconcile this?

- Transparency: Be explicit about what data you collect and why. Don't surprise customers.

- Control: Give customers granular control over what data is used for training and optimization.

- Anonymization: Where possible, remove identifying information before training.

- Compliance: Build privacy by design, not as an afterthought.
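For the anonymization step, a very rough sketch is to scrub obvious identifiers before text reaches a training set. The two regular expressions below are simplistic assumptions that catch common email and phone formats only; production systems typically need dedicated PII detection on top of this.

```python
import re

# Simplistic patterns, for illustration only; they will miss many real-world formats.
EMAIL = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
PHONE = re.compile(r"\+?\d[\d\s().-]{7,}\d")


def redact(text: str) -> str:
    """Replace emails and phone-like numbers with placeholders."""
    text = EMAIL.sub("[EMAIL]", text)
    return PHONE.sub("[PHONE]", text)


print(redact("Contact jane.doe@example.com or +1 (555) 010-9999 about the lease."))
```

Redacting at ingestion time, before data is stored for training, also keeps the transparency and control commitments easier to honor.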

The Security Requirements

As AI becomes more strategically important, the security requirements increase. Customers want to know:

- How is data encrypted in transit and at rest?

- What access controls are in place?

- What happens if there's a breach?

- Can they audit your systems?

Meeting these requirements is expensive. It's another reason why infrastructure costs are climbing.

The Competitive Advantage Opportunity

Companies that build privacy and security as core differentiators, not as checkbox features, will win over more cautious customers. "We don't see or store your data. The model is fine-tuned locally in your infrastructure" is a compelling story if you can deliver on it.

20. Preparing for the Next Wave: What's Coming in 2025-2026

Based on current trends, here's what's likely coming:

Model Costs Will Drop Faster

Competition will drive down API costs for AI inference. Closed-model providers will need to match open-source cost economics or lose customers.

This is good for customers but puts pressure on margins. Companies that have built cost optimization into their products will be fine. Companies that haven't will struggle.

Outcome-Based Pricing Will Become Standard

As measurement improves and vendors gain confidence in their product's impact, outcome-based pricing will move from experimental to standard. This requires strong measurement and alignment with customer success.

Consolidation Will Accelerate

There are too many AI startups chasing too little clearly defined value. Some will get acquired. Some will shut down. The winners will be the ones with strong product-market fit and sustainable unit economics.

Regulation Will Get Real

The EU AI Act is already in force, with its obligations phasing in over time. US regulations are coming. This will increase compliance costs and slow product development in regulated industries. Companies with strong compliance infrastructure will have advantages.

The Model Wars Will Settle

Eventually, we'll see a clear hierarchy of models: frontier models for the hardest problems, mid-tier models for common tasks, and open-source models for everything else. The disruption will settle into a stable state.

Vertical Solutions Will Dominate

In two years, the dominant AI startups will be the ones with deep vertical focus, strong product differentiation, sustainable unit economics, and proven customer value.

The era of building generic AI features is ending. The era of building AI-powered vertical solutions is just beginning.

Conclusion: The Shift From Experimentation to Execution

We're witnessing a fundamental shift in the AI market. For the last 18-24 months, the focus was on experimentation: Can we build AI features? Do customers want them? Does this even work?

Those questions are largely answered. Yes, you can build AI features. Yes, customers want them. Yes, it works.

Now the hard part begins: building sustainable, profitable businesses on top of AI.

The data from the latest industry research confirms what many have intuited: the easy money in AI has been made. Margins are improving for disciplined operators, but they're under pressure from rising R&D and infrastructure costs. Pricing is in flux. The market is getting competitive.

The companies that will thrive are the ones that understand this transition. They're the ones building deeply specialized vertical solutions, not trying to be everything to everyone. They're the ones obsessing over unit economics and customer outcomes, not just feature velocity. They're the ones using commodity models as a foundation and building differentiation in the layers above.

The next phase of AI isn't about bigger models or better training techniques. It's about better products, smarter operations, and sustainable business models.

If you're building an AI product right now, the decisions you make in the next 6-12 months will determine whether you're in the winner's circle in 2027. Focus on what actually matters: solving real problems for real customers in ways that generate genuine value.

That's how sustainable AI businesses get built.

FAQ

What does "vertical AI application" mean and why does it matter?

A vertical AI application solves a specific problem for a specific industry or workflow. Unlike horizontal AI tools that try to serve everyone, vertical applications go deep into one domain. They understand the workflows, regulations, and pain points specific to that industry. This deep specialization creates defensibility that generic tools can't match, which is why vertical solutions are becoming the focus of most AI companies.

How do companies actually improve AI gross margins?

Companies improve margins through a combination of strategies: optimizing model selection and routing (using cheaper models for simple tasks), implementing smart caching to reduce redundant API calls, building infrastructure efficiency into product design, and combining multiple model providers to find the best cost-quality tradeoff for each use case. The most dramatic improvements come from companies that optimize across all these dimensions simultaneously, not just focusing on one.
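A minimal sketch of two of these levers, routing and caching, is shown below. The model names, per-call prices, and word-count routing rule are hypothetical, and `call_model` is a stand-in for a real provider call.

```python
from functools import lru_cache

# Hypothetical model tiers and per-call prices, for illustration only.
PRICES = {"small": 0.0005, "large": 0.01}


def pick_model(prompt: str, word_limit: int = 50) -> str:
    """Naive router: short prompts go to the cheap model."""
    return "small" if len(prompt.split()) <= word_limit else "large"


def call_model(model: str, prompt: str) -> str:
    """Stand-in for a real provider call."""
    return f"[{model}] response to: {prompt[:30]}"


@lru_cache(maxsize=1024)
def answer(prompt: str) -> str:
    """Cache identical prompts so repeated requests skip the provider call."""
    return call_model(pick_model(prompt), prompt)


def estimated_cost(prompts: list[str]) -> float:
    """Spend when each distinct prompt is paid for once and repeats hit the cache."""
    return sum(PRICES[pick_model(p)] for p in set(prompts))


questions = ["What is our refund policy?"] * 3 + ["word " * 60]
for q in questions:
    answer(q)
print(answer.cache_info(), estimated_cost(questions))
```

Real routers usually classify task difficulty rather than count words, but the structure is the same: decide the tier first, then check the cache before paying for a call.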

What's the difference between outcome-based pricing and usage-based pricing?

Usage-based pricing charges customers based on how much they use the product (e.g., number of API calls). Outcome-based pricing charges based on the business results delivered (e.g., percentage of cost savings achieved). Usage-based pricing is simpler to implement but can create unpredictable costs for customers. Outcome-based pricing aligns incentives more closely, but it is harder to implement because both parties must agree on how to measure outcomes.

Why are companies planning to change their AI pricing models?

Fixed subscription pricing breaks down for AI because actual value depends on usage and outcomes, which vary dramatically by customer. Some customers need 1,000 monthly API calls, others need 1 million. Fixed pricing either leaves money on the table or prices out valuable customers. As measurement improves and outcomes become clearer, companies are shifting to pricing models that capture value more accurately.

Should AI startups be investing heavily in proprietary model development?

For most AI startups, the answer is no. Building frontier models requires enormous capital, top talent, and years of development. Instead, most winners are building on top of commodity models (OpenAI, Anthropic, Google) and competing on application-layer innovation. The exceptions are companies with unique data assets or specific use cases where a specialized model creates real advantage over general-purpose models.

How much R&D spending is too much for an AI company?

A general heuristic: R&D should be 15-25% of revenue for companies in growth mode, higher (25-35%) only if you're building differentiating technology like proprietary models or specialized fine-tuning. If your R&D exceeds 35% of revenue and you're not seeing corresponding margin improvements or market share gains, you likely have an efficiency problem. Compare your R&D efficiency against peers in your vertical.

What's the most important thing to measure in an AI product?

Customer outcomes. Everything else is secondary. Can you prove that your AI product delivers measurable value to customers? If yes, pricing is easier, retention is higher, and growth is sustainable. If no, you're selling features instead of solutions, and the market will eventually punish you for it. Build measurement into your product from day one.

Key Takeaways

- AI gross margins are projected to reach 52% by 2026, up from 41% in 2024, but only for companies that are disciplined about infrastructure optimization and model routing

- The market has shifted decisively toward vertical AI applications (70% adoption) over horizontal tools, as model commoditization accelerates

- AI pricing models are breaking down with 37% of companies planning changes, moving from subscription toward usage-based and outcome-based models

- R&D spending is exploding faster than revenue growth, requiring founders to be ruthless about what R&D investments actually drive competitive advantage

- Application-layer differentiation now drives 49% of competitive advantage compared to model-layer innovation, fundamentally reshaping AI product strategy
