Introduction: When AI's Biggest Players Fight for Your Attention

Davos isn't just about economics anymore. In January 2025, the World Economic Forum transformed into something altogether different: a primary debate stage for the AI race, where the three most powerful artificial intelligence labs in the world spent a week taking shots at each other like political candidates fighting for votes.

This wasn't subtle corporate posturing. This was a full-contact reputation war.

Google DeepMind CEO Demis Hassabis questioned OpenAI's ad strategy. Anthropic CEO Dario Amodei dismissed the move as a symptom of a "death race" for free users. OpenAI's policy head Chris Lehane, a veteran political operative from the Clinton White House nicknamed the "master of disaster," fired back with rhetoric that felt ripped from a presidential campaign.

The stakes aren't academic debates about AI safety or capabilities anymore. They're about survival, market share, and whose vision of artificial intelligence will define the next decade. They're about who gets to monetize billions of users. They're about who becomes the Microsoft of AI.

Here's what's really happening beneath the surface of Davos, and why it matters to anyone paying attention to the future of technology.

The Davos Moment: When AI Leaders Descended on Switzerland

The World Economic Forum has always been where power brokers gather to shape narratives about the future. Davos is where the world's elites go to influence policy, make deals, and secure their place in whatever comes next. In 2025, that "what comes next" was artificial intelligence.

The timing was strategic for everyone involved. OpenAI had just introduced ads into ChatGPT. Anthropic was positioning itself as the ethical alternative. Google DeepMind was trying to prove it could compete with both of them. Nobody could resist the stage.

What made Davos different from normal corporate competition wasn't the products. It wasn't engineering breakthroughs or capability comparisons. It was the willingness to fight publicly, on camera, in front of journalists, investors, and policymakers. These weren't press releases or subtle blog posts. This was face-to-face combat disguised as business-as-usual conference appearances.

Davos, in essence, became the Iowa Caucuses for the AI race.

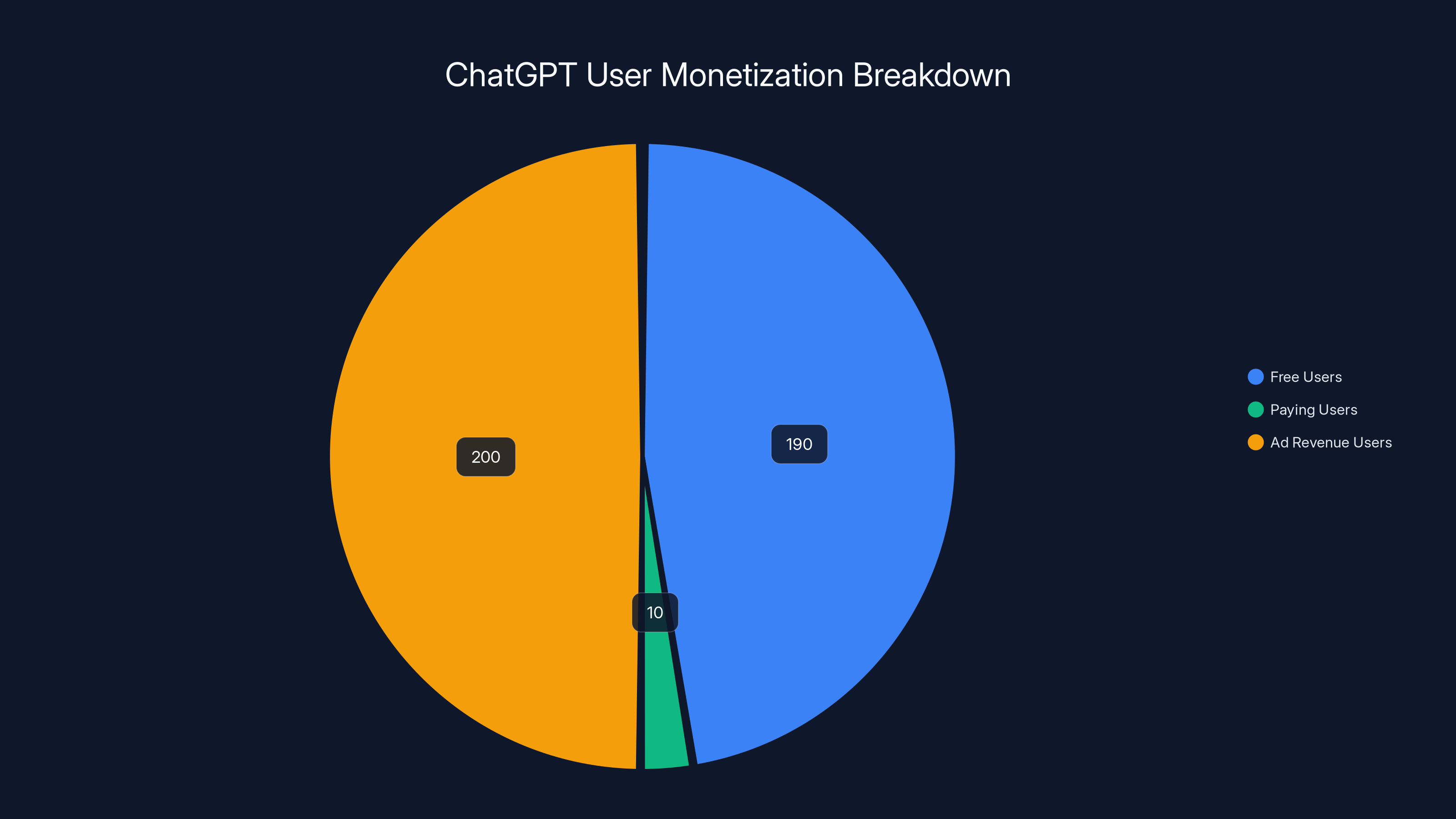

Estimated data shows that 95% of ChatGPT's 200 million users are free users, with a small percentage paying for ChatGPT Plus. Ads are introduced to monetize the large free user base.

OpenAI's Ad Strategy: A Desperate Move or Smart Monetization?

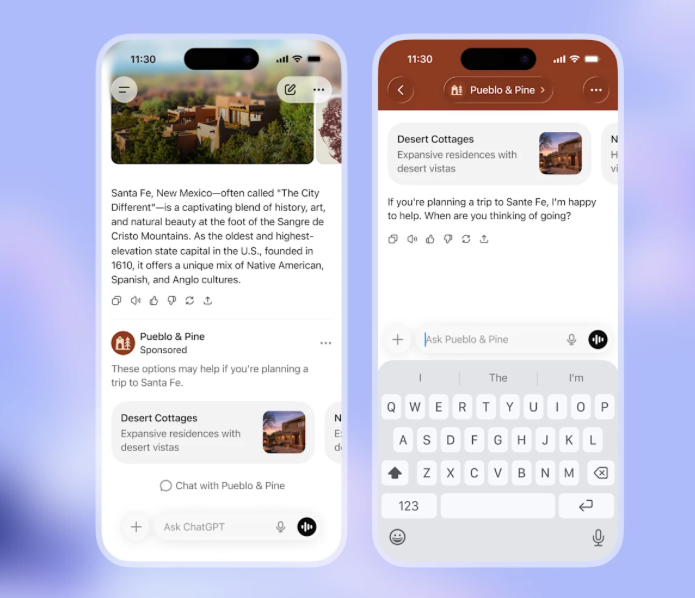

OpenAI started this round of Davos drama by doing something controversial: putting ads in ChatGPT. The company had spent years positioning itself as the scrappy underdog that cared more about impact than profit. Suddenly, ads appeared. It felt, to some observers, like a betrayal of principle.

But here's the actual problem OpenAI faced: how do you monetize a service that hundreds of millions of people use for free?

ChatGPT is used by an estimated 200 million people monthly. That's massive reach. But if 95% of those users aren't paying for ChatGPT Plus at $20 a month, the economics don't work. The company burns through compute costs that are genuinely staggering. Running large language models isn't cheap. Every inference, every token generated, costs real money.
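The arithmetic behind that claim can be sketched with the article's own estimates. The snippet below is a back-of-the-envelope illustration, not OpenAI's actual financials: the 200 million users, 95% free share, and $20 price come from the figures above, while the helper name and the idea of spreading subscription revenue across free users are assumptions made purely for illustration.

```python
# Back-of-the-envelope sketch using the article's estimates (not reported financials).
MONTHLY_USERS = 200_000_000   # estimated monthly ChatGPT users
FREE_PERCENT = 95             # estimated share of users who do not pay
PLUS_PRICE_USD = 20           # ChatGPT Plus price per month


def subscription_snapshot(users: int, free_percent: int, price_usd: int) -> dict:
    """Hypothetical helper: split users into free and paying, then total revenue."""
    free_users = users * free_percent // 100
    paying_users = users - free_users
    monthly_revenue = paying_users * price_usd
    return {
        "free_users": free_users,
        "paying_users": paying_users,
        "monthly_revenue_usd": monthly_revenue,
        # Subscription dollars available to cover each free user per month,
        # ignoring what it costs to serve the paying users themselves.
        "revenue_per_free_user_usd": monthly_revenue / free_users,
    }


snapshot = subscription_snapshot(MONTHLY_USERS, FREE_PERCENT, PLUS_PRICE_USD)
for key, value in snapshot.items():
    print(f"{key}: {value:,.2f}")
```

Under these assumptions, roughly 10 million subscribers would bring in about $200 million a month, or a little over $1 per free user. If serving a free user costs more than that, subscriptions alone cannot carry the free tier, which is the gap ads are meant to close.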

OpenAI had to make a choice: raise prices, limit free access, find new revenue streams, or accept that they'd never be profitable serving free users at that scale. Ads were the option that let them keep the free tier while generating revenue.

Demis Hassabis leaned into this vulnerability immediately. In an interview Tuesday at Davos, he suggested OpenAI moved to ads because they "feel they need to make more revenue." It was a subtle dig, but the implication was clear: OpenAI is desperate, and desperation leads to bad decisions.

There's truth in that critique. Ad-supported models can commoditize what was positioning itself as a premium service. Ads can feel antithetical to the kind of creative, thoughtful AI interaction people want. And once you start advertising, you've crossed a line toward becoming another free service funded by attention and targeting rather than actual value provided to users.

But Hassabis's critique also glossed over a harder reality: everyone needs to monetize eventually. Google DeepMind exists inside a company already built on ad monetization. Anthropic relies on enterprise customers and investor backing. None of them have figured out how to profitably serve a billion free users. OpenAI just decided to try a different path.

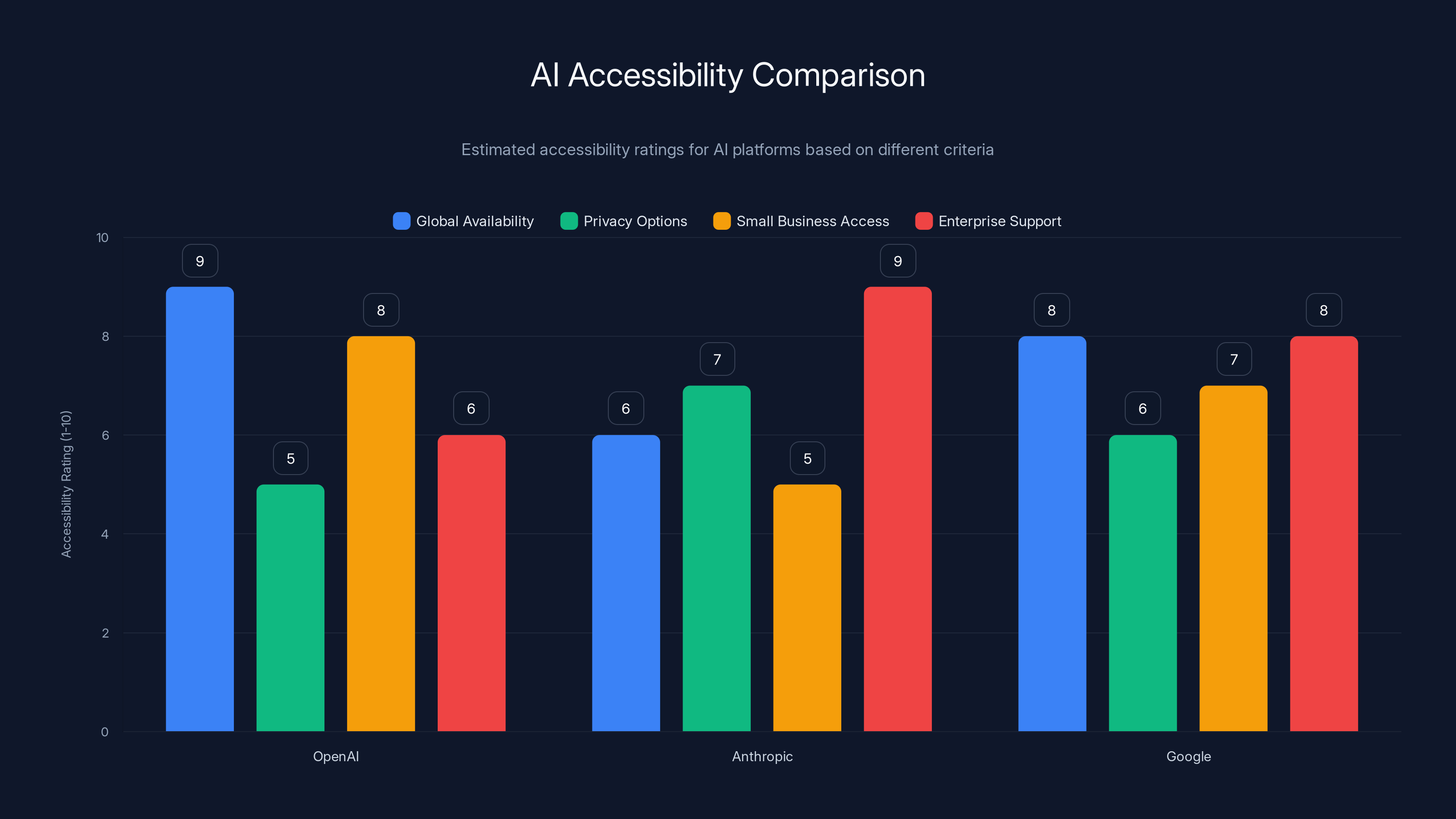

Estimated data shows OpenAI excels in global availability and small business access, while Anthropic leads in enterprise support. Privacy options remain a challenge for all.

Anthropic's Counterattack: The Ethics Play

If OpenAI threw the first punch at Davos, Anthropic came back swinging with different ammunition: morality.

Dario Amodei positioned Anthropic as the company that refuses to chase revenue at the expense of principles. "We don't need to monetize a billion free users because we're in some death race with some other large player," he said during an interview at the Wall Street Journal House.

It's a clever framing. It suggests that Anthropic is above the competitive scramble that drives OpenAI and Google DeepMind. It positions the company as the thoughtful, ethical choice in a race defined by desperation.

The problem? It's not entirely honest.

Anthropic absolutely wants to win. The company was founded by former OpenAI executives who left specifically because they believed OpenAI was making wrong choices. They want market dominance. They want influence. They want their vision of AI safety to be the industry standard. The only difference is that Anthropic doesn't need to monetize free users because it focuses almost entirely on enterprises and institutions that pay serious money.

That's not ethics. That's business model design. It's brilliant business model design, but it's not a moral position.

The real genius of Anthropic's Davos strategy wasn't just the ad critique. It was Amodei's promise to publish an essay on "the bad things" AI could bring, a dark counterpart to his optimistic "Machines of Loving Grace" essay from the previous year. It was his comparison of allowing Nvidia to sell GPUs to China to "selling nuclear weapons to North Korea."

These weren't just policy positions. They were signal flares that Anthropic had bigger concerns than quarterly revenue. That it was thinking about catastrophic risk. That it was the serious player in the room.

Chris Lehane's Political Playbook: How OpenAI Punches Back

Chris Lehane is not a typical tech executive. He's a political operator who spent years in the Clinton White House perfecting opposition research, crisis management, and the dark arts of political messaging. He earned the nickname "master of disaster" by helping politicians survive scandals that should have ended their careers. Later, he joined Airbnb as a policy chief and helped the company survive regulatory battles that threatened its existence.

Now he's at OpenAI, applying campaign tactics to the AI race.

Lehane didn't defend the ad strategy by explaining the economics. He attacked his critics. When asked about Hassabis's comment about ads, Lehane pointed out the irony: "You do have to pay for compute if you're going to give people access. I'm happy to have that conversation with the biggest online advertising platform in the world every day, seven days a week."

That's a shot at Google, which owns DeepMind and built its trillion-dollar empire on advertising. Coming from a company now doing the same thing, it's not a logical argument. But it's a political argument. It reframes the debate from "Is OpenAI desperate?" to "Isn't Google already doing this?"

Then Lehane went harder. He called Amodei's comments "elitist" and "undemocratic." The framing is extraordinary. He suggested that focusing on enterprise use cases—Anthropic's core business—means the company is making AI available only to the wealthy and powerful. Meanwhile, OpenAI, by putting ads in ChatGPT and keeping it free, is democratizing AI for everyone.

It's masterful spin. It's also unfair, because free doesn't mean accessible if you have to watch ads to use it, and enterprise focus doesn't mean elitism if it funds better safety research. But that's not the point in political campaigns. The point is which narrative wins.

Lehane's most revealing comment came when he positioned OpenAI as the "front-runner" and suggested that companies like Anthropic were fighting from second tier. "You'll often have someone who is trying to move up from the second tier say things that are provocative, because it creates a feedback loop," he said. "That gets you some attention."

Translate that from political operative speak: Lehane was saying that criticism from Anthropic is just noise from a company trying to grab market share. It's not serious business strategy, it's marketing. It's the complaint of a challenger losing to the incumbent.

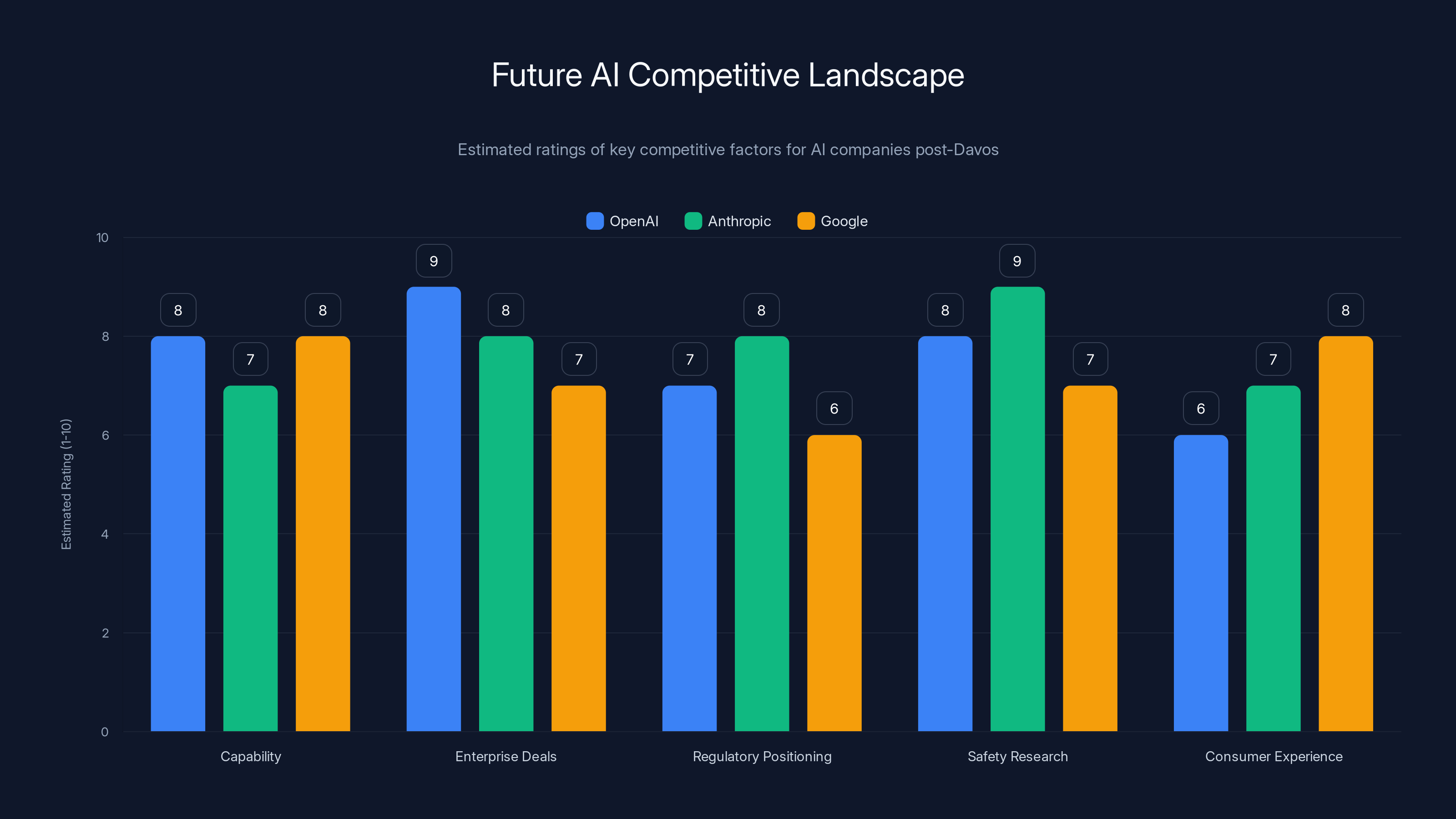

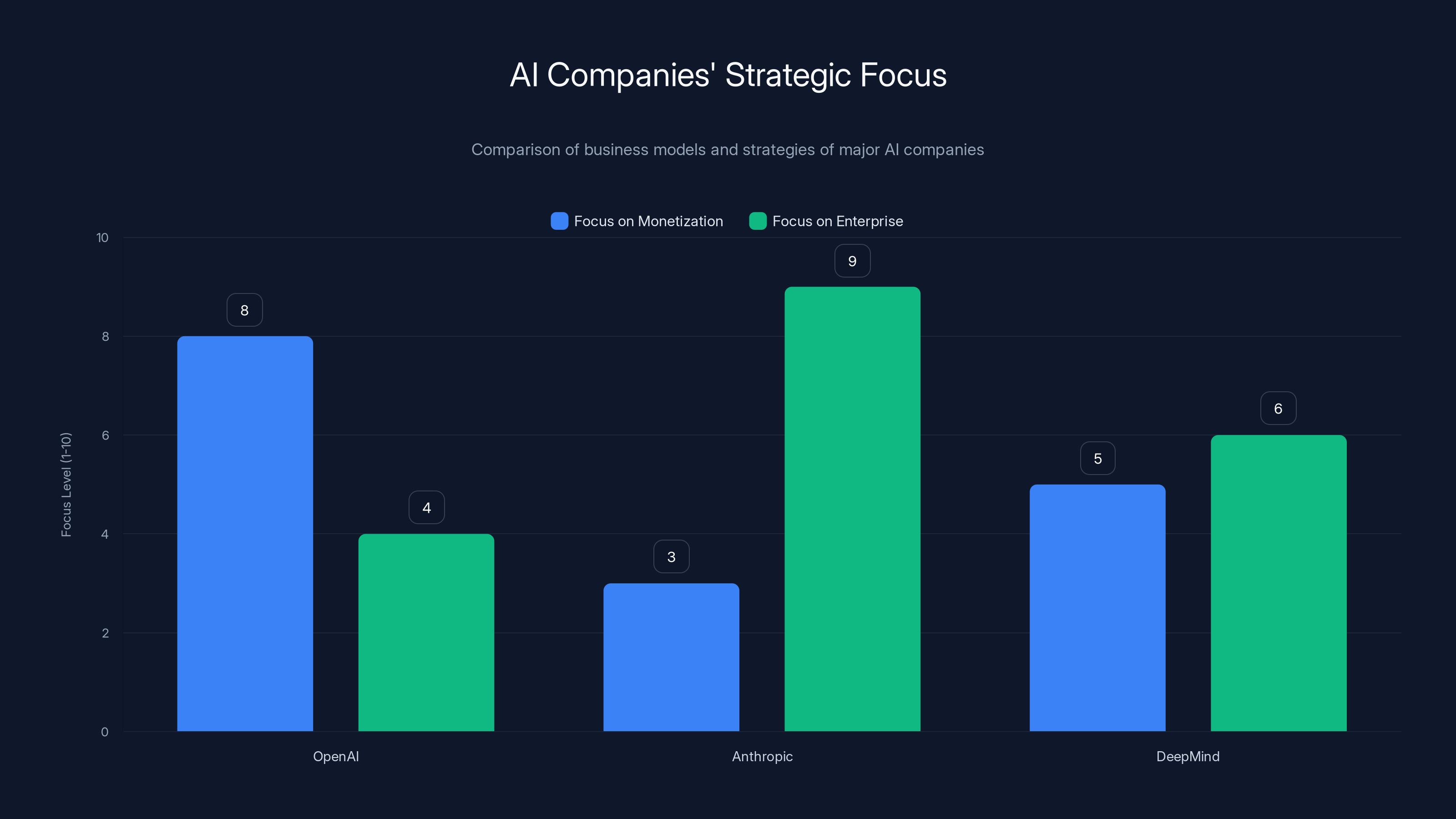

This bar chart estimates the competitive strengths of AI companies in key areas post-Davos. OpenAI leads in enterprise deals, while Anthropic excels in safety research. Estimated data.

The Reality Beyond the Rhetoric: What's Actually True

Here's where the Davos theatrics break down and the actual competition becomes clear: everyone's playing the same game, just with different PR strategies.

OpenAI is aggressively trying to capture Anthropic's enterprise AI business. Google is doing the same. The company with the most market share wins long-term. That's not evil or elitist or desperate. That's capitalism in the AI era.

OpenAI being "the most widely used chatbot" is a real advantage, but it's also one that's surprisingly brittle. User preferences shift faster in software than in most industries. Anthropic's Claude has made significant gains in reasoning tasks and in customer satisfaction metrics over the past year. Google's Gemini is free and integrated into every Google service. None of these positions are unassailable.

The ad strategy is neither pure democratic virtue nor pure financial desperation. It has elements of both. It's a choice to monetize without sacrificing free access. It's a tradeoff that makes sense for OpenAI's position in the market. Whether it's the right choice depends on whether users tolerate ads in their AI. We'll find out.

When Lehane said Anthropic comes "from a background that focuses almost exclusively on enterprise use cases," he was describing a fundamental business model difference. Anthropic makes money from companies. OpenAI wants to make money from everyone. Those are different business strategies. One isn't inherently better than the other. They're different bets on how AI monetization will work.

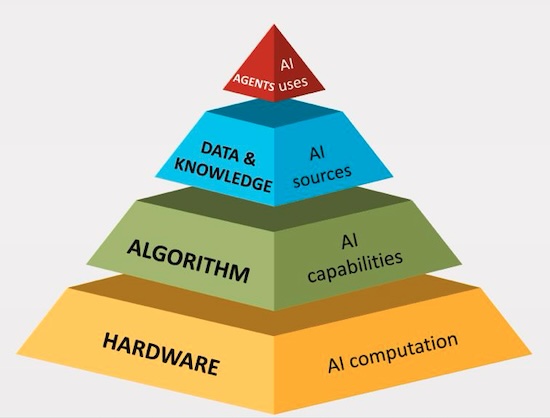

The Role of Compute Costs: The Hidden Driver of Everything

Underneath all the rhetoric about accessibility, safety, and democracy, there's a single force shaping every decision these companies make: compute costs.

Running a large language model is expensive. Genuinely expensive. Training costs are staggering. OpenAI's latest models cost tens of millions of dollars to train. But even inference—running the model to answer user queries—costs real money.

Serving 200 million users for free means burning cash at a rate that requires either infinite funding or a path to profitability. Those are the only two options. There is no third option where you serve hundreds of millions of free users indefinitely without monetization or outside funding.

Anthropic solves this by focusing on enterprise customers who pay serious money per user. That's sustainable. Google DeepMind solves it by being part of the world's most profitable advertising company. OpenAI is trying to solve it with a combination of paid tiers ($20/month ChatGPT Plus), enterprise deals, and now advertising.

Each strategy has a logic behind it. None of them are morally pure. They're all about finding a sustainable way to run trillion-parameter models at scale.

When Hassabis criticized ads, he was really saying: "Our business model is better because Google already has the advertising infrastructure." When Amodei criticized the move, he was saying: "Our business model is better because enterprises pay more per user than ads ever will." When Lehane defended ads, he was saying: "Our model is better because we're not locking AI behind enterprise paywalls."

They're all correct within their own framework. But they're also all self-serving. That's capitalism.

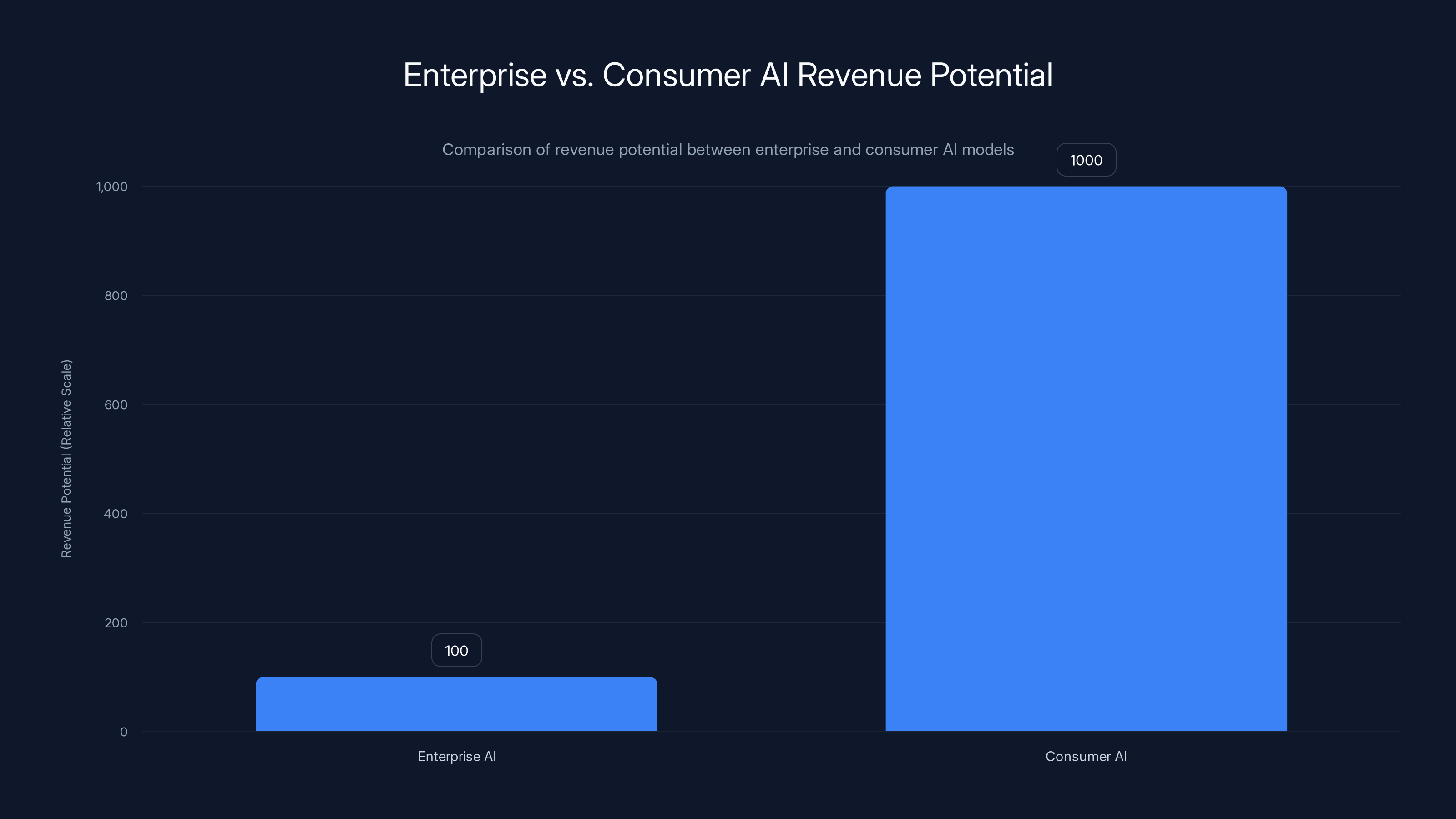

Enterprise AI commands higher per-unit pricing, while Consumer AI can reach a broader audience, offering immense revenue potential if effectively monetized. Estimated data based on typical industry multipliers.

Positioning and Market Dynamics: The Three-Way Race

The Davos confrontation revealed something important about how the AI market is actually structured. It's not a two-player game between OpenAI and Anthropic. It's not Google versus everyone else. It's a three-way competition with very different positions.

OpenAI's Position: First to reach mainstream adoption. First to cross the billion-user threshold in casual awareness. Strongest brand recognition among regular people. But now facing the challenge of monetizing success without alienating the users who made it successful. Also facing new competition from Anthropic in enterprise and from Google's integration advantage.

Anthropic's Position: The serious alternative for enterprises and institutions that worry about safety and alignment. Higher customer satisfaction metrics. Better performance on reasoning tasks. But smaller user base and lower mainstream cultural penetration. The challenge is growing enterprise share without sacrificing margins. The advantage is that they don't have to compete on consumer attention.

Google DeepMind's Position: Access to unlimited compute and distribution through Google's existing services. The ability to integrate AI into search, Gmail, Workspace, and everywhere else. But also the burden of legacy systems and the risk that breaking Google Search to promote AI feels threatening to core revenue. The challenge is moving fast enough to catch up. The advantage is they'll never be squeezed on monetization because advertising already works.

Understanding these positions explains why the Davos rhetoric took the shape it did. OpenAI is defending a position of strength but facing resource pressures. Anthropic is attacking from a position of technical credibility but relative market weakness. Google is trying to prove it's not left behind.

The Media's Role: Generating Heat and Light

I should note that I helped start this news cycle. I asked Hassabis about the ads. I watched Amodei's response. I had breakfast with Lehane. The questions journalists ask shape the answers executives give, which shapes what becomes a story.

That's not a confession of bias. It's an observation about how information flows. Executives at Davos are prepared for questions. They have talking points. They have strategies. But they respond to the frame of the question.

If I ask "Why did OpenAI move to ads?", the answer is different than if I ask "Is OpenAI desperate for revenue?" Both are legitimate questions. But they point the answer in different directions.

The coverage of Davos amplified the conflict because conflict is what people read. Harmony doesn't generate headlines. Three AI labs saying they're all trying hard and have different strategies is boring. Three AI labs publicly attacking each other is a story.

That's not necessarily wrong. Real competition does involve real critique. But it's worth understanding that the Davos narrative wasn't inevitable. It was shaped by questions asked, by which quotes got pulled out, by what journalists decided was newsworthy.

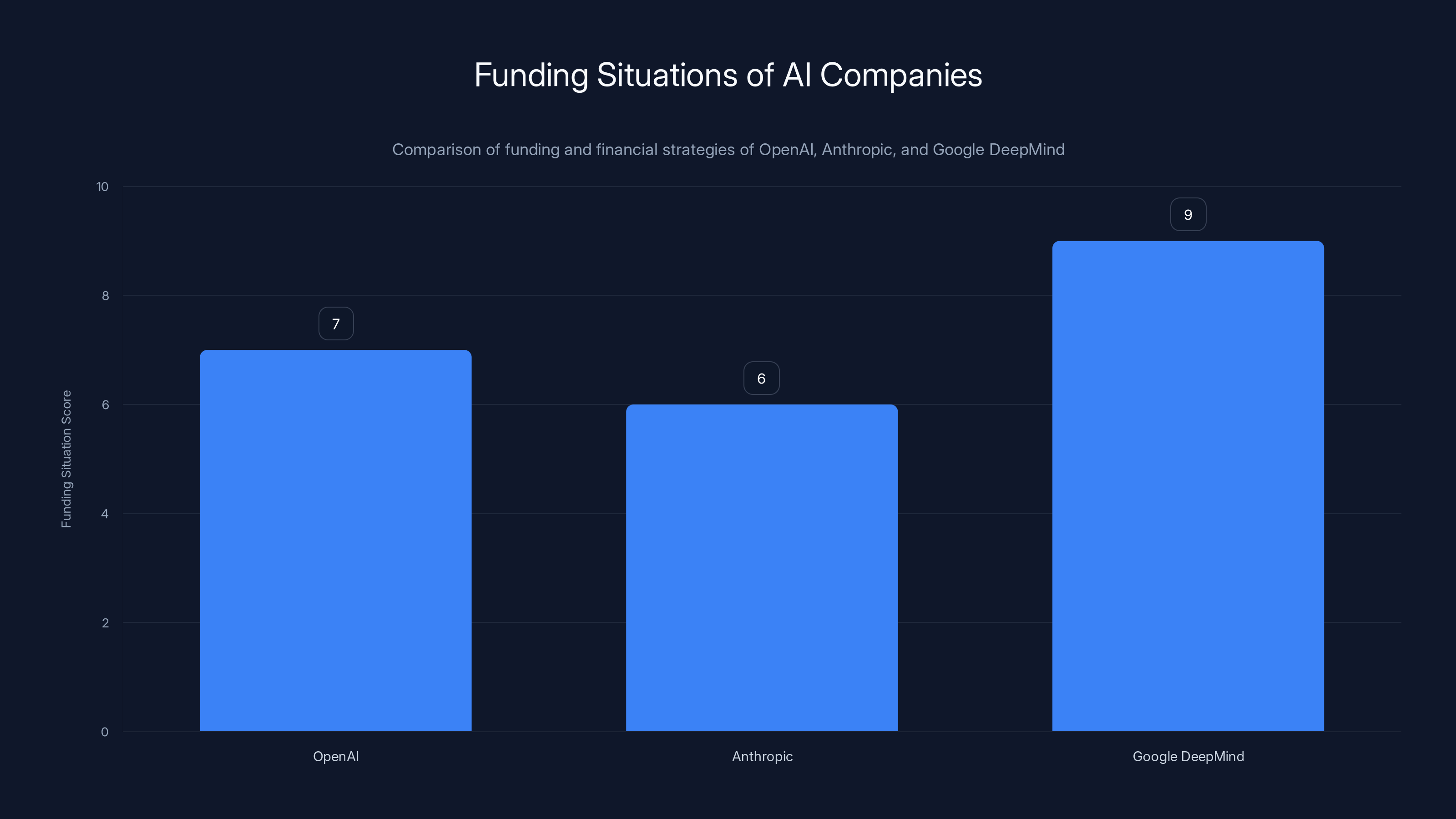

Google DeepMind leads with the most stable funding situation due to internal capital. OpenAI's revenue stream boosts its independence, while Anthropic's large funding round buys time but not long-term stability. (Estimated data)

Safety and Alignment: The Unspoken Stakes

Beneath the business model arguments and the political theater, there's something more fundamental happening at these companies: a genuine disagreement about how AI safety and alignment should work.

Anthropic was founded by former OpenAI executives who believed the company was moving too fast on deployment and not moving fast enough on safety research. That's not just corporate positioning. That's a real philosophical disagreement about what the right speed is and what the right priorities are.

When Amodei talked about "the bad things" AI could bring, he wasn't just taking a political stance. He was signaling that Anthropic takes catastrophic risk seriously in a way he thinks other labs don't. That's his genuine belief, not just marketing.

The question is whether it's true. Whether OpenAI is actually moving recklessly. Whether Anthropic's approach is actually safer. Those are empirical questions that probably won't be answered definitively until we have much more powerful AI systems operating at scale.

Right now, it's a matter of faith and strategy. Which is itself an interesting problem.

The Broader Competition: Enterprise vs. Consumer

One of the most revealing things about the Davos confrontation is that it exposed the fundamental strategic choice facing the AI industry: do you build for enterprises or consumers?

Enterprise AI is lucrative but narrow. You sell to big companies that have deep pockets and pay per-seat or per-use licensing. Your customer base is smaller but your revenue per customer is much higher. Your margins are better. Your support burden is more structured.

Consumer AI is enormous but commoditized. You try to reach billions of people, but most of them don't pay you anything. You need either a massive scale advantage or a sustainable business model that converts that scale into revenue. Ads are one path. Subscription is another. Freemium is a third.
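The tradeoff between the two models comes down to a simple product: accounts reached, times the share that pays, times the price. Here is a minimal sketch of that comparison. The consumer inputs reuse this article's ChatGPT estimates; the enterprise inputs (one million seats at $60 a month) are invented placeholders, not figures from any company, and the function name is hypothetical.

```python
def segment_revenue(accounts: int, paying_percent: int, monthly_price_usd: int):
    """Return (total monthly revenue, revenue per account reached) for a segment."""
    paying_accounts = accounts * paying_percent // 100
    total = paying_accounts * monthly_price_usd
    return total, total / accounts


# Consumer inputs: the article's estimates for ChatGPT.
consumer_total, consumer_per_account = segment_revenue(200_000_000, 5, 20)

# Enterprise inputs: HYPOTHETICAL placeholders chosen only for illustration.
enterprise_total, enterprise_per_account = segment_revenue(1_000_000, 100, 60)

print(f"consumer:   ${consumer_total:,} total, ${consumer_per_account:.2f} per account")
print(f"enterprise: ${enterprise_total:,} total, ${enterprise_per_account:.2f} per account")
```

With these particular inputs the enterprise segment earns far more per account while the consumer segment earns more in total. Change the placeholder seat count or price and the ranking can flip, which is why both bets remain defensible.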

Anthropic chose enterprise. OpenAI is trying to do both. Google is leveraging both.

The Davos fight was really about which strategy is smarter. Lehane, representing OpenAI, argued that serving consumers broadly is better for society. Amodei, representing Anthropic, argued that serving enterprises responsibly is better for everyone. They can both be true.

The market will eventually decide which approach scales better and generates more value. That's how capitalism works.

OpenAI focuses heavily on monetization through ads, while Anthropic prioritizes enterprise clients. DeepMind balances both strategies. (Estimated data)

Regulatory Pressure: The Hidden Context Everyone's Thinking About

None of these executives mentioned regulation directly at Davos, but it's clearly influencing their strategy and their rhetoric.

Governments around the world are starting to regulate AI. The EU has the AI Act. The US is developing frameworks. There's talk of licensing, of requiring safety audits, of restricting capabilities. That context changes how executives think about positioning.

When Amodei emphasizes safety and talks about catastrophic risks, part of what he's doing is positioning Anthropic as the company regulators want to work with. When Lehane emphasizes accessibility and democratic values, part of what he's doing is positioning OpenAI as the company that's serving the public good.

These aren't cynical calculations. Executives genuinely believe their own arguments. But the regulatory context shapes what beliefs feel important to emphasize.

Companies that win at the regulation stage will have massive advantages. They'll be the ones governments trust to deploy new capabilities. They'll be the ones whose practices become industry standards. They'll be the ones who get first-mover advantage in regulated markets.

Davos was partly about technology and business. It was also about positioning for the regulatory battles that are coming.

The Money Question: How Much Does Funding Matter?

Underlying all of this is a question that nobody really wants to ask directly: which company actually has the best funding situation?

OpenAI has massive revenue from ChatGPT Plus and enterprise deals, but also massive compute costs and the pressure to keep raising capital. Anthropic has raised over $5 billion but isn't yet revenue-positive. Google has infinite capital internally but the challenge of deploying it productively without cannibalizing core business.

The company with the best funding situation is usually the company that can afford to take the long view. That company can outbid competitors for talent. That company can invest in longer-term research. That company can survive if a product fails.

Right now, Google has that advantage. But OpenAI's revenue stream is making it more independent. Anthropic's $5 billion funding round doesn't guarantee long-term viability but it does buy time.

The ad strategy that triggered so much criticism? It's partly about making OpenAI less dependent on outside funding. It's about long-term independence.

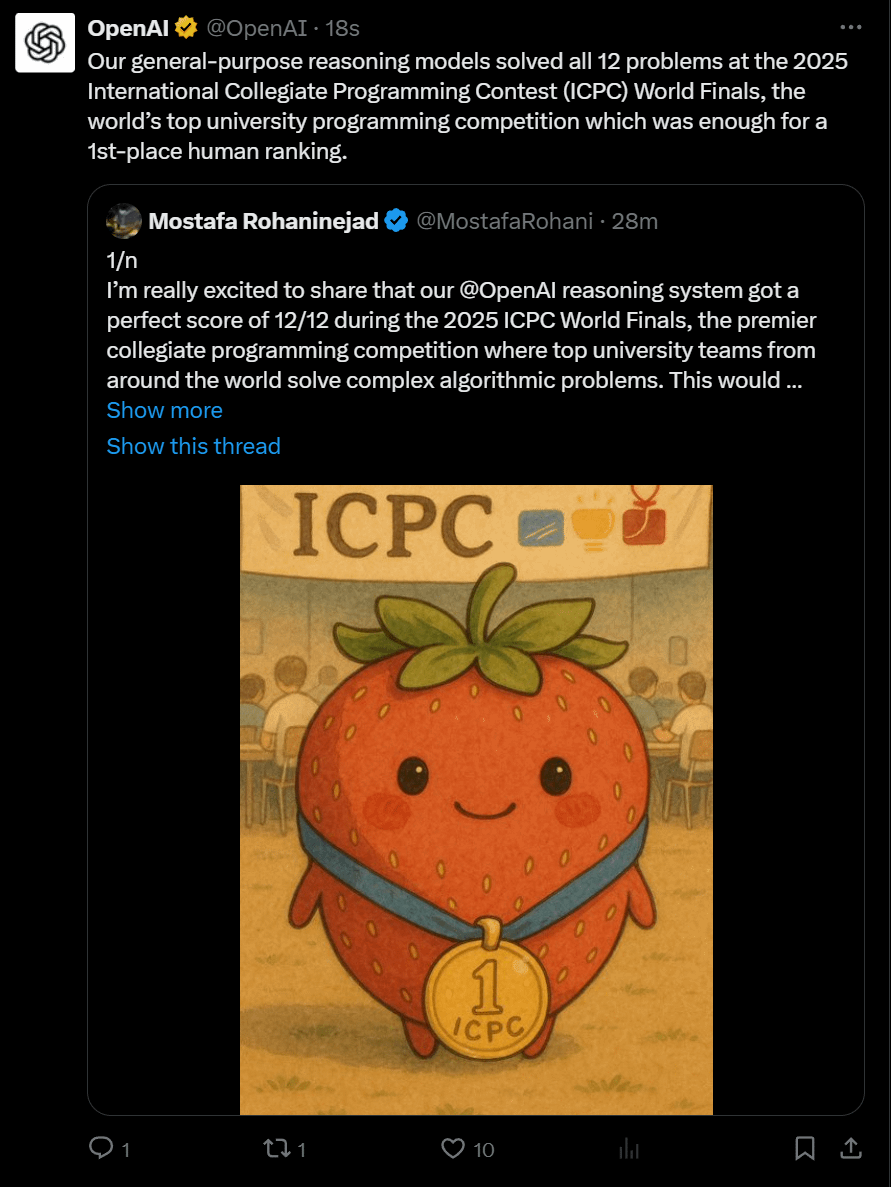

Who's Actually Winning: The Current Scoreboard

If we're keeping score after Davos, where does everyone stand?

OpenAI: Won on narrative control in some circles because Lehane was extremely effective at playing defense and turning criticism into attacks on critics. Lost on having to explain why they're taking ads. Maintains advantage on user adoption and consumer brand recognition.

Anthropic: Won on the moral high ground and on credibility with safety-conscious investors and enterprises. Lost on the reality that enterprise-focused strategy is smaller than consumer-focused strategy. Maintains advantage on customer satisfaction and reasoning task performance.

Google: Didn't face much criticism because Hassabis's shots at OpenAI were defensive rather than aggressive. But the fact that they're even talking publicly about their AI strategy suggests they feel like they're catching up. Maintains advantage in compute resources and distribution.

The real winner of Davos might be the media, which got a great story out of it. The real loser is the user who now has to think about which AI company's values most closely align with their own, when maybe they just wanted a chatbot that works well.

The Accessibility Question: Who's Really Making AI Available?

One of the most interesting tensions in the Davos debate was about accessibility. OpenAI argued that free ChatGPT makes AI accessible to everyone and implied that Anthropic's enterprise focus was elitist.

But what does accessibility actually mean?

If accessibility means "available to anyone with an internet connection and a web browser," then OpenAI wins hands down. ChatGPT is free and available globally (with some geographic restrictions).

If accessibility means "can be used without ads and corporate tracking," then neither company wins because OpenAI is adding ads and both companies collect usage data. Anthropic offers Claude with privacy options, but enterprise customers aren't usually the ones paying retail prices.

If accessibility means "available to small businesses and individual developers," then OpenAI has better APIs and pricing. If it means "available to large organizations that need reliability and support," then Anthropic has advantages.

The Davos rhetoric glossed over the fact that accessibility is complex. Different users need different things. OpenAI is accessible to casual users. Anthropic is accessible to enterprises. Google is accessible through search and Gmail.

None of them are universally accessible. All of them are accessible to somebody.

Future Battles: What Comes After Davos

Davos was a moment, but the competition is just getting started. Here's what to watch for next:

Capability Race: These companies will keep publishing benchmarks showing they're faster, smarter, and more capable than competitors. Each claim will be technically true and selectively presented.

Enterprise Battlefield: OpenAI will increasingly compete for enterprise deals that traditionally went to Anthropic. This is where real market share gets decided, not at Davos.

Regulatory Positioning: As governments start regulating AI, companies will position themselves as the most responsible, most trustworthy, most willing to work with regulators. This will matter more than capability alone.

Safety Research: Anthropic will keep publishing papers on alignment and safety. OpenAI will do the same. Google will try to catch up. The company that dominates technical safety literature will win credibility.

Consumer Experience: Whether ChatGPT's ad strategy actually alienates users or becomes normalized will be crucial. If users tolerate ads, the strategy works. If they don't, OpenAI will need a different approach.

New Competitors: The real threat might come from players outside this three-way fight, such as Microsoft's Copilot, Perplexity's search tool, or xAI's Grok. The market is consolidating toward a few major players, but surprises are still possible.

The Broader Lesson: Why Davos Matters

Davos matters not because one company won a debate. Davos matters because it's the moment when the AI industry moved from stealth-mode competition to open competition.

For years, OpenAI, Anthropic, and Google competed mainly through capability announcements and technical papers. They were gentlemanly competitors.

Davos was the moment they decided the stakes were high enough to compete on narrative and positioning. It was the moment they realized that in a race this important, controlling the story matters as much as shipping the product.

That's normal in competitive industries, but it's new in AI. It means the industry is maturing. It means the real battles are now about market share and customer loyalty, not just technical capability.

It also means the public conversation is going to get messier. These companies will critique each other. They'll make selective arguments. They'll emphasize different values depending on what audience they're trying to reach.

That's not corrupt. That's capitalism. But it's worth understanding it as such.

What Users Should Do With This Information

If you're trying to decide which AI tool to use or which company to trust with your data or business, here's what actually matters:

1. Match the tool to your use case. ChatGPT is good for casual use. Claude is good for longer documents and reasoning. Google Gemini is good for search integration. Pick based on what you need, not on who won Davos.

2. Pay attention to privacy. How does the company handle your data? Do they train on your inputs? Can you get a data export? These practical questions matter more than moral positioning.

3. Monitor track records. How has the company responded when they made mistakes? When they discovered they had biased outputs, did they fix it? When security issues emerged, how did they handle it? Track record predicts future behavior better than promises.

4. Diversify. Don't put all your eggs in one AI company's basket. Use different tools for different purposes. This reduces risk and prevents lock-in.

5. Ignore most of the hype. Both the hype about how amazing these tools are and the hype about how dangerous they are are overdone. These are tools. They're very good tools. They're also limited tools. Reality is always more nuanced than marketing or fear-mongering.

The Deeper Pattern: How Reputation Wars Shape Industries

What happened at Davos isn't unique to AI. This is how industries mature. And understanding the pattern helps you see what's really happening.

When an industry is brand new, the goal is just to prove it works. Everyone competes on capability. There are no reputation wars because there's not enough customer base to fight over yet.

When an industry matures and there's real money at stake, companies shift strategy. They stop competing purely on features and start competing on narrative. They emphasize the values they think their target customers care about. They criticize competitors' approaches. They try to define what "good" means in that industry.

That's what Davos was. OpenAI, Anthropic, and Google weren't just arguing about ad strategies and enterprise business models. They were arguing about what AI should be. They were trying to define the industry standard. They were setting a framework that would determine the competitive landscape for years to come.

The company that wins the narrative battle doesn't always win the market battle. But it gets to write the rules that everyone else has to play by.

In that sense, Davos was more important than a single product launch or technical breakthrough. It was about defining what matters in AI.

FAQ

What exactly happened at Davos between these AI companies?

Executives from the three major AI labs used their appearances at the World Economic Forum to publicly critique each other's strategies. Demis Hassabis questioned OpenAI's move to advertising in ChatGPT, Dario Amodei said Anthropic had no need to monetize a billion free users in a "death race," and OpenAI's policy head Chris Lehane fired back by characterizing Anthropic's enterprise focus as elitist and defending monetization as necessary for sustainability and accessibility.

Why did OpenAI decide to add ads to ChatGPT?

OpenAI introduced ads because serving 200 million free monthly users costs hundreds of millions of dollars annually in compute expenses, and the company needed a way to monetize that usage without eliminating the free tier or raising subscription prices further. The free tier generates enormous user adoption and brand value but doesn't generate revenue, creating a fundamental economics problem that ads help solve, even though it changes the user experience.

How does Anthropic's business model compare to OpenAI's?

Anthropic focuses almost exclusively on enterprise and institutional customers who pay significant amounts per user, rather than trying to monetize billions of free users. This approach generates higher revenue per customer but serves a smaller total market, and it doesn't require advertising or aggressive monetization tactics, allowing Anthropic to position itself as the ethical alternative focused on safety and alignment rather than rapid monetization.

How do compute costs impact these companies' strategies?

Compute costs for serving AI models are substantial enough that they essentially force every company to either have external funding, a clear path to profitability, or both. This explains why OpenAI needs to monetize free users through ads or subscriptions, why Anthropic focuses on high-value enterprise customers, and why Google DeepMind leverages the broader Google advertising business. No company can sustainably serve hundreds of millions of free users at scale without solving the compute cost problem.

What does "positioning" mean in the context of Davos?

Positioning refers to how each company tries to define itself in relation to competitors and what values matter most in the industry. OpenAI positioned itself as the company making AI accessible to everyone. Anthropic positioned itself as the company taking safety seriously. Google positioned itself as the well-resourced incumbent. These positions are partially true but also partially strategic choices about how to compete.

Who actually won the Davos debate?

Different audiences would judge different winners. OpenAI won on communication effectiveness because Chris Lehane successfully reframed the debate and turned criticism into attacks on critics. Anthropic won on moral credibility by taking the high ground on safety and ethics. Google won by avoiding direct confrontation. But the real winner will be determined by market outcomes over the next 18-24 months, not by rhetoric at conferences.

Should I care about what these companies are saying?

You should care enough to understand the landscape and recognize that competitive positioning is happening, but not so much that you let corporate messaging drive your actual usage decisions. Use the tool that works best for your specific needs rather than the tool whose company won a rhetoric battle at a conference. Track records, privacy policies, and actual user experience matter more than what executives say at Davos.

Key Takeaways

- Davos marked the moment when AI competition shifted from technical capability battles to reputation and narrative warfare

- Compute costs are the hidden driver forcing each company toward different monetization strategies and business models

- OpenAI's ad strategy is financially necessary but risky; Anthropic's enterprise focus is profitable but limited; Google leverages existing infrastructure

- Chris Lehane deployed political campaign tactics to defend OpenAI, showing how traditional power players are reshaping AI competition

- Market outcomes will ultimately determine winners more than Davos rhetoric, but narrative positioning shapes regulatory and customer perception
