Google Play's AI-Powered App Security System: How 1.75 Million Malicious Apps Got Blocked in 2025

Google just dropped some impressive numbers. In 2025, the company used AI-powered safety systems to block 1.75 million policy-violating apps from Google Play. That's down from 2.36 million blocked apps in 2024. And here's the thing: the lower number isn't a sign of failure. Google reads it as evidence that AI-powered deterrence is working, with bad actors increasingly discouraged from even trying to publish malicious apps in the first place.

This shift matters more than you might think. The fact that fewer apps are reaching the store in the first place means Google's multi-layer AI protections are catching problems earlier, at the submission stage. Developers trying to slip past safeguards are facing AI systems that learn from patterns, adapt to new threats, and work alongside human reviewers to spot malicious intent faster than ever before.

But there's a much bigger story here. Google Play reaches more than 3.5 billion Android devices worldwide, and Android runs on roughly 70% of the world's smartphones. When Google's security systems fail, even in isolated cases, the impact can ripple across billions of people. So understanding how these AI defenses work, what they catch, and where gaps might still exist isn't just tech trivia. It's about understanding the infrastructure that protects your phone.

We're going to walk through exactly how Google uses AI to identify bad apps, what the data really tells us about app security in 2025, and what the company still needs to address. Because while the headline numbers look great, there's nuance beneath the surface—and it's worth understanding.

TL;DR

- Google blocked 1.75 million bad apps in 2025: Down from 2.36 million in 2024, showing that AI-powered deterrence is preventing malicious submissions before they reach the store

- AI runs 10,000+ safety checks per app: Each submission goes through automated and manual review layers, with generative AI helping reviewers spot malicious patterns

- Review bombing and spam prevention improved significantly: Google blocked 160 million spam ratings and stopped 255,000 apps from accessing sensitive user data

- Play Protect detected 27+ million malicious apps: Google's Android defense system identified over 27 million new malicious apps, either warning users or preventing installation

- Developer accountability increased: Google banned 80,000 developer accounts and implemented stricter verification and pre-review requirements

- Bottom Line: AI has fundamentally changed how Google Play operates—shifting from reactive removal to proactive deterrence

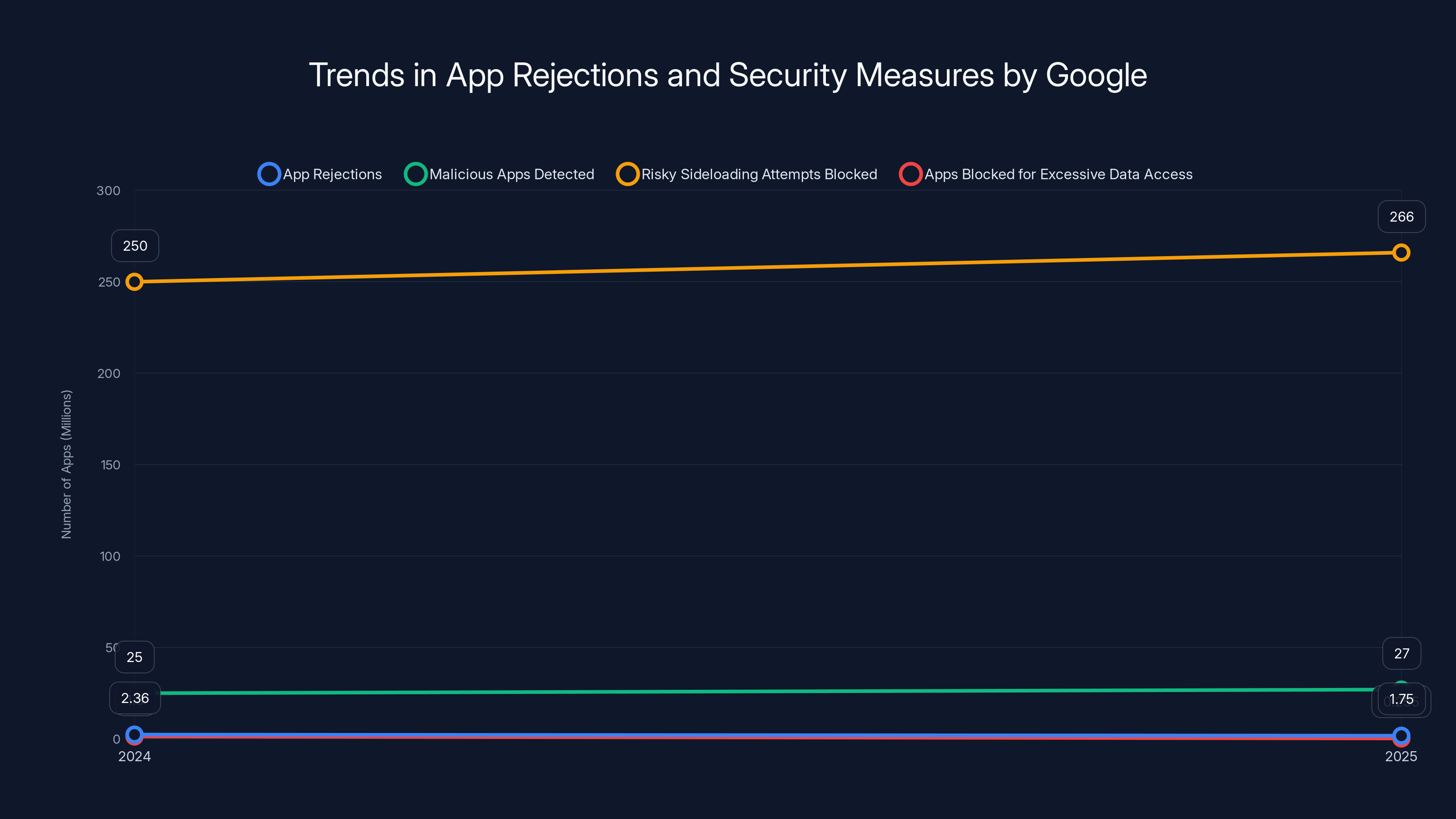

In 2025, Google blocked 1.75 million policy-violating apps, down from 2.36 million in 2024, while Play Protect detected 27 million malicious apps and blocked 266 million risky sideloading attempts. Apps stopped from gaining excessive data access fell from 1.3 million to 255,000. Estimated data.

The Architecture of Google Play's AI Defense System

Google's approach to app security isn't a single AI system. It's more like a layered fortress, with different AI models handling different threats at different points in the pipeline.

Every app submitted to Google Play goes through what the company calls its "multi-layer protections". That sounds vague, so let's break down what actually happens. When a developer uploads an app, it doesn't immediately go live. Instead, it enters a gauntlet of automated checks. Google runs more than 10,000 safety checks on every single submission. That's not hyperbole—that's their actual number.

These checks happen in parallel. Some are looking for known malware signatures. Others are analyzing the app's code structure, looking for patterns that suggest hidden functionality. Others are examining permission requests—if an app is asking for permission to access your GPS, your contacts, and your payment information all at once, that's a pattern worth flagging.
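To picture what "checks in parallel" might look like, here is a minimal Python sketch. Everything in it is an assumption made for illustration: the three check functions, the app fields (`sha256`, `permissions`, `obfuscation_ratio`), the thresholds, and the shortened permission names (`PAYMENT_INFO` is not a real Android permission). It is not Google's pipeline, just the general shape of running independent checks side by side and collecting whatever they flag.

```python
from concurrent.futures import ThreadPoolExecutor

# Hypothetical checks. Each returns a finding string, or None if nothing looks wrong.
def check_known_signature(app):
    known_bad_hashes = {"deadbeef"}  # placeholder values, not real signatures
    return "known malware signature" if app["sha256"] in known_bad_hashes else None

def check_permission_combo(app):
    risky = {"ACCESS_FINE_LOCATION", "READ_CONTACTS", "PAYMENT_INFO"}
    if risky <= set(app["permissions"]):
        return "requests location, contacts and payment data together"
    return None

def check_obfuscation(app):
    return "heavily obfuscated code" if app["obfuscation_ratio"] > 0.8 else None

CHECKS = [check_known_signature, check_permission_combo, check_obfuscation]

def run_checks(app):
    """Run every check concurrently and keep only the findings."""
    with ThreadPoolExecutor() as pool:
        results = list(pool.map(lambda check: check(app), CHECKS))
    return [finding for finding in results if finding is not None]

app = {
    "sha256": "abc123",
    "permissions": ["ACCESS_FINE_LOCATION", "READ_CONTACTS", "PAYMENT_INFO"],
    "obfuscation_ratio": 0.9,
}
findings = run_checks(app)
print(findings)
```

Because each check only reads the submission, the checks don't depend on one another, which is what makes running them in parallel straightforward.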

Then there's the generative AI layer. Google integrated newer generative AI models into its review process specifically to help human reviewers. What that actually means is the AI is trained to identify suspicious patterns that humans might miss, and surface them in a way that makes the human reviewer's job faster and more accurate.

The clever part is that this isn't just about detecting known bad patterns. AI models trained on enough data start to recognize novel malicious patterns—things the company has never seen before. A new type of permission abuse. A new way of hiding code in legitimate-looking libraries. A new social engineering tactic embedded in the app description. When the AI spots something unusual, it raises the alert level, and human reviewers jump in.

What's particularly interesting is that this system now includes a deterrent effect. When developers see that the barrier to entry is extremely high, and that a malicious app is likely to be caught almost immediately, many simply don't bother submitting at all. That's Google's explanation for why the numbers went down. On this reading, Google isn't catching fewer bad apps because bad actors got smarter. Fewer bad actors are trying in the first place.

How Google Identifies Malicious Patterns Using Machine Learning

Machine learning's power in app security comes from scale. Google has access to billions of data points about app behavior, user complaints, crash reports, and malware samples. That's data no independent security company can match.

The machine learning models work in different ways depending on what they're looking for. Permission analysis is one clear example. An AI model trained on millions of legitimate apps knows what a typical photography app asks for permissions-wise. It knows that a calculator app doesn't need access to your location or contacts. When a new app comes in and asks for unusual permission combinations, the model can flag it as suspicious.
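Here's a rough sketch of that idea in Python. It is a deliberately simple stand-in for a trained model: it just measures how often known-good apps in a category request each permission and flags anything rare. The sample data, the `min_rate` cutoff, and the shortened permission names are all assumptions for illustration.

```python
from collections import Counter

# Hypothetical training data: permissions requested by known-good apps, by category.
KNOWN_GOOD = {
    "calculator": [set(), {"VIBRATE"}, set(), {"VIBRATE"}],
    "photography": [
        {"CAMERA", "READ_MEDIA_IMAGES"},
        {"CAMERA"},
        {"CAMERA", "ACCESS_FINE_LOCATION"},
        {"CAMERA", "READ_MEDIA_IMAGES"},
    ],
}

def permission_rates(apps):
    """Fraction of apps in a category that request each permission."""
    counts = Counter(perm for perms in apps for perm in perms)
    return {perm: n / len(apps) for perm, n in counts.items()}

def unusual_permissions(category, requested, min_rate=0.05):
    """Return requested permissions that fewer than min_rate of peers ask for."""
    rates = permission_rates(KNOWN_GOOD[category])
    return sorted(p for p in requested if rates.get(p, 0.0) < min_rate)

flags = unusual_permissions(
    "calculator", {"VIBRATE", "ACCESS_FINE_LOCATION", "READ_CONTACTS"}
)
print(flags)  # ['ACCESS_FINE_LOCATION', 'READ_CONTACTS']
```

A real model would weigh combinations of permissions and many other signals, but the baseline-versus-outlier logic is the same.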

Code analysis is another layer. Machine learning models can analyze an app's source code or compiled binary and identify obfuscated code—code that's intentionally made hard to read, which is a major red flag. They can identify if an app is trying to hide functionality, or if it includes known malicious libraries.

Behavior analysis happens post-launch too. Google Play Protect, the company's runtime defense system installed on Android devices, continuously monitors how apps actually behave. If an app that claimed to be a note-taking utility suddenly starts trying to exfiltrate contact data, the system detects that deviation and pulls the app from the store.

The generative AI component is newer and more ambitious. Instead of teaching the AI to recognize specific patterns, generative models are trained to understand context and intent. They can read app descriptions and identify suspicious claims. They can analyze marketing tactics and spot social engineering. They can even flag apps that are intentionally designed to confuse or deceive users through their UI design.

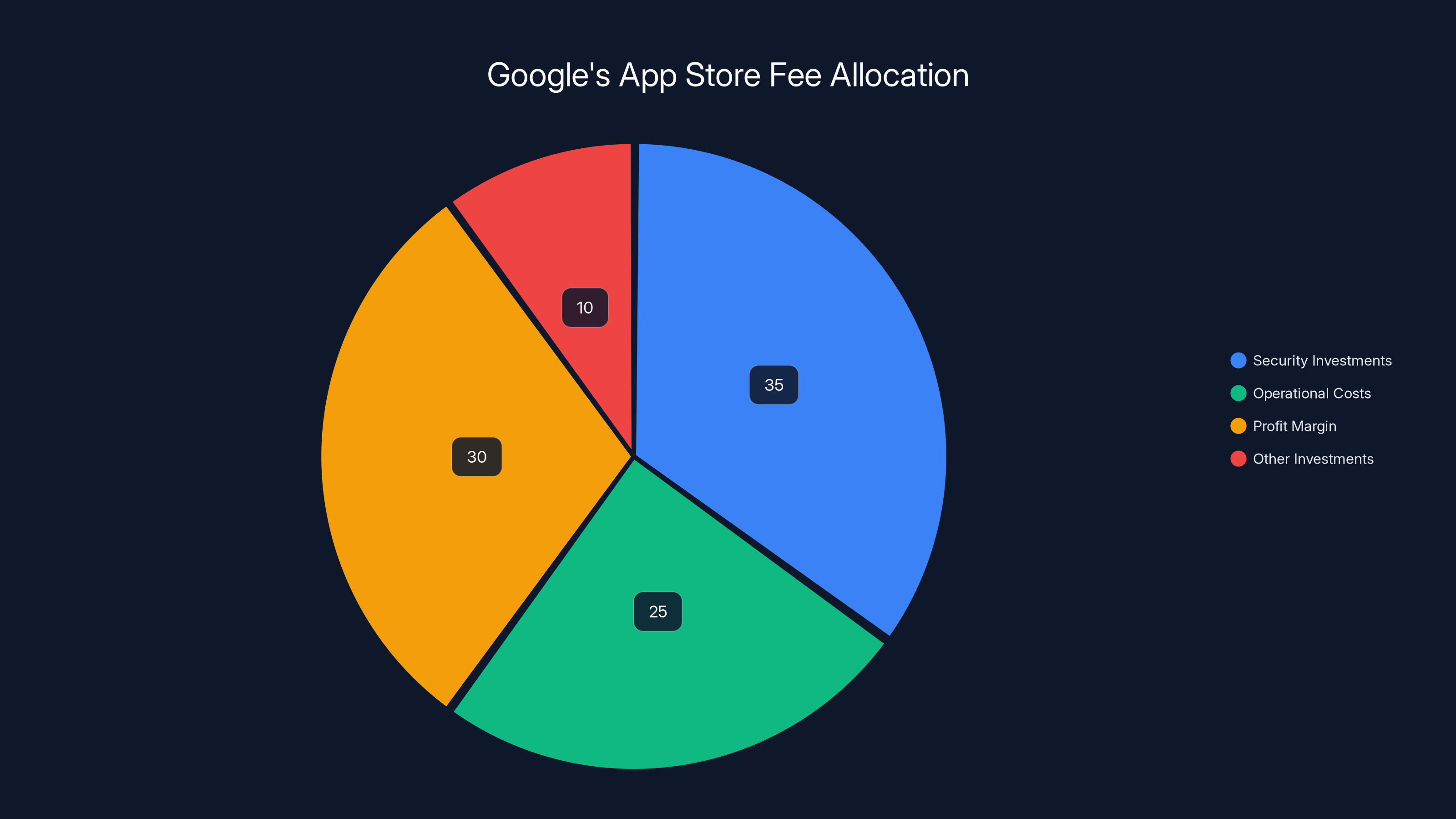

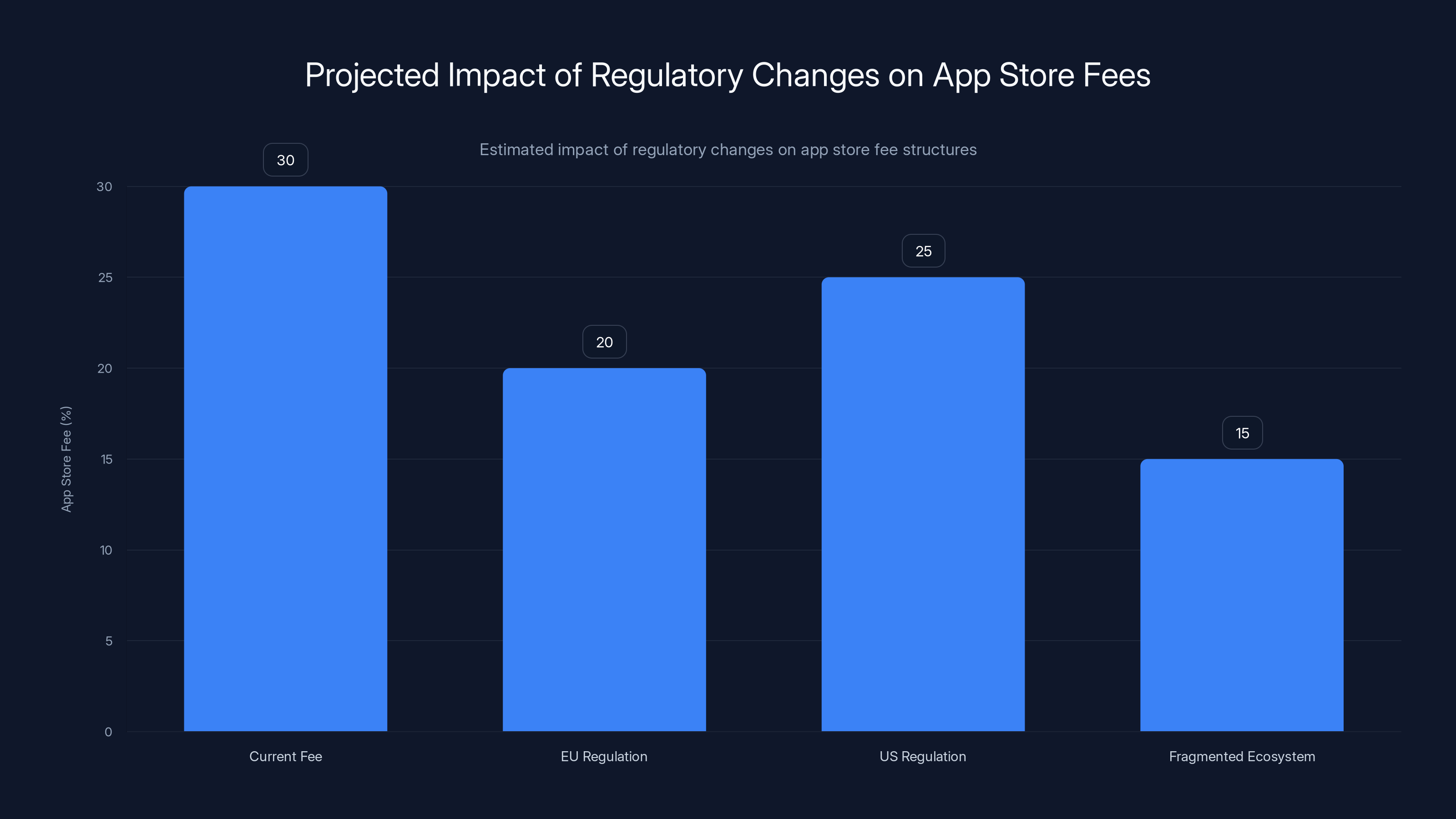

Estimated data suggests that a significant portion of Google's app store fees is allocated to security investments, justifying their fee structure amidst regulatory scrutiny.

The Role of Human Reviewers in Google's Hybrid Approach

Here's where people get it wrong about AI in security. They think Google built a purely automated system that makes all the decisions. That's not what happened.

Google's system is explicitly hybrid. AI handles the heavy lifting of pattern recognition and initial filtering. Humans make the actual judgment calls on complex or edge-case decisions. And this division of labor matters.

Consider a legitimate privacy app that genuinely needs to access location, contacts, and payment information because its feature set requires it. An automated system might flag this as suspicious based on the permission combination alone. A human reviewer, seeing the app's description, understanding its legitimate purpose, and examining its code, can make a more nuanced decision.

Or consider an educational app that teaches kids about world capitals and needs network access. If the network requests seem unusual or excessive, an AI might flag it. A human reviewer can determine if those requests are actually for delivering educational content.

Google says it uses generative AI specifically to help human reviewers work faster. That means the AI isn't making final decisions—it's doing the legwork of surfacing the most suspicious elements so the human can focus on making a good judgment call. The AI might surface "this app requests payment permission but never mentions purchases" or "this app asks for contacts access but has no contact management features visible in the code."

That's actually a smart approach. It combines the scale and consistency of AI with human judgment. Humans are better at context, nuance, and edge cases. AI is better at processing millions of data points. Together, they're more effective than either alone.
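To make that division of labor concrete, here is a minimal sketch of a review queue in Python. The `Submission` fields, the `risk_score` scale, and the `review_threshold` value are hypothetical. The point is only that the automated side scores and annotates, and the human side sees the most suspicious submissions first with the findings already attached.

```python
from dataclasses import dataclass, field

@dataclass
class Submission:
    app_id: str
    risk_score: float  # assumed scale: 0.0 looks benign, 1.0 looks malicious
    findings: list = field(default_factory=list)  # notes surfaced for the reviewer

def build_review_queue(submissions, review_threshold=0.3):
    """Return submissions that need human attention, most suspicious first."""
    flagged = [s for s in submissions if s.risk_score >= review_threshold]
    return sorted(flagged, key=lambda s: s.risk_score, reverse=True)

submissions = [
    Submission("notes_app", 0.05),
    Submission("weather_app", 0.62,
               ["requests payment permission but never mentions purchases"]),
    Submission("puzzle_game", 0.91,
               ["asks for contacts access but has no contact features"]),
]

queue = build_review_queue(submissions)
for s in queue:
    print(s.app_id, s.risk_score, s.findings)
```

Nothing in this sketch rejects an app on its own. The final call stays with the reviewer, which matches how Google describes the generative AI layer.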

Review Bombing Prevention: The Often-Overlooked Security Battle

Not all of Google's security work is about blocking malicious apps. Some of it is about protecting legitimate apps from coordinated attacks by competitors or trolls.

Review bombing is surprisingly effective as a weapon. If you're a legitimate app with a 4.8-star rating, and suddenly competitors coordinate to give you thousands of one-star reviews, your rating tanks. Users don't click on apps with 2-star ratings, no matter how good they actually are. So review bombing is a form of attack that can tank a legitimate business.

In 2025, Google blocked 160 million spam ratings. That's an enormous number. These weren't individual bad ratings—they were coordinated campaigns. Some came from competitors trying to tank apps. Others came from troll campaigns. Others came from click farms trying to artificially boost their own apps.

The company estimated that without these protections, legitimate apps that were targeted by review bombing would have experienced an average 0.5-star rating drop. That doesn't sound like much until you understand what it means for discovery. On the Google Play Store, sorting by rating is how most users discover apps. A 4.5-star app gets recommended. A 4.0-star app starts disappearing from recommendations. The difference between 4.8 and 4.3 is enormous for an app's success.

How does Google catch this? Partly through pattern analysis. A legitimate app might get 100 reviews per day under normal circumstances. Suddenly getting 10,000 one-star reviews in 24 hours from new accounts is obvious. But competitors are smarter about it now. They spread it out. They use older accounts. So Google uses AI to spot the coordination patterns—multiple accounts rating the same app, accounts that rate apps in the same category, accounts with similar behavioral patterns.
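The "obvious" case is easy to sketch. The Python below flags a day whose one-star count sits far above the app's own baseline, and measures how many reviews come from very new accounts. The thresholds, field names, and sample numbers are assumptions, and this is the naive version: it would miss the slower, spread-out campaigns described above, which need coordination analysis across accounts.

```python
from statistics import mean, pstdev

def is_rating_burst(history, today, z_threshold=3.0):
    """Flag today's one-star count if it is far above the app's usual level."""
    mu = mean(history)
    sigma = pstdev(history) or 1.0  # avoid dividing by zero on a flat history
    return (today - mu) / sigma > z_threshold

def new_account_share(reviews, max_age_days=7):
    """Fraction of reviews written by accounts younger than max_age_days."""
    if not reviews:
        return 0.0
    young = sum(1 for r in reviews if r["account_age_days"] < max_age_days)
    return young / len(reviews)

# Hypothetical daily one-star counts for the past week.
history = [4, 6, 5, 7, 5, 6, 4]

print(is_rating_burst(history, today=400))  # True: far outside the baseline
print(is_rating_burst(history, today=7))    # False: within normal variation

sample = [
    {"account_age_days": 1},
    {"account_age_days": 2},
    {"account_age_days": 900},
    {"account_age_days": 3},
]
print(new_account_share(sample))  # 0.75
```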

This is actually one area where the hybrid human-AI approach really shines. AI spots the statistical anomalies. Humans investigate whether those anomalies indicate actual fraud or just a legitimate viral moment where everyone's talking about an app.

Privacy Permission Abuse: Detecting Apps That Steal Data

One of the most insidious threats on app stores is apps that request access to sensitive data they don't need. An app that claims to be a flashlight but actually requests your location and contact data. An app that wants your payment information for a "free" feature.

Google's review systems are specifically designed to catch these. In 2025, Google stopped 255,000 apps from gaining excessive access to sensitive user data. That's down from 1.3 million in 2024, which again suggests that the deterrent effect is working.

What counts as excessive access? Google uses a few metrics. First, there's the concept of the principle of least privilege. An app should only request permissions it genuinely needs to function. A notes app doesn't need location. A calculator doesn't need contacts. A flashlight app definitely doesn't need camera access... wait, actually a flashlight app might need camera access to control the flash. That's the kind of nuanced distinction AI and humans have to make together.

Google compares what an app says it does against what permissions it's requesting. If there's a mismatch, that's a red flag. An app that describes itself as a weather app but requests payment permission? Suspicious. An app that claims to be a game but requests SMS access? That could be an attempt to send premium text messages.

Second, there's the matter of whether the app actually uses the permissions it requests. Through static code analysis, Google can see whether an app requests a permission, or imports a library that requires one, but never calls the code that needs it. That suggests the permission works like a trojan horse: the app is securing access to sensitive data that its visible features never use.
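The declared-versus-used comparison comes down to a set difference. In this sketch, the `CALL_TO_PERMISSION` table and its call labels are made up for illustration. A real analyzer would derive them from the platform's API documentation and from the app's compiled code.

```python
# Hypothetical map from calls found in an app's code to the permission each needs.
CALL_TO_PERMISSION = {
    "location.read": "ACCESS_FINE_LOCATION",
    "contacts.read": "READ_CONTACTS",
    "sms.send": "SEND_SMS",
}

def unused_permissions(declared, calls_found):
    """Return permissions the app declares but whose APIs it never calls."""
    used = {CALL_TO_PERMISSION[c] for c in calls_found if c in CALL_TO_PERMISSION}
    return sorted(set(declared) - used)

declared = ["ACCESS_FINE_LOCATION", "READ_CONTACTS"]
calls_found = ["location.read", "ui.render"]

print(unused_permissions(declared, calls_found))  # ['READ_CONTACTS']
```

An unused permission isn't proof of bad intent, since leftover declarations from old features are common. That is why a result like this makes more sense as a flag for review than as an automatic rejection.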

Third, Google compares app behavior against app category norms. Most productivity apps don't request microphone access. Games don't request payment information unless they have in-app purchases. Camera apps don't request contacts. When an app breaks these patterns, it gets scrutinized.

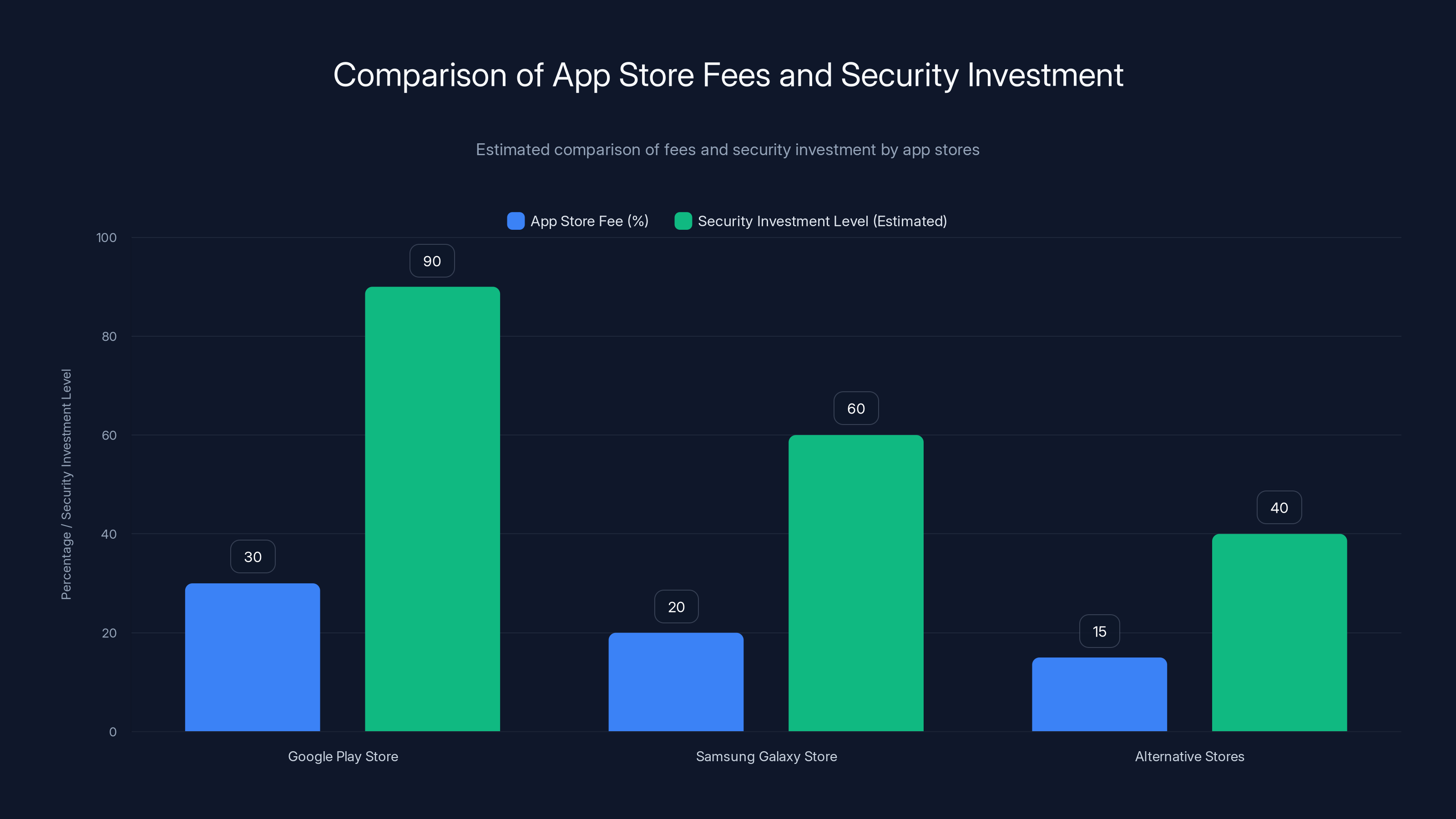

Google Play Store charges the highest fee at 30%, justified by its significant security investment. Competing stores charge lower fees, reflecting reduced security investment. (Estimated data)

The Developer Account Bans: Accountability Through Enforcement

Google banned 80,000 developer accounts in 2025. That's a significant number. These weren't individual malicious apps—these were accounts that had repeatedly violated policies or engaged in systematic abuse.

Developer account bans are the stick in Google's carrot-and-stick approach. The carrot is that Google Play offers access to 3.5 billion potential users with relatively low friction. The stick is that if you abuse that access, you're permanently barred.

What causes a ban? Repeated policy violations. Deploying malicious apps repeatedly. Attempting to deceive the review system. Coordinating review bombing or rating fraud. Collecting user data and selling it without consent. Using shell companies to evade previous bans.

The key insight is that Google is increasingly making this decision automated. A developer might get multiple warnings as they edge toward policy violations. But once the AI systems determine a pattern of intentional abuse, the ban is often applied automatically. This makes sense from a scale perspective—Google can't have humans manually review 80,000 accounts. The AI systems do the work of determining that accounts meet the criteria for permanent suspension.

This also creates a chilling effect. A developer might think about submitting a questionable app. But if they know that doing so risks their entire developer account—all their apps, all their revenue, everything—they're much less likely to try it.

Google Play Protect: Runtime Security on 2.8 Billion Devices

While Google Play's storefront security prevents many bad apps from being installed in the first place, some slip through. That's where Play Protect comes in.

Play Protect is Google's on-device security system, installed on essentially all Android devices. It runs continuously, monitoring apps for malicious behavior. In 2025, Play Protect detected over 27 million new malicious apps. Some were blocked before installation. Others were flagged or removed after users had installed them.

What's significant about this number is that it covers apps beyond just the Google Play Store. Users can sideload apps from third-party sources, or install apps from app stores in regions like China where Google Play isn't available. Play Protect protects against those too.

The way Play Protect works is partly through known malware signatures—if it recognizes a malicious app from its code fingerprint, it blocks it immediately. But it also does behavioral analysis. If an app suddenly starts trying to exfiltrate data in bulk, or trying to extract payment information, or attempting to gain root access, Play Protect detects the behavior and pulls the app.
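Those two layers, signature matching first and behavioral rules second, can be sketched in a few lines of Python. The fingerprint here is a plain SHA-256 hash of the package bytes, and the event names are invented labels. How Play Protect actually fingerprints apps or models behavior isn't described in Google's numbers, so treat this as an illustration of the idea only.

```python
import hashlib

def fingerprint(package_bytes):
    """Hash the package contents so known-bad files can be recognized exactly."""
    return hashlib.sha256(package_bytes).hexdigest()

# Hypothetical blocklist and behavior rules, for illustration only.
KNOWN_BAD = {fingerprint(b"example-malicious-package")}
SUSPICIOUS_EVENTS = {"bulk_contact_upload", "root_escalation_attempt"}

def assess(package_bytes, observed_events):
    """Check the signature first, then fall back to behavioral rules."""
    if fingerprint(package_bytes) in KNOWN_BAD:
        return "block: known signature"
    hits = SUSPICIOUS_EVENTS & set(observed_events)
    if hits:
        return "flag: " + ", ".join(sorted(hits))
    return "allow"

print(assess(b"example-malicious-package", []))
print(assess(b"some-other-package", ["root_escalation_attempt"]))
print(assess(b"some-other-package", ["open_note"]))
```

Exact hashes are cheap to check but trivial to evade by changing a single byte, which is why the behavioral layer matters for anything the system hasn't seen before.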

Google also mentioned that Play Protect's enhanced fraud protection covered 2.8 billion Android devices in 185 markets and blocked 266 million risky sideloading attempts. That means it's not just detecting malicious apps—it's preventing users from installing apps from untrusted sources in the first place.

The sideloading protection is particularly interesting. Android allows users to install apps from anywhere, which is both a feature and a security risk. When a user tries to sideload an app from a third-party source, Play Protect analyzes that app and either warns the user or blocks installation. Google blocked 266 million of these attempts, which is a massive number. Spread across the 2.8 billion covered devices, that works out to about 0.095 blocked attempts per device, or roughly one for every ten devices.

The Regulatory Pressure: Why Google Emphasizes These Numbers

It's worth understanding why Google is making such a public show of these security statistics. The company is under serious regulatory pressure, particularly in Europe.

The European Union's Digital Markets Act classifies Google as a "gatekeeper," meaning its app store has market dominance and regulatory obligations. Part of Google's defense against monopoly accusations is to demonstrate that it's investing heavily in safety and consumer protection—that its market position is justified because it's delivering real security value.

Google's app store fee structure, with a headline rate of up to 30% on in-app purchases and subscriptions, is justified partly by these safety investments. If Google wants to maintain that fee structure while regulators scrutinize it, the company needs to show that the security benefits are real and substantial.

That's not to say the security improvements are artificial or exaggerated. The numbers are real. But it's worth understanding the context. When Google talks about investing in AI-driven defenses, it's partly sincere technical innovation and partly regulatory strategy.

The company also mentioned that it's implementing stricter developer verification, mandatory pre-review checks, and testing requirements. These all raise the barrier to entry for malicious actors, which is good. But they also raise the barrier for legitimate indie developers, which is the trade-off nobody wants to talk about.

Estimated data shows potential reduction in app store fees due to regulatory changes, with a fragmented ecosystem potentially leading to the lowest fees.

The Challenge of False Positives: When Good Apps Get Blocked

When you're running AI systems at scale and blocking 1.75 million apps, you have to accept that some legitimate apps are going to get caught in the net.

False positives are the dark side of aggressive security. An indie developer creates a legitimate privacy app that actually needs location access for legitimate reasons. The app gets flagged as suspicious, rejected from the store, and the developer has no clear path to appeal. Or a small game developer creates something that gets flagged for unusual permission combinations, even though those permissions are necessary for the game's actual features.

Google doesn't publish specific numbers on false positive rates. That's a missing data point. How many legitimate apps get blocked? How many developers are wrongfully denied access to the store? These numbers matter because false positives represent real harm—lost revenue, frustrated users who can't access legitimate services, barriers to market entry for small developers.

The hybrid human-AI review process is supposed to address this. Appeals of rejected apps should go to human reviewers who can make more nuanced judgments. But the opacity of the process is a problem. Developers often don't get detailed feedback on why their app was rejected. They get generic explanations. Appealing is possible but not transparent.

This is a genuine tension in app security. Be more aggressive and you catch more malicious apps, but you also block more legitimate ones. Be more lenient and you create more opportunities for malicious actors. There's no perfect balance, and Google doesn't appear to have solved it.
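The trade-off is easy to see with a toy example. The Python below uses ten made-up submissions, each with a risk score and a ground-truth label, and counts what happens as the blocking threshold moves. The data is invented purely to show the shape of the problem, since Google doesn't publish figures like these.

```python
# Hypothetical (risk_score, is_actually_malicious) pairs for ten submissions.
SCORED = [
    (0.95, True), (0.90, True), (0.80, False), (0.75, True), (0.60, False),
    (0.55, True), (0.40, False), (0.30, False), (0.20, False), (0.10, False),
]

def outcomes(threshold):
    """Count wrongly blocked good apps and missed malicious apps at a threshold."""
    false_positives = sum(1 for score, bad in SCORED if score >= threshold and not bad)
    false_negatives = sum(1 for score, bad in SCORED if score < threshold and bad)
    return false_positives, false_negatives

for t in (0.5, 0.7, 0.85):
    fp, fn = outcomes(t)
    print(f"threshold={t}: {fp} legitimate apps blocked, {fn} malicious apps missed")
```

At the strict setting (0.5) two legitimate apps are blocked and nothing malicious gets through. At the lenient setting (0.85) no legitimate app is blocked but two malicious ones slip past. No threshold on this data gets both numbers to zero.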

Machine Learning Models and Continuous Learning

Google's AI systems aren't static. They're continuously updated and improved based on new data and new threats.

When a malicious app slips through and gets installed by users, Play Protect catches it and removes it. That event is a data point. Millions of such data points feed back into training the models. So the AI systems that check new submissions get smarter over time, trained on the actual apps that previously escaped detection.

This creates a continuous improvement cycle. Bad actors develop a new technique for hiding malicious functionality. It gets deployed in an app, Play Protect catches it, and within days or weeks, the model that reviews new submissions has been updated to catch similar attempts.

The generative AI models add another layer to this. Instead of just pattern matching, these models are trained to understand semantic meaning. An app might claim to be a "utility" when it's really a trojan designed to steal data. Generative models trained on millions of app descriptions can spot inconsistencies—when what an app claims to do doesn't match what it actually does.

Google also mentioned that it runs over 10,000 safety checks on every app. That number likely includes checks from different models, different approaches, and different analysis techniques. Some checks are static analysis of the code itself. Others are dynamic analysis where the app is actually run in a sandbox and its behavior is monitored. Others are heuristic-based, checking against known patterns of malicious behavior.

The Economics of App Security

There's a broader economic story here. Google invests billions in app security. That investment shows up as reduced malware, blocked spam ratings, and deterred bad actors. The benefit is distributed to 3.5 billion Android users.

The question that regulators are asking is whether that security investment justifies Google's 30% fee. This is where the numbers matter. If Google can demonstrate that its security infrastructure prevents significant harm, that justifies a premium. If the security is merely adequate or standard, then the 30% fee starts looking like monopoly rent extraction.

Some competing app stores, whether Samsung Galaxy Store or alternative stores in different regions, charge lower fees. How can they offer lower fees? Partly, the argument goes, by investing less in security. If that's right, there's a real trade-off: lower fees leave less money for security, and higher fees can fund more of it.

Google's pitch is that it's worth the premium. The company is investing in the latest AI models, running 10,000+ checks per app, employing human reviewers, and maintaining a runtime security system on billions of devices. That's expensive. The 30% fee is what makes it possible.

But the regulatory argument is different. The argument is that Google's market dominance comes from Android itself, not from Play Store security. If Google is using that dominance to extract monopoly rents, that's a problem even if the security is good. The solution regulators are pushing for is alternative payment methods, lower fees for apps that don't use Google's payment system, and more transparency in the review process.

Google has started moving in that direction, allowing alternative payment methods in some cases. But regulators claim it's not enough.

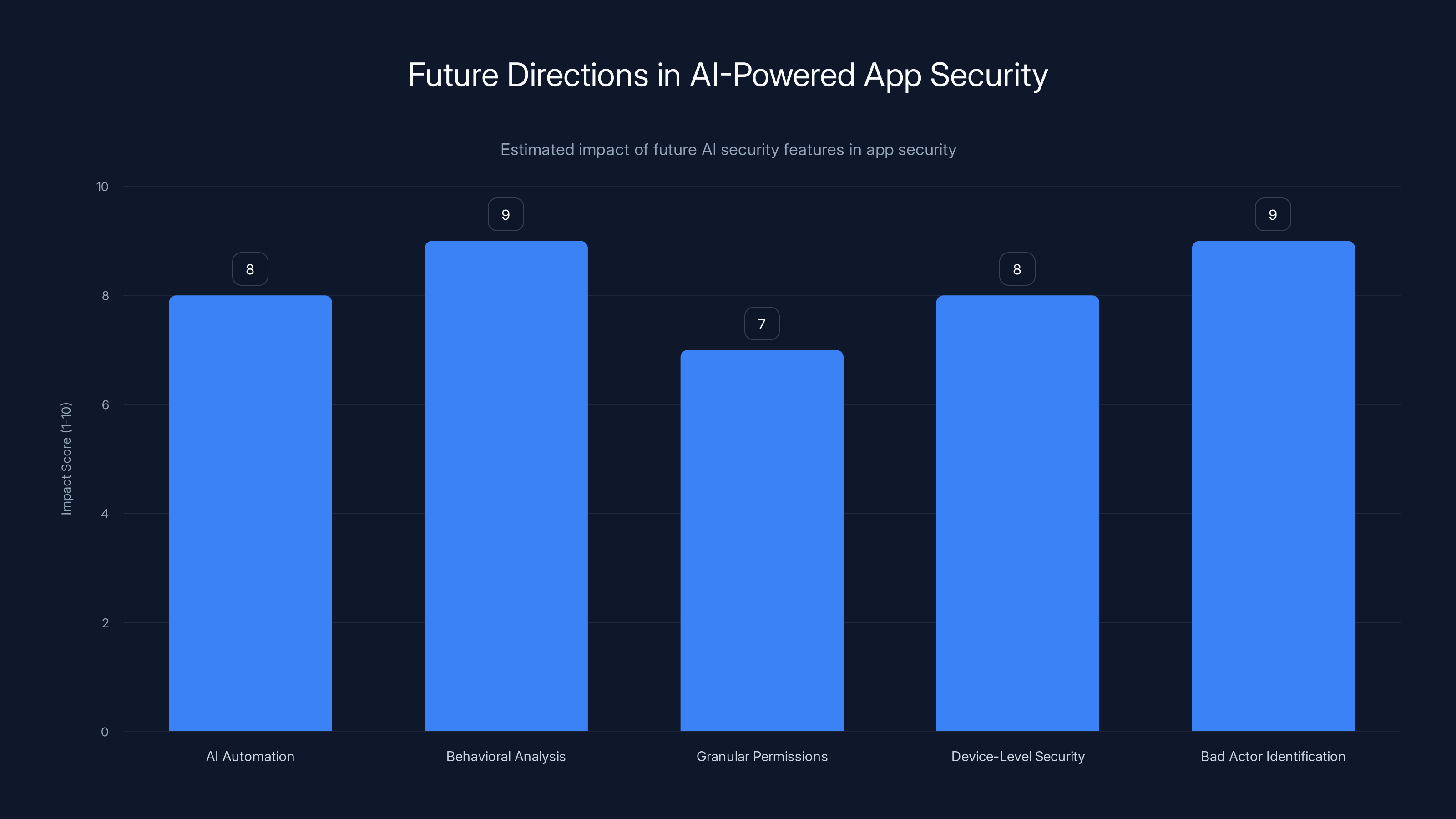

AI-driven features like behavioral analysis and bad actor identification are projected to have the highest impact on app security. Estimated data.

Future Directions: What's Next for AI-Powered App Security

Google mentioned that it will continue investing in AI-driven defenses to stay ahead of emerging threats. But what does that actually mean?

One direction is probably more aggressive AI automation. Instead of AI assisting human reviewers, AI might make more decisions independently. This would speed up the review process but also increase the risk of false positives unless the models get more sophisticated.

Another direction is probably more sophisticated behavioral analysis. As generative AI models get better at understanding context and intent, Google could move beyond checking what an app claims and what permissions it requests, to actually understanding what the app is designed to do and whether that matches user expectations.

More granular permission systems are also likely. Instead of apps having binary access to location or contacts, users might be able to grant apps access to specific data—one contact, or location only in certain scenarios. That would make it harder for apps to perform mass data exfiltration even if they gain access.

Longer term, there might be more device-level security features that complement app store security. Android could implement more runtime constraints on what apps can do, more transparent data access logging, or more granular user controls.

One interesting possibility is using AI to identify which bad actors are behind multiple malicious apps. Right now, Google bans developer accounts when they violate policies repeatedly. But sophisticated bad actors use different legal entities, different payment methods, different identities. AI that can link these separate accounts to the same underlying operator could be more effective.
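One simple way to think about account linking is as a grouping problem: if two accounts share any identifying attribute, put them in the same cluster, and let clusters merge transitively. The sketch below does that with a small union-find structure in Python. The account names and attribute strings are hypothetical, and a production system would presumably use weighted, probabilistic signals rather than exact matches.

```python
def link_accounts(accounts):
    """Group accounts that share any attribute value, directly or transitively."""
    parent = {name: name for name in accounts}

    def find(x):
        # Follow parent pointers to the cluster root, shortening the path as we go.
        while parent[x] != x:
            parent[x] = parent[parent[x]]
            x = parent[x]
        return x

    first_owner = {}  # attribute value -> first account seen with it
    for name, attrs in accounts.items():
        for value in attrs:
            if value in first_owner:
                parent[find(name)] = find(first_owner[value])
            else:
                first_owner[value] = name

    groups = {}
    for name in accounts:
        groups.setdefault(find(name), set()).add(name)
    return sorted(sorted(group) for group in groups.values())

# Hypothetical developer accounts and the attributes observed for each.
accounts = {
    "dev_a": {"card:1111", "ip:10.0.0.5"},
    "dev_b": {"ip:10.0.0.5", "cert:abc"},
    "dev_c": {"cert:abc"},
    "dev_d": {"card:9999"},
}

clusters = link_accounts(accounts)
print(clusters)  # [['dev_a', 'dev_b', 'dev_c'], ['dev_d']]
```

Note that `dev_a` and `dev_c` share nothing directly. They end up together only because both connect to `dev_b`. That transitivity is powerful, and it's also how innocent accounts could get swept in through something like a shared IP address, so it feeds straight back into the false positive problem.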

Learning from Competitors: How Apple, Microsoft, and Others Approach Security

Google Play's security is often compared to Apple's App Store. The comparison is tricky because the two platforms have different trade-offs.

Apple's App Store has stricter approval processes and fewer apps get through. Apple reviews every app before it goes live and continues reviewing after publication. This results in very few malicious apps on iOS, but also means that indie developers sometimes have a harder time getting apps approved.

Google's approach is more permissive at the submission stage but more aggressive at runtime. More apps get onto Google Play, but Play Protect is continuously monitoring them and removing them if they misbehave.

Both approaches have merit. Apple's approach creates a smaller, curated store with higher quality control. Google's approach creates a larger, more open store with post-hoc removal of bad apps. Users trade off features and freedom for security on different platforms.

Microsoft is increasingly adopting AI-powered security for Windows, similar to Google's approach. Amazon's Appstore for Android devices also runs its own security reviews.

When Google talks about investing in AI, it's partly because AI allows for better security at the scale of billions of devices. Traditional human review can't scale to that level. AI can.

The Transparency Problem: What We Don't Know

For all the security numbers Google publishes, there are significant transparency gaps.

First, false positive rates aren't published. We don't know what percentage of rejected apps were actually malicious versus wrongfully rejected. That's a missing metric.

Second, the criteria for developer account bans aren't fully transparent. Developers get notified after the fact, but the specifics of what led to the ban decision aren't always clear.

Third, the specific AI models and techniques used aren't fully documented. Google publishes research on some of them, but the exact models running in production review are proprietary.

Fourth, regional differences aren't discussed. Are the same 10,000+ safety checks run in all 185 markets? Or do some markets get lower scrutiny? Are there regional variations in policy enforcement?

Fifth, the accuracy and reliability of different detection methods isn't quantified. How effective is permission analysis at catching data-stealing apps? How many malicious apps bypass it? These metrics exist internally at Google but aren't published.

This transparency gap creates problems. Developers can't understand what went wrong. Regulators can't assess whether the system is fair. Users can't evaluate security claims.

Google has published some research on its security techniques and has submitted to regulatory audits. But much remains proprietary, and that's a legitimate criticism.

The chart shows a significant decrease in policy violations and sensitive data access from 2024 to 2025, indicating improved app security measures. Estimated data used for developer accounts banned in 2024.

The Human Cost: What Happens to Blocked Developers

When Google blocks an app or bans a developer account, that's not just a technical event. For the developer, it might be a significant financial loss.

Consider a small indie developer who spent months building an app. It gets rejected from Google Play for a policy violation they didn't intentionally commit. The appeals process is slow and opaque. Meanwhile, competitors' similar apps are available on the store. The developer loses potential revenue and user base.

Or consider a developer in a country where Google Play is the primary app distribution channel. Getting banned from Google Play might mean losing access to the majority of the mobile market in their region.

Google's system, while well-intentioned, has created concentrated power in the hands of a single company. That company gets to decide who can distribute apps and who can't, with limited transparency or appeal process.

This is ultimately what regulators are concerned about. Even if Google's security is genuinely good—which it appears to be—the concentration of power to decide what software can be distributed is concerning when it rests in one company's hands.

Fractured app ecosystems with competing app stores might be messier and potentially less secure. But they distribute power and create options for developers.

What This Means for Users

For the average user, the practical implications of Google's AI-powered security system are straightforward.

First, the risk of getting malware through the Google Play Store is genuinely low. That 1.75 million blocked apps number means bad actors are mostly failing in their attempts to distribute malware through the official channel. Most users won't encounter malicious apps on Google Play if they stick to apps with decent review counts and decent ratings.

Second, Play Protect provides continuous protection even after apps are installed. If an app starts misbehaving, the system will catch it and remove it. Users don't need to manually monitor what their apps are doing.

Third, the review bombing prevention means app ratings are more trustworthy. You can generally trust that an app's rating reflects real user experience rather than coordinated attack campaigns.

Fourth, the privacy protection means apps are less likely to steal your data through excessive permissions. The system does check permission requests for reasonableness.

The practical upshot is that Google Play is pretty safe. Not perfectly safe—no system is—but safe enough that most users shouldn't worry about installing apps from the official store. The bigger security risks come from sideloading apps from untrusted sources or clicking suspicious links outside the app ecosystem.

The Broader AI Security Landscape

Google's use of AI in app security is part of a broader trend. Security across the tech industry is increasingly AI-powered.

Email security uses AI to detect phishing and spam. Network security uses AI to detect intrusions and anomalies. Cloud infrastructure uses AI to detect and respond to attacks. Antivirus software uses AI to identify malware variants it's never seen before.

The advantage of AI for security is scale and sophistication. AI can process millions of data points and identify patterns that humans would miss. AI can adapt to new attacks without requiring manual rule updates. AI can work 24/7 without fatigue.

The disadvantage is that AI can be fooled. Adversaries are increasingly focusing on tricking AI systems specifically. If you understand how a machine learning classifier works, you can sometimes craft inputs that fool it while still accomplishing your goals.

This creates an adversarial situation. Defenders build AI systems to detect attacks. Attackers build systems to evade AI detection. The AI arms race is real.

Google's approach of combining AI with human review is probably more robust than relying solely on AI. Humans can catch edge cases and novel techniques that fool the AI. AI can handle scale that humans can't.
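One way to picture that division of labor is a simple triage step: a model assigns each submission a risk score, the clear cases are handled automatically, and the ambiguous middle goes to a person. The sketch below is only an illustration of that idea. The `Submission` type, the `route` function, and both thresholds are hypothetical, and nothing here reflects how Google actually routes reviews.

```python
from dataclasses import dataclass

# Hypothetical thresholds chosen for illustration; not Google's values.
AUTO_APPROVE_BELOW = 0.2
AUTO_REJECT_ABOVE = 0.9


@dataclass
class Submission:
    app_id: str
    risk_score: float  # assumed output of some classifier, in [0, 1]


def route(submission: Submission) -> str:
    """Return the review lane for a submission based on its risk score."""
    if not 0.0 <= submission.risk_score <= 1.0:
        raise ValueError("risk_score must be between 0 and 1")
    if submission.risk_score < AUTO_APPROVE_BELOW:
        return "auto_approve"
    if submission.risk_score > AUTO_REJECT_ABOVE:
        return "auto_reject"
    # Ambiguous scores are where human judgment adds the most value.
    return "human_review"


if __name__ == "__main__":
    queue = [
        Submission("com.example.notes", 0.05),
        Submission("com.example.flashlight", 0.55),
        Submission("com.example.cleaner", 0.97),
    ]
    for s in queue:
        print(s.app_id, "->", route(s))
```

Widening the middle band sends more work to humans but leaves fewer decisions to the model alone, which is the trade-off the paragraph above describes.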

Comparing 2024 vs 2025: Trends in App Security

Looking at Google's year-over-year numbers tells an interesting story.

Apps blocked for policy violations: 2.36 million (2024) to 1.75 million (2025). That's a 26% decrease. This likely indicates better deterrence and earlier detection.

Apps prevented from accessing sensitive data: 1.3 million (2024) to 255,000 (2025). That's an 80% decrease. This is the most striking improvement and suggests the permission analysis system is getting better at catching problematic apps earlier in the review process.

Developer accounts banned: 80,000 in 2025. The figure is implicitly compared with higher numbers in previous years, though the exact prior-year number isn't stated.

Malware detected by Play Protect: 27 million new samples in 2025. This remains high, indicating that even as more malicious apps are stopped at the store level, plenty are still being distributed through other channels.

Review bombing attempts blocked: 160 million in 2025. Prior-year figures aren't stated, though the comparison implies they were lower.

The trend points in one direction: Google's AI systems appear to be getting better at preventing bad apps from even reaching the store. Fewer apps that shouldn't pass are making it through submission, and fewer are being found with excessive permission requests. The system is shifting from reactive removal to proactive prevention.
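The two percentage drops quoted in this section follow directly from the reported totals. As a quick check, here is a minimal sketch of the arithmetic; the helper name `percent_decrease` is just an illustrative choice.

```python
def percent_decrease(before: float, after: float) -> float:
    """Return the drop from `before` to `after` as a percentage of `before`."""
    if before <= 0:
        raise ValueError("before must be positive")
    return (before - after) / before * 100


# Figures as reported in the article for 2024 and 2025.
figures = {
    "apps blocked for policy violations": (2_360_000, 1_750_000),
    "apps prevented from accessing sensitive data": (1_300_000, 255_000),
}

if __name__ == "__main__":
    for label, (y2024, y2025) in figures.items():
        print(f"{label}: {percent_decrease(y2024, y2025):.1f}% decrease")
```

The unrounded results are about 25.8% and 80.4%, which round to the 26% and 80% cited above.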

Implementation Challenges and Technical Debt

Running this kind of security system at Google's scale comes with real technical challenges.

One challenge is latency. Developers submit apps expecting a review to complete in hours or days. But with over 10,000 checks per app, plus human review, plus running the app in a sandbox, plus checking different device configurations, the latency adds up. Google probably has significant infrastructure just to handle the computational load of all these checks.

Another challenge is keeping up with new threats. Bad actors are continually innovating new techniques to hide malicious functionality. Each new technique requires new detections. The detection systems have to stay ahead of the threat curve.

A third challenge is balancing false positives and false negatives. Catch too many apps, and you block legitimate ones. Let too many through, and malicious ones get distributed. Finding that balance requires continuous tuning.

A fourth challenge is dealing with adversarial examples—attacks specifically designed to fool machine learning classifiers. Researchers have shown that small perturbations to code can sometimes fool malware classifiers while maintaining functionality. Google has to account for this.

A fifth challenge is handling scale. With 3.5 billion devices, apps run across an enormous range of hardware, OS versions, and regional contexts. An app that's fine for most users might be problematic in a specific regional context. Handling those variations at scale is genuinely hard.
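The false positive versus false negative balance mentioned above is easiest to see with a toy example. The sketch below sweeps a blocking threshold over a handful of made-up risk scores and counts both kinds of error. The data, the `error_counts` helper, and the thresholds are all invented for illustration and say nothing about Google's actual classifiers.

```python
from typing import List, Tuple

# (risk_score, is_malicious) pairs; made-up toy data for illustration only.
SAMPLES: List[Tuple[float, bool]] = [
    (0.05, False), (0.10, False), (0.30, False), (0.45, False), (0.60, False),
    (0.40, True), (0.65, True), (0.80, True), (0.90, True), (0.95, True),
]


def error_counts(samples: List[Tuple[float, bool]], threshold: float) -> Tuple[int, int]:
    """Return (false_positives, false_negatives) when blocking scores >= threshold."""
    # False positive: a benign app that gets blocked.
    fp = sum(1 for score, bad in samples if score >= threshold and not bad)
    # False negative: a malicious app that gets through.
    fn = sum(1 for score, bad in samples if score < threshold and bad)
    return fp, fn


if __name__ == "__main__":
    for t in (0.3, 0.5, 0.7):
        fp, fn = error_counts(SAMPLES, t)
        print(f"threshold={t}: blocked legitimate={fp}, missed malicious={fn}")
```

On this toy data, a threshold of 0.3 blocks three legitimate apps and misses none, while 0.7 blocks no legitimate apps but misses two malicious ones. No single threshold removes both kinds of error, which is why the tuning has to be continuous.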

What Developers Should Know

If you're developing an app for Google Play, here's what matters about this security system.

First, be transparent. Request only the permissions you actually need. Explain in your app description what sensitive data access you're using and why. The AI checks permission requests against claimed functionality—make sure they match.

Second, test thoroughly. Don't ship broken functionality. Don't include hidden features. Don't use obfuscated code. These all trigger security checks. Clean code and honest functionality pass reviews more easily.

Third, understand your dependencies. The libraries and SDKs you include in your app matter. If one of them has a security vulnerability or malicious behavior, your app gets flagged. Vet dependencies carefully.

Fourth, be patient with rejections. If your app gets rejected, read the rejection reason carefully. Many rejections are legitimate. If you believe your rejection was in error, appeal. Be specific in the appeal and explain why the security concerns don't apply.

Fifth, don't try to game the system. Developers who try to evade reviews through obfuscation or deception get caught and their accounts get banned. It's not worth it.
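The first point, matching permissions to claimed functionality, is something developers can sanity-check before submitting. The sketch below is a rough self-audit, not a reproduction of Google's checks. The permission strings are real Android permission names, but the categories, the `EXPECTED` mapping, and the `unexpected_permissions` helper are assumptions made up for this example.

```python
from typing import Dict, List, Set

# Hypothetical mapping from app category to the permissions that category
# plausibly needs. Categories and mapping are assumptions for illustration.
EXPECTED: Dict[str, Set[str]] = {
    "flashlight": {"android.permission.CAMERA"},
    "messaging": {
        "android.permission.READ_CONTACTS",
        "android.permission.CAMERA",
        "android.permission.RECORD_AUDIO",
    },
    "navigation": {"android.permission.ACCESS_FINE_LOCATION"},
}


def unexpected_permissions(category: str, requested: List[str]) -> List[str]:
    """Return requested permissions not expected for the category, sorted.

    An unknown category has no expected permissions, so everything is flagged.
    """
    allowed = EXPECTED.get(category, set())
    return sorted(p for p in requested if p not in allowed)


if __name__ == "__main__":
    requested = [
        "android.permission.CAMERA",
        "android.permission.READ_CONTACTS",
        "android.permission.ACCESS_FINE_LOCATION",
    ]
    for perm in unexpected_permissions("flashlight", requested):
        print("Justify or remove:", perm)
```

Anything the check flags is a permission worth either dropping or explaining in the app description before a reviewer asks about it.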

The Regulatory Future: What Happens Next

Google's security investments matter partly for technical reasons and partly for regulatory reasons.

The EU's Digital Markets Act requires gatekeepers like Google to maintain high security standards and justify their market position through genuine value delivery. Google's investment in app security is part of that justification.

But regulators are skeptical that the security benefits justify a 30% fee. European regulators are pushing for alternative payment methods and lower fees for apps not using Google's payment system.

In the US, the FTC has been scrutinizing Google's app store practices. Congress has considered legislation that would require app stores to allow sideloading and alternative app distribution channels.

The result is likely to be a more fragmented app ecosystem. Some regions might allow competing app stores more easily. Some might require lower fees. Some might implement sideloading by default.

This creates genuine security uncertainty. An app store with 30% fees can invest more in security than one with 10% fees. A decentralized, competing ecosystem might be more secure because each store competes on security, or less secure because stores cut costs.

The trade-off is between centralized security with market power concerns versus decentralized security with quality control concerns. Regulators are betting that competition and transparency matter more than concentrated security. That's a reasonable bet, but it's a bet on the unknown.

Final Analysis: The State of App Store Security in 2025

Google's 1.75 million blocked apps statistic represents a security system that's working reasonably well. Malicious apps are mostly being stopped from reaching users. Review bombing is being prevented. Excessive data access is being restricted.

The AI component of this system isn't magic. It's machine learning at scale, applied to code analysis, permission checking, behavioral monitoring, and pattern recognition. It works because Google has access to billions of data points about app behavior and user harm.

The human component matters just as much. Human reviewers make judgment calls on edge cases. They can overturn automated decisions that are clearly wrong. They set policy boundaries. The combination of AI and human review creates a system better than either alone.

But there are gaps. False positive rates aren't transparent. Appeal processes are opaque. Regional variations aren't discussed. Some developers who did nothing wrong lose access to markets.

Looking forward, the system will probably get more sophisticated. Generative AI will get better at understanding app intent and spotting deception. Behavioral analysis will get more granular. Runtime protections will get stronger.

But the fundamental tension will remain. Effective security requires centralized control and high investment. That creates market power and enables monopolistic behavior. Decentralized, competitive ecosystems distribute power but might sacrifice security.

The resolution of that tension—what app ecosystem actually emerges—will be decided by regulators and users, not by technical engineers. The technology is the easy part. The policy is the hard part.

FAQ

What does it mean that Google blocked 1.75 million apps in 2025?

Google's review system rejected 1.75 million app submissions in 2025 for violating the company's policies. This number was lower than 2024's 2.36 million. The likely explanation is not that the company is catching fewer bad apps, but that its AI-powered deterrence is discouraging malicious developers from submitting them in the first place. Each rejected app represents a policy-violating app that never made it to users.

How does Google's AI system detect malicious apps?

Google runs more than 10,000 automated safety checks on every app submission, analyzing code structure, permission requests, behavioral patterns, and comparing claimed functionality against actual code. Generative AI models help human reviewers spot suspicious patterns more quickly, like permission combinations that don't match the app's stated purpose or obfuscated code that suggests hidden functionality. The system combines pattern matching against known malware with behavioral analysis to identify novel threats.

What is Google Play Protect and how does it work?

Google Play Protect is Google's runtime security system installed on nearly all Android devices. It continuously monitors apps for malicious behavior, checking against known malware signatures and analyzing unusual activities in real-time. In 2025, it detected over 27 million new malicious apps and blocked 266 million risky sideloading attempts. If an app starts stealing data or attempting to gain unauthorized access after installation, Play Protect removes it before significant harm can occur.

Why did the number of apps blocked for excessive data access drop so dramatically from 1.3 million to 255,000?

This 80% decrease suggests that Google's permission analysis system is catching problematic apps earlier in the review process, preventing them from reaching users in the first place. As the AI systems get better at identifying permission combinations that don't match app functionality, fewer problematic apps make it past initial screening. This reflects the deterrent effect—bad actors know they'll get caught earlier, so fewer attempt to submit problematic apps.

What happens if my app is rejected by Google Play?

When an app is rejected, you'll receive a notification from Google Play explaining the policy violation. You can review the details in your Google Play Console dashboard. You have the option to appeal the decision, providing additional context or fixes to address the violation. For legitimate apps that were wrongfully rejected, appeals can be successful. Make sure to read rejection reasons carefully and respond to the specific policy violation mentioned rather than providing generic appeals.

How many apps on Google Play are actually malicious?

Google doesn't publish exact percentages, but the security numbers suggest that only a small fraction of apps on Google Play are malicious. Roughly 3.5 million apps are available on Google Play at any given time, and the system blocked another 1.75 million submissions in 2025 before they could be listed. This means that most apps reaching users are legitimate, but the share of submissions that violate policy is large enough to require continuous monitoring.

Can Google Play Protect protect me if I sideload apps from outside the store?

Yes, Google Play Protect runs on nearly all Android devices and protects against malware regardless of where apps come from. It will analyze sideloaded apps and either warn you or prevent installation if it detects malicious behavior. However, sideloaded apps receive less scrutiny than apps submitted to Google Play, so the risk is higher. You're relying on real-time malware detection rather than pre-submission review.

Why does Google emphasize these security statistics when it's under regulatory scrutiny?

Google is defending its market position and 30% app store fee by arguing that its heavy investment in security—using AI, human reviewers, and runtime protection—justifies the premium. Regulators in the EU and elsewhere are scrutinizing whether Google's market dominance is justified by genuine value delivery or represents anticompetitive behavior. By publishing strong security statistics, Google argues that users receive real security benefits that justify the fee structure.

Is iOS more secure than Android because of the App Store's review process?

Both platforms have strong security, but through different approaches. Apple's App Store has stricter upfront approval with pre-release review of every app, resulting in fewer malicious apps reaching users. Android's Google Play allows more apps through submission but relies more on post-installation monitoring through Play Protect. Both systems are effective; they represent different security trade-offs between curation and openness.

What can I do as a user to avoid malicious apps?

Install apps only from the official Google Play Store, read app reviews and check review dates to ensure feedback is recent, examine what permissions apps request and consider whether they're necessary for the app's function, check the developer's other apps and track record, and keep Google Play Protect enabled. Additionally, be suspicious of free apps offering premium services—those are more likely to have hidden functionality or aggressive data collection. Most malware comes from sideloading apps from untrusted sources, not from Google Play itself.

Google's investment in AI-powered app security represents the best attempt we've seen yet to protect billions of users from malicious software at scale. The numbers show that the system is working—fewer bad apps reach users than ever before. But transparency gaps, regulatory pressure, and the concentration of power in a single company's hands remain legitimate concerns. As the app ecosystem evolves and regulators force change, the security guarantees we rely on today might shift in ways we can't yet predict. The technology is sophisticated. The policy challenges are harder.

Key Takeaways

- Google blocked 1.75 million apps in 2025, down from 2.36 million in 2024, indicating better deterrence of bad actors before submission

- The company runs over 10,000 automated safety checks on every app, with generative AI helping human reviewers spot malicious patterns

- Play Protect detected 27 million new malicious apps and prevented 266 million risky sideloading attempts across 2.8 billion devices

- Review bombing prevention blocked 160 million spam ratings, protecting legitimate apps from coordinated attack campaigns

- Excessive data access attempts dropped 80% to 255,000 cases, showing improved permission-based threat detection

- 80,000 developer accounts were banned for repeated policy violations, creating accountability through enforcement
