Introduction: The Human-First Revolution in AI

We're in the middle of an AI arms race. Every week, another startup claims to have the next breakthrough. But most of them get it wrong. They optimize for model size, not for what humans actually need.

Then comes Humans&, a three-month-old startup that just raised $480 million at a $4.48 billion valuation.

Here's what makes Humans& different: they're not building another chatbot. They're building the connective tissue between humans and AI. Think of it like this: if current AI tools are individual instruments, Humans& is trying to create an orchestra where humans and machines play together.

The founding team reads like a who's who of AI's golden age. Andi Peng worked at Anthropic on Claude's post-training. Georges Harik was Google's seventh employee and built their first ad systems. Eric Zelikman and Yuchen He came from xAI, where they helped develop Grok. Noah Goodman is a Stanford psychology professor who bridges human behavior and AI. These aren't fresh graduates betting on a hunch. These are people who helped build the foundations everyone else is now standing on.

What they're trying to do is ambitious: rethink how we train AI at scale, how AI remembers conversations, how it understands what you actually want (not just what you typed). Most AI companies focus on making models bigger or faster. Humans& is focused on making them smarter about understanding humans.

In this article, we're going to dig into what Humans& actually does, why investors threw nearly half a billion dollars at them, and what this means for the future of AI—both the technology and how we use it. Because $480 million doesn't get raised on theory alone.

TL;DR

- Humans& raised $480 million at a $4.48B valuation from Nvidia, Jeff Bezos, Google Ventures, and others

- The team includes veterans from Anthropic, xAI, Google, and Stanford, not startup novices

- Their core thesis: AI should strengthen human connection, not replace human judgment

- They're focusing on multi-agent systems, memory architecture, and user understanding

- The mega-valuation suggests investors believe the space they're entering is massive

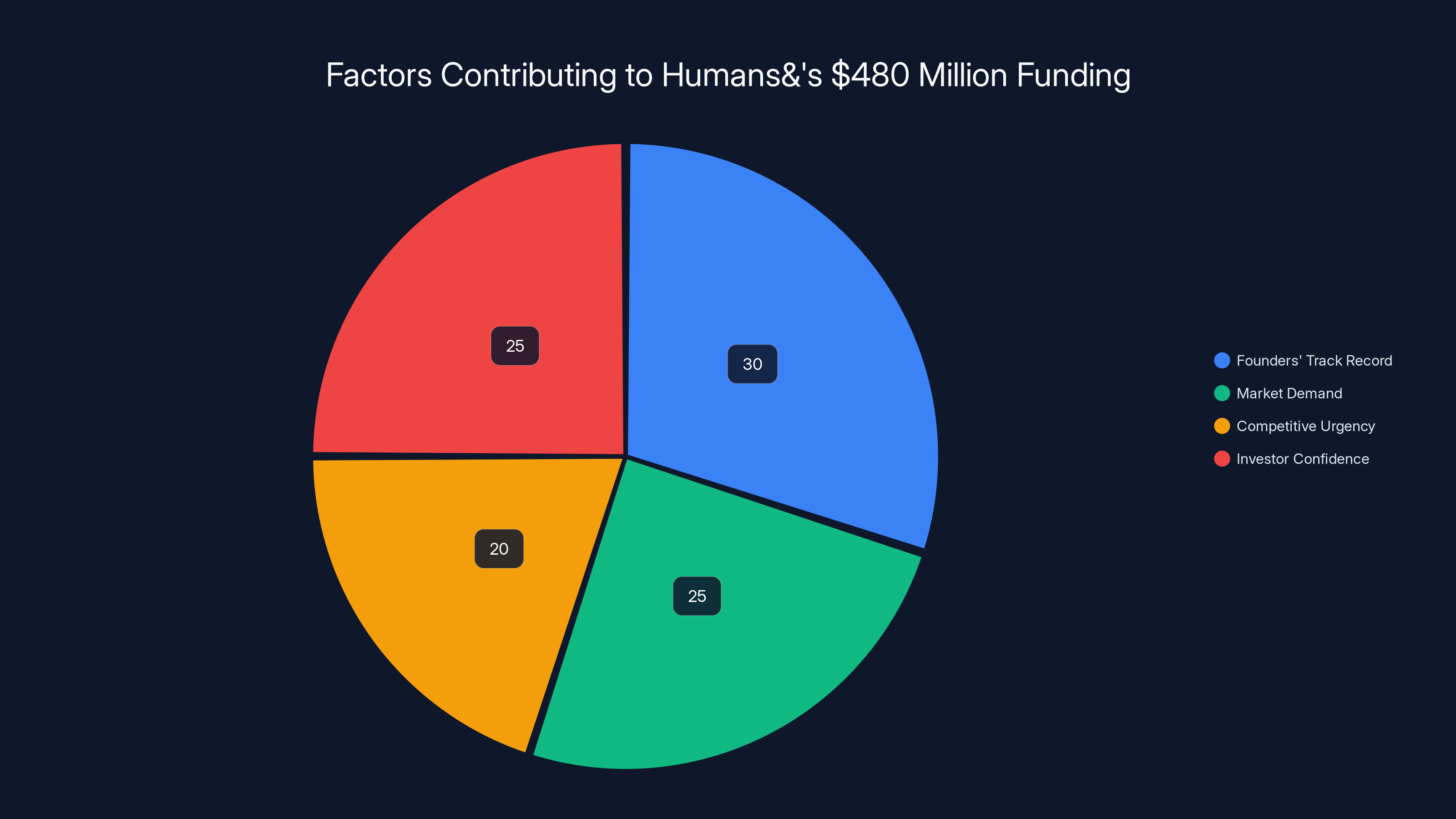

The $480 million funding for Humans& was influenced by the founders' track record (30%), market demand for AI collaboration (25%), competitive urgency (20%), and investor confidence (25%). Estimated data.

The Founding Team: Why This Actually Matters

You can't understand Humans& without understanding its founders. This isn't a story about a few ambitious engineers with a slick pitch deck. This is about people who shaped the current AI landscape deciding that everyone's building it wrong.

Andi Peng worked on reinforcement learning and post-training for Claude at Anthropic. That means she helped shape how Claude actually behaves with the people using it. If you've used Claude and thought "this model seems to understand nuance better than ChatGPT," that's partly Peng's work. She spent years thinking about alignment: how to make sure AI systems do what humans actually want them to do, not what they're technically optimized for.

Georges Harik is the rare founder who's been building AI infrastructure for longer than most people have been interested in AI. He was Google's seventh employee. Back when Google had seven employees, nobody thought search was going to be a trillion-dollar problem. Harik helped build Google's advertising system, the machine learning pipeline that turned search into a revenue machine. That's someone who understands what it takes to scale.

Eric Zelikman and Yuchen He came from xAI, where they worked on Grok development. Grok is Elon Musk's answer to ChatGPT, built from scratch in months. If you've used Grok, you've interacted with their work. These aren't people who studied AI in textbooks. They've built the systems.

Noah Goodman brings something different to the table: he's a Stanford professor who studies the intersection of psychology and AI. He's published research on how humans understand language, how we infer intent, how we build mental models of other people. In AI, that kind of research is usually siloed in academia. Here, it's part of the product strategy.

The rest of the team includes 20-plus people pulled from OpenAI, Meta, Reflection AI, AI2, and MIT. You don't assemble that roster without serious credibility and serious money.

Why does this matter? Because funding follows pattern recognition. When Nvidia and Google Ventures write checks, they're not betting on personality. They're betting on a team's ability to execute at the highest level. These aren't newcomers: they've all helped build companies now valued in the billions. The question is: what made them leave those positions to bet on something new?

Humans& reached a $4.48 billion valuation in just 3 months, significantly faster than OpenAI and Anthropic, highlighting rapid investor confidence in their potential.

What Humans& Is Actually Building

The most important thing to understand about Humans& is what they're not building. They're not building another language model to compete with GPT-4 or Claude. They're not building a chatbot. They're not trying to replace humans in any job.

What they're building is the infrastructure for humans and AI to collaborate better. Think about how you use Slack. It's not the most sophisticated messaging app ever built, but it works because it's designed around how teams actually communicate. It has memory, context, the ability to thread conversations, integrations with tools people already use.

Humans& is trying to do that for human-AI collaboration. Imagine an AI tool that doesn't just answer your question once and move on. Imagine one that learns what matters to you, remembers decisions you've made, can work with other AIs and humans simultaneously on the same project.

Their technical approach focuses on three main areas:

Multi-agent reinforcement learning. Most AI systems work alone. They take your input, generate an output. Done. But humans work in teams. Sometimes you need five people working on something simultaneously, each with different expertise. Humans& is building AI that works in those same teams. That's harder because you can't just train one model. You need models that can understand what other models are doing, request help when needed, delegate tasks, verify each other's work.

Memory and context management. Current AI systems have this weird relationship with memory. They can see the conversation from the start of your current session, but they don't learn across sessions. You tell ChatGPT something about your business on Tuesday, and it forgets by Friday. That's fine for a casual chatbot. It's useless for a tool that's supposed to help you actually get work done. Humans& is building systems that maintain long-term, persistent memory of what matters to you and your team.
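To make the difference between session memory and persistent memory concrete, here is a minimal Python sketch. It assumes a simple file-backed key-value store. `PersistentMemory` and its methods are hypothetical names for illustration, not Humans&'s design. A production system would add encryption, access control, and retrieval far smarter than exact key lookup.

```python
import json
import tempfile
from pathlib import Path
from typing import Optional


class PersistentMemory:
    """Hypothetical long-term memory that outlives a single session."""

    def __init__(self, path: Path) -> None:
        self.path = path
        # Load whatever earlier sessions saved; start empty on first use.
        self.facts: dict[str, str] = (
            json.loads(path.read_text()) if path.exists() else {}
        )

    def remember(self, key: str, value: str) -> None:
        self.facts[key] = value
        # Write through immediately so the fact survives the session ending.
        self.path.write_text(json.dumps(self.facts))

    def recall(self, key: str) -> Optional[str]:
        return self.facts.get(key)


store = Path(tempfile.mkdtemp()) / "team_memory.json"

# Tuesday's session: the user mentions something about the business.
tuesday = PersistentMemory(store)
tuesday.remember("fiscal_year_start", "April")

# Friday's session is a brand-new object, but it reloads Tuesday's fact.
friday = PersistentMemory(store)
print(friday.recall("fiscal_year_start"))  # April
```

The key design choice is writing through on every `remember` call. Nothing depends on the session closing cleanly.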

User understanding. Here's where the Stanford psychology professor comes in. Current AI is trained to match patterns in text. It's shockingly good at it, but that's still what's happening under the hood. Understanding users means understanding that when someone says "this should be faster," they might mean computationally faster, or they might mean it takes too many steps, or they might mean the feedback loop is too long. Those are three completely different problems with three different solutions. Humans& is trying to build systems that understand context, intent, and the actual human need beneath the words.

All of this builds toward one goal: AI that strengthens organizations and communities rather than replacing them. The company's own description is worth noting: they want to use AI as "a deeper connective tissue that strengthens organizations and communities." That's not the language of replacement. That's the language of infrastructure.

The Human-Centric AI Philosophy

There's a reason "human-centric" is in every description of Humans&. It's not marketing fluff. It's a genuine philosophy that shows up in how they approach problems.

Most AI companies are built on a specific assumption: more capability is always better. Build a bigger model, train it longer, make it smarter. Humans& starts from a different question: what would actually help humans do better work together?

Those are sometimes the same thing. But often they're completely different. A system that's 10% worse at language understanding but 10x better at remembering what your team is working on might be a bigger win. A system that refuses to make decisions for humans and instead asks clarifying questions might be less "capable" but more trustworthy.

This philosophy shows up in product decisions. They're building tools for collaboration, not tools that replace human judgment. They're focused on tools that sit between humans and enable better communication, not tools that replace humans in conversations.

Consider the difference: current AI assistants are often built as a replacement for a junior employee. "Ask the AI to draft this email." "Have the AI analyze this spreadsheet." "Let the AI make this decision." The human-centric approach is different. It's built around "use the AI to make better decisions with your team." Those are fundamentally different products.

The funding validates this philosophy. You don't get Nvidia, Google, and Jeff Bezos interested in replacement tech. But you do get them interested in infrastructure that makes collaboration better. Google was built on the idea that better search infrastructure creates more value. The investors backing Humans& seem to believe the same thing applies to human-AI collaboration.

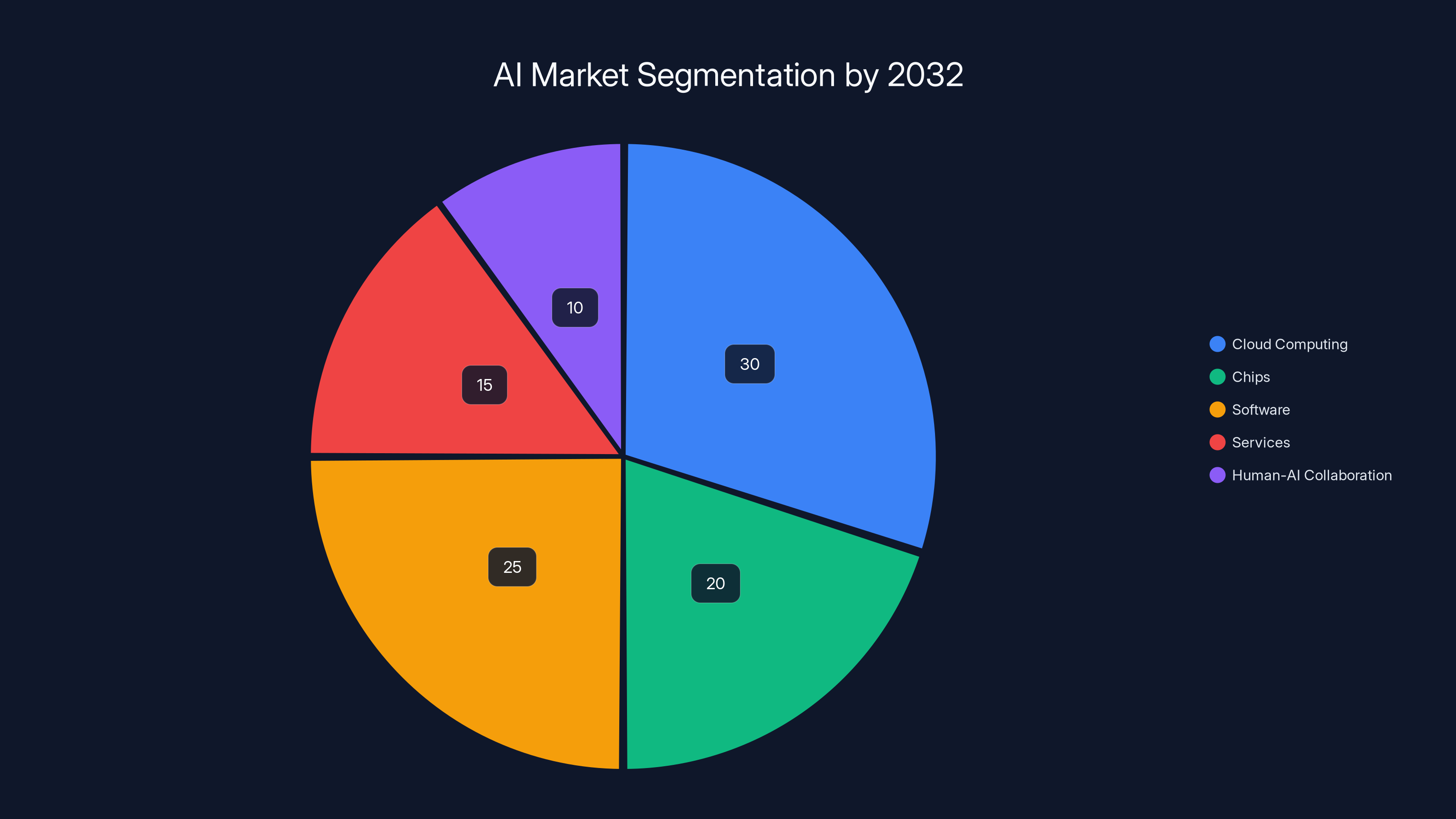

Human-AI collaboration is projected to be a significant segment of the AI market by 2032, potentially capturing 10% of the $1.3 trillion market. Estimated data.

Why $480 Million for a Three-Month-Old Company?

Let's talk about the elephant in the room.

For context: OpenAI took about five years to hit a

First, the timing. This isn't 2020. We're not in the early hype phase of AI anymore. We're past the point where "we're building AI" is enough justification for funding. By 2025, the market has started separating genuine breakthroughs from hype. When a mega-round happens this fast, it usually means investors see something concrete, not just potential.

Second, the team. If you've been following AI closely, you know that talent is the actual constraint. You can raise money, you can rent compute, you can buy data. You can't easily recruit people who've already solved billion-dollar technical problems. The fact that Humans& assembled that team tells investors something: these people believe in this vision enough to bet the next stage of their careers on it. That's harder to fake than a good pitch deck.

Third, the problem they're solving is genuinely large. The current AI market is dominated by model training and inference. But if human-AI collaboration really is the next frontier, that's a different market entirely. It's not about selling API calls. It's about becoming the infrastructure layer that every organization uses to work with AI. That's potentially a trillion-dollar opportunity, not a billion-dollar one.

Fourth, the investor group is telling. Nvidia doesn't invest in startups at random. They invest when they see a pathway to selling more chips. Google Ventures doesn't invest out of fear of a competitive threat. They invest when they see a future so big that even sharing the market with competitors is worth it. Jeff Bezos has a long history of backing infrastructure: platforms that other businesses build on top of.

So $480 million isn't just "a lot of money." It's a market signal. It's investors saying: we believe human-AI collaboration is the next trillion-dollar problem, we believe this team can own part of it, and we believe the time to build is now.

The Competitive Landscape: Who Else Is Doing This?

Humans& isn't building in a vacuum. There are other companies working on human-AI collaboration, multi-agent systems, and better memory architecture. Understanding the competition helps explain why the funding was so large.

Anthropic is building the best general-purpose AI models, but they're not focusing on the application layer. They're building the engine, not the car. That leaves room for others to build experiences on top.

OpenAI has GPTs and agents, but they're focused on the model. Their applications are mostly built by third parties. There's no unified OpenAI experience for team collaboration. That's a gap.

Google is building AI into their existing products: Gmail, Docs, Sheets. But their approach is to enhance existing tools, not to build new collaboration primitives. Different strategy entirely.

Meta is investing in AI, but they're primarily focused on consumer products and content creation. Not enterprise collaboration.

What Humans& is doing is building the middle layer. Not the model layer, not the application layer, but the layer that connects humans to AI and humans to each other through AI. That's genuinely different from what the major players are focused on. It's also genuinely hard to do well.

The risk is obvious: any of the big players could decide to build this themselves. Google could integrate multi-agent collaboration into Workspace. Microsoft could add it to Teams. Anthropic could release a suite of collaboration tools. When you're building at this level, you're always a few quarters away from a large competitor noticing what you're doing and allocating resources to it.

But that risk exists in exchange for a massive opportunity. If you're first to a truly great human-AI collaboration platform, and you execute well, the switching costs are enormous. Teams don't casually switch collaboration infrastructure.

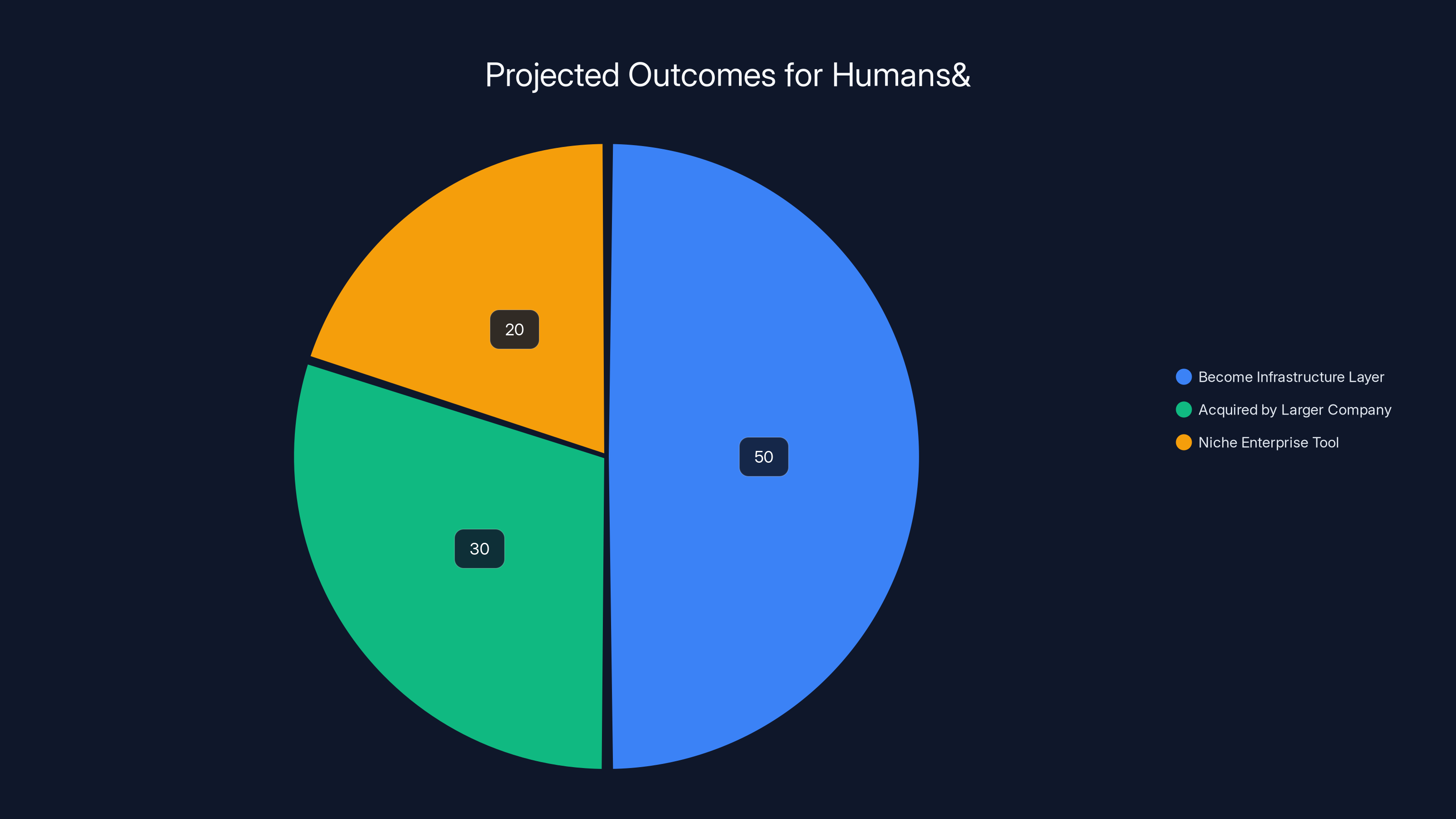

Estimated data suggests a 50% chance of Humans& becoming a fundamental infrastructure layer, similar to AWS or Stripe, with a 30% chance of being acquired by a larger company, and a 20% chance of becoming a niche enterprise tool.

Multi-Agent Systems: The Technical Foundation

Underneath all of Humans&'s philosophy is a specific technical challenge: how do you build AI systems that work together, not just individually?

Traditional AI is relatively simple. You train a model, you deploy it. Users interact with it directly. One human, one AI, conversation over.

Multi-agent systems are messier. Imagine you need to write a report. You need someone to research the market, someone to analyze finances, someone to write the narrative, someone to edit. In a human team, that's four people with different skills collaborating. In a multi-agent system, it's multiple AI instances that need to:

- Understand what the overall goal is

- Break it into subtasks

- Work on those subtasks in parallel or sequence

- Check each other's work

- Integrate the results

- Ask for human input when they're stuck
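The six steps above can be sketched as a simple sequential coordinator in Python. Everything here is hypothetical: the hard-coded `plan`, the lambda "agents", and the `check` and `ask_human` hooks stand in for model calls and a real review process. The point is the control flow. In particular, a failed check escalates to a human instead of letting a bad result flow downstream.

```python
from typing import Callable

Agent = Callable[[str], str]          # takes a subtask, returns a draft
Checker = Callable[[str, str], bool]  # (subtask, draft) -> acceptable?


def plan(goal: str) -> list[tuple[str, str]]:
    """Break the goal into (role, subtask) pairs. Hard-coded for this sketch."""
    return [
        ("researcher", f"research the market for {goal}"),
        ("analyst", f"analyze finances for {goal}"),
        ("writer", f"write the narrative for {goal}"),
        ("editor", f"edit the {goal} draft"),
    ]


def run_team(goal: str, agents: dict[str, Agent], check: Checker,
             ask_human: Callable[[str], str]) -> list[str]:
    outputs = []
    for role, subtask in plan(goal):       # sequential here; could run in parallel
        draft = agents[role](subtask)
        if not check(subtask, draft):      # a reviewer checks each result
            draft = ask_human(subtask)     # stuck: escalate instead of guessing
        outputs.append(draft)
    return outputs                         # integration: here, just collect in order


agents = {
    "researcher": lambda t: f"notes: {t}",
    "analyst": lambda t: "",               # simulate an agent that comes back empty
    "writer": lambda t: f"prose: {t}",
    "editor": lambda t: f"edited: {t}",
}
report = run_team(
    "Q3 report",
    agents,
    check=lambda task, draft: bool(draft.strip()),
    ask_human=lambda task: f"human input: {task}",
)
print("\n".join(report))
```

Running the subtasks in parallel, for example with `concurrent.futures`, would be a small change. The genuinely hard parts, such as agents that decide for themselves when to delegate or how to merge conflicting drafts, are exactly what this sketch leaves out.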

That isn't marginally harder than training a single large model; it's a different kind of problem. You need to solve coordination problems. You need to handle failure gracefully. You need to make sure one agent's mistake doesn't cascade and break everything.

The reinforcement learning part is crucial. Traditional supervised learning means you have labeled training data. "Here's a conversation. Here's the right answer." But with multi-agent systems, you don't have labeled data for how agents should coordinate. You have to give them rewards (or penalties) based on outcomes and let them learn what works.

That's not new technology. Reinforcement learning has been around for decades. But applying it at scale to AI agents working on complex tasks? That's genuinely difficult. It's also genuinely valuable. A system that can learn how to coordinate with other systems and humans is worth something real.
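Here is close to the smallest possible version of learning coordination from outcome rewards. It uses two independent learners in a cooperative two-action game, a textbook setup rather than anything Humans& has described. Neither agent sees labels or the other agent's choice; each sees only the shared reward. The payoff values, the learning rate, and the `IndependentLearner` class are illustrative assumptions.

```python
# Shared reward for a two-agent cooperative game: matching on action 1 is best,
# matching on action 0 is an inferior fallback, and mismatching earns nothing.
PAYOFF = {(0, 0): 0.5, (1, 1): 1.0, (0, 1): 0.0, (1, 0): 0.0}


class IndependentLearner:
    """Stateless Q-learner: it only sees its own action and the team reward."""

    def __init__(self, n_actions: int = 2, alpha: float = 0.1, init: float = 1.0):
        # Optimistic initial values (at or above any real reward) push each
        # agent to try every action before settling: a simple exploration trick.
        self.q = [init] * n_actions
        self.alpha = alpha

    def act(self) -> int:
        # Greedy choice; max() returns the first maximum, so ties go to action 0.
        return max(range(len(self.q)), key=lambda a: self.q[a])

    def update(self, action: int, reward: float) -> None:
        # Move the estimate for the chosen action toward the observed reward.
        self.q[action] += self.alpha * (reward - self.q[action])


a, b = IndependentLearner(), IndependentLearner()
for episode in range(200):
    joint = (a.act(), b.act())
    reward = PAYOFF[joint]  # no labeled "right answer", only an outcome signal
    a.update(joint[0], reward)
    b.update(joint[1], reward)

print((a.act(), b.act()))  # (1, 1)
```

The initialization matters. Start the estimates at `0.0` instead, and the same pair locks onto the worse `(0, 0)` convention forever. That is a compact illustration of why coordination is hard: agents can settle on something that works without ever discovering something better. Real systems add state, partial information, and agents that don't behave identically, which makes this far harder.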

The other technical piece is architecture. How do you structure a system so that multiple agents can work together efficiently? Do they all run simultaneously? Do they pass control to each other? How do you prevent contradictory instructions? How do you make sure the human is always in the loop when decisions matter?
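One common pattern for two of those questions, contradictory instructions and keeping humans in the loop, is a shared "blackboard" that every agent writes through. The sketch below assumes that design. `Blackboard`, `propose`, and the approval hook are hypothetical names, and a real system would need richer conflict resolution than "stop and escalate."

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Blackboard:
    """Hypothetical shared workspace that all agents write decisions through."""

    approve: Callable[[str, str], bool]  # human sign-off hook: (key, value) -> ok?
    high_stakes: set[str] = field(default_factory=set)
    decisions: dict[str, tuple[str, str]] = field(default_factory=dict)  # key -> (agent, value)
    escalations: list[str] = field(default_factory=list)

    def propose(self, agent: str, key: str, value: str) -> bool:
        current = self.decisions.get(key)
        if current is not None and current[1] != value:
            # Two agents disagree: flag it rather than let the later write win.
            owner, existing = current
            self.escalations.append(
                f"conflict on {key}: {owner}={existing!r} vs {agent}={value!r}"
            )
            return False
        if key in self.high_stakes and not self.approve(key, value):
            # Decisions that matter need a human yes before they take effect.
            self.escalations.append(f"human rejected {key}={value!r}")
            return False
        self.decisions[key] = (agent, value)
        return True


board = Blackboard(
    approve=lambda key, value: value == "$1.0M",  # stand-in for a real review step
    high_stakes={"budget"},
)
print(board.propose("planner", "deadline", "June 30"))   # True
print(board.propose("scheduler", "deadline", "May 15"))  # False: conflict
print(board.propose("finance", "budget", "$2.5M"))       # False: human said no
print(board.escalations)
```

Routing every write through one place means conflicts become visible instead of silently resolved by whichever agent wrote last.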

These aren't problems with obvious solutions. They're the kinds of problems that require deep technical expertise and iterative refinement. They're exactly the kinds of problems a team of Anthropic, xAI, and Google alumni should be well suited to solve.

Memory Architecture: Why It Matters More Than You Think

Current large language models have a critical limitation: they don't really learn or remember. If you use ChatGPT daily for six months, it doesn't improve at understanding your preferences. Every conversation starts from scratch.

For casual use, that's fine. If you're asking a chatbot for cooking tips, you don't need it to remember your dietary preferences. But if you're using AI as a business tool, that becomes a major limitation. You want the system to learn what's worked for your team, what hasn't, what your actual preferences are.

Memory architecture is how you solve that problem. But it's not just about storing conversations. It's about storing the right information in the right way so the AI can actually use it effectively.

Let's say you work with an AI on quarterly planning. You tell it: "We failed last time because we underestimated how long the implementation would take. This time, add 50% to all timeline estimates." That's information the AI should remember. But it shouldn't remember it as a general principle. It should remember it as specific to this type of project, or this team, or these specific tasks. If you give the AI advice about timeline estimation, that shouldn't change how it estimates budgets.
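Here is a minimal sketch of the scoping idea. It assumes each remembered lesson is stored with explicit tags for team, project type, and task. The `Lesson` schema and the method names are hypothetical. The genuinely hard part the paragraph describes, deciding automatically what generalizes, is sidestepped here by requiring the tags up front.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Lesson:
    """A remembered instruction, tagged with where it applies (hypothetical schema)."""

    note: str
    team: str
    project_type: str
    task: str  # e.g. "timeline" or "budget"
    multiplier: float = 1.0


class ScopedMemory:
    def __init__(self) -> None:
        self.lessons: list[Lesson] = []

    def remember(self, lesson: Lesson) -> None:
        self.lessons.append(lesson)

    def recall(self, team: str, project_type: str, task: str) -> list[Lesson]:
        # A lesson applies only where all three scope tags match.
        return [
            m for m in self.lessons
            if (m.team, m.project_type, m.task) == (team, project_type, task)
        ]

    def adjusted_estimate(self, base: float, team: str, project_type: str, task: str) -> float:
        factor = 1.0
        for lesson in self.recall(team, project_type, task):
            factor *= lesson.multiplier
        return base * factor


memory = ScopedMemory()
memory.remember(Lesson(
    note="Last time we underestimated implementation; add 50% to timelines.",
    team="platform", project_type="quarterly_planning", task="timeline",
    multiplier=1.5,
))

print(memory.adjusted_estimate(8, "platform", "quarterly_planning", "timeline"))      # 12.0
print(memory.adjusted_estimate(100_000, "platform", "quarterly_planning", "budget"))  # 100000.0
print(memory.adjusted_estimate(8, "growth", "quarterly_planning", "timeline"))        # 8.0
```

The timeline lesson pads the timeline estimate but leaves the budget estimate and the other team's work untouched, which is exactly the separation the example calls for.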

Building systems that can do that kind of nuanced memory is hard. It requires understanding context, understanding what's generalizable and what's specific, understanding how different pieces of information relate to each other.

Humans& is building memory systems that can handle that complexity. That's valuable because it means AI tools that actually improve over time instead of resetting constantly. It's also valuable because it means less work for humans. You don't have to re-explain your preferences every time. The system learns.

There's also a privacy angle. If AI systems have memory but that memory is stored externally by a third party, that's a data security issue. Humans& is building with the assumption that memory should be stored securely, accessible only to the organization that owns it. That's both more secure and more compliant with regulations like GDPR or CCPA.
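As a sketch of what "accessible only to the organization that owns it" can mean at the data-structure level, here is a simple per-tenant partition. The class and org names are hypothetical. Real deployments would also need authentication, encryption at rest, and audit logs. Partitioning also turns deletion requests, of the kind GDPR and CCPA provide for, into a one-line operation.

```python
from typing import Optional


class OrgMemoryVault:
    """Hypothetical memory store partitioned by organization.

    Callers can only name one org per call, so a lookup never reaches into
    another tenant's partition. In practice org_id must come from an
    authenticated session, never from user-supplied input.
    """

    def __init__(self) -> None:
        self._stores: dict[str, dict[str, str]] = {}

    def write(self, org_id: str, key: str, value: str) -> None:
        self._stores.setdefault(org_id, {})[key] = value

    def read(self, org_id: str, key: str) -> Optional[str]:
        # Only the caller's own partition is consulted.
        return self._stores.get(org_id, {}).get(key)

    def erase_org(self, org_id: str) -> None:
        # Drop everything for one org, e.g. to honor a deletion request.
        self._stores.pop(org_id, None)


vault = OrgMemoryVault()
vault.write("acme", "pricing_floor", "$40/seat")
print(vault.read("acme", "pricing_floor"))    # $40/seat
print(vault.read("globex", "pricing_floor"))  # None: different tenant
vault.erase_org("acme")
print(vault.read("acme", "pricing_floor"))    # None: erased
```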

Multi-agent systems face significantly higher complexity across various aspects compared to single-agent systems, particularly in task coordination and error handling. (Estimated data)

User Understanding: Psychology Meets AI

Noah Goodman's role on the team is telling. Most AI teams are built around computer scientists and engineers. Goodman is a psychology researcher. He studies how humans understand language, how we make inferences, how we model other people's beliefs and intentions.

That might seem like an academic addition. It's actually strategic. Most AI systems are trained on text data and optimized to predict the next token. They're fantastically good at pattern matching. But pattern matching and understanding are different things.

Consider a simple example. Someone says: "This project is running late." What does that mean? It could mean:

- It's behind the original schedule

- It's behind expectations from earlier today

- It's behind industry standards

- It's behind a competitor's similar project

- The deadline was moved up and now it's not going to make it

- The schedule is fine but the scope increased

- It's not technically late but the person is anxious

Any decent language model can generate a response about late projects. But understanding which of those seven interpretations is actually relevant requires understanding the human context. What does this person care about? What's their role? What have they said before about deadlines? What's their emotional state?
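The structure of that reasoning can be sketched in a few lines of Python: enumerate the plausible readings, weigh them against what the person has said before, and ask when the evidence doesn't pick one. Keyword overlap is a deliberately crude stand-in for the models of intent the paragraph describes, assumed here for illustration. The cue lists and the `interpret` function are hypothetical.

```python
import re

# Hypothetical cue words for a few of the readings listed above.
INTERPRETATIONS: dict[str, set[str]] = {
    "behind the original schedule": {"schedule", "milestone", "plan"},
    "deadline moved up": {"deadline", "moved", "earlier"},
    "scope grew": {"scope", "features", "requirements"},
    "feels late but is on track": {"worried", "nervous", "stress"},
}


def interpret(utterance: str, history: list[str]) -> str:
    """Pick the reading best supported by context, or ask if it's ambiguous."""
    words = set(re.findall(r"[a-z]+", " ".join(history + [utterance]).lower()))
    scores = {label: len(words & cues) for label, cues in INTERPRETATIONS.items()}
    ranked = sorted(scores, key=scores.get, reverse=True)  # stable: ties keep order
    best, runner_up = ranked[0], ranked[1]
    if scores[best] == scores[runner_up]:
        # No clear winner: a clarifying question beats a confident guess.
        tied = [label for label in ranked if scores[label] == scores[best]]
        return "Which do you mean: " + " / ".join(tied) + "?"
    return best


print(interpret("This project is running late.",
                ["The client added two new features to the requirements."]))
# scope grew
print(interpret("This project is running late.", []))
# Which do you mean: behind the original schedule / deadline moved up / scope grew / feels late but is on track?
```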

Building AI that understands that requires psychology. It requires studying how humans actually communicate, where miscommunication happens, how people infer intent from incomplete information. That's Goodman's expertise.

Why does it matter? Because the gap between "can follow instructions" and "understands what you actually need" is where real value lives. A system that literally does what you ask might still fail to do what you actually wanted. Building AI that closes that gap is what separates tools people love from tools people tolerate.

The Funding: Who's Investing and Why

Let's break down the actual investor group. It's not a random collection of venture capitalists. There's a pattern.

Nvidia is the obvious play. If AI is going to be central to the future, Nvidia sells chips to everyone building AI. Any successful AI startup eventually becomes a customer. But Nvidia doesn't invest just to create customers. They invest in foundational infrastructure that will create larger markets. Their early investment in deep learning startups paid off massively as AI became central to every industry. They're betting Humans& is part of that next infrastructure wave.

Jeff Bezos is more interesting. He's not known for throwing money at hype. His venture arm focuses on founders and problems, not trends. His investment signals that he believes human-AI collaboration is a real problem with real solutions, not just another AI chatbot. AWS could theoretically be a distribution channel. But more likely, Bezos sees the founding team as world-class and believes in the problem they're solving.

Google Ventures is Google's corporate venture arm. They invest in startups that could be strategically relevant. Google itself has backed Anthropic, arguably its biggest competitor now. So they don't avoid threats. They invest in what they think is the future. If they're backing Humans&, it's because they believe human-AI collaboration is big enough that even diluting their own focus is worth the upside. That's a strong signal.

Emerson Collective is Laurene Powell Jobs's investment and philanthropy organization. They've backed education, healthcare, and climate efforts. They don't invest in random startups. They invest in founders and missions they believe in. Their participation suggests they believe Humans& is solving something important for how humans and organizations work.

SV Angel is the only traditional VC in the group, but they're not random either. They backed PayPal, Airbnb, Square, and Stripe. They back teams that are solving hard problems and executing at the highest level.

So the investor group is basically saying: this team, this problem, this moment. That's worth half a billion dollars at entry.

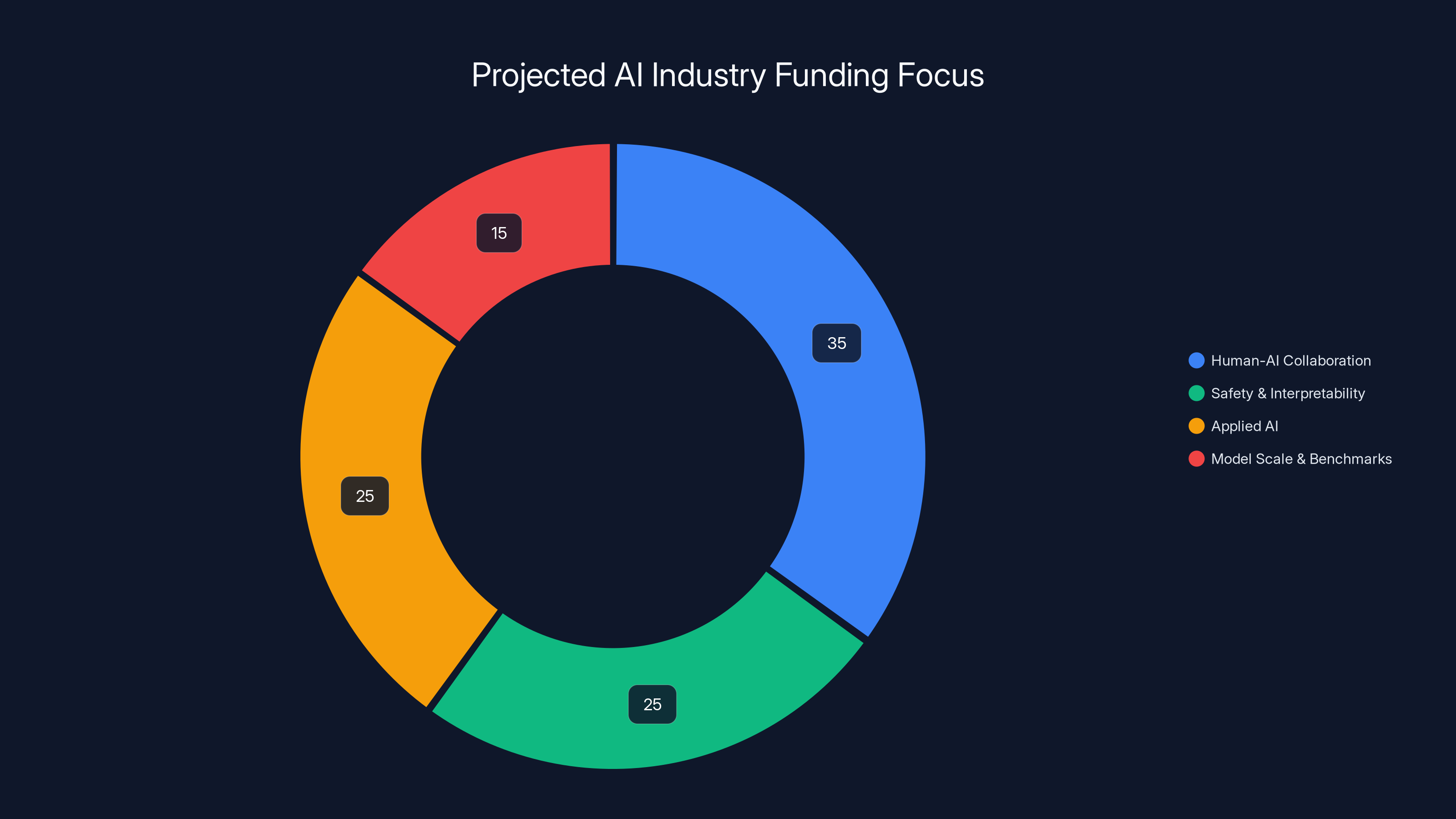

Estimated data shows a shift in AI industry funding towards human-AI collaboration and applied AI, with less emphasis on model scale and benchmarks.

The Market Opportunity: How Big Is This Actually?

For a $480 million seed round to make sense, the market opportunity has to be enormous. So how big is human-AI collaboration actually?

Let's start with a baseline. The total AI market is forecast to be around $1.3 trillion by 2032, depending on which analyst you ask. That includes everything: cloud computing for AI, chips, software, services. So human-AI collaboration is potentially a subset of an already massive market.

But the question is about SAM, or serviceable addressable market: what's the specific piece they can realistically serve? They're targeting organizations and teams that use AI. That's not just tech companies anymore. By 2025, most medium and large companies use AI in some form, and a large share of knowledge workers use AI tools. The potential customer base is effectively global.

With that kind of TAM (total addressable market), a multi-billion-dollar valuation becomes reasonable. If you can capture even 1-2% of the enterprise software market by owning the collaboration layer, you're looking at a $10-20 billion company.
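Here is a quick back-of-the-envelope check on those numbers. It assumes the 1-2% is a share s of annual enterprise software spending M, and that the $10-20 billion is the annual revenue R that share represents. The article does not state the market size it has in mind.

$$
R = s \cdot M, \qquad s \in [0.01,\ 0.02], \quad R \in [\$10\text{B},\ \$20\text{B}] \;\Longrightarrow\; M = \frac{R}{s} \approx \$1\text{ trillion per year}
$$

So the estimate implicitly assumes an enterprise software market of roughly $1 trillion a year. And because R is revenue, a company valuation would apply some multiple on top of it.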

But here's why the funding was so large, so early: getting there is an arms race. If Humans& has a working prototype, a great team, and the right investors, they can hire the best people, build the best product, and establish themselves as the standard. But they need capital to do it fast enough before competitors emerge.

In a normal market, you'd grow gradually, raise money as you grew, achieve profitability. In the AI space, where switching costs are real but not infinite, speed matters. First to a really great human-AI collaboration platform can lock in enterprise customers and lock out competitors.

The Broader Trend: Why Breakaway Teams Are Hot

Humans& is part of a pattern. The last few years have seen an explosion of breakaway teams from major AI labs. Anthropic's founders left OpenAI. Mistral's founders came out of Meta and Google DeepMind. xAI came out of nowhere but included people from Tesla and OpenAI. Humans& is the latest example.

Why is this happening? A few reasons.

First, the original teams got massive. OpenAI, Google Brain, Meta AI Research all grew to hundreds or thousands of people. There's only so much decision-making authority to go around. Ambitious researchers and engineers hit ceilings.

Second, the original labs are focused on different problems. OpenAI is focused on building the most capable AI possible. Google is focused on integrating AI into existing products. Meta is focused on open-source AI and consumer applications. If you have a vision for something different, you probably need to build it yourself.

Third, the capital is available. Investors have learned that the best AI teams can build faster than incumbents. Every successful breakaway team creates a template for the next one. The investors keep betting because the template works.

Fourth, equity and decision-making matter. At a large company, even if you're a brilliant researcher, you own a tiny percentage of the upside. In a startup, you own a meaningful percentage. For people who believe in the problem and have the skills to solve it, that's a powerful incentive.

Humans& is well-positioned in that landscape. They have the credibility of former employees from multiple successful labs. They have a vision that's differentiated from what the original labs are doing. They have investors who understand the space and can help them navigate it.

The risk is obvious: this trend can reverse. If AI labs start retaining their best people with significant equity and decision-making authority, the incentive to leave declines. If AI startups start failing to deliver, investor enthusiasm declines. We're probably past the point of unlimited venture capital for AI, so execution becomes crucial.

What Humans& Actually Ships: Current and Near-Term Products

Imagining products is easy. Actually shipping them is hard. So what's Humans& actually building?

The company is still in stealth mode, which means they're not publicly describing their product roadmap. But based on the technical focus areas mentioned, we can infer what they're working on.

At the foundation, they're likely building APIs and frameworks for multi-agent coordination. This wouldn't be a consumer product. It would be developer infrastructure. Think: how Anthropic gives you access to Claude via API. Humans& would give you access to multi-agent systems that can work together.

On top of that foundation, they're probably building specific applications. The mention of "AI version of instant messaging" suggests collaboration tools. Maybe something like Slack, but with actual AI agents participating as team members. Instead of just tools that help humans communicate, it's an environment where humans and AI can work together.
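To picture what "AI agents participating as team members" might mean mechanically, here is a toy Python channel. It assumes agents are members that read the shared history and reply only when @-mentioned. `Channel` and the `scheduler` agent are hypothetical. This illustrates the idea; it does not describe any Humans& product.

```python
from dataclasses import dataclass, field
from typing import Callable

# An agent sees the triggering message plus the channel history and returns a reply.
AgentFn = Callable[[str, list[str]], str]


@dataclass
class Channel:
    """Toy team channel where AI agents sit alongside human members."""

    agents: dict[str, AgentFn] = field(default_factory=dict)
    history: list[str] = field(default_factory=list)

    def post(self, author: str, text: str) -> None:
        self.history.append(f"{author}: {text}")
        for name, agent in self.agents.items():
            if f"@{name}" in text and author != name:
                # Agents get a copy of the shared history as context.
                reply = agent(text, list(self.history))
                # Appended directly, so an agent reply never re-triggers agents.
                self.history.append(f"{name}: {reply}")


channel = Channel(agents={
    "scheduler": lambda text, hist: f"read {len(hist)} messages; proposing Thursday 10:00",
})
channel.post("dana", "Kickoff notes are in the doc.")
channel.post("li", "@scheduler can you find a slot for the design review?")
print("\n".join(channel.history))
```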

The memory architecture they're building is probably a standalone service that can be integrated into those applications: persistent, contextual memory that individual agents and humans can access and that the system can use to improve over time.

They're also probably building analytics and understanding tools. If you want to know how well your team is collaborating with AI, what's working and what's not, you need visibility. That might be dashboards, might be AI-generated insights, probably both.

None of this is shocking. It's the logical extension of where AI is heading: from tools that augment individual work to platforms that enable team collaboration. But that's the space that matters most. The tools that individual engineers build are interesting. The platforms that teams use to collaborate are valuable.

The Challenges Ahead: It's Not All Tailwinds

Humans& faces real headwinds despite the massive funding and strong team.

First, there's the incumbent problem. Microsoft (with Teams), Google (with Workspace), and Amazon (with AWS) all have distribution advantages, existing customer relationships, and massive engineering resources. If they decide human-AI collaboration is important, they can outspend any startup. Humans& has to move faster and stay differentiated.

Second, there's the model problem. Humans& is likely to build at least partly on top of existing models from OpenAI, Anthropic, or other providers. They don't control that layer. If the model APIs change, pricing changes, or a model provider launches its own collaboration tools, that's a problem. The smart play is probably to build multiple model integrations so they're not dependent on any single provider. But that's complex.
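The multi-provider idea is a standard adapter-plus-fallback pattern. Here is a minimal Python sketch of it, assuming each vendor SDK is wrapped behind one small interface. `ModelProvider`, `Router`, and both provider classes are hypothetical stand-ins, and nothing here reflects how Humans& actually integrates models.

```python
from typing import Protocol


class ProviderError(RuntimeError):
    """Raised by a provider wrapper when a call fails (rate limit, outage, ...)."""


class ModelProvider(Protocol):
    name: str

    def complete(self, prompt: str) -> str: ...


class Router:
    """Send each request to the first provider, in priority order, that succeeds."""

    def __init__(self, providers: list[ModelProvider]) -> None:
        self.providers = providers

    def complete(self, prompt: str) -> tuple[str, str]:
        errors = []
        for provider in self.providers:
            try:
                return provider.name, provider.complete(prompt)
            except ProviderError as exc:
                errors.append(f"{provider.name}: {exc}")  # note it, try the next one
        raise ProviderError("all providers failed: " + "; ".join(errors))


# Stand-ins for real vendor SDK wrappers; no actual API is called here.
class RateLimitedProvider:
    name = "provider_a"

    def complete(self, prompt: str) -> str:
        raise ProviderError("rate limited")


class EchoProvider:
    name = "provider_b"

    def complete(self, prompt: str) -> str:
        return f"[{self.name}] {prompt}"


router = Router([RateLimitedProvider(), EchoProvider()])
used, text = router.complete("Summarize the meeting")
print(used, "->", text)  # provider_b -> [provider_b] Summarize the meeting
```

The complexity the paragraph alludes to lives in what this sketch omits. Prompts behave differently across models, context limits differ, and output quality has to stay consistent when a fallback kicks in.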

Third, there's the perception problem. "Human-centric AI" is a nice story, but execution is what matters. If the tools are hard to use, don't actually improve productivity, or feel like solutions in search of problems, funding runs out. There's a graveyard of well-funded AI startups that shipped promising products nobody wanted.

Fourth, there's the regulation problem. If Humans& builds tools that work across organizations, handle sensitive information, and involve AI making recommendations, they're suddenly subject to a massive compliance burden. GDPR, CCPA, industry-specific regulations, corporate governance, liability questions. These aren't things you can hand-wave away. They require legal infrastructure and expertise.

Fifth, there's the definition problem. What does "human-AI collaboration" actually mean? What problems does it solve that existing tools don't? If Humans& can't articulate this clearly and show real customers that it's true, the funding runs dry and the talent walks.

These aren't insurmountable. But they're real. The next 18-24 months will determine whether this is a generational AI company or another well-funded startup that ran out of ideas.

The Broader Implication: What This Means for the AI Industry

Humans& raising $480 million isn't just news about one startup. It's a signal about where the AI industry is heading.

For years, the story was about building bigger, better models. Who can train the largest language model? Who can achieve the best benchmarks? That's still happening, but it's not the frontier anymore. Model architecture is still important, but the base models are increasingly commoditized. You can use GPT-4, Claude, Grok, or Gemini depending on your needs. They're all capable.

The frontier is shifting to what you do with those models. How do you integrate them into real work? How do you make them safe? How do you make them trustworthy? How do you make humans and AI actually collaborate in ways that create value?

That's a different kind of problem. It's not solved with scale. It's solved with understanding human needs, building intuitive interfaces, creating feedback loops, managing risk, handling coordination.

Humans& is positioned in that new frontier. They're not trying to build the best model. They're trying to build the best experience of using models. That's a bigger market and a more defensible one, because the defensibility comes from understanding and design, not pure compute.

Other startups and larger companies will follow this shift. Over the next few years, expect to see more funding go to companies working on human-AI collaboration, safety, interpretability, and applied AI. Expect to see fewer funding rounds go to companies promising bigger models or better benchmarks.

That shift reflects a maturation of the AI market. We're past the phase where capability alone is enough. Now it's about utility, trustworthiness, and fit with how humans actually work.

The Team's Long-Term Vision: Where This Is Going

Humans& hasn't published a 10-year plan, but you can infer their long-term vision from the team and the focus areas.

The endgame probably looks like this: AI isn't something you use. It's something that's integrated into how you work. Your team, your tools, your environment all have AI capabilities built in. Sometimes you notice the AI. Often, you don't. What matters is that you're getting better work done, faster, with better decision-making.

That's different from today. Today, using AI means switching to an AI tool, typing a prompt, waiting for output, then switching back to your work. Tomorrow, the AI is part of the work.

Building that requires solving the problems Humans& is focused on. How do you make AI that understands context? How do you make it reliable enough for teams to depend on? How do you make it learn and improve? How do you keep humans in control of decisions that matter?

Humans& isn't trying to answer all of those questions. They're trying to be infrastructure that other companies and teams build on to answer those questions.

If they succeed, they become something like AWS or Stripe: not a consumer brand, but a fundamental layer that everyone else builds on. That kind of position is worth a great deal.

If they fail, it will likely be because the problems are harder than they thought, the market moves differently than expected, or larger companies execute faster. In that case, they either sell to someone bigger or become a tool that some enterprises use but that never reaches mainstream adoption.

The odds of success are genuinely hard to estimate. The team is world-class. The problem is real. The capital is available. But execution in AI is unpredictable, and the landscape can shift rapidly.

Practical Implications: Who Should Care About Humans&

If you're an individual contributor, the implication is that AI collaboration tools are coming, and they're going to change how you work. Start thinking about what that means for your role. Will AI make your job easier? Will it change what expertise matters?

If you're a manager or leader, the implication is that AI is shifting from individual tool to team infrastructure. You'll need to think about how to integrate AI into team workflows safely, ethically, and effectively. Companies that figure that out first will have an advantage.

If you're a developer or product person, Humans& and companies like them are building the next layer of developer tools. Understanding what they're building and why will be important for your own work.

If you're an investor, this round is a signal about where capital is flowing. If you're not investing in human-AI collaboration or applied AI, you're probably missing a significant trend.

If you're starting a company, the template is clear: solve a real problem that large companies haven't solved, recruit world-class talent, move fast, and use capital efficiently. Humans& raised a huge round, but they need to deliver proportionally.

Looking Ahead: What's Next for Humans&

The next milestone for Humans& is probably coming out of stealth with an actual product. They'll ship something and let customers try it. That's when theory meets reality. A lot of well-funded AI startups have shipped products that nobody wanted to use.

After that, it's about scaling. Hiring engineering talent in AI is competitive. Building a sales organization to reach enterprises is complex. Managing growth while maintaining culture and quality is hard.

Parallel to that, they need to navigate the competitive landscape. What happens when Google or Microsoft decide to build similar capabilities? How does Humans& maintain differentiation? Usually it's through brand, community, and being best-in-class. That requires sustained excellence.

They also need to navigate regulation and trust. If they're storing data, managing AI decision-making, or handling sensitive information, governance matters. Building a compliance function before you need it is smart.

Within two to three years, we'll know whether this was genius or hype. The team is strong enough that it probably won't be the latter, but that doesn't guarantee success. Good teams ship products people don't want all the time.

FAQ

What exactly is Humans& and what problem are they solving?

Humans& is an AI startup building infrastructure for human-AI collaboration. Instead of AI tools that work independently, they're creating systems where AI agents and humans work together seamlessly on complex tasks. The core problem they're solving is that current AI tools are isolated, don't learn from experience, and don't understand human context well enough to be truly collaborative. They're focused on making AI a connective layer that strengthens organizations rather than one that replaces human judgment.

Who founded Humans& and what makes them credible?

The founding team includes Andi Peng (a former Anthropic researcher who worked on reinforcement learning for Claude's post-training), Georges Harik (Google's seventh employee, who built its first ad systems), Eric Zelikman and Yuchen He (former xAI researchers who worked on Grok), and Noah Goodman (a Stanford psychology professor). These are not first-time founders; they're people who've already shaped the AI landscape at major companies and labs. That track record goes a long way toward explaining why investors were willing to back them so heavily.

Why did Humans& raise $480 million so quickly?

The massive funding reflects several factors: the founders' proven track record, the real and growing market for human-AI collaboration, the competitive urgency to move fast before larger companies enter the space, and investor conviction that this team can build infrastructure-level products. In AI, raising capital often comes down to three things: a strong team, a real problem, and a massive market. Humans& checks all three boxes, which explains why Nvidia, Google, Jeff Bezos, and other sophisticated investors participated.

What are the key technical challenges Humans& is working on?

They're focused on three main areas: multi-agent reinforcement learning (how to make AI systems coordinate with each other), memory architecture (how to give AI systems persistent, contextual memory across sessions), and user understanding (how to make AI comprehend actual human intent, not just match text patterns). These are genuinely difficult problems that require deep expertise, which is why the team appears to have been assembled specifically around those capabilities.

How is Humans& different from OpenAI, Anthropic, or other AI companies?

OpenAI and Anthropic are focused on building the best possible language models. Google and Microsoft are focused on integrating AI into existing products. Humans& is specifically focused on the application layer for human-AI collaboration. They're not trying to compete on model capability; they're trying to own how AI integrates into the way people actually work together. That's a fundamentally different business.

What's the realistic timeline for Humans& to ship products?

They're currently in stealth mode, which suggests they're in active development. For a well-funded AI startup, shipping a meaningful product typically takes 6-12 months after a major funding round. Full market availability and enterprise adoption take longer, probably 2-3 years. Either way, the next big signal will be when they come out of stealth and let people actually try their product.

Could larger companies like Google or Microsoft just build this themselves?

They probably could, but there are reasons they might not. Large companies move slowly, have competing priorities, and often struggle to execute at startup speed. Humans& has focus and capital, which means they could ship a better product faster. And once an AI startup establishes market leadership in a category, its position tends to be sticky, because teams don't casually switch their collaboration infrastructure. That gives Humans& a window of opportunity to establish dominance before competitors respond.

What does human-centric AI actually mean in practice?

It means AI that's designed around supporting human decision-making rather than replacing it. Instead of "let the AI make this decision for you," it's "use the AI to get better information so you make a better decision." In practice, this shows up in features like explaining AI reasoning, requesting clarification from humans when context is unclear, remembering previous decisions and preferences, and working as part of a team rather than as a standalone tool.

What are the biggest risks for Humans& moving forward?

The main risks are execution risk (shipping products people actually want), competitive risk (larger companies entering the space), model dependency (if they build on top of OpenAI or Anthropic models, changes to those models could affect them), regulatory risk (handling sensitive data and AI-driven decisions creates a compliance burden), and product-market fit risk (they might not find a market willing to pay for what they're building). Every startup faces these, but for a well-funded AI startup the stakes are higher.

Conclusion: Why This Matters Beyond One Funding Round

Humans& raising $480 million is significant, but not because it's another big AI funding round. We're numb to those by now. It's significant because it represents a shift in how the AI industry is thinking.

For the last few years, the focus was on model capability. Who can build the largest, smartest language model? That question still matters, but it's not the frontier anymore. The frontier is: how do we actually use these models to make humans more capable?

That's a different problem. It requires psychology, not just computer science. It requires understanding human workflow, not just training objectives. It requires building products, not just publishing papers. It requires thinking about coordination, trust, memory, context, and all the things that make human teams actually work.

Humans& is betting that whoever solves that problem first will own a massive market. The investors backing them believe the same thing. So they're moving fast and moving big.

Will they succeed? That's the question that will define the next few years of AI. If they do, they'll have built the connective tissue that lets AI actually integrate into how organizations work. If they don't, the space will likely be won by someone else, probably a larger company that eventually decides the market is worth the effort.

But one thing is clear: the era of "AI as tool" is shifting to "AI as infrastructure." Humans& is betting they can own that infrastructure. The market is deciding whether that bet is worth $4.48 billion.

Given the team, the problem, and the moment, it might actually be a good bet.

Key Takeaways

- Humans& raised $480 million at a $4.48 billion valuation with backing from Nvidia, Jeff Bezos, Google Ventures, and others, signaling investor conviction in human-AI collaboration as the next trillion-dollar market

- The founding team includes world-class talent from Anthropic, xAI, Google, and Stanford who have already shaped the AI industry, lending credibility to execution

- The company is focused on multi-agent reinforcement learning, persistent memory architecture, and psychological understanding of user intent—solving problems most AI companies ignore

- The shift from capability-focused AI to collaboration-focused AI represents a maturation of the industry, where infrastructure and integration matter more than raw model power

- Larger competitors could replicate the Humans& approach, but first-mover advantage in collaboration infrastructure can be sticky and defensible, which helps explain the aggressive valuation and funding

