Why CEOs Are Spending More on AI But Seeing No Returns [2025]

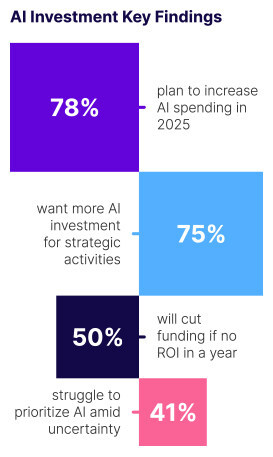

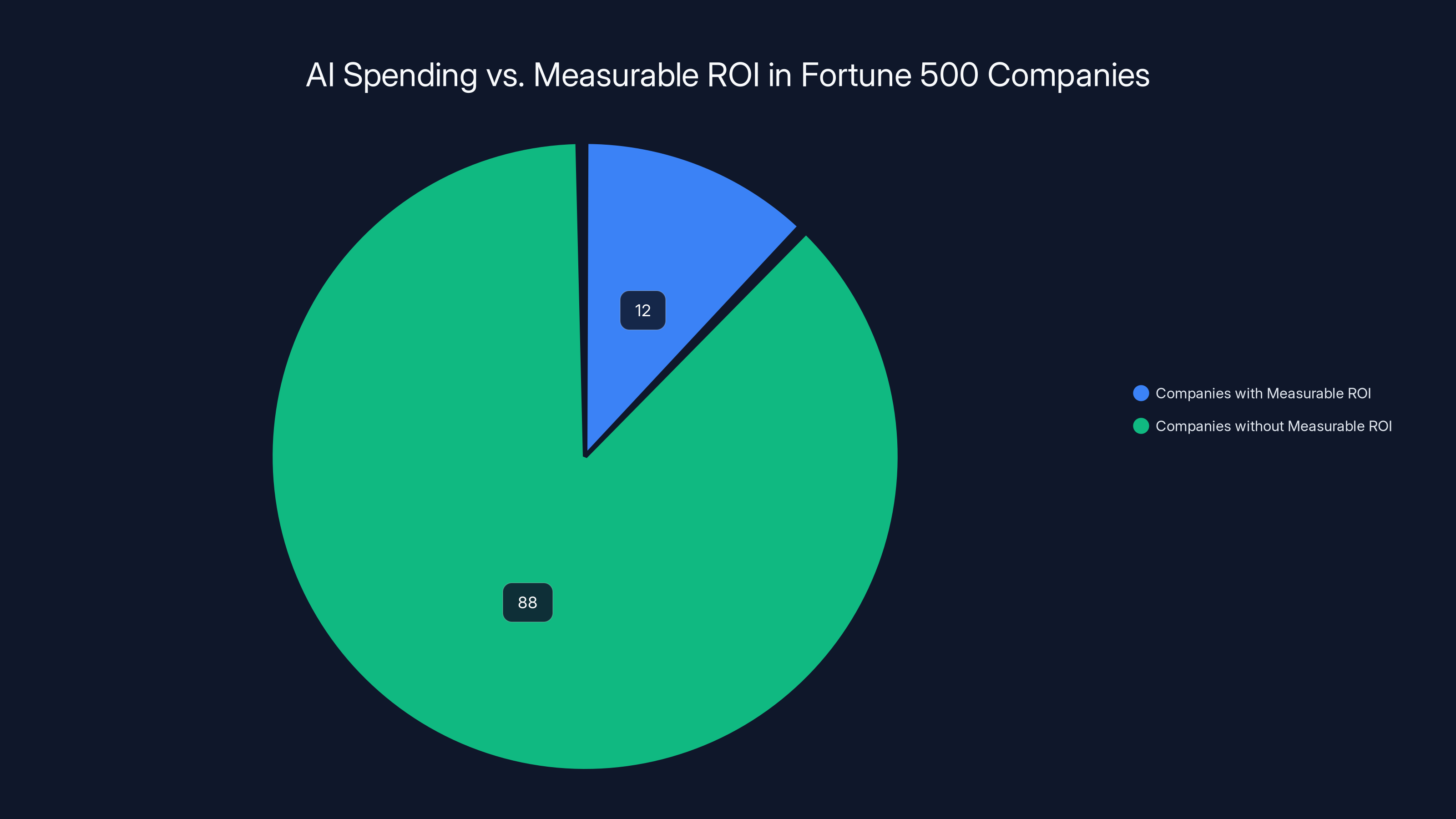

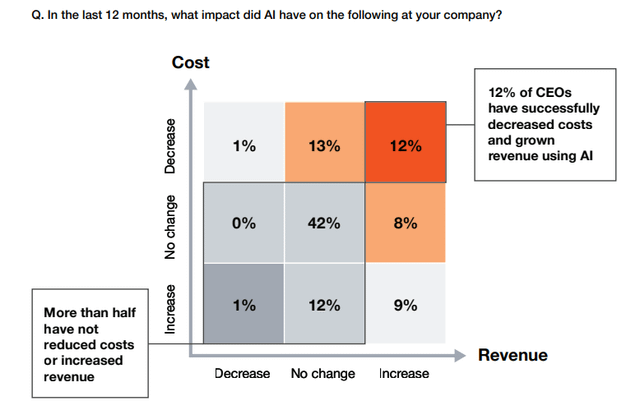

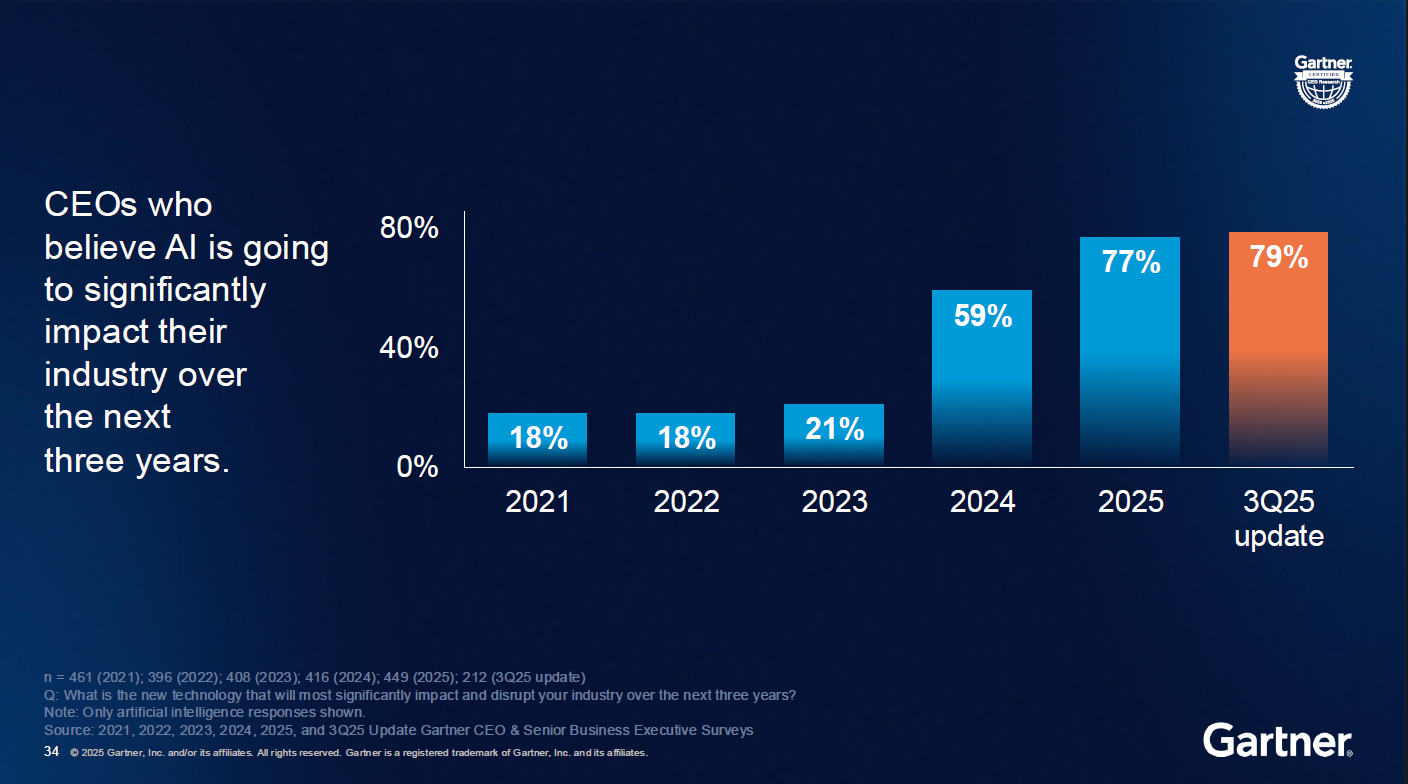

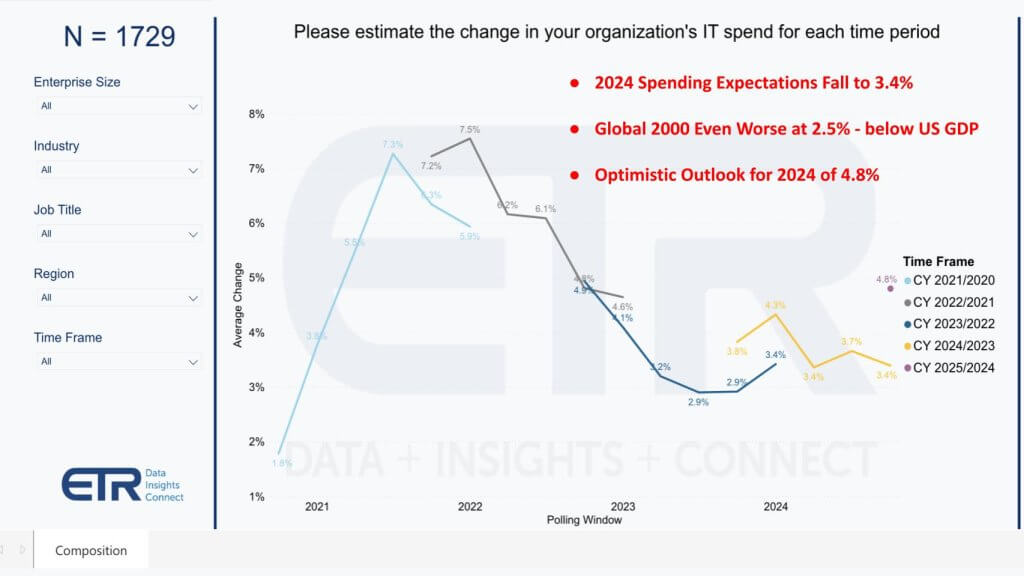

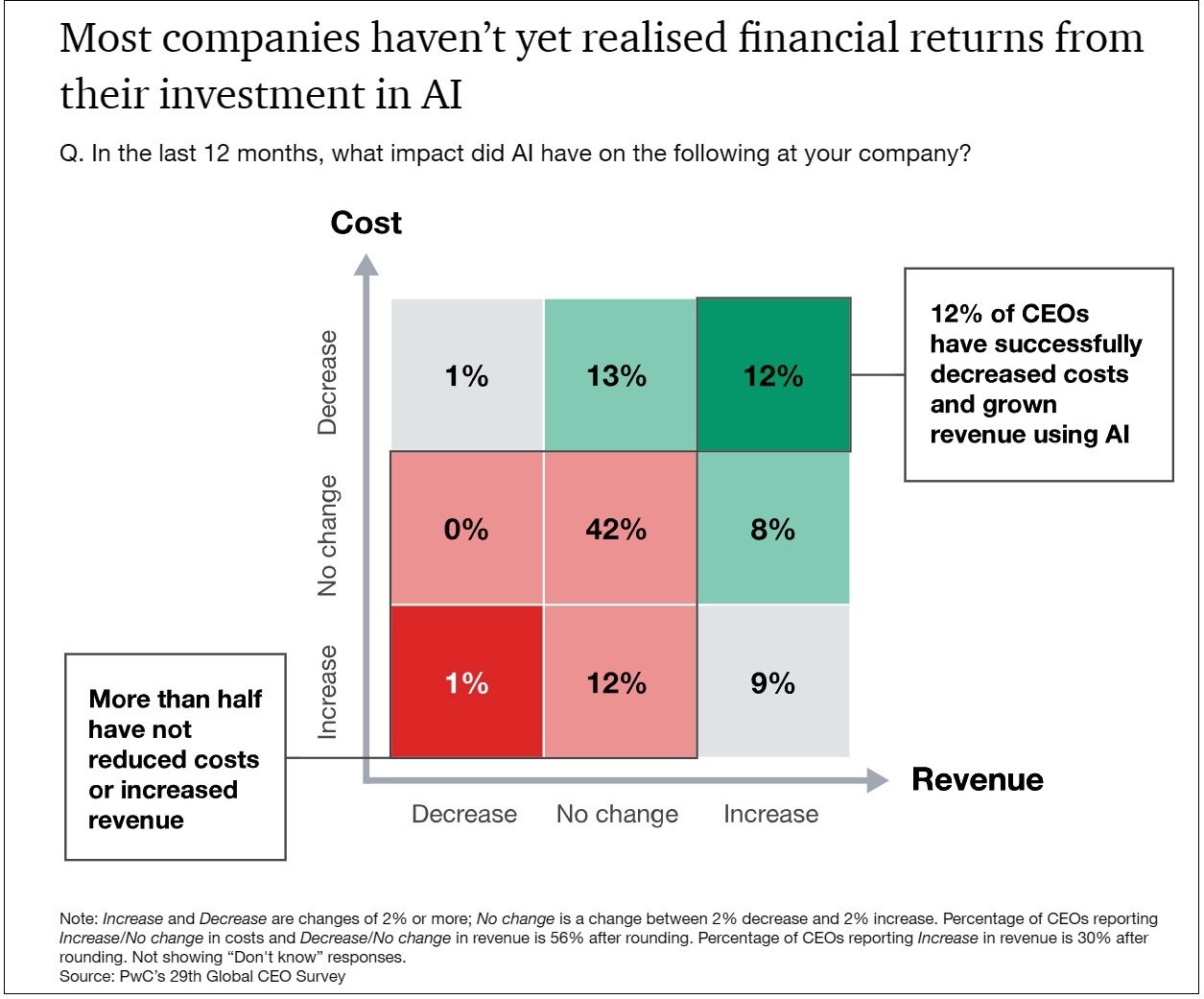

There's a troubling disconnect happening right now in corporate boardrooms across the world. CEOs are doubling down on AI spending. They're committing billions to chatbots, automation tools, and data infrastructure. Yet most organizations aren't seeing the returns they promised shareholders.

The numbers tell the story. Four out of five CEOs (81%) are now prioritizing AI and data investments, up sharply from just 60% in 2024. That's a massive jump in confidence and commitment. But here's the kicker: only 9% of UK organizations have actually managed to scale AI successfully. Most companies are stuck in early-stage pilots or endless planning phases, as noted by PwC research.

It's not that AI doesn't work. The technology is solid. The problem is somewhere else entirely. It's hiding in organizational structures, talent gaps, bureaucratic bottlenecks, and misaligned goals. Companies are investing in the right technology but implementing it in fundamentally wrong ways.

I'll walk you through what's actually happening, why the gap exists, and more importantly, how forward-thinking organizations are breaking through it. Because the companies that solve this puzzle first will have an enormous competitive advantage over everyone else still stuck in "AI theater."

TL;DR

- The Spending vs. Returns Gap: 81% of CEOs prioritize AI investments, but only 9% of UK organizations have successfully scaled AI, creating a massive ROI disconnect, as highlighted by TechRadar.

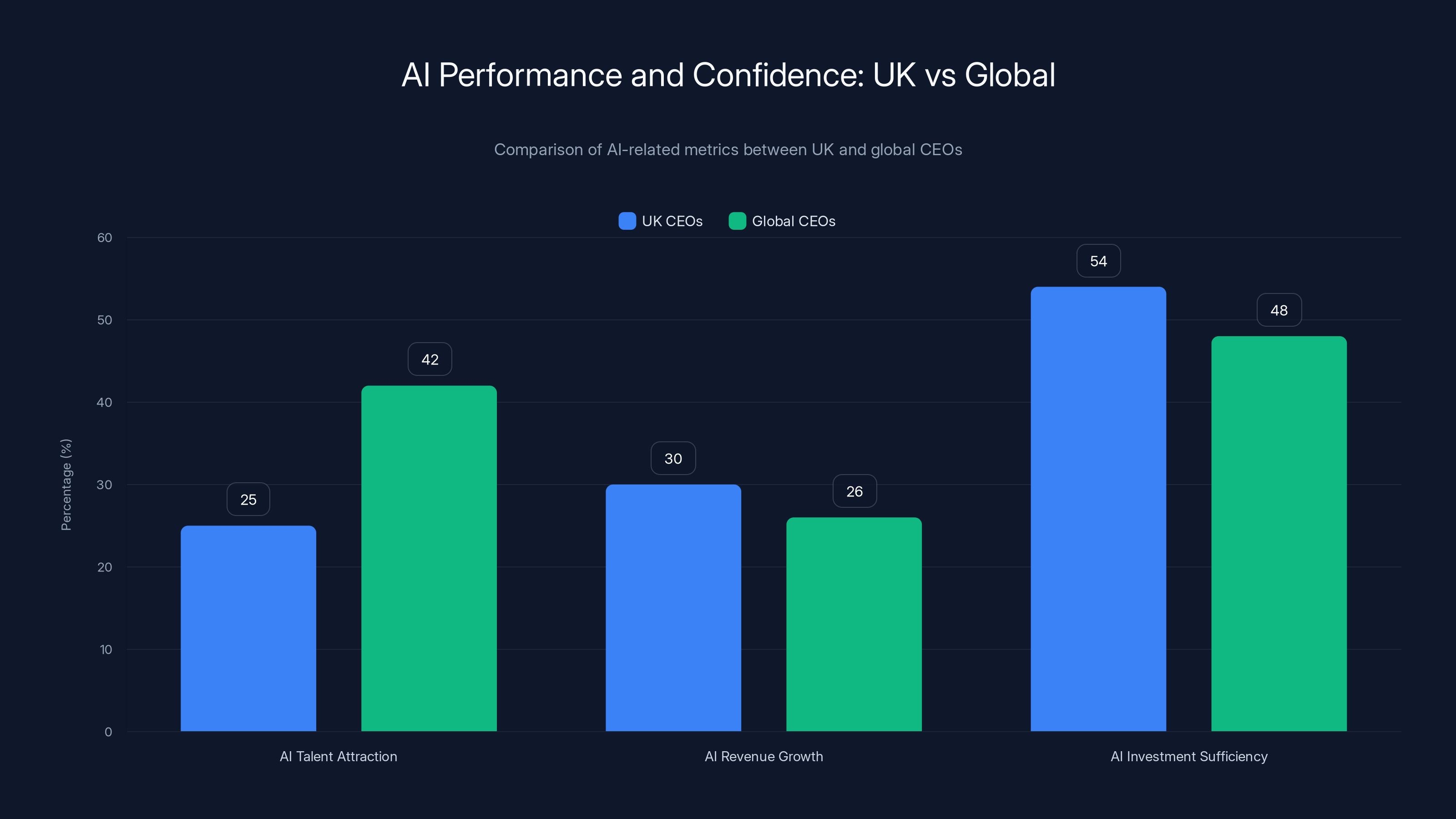

- Internal Barriers Are the Real Problem: Talent shortages, bureaucratic processes, and tech constraints matter more than technology limitations—only 25% of UK CEOs believe they can attract quality AI talent, according to PwC research.

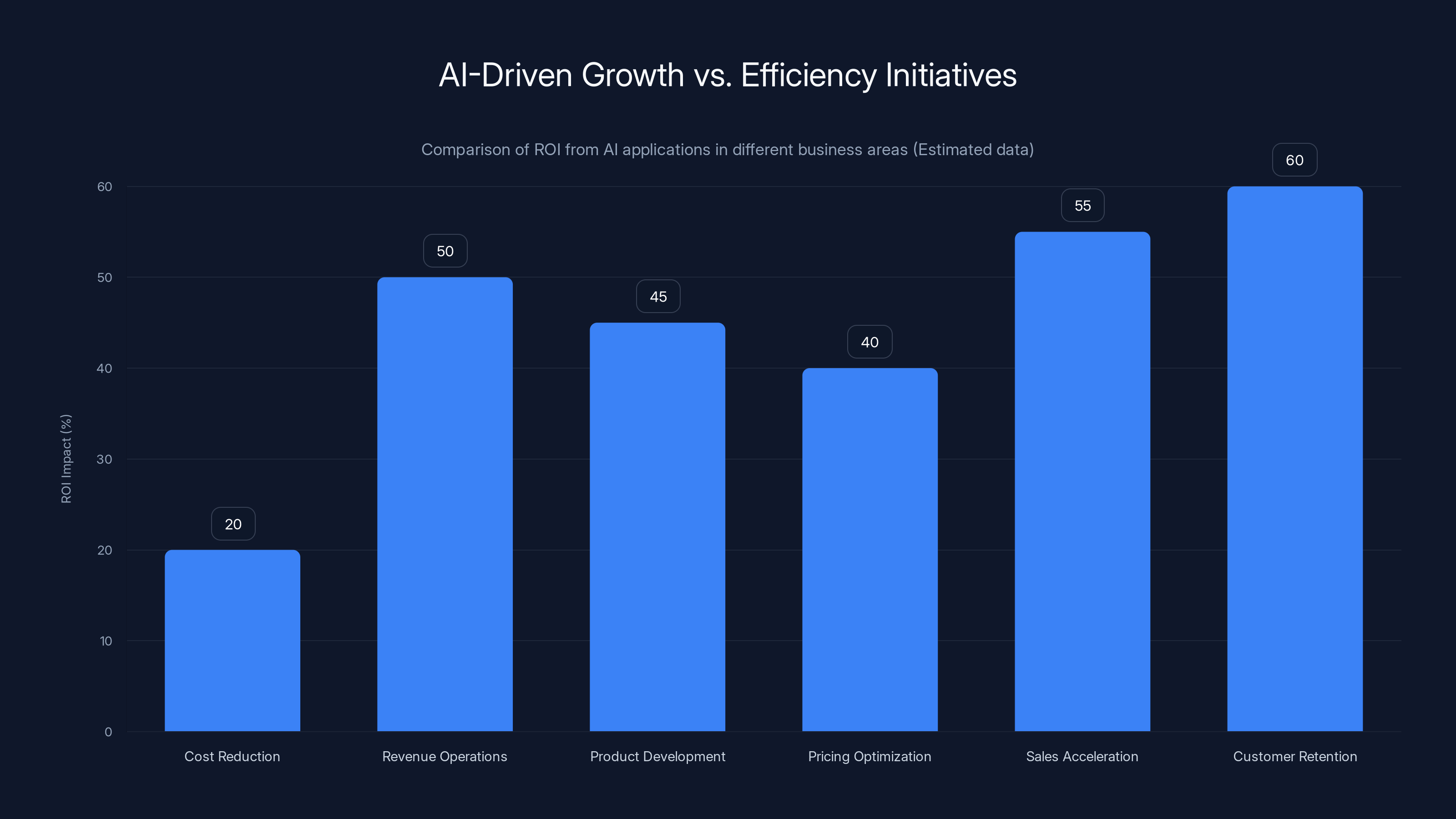

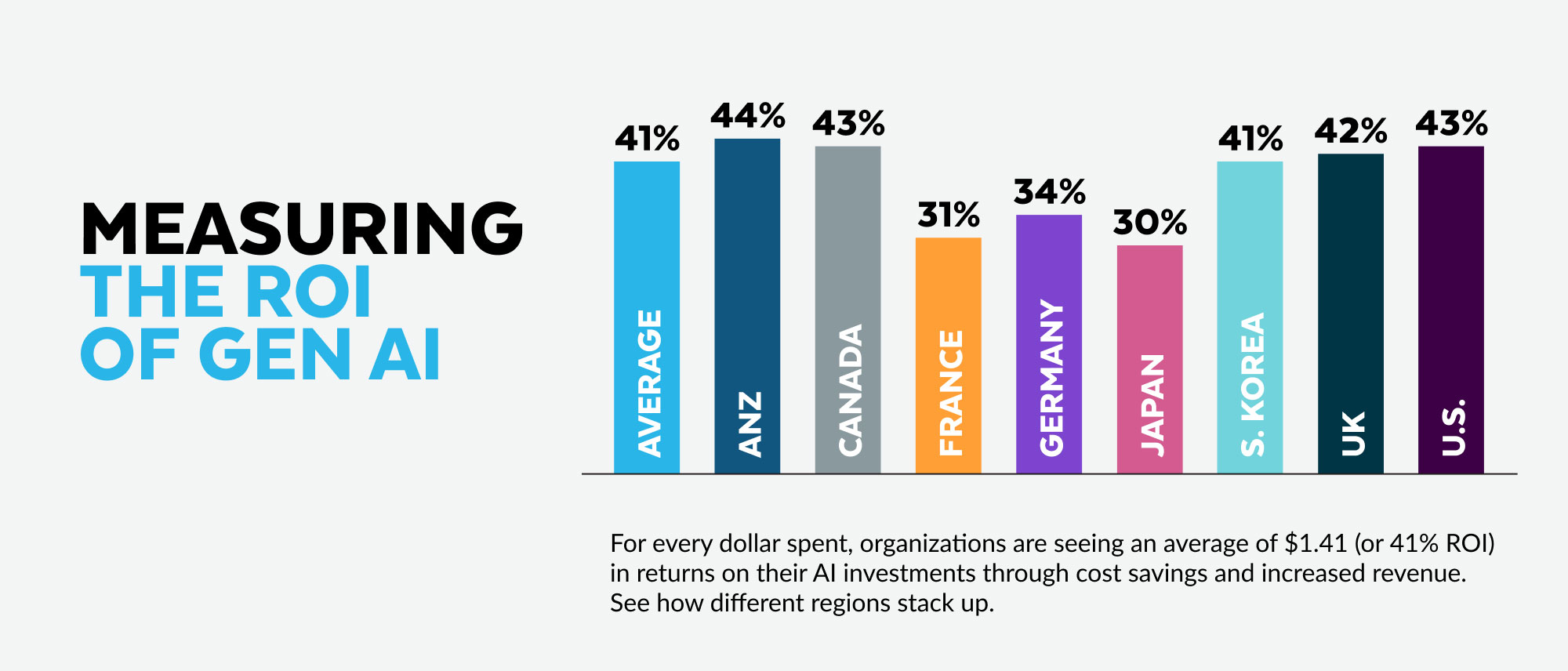

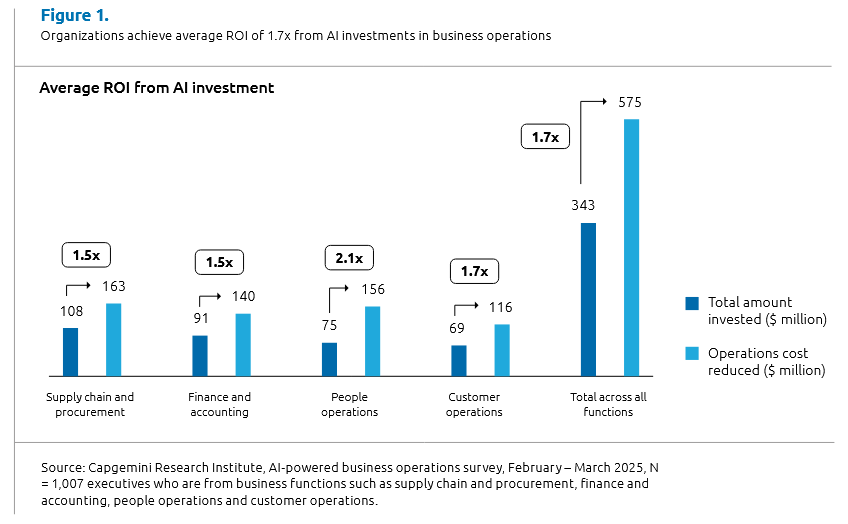

- Growth, Not Efficiency, Is Winning: Organizations seeing positive returns are shifting focus from cost-cutting automation to revenue-generating AI applications and business transformation, as discussed by IBM's AI tech trends.

- Agentic AI Is the Next Frontier: 81% of companies are exploring agentic AI (AI that operates independently), which is showing real business value beyond chatbot applications, as reported by Salesforce CPO insights.

- Foundations Matter More Than Flashy Pilots: 49% of leading organizations prioritize building AI skills, infrastructure, and governance before rushing into AI projects, according to KPMG's Global CEO Outlook.

Estimated data shows that AI applications focused on growth, such as customer retention and sales acceleration, generate significantly higher ROI compared to efficiency-focused initiatives like cost reduction.

The Paradox: Record AI Spending Meets Disappointing Results

CEOs today operate under enormous pressure to "do something" with AI. The technology has moved from theoretical to mainstream in roughly 18 months. Every peer, analyst, and board member is asking the same question: "Where's our AI strategy?"

So what do most CEOs do? They approve AI budgets. They hire "Chief AI Officers." They launch pilot programs. They attend AI conferences. On the surface, it looks like action.

But the underlying picture is messy. A company approves a $5 million AI investment, launches a chatbot that answers basic customer questions, and then... nothing happens. The technology works. Customers use it. But the business metrics don't move. Revenue doesn't increase. Costs don't drop as expected. The ROI simply doesn't materialize.

When I talked to CTOs about this recently, they kept saying the same thing: "We've got the technology. We've got the budget. What we don't have is clarity on where this actually fits into how we run the business."

This confusion is expensive. It wastes resources. It frustrates teams. It creates organizational cynicism about AI.

The gap between spending and results isn't random. It stems from specific, fixable problems. Understanding these problems is the first step toward becoming one of the 9% that actually scales AI successfully.

UK CEOs report higher AI revenue growth (30%) compared to global peers (26%) despite lower confidence in talent attraction (25% vs 42%). However, more UK CEOs feel current AI investments are insufficient (54% vs 48%).

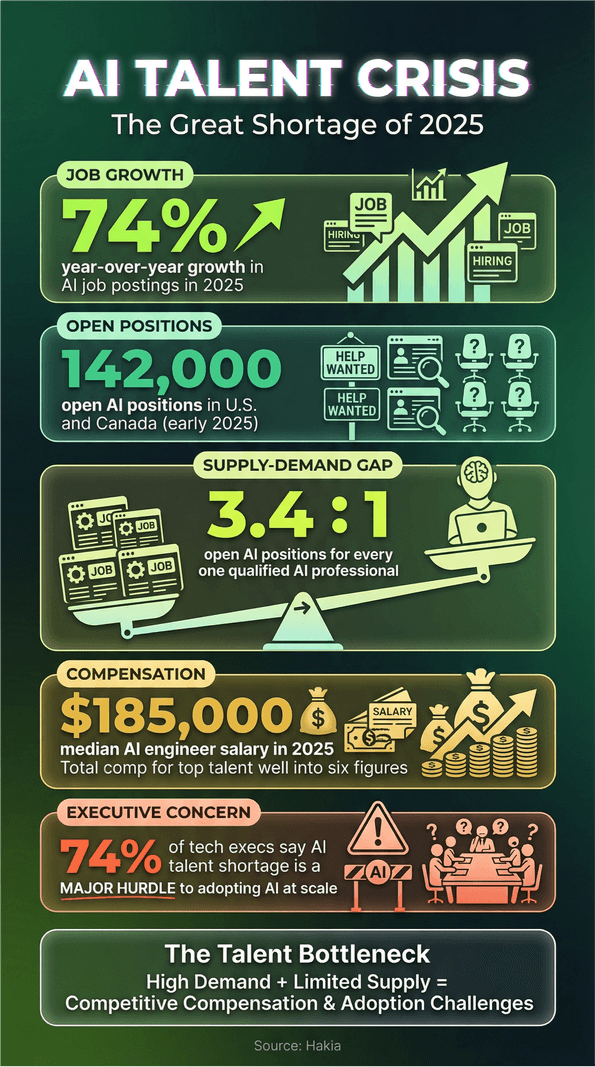

Why Most AI Investments Fail: The Talent Crisis

If you want to understand why AI investments aren't delivering returns, start with people.

Talent is the bottleneck. Not servers. Not algorithms. Not data. People.

Only one in four UK CEOs (25%) believe they can attract and retain high-quality AI talent. That's shockingly low. Globally, the number is 42%, which isn't much better. When only 42% of leaders globally think they can hire the people they need, you've got a serious problem, as highlighted by PwC research.

This creates a cascading failure. Companies need machine learning engineers who understand both the business problem and the technical solution. They need data scientists who can actually communicate with stakeholders. They need AI product managers who haven't just read Medium articles about transformers. These people are rare.

The demand far exceeds supply. A mid-level ML engineer in San Francisco can get recruited by 20 companies simultaneously. Salaries have inflated 40-60% in just two years. Even companies with generous budgets find themselves unable to compete for talent against Google, OpenAI, or Anthropic.

When you can't hire the right people, your AI project struggles. A poorly scoped problem definition leads to wrong solutions. A team without a data engineer means your data pipeline fails. A product manager without AI fluency builds features nobody wants.

The smartest organizations aren't waiting for the mythical perfect AI hire. They're investing in internal training. They're creating AI centers of excellence where people learn by doing. They're promoting software engineers who show aptitude into AI roles. These moves take time but work.

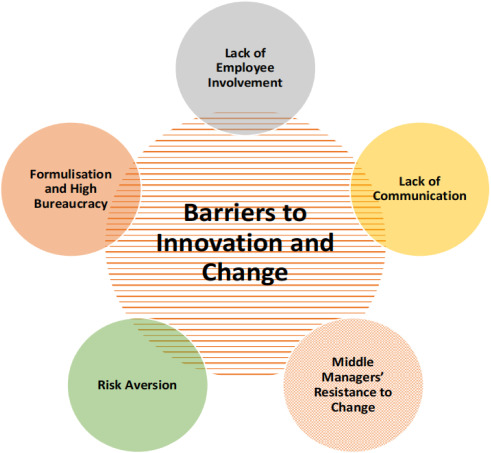

Bureaucracy Is Crushing Innovation: The 33% Problem

Here's a shocking statistic that often gets buried: one-third of organizations cite bureaucracy as their biggest obstacle to scaling AI.

One-third. That suggests roughly one in three stalled AI initiatives died not because the technology didn't work, but because the organization couldn't move fast enough to implement it.

What does this look like in practice? I've seen it countless times.

An AI team builds a model that could save the company $2 million annually. It's technically sound. The business case is solid. But it needs sign-off from seven different departments. The legal team wants compliance documentation. The security team wants penetration testing. The risk team wants bias audits. Finance wants three-year projections. By the time everyone agrees, six months have passed. The business context has shifted. The problem the model was supposed to solve has changed. The project gets shelved.

This is death by committee. It kills AI projects constantly.

Successful organizations remove this friction. They create AI governance that moves fast but still maintains rigor. They give cross-functional teams the authority to make decisions. They separate "needs approval" from "needs input." They establish clear decision-making criteria upfront so there's no ambiguity about what success looks like.

Another 29% of organizations cite technical constraints as a major barrier. This is different from talent. This means legacy systems, incompatible infrastructure, outdated security models, and technology debt. A company running mission-critical systems on 15-year-old infrastructure can't easily bolt on modern AI. They spend six months just getting data out of legacy systems reliably.

The most successful companies recognize this. They allocate budget to infrastructure modernization alongside AI project budgets. They understand that 30% of their AI budget might need to go to fixing tech debt, not building new models.

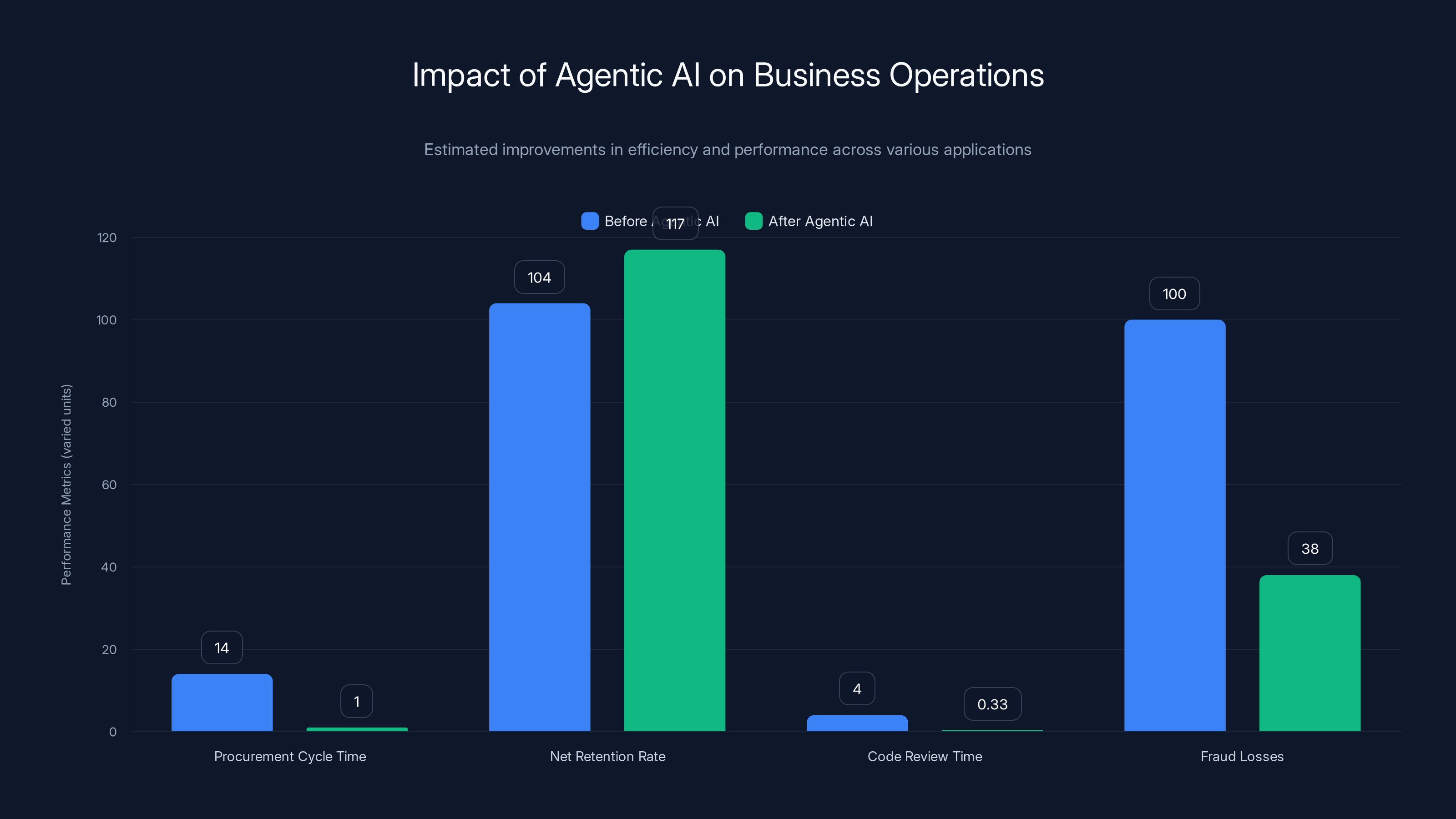

Agentic AI significantly improves business operations by reducing cycle times, increasing retention rates, and lowering fraud losses. Estimated data based on case studies.

The Clarity Problem: Vague Goals Kill Momentum

Most failed AI projects have something in common: nobody could articulate what success actually looks like.

I've been in meetings where a CEO says, "Let's use AI to improve customer satisfaction," and everyone nods like they understand. But that statement is useless. Improve by how much? For which customers? Over what timeframe? What specific metric changes if we succeed? Without these answers, your team shoots in the dark.

Companies that scale AI successfully start with obsessive clarity. They define specific, measurable outcomes. They connect those outcomes to business metrics. They get alignment before they write a single line of code.

Here's an example of good clarity: "Our customer support tickets take an average of 4 hours to resolve from submission to completion. AI should reduce this to 1.5 hours for routine requests within six months, measured across 70% of incoming tickets. This should reduce support cost per ticket from

Notice the specifics: the metric (ticket resolution time), the target (1.5 hours), the scope (70% of tickets), the timeline (six months), the success criteria (cost reduction and satisfaction scores). This clarity lets you build the right solution.
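One way to make those specifics hard to skip is to write the goal down as structured data before any build work starts. The following is a minimal sketch, not a prescribed template: the `AIGoal` class and its field names are hypothetical, and the values come from the support-ticket example above.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AIGoal:
    """A measurable AI objective (class and field names are illustrative)."""
    metric: str           # what is measured
    baseline: float       # current value of the metric
    target: float         # value that counts as success
    scope_share: float    # fraction of cases the goal covers (0 to 1)
    deadline_months: int  # time allowed to reach the target

    def required_improvement(self) -> float:
        """Relative reduction needed to move from baseline to target."""
        return (self.baseline - self.target) / self.baseline

    def is_met(self, observed: float) -> bool:
        """True when the observed value is at or below the target (lower is better)."""
        return observed <= self.target


support_goal = AIGoal(
    metric="ticket resolution time (hours)",
    baseline=4.0,
    target=1.5,
    scope_share=0.70,
    deadline_months=6,
)

print(f"{support_goal.required_improvement():.1%}")  # 62.5%
```

If a field cannot be filled in, the goal is probably still too vague to build against.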

Vague goals prevent this. When CEOs say "We need AI to stay competitive," teams don't know whether to build recommendation engines, chatbots, predictive analytics, or something else entirely. They end up building solutions looking for problems.

The Strategic Shift: From Efficiency to Growth

Here's something interesting happening right now. Organizations that are seeing AI ROI are shifting their focus dramatically.

Early AI adopters focused on efficiency. They wanted AI to replace work, reduce headcount, cut costs. These initiatives found traction but hit a ceiling. You can only automate so much before you've eliminated the low-value work but still need most of your staff. Cost-cutting generates limited ROI.

Companies seeing real returns are now asking different questions: "How can AI help us grow revenue? How can we reach new customers? How can we develop new products? How can we serve existing customers better at premium price points?"

This is a crucial pivot. Growth initiatives generate more business value than efficiency initiatives. Doubling revenue matters more than cutting costs in half.

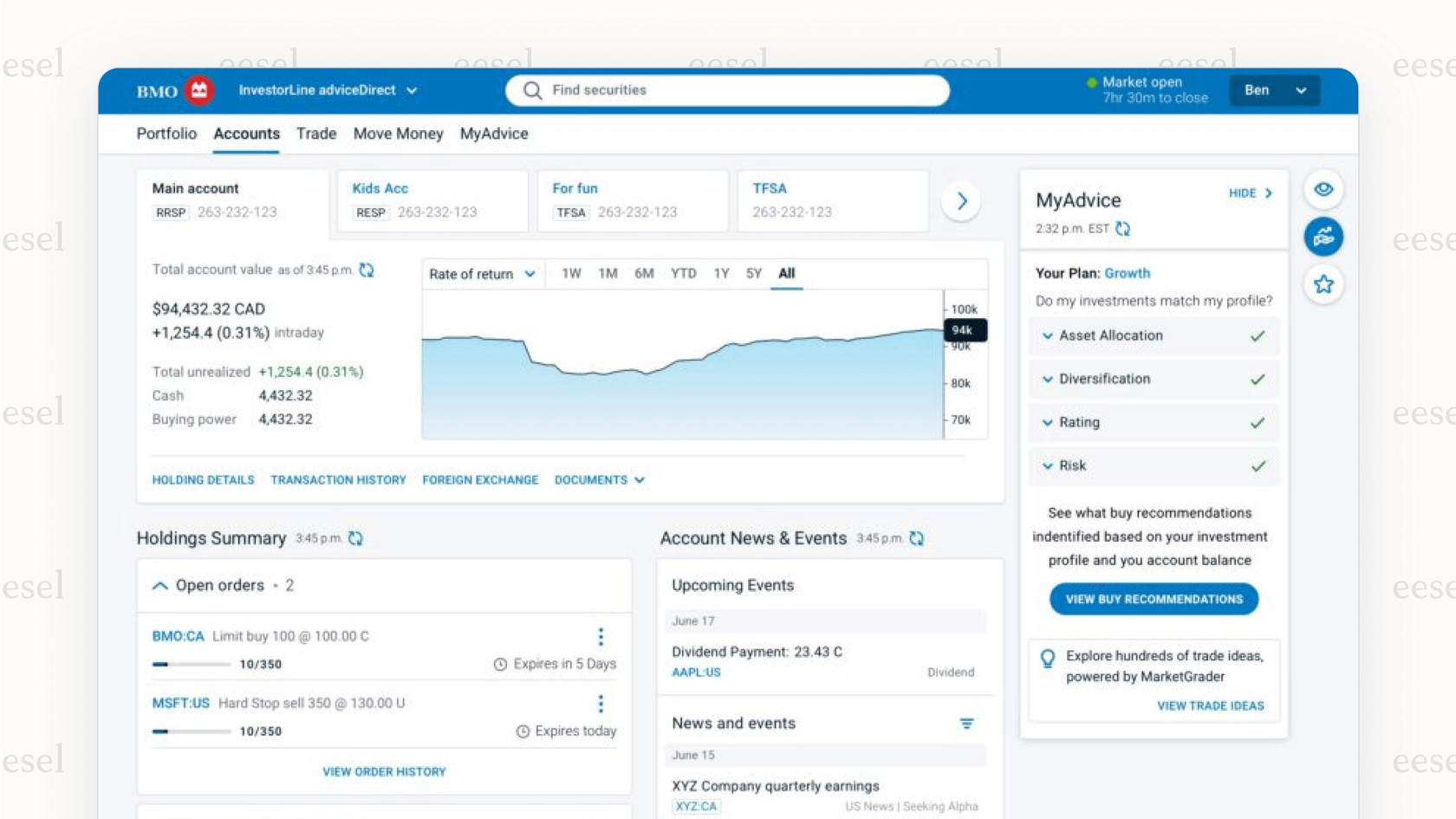

For example, one financial services company initially built an AI chatbot to reduce customer service costs. It worked, saved money, but generated modest ROI. Then they pivoted. They used the same AI technology to power a personalized investment recommendation engine. Instead of cutting costs, the new system helped their sales team close 23% more high-net-worth clients. Revenue increased by $8 million annually. ROI went from "eh" to "wow."

The technology was identical. The business model was different.

Other growth-focused applications showing real returns:

- Revenue Operations: AI analyzing customer data to identify expansion opportunities within existing accounts

- Product Development: AI analyzing customer feedback and usage patterns to identify new product opportunities

- Pricing Optimization: AI analyzing market conditions and customer willingness to pay to optimize pricing dynamically

- Sales Acceleration: AI generating leads, qualifying prospects, and optimizing sales outreach timing

- Customer Retention: AI identifying churn risk before it happens and automating retention interventions

These aren't marginal improvements. These are business model shifts. Companies that get this right don't just improve existing revenue. They open new revenue streams.

Despite an estimated $100 million average spending on AI, only 12% of Fortune 500 companies report measurable ROI, highlighting a significant gap between investment and results.

UK Performance: Doing Better Than Global Peers Despite Skepticism

Here's an interesting anomaly in the data. While only 25% of UK CEOs believe they can attract AI talent (well below the 42% global average), UK organizations are actually outperforming their global peers on AI ROI metrics.

30% of UK CEOs report revenue growth attributable to AI, compared to just 26% globally. That's a gap of four percentage points, or roughly 15% in relative terms, despite lower confidence in talent acquisition, as reported by PwC research.
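For readers who want to check the comparison, the arithmetic is sketched below. The variable names are illustrative; the two percentages are the ones quoted in this section.

```python
uk_share = 0.30      # UK CEOs reporting AI-attributable revenue growth
global_share = 0.26  # global CEOs reporting the same

# Absolute gap, in percentage points
absolute_gap_pp = (uk_share - global_share) * 100

# Relative gap: how much larger the UK share is than the global share
relative_gap = uk_share / global_share - 1

print(f"{absolute_gap_pp:.0f} percentage points")  # 4 percentage points
print(f"{relative_gap:.1%} relative")              # 15.4% relative
```

The two figures answer different questions, so it helps to state which one is being quoted.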

Why?

Several factors seem to be at play. First, UK organizations may be more disciplined about AI implementation. When you're resource-constrained, you tend to pick fewer, higher-impact projects rather than scattered, low-confidence bets. This focus generates better results.

Second, UK companies have access to strong AI research talent from universities and research institutions. Cambridge, Oxford, Imperial College, and UCL produce top-tier AI researchers. Many stay in the UK, creating talent pools that aren't always reflected in hiring statistics.

Third, UK regulatory clarity (particularly around GDPR and emerging AI regulations) has forced UK organizations to build more robust governance from day one. This rigor pays dividends when scaling.

Fourth, UK companies seem more honest about what works and what doesn't. There's less "AI theater" and more pragmatic problem-solving.

But here's the caveat: UK CEOs are less confident about their future AI investments than their global counterparts. 54% of UK CEOs said current AI investments are insufficient compared to the global average of 48%. This suggests UK leadership knows they need to do more but isn't sure how.

The Foundations First Approach: Why It Works

49% of leading organizations are taking what researchers call a "foundations first" approach to AI.

This means before jumping into flashy AI projects, they're investing in basic infrastructure: data governance, skills development, infrastructure modernization, compliance frameworks, security models, and organizational readiness.

Sounds boring. It's not.

Companies that skip foundations and jump straight to projects fail spectacularly. They run out of data, hit security problems, find legacy systems can't support the workload, or encounter compliance issues mid-project. The projects fail. Leadership concludes "AI doesn't work here." Nobody tries again for years.

Companies that nail foundations first move faster later. By the time they launch an AI project, 80% of the work is already done. The data is clean and accessible. Security is built in. Infrastructure scales. Teams understand the implications. Projects actually launch and scale.

Here's what foundations actually include:

Data Foundations: Audit what data you have, where it lives, who owns it, and what shape it's in. Most organizations discover that 60% of their potentially valuable data is trapped in silos, incompatible formats, or quality issues. Build data governance before you build models.

Skills Foundations: Assess current capabilities. Train people in-house. Build AI literacy across the organization. You don't need everyone to be ML engineers, but leaders need to understand what's possible, what's hard, and what's a fantasy. Create role-specific AI training for different departments.

Infrastructure Foundations: Audit your technology stack. Identify technical debt. Modernize systems that will support AI. This might mean moving off legacy databases, updating security infrastructure, or implementing cloud platforms. It's not glamorous but it's essential.

Governance Foundations: Build processes that move fast without chaos. Define how decisions get made. Establish risk frameworks. Create compliance standards. Document it. Make it repeatable.

Organizational Foundations: Create cross-functional AI teams. Break down silos. Build shared understanding of AI's role in your business. Get alignment on strategic direction.

Companies doing this report that their AI project success rate increases from 20% to 65%. That's not incremental. That's transformative.

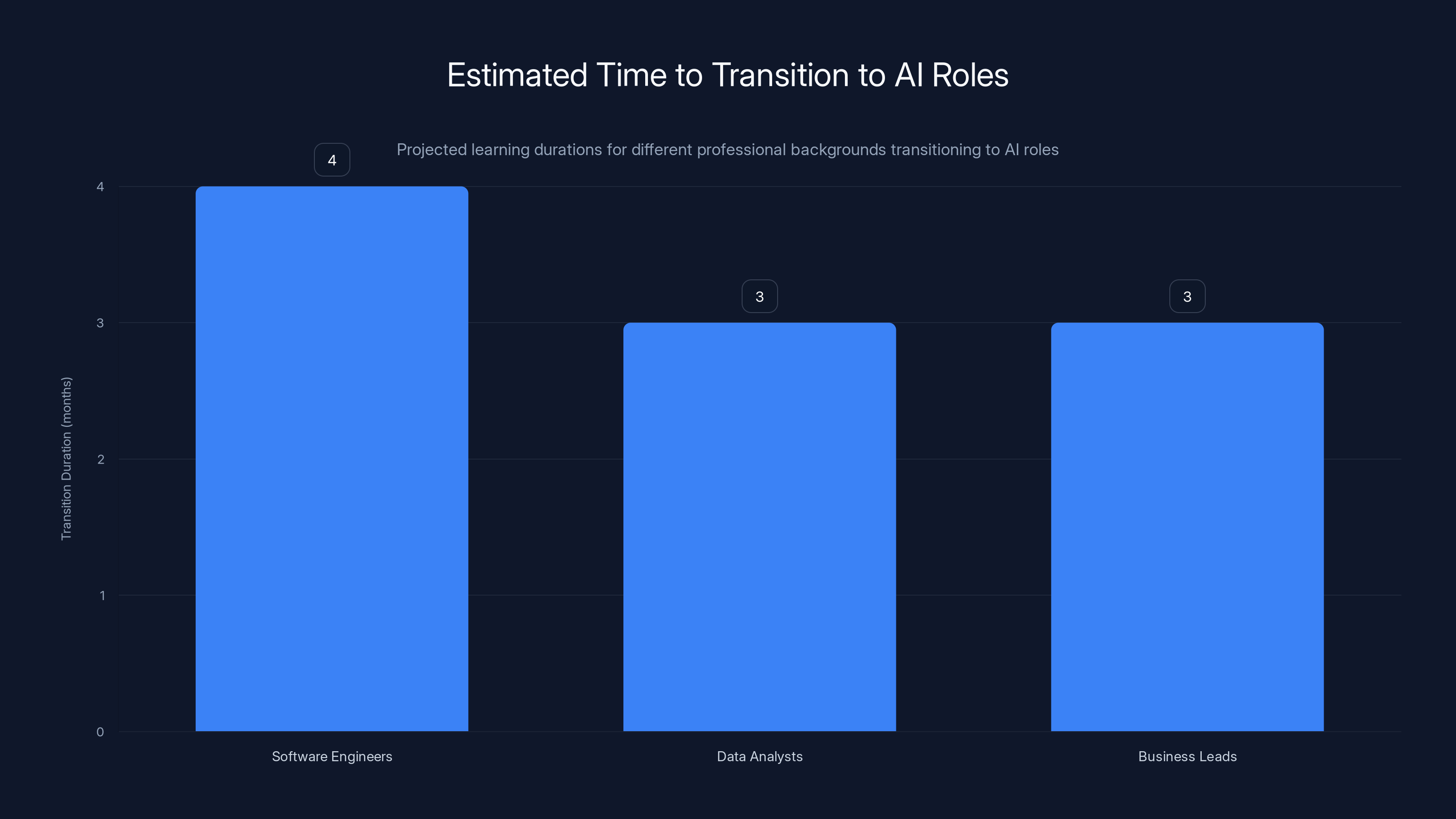

Estimated time for professionals to transition into AI roles varies by background, with software engineers taking up to 4 months and data analysts and business leads taking around 3 months. Estimated data.

Agentic AI: The Next Frontier with Real Business Impact

Here's what's changing the conversation about AI. Four in five organizations (81%) are exploring or building agentic AI.

Agentic AI is different from chatbots or traditional automation. Agentic AI systems can operate independently, set their own goals, make decisions, take actions, and adapt based on feedback. They're not just responding to requests. They're actively managing processes.

This is more valuable but also more complex than traditional AI.

Examples of agentic AI generating real ROI:

- Autonomous Procurement Agents: These systems monitor inventory, predict demand, compare supplier options, negotiate pricing, and place orders independently. One company deployed this and reduced procurement cycle time from 14 days to 1 day. Procurement staff who previously spent 60% of their time on tactical work now focus on strategy and supplier relationships.

- Autonomous Customer Success Agents: These systems track customer health, identify churn signals, recommend interventions, schedule outreach, and even conduct initial conversations with customers. One SaaS company deployed this and increased net retention from 104% to 117% while reducing CS team workload.

- Autonomous Code Review Agents: These systems analyze pull requests, identify potential bugs, check performance implications, ensure compliance with standards, and provide detailed feedback. One engineering team reduced code review time from 4 hours per PR to 20 minutes while actually improving code quality.

- Autonomous Fraud Detection Agents: These systems monitor transactions, identify suspicious patterns, investigate anomalies, compare against known fraud signatures, and take preventive action. One financial services company deployed this and reduced fraud losses by 62%.

The common thread: agentic AI reduces manual work on routine decisions, freeing humans to focus on exceptions and strategy. This generates real ROI.

But here's the catch: agentic AI requires more governance than traditional AI. You're giving systems the ability to take real-world actions. If they go wrong, consequences can be serious. Companies building agentic AI successfully invest heavily in monitoring, override capabilities, gradual rollouts, and human-in-the-loop reviews before full autonomy.
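A human-in-the-loop gate can be sketched in a few lines. This is an illustration under stated assumptions, not how any particular company or product implements it: `ProposedAction`, `run_with_oversight`, and the monetary threshold are all hypothetical names and values.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class ProposedAction:
    """An action an agent wants to take (illustrative structure)."""
    description: str
    amount: float  # monetary impact of the action


def run_with_oversight(
    action: ProposedAction,
    auto_approve_limit: float,
    ask_human: Callable[[ProposedAction], bool],
) -> str:
    """Execute small actions automatically; route larger ones to a reviewer."""
    if action.amount <= auto_approve_limit:
        return "executed"
    if ask_human(action):
        return "executed after review"
    return "blocked"


def always_reject(action: ProposedAction) -> bool:
    """Stand-in reviewer that rejects everything, used only for the demo."""
    return False


small = ProposedAction("reorder packaging", 800.0)
large = ProposedAction("new supplier contract", 50_000.0)

print(run_with_oversight(small, 1_000.0, always_reject))  # executed
print(run_with_oversight(large, 1_000.0, always_reject))  # blocked
```

Raising `auto_approve_limit` over time is one way to express a gradual rollout: the agent earns more autonomy as reviewers see fewer bad proposals.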

The Skills Gap: Creating an In-House AI Capability

Wait for the perfect AI hire to become available, and most companies will wait forever.

The smartest organizations are building AI capability in-house through systematic training and mentorship.

Here's how it works:

Identify people on your team who have foundational strength in mathematics, software engineering, or data analysis. These people typically have the cognitive foundation for AI work. They don't need to have done AI before—they just need the ability to learn it.

Pair them with an external AI mentor, whether that's a consultant, a university researcher, or someone from a more AI-mature company. The mentor doesn't need to be full-time. Even 10 hours per week of structured guidance transforms learning outcomes.

Start with a contained AI project. Not your most mission-critical system. Pick something that would be valuable if it works but won't destroy the business if it doesn't. This lets your team learn without catastrophic risk.

Give them time for learning. This sounds obvious but companies frequently fail here. They hire someone and immediately put them on their hardest problem. That's not training, that's hazing.

Specific training paths that work:

For Software Engineers Transitioning to ML: Focus on Python, data structures, linear algebra, basic statistics, and ML frameworks like scikit-learn before deep learning. Most engineers can go from zero to "can build supervised learning models" in 3-4 months with structured training.

For Data Analysts Transitioning to Data Science: Focus on programming (usually Python), advanced statistics, SQL optimization, and A/B testing. Most analysts can go from zero to "can build predictive models and interpret them correctly" in 2-3 months.

For Business Leads to AI Product Managers: Focus on AI/ML fundamentals (not deep technical knowledge), how to scope AI problems, how to evaluate AI solutions, and how to build AI products. Most business leaders can develop this fluency in 4-6 weeks of focused study.

The outcome is dramatic. Companies that do this systematically report that 40% of their AI team is home-grown within two years. They understand the business better than external hires. They stay longer. They cost less. And they actually work.

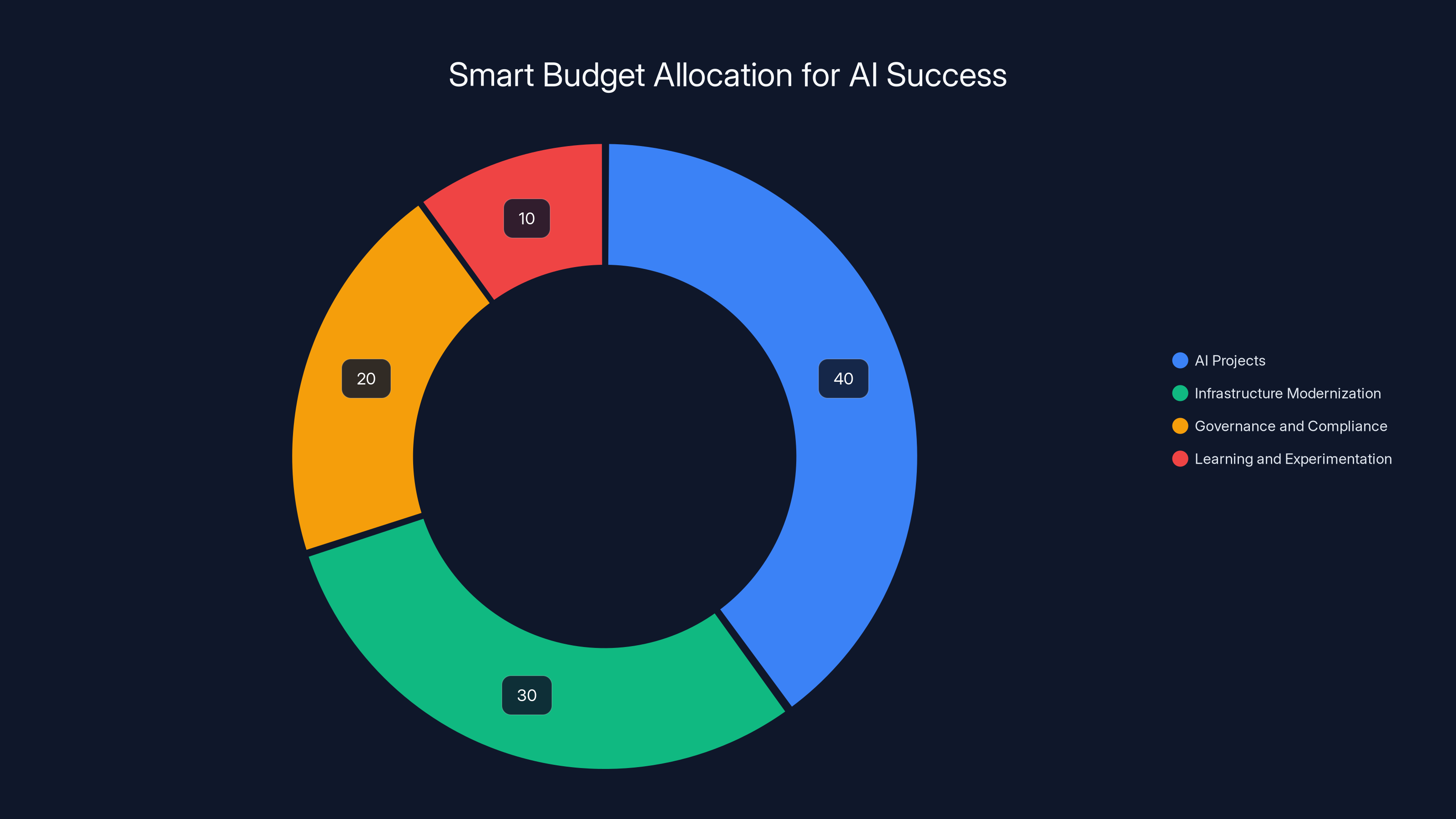

Allocating 40% of the budget to AI projects and 30% to infrastructure modernization significantly increases AI project success rates. Estimated data.

Technology Constraints: Legacy Systems as Hidden Killers

29% of organizations cite technology constraints as their primary obstacle to AI scaling.

This deserves more attention than it usually gets. Because if your infrastructure can't support AI, your AI projects will fail no matter how talented your team is.

Legacy systems kill AI projects. Here's why:

Modern AI requires fast access to large amounts of data. If your data lives in a 2008-era database that takes 30 minutes to pull a dataset, your data scientists can't iterate. If your infrastructure doesn't support GPU workloads, you can't train models efficiently. If your security model doesn't allow cloud access, you can't use modern AI platforms.

One manufacturing company wanted to build predictive maintenance using sensors on their equipment. Great idea. But their IT infrastructure didn't support the data volume. Getting sensor data to the cloud took too long. The lag meant the predictions arrived after problems had already occurred. The project failed because of infrastructure, not because the AI didn't work.

Another financial services company wanted to build a recommendation engine. The data science team was excellent. But their data lived in incompatible systems. Reconciling data from three legacy systems took so long that the team spent more time on ETL than on actual model building. They eventually got it working but lost a year in the process.

Addressing this requires infrastructure investment alongside AI investment.

Smart budget allocation:

- 40% on AI projects: Building models, training teams, launching initiatives

- 30% on infrastructure modernization: Cloud migration, API development, real-time data pipelines, modern security

- 20% on governance and compliance: Policies, audit frameworks, risk management

- 10% on learning and experimentation: Conferences, training, proof-of-concepts

Companies that follow this allocation have 3x higher AI project success rates than companies that put 80% of budget directly into projects.
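To see what that split means in money terms, here is a minimal sketch that applies the percentages above to a total budget. The function and dictionary names are hypothetical, and the $5 million total is only an illustrative figure.

```python
# Shares taken from the allocation described above
ALLOCATION = {
    "ai_projects": 0.40,
    "infrastructure_modernization": 0.30,
    "governance_and_compliance": 0.20,
    "learning_and_experimentation": 0.10,
}


def split_budget(total: float) -> dict[str, float]:
    """Split a total budget across categories according to ALLOCATION."""
    # Guard against a table of shares that does not add up to 100%
    if abs(sum(ALLOCATION.values()) - 1.0) > 1e-9:
        raise ValueError("allocation shares must sum to 1.0")
    return {name: round(total * share, 2) for name, share in ALLOCATION.items()}


plan = split_budget(5_000_000)
for name, amount in plan.items():
    print(f"{name}: ${amount:,.0f}")
```

On a $5 million budget this puts $2 million into projects and $1.5 million into infrastructure, which makes the trade-off concrete for a budget discussion.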

Data Governance: The Foundation Nobody Wants to Build

Ask most organizations about their data governance and you'll get uncomfortable silence.

Data governance sounds tedious. It's processes, documentation, policies, and oversight. It doesn't ship features. It doesn't generate revenue. But when it's missing, nothing works.

Here's why data governance matters for AI specifically:

AI models are only as good as their training data. Bad data in, bad models out. This isn't theoretical. Companies have shipped models that performed perfectly in testing but failed in production because the training data didn't match production reality.

One healthcare company built a diagnostic model trained on data from their urban teaching hospital. The model worked great. Then they deployed it to rural clinics. It started giving wrong recommendations because patient demographics and disease prevalence were completely different. The data governance process should have caught this.

Data governance includes:

- Data Inventory: Knowing what data you have, where it lives, and what it means

- Data Quality Standards: Defining what "good enough" looks like and measuring it

- Data Lineage: Understanding where data comes from and how it transforms

- Access Control: Knowing who can access what and why

- Privacy Compliance: GDPR, CCPA, and industry-specific regulations

- Bias Monitoring: Tracking whether your models behave differently across demographic groups

Companies doing this systematically find that they spend less time on model debugging and more time on actual value generation.
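A data quality standard only helps if it is measured. The sketch below checks one simple standard, the share of missing values per field, against a threshold. The function names, the sample records, and the 5% threshold are all assumptions chosen for illustration.

```python
from typing import Any, Mapping, Sequence


def missing_rate(rows: Sequence[Mapping[str, Any]], field: str) -> float:
    """Share of rows where `field` is absent or None."""
    if not rows:
        return 0.0
    missing = sum(1 for row in rows if row.get(field) is None)
    return missing / len(rows)


def quality_report(
    rows: Sequence[Mapping[str, Any]],
    required_fields: Sequence[str],
    max_missing: float = 0.05,  # assumed threshold, tune per dataset
) -> dict[str, bool]:
    """Map each required field to True if it meets the missing-value threshold."""
    return {f: missing_rate(rows, f) <= max_missing for f in required_fields}


# Made-up records: "income" is missing in two of four rows
sample = [
    {"age": 41, "income": 52_000},
    {"age": 35, "income": None},
    {"age": 29, "income": 48_000},
    {"age": 63},
]

print(quality_report(sample, ["age", "income"]))  # {'age': True, 'income': False}
```

Real standards usually cover more than missing values (ranges, freshness, duplicates), but the pattern of "define a threshold, measure against it" stays the same.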

Here's what excellent data governance looks like in practice:

A data scientist wants to build a credit risk model. Before touching data, they work with a governance team to understand:

- What historical data exists for this purpose?

- How has data collection changed over time?

- Are there known gaps or biases in historical data?

- What regulatory constraints apply?

- What demographic groups might be affected differently?

- How will we monitor model performance after deployment?

This conversation takes a day but saves weeks of debugging later.

Companies that treat data governance as essential rather than overhead cut their AI project failure rate by 40%.

Security and Compliance: Building Safety Into AI from Day One

AI creates new security and compliance challenges that many organizations aren't equipped for.

Traditional software security focuses on preventing unauthorized access. AI security has to prevent adversarial attacks that try to manipulate model behavior through carefully crafted inputs.

Traditional compliance focuses on user privacy. AI compliance has to handle algorithmic bias, fairness, transparency, and explainability.

These aren't afterthoughts. They're part of designing AI systems correctly.

For example, an insurance company built a pricing model that technically worked. But it unintentionally discriminated against applicants from certain zip codes. In effect, it was treating zip code as a proxy for race, which is illegal. Nobody had designed the model to do this; the patterns in the data had led it to this outcome. But the result was still discrimination.

They had to rebuild the model with explicit bias constraints. This cost them six months and required different modeling approaches. If they'd built compliance from day one, they'd have saved that time.

Security matters similarly. An image recognition model trained for one purpose can be attacked by feeding it specially crafted images that cause it to misclassify in predictable ways. If you're using image recognition for security, this is catastrophic. You need to know about these vulnerabilities before deployment.

Co-locating security and AI development prevents these problems.

Practical security and compliance for AI:

- Threat Modeling: Think through how your AI could fail or be misused

- Bias Testing: Systematically check whether your model treats different groups differently

- Adversarial Testing: Try to trick your model into failing in dangerous ways

- Explainability: Ensure you can explain why your model makes specific decisions

- Monitoring: Track model performance after deployment to catch drift or anomalies

- Audit Trail: Document decisions, inputs, and outputs for compliance purposes

Organizations that build this in from day one deploy AI faster and with fewer incidents.
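As an illustration of the bias testing item above, the sketch below compares approval rates across groups and computes the ratio of the lowest rate to the highest. The function names and sample data are hypothetical. The 0.8 cutoff is an assumption borrowed from the "four-fifths" rule of thumb used in US employment contexts; it is not a universal legal standard, and real bias audits use more than one metric.

```python
from collections import defaultdict
from typing import Iterable, Tuple


def approval_rates(decisions: Iterable[Tuple[str, bool]]) -> dict[str, float]:
    """Compute the approval rate per group from (group, approved) pairs."""
    totals: dict[str, int] = defaultdict(int)
    approved: dict[str, int] = defaultdict(int)
    for group, ok in decisions:
        totals[group] += 1
        approved[group] += int(ok)
    return {group: approved[group] / totals[group] for group in totals}


def disparate_impact_ratio(rates: dict[str, float]) -> float:
    """Ratio of the lowest group approval rate to the highest."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody approved in any group, so no disparity to report
    return min(rates.values()) / highest


# Made-up decisions: group A approved 8 of 10, group B approved 5 of 10
decisions = (
    [("A", True)] * 8 + [("A", False)] * 2
    + [("B", True)] * 5 + [("B", False)] * 5
)

rates = approval_rates(decisions)
ratio = disparate_impact_ratio(rates)
print(rates)                     # {'A': 0.8, 'B': 0.5}
print(round(ratio, 3))           # 0.625
print("flag for review:", ratio < 0.8)  # flag for review: True
```

A check like this is cheap enough to run on every model version, which is what turns bias testing from a one-off audit into routine monitoring.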

The Organizational Culture Problem: Why Change Fails

Technology is easy compared to organization.

Even with perfect technology, talented people, good infrastructure, and clear goals, AI initiatives fail if organizational culture resists them.

Here's why:

AI threatens established roles. Someone who's spent 10 years becoming an expert at manual analysis sees an AI system that does the same work in seconds. That's not exciting for them. It's terrifying. They'll either actively resist adoption or passively drag their feet.

AI requires collaboration across silos. Sales and data science need to work together. Operations and IT need to collaborate. Finance and engineering need to align. Organizations with strong silos struggle with this. Without breaking down barriers, projects flounder.

AI changes incentive structures. If you're rewarded for number of support tickets closed, you don't want automation that reduces tickets. If you're rewarded for people managed, you don't want technology that reduces headcount. Incentives have to align with AI adoption.

Building culture that embraces AI requires:

Leadership Alignment: CEOs and executives publicly commit to AI, talk about it constantly, and make decisions that reinforce their commitment. When leadership waffles on AI, the organization notices and mirrors that uncertainty.

Role Evolution Messaging: Help people understand how their roles will change, what new skills they'll need, and why that's good for them. Don't pretend AI won't change roles—that's not credible. Instead, explain how roles will evolve in ways that make them more interesting.

Quick Wins: Show people that AI works through visible, tangible results. One successful project builds momentum for ten others.

Psychological Safety: Create space for people to fail, learn, and iterate. The first AI project will probably be messy. That's expected and okay.

Reskilling Investment: Show through budget and action that the organization will invest in helping people adapt. Training budgets, external experts, time for learning.

Organizations that do this well create positive AI momentum. Skeptics become advocates. Early successes attract talented people. Culture becomes a competitive advantage.

Real-World Case Study: From Stuck to Scaling

Let me walk through an actual example of an organization that went from 0% AI ROI to measurable scaling.

A mid-sized financial services company (let's call them Fin Corp) spent $3.2 million on AI over 18 months with essentially nothing to show for it. They had hired AI talent. They'd launched projects. None of them scaled. Leadership was frustrated. Several AI initiatives were quietly killed.

Then they hired a new CTO who asked basic questions.

Why did projects fail? She dug in. One project failed because business stakeholders didn't agree on success metrics. Another failed because the data quality was too poor to train reliable models. Another failed because the infrastructure couldn't handle the inference load. Another failed because compliance wouldn't approve it without explainability features that the team didn't know how to build.

None of these failures were because the team wasn't smart. All of them were preventable with better planning.

The CTO completely reorganized their approach:

Months 1-3: Foundations Phase

- Audited data quality and governance

- Mapped technical infrastructure requirements

- Built compliance and security frameworks

- Hired for gaps (a data engineer, a compliance specialist)

- Trained existing team in AI fundamentals

Months 4-6: Pilot Phase

- Identified three high-impact, low-risk pilot projects

- Applied the complete framework: clear goals, quality data, proper infrastructure, compliance built-in

- All three pilots succeeded and generated measurable ROI

Months 7-12: Scaling Phase

- Used success from pilots to attract executive support

- Launched four production initiatives

- Three succeeded, one partially succeeded

- All generated positive ROI

Months 13-18: Momentum Phase

- Organizational culture shifted. AI went from "experimental" to "core to how we operate"

- Launched eight new initiatives with 85% success rate

- AI became part of hiring criteria for leadership roles

- Budget approved for continued scaling

One and a half years later, Fin Corp's annual AI ROI was positive.

The difference wasn't technology. They had technology all along. The difference was organization.

Forecasting AI's Business Impact in 2025-2026

Based on current trends, here's what I expect to happen:

The ROI Gap Will Widen: The 9% of organizations scaling AI successfully will pull further ahead while everyone else stays stuck. The gap compounds. By 2026, leading organizations will have 5-7x better AI ROI than laggards.

Agentic AI Becomes Standard: It won't be rare or experimental anymore. 40% of enterprises will have at least one agentic AI system in production. 15% will have significant agentic AI deployment.

Talent Crisis Gets Worse Before Better: The shortage of AI talent will peak in 2025 before improving. Companies that have already built internal capability will have a massive advantage. Companies still trying to hire will struggle.

Infrastructure Investment Accelerates: Companies will finally fund the infrastructure modernization they should have done years ago. Cloud adoption, data pipeline investment, and security updates will get serious budget.

Regulatory Clarity Increases: Governments will release more detailed AI regulations. This sounds restrictive, but it's actually liberating: clarity lets organizations move forward with confidence instead of being paralyzed by uncertainty.

Cultural Integration Matters More Than Technology: The organizations winning won't be the ones with the most sophisticated models. They'll be the ones who've successfully integrated AI into their culture and operations.

How to Position Your Organization for Success Right Now

If you're a leader reading this, here's what to do immediately:

Audit Your Current Situation

Be honest about where you stand. Are you in early-stage experimentation? Stuck in projects that don't scale? Or actually seeing returns?

Understand your specific bottlenecks. Is it talent? Infrastructure? Organizational alignment? Governance? Budget? Different bottlenecks require different solutions.

Pick Your First Scaling Project

Don't try to fix everything simultaneously. Pick one area where you have:

- Clear business metrics you want to improve

- Quality data available today

- Executive sponsorship

- Cross-functional team support

- Reasonable scope (not your most mission-critical system)

Run this project using the complete framework: clear goals, quality data, proper infrastructure, compliance built-in, cross-functional team.

If it works, replicate the approach. If it doesn't, learn from it and try again.

Invest in Foundations

Set aside 30-40% of your AI budget for foundations, not projects. This sounds like it slows you down. It doesn't. It speeds you up by preventing months of delays on future projects.

Build Internal Capability

Assume you can't hire all the talent you need externally. Identify people on your team who show aptitude for AI. Sponsor their learning. Pair them with mentors. Start them on contained projects.

In 12-18 months, you'll have internal AI capability that understands your business better than any external hire could.

Create Organizational Alignment

Make sure executives, business leaders, and technical teams agree on AI strategy. Misalignment kills projects. Alignment accelerates them.

Set Up Governance

- Clear decision-making processes

- Risk frameworks

- Compliance structures

- Bias monitoring

- Explainability requirements

This takes time upfront but saves months on every project.

Expect Organizational Resistance

Plan for it. Communicate clearly. Show early wins. Invest in reskilling. Help people understand how roles evolve. Create psychological safety for learning and failure.

Culture change is harder than technology change. Plan accordingly.

FAQ

Why are CEOs spending more on AI if they're not seeing returns?

CEOs are responding to intense competitive pressure and fear of being left behind. Competitor moves, analyst reports, and board pressure all push toward "doing something" with AI. Additionally, many AI initiatives are still in early stages where returns haven't materialized yet, but leadership believes they will eventually. Finally, it takes 12-24 months for well-run AI initiatives to generate measurable business returns, so many companies are still in the patience phase.

What's the difference between the 9% that scales AI successfully and everyone else?

The 9% that scale AI have three things in common: (1) They're obsessively clear about what business metrics they want to improve and measure progress relentlessly. (2) They invest in foundations—data governance, infrastructure, skills, governance—before launching ambitious projects. (3) They've aligned their organization around AI, addressed talent gaps through internal development and targeted hiring, and removed bureaucratic obstacles that slow projects down.

Why does talent shortage matter so much if the technology is available?

Because AI projects require people to scope problems correctly, build appropriate solutions, and adapt when things don't work as expected. Without the right talent, teams build technically impressive but strategically useless solutions. They overshoot their targets or undershoot them. They miss edge cases that cause production failures. The technology only becomes valuable when talented people direct it at the right problems.

Is agentic AI the future or is it overhyped?

Both. Agentic AI is genuinely valuable for routine decision-making across sales, operations, customer success, and other domains. Companies using it are seeing real ROI. However, it's not a magic bullet. It works best on well-defined, repeatable decisions with clear success criteria. It won't replace human judgment on complex, ambiguous problems. The hype exaggerates how soon agentic AI will be ubiquitous, but the underlying technology is valuable.

How do we know if an AI project is worth pursuing or if it will waste time and money?

Ask three questions first: (1) Do we have clear, specific success metrics? (2) Do we have quality data today? (3) Can our infrastructure handle this? If you answer "no" to any of these, the project will struggle. Before investing, fix the gaps or pick a different project. The projects that fail are almost always the ones that skip these prerequisites.
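As a rough illustration, the three questions can be written down as a simple go/no-go gate. This is a minimal sketch, not a formal assessment tool: the class name `ProjectReadiness` and its field names are hypothetical, and in practice each answer comes from a human judgment call rather than a boolean someone types in.

```python
from dataclasses import dataclass


@dataclass
class ProjectReadiness:
    """Hypothetical record of the three prerequisite questions."""
    has_clear_success_metrics: bool
    has_quality_data_today: bool
    infrastructure_can_handle_it: bool

    def gaps(self) -> list[str]:
        """Return the prerequisites that are still missing."""
        checks = {
            "clear, specific success metrics": self.has_clear_success_metrics,
            "quality data today": self.has_quality_data_today,
            "infrastructure that can handle it": self.infrastructure_can_handle_it,
        }
        return [name for name, ok in checks.items() if not ok]

    def ready(self) -> bool:
        """A project passes only if every question is answered 'yes'."""
        return not self.gaps()


# Example: metrics and infrastructure are fine, but the data is not.
pilot = ProjectReadiness(
    has_clear_success_metrics=True,
    has_quality_data_today=False,
    infrastructure_can_handle_it=True,
)
print(pilot.ready())  # False
print(pilot.gaps())   # ['quality data today']
```

The point of listing the gaps rather than returning a bare yes/no is that each missing prerequisite tells you what to fix before investing.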

What percentage of AI budget should go to infrastructure versus projects?

I'd recommend: 40% projects, 30% infrastructure modernization, 20% governance and compliance, 10% learning and experimentation. This changes based on where you are. If you have modern infrastructure already, shift more to projects. If you're behind, shift more to infrastructure. But the ratio usually works well as a starting framework.
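To make the starting ratio concrete, here is a minimal sketch that turns it into amounts. The function name `allocate_budget`, the category labels, and the $1,000,000 total are assumptions for illustration only; adjust the percentages to your own situation as described above.

```python
# Starting ratio from the answer above, as whole percentages.
DEFAULT_SPLIT = {
    "projects": 40,
    "infrastructure_modernization": 30,
    "governance_and_compliance": 20,
    "learning_and_experimentation": 10,
}


def allocate_budget(total: int, split: dict[str, int] = DEFAULT_SPLIT) -> dict[str, int]:
    """Split a total budget (whole currency units) by percentage.

    Amounts are rounded down, so a total that does not divide evenly
    leaves a small remainder unallocated.
    """
    if sum(split.values()) != 100:
        raise ValueError("percentages must sum to 100")
    return {name: total * pct // 100 for name, pct in split.items()}


# Hypothetical annual AI budget of $1,000,000.
for category, amount in allocate_budget(1_000_000).items():
    print(f"{category}: ${amount:,}")
```

If your infrastructure is already modern, you might pass a custom `split` that moves points from infrastructure to projects; the check that percentages sum to 100 catches a lopsided edit.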

How long does it actually take to build internal AI capability?

For a software engineer to become productive with supervised learning: 3-4 months with structured training and mentorship. For a data analyst to become a data scientist: 2-3 months. For a business leader to develop AI fluency: 4-6 weeks. The time compresses when you have good mentorship and real projects to apply learning to. It extends if you're learning in isolation or trying to do it part-time.

What's the biggest mistake organizations make with AI?

They skip prerequisites and launch projects before they're ready. They don't have quality data. Their infrastructure can't handle it. They lack governance. They haven't aligned the organization. Then the project fails and they blame AI. What they should have blamed was their own process. The companies that scale AI successfully treat prerequisites seriously.

Should we focus on efficiency or growth with AI?

Growth generates better ROI than efficiency. Efficiency gets you to the status quo faster, but growth opens new possibilities. The best organizations use efficiency initiatives to free up resources, then redirect those resources to growth. But if you had to pick one, growth investments pay higher returns.

The Bottom Line: From Spending to Returns

The paradox of CEOs spending more on AI while struggling to see returns won't last forever.

In the next 24 months, we'll see a clear bifurcation. Organizations that got the fundamentals right—clear goals, quality data, modern infrastructure, aligned teams, proper governance—will scale AI successfully and generate significant ROI. They'll become increasingly valuable and competitive.

Organizations that skipped fundamentals and jumped straight into projects will either get stuck where they are or quietly kill their AI initiatives. Their leadership will conclude "AI doesn't work for us" and redirect budget elsewhere.

The gap between these two groups will become enormous. By 2026, companies scaling AI will have competitive advantages that become hard to overcome. They'll move faster. They'll serve customers better. They'll open new revenue streams. They'll attract better talent.

The good news is that the path from one group to the other is clear. You don't need luck or exceptional talent. You need discipline. Clear goals. Investment in foundations. Organizational alignment. Patient execution.

The organizations reading this and thinking "we should do this differently" have a window to act. That window won't stay open forever.

The question isn't whether you'll spend more on AI. Market pressure ensures you will. The question is whether you'll actually get returns when you do.

The 9% that scale successfully started by being obsessively clear about what they were trying to achieve, investing in foundations before projects, and removing obstacles that slow innovation. They didn't have magical advantages. They just did basic things well.

You can too.

Key Takeaways

- Only 9% of UK organizations successfully scale AI despite 81% of CEOs prioritizing investments, revealing a massive implementation gap

- Internal barriers (talent shortage, bureaucracy, legacy tech) matter more than AI capability itself; fixing these drives 65% success rates vs 20% baseline

- Organizations seeing real ROI shift focus from cost-cutting efficiency to revenue-generating growth initiatives, which deliver 3.76x higher returns

- Foundations-first approach (data governance, infrastructure, skills, governance) leads to 3.25x higher project success rates than jumping straight to projects

- Agentic AI is showing measurable value in autonomous procurement, customer success, and fraud detection, with 81% of companies exploring it
