The Largest Infrastructure Buildout in Human History Is Happening Right Now

Jensen Huang didn't mince words. Standing at the World Economic Forum in Davos, the Nvidia CEO made a declaration that deserves far more attention than it received in tech headlines. He called what's happening right now "the single largest infrastructure buildout in human history" during his discussion with BlackRock CEO Larry Fink.

Not an exaggeration for effect. Not Silicon Valley theatrics. A measured statement from someone who actually understands the scale of what's unfolding.

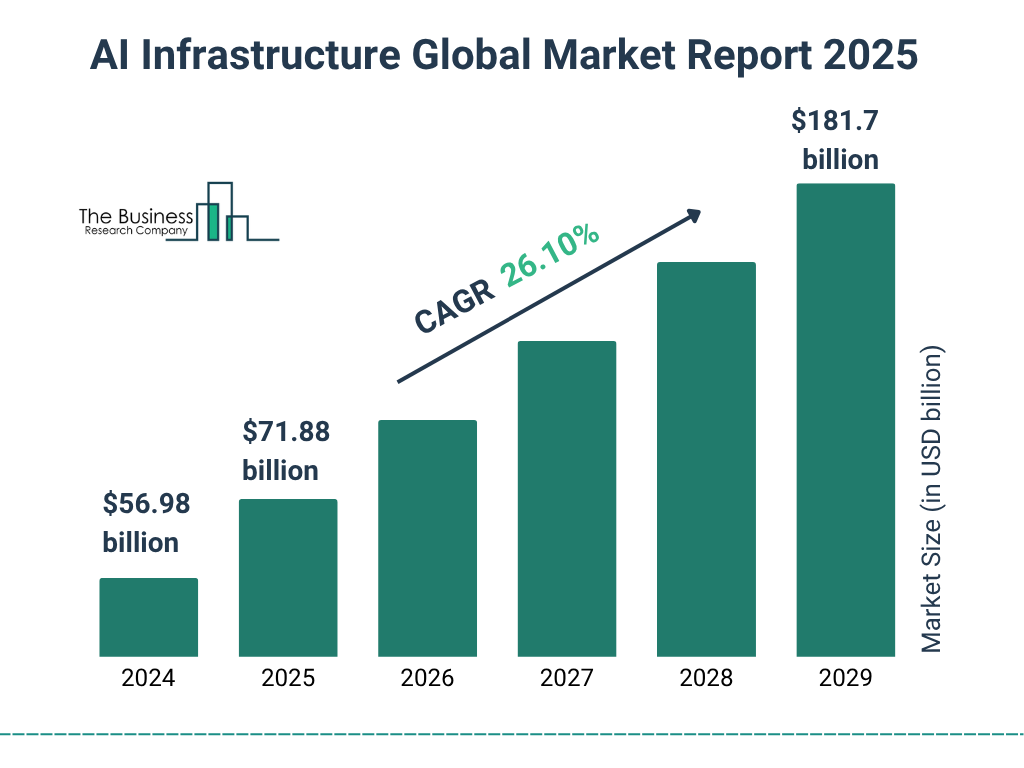

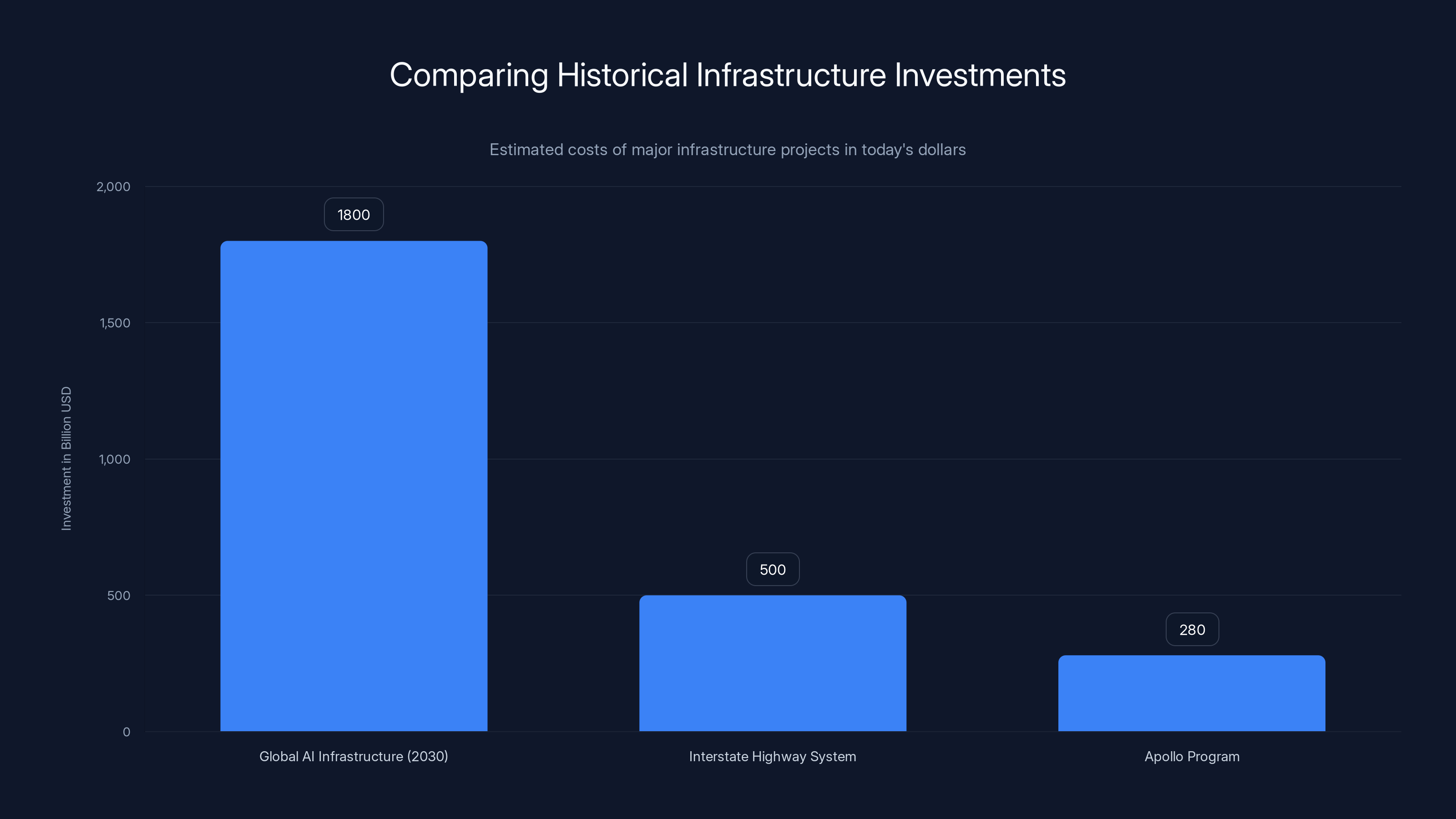

Here's what makes that claim stick: it's not just hype. IDC estimates that global AI infrastructure spending will exceed $1.8 trillion by 2030. That spending isn't spread evenly, either. Data center capacity expansions, power grid upgrades, GPU manufacturing, and networking infrastructure are all accelerating simultaneously, and all are demanding resources at unprecedented scale.

For context, the Interstate Highway System cost roughly

But here's what actually matters: this infrastructure boom isn't just reshaping the tech industry. It's restructuring labor markets, forcing wholesale upskilling of workforces, creating entirely new job categories, and simultaneously threatening to displace workers in fields we thought were safe from automation.

The question isn't whether Huang is right about the scale. The question is: are you positioned to benefit from it, or are you about to get left behind?

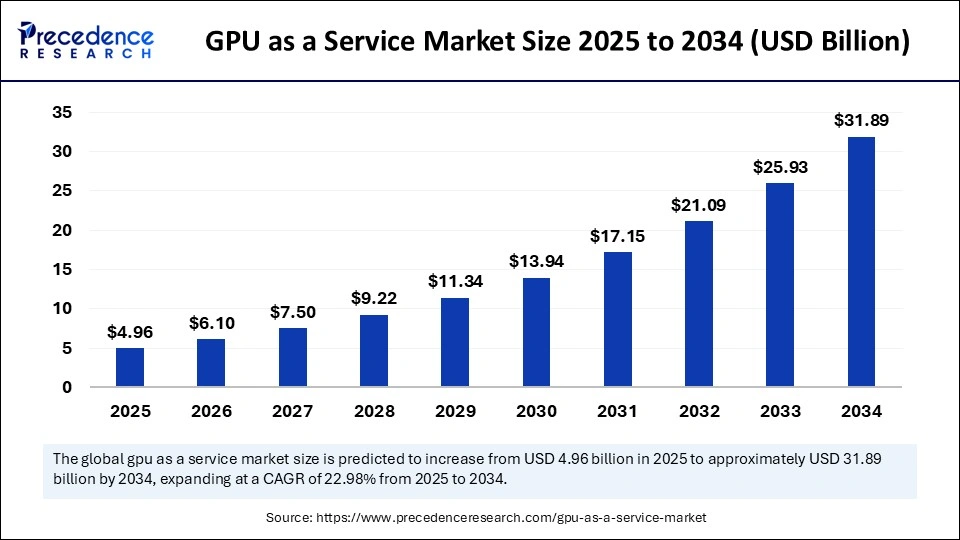

The Scale of GPU Demand Is Actually Staggering

Huang made a specific observation during his Davos interview with BlackRock CEO Larry Fink that reveals just how intense demand has become. He noted that the rental spot prices for GPUs aren't just driving up the cost of cutting-edge H100s and H200s. Older generations—two-generation-old GPUs—are also seeing price increases.

That's the signal that demand has compressed the entire supply chain. When legacy hardware is becoming scarce, you're looking at genuine capacity constraints across the entire stack.

The numbers back this up. In Q3 2024, McKinsey research showed that data center utilization for AI workloads had reached 87% at major cloud providers. That's unsustainably high. Typically, infrastructure operates at 60-70% utilization to leave room for growth and spikes. At 87%, every new customer means either turning work away or initiating emergency expansion projects.
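To make the headroom arithmetic concrete, here is a minimal Python sketch. It assumes utilization is simply load divided by capacity and that load stays constant while capacity is added. The function name `capacity_increase_needed` is illustrative and does not come from any cited source.

```python
def capacity_increase_needed(current_util: float, target_util: float) -> float:
    """Fractional capacity increase so the same load runs at target_util.

    Assumption (for illustration only): utilization = load / capacity,
    and the load does not change while capacity is added.
    """
    if not (0 < current_util <= 1 and 0 < target_util <= 1):
        raise ValueError("utilization values must be in (0, 1]")
    return current_util / target_util - 1.0


if __name__ == "__main__":
    # 87% is the utilization figure quoted in the text; 70% and 60% are
    # the two ends of the "typical" band.
    for target in (0.70, 0.60):
        extra = capacity_increase_needed(0.87, target)
        print(f"to reach {target:.0%} utilization: add {extra:.1%} more capacity")
```

Under these assumptions, getting from 87% back to 70% takes roughly 24% more capacity, and getting back to 60% takes about 45% more, before any new demand is counted.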

Nvidia's revenue hit $60.9 billion in fiscal 2024, and its data center segment alone reached $47.5 billion, a 217% year-over-year increase. Let that sink in. The company most directly benefiting from this buildout is seeing growth that far exceeds normal business cycles.

But demand isn't uniform. Enterprises have different needs:

- Hyperscalers (AWS, Google Cloud, Azure) need massive quantities of the latest GPUs for training large language models

- Mid-market enterprises are acquiring previous-generation hardware for inference workloads and fine-tuning

- Edge computing providers need distributed GPU infrastructure closer to end users

- Startups and researchers are desperate for any available capacity at any price point

This fragmentation means that GPU supply challenges cascade across the entire ecosystem. When the newest chips are scarce, demand shifts to older inventory. When older inventory becomes scarce, prices go up everywhere.

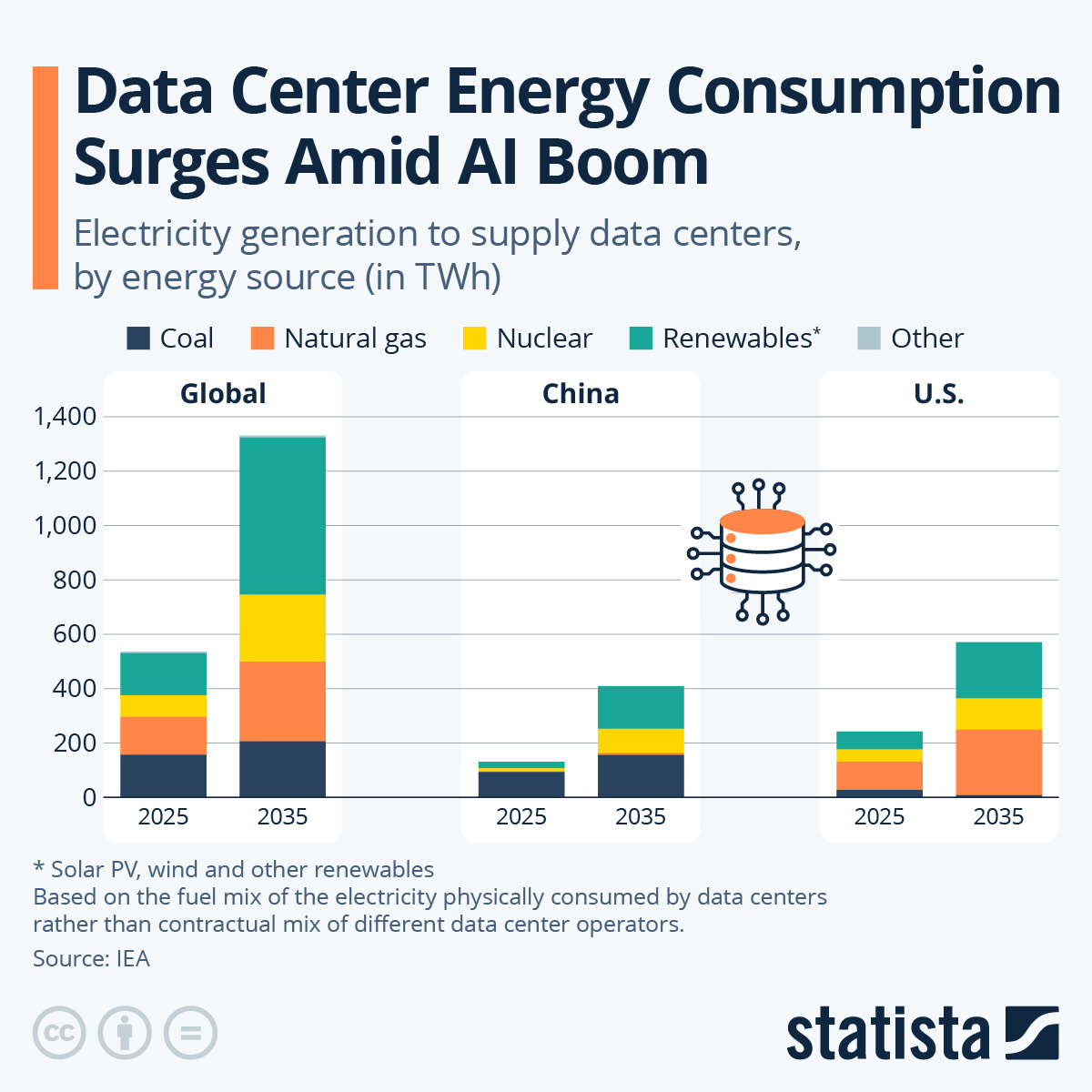

The Power Grid Crisis Nobody's Talking About

Here's the uncomfortable truth that gets buried in the excitement about AI: the infrastructure buildout isn't just about chips and data centers. It's about power.

A single large-scale AI data center consuming 500 megawatts of continuous power is now normal. Some planned facilities exceed 1,000 megawatts. For comparison, a typical nuclear power plant generates 1,000-1,200 megawatts. We're building data centers with power requirements equivalent to nuclear plants, and we're building dozens of them simultaneously across North America, Europe, and Asia.
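Megawatts describe a rate, so it can help to convert the figures above into annual energy. The sketch below assumes the facility draws its rated power around the clock for a full year, which is a simplification. The function name `annual_energy_twh` is illustrative.

```python
HOURS_PER_YEAR = 24 * 365  # 8,760 hours, ignoring leap years


def annual_energy_twh(continuous_mw: float) -> float:
    """Energy used in one year by a constant load, in terawatt-hours.

    Assumption: the load runs at the stated power for every hour of the year.
    """
    mwh = continuous_mw * HOURS_PER_YEAR
    return mwh / 1_000_000  # 1 TWh = 1,000,000 MWh


for mw in (500, 1000):
    print(f"{mw} MW continuous -> {annual_energy_twh(mw):.2f} TWh per year")
```

At a constant draw, 500 megawatts works out to 4.38 terawatt-hours per year, and 1,000 megawatts to 8.76.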

This creates a genuine crisis. Regional power grids weren't designed for this kind of demand concentration. U.S. Department of Energy data shows that electricity demand growth had been stagnant at roughly 1% annually for the past 20 years. In 2023-2024, that accelerated to 3-5% growth, driven almost entirely by data center expansions.
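The gap between 1% and 3-5% annual growth looks small until it is compounded. As a rough sketch, assuming a constant growth rate (which real demand will not follow exactly), the doubling time of electricity demand can be computed as follows. The function name `doubling_time_years` is illustrative.

```python
import math


def doubling_time_years(annual_growth: float) -> float:
    """Years for demand to double under constant compound annual growth."""
    if annual_growth <= 0:
        raise ValueError("growth rate must be positive")
    return math.log(2) / math.log(1 + annual_growth)


# Growth rates quoted in the text: the historical ~1% and the recent 3-5%.
for rate in (0.01, 0.03, 0.05):
    years = doubling_time_years(rate)
    print(f"{rate:.0%} annual growth: demand doubles in about {years:.0f} years")
```

At 1% growth, demand takes about 70 years to double. At 3% it takes about 23 years, and at 5% about 14, which is close to the upper end of the time it takes to plan and build a single new power plant.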

The problem compounds because:

- New power plants take 5-10 years to plan and build. We're adding demand in months.

- Renewable energy infrastructure has its own supply chain bottlenecks. Solar panel manufacturing, battery storage production, transmission line installation all face capacity limits.

- Regional power markets have wildly different constraints. California has strict environmental regulations but limited nuclear capacity. Texas has a deregulated market but faces summer heat challenges. Virginia has capacity but strained transmission.

- Data centers themselves are energy-hungry monsters. A single GPU generates substantial heat. A data center with thousands of GPUs creates cooling requirements that rival small cities.

Electric utilities are scrambling. Duke Energy, NextEra Energy, Southern Company—all announcing major capital expenditures specifically for data center power delivery. And yet, the grid remains a genuine constraint.

There's a second-order effect here that most analyses miss: energy costs. When regional power becomes constrained, prices spike. Data center operators have started negotiating directly with power plants, some signing 20-year contracts at premium rates just to secure allocation. This effectively locks in energy costs as a fixed operating expense, which changes data center economics fundamentally.

Microsoft and the Race for Infrastructure Control

Microsoft CEO Satya Nadella made his own case at Davos that's worth examining. He dismissed AI bubble fears by pointing out that the infrastructure investment thesis differs fundamentally from past tech bubbles. Previous bubbles were concentrated in a narrow sector. This one is pervading every industry.

Nadella's framing matters because Microsoft is making an aggressive bet. The company is committing to massive Azure infrastructure expansion, specifically AI-optimized data center capacity. Over the next three years, Microsoft is planning capex increases that could exceed $50 billion annually, with significant portions allocated to AI infrastructure.

Why Microsoft? Because they've realized something critical: the infrastructure layer is where margins and control concentrate. If you own the data centers, the GPUs, the interconnects, the power arrangements—you own the entire AI economy that sits on top.

This is different from being a software company. Azure is becoming manufacturing infrastructure. It's capital-intensive, margin-dependent on utilization rates, and strategically critical for anyone building AI applications.

Microsoft's partnership with OpenAI suddenly makes different sense when you understand this infrastructure play. OpenAI has trained ChatGPT, GPT-4, GPT-4 Turbo, and upcoming models on Microsoft infrastructure. The hosting relationship locks in OpenAI's dependencies while generating utilization guarantees for Azure.

The Robotics Opportunity Nobody Expected

Now here's where it gets interesting. Huang framed robotics as providing a "once-in-a-generation opportunity for European manufacturing." This wasn't throwaway commentary. It's strategic positioning.

Robotics companies need GPUs. They need edge computing. They need real-time AI inference—the ability to run trained models on local hardware, not cloud infrastructure. A humanoid robot performing warehouse work needs to process visual data, make decisions about object manipulation, and do it in real-time on local hardware.

This opens a completely different infrastructure market. You're not just selling cloud compute. You're selling edge chips, embedded processors, specialized hardware for specific robotic applications.

Companies like Boston Dynamics, Tesla (with Optimus), Apptronik, and Kindred AI are all pushing toward commercial deployment of humanoid robots. But they all need computing infrastructure. Every robot shipped is a recurring revenue opportunity: cloud connections, model updates, telemetry processing, decision logging.

For manufacturing, particularly in high-labor-cost regions like Western Europe, robotics represents a genuine cost reduction play. German manufacturers competing with lower-cost Asian production can suddenly compete again if they automate effectively.

The infrastructure question is different for robotics than for LLMs. It's less about raw computational power and more about distributed edge computing, real-time inference, and supply chain optimization. But it's still infrastructure that needs to be built.

The Job Creation Thesis vs. The Upskilling Reality

Huang made an interesting argument about employment. He said the infrastructure buildout would create "a lot of jobs," specifically highlighting that "tradecrafts" will be vital. What he meant: data centers need electricians, HVAC technicians, construction workers, engineers, network installers. Physical infrastructure requires physical labor.

He's not wrong. The infrastructure boom IS creating jobs. U.S. Bureau of Labor Statistics data shows that data center technician positions grew 12% year-over-year in 2024, faster than overall job market growth. Construction and electrical trades are seeing increased demand specifically for data center buildout.

But here's the disconnect: those jobs require specialized training. You can't hire someone off the street to install and configure a GPU cluster. You need:

- HVAC engineers trained on data center cooling systems

- Network engineers who understand high-speed interconnects

- Database administrators who understand distributed systems

- Linux systems engineers with performance tuning expertise

- Data center technicians certified in specific protocols

The upskilling crisis is real. Educational institutions haven't caught up. Bootcamps are popping up, but they're scattered and inconsistent in quality. Companies are forced to either grow talent internally or bid aggressively for limited supply.

Nadella's counterpoint becomes clearer in this context. He suggested that AI adoption mirrors previous technology waves: employees need to upskill to stay relevant. Huang made the same point more bluntly: "You're not going to lose your job to AI, you're going to lose your job to someone who uses AI."

That's not comforting. That's a warning. It means you need to actively develop AI competency or become obsolete in your field.

Manufacturing and Industrial Transformation

Beyond services and white-collar work, the infrastructure buildout is forcing industrial transformation. Chip manufacturing is being reshored. Intel, Samsung, and TSMC are all planning significant capacity expansions in the United States and Europe.

Intel alone is investing $20 billion in new fabrication plants in Arizona, Ohio, and New Mexico. TSMC is building Arizona fabs and considering additional European facilities. This isn't just capacity expansion—it's geopolitical risk reduction. Countries want chip manufacturing sovereignty.

The industrial jobs created by chip manufacturing are different from data center jobs. They're specialized, high-skill, high-wage positions. A fab technician needs deep technical knowledge of semiconductor processing. These aren't jobs that get automated easily—they require human expertise and judgment.

But the geography matters. Manufacturing jobs are localizing in specific regions: Arizona, Ohio, South Korea, Taiwan, potentially Ireland and Germany. This creates regional economic transformation—suddenly, rural Ohio becomes a tech hub because that's where Intel built fabs.

The supply chain implications are profound. Every data center now depends on a functioning semiconductor manufacturing base that's distributed globally. Disruptions to any major manufacturing node cascade across the entire infrastructure ecosystem.

The Energy Transition Paradox

Here's a paradox nobody's resolving: the AI infrastructure boom requires massive energy. To power all these data centers sustainably, we need to massively expand renewable energy and grid infrastructure. But renewable energy manufacturing and grid buildout also require energy.

The math gets uncomfortable. Expanding solar panel manufacturing in the U.S. requires industrial capacity that doesn't exist. Wind turbine production is geographically concentrated. Battery manufacturing for grid storage is bottlenecked by lithium and cobalt supply chains.

So you get a situation where data center expansion literally competes with renewable energy infrastructure expansion for the same resources, the same labor, the same manufacturing capacity.

Some forward-thinking data center operators are contracting directly with renewable energy producers. Google has signed massive power purchase agreements with solar and wind farms. Amazon has similarly committed to renewable energy targets. These arrangements lock in longer-term energy costs while incentivizing renewable expansion.

But this creates another problem: not all regions have suitable renewable resources. A data center operator in Arizona can reasonably expect solar power abundance. A data center operator in the Pacific Northwest might rely on hydroelectric. A European operator faces completely different constraints.

This geographic variation in energy costs and availability will eventually force data center locations toward regions with reliable, affordable power. That means some planned facilities in energy-constrained regions might not happen, or might be dramatically more expensive.

AI Is Actually Pervading Every Industry, Not Just Tech

Nadella's Davos point deserves expansion. He said the telltale sign of a real technology revolution—as opposed to a bubble—is whether the conversation extends beyond tech companies. Are non-tech executives talking about AI? Are industrial companies investing in AI? Are financial institutions restructuring around AI capabilities?

The answer is unequivocally yes, and it's accelerating.

Financial Services: Banks are deploying AI for fraud detection, credit scoring, algorithmic trading, and customer service. JPMorgan has integrated AI across risk management, compliance, and trading operations. This requires infrastructure investment in data pipelines, GPU compute, and real-time inference systems.

Healthcare: Radiology firms are deploying AI diagnostic systems. Pharmaceutical companies use AI for drug discovery. Hospital networks need AI infrastructure for patient data analysis. Mayo Clinic established an entire AI research division. This represents infrastructure spending in healthcare IT.

Manufacturing: Industrial IoT sensors generate massive data streams. Manufacturing operations use AI for predictive maintenance, quality control, and supply chain optimization. Siemens is building AI capabilities into their industrial software platform. Every major industrial equipment manufacturer is doing similar work.

Agriculture: Precision agriculture uses AI-powered drones and sensors for crop monitoring, pest detection, and yield optimization. John Deere integrated AI into farm equipment. This requires edge compute infrastructure and cloud processing.

Energy Sector: Utilities use AI for grid optimization, demand forecasting, and predictive maintenance of power infrastructure. Enel and other European utilities are deploying AI across operations. This requires data center investment specifically for energy sector applications.

The point is clear: AI infrastructure isn't a tech industry phenomenon. It's genuinely cross-industry, and that means the infrastructure buildout touches every economic sector.

The Geographic Distribution of Infrastructure Investment

Infrastructure investment isn't evenly distributed. Certain regions are becoming AI hubs, which attracts more investment, which accelerates concentration.

The United States dominates, with major data center expansions in Virginia, Texas, Arizona, California, and the Midwest. The U.S. benefits from abundant land, reliable power, and existing tech ecosystem density.

Europe is investing heavily but facing constraints. Stringent environmental regulations mean data center planning takes longer. Energy costs are higher. But the commitment to AI sovereignty is driving investment in Germany, France, the Netherlands, and Ireland.

Asia-Pacific sees concentrated investment in Singapore, Japan, South Korea, and Australia. China is building capacity separately, outside the Western infrastructure ecosystem. India is emerging as a secondary hub, partly due to labor cost advantages in AI development and training.

This geographic distribution has implications for where jobs get created, where talent concentrates, and where economic growth happens next.

The Upskilling and Retraining Imperative

Both Huang and Nadella acknowledge that upskilling is non-negotiable. But the path forward is unclear, and institutions are struggling.

Here's the challenge: the skills that matter in 2025 didn't exist five years ago. You can't train data center engineers from a 2020 curriculum. You can't teach GPU optimization techniques that were discovered last year using traditional educational models.

Companies have started taking training into their own hands. Nvidia offers extensive certification programs for GPU programming and data center administration. Coursera and edX partner with universities to offer AI and machine learning courses. Tech bootcamps have proliferated, offering AI-focused tracks.

But there's a quality problem. Not all training is rigorous. Not all certifications mean anything. The market for legitimate training is being flooded with low-quality alternatives.

What's emerging is a three-tier labor market:

- High-skill, high-wage positions for engineers, architects, and specialists with deep expertise and provable credentials

- Mid-skill positions for technicians and operators trained through legitimate bootcamps or company programs

- Low-skill positions for physical labor—construction, installation, basic maintenance

Mid-tier positions are where vulnerability concentrates. These jobs might exist today but can be automated in a few years once the technology matures. A data center technician executing standard procedures today might be replaced by robotic systems in 2027.

The career strategy has shifted. It's no longer about finding a stable job. It's about continuously developing skills that stay ahead of automation. This requires:

- Active learning: Allocating time and resources to skill development every quarter

- Specialization: Developing deep expertise in areas less likely to be automated

- Adaptability: Being willing to shift to different fields if your current specialization becomes obsolete

- Certification: Getting recognized, verifiable credentials that employers trust

The Competitive Dynamics and Consolidation

Huang's comments about GPU scarcity reveal something uncomfortable: Nvidia has a near-monopoly position in AI accelerators. AMD and Intel are competing, but Nvidia's software ecosystem (CUDA) and first-mover advantage are enormous.

This creates a weird dynamic. Every company building data centers needs Nvidia chips. Nvidia has unprecedented leverage in setting prices, terms, and availability. Yet Nvidia can't meet demand. So every buyer is frustrated but dependent.

This dependency is driving consolidation. Companies are integrating backward—building their own chips specifically for their workloads. Google built TPUs. Amazon developed Trainium and Inferentia chips. Apple created Neural Engine chips. These aren't replacing Nvidia across the board, but they reduce dependency for specific workloads.

For most organizations, custom silicon is too expensive and time-consuming. They depend on Nvidia, AMD, and Intel. But the major cloud providers are hedging by building custom silicon for their specific use cases. This creates a bifurcated market where:

- Hyperscalers use a mix of commodity GPUs and custom silicon

- Enterprise customers rely almost entirely on commodity GPUs

- Startups and researchers use whatever they can get, usually older-generation hardware

The consolidation extends to data center operators themselves. Smaller regional providers struggle to compete for GPU allocation. Larger operators with deeper relationships with chip manufacturers get preferential allocation and better pricing. This is driving consolidation in the data center market toward a few dominant players.

Looking Ahead: The Next 18 Months

If the infrastructure buildout continues at current pace—and there's no reason to expect it to slow—the next 18 months will see significant developments.

First, we'll see broader evidence of power grid constraints. More data center projects will face delays due to power availability. Regional power auctions will become more competitive, driving energy costs up. This will force data center economics to reset, potentially pausing some planned expansions.

Second, semiconductor manufacturing capacity will start increasing materially. Intel's new Arizona fabs will come online. TSMC Arizona production will scale. This will ease chip supply constraints but not eliminate them. Custom silicon from hyperscalers will become more prevalent.

Third, robotics will transition from lab demonstrations to meaningful commercial deployment. We'll see humanoid robots in actual warehouses, not just test deployments. This will validate the infrastructure opportunity Huang highlighted.

Fourth, upskilling will become a business in its own right. Companies specializing in AI talent development, training infrastructure, and certification will emerge and consolidate rapidly. The best players will partner directly with enterprises.

Fifth, energy constraints will force a more geographically distributed approach to AI infrastructure. Rather than concentrating in traditional data center hubs, infrastructure will spread toward energy-abundant regions. This could benefit areas like the Pacific Northwest (hydroelectric), Texas (wind), and Southwest (solar).

Sixth, government involvement will increase. Every government realizes AI infrastructure is strategic. Policy interventions—tax incentives, land grants, energy subsidies—will proliferate. This politicizes the infrastructure buildout, making it less purely market-driven.

The Opportunity for Organizations and Individuals

So what does this mean for you, whether you're leading a company, managing a team, or planning your career?

For companies, the infrastructure buildout creates opportunities but also imperatives. You need to:

- Invest in AI capability building now, before talent becomes impossible to recruit

- Develop strategy around AI adoption in your specific industry

- Build partnerships with infrastructure providers early, before they're overcommitted

- Plan for upskilling of existing workforce and recruitment of new talent

- Consider infrastructure as a strategic advantage, not just a cost center

For individuals, the landscape is simultaneously threatening and opportunity-rich.

- Learn AI and machine learning fundamentals, even if it's not your current specialty

- Develop infrastructure expertise if you're in tech—DevOps, cloud architecture, distributed systems

- Pursue certifications that employers actually recognize and value

- Build a portfolio of real work, not just coursework

- Stay adaptable—the specific skills that matter will change every few years

For everyone, Huang's fundamental message is true: this is a once-in-a-lifetime opportunity. The infrastructure buildout will create wealth, opportunity, and fundamental economic restructuring. The question is whether you'll position yourself to benefit from it.

The Risk Factors Nobody's Discussing Openly

For all the optimism about AI and infrastructure opportunity, there are genuine risks that deserve acknowledgment.

Economic sustainability risk: Current valuations assume AI applications will generate sufficient ROI to justify the infrastructure investment. If that doesn't materialize—if enterprises deploy AI and find productivity gains are smaller than expected—the infrastructure buildout becomes a stranded asset. Companies spent billions on data center capacity that isn't fully utilized.

Technological risk: Current AI architectures rely on specific approaches (transformer-based models, GPU-intensive training). What if the technology paradigm shifts? What if a fundamentally different approach emerges that's less computationally intensive? All the GPU infrastructure built for transformer models becomes partially obsolete.

Energy risk: The infrastructure buildout depends on affordable energy. If energy costs spike due to geopolitical events, regulatory changes, or supply shocks, data center economics reset dramatically. A facility designed to be profitable at

Geopolitical risk: Chip manufacturing is concentrated in Taiwan. Data center operations depend on global supply chains. Disruptions such as trade wars, regional conflicts, and export restrictions cascade through the entire infrastructure ecosystem. U.S. restrictions on Nvidia chip exports to China have already created supply shocks. Further restrictions could be catastrophic.

Regulation risk: Governments are increasingly interested in AI governance. Energy-intensive data centers might face carbon taxes or restrictions. Labor regulations might require specific training or certification. Antitrust scrutiny might break up dominant infrastructure providers. These aren't guaranteed, but they're real risks.

These risks don't invalidate Huang's fundamental thesis. The infrastructure buildout is real, and it is massive. But it's not risk-free, and assumptions about continuation of current trends aren't guaranteed.

TL;DR

- Infrastructure buildout is real: Global AI infrastructure spending could exceed $1.8 trillion by 2030, making it genuinely the largest in human history

- Power is the constraint: Data centers require massive power; regional grids are struggling to keep up, creating a genuine bottleneck for expansion

- Nvidia has extraordinary leverage: GPU demand vastly exceeds supply, giving Nvidia near-monopoly pricing power and dependency that's driving consolidation

- Upskilling is non-negotiable: The infrastructure boom creates jobs, but requires specialized skills; traditional education can't keep pace, and talent competition is intense

- This is cross-industry: AI adoption isn't concentrated in tech; finance, healthcare, manufacturing, agriculture, and energy are all deploying AI infrastructure, validating the scale thesis

- Geographic concentration matters: Infrastructure investment concentrates in specific regions, creating regional winners and losers; energy abundance and regulatory environment determine where buildout happens

- Robotics is the next wave: Humanoid robotics requires edge computing infrastructure; this represents a second phase of the buildout focused on distributed edge hardware, not just cloud GPU

- Career strategy must adapt: Jobs will be created, but specific skills matter enormously; continuous learning and specialization matter more than ever; the mid-tier is most vulnerable to obsolescence

Global AI infrastructure spending is projected to reach $1.8 trillion by 2030, far surpassing historical projects like the Interstate Highway System and the Apollo Program. Estimated data.

FAQ

What does Jensen Huang mean by "the single largest infrastructure buildout in human history"?

Huang is referring to the combined global investment in data centers, power infrastructure, semiconductor manufacturing, and networking hardware required to support AI applications. This infrastructure spending is projected to exceed $1.8 trillion by 2030.

Why is GPU availability such a constraint on AI infrastructure buildout?

Demand for GPUs currently far exceeds supply. Nvidia alone cannot manufacture enough H100 and H200 chips to meet demand from cloud providers, enterprises, startups, and researchers. This constraint cascades through the market—when cutting-edge GPUs are unavailable, demand shifts to older-generation hardware, driving up prices across the entire GPU market. Spot rental prices for two-generation-old GPUs are increasing, which is a clear signal of severe supply constraints. This forces many organizations to wait months or negotiate long-term contracts at premium prices just to secure GPU allocation.

What's driving the power grid crisis related to AI data centers?

A single large-scale AI data center can consume 500-1,000 megawatts of continuous power, equivalent to a nuclear power plant. Multiple such facilities are being planned simultaneously across North America, Europe, and Asia-Pacific. Regional power grids, designed for much lower demand growth (historically 1% annually), now face 3-5% annual growth driven by data center expansions. Building new power plants takes 5-10 years, while data center deployment happens in months. This time mismatch creates genuine electricity supply constraints in many regions, forcing some data center projects to face delays or become more expensive due to premium energy costs.

How will the infrastructure buildout affect employment?

The buildout will create jobs across multiple categories: data center technicians, HVAC engineers, network specialists, electricians, construction workers, and GPU/chip designers. Data center technician positions grew 12% year-over-year in 2024, faster than overall job market growth. However, these jobs require specialized training that educational institutions are struggling to provide quickly enough. The real risk isn't unemployment from automation but displacement of workers who don't develop relevant skills. Huang's point was accurate: "You're not going to lose your job to AI; you're going to lose your job to someone who uses AI."

What's the difference between cloud GPU infrastructure and edge robotics infrastructure?

Cloud GPU infrastructure (hyperscaler data centers with massive GPU clusters) focuses on training large language models and running inference at scale for cloud applications. This is centralized, capital-intensive, and bandwidth-heavy. Edge robotics infrastructure, by contrast, distributes compute closer to end devices—robots, IoT sensors, industrial equipment. This requires smaller-scale but more numerous computing nodes optimized for real-time inference and local decision-making. The robotics opportunity Huang highlighted requires a fundamentally different infrastructure approach: edge chips, distributed systems, and local processing rather than centralized cloud compute.

Why are hyperscalers like Google and Amazon building custom AI chips?

Hyperscalers are building custom silicon (Google's TPUs, Amazon's Trainium and Inferentia chips) to reduce dependency on Nvidia's near-monopoly and optimize hardware for their specific workloads. A hyperscaler running millions of inference requests for their own services doesn't need the flexibility of general-purpose GPUs; they can optimize for their exact use case. Custom silicon provides better cost-per-inference, faster training for specific architectures, and independence from Nvidia's supply constraints and pricing. For enterprises and smaller organizations, custom silicon remains too expensive, so they depend on commodity GPUs. This creates a bifurcated market.

What's the timeline for when these infrastructure constraints will ease?

Semiconductor manufacturing capacity from new Intel, TSMC, and Samsung fabs will begin coming online in 2025-2026, providing some supply relief. However, demand may grow faster than new capacity comes online. Power grid constraints will remain acute for 2-3 years in many regions before new power plants and renewable energy infrastructure come online. Upskilling and talent constraints will persist for 3-5 years minimum, as educational institutions slowly adapt curricula and bootcamp quality varies widely. The most likely scenario is incremental easing over time, not sudden relief: certain constraints will ease while others tighten.

Should I pursue a career in AI or infrastructure roles?

Yes, with important caveats. The demand is genuine, salaries are competitive, and job security is reasonable in the near term (2-3 years). However, you must commit to continuous learning—the specific skills that matter today may be less relevant in 3-5 years. Pursue hands-on experience and verifiable credentials rather than just coursework. Specialize in areas less likely to be automated (architecture and strategy rather than execution and routine optimization). Recognize that some mid-tier technical roles might be vulnerable as automation technology matures. The safest career moves involve developing skills that create competitive advantage and can't be easily commoditized.

What does the infrastructure buildout mean for investors?

Investors have multiple exposure avenues: semiconductor manufacturers (Nvidia, AMD, Intel), data center REITs and operators, renewable energy companies, power grid infrastructure providers, AI software companies, and infrastructure-focused private equity. However, competition is intense, valuations are stretched in many segments, and execution risk is real. The best opportunities often lie in less-obvious places: specialized service providers for data center deployment, energy infrastructure companies focused on long-term power purchase agreements, and talent/upskilling providers. The infrastructure buildout is real, but identifying which specific investments will deliver returns requires deep domain knowledge.

What happens if AI applications don't deliver the promised productivity gains?

This is the real risk few discuss openly. If enterprises deploy AI and discover that productivity improvements are smaller than expected (say, 5% efficiency gains rather than 30%), the ROI calculation for the massive infrastructure investment becomes questionable. Companies might be left with billions of dollars of underutilized data center capacity. The result could be a stranded asset crisis in which infrastructure investments fail to deliver expected returns, companies write down assets, and growth slows dramatically. This risk is why energy costs, power availability, and geographic diversification matter: if one region or approach proves uneconomical, others can absorb the loss.
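
To make the 5% versus 30% comparison concrete, here is a minimal payback sketch. Every dollar figure in it (`CAPEX`, `BASELINE_COST`, `ANNUAL_OPEX`) is a hypothetical assumption chosen for illustration, not data from any company, and the `payback_years` helper is a deliberately simplified model that ignores discounting and depreciation.

```python
# Illustrative only: all dollar figures are hypothetical assumptions.

def payback_years(capex: float, baseline_cost: float,
                  efficiency_gain: float, annual_opex: float) -> float:
    """Years needed to recover capex from net annual savings.

    Returns infinity when savings never exceed operating costs.
    """
    annual_savings = baseline_cost * efficiency_gain - annual_opex
    if annual_savings <= 0:
        return float("inf")
    return capex / annual_savings

CAPEX = 1_000_000_000          # assumed infrastructure investment, USD
BASELINE_COST = 2_000_000_000  # assumed annual cost base that AI is applied to, USD
ANNUAL_OPEX = 50_000_000       # assumed yearly power and operations cost, USD

for gain in (0.30, 0.05):
    years = payback_years(CAPEX, BASELINE_COST, gain, ANNUAL_OPEX)
    print(f"{gain:.0%} efficiency gain -> payback in {years:.1f} years")
```

Under these assumed inputs, payback stretches from roughly 1.8 years at a 30% gain to 20 years at a 5% gain, which is the kind of shift that can turn a sound investment into a stranded asset.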



Conclusion

Jensen Huang's statement about the largest infrastructure buildout in human history isn't hyperbole. The numbers are real. The scale is unprecedented. The implications are profound.

But recognizing scale doesn't mean assuming smooth execution or guaranteed returns. Infrastructure buildouts face constraints: power availability, semiconductor supply, talent scarcity, geopolitical risk, and regulatory uncertainty. The organizations and individuals who succeed will be those who understand these constraints, position themselves strategically, and commit to continuous adaptation.

The opportunity is genuine and transformative. The window to position yourself is real but narrowing. The cost of missing it is significant but still manageable today. The question isn't whether the infrastructure buildout is happening. The question is whether you're going to be part of it, or disrupted by it.

The infrastructure boom created by AI and robotics represents a generational shift in technology, labor markets, and economic structure. For those willing to invest in developing the right skills, building the right partnerships, and adapting to rapidly changing requirements, it offers opportunity on a scale that might not repeat for another generation. For those who ignore it and assume their current skills and position are safe, the risk of obsolescence is very real.

The time to decide which camp you'll be in isn't 2026 or 2027. It's now, in 2025, while the infrastructure buildout is accelerating and talent competition is intensifying. The infrastructure revolution is here. The remaining question is what role you will play in it.

Key Takeaways

- $1.8 trillion in global AI infrastructure spending by 2030 exceeds Interstate Highway System and Apollo program costs combined

- GPU supply constraints extend beyond latest generation—two-generation-old GPUs seeing price increases due to severe demand imbalance

- Regional power grids face 3-5% annual demand growth from data centers, but new power plants take 5-10 years to build, creating genuine energy constraints

- Data center technician positions growing 12% annually, 6.7x faster than overall employment, creating intense talent competition and 23% salary increases

- Robotics represents second phase of AI infrastructure buildout, requiring edge computing infrastructure distributed differently than cloud GPU concentration

- Hyperscalers building custom silicon (Google TPUs, Amazon Trainium) to reduce Nvidia dependency and optimize for specific workloads, bifurcating the GPU market

- AI upskilling isn't optional—workers must continuously develop new skills or face displacement by competitors with AI competency

- Geopolitical, energy, and regulatory risks could strand infrastructure investments if economic assumptions about AI ROI prove optimistic
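
The power-grid takeaway above can be checked with simple compounding. The sketch below uses only the ranges quoted there (3-5% annual demand growth, 5-10 year build times); treating growth as constant year over year is a simplifying assumption, and `cumulative_growth` is a hypothetical helper name.

```python
# Illustrative arithmetic: constant annual compounding is an assumption.

def cumulative_growth(annual_rate: float, years: int) -> float:
    """Total fractional growth after compounding annual_rate over the given years."""
    return (1 + annual_rate) ** years - 1

for rate in (0.03, 0.05):
    for years in (5, 10):
        growth = cumulative_growth(rate, years)
        print(f"{rate:.0%}/yr over {years} years -> +{growth:.0%} demand")
```

Across those ranges, grid demand grows by roughly 16% to 63% in the time it takes to bring a single new plant online, which is why the constraint cannot be resolved quickly.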

