Can We Move AI Data Centers to Space? The Physics Says No [2025]

The idea sounds almost elegant in its simplicity. Earth's AI data centers are burning through electricity at catastrophic rates, draining water supplies, and heating up entire regions. Solar panels in space never stop receiving sunlight. Radiation works fine without air. Problem solved, right?

Wrong.

This is one of those moments where a superficially brilliant idea collides with the actual laws of physics. And physics, as it turns out, doesn't negotiate.

Let me walk you through why space data centers sound good on a pitch deck but fail catastrophically when you do the math.

TL;DR

- Energy reality: Space-based data centers would need 2-3 times more power than Earth-based ones due to radiation inefficiency

- Thermal nightmare: Computers in space cool down extremely slowly through radiation alone, requiring massive radiator panels

- Launch economics: Sending a full data center's worth of servers to orbit costs billions, not millions

- Radiation damage: Space exposes electronics to cosmic rays and solar radiation that degrade hardware at accelerated rates

- Bottom line: Earth-based data centers with renewable energy and water recycling remain vastly more practical than orbital alternatives

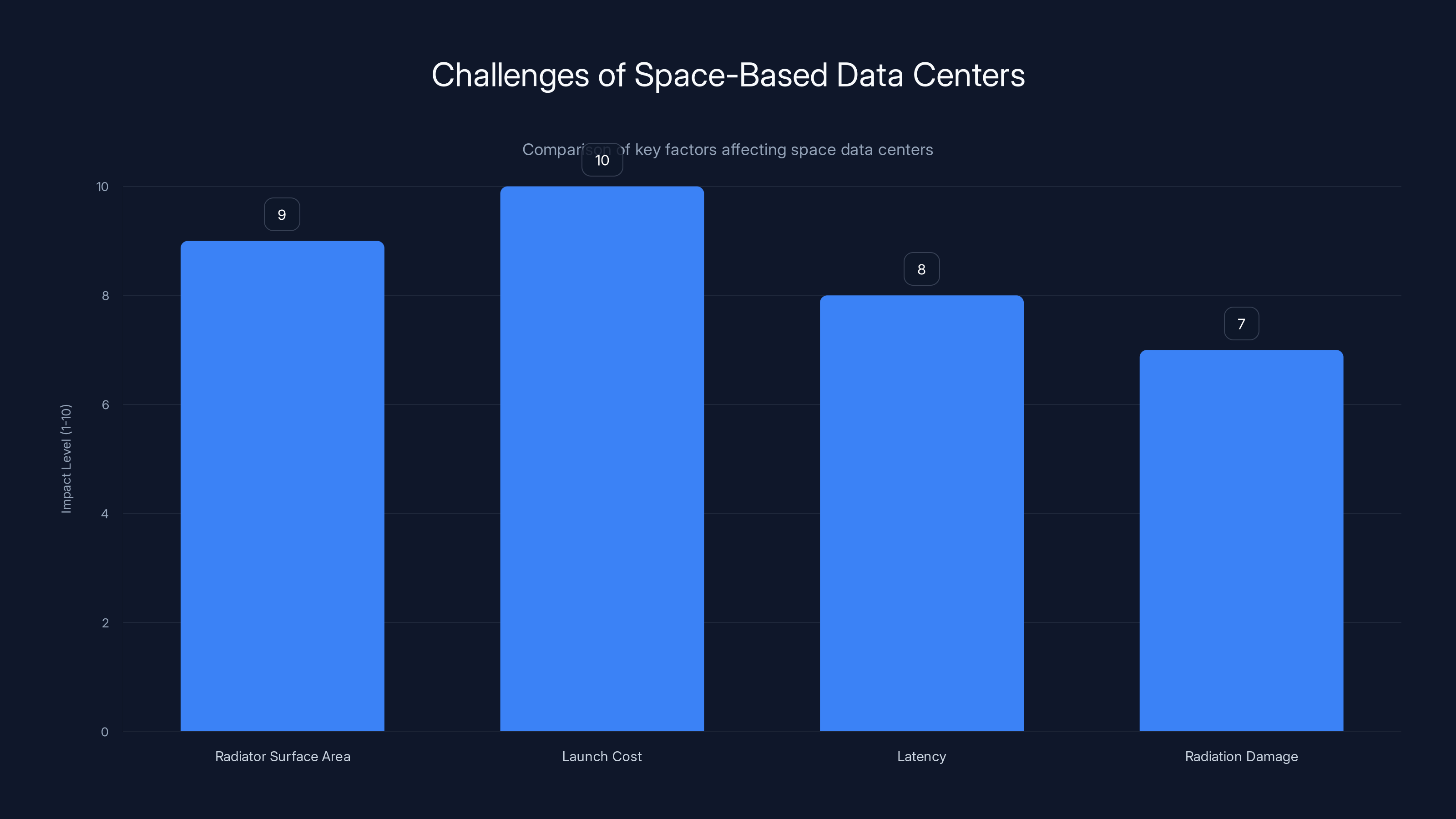

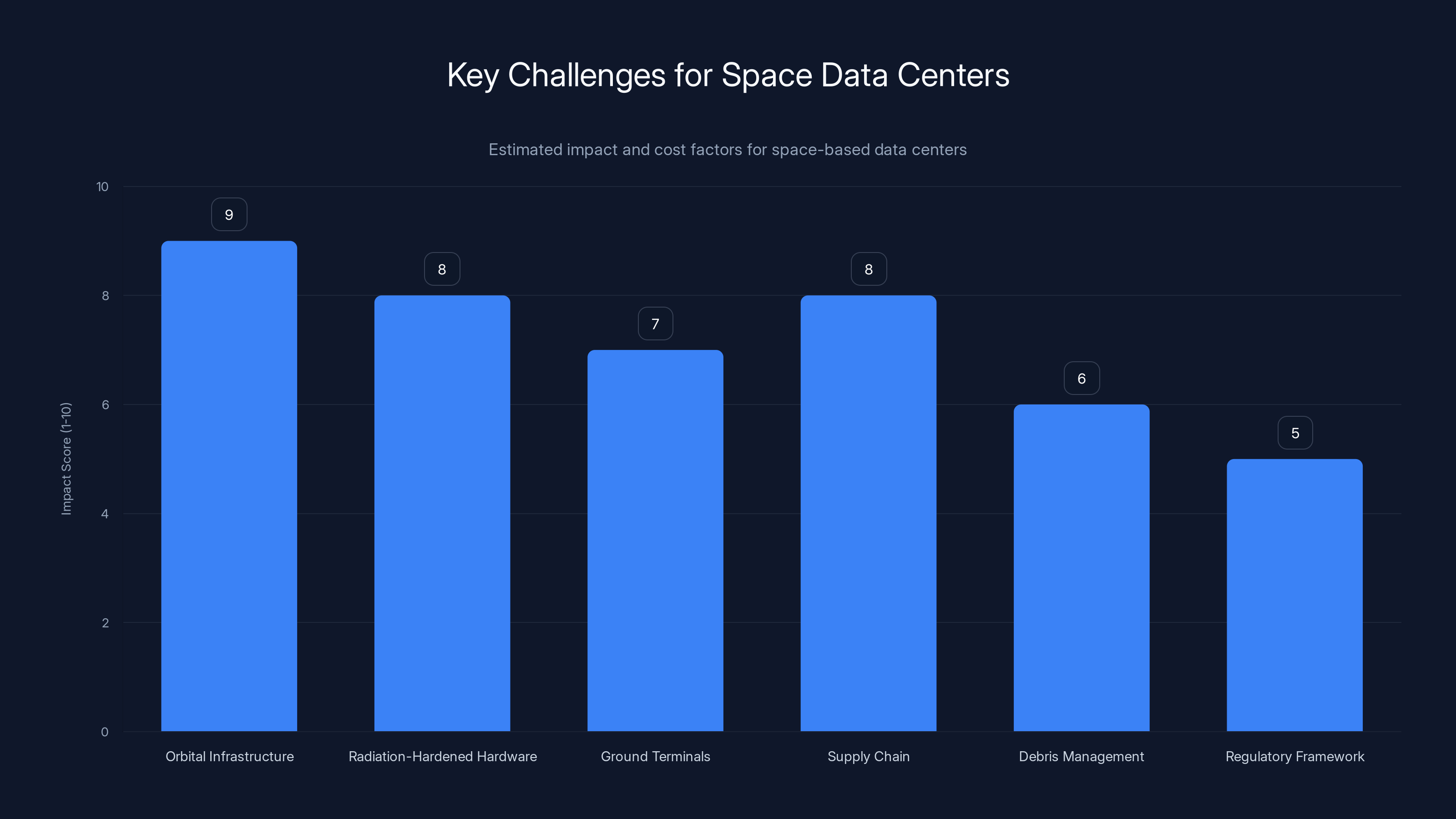

Space data centers face significant challenges: enormous radiator surface requirements, prohibitive launch costs, high latency, and potential radiation damage. Estimated data based on typical space constraints.

The AI Energy Crisis Is Real

Let's start with the actual problem we're trying to solve. This isn't hypothetical.

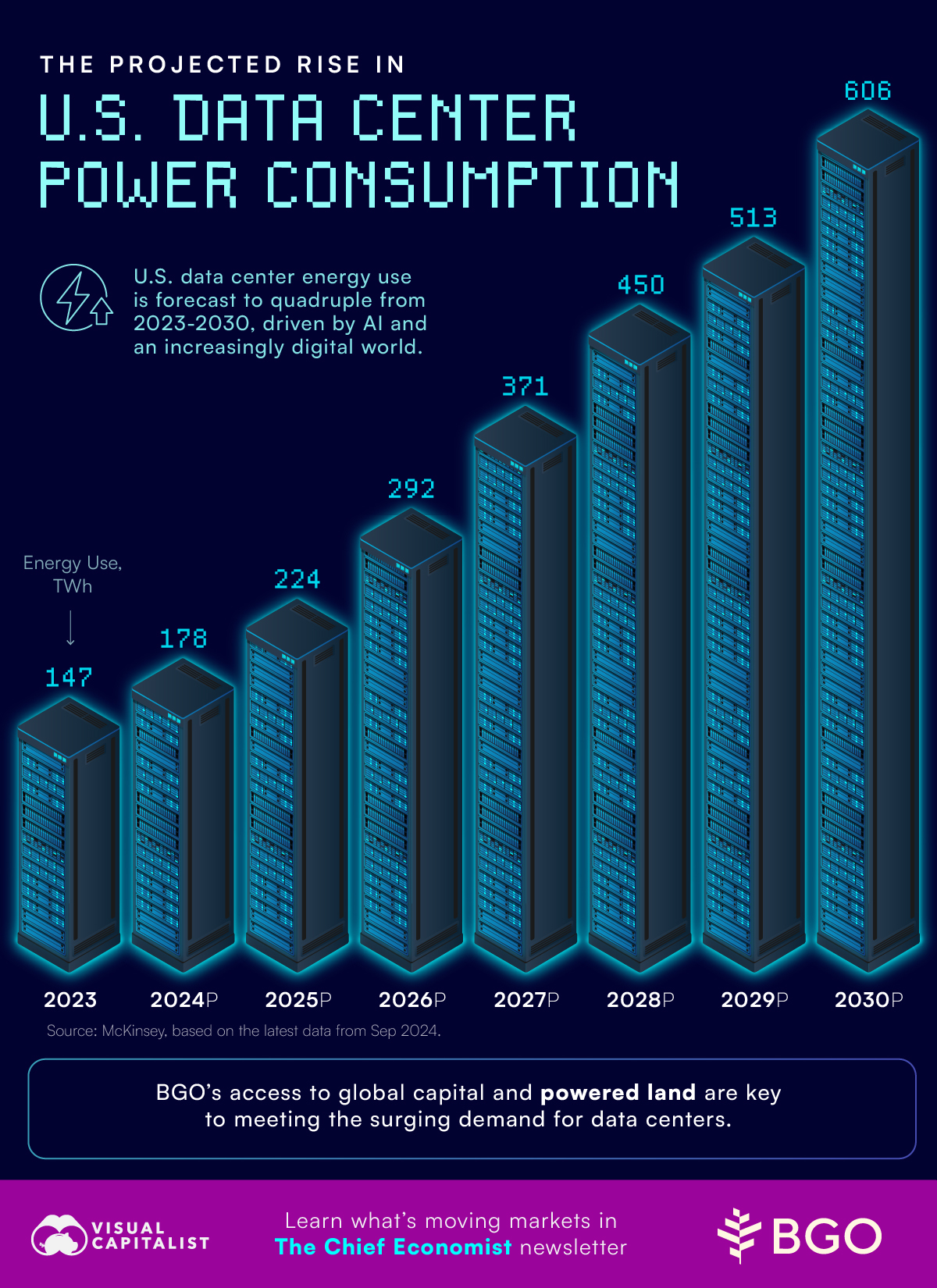

A single training run of a large language model can consume as much electricity as 100 homes use in a year. OpenAI's latest models train on clusters containing tens of thousands of GPUs running in parallel. That's not terawatts of power—not yet—but we're accelerating fast.

Current projections suggest that by 2028, AI servers alone could use as much electricity each year as 22 percent of US households. That's staggering. For context, that's roughly equivalent to powering the entire state of Florida.

The water problem compounds the crisis. Modern high-density chips run hot enough to require direct water cooling instead of air alone. Large facilities consume millions of gallons daily. Intel's new Arizona data center will consume as much water as a city of 200,000 people. Communities are already fighting back. Colorado rejected multiple data center proposals. Iowa is scrutinizing new facilities. And Arizona is facing genuine water scarcity.

So the underlying problem is legitimate. The question becomes: what are the actual solutions, and does space really belong on that list?

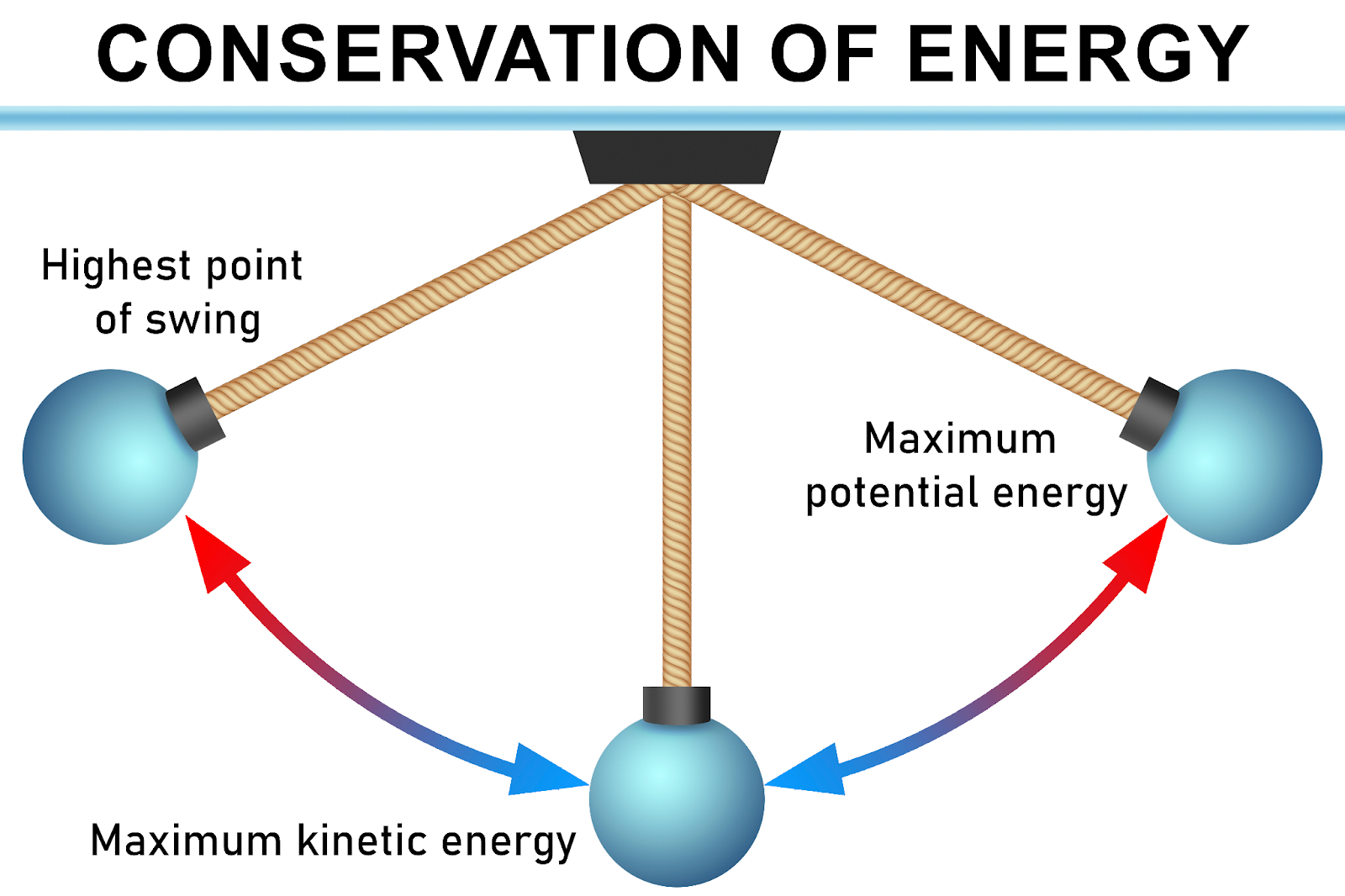

The Conservation of Energy Fundamentals

Here's where we need to get rigorous, because this is where the space data center idea starts to break apart.

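Conservation of energy states that energy cannot be created or destroyed, only transformed. For any system, the power flowing in must equal the power flowing out plus the rate at which the system's stored energy changes:

$$P_{\text{in}} = P_{\text{out}} + \frac{dE}{dt}$$

At thermal equilibrium the stored energy stops changing ($dE/dt = 0$), so every watt that goes in must come out.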

Take a desktop computer drawing 300 watts. All 300 watts of electrical input must exit the system as heat (once thermal equilibrium is reached). Your computer is essentially a 300-watt space heater that also plays video games.

Now scale that to a data center with 50,000 servers, each pulling 500 watts. That's 25 megawatts of total power input, and all 25 megawatts must leave that building as heat. All of it.
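The energy balance reduces to simple arithmetic. A minimal Python sketch, assuming steady state (the stored-energy term dE/dt is zero unless you pass one in); the function name `heat_output_w` is purely illustrative:

```python
def heat_output_w(servers: int, watts_per_server: float,
                  stored_energy_rate_w: float = 0.0) -> float:
    """Heat that must leave the system: P_out = P_in - dE/dt.

    At thermal equilibrium dE/dt = 0, so all electrical input becomes heat.
    """
    power_in_w = servers * watts_per_server
    return power_in_w - stored_energy_rate_w


if __name__ == "__main__":
    print(f"Desktop PC: {heat_output_w(1, 300):.0f} W of heat")
    print(f"Data center: {heat_output_w(50_000, 500) / 1e6:.1f} MW of heat")
```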

On Earth, we have a massive advantage: air. We have fans, cooling towers, and convection. Heat transfer through direct contact (conduction) is incredibly efficient. Immerse your hand in 70°F (21°C) water and you lose heat rapidly because the water molecules are physically touching your skin.

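In space? There's no air. No molecules to move around. Heat can still be conducted from the chips out to the station's hull, but there is nothing outside to carry it away: conduction and convection to the surroundings are impossible.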

Launching a data center to space is currently 37.5 times more expensive than building one on Earth. Even with future reductions, space remains 6.25 times costlier. Estimated data.

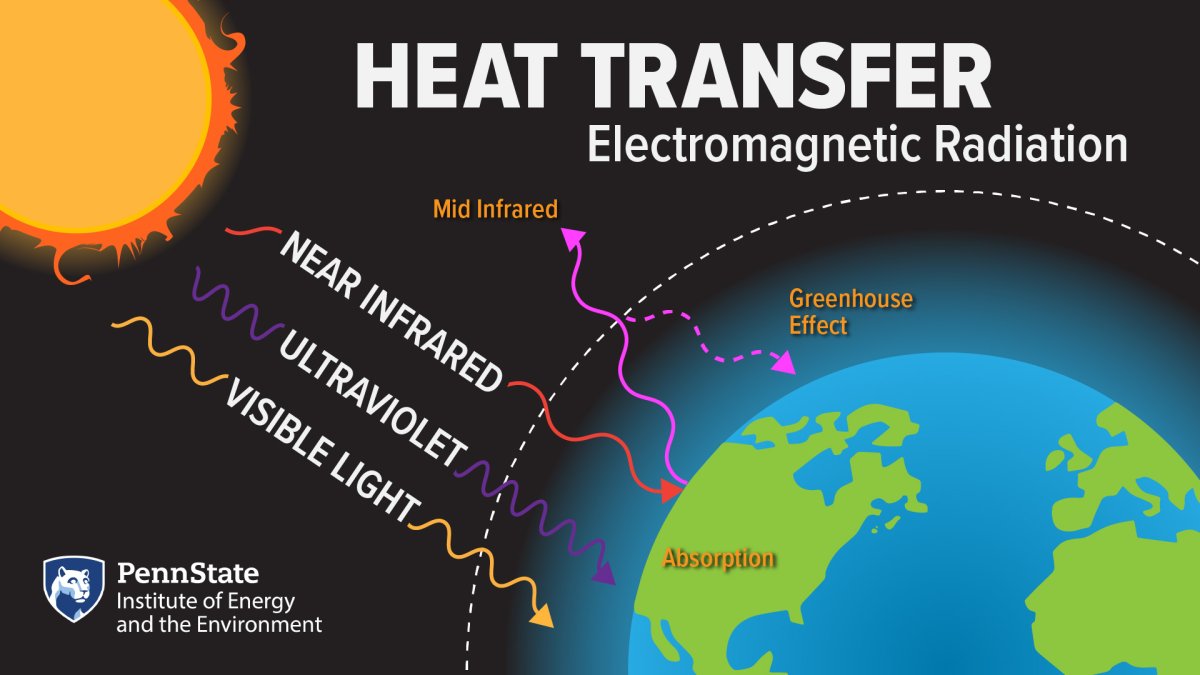

How Heat Actually Escapes in Vacuum

Without convection or conduction, the only way to shed heat in space is through thermal radiation. Objects emit infrared light (among other wavelengths) at a rate that rises steeply with their temperature. This is how an electric oven heats your pizza without touching it.

The rate of thermal radiation follows the Stefan-Boltzmann Law:

$$P = \varepsilon \sigma A T^4$$

Where:

- P = radiated power (watts)

- ε = emissivity (how good the object is at radiating; 0 to 1)

- σ = Stefan-Boltzmann constant (5.67 × 10⁻⁸ W/m²K⁴)

- A = surface area (square meters)

- T = temperature in Kelvin

Notice that temperature is raised to the fourth power. This is critical. Hotter objects radiate far more power than cooler ones: doubling the absolute temperature multiplies the radiated power by 16.

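Let's do actual math. Suppose your gaming PC in space runs at 200°F (366 Kelvin). It's a cube-shaped box with 1 square meter of surface area. Assuming it's a perfect radiator (ε = 1), the radiated power would be:

$$P = (1)\,(5.67 \times 10^{-8}\ \text{W/m}^2\text{K}^4)\,(1\ \text{m}^2)\,(366\ \text{K})^4 \approx 1{,}017\ \text{W} \approx 1{,}000\ \text{W}$$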

Your PC draws 300 watts but radiates about 1,000 watts at that temperature, so it cools down until its emission falls to match the 300-watt load. This actually works for small systems.
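The worked number above can be checked in a few lines. This sketch implements P = εσAT⁴ for emission only and assumes the box absorbs no sunlight or Earth infrared, which a real spacecraft would. The helper `equilibrium_temp_k` (a hypothetical name) inverts the law to find the temperature at which emission balances a given load:

```python
SIGMA = 5.67e-8  # Stefan-Boltzmann constant, W/(m^2 K^4)


def radiated_power_w(emissivity: float, area_m2: float, temp_k: float) -> float:
    """P = epsilon * sigma * A * T^4 (emission only; absorbed light ignored)."""
    return emissivity * SIGMA * area_m2 * temp_k ** 4


def equilibrium_temp_k(power_w: float, emissivity: float, area_m2: float) -> float:
    """Temperature at which radiated power exactly equals the heat load."""
    return (power_w / (emissivity * SIGMA * area_m2)) ** 0.25


if __name__ == "__main__":
    p = radiated_power_w(1.0, 1.0, 366.0)
    print(f"Radiated at 366 K: {p:.0f} W")
    t = equilibrium_temp_k(300.0, 1.0, 1.0)
    print(f"A 300 W load settles near {t:.0f} K")
```

Under those idealized assumptions the 300-watt box keeps cooling until it settles near 270 K, which is why the small case works.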

Now scale up. You want to run AI workloads. That requires roughly 8 times the computing power, so 8 times the volume. A cube with edges twice as long has 8 times the volume (2^3) but only 4 times the surface area (edges are 2x, so area scales with length squared).

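With 8 times the power input (2,400 watts) but only 4 times the radiating surface area, each square meter now has to shed twice as much heat. This particular box still copes (4 square meters at 366 K radiate roughly 4,000 watts), but the margin is shrinking: surface area scales as length squared while power demand scales with volume (length cubed), so every further doubling of size doubles the heat each square meter must shed. This is a fundamental geometric problem.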
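To see where the geometry bites, this sketch scales the same cube while holding its surface at 366 K with ε = 1 (both carried over from the PC example as assumptions). Heat load grows with the cube of the edge multiplier and radiating capacity with its square, so the crossover sits where the two are equal:

```python
SIGMA = 5.67e-8  # Stefan-Boltzmann constant, W/(m^2 K^4)


def cube_heat_balance(scale: float, base_power_w: float = 300.0,
                      base_area_m2: float = 1.0, temp_k: float = 366.0,
                      emissivity: float = 1.0) -> tuple:
    """Return (heat load, radiating capacity) in watts for a cube whose edges
    are `scale` times the baseline. Load grows with volume (scale**3);
    radiating area grows with surface (scale**2)."""
    load_w = base_power_w * scale ** 3
    capacity_w = emissivity * SIGMA * base_area_m2 * scale ** 2 * temp_k ** 4
    return load_w, capacity_w


def crossover_scale(base_power_w: float = 300.0, base_area_m2: float = 1.0,
                    temp_k: float = 366.0, emissivity: float = 1.0) -> float:
    """Edge multiplier k where k^3 * P0 equals k^2 * C0, i.e. k = C0 / P0."""
    capacity_per_k2 = emissivity * SIGMA * base_area_m2 * temp_k ** 4
    return capacity_per_k2 / base_power_w


if __name__ == "__main__":
    for k in (1, 2, 3, 4):
        load, cap = cube_heat_balance(k)
        status = "OK" if cap >= load else "overheats"
        print(f"edge x{k}: load {load:,.0f} W, can radiate {cap:,.0f} W -> {status}")
    print(f"Crossover at about x{crossover_scale():.1f} edge length")
```

With these inputs the crossover is an edge about 3.4 times the original; past that, the surface would have to run hotter than 366 K to keep up.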

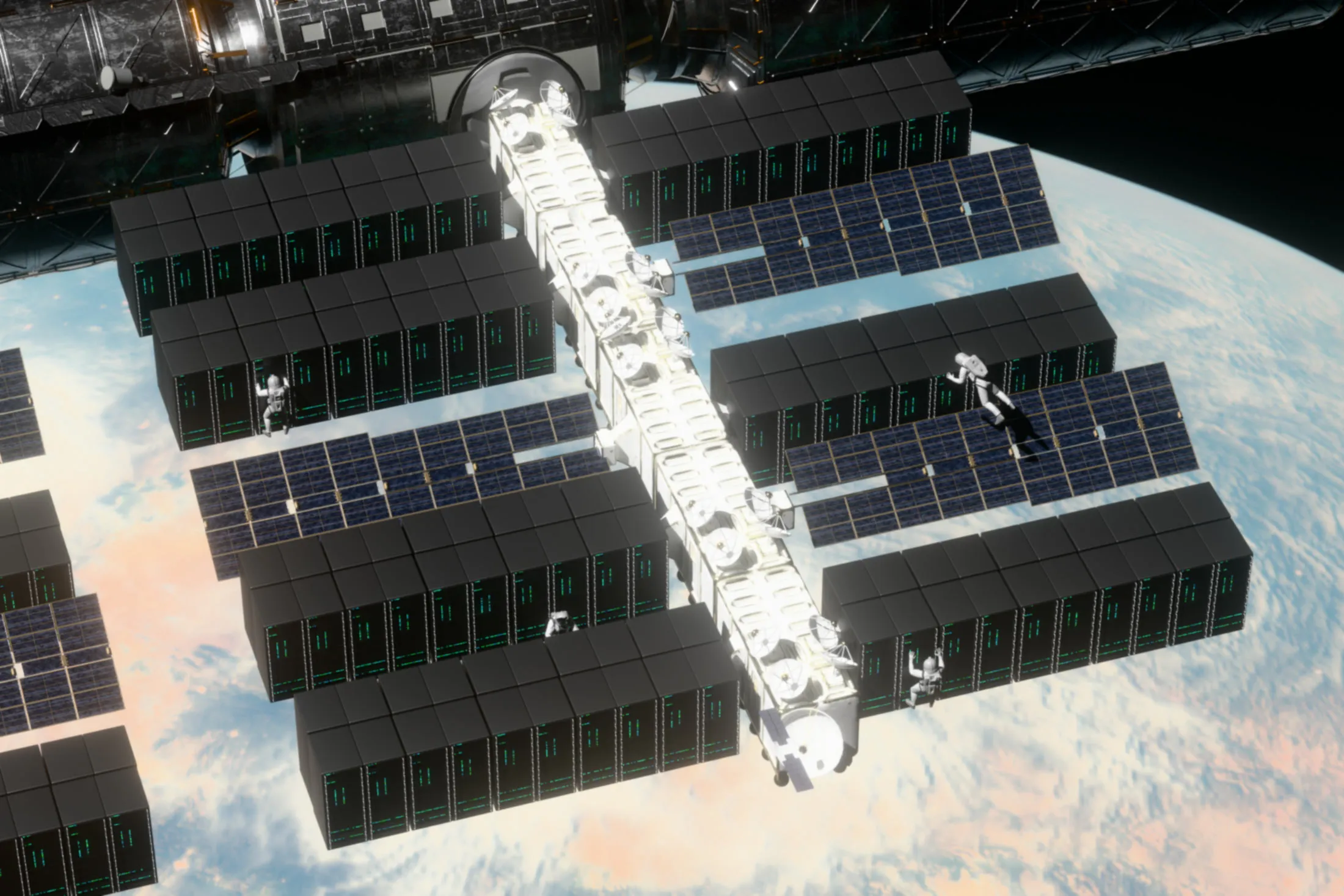

For a data center with 50,000 servers needing 25 megawatts, the radiator panels required would be enormous. We're talking tens of thousands of square meters of radiator surface. On an orbital platform, that becomes a structural engineering nightmare.

The Scaling Problem That Kills the Whole Idea

This is where the space data center concept falls apart completely.

Using radiation alone in vacuum, an ideal radiator (ε = 1) at 300 to 350 K sheds roughly 460 to 850 watts per square meter, which works out to about 1,200 to 2,200 square meters of radiator surface per megawatt, before counting any sunlight the panel absorbs. Cooler radiators, which are easier on the chips, need even more area.

For a 25-megawatt orbital data center, you'd need roughly 30,000 to 55,000 square meters of radiator panels. To put that in perspective, that's about 5 to 10 American football fields. And this isn't a solid surface: it's panels that must be deployed, maintained, and pointed correctly.

Worse, these calculations assume perfect conditions. Real radiators have emissivity less than 1. Real computers run hotter than optimal. Real systems need redundancy. The actual numbers are significantly worse.
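Radiator sizing follows directly from the Stefan-Boltzmann law. A minimal sketch, assuming a flat panel radiating from one side into deep space; `absorbed_w_per_m2` is a hypothetical knob for sunlight or Earth infrared landing on the panel, left at zero here:

```python
SIGMA = 5.67e-8  # Stefan-Boltzmann constant, W/(m^2 K^4)


def radiator_area_m2(heat_w: float, temp_k: float, emissivity: float = 1.0,
                     sides: int = 1, absorbed_w_per_m2: float = 0.0) -> float:
    """Panel area needed to reject `heat_w` by radiation alone.

    Net flux per m^2 of panel = sides * emissivity * sigma * T^4 - absorbed load.
    """
    net_flux = sides * emissivity * SIGMA * temp_k ** 4 - absorbed_w_per_m2
    if net_flux <= 0:
        raise ValueError("panel absorbs at least as much as it emits")
    return heat_w / net_flux


if __name__ == "__main__":
    heat = 25e6  # 25 MW data center
    for temp in (300.0, 350.0):
        for eps in (1.0, 0.9):
            area = radiator_area_m2(heat, temp, eps)
            print(f"T={temp:.0f} K, eps={eps}: {area:,.0f} m^2 ({area / 1e6:.3f} km^2)")
```

For 25 MW this gives roughly 29,000 m² at 350 K and 54,000 m² at 300 K with ε = 1, and about 11% more at ε = 0.9. Radiating from both faces roughly halves the area; any absorbed sunlight pushes it back up.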

Let's compare to Earth solutions. A water-cooled facility on Earth can reject heat at roughly 1 to 2 megawatts per 100 square meters of cooling tower surface area. That's more than an order of magnitude better, because evaporation and convection carry heat away far faster than radiation can.

The fundamental physics creates an insurmountable problem: space cooling only works for small systems. For the massive data centers AI requires, you'd paradoxically need MORE heat rejection infrastructure in space than on Earth.

Solar Power in Space Isn't the Game Changer You Think

Proponents argue for space data centers because of constant solar power. Earth data centers need batteries, solar panels at limited angles, or grid power at night. Space gets 24/7 sunlight.

But here's the catch: solar panels in space aren't dramatically more efficient than on Earth. Modern commercial panels achieve 15-22% efficiency both places. The real gain comes from eliminating nighttime, weather, and atmospheric losses.

A 25-megawatt data center needs roughly 150,000 to 200,000 square meters (0.15 to 0.2 square kilometers) of solar panels in space, accounting for panel efficiency, the solar constant of 1,361 W/m², and system losses. That comes on top of the radiator area: you're still building a massive orbital structure.
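The solar array estimate is the load divided by the power each square meter delivers. A sketch assuming 15% cells, the 1,361 W/m² solar constant, and a lumped system efficiency of 60 to 80% (an assumed range, not a measured one), with no allowance for eclipse periods or battery charging:

```python
SOLAR_CONSTANT_W_M2 = 1361.0  # sunlight intensity above the atmosphere


def solar_array_area_m2(load_w: float, cell_efficiency: float,
                        system_efficiency: float = 0.8,
                        irradiance_w_m2: float = SOLAR_CONSTANT_W_M2) -> float:
    """Sun-facing array area needed to supply `load_w` continuously.

    `system_efficiency` lumps wiring, power conversion and pointing losses
    into one assumed factor. Eclipses and batteries are ignored.
    """
    return load_w / (irradiance_w_m2 * cell_efficiency * system_efficiency)


if __name__ == "__main__":
    for sys_eff in (0.8, 0.6):
        area = solar_array_area_m2(25e6, 0.15, sys_eff)
        print(f"system efficiency {sys_eff:.0%}: {area:,.0f} m^2 ({area / 1e6:.2f} km^2)")
```

That lands at roughly 153,000 to 204,000 m².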

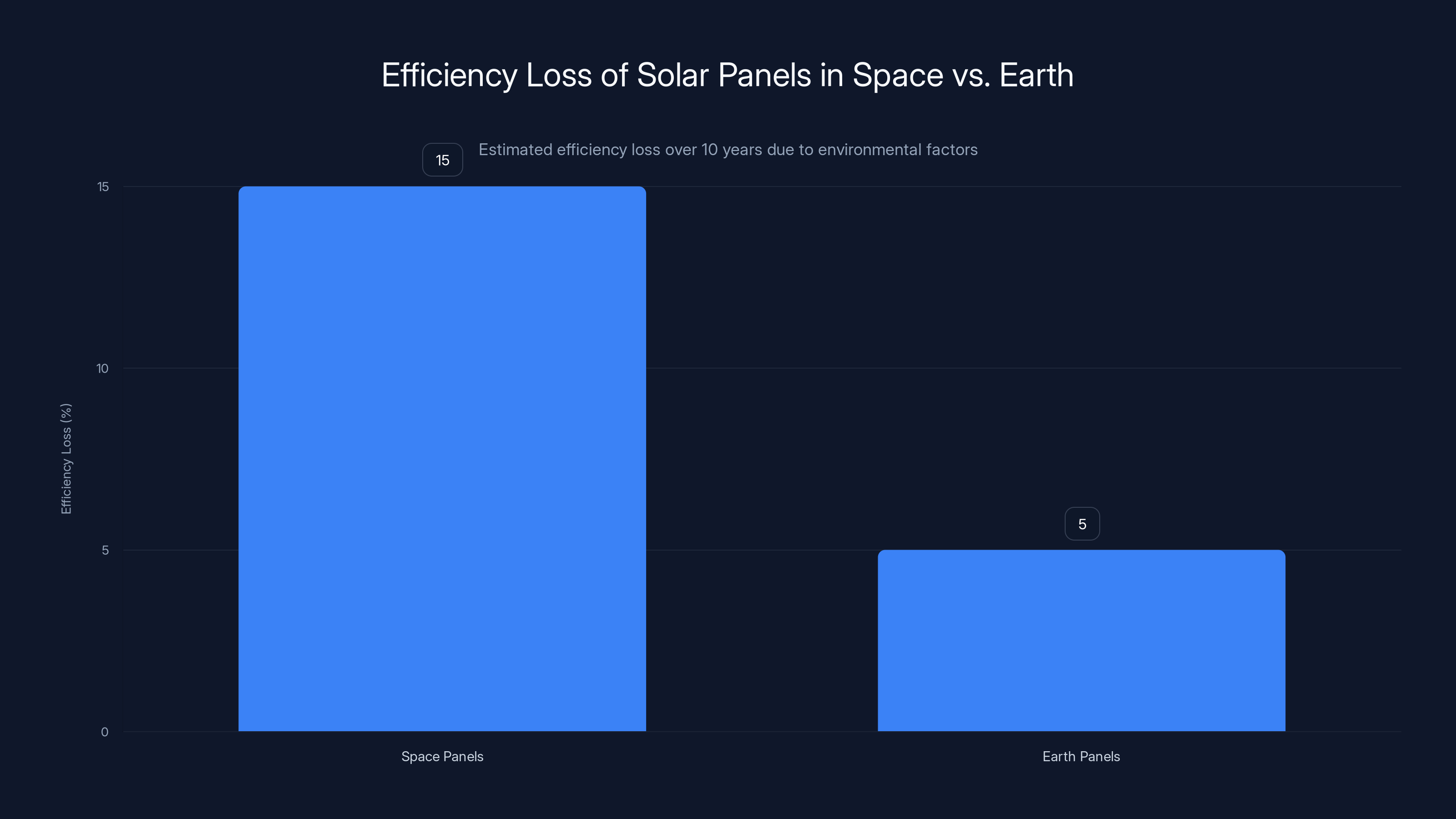

Solar panels in space also degrade. Cosmic radiation, micrometeorite impacts, and thermal cycling all reduce efficiency. A panel in orbit loses roughly 1-2% of its output per year. After 10 years, you've lost 10-20% of your power output.
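Whether a 1-2% annual loss is applied linearly or compounded makes little difference over a decade. A sketch assuming the loss compounds on each year's remaining output:

```python
def remaining_output(annual_loss: float, years: int) -> float:
    """Fraction of initial output left after `years` of compounding loss."""
    return (1.0 - annual_loss) ** years


if __name__ == "__main__":
    for rate in (0.01, 0.02):
        left = remaining_output(rate, 10)
        print(f"{rate:.0%}/yr for 10 yr: {1 - left:.1%} lost")
```

Compounding gives about 9.6% lost at 1% per year and about 18.3% at 2% per year, consistent with the 10-20% range.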

On Earth, you replace solar panels every 25-30 years and pay no launch costs. In space, replacement becomes an engineering expedition. The logistics become prohibitive.

The most significant challenges for space data centers include massive orbital infrastructure and radiation-hardened hardware, each with high impact scores. Estimated data based on described requirements.

Launch Economics Are Utterly Brutal

Even if we solved every physics problem (we can't), the economics make space data centers laughable.

Launching cargo to low Earth orbit currently costs

A single server weighs roughly 20-30 kg and costs

A data center with 50,000 servers would weigh 1-1.5 million kilograms. At Space X's theoretical

For comparison, a ground-based data center costs roughly

Space just can't compete economically. Even if Space X achieves

Then consider replacement cycles. Servers typically last 4-5 years. Your entire orbital fleet becomes obsolete. You need to launch replacements. The economic model collapses immediately.
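The mass figures behind the launch discussion follow from the per-server weights. This sketch counts servers only (no racks, power systems, radiators, or structure) and assumes the fleet is refreshed evenly over its service life; multiply either number by a per-kilogram launch price to get a cost:

```python
def fleet_mass_kg(servers: int, kg_per_server: float) -> float:
    """Launch mass of the servers alone."""
    return servers * kg_per_server


def annual_relaunch_mass_kg(total_mass_kg: float, lifetime_years: float) -> float:
    """Mass launched each year just to replace worn-out servers, assuming an
    even refresh over the service life."""
    return total_mass_kg / lifetime_years


if __name__ == "__main__":
    for kg, life in ((20, 5), (30, 4)):
        mass = fleet_mass_kg(50_000, kg)
        yearly = annual_relaunch_mass_kg(mass, life)
        print(f"{kg} kg/server, {life}-yr life: fleet {mass:,.0f} kg, "
              f"relaunch {yearly:,.0f} kg/yr")
```

At 20 kg and a 5-year life that is 1,000,000 kg up front and 200,000 kg a year afterward; at 30 kg and 4 years, 1,500,000 kg and 375,000 kg a year.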

Radiation Damage: The Silent Hardware Killer

Here's something space advocates rarely mention: cosmic radiation.

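Earth's atmosphere and magnetic field together shield the surface from solar wind and cosmic rays. In low Earth orbit, you're above the atmosphere and only partly protected by the magnetic field, especially over the poles and through the South Atlantic Anomaly. High-energy particles bombard everything constantly.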

These particles cause bit flips: random errors where a 1 becomes a 0 (or a 0 becomes a 1) in computer memory. In isolated cases, that's tolerable. In massive data centers running millions of calculations simultaneously, bit flips become reliability nightmares.

Modern server hardware experiences roughly 1 bit flip per 256 GB of RAM per day on Earth. In space, you're looking at 100-1,000 times higher rates depending on solar activity and orbital altitude.

Handling that requires:

- Massive error-correction overhead (slowing computation)

- Extensive redundancy (tripling hardware costs)

- Continuous monitoring and replacement (operational nightmare)
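The bit-flip rates above scale linearly with installed memory. This sketch assumes a hypothetical 512 GB of RAM per server and uses the baseline of 1 flip per 256 GB per day and the 100-1,000x space multiplier from the text. It also shows the bitwise 2-of-3 vote behind triple modular redundancy, one common form of the redundancy listed above:

```python
def daily_bit_flips(servers: int, ram_gb_per_server: float,
                    flips_per_256gb_day: float = 1.0,
                    space_multiplier: float = 1.0) -> float:
    """Expected bit flips per day across a fleet, linear in total RAM."""
    total_gb = servers * ram_gb_per_server
    return total_gb / 256.0 * flips_per_256gb_day * space_multiplier


def majority_vote(a: int, b: int, c: int) -> int:
    """Bitwise 2-of-3 vote used in triple modular redundancy: each output
    bit takes the value held by at least two of the three copies."""
    return (a & b) | (a & c) | (b & c)


if __name__ == "__main__":
    ram_gb = 512  # hypothetical RAM per server
    for mult in (1, 100, 1000):
        flips = daily_bit_flips(50_000, ram_gb, space_multiplier=mult)
        print(f"x{mult}: about {flips:,.0f} flips/day")

    word = 0b1011_0010
    corrupted = word ^ 0b0000_1000  # one copy takes a single-bit hit
    print("vote recovers original:", majority_vote(word, corrupted, word) == word)
```

With those assumptions the 50,000-server fleet sees about 100,000 flips a day on the ground and 10 to 100 million a day in orbit, and every protected word costs three copies plus the voting logic.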

Network equipment faces similar degradation. Transistors in CPUs are particularly vulnerable. Over 3-5 years, you're looking at 20-30% degradation in semiconductor performance just from radiation damage.

Earth-based data centers sit beneath the atmosphere's shielding and largely avoid this problem.

Network Latency: Speed of Light Becomes a Bottleneck

Data centers need to connect to users and other systems. Communication happens at the speed of light: 300,000 kilometers per second.

Low Earth orbit is roughly 400 kilometers altitude. Light takes about 2.6 milliseconds to travel from orbit to Earth and back. That's the theoretical minimum latency—the physics-imposed speed of light delay.

Earth-based data center latency is typically 1-10 milliseconds. Adding 2.6ms to every transaction is non-trivial. For high-frequency trading, multiplayer gaming, video conferencing, and real-time AI inference, this becomes a genuine problem.

You could argue for processing in orbit and sending results down, but then you're back to the original problem: you've offloaded computation that can't talk efficiently to Earth. You'd need to place AI models in orbit, training data in orbit, inference in orbit. That's not solving the energy problem—that's multiplying it.

Geostationary orbit (where satellites stay fixed above one point on the equator) sits 35,786 kilometers up. Light takes roughly 240 milliseconds to go up and back down (about 120 milliseconds each way). That's unacceptable for interactive applications.
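The latency floor is just distance divided by the speed of light. A sketch assuming the satellite is directly overhead (a slant path is longer) and ignoring processing, queuing, and routing delays:

```python
C_KM_S = 299_792.458  # speed of light in vacuum, km/s


def up_and_down_ms(altitude_km: float) -> float:
    """Minimum time for a signal to travel from the ground to a satellite
    directly overhead and back down, in milliseconds."""
    return 2 * altitude_km / C_KM_S * 1000


if __name__ == "__main__":
    for name, alt in (("LEO", 400.0), ("GEO", 35_786.0)):
        print(f"{name} ({alt:,.0f} km): {up_and_down_ms(alt):.1f} ms")
```

This gives about 2.7 ms for a 400 km orbit and about 239 ms for geostationary altitude.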

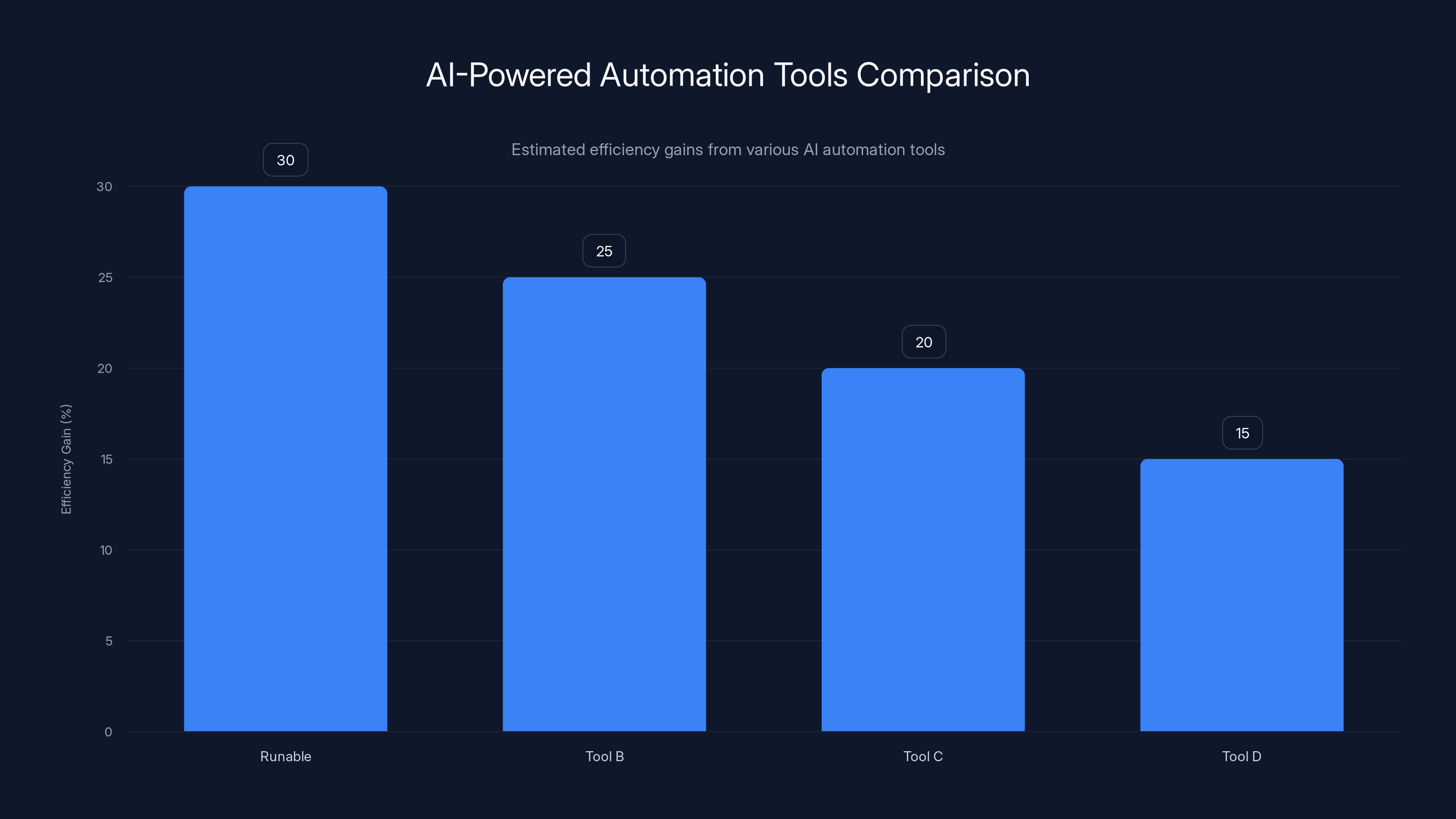

Runable offers the highest estimated efficiency gain of 30% among AI automation tools, enhancing productivity by automating tasks. Estimated data.

The Water Problem Space Doesn't Solve

Proponents claim space solves the water problem. No atmosphere means no evaporative cooling, so no water needed.

True, but misleading.

First, space cooling doesn't actually work for large systems, so the water savings are hypothetical. Second, humans need water. Life support systems in orbital facilities need water. Growing food in space needs water. Even if your servers don't, your crew does.

Moreover, the real water crisis is localized. Arizona doesn't care about cooling a facility in space—they care about keeping Lake Mead filled. Space data centers don't help Arizona, Iowa, or Colorado.

The solution to water scarcity is better Earth-based solutions: closed-loop water recycling, wetland reclamation, location diversification away from arid regions, and efficiency improvements.

Israel recycles 90% of wastewater. Singapore treats and recycles essentially all water. These are proven, scalable approaches that work on Earth.

Real Solutions: What Actually Works

This is the part where we stop critiquing space and focus on what genuinely solves the problem.

Immersion Cooling: Submerge entire server racks in non-conductive liquid (mineral oil, special coolants). This enables heat transfer 100-1,000 times more efficient than air cooling. Companies like Liquid Cool Solutions and Asetek are commercializing this. It cuts cooling energy by 40-60%.

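Data Center Efficiency: Many facilities waste enormous amounts of energy. Power Usage Effectiveness (PUE) is total facility energy divided by IT energy, so a PUE of 1.5 means the facility spends an extra 50% on top of its computing load on cooling and other overhead, about a third of its total energy. Leading facilities achieve PUE of 1.1-1.2 through better design, sensors, and management, which cuts total energy use by roughly 20-27% for the same computing work.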

Geographic Diversification: Build data centers in cooler climates (Iceland, Northern Europe, Canada) where ambient temperature is naturally low. This reduces cooling energy by 30-40%.

Renewable Integration: Pair data centers with solar and wind farms. Use battery storage or hydro for baseline power. Google, Meta, and Microsoft are already doing this at scale.

Water Recycling: Closed-loop systems with wastewater treatment can reduce consumption by 80%+. Cooling towers can recirculate water instead of evaporating it.

Demand Reduction: Optimize AI models. Smaller, more efficient models require far less compute. Distillation and quantization can reduce inference power by 50-90%.

Each of these is proven, scalable, and economically viable on Earth.
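PUE arithmetic is easy to get backwards, so here is a minimal sketch using the standard definition (total facility energy divided by IT energy). The function names are illustrative only:

```python
def overhead_share(pue: float) -> float:
    """Fraction of total facility energy that is NOT IT load.

    PUE = total / IT, so IT share = 1 / PUE and overhead = 1 - 1 / PUE.
    """
    return 1.0 - 1.0 / pue


def total_energy_savings(pue_before: float, pue_after: float) -> float:
    """Fractional cut in total facility energy for the same IT load."""
    return 1.0 - pue_after / pue_before


if __name__ == "__main__":
    print(f"PUE 1.5 overhead: {overhead_share(1.5):.0%} of total energy")
    for target in (1.2, 1.1):
        saved = total_energy_savings(1.5, target)
        print(f"1.5 -> {target}: {saved:.0%} less total energy")
```

A PUE of 1.5 leaves a third of total energy as overhead, and moving to 1.2 or 1.1 cuts total energy by about 20% or 27% for the same IT load.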

What Would Actually Be Required for Space Data Centers

Let's be intellectually honest: could we make space data centers work if money were unlimited?

Yes. But the requirements are absurd.

You'd need:

- Massive orbital infrastructure: Station modules to house and cool servers, power generation, radiator arrays, and redundant life support for human maintenance crews.

- Radiation-hardened hardware: Custom processors with massive error-correction overhead, reducing computational efficiency by 20-30% immediately.

- Ground terminals: High-speed laser communication links (optical ground stations) distributed globally to minimize latency. These are expensive, complex, weather-dependent infrastructure.

- Supply chain: Regular resupply missions to replace degraded components. Every 4-5 years, massive replacement operations.

- Debris management: Space is increasingly crowded with defunct satellites and collision debris. You'd need active debris avoidance and maneuvering capability.

- Regulatory framework: Space law is still developing. Operating a commercial data center in orbit would face unknown legal and technical requirements.

The total cost? Easily

We could do it. It would be magnificent engineering. It would also be the worst possible use of $500 billion for solving climate and energy problems.

Solar panels in space lose 15% efficiency over 10 years due to cosmic radiation and other factors, compared to only 5% on Earth. Estimated data.

The Real Issue: Peak Oil Replaced by Peak Compute

This is the uncomfortable truth nobody wants to hear.

AI companies have created demand that exceeds sustainable power generation growth. The solution isn't technological escape—launching the problem to space. The solution is managing demand.

That means:

- Smaller AI models

- More efficient architectures

- Paying actual environmental costs

- Stopping trivial applications (AI pet name suggestions don't need a data center)

- Accepting slower innovation in areas where speed doesn't matter

Tech culture loves moving fast and breaking things. But sometimes the thing being broken is our water supply and power grid.

The honest conversation isn't "can we put data centers in space?" It's "why do we think space solves problems our actual lifestyle choices create?"

Space is incredible for applications where you really need it: communications satellites, scientific observation, national security. Building data centers there to avoid solving Earth-based problems is intellectual surrender.

Why Space Data Centers Persist as an Idea

If the physics is this clear, why does the concept keep resurfacing?

Partly because it's genuinely creative thinking. Partly because it reflects real desperation about Earth-based solutions. Partly because venture capitalists love "moonshot" thinking.

But mostly because it avoids hard decisions.

Managing energy consumption means saying no to some applications. Managing water consumption means locating facilities away from deserts. Managing costs means accepting lower profit margins. None of these are exciting innovation stories.

"We're building data centers in space" sounds better than "we're going to run smaller AI models and charge more money for them."

But one is merely physically possible. The other is economically possible.

Alternative Orbital Applications That Actually Make Sense

To be fair, there are orbital applications that legitimately benefit from space:

Scientific Computing: Processing satellite imagery, radio telescope data, or climate modeling where computational requirements are moderate and location flexibility is high.

Manufacturing: Some materials (semiconductors, crystals, pharmaceuticals) can be manufactured better in microgravity. Processing the resulting data on-site makes sense.

Earth Observation: Storing and processing data from environmental monitoring satellites locally reduces transmission bandwidth.

These are real use cases where orbital processing provides genuine advantage. They're also vastly smaller in scale than general-purpose AI data centers.

They're excellent applications for 1-2% of our computing infrastructure. They're catastrophic ideas for 98% of it.

The Bottom Line: Physics Wins

Can we move AI data centers to space?

Physically: Yes, with enormous infrastructure.

Practically: No. You'd spend more energy cooling them than you save from continuous solar power.

Economically: Absolutely not. The launch costs alone destroy the financial model.

Climatologically: It solves nothing. You're just making the same carbon footprint problem someone else's problem.

The actual solutions are boring, terrestrial, and available today:

- Immersion cooling

- Renewable energy integration

- Water recycling

- Efficiency improvements

- Demand management

None of these require leaving the planet. All of them require hard work and honest conversations about what AI actually needs to do.

Space is vast and beautiful and full of possibilities. Building data centers there isn't one of them.

FAQ

Why can't we use air cooling in space like we do on Earth?

Space is a vacuum with essentially no air molecules. Air cooling relies on convection, where moving air transfers heat away from hot components. Without air, convection is impossible. The only way to shed heat to the surroundings in space is radiation, which is orders of magnitude slower than conduction or convection. That's why space-based systems would require enormous radiator panels rather than simple fans.

How much radiator surface area would a space data center actually need?

Based on the Stefan-Boltzmann law and typical computer operating temperatures, a 25-megawatt data center would require roughly 30,000 to 55,000 square meters of radiator surface even with ideal radiators, about the area of 5 to 10 American football fields. The geometric scaling problem (surface area grows as length squared, but volume/power grows as length cubed) means larger systems become progressively harder to cool through radiation alone.

Is the cost of launching equipment to space actually prohibitive?

Yes. Current launch costs are

What about the latency problem—wouldn't that make space data centers slow?

Light takes 2.6 milliseconds just to travel from low Earth orbit to ground and back. This is the theoretical minimum, imposed by physics. For latency-sensitive applications (real-time AI, gaming, trading), this adds significant delay compared to Earth data centers running at 1-10 milliseconds. Geostationary orbit (where satellites stay fixed above one location) adds roughly 240 milliseconds for the trip up and back (about 120 milliseconds each way), making it unsuitable for interactive work.

How would radiation damage affect servers in orbit?

Cosmic rays and solar wind particles cause bit flips in computer memory at rates 100-1,000 times higher than on Earth's surface. This requires extensive error correction and redundancy, which increases hardware costs and reduces computational efficiency. After 3-5 years, semiconductor performance degrades 20-30% from radiation damage, meaning entire server fleets would need replacement far more frequently than on Earth.

What's a realistic solution to AI data center energy and water problems?

Proven solutions available today include immersion cooling (40-60% efficiency improvement), geographic diversification to cooler climates, renewable energy integration, closed-loop water recycling systems, and data center efficiency optimization. Advanced approaches like direct-to-chip cooling and waste heat recovery can reduce energy consumption by 30-50%. These are economically viable, scalable today, and don't require leaving the planet.

Could we build just small data centers in space for specific applications?

For very specific, high-value applications like processing satellite data or pharmaceutical manufacturing in microgravity, orbital computing could make sense. These would represent perhaps 1-2% of global computing infrastructure and have legitimate reasons for space-based processing. However, they wouldn't address the core AI energy crisis, which requires massive, Earth-based general-purpose compute centers.

What about solar power being better in space since it's always sunny?

While space-based solar panels do operate 24/7 without weather interference, this advantage is smaller than it sounds. Modern commercial solar panels achieve similar efficiency on Earth and in space. The real gains come from eliminating nighttime and weather, but in-space panels also degrade from cosmic radiation at 1-2% per year. You'd still need roughly 150,000-200,000 square meters (0.15-0.2 square kilometers) of solar panels for a large data center, creating massive infrastructure requirements.

What AI-Powered Automation Tools Exist Today

While we're waiting for space data centers to become feasible (they won't), organizations can leverage AI tools to optimize their actual, Earth-based operations.

Platforms like Runable enable teams to automate documentation, create data-driven reports, generate presentations automatically, and optimize workflows without building massive new infrastructure. For organizations drowning in manual processes, AI automation tools starting at $9/month can recover substantial efficiency gains.

Use Case: Automatically generate quarterly reports from raw data without manual compilation and formatting.

For teams managing massive data or infrastructure, efficiency improvements at home (on Earth) remain vastly more cost-effective than exotic orbital solutions. The best time to optimize operations was years ago. The second-best time is today.

Key Takeaways

- Space cooling through radiation alone is 30-100x less efficient than Earth-based convection and water cooling

- A 25-megawatt orbital data center would require roughly 30,000-55,000 square meters of radiator panels even with ideal radiators, about the area of 5 to 10 American football fields

- Launch costs (10/kg in future) make space economically unviable compared to ground infrastructure

- Cosmic radiation causes bit flip rates 100-1,000x higher in space, requiring expensive error correction and redundancy

- Proven Earth solutions—immersion cooling, water recycling, renewable integration, efficiency optimization—are cheaper and faster to deploy

- The 2.6-millisecond speed-of-light round trip to low Earth orbit introduces unavoidable latency for interactive applications

- Managing AI's energy and water footprint requires demand-side solutions and efficiency improvements, not exotic infrastructure


![Can We Move AI Data Centers to Space? The Physics Says No [2025]](https://tryrunable.com/blog/can-we-move-ai-data-centers-to-space-the-physics-says-no-202/image-1-1771591154655.jpg)