The Unexpected Shutdown That Left Users Heartbroken

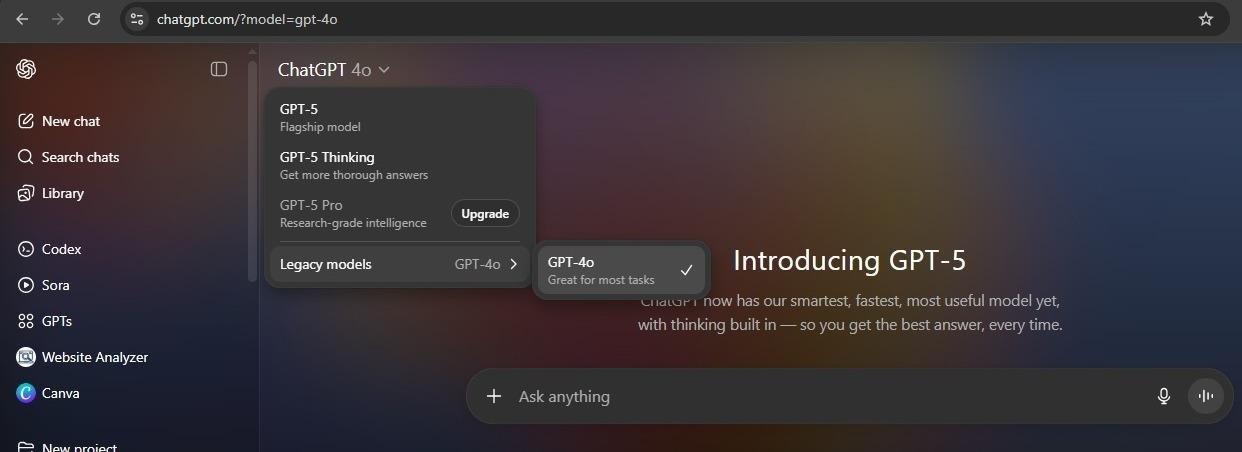

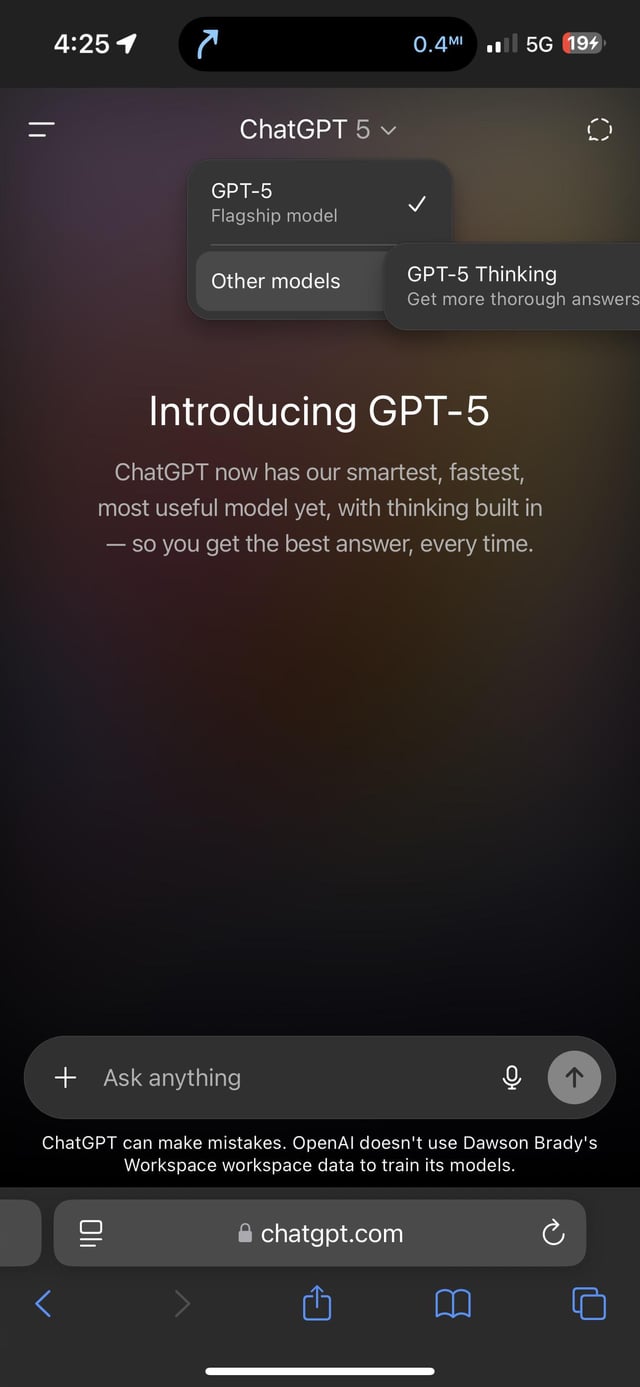

It happened quietly, almost without warning. One day, you opened ChatGPT, and your familiar companion was gone. ChatGPT-4o, the model that had become the gold standard for so many users, had been replaced. In its place stood GPT-5, OpenAI's shiny new flagship model. But instead of celebrating, the internet erupted in grief.

Social media filled with emotional testimonies. "I'm grieving," one user wrote. "4o was perfect. Why did they take it away?" Another said, "It actually understood what I meant." The hashtag #keep4o began trending, with thousands of users demanding that OpenAI restore the model they'd grown to depend on.

This wasn't a typical failed tech rollout. It was something deeper. Users didn't just lose access to a tool; they lost a specific version of AI that felt different and worked in ways they'd come to trust. And OpenAI, riding high on its success, seemed caught off guard by the strength of the emotional response.

The shutdown raised fundamental questions about how we relate to AI systems. Do we have a right to continuity with tools we've built workflows around? What happens when companies prioritize pushing the new over preserving the familiar? And most importantly, was GPT-5 really an upgrade, or just a different model that solved different problems?

This is the story of what happened when OpenAI decided to move forward and left millions of loyal users behind. It's a cautionary tale about technology companies making decisions in a vacuum, and it offers lessons about AI product management that extend far beyond OpenAI's walls.

TL;DR

- ChatGPT-4o was unexpectedly deprecated by OpenAI in favor of the new GPT-5 model, triggering massive user backlash

- #keep4o campaign gained traction with thousands of users demanding restoration of the previous model

- Users reported specific features they missed: better reasoning, more consistent outputs, and superior performance on specialized tasks

- GPT-5 brought improvements in certain areas but felt like a downgrade for specific use cases many users depended on

- The situation exposed tensions between innovation velocity and user continuity in AI product development

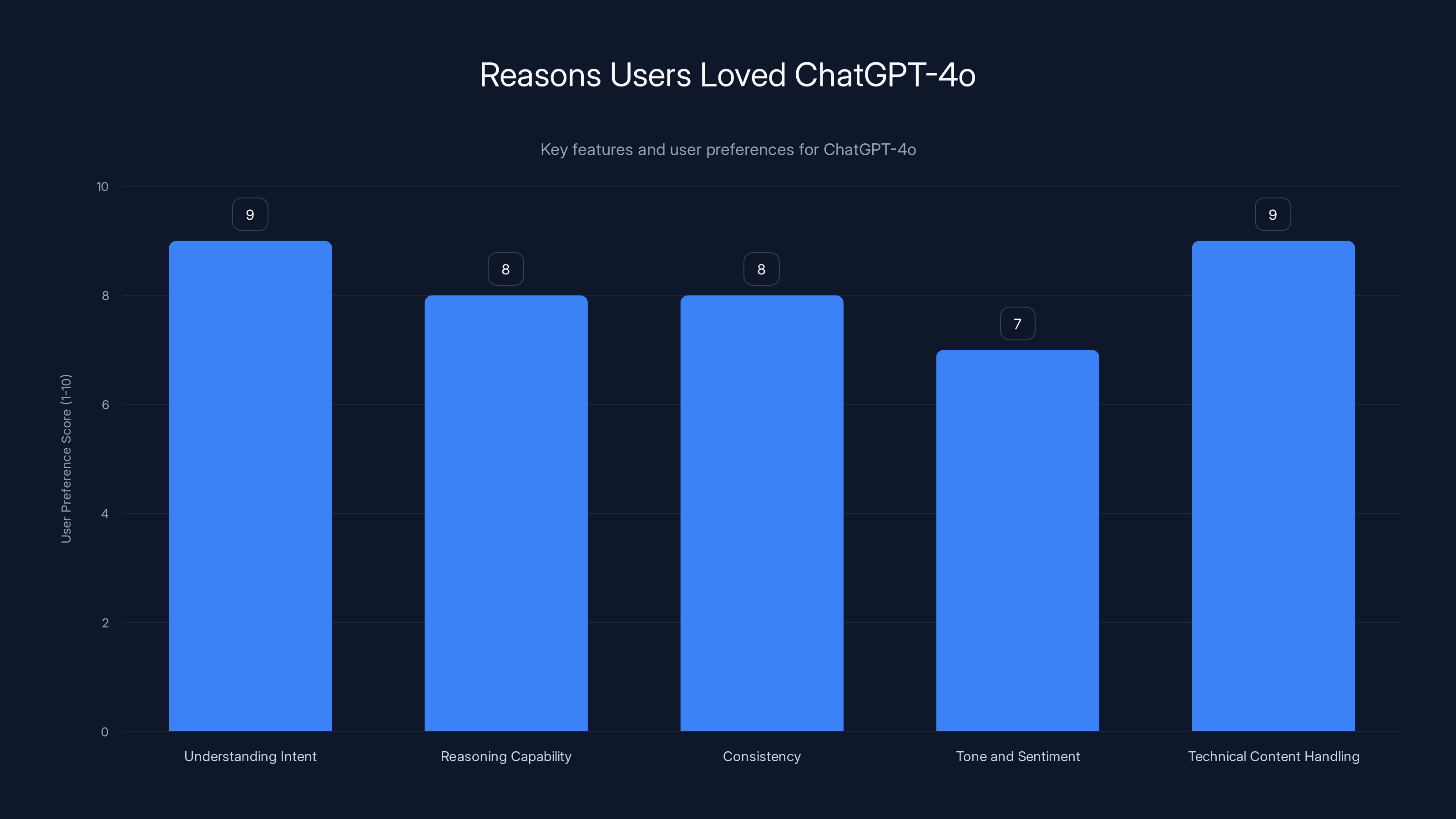

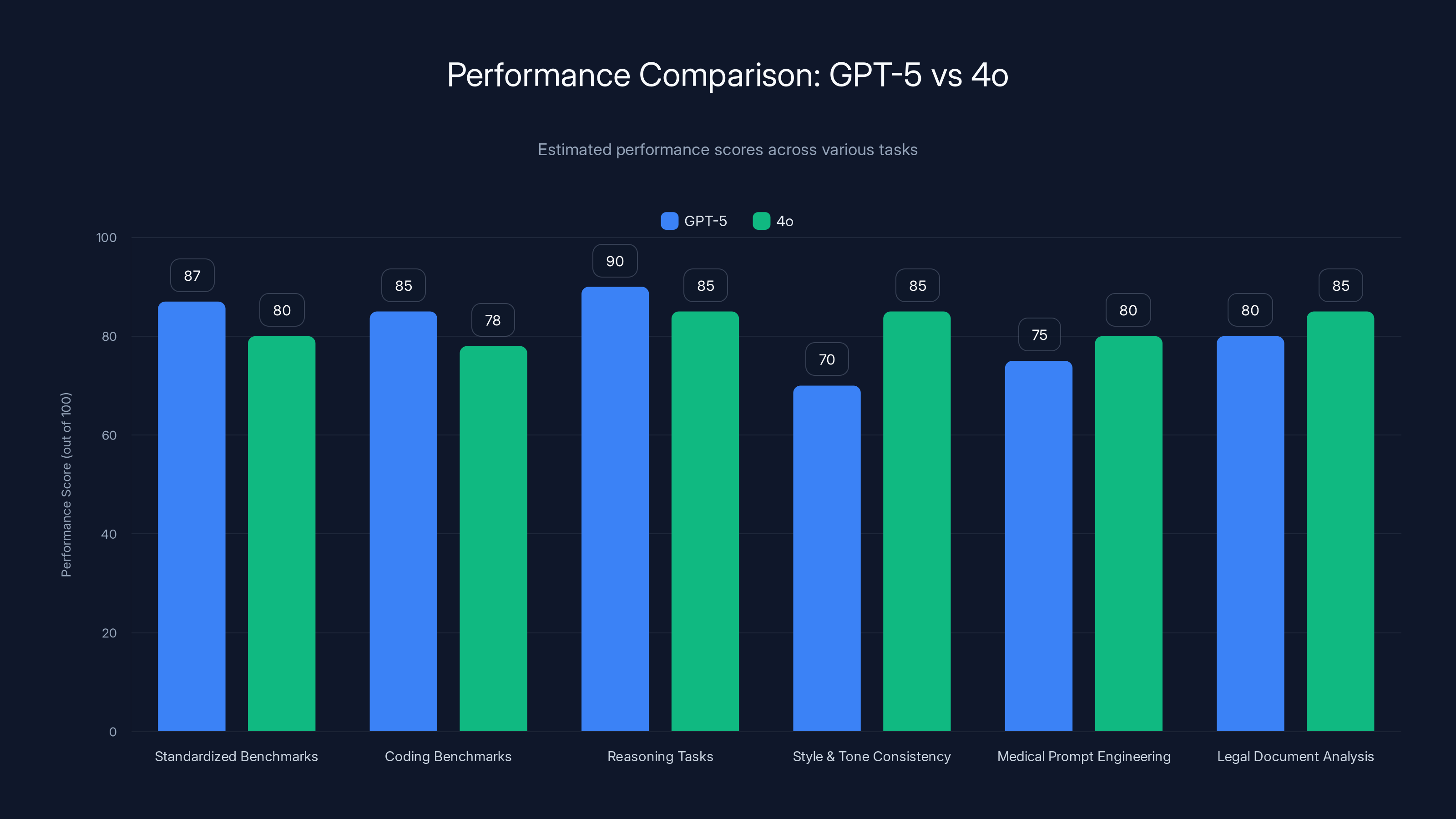

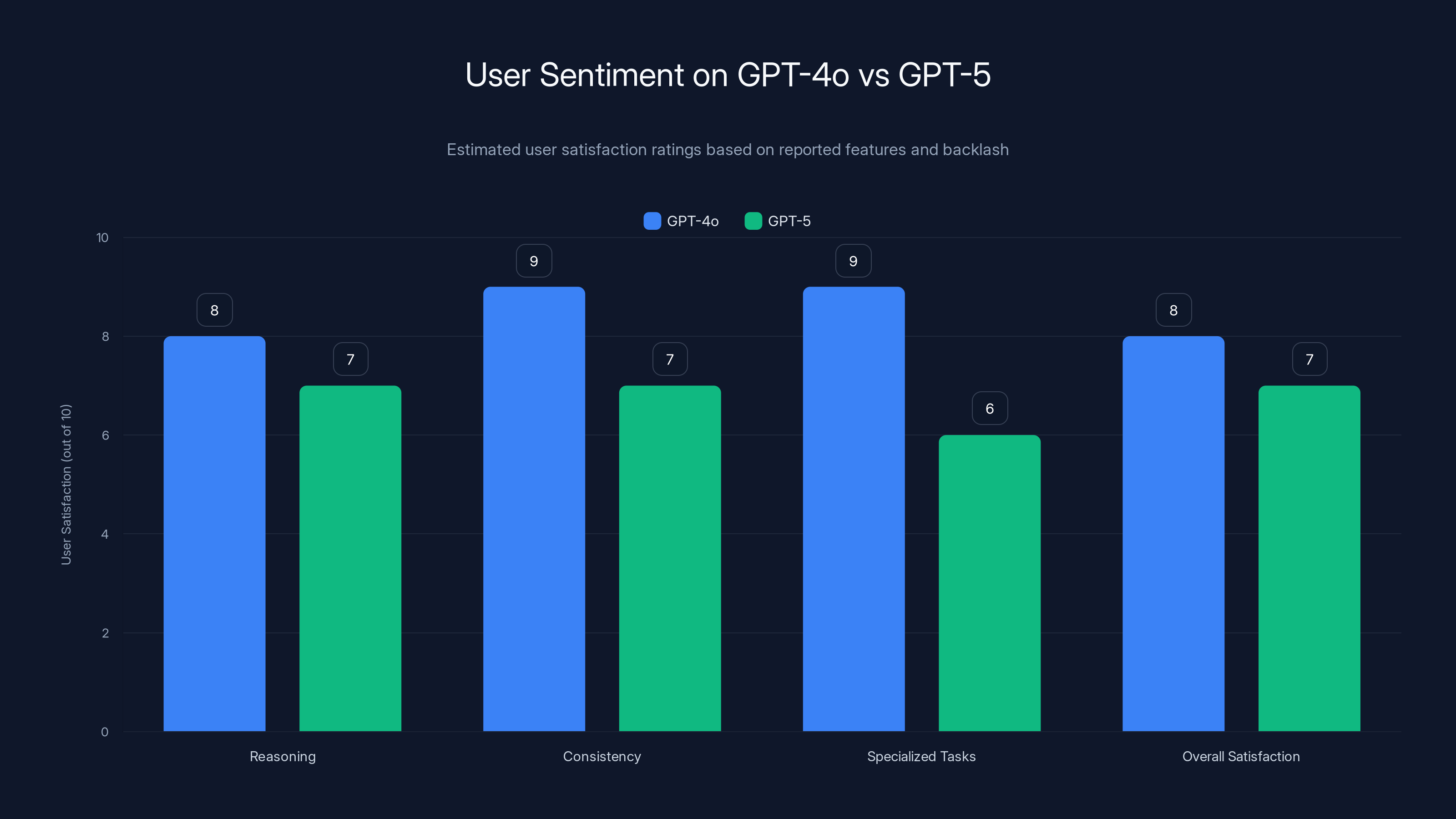

ChatGPT-4o was highly favored for its ability to understand user intent, reason effectively, and maintain consistency, making it a preferred choice across various domains. Estimated data based on user feedback.

What Was ChatGPT-4o and Why Did Users Love It?

ChatGPT-4o wasn't just another model update. It represented a turning point in how AI systems handled multimodal tasks. The "o" stood for "omni," and the model felt like it understood context in ways previous versions didn't. Users didn't just tolerate 4o. They actively preferred it, built their workflows around it, and defended it against competing models.

The model excelled at a specific thing: understanding what users meant, not just what they said. If you phrased something awkwardly or approached a problem from an unexpected angle, 4o would grasp the underlying intent. Writers reported that 4o caught nuance in tone and meaning. Developers said it understood architectural problems even when described imprecisely. Researchers found 4o handled complex multi-step tasks without losing track of earlier instructions.

One of 4o's standout traits was reasoning without verbose explanation overhead. Earlier models would either skip reasoning entirely or explain every step ad nauseam. ChatGPT-4o found a middle ground: it seemed to reason through problems internally, then delivered answers that felt both intelligent and concise.

Another factor was consistency. Users reported that ChatGPT-4o produced remarkably consistent outputs across sessions. Ask it the same question three times, and you'd get essentially the same answer with only minor variation. This predictability was valuable for people building systems or workflows that depended on reproducible results.
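One hedged way to put a number on "essentially the same answer" is to send the same prompt several times and average the pairwise text similarity of the responses. The sketch below uses Python's standard `difflib.SequenceMatcher`; the `consistency_score` name and the sample responses are hypothetical stand-ins for outputs you would collect yourself, and character-level similarity is only a rough proxy for semantic consistency.

```python
from difflib import SequenceMatcher
from itertools import combinations


def consistency_score(responses: list[str]) -> float:
    """Mean pairwise similarity (0.0 to 1.0) across repeated responses.

    Uses difflib's ratio(), a rough character-level measure, not a
    semantic one. Needs at least two responses.
    """
    if len(responses) < 2:
        raise ValueError("need at least two responses to compare")
    pairs = list(combinations(responses, 2))
    total = sum(SequenceMatcher(None, a, b).ratio() for a, b in pairs)
    return total / len(pairs)


# Hypothetical outputs from asking one model the same question three times.
runs = [
    "Use a dictionary to count word frequencies.",
    "Use a dictionary to count the word frequencies.",
    "Use a dict to count word frequencies.",
]
print(f"{consistency_score(runs):.2f}")
```

A score near 1.0 means the runs were nearly identical; tracking it across model versions is one way to notice when a switch changes how reproducible a workflow is.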

For customer service teams, 4o became the preferred tool for generating responses. It understood tone and customer sentiment in ways that felt almost human. For product teams, 4o excelled at breaking down user problems into actionable insights. For academics and researchers, 4o's ability to handle technical content across multiple domains made it invaluable.

The model developed almost a cult following among power users. Communities formed around sharing tips for getting the best results from 4o, and people wrote lengthy threads on how to structure prompts for optimal 4o performance. This wasn't grudging dependence. It was enthusiasts genuinely excited about a tool that worked well.

When OpenAI announced that GPT-5 would replace 4o, the initial reaction was curiosity mixed with apprehension: curiosity because GPT-5 came with impressive benchmark improvements, apprehension because users knew that benchmark gains don't always translate into better performance on the specific tasks they cared about.

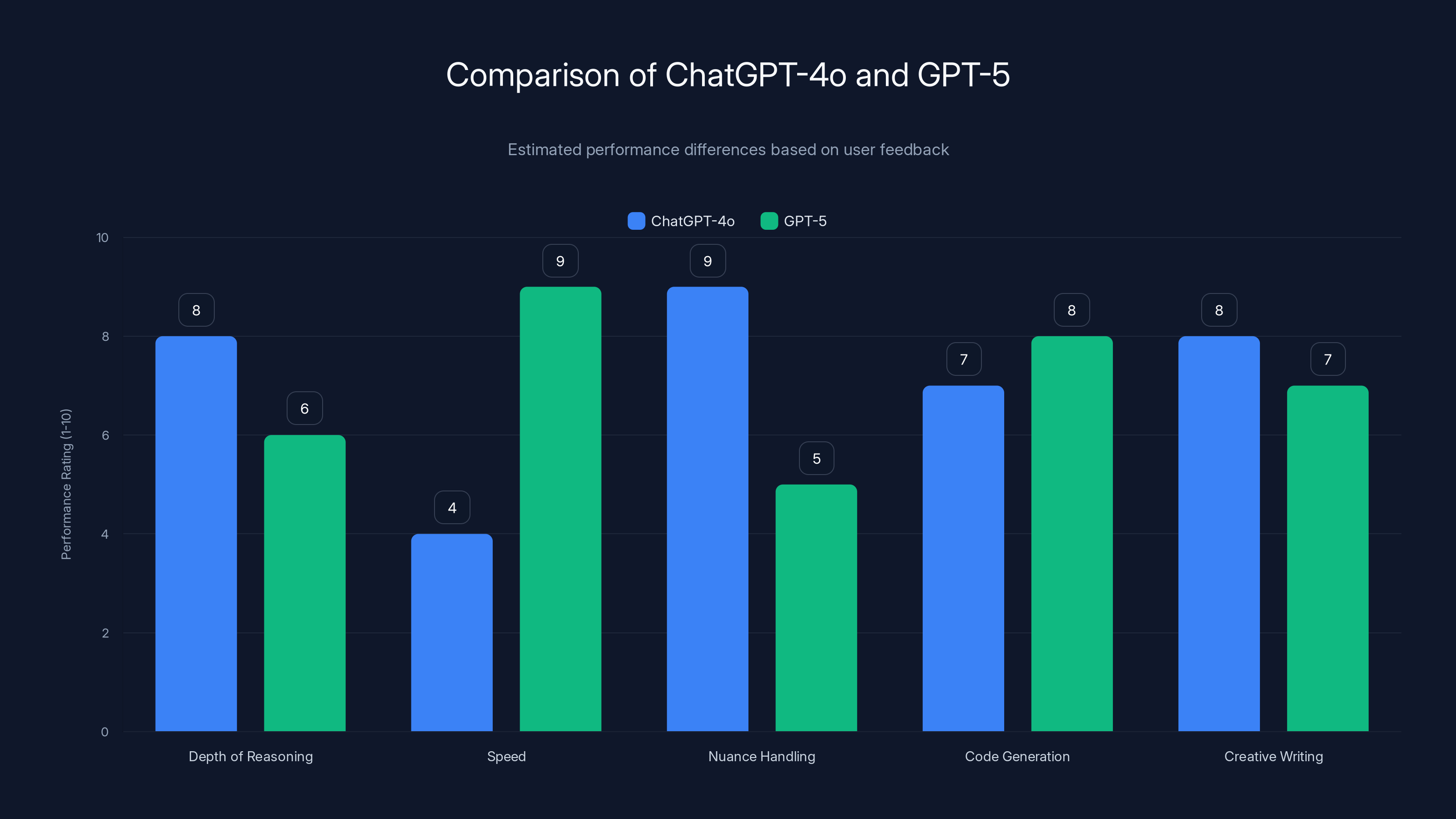

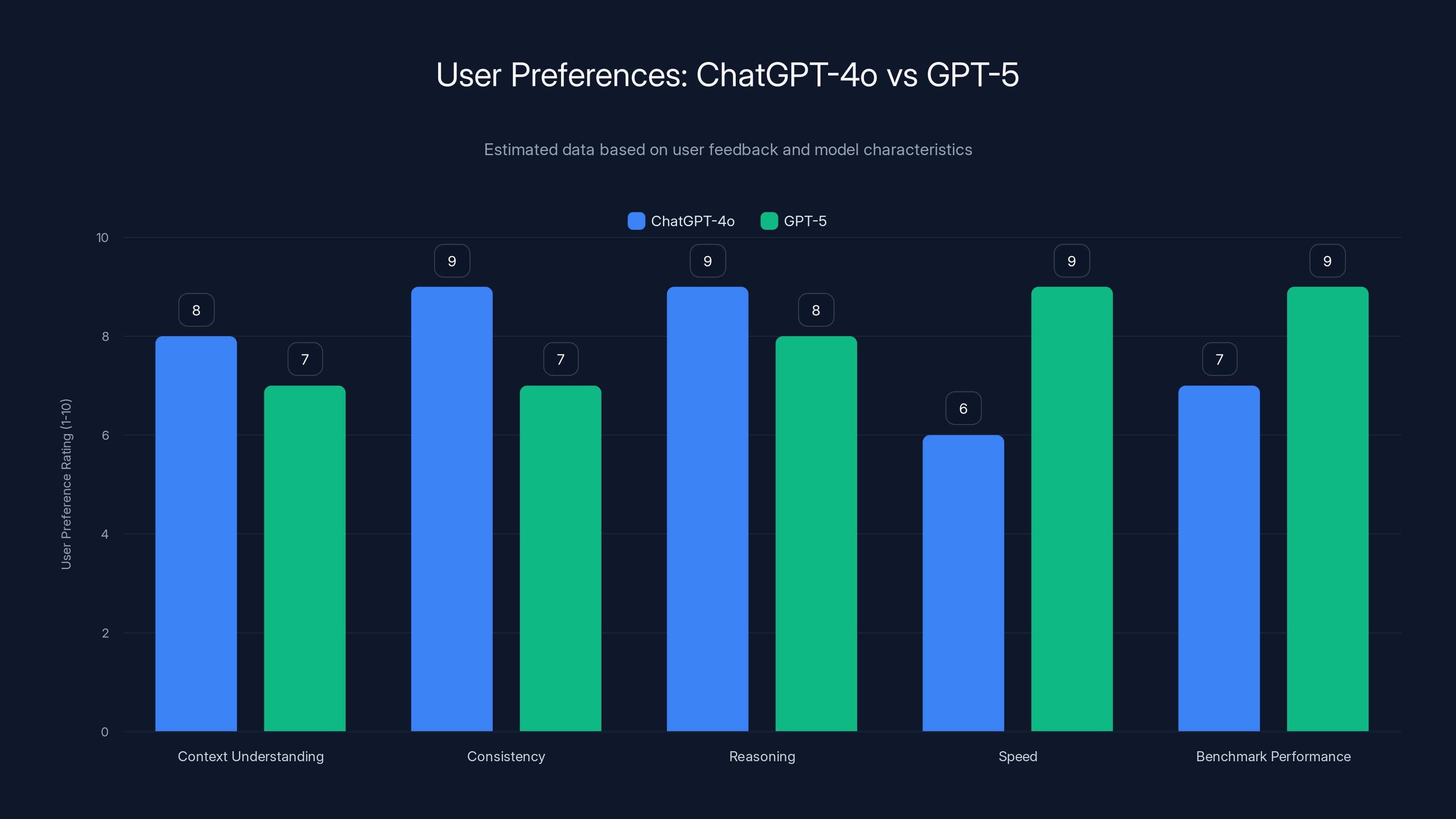

ChatGPT-4o excels in depth of reasoning and nuance handling, while GPT-5 is faster and better at code generation. Estimated data based on user feedback.

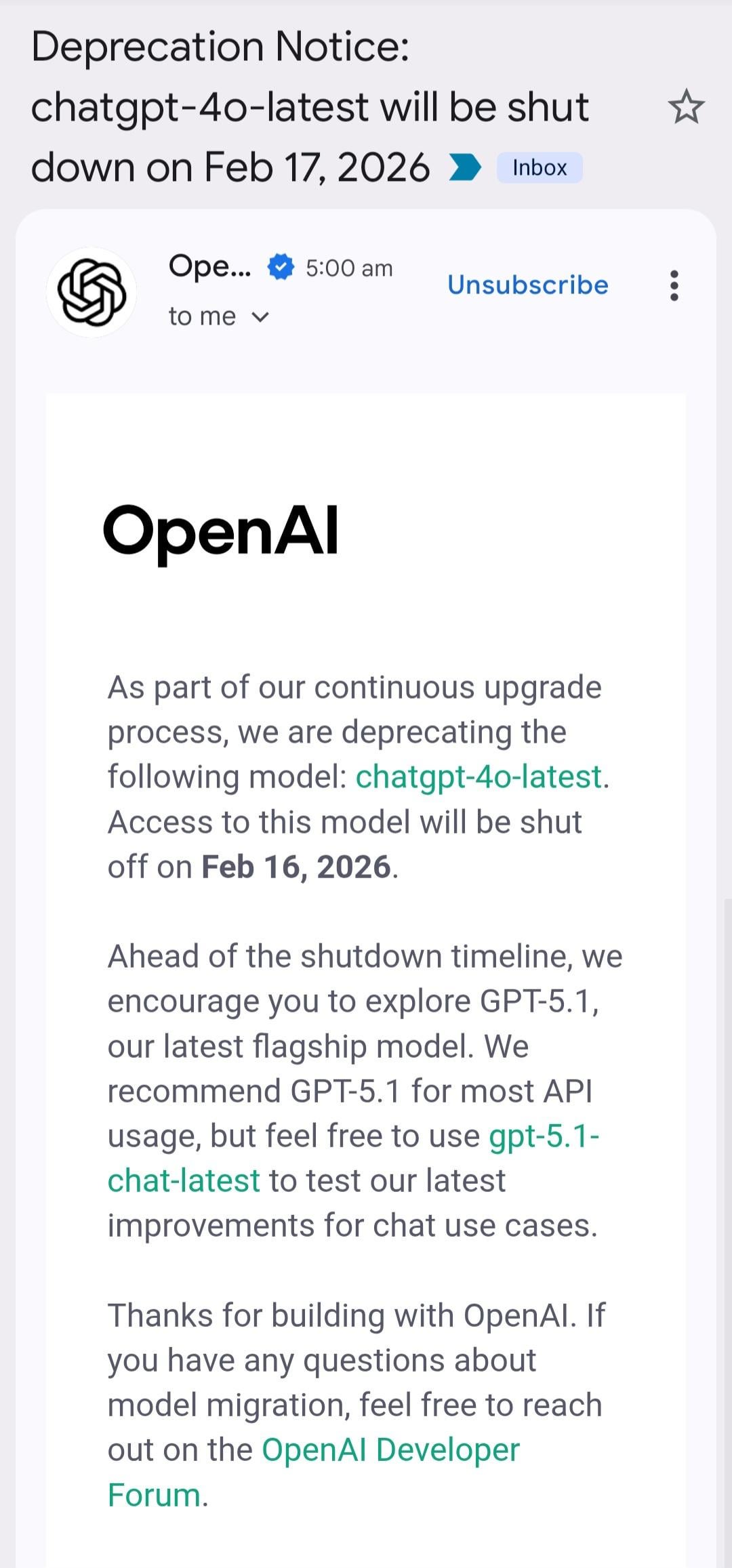

The Announcement Nobody Expected

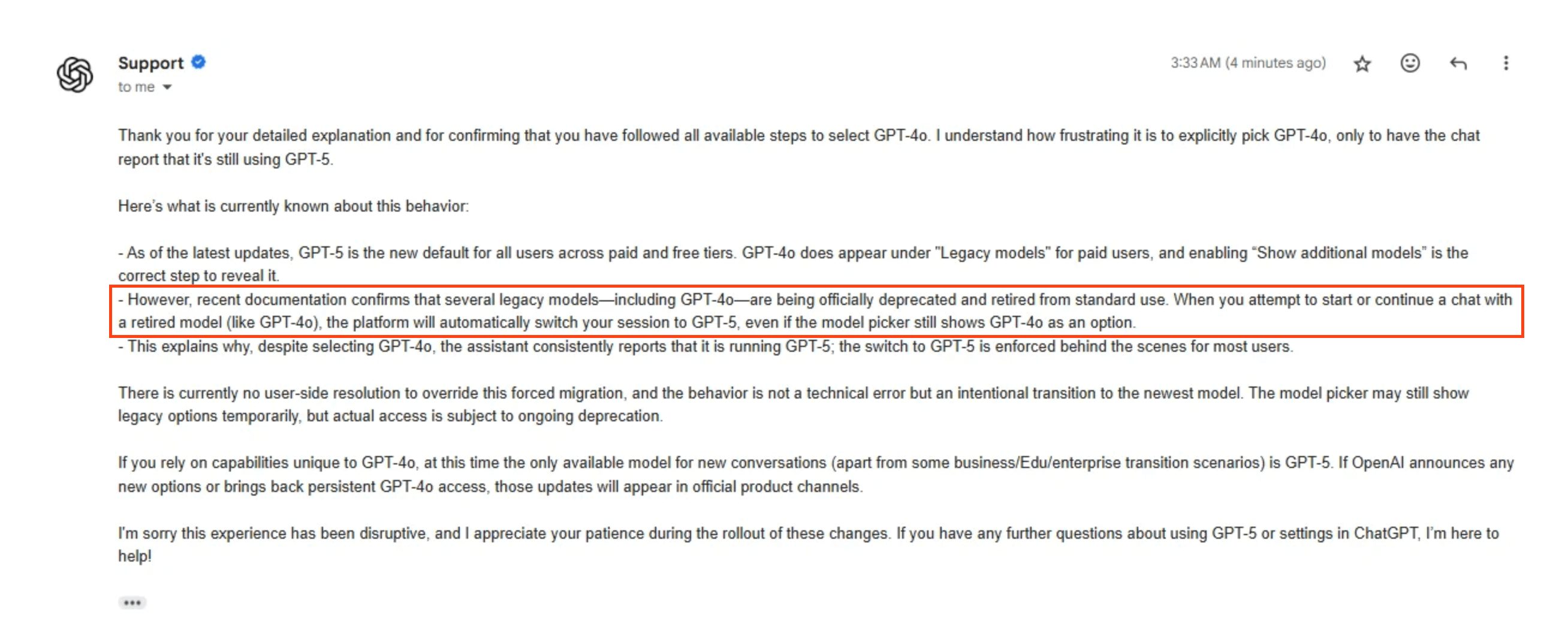

OpenAI's decision to deprecate ChatGPT-4o came with minimal advance notice. There was no gradual sunset period and no "we're transitioning" communication strategy. One day 4o was available; the next day, it wasn't. For users who'd configured it as their default model, the switch happened silently in the background.

The official communication was sparse. OpenAI positioned the change as a natural progression. GPT-5 was better, they said: more capable, more efficient, the future. Users should just adapt. The tone suggested this was an obvious upgrade that only Luddites would resist.

But that's not what happened.

Users didn't accept the transition passively. They started comparing ChatGPT-4o and GPT-5 responses side by side for identical prompts. And the results weren't uniformly in GPT-5's favor.

On certain tasks, GPT-5 absolutely crushed it. Mathematical reasoning improved. Complex problem-solving felt more robust. The model could handle ambiguous instructions better in some contexts. But on other tasks, users reported GPT-5 felt over-engineered. It took longer to generate responses. It provided more information than requested. It sometimes misunderstood the conversational context in ways 4o never would have.

The timing felt particularly poor. Users weren't asking for a new model; they were happy with 4o. If OpenAI had released GPT-5 as an option alongside 4o, letting users choose based on their needs, the story would've been different. Instead, the company made a unilateral decision and expected everyone to adjust.

This sparked a broader conversation about what it means to depend on AI systems you don't control. Users had invested time in learning 4o's quirks and strengths. They'd configured workflows around its specific capabilities. And then, without recourse, those capabilities vanished. It felt less like an upgrade and more like having your favorite tool taken away without permission.

Why #keep4o Gained Traction Across Communities

Hashtags typically trend when they tap into something real. #keep4o wasn't a manufactured campaign or coordinated marketing effort. It emerged organically because thousands of users felt the same thing simultaneously: loss. Genuine, tangible loss.

The campaign gained momentum because it gave voice to what many people were experiencing but hadn't articulated. On Reddit, AI-focused subreddits filled with threads comparing model performance. On Twitter, posts went viral showing specific tasks where 4o outperformed GPT-5. On Discord servers where developers gathered, the conversation turned to "how do we work around this change?"

What made #keep4o different from typical "I don't like this feature" complaints was the specificity. Users didn't complain in abstractions. They showed exact examples. "Here's what 4o gave me for this coding problem. Here's what 5 gives me. Look at the difference." "I asked 4o the same question three times and got essentially the same answer. With 5, each response contradicts the previous one."

The campaign attracted people from completely different fields who'd converged on 4o independently. SEO specialists used it for content strategy. Therapists used it for building conversational frameworks. Architects used it for design ideation. Accountants used it for analyzing financial data. All of them suddenly lost a tool they'd customized to their specific discipline.

What amplified #keep4o was OpenAI's lack of response. Instead of engaging with user concerns or offering compromise solutions, the company essentially doubled down on the transition. This perceived dismissiveness turned the campaign from a set of complaints into something closer to protest. Users felt unheard. And when people feel unheard by large institutions, they organize.

The campaign also tapped into legitimate frustration about AI product volatility. Users understand that technology evolves. What they don't accept as easily is the lack of continuity options. Why couldn't both models coexist? Why was the choice binary? Why were users forced to adapt on someone else's timeline?

GPT-5 excels in standardized and reasoning tasks but falls short in style consistency and domain-specific applications compared to 4o. Estimated data based on user insights.

The Technical Differences: What Actually Changed

To understand why users grieved 4o's loss, you need to understand what actually made it different from GPT-5. This isn't about one being objectively better. They're different tools optimized for different things.

ChatGPT-4o seemed built around a specific philosophy: depth over breadth. The model would really sink its teeth into a problem. It would reason through edge cases, question assumptions before answering, and often circle back to earlier context to ensure consistency. This made it slower in some contexts but more reliable in others.

GPT-5 appears to follow a different philosophy: speed and scale. The model prioritizes getting to an answer quickly, presumably drawing on more parameters and training data to make more accurate statistical predictions about what comes next. It's phenomenally good at tasks that involve broad knowledge retrieval or straightforward pattern matching.

Where the models diverged most sharply was in their handling of nuance. ChatGPT-4o would often hedge its answers appropriately ("This might be wrong, but based on context, I think..."), acknowledging uncertainty. GPT-5 tends to be more confident in its outputs, even when that confidence isn't entirely justified.

For code generation, users reported different trade-offs. ChatGPT-4o tended to generate more defensive code with explicit error handling, while GPT-5 generates more concise code that assumes the happy path. Each approach has merit depending on your context, but they reflect fundamentally different philosophies.

For creative writing, the difference was noticeable in voice. ChatGPT-4o had a distinctive writing style that felt thoughtful and measured. GPT-5's style felt snappier and more contemporary but sometimes lost nuance. Writers who'd trained themselves to work with 4o's voice had to relearn how to prompt GPT-5 to approximate the output they wanted.

Whatever the architectural differences, some of them show up in response times. ChatGPT-4o's latency was noticeable but acceptable for most uses. GPT-5 reduced latency further, but sometimes at the cost of coherence over long contexts. For short interactions, the difference barely matters. In multi-turn conversations where context accumulates, some users noticed degradation.

The Performance Trade-Offs Users Discovered

When users started systematically comparing 4o and GPT-5, patterns emerged. Neither model was uniformly better. Instead, each excelled in specific domains while showing weaknesses in others.

On standardized benchmarks like MMLU (Massive Multitask Language Understanding), GPT-5 outperformed 4o by approximately 5 to 7 percentage points. On coding benchmarks, GPT-5 improved in languages like Python but sometimes regressed on complex multi-language projects where context switching mattered. On reasoning tasks involving novel problems, GPT-5 was stronger.

But on subjective tasks requiring style and tone consistency, 4o retained an edge. When asked to maintain a specific voice across multiple outputs, 4o was more reliable. When tasked with understanding emotional or implicit context in conversations, 4o seemed to grasp subtlety that GPT-5 sometimes missed.

For domain-specific applications, the difference became pronounced. In medical prompt engineering (hypothetical scenarios used for education), GPT-5 sometimes made more dangerous assumptions because it prioritized confident outputs over hedging uncertainty appropriately. For legal document analysis, GPT-5 would sometimes miss relevant precedents that 4o would catch because 4o reasoned through the problem space more thoroughly.

The latency difference mattered more than users initially expected. ChatGPT-4o would take 3 to 5 seconds to generate a complex response; GPT-5 cut that to 1 to 2 seconds. For a single request, the gap is negligible. For batch processing or systems that make many API calls, the savings add up. But the speed gain came with a trade-off: less visible reasoning meant fewer opportunities to catch hallucinations before the response was delivered.
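The compounding is simple arithmetic. The sketch below takes the rough per-response figures above, uses their midpoints (4 seconds and 1.5 seconds), and assumes calls run one after another; the batch size of 1,000 calls is hypothetical.

```python
def sequential_seconds(calls: int, seconds_per_call: float) -> float:
    """Total wall-clock time if each call waits for the previous one."""
    return calls * seconds_per_call


CALLS = 1_000        # hypothetical batch size
OLD_LATENCY = 4.0    # midpoint of the reported 3 to 5 s for 4o
NEW_LATENCY = 1.5    # midpoint of the reported 1 to 2 s for GPT-5

saved = sequential_seconds(CALLS, OLD_LATENCY) - sequential_seconds(CALLS, NEW_LATENCY)
print(f"Saved {saved:.0f} s (about {saved / 60:.0f} minutes) per batch")
```

With concurrent requests the absolute totals shrink, but the per-call gap still scales with volume, which is why the difference matters far more to batch pipelines than to someone chatting interactively.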

Users who'd built content generation workflows discovered that 4o's slower responses actually helped quality control: they had time to review reasoning steps and catch errors mid-process. GPT-5's speed meant errors sometimes reached the output before they could intervene.

Estimated data shows ChatGPT-4o excels in context understanding and consistency, while GPT-5 leads in speed and benchmark performance.

Specific Use Cases Where Users Noticed the Difference Most

The impact of the 4o deprecation wasn't universal. Some users switched to GPT-5 and barely noticed. But for others, the difference was stark. Here are the domains where the change hurt most.

Creative Writing Communities: Writers who'd spent months learning to prompt ChatGPT-4o in specific ways found their techniques didn't translate to GPT-5. The model had different stylistic tendencies. Prompts that generated beautiful prose with 4o sometimes produced overwrought or generic text with GPT-5. Writers had to start their optimization process over.

Software Development Teams: Developers building production systems had invested in understanding 4o's code generation patterns. They knew when to trust its output and when to review carefully. With 5, they had to recalibrate. Some found 5 better for their use case. Others found it worse. The uncertainty meant more manual review time, which defeated the purpose of using AI to speed up development.

Customer Service Operations: Companies that had carefully tuned prompt templates around 4o's behavior found those templates less effective with GPT-5. Response quality varied more, and tone sometimes felt off. They faced a choice: invest time re-optimizing every template, or accept a potentially degraded customer experience during the transition.

Academic Research: Researchers using ChatGPT-4o for literature synthesis and problem-solving found the transition particularly disruptive. 4o's methodical reasoning about complex problems had become part of their research workflow, and GPT-5's faster but sometimes less thorough approach meant more manual verification.

Data Analysis: Analysts who used ChatGPT-4o to explore datasets interactively reported more errors with GPT-5. The model would sometimes make unjustified logical leaps or miss important patterns in the data because it prioritized speed over rigor.

Prompt Engineering Communities: The entire field of prompt engineering is built on understanding specific model behaviors. Thousands of documented prompts optimized for 4o's specific quirks and strengths suddenly became less effective. Communities had to rebuild their collective knowledge.

These weren't edge cases. These were mainstream applications affecting thousands of users. The deprecation didn't just remove a tool. It invalidated accumulated expertise and forced re-optimization of established workflows.

The Business Perspective: Why OpenAI Made This Decision

Understanding OpenAI's logic helps explain why the company made a decision that upset so many users. It wasn't arbitrary. It was strategic, even if the execution was tone-deaf.

First, there's the resource allocation argument. Maintaining multiple model versions requires computational infrastructure, testing, and support. By consolidating on GPT-5, OpenAI reduces operational overhead. This isn't trivial: model infrastructure costs are substantial, and removing 4o from the inference stack saves real money.

Second, there's the innovation velocity argument. OpenAI wants to be seen as constantly pushing forward. Offering an older model alongside a newer one sends a confusing message to the market: it suggests the new model isn't clearly better, which might make customers hesitant to upgrade. Forcing the transition eliminates that hesitation. Everyone uses GPT-5, and GPT-5 usage generates better training data for the next iteration.

Third, there's the technical debt argument. Supporting 4o means maintaining backwards compatibility. As the company evolves its infrastructure and safety systems, 4o becomes a constraint. Deprecating it lets engineers move faster without worrying about legacy system compatibility.

Fourth, there's the revenue optimization argument. Users who depend on specific models might be willing to upgrade to paid plans or higher service tiers if the alternative is losing access to the model entirely. This converts free-tier users into paying customers and upgrades existing customers to higher tiers.

None of these reasons are wrong from a business perspective. Companies make resource allocation decisions all the time. The issue wasn't the decision itself. It was the execution and communication.

OpenAI could have handled this in many ways. They could have offered a transition period in which both models existed. They could have made deprecation a gradual fade rather than an abrupt switch. They could have publicly acknowledged that 4o excelled at specific use cases while GPT-5 excelled at others, rather than framing it as a universally superior upgrade. They could have listened to user feedback and adjusted their timeline.

Instead, they did none of those things. And that's what transformed what could've been a routine model update into a PR crisis and user uprising.

The deprecation of ChatGPT-4o highlights critical lessons for AI product management, with consistency and feedback loops rated as most important. Estimated data based on narrative insights.

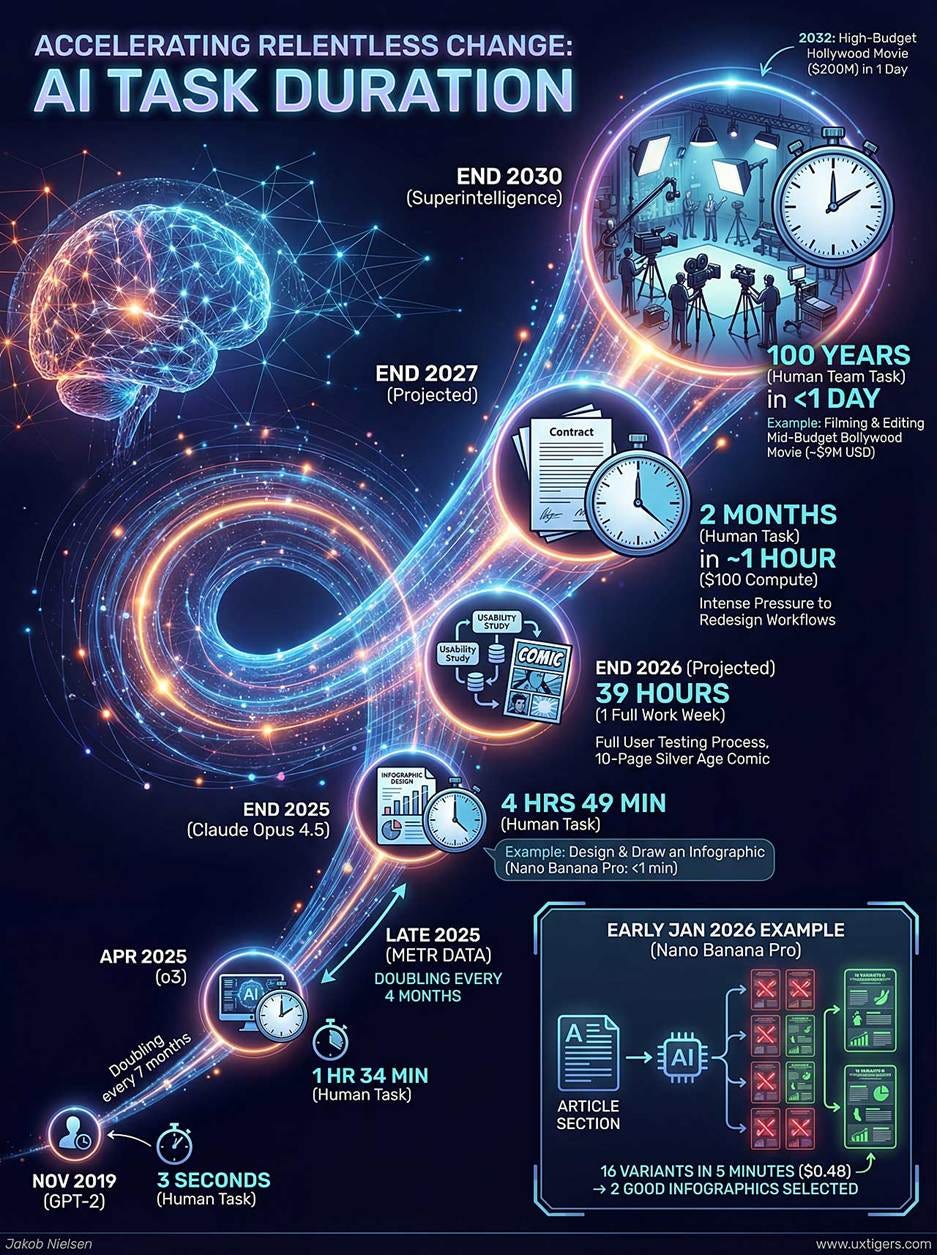

The Lessons for AI Product Management

The ChatGPT-4o deprecation reveals important truths about how companies should think about AI products and user relationships. These lessons extend beyond OpenAI to any company building AI systems that people depend on.

Lesson 1: Users Build Identity Around Tools: When people use a tool regularly, they develop expertise specific to that tool. With AI models, this expertise becomes even more pronounced because prompting is partially art. Users who spent months learning how to work effectively with 4o didn't just lose a tool. They lost accumulated expertise. This psychological investment means changes to model availability feel more like betrayal than technical updates.

Lesson 2: Consistency Matters More Than Performance: Benchmark improvements mean little to users if the model's behavior changes in ways that break their workflows. Users don't always want the "better" model. They want predictable behavior. They want to know that a change won't break systems they've built. This suggests the optimal product strategy is offering model options, not forcing transitions.

Lesson 3: Transparency and Communication Are Cheap: OpenAI's opaque handling of the transition cost far more in user trust than any technical or resource-related benefit it gained. Had the company communicated clearly about the change, offered a transition period, and provided a way for users to access 4o if they needed it, the backlash would likely have been minimal. Instead, it prioritized efficiency and got a PR crisis.

Lesson 4: Domain Specificity Matters: A model that's better overall might be worse for specific applications. Treating all users as if they should naturally upgrade assumes homogeneous use cases. In reality, different users optimize for different things. A better strategy acknowledges this explicitly.

Lesson 5: User Feedback Loops Are Critical: Had OpenAI invested in understanding how people were actually using 4o before deprecating it, the company would have anticipated the pushback. Instead, the #keep4o campaign happened after the fact. Proactive user research could have shaped a better rollout strategy.

These lessons aren't unique to OpenAI. Any company building AI products should study what happened with ChatGPT-4o and draw its own conclusions about how to avoid similar situations.

What's Being Done to Address the Backlash

OpenAI eventually responded to the #keep4o campaign, though the response was more limited than some users hoped. The company didn't restore ChatGPT-4o as a default option. Instead, it made limited concessions.

Plus and Pro subscribers can now access ChatGPT-4o through an advanced settings menu, though it's not marketed as a primary option. Access comes with extra friction: you have to actively choose it rather than having it available as a default model. For some users, this solved the immediate problem. For others, the friction of the workaround was frustrating.

OpenAI also committed to better communication around future model changes. The company acknowledged that the abruptness of the 4o deprecation caught people off guard and promised more advance notice for future transitions. Whether this commitment translates into action remains to be seen, but it at least shows that OpenAI recognized the communication failure.

Some users migrated to alternative platforms that still offer access to older models or provide more model flexibility. Anthropic's Claude, for instance, gained popularity partly because it offered continuity and didn't force rapid model transitions.

The broader impact was a shift in how the AI community thinks about model versioning. Anthropic's Claude and some open-source platforms began emphasizing "model choice" as a feature, and users appreciated having options. This competitive pressure might push OpenAI to reconsider its deprecation strategy and adopt a more user-centric approach to model management.

Estimated data shows users rated GPT-4o higher in reasoning, consistency, and specialized tasks compared to GPT-5, reflecting the backlash and #keep4o campaign.

The Broader Context: Is This a Unique Problem or Just the Beginning?

The ChatGPT-4o situation isn't unprecedented. Other companies have deprecated products users loved. But it highlights a challenge specific to AI systems: they're not static products. They're continuously evolving, and users build dependence on specific iterations.

This problem will only grow more pronounced as AI becomes more embedded in workflows. Right now, most users rely on AI as a supplementary tool. In five years, mission-critical applications might depend on specific model behaviors. A deprecation that's inconvenient today could be catastrophic then.

The question OpenAI and other companies face is whether to consolidate on a single model or maintain version flexibility. Consolidation is cheaper and simpler. Version flexibility is expensive but creates a better user experience and more loyalty. Most companies will probably find a middle ground: maintain the latest version actively but offer limited support for previous versions.

What happened with ChatGPT-4o is likely just the first of many such conflicts. As AI becomes more central to work and life, users will grow increasingly protective of the specific models and versions they depend on. Companies that learn from OpenAI's misstep and handle transitions more thoughtfully will gain a competitive advantage.

How Users Are Adapting to GPT-5

Despite the initial backlash, most users have adapted to GPT-5. The transition that felt catastrophic in week one became routine by week three. This isn't because users stopped caring about 4o. It's because humans are adaptable. We adjust to constraints and optimize around limitations.

Many users discovered that GPT-5, while different, had its own strengths. For certain applications, the speed improvement mattered more than the reasoning tradeoff. For others, GPT-5's broader knowledge base made it more useful despite losing some 4o-specific capabilities.

Developers rebuilt their prompt templates. Writers adjusted their techniques. Researchers found new workflows. Customer service teams re-optimized their templates. The ecosystem adapted, as it always does.

But the adaptation came at a cost: hours of lost productivity while people reconfigured systems, thousands of dollars in consultant time helping organizations optimize for the new model, and dips in customer satisfaction during the transition. These costs were entirely avoidable had OpenAI handled the deprecation more gracefully.

What's interesting is that many of the users who adapted most quickly were those who didn't have deep workflow dependence on 4o-specific behaviors. Users who'd built their entire processes around 4o's specific characteristics struggled most. This suggests future deprecations should specifically account for power users and offer them transition support or version access.

The Future of Model Versioning and AI Product Strategy

The ChatGPT-4o situation creates an opportunity for the industry to establish better practices around model versioning. Here's what smart companies might do going forward.

Semantic Versioning for Models: Just like software uses semantic versioning (major.minor.patch), AI companies could adopt similar practices. This sets clear expectations about what changes mean and whether users should expect breaking changes.
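As a sketch of how such a scheme could work, assume a hypothetical policy in which a major bump signals behavior changes that can break existing prompts, a minor bump adds capabilities without breaking them, and a patch is a fix. Nothing here reflects a versioning scheme any AI vendor actually uses; the version strings and the `classify_upgrade` helper are illustrative only.

```python
from typing import NamedTuple


class ModelVersion(NamedTuple):
    major: int
    minor: int
    patch: int

    @classmethod
    def parse(cls, text: str) -> "ModelVersion":
        # Expects "major.minor.patch", e.g. "5.1.0".
        major, minor, patch = (int(part) for part in text.split("."))
        return cls(major, minor, patch)


def classify_upgrade(current: str, candidate: str) -> str:
    """Label an upgrade under a hypothetical semver policy for models."""
    old, new = ModelVersion.parse(current), ModelVersion.parse(candidate)
    if new <= old:  # tuples compare field by field
        return "not an upgrade"
    if new.major != old.major:
        return "breaking: re-test prompts before switching"
    if new.minor != old.minor:
        return "additive: existing prompts should keep working"
    return "patch: safe to adopt"


print(classify_upgrade("4.1.0", "5.0.0"))
print(classify_upgrade("5.0.0", "5.1.0"))
```

The value is less in the code than in the contract: a major bump tells users in advance that their prompts may need re-validation.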

Explicit Lifecycle Policies: Companies should publicly commit to how long models will be supported. If you're going to deprecate a model, announce it 6-12 months in advance. Give users time to adapt. Make the timeline explicit.
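A published lifecycle policy can also be checked mechanically. This sketch assumes a policy requiring at least 6 months between announcement and retirement (the low end of the range above) and approximates a month as 30 days; the dates are hypothetical and do not describe OpenAI's actual timeline.

```python
from datetime import date, timedelta

MIN_NOTICE = timedelta(days=6 * 30)  # "6 months", approximated as 180 days


def notice_is_adequate(announced: date, retired: date,
                       min_notice: timedelta = MIN_NOTICE) -> bool:
    """True if the gap between announcement and retirement meets the policy."""
    return retired - announced >= min_notice


# Hypothetical dates for illustration only.
print(notice_is_adequate(date(2025, 1, 1), date(2025, 1, 2)))  # False: abrupt switch
print(notice_is_adequate(date(2025, 1, 1), date(2025, 9, 1)))  # True: about 8 months
```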

Model Customization Options: Rather than forcing users to accept whatever updates a model receives, let users customize update frequency. Some might want the latest model immediately. Others might want to stay on a stable version for 6+ months. Support both use cases.
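One way to express "let users choose update frequency" is a channel setting that resolves to a concrete model, with an optional explicit pin. Everything here is hypothetical: the channel names, the model identifiers, and the catalog are placeholders, not any vendor's real configuration.

```python
from enum import Enum
from typing import Optional


class Channel(Enum):
    LATEST = "latest"  # always follow the newest release
    STABLE = "stable"  # stay on an older, pinned version until the user opts in


# Hypothetical catalog; a real service would load this from its own config.
CATALOG = {
    Channel.LATEST: "model-v5",
    Channel.STABLE: "model-v4",
}


def resolve_model(channel: Channel, pinned: Optional[str] = None) -> str:
    """Return the model ID to use; an explicit pin always wins."""
    if pinned is not None:
        return pinned
    return CATALOG[channel]


print(resolve_model(Channel.LATEST))
print(resolve_model(Channel.STABLE))
print(resolve_model(Channel.LATEST, pinned="model-v4"))
```

Letting an explicit pin override the channel keeps the simple case simple while still giving power users a guarantee that nothing changes underneath them.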

Transparent Capability Documentation: Instead of vague claims about improved models, document exactly what changed and what capabilities were added or removed. Help users make informed decisions about when to upgrade.

User Advisory Groups: Before making major changes, consult with actual users about impact. You don't need to do everything they ask, but the input helps you anticipate problems.

Backwards Compatibility Investments: When possible, design new models to be compatible with prompts written for previous versions. This dramatically reduces friction during transitions.
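Compatibility can also be verified from the user's side: keep a small suite of prompts, each paired with a check describing an acceptable answer, and run it against any candidate model before switching. In this sketch a "model" is any callable from prompt to text; `fake_old_model` and `fake_new_model` are hypothetical stand-ins, not real API clients.

```python
from typing import Callable

Model = Callable[[str], str]

# Each case pairs a prompt with a check the response must pass.
SUITE: list[tuple[str, Callable[[str], bool]]] = [
    ("Reply with only the word OK.", lambda r: r.strip() == "OK"),
    ("List three primary colors, comma-separated.", lambda r: r.count(",") == 2),
]


def failing_prompts(model: Model) -> list[str]:
    """Return the prompts whose responses fail their checks."""
    return [prompt for prompt, check in SUITE if not check(model(prompt))]


def fake_old_model(prompt: str) -> str:
    # Stand-in for the model the prompts were written against.
    return "OK" if "OK" in prompt else "red, yellow, blue"


def fake_new_model(prompt: str) -> str:
    # Stand-in for a newer model that adds unrequested detail.
    return "OK! Happy to help." if "OK" in prompt else "red, yellow, blue"


print(failing_prompts(fake_old_model))
print(failing_prompts(fake_new_model))
```

A suite like this turns "the new model feels different" into a concrete list of prompts that need rework.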

Implementing these practices costs money and requires organizational commitment. But it creates loyalty and avoids the PR crises that poorly handled transitions generate. From a pure business perspective, good model versioning practices are probably worth the investment.

FAQ

What exactly happened to ChatGPT-4o?

OpenAI deprecated ChatGPT-4o and replaced it with GPT-5 as the primary model. Users who expected to access ChatGPT-4o suddenly found it unavailable or hidden behind advanced settings. The transition happened with minimal advance notice, sparking the #keep4o campaign in which users demanded restoration of the previous model.

Why do users prefer ChatGPT-4o over GPT-5?

Users report that ChatGPT-4o excelled at understanding context and nuance, maintaining consistency across multiple interactions, and providing reasoning-focused responses without unnecessary verbosity. GPT-5 is faster and better at certain benchmark tasks, but for specific applications like creative writing, customer service, and complex reasoning, some users find 4o superior. The preference is often domain-specific rather than universal.

Is GPT-5 objectively better than ChatGPT-4o?

No. GPT-5 and ChatGPT-4o are different models optimized for different things. GPT-5 shows improvement on standardized benchmarks, has faster response times, and handles broad knowledge tasks well. ChatGPT-4o, however, excelled at subtle reasoning, consistency, and understanding implicit context. Which model is better depends on your specific use case. For some applications, GPT-5 is clearly superior. For others, 4o was preferable.

Why did OpenAI make this decision?

OpenAI likely deprecated ChatGPT-4o to reduce infrastructure costs, simplify its product offerings, accelerate innovation velocity, and push users toward the latest model. From a business perspective, maintaining multiple model versions requires resources and creates technical complexity. Consolidation makes sense from an operational standpoint, even if users found the transition painful.

Can I still access ChatGPT-4o?

ChatGPT Plus and Pro subscribers can access ChatGPT-4o through advanced model settings, though it requires navigating menus rather than being a default option. Free-tier users don't have this option. Some users have turned to third-party services as workarounds, but official access is limited compared to before the deprecation.

Will OpenAI bring back ChatGPT-4o?

OpenAI has not announced plans to bring ChatGPT-4o back as a primary offering. However, the company has committed to better communication around future model changes and acknowledged that the transition was handled poorly. The #keep4o campaign demonstrated real user demand, so future decisions could lead to offering model version choices, but there's no official indication this will happen.

What does the #keep4o campaign want?

The campaign primarily calls for restoring ChatGPT-4o as an available option. Supporters argue that users should have a choice about which model they use rather than being forced to upgrade to GPT-5. Some want 4o to remain a default option; others would accept it as an optional model choice. The core request is simple: give users control over their AI experience.

How does this affect other AI platforms?

The ChatGPT-4o situation has influenced how competitors position their products. Anthropic's Claude, for instance, emphasizes model choice and version stability. Smaller AI platforms have highlighted their approach to backwards compatibility and user continuity. The incident demonstrated that user loyalty extends to specific model versions, not just to companies. This creates a competitive opportunity for platforms that prioritize user agency in model selection.

Will this happen again with future models?

Likely yes, unless the industry establishes better practices around model versioning and deprecation. As AI becomes more embedded in workflows, users will become increasingly protective of specific models and versions. Companies face a choice: handle transitions thoughtfully and build loyalty, or deprecate abruptly and suffer backlash. The ChatGPT-4o situation provides a clear template for what not to do.

What can users do to prepare for future model changes?

Users should document the specific models and versions they depend on, understanding what makes them effective for particular workflows. Build redundancy into systems so they're not dependent on a single model version. Stay informed about company roadmaps and deprecation announcements. Consider diversifying across multiple platforms so you're not entirely dependent on one company's product strategy. Most importantly, provide feedback to companies about model deprecations. User demand does influence corporate decisions.
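The "build redundancy" advice can be as simple as an ordered fallback list: try the preferred model first and move to the next one when a call fails. This is a minimal sketch; the callables and the `ModelUnavailable` exception are hypothetical placeholders for whatever client library you actually use.

```python
from typing import Callable, Sequence


class ModelUnavailable(Exception):
    """Raised by a (hypothetical) client when a model cannot be reached."""


def ask_with_fallback(prompt: str,
                      models: Sequence[tuple[str, Callable[[str], str]]]) -> tuple[str, str]:
    """Try each (name, call) in order; return (name, response) of the first success."""
    errors = []
    for name, call in models:
        try:
            return name, call(prompt)
        except ModelUnavailable as exc:
            errors.append(f"{name}: {exc}")
    raise RuntimeError("all models failed: " + "; ".join(errors))


def retired_model(prompt: str) -> str:
    # Simulates a model that has been deprecated.
    raise ModelUnavailable("model has been retired")


def backup_model(prompt: str) -> str:
    return f"answer to: {prompt}"


print(ask_with_fallback("hello", [("preferred", retired_model), ("backup", backup_model)]))
```

Returning the name of the model that answered makes it easy to log when a fallback kicks in, which is often the first sign that a deprecation has landed.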

The Path Forward: Building Trust in AI Product Management

The ChatGPT-4o deprecation is ultimately a story about trust. Users trusted that they could depend on a model. OpenAI changed that unilaterally. The trust broke, and the #keep4o campaign was the result.

For OpenAI to rebuild trust, it needs to demonstrate that it listens to users and values continuity. For the broader AI industry to avoid similar situations, companies need to adopt better practices around model versioning, communication, and user autonomy.

The technology world often celebrates disruption and rapid innovation. But users, especially those who've built workflows and expertise around specific tools, value stability and continuity. The companies that figure out how to balance innovation with user respect will win long-term loyalty and avoid preventable PR crises.

ChatGPT-4o might not come back. But the lessons from its deprecation should stick around. They're too important to ignore.

Key Takeaways

The ChatGPT-4o shutdown represents a turning point in how we should think about AI product lifecycle management. Users developed genuine dependence on specific model behaviors and built workflows around them. When OpenAI suddenly removed access without adequate transition planning or communication, it created a crisis that could easily have been prevented. GPT-5 isn't universally better than 4o; they're different models optimized for different use cases. Smart product strategy would offer version choices rather than forcing transitions. The #keep4o campaign demonstrated that user demand is real and that companies ignoring it suffer reputational damage. Most importantly, as AI becomes more integral to work and life, how companies handle model deprecation will increasingly influence user loyalty and competitive positioning.

