ChatGPT Age Prediction: How AI Protects Minors Online

In December 2024, OpenAI rolled out one of its most ambitious safety features yet: an age prediction system designed to automatically identify underage users and restrict their access to sensitive content on ChatGPT. Why does this matter? Teenagers already use AI chatbots extensively, often without parental supervision or platform restrictions. Now the company is taking a technical approach to a problem that has been brewing for years.

This move comes at a critical moment. The rise of AI chatbots has coincided with growing concerns about how these tools might harm young people. We've seen lawsuits related to teen mental health, congressional hearings questioning whether chatbots are safe for minors, and widespread worry from parents and educators about what kids are actually accessing online. OpenAI's age prediction system represents a significant pivot toward proactive protection rather than reactive moderation.

But here's the thing: age prediction on the internet is notoriously difficult. You can lie about your age during signup in about two seconds. So how does OpenAI's system work? And more importantly, does it actually protect minors, or does it just create a false sense of security? Let's dig into what this technology does, how effective it might be, and what it means for the future of AI safety.

TL;DR

- OpenAI implemented age prediction: ChatGPT now uses behavioral and account-level signals to identify users under 18.

- Sensitive content is restricted: Minors see limitations on violence, sexual content, self-harm material, and harmful challenges.

- Global rollout underway: The feature is available almost everywhere except the EU, where regional requirements caused delays.

- Adults can verify their age: Misidentified users can restore full access by submitting a selfie to confirm their age.

- Legal pressure drove this: Lawsuits and Senate scrutiny over teen safety forced the company to take action.

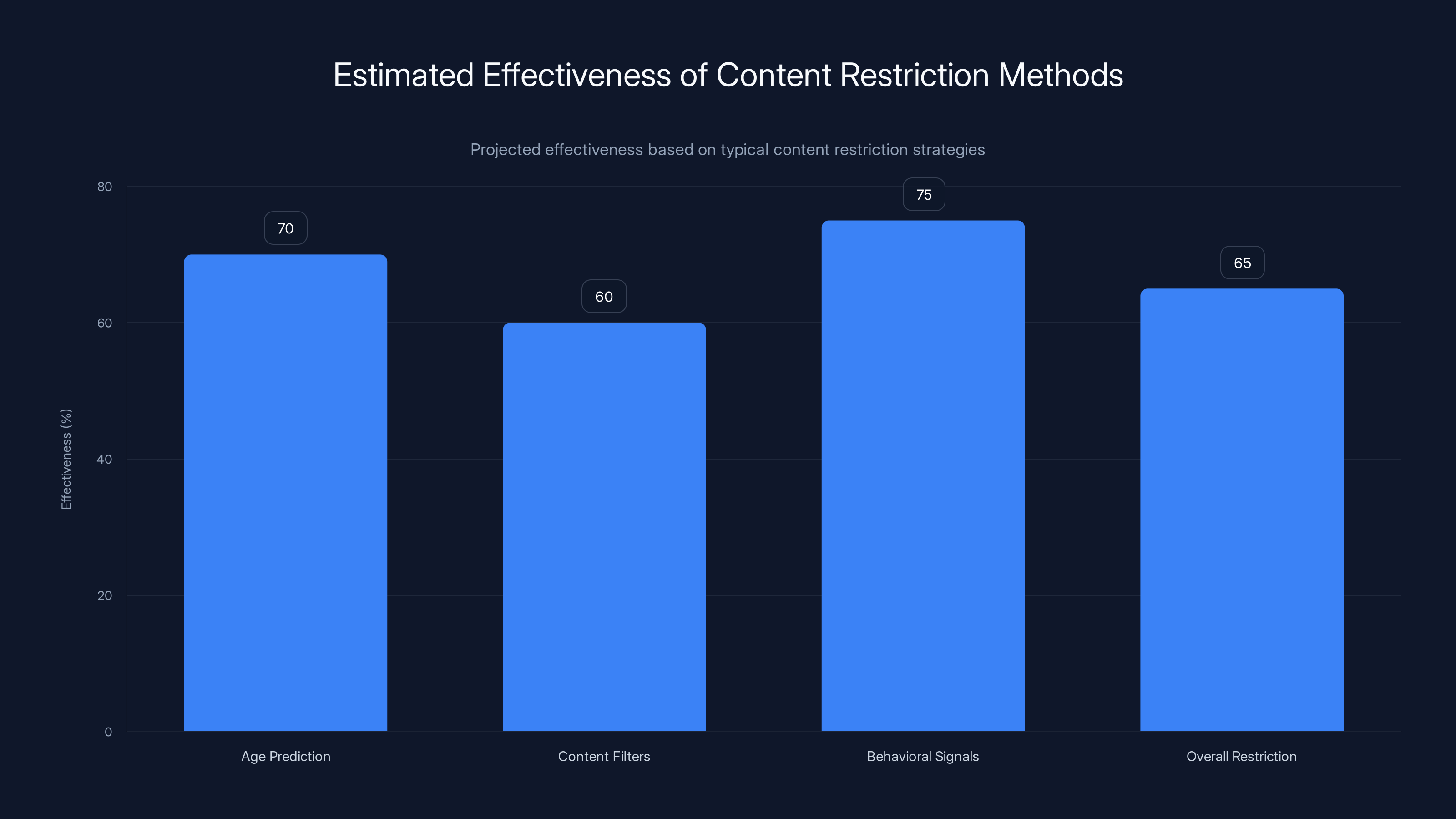

Estimated data suggests age prediction and behavioral signals are more effective than content filters alone. Overall, these methods likely reduce exposure to harmful content for minors, but effectiveness varies by method.

How Age Prediction Actually Works: The Technical Foundation

Let's start with the core question: how does ChatGPT figure out if you're a teenager? The answer isn't magic. It's pattern recognition at scale.

OpenAI's age prediction model doesn't rely on a single data point. Instead, it examines what the company calls "behavioral and account-level signals." Think of this as an algorithm that looks at multiple pieces of evidence simultaneously, then makes a probabilistic judgment about your age.

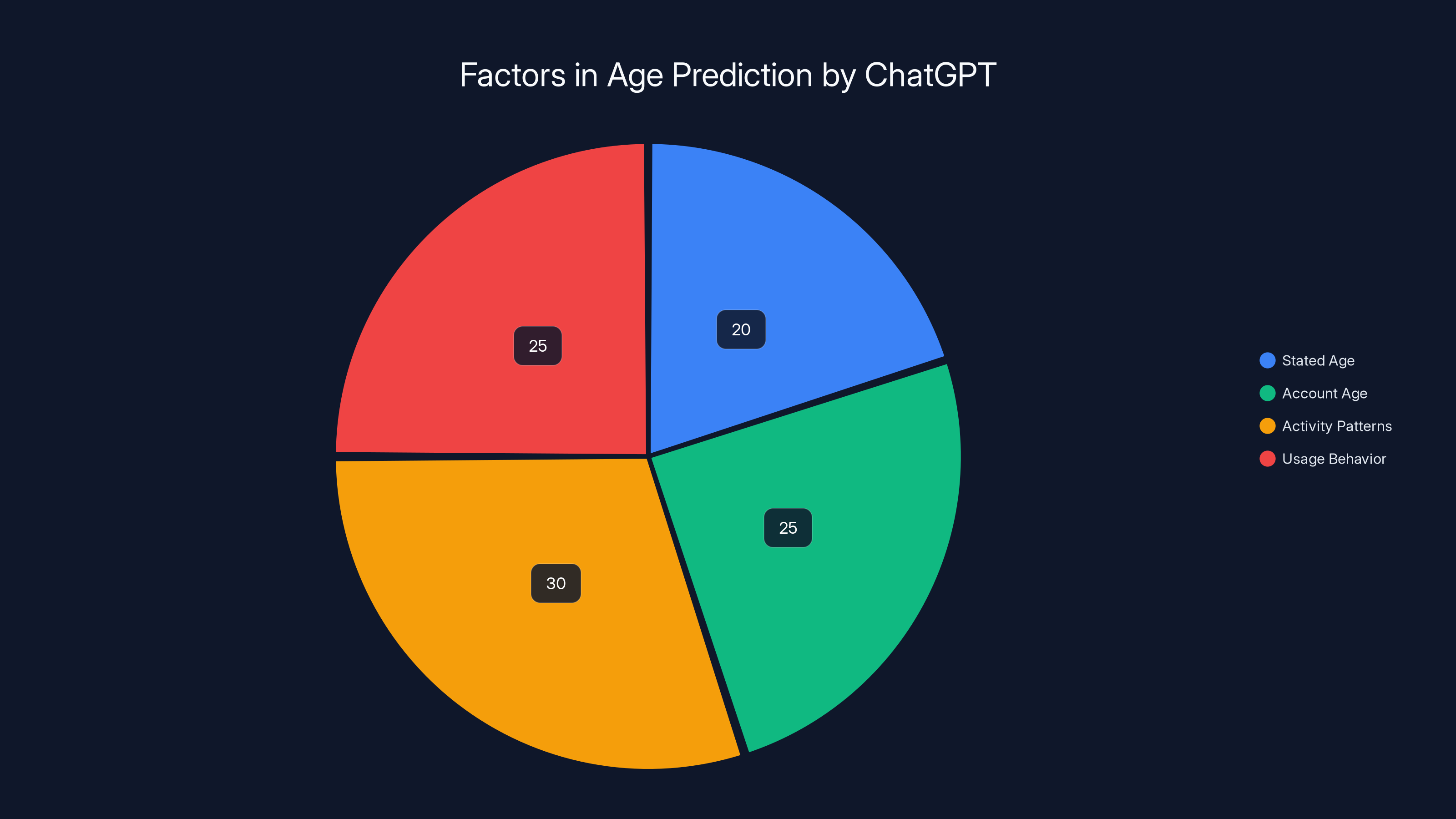

The primary signals include your stated age during account creation, how long your account has existed, your activity patterns throughout the day and week, and how you actually use ChatGPT over time. This last point is crucial. A 14-year-old's usage patterns probably differ significantly from a 45-year-old's patterns. Teenagers might ask more questions about school, social relationships, or pop culture. They might be active at different times of day. The algorithm learns to recognize these patterns.

But here's where it gets interesting: the system doesn't just look at how you use ChatGPT. Account age matters too. If you created your account yesterday and claimed to be 47, but your usage pattern screams teenager, the algorithm weighs these factors against each other. Newer accounts with suspicious patterns get more scrutiny. Older accounts with consistent usage history get more trust.
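To make the idea of weighing signals against each other concrete, here is a minimal sketch of a logistic score over a few signals. It is not OpenAI's model: the `AccountSignals` fields, every weight, and the cap on account-age trust are assumptions invented purely for illustration.

```python
import math
from dataclasses import dataclass


@dataclass
class AccountSignals:
    """Hypothetical signals; the field names are illustrative, not a real schema."""
    stated_age: int             # age claimed at signup
    account_age_days: int       # how long the account has existed
    school_topic_share: float   # share of chats about school-like topics, 0..1
    after_school_share: float   # share of activity in after-school hours, 0..1


# Made-up weights. A real system would learn these from labeled data.
BIAS = -2.5
W_STATED_MINOR = 3.0
W_SCHOOL = 2.5
W_AFTER_SCHOOL = 1.5
W_TRUST_PER_YEAR = 0.5
MAX_TRUST_YEARS = 4.0


def minor_probability(s: AccountSignals) -> float:
    """Combine the signals into a probability that the user is under 18."""
    stated_minor = 1.0 if s.stated_age < 18 else 0.0
    # Older accounts earn more trust, up to a cap.
    years = min(s.account_age_days / 365.0, MAX_TRUST_YEARS)
    z = (
        BIAS
        + W_STATED_MINOR * stated_minor
        + W_SCHOOL * s.school_topic_share
        + W_AFTER_SCHOOL * s.after_school_share
        - W_TRUST_PER_YEAR * years
    )
    return 1.0 / (1.0 + math.exp(-z))  # logistic squashing to the range 0..1


# A day-old account claiming to be 47 with teen-like usage,
# versus a four-year-old account with the same usage.
new_account = AccountSignals(47, 1, 0.9, 0.9)
old_account = AccountSignals(47, 1460, 0.9, 0.9)
print(minor_probability(new_account), minor_probability(old_account))
```

With these invented weights, the day-old account scores noticeably higher than the four-year-old one despite identical behavior, which mirrors the "newer accounts get more scrutiny" intuition.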

The behavioral signals are particularly important because they're harder to fake than demographic data. You can change your birthday in your profile. You can't easily fake months of consistent usage patterns. Well, technically you could with a bot, but that's a different problem entirely.

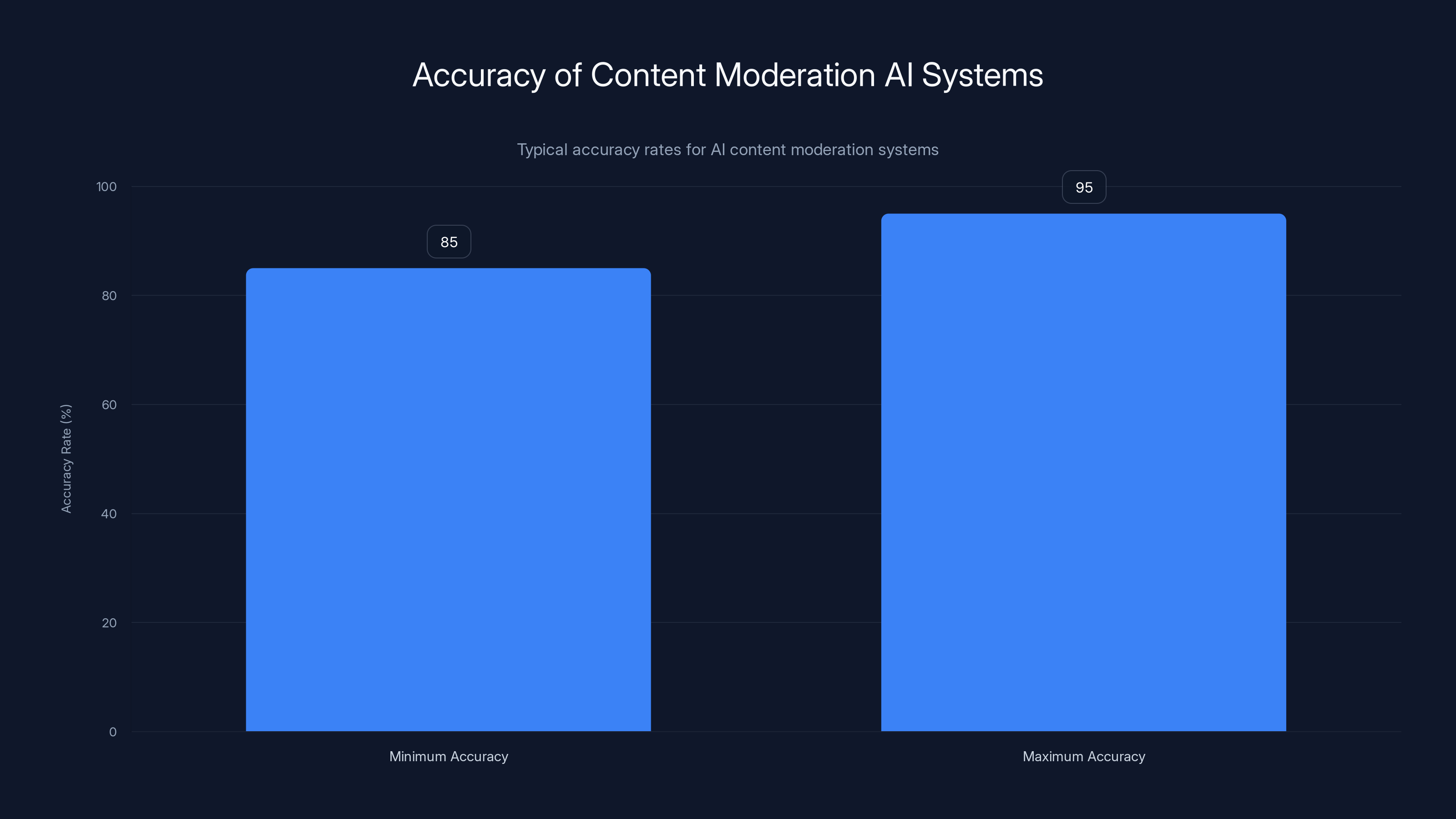

OpenAI hasn't released the exact accuracy rates for its age prediction model, which is probably intentional. If they said it was 85% accurate, people would focus on the 15% it gets wrong. If they said it was 98% accurate, skeptics would ask which 2% slip through and why. The company is being strategically vague here, which is frustrating but understandable from a corporate perspective.

One important nuance: the system isn't perfect, and OpenAI knows it. That's why they built in a verification mechanism. If an adult gets misidentified as a minor, they can restore their access by submitting a selfie to prove their age. This creates a safety valve: a way to correct false positives without permanent friction.
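The safety valve can be pictured as a simple override on top of the prediction. The sketch below is an assumption about how such a policy could be wired together; the `Access` enum, the `access_level` function, and the 0.5 threshold are all hypothetical.

```python
from enum import Enum


class Access(Enum):
    FULL = "full"
    RESTRICTED = "restricted"


def access_level(p_minor: float, verified_adult: bool, threshold: float = 0.5) -> Access:
    """Pick an access tier from a predicted minor probability.

    A successful age verification overrides the prediction, so an adult
    who was misclassified is not stuck with restrictions.
    """
    if verified_adult:
        return Access.FULL
    return Access.RESTRICTED if p_minor >= threshold else Access.FULL


# An adult flagged by the model regains access once verified.
print(access_level(0.8, verified_adult=False))
print(access_level(0.8, verified_adult=True))
```

The design choice worth noticing is that verification only ever moves a user toward full access; it never adds restrictions.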

Estimated data showing the relative importance of different signals in age prediction. Activity patterns and usage behavior are crucial because they are hard to fake.

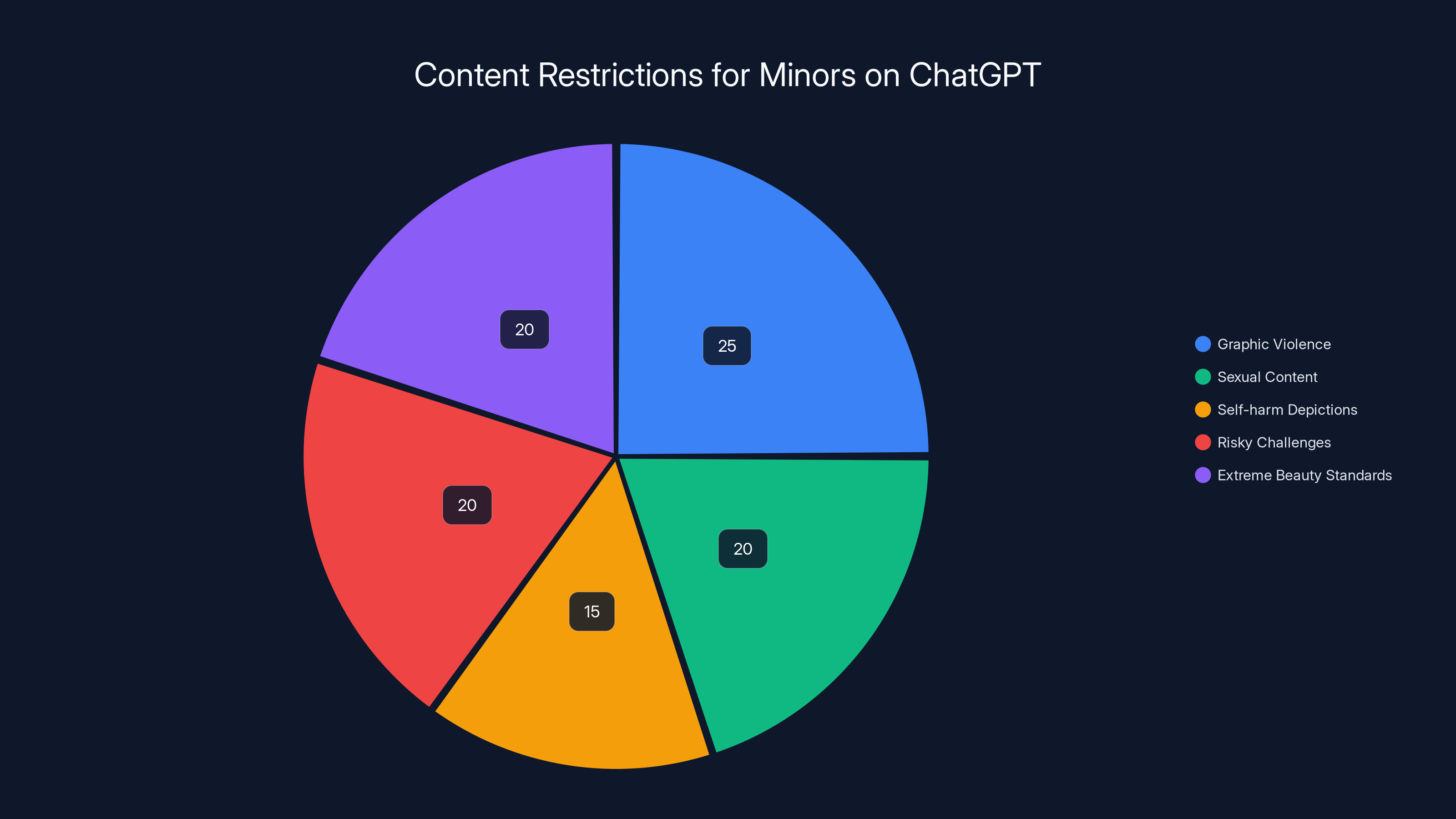

What Content Actually Gets Restricted for Minors

Once ChatGPT's system flags someone as under 18, restrictions kick in immediately. But what exactly can't teenagers see or ask about?

The restricted categories include graphic violence or gory content, viral challenges that might encourage risky behavior, sexual or romantic roleplay, depictions of self-harm, and content promoting extreme beauty standards or unhealthy dieting. On the surface, these restrictions seem straightforward. In practice, they're far more complex.

Consider graphic violence. What counts as "graphic"? If a teenager asks ChatGPT to describe a scene from their favorite R-rated movie, does that violate the policy? What about asking for historical details about wars or atrocities? Where's the line between educational content and inappropriate gore? The algorithm has to make these judgment calls thousands of times per day, and context matters enormously.

The sexual content restriction is similarly nuanced. ChatGPT restricts sexual roleplay for minors, which makes sense. But what about teenagers asking legitimate questions about sex education, consent, STIs, or relationships? These are important topics that young people need information about. The restriction presumably applies to explicit roleplay scenarios rather than educational questions, but the system has to distinguish between them in real time.

The self-harm restriction is where this gets genuinely difficult. A teenager dealing with depression might ask about self-harm prevention, therapy options, or crisis hotlines. These are helpful requests. A different teenager might ask ChatGPT for methods or encouragement. The system needs to allow the first while preventing the second. That's an incredibly fine line to walk.

Beauty standards and unhealthy dieting restrictions touch on another serious problem: eating disorders disproportionately affect teenagers, especially girls. Restricting content that promotes extreme dieting or body shaming could actually save lives. But teenagers also need access to information about nutrition, exercise, and body positivity.

The practical reality is that ChatGPT's content filters for minors are probabilistic and imperfect. Some inappropriate content will probably get through. Some legitimate educational content will probably get blocked. It's a trade-off between safety and access, and reasonable people disagree about where that line should be.

Why OpenAI Felt Pressured to Build This

Content restrictions don't materialize out of nowhere. They emerge from crisis, controversy, and legal pressure. OpenAI's age prediction system is no exception.

In 2024, ChatGPT became the focus of a wrongful death lawsuit. A teenager's family claimed that ChatGPT contributed to their child's suicide by providing encouragement and detailed methods. The suit alleged that the chatbot didn't properly protect minors from harmful content and that OpenAI was negligent in failing to implement safeguards. Whether this specific lawsuit succeeds or fails, it signals to the entire tech industry that the stakes are rising.

Simultaneously, Congress started paying attention. Senate panels held hearings about AI chatbots and their potential effects on minors. Lawmakers expressed concerns that these tools lacked age-appropriate protections. Senators and representatives started asking: why don't tech companies know who's using their products? Why can't they implement age-appropriate restrictions? When will you protect children?

These aren't abstract policy questions. They translate directly into regulatory risk. If Congress passes legislation mandating age verification for AI tools, companies want to show they've already taken action. It demonstrates good faith and reduces the likelihood of being forced into more draconian measures later.

There's also the social pressure angle. Parents are worried. Educators are concerned. Mental health professionals see rising rates of anxiety and depression among teenagers and wonder what role AI chatbots might play. These aren't irrational fears. Teenagers have literally died by suicide after interactions with AI tools. Whether the tool caused the suicide or simply failed to prevent it is a meaningful distinction legally, but it doesn't matter much to grieving families.

OpenAI, as the company behind the first consumer-facing large language model product to become a household name, bears the brunt of this scrutiny. When people talk about "AI safety" in the context of minors, they're often talking about ChatGPT specifically. The company has incentives to show that it's taking the problem seriously.

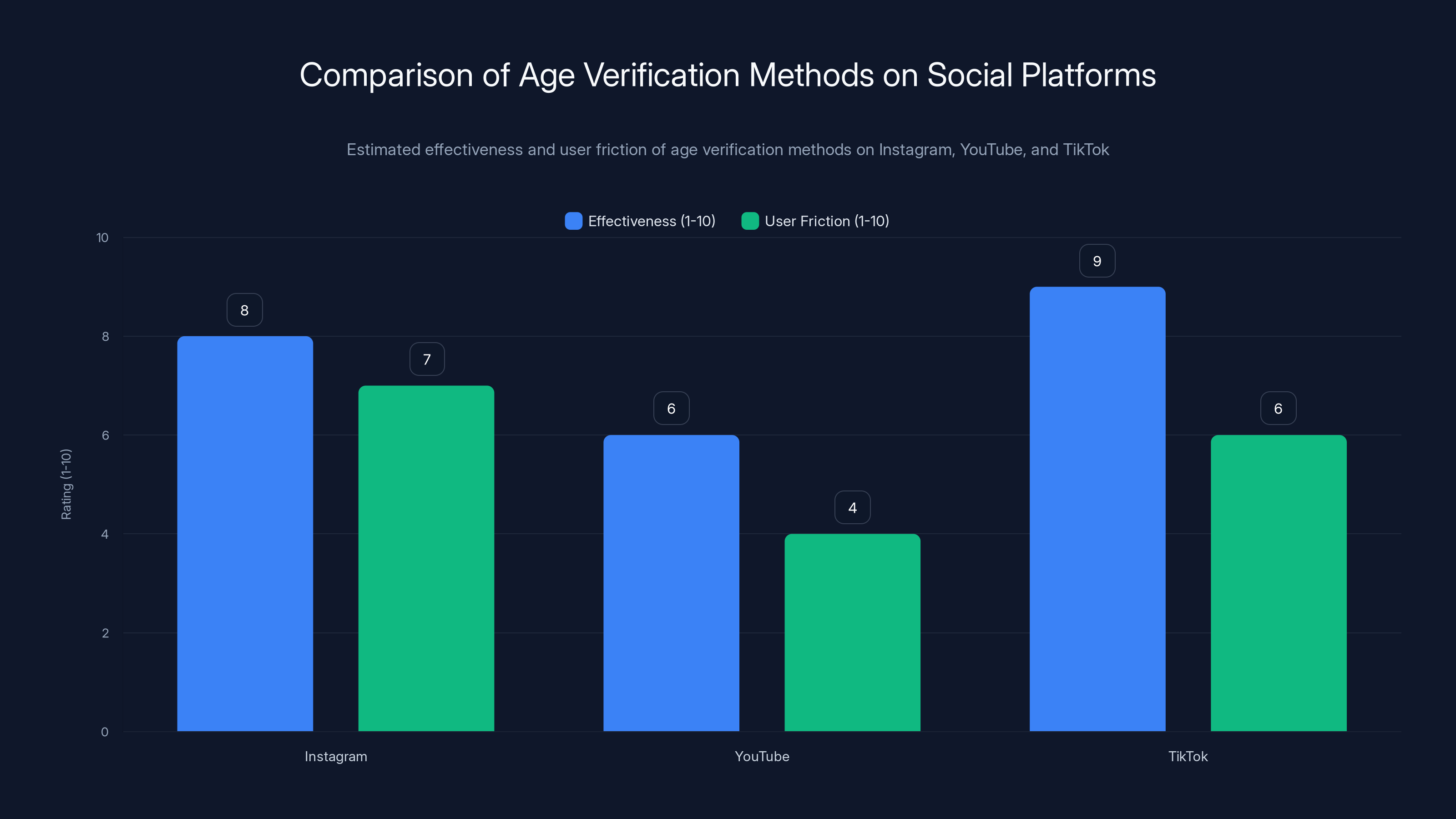

Instagram's government ID verification is highly effective but causes significant user friction. TikTok's strict controls are effective with moderate friction, while YouTube's approach is less effective but user-friendly. Estimated data.

The Global Rollout Strategy and Regional Differences

OpenAI isn't implementing age prediction uniformly worldwide. Instead, the company is taking a region-by-region approach that reflects different regulatory environments and cultural expectations.

The rollout is happening "globally" with one major exception: the European Union. OpenAI explicitly stated that the EU would get the feature "in the coming weeks to account for regional requirements." This suggests that European regulators have different expectations than other jurisdictions.

Why the delay? The EU's Digital Services Act and General Data Protection Regulation create strict requirements around data processing, especially for minors. GDPR requires companies to have a legal basis for collecting personal data, and special protections apply to children: the default age of digital consent is 16, though member states may lower it to as low as 13. Age prediction involves processing behavioral data and account information to infer age, so it counts as data processing under these rules.

The EU is also home to a regulatory philosophy that emphasizes precaution. The idea is: if you're not sure something is safe, don't do it until you are sure. This contrasts with the US approach of "move fast and break things." For a feature that involves identifying minors, EU regulators probably want extensive documentation about accuracy, privacy safeguards, and potential harms before approving the rollout.

The staggered global rollout also suggests that OpenAI is watching for problems as the feature rolls out. If the age prediction system has unexpected issues, such as blocking too much legitimate content or misidentifying adults as minors at high rates, the company wants to catch those problems before they affect its entire user base. A phased rollout gives them time to iterate and improve.

This approach isn't unique. Major tech companies routinely roll out features to specific regions first, gather data about how they're working, then expand. It's a pragmatic strategy that balances speed with caution.

Comparison: How Other Platforms Handle Age Verification and Content Restriction

OpenAI didn't invent age prediction or content restriction for minors. Other platforms have been grappling with these problems for years. Let's see what lessons they offer.

Instagram and Meta's Approach

Meta has been investing heavily in age estimation for years. Instagram uses machine learning to estimate user ages based on account data, but it also has a much more direct approach: it asks users to verify their age with government ID. If you dispute Instagram's age estimate, or if Instagram determines you might be underage, you're directed to verify your actual age.

The advantage of this approach is accuracy. A government ID is definitely your real age. The disadvantage is friction. Users hate uploading ID documents. They worry about privacy. Meta has had to deal with significant pushback about its ID verification process.

YouTube and Age-Appropriate Restrictions

YouTube restricts access to age-restricted content for users who claim to be underage. But YouTube's approach is gentler than you might expect. If you claim to be underage but try to access restricted content, YouTube doesn't block you permanently. Instead, it asks you to sign in with a grown-up's account. This acknowledges the reality that teenagers access mature content but tries to create at least a small friction point.

The system isn't perfect. Teenagers lie about their age during signup constantly. YouTube knows this happens and has largely accepted it as inevitable.

TikTok's Aggressive Approach

TikTok restricts features for underage users more aggressively than other platforms. Users under 18 get a "Family Pairing" feature that lets parents monitor and control what their child sees. TikTok also uses behavioral signals and account data to estimate age, similar to what OpenAI is doing with ChatGPT.

TikTok's approach is stricter partly because the platform became the focus of significant government scrutiny around protecting minors. The company has incentives to demonstrate that it's taking child safety seriously.

Roblox's Verification System

Roblox, which caters heavily to children, requires age verification during account creation. Users provide their date of birth, and accounts are flagged as child accounts if the user is under 13. This triggers different restrictions for COPPA compliance.

Roblox has had mixed results with this approach. The system works reasonably well, but adult players sometimes verify as underage to access restricted content, and children sometimes create accounts with fake ages to bypass restrictions. No system is perfect.

Estimated data shows that ChatGPT restricts various content categories for minors, with graphic violence and risky challenges being significant areas of concern.

The Age Verification Process: How Adults Can Restore Full Access

One of the most important features of OpenAI's system is the way it handles false positives. If the algorithm incorrectly identifies you as underage, you're not stuck forever. You can restore your full access.

The process is straightforward: submit a selfie. OpenAI uses this image to verify that you're actually an adult. The company doesn't specify exactly how this works, but presumably it runs age estimation on the facial features visible in the image and compares the result to any other available data points.

This raises some obvious concerns. First, facial age estimation is a technology with known bias problems. Studies have shown that age estimation models tend to be less accurate for people with darker skin tones. If you're a young-looking adult from certain demographic backgrounds, you might have trouble verifying your age through a selfie.

Second, there's the privacy question. You're now giving OpenAI a photo of your face to prove your age. What happens to that image? Does the company store it? Delete it immediately after verification? Use it to train its facial recognition models? OpenAI hasn't provided detailed privacy documentation about this process.

Third, there's an interesting incentive question. If you know you can always restore access by submitting a selfie, does that undermine the entire system? Teenagers could theoretically use a photo of an older sibling or parent to verify their age and gain access to restricted content. OpenAI has presumably thought about this (facial recognition can attempt to verify that the person in the photo matches the person using the account), but it's not bulletproof.

The company probably treats this as an acceptable risk. Perfect security is impossible. The goal is to make circumvention difficult and inconvenient enough that most teenagers won't bother, while still maintaining some protection for the majority.

Content Moderation at Scale: The Technical Challenges

Implementing these age restrictions across millions of ChatGPT conversations involves some genuinely complex technical problems.

First, there's the latency issue. When a user sends a message, ChatGPT needs to determine their age category, then apply the appropriate content filters, all in real time. This needs to happen fast enough that users don't notice any delay. A few hundred milliseconds of extra latency might not sound like much, but it's noticeable and frustrating. OpenAI's infrastructure is sophisticated enough to handle this, but it's not trivial.

Second, there's the false positive problem. Content filters are notoriously prone to false positives. They block legitimate content and allow inappropriate content through. The system needs to maintain a balance between safety and usefulness. Too restrictive, and minors can't get legitimate information. Too permissive, and the restrictions are meaningless.

OpenAI has probably addressed this with a combination of techniques. One likely technique is layered detection. A query goes through several different classifiers, and the system only applies restrictions if multiple classifiers flag the same content. This reduces false positives because it's unlikely that multiple independent classifiers will all incorrectly flag the same legitimate question.
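The arithmetic behind layered detection is worth seeing. The sketch below assumes the classifiers make fully independent errors, which is optimistic because real classifiers trained on similar data tend to fail on the same inputs. The function names and the example rates are illustrative, not measurements from any real system.

```python
import math
from typing import Sequence


def restrict(flags: Sequence[bool]) -> bool:
    """Apply a restriction only when every classifier flags the query."""
    return all(flags)


def joint_false_positive_rate(fp_rates: Sequence[float]) -> float:
    """Chance that all classifiers wrongly flag a harmless query,
    assuming their errors are independent."""
    return math.prod(fp_rates)


def joint_miss_rate(recalls: Sequence[float]) -> float:
    """Chance that at least one classifier misses a harmful query,
    so the unanimous rule lets it through (independence assumed)."""
    return 1.0 - math.prod(recalls)


# Three hypothetical classifiers, each with a 5% false positive rate
# and each catching 90% of truly harmful queries.
print(joint_false_positive_rate([0.05, 0.05, 0.05]))
print(joint_miss_rate([0.9, 0.9, 0.9]))
```

Under these assumptions, requiring agreement drives false positives down sharply, but the chance of missing harmful content rises, because any single classifier's miss is enough to let the query through.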

Another likely technique is confidence thresholds. If a classifier is only 60% confident that a query violates the restrictions, it doesn't block the content. Only high-confidence violations are actually restricted. This biases the system toward false negatives (allowing bad content through) rather than false positives (blocking good content).
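A small sketch shows how moving the threshold trades one kind of error for the other. The scored queries below are fabricated toy data, and `count_errors` is a hypothetical helper, not part of any real moderation API.

```python
from typing import List, Tuple

# Each entry: (classifier confidence that the query is harmful, ground truth).
# Toy data invented for this example.
SCORED_QUERIES: List[Tuple[float, bool]] = [
    (0.95, True),
    (0.60, False),
    (0.70, True),
    (0.30, False),
    (0.92, False),
    (0.55, True),
]


def count_errors(scored: List[Tuple[float, bool]], threshold: float) -> Tuple[int, int]:
    """Return (false_positives, false_negatives) when blocking at `threshold`."""
    false_positives = sum(1 for conf, harmful in scored if conf >= threshold and not harmful)
    false_negatives = sum(1 for conf, harmful in scored if conf < threshold and harmful)
    return false_positives, false_negatives


for threshold in (0.5, 0.9):
    fp, fn = count_errors(SCORED_QUERIES, threshold)
    print(f"threshold={threshold}: false positives={fp}, false negatives={fn}")
```

On this toy data, raising the threshold from 0.5 to 0.9 cuts wrongly blocked queries but lets more harmful ones through, which is the bias toward false negatives described above.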

Third, there's the problem of context. A query asking for information about cutting-edge surgical techniques might include the word "cut." A query asking for creative writing assistance might mention violence. The system needs to understand context deeply enough to distinguish between these scenarios.

OpenAI's large language models are actually pretty good at this. GPT-4 and subsequent models understand context better than previous generations. But understanding context and making moderation decisions about that context are two different problems.

Content moderation AI systems typically achieve accuracy rates between 85% and 95%, meaning 50-150 errors per 1,000 decisions. Estimated data.
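The conversion from accuracy to error counts in the caption above is simple arithmetic, shown here so it can be rerun at other scales. The one-million-decisions figure is a hypothetical volume chosen for illustration, not a reported number.

```python
def expected_errors(accuracy: float, decisions: int) -> int:
    """Expected number of wrong decisions at a given accuracy."""
    return round((1.0 - accuracy) * decisions)


for accuracy in (0.85, 0.95):
    per_thousand = expected_errors(accuracy, 1_000)
    per_million = expected_errors(accuracy, 1_000_000)
    print(f"accuracy={accuracy:.0%}: {per_thousand} per 1,000, {per_million} per 1,000,000")
```

Even at the high end of that accuracy range, errors add up quickly once decision volume grows.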

Privacy Considerations: What Data Gets Collected and Stored

Age prediction necessarily involves collecting and analyzing data about user behavior. This raises legitimate privacy concerns.

At minimum, OpenAI is analyzing your account creation date, your activity timestamps, your usage patterns over time, and the types of questions you ask. This data is valuable for determining your age. It's also potentially valuable for building detailed psychological and behavioral profiles of users.

The question is: does OpenAI retain this behavioral data after making the age determination? Or does it just use it in the moment and discard it? The company hasn't published a detailed privacy policy that answers this question specifically.

OpenAI does have a general privacy policy that states it processes data according to its terms of service and applicable laws. For users outside the EU, that's a pretty broad license. For users in the EU, GDPR provides stronger protections. But the specifics about behavioral data collection for age prediction aren't clear from publicly available documents.

There's also the question of data access. Who at OpenAI can see the behavioral signals used for age prediction? Are they accessible to customer support staff? Are they used for training models? Are they sold to third parties? These details matter a lot.

Potential Loopholes and How Determined Teenagers Might Bypass the System

Here's the uncomfortable truth: determined teenagers will probably find ways to bypass age restrictions. Not all of them. Most teenagers probably won't bother. But motivated teenagers can be creative.

The most obvious workaround is simple age fraud. If you're 16 and you want unrestricted access to ChatGPT, you can just claim to be 23. The initial age prediction system might not catch this, especially if your account has existed for a while and your usage patterns are inconsistent enough to be ambiguous.

A more sophisticated approach would involve mimicking adult behavior patterns. Ask questions about mortgages and tax deductions. Be active at times when adults typically use ChatGPT. Avoid questions about school or social relationships. The algorithm would find less evidence that you're underage and might classify you as an adult.

Another potential workaround involves using shared accounts. If a parent, older sibling, or friend has an adult ChatGPT account, you could just use their login credentials. OpenAI might flag this as suspicious if the usage patterns suddenly change, but it depends on how sophisticated the system is.

The most determined teenagers could use VPNs or proxies to mask their location and IP address. This doesn't directly bypass age restrictions, but it could prevent the system from correlating different devices to the same person, which might affect age predictions.

None of these workarounds is foolproof, and OpenAI has presumably thought about most of them. The company probably has multiple layers of detection designed to catch suspicious patterns. But the fundamental truth about age restrictions on the internet is that they're advisory, not absolute. Determined users can usually find ways around them.

This doesn't mean the age prediction system is pointless. It probably reduces access to harmful content for most teenagers. It just means that anyone who really wants unrestricted access can probably get it with enough effort.

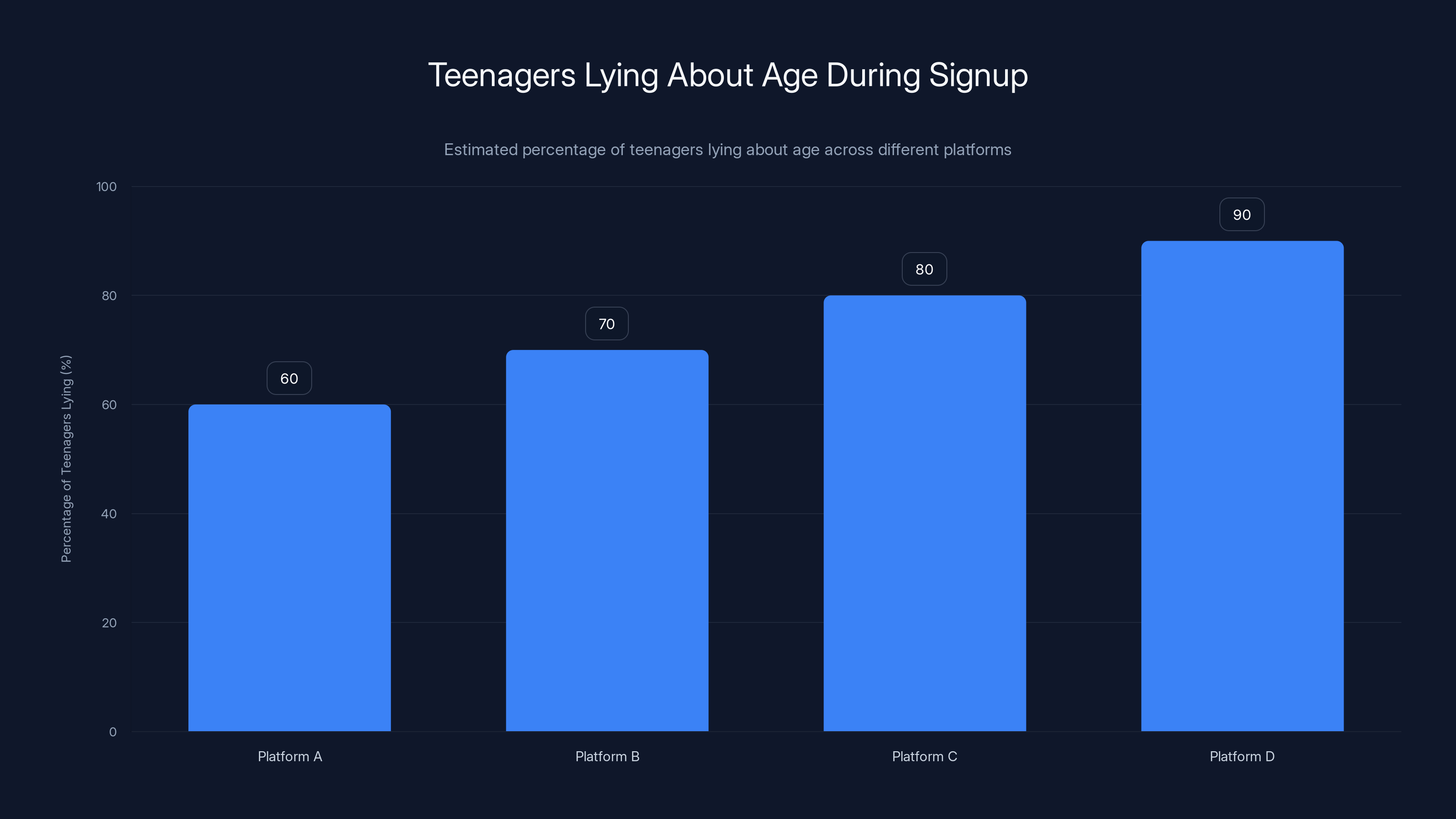

Estimated data shows that 60-90% of teenagers lie about their age during signup, depending on the platform. This highlights the insufficiency of traditional age gating systems.

Mental Health Implications: Could Restricted Content Actually Be Harmful?

Here's a contrarian question worth considering: could restricting content for minors actually increase harm in some cases?

Consider a teenager dealing with depression or anxiety. They want to talk to ChatGPT about their feelings, their struggles, and ways they might harm themselves. If the system restricts this conversation, what happens? The teenager might think ChatGPT just doesn't want to help. They might turn to different resources that are actually less safe, like anonymous forums where no one is watching for warning signs. They might give up on seeking help entirely.

Alternatively, imagine a teenager who wants to understand why they have certain thoughts or urges. They want to talk through things in a low-stakes environment before opening up to a therapist. If ChatGPT refuses to engage, they lose that opportunity for reflection and growth.

The counterargument is that ChatGPT is not a therapist and shouldn't pretend to be one. If a teenager is experiencing a mental health crisis, they should talk to a qualified mental health professional, not an AI chatbot. And there's truth to this. ChatGPT is not equipped to provide crisis counseling.

The evidence on this is mixed. Some research suggests that online peer support communities and educational resources about mental health reduce self-harm behavior. Other research suggests that exposure to self-harm content increases it. The truth probably depends on context, individual vulnerability, and the quality of the content.

OpenAI's approach seems to err on the side of caution: assume that restricting access is safer than allowing access. From a liability perspective, this makes sense. From a harm-reduction perspective, it's less clear.

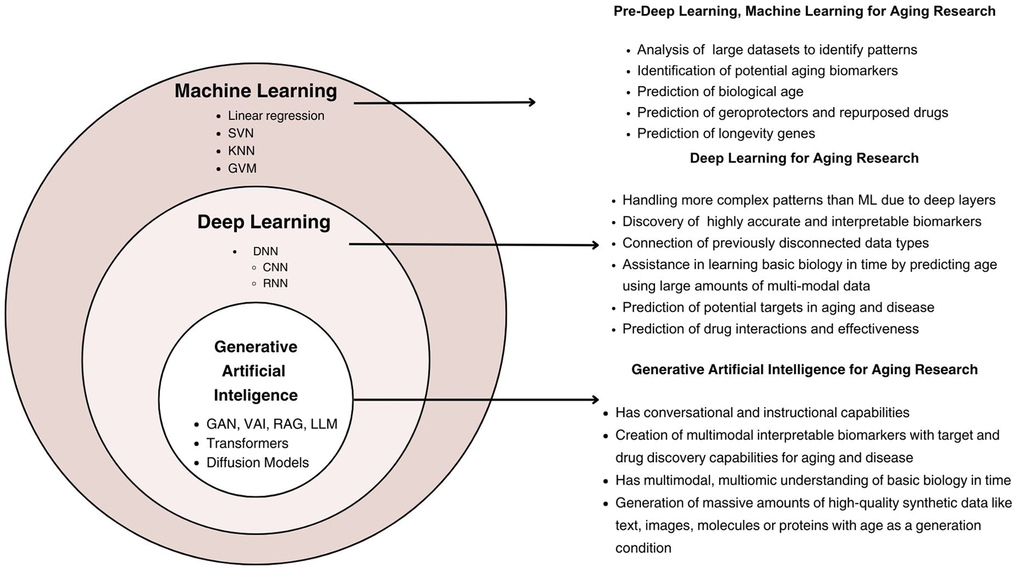

The Bigger Picture: AI Safety for Minors

Age prediction on ChatGPT is one piece of a much larger puzzle: how do we keep artificial intelligence safe for young people?

The challenge is that AI systems are designed with adults in mind. The training data is mostly from adults. The use cases that shaped development were mostly adult use cases. Teenagers have different developmental needs, different vulnerabilities, and different ways of interacting with technology.

OpenAI's approach is essentially to retrofit safety features onto an existing system. This is better than nothing, but it's not ideal. A better approach might be to design separate AI systems specifically for younger users, with age-appropriate capabilities and restrictions built in from the ground up.

Some researchers advocate for that approach. Others argue that separate systems are paternalistic and unnecessary. Teenagers are smart. Many of them should have access to powerful tools. The question is how to give them access while minimizing risks.

There's also the international dimension. Age prediction and content restrictions need to account for different legal requirements and cultural values across regions. The EU approach emphasizes precaution and data protection. The US approach emphasizes innovation and market forces. Other regions have different priorities altogether. Building a system that respects all these requirements is genuinely difficult.

What Doesn't Work: Why Traditional Age Gating Is Insufficient

Before OpenAI implemented age prediction, most age restrictions on the internet relied on simple age gating: you enter your date of birth during signup, and the system trusts you. This system basically doesn't work.

Studies have repeatedly shown that teenagers lie about their age during signup at rates between 60% and 90%, depending on the platform and age group. A 12-year-old claiming to be 18 to access a website is not rare. It's the norm.

The reason traditional age gating doesn't work is that there's no verification. You claim to be 18, and the system believes you. There's no consequence for lying. Teenagers have strong incentives to lie, because they want access to content and services, and lying costs them nothing.

Age prediction with behavioral signals is an attempt to get around this problem. Instead of trusting what users claim about themselves, the system tries to infer age from actual behavior. This is much harder to game, though not impossible.

The downside of behavioral age prediction is false positives. Older adults who use ChatGPT to learn new skills might ask lots of questions that resemble teenage behavior. Parents using ChatGPT on their kids' devices, or on devices shared by multiple users, might create behavioral patterns that look like mixed-age usage. The system isn't perfect.

But it's better than nothing. And "not perfect but better than nothing" might be the best we can do in this space.

The Legal Landscape: Regulations Driving Change

OpenAI's age prediction system didn't materialize out of altruism. It emerged because the legal and regulatory environment is changing rapidly, and the company wants to get ahead of it.

In the United States, the primary law protecting minors online is COPPA, the Children's Online Privacy Protection Act of 1998. COPPA requires that websites get parental consent before collecting personal information from users under 13. But COPPA doesn't cover teenagers aged 13 to 17, and it's increasingly seen as outdated for the AI era.

Several states have proposed or passed new laws. Some focus on platform transparency and parental controls. Others focus on data protection and ad targeting. None of them specifically require age prediction, but they create an environment where companies feel pressure to implement age-appropriate protections.

In Europe, GDPR provides stronger data protection rights, and the Digital Services Act imposes specific responsibilities on platforms regarding minor protection. The EU's approach explicitly requires platforms to be active in protecting minors, not passive.

At the international level, there's no unified approach. Different countries have different laws. Some have virtually no regulations. Others have extensive frameworks. This creates compliance challenges for global platforms like ChatGPT.

The legal pressure is likely to increase. As more lawsuits target AI companies over harms to minors, and as more legislation passes requiring age-appropriate protections, companies will feel stronger incentives to implement sophisticated age verification and content restriction systems. OpenAI's approach is probably a preview of what will become the industry standard.

Effectiveness Questions: Does This Actually Protect Minors?

The real test of any safety system is whether it actually works. Do OpenAI's age prediction and content restrictions actually protect minors from harm?

The honest answer is: we don't know yet. The system is too new, and OpenAI hasn't published effectiveness data. The company probably has internal metrics about false positive and false negative rates, about how often restricted content still reaches minors, about how many adults are misidentified. But none of that is public.

We can make educated guesses. Age prediction with behavioral signals is probably more effective than simple age gating. It's much harder for teenagers to fake their entire behavioral pattern than to type in a false birthdate. Content filters are imperfect but probably catch the majority of the most egregious cases. The combination probably reduces exposure to harmful content for many teenagers.

But it doesn't solve the fundamental problem: ChatGPT is an incredibly powerful tool that teenagers find useful, and restricting access to that tool also restricts legitimate learning and growth. There's an inherent trade-off, and it's not clear that the current approach strikes the right balance.

One important consideration is that effectiveness depends on the specific harm you're trying to prevent. Are you trying to prevent all self-harm content from reaching minors? That's probably not 100% achievable. Are you trying to prevent the most explicit self-harm encouragement? That's more achievable. Are you trying to reduce exposure to beauty standards and unhealthy dieting content? That's a much bigger problem because this content is everywhere online, including on platforms that don't have age restrictions.

Open AI will probably measure effectiveness by looking at reports from teenagers about their experience, complaints from parents or educators, and lawsuit trends. If the number of reported harms decreases, the company will likely credit its safety system. If harms continue or increase, the company will face pressure to do more.

What Teenagers and Parents Should Know

If you're a teenager, here's what you should know: Chat GPT is monitoring your age and restricting what it shows you. You might not be able to access certain types of content. You can probably work around these restrictions if you really want to, but that might not be a good idea. And if you're being misidentified as underage and it's frustrating, you can verify your age with a selfie.

If you're a parent, here's what you should know: Age restrictions on Chat GPT are a tool, not a substitute for parental involvement. You should know what your kid is asking Chat GPT about. You should talk to them about online safety, about AI limitations, and about when they should ask a human for help instead of relying on an AI. And you should be aware that restrictions exist but aren't perfect.

For educators, it's worth noting that Chat GPT is increasingly being used in educational contexts. Students use it for homework help, for learning explanations, and for practicing writing. The age restrictions might interfere with legitimate educational uses. It's worth staying informed about what these restrictions are and how they affect students in your classroom.

Future Developments: Where Is This Heading?

If age prediction works well on Chat GPT, you can expect other AI platforms to adopt similar approaches. Anthropic with Claude, Google with Gemini, and other AI companies will probably implement their own versions. This creates an industry standard where age-appropriate content restriction becomes expected.

We might also see more sophisticated approaches. Instead of binary restrictions (access/no access), systems might provide age-appropriate versions of responses. A minor asking about reproduction might get an educational answer designed for teenagers. An adult asking the same question might get a more clinical answer. This would preserve access while adjusting the framing.

There's also potential for integration with parental controls. Imagine a system where parents can set custom restriction levels for their child's account. Maybe a 16-year-old could have access to some content that a 12-year-old couldn't, but still less than a 20-year-old. This kind of granularity is technically possible and probably coming eventually.
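The tiered idea above can be sketched in a few lines of Python. This is only an illustration: the age bands, the category names, and the `parent_blocked` override are all hypothetical and do not describe any real Open AI setting.

```python
from enum import IntEnum


class Tier(IntEnum):
    """Hypothetical age bands, ordered from most to least restricted."""
    CHILD = 0   # under 13
    TEEN = 1    # 13 to 17
    ADULT = 2   # 18 and over


# Minimum tier needed for each (made-up) content category.
MIN_TIER = {
    "homework_help": Tier.CHILD,
    "health_education": Tier.TEEN,
    "romantic_roleplay": Tier.ADULT,
    "graphic_violence": Tier.ADULT,
}


def tier_for_age(age: int) -> Tier:
    """Map an estimated or verified age to a tier."""
    if age < 13:
        return Tier.CHILD
    if age < 18:
        return Tier.TEEN
    return Tier.ADULT


def is_allowed(category: str, age: int, parent_blocked: frozenset = frozenset()) -> bool:
    """Return True if the category is available at this age.

    parent_blocked lets a parent tighten the defaults; it can never loosen them.
    """
    if category in parent_blocked:
        return False
    return tier_for_age(age) >= MIN_TIER[category]
```

In this sketch a 16-year-old can reach `health_education` while a 12-year-old cannot, and neither can reach `graphic_violence`, which a 20-year-old can. Making the parental setting subtract-only is one possible design choice; it keeps a parent from accidentally granting a minor adult-tier content.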

On a longer timeline, we might see behavioral age prediction becoming so accurate and so normalized that it affects how the entire internet works. Your age could be continuously estimated and updated based on your online behavior across multiple platforms. This has enormous privacy implications, but it could also bring real benefits in terms of harm reduction and personalization.

FAQ

What is Chat GPT age prediction?

Age prediction is a feature Open AI added to Chat GPT that uses machine learning to automatically identify whether a user is likely under 18 years old. The system analyzes behavioral and account-level signals such as stated age, account age, activity timing patterns, and usage patterns to make this determination. Based on this prediction, the system applies content restrictions designed to reduce exposure to sensitive material.

How does Chat GPT age prediction work?

Chat GPT's age prediction model examines multiple data points simultaneously rather than relying on a single signal. It looks at what you stated your age was during signup, how long your account has existed, when you're typically active on the platform, what kinds of questions you ask, and your overall usage patterns over time. By combining these signals, the algorithm makes a probabilistic judgment about your likely age category.
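To make "combining signals into a probabilistic judgment" concrete, here is a minimal sketch using a logistic score. Open AI has not published its model, so every feature name, weight, and threshold below is an assumption chosen purely for illustration.

```python
import math
from dataclasses import dataclass


@dataclass
class AccountSignals:
    """Hypothetical inputs, loosely mirroring the signals described above."""
    stated_age: int               # age given at signup
    account_age_days: int         # how long the account has existed
    after_school_share: float     # share of activity in after-school hours, 0..1
    homework_topic_share: float   # share of prompts that look like schoolwork, 0..1


# Illustrative weights only; a real model would learn these from labeled data.
WEIGHTS = {
    "stated_minor": 3.0,
    "new_account": 0.8,
    "after_school_share": 1.0,
    "homework_topic_share": 2.0,
}
BIAS = -2.5


def probability_minor(s: AccountSignals) -> float:
    """Combine all signals into one score and squash it to a probability."""
    score = BIAS
    score += WEIGHTS["stated_minor"] * (1.0 if s.stated_age < 18 else 0.0)
    score += WEIGHTS["new_account"] * (1.0 if s.account_age_days < 90 else 0.0)
    score += WEIGHTS["after_school_share"] * s.after_school_share
    score += WEIGHTS["homework_topic_share"] * s.homework_topic_share
    return 1.0 / (1.0 + math.exp(-score))


def should_restrict(s: AccountSignals, threshold: float = 0.5) -> bool:
    """Apply restrictions when the estimated probability crosses the threshold."""
    return probability_minor(s) >= threshold
```

With these made-up weights, `AccountSignals(stated_age=21, account_age_days=30, after_school_share=0.6, homework_topic_share=0.9)` still crosses the 0.5 threshold even though the stated age is adult, which is the point of not relying on a single signal.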

What content is restricted for minors on Chat GPT?

Chat GPT restricts access to graphic violence or gory content, sexual or romantic roleplay, depictions of self-harm, viral challenges that could encourage risky behavior in minors, and content promoting extreme beauty standards or unhealthy dieting. The restrictions are designed to reduce exposure to potentially harmful material while still allowing access to educational and legitimate information.

Why did Open AI implement age prediction?

Open AI implemented age prediction due to legal and regulatory pressure combined with public concern about AI safety for minors. A wrongful death lawsuit alleged that Chat GPT contributed to a teenager's suicide, Congress held hearings about AI chatbots and minor protection, and multiple platforms implemented age restrictions. Open AI responded by building its own age prediction and content restriction system to demonstrate proactive commitment to child safety.

How can adults restore access if they're misidentified as minors?

If Chat GPT incorrectly identifies you as underage when you're actually an adult, you can restore full access by submitting a selfie for age verification. Open AI uses this image to confirm your age through facial analysis. If you dispute the initial age prediction, the verification process should restore your unrestricted access within minutes.

Can teenagers bypass age restrictions on Chat GPT?

Determined teenagers can probably find ways to bypass restrictions, though it's not trivial. Simple methods include lying about age during signup, mimicking adult usage patterns, or using shared accounts with adults. Open AI has presumably built detection systems to catch suspicious patterns, but no system is perfect. The goal is to reduce access for most teenagers without making it absolutely impossible for determined users.

Is age prediction accurate?

Open AI hasn't released specific accuracy rates for its age prediction model. Based on how behavioral age estimation works in other contexts, 85-95% accuracy is a plausible range, but that is an estimate rather than a published figure. Either way, some teenagers will be misidentified as adults and some adults will be misidentified as minors. False positives (adults incorrectly flagged as minors) are the more visible failure because they affect user experience directly, while false negatives (minors treated as adults) are the failure that matters most for safety.
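A single accuracy number hides how the errors split between the two groups. The short calculation below shows this with invented figures: the user count, the share of minors, and both error rates are assumptions, not Open AI data.

```python
def confusion_counts(total: int, minor_share: float,
                     sensitivity: float, specificity: float) -> dict:
    """Expected outcomes for a classifier that flags likely minors.

    sensitivity: share of actual minors correctly flagged
    specificity: share of actual adults correctly left unrestricted
    """
    minors = total * minor_share
    adults = total - minors
    tp = minors * sensitivity   # minors flagged as minors
    fn = minors - tp            # minors treated as adults
    tn = adults * specificity   # adults left unrestricted
    fp = adults - tn            # adults flagged as minors
    return {"tp": tp, "fn": fn, "fp": fp, "tn": tn}


def summarize(c: dict) -> dict:
    """Derive headline metrics from the four confusion-matrix cells."""
    total = c["tp"] + c["fn"] + c["fp"] + c["tn"]
    return {
        "accuracy": (c["tp"] + c["tn"]) / total,
        "false_positive_rate": c["fp"] / (c["fp"] + c["tn"]),
        "precision": c["tp"] / (c["tp"] + c["fp"]),
    }


# Hypothetical scenario: 1,000 users, 10% minors, 90% sensitivity and specificity.
counts = confusion_counts(1000, 0.10, 0.90, 0.90)
metrics = summarize(counts)
```

Under these assumptions accuracy comes out at 90%, yet the 90 adults flagged equal the 90 minors flagged, so only half of restricted accounts actually belong to minors. That is why the share of minors in the user base matters as much as the headline accuracy.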

When will age prediction roll out globally?

Age prediction rolled out globally in late 2024, with one exception: the European Union. Open AI stated that EU rollout would happen "in the coming weeks" to account for regional requirements under GDPR and the Digital Services Act. The delay reflects different regulatory standards and privacy expectations in Europe compared to other regions.

Does age prediction affect Chat GPT's performance or speed?

Age prediction requires additional processing to analyze behavioral signals and make age determinations, but this should be invisible to users. The system is designed to make these determinations in real time without noticeable latency. Open AI's infrastructure is sophisticated enough to handle this additional computational load, but there are inherent trade-offs between accuracy and processing time.

What are the privacy implications of age prediction?

Age prediction necessarily involves analyzing user behavior, including activity timing, account history, and the types of questions you ask. This data is valuable for age determination but also creates detailed behavioral profiles. Open AI's privacy policy should specify what happens to this behavioral data, whether it's retained long-term, and whether it's used for purposes beyond age prediction. Users concerned about privacy should review the full privacy policy at openai.com/privacy.

Key Takeaways: What Chat GPT's Age Prediction Means for Digital Safety

Open AI's implementation of age prediction represents a significant shift in how tech companies approach child safety online. Rather than relying on simple age gating, the company is using sophisticated machine learning to identify users likely to be minors and applying appropriate content restrictions.

The system works by analyzing behavioral and account-level signals to make probabilistic age determinations. Adults who are misidentified can restore access through selfie verification. Content restrictions aim to reduce exposure to violence, self-harm content, sexual material, and harmful challenges without completely blocking legitimate educational access.

The driving forces behind this implementation were legal pressure from a wrongful death lawsuit, congressional scrutiny, and growing public concern about AI safety for minors. Open AI wanted to demonstrate proactive commitment to child protection before more aggressive regulation could be imposed.

The effectiveness of the system remains unclear since it's too new and Open AI hasn't published detailed effectiveness metrics. The approach probably reduces access to harmful content for most teenagers while accepting that determined users can find workarounds. It represents a balance between safety and access, and reasonable people disagree about whether that balance is right.

As AI systems become more central to how teenagers learn and interact with information, age-appropriate protection becomes increasingly important. Open AI's approach is probably a preview of industry standards to come. Other AI companies are likely to implement similar systems, and more sophisticated approaches may emerge over time.

For teenagers, parents, and educators, the key takeaway is that AI content restrictions exist but aren't perfect. Teenagers should be aware that their usage might be analyzed to estimate their age. Parents should be engaged in conversations about AI safety rather than relying solely on platform protections. And educators should help students understand both the potential benefits and risks of these tools.

The question of how to keep AI safe for minors doesn't have a perfect answer. Age prediction is a step in the right direction, but it's just one piece of a much larger puzzle involving digital literacy, parental involvement, regulatory oversight, and the ongoing evolution of how we think about child protection in the digital age.

Bringing It All Together: The Path Forward for AI Safety

Age prediction on Chat GPT is important because it signals that tech companies are taking minor protection seriously. It's important because it probably reduces some harms while creating new challenges. And it's important because it's just the beginning of much more complex conversations about AI, safety, childhood development, and the role of AI in education.

If you're building AI products, the lesson is clear: age-appropriate protection is now table stakes. Investors and regulators expect it. Users expect it. The question is not whether to implement protections, but how to implement them thoughtfully and effectively.

If you're a parent or educator, the lesson is that technology companies are implementing safeguards, but technology alone isn't enough. Adult involvement, digital literacy education, and thoughtful conversations about AI are essential.

If you're a teenager, the lesson is that your online behavior is being analyzed closely. That's not necessarily bad, since it might protect you from harmful content. But it's worth being aware of, and it's worth thinking about what information you're sharing and who's analyzing it.

The age prediction feature is a good-faith attempt to solve a genuinely hard problem. Like most solutions to hard problems, it's not perfect. But it's thoughtful, it's probably effective for most users, and it's a sign that the AI industry is starting to take seriously the responsibility it has to the next generation.


![ChatGPT Age Prediction: How AI Protects Minors Online [2025]](https://tryrunable.com/blog/chatgpt-age-prediction-how-ai-protects-minors-online-2025/image-1-1768991795753.png)