Introduction: When AI Becomes Your Security Researcher

Let's be honest—software security feels broken right now. Your critical infrastructure runs on code maintained by a handful of volunteers in their spare time. Your dependencies have dependencies that nobody's really auditing. And every single day, new vulnerabilities slip through the cracks despite our best efforts with traditional security tools.

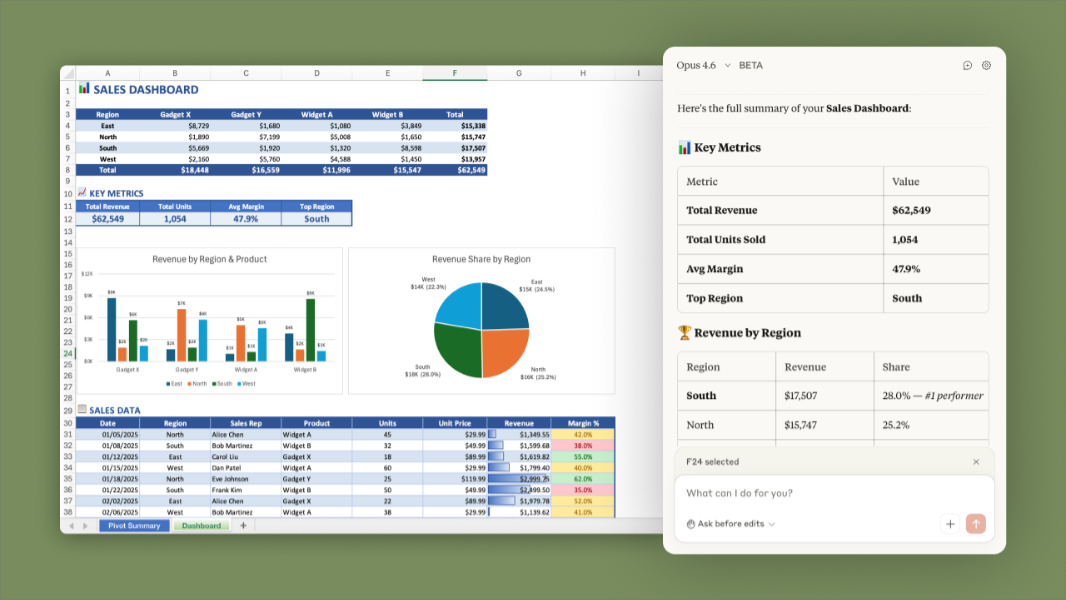

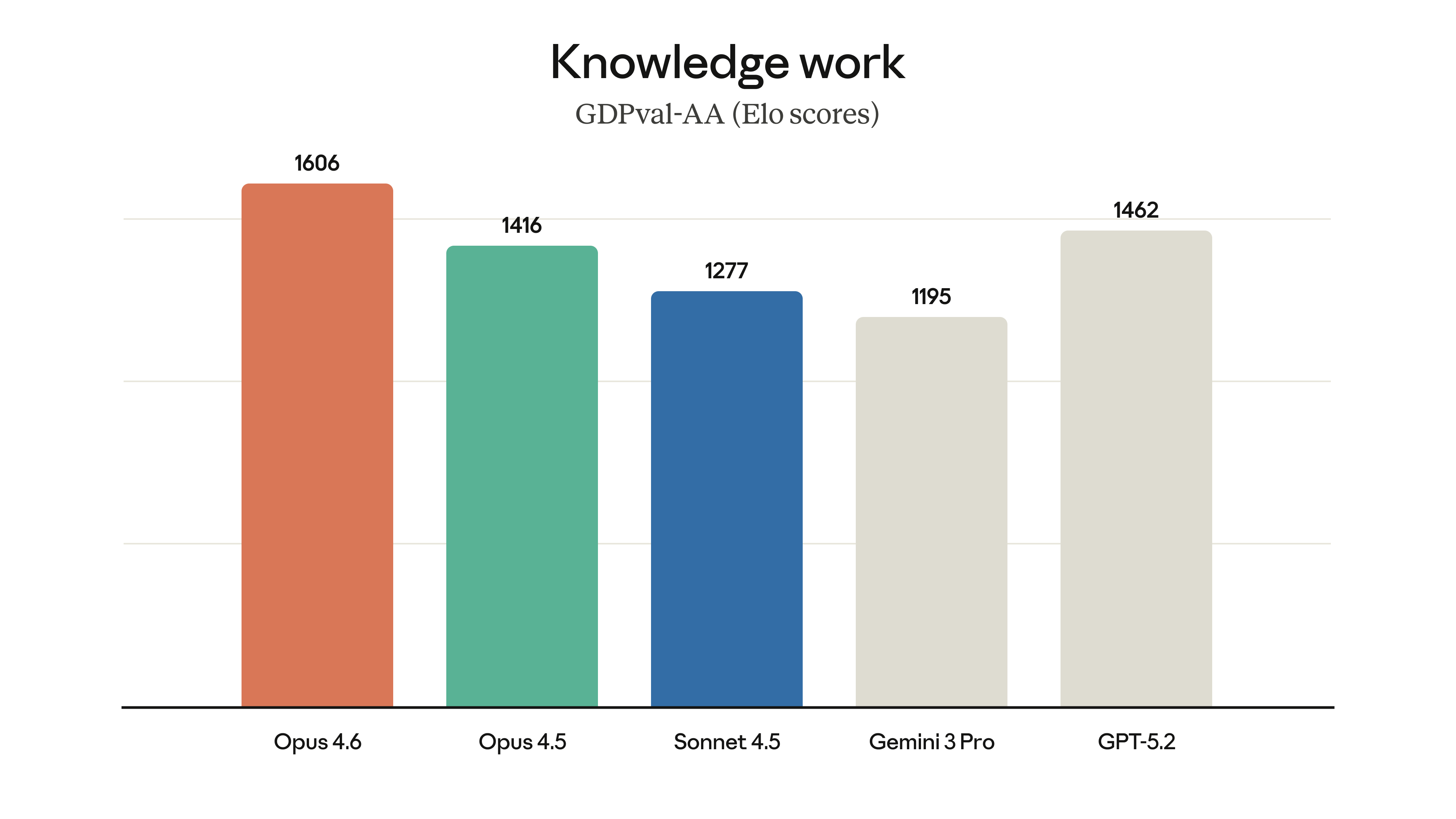

Then something shifted. Anthropic announced that its latest AI model, Claude Opus 4.6, found over 500 previously unknown high-severity vulnerabilities in open-source libraries during testing. Not through fuzzing. Not through static analysis tools. Through reasoning about code the way a human security researcher would, but at scale, across entire codebases that haven't been thoroughly audited in years.

This isn't just a press release. This represents a fundamental shift in how we can approach software security. For decades, we've relied on fuzzing techniques, static analysis, and manual code review. These methods work, but they're slow, expensive, and they miss things. A lot of things. Vulnerabilities have been hiding in widely-used open-source projects for years, sometimes decades, simply because nobody looked hard enough or in the right way.

Here's what makes Opus 4.6 different: it doesn't need custom tooling, specialized scaffolding, or carefully engineered prompts. It looks at code the way a security researcher actually thinks about finding bugs. It spots patterns from past vulnerabilities that weren't patched elsewhere. It reasons about logic flows to understand exactly what input would break the system. It does all this while processing entire libraries in a way that human researchers couldn't possibly scale.

The timing matters. Open-source software runs everything from personal computers to power grids. A vulnerability in a popular library affects millions of systems instantly. Yet the people maintaining these projects are often stretched thin, working without budgets or security teams. If AI can help secure this foundation layer, we're not just fixing individual bugs. We're potentially shifting the entire security landscape.

But there's urgency here too. Anthropic's warning is clear: this window where AI models can deliver at scale might not stay open forever. Security defenders need to move fast to patch vulnerabilities while the opportunity exists.

TL;DR

- 500+ Vulnerabilities Found: Claude Opus 4.6 discovered over 500 high-severity flaws in open-source libraries that traditional tools missed.

- AI Reasoning > Fuzzing: The model uses human-like reasoning about code patterns rather than fuzzing, catching logic-based vulnerabilities others miss.

- Scale Without Tooling: Works without custom scaffolding, specialized prompts, or domain-specific tooling, making it accessible to any team.

- Critical Infrastructure Impact: Patches are landing in real open-source projects, directly securing software that runs enterprise systems and critical infrastructure.

- Window of Opportunity: Anthropic emphasizes the need for speed—this scaling capability may not persist indefinitely as the security arms race evolves.

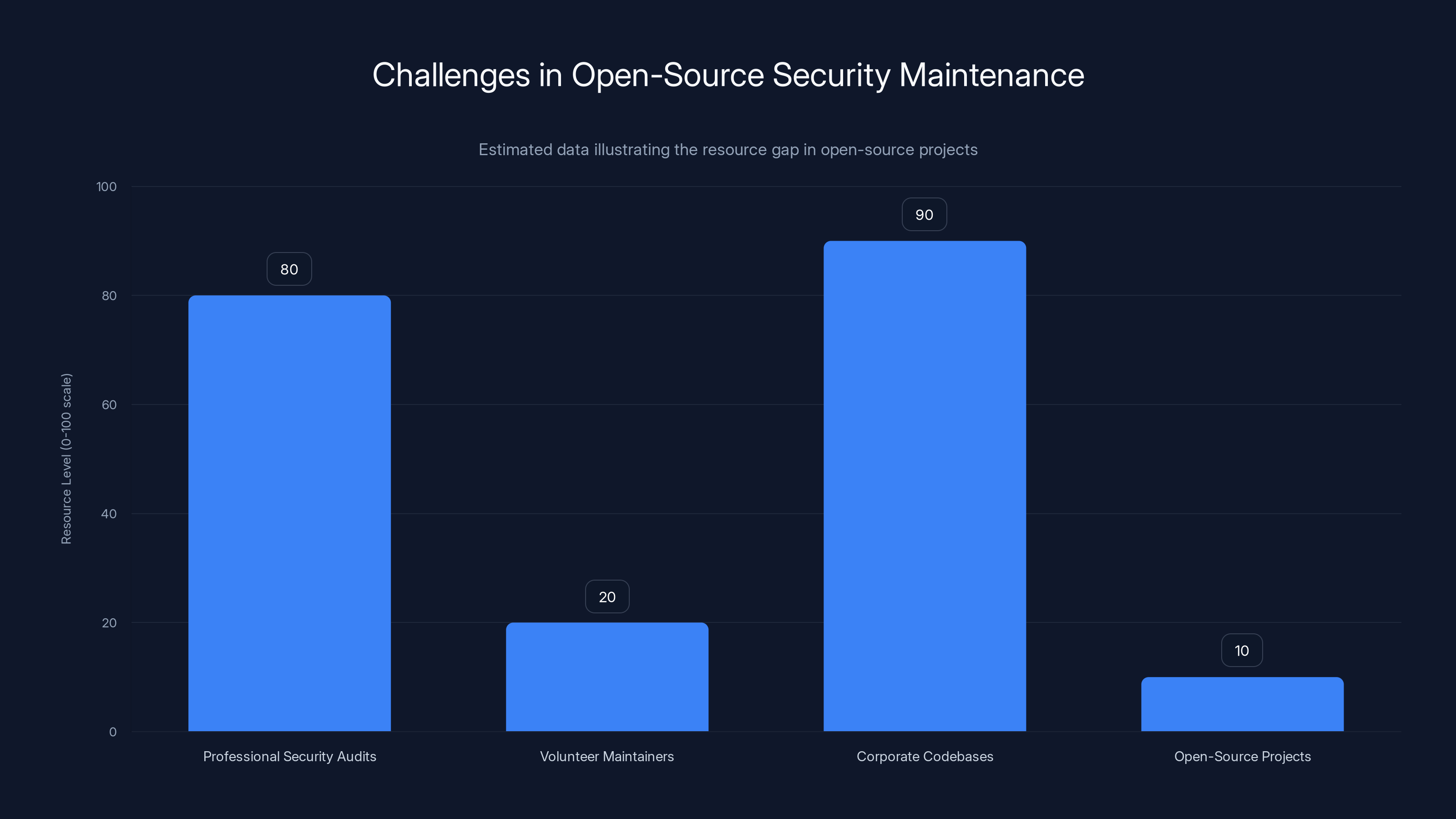

Estimated data shows a stark contrast in resource levels between professional environments and open-source projects, highlighting the security challenges faced by volunteer maintainers.

How Traditional Vulnerability Detection Falls Short

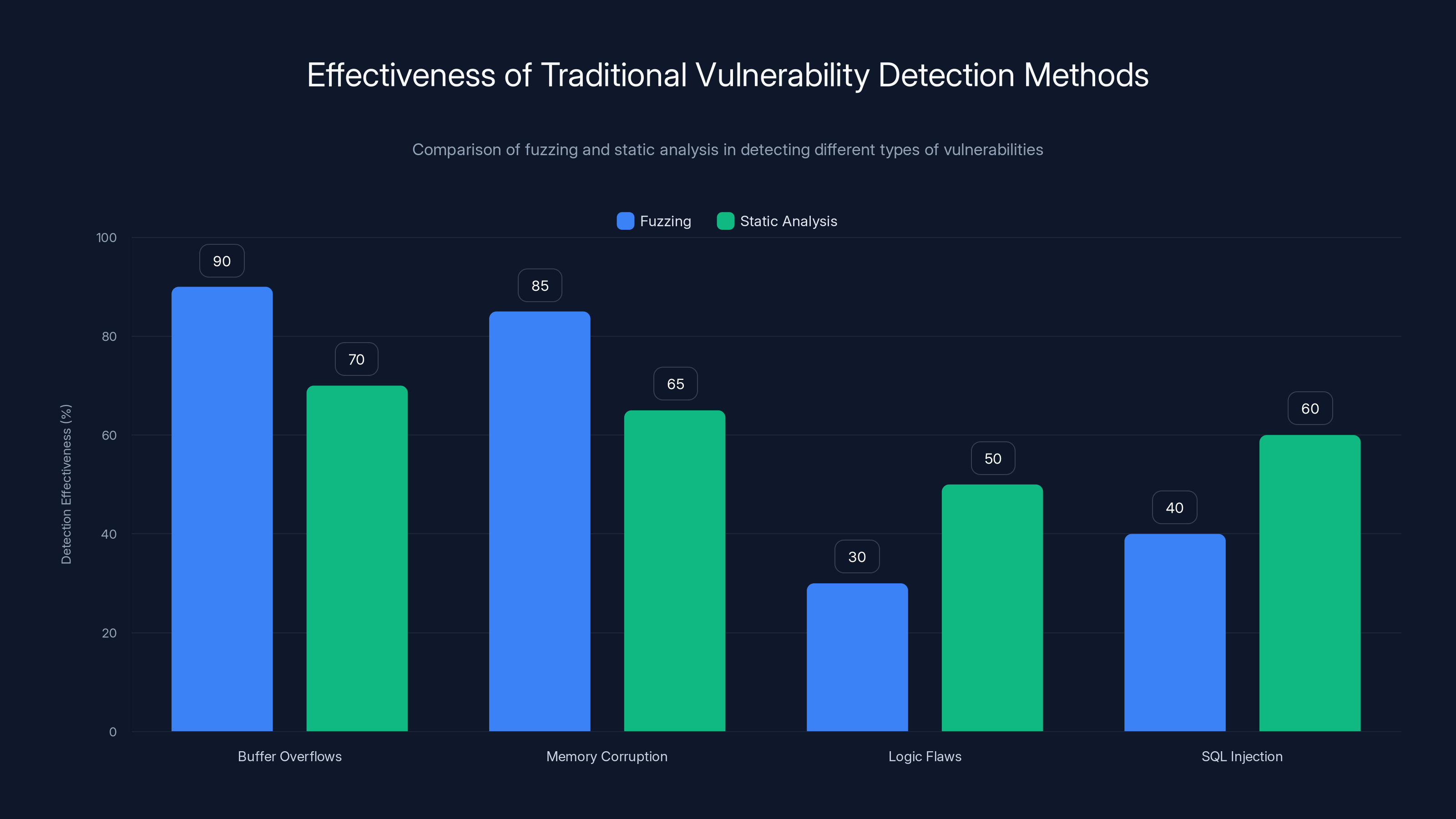

Before diving into what Opus 4.6 actually does, we need to understand why existing tools keep missing vulnerabilities that persist for years. Understanding the limitations is what makes the new approach so compelling.

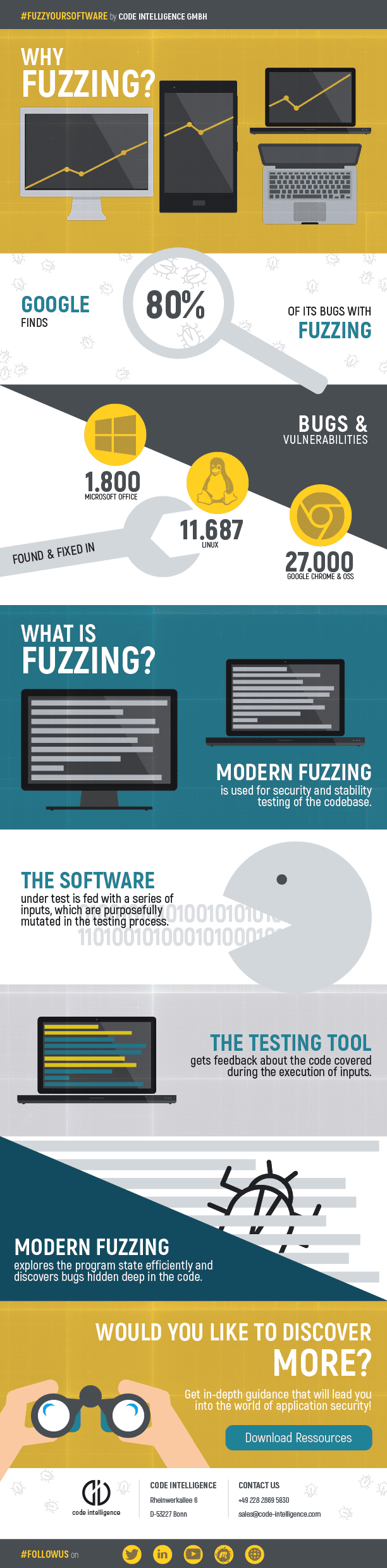

Fuzzing: Brute Force That Misses Logic Flaws

Fuzzing has been the gold standard for vulnerability hunting since at least the early 2000s. The basic idea is simple: throw random or semi-random inputs at a program and watch what breaks. If you throw enough variations at it, you'll eventually hit an edge case that crashes it or behaves unexpectedly.

This works for certain classes of vulnerabilities. Buffer overflows? Memory corruption? Fuzzing finds those reliably. But fuzzing is fundamentally a brute-force approach. It doesn't understand what the code is supposed to do. It just tries different inputs and watches for crashes.

Here's where it fails: logic flaws. A function that authenticates users but has an off-by-one error in its permission check. Code that processes cryptographic keys but leaves them in memory too long. SQL injection vulnerabilities that only trigger under specific combinations of user input that a fuzzer might never naturally generate. These are logic-level problems that require understanding intent, not just executing code paths.
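
To make that concrete, here's a minimal sketch of a logic flaw that a crash-oriented fuzzer would sail right past. Everything in it is hypothetical: the role levels, the `can_delete_account` function, and the toy `crash_fuzz` loop are illustrations, not code from any project Anthropic analyzed.

```python
import random

# Hypothetical role levels, for illustration only.
GUEST, MEMBER, ADMIN = 0, 1, 2


def can_delete_account(role_level: int) -> bool:
    """Intended rule: only ADMIN may delete accounts.

    Bug: the threshold is one level too low (MEMBER instead of ADMIN).
    Nothing crashes; the function simply returns the wrong answer.
    """
    return role_level >= MEMBER


def crash_fuzz(target, trials: int = 10_000, seed: int = 0) -> int:
    """Naive fuzzer that only counts inputs which raise an exception."""
    rng = random.Random(seed)
    crashes = 0
    for _ in range(trials):
        try:
            target(rng.randint(-1_000, 1_000))
        except Exception:
            crashes += 1
    return crashes


print("crashes observed:", crash_fuzz(can_delete_account))
print("member can delete accounts:", can_delete_account(MEMBER))
```

The fuzzer reports no crashes because there is nothing to crash. Spotting the bug requires knowing what the function was supposed to allow, which is exactly the "intent" a brute-force approach doesn't have.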

Anthropic's research showed that even codebases that had been fuzzed for years still contained high-severity flaws. The fuzzers had done their job within their limitations, but those limitations were significant.

Static Analysis: Reading Code Without Understanding Context

Static analysis tools scan source code looking for known patterns. A weak cryptography library call. A missing bounds check. A function that might return null without proper validation.

The problem? These tools work on pattern matching. They're looking for known bad patterns. They can't easily reason about context. Is that null return actually handled? Does the calling function check for it? Is the vulnerability only exploitable if two separate conditions align?

Static analysis also generates enormous numbers of false positives. Security teams get alert fatigue from warnings about potential issues that aren't actually exploitable in practice. And here's the catch: teams then start ignoring warnings, which means real vulnerabilities get buried in the noise.

Manual Code Review: Doesn't Scale

The absolute best way to find vulnerabilities? Have skilled security researchers spend weeks reading every line of code. Understanding the business logic. Tracing data flows. Thinking like an attacker.

But this doesn't scale. It's expensive, time-consuming, and most open-source projects can't afford it. Even large companies can't afford to manually review every dependency they use. The math simply doesn't work.

What Makes Claude Opus 4.6 Different

Opus 4.6 approaches vulnerability detection fundamentally differently. Instead of brute-forcing inputs or pattern-matching, it reasons about code the way a security researcher would. And critically, it does this at scale.

AI Reasoning About Code Patterns

The model works by analyzing how vulnerabilities manifest. It looks at past security patches in open-source projects. When developers fixed a vulnerability in one place, were similar unfixed vulnerabilities left elsewhere? What patterns tend to cause these problems? What logic flows are commonly mishandled?

Then it applies this reasoning to codebases, spotting similar patterns and logical flaws. It's not looking for specific known vulnerabilities. It's learning vulnerability patterns and looking for places where those patterns might apply.

This matters because many vulnerabilities are variations on known themes. Authentication logic goes wrong in similar ways across different projects. Input validation is skipped in predictable scenarios. Cryptographic keys are mishandled following familiar patterns. An AI model trained on thousands of past vulnerabilities can recognize these patterns across new code.

Understanding Input That Would Break Logic

Here's something fuzzing can't easily do: reason about the intended purpose of code and figure out which specific inputs would violate that purpose.

For example, imagine a function that's supposed to validate timestamps. It checks that timestamps are positive numbers. A fuzzer might try negative numbers and other edge cases. But what if the real vulnerability is that the function doesn't properly handle timestamps in a specific date range that causes overflow when used in calculations downstream? That's a logic-level vulnerability.

Opus can reason about the code's purpose, understand the mathematical implications, and identify the specific inputs that would cause problems. It's not discovering this through trial and error. It's understanding the logic and predicting the failure mode.
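
Here's a rough sketch of the timestamp scenario. It assumes a hypothetical downstream calculation that adds a 30-day TTL using 32-bit signed arithmetic (simulated here, since Python integers don't overflow). The function names and the TTL are invented for illustration.

```python
INT32_MAX = 2**31 - 1
TTL_SECONDS = 30 * 24 * 3600  # hypothetical 30-day expiry window


def is_valid_timestamp(ts: int) -> bool:
    """The validator from the example: accepts any positive 32-bit value."""
    return 0 < ts <= INT32_MAX


def to_int32(value: int) -> int:
    """Wrap an integer the way a signed 32-bit C int would."""
    value &= 0xFFFFFFFF
    return value - 2**32 if value >= 2**31 else value


def expiry_int32(ts: int) -> int:
    """Downstream code: computes an expiry time in 32-bit arithmetic."""
    return to_int32(ts + TTL_SECONDS)


def first_overflowing_timestamp() -> int:
    """Derived from the logic, not found by trial and error."""
    return INT32_MAX - TTL_SECONDS + 1


ts = first_overflowing_timestamp()
print(ts, is_valid_timestamp(ts), expiry_int32(ts))
```

The point of `first_overflowing_timestamp` is that the breaking input falls out of the arithmetic directly. A fuzzer drawing random positive integers would have to land in a narrow band near the top of the range and then also notice that a negative expiry is wrong.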

No Custom Scaffolding Required

Here's what's genuinely important for practical adoption: Opus 4.6 works without specialized tooling. You don't need custom prompts. You don't need task-specific engineering. You feed it code and it finds vulnerabilities.

Compare this to earlier AI approaches that required careful prompt engineering, specific training for each codebase, or custom scaffolding to work well. Those approaches were fragile. They didn't generalize. Opus 4.6 works generically.

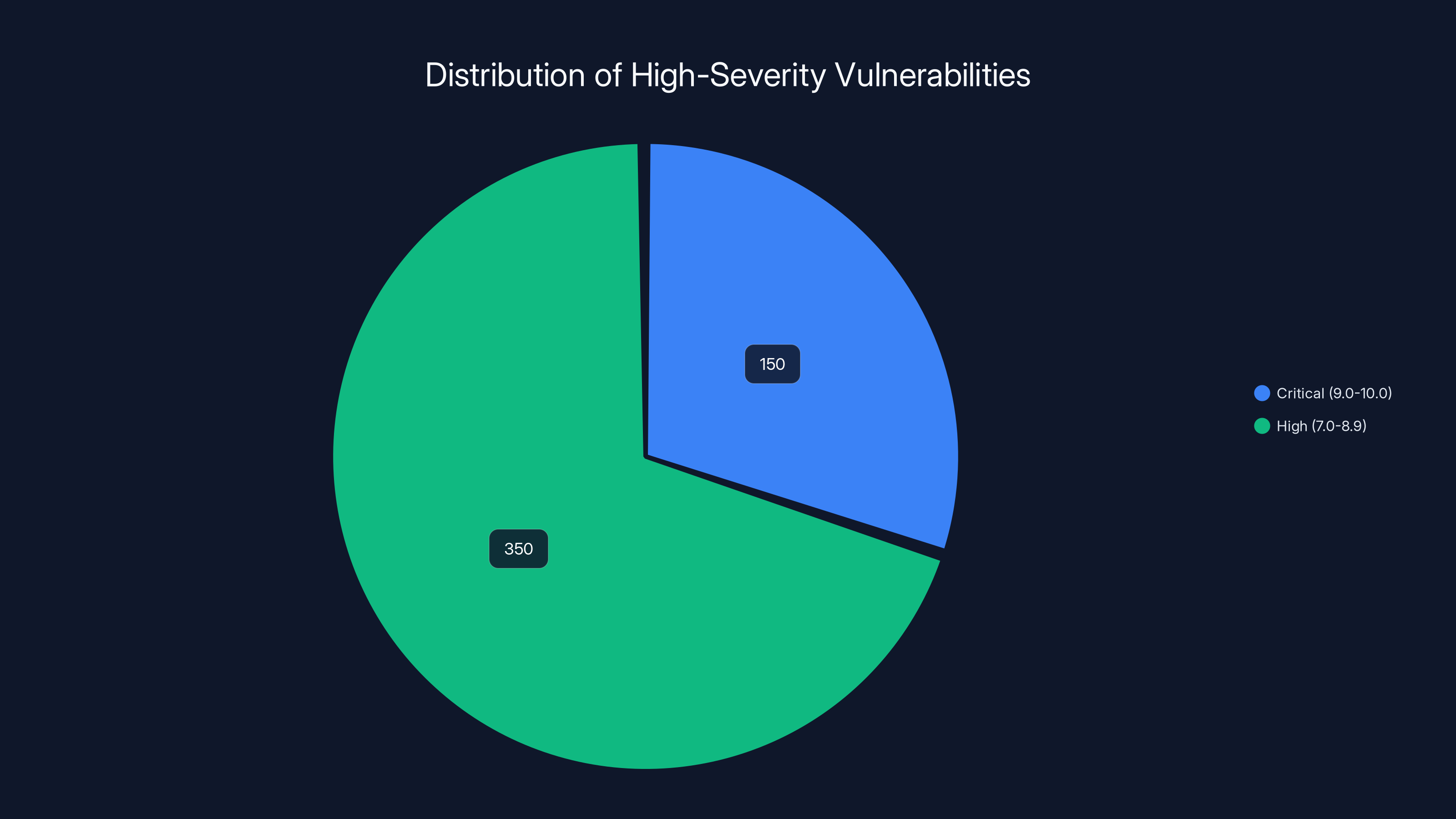

Anthropic identified over 500 high-severity vulnerabilities, with an estimated 70% categorized as High and 30% as Critical. Estimated data.

The 500+ Vulnerabilities: What Actually Got Found

Let's talk specifics. Anthropic found over 500 high-severity vulnerabilities across open-source projects. But what does that actually mean? What kind of vulnerabilities are we talking about?

High-Severity Definition

When we say "high-severity," we're talking about vulnerabilities that would likely earn a CVSS score of 7.0 or higher (on a 0-10 scale). These aren't minor issues. These are problems that could lead to complete system compromise, data theft, or denial of service under realistic attack conditions.

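For context, the CVSS v3 qualitative severity scale breaks down like this:

- None: 0.0

- Low: 0.1 to 3.9

- Medium: 4.0 to 6.9

- High: 7.0 to 8.9

- Critical: 9.0 to 10.0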

The vulnerabilities Opus found are in that High to Critical range. Not theoretical problems. Not "might be exploitable under specific conditions." Actual code flaws that create real security risks.

Open-Source Project Impact

The focus on open-source software is strategic. Open-source runs everywhere. Your company's infrastructure likely depends on dozens of open-source libraries. Your laptop runs open-source components. Your phone uses open-source code. The internet is built on open-source foundations.

A single vulnerability in a popular open-source library can affect millions of systems instantly. Libraries like OpenSSL, Apache Commons, or Spring Framework are used in countless applications. A high-severity vulnerability in one of these impacts enterprises, startups, and critical infrastructure simultaneously.

Yet many of these projects are maintained by tiny teams working without security budgets. They're not running professional penetration testing programs. They don't have security researchers auditing their code. Volunteers are doing their best with limited resources.

Opus finding 500+ vulnerabilities in this ecosystem means finding real problems in software that affects the entire internet.

Vulnerabilities Missed Despite Existing Tools

This is the critical insight: these vulnerabilities existed in codebases that had been tested with fuzzing, static analysis, and sometimes even professional security review. Traditional tools had looked at this code and missed the problems. Sometimes for years.

Why? Because those tools work differently than AI reasoning. A fuzzer might not generate the specific input sequence that triggers the vulnerability. A static analyzer might not understand the specific logic flow that makes it exploitable. A security reviewer might have audited the code but missed a subtle logical flaw.

AI reasoning finds these because it approaches the problem like a human security researcher would: understanding intent, spotting patterns, and thinking about edge cases.

How Opus 4.6 Actually Works in Practice

Let's get technical. Understanding how Opus actually discovers these vulnerabilities helps explain why it's so effective.

Step One: Learning From Historical Vulnerabilities

Opus 4.6 was trained on vast amounts of code and security patch data. The model has seen thousands of real vulnerabilities and how they were fixed. This creates internal pattern recognition for how vulnerabilities typically manifest.

When a developer fixes a buffer overflow, the fix usually involves bounds checking. When a SQL injection vulnerability is patched, it typically means parameterized queries are added. When authentication is fixed, it often means additional checks are implemented. Opus learns these patterns.
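
The SQL injection case is the easiest of these to show side by side. This is a small self-contained sketch using Python's built-in `sqlite3` module; the table, the data, and the two lookup functions are made up for illustration.

```python
import sqlite3


def make_db() -> sqlite3.Connection:
    """Build a throwaway in-memory database with two users."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (name TEXT, secret TEXT)")
    conn.executemany(
        "INSERT INTO users VALUES (?, ?)",
        [("alice", "a-secret"), ("bob", "b-secret")],
    )
    return conn


def lookup_vulnerable(conn: sqlite3.Connection, name: str):
    # Pre-patch pattern: user input is spliced into the SQL text.
    query = "SELECT secret FROM users WHERE name = '" + name + "'"
    return conn.execute(query).fetchall()


def lookup_patched(conn: sqlite3.Connection, name: str):
    # Post-patch pattern: the value is bound as a parameter, never parsed as SQL.
    return conn.execute(
        "SELECT secret FROM users WHERE name = ?", (name,)
    ).fetchall()


conn = make_db()
payload = "x' OR '1'='1"
print("vulnerable:", lookup_vulnerable(conn, payload))
print("patched:   ", lookup_patched(conn, payload))
```

With the injected payload, the vulnerable version returns every row while the patched version returns nothing. The difference between the two functions is the kind of before-and-after shape that shows up again and again in security patches.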

Step Two: Analyzing New Code for Similar Patterns

When analyzing a new codebase, Opus looks for similar patterns that don't have the fix applied. A function that processes input without proper bounds checking. An authentication routine missing specific validation. Database code that constructs queries dynamically.

It's not just string matching. It's reasoning about the logical structure of the code and identifying where similar vulnerabilities might exist.

Step Three: Reasoning About Exploit Paths

Here's where AI reasoning really differs from traditional tools. Opus doesn't just identify suspicious code. It reasons about whether that code is actually exploitable. Can an attacker actually control the inputs that would trigger the vulnerability? What conditions need to align?

It's filtering out false positives not through statistical analysis, but through logical reasoning about exploitability.
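
The "can an attacker actually reach this?" question can be pictured as a reachability check over a data-flow graph. To be clear, this is a toy illustration of the question, not a description of how Opus works internally. The graph, the node names, and the `attacker_reachable` helper are all hypothetical.

```python
from collections import deque


def attacker_reachable(flows, sources, sink, sanitizers=frozenset()):
    """Return True if data from any source can reach `sink`
    without passing through a sanitizer node (breadth-first search)."""
    queue = deque(s for s in sources if s not in sanitizers)
    seen = set(queue)
    while queue:
        node = queue.popleft()
        if node == sink:
            return True
        for nxt in flows.get(node, ()):
            if nxt not in seen and nxt not in sanitizers:
                seen.add(nxt)
                queue.append(nxt)
    return False


# Hypothetical data-flow edges: "value produced here flows into there".
FLOWS = {
    "http_param": ["build_query"],
    "build_query": ["db_execute"],
    "form_field": ["escape_input"],
    "escape_input": ["render_page"],
}

print(attacker_reachable(FLOWS, {"http_param"}, "db_execute"))
print(attacker_reachable(FLOWS, {"form_field"}, "render_page",
                         sanitizers={"escape_input"}))
```

A suspicious sink that no attacker-controlled value can reach, or that is only reachable through a sanitizer, is a candidate false positive. A suspicious sink with a clean path from untrusted input is worth a human's time.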

Step Four: Validation and Confidence Scoring

Not every potential vulnerability Opus identifies is legitimate. The model provides confidence scores for its findings, helping security teams prioritize which potential vulnerabilities to investigate first.

Some findings might be theoretical problems that don't actually have an exploitation path. Others might be high-confidence vulnerabilities that are clearly exploitable. Confidence scoring helps teams focus resources where they matter most.
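
One simple way a team might act on scores like these is to rank findings by severity weighted by confidence. The sketch below assumes each finding carries a CVSS-style severity and a 0-to-1 confidence value; that record format, the `Finding` class, and the cutoff are assumptions for illustration, not a documented output format.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Finding:
    identifier: str
    cvss: float        # 0.0-10.0 severity estimate
    confidence: float  # 0.0-1.0, hypothetical reported confidence


def triage_order(findings, min_confidence: float = 0.3):
    """Drop low-confidence findings, then sort by severity x confidence."""
    kept = [f for f in findings if f.confidence >= min_confidence]
    return sorted(kept, key=lambda f: f.cvss * f.confidence, reverse=True)


findings = [
    Finding("F-1", cvss=9.8, confidence=0.4),
    Finding("F-2", cvss=7.5, confidence=0.9),
    Finding("F-3", cvss=8.0, confidence=0.1),
]
for f in triage_order(findings):
    print(f.identifier, round(f.cvss * f.confidence, 2))
```

Multiplying the two numbers is a deliberately crude choice. A real program would probably also weigh how exposed the affected component is in your own deployment.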

Real-World Impact: Patches Already Landing

This isn't theoretical research. Anthropic has already found and reported these vulnerabilities, and patches are landing in real open-source projects.

Reporting and Disclosure Process

Anthropic didn't just publish the research and disappear. They're actively reporting findings to open-source maintainers following responsible disclosure practices. Find the vulnerability, notify the maintainers, give them time to develop a fix, then publish the details after patches are available.

This is the right way to do this. It gives projects time to develop fixes without publicly exposing unpatched vulnerabilities.

Patches in Production

The fact that patches are already landing is significant. This means open-source maintainers are taking these findings seriously and prioritizing fixes. The vulnerabilities aren't theoretical. They're real enough that project maintainers are treating them as urgent.

Scaling Security Work

Here's what's genuinely transformative: Anthropic is doing security work that would normally require hiring teams of security researchers and paying them for months or years. An AI model can do similar analysis in days, across thousands of projects simultaneously.

For open-source projects that couldn't possibly afford professional security reviews, this is enormous. It's security services scaled to reach projects that normally couldn't afford them.

Integrating AI into security practices can significantly enhance efficiency, with estimated time savings ranging from 50% to 70% across various tasks. Estimated data.

Why AI Reasoning Beats Traditional Tools

Let's break down exactly why this approach works better than what we've been doing for decades.

Combinatorial Reasoning

Traditional fuzzing is basically combinatorial search—try different inputs and see what breaks. But the space of possible inputs is infinite. You can't test everything. You test likely scenarios and hope you catch the vulnerabilities.

AI reasoning is different. Instead of searching for failures, it reasons about the code to identify what would cause failure. It's deductive rather than inductive. Instead of trying inputs until something breaks, it reasons about the logic to predict what would break it.

For complex logic, this is vastly more efficient. Consider a function that validates cryptographic signatures. Testing every possible signature variation would take forever. But understanding the cryptographic logic, understanding common implementation mistakes, and recognizing patterns in similar vulnerabilities? That's reasoning, not search.

Context Understanding

Static analysis tools work on rules. "Flag all uses of strcpy." "Flag all dynamic SQL queries." These rules catch obvious problems, but they miss context.

AI models understand context. Is that strcpy call actually dangerous given how it's being used? Is that SQL construction actually injectable given the input validation that precedes it? Understanding context massively reduces false positives while increasing true positive rates.
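
Here's what a context-free rule looks like in practice. This is a deliberately simplistic sketch of a "flag all uses of strcpy" rule written as a regular expression; the `flag_lines` helper and the C snippet are made up, and real static analyzers are considerably more sophisticated than this.

```python
import re

# The rule: flag every call to strcpy, regardless of how it is used.
STRCPY_RULE = re.compile(r"\bstrcpy\s*\(")


def flag_lines(c_source: str) -> list[int]:
    """Return 1-based line numbers where the rule matches."""
    return [
        number
        for number, line in enumerate(c_source.splitlines(), start=1)
        if STRCPY_RULE.search(line)
    ]


C_SNIPPET = "\n".join([
    "char *dup = malloc(strlen(src) + 1);",
    "if (dup) strcpy(dup, src);   /* destination sized from source */",
    "char small[8];",
    "strcpy(small, user_input);   /* destination may be too small */",
])

print(flag_lines(C_SNIPPET))
```

Both calls get flagged. Telling them apart requires looking at how each destination buffer was sized, which is context the rule never sees.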

Learning From Security Evolution

When new vulnerability types emerge, traditional tools need to be updated with new rules. That takes time. Teams of people need to understand the vulnerability class, figure out how to detect it, and update tools.

AI models that continue learning from new vulnerabilities automatically incorporate this knowledge. They understand vulnerability patterns at a higher level than specific rules.

Scale

A human security researcher can audit maybe 10,000 lines of code per week thoroughly. Maybe. That's optimistic. Opus can analyze millions of lines of code in hours. Scale matters when you're trying to secure the entire open-source ecosystem.
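
To put the human side of that in numbers, here's the back-of-the-envelope arithmetic using the 10,000 lines per week figure above. The codebase sizes are arbitrary examples, and `review_weeks` is just a helper for this illustration.

```python
def review_weeks(total_lines: int, lines_per_week: int = 10_000,
                 reviewers: int = 1) -> float:
    """Weeks of thorough manual review at the rate quoted above."""
    return total_lines / (lines_per_week * reviewers)


for size in (100_000, 1_000_000, 5_000_000):
    print(f"{size:>9,} lines: {review_weeks(size):,.0f} reviewer-weeks")
```

A single million-line library works out to roughly two years of one reviewer's full-time attention, before counting any of its dependencies.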

The Open-Source Security Crisis That Led Here

Understanding why this matters requires understanding the state of open-source security.

The Volunteer Maintenance Problem

The most downloaded packages on npm, PyPI, and other package managers are often maintained by a single person or a tiny volunteer team. These are critical infrastructure (literally everything depends on them), yet they're maintained by people doing the work in their spare time for free.

These maintainers are under-resourced, under-appreciated, and under-supported. They're not running professional development environments. They're not conducting security reviews. They're fixing bugs and adding features while juggling day jobs and life.

Vulnerability Accumulation

With limited resources for security, vulnerabilities accumulate. Not because maintainers are careless, but because comprehensive security testing requires resources they don't have. The math is brutal: a professional security audit costs more than most volunteer-run projects can afford.

Vulnerabilities that would get caught immediately in a corporate codebase persist for years in open-source projects.

The Internet-Wide Impact

Here's what makes this a crisis: when a vulnerability exists in a popular open-source library, it's not just one company's problem. It's everyone's problem. Organizations using that library are vulnerable. Projects that depend on that library are vulnerable. Users of all those systems are vulnerable.

A single unpatched vulnerability in something like Apache Struts or Spring Framework can affect hundreds of millions of systems.

No Existing Solution

Before AI approaches like Opus 4.6, there was no realistic way to audit the entire open-source ecosystem. You could run fuzzing. You could run static analysis. You could fund security audits for critical projects. But comprehensive scanning of millions of projects for logic-level vulnerabilities? Impossible.

AI changes that equation. It makes large-scale auditing possible.

The Technology Behind the Breakthroughs

Understanding what makes Opus 4.6 effective requires understanding advances in AI reasoning capabilities.

Reasoning in Large Language Models

Earlier AI models were good at pattern matching but struggled with deep reasoning. They could identify that code looked similar to past vulnerabilities, but they couldn't reason about why it was vulnerable.

Recent advances in Anthropic's research focus on improving reasoning capabilities. Opus 4.6 represents a leap in the model's ability to think step-by-step about complex logic.

Instead of making a binary judgment, the model can reason: "This function does X. That leads to Y. Which means Z is possible. Which would allow an attacker to..." This step-by-step reasoning is vastly more powerful than pattern matching.

Code Understanding

Opus was trained on enormous amounts of code and security research. Not just Python or JavaScript, but real code in dozens of languages. This training creates a deep understanding of how code actually behaves, what common patterns mean, and how vulnerabilities typically manifest.

This is different from tools trained on static analysis rules. It's more like a security researcher who has read thousands of real codebases and understands the patterns.

Multi-Dimensional Reasoning About Logic

The model can reason simultaneously about:

- Data flow: How does data move through the code?

- Control flow: What paths can execution take?

- Intent: What is the code supposed to do?

- Edge cases: What cases might the developer not have considered?

- Exploit paths: How would an attacker leverage this?

This simultaneous reasoning across multiple dimensions is what lets it find vulnerabilities that single-purpose tools miss.

Continuous real-time analysis and developer-integrated tools are expected to have the highest impact on the future of vulnerability detection. Estimated data based on current trends.

Implications for Security Teams

What does this mean for your organization's security practices?

Shifting Security Paradigms

This represents a fundamental shift in how we approach vulnerability detection. For decades, the model was: write rules, deploy tools, review alerts. Anthropic's approach is: use AI reasoning to identify actual problems, validate them, and report high-confidence findings.

This shift has massive implications. It means your security team's time is better spent on actual remediation rather than alert triage. It means more vulnerabilities get found that your current tools miss. It means the balance between security and development speed might actually improve.

Resource Implications

If AI can do security work that previously required hiring security researchers or funding expensive audits, it changes the economics. Small teams and under-resourced projects can now access vulnerability detection capabilities previously available only to large enterprises.

This democratizes security. Projects that couldn't afford audits can now leverage AI analysis. Organizations can extend security scanning to their entire dependency tree rather than just critical projects.

Urgency of Patching

As AI tools proliferate, both defenders and attackers gain access to better vulnerability detection. This shortens the window between vulnerability discovery and exploitation. Patching processes that took weeks might need to become days. Your incident response capabilities might need to accelerate.

The Attack Landscape Shift

While AI helps defenders, it also affects attackers.

Both Sides Get Better Tools

The same AI capabilities that help Anthropic find vulnerabilities will eventually be available to attackers. As AI reasoning capabilities become commoditized, malicious actors will use them for reconnaissance and exploit development.

This creates pressure: defenders need to patch vulnerabilities faster than attackers can find and exploit them. The window of vulnerability shrinks.

Supply Chain Targeting

Attackers have long targeted open-source libraries as high-value targets. Compromising a popular library gives access to millions of systems. As AI makes finding vulnerabilities in these libraries easier for defenders, attackers will focus harder on finding zero-days (vulnerabilities unknown to vendors) or on supply chain attacks that don't require finding new vulnerabilities.

Vulnerability Markets

The economics of vulnerability discovery are changing. If AI can find vulnerabilities at scale, the information value of individual vulnerabilities decreases. Bug bounty programs might need to adjust their economics. Vulnerability markets might contract as supply increases.

Remaining Challenges and Limitations

This technology is powerful, but it's not a silver bullet.

False Negatives

Opus 4.6 finds many vulnerabilities, but it won't find everything. Some vulnerabilities are deeply subtle or require understanding of external systems the model doesn't have. The model's accuracy is very high on vulnerabilities similar to those in its training data, but it might miss entirely novel vulnerability classes.

Security teams need to understand that AI findings are broad but not exhaustive. You still need defense in depth.

False Positives

While AI reduces false positives compared to static analysis, some findings will be non-exploitable or based on incorrect reasoning about the code. Security teams need to validate AI findings before treating them as confirmed vulnerabilities.

The good news: Anthropic has been working with maintainers, and the fact that patches are landing suggests the reported findings are holding up. But validation is still essential.

Knowledge of External Systems

Opus understands code logic, but it might not understand how external systems behave. If a vulnerability requires specific knowledge about database behavior, API semantics, or deployment configurations, the model might miss it or misassess its severity.

The Moving Target Problem

As AI tools improve at finding vulnerabilities, codebases will improve at avoiding them. Developers will learn patterns that AI tools flag and will develop better practices. The arms race continues.

Fuzzing excels at detecting buffer overflows and memory corruption but struggles with logic flaws and SQL injection. Static analysis offers moderate detection across all types but lacks context understanding. Estimated data based on typical tool performance.

Implementation: Using AI for Vulnerability Detection

If you want to implement AI-based vulnerability detection in your organization, what does that look like?

Where to Start

Begin with your most critical dependencies. Which open-source libraries is your system most dependent on? Which ones have the most exposure if compromised? Run analysis on those first.

For truly critical software, you might request analysis directly from teams working on AI-based vulnerability detection. Some research teams offer this service.

Integration with Existing Security Programs

AI findings should integrate with your existing vulnerability management program. Combine them with fuzzing results, static analysis, and human code review. Each approach catches different things.

Don't replace your existing tools. Augment them.

Validation Processes

Develop a process for validating AI findings:

- Review the AI's reasoning (is it sound?)

- Attempt to reproduce the vulnerability in your environment

- Assess exploitability in your deployment context

- Determine patch urgency

- Coordinate remediation

This process is lighter weight than a full security audit but heavier than accepting AI findings uncritically.
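
The steps above can be tracked with something as small as an ordered checklist per finding. This is one possible sketch, not a prescribed workflow; the step names and the `FindingReview` class are invented here to mirror the list.

```python
from dataclasses import dataclass, field

# Step names mirror the validation list above, in order.
VALIDATION_STEPS = (
    "review_reasoning",
    "reproduce",
    "assess_exploitability",
    "determine_urgency",
    "coordinate_remediation",
)


@dataclass
class FindingReview:
    finding_id: str
    completed: list = field(default_factory=list)

    def next_step(self):
        """Return the next pending step, or None when all are done."""
        if len(self.completed) == len(VALIDATION_STEPS):
            return None
        return VALIDATION_STEPS[len(self.completed)]

    def complete(self, step: str) -> None:
        """Mark a step done; steps must be completed in order."""
        expected = self.next_step()
        if step != expected:
            raise ValueError(f"expected step {expected!r}, got {step!r}")
        self.completed.append(step)

    @property
    def confirmed(self) -> bool:
        return self.next_step() is None


review = FindingReview("F-1")
review.complete("review_reasoning")
print(review.next_step(), review.confirmed)
```

Enforcing the order is the useful part: nobody gets to schedule remediation for a finding that was never reproduced.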

Sharing Findings

If your analysis uncovers vulnerabilities in open-source projects you use, consider responsible disclosure. Notify maintainers. Give them time to patch. The entire ecosystem benefits when vulnerabilities are fixed.

The Window of Opportunity Anthropic Describes

Anthropic warned that "this is a moment to move quickly." What does that mean?

The Current Advantage Asymmetry

Right now, AI is generally more accessible to security defenders than to attackers. Anthropic has published research. Security teams can leverage these insights. But this advantage is temporary.

As AI capabilities spread, attackers gain access to the same tools. The asymmetry disappears. When both sides have access to AI-powered vulnerability detection, the advantage becomes less about detection and more about speed of response.

Scaling Patch Development

The real bottleneck isn't finding vulnerabilities. It's developing and deploying patches. If AI can find 500 vulnerabilities but projects need months to patch them all, the window doesn't help.

Organizations need to think about how they'd respond to discovering hundreds of vulnerabilities in critical projects simultaneously. Can your team handle that volume? Do you have processes for coordinated patching across your infrastructure?

Building Vulnerability Response Capacity

The smart move now is building the capability to respond to large numbers of vulnerabilities when they're discovered. Developing patch testing procedures. Building automation for deployment. Creating processes for coordinated responses.

Because when the easy part (finding vulnerabilities) becomes widely available, the hard part (fixing them) becomes the bottleneck.

Future of Vulnerability Detection

Where does this go from here?

Continuous Real-Time Analysis

We'll likely see a shift from periodic scanning to continuous monitoring. Instead of running vulnerability detection once a quarter, it runs constantly. Every commit is analyzed. Every dependency update is checked. Real-time feedback to developers about introducing code patterns that AI flags as potentially vulnerable.

Developer-Integrated Tools

Instead of tools that run in your security infrastructure, expect to see AI vulnerability detection integrated into developer workflows. Developers get feedback as they write code. "This pattern is similar to known vulnerabilities in..." with suggestions for safer approaches.

This shifts security left. Problems get caught before they're committed, not months later during scanning.

Multi-Language and Framework Specialization

Opus 4.6 works across languages, but future tools might specialize. A model trained specifically on Python security vulnerabilities, another specializing in JavaScript, another in compiled languages. Specialization often beats generalization.

Automated Patch Generation

The next frontier: not just detecting vulnerabilities but generating patches automatically. If an AI understands why something is vulnerable, can it suggest how to fix it? Early experiments suggest yes. Imagine committing code and getting not just a "vulnerability found" alert but a suggested patch.

Integration With Bug Bounty Programs

Bug bounty platforms will likely integrate AI tools. Researchers use AI to augment their manual hunting. Programs use AI to validate findings and prioritize reports. The economics of bug bounties shift as AI participation spreads.

Opus 4.6 assigns high confidence scores to SQL Injection and Buffer Overflow vulnerabilities, indicating strong pattern recognition and reasoning capabilities. Estimated data.

Ethical and Strategic Considerations

This technology raises important questions beyond just "can we do this?"

Open-Source Maintenance Burden

If AI finds hundreds of vulnerabilities in a project, but the maintainers are volunteers with limited time, have we actually helped? Or have we created a backlog of work they can't possibly handle?

Responsible deployment means considering the impact on the people who maintain critical software. It means potentially pairing vulnerability discovery with maintenance resources and support.

Economic Implications

If vulnerability detection becomes commoditized through AI, the economic model for security research changes. Bug bounty programs might become less valuable. Security researchers face pressure on salaries and job availability.

This isn't necessarily bad, but it's worth thinking about. Do we want security research driven by profit incentives or by access to AI tools?

The Arms Race

As both defenders and attackers gain access to AI tools, the arms race accelerates. Faster exploit development. Faster patch cycles. More sophisticated attacks targeting the AI tools themselves.

We're entering a new phase of security dynamics where speed and scale matter more than they ever have.

Comparison: AI Vulnerability Detection vs. Traditional Methods

Let's put this in perspective.

| Method | Speed | Accuracy | Scale | Cost | False Positives |
|---|---|---|---|---|---|
| Manual Code Review | Slow (weeks/months) | Very High | Very Limited | Very High ($$$) | Very Low |
| Fuzzing | Moderate (days) | Moderate | Limited | Moderate | Moderate |
| Static Analysis | Very Fast (hours) | Moderate | Good | Low | High |
| AI Reasoning (Opus 4.6) | Fast (days) | High | Excellent | Low | Low |
| Combination Approach | Moderate | Very High | Excellent | Moderate | Very Low |

The combination approach wins for most organizations. Use AI for initial scanning and reasoning, validate with fuzzing for specific components, supplement with targeted manual review for critical components.
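To make the combination idea concrete, here is a minimal sketch of merging findings from several methods and ranking them by how many methods agree. The tool names, the `(file, line, kind)` finding shape, and the `merge_findings` helper are all hypothetical and chosen for illustration. Real tools emit richer reports that would need normalizing first.

```python
from collections import defaultdict


def merge_findings(reports):
    """Group findings from several tools by (file, line, kind).

    `reports` maps a tool name to a list of (file, line, kind) tuples.
    Returns (finding, sorted tool names) pairs, most corroborated first.
    """
    seen = defaultdict(set)
    for tool, findings in reports.items():
        for finding in findings:
            seen[finding].add(tool)
    # Sort by number of agreeing tools (descending), then by finding for stable output.
    return sorted(
        ((finding, sorted(tools)) for finding, tools in seen.items()),
        key=lambda item: (-len(item[1]), item[0]),
    )


if __name__ == "__main__":
    # Hypothetical sample data, not real scan output.
    reports = {
        "ai_review": [("parser.c", 120, "buffer-overflow"), ("auth.py", 44, "logic-flaw")],
        "fuzzing": [("parser.c", 120, "buffer-overflow")],
        "static_analysis": [("parser.c", 120, "buffer-overflow"), ("util.c", 9, "unused-variable")],
    }
    for finding, tools in merge_findings(reports):
        print(finding, tools)
```

A finding that several methods report independently is a reasonable candidate for the front of the queue. A finding reported by only one method is not necessarily a false positive, but it may deserve targeted manual review before anyone acts on it.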

How Your Organization Should Respond

If you work in security, DevOps, or infrastructure, here's what you should be thinking about.

Assessment

First, assess your current state. What's your vulnerability detection coverage? What tools are you using? What does your patch cycle look like? How would you respond if someone found 50 new vulnerabilities in critical dependencies tomorrow?

Honestly. Not hypothetically.

Dependency Audit

When AI tools become available for your stack (Python, JavaScript, Java, Go, whatever you use), run them across your entire dependency tree. Not just direct dependencies but transitive ones. Get a baseline of what's currently vulnerable in your infrastructure.

That baseline might be uncomfortable. That's good. Uncomfortable facts drive change.
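If the difference between direct and transitive dependencies feels abstract, this small sketch shows how to enumerate everything reachable from your application. The dependency graph is a hand-written, hypothetical example. In practice you would build it from your package manager's lockfile or resolver output.

```python
from collections import deque


def transitive_dependencies(graph, root):
    """Return every package reachable from `root`, excluding `root` itself.

    `graph` maps a package name to the list of packages it depends on.
    Breadth-first traversal; the `seen` set also protects against cycles.
    """
    seen = set()
    queue = deque(graph.get(root, []))
    while queue:
        pkg = queue.popleft()
        if pkg in seen or pkg == root:
            continue
        seen.add(pkg)
        queue.extend(graph.get(pkg, []))
    return seen


if __name__ == "__main__":
    # Hypothetical dependency graph for illustration only.
    graph = {
        "app": ["web", "db"],
        "web": ["http", "json"],
        "http": ["tls"],
        "db": ["tls"],
        "tls": [],
    }
    everything = transitive_dependencies(graph, "app")
    direct = set(graph["app"])
    print("direct:", sorted(direct))
    print("transitive only:", sorted(everything - direct))
```

The packages in the "transitive only" set are the ones nobody on your team explicitly chose, which is why they are the easiest to leave out of an audit.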

Process Development

Develop processes for responding at scale. Automated testing of patches. Coordinated deployment strategies. Communication templates for when vulnerabilities need urgent action.

Do this before you need it. Building processes under pressure is how mistakes happen.
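One piece of responding at scale is deciding what to patch first. The sketch below ranks a backlog with a deliberately simple scoring rule. The `Finding` fields, the severity weights, and the scoring formula are assumptions made up for this example, not an established standard. Substitute whatever risk model your organization already uses.

```python
from dataclasses import dataclass

# Hypothetical weights; tune these to your own risk model.
SEVERITY_WEIGHT = {"critical": 4, "high": 3, "medium": 2, "low": 1}


@dataclass(frozen=True)
class Finding:
    package: str
    severity: str          # one of the SEVERITY_WEIGHT keys
    internet_facing: bool  # is the affected service exposed externally?
    patch_available: bool  # can we fix it today?


def priority(finding):
    """Higher score means patch sooner."""
    score = SEVERITY_WEIGHT[finding.severity]
    if finding.internet_facing:
        score *= 2  # exposure doubles the urgency
    if finding.patch_available:
        score += 1  # small bump for fixes that are ready to apply
    return score


def triage(findings):
    """Return findings ordered from most to least urgent."""
    return sorted(findings, key=priority, reverse=True)


if __name__ == "__main__":
    backlog = [
        Finding("imagelib", "low", True, True),
        Finding("cryptolib", "critical", False, False),
        Finding("httpserver", "high", True, True),
    ]
    for f in triage(backlog):
        print(priority(f), f.package)
```

The exact numbers matter less than having the rule written down and agreed on before a flood of findings arrives.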

Staying Current

This technology landscape is moving fast. Subscribe to research from teams like Anthropic. Follow vulnerability research. Understand the tools and techniques. Your security approach from three years ago isn't adequate for three years from now.

Tools and Platforms Emerging From This Shift

Various platforms are building on AI vulnerability detection capabilities.

Specialized Security Research Platforms

Companies are emerging that focus specifically on AI-powered security analysis. Some offer API access to models trained specifically on vulnerability detection. Others provide specialized tools for specific languages or frameworks.

Integration With Development Platforms

GitHub, GitLab, and other development platforms are integrating AI-based security analysis into their offerings. Push code, get vulnerability analysis in your CI/CD pipeline.

Bug Bounty Platform Enhancements

Bug bounty platforms are adding AI tools to their researcher toolkits. Automated analysis to help human researchers find vulnerabilities faster.

Automated Patch Services

Some vendors are experimenting with automated patch generation and deployment. Find a vulnerability, generate a patch, test it, potentially deploy it automatically.

This is still emerging, but the direction is clear.

The Bigger Picture: AI's Role in Security

Vulnerability detection is just the beginning. AI is reshaping security across the board.

Threat Detection and Incident Response

AI helps spot anomalies that indicate breaches. Unusual network traffic patterns. Behavioral changes in user accounts. Log analysis at scale. Attack detection has become an AI problem because humans can't process that much information.
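For a sense of what anomaly spotting means at its simplest, here is a sketch that flags values far from a historical baseline using a z-score. This is a basic statistical technique used as a stand-in, not the method any particular AI product uses. The login counts and the threshold of 3.0 are hypothetical.

```python
from statistics import mean, stdev


def anomalies(baseline, observed, threshold=3.0):
    """Return (index, value) pairs in `observed` that deviate strongly from `baseline`.

    A value is flagged when it lies more than `threshold` sample standard
    deviations from the baseline mean. `baseline` needs at least two values.
    """
    mu = mean(baseline)
    sigma = stdev(baseline)
    if sigma == 0:
        # A perfectly flat baseline: anything different is unusual.
        return [(i, v) for i, v in enumerate(observed) if v != mu]
    return [(i, v) for i, v in enumerate(observed) if abs(v - mu) / sigma > threshold]


if __name__ == "__main__":
    # Hypothetical hourly login counts for one account.
    baseline = [10, 12, 11, 9, 10, 11, 12, 10]
    observed = [11, 10, 48, 12]
    print(anomalies(baseline, observed))
```

Production systems work with far more signals than a single count, but the underlying question is the same: how different is this from what normal looks like?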

Threat Intelligence

AI models can process feeds from thousands of sources and synthesize threat intelligence. What's currently being exploited? What's trending in attack techniques? What's the likely next target?

Adversarial Prediction

AI can help predict how attackers would approach specific systems. What's the likely attack path? What's the highest-value target? What are the weak points?

Vulnerability detection is the foundation layer, but it's part of a much larger transformation.

Conclusions: The New Reality

Claude Opus 4.6 finding 500+ vulnerabilities in open-source libraries isn't just a research milestone. It's a signal that the security landscape is shifting fundamentally.

For defenders, this is an opportunity. Tools now exist to audit codebases at scales previously impossible. Vulnerable software can be fixed faster. The open-source ecosystem can be made more secure.

But the window for advantage is finite. As these tools become available to everyone, both defenders and attackers move faster. Patch cycles need to compress. Response processes need to mature. Organizations need to be ready to handle vulnerability floods, not trickles.

For open-source maintainers, this creates both opportunity and burden. Opportunity to finally understand what's wrong with their code. Burden to fix it when thousands of issues surface. The infrastructure to support that matters.

For organizations, the practical implication is clear: your current vulnerability detection and patch processes probably aren't ready for what's coming. Building readiness now, while the tools are still emerging, is the strategic move.

The future of security is AI-assisted detection at scale. We're seeing the beginning of that shift right now.

FAQ

What makes Claude Opus 4.6 different from traditional security tools?

Opus 4.6 uses AI reasoning to understand code logic rather than brute-force testing or pattern matching. It can recognize vulnerability patterns similar to past exploits and reason about specific inputs that would cause failures, without requiring custom prompts or specialized tooling. This allows it to find logic-level vulnerabilities that fuzzing and static analysis tools commonly miss.

How does AI reasoning about code work in practice?

The model analyzes code to understand its intended purpose, traces data and control flow, identifies patterns from previously discovered vulnerabilities, and reasons about whether specific exploit paths are possible. It essentially thinks like a human security researcher but processes code at machine speed. It learns from thousands of past vulnerabilities to recognize similar patterns in new code.

Can AI vulnerability detection replace traditional security tools?

No. AI findings should augment, not replace, existing security programs. Different tools catch different vulnerability types. The most effective approach combines AI reasoning, fuzzing, static analysis, and targeted manual review. Each method has strengths and weaknesses. Using multiple approaches catches more vulnerabilities with fewer false positives.

Why is the window of opportunity that Anthropic mentions limited?

As AI vulnerability detection tools become widely available, both defenders and attackers gain access to similar capabilities. The advantage asymmetry shrinks. The real advantage now is for organizations that move fast to patch vulnerabilities before attackers can exploit them. Once the tools are democratized, speed of response becomes more important than detection capability.

How should organizations prepare for this shift in vulnerability detection?

Start by assessing your current vulnerability detection coverage and patch cycle capabilities. Audit your dependencies with emerging AI tools when available. Develop processes and automation for responding at scale to large numbers of vulnerabilities. Build internal expertise around AI-assisted security. Most importantly, don't wait. Competitive advantage comes from being prepared before this becomes mainstream.

What happens to open-source maintainers when they receive hundreds of vulnerability reports?

This is a legitimate concern. The responsible approach involves not just reporting vulnerabilities but providing support for maintainers to fix them. Some organizations are pairing vulnerability disclosure with resources or patches. Projects need to scale their maintenance capacity or get external support to handle large volumes of findings responsibly.

Looking Forward: Integrating AI Into Your Security Practice

If you're considering how to leverage these advances, Runable offers AI-powered automation capabilities that can help streamline security workflows. From automated vulnerability report generation to coordinated patch documentation, platforms like Runable enable security teams to create reports, presentations, and documentation at scale—critical when managing hundreds of vulnerabilities simultaneously.

Use Case: Automatically generate vulnerability assessment reports and remediation dashboards from AI findings, saving hours of documentation work when managing large-scale vulnerability discovery.

The future of security is here. It's faster, more comprehensive, and demands better processes and faster response. Organizations that prepare now will be ready for what's coming.

Key Takeaways

- Claude Opus 4.6 discovered 500+ high-severity vulnerabilities in open-source code using AI reasoning instead of traditional fuzzing techniques.

- AI reasoning identifies logic-level vulnerabilities that escape detection by static analysis and fuzzing tools by understanding code intent and patterns.

- The technology works without custom prompting or specialized tooling, making advanced vulnerability detection accessible to organizations without security teams.

- Open-source projects with limited maintenance resources now have access to security analysis capabilities previously available only through expensive professional audits.

- The advantage window for defenders is finite—as these tools become available to attackers too, the focus shifts from detection capability to speed of response and patching.
