Realizing AI's True Value in Finance: A Strategic Implementation Guide

The finance function is at a crossroads. Artificial intelligence isn't a buzzword anymore—it's become essential infrastructure. But here's the uncomfortable truth: most finance teams are implementing AI without fully understanding how to extract its genuine value.

This is a pivotal moment. The technology is mature. The use cases are proven. Yet many organizations still treat AI adoption like a fire hose, spraying resources everywhere and hoping something works. The result? Expensive implementations that never deliver the promised ROI, frustrated teams, and executives asking why they invested millions in a tool that isn't delivering tangible results.

I've spent the last few years watching this play out across dozens of organizations. The pattern is always the same. Finance leaders see competitors using AI. They hear about massive efficiency gains. They get pressure from the board. Then they launch initiatives without a clear strategy for integration, governance, or measurement. And predictably, they get disappointed.

But it doesn't have to be this way. The finance teams that are actually winning with AI aren't doing anything revolutionary. They're simply following a different playbook. They're starting with clear business problems. They're building governance structures from day one. They're measuring everything. And they're being ruthlessly honest about what's working and what isn't.

This guide walks you through the exact approach that's delivering results. We'll cover the strategic shifts happening in finance right now, the operational reality of integrating AI without breaking your compliance frameworks, and the specific measurement strategies that separate hype from reality. If you're tired of hearing about AI's potential and want to understand how to actually deliver it in your finance function, this is for you.

TL;DR

- 99% of UK finance leaders now view AI as essential, with 85% already integrating it into core operations, according to Deloitte's report.

- AI succeeds when built on trust, visibility, and human oversight, not autonomous decision-making—78% of finance leaders have concerns about AI risks that require proper governance, as highlighted by Modern Healthcare.

- Trust and control aren't obstacles to AI value—they're prerequisites; organizations implementing proper oversight see faster adoption and better outcomes, as noted by EY's insights.

- Resilience matters more than raw capability: AI systems must adapt to regulatory changes, geopolitical shifts, and dynamic market conditions, as discussed in BCG's publication.

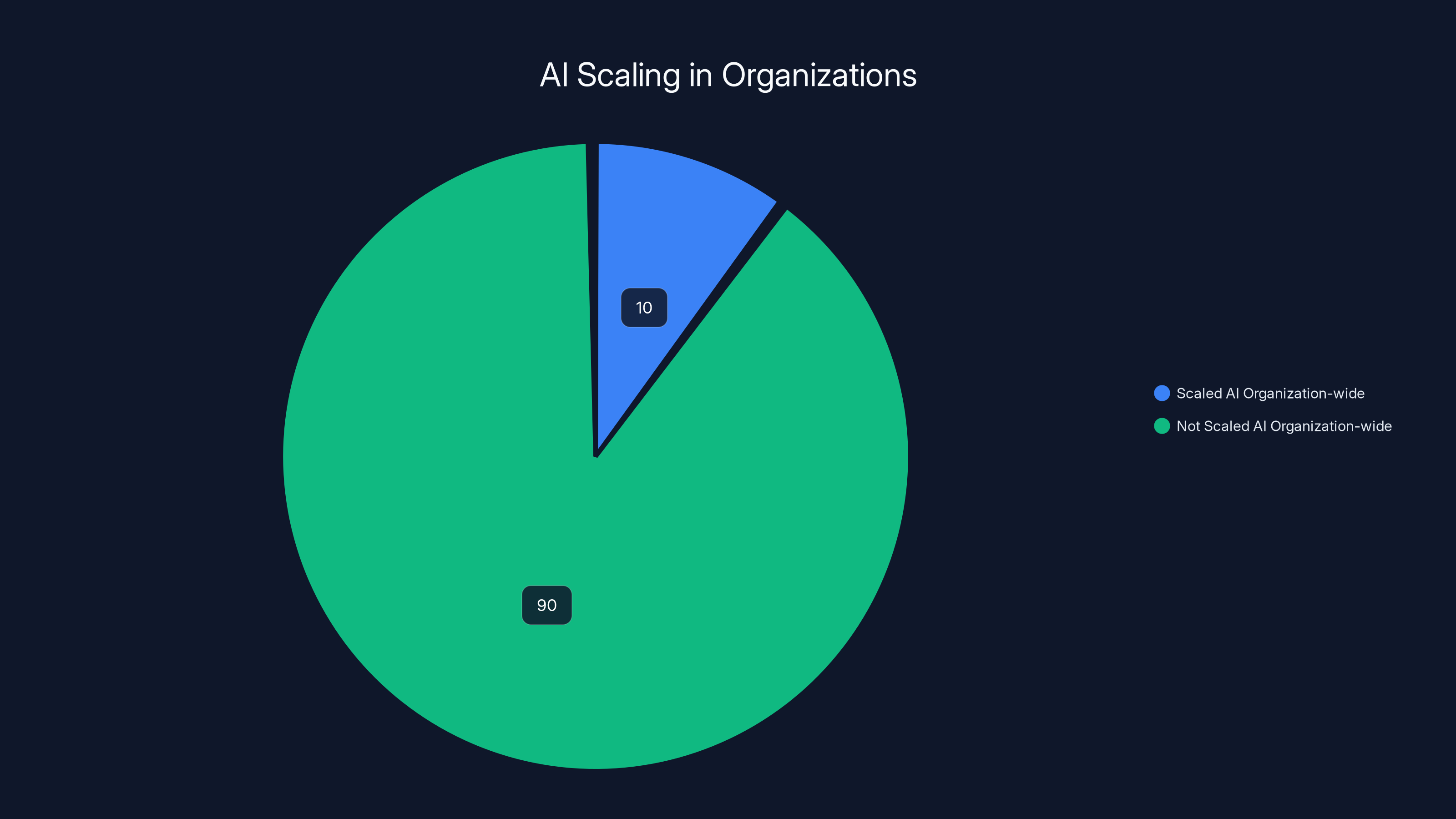

- Most organizations are in the optimization phase: Only 10% have scaled AI agents organization-wide, meaning deliberate, phased deployment is the smart approach, as reported by The CFO.

- Bottom line: Finance teams realizing AI's true value focus on measurable ROI, regulatory alignment, and sustainable scaling rather than aggressive experimentation.

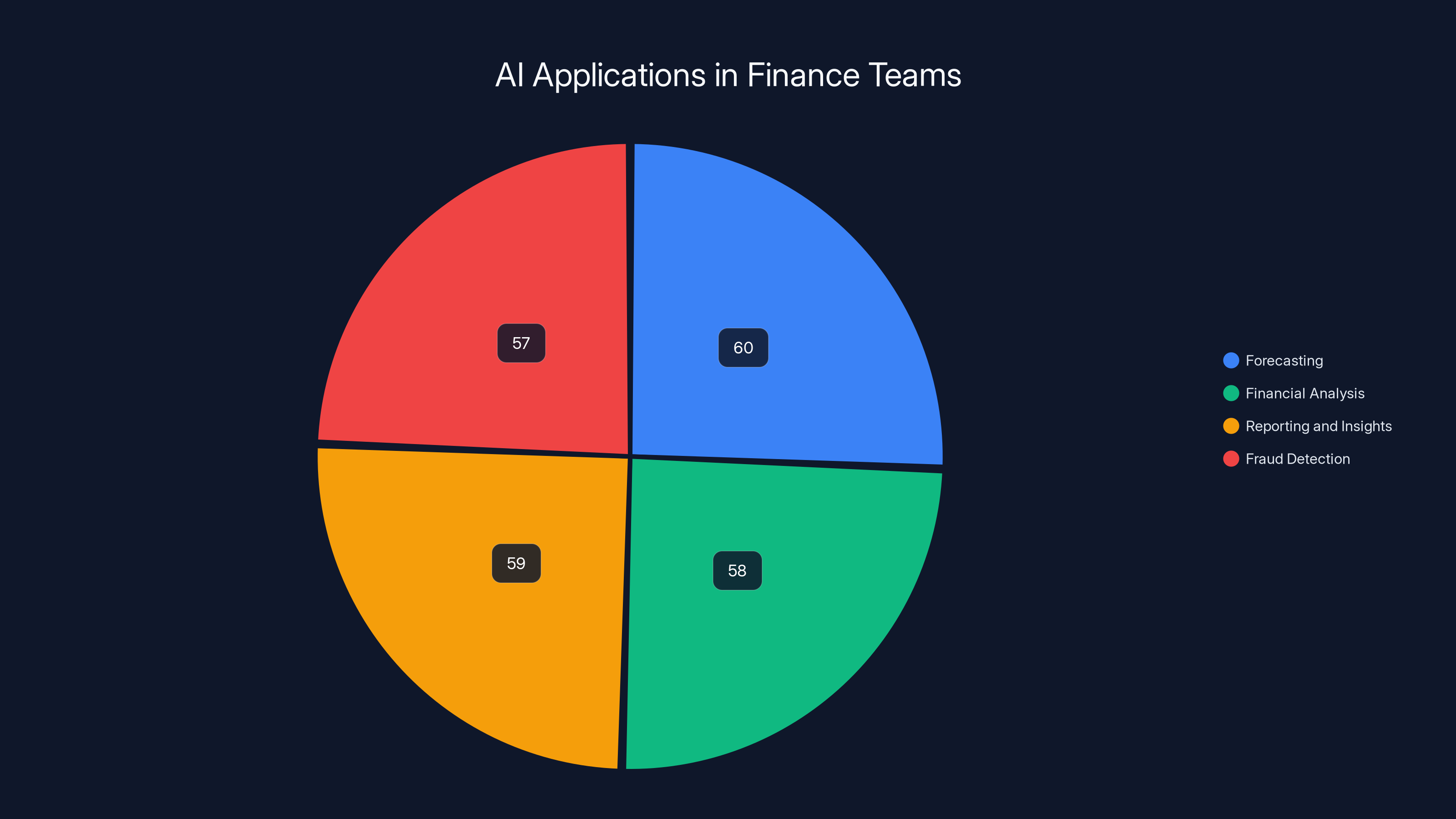

Forecasting is the most common AI application in finance, used by 60% of teams, followed closely by reporting and insights, financial analysis, and fraud detection. These applications are delivering measurable value.

The Current State: Finance's AI Reality Check

Let's start with what's actually happening, not what vendor marketing says is happening.

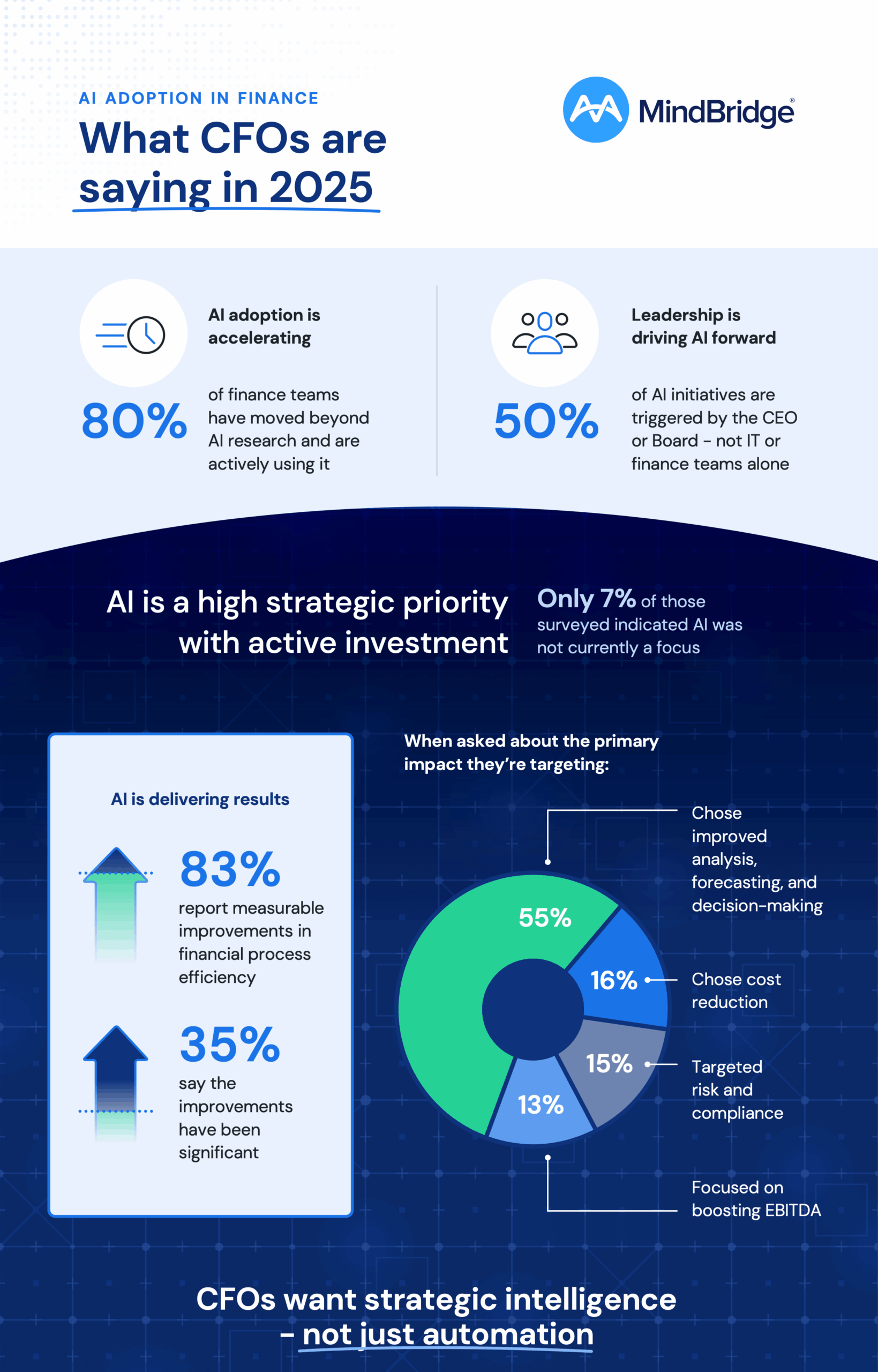

Finance teams have largely stopped experimenting with AI. The pilot phase is over. This year, organizations are moving into execution mode, and that shift changes everything about how you should think about implementation.

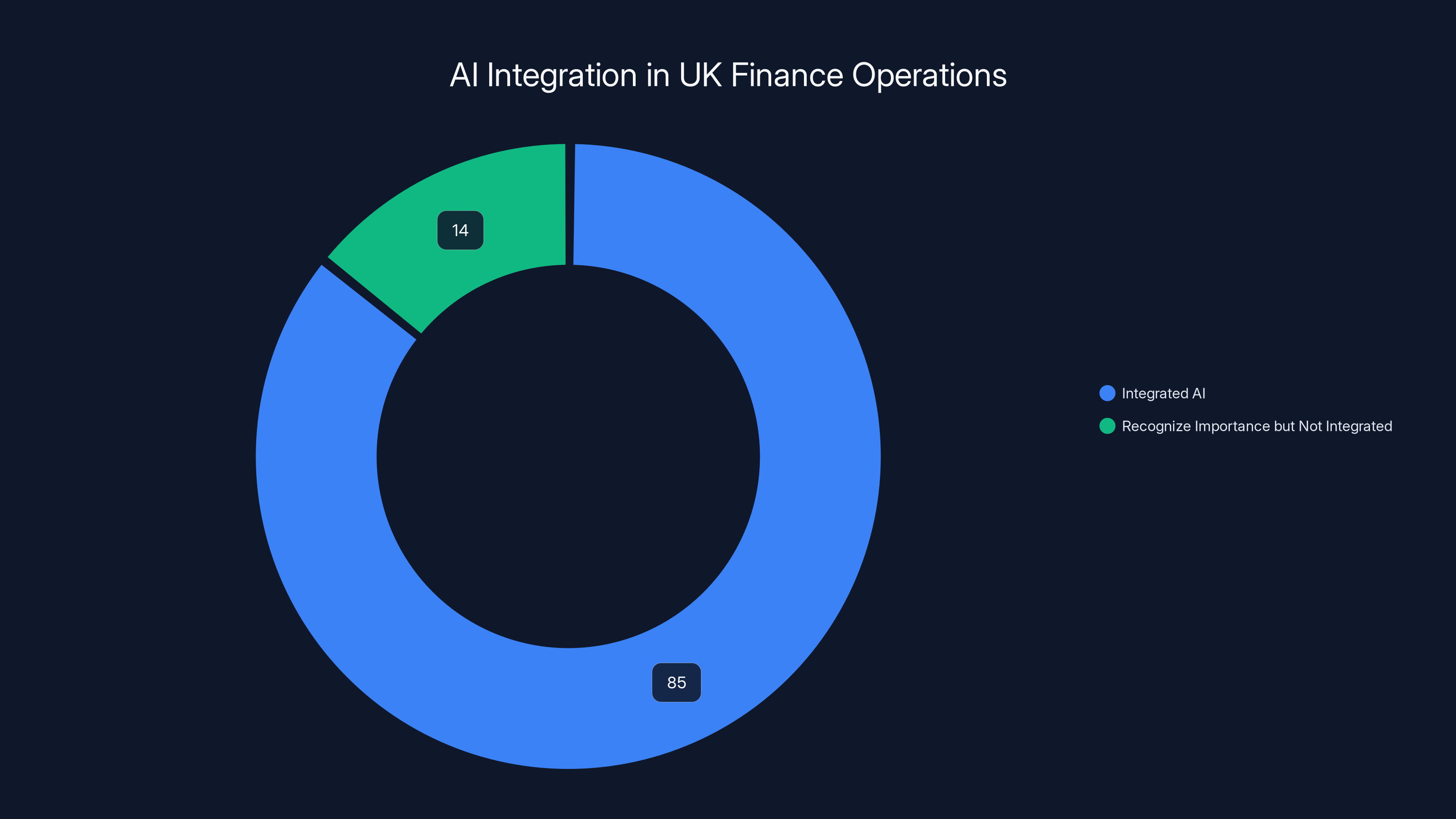

The data backs this up. Recent industry research shows that 99% of UK finance leaders consider AI essential to their operations. That's not hype. That's near-universal recognition that AI is now core infrastructure. But here's where it gets interesting: only 85% have actually integrated it into their workflows. That 14-point gap matters. It represents organizations that understand AI's importance but haven't figured out how to deploy it responsibly.

The adoption trend is accelerating. Investment in AI capabilities is expected to continue climbing through 2026 as organizations move beyond pilots into full-scale operations. But this isn't blind enthusiasm anymore. The market is maturing. Finance leaders are asking harder questions. They're demanding clarity on governance, risk mitigation, and measurable outcomes.

What's driving this shift? Several factors converge. First, the technology itself has become more accessible and reliable. Early AI implementations required teams of specialists. Now, modern platforms enable broader adoption across finance functions without needing AI PhDs embedded in every department. Second, the business case is undeniable. Automation of repetitive tasks saves time—lots of it. Better forecasting prevents costly mistakes. Fraud detection catches anomalies humans would miss. The value is real, not theoretical.

But there's a catch. Many AI projects still fall short of expectations. The reason isn't typically the technology itself. It's misalignment between implementation and business reality. Teams deploy AI without addressing regulatory complexity. They build systems on assumptions that don't hold when economic conditions shift. They create autonomous processes without maintaining the oversight necessary for auditable, compliant operations.

The organizations winning with AI right now aren't the ones moving fastest. They're the ones moving smartest. They're defining clear problems first. They're building governance into the implementation from day one. They're measuring outcomes continuously. And they're being honest about limitations.

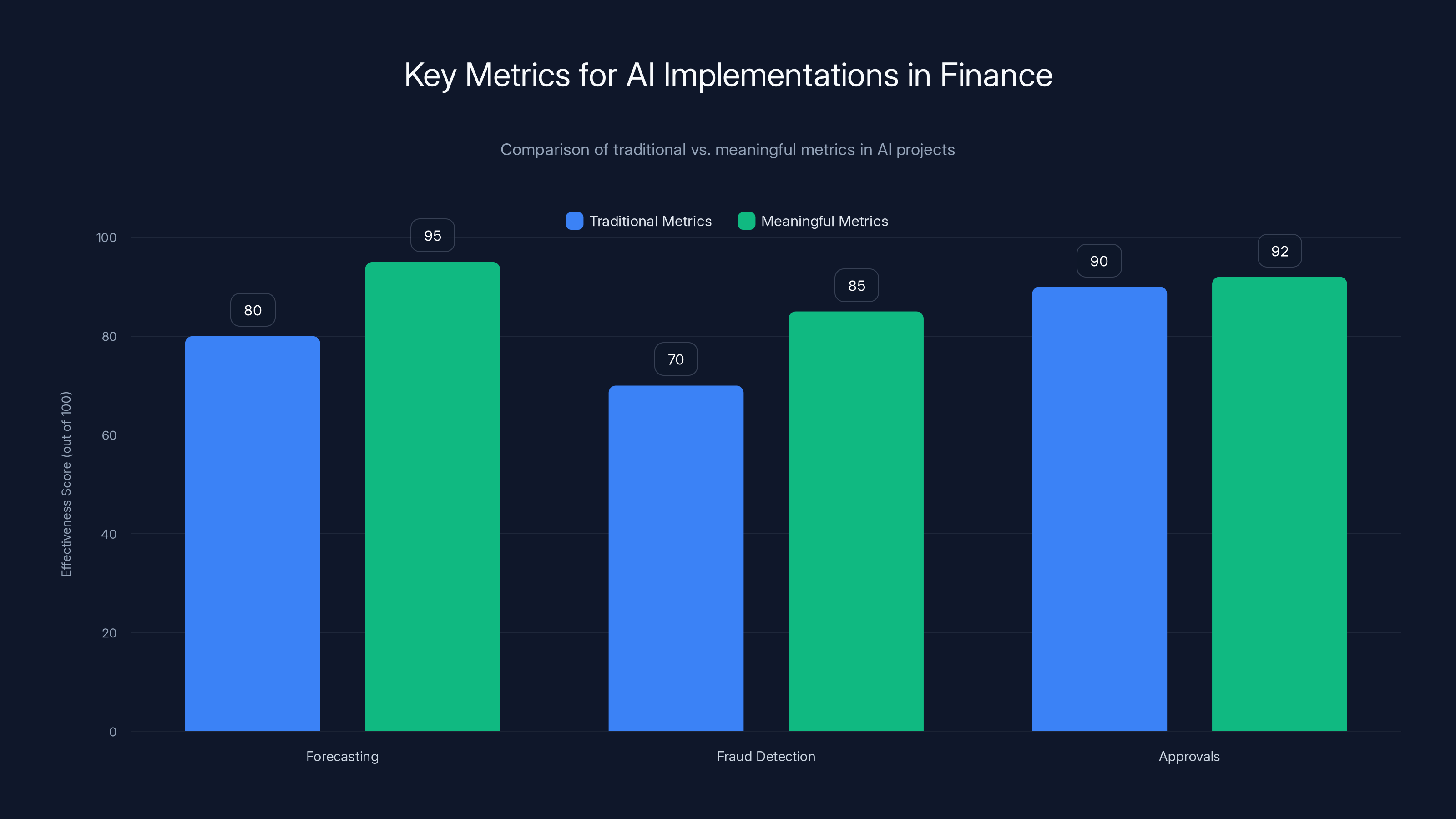

While traditional metrics focus on speed and volume, meaningful metrics emphasize accuracy and net impact, showing higher effectiveness in AI implementations. Estimated data.

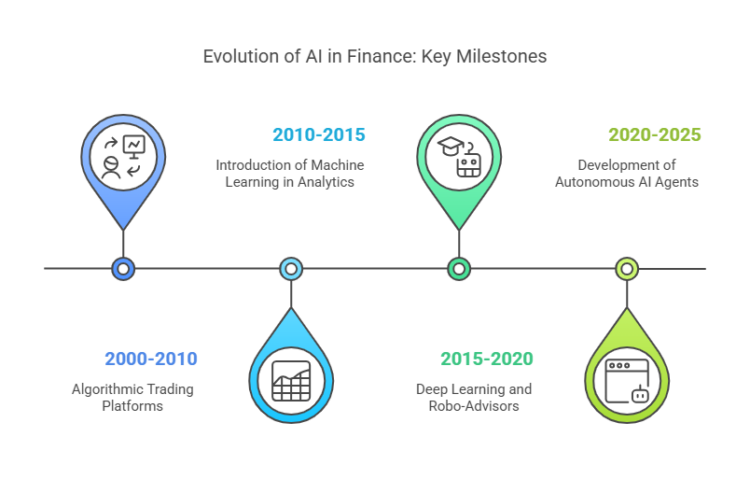

Why AI's Role in Finance Is Evolving

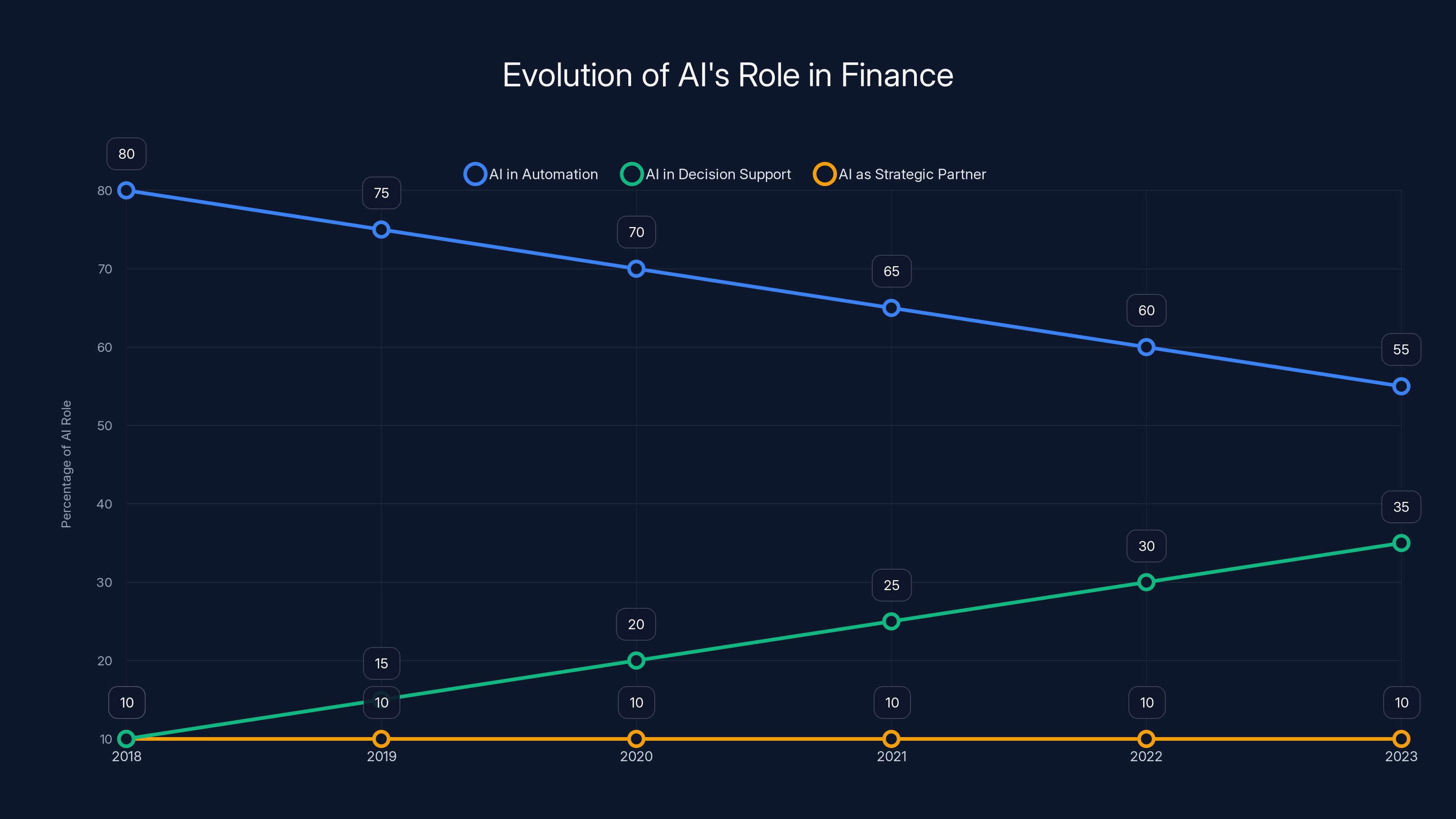

Three years ago, AI in finance meant automation. Simple, mechanical tasks. Invoice processing. Data entry. Rule-based decision triggers. That's still valuable—automation saves time and eliminates human error from repetitive work.

But the conversation has shifted. Finance leaders aren't just looking for faster execution of existing processes anymore. They're looking for strategic transformation. AI as a thinking partner, not just a task executor.

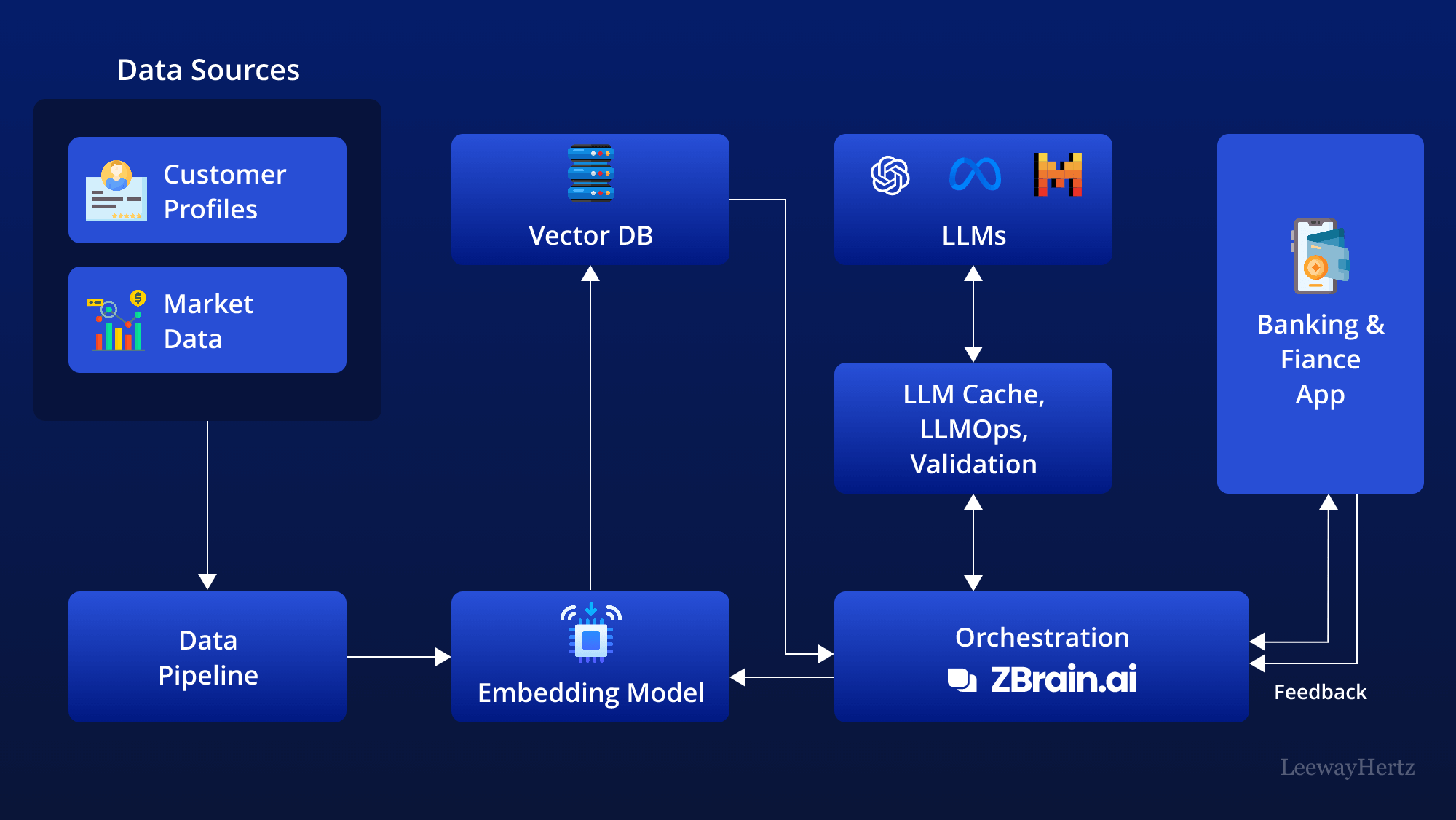

This evolution is driven by what modern AI can actually do. Earlier systems were narrow. They could handle one specific task well. Today's AI architectures can understand context, synthesize information across multiple datasets, and provide insights that would take human analysts weeks to uncover. That capability changes what's possible in finance.

Consider forecasting. Traditional financial forecasting relies on historical data, combined with human judgment and assumptions about future conditions. An experienced finance leader might take those inputs and produce a quarterly forecast in a few days. That's valuable, but it's limited by one person's analytical capacity and the time available.

Now imagine AI augmenting that process. The system ingests historical data, current market conditions, regulatory changes, supply chain signals, and macroeconomic indicators. It runs hundreds of scenario variations simultaneously. It flags assumptions that have shifted. It suggests where the traditional forecast might diverge from actual outcomes. Suddenly, the finance leader isn't replacing their judgment—they're extending it. The forecast improves not because AI is replacing human thinking but because it's amplifying it.

This is the evolution happening across finance functions. AI is moving from task automation to decision support to strategic partnership. The implications are significant.

When AI was purely automation, the value proposition was clear: faster, cheaper, fewer errors. Implementation was straightforward. Deploy the tool, measure the time saved, done.

When AI becomes strategic, everything becomes more complex. You're relying on the system's analytical judgment. You need confidence in its recommendations. You need to understand how it reached its conclusions. You need governance frameworks that work with AI systems, not just around them. You need to ensure that speed doesn't compromise the control necessary for auditable operations.

For finance, this evolution is crucial. Finance isn't a function where you can afford to move fast and break things. You need to move fast while maintaining precision, control, and compliance. That's the balance AI implementation must strike.

The organizations that understand this evolution are approaching AI differently than they did three years ago. They're not looking for the magic automation tool. They're building AI into their analytical infrastructure. They're asking how AI can help their best people work even better. And they're designing governance that accommodates AI's capabilities without sacrificing the control they need.

Trust and Visibility: The Foundation Everything Else Builds On

Here's something that might surprise you: the biggest barrier to AI value in finance isn't technical capability. It's trust.

Finance teams are fundamentally conservative, and for good reason. They're responsible for capital allocation, risk management, and regulatory compliance. You can't be cavalier about those functions. Yet many organizations approach AI adoption as if it requires abandoning caution and embracing blind automation. That framing is backwards and guaranteed to fail.

The reality is that finance teams realizing genuine value from AI aren't the ones trusting the system most—they're the ones who've figured out how to build trust systematically. That's a different thing entirely.

Consider what happens when you implement a forecasting system that runs autonomously, makes recommendations, and your team just accepts them. Sure, it's fast. But it's also a liability. When a recommendation turns out wrong, can you explain why? Do you understand the reasoning? If market conditions shift, does the system adapt? If it's trained on static data, what happens when assumptions change? These aren't just operational questions—they're audit questions. They're governance questions. And if you can't answer them clearly, you've created risk, not value.

Compare that to a different approach. Same AI forecasting system, but it's deployed as a recommendation engine. It produces forecasts, flags the assumptions driving them, and highlights where conditions might diverge from historical patterns. Human analysts review the recommendations. They adjust when needed. They understand the reasoning. When questioned, they can defend the forecast because they've actually engaged with the underlying logic. That's when trust works.

The data on this is compelling. Over half of finance teams say they need to review AI actions before they take effect. Nearly half say retaining decision-making control is essential. These aren't obstacles to overcome. They're requirements that should shape how you implement. Finance teams that design AI systems around these requirements see faster adoption and more sustainable value.

Visibility is the other part of this equation. You can't trust what you can't see. Yet many AI implementations are black boxes. The system makes a decision. You don't know why. That's fine for some use cases. For finance, it's a problem.

The finance teams with the healthiest AI implementations are the ones obsessing over visibility. They want to understand how the system reached its conclusions. They want traceability. They want to be able to point to a transaction and explain exactly what happened and why. That transparency isn't slowing them down—it's enabling them to scale more aggressively because they have confidence in what they're scaling.

Implementing visibility requires specific choices. It means selecting tools that explain their reasoning. It means building documentation of model inputs and assumptions. It means creating audit trails. It's not free—it requires time and attention. But it's the difference between AI that delivers sustainable value and AI that becomes a compliance liability.
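As a rough illustration of what "documentation of model inputs and assumptions" plus an audit trail could look like in practice, here is a minimal Python sketch. The record fields, the model version string, and the sample values are all hypothetical; a real implementation would follow your own data model and retention rules.

```python
import json
from dataclasses import dataclass, asdict, field
from datetime import datetime, timezone
from typing import Optional


@dataclass
class ForecastAuditRecord:
    """One traceable forecast: what went in, what was assumed, who reviewed it."""
    model_version: str
    inputs: dict
    assumptions: dict
    forecast: float
    reviewed_by: Optional[str] = None  # stays None until a human signs off
    created_at: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat()
    )


def to_audit_line(record: ForecastAuditRecord) -> str:
    """Serialize the record as one JSON line suitable for an append-only log."""
    return json.dumps(asdict(record), sort_keys=True)


# Hypothetical example values, for illustration only.
record = ForecastAuditRecord(
    model_version="forecast-v1",
    inputs={"period": "Q3", "history_months": 36},
    assumptions={"fx_rate": 1.27, "price_growth": 0.03},
    forecast=4_200_000.0,
)
audit_line = to_audit_line(record)
```

Writing one such line per forecast gives reviewers something concrete to point to when asked how a number was produced and whether anyone checked it.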

The organizations that get this right aren't moving slower than the ones chasing pure automation. They're moving differently. They're building control into the system rather than adding it after the fact. And that architectural choice makes all the difference in whether AI becomes genuinely valuable or just another expensive experiment.

Only 10% of organizations have successfully scaled AI agents organization-wide, highlighting the significant challenges in moving from pilot to production.

The Risk Perspective: Understanding Why Concerns Are Rational

Let's talk about the elephant in the room: AI risk in finance.

Three-quarters of UK finance leaders express some level of concern about AI risks. Most of that concern falls in the "somewhat concerned" category, which is rational. An organization implementing any major technology without any risk concerns is either naive or not taking governance seriously.

But here's what's important to understand: this concern isn't paralyzing adoption. It's shaping it. Mature organizations are using risk awareness to build better implementations, not as an excuse to delay.

What specific risks are finance leaders thinking about? The data gives us clarity. Inadequately regulated AI systems face exposure to malware and data integrity issues. That's real. Financial systems are targets. You're moving sensitive data and making capital allocation decisions. Attackers care about that. An AI system without proper security architecture becomes an attack surface, not just a tool.

Beyond security, there are operational risks. An AI system trained on historical data might make decisions based on patterns that no longer apply. Market conditions change. Regulatory requirements shift. If the system doesn't adapt, it becomes a liability. This is particularly acute for finance because conditions can shift rapidly. An economic shock changes everything the model was trained on.

There's also the governance risk. You implement AI. It makes recommendations. An employee acts on them without proper review. Something goes wrong. Now you're in front of a regulator trying to explain why you automated a decision without maintaining appropriate controls. That's not a theoretical risk—it's a real exposure organizations need to address.

These aren't reasons to avoid AI. They're reasons to implement it thoughtfully. The organizations managing risk effectively do several things:

First, they maintain human decision-making authority in high-stakes scenarios. AI augments judgment; it doesn't replace it in critical situations.

Second, they build governance structures that accommodate AI. That means documented processes, clear ownership, regular review, and the ability to trace decisions back to their reasoning.

Third, they invest in security. AI systems accessing financial data need the same security architecture you'd apply to any high-risk system.

Fourth, they monitor and measure continuously. What was true about the model when you deployed it might not be true six months later. You need systems that catch that drift.

Finance teams taking this approach aren't moving slower. They're building sustainable implementations that will be easier to scale because they're already audit-ready from day one. That's not a constraint—it's actually an acceleration because you're not dealing with cleanup and remediation later.

Governance as Enabler, Not Obstacle

Here's something counterintuitive that transforms how you think about AI implementation in finance: governance isn't the enemy of speed. Properly designed, it's the accelerant.

When finance leaders think about governance, they often imagine bureaucratic approval processes that slow everything down. Sign-offs on every decision. Committees that meet monthly. Procedures that require weeks to implement anything. That's a straw man. That's bad governance, not governance itself.

Effective AI governance actually looks different. It's clear rules established upfront that enable autonomous operation within defined parameters. It's transparency built into the system so decisions are automatically auditable. It's measurement and monitoring that tell you when something's wrong so you can course-correct quickly.

Consider approval processes. Traditional finance has them because you need oversight on significant decisions. Now imagine AI recommending actions within defined parameters. The governance question becomes: what parameters define safe operation? Once you've answered that, the AI can operate within them without approval on every action. You're not removing oversight; you're making it systematic and automated rather than manual.

This is why organizations with strong AI governance actually move faster. They've clarified where AI can operate autonomously versus where it needs human review. That clarity eliminates delays. Everyone knows the rules. The system knows the rules. Work flows efficiently.
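One way to picture "autonomous operation within defined parameters" is a small routing rule. The sketch below is an assumption about how such a rule might be coded, not a prescribed design; the threshold names and values (`max_auto_amount`, `min_confidence`) are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ApprovalPolicy:
    """Parameters that define where the AI may act without a human."""
    max_auto_amount: float  # largest amount eligible for automatic approval
    min_confidence: float   # lowest model confidence accepted without review


def route(amount: float, confidence: float, policy: ApprovalPolicy) -> str:
    """Return 'auto_approve' only when both limits are respected."""
    if amount <= policy.max_auto_amount and confidence >= policy.min_confidence:
        return "auto_approve"
    return "human_review"


# Hypothetical limits agreed by finance, audit, and compliance.
policy = ApprovalPolicy(max_auto_amount=5_000.0, min_confidence=0.90)

small_and_confident = route(1_200.0, 0.95, policy)
large_amount = route(20_000.0, 0.99, policy)
low_confidence = route(100.0, 0.50, policy)
```

Anything outside the agreed limits falls through to human review by default, so the oversight is systematic rather than dependent on someone remembering to check.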

Document what the AI system can do, what it can't do, what triggers human review, and how it will be audited. That clarity costs time upfront but saves enormous amounts later because nothing is surprising. There are no discoveries during audit that require expensive remediation. The system was built audit-ready from day one.

Implementing governance for AI in finance typically involves several components. First, you need to define the decision framework. What types of decisions can this system make? What are the limits? Under what conditions does human review trigger? These aren't vague guidelines—they're concrete rules coded into the system.

Second, you need measurement. How do you know the system is performing as expected? What metrics matter? How often will you review performance? Weekly? Monthly? This is where many organizations stumble. They implement AI without defining success criteria. They can't tell if it's working because they didn't define what working looks like.

Third, you need escalation procedures. What happens when something goes wrong? Who gets notified? What actions get taken? How do you ensure the organization learns from problems rather than just fixing them in isolation?

Fourth, you need documentation. This is unsexy and tedious, but it's critical. You need to be able to explain to regulators, auditors, and stakeholders exactly how the system works, what data it uses, and why it makes the decisions it does. That documentation needs to be current and accurate.

Fifth, you need regular governance reviews. AI systems change over time. Data distributions shift. You need regular checkpoints where you assess whether the governance framework is still adequate or needs adjustment.

Organizations implementing this approach aren't slowing down. They're typically scaling faster because they have stakeholder confidence. The CFO isn't nervous about the system because they understand it. The audit function isn't preparing questions because they're already included in the process. The compliance team isn't an obstacle because they've been part of the solution from the start.

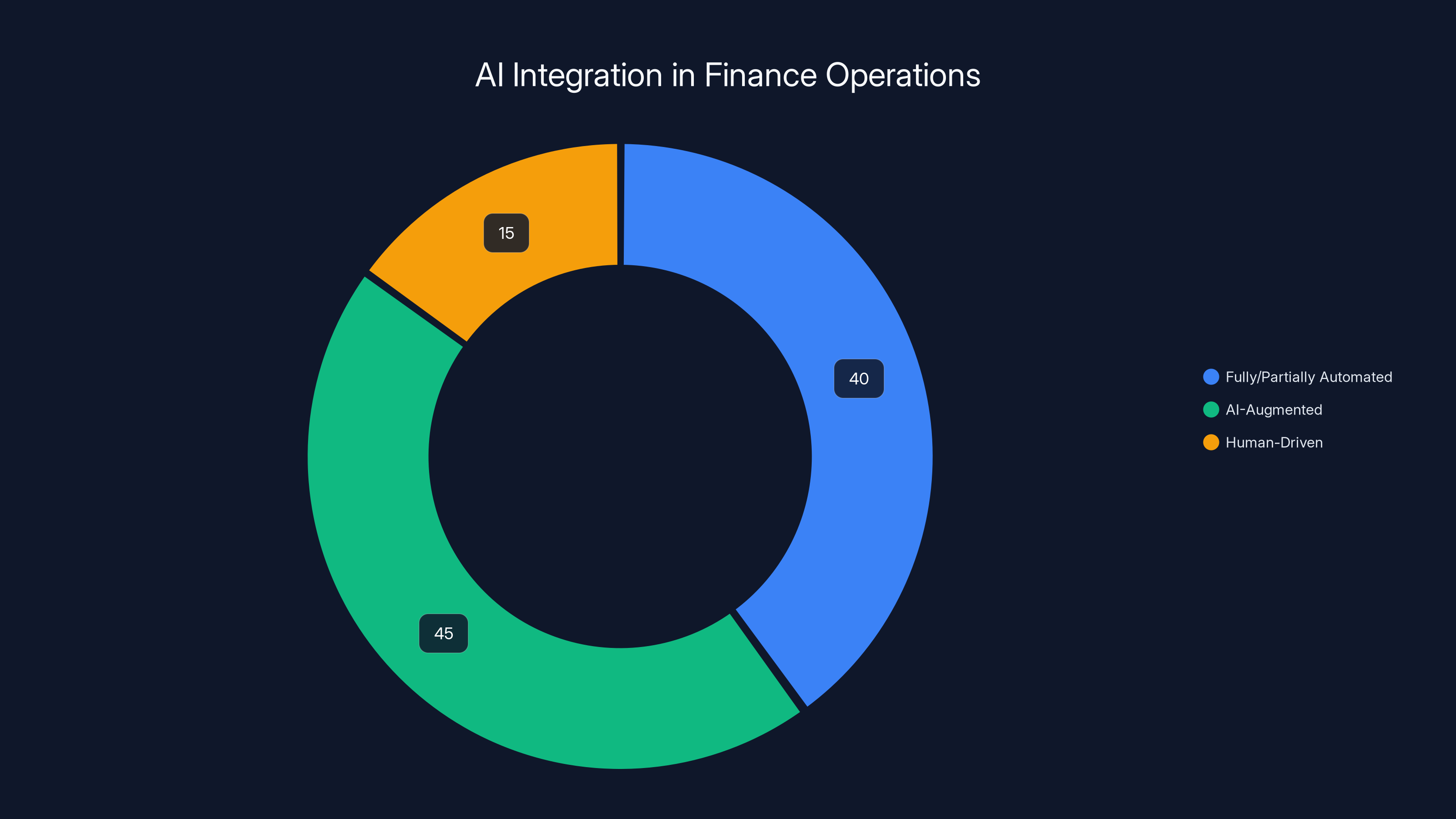

In most mature finance operations, 40% of processes are automated, 45% are AI-augmented, and 15% remain human-driven. (Estimated data)

Where Finance Teams Are Actually Using AI Today

Understanding where AI is being successfully deployed gives you a roadmap for where to focus.

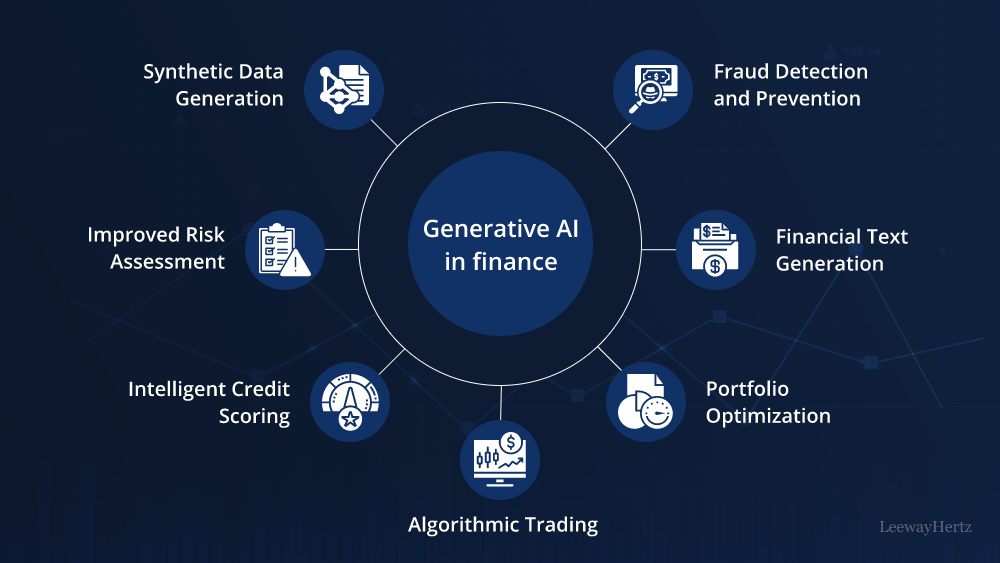

The most common AI applications in finance right now are forecasting (used by 60% of finance teams), reporting and insights (59%), financial analysis (58%), and fraud detection (57%). These aren't bleeding-edge use cases. They're proven applications delivering measurable value.

Forecasting is the marquee application, and for good reason. Creating financial forecasts is time-consuming and prone to systematic biases. Humans tend to anchor too heavily on recent history. They're prone to overconfidence. They struggle to incorporate multiple data sources simultaneously. AI excels at exactly what humans struggle with. It can weight dozens of data sources, identify patterns across complex datasets, and generate alternatives the human team might not have considered. The result is better forecasts that capture more perspectives.

The key implementation insight: AI forecasting works best when it's augmented forecasting. The system generates possibilities. Humans review and adjust. The combination of AI capability and human judgment produces better results than either alone. Teams trying to make forecasting fully autonomous typically end up disappointed because they lose the human judgment component that actually adds value.

Financial analysis is similar. AI can process raw financial data much faster than humans and generate structured insights. It spots trends. It flags anomalies. It creates comparisons across datasets. Again, the best implementations are those where AI does the heavy analytical lifting and humans interpret and validate the results. The AI saves the analysis team months of work. The humans ensure the analysis is actually correct and meaningful.

Reporting and insights is where AI delivers enormous value with relatively straightforward implementation. Generating reports is tedious. Extracting insights from those reports requires reading and synthesizing information across dozens of documents. AI can automate the report generation and extract the key insights. This is one area where more automation is genuinely appropriate because the risk of being wrong is lower.

Fraud detection is another area where AI excels. At its core, fraud detection is pattern matching: transaction X has characteristics typically associated with fraud, so flag it. Most existing systems do this with fixed rules. AI-based approaches can find patterns that fixed rules and human reviewers might miss, and they can adapt as fraud techniques evolve. That adaptability is where they add value over rule-based approaches.

Across all these applications, the implementation that works is similar: AI augments human decision-making rather than replacing it. Teams are still actively involved. They're reviewing outputs. They're applying judgment. They're maintaining control.

Where organizations struggle is when they try to do something different. Fully automated forecasting. Fully automated fraud detection. Fully automated approvals. Those implementations sound efficient but typically fail because they lose the human component that actually provides value and maintains appropriate control.

Measuring What Actually Matters: Beyond Hype Metrics

Here's where many AI implementations fail: the measurement strategy.

Organizations measure the things that are easy to measure rather than the things that matter. They track time saved on data entry but ignore whether the forecasts actually improved. They count the number of processes automated but don't measure whether outcomes improved. They report on tool adoption but ignore whether users actually find the tool valuable.

Real measurement in AI for finance is harder because it requires thinking about outcomes rather than activities.

Start with the business problem you're solving. If you're implementing AI forecasting, the metric isn't "how fast does the system generate a forecast." It's "how accurate is the forecast." Better forecasts lead to better planning decisions. Better planning decisions lead to better business outcomes. That's what actually matters.

If you're implementing AI for fraud detection, the metric isn't "how many transactions does the system review." It's "what's the accuracy rate." How many actual frauds does it catch? How many false positives does it generate? False positives matter because they create work. If you catch 100 frauds but generate 10,000 false alarms, you've made your fraud team's life worse, not better. Accuracy is what matters.
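The 100 frauds and 10,000 false alarms can be turned into two standard numbers: precision (what share of alerts were real fraud) and recall (what share of real fraud was caught). This is a minimal Python sketch; the count of missed frauds is a hypothetical figure added only so recall can be computed.

```python
def precision(true_positives: int, false_positives: int) -> float:
    """Share of flagged transactions that were actually fraud."""
    flagged = true_positives + false_positives
    return true_positives / flagged if flagged else 0.0


def recall(true_positives: int, false_negatives: int) -> float:
    """Share of actual fraud cases that the system flagged."""
    actual = true_positives + false_negatives
    return true_positives / actual if actual else 0.0


caught_frauds = 100     # from the example above
false_alarms = 10_000   # from the example above
missed_frauds = 25      # hypothetical, not from the article

alert_precision = precision(caught_frauds, false_alarms)  # roughly 1 alert in 100 is real
fraud_recall = recall(caught_frauds, missed_frauds)
```

At roughly 1% precision, analysts review about a hundred alerts for every real fraud, which is the workload problem the paragraph describes. Tracking both numbers together keeps a team from improving one at the expense of the other.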

If you're automating approvals, the metric isn't "what percentage of approvals are automated." It's "what's the error rate on automated approvals." And separately: how much time did you actually save. Many automation projects save time on the machine but cost time on the human side dealing with issues. Net impact is what matters.

Three metrics matter most for AI in finance:

First, accuracy. Is the system performing better than the alternative? This is where you need to be brutally honest. Many AI implementations aren't actually more accurate than experienced humans. They're just faster. That's valuable, but it's different. Measure against the right benchmark.

Second, consistency. Does the system perform the same way in good times and bad times? Does it break when conditions change? A system that works great during stable periods but fails in crisis is dangerous. Most AI systems trained on historical data have this problem. You need to test robustness.

Third, time saved. What's the net time impact? How much time did the system save on the primary task? How much time does error correction require? What's the total impact on the team's available capacity? This is more complex to measure than just saying "the system is faster," but it's the only time figure that reflects real impact.
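The net time calculation can be sketched as simple arithmetic. The function below is an illustrative assumption about which costs to include, and all hour figures are hypothetical.

```python
def net_hours_saved(
    baseline_hours: float,    # time the task took before AI
    ai_task_hours: float,     # time the task takes with AI
    review_hours: float,      # human time spent reviewing AI output
    correction_hours: float,  # human time spent fixing AI errors
) -> float:
    """Net hours saved per period; a negative result means the AI costs time."""
    return baseline_hours - (ai_task_hours + review_hours + correction_hours)


# Hypothetical monthly figures for one reporting process.
healthy = net_hours_saved(40.0, 5.0, 8.0, 12.0)
costly = net_hours_saved(40.0, 5.0, 20.0, 20.0)
```

In the first case the process is faster on paper and in practice. In the second, review and correction work outweigh the raw speed gain, which is exactly the pattern a "time saved on the machine" metric would hide.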

Beyond these core metrics, you need proxy metrics that tell you if something is going wrong before it becomes a crisis. Drift in model performance. Change in input data patterns. Unusual decision patterns. These are early warning signs that the system isn't working as expected and needs attention.
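A drift check on forecast error is one simple form such an early warning could take. The sketch below compares recent error against the error measured at deployment; the choice of MAPE as the error measure and the 50% tolerance are assumptions for illustration, not recommendations.

```python
from statistics import mean
from typing import Sequence


def mape(actuals: Sequence[float], forecasts: Sequence[float]) -> float:
    """Mean absolute percentage error. Assumes every actual value is nonzero."""
    return mean(abs(a - f) / abs(a) for a, f in zip(actuals, forecasts))


def drift_alert(baseline_error: float, recent_error: float,
                tolerance: float = 0.5) -> bool:
    """True when recent error exceeds the baseline by more than `tolerance`
    (relative). The default of 0.5 is a hypothetical starting point."""
    return recent_error > baseline_error * (1 + tolerance)


# Hypothetical figures: error at deployment versus error over the last quarter.
baseline_error = 0.05
recent_error = mape(actuals=[100.0, 200.0], forecasts=[110.0, 180.0])
needs_attention = drift_alert(baseline_error, recent_error)
```

Run on a schedule, a check like this turns "the model might have drifted" into a specific alert that someone owns.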

Organizations that measure well have several practices in common. They define measurement before deployment. They measure continuously, not at the end of implementation. They measure against the baseline of what would happen without AI, not against an idealized vision. And they're willing to shut down systems that don't deliver, even if they cost a lot to build.

85% of UK finance leaders have integrated AI into workflows, while 14% recognize its importance but haven't yet implemented it.

The Scaling Problem: Why Most Organizations Stall

Organizations often struggle to move from pilots to production at scale. This is where the rubber meets the road and many initiatives stall.

Right now, only 10% of organizations have scaled AI agents organization-wide. That's not because the other 90% haven't figured out that AI works. They have. It's because scaling creates problems that pilots hide.

Pilots are controlled environments. You choose a use case where AI works well. You give it careful attention. You have a small team babysitting the system, making sure it's performing. You fix problems quickly. Everything is bespoke.

Scaling requires systematization. You need the system to work reliably without constant human intervention. You need it to work for different teams with different needs. You need processes that don't require AI experts to maintain. You need documentation that lets someone who wasn't involved in the initial build understand how to operate and support it.

These aren't small problems. They're fundamental challenges that require a different approach.

Most organizations discover the scaling problem too late. They build a cool AI application in controlled conditions. It works. They try to roll it out across the organization. Suddenly, edge cases appear. The data quality in other departments is different. The use case has nuances that don't generalize. The tool that worked perfectly in the pilot breaks when real diversity of use hits it. They discover that their AI expert who built the pilot is now a bottleneck because they're the only person who understands the system.

The organizations scaling effectively are doing something different. They're not trying to scale the same bespoke system. They're building platforms that can handle variation. They're documenting as they go. They're training broader teams. They're building monitoring that tells them when something is wrong. They're managing it like they would any enterprise system, not like a special project.

This is where solutions like Runable become valuable. Rather than building bespoke AI implementations for each use case, platforms offering automated document generation, reporting, and presentation creation enable finance teams to scale AI-powered processes across multiple functions simultaneously. With Runable starting at just $9/month, organizations can experiment with AI automation for financial reporting, analysis presentations, and automated documentation without the overhead of building custom systems. The platform's AI agents handle routine report generation, slide creation, and document automation, allowing finance teams to scale these processes organization-wide without requiring deep technical expertise.

The scaling path looks like this. Start with a clear use case. Build a working pilot. Learn what works and what doesn't. Then, instead of trying to clone that pilot across the organization, refactor it into a reusable pattern. Can you abstract the core logic so it works for different teams with different requirements? Can you build it on a platform that handles the operational complexity so your team doesn't have to? Can you document it clearly enough that someone other than the original builder can support it?

Organizations that move through this phase successfully go from single-use AI applications to AI-augmented processes that are genuinely part of how the team works. That's when you see real value extraction.

Regulatory Resilience: Building AI That Survives Change

Here's something that keeps finance leaders up at night: what happens to your AI systems when the rules change?

Regulation isn't static. New rules emerge. International standards change. Tax codes get updated. Reporting requirements evolve. The assumptions your AI system was trained on might need to change on remarkably short notice.

Consider what happened with recent tariff policies. Tariffs affect profit margins directly. They affect cost structures. They change liquidity pressures. They create new revenue recognition questions. An AI system trained before these changes wouldn't have been anticipating them. It would need retraining. But retraining takes time, and policy changes happen fast. During that gap, the system is making decisions based on outdated assumptions.

This is more than a theoretical problem. It's a practical risk that finance teams need to address in how they build AI systems.

Resilience to regulatory change requires several things. First, you need systems that can adapt. That doesn't mean the system rewrites itself when rules change. It means the architectural design allows for updating assumptions and parameters without rebuilding the entire model. This is where the design decisions you make upfront matter enormously.

Second, you need people who understand when rules have changed and what that means for your systems. You can't have an AI system running on assumptions that are no longer valid. You need monitoring and governance that catches regulatory changes before they break your systems.

Third, you need flexibility in how you deploy AI. If you've baked AI decisions into your core processes and can't easily switch them off or modify them, you're vulnerable to regulatory change. You need the ability to adjust course quickly.

Fourth, you need documentation about why the system makes decisions the way it does. When regulation changes, that documentation helps you understand what needs to change in the system. If nobody understands how the system works, updating it is a nightmare.
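The first requirement above, updating assumptions and parameters without rebuilding the model, often comes down to keeping those assumptions out of the logic. The Python sketch below shows the idea with a tariff rate; the rates, dates, and the `landed_cost` calculation are hypothetical simplifications.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class Assumptions:
    """Policy-dependent inputs kept separate from the calculation logic."""
    tariff_rate: float
    effective_from: str  # ISO date the assumption took effect


def landed_cost(base_cost: float, assumptions: Assumptions) -> float:
    """Cost including tariff. The logic never hard-codes the rate."""
    return base_cost * (1 + assumptions.tariff_rate)


# Hypothetical values: the rate changes, the calculation does not.
current = Assumptions(tariff_rate=0.05, effective_from="2024-01-01")
updated = replace(current, tariff_rate=0.25, effective_from="2025-04-01")

cost_before = landed_cost(1_000.0, current)
cost_after = landed_cost(1_000.0, updated)
```

Because each set of assumptions carries its own effective date, the same record also serves the documentation requirement: you can show which rate applied to which decision.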

Organizations building regulatory resilience into their AI are typically doing better with regulatory compliance than those trying to bolt it on afterward. Compliance isn't something you add to AI. It's something you build in from the start. That's the difference between AI systems that create regulatory risk and AI systems that actually help you manage it.

AI's role in finance is evolving from primarily automation (80% in 2018) to increasingly supporting decision-making and strategic partnerships (35% by 2023). Estimated data.

Building Organizational Alignment: Getting Everyone on the Same Page

Here's something that's technically unrelated to AI but practically critical: organizational alignment.

AI implementations fail as often for organizational reasons as they do for technical reasons. You've got different departments with different priorities. Finance wants to optimize for cost reduction. Compliance wants to optimize for risk management. Operations wants systems that are easy to support. IT wants systems that integrate with existing infrastructure. Getting these perspectives aligned is harder than building the AI.

Most organizations skip this step or treat it as something to address after implementation. That's backwards. The organizations with successful AI implementations spend significant time upfront aligning around what they're trying to achieve.

What does this actually look like? It starts with clear objectives. Not "implement AI." Not "automate more processes." Specific objectives like "reduce the monthly close timeline by two days" or "improve forecast accuracy by 15%." Those concrete objectives help everyone understand what they're working toward.

Next, map the stakeholders. Who needs to support this? Who will use it? Who owns outcomes? Who has concerns? Get everyone in the room and actually discuss their concerns. What keeps them up at night about this change? What do they need to succeed? This sounds like process overhead, but it's actually the difference between smooth implementation and constant political resistance.

Then, document the trade-offs. You're making decisions that have winners and losers. If you're automating a process, some people's jobs change. Maybe they're freed up for more valuable work, but it's still change. Acknowledge that. Don't try to pretend everyone wins. Make clear why the trade-offs are worth it. Rational people can accept trade-offs they understand and see the reasoning behind. What they won't accept is being surprised.

Finally, create feedback loops. Things will change once implementation starts. You need mechanisms for people to raise problems or ask questions without it becoming a barrier to progress. Regular check-ins. Clear escalation paths. Willingness to adjust plans based on feedback.

Organizations that do this work upfront move faster and experience fewer organizational blockers during implementation. That might sound surprising—doesn't all this talking slow things down? No. It prevents delays and resistance later that actually slow things down more.

Change Management: Making AI Stick

Implementing AI isn't a one-time event. It's the beginning of ongoing change that needs to be managed actively.

Most organizations underestimate the change component. They focus on the technology. They build the system. They deploy it. Then they expect people to just start using it. That's not how change works.

People are often genuinely concerned about AI. They might fear job loss. They might distrust systems they don't understand. They might have lived through failed technology implementations before. Those concerns are real, and they won't go away by ignoring them. They need to be addressed directly.

Effective change management for AI typically includes several elements. First, education. People need to understand what the system does, how it works, and what it means for their job. This isn't a one-time training. It's ongoing communication that helps people develop comfort with the tool.

Second, involvement. The people who will use the system should have input into how it's deployed. They'll identify issues that broader stakeholders miss. They'll also have more ownership of the outcome if they've been part of shaping it.

Third, support. When the system goes live, people need help. Early adopters will discover issues. Some people will struggle with using the tool. You need resources available to help them succeed. That support is the difference between adoption and abandonment.

Fourth, celebrating wins. When the AI system solves a problem, acknowledge it. Amplify success stories. Show people why this change matters. Recognition is a powerful motivator for broader adoption.

Fifth, addressing concerns seriously. If someone raises a legitimate problem, fix it. If someone is struggling, help them. Don't treat resistance as an obstruction to overcome. Treat it as information about what's not working.

Organizations that invest in change management see significantly higher adoption rates and faster value realization. The technology is only half the battle. The people half is equally important.

Future-Proofing Your AI Investments

AI technology is moving fast. Systems that are state-of-the-art today might be outdated in two years. That's not necessarily a problem if you've built your implementations in a way that allows evolution.

Future-proofing your AI investments means making architectural decisions that allow you to upgrade and evolve without ripping everything out and starting over. It means building with modularity so you can swap components without rebuilding the whole system. It means documenting so you're not dependent on the original builders understanding the system.

It also means being honest about the pace of change. New AI models emerge regularly. Capabilities improve. What couldn't be done last year might be straightforward this year. You want to be able to take advantage of that improvement without massive restructuring.

One practical approach: treat your AI implementations as platforms, not permanent solutions. Build them expecting they'll need to evolve. Design with interfaces that let you swap underlying components. Invest in monitoring that tells you when better alternatives become available. Keep tabs on what's happening in the broader AI market.
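As a rough illustration of "interfaces that let you swap underlying components," the sketch below defines a small interface and two interchangeable implementations. The `Forecaster` protocol, both forecaster classes, and `cash_outlook` are hypothetical names invented for this example. A real forecasting component would be far more involved.

```python
from typing import Protocol, Sequence


class Forecaster(Protocol):
    """Interface every forecasting component must satisfy (hypothetical)."""

    def forecast(self, history: Sequence[float], periods: int) -> list[float]:
        ...


class NaiveForecaster:
    """Repeats the last observed value."""

    def forecast(self, history: Sequence[float], periods: int) -> list[float]:
        return [history[-1]] * periods


class MovingAverageForecaster:
    """Repeats the mean of the most recent `window` observations."""

    def __init__(self, window: int = 3) -> None:
        self.window = window

    def forecast(self, history: Sequence[float], periods: int) -> list[float]:
        recent = list(history[-self.window:])
        return [sum(recent) / len(recent)] * periods


def cash_outlook(model: Forecaster, history: Sequence[float]) -> float:
    """Downstream code depends only on the interface, not a specific model."""
    return sum(model.forecast(history, periods=3))


history = [100.0, 110.0, 120.0]
outlook_naive = cash_outlook(NaiveForecaster(), history)
outlook_ma = cash_outlook(MovingAverageForecaster(window=3), history)
```

Because `cash_outlook` only knows about the interface, replacing one model with a better one later is a one-line change at the call site, not a rebuild.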

This sounds expensive—and it is, compared to building quick-and-dirty solutions. But it's cheap compared to rebuilding entire systems when better approaches emerge. And that's the timeline finance organizations are working on. Multi-year implementations. Investments that need to deliver value for years.

Organizations planning for evolution see better long-term ROI because they're not constantly ripping and replacing. They're iterating and improving. That's what sustainable AI value actually looks like.

Measuring Long-Term ROI: Beyond the Pilot Phase

Pilots are great at proving a concept. They're terrible at predicting long-term value. A pilot that shows 15% time savings might deliver only 7% in production because of all the exceptions and edge cases the pilot didn't encounter. Understanding long-term ROI requires longer-term measurement.

Long-term ROI measurement typically looks at several components. First, the direct time savings. How much time do people actually save with the AI system? Not in the best case scenario, but on average, accounting for errors and fixes.

Second, the quality improvements. Are outcomes better? Are decisions more accurate? Are risks being identified that weren't being caught before? Quality improvements often deliver more value than time savings but are harder to quantify.

Third, the organizational flexibility improvements. Can the team handle more work with the same headcount? Can they respond to new requirements faster? Can they support new regulations without hiring? This is where some of the biggest value often hides.

Fourth, risk mitigation. Is the organization actually more resilient? Are fraud cases being caught earlier? Are forecast errors smaller? Risk mitigation is valuable but only if you measure it.

Measuring all of this requires data collection that spans months or years. You need baselines from before the AI system. You need ongoing measurement after implementation. You need to account for confounding factors—business changes that aren't related to the AI system but affect outcomes.
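Here is a small worked sketch of why baselines and confounders matter. It assumes a single confounder (a change in work volume) and normalizes hours by units processed. The function names and every number are made up for illustration. Real measurement would need to handle more factors than volume.

```python
def hours_per_unit(total_hours: float, units_processed: int) -> float:
    """Effort normalized by volume, so busy and quiet months are comparable."""
    return total_hours / units_processed


def time_savings_rate(
    baseline_hours: float,
    baseline_units: int,
    current_hours: float,
    current_units: int,
) -> float:
    """Fractional reduction in hours per unit relative to the baseline."""
    baseline = hours_per_unit(baseline_hours, baseline_units)
    current = hours_per_unit(current_hours, current_units)
    return (baseline - current) / baseline


# Illustrative figures only.
# Before the AI system: 400 hours to process 2,000 invoices in a month.
# After: 418.5 hours to process 2,250 invoices (volume grew for unrelated reasons).
raw_change = (400.0 - 418.5) / 400.0  # negative: total hours went up
adjusted_savings = time_savings_rate(400.0, 2000, 418.5, 2250)

print(f"Raw change in total hours: {raw_change:.1%}")
print(f"Savings per invoice, volume-adjusted: {adjusted_savings:.1%}")
```

Looking only at total hours, the system appears to have made things worse. Normalizing by volume shows a saving of roughly 7% per invoice, which is the kind of correction a baseline makes possible.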

Organizations serious about understanding ROI do this measurement. They might find that the AI system delivered more value than expected, or less. Either way, they have actual data to inform decisions about expansion or modification.

Competitive Implications: What Happens if You Don't Move

This gets real pretty quickly. The finance landscape is shifting. Competitors are moving to AI. The question isn't whether AI will reshape finance. It's when.

Organizations that move early and deliberately are building capabilities their competitors won't have for years. That's a significant advantage. Better forecasts. Faster close. Better fraud detection. More responsive analysis. Those capabilities compound. They compound into better decision-making. Better decision-making compounds into better business outcomes.

Meanwhile, organizations that delay or move half-heartedly are falling behind. It's not that fast movers will be unbeatable. It's that they'll have accumulated so much operational advantage by the time slower movers get started that catching up becomes harder.

But here's the crucial nuance: moving fast matters less than moving smart. You don't need to be the first mover. You need to be a smart mover. You need to learn from early movers' mistakes. You need to build implementations that deliver actual value, not hype. You need to scale deliberately rather than chaotically.

The competitive advantage isn't in being first. It's in being best. And best means sustainable, measurable, compliant, and continuously improving. Organizations that focus on those characteristics will have significant competitive advantage whether they move first or third or fifth.

The Human Element: What Actually Drives Success

If you had to point to one factor that separates successful AI implementations from disappointing ones, it would be this: people.

Not the technology. Not the budget. Not the sophistication of the use case. People. Specifically, whether the organization has talented people who understand both the business problem and the technology problem, and can hold them in tension simultaneously.

You need finance people who understand AI well enough to ask intelligent questions. You need technology people who understand finance well enough to appreciate constraints that aren't obvious to outsiders. You need leadership that understands you can't have a set-it-and-forget-it AI system in finance. You need ongoing attention and care.

This might sound like a resource constraint. In a way, it is. But it's also a differentiator. Organizations that recruit and retain this talent build AI capabilities that compound. Organizations that don't are constantly starting over with consultants or struggling with knowledge that walks out the door.

Investing in people—hiring, training, retaining—might be the single best investment you can make in AI capability.

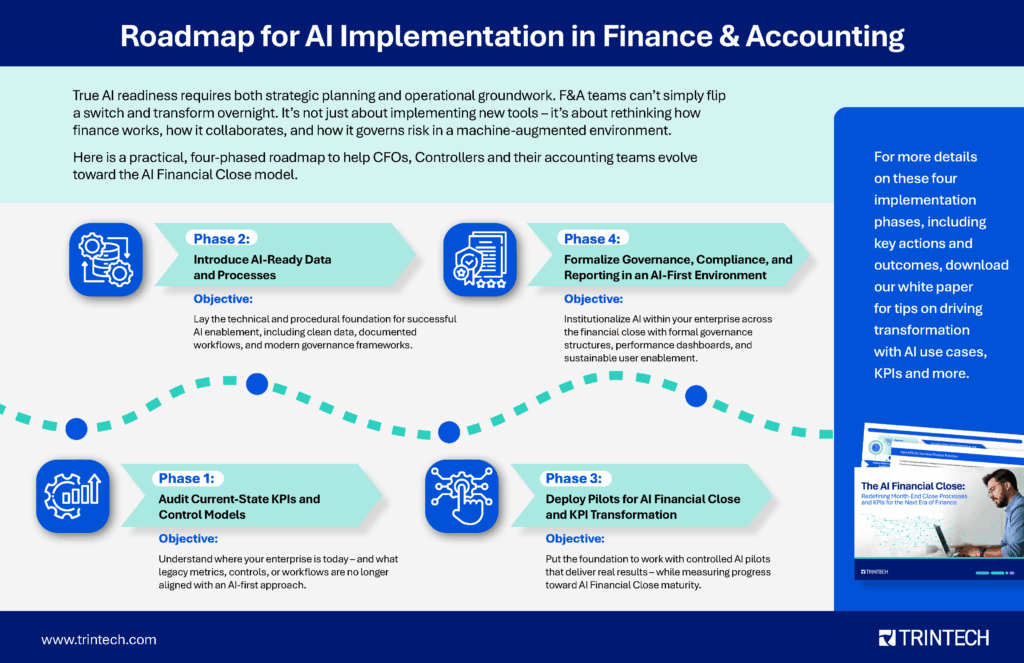

Putting It Together: A Practical Roadmap

Let's synthesize all of this into something actionable.

If you're starting an AI initiative in finance, here's a proven path:

Phase 1: Assessment and Alignment (Weeks 1-4)
Identify where your team spends the most time on manual, repetitive work. That's your starting point. Align stakeholders on what you're trying to achieve. Define clear success metrics before you start building.

Phase 2: Build Governance (Weeks 3-6)
Don't wait for deployment. Build governance into the plan now. Who owns outcomes? How will decisions be made? What oversight is required? How will you audit? Document it.

Phase 3: Pilot Implementation (Weeks 6-14)
Start with a focused use case that will work. Keep it small. Involve the team that will use it. Measure everything. Learn what works and what doesn't.

Phase 4: Refactor for Scale (Weeks 14-20)
Take what worked in the pilot and abstract it into a reusable pattern. What was specific to the pilot? What's generalizable? Can you build this on a platform that reduces operational overhead?

Phase 5: Broader Deployment (Weeks 20+)
Start rolling out to other teams. Support them. Measure. Refine. Expand based on what's working.

This timeline assumes active, focused work. It might take longer depending on your organization's pace. But it's a real path that organizations are successfully executing.

The key is not to skip steps. Skipping the governance phase to move faster creates problems that slow you down way more later. Skipping the measurement phase means you have no idea if something's actually working. Skipping stakeholder alignment means you have constant resistance.

Smart, deliberate progress beats fast, chaotic movement every time.

Why Now Is the Right Time

The window for AI adoption in finance isn't closing, but the opportunity is shifting.

Early movers got to learn what works and what doesn't on relatively simple problems. They've now extracted that value. Current movers are learning from the early movers' mistakes and tackling more complex use cases. By the time this becomes standard practice, the easy value will be taken.

Right now, if you move, you can build genuine competitive advantage before this becomes table stakes. In two years, not moving won't be an option—it'll be a competitive liability.

But more than the competitive argument, there's the value argument. Your finance team is probably spending time on work that doesn't require human judgment. That time could be freed up for work that does. Better analysis. Better planning. Better decision-making. That's not hype. That's what good finance teams want to be doing, and AI enables it.

The technology is proven. The business case is real. The implementation path is clear. The right time to start is now, with a deliberate, thoughtful approach that builds sustainable value.

FAQ

What does it actually mean when finance leaders say AI is essential?

When 99% of UK finance leaders describe AI as essential, they're indicating that AI capabilities have moved beyond optional nice-to-have into core competitive infrastructure. Organizations not using AI for forecasting, analysis, and fraud detection are working at a disadvantage. It doesn't mean every organization needs the same AI implementation. Essential means you can't ignore it and expect to maintain your competitive position.

How can we build trust in AI systems when finance requires control and oversight?

Trust and control aren't opposing forces. They work together. You build trust by making the system transparent, explainable, and auditable. You maintain control by designing human oversight into the system rather than adding it afterward. Teams that treat control as a requirement that shapes design from day one end up with more trustworthy systems because the oversight is systematic rather than ad-hoc. The organizations winning with AI aren't those that trust the system blindly—they're those that have built trust systematically through proper design.

What percentage of finance operations should we automate with AI?

There's no universal percentage that works. It depends on your specific context. The question to ask is: where is AI actually making decisions better or making work faster? Automate those processes. Where does AI make recommendations but humans should review before action? Build that with oversight. Where does human judgment matter most? Keep that human. Most mature finance operations end up with a mix of 30-50% partially or fully automated processes, 40-50% augmented processes where AI helps humans work better, and 10-20% that remain purely human-driven because that's where judgment matters most.
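One way to picture the three tiers is as a routing rule applied to each item. The sketch below is an assumption-laden illustration, not a recommended policy: the `Route` names, the `route_item` function, and the confidence and amount thresholds are all hypothetical, and you would set them from your own risk appetite and audit requirements.

```python
from enum import Enum


class Route(Enum):
    AUTOMATE = "automate"
    REVIEW = "human_review"
    HUMAN = "human_only"


def route_item(
    confidence: float,
    amount: float,
    *,
    auto_confidence: float = 0.95,    # hypothetical threshold
    review_confidence: float = 0.70,  # hypothetical threshold
    amount_limit: float = 10_000.0,   # hypothetical materiality limit
) -> Route:
    """Decide how much human involvement one item needs."""
    if confidence < review_confidence:
        # The model is unsure: leave the decision entirely to a person.
        return Route.HUMAN
    if confidence >= auto_confidence and amount <= amount_limit:
        # High confidence and low stakes: let the system act.
        return Route.AUTOMATE
    # Everything else: the system recommends, a person approves.
    return Route.REVIEW


small_clear = route_item(confidence=0.99, amount=500.0)
large_clear = route_item(confidence=0.99, amount=50_000.0)
uncertain = route_item(confidence=0.50, amount=500.0)
```

Tracking what share of items lands in each route over time gives you your actual mix, which is more useful than picking a target percentage in advance.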

How do we handle the risk that AI systems trained on historical data will fail when conditions change dramatically?

This is a real risk that requires active management. First, you need monitoring systems that tell you when the underlying conditions that trained the model have shifted. Second, you need governance processes that trigger model retraining when needed. Third, you need to stress-test your models against scenarios that differ from historical conditions. Fourth, you need fallback procedures—ways to operate if the AI system becomes unreliable. Organizations serious about resilience build these safeguards in from the start rather than discovering they're needed the hard way during a crisis.
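For the first safeguard, one common way to detect that conditions have shifted is the Population Stability Index (PSI), which compares how an input was distributed at training time with how it is distributed now. The sketch below is a simplified version using equal-width bins. The function name, bin count, and sample data are assumptions for illustration, and the 0.25 alert level is a commonly quoted rule of thumb, not a standard.

```python
import math
from typing import Sequence


def population_stability_index(
    expected: Sequence[float],
    actual: Sequence[float],
    bins: int = 10,
) -> float:
    """PSI between a training-time sample and a current sample of one input."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # fall back to 1.0 if all values are equal

    def shares(values: Sequence[float]) -> list[float]:
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            idx = min(max(idx, 0), bins - 1)  # clamp out-of-range values
            counts[idx] += 1
        # A small floor avoids log(0) for empty bins.
        return [max(c / len(values), 1e-6) for c in counts]

    e, a = shares(expected), shares(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


# Illustrative data: current values are shifted well above the training range.
training = [float(v) for v in range(100)]
current = [v + 50.0 for v in training]

psi_same = population_stability_index(training, training)
psi_shifted = population_stability_index(training, current)
needs_review = psi_shifted > 0.25  # rule-of-thumb alert level, an assumption
```

A check like this can run on a schedule and feed the governance process in the second point, so retraining is triggered by evidence and not by a crisis.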

What's the difference between AI that we implement ourselves and AI platforms we subscribe to?

Build-versus-buy considerations are real. Custom-built AI solutions give you maximum control and can be tailored exactly to your needs, but they require substantial internal AI expertise to build and maintain. Platforms like Runable provide pre-built AI capabilities for specific use cases at a fraction of the cost and complexity. Runable's platform for automated report generation, presentations, and documentation allows finance teams to capture AI benefits without building custom systems. For most finance teams, a hybrid approach works best: use platforms for standard applications like document generation and reporting, and build custom solutions only where your competitive advantage depends on unique capability.

How long does it typically take to see measurable ROI from an AI implementation?

Simple automation projects often show ROI within 3-6 months. More complex analytical applications might take 6-12 months before you have enough data to measure impact reliably. The key is defining what ROI actually means upfront. If it's time saved, you can measure that quickly. If it's improved forecast accuracy, you need at least one full forecasting cycle plus comparison to baseline. If it's risk mitigation, you might need 12+ months to accumulate enough data. The best practice is to plan for measurement over 12 months minimum and track both immediate metrics and longer-term impact.

Should we create a separate AI team or embed AI into existing finance teams?

Organizations that have tried both approaches find that embedding works better long-term than separation. A separate AI team can move fast initially but often builds things that existing teams don't want to use. Embedding AI expertise into finance teams ensures the solutions actually address business problems. The transition often looks like: start with a dedicated AI team to build core capabilities, then gradually embed AI people into finance teams as those teams develop capability. By 18-24 months, your best AI people are working within the finance function, not as a separate department.

What should we do if an AI system we deployed starts producing poor results?

First, diagnose why. Is the underlying model degrading? Has the data changed? Have business conditions shifted? Second, don't panic or immediately shut it down. Build investigation into the monitoring process. Third, if it's a training issue, update the model. If it's a data quality issue, fix the data source. If it's changed business conditions, adjust the system's assumptions. If none of those help, then you scale back or sunset the application. The key is treating this as a normal operational issue rather than a system failure. AI systems require ongoing care and maintenance like any enterprise system.

Conclusion: The Real Path Forward

We've covered a lot of ground. The state of AI adoption in finance. How to build governance that enables rather than constrains. Where organizations are successfully deploying AI today. How to scale from pilot to production. How to measure what actually matters.

The through-line across all of this is the same: AI's true value in finance comes not from raw capability or cutting-edge algorithms. It comes from thoughtful integration into how your team actually works. It comes from clear governance that maintains control while enabling speed. It comes from honest measurement of what's actually working. It comes from treating it as a multi-year evolution rather than a one-time implementation.

The finance teams realizing the most value from AI right now aren't the ones moving fastest. They're the ones moving smartest. They understand their business problems deeply. They're building AI systems that augment human capability rather than replacing it. They're maintaining the oversight necessary for compliance while still capturing the efficiency benefits. They're measuring outcomes, not activities. They're investing in people as much as technology.

If you take nothing else from this, take this: start with a clear problem, not a tool. Build governance from day one, not afterward. Measure what actually matters. Plan for scale from the beginning. And invest in people who understand both the business and the technology.

The opportunity in front of finance teams right now is real. AI can genuinely improve decision-making, reduce the time spent on manual work, and catch problems earlier. But only if you implement it right. And implementing it right means being deliberate, thoughtful, and focused on measurable value rather than hype.

Your competitors are moving. The question isn't whether to move. It's how to move in a way that actually delivers value. This guide gives you the roadmap. The execution is up to you.

Key Takeaways

- 99% of UK finance leaders view AI as essential, with 85% already integrating it—adoption is widespread but scaling remains the primary challenge

- Trust and control aren't obstacles to AI value; they're prerequisites that should shape implementation from day one, not added afterward

- Finance teams realizing measurable value focus on augmenting human judgment rather than full automation, maintaining oversight in critical decisions

- Only 10% of organizations have scaled AI agents enterprise-wide; most are in deliberate, phased deployment—this is the smart approach, not slow-moving

- Governance frameworks, measurement strategy, and change management are often more critical to success than the underlying technology itself

- Organizations measuring long-term ROI find that sustainability matters more than initial speed; smart, deliberate implementation outperforms aggressive experimentation
