Introduction: When Regulators Start Paying Attention to Your Design

It was a Friday in early 2026 when the European Commission dropped what amounts to a digital regulation bomb on TikTok. Not the kind that gets headlines for weeks, but the kind that sends shivers down every tech executive's spine. The Commission didn't just say TikTok's app is addictive. They said it was purposefully designed to be addictive. They said the company knew about the compulsive scrolling, the late-night binges, the constant checking, and built the entire interface around making it worse, according to Reuters.

Infinite scroll. Autoplay. Push notifications that buzz your phone at midnight. A recommendation engine that learns exactly what keeps you locked in. The Commission called these out by name, with scientific backing, saying they "fuel the urge to keep scrolling and shift the brain of users into 'autopilot mode'", as reported by The New York Times. That's regulatory speak for: we've got the receipts.

What makes this moment different from the usual tech regulation headlines is that this isn't about data privacy or content moderation or platform liability. This is about the fundamental design of the product itself. The EU isn't just asking TikTok to follow rules. They're asking TikTok to remake the app from the ground up as noted by Courthouse News.

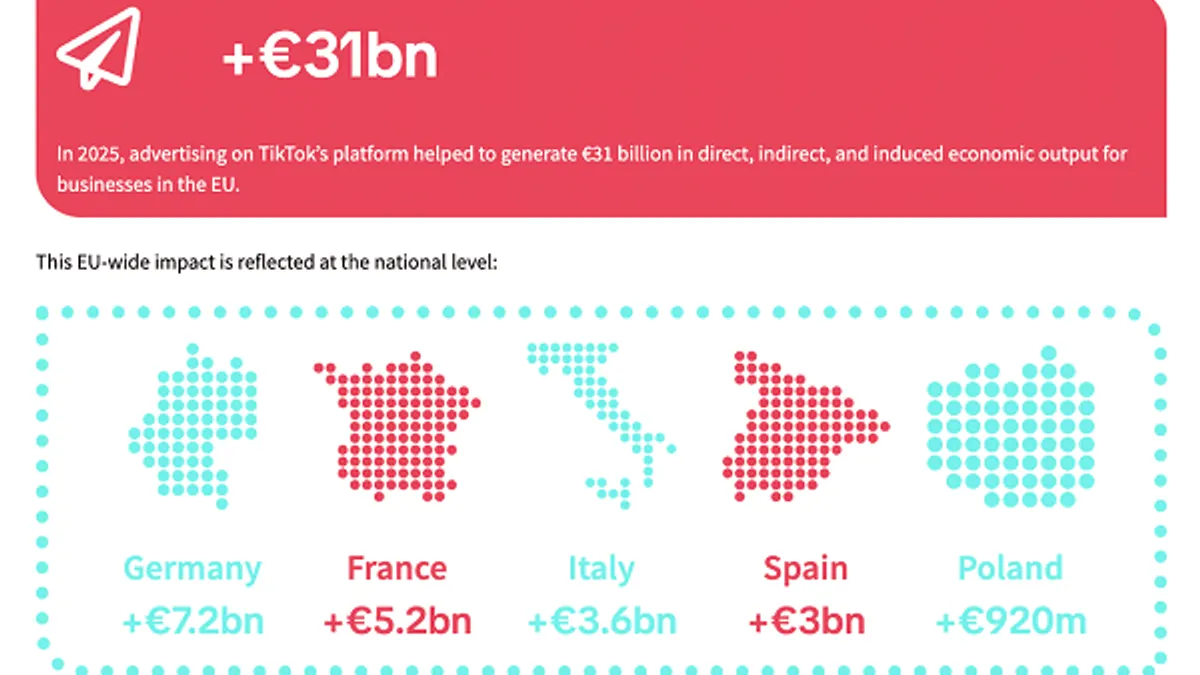

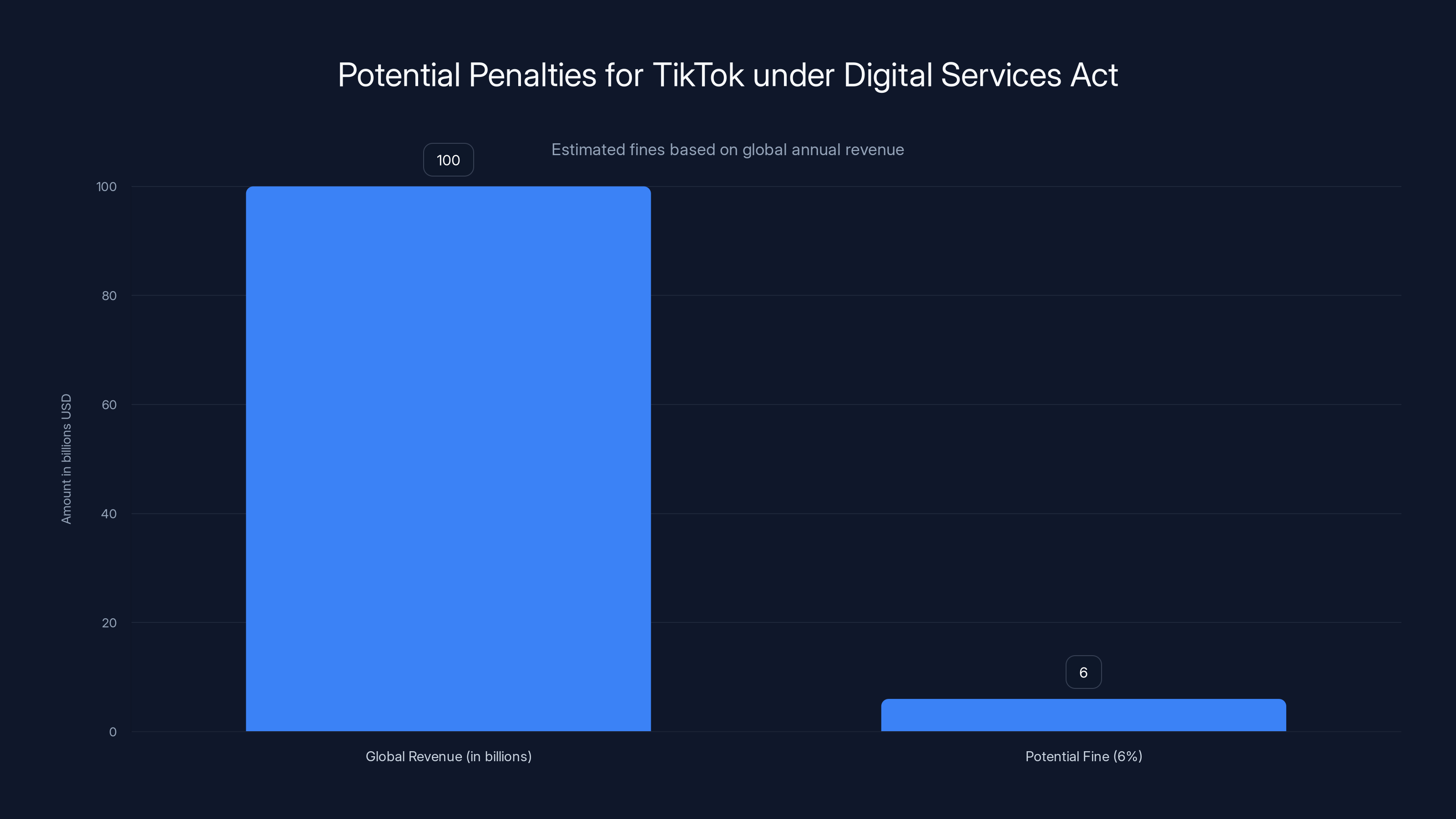

The stakes? Fines of up to 6% of global annual turnover. For TikTok, that's not a rounding error. That's a business-threatening penalty. But beyond the numbers, this decision signals something bigger: regulators worldwide are finally connecting the dots between interface design and human behavior. And they're willing to force change, according to TradingView.

Here's what you need to know about the Commission's findings, why they matter, and what happens next.

TL;DR

- The Finding: The European Commission accused TikTok of deliberately designing addictive features, violating the Digital Services Act's requirement to assess design risks as highlighted by Amnesty International.

- The Features Called Out: Infinite scroll, autoplay, push notifications, and the recommendation engine are the main culprits identified as reported by TechCrunch.

- The Impact: TikTok must disable these features or face fines up to 6% of global revenue (potentially billions of dollars) as noted by The Wall Street Journal.

- The Timeline: TikTok has time to respond to preliminary findings before final sanctions are determined according to UPI.

- The Precedent: This opens the door for regulators worldwide to demand design changes on other platforms as discussed by the Atlantic Council.

- Bottom Line: The era of "we're just a platform" is over. Design choices are regulatory choices.

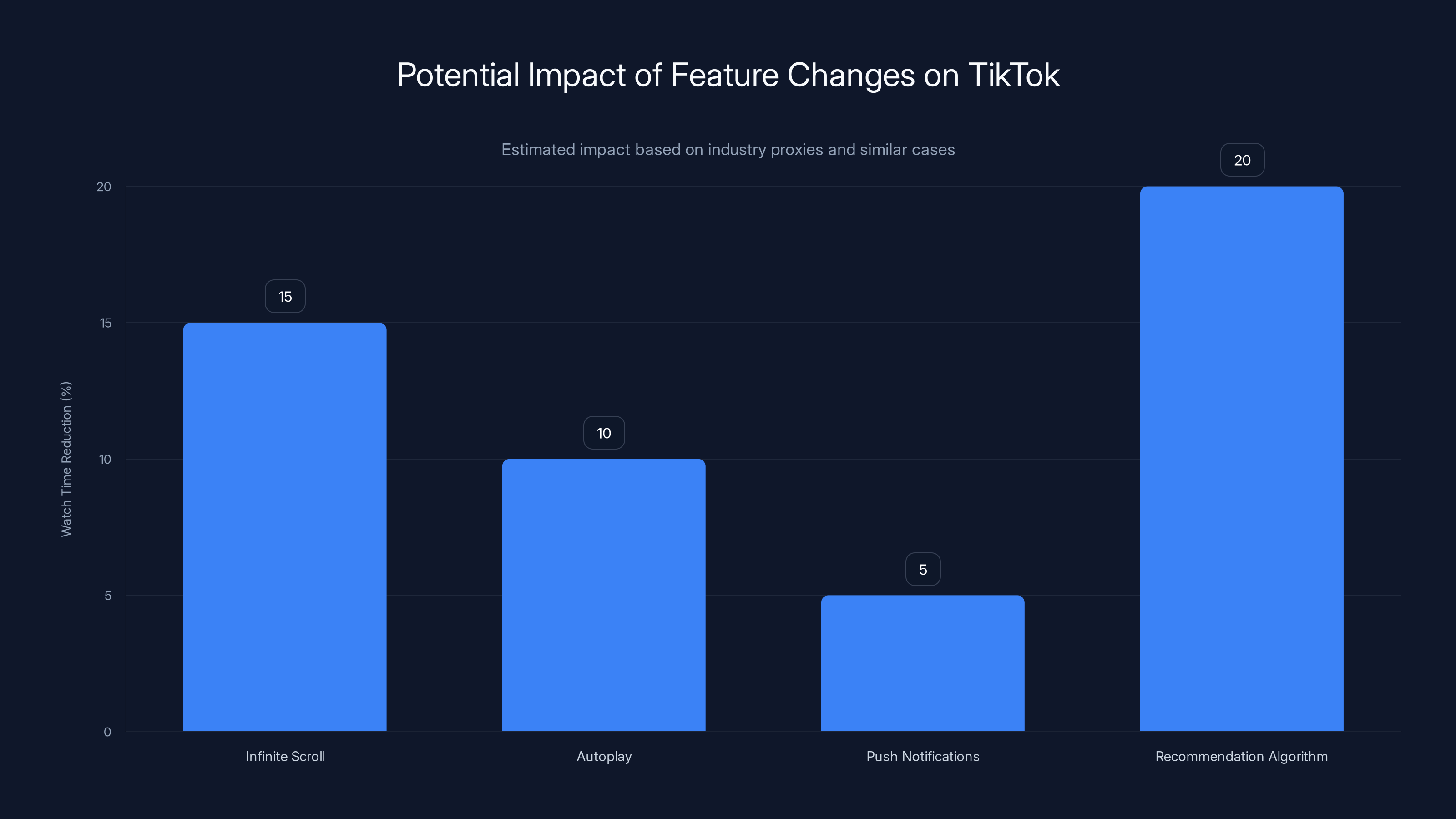

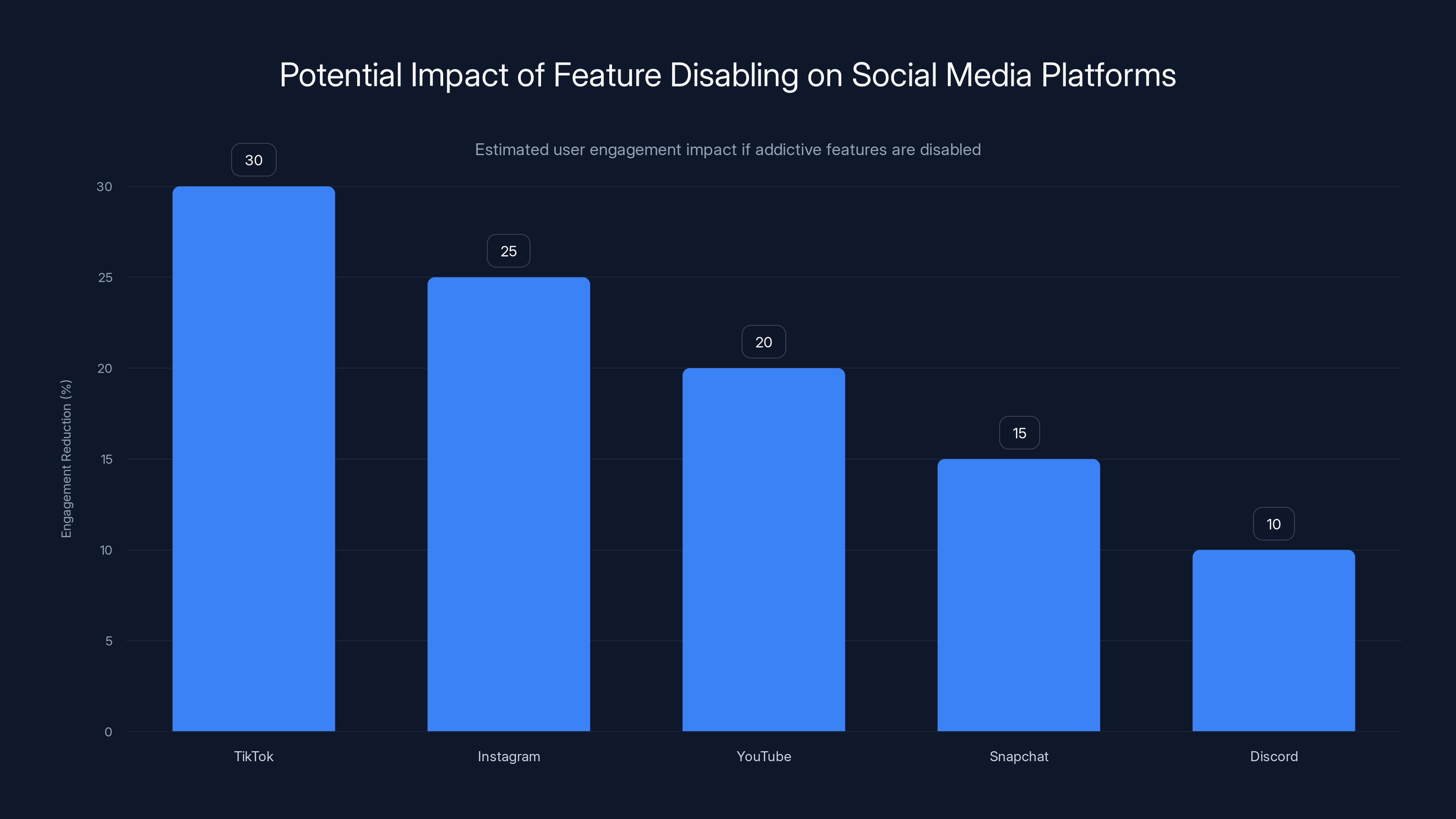

Estimated data suggests significant watch time reductions if TikTok disables or limits key features. The most substantial impact is expected from changes to the recommendation algorithm, potentially reducing watch time by 20%.

The European Commission's Preliminary Findings: Reading Between the Regulatory Language

The Commission didn't just wake up one day and decide to target TikTok. The investigation was launched under the Digital Services Act, the EU's sweeping regulation that basically says: if you're a big online platform, we're watching how you operate, and we will fine you if you screw it up, as reported by TechCrunch.

Under the DSA, platforms designated as "Very Large Online Platforms" have specific obligations. One of them is to "adequately assess" systemic risks their services create. TikTok's risks, according to the Commission: psychological and behavioral harms to users, with particular concern for minors and vulnerable adults as noted by Courthouse News.

The Commission's statement included language that amounts to a regulatory indictment of TikTok's entire design philosophy. They cited scientific research showing that certain interface elements "may lead to compulsive behaviour and reduce users' self-control" according to The New York Times. This isn't the Commission making stuff up. They're citing peer-reviewed research about how UI design affects human psychology.

Specifically, the Commission found that TikTok didn't adequately assess:

- How infinite scroll influences time spent in the app

- The impact of autoplay on compulsive usage patterns

- Whether push notifications increase addictive engagement

- How the recommendation algorithm personalizes content to maximize engagement (even if that engagement is unhealthy)

- Whether time management tools and parental controls actually reduce harm

The last point is particularly damning. TikTok does have screen time tools. Users can set daily limits. Parents can restrict access. But the Commission reviewed these tools and concluded they're basically security theater. You can set a screen time limit, get a notification that you've hit it, then ignore the notification and keep scrolling. The friction is so low it barely registers, as reported by Reuters.

What this means: the Commission isn't accusing TikTok of technical negligence. They're accusing them of design negligence. And they're saying the current mitigation measures don't actually mitigate anything.

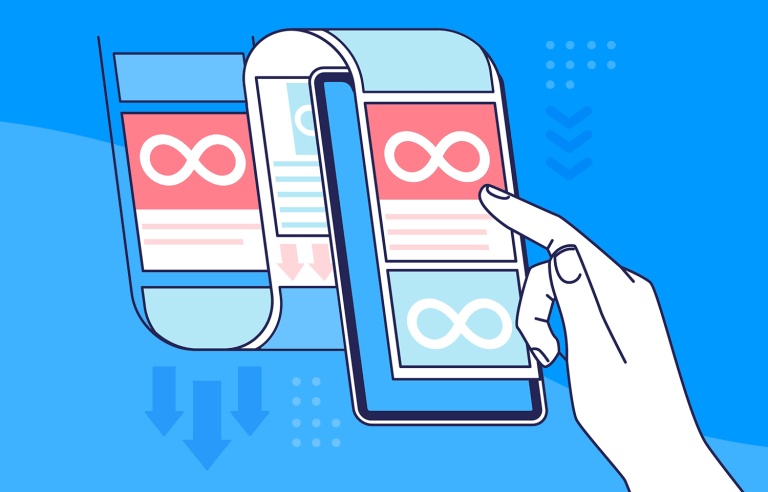

Infinite Scroll: The Feature That Changed Everything

Infinite scroll seems simple, almost obvious. You scroll down, new content loads, you keep scrolling. No page breaks. No "next page" button to click. The feed just goes on forever. It's elegant. It's smooth. It's incredibly effective at keeping users engaged.

It's also incredibly effective at making users lose track of time.

Before infinite scroll became standard, social media worked differently. You'd scroll through a feed, hit the bottom, see a "next" button, and make a conscious decision to continue. That friction, that moment of choice, mattered. It gave your brain a chance to disengage. With infinite scroll, there's no natural stopping point. No reset. Just endless content as discussed by the Lawsuit Information Center.

The Commission's concern isn't theoretical. There's actual neuroscience behind it. Behavioral researchers have documented how infinite scroll hijacks the brain's reward system. Each new piece of content is potentially rewarding—it might be the funniest video, the most interesting creator, the biggest plot twist. Your brain stays in a heightened state of anticipation, waiting for the next reward as noted by The New York Times.

Over time, this creates a pattern. Your brain learns that if you keep scrolling, you might get rewarded. So you keep scrolling. And scrolling. And scrolling. The session that was supposed to be five minutes becomes twenty, becomes an hour. You check the time and can't believe it.

TikTok's infinite scroll is particularly potent because of what comes next in the chain: the recommendation engine. It's not just serving you random videos. It's learning what kind of content keeps you scrolling, and it's feeding you more of it. The combination of infinite scroll plus algorithmic recommendations creates what some researchers call a "supernormal stimulus." It's content designed to be more engaging than real-world content. More novel. More surprising. More rewarding as discussed by PYMNTS.

The Commission's demand: disable infinite scroll. Implement breaks. Make users consciously decide to keep scrolling, rather than doing it on autopilot.
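As a rough illustration of what "implement breaks" could look like, here is a minimal sketch of a feed that serves a fixed batch and then stops until the user explicitly asks for more. The class name, the batch size, and the `user_confirmed` flag are all hypothetical. Nothing here reflects TikTok's code or specific wording from the Commission.

```python
from dataclasses import dataclass, field


@dataclass
class PaginatedFeed:
    """Hypothetical feed that stops after each batch instead of scrolling forever."""
    videos: list[str]
    batch_size: int = 10                      # assumed value, for illustration only
    _cursor: int = field(default=0, init=False)

    def next_batch(self, user_confirmed: bool) -> list[str]:
        """Return the next batch only if the user explicitly chose to continue."""
        if not user_confirmed:
            return []                         # no silent loading: stopping is the default
        batch = self.videos[self._cursor:self._cursor + self.batch_size]
        self._cursor += len(batch)
        return batch


feed = PaginatedFeed(videos=[f"video-{i}" for i in range(25)], batch_size=10)
first = feed.next_batch(user_confirmed=True)     # user opened the app
paused = feed.next_batch(user_confirmed=False)   # reached the break and did nothing
second = feed.next_batch(user_confirmed=True)    # tapped a "load more" control
```

The design point is the default: when the user does nothing, the feed returns nothing, which is the reverse of how infinite scroll behaves.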

For TikTok, this is a massive change. It's basically asking them to make their core user experience less engaging. In the short term, that means fewer watch hours. Fewer ad impressions. Less data for the algorithm to learn from. It's a real business hit as reported by TradingView.

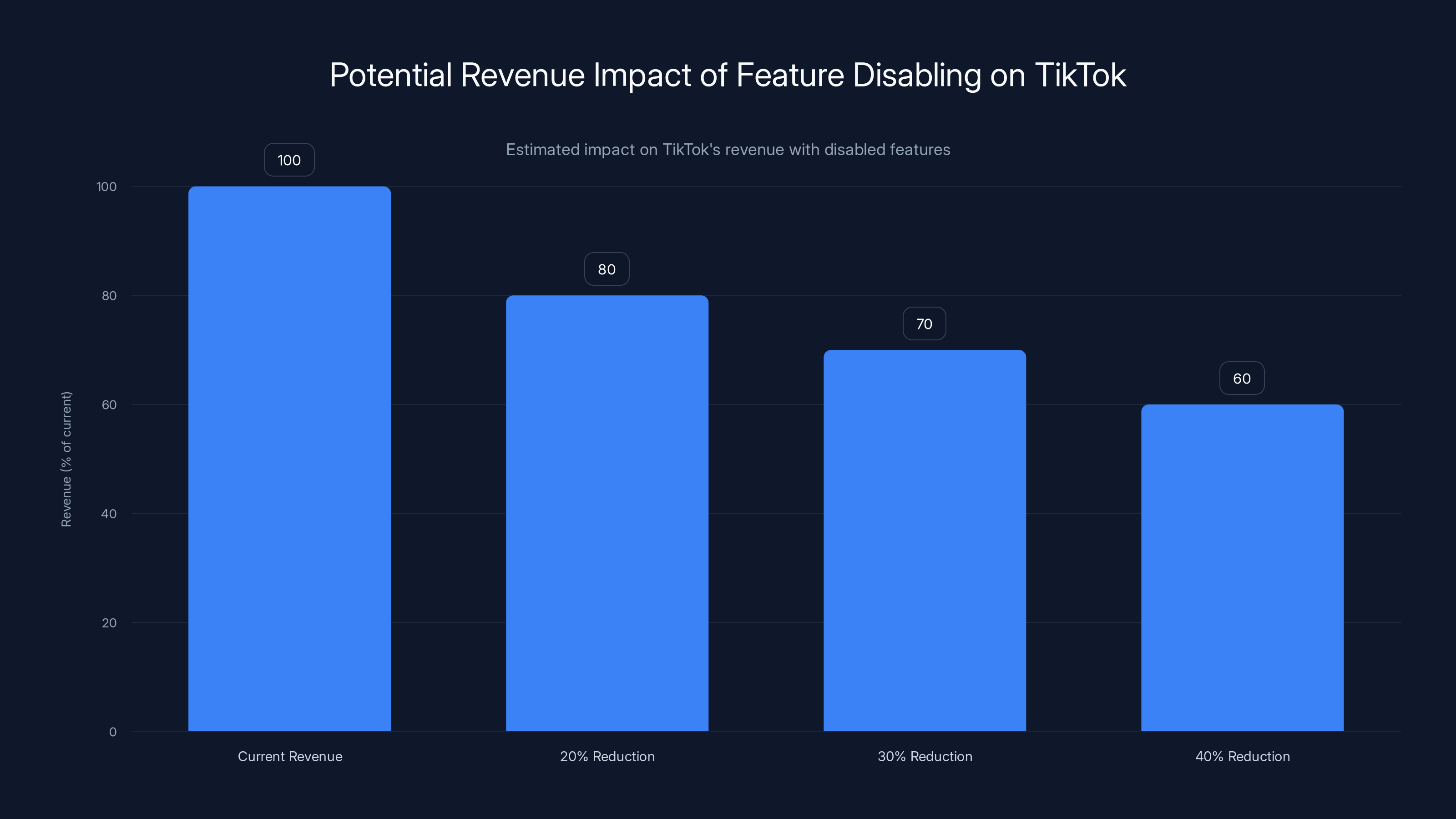

Disabling key features could reduce TikTok's revenue by 20-40%, posing a significant challenge to its current business model. (Estimated data)

Autoplay: Content That Never Stops Playing

Autoplay is infinite scroll's brother. When a video ends, the next one starts automatically. No pause. No thought required. Just continuous content, as reported by TechCrunch.

You see this on YouTube, Netflix, TikTok, Instagram Reels. It's become so standard that not having autoplay feels broken. But that normalization masks how effective it is at capturing attention.

Autoplay removes another friction point. In the early days of online video, a clip would end and you'd have a few seconds to decide: do I want to watch another one? With autoplay, that decision is made for you. The default is "yes, keep watching." If you want to stop, you have to actively stop the video. It's a subtle flip in agency, but it has massive behavioral implications.

The Commission identified autoplay as a key contributor to compulsive use, as noted by The New York Times. When content automatically transitions to the next piece, users stay in a passive consumption state. They're not making choices. They're just watching. And watching. And watching.

TikTok's autoplay is particularly aggressive because of the format. Each TikTok video is short, typically 15 seconds to 10 minutes. The brevity makes autoplay feel harmless. One more won't hurt. But one more turns into ten more, and suddenly you've been scrolling for an hour without ever consciously deciding to continue.

The solution the Commission is proposing: disable autoplay or make it optional. Put the user back in control of the decision to watch the next video as reported by Reuters.

Push Notifications: The Midnight Buzzer

Push notifications are how TikTok (and every other social platform) keeps you thinking about the app even when you're not using it.

You get a notification that someone liked your video. Someone commented. A creator you follow went live. A new sound is trending. Each notification is a tiny dopamine hit. Each one is a reason to open the app and see what's happening as discussed by the Lawsuit Information Center.

The timing of these notifications matters enormously. Daytime notifications? Annoying. Nighttime notifications? Psychologically disruptive. The Commission specifically called out how TikTok sends notifications at times when users might otherwise be winding down for sleep. Late evening. Night. Early morning. These are windows when users are most likely to have their phones nearby and be in a suggestible mental state as highlighted by Amnesty International.

One notification at 11 PM doesn't seem like a big deal. But multiply that by millions of users, each getting personalized notifications timed to maximize the chance they'll open the app, and you've got a coordinated system for disrupting sleep patterns and dragging users back into compulsive app use.

The Commission's findings here are backed by sleep research. Notifications that interrupt sleep have measurable impacts on next-day alertness, mood, and decision-making ability. For young people especially, whose sleep cycles are already fragile, this compounds existing developmental challenges as noted by The New York Times.

The Commission's requirement: TikTok needs to implement systems that don't send push notifications during hours when users are likely to be sleeping. For minors, this might mean a blanket restriction on evening notifications. For adults, at least giving meaningful control over notification timing as reported by Reuters.
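A quiet-hours rule of this kind is simple to express. The sketch below assumes a blanket overnight window for minors and a shorter one for adults. The window boundaries and function names are illustrative assumptions, not values from the Commission or from any platform, and in practice the adult window would presumably be user-configurable.

```python
from datetime import time


def in_quiet_hours(local: time, start: time, end: time) -> bool:
    """True if `local` falls inside a window that may wrap past midnight."""
    if start <= end:
        return start <= local < end
    return local >= start or local < end


def may_send_push(local: time, is_minor: bool) -> bool:
    """Hypothetical policy: a longer quiet window for minors than for adults."""
    # Boundaries below are assumptions chosen for illustration only.
    if is_minor:
        start, end = time(21, 0), time(7, 0)
    else:
        start, end = time(23, 0), time(6, 0)
    return not in_quiet_hours(local, start, end)
```

Under these assumed windows, a 10 PM notification would be held back for a minor but delivered to an adult. The check uses the recipient's local time, so a real system would need reliable time zone data for each user.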

For TikTok, this is more tractable than disabling infinite scroll. It's a design change that's already technically feasible. Other platforms have implemented similar systems. But it does mean fewer engagement hooks, fewer reasons for users to open the app outside of their own volition as noted by Courthouse News.

The Recommendation Engine: Personalized Addiction

Infinite scroll, autoplay, and push notifications are the visible mechanics of addiction. The recommendation engine is the brain behind them.

TikTok's algorithm is legendarily effective. Users open the app and almost immediately find content they want to watch. Not content they were already thinking about. Content they didn't know existed but turns out they love. That's not magic. That's engineering as discussed by PYMNTS.

The algorithm learned from billions of interaction points: how long users watch videos, which videos they rewatch, which ones they share, which ones they skip. It's built a model of what keeps each individual user engaged, and it optimizes for exactly that.

The problem, from a regulatory perspective, is that optimizing for engagement and optimizing for user well-being are different things, as noted by The New York Times. An algorithm optimized for engagement will show you more of whatever captures your attention most intensely. If that's educational content, great. If that's outrage-inducing content, conspiracy theories, or depressing self-harm trends, the algorithm doesn't care. Engagement is engagement.

The Commission found that TikTok's algorithm systematically fails to consider user well-being in its optimization function. It's tuned for one thing: watch time. Everything else is secondary.

For young users, this creates particular risks. A teenager struggling with depression might get shown more content about depression, because the algorithm notices they're engaging with it. That engagement might feel validating in the moment—"finally, people who understand!"—but the algorithm is creating an echo chamber that can deepen the depression as discussed by the Lawsuit Information Center.

The Commission's requirement: TikTok needs to change its recommendation system so that it's not just optimizing for engagement. It needs to account for user well-being, particularly for minors. This might mean showing slightly less engaging content if it's healthier content. It might mean breaking up binge-watching sessions even if users would click through to more content as highlighted by Amnesty International.

This is the most complex change the Commission is asking for. It's not just a UI modification. It's changing the underlying optimization function of a machine learning system. And it means TikTok's algorithm would sometimes recommend content that does not maximize watch time. That's a fundamental business change, as reported by TradingView.
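To make "changing the optimization function" concrete, here is a toy ranking sketch in which each candidate's score is its predicted watch time minus a penalty for a well-being risk estimate. Everything in it is an assumption for illustration: the `wellbeing_risk` field, the linear penalty, and the weights. Real recommender objectives are far more complex and are not public.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Candidate:
    video_id: str
    predicted_watch_seconds: float   # engagement signal
    wellbeing_risk: float            # hypothetical score, 0.0 (benign) to 1.0 (high risk)


def rank(candidates: list[Candidate], risk_weight: float) -> list[str]:
    """Order candidates by predicted watch time minus a well-being penalty."""
    def score(c: Candidate) -> float:
        return c.predicted_watch_seconds - risk_weight * c.wellbeing_risk
    return [c.video_id for c in sorted(candidates, key=score, reverse=True)]


pool = [
    Candidate("a", predicted_watch_seconds=30.0, wellbeing_risk=0.9),
    Candidate("b", predicted_watch_seconds=25.0, wellbeing_risk=0.1),
    Candidate("c", predicted_watch_seconds=10.0, wellbeing_risk=0.0),
]
engagement_only = rank(pool, risk_weight=0.0)   # ["a", "b", "c"]
with_penalty = rank(pool, risk_weight=20.0)     # ["b", "a", "c"]
```

With the penalty switched on, the most engaging but riskiest item drops below a slightly less engaging one. That is exactly the trade the business would be making: a little watch time given up at each ranking decision.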


Why TikTok's Existing Safeguards Didn't Work

TikTok's defense, both publicly and likely in their formal responses to the Commission, will include the safeguards they already have in place. They have screen time management tools. Parents can set controls. Minors get reduced screen time defaults. These exist.

The Commission looked at these tools and essentially said: they're insufficient because they don't address the underlying design problem. You can build a guardrail on a cliff. If the cliff is too steep, the guardrail doesn't matter much as reported by Reuters.

The screen time tools exemplify this. You can set a daily limit in TikTok, say 60 minutes. When you hit 60 minutes, you get a notification. But you can dismiss it and keep scrolling. The friction is almost nonexistent. The notification says "you've spent 60 minutes today," and the app is still right there, content is still queued up, and continuing to scroll is easier than stopping.

Compare this to a system that actually interrupts the experience. Imagine if TikTok's app locked users out after they hit their daily limit. Completely locked. No one-tap dismissal. Suddenly the default action is stopping, and continuing requires an explicit decision to go into settings and disable the control.
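The gap between a reminder and a lock comes down to what happens after the limit is reached. This minimal sketch contrasts the two. The mode names and the `reminder_dismissed` flag are hypothetical and only model the behavior described above.

```python
from enum import Enum


class LimitMode(Enum):
    SOFT = "soft"   # a reminder the user can dismiss
    HARD = "hard"   # feed stays locked until the control is changed in settings


def feed_available(minutes_used: int, daily_limit: int, mode: LimitMode,
                   reminder_dismissed: bool = False) -> bool:
    """Return whether the feed keeps serving content (hypothetical policy)."""
    if minutes_used < daily_limit:
        return True
    if mode is LimitMode.SOFT:
        return reminder_dismissed   # one tap restores the feed
    return False                    # hard mode: dismissing a prompt is not enough
```

In soft mode a single dismissal brings the feed back, so continuing costs almost nothing. In hard mode the same tap changes nothing, and stopping becomes the default outcome.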

Parental controls have similar issues. They require parents to understand what controls exist, set them up (often a non-trivial technical task), and then maintain them as the app updates and teenagers find workarounds. That's a lot to ask of busy parents, many of whom didn't grow up with smartphones and might not be fully comfortable with the technology as noted by Courthouse News.

The Commission's implicit critique: building "safeguards" that don't actually safeguard against the underlying design is regulatory theater. It lets companies claim they're addressing harms while the harms persist as highlighted by Amnesty International.

This raises a bigger question that regulators are grappling with: can you make an addictive product safe through friction and controls? Or do you need to make the product itself less addictive?

The Digital Services Act: Europe's Grand Regulation Experiment

The DSA has applied in full only since February 2024, and it's already reshaping how tech companies operate in Europe. It's the EU's attempt to do what the US hasn't done: create comprehensive, binding rules for how online platforms operate, as discussed by the Atlantic Council.

The core insight behind the DSA is that platforms have gotten so large and influential that they need to be regulated like utilities or financial institutions. The Act has several key provisions. Platforms have to be transparent about how their algorithms work. They can't use dark patterns to manipulate users. They have to remove illegal content quickly. They have to provide adequate safeguards for minors.

But the real innovation in the DSA is the concept of systemic risk assessment. Large platforms have to identify the risks their services create and demonstrate that they're mitigating those risks. This puts the burden on platforms to prove they're not causing harm, rather than on regulators to prove they are as reported by Reuters.

TikTok's case is the first major test of whether the Commission will actually enforce this. For years, tech companies have faced criticism about addictive design, but there's been little regulatory consequence. The DSA changes that calculus.

The Commission is sending a message with this action: we will assess your design decisions, we will evaluate whether they create systemic harms, and we will require changes if they do. This applies to all Very Large Online Platforms, not just TikTok. YouTube, Instagram, Facebook, and Snapchat are all operating under the same regulatory framework, as noted by Courthouse News.

Comparable Regulations and International Precedent

Europe isn't the only jurisdiction taking action on social media harms. But Europe is moving fastest and most aggressively.

Australia implemented the toughest age restriction requirement: no social media accounts for users under 16. It applies across the major social media platforms. Australia gave companies roughly a year to put age-assurance systems in place. This isn't a ban on features. It's a structural ban on access for young people, as reported by Reuters.

The UK's Online Safety Act, passed in 2023, addresses online harms more broadly, with particular attention to protecting young people. It creates duties of care for platforms and requires them to assess the risks their services pose.

Spain, France, Denmark, Italy, and Norway are all exploring age restrictions or similar protective measures. The pattern across Europe is consistent: governments are moving beyond encouraging self-regulation and toward mandating specific changes as discussed by the Atlantic Council.

In the United States, the approach has been different. Twenty-four states have enacted age-verification laws, but there's no federal digital services act. Instead, the US is pursuing a more fragmented approach, with individual state laws and proposed federal legislation like KOSA (the Kids Online Safety Act). The effect is less unified than Europe's but creates complexity for platforms because they have to comply with multiple different regimes.

The TikTok situation in the US adds another layer. Beyond design regulation, there are existential questions about whether TikTok should be allowed to operate at all due to national security concerns. TikTok's parent company, ByteDance, is based in China, and regulators worry about data access and algorithmic influence.

But the EU's approach is pure regulatory focus on design and harm, as noted by Courthouse News. No national security angle. Just: does this product cause harm to users, and what design changes would reduce that harm?

If the Commission successfully forces TikTok to disable infinite scroll, autoplay, and notifications, and to redesign its recommendation system, that becomes a precedent, as reported by Reuters. Other regulators will look at it and ask: if TikTok has to do this, why doesn't every platform?

Estimated data suggests significant engagement reduction if platforms disable addictive features like infinite scroll and autoplay.

The Business Impact: What Disabling Features Costs

Let's get concrete about what these changes would actually mean for TikTok's business.

Watch time is the core metric that drives everything. More watch time means more ads shown. More ads means more revenue. More watch time also means more data about user behavior, which improves the recommendation algorithm, which drives more watch time. It's a virtuous cycle for TikTok, a vicious one for users trying to moderate their usage.

Disabling infinite scroll would require users to make a conscious decision to load more content. Not everyone will. Some percentage of users will hit the end of their feed, see a "load more" button, and decide they're done scrolling. That's less watch time as noted by The New York Times.

Disabling or limiting autoplay means users have to actively choose to watch the next video instead of watching by default. Again, fewer view completions.

Limiting push notifications means fewer out-of-app engagement hooks pulling users back into the app. That means lower daily active users or at minimum fewer sessions per user as reported by TradingView.

Redesigning the recommendation algorithm to deprioritize engagement could mean serving content that's slightly less engaging but healthier. That's a direct hit to watch time.

How much do these changes impact TikTok's business? Nobody knows exactly, but the industry has good proxies. When YouTube changed its recommendation algorithm to deprioritize borderline misinformation (not even full-on false content, just conspiracy-adjacent stuff), watch time in those categories dropped 40%. YouTube took the hit because of reputational and regulatory pressure, but it was a real financial cost as discussed by PYMNTS.

For TikTok, the hits to watch time could compound. If watch time drops 20% and ad load and pricing stay the same, ad revenue drops by roughly 20% too. If user engagement drops, the algorithm learns less, which might reduce its effectiveness further. There's a cascading effect.
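The compounding arithmetic is worth spelling out, because several separate reductions multiply rather than add. The sketch below assumes each change independently removes a fixed fraction of whatever watch time is left. The four percentages are purely illustrative placeholders, not measured or reported figures.

```python
def remaining_fraction(reductions: list[float]) -> float:
    """Fraction of watch time left after applying each reduction in turn."""
    remaining = 1.0
    for r in reductions:
        remaining *= (1.0 - r)
    return remaining


# Hypothetical per-change reductions (scroll breaks, autoplay, notifications,
# recommendation changes). These numbers are placeholders, not data.
hypothetical = [0.10, 0.05, 0.05, 0.20]
left = remaining_fraction(hypothetical)
total_drop = 1.0 - left
```

With these placeholder inputs the combined drop is about 35%, a little less than the 40% you would get by simply adding the individual figures. The sketch leaves out the feedback loop described above, where a less-informed algorithm loses effectiveness. That would push the total higher.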

At some point, the question becomes: how much can you change an addictive product before it stops being addictive? The answer might be: not enough to be viable as a business. That's the implicit threat in the Commission's action. Comply with these requirements, or face fines. But compliance might erode your business model as reported by Reuters.

Young Users and Vulnerability: The Regulatory Focus

The Commission's findings place particular emphasis on risks to minors and vulnerable adults. This isn't an afterthought. It's central to their case.

TikTok's user base skews young. Roughly 60% of TikTok users are under 30, and a significant chunk are teenagers. This demographic is particularly susceptible to addictive design for reasons grounded in neuroscience. Teenage brains are still developing, particularly the prefrontal cortex, which governs impulse control and decision-making. Teenagers are more vulnerable to dopamine-driven behavior patterns because their reward systems are highly sensitized during development as noted by The New York Times.

Vulnerable adults—people with mental health conditions, cognitive disabilities, people struggling with substance abuse—are also more susceptible to addictive design patterns. The Commission recognizes this and has made it central to their risk assessment as highlighted by Amnesty International.

TikTok does have some protections for minors built into the app. Accounts for users under 18 have default settings such as fewer recommendations during late-night hours and limits on receiving messages from strangers. But the Commission found these defaults inadequate given the underlying design's addictiveness, as noted by Courthouse News.

What makes this particularly interesting from a regulatory perspective is the implicit acknowledgment that some groups shouldn't be able to access certain product designs, even if they can technically access the platform. This challenges the notion that platforms can serve all users equally. It suggests that the same feature that's okay for a 25-year-old might be harmful for a 13-year-old, and regulation needs to account for that.

TikTok's Formal Response and Timeline

TikTok has time to respond to the Commission's preliminary findings. This is important because preliminary findings aren't final. TikTok can submit a formal response, argue against the Commission's interpretation of the evidence, propose alternative solutions, or claim that the risks identified are overstated.

Historically, this process takes months. TikTok's response will likely include:

- Pushback on the scientific claims (arguing that correlation between features and usage isn't proof of design intent to be addictive)

- Alternative explanations for high engagement (users genuinely like the content)

- Data about their existing safeguards and their effectiveness

- Proposed compromises (maybe they'd disable autoplay for minors but not adults)

- Arguments about proportionality (fining them 6% of global revenue is disproportionate)

The Commission will review TikTok's response and decide whether to proceed to final findings. If they do, that's when binding sanctions come into play. TikTok could face fines, be required to submit to audits, or be forced to make specific changes as reported by Reuters.

This timeline gives TikTok a window to negotiate. They might propose a settlement where they make some changes the Commission demands, in exchange for reduced fines or delayed enforcement. This happens regularly in EU regulatory matters.

But the key point: this isn't final yet. It's preliminary findings. That said, the Commission doesn't usually issue preliminary findings unless they're fairly confident in their case as highlighted by Amnesty International.

The recommendation engine and infinite scroll are the most scrutinized features, potentially leading to significant fines for TikTok. Estimated data.

The Broader Question: Can You Regulate Design?

Beyond TikTok specifically, the Commission's action raises a fundamental question: can regulation actually change product design in meaningful ways?

Design is often presented as a neutral choice, a matter of aesthetics and usability. But design is never neutral. Every choice—where you put buttons, what appears on the home screen, whether notifications are on by default—shapes how people interact with the product as reported by Reuters.

Regulating design means making these choices political. It means saying: infinite scroll isn't just a nice UI feature, it's a policy question. Autoplay isn't just convenient, it's a matter of public health. The recommendation algorithm isn't just an engineering problem, it's a matter of social responsibility.

This is unfamiliar territory for tech companies. They're used to being regulated on privacy (data collection), content (moderation), and competition (monopoly power). Those are regulatory domains where companies can argue about scope and proportionality but generally accept the legitimacy of the regulation.

Design regulation is different. It touches the core of how products work. Companies will inevitably argue that regulators are overstepping, that they're forcing companies to make worse products, that users prefer the current design (because the current design is optimized to be preferred).

And there's a real question about whether regulators have the expertise to mandate design changes. The Commission didn't say "TikTok must implement infinite scroll caps"; they said "disable infinite scroll." That's a high-level requirement that leaves implementation questions open. How exactly do you disable it? What does the user experience look like? Are there workarounds that recreate the same effect?

Despite these challenges, the Commission's action suggests regulators are willing to take on design regulation. And if they're successful in enforcing changes against TikTok, the precedent will apply to all large platforms as noted by Courthouse News.

Precedent and Contagion Effects: What This Means for Other Platforms

TikTok isn't unique in having addictive design features. Instagram has infinite scroll. YouTube has autoplay. Snapchat has streaks (a feature designed to create a daily usage habit). Facebook has notifications as discussed by the Lawsuit Information Center.

If the Commission successfully forces TikTok to disable infinite scroll, the logical next step is asking why Instagram gets to keep it. Instagram is also a Very Large Online Platform. It's also used heavily by young people. The argument for requiring Instagram to disable infinite scroll is identical to the argument for TikTok.

Internally at Meta (Instagram's parent), this is likely triggering contingency planning. What if we have to redesign Instagram? What's the minimum viable product that complies with EU regulations but doesn't destroy our business?

YouTube is probably thinking the same thing about autoplay. Snapchat about streaks. Discord about notifications.

The ripple effect could be significant. If multiple large platforms have to disable features that drive engagement, the overall effect on social media usage patterns could be substantial. Users might spend less time on social platforms overall, shift to different platforms that haven't been regulated yet, or find ways to recreate the same addictive patterns within the new constraints as reported by Reuters.

There's also a competitive angle. If European regulators force all platforms to disable certain features in EU regions, platforms operating in other jurisdictions (US, Asia, etc.) might still have those features. That creates market fragmentation. Users in Europe get a different, less engaging product than users elsewhere. That could accelerate the shift toward decentralized or regional social networks as discussed by the Atlantic Council.

The Scientific Basis: What Research Actually Shows

The Commission's findings repeatedly reference scientific research about how certain design features affect behavior and psychology. This isn't them making it up. There's a growing body of research on this topic as noted by The New York Times.

Nielsen Norman Group studies on infinite scroll show measurable impacts on user behavior. When infinite scroll is enabled, users scroll longer. They spend more time on the page. They see more content. It's not a subtle effect; it's dramatic.

Research on autoplay from YouTube and Netflix studies shows similar effects. When autoplay is on, users watch longer sessions. When it's off, they watch fewer videos.

The impact of notifications is well-documented in psychology literature. Frequent, unpredictable notifications create a variable reward schedule, the same mechanism that makes slot machines addictive. Psychologist B. F. Skinner's work on variable-ratio reinforcement showed that unpredictable rewards produce more persistent behavior than consistent rewards.

Recommendation algorithms' impact on user behavior is documented in social media research. Algorithmic amplification creates echo chambers and filter bubbles. It personalizes content in ways that increase engagement but can also increase exposure to extreme content as discussed by the Lawsuit Information Center.

What the Commission did was synthesize this research into a regulatory framework. They're not claiming anything new. They're just saying: your product's design is informed by behavioral science that you use to maximize engagement. We can see that. And we're requiring you to consider user well-being, not just engagement as highlighted by Amnesty International.

This scientific grounding makes the Commission's findings harder to dismiss as regulatory overreach. They're not banning features based on ideology. They're banning them based on evidence as noted by Courthouse News.

Figure: Estimated timeline from preliminary findings to potential sanctions, typically around 8 months, giving TikTok time to negotiate and respond (estimate based on typical regulatory timelines).

Potential Compliance Approaches and Alternative Solutions

TikTok has options for how to respond to the Commission's findings. They're not limited to complying exactly as stated.

One approach: graduated changes based on age. Disable infinite scroll for minors but not adults. Limit notifications for users under 18. Show the warning screen more prominently. This splits the difference: protecting young users while maintaining the product for adults as noted by The New York Times.

Another approach: opt-in rather than opt-out. Make infinite scroll a feature users can enable rather than disable. Default to paginated content. This shifts the burden to users actively choosing a more engaging experience rather than being passively subjected to it.

A third approach: algorithmic changes without feature removal. Keep infinite scroll but change how the recommendation algorithm works so it shows a more diverse range of content rather than optimizing solely for engagement. This addresses the underlying concern (personalization driving compulsion) without changing the UI as highlighted by Amnesty International.
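One way such a change could work is a greedy re-ranker that discounts topics already present in the feed. The sketch below is a hypothetical illustration, not TikTok's algorithm or anything the Commission has specified. The `Candidate` fields, the `topic_penalty` parameter, and the scores are all invented for the example.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class Candidate:
    video_id: str
    topic: str
    engagement: float  # hypothetical predicted-engagement score in [0, 1]


def rerank_with_diversity(candidates, k, topic_penalty=0.3):
    """Greedily pick k videos, discounting topics already chosen for the feed."""
    remaining = list(candidates)
    chosen = []
    topic_counts = Counter()
    while remaining and len(chosen) < k:
        # Each prior pick from the same topic lowers a candidate's adjusted score.
        best = max(
            remaining,
            key=lambda c: c.engagement - topic_penalty * topic_counts[c.topic],
        )
        chosen.append(best)
        topic_counts[best.topic] += 1
        remaining.remove(best)
    return chosen


pool = [
    Candidate("a", "pranks", 0.95),
    Candidate("b", "pranks", 0.90),
    Candidate("c", "pranks", 0.85),
    Candidate("d", "cooking", 0.70),
    Candidate("e", "science", 0.65),
]

print([c.video_id for c in rerank_with_diversity(pool, k=3, topic_penalty=0.0)])  # ['a', 'b', 'c']
print([c.video_id for c in rerank_with_diversity(pool, k=3)])                     # ['a', 'd', 'e']
```

With the penalty at zero the feed is three prank videos. With the penalty on, lower-scoring cooking and science videos displace two of them, which is the engagement-for-diversity trade this approach implies.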

A fourth approach: time-based limitations. Infinite scroll stays enabled from 9 AM to 9 PM. After 9 PM, the feed switches to paginated content. This acknowledges that different times of day have different implications for user well-being.
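The age-based, opt-in, and time-of-day ideas can all be expressed as a single policy check. The sketch below layers them together purely for illustration. The article presents them as alternatives, and the type names, field names, and the choice to combine them are assumptions rather than anything TikTok or the Commission has proposed.

```python
from dataclasses import dataclass
from datetime import time
from enum import Enum


class FeedMode(Enum):
    INFINITE = "infinite"
    PAGINATED = "paginated"


@dataclass(frozen=True)
class UserContext:
    age: int
    opted_in_to_infinite_scroll: bool
    local_time: time


DAYTIME_START = time(9, 0)   # 9 AM, from the time-based approach
DAYTIME_END = time(21, 0)    # 9 PM


def resolve_feed_mode(user: UserContext) -> FeedMode:
    """Return the feed mode a user should get under the combined policy."""
    # Age gate: minors never get infinite scroll.
    if user.age < 18:
        return FeedMode.PAGINATED
    # Opt-in default: adults get pagination unless they chose otherwise.
    if not user.opted_in_to_infinite_scroll:
        return FeedMode.PAGINATED
    # Time window: infinite scroll only during daytime hours.
    if not (DAYTIME_START <= user.local_time < DAYTIME_END):
        return FeedMode.PAGINATED
    return FeedMode.INFINITE


adult_at_lunch = UserContext(age=30, opted_in_to_infinite_scroll=True, local_time=time(12, 30))
print(resolve_feed_mode(adult_at_lunch).value)  # infinite
```

Paginated is the fallback in every branch, so the more engaging mode has to be earned by passing each check rather than being the default.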

A fifth approach: honest friction. Keep infinite scroll but make it genuinely inconvenient. Every third time users try to scroll past the visible content, show a full-screen prompt asking if they really want to continue. It can't be skipped or switched off in settings; users have to answer it. Make continuing a conscious choice as noted by Courthouse News.
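A friction gate of this kind amounts to a counter plus a blocking state. The following sketch is a hypothetical illustration; the class name, method names, and return values are invented, and a real client would tie this to its UI layer.

```python
class ScrollFrictionGate:
    """Blocks every Nth request for more content until the user confirms."""

    def __init__(self, prompt_every: int = 3):
        self.prompt_every = prompt_every
        self.requests = 0
        self.awaiting_confirmation = False

    def request_more(self) -> str:
        """Called when the user scrolls past the visible content."""
        if self.awaiting_confirmation:
            # The prompt cannot be scrolled away; it stays until answered.
            return "prompt"
        self.requests += 1
        if self.requests % self.prompt_every == 0:
            self.awaiting_confirmation = True
            return "prompt"
        return "load"

    def confirm_continue(self) -> str:
        """Called only when the user explicitly chooses to keep watching."""
        self.awaiting_confirmation = False
        return "load"


gate = ScrollFrictionGate()
print([gate.request_more() for _ in range(4)])  # ['load', 'load', 'prompt', 'prompt']
print(gate.confirm_continue())                  # load
```

Scrolling again while the prompt is up just returns the prompt, so the only path to more content is the explicit confirmation.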

Some of these approaches will be more palatable to TikTok than others. Some will be acceptable to the Commission. The negotiation will likely land on something that reduces engagement somewhat but doesn't destroy the product entirely as reported by Reuters.

Global Regulatory Implications: A Fragmented Future

One consequence of the Commission's action is to create regulatory fragmentation. TikTok might have to operate with different features in different regions.

In the EU, infinite scroll gets disabled. In the US, it stays. In India, it stays. In Brazil, TikTok might face different requirements as discussed by the Atlantic Council.

This isn't unprecedented. Facebook already operates with different privacy features in Europe (complying with GDPR) than in the US. Google's search results differ based on local law. Companies adjust their products by region based on regulatory requirements.
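One common way to ship different behavior by region is a table of per-region overrides layered on top of global defaults. The sketch below is hypothetical: the flag names and region codes are invented, and it does not describe how TikTok or any other company actually configures its apps.

```python
# Hypothetical flag names and region codes, for illustration only.
GLOBAL_DEFAULTS = {
    "infinite_scroll": True,
    "autoplay": True,
    "night_notifications": True,
}

REGION_OVERRIDES = {
    "EU": {
        "infinite_scroll": False,
        "autoplay": False,
        "night_notifications": False,
    },
}


def features_for_region(region_code: str) -> dict[str, bool]:
    """Merge the global defaults with any overrides for the given region."""
    return {**GLOBAL_DEFAULTS, **REGION_OVERRIDES.get(region_code, {})}


print(features_for_region("EU")["infinite_scroll"])  # False
print(features_for_region("US")["infinite_scroll"])  # True
```

Regions without an entry fall through to the defaults, which is what makes the fragmentation cheap to implement but visible to users who compare versions.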

But social media is global in ways that other services aren't. A significant portion of TikTok's appeal is that it's the same experience worldwide. If European TikTok becomes meaningfully different from global TikTok, that creates friction.

Further, a fragmented approach creates incentives for users in regulated regions to circumvent regulations. If European teens realize that TikTok in the US has infinite scroll and they don't, they might use VPNs to access the US version. This is an enforcement challenge regulators will have to address as reported by Reuters.

The likely evolution: either regulations converge globally (unlikely in the near term), or platforms develop "compliant regions" where features are stripped back. This could accelerate the development of localized social platforms that are designed for specific regions from the start as noted by Courthouse News.

The Addiction Definition Problem: Where Does Design End and Addiction Begin?

There's a philosophical problem lurking in the Commission's findings. When does a product cease to be engaging and become addictive? Where's the line?

Infinite scroll is engaging. That's why it's widely adopted. Does engagement equal addiction? Is every engaging product addictive by definition? Or is there a meaningful distinction between "wow, this is cool, I want to use it more" and "I can't stop using this even though I want to"?

The Commission addresses this by pointing to psychological research on compulsive behavior and loss of self-control. Their claim is that TikTok's design doesn't just make the product engaging; it makes engagement compulsive. The mechanism is the variable reward schedule combined with infinite content supply combined with personalization as noted by The New York Times.

But operationalizing this distinction is hard. How much of users' watch time is because TikTok is genuinely great content versus because the design is compulsive? How do you separate product quality from product design in the addiction equation?

TikTok's likely argument: people use TikTok so much because the content is genuinely good, not because the design is addictive. Disabling features will reduce watch time, but it's reducing use of a product people actually want to use, not eliminating compulsive behavior as reported by Reuters.

The Commission's likely response: the fact that you've optimized the content to be appealing doesn't change the fact that you've also optimized the interface to be compulsive. Separate the two. Create a product with great content but less compulsive design as highlighted by Amnesty International.

This debate will likely continue through TikTok's formal response and the Commission's final determination. But the practical result is that the burden is shifting: companies have to prove that their design choices are primarily about user experience rather than engagement maximization as noted by Courthouse News.

User Experience in a Regulated World: What Would It Look Like?

Imagine opening TikTok after the Commission's requirements are implemented. What's the experience?

You open the app. You see a feed of videos, three or four at a time. You watch one. It ends. The next video doesn't automatically start. You see a button: "Show next video." You tap it. The next video plays.

Notifications don't come in after 10 PM. Or they come with a longer delay. Or they don't happen at all for minors.
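A quiet-hours rule like this mostly comes down to handling a time window that crosses midnight. Here is a minimal sketch, assuming a 10 PM start as described above and a 7 AM end, which is an invented value since no morning cutoff is given. The function and parameter names are hypothetical.

```python
from datetime import time

QUIET_START = time(22, 0)  # 10 PM, the cutoff mentioned above
QUIET_END = time(7, 0)     # assumed morning cutoff; the article does not give one


def in_quiet_hours(now: time, start: time = QUIET_START, end: time = QUIET_END) -> bool:
    """True if `now` falls in [start, end), including windows that cross midnight."""
    if start <= end:
        return start <= now < end
    # Window wraps past midnight, e.g. 22:00 to 07:00.
    return now >= start or now < end


def should_deliver_push(now: time, is_minor: bool, mute_minors_entirely: bool = False) -> bool:
    """Decide whether a push notification may be sent right now."""
    if is_minor and mute_minors_entirely:
        return False
    return not in_quiet_hours(now)


print(should_deliver_push(time(23, 30), is_minor=False))  # False
print(should_deliver_push(time(12, 0), is_minor=False))   # True
```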

When you've been scrolling for a while, you get a prompt: "You've been watching for 20 minutes. Want to take a break?" It's a hard stop, not a banner you can ignore. The only way forward is actively dismissing it.
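A hard-stop break screen can be modeled as a timer that only resets when the user acknowledges it. The sketch below assumes the 20-minute threshold from the example above. The class and method names are hypothetical.

```python
from datetime import datetime, timedelta


class BreakReminder:
    """Tracks continuous watch time and signals when a break screen is due."""

    def __init__(self, session_start: datetime, threshold: timedelta = timedelta(minutes=20)):
        self.last_acknowledged = session_start
        self.threshold = threshold

    def must_show_break_screen(self, now: datetime) -> bool:
        """True once the threshold has passed since the last acknowledgement."""
        return now - self.last_acknowledged >= self.threshold

    def acknowledge(self, now: datetime) -> None:
        """The user actively dismissed the break screen; restart the clock."""
        self.last_acknowledged = now


start = datetime(2026, 1, 1, 20, 0)
reminder = BreakReminder(start)
print(reminder.must_show_break_screen(start + timedelta(minutes=19)))  # False
print(reminder.must_show_break_screen(start + timedelta(minutes=20)))  # True
```

Because nothing but `acknowledge` resets the clock, the screen stays due until the user makes an explicit choice.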

The recommendation algorithm still learns what you like, but it's balanced against promoting a diverse feed. Sometimes you get recommendations that are interesting but not hyper-optimized for your specific dopamine triggers as reported by Reuters.

Would this still be TikTok? Functionally, yes. You can still see short videos. You can still see creators you follow. You can still discover new content.

Would it be as engaging? Almost certainly not. Watch time would drop. Daily active users might drop. But the core product remains.

Actually, this raises an interesting question. If TikTok remains viable after these changes, doesn't that suggest that the "addictive" features aren't actually necessary for the product to work? That they're optimization on top of an already-good product? If TikTok can function without infinite scroll, autoplay, and aggressive notifications, that suggests the company added those features specifically to maximize engagement, not because they were essential to the user experience as highlighted by Amnesty International.

Economic Questions: Viability and Sustainability

Can TikTok sustain its business model with these features disabled? This is the underlying question that will drive negotiation with regulators.

TikTok's revenue model is advertising. Advertisers pay based on impressions and engagement. More watch time means more impressions. More impressions means more revenue. Disable infinite scroll, autoplay, and aggressive notifications, and you're reducing watch time. Directly.

How much? Unknown. But models suggest watch time could fall by 20-40%. For an ad-funded company valued in the hundreds of billions, a comparable drop in revenue would be existential as noted by The New York Times.

There are workarounds. TikTok could pursue a subscription model, charging users for ad-free access or premium features. They could sell more data to advertisers (though European regulations limit this). They could increase the price they charge advertisers per impression. But none of these fully offset the impact of lower engagement.
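A rough worked example shows why higher ad prices alone are a hard sell. It rests on a simplifying assumption that is not from the article: ad impressions, and therefore revenue, scale linearly with watch time. Revenue is expressed relative to a baseline of 1.0, so no real financial figures are involved.

```python
def relative_ad_revenue(watch_time_drop: float, price_uplift: float = 0.0) -> float:
    """Revenue relative to a baseline of 1.0, assuming impressions track watch time."""
    return (1 - watch_time_drop) * (1 + price_uplift)


def price_uplift_to_break_even(watch_time_drop: float) -> float:
    """Per-impression price increase needed to return revenue to the baseline."""
    return 1 / (1 - watch_time_drop) - 1


for drop in (0.20, 0.40):
    print(
        f"{drop:.0%} less watch time -> revenue x{relative_ad_revenue(drop):.2f}, "
        f"break-even price rise {price_uplift_to_break_even(drop):.0%}"
    )
# 20% less watch time -> revenue x0.80, break-even price rise 25%
# 40% less watch time -> revenue x0.60, break-even price rise 67%
```

Under that assumption, a 20-40% drop in watch time would require advertisers to pay 25-67% more per impression just to keep revenue flat.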

This creates a negotiating dynamic. TikTok can argue: these requirements will make our business unviable, we'll face significant fines if you try to enforce them, so let's find a compromise. The Commission can respond: that's your problem. Adapt your business model as reported by Reuters.

Historically, tech companies have found ways to adapt. YouTube survived scaling back its recommendations of conspiracy content. Netflix adapted to password sharing limitations. Instagram shifted toward messaging features. Companies can evolve their business models if forced to as noted by Courthouse News.

But it's genuinely costly, and companies will fight hard to avoid it.

The Future of Social Media Design: A New Era

If the Commission's action against TikTok succeeds, and if other regulators follow suit, the era of unconstrained engagement optimization in social media is over. That doesn't mean the end of social media. It means the end of social media designed purely for maximum engagement as discussed by the Atlantic Council.

We might see the emergence of new design philosophies. Time-bounded engagement. Friction-by-default. Algorithmic diversity requirements. Notification constraints.

Alternatively, we might see the rise of new platforms that are designed from the ground up to comply with these regulatory requirements. Instead of retrofitting compliance onto TikTok or Instagram, new platforms could be built to balance engagement with user well-being from the start.

We might also see the rise of decentralized social networks that aren't subject to EU regulation. If you build a protocol rather than a platform, if users host their own data, if there's no central server collecting behavioral data, the regulatory requirements become harder to enforce.

Or we might see the migration of young users to platforms based outside the EU or to private group chats on encrypted messaging apps, circumventing regulation entirely as reported by Reuters.

The medium-term future likely involves a mix of these outcomes. Some platforms adapt. Some new platforms emerge. Some users migrate. Some use VPNs to access unregulated versions.

But the broader trend is clear: the black-box optimization of engagement at the expense of user well-being is becoming politically and legally untenable. Regulators are watching. Companies can't count on being ignored. Design choices have consequences as noted by Courthouse News.

The Role of Science and Evidence in Tech Regulation

One underappreciated aspect of the Commission's action is its reliance on scientific evidence. This isn't regulation by fiat. It's regulation based on research about how product design affects human behavior as noted by The New York Times.

This sets a precedent for how tech regulation might evolve. Instead of regulators making decisions based on politics or ideology, they're making decisions based on evidence. Does this design feature cause psychological harm? Here's the research. Here's how we know. Here's what needs to change.

This approach has advantages and disadvantages. The advantage is credibility. Companies can't dismiss it as arbitrary. If the science says infinite scroll contributes to compulsive behavior, that's hard to argue with.

The disadvantage is that it requires regulators to develop expertise in behavioral science, psychology, and neuroscience. That's not typically where regulatory expertise lies. Regulators are trained in law, not science. There's a risk of misapplication or oversimplification of scientific findings.

But the Commission's approach suggests they're taking this seriously. They're consulting with researchers, reviewing the scientific literature, and basing their findings on evidence. This could become a model for tech regulation globally as noted by Courthouse News.

The implication: tech companies can't rely on saying "the science is unclear." If there's published research suggesting harm, they need to respond to that research, not dismiss it as reported by Reuters.

Conclusion: The Beginning of Regulatory Accountability

The Commission's preliminary findings against TikTok aren't the end of the story. They're the beginning of a new era where product design choices have regulatory consequences.

TikTok will respond, negotiate, and likely make some changes. The Commission will issue final findings. There will likely be fines, though probably negotiated lower than the stated maximum. TikTok will survive, though probably changed as highlighted by Amnesty International.

But the precedent will stick. Other regulators will look at this case and ask: why aren't we doing the same? Why is Instagram allowed to have infinite scroll? Why is YouTube allowed to have aggressive autoplay? If TikTok is being required to change, shouldn't everyone be, as noted by Courthouse News?

This doesn't mean the end of engaging social media. It means the end of social media designed solely to maximize engagement regardless of impact on users. Companies will have to prove they're considering user well-being in their design decisions. That's a different era from where we've been.

For users, particularly young users, this might mean less addictive social media experiences. Less infinite scroll. Less autoplay. More friction. Less time spent on social media overall as reported by Reuters.

For companies, it means adapting business models, finding new ways to engage users without being purely extractive, and accepting that profit maximization has limits when it conflicts with human well-being.

The Commission's action isn't radical. It's not banning social media or imposing censorship. It's saying: your design choices have consequences. Prove you're considering user well-being. If you aren't, change as highlighted by Amnesty International.

That's becoming the new normal for tech regulation. And the TikTok case is just the beginning.

FAQ

What exactly did the European Commission accuse TikTok of doing?

The Commission found that TikTok deliberately designed addictive features into its app without adequately assessing how these design choices could harm users, particularly minors and vulnerable adults. Specifically, they cited infinite scroll, autoplay, push notifications, and the recommendation algorithm as features that "fuel the urge to keep scrolling and shift the brain of users into 'autopilot mode'." The Commission concluded that TikTok disregarded important indicators of compulsive use patterns and failed to implement meaningful safeguards as noted by The New York Times.

What is the Digital Services Act and why does it matter?

The Digital Services Act is European Union legislation that establishes comprehensive rules for how large online platforms must operate. It requires Very Large Online Platforms (those with at least 45 million average monthly active users in the EU) to assess systemic risks their services create and take action to mitigate those risks. The DSA is groundbreaking because it shifts regulatory focus from individual issues (like privacy) to the overall platform operation, requiring companies to prove they're considering user harms in their design choices as discussed by the Atlantic Council.

What features did the Commission specifically demand be changed or disabled?

The Commission demanded that TikTok disable infinite scroll, remove autoplay functionality, implement meaningful screen time breaks, restrict push notifications (particularly during evening and nighttime hours for minors), and fundamentally redesign its recommendation algorithm to account for user well-being rather than purely optimizing for engagement. The Commission rejected TikTok's existing parental controls and screen time management tools as inadequate because they're too easy to bypass as noted by Courthouse News.

How severe are the potential penalties TikTok faces?

TikTok could face fines of up to 6% of global annual turnover for confirmed breaches of the Digital Services Act. This could amount to billions of dollars depending on TikTok's turnover. Additionally, the Commission could require ongoing audits, impose operational constraints, or demand specific design modifications with significant financial penalties for non-compliance as reported by Reuters.
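The ceiling is a simple percentage, which the short sketch below computes. The input figure is a deliberately round, hypothetical number chosen for illustration. It is not TikTok's actual turnover, and the function name is invented.

```python
MAX_FINE_PERCENT = 6  # the 6% ceiling cited above


def max_fine(global_annual_turnover: int) -> int:
    """Upper bound on the fine, in the same currency units as the input."""
    return global_annual_turnover * MAX_FINE_PERCENT // 100


hypothetical_turnover = 10_000_000_000  # illustrative only, not a real figure
print(f"{max_fine(hypothetical_turnover):,}")  # 600,000,000
```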

Why is this decision particularly concerning for minors and young people?

The Commission emphasized that teenagers and younger users are especially vulnerable to addictive design patterns due to ongoing brain development, particularly in the prefrontal cortex, which governs impulse control and decision-making. Young users are more susceptible to variable reward schedules (which notifications and autoplay create) and are more likely to have their sleep disrupted by evening notifications and late-night engagement habits. Additionally, algorithmic personalization can create echo chambers that magnify risks like exposure to harmful content or reinforcement of concerning behaviors as noted by The New York Times.

What happens next in the process, and will TikTok definitely have to comply?

These are preliminary findings, not final sanctions. TikTok has time to submit a formal response arguing against the Commission's interpretation, proposing alternative solutions, or claiming the identified risks are overstated. The Commission will review TikTok's response and then issue final findings. If they confirm the violations, they'll impose binding sanctions and required changes. This process typically takes several months, and companies can negotiate settlements. However, the Commission doesn't usually issue preliminary findings unless fairly confident in their case as highlighted by Amnesty International.

Could other platforms like Instagram, YouTube, or Snapchat face similar actions?

Yes, this is a significant concern for all Very Large Online Platforms operating in the EU. Instagram has infinite scroll and similar recommendation algorithms. YouTube has aggressive autoplay. Snapchat has design features like streaks that encourage daily usage habits. If the Commission successfully enforces changes against TikTok, the logical precedent would apply to these platforms as well. This could trigger a wave of regulatory action requiring design changes across the social media industry as reported by Reuters.

Would these changes actually work to reduce addictive usage patterns?

Based on behavioral research, yes. Studies on infinite scroll, autoplay, and notification impacts show measurable behavioral effects. Removing these features or adding friction would likely reduce watch time and engagement. However, the effectiveness depends on implementation details. For example, if screen time limits are easy to bypass, they won't work. If notifications are simply moved to slightly later times but still frequent, impact will be limited. The Commission is essentially requiring that these changes be meaningful, not performative as noted by Courthouse News.

Could these regulatory requirements make TikTok financially unviable in Europe?

That's a possibility TikTok will argue in their response. Significant reductions in engagement could reduce watch time by 20-40%, which directly impacts advertising revenue. However, other companies have adapted to regulatory requirements that reduced profitability. TikTok could develop subscription models, charge higher per-impression rates to advertisers, or find other revenue sources. The Commission's response will likely be that business viability isn't their concern if it depends on addictive design that harms users as reported by Reuters.

What's the difference between making a product engaging versus addictive?

The Commission bases this distinction on psychological research about compulsive behavior. They argue that an engaging product is something users choose to use regularly because they like it. An addictive product is something users can't stop using even when they want to, because the design hijacks their reward systems and reduces self-control. The key difference is whether users feel agency in their usage decisions. Infinite scroll removes that agency by providing no natural stopping point. Autoplay removes it by defaulting to continued use. These features don't just make the product appealing; they make not using it require active effort as noted by The New York Times.

Creating a Framework for the Future

What's ultimately happening with TikTok is the establishment of a new regulatory framework for how technology companies can design products. The Commission is essentially saying: if your design is optimized purely for engagement without considering user well-being, we will require you to change it. This is a significant shift from the hands-off regulatory environment tech companies have enjoyed for decades as reported by Reuters.

The implications extend far beyond TikTok. Every tech company building consumer-facing products is watching this case carefully. Design decisions that maximize engagement without considering psychological impacts are becoming regulatory liabilities. Companies will need to invest in assessing and documenting user impacts. They'll need to build products with intentional friction points. They'll need to justify their design choices based on user well-being, not just engagement metrics as noted by Courthouse News.

This doesn't spell the end of social media or even of engaging social media. It means the end of unaccountable design optimization. And that's a fundamentally good thing for users, even if it's more expensive for companies as highlighted by Amnesty International.

Key Takeaways

- The European Commission's preliminary findings accuse TikTok of deliberately designing addictive features (infinite scroll, autoplay, push notifications) that maximize engagement while ignoring user well-being as noted by The New York Times.

- The Digital Services Act requires Very Large Online Platforms to assess systemic risks and mitigate harms, shifting the burden of proof from regulators to companies as discussed by the Atlantic Council.

- Potential penalties of up to 6% of global annual turnover incentivize compliance, but fundamental business model changes may be required as reported by Reuters.

- This case sets a precedent for design regulation that could extend to all major social platforms, fundamentally reshaping product development approaches as noted by Courthouse News.

- Scientific evidence on behavioral psychology and variable reward schedules, rather than ideological argument, provides the regulatory grounding for design change requirements as highlighted by Amnesty International.
