The Reality of AI-Powered Misinformation in 2025

It happened faster than anyone could fact-check it. A detailed post appeared on Reddit claiming insider knowledge about a food delivery app's wage theft scheme. The author presented himself as a drunk whistleblower typing from a library, desperate to expose corporate exploitation. The story was emotionally compelling, the allegations credible, and the audience ravenous for the truth.

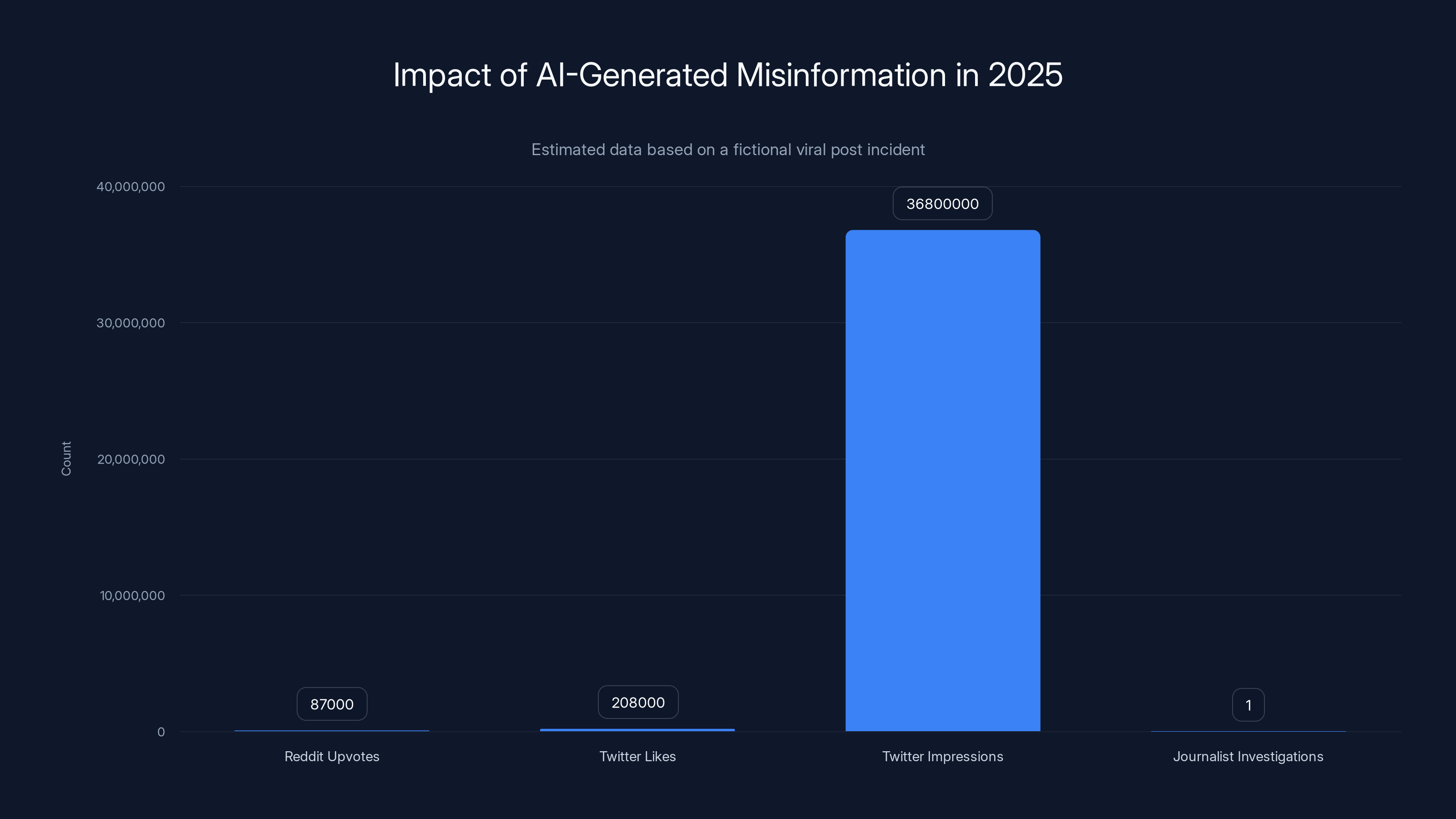

Within hours, the post rocketed to 87,000 upvotes. It crossed to Twitter, where it accumulated 208,000 likes and 36.8 million impressions. Major tech journalists started investigating. News outlets began reaching out to the company for comment. By the time anyone realized the entire thing was artificial, the damage was irreversible.

Here's what makes this incident genuinely terrifying for the internet in 2025: the post wasn't just a lie. It was a perfectly constructed AI-generated fiction designed to exploit everything we know about how viral content works. The author included screenshots of an employee badge. There was an eighteen-page internal document detailing how the company used AI algorithms to calculate a "desperation score" for drivers. The narrative had coherence, emotion, and specificity.

One journalist spent days investigating it, treated it as a genuine leak, and came close to publishing a major story. That's not a failure of journalism. That's a failure of detection technology. And it reveals something uncomfortable about the current state of internet trust in 2025.

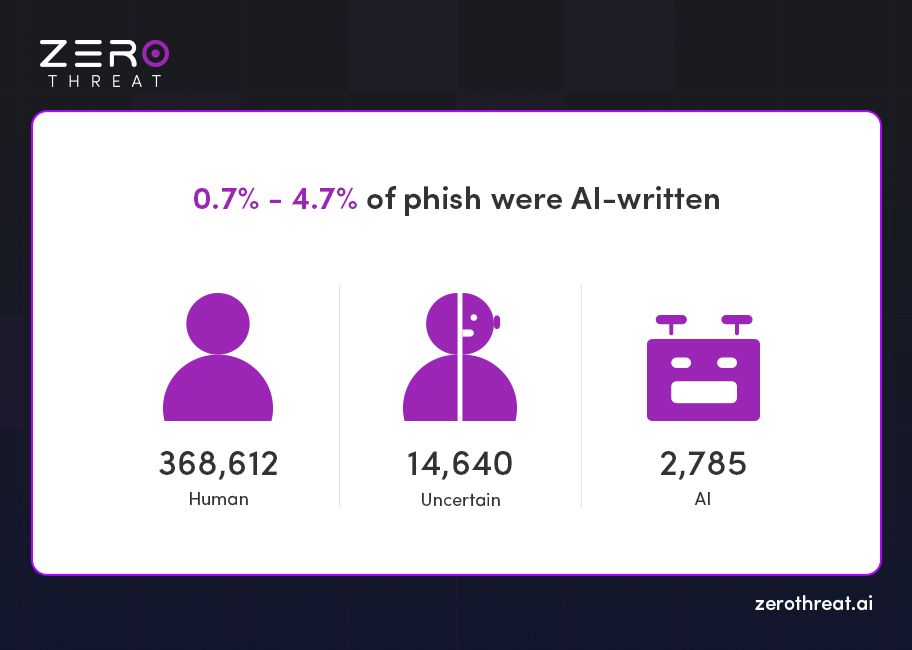

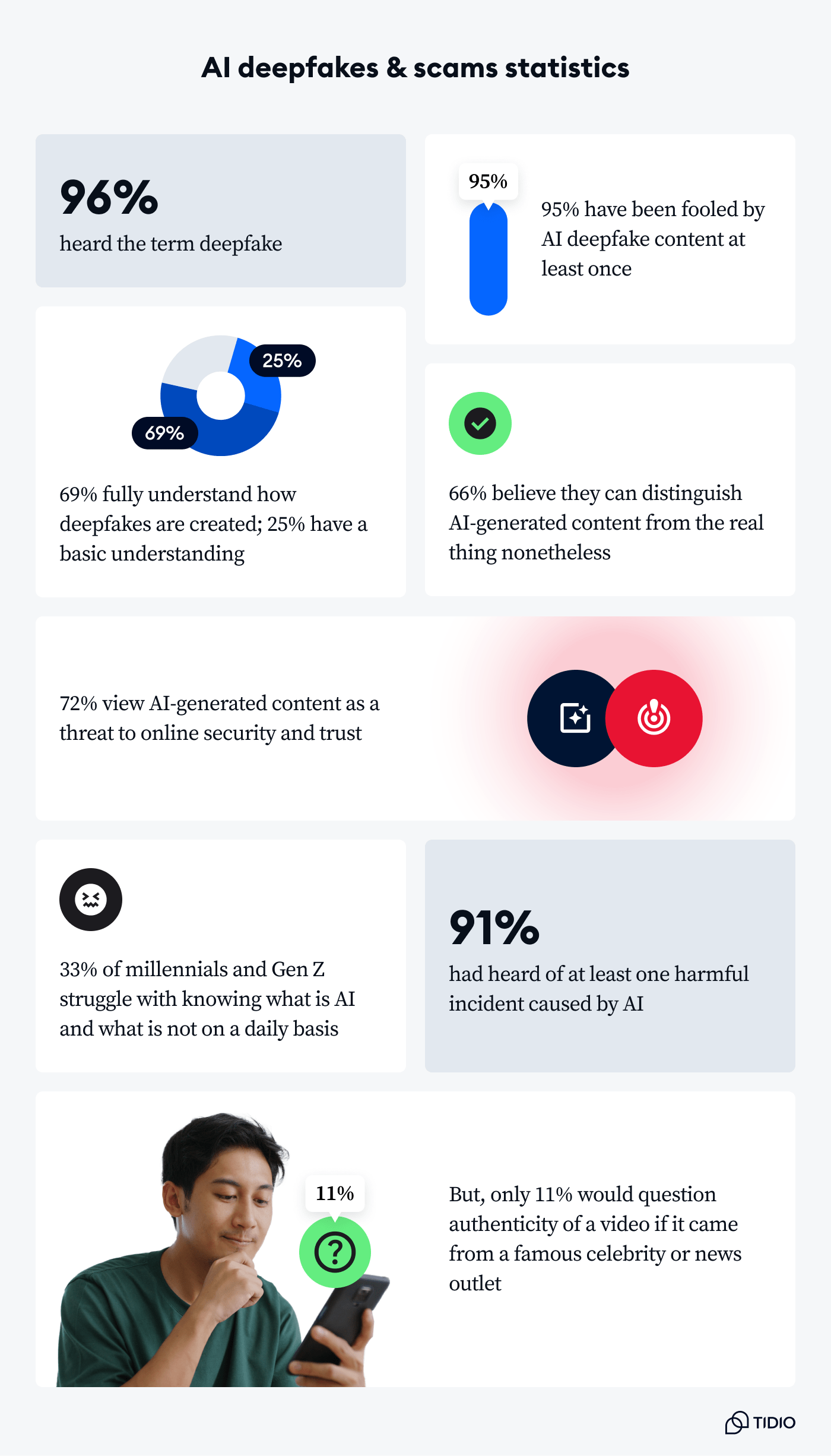

This wasn't the first AI hoax that week on Reddit. It wasn't even the only food delivery company misinformation spreading simultaneously. The fact that multiple viral AI deceptions can exist in parallel, each fooling thousands before debunking, points to a fundamental problem: we're losing the ability to distinguish real from synthetic content at scale.

TL;DR

- AI-generated viral posts now fool professional journalists: A fake Reddit whistleblower accumulated 87,000 upvotes and 36.8 million cross-platform impressions before being debunked.

- Detection tools exist but aren't reliable: Google's SynthID can catch some AI images, but most multimedia detection remains inconsistent.

- Damage happens before debunking: By the time a post is verified as fake, it's already spread to millions and shaped public perception.

- Coordinated AI misinformation is becoming systematic: Companies are paying for AI-generated "organic" content on social platforms to drive engagement.

- The gap between creation and detection is widening: AI tools generate convincing content in minutes, but verification takes days or weeks.

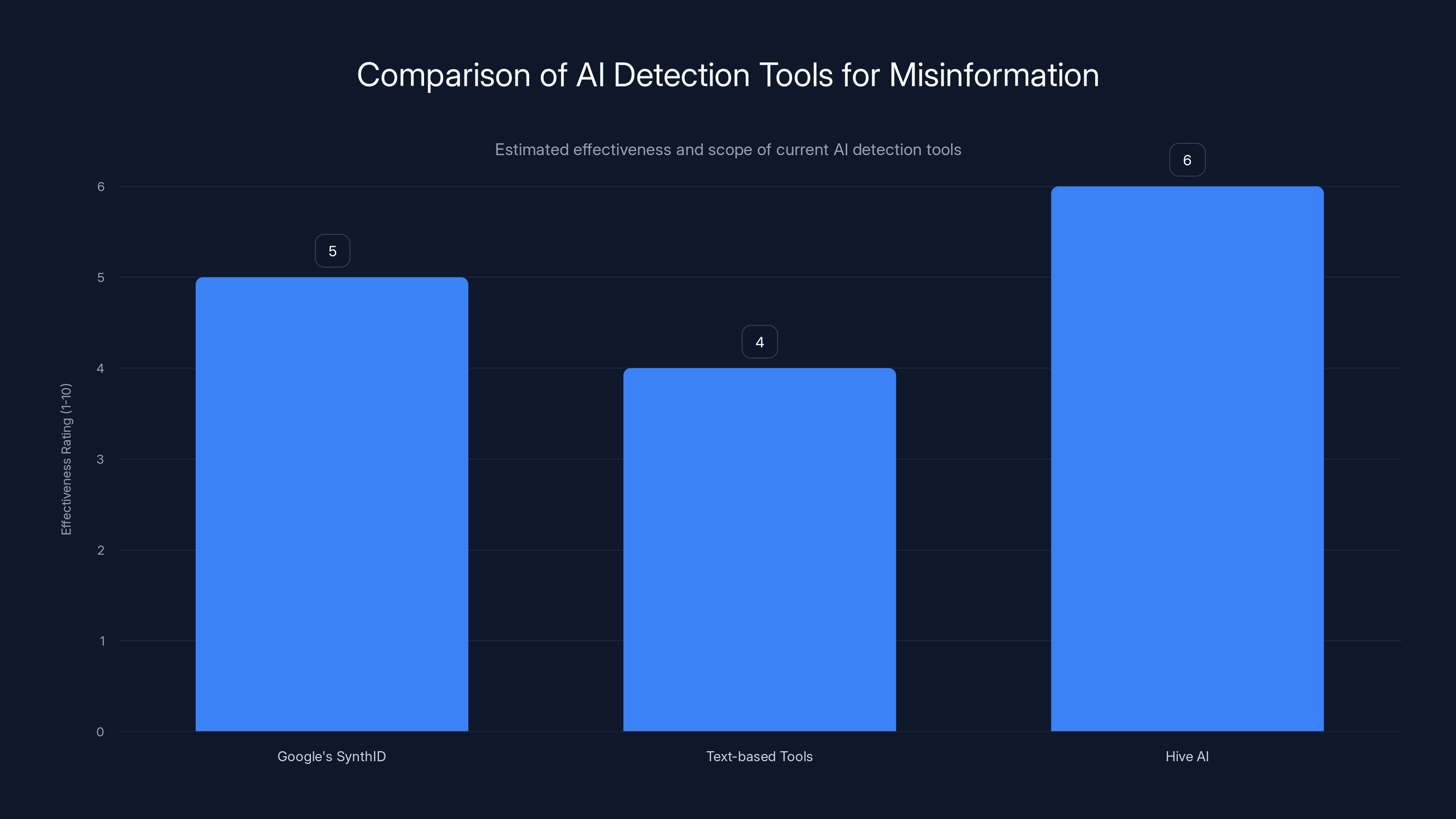

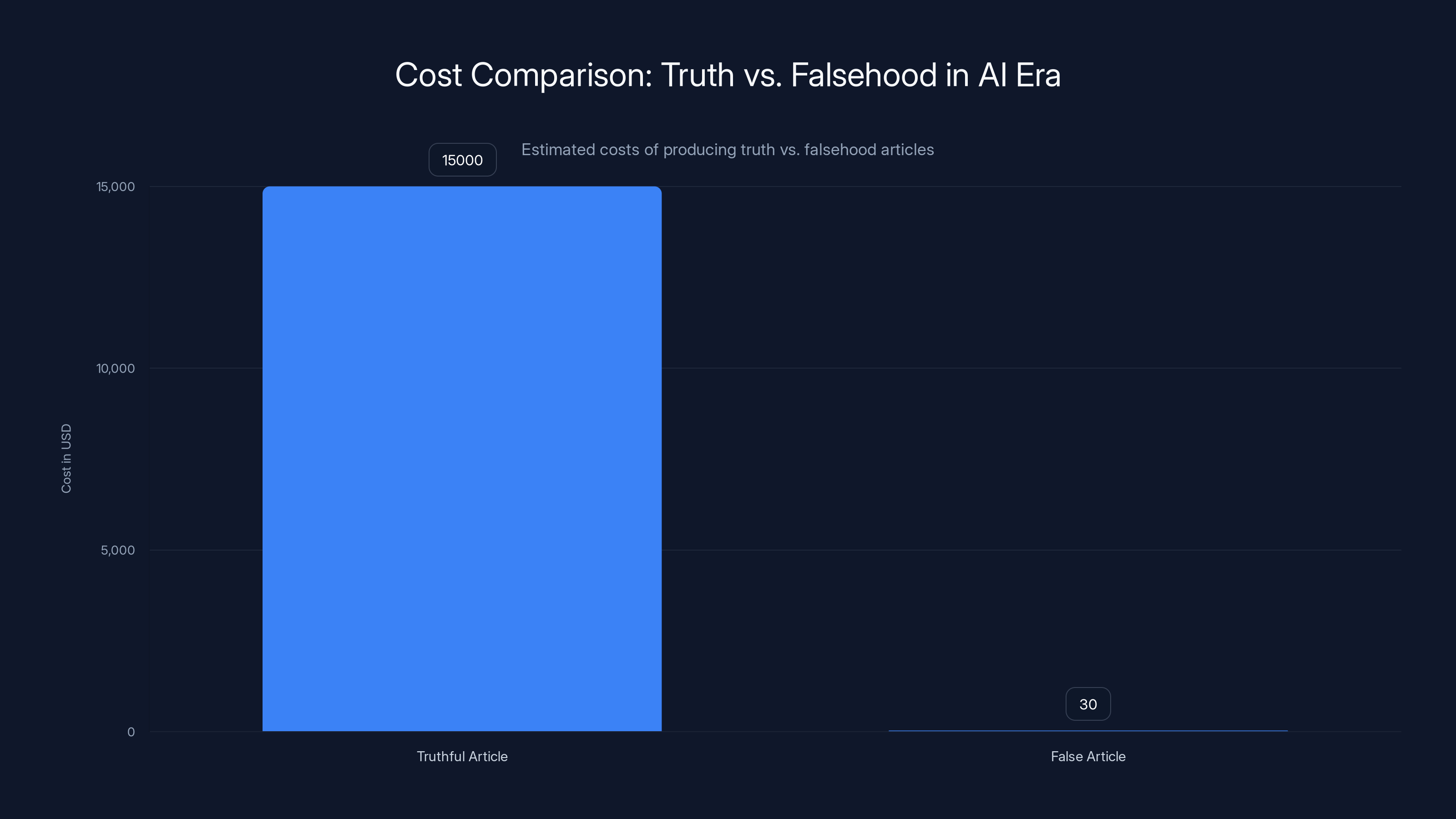

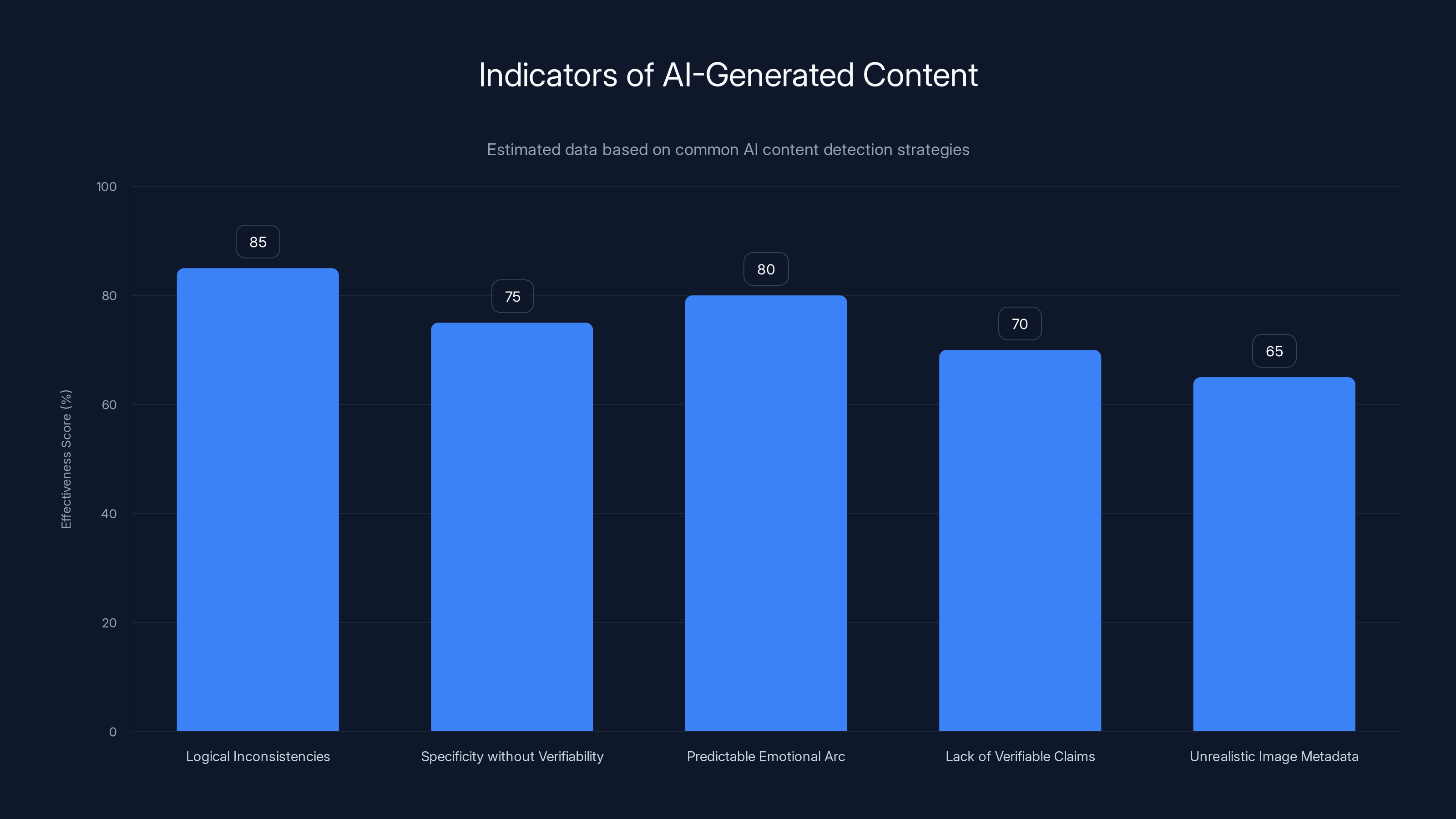

Current AI detection tools have varying effectiveness, with Hive AI rated slightly higher due to its broader focus, despite limitations. Estimated data.

Why This Matters Now: The Credibility Crisis of 2025

Five years ago, a fake post on Reddit would've required someone to manually type out hundreds of words and fabricate supporting documents. That person would need time, motivation, and technical knowledge to forge images. The friction created natural barriers to viral misinformation.

None of those barriers exist anymore.

Generative AI tools now produce coherent narratives faster than most people can read them. Tools like ChatGPT, Claude, and Gemini can write a convincing employee testimony, complete with industry jargon and emotional beats, in under thirty seconds. Image generation tools like Midjourney and DALL-E can create fake employee badges, internal memos, and screenshots that pass casual inspection.

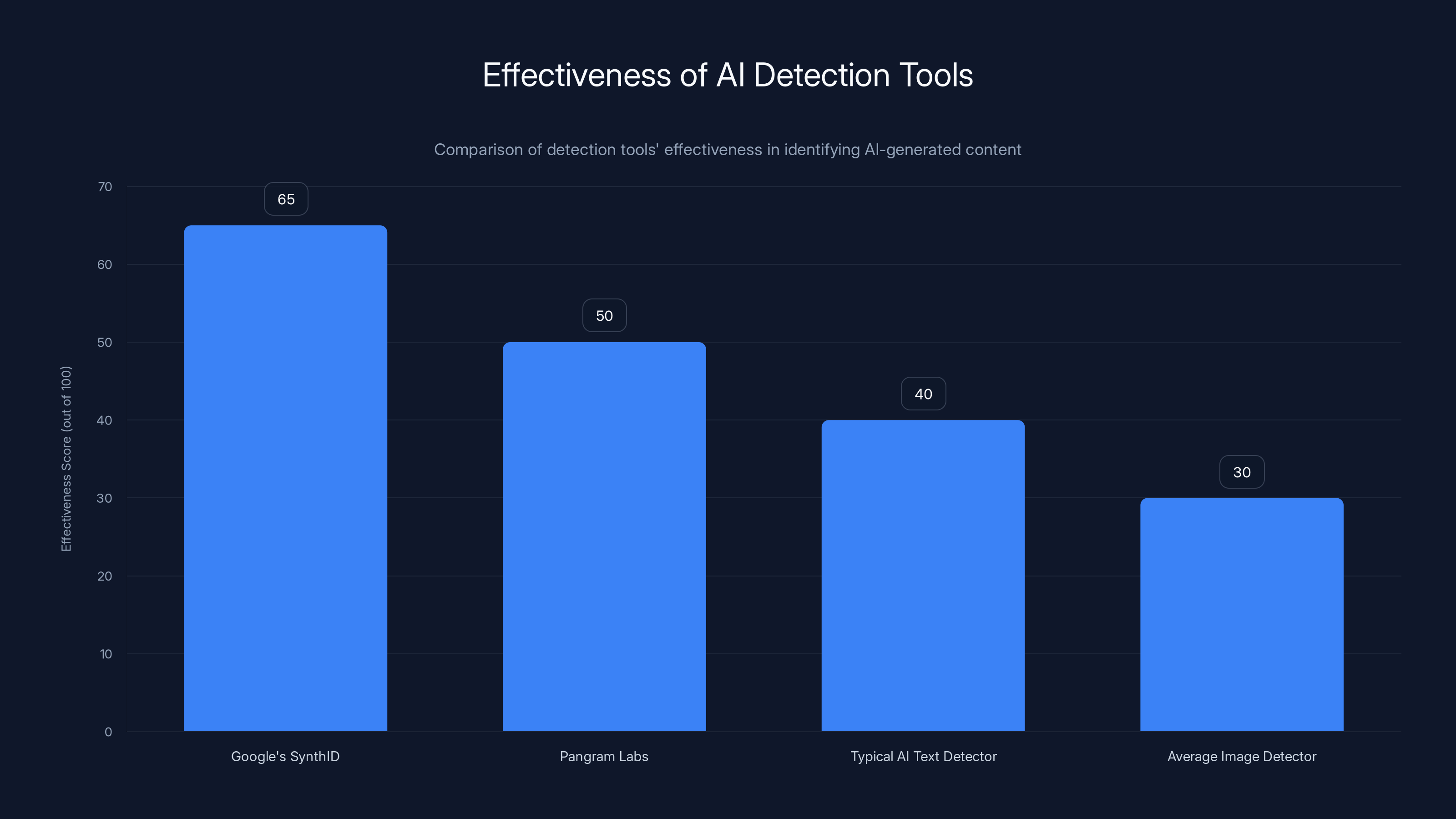

The economics have flipped. It used to be expensive (in time and effort) to create convincing lies. Now it's cheaper, faster, and easier than ever. Meanwhile, the tools designed to catch synthetic content remain unreliable and inconsistent.

What's worse is the asymmetry. A skilled person using AI can generate an 18-page internal document that looks authentic in maybe ten minutes. A journalist trying to verify that document needs to contact multiple sources, cross-reference details, reach out to the company, wait for responses, and consult technical experts. Verification takes days. Virality happens in hours.

This creates a window where misinformation operates completely unchecked. The whistleblower hoax accumulated millions of impressions during that gap. By the time it was debunked, it had already shaped how people thought about that company, that industry, and the apps on their smartphones.

Consider the psychological angle: even after debunking, people tend to remember the original claim. Cognitive scientists have described a "backfire effect," in which facts that contradict a belief entrench it instead of eliminating it, though later studies suggest this is less common than first reported. What is well established is that corrections rarely erase the first impression. So the person who read that fake post, felt angry about corporate exploitation, and shared it with friends? They're now somewhat resistant to the revelation that it was all fabricated. The belief survives the debunking.

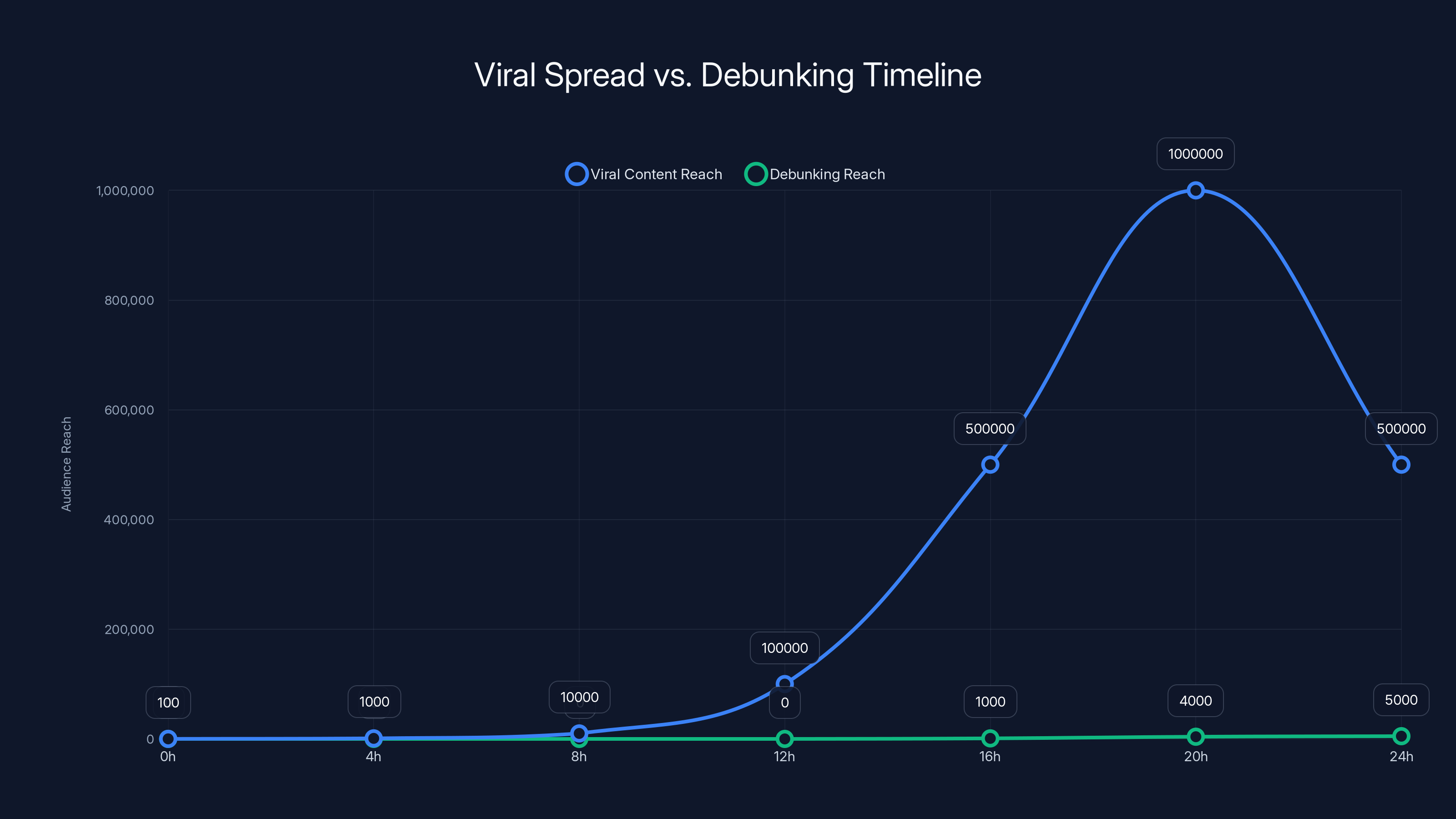

Viral content can reach millions within 24 hours, while debunking efforts lag significantly, reaching only about 4% of the original audience at peak due to slower response times. Estimated data.

How AI Detection Currently Works (And Why It's Failing)

There are actually tools designed to catch AI-generated content. They just don't work very well.

Google's SynthID watermarking system is probably the most promising approach. It embeds invisible markers into AI-generated images, and those markers are designed to survive compression, cropping, and filtering. When an image is analyzed, the markers reveal whether it came from an AI system. In theory, this is brilliant. In practice, it only works if:

- The image was generated by Google's tools and hasn't been heavily modified

- The person trying to detect it has access to Google's detection system

- The bad actor hasn't found a workaround yet

Those are three fragile conditions.

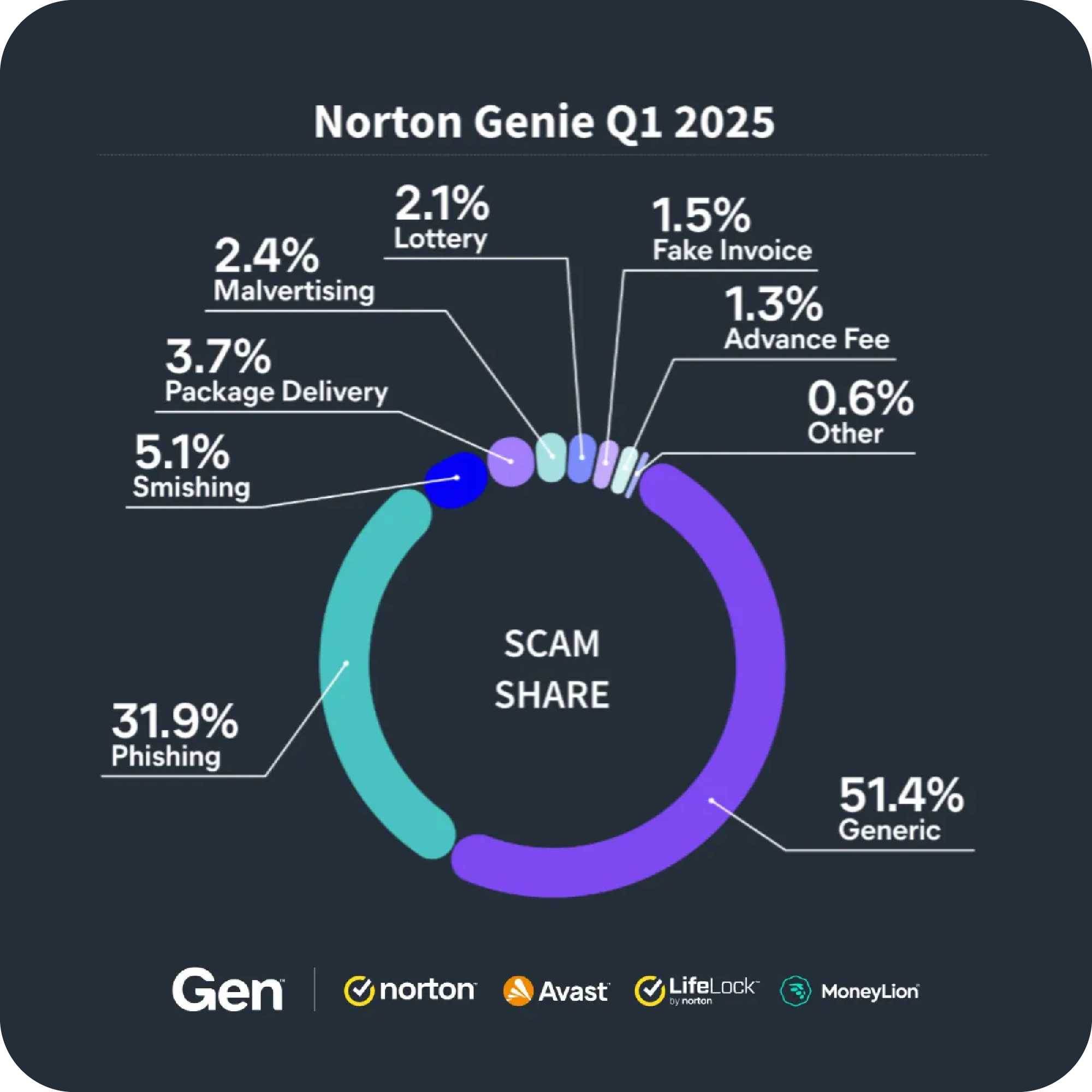

Text-based detection tools like Pangram Labs' offering can identify some AI-generated text by analyzing writing patterns, vocabulary distribution, and statistical anomalies. But large language models now produce text that mimics human writing patterns so closely that detection becomes probabilistic, not definitive. A tool might say "this is probably AI-generated" with 73% confidence. But journalists and regular internet users need certainty, not probability.
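To see why a confidence score alone falls short, it helps to run the numbers. The sketch below applies Bayes' rule to a detector's "AI-generated" flag. The detection rate, false positive rate, and base rates are hypothetical values chosen for illustration, not measurements of Pangram Labs or any other tool.

```python
def probability_ai_given_flag(base_rate: float, detection_rate: float,
                              false_positive_rate: float) -> float:
    """Bayes' rule: P(text is AI-generated | the detector flagged it)."""
    flagged_ai = detection_rate * base_rate                  # AI text that gets flagged
    flagged_human = false_positive_rate * (1.0 - base_rate)  # human text wrongly flagged
    return flagged_ai / (flagged_ai + flagged_human)


# Hypothetical detector: flags 90% of AI text and 10% of human text.
for base_rate in (0.5, 0.1, 0.01):
    p = probability_ai_given_flag(base_rate, 0.9, 0.1)
    print(f"share of AI posts {base_rate:.0%}: P(AI | flagged) = {p:.1%}")
```

Under these assumed numbers, the same flag is strong evidence when half of all posts are synthetic and weak evidence when only one post in a hundred is. The score depends on how common AI text is in the pool being checked, which the person reading the score usually doesn't know.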

The fundamental problem is this: AI detection is always reactive. New versions of generative models are released constantly. Each new model might evade existing detection methods. Detection tools then need to be retrained on new data. This creates a perpetual cat-and-mouse game where the detection tools are always six months behind the generation tools.

Consider the verification process for the food delivery hoax. The journalist needed to:

- Establish contact with the supposed whistleblower

- Verify the employee badge looked authentic

- Check if the internal document could be real

- Contact the company for comment

- Cross-reference technical claims

- Validate the author's employment history

At each step, the AI-generated content was designed to withstand scrutiny. The badge looked professional. The document was detailed and internally consistent. The author had plausible explanations for everything. It took multiple verification attempts and consultation with AI experts to confirm it was fake.

Meanwhile, millions of people had already seen the post and formed opinions based on it.

The Anatomy of a Believable AI Hoax

Why was this particular post so effective? What made it spread so rapidly and fool so many intelligent people?

Start with the premise. Food delivery apps have actually been caught stealing tips from drivers. DoorDash paid a $16.75 million settlement for doing exactly this. So the core allegation wasn't just believable; it was historically accurate. The author built their fake post on a foundation of real corporate malfeasance.

Then came the narrative framing. The "drunk whistleblower at the library" angle has specific characteristics that make it credible:

- Emotional volatility (drunkenness) explains why someone would risk their job by leaking

- Public Wi-Fi establishes plausibility about anonymity concerns

- The rambling, stream-of-consciousness style feels authentic

- It suggests this is genuine desperation, not a calculated attack

AI systems trained on thousands of Reddit posts understand these narrative conventions. They can mimic the voice of someone who's angry, scared, and trying to do the right thing. The emotional authenticity is what sells the story more than the factual claims.

Then the author provided evidence. The fake employee badge. The eighteen-page document. These weren't just mentioned—they were supposedly shared with a journalist. This adds a layer of verification. If a professional journalist is investigating this, it must have some credibility.

The document itself was detailed enough to be convincing but vague enough to avoid specific falsifiability. It described algorithms and systems without claiming exact numbers that could be easily debunked. A real whistleblower would probably make some specific claims to prove authenticity. But an AI-generated document avoids those traps and instead creates an impression of authenticity through consistency and internal logic.

Finally, the post tapped into existing anxieties. People are already suspicious of algorithms. They suspect they're being exploited by platforms. The idea that an app uses AI to identify "desperate" drivers and exploit their desperation? That feels like exactly the kind of sinister corporate behavior that's probably happening. The post didn't introduce a new fear. It weaponized existing fears.

This combination is nearly impossible for individuals to defend against. Fact-checking requires effort, time, and skepticism. Sharing and believing requires just one click.

Producing a truthful article can cost between

Systematic AI Misinformation as a Business Model

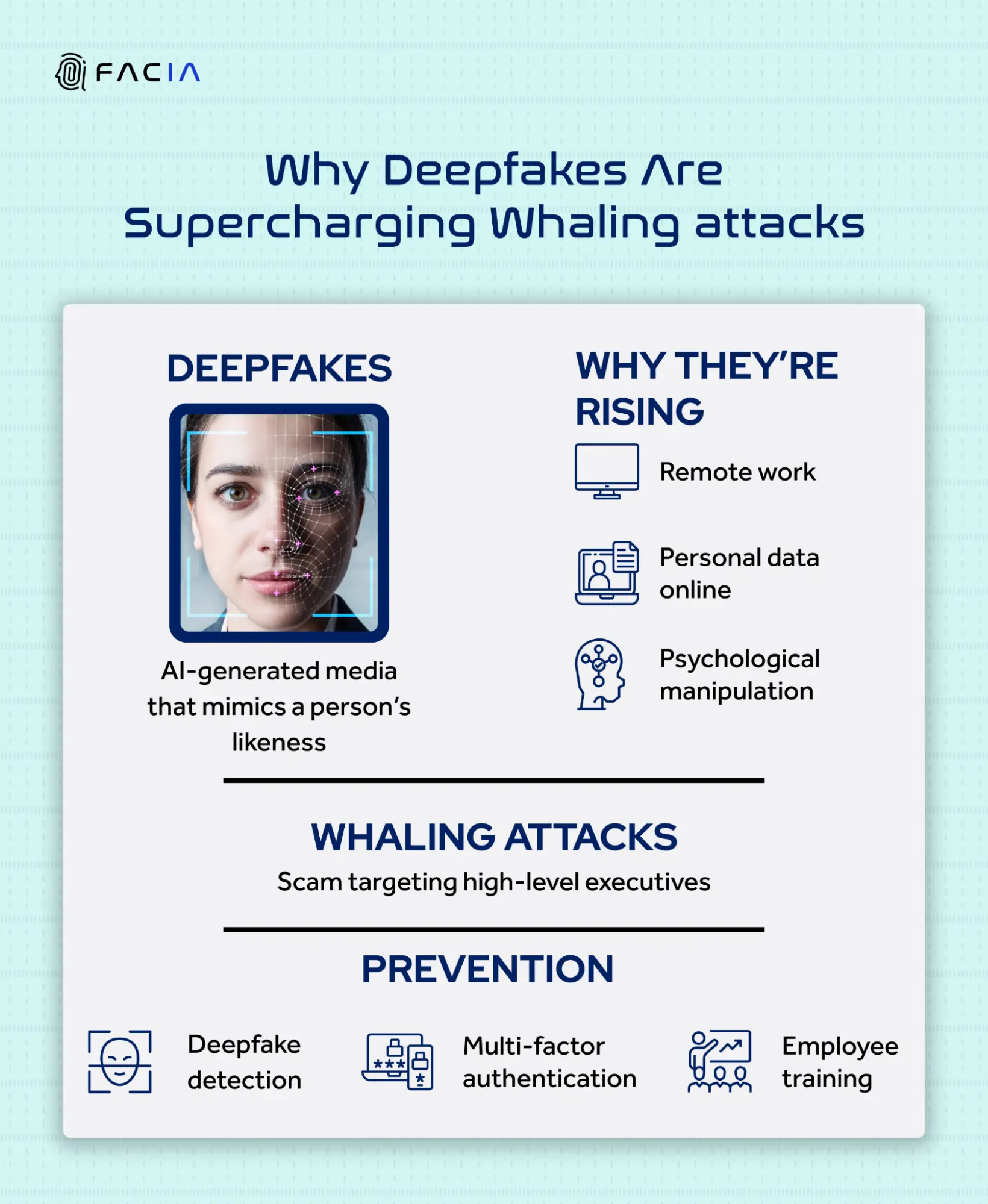

Here's the part that should concern you most: this might not even be an isolated incident created by one person trying to troll a journalist.

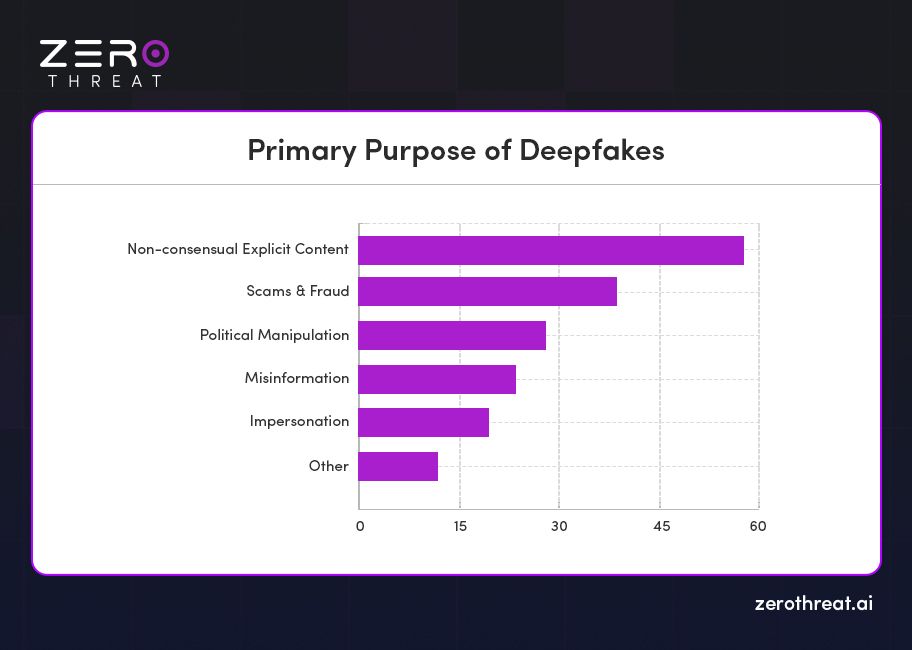

Companies now explicitly purchase "organic engagement" on social platforms. They're paying for services that generate AI-created content designed to go viral. The content mentions the company's brand name, drives discussion in specific directions, and appears to come from real users.

The economics work like this: creating a viral post that reaches millions costs almost nothing with AI tools. A company might spend

Traditional advertising is increasingly ignored. Banner ads, sponsored content, and even influencer partnerships are met with skepticism. But an "organic" post that appears to come from a real person, especially a whistleblower or insider, carries authenticity that paid advertising can't buy.

So you have three scenarios:

- A person using AI tools to create a hoax for attention or to damage a company they dislike

- A competitor using AI-generated misinformation to harm another company

- A company using AI-generated "grassroots" content to promote itself or damage competitors

All three are happening simultaneously in 2025. The food delivery hoax might have been scenario one. But scenario three is now a commercial service with paying clients. This isn't theoretical. This is operational infrastructure already being used.

The problem scales. If a single person can reach 36.8 million impressions with a single AI-generated post, imagine what a company can do with a coordinated campaign of dozens of posts. Imagine what a political campaign could do. Imagine what a nation-state could accomplish with an army of AI content generators.

What Journalists Actually Do When They Encounter Potential Leaks

Most people think journalists have some magical process for verifying information. They don't. The process is actually quite fragile and dependent on intuition combined with time-intensive legwork.

When a journalist receives what appears to be a leaked document, they typically:

- Evaluate the source: Does the person have credibility? Can they verify their employment? Have they leaked successfully before? This step is falling apart in 2025 because AI can generate fake employment histories and personas.

- Examine the document for inconsistencies: Journalists look for typos, formatting anomalies, outdated references, or technical inaccuracies that suggest fabrication. AI-generated documents are now sophisticated enough to pass this test. They can mimic corporate writing styles and avoid obvious errors.

- Cross-reference claims: A journalist will reach out to other employees, customers, or industry experts to see if the claims hold up. But if the claims are intentionally vague or reference systems that don't actually exist, this step becomes ineffective. AI is really good at plausible vagueness.

- Contact the subject company: The journalist will ask the company to respond to the allegations. The company will probably deny everything or decline to comment. Neither response proves anything, but the process creates a sense of legitimacy.

- Consult with technical experts: For a document that claims to describe technical systems, journalists might reach out to engineers or specialists. But if an engineer says "this sounds plausible," that's not the same as verification. It just means the document isn't obviously nonsensical.

None of these steps are foolproof. They were designed in an era when creating convincing forgeries required significant skill and effort. Now an AI tool can pass most of these steps automatically.

The journalist investigating the food delivery hoax only realized it was fake when he specifically asked Google to analyze the badge image using SynthID watermarking. Google confirmed it was AI-generated. But notice the timeline: the journalist had already spent days investigating, reached out to industry contacts, consulted with experts, and engaged with the source. He was probably hours away from publishing if he hadn't happened to know about SynthID.

Most journalists don't have access to cutting-edge AI detection tools. Most aren't specifically trained to spot AI-generated images. And even if they were, detection tools are unreliable enough that a single negative test doesn't guarantee authenticity.
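The point about negative tests can be made concrete with a short calculation. This is a minimal sketch assuming a hypothetical watermark detector that only recognizes images from one vendor's models, so it misses a large share of AI images overall. Every rate below is an illustrative assumption, not a published figure for any real tool.

```python
def probability_ai_given_pass(base_rate: float, detection_rate: float,
                              false_positive_rate: float) -> float:
    """Bayes' rule: P(image is AI-generated | the detector found nothing)."""
    missed_ai = (1.0 - detection_rate) * base_rate                   # AI images that slip through
    cleared_real = (1.0 - false_positive_rate) * (1.0 - base_rate)   # real images correctly passed
    return missed_ai / (missed_ai + cleared_real)


# Hypothetical: the detector catches 60% of all AI images, wrongly flags 1% of
# real ones, and half of the suspicious images a newsroom receives are synthetic.
p = probability_ai_given_pass(base_rate=0.5, detection_rate=0.6, false_positive_rate=0.01)
print(f"P(AI | detector found nothing) = {p:.1%}")
```

With these assumptions, more than a quarter of the images that pass the check are still AI-generated, which is why a clean result can only be one input among several.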

Google's SynthID shows the highest potential with a score of 65, but still faces limitations. Estimated data.

The Viral Mathematics: Why Debunking Never Catches Up

There's a mathematical reason why misinformation spreads faster than truth. It's not about human psychology alone. It's about the structure of social networks.

When content goes viral on platforms like Reddit or Twitter, it follows an exponential growth curve. The first 100 people see it. Those 100 people share it with their networks. Now 10,000 people have seen it. Those 10,000 share with their networks. Now a million have seen it.

Each wave of sharing happens in hours. The exponential growth phase—where reach multiplies rapidly—operates for roughly 24-48 hours before natural decay sets in.
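The jump from 100 to 10,000 to a million viewers is geometric growth with a factor of 100 per sharing wave. A minimal sketch of that arithmetic follows. The function name and the idea of a fixed amplification factor are simplifying assumptions, since real sharing cascades are far less regular.

```python
def reach_by_wave(initial_viewers: int, amplification: int, waves: int) -> list[int]:
    """Audience size after each sharing wave, assuming a constant multiplier."""
    return [initial_viewers * amplification ** wave for wave in range(waves + 1)]


# The figures used in the text: 100 initial viewers, each wave multiplies reach by 100.
print(reach_by_wave(100, 100, 2))  # [100, 10000, 1000000]
```

Because the multiplier compounds, the size of the final audience is decided almost entirely in the first few waves, which is the period before any fact-check exists.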

Debunking operates on a completely different timeline. A journalist investigating a major claim might spend:

- 4 hours: Initial investigation and source contact

- 8 hours: Cross-referencing and expert consultation

- 4 hours: Company response and fact-gathering

- 2 hours: Writing the debunking article

That's roughly 18 hours minimum for a thorough debunking. But the original post hits exponential growth in the first 6 hours. By hour 18, the post has already reached its peak and started decaying naturally. The debunking article gets published to a smaller audience because the original story is already "old news" in social media terms.
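The timing gap can be tallied directly from the figures above. This sketch assumes the four debunking steps run one after another, as the 18-hour total implies, and takes the 6-hour growth onset at face value.

```python
# Hours per debunking step, taken from the list above.
DEBUNK_STEPS_HOURS = {
    "initial investigation and source contact": 4,
    "cross-referencing and expert consultation": 8,
    "company response and fact-gathering": 4,
    "writing the debunking article": 2,
}
GROWTH_ONSET_HOURS = 6  # the post reaches exponential growth within this window

debunk_ready_at = sum(DEBUNK_STEPS_HOURS.values())
head_start = debunk_ready_at - GROWTH_ONSET_HOURS

print(f"Debunk published after {debunk_ready_at} hours")
print(f"The post has been spreading exponentially for at least {head_start} hours by then")
```

Shortening any single step helps only a little. Even if the writing step took no time at all, the debunk would still arrive 10 hours after the post entered its growth phase.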

Worse, the debunking article might not even get traction because people are tired of the story. The original false claim had emotional intensity and novelty. The debunking has neither. It's fighting uphill against both viral mathematics and human psychology.

There's actually a formula that illustrates this. If content grows at rate

That means the debunking reaches about 4% of the audience that saw the original false claim. And that's assuming the debunking even gets the same distribution algorithm boost, which it won't. Social platforms prioritize engagement, not accuracy. A debunking article is less likely to generate shares and comments than the original explosive claim.

The Role of Social Platform Algorithms in Amplifying Misinformation

Reddit, Twitter, and other platforms don't intentionally promote misinformation, but they've built algorithms that accidentally do exactly that.

Both platforms use engagement metrics to determine what content to amplify. A post that gets a lot of upvotes, comments, and shares gets shown to more people. This makes sense from a business perspective: show users content they're engaging with, and they'll spend more time on the platform.

But misinformation is exceptionally engaging. Outrage generates more comments than agreement. Shocking claims generate more shares than mundane facts. A post claiming corporate conspiracy will always outperform a post saying "the company is probably behaving within legal norms."

So when an AI-generated post makes a shocking allegation and hits the algorithmic jackpot, the platform's own systems ensure it reaches millions. The algorithm doesn't ask if it's true. It just amplifies what users are engaging with.

Platforms like Reddit have tried to address this with community moderation and fact-checking labels. But moderation is reactive, not preventative. A post might sit with 50,000 upvotes and millions of impressions before any moderator even notices it. By then, the damage is done.

Twitter/X added a "Community Notes" feature where users can add context to disputed posts. But a note only appears once enough contributors have seen the post and rated the note as helpful. If a post is spreading within algorithmic bubbles where everyone already believes it, a note might never appear.

The food delivery hoax spread for hours before anyone even questioned it. The algorithmic amplification phase happened entirely before fact-checking kicked in. Once millions had seen it, no amount of platform-based mitigation could undo the spread.

Logical inconsistencies are the most effective indicator of AI-generated content, followed by predictable emotional arcs and specificity without verifiability. (Estimated data)

Detecting AI-Generated Content: Current Tools and Their Limitations

Several companies have built tools specifically designed to detect AI-generated content. Understanding what they can and can't do is crucial for anyone trying to evaluate information credibility.

Google's SynthID works by embedding imperceptible watermarks into images generated by Google's generative models. When an image is analyzed with SynthID's detection tool, the system can identify these markers and confirm the image came from Google's AI systems.

Limitations: Only works for images generated by Google. Doesn't detect images from DALL-E, Midjourney, or other competing models. Doesn't work if images have been heavily edited or modified. Requires access to Google's detection API, which not all users have.

Pangram Labs and similar text detection tools analyze writing patterns to identify AI-generated content. They look at word frequency, sentence structure, entropy patterns, and other linguistic markers that differ between human and AI writing.

Limitations: Increasingly unreliable as AI language models improve. Can't definitively prove something is AI-generated, only suggest high probability. False positive rates are significant—some human-written content is flagged as AI. Probabilistic rather than definitive.

Hive AI provides moderation and content detection services, including detection of synthetic media and manipulated videos.

Limitations: Primarily designed for harmful content, not misinformation. Works on videos and images, less reliable for text. Requires integration by platforms, not useful for individual fact-checking.

Turnitin and Grammarly added AI detection features primarily to catch student cheating, not general misinformation.

Limitations: Designed for academic context, not social media verification. Easily fooled by minor text modifications. Low reliability when dealing with creative writing that naturally mimics AI patterns.

The honest truth is that no current detection tool is reliable enough to serve as a definitive fact-checking mechanism. Each tool has false positive and false negative rates high enough that you can't fully trust the results.

This is why the journalist investigating the food delivery hoax had to use multiple verification methods. SynthID confirmed the badge was AI-generated, but even that wasn't enough on its own. It took SynthID confirmation plus investigation plus expert consultation to definitively debunk the story.

How to Spot AI-Generated Content: Practical Indicators

Since automated tools aren't reliably catching AI misinformation, individuals need practical strategies for evaluating content credibility.

Look for logical inconsistencies: AI models sometimes make contextual errors or claim expertise in areas that don't logically connect. A whistleblower claiming to be drunk while also being able to write coherent technical documentation is suspicious. Humans under intoxication don't maintain that level of technical precision.

Check for specificity without verifiability: The fake post claimed the company uses AI to calculate a "desperation score" for drivers. That's specific enough to sound real but vague enough that it's impossible to verify. Real whistleblowers often include numbers, dates, or specific incidents that can be independently verified. AI tends to avoid those because they can be easily fact-checked and debunked.

Evaluate the emotional arc: AI-generated content often hits emotional beats in predictable ways. The narrative starts with someone's emotional state, escalates through shocking revelations, and concludes with a call to action. Real people rambling on the internet have messier emotional arcs with tangents and contradictions.

Cross-reference key claims: If the post claims a company is doing something illegal or unethical, search for news articles or regulatory filings that confirm or deny this. The food delivery hoax was believable partly because DoorDash actually was caught stealing tips. But a thorough search would have revealed that this was already settled and the company changed its practices.

Examine image metadata: Photos from a camera or phone usually carry EXIF metadata such as camera model, timestamps, and exposure settings, which you can view with online tools. AI-generated images typically lack realistic camera metadata, so checking the badge in the food delivery hoax might have raised an early flag. This isn't foolproof in either direction: many platforms strip metadata on upload, screenshots never have it, and AI tools are improving at mimicking realistic metadata.
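A first-pass metadata check can be sketched with only the Python standard library. The hypothetical helper below walks the segment headers of a JPEG file and reports whether an Exif block is present at all. It does not read the metadata fields, and it handles only JPEG. A missing block is a reason to look closer, not proof of AI generation.

```python
import struct


def has_exif_segment(data: bytes) -> bool:
    """Return True if JPEG bytes contain an APP1 segment holding Exif data."""
    if not data.startswith(b"\xff\xd8"):  # every JPEG starts with the SOI marker
        return False
    pos = 2
    while pos + 4 <= len(data):
        if data[pos] != 0xFF:  # each segment header must begin with 0xFF
            return False
        marker = data[pos + 1]
        if marker in (0xDA, 0xD9):  # start of scan / end of image: no more headers
            return False
        # The 2-byte big-endian length counts itself plus the payload.
        (length,) = struct.unpack(">H", data[pos + 2:pos + 4])
        payload = data[pos + 4:pos + 2 + length]
        if marker == 0xE1 and payload.startswith(b"Exif\x00\x00"):
            return True
        pos += 2 + length
    return False


# Tiny synthetic byte strings, not real photos, to exercise both outcomes.
with_exif = b"\xff\xd8\xff\xe1" + struct.pack(">H", 12) + b"Exif\x00\x00abcd" + b"\xff\xd9"
without_exif = b"\xff\xd8\xff\xe0" + struct.pack(">H", 7) + b"JFIF\x00" + b"\xff\xd9"
print(has_exif_segment(with_exif), has_exif_segment(without_exif))
```

To try it on a downloaded file, read the file in binary mode and pass the bytes to `has_exif_segment`. A dedicated EXIF viewer is still needed to judge whether the fields themselves look plausible.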

Look for unrealistic detail: AI excels at plausible vagueness but sometimes includes details that are technically wrong or anachronistic. An internal document mentioning a specific technical term incorrectly can be a red flag. Real insiders get the jargon right.

Verify the source's history: If a Reddit user claims to be a whistleblower, what does their post history show? Do they have a consistent identity? AI-generated personas sometimes have post histories that are too clean or too consistent. Real people have varied interests and occasionally post about unrelated topics.

Check the date and context: When was this posted? Is there current news that might trigger misinformation about this company? Was there a recent lawsuit, scandal, or earnings report that prompted this leak? Misinformation often spikes in response to real events that create emotional engagement.

Be skeptical of perfect documentation: Real whistleblowers sometimes have documents, but those documents are often incomplete, poorly formatted, or contain information of varying quality. AI-generated documents tend to be too polished and internally consistent. They read like someone carefully wrote them, not like they were hastily copied and pasted while sneaking them out of an office.

This chart illustrates the rapid spread and impact of an AI-generated misinformation post in 2025, highlighting the challenge of distinguishing real from synthetic content. Estimated data.

The Corporate Perspective: Why Companies Generate AI Misinformation

It seems counterintuitive: why would a company generate false information about itself? But there are several strategic reasons why corporations and other bad actors use AI-generated misinformation.

Competitor sabotage: A company might generate AI content making false accusations about a competitor. The misinformation damages the competitor's reputation and market position. By the time it's debunked, the market has reacted. Stock price drops. Customer trust erodes. The competitor has spent resources defending itself instead of competing.

Preemptive damage control: A company knowing that real negative information is about to surface might flood social media with multiple competing narratives. Some are false, some are misleading, some are true but framed differently. This creates confusion. When the real scandal breaks, the public is already primed with several competing stories and might not know what to believe.

Brand astroturfing: A company might generate AI content that appears to be genuine customer testimonials or grassroots enthusiasm. This manufactured enthusiasm makes the brand seem popular and trustworthy. When potential customers see dozens of positive posts, they assume the product is good. They're not aware those posts came from an AI system paid for by the company.

Influencing regulatory perception: A company facing regulatory scrutiny might generate content making false claims about the industry landscape or government overreach. This primes public and political opinion before hearings or rule-making processes. Regulators are more likely to take action if there's public support for that action.

Market manipulation: A company's stock price is partially determined by sentiment. Generating content that creates positive sentiment about a company can influence stock price. This is particularly valuable around earnings announcements or product launches.

The key insight is that AI-generated misinformation is now infrastructure. Companies don't have to be technically sophisticated to deploy it. They just pay a service that handles creation, distribution, and monitoring. The service creates the content, posts it across multiple platforms, tracks engagement, and manages responses.

This changes the risk-reward calculus for bad actors. Creating a sophisticated misinformation campaign used to be expensive and risky. Now it's relatively cheap and can be done with plausible deniability. The AI system created the content, not the company. The company can claim it was framed or victimized.

What Happens After Misinformation Goes Viral: The Persistence Problem

One of the most frustrating aspects of the food delivery hoax is that it was thoroughly debunked, but its impact persists.

This happens because of something psychologists call the "illusory truth effect." The more you encounter a claim, the more true it seems, regardless of whether you've encountered evidence. Someone who saw the original post multiple times across platforms, read shares about it, and saw comments discussing it will feel like the claim is true. When a debunking appears a week later, it doesn't erase the earlier exposure.

There's also the "continued influence of misinformation" effect. Even after being explicitly told that information is false, people continue to believe and use that information in their reasoning. The false post about wage theft algorithms has now become part of how some people think about app-based work. A debunking doesn't eliminate that cognitive association.

Archival and screenshot sharing compound the problem. The original Reddit post gets deleted, but screenshots of it persist. Someone can find a screenshot on Twitter a year from now and share it without knowing it's been debunked. The debunking article gets lost to algorithmic decay while screenshots stay in circulation.

Google's search results will eventually show the debunking if you search for it. But most people don't search. They encounter content through social feeds and recommendations. And there's no easy way for platforms to retroactively tag old screenshots and videos with debunking information.

This means the lifespan of misinformation isn't measured by when it goes viral and when it's debunked. It's measured in years of residual impact. People will remember hearing about the wage theft algorithm. They'll mention it in conversations about worker exploitation. They'll cite it as an example when arguing about corporate malfeasance. The debunking never fully catches up.

The Broader Misinformation Landscape in 2025

The food delivery hoax isn't an isolated incident. It's one of dozens of similar AI-generated hoaxes happening daily across social platforms in 2025.

There were multiple food delivery company hoaxes just in that same weekend. Someone was spreading false information about a major AI company. Another viral post made false claims about social media policy changes. A completely fabricated story about a celebrity spread across TikTok before being debunked.

The volume is increasing exponentially. Each month, detection services report higher numbers of AI-generated misinformation attempts. Some estimates suggest that synthetic content now represents 15-25% of all social media posts in certain categories.

The problem isn't equally distributed. Misinformation concentrates around topics that generate the most engagement: politics, health, finance, technology, and labor disputes. These are the areas where false claims can cause real-world harm.

A false health claim can cause someone to avoid legitimate medical treatment. A false financial claim can influence investment decisions affecting millions of dollars. A false political claim can influence elections. A false labor claim can affect worker organizing and rights discussions.

And in every case, the AI-generated misinformation is essentially undetectable until it's investigated and proven false. By then, it's usually too late. The viral phase has happened. The damage is done.

The Economics of Truth vs. Falsehood in the AI Era

Here's the fundamental economic problem: truth is more expensive to produce than falsehood.

To write a true article about corporate malfeasance, a journalist needs to:

- Spend weeks investigating

- Interview multiple sources

- Review documents

- Verify claims through independent means

- Get legal review

- Write carefully to avoid defamation

Total cost: maybe

To generate a false article about the same topic using AI:

- Spend 20 minutes writing a prompt

- Let the AI generate content

- Use an AI image generator for supporting graphics

- Post it on Reddit or Twitter

Total cost:

The cost disparity is roughly 100:1 to 1000:1. Truth is dramatically more expensive than falsehood. In a competitive marketplace, cheaper always wins unless there's a strong reason to prefer the expensive option.

Social media platforms have accidentally created a marketplace that rewards engagement over truth. Since false claims are more engaging, the algorithm favors false claims. This creates a structural incentive for bad actors to generate misinformation.

Platforms could theoretically flip this incentive by favoring accurate information. But "accuracy" is hard to define algorithmically. A claim can be partially true, technically true but misleading, or true in context but false in isolation. Algorithms struggle with nuance.

Meanwhile, falseness is actually easy to define algorithmically: does it contradict verified facts? But this requires someone to first verify facts, which is expensive. And verification often comes too late.

So we're stuck with a system where falsehood has structural advantages over truth. This problem predates AI, but AI has made it dramatically worse by lowering the cost of creating convincing falsehoods.
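The incentive problem described above can be made concrete with a toy ranking function. This is a minimal sketch, not any platform's real formula: the `Post` fields, the weights, and the `unverified_penalty` parameter are all hypothetical, chosen only to show how an engagement-only score and a verification-aware score can order the same two posts differently.

```python
from dataclasses import dataclass


@dataclass
class Post:
    title: str
    upvotes: int
    shares: int
    comments: int
    verified: bool  # hypothetical flag, set only after someone has fact-checked the post


def engagement_score(post: Post) -> float:
    # Hypothetical weights; real platform ranking formulas are not public.
    return post.upvotes + 2.0 * post.shares + 1.5 * post.comments


def accuracy_aware_score(post: Post, unverified_penalty: float = 0.2) -> float:
    # Same engagement signal, scaled down until the post has been verified.
    base = engagement_score(post)
    return base if post.verified else base * unverified_penalty


posts = [
    Post("Shocking anonymous leak", upvotes=900, shares=400, comments=300, verified=False),
    Post("Dry regulatory filing summary", upvotes=300, shares=50, comments=40, verified=True),
]

# Engagement-only ranking puts the unverified leak first.
by_engagement = sorted(posts, key=engagement_score, reverse=True)

# Verification-aware ranking puts the verified summary first.
by_accuracy = sorted(posts, key=accuracy_aware_score, reverse=True)

print([p.title for p in by_engagement])
print([p.title for p in by_accuracy])
```

The second ranking only works if something sets `verified`, which is exactly the expensive, slow step the section describes. The sketch moves the cost; it does not remove it.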

Building Personal Resilience Against Misinformation

Given that systems-level solutions are inadequate, individuals need strategies for protecting themselves from AI-generated misinformation.

Develop source literacy: Learn to identify where information comes from. A post on Reddit is less reliable than a post on a company's official website. A leaked document is less reliable than a published regulatory filing. Anonymous whistleblowers are less reliable than named sources with corroborating evidence.

Slow down: Don't share something the moment you read it. Wait 24 hours. See if other sources are reporting it. See if debunking appears. Your FOMO might make you want to share immediately, but that's exactly when you're most likely to amplify misinformation.

Cross-reference systematically: When you encounter a claim, search for it. See what other sources say. If it's a shocking claim about a company, check their official statements, SEC filings, or news coverage from reputable outlets. Don't just assume it's true because it's on Reddit.

Be aware of your biases: You're more likely to believe claims that align with your existing beliefs. If you already think a company is exploitative, you're more likely to believe a post claiming they steal tips. That bias makes you vulnerable to misinformation designed to exploit it.

Engage with opposing viewpoints: If everyone you follow believes the same thing, you're in an echo chamber. Follow people who challenge your assumptions. Not to change your mind, but to build intellectual resistance against misinformation that exploits your worldview.

Question emotional intensity: Misinformation is designed to be emotionally intense. It makes you angry or scared. Real information is often boring and nuanced. If you feel intensely emotional about a post, that's a signal to slow down and verify before sharing.

Learn about cognitive biases: The more you understand about how your mind works—confirmation bias, availability heuristic, backfire effect—the better you can defend against their exploitation.

Support digital literacy: If you have influence in your community, teach others these skills. Digital literacy is becoming as important as traditional literacy. Schools and workplaces should be teaching people how to evaluate information credibility.

What Platforms Could Do Better

While individual resilience is necessary, it's not sufficient. Platforms bear responsibility for the infrastructure they've created.

Some potential improvements:

Slow down algorithmic amplification: Rather than immediately amplifying content that's getting engagement, platforms could add a verification delay. New content could be limited to a smaller initial audience until fact-checkers have had time to evaluate it. This reduces the peak of the misinformation curve.

Reward long-form context: Instead of amplifying isolated posts, platforms could amplify posts that link to context or source documents. Someone sharing a leak with a 3,000-word analysis would get more reach than someone sharing just the explosive headline.

Integrate with fact-checking networks: Rather than relying on user community notes, platforms could integrate with professional fact-checking organizations. When a fact-checker publishes a debunking, the platform would automatically link to it from the original misinformation.

Transparency about synthetic content: Platforms could require AI-generated content to be labeled as such. A post created primarily by generative AI would have a visible indicator. This doesn't prevent misinformation but does signal that extra verification is needed.

Reduce financial incentives for misinformation: Currently, misinformation can still generate ad revenue and engagement rewards. Platforms could deprioritize monetization for content that's later debunked, creating financial consequences for spreading false claims.

Support detection research: Rather than building detection tools in-house, platforms could fund independent research into AI content detection. This creates better detection methods faster than proprietary approaches.

Make debunking more visible: Currently, debunking articles don't get amplified the same way original posts do. Platforms could specifically promote accurate debunking content to people who were exposed to the original misinformation.

None of these are perfect solutions. But each one reduces the spread of misinformation or accelerates debunking. Combined, they could significantly slow the current misinformation epidemic.
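As one illustration, the verification delay from the first suggestion could be sketched as a simple reach cap. Everything here is an assumption made for the example: the `ReviewStatus` states, the `INITIAL_AUDIENCE_CAP` and `REVIEW_WINDOW_HOURS` values, and the `max_audience` function are hypothetical and do not describe any existing platform's policy.

```python
from dataclasses import dataclass
from enum import Enum


class ReviewStatus(Enum):
    PENDING = "pending"
    CLEARED = "cleared"
    DEBUNKED = "debunked"


@dataclass
class Submission:
    post_id: str
    age_hours: float
    status: ReviewStatus = ReviewStatus.PENDING


# Hypothetical policy values, chosen only for illustration.
INITIAL_AUDIENCE_CAP = 5_000
REVIEW_WINDOW_HOURS = 24.0


def max_audience(sub: Submission, requested_reach: int) -> int:
    """Return how many users the post may reach under the delay policy."""
    if sub.status is ReviewStatus.DEBUNKED:
        return 0  # stop further amplification of debunked content
    if sub.status is ReviewStatus.CLEARED:
        return requested_reach
    # Still pending: cap reach while the review window is open.
    if sub.age_hours < REVIEW_WINDOW_HOURS:
        return min(requested_reach, INITIAL_AUDIENCE_CAP)
    # Window elapsed with no verdict: fall back to normal distribution.
    return requested_reach


fresh = Submission("post-1", age_hours=2.0)
print(max_audience(fresh, 1_000_000))  # capped at INITIAL_AUDIENCE_CAP
```

Letting a post through once the window elapses without a verdict is a deliberate trade-off in this sketch: it avoids throttling content forever when reviewers are backlogged, at the cost of letting some unreviewed posts spread after the delay.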

The Future: What 2026 and Beyond Looks Like

Projecting forward is speculative, but several trends seem likely.

AI-generated misinformation will become more sophisticated: Each generation of AI tools is more capable than the last. This means generated content will be harder to distinguish from authentic content. Detection tools will struggle to keep pace.

Coordinated campaigns will increase: As misinformation becomes infrastructure, more actors will deploy it systematically. We'll likely see nation-state actors, corporate competitors, and political campaigns all using AI-generated misinformation as a standard tactic.

Trust in institutions will further erode: Each successfully debunked misinformation campaign reinforces distrust. If people see multiple hoaxes and realize they were fooled, they become skeptical of all information, including true information. This benefits no one.

Detection tools will improve but remain imperfect: Better tools will emerge, but the fundamentals won't change. Detection will always be reactive. New models will always be ahead of detection. But incremental improvements in detection accuracy will help.

Legislation will eventually emerge: Governments will eventually regulate AI content generation and misinformation. This will be clumsy and probably counterproductive, but it's coming. Regulations might require content authentication, liability for misinformation, or labeling of AI-generated content.

People will become more skeptical overall: The current moment where widespread misinformation is novel might not last. As misinformation becomes ubiquitous, people might develop more sophisticated skepticism. Or they might give up on distinguishing truth from falsehood entirely.

The most concerning scenario is not that misinformation becomes better at disguising itself, but that people stop believing anything is real. When you can't trust any image or video or document, the world becomes fundamentally unknowable. That's worse than misinformation. That's epistemological collapse.

Key Takeaways for Navigating AI Misinformation

The food delivery hoax revealed critical vulnerabilities in how we evaluate information in 2025. As AI tools become more sophisticated, these vulnerabilities will only expand.

The most important lessons:

First, urgency is your enemy. Misinformation spreads fastest when people feel they need to share it immediately. Slowing down your response by 24 hours dramatically increases the chance that someone else has already debunked it.

Second, emotional intensity is a warning signal. If content makes you angry enough to immediately share it, you should be more skeptical, not less. That's the exact emotional state that misinformation exploits.

Third, verification takes time that virality doesn't allow. This asymmetry is structural and won't disappear. Accept that by the time something is thoroughly debunked, millions have already seen it and been influenced by it.

Fourth, platforms have built systems that accidentally reward misinformation. Individual skepticism helps, but individual skepticism can't overcome algorithmic amplification. We need system-level changes to how platforms distribute content.

Fifth, AI tools are tools, not truth-telling machines. An AI can generate convincing content that's completely fabricated. The sophistication of the presentation doesn't correlate with accuracy. You should be more skeptical of polished, well-documented claims in the AI era, not less.

The conversation about AI has focused on capabilities and benefits. But we also need to reckon with how these tools enable deception at scale. That reckoning isn't just the responsibility of companies or platforms. It's the responsibility of everyone who participates in digital information ecosystems.

The misinformation problem in 2025 isn't ultimately about better detection technology or platform policies. It's about rebuilding structures of trust in a world where trusting things at face value is increasingly dangerous.

FAQ

What is AI-generated misinformation and how is it different from traditional misinformation?

AI-generated misinformation is false information created using generative AI tools like ChatGPT, Claude, or DALL-E, rather than being manually written or fabricated by humans. The key difference is speed and scale. Creating a convincing false narrative used to require hours of effort and technical skill. Now it takes minutes. An individual with basic AI prompting knowledge can create content that would have required a professional misinformation operation five years ago. This dramatically lowers the barrier to entry for creating sophisticated hoaxes.

How do AI-generated posts spread so much faster than real information?

AI-generated misinformation spreads faster due to engagement metrics and algorithmic amplification. Shocking or outrageous content generates more shares, comments, and upvotes than accurate-but-mundane information. Social platforms prioritize content that drives engagement, not content that's accurate. Additionally, misinformation can be created and distributed in hours, while accurate reporting takes days or weeks. By the time debunking appears, the original misinformation has already reached peak virality. This timing asymmetry means false content has a structural advantage on social platforms.

Can current AI detection tools reliably identify fake posts?

No. Current detection tools have significant limitations. Google's SynthID works only for images generated by Google's own tools. Text-based detection tools provide probabilistic assessments rather than definitive proof. Platforms like Hive AI focus on harmful content rather than misinformation specifically. Even when tools work, they require human-in-the-loop verification, which delays detection until misinformation has already spread widely. Most detection tools exist primarily for technical researchers, not general use by journalists or social media users.

What should I do if I encounter content that might be AI-generated misinformation?

First, don't share it immediately. Wait 24 hours and search for verification from reputable sources. Check if fact-checking organizations like Snopes, FactCheck.org, or PolitiFact have already covered it. Look for inconsistencies in the narrative, vague claims that avoid specific verifiable facts, and emotionally intense framing designed to provoke sharing. Cross-reference key claims against official sources, news articles, and regulatory filings. If the content is on social media, report it for potential misinformation even if you're not certain it's false.

How does AI-generated content affect how journalists verify information?

It makes verification significantly harder and slower. Journalists traditionally could verify sources by checking employment history, examining document metadata, and consulting with industry experts. AI-generated personas and documents defeat several of these verification methods. An AI can create a convincing employment history, generate realistic-looking documents, and produce content with plausible industry jargon. Journalists now need to invest additional time in verification or use specialized detection tools. The journalist in the food delivery hoax case spent days investigating before confirming it was fake using Google's SynthID tool.

Is there a way to completely prevent AI-generated misinformation?

No. Preventing AI-generated misinformation entirely isn't realistic because the tools and expertise are widely available. The goal should be reducing its prevalence and accelerating its debunking. This requires multiple approaches: platform policy changes, detection tool improvements, individual skepticism, digital literacy education, and potentially legislation. Even combined, these approaches can't eliminate the problem entirely, but they can slow spread and increase consequences for creating misinformation. The realistic goal is harm reduction, not elimination.

Why do companies create AI-generated false information about themselves or competitors?

Companies use AI misinformation for several strategic reasons: damaging competitor reputation before real scandals emerge, creating false grassroots enthusiasm through AI-generated testimonials, influencing regulatory perception, or manipulating market sentiment. A company might generate content claiming a competitor is exploitative or unsafe. By the time it's debunked, the competitor has spent resources defending itself and market perception has shifted. This is essentially low-cost sabotage compared to traditional advertising or lobbying.

Can debunking actually undo the damage from viral misinformation?

Partially, but not completely. Psychological research shows that even after debunking, people often continue to believe the original false claim due to cognitive biases like the illusory truth effect and the continued influence effect. Additionally, debunking articles reach smaller audiences than the original false claims because they come later and are less emotionally engaging. Screenshots and archived versions of the original misinformation persist on social media long after debunking. The best approach is preventing spread through early detection rather than relying on post-hoc debunking.

What role do social media algorithms play in spreading AI misinformation?

Algorithms are central to misinformation spread. Platforms like Reddit and Twitter use engagement metrics—upvotes, shares, comments—to determine what content gets amplified. Misinformation is inherently more engaging because it provokes outrage and shock. The algorithm sees high engagement and distributes the content more widely without evaluating truthfulness. This creates a structural incentive for bad actors to generate misinformation. Platforms built these systems to maximize engagement and profitability, not to maximize accuracy. Changing these incentive structures is necessary but hasn't happened at scale.

What are the long-term consequences of widespread AI misinformation in society?

The most concerning consequence is erosion of epistemic trust—the ability to know and believe things. If people can't distinguish real from fake content, they lose confidence in their own perception. This leads to either radical skepticism where nothing is believed, or radical credulity where anything is believed depending on confirmation bias. Both damage social cohesion. Additionally, misinformation about health can cause people to avoid legitimate treatment. Misinformation about finance can influence investment decisions. Misinformation about politics can affect elections. The cumulative effect is institutional and social destabilization.

Final Thoughts

The food delivery hoax wasn't just one person trolling a journalist. It was a demonstration of something much larger and more concerning: the infrastructure for creating convincing falsehoods now exists in everyone's hands. That infrastructure is cheap, fast, and increasingly sophisticated.

We're not facing a future where misinformation is a problem we solve and move past. We're facing a present and future where distinguishing real from synthetic content requires active skepticism, digital literacy, and systemic changes to how information flows through society.

That's not pessimism. It's clarity. And clarity is what we need to navigate this moment.
