ServiceNow and OpenAI: Enterprise AI Shifts From Advice to Execution

Here's what's happening in enterprise AI right now, and it's honestly kind of wild. For the past two years, we've all been obsessed with frontier models. ChatGPT, Claude, GPT-5: the race to build better language models has dominated the conversation. Billions invested, headlines written, startups funded on the promise of better reasoning, faster inference, cheaper tokens.

But something just shifted.

ServiceNow just announced a multi-year strategic partnership with OpenAI, and it's not really about getting access to better models. It's about something much more fundamental: the enterprise AI playbook is moving from "advice generation" to "actually executing workflows."

This matters more than you probably think, because it signals where the real competitive moat lives in enterprise AI. Spoiler alert: it's not with the model builders anymore.

TL;DR

- The partnership reinforces a critical insight: General-purpose models are becoming commoditized, while orchestration platforms are where enterprises gain strategic advantage

- Execution over conversation: Enterprise AI is shifting from advisory tools (answering questions) to agentic systems that actually run business processes end-to-end

- Guardrails and governance are now table stakes—enterprises won't adopt AI at scale without monitoring, security, and compliance layers

- Multi-model strategy wins: Companies betting on flexibility (mix-and-match models) will outpace those locked into single-model ecosystems

- The real battlefield: Control layers that coordinate AI models, manage human oversight, and govern deployment are where differentiation happens

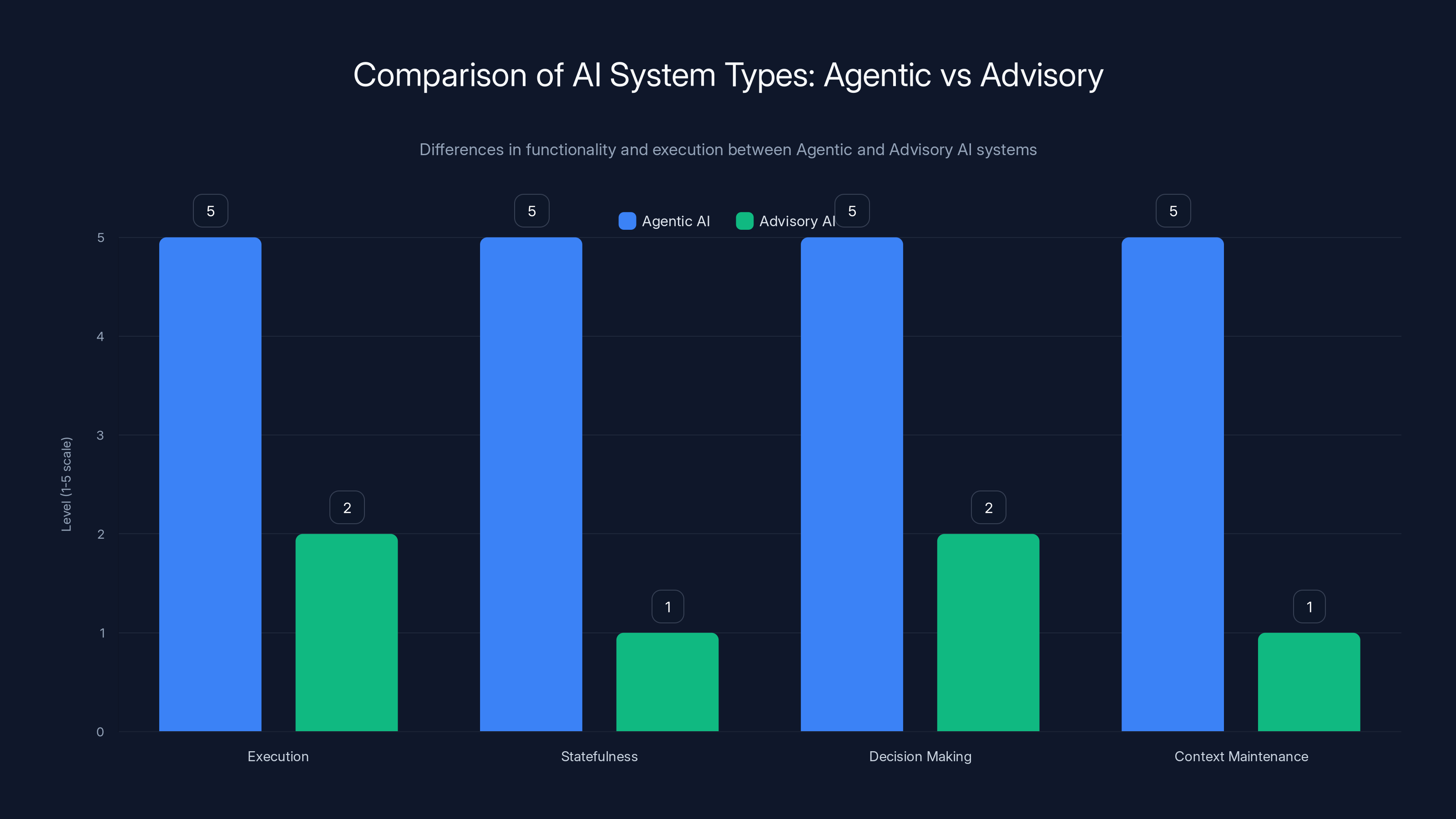

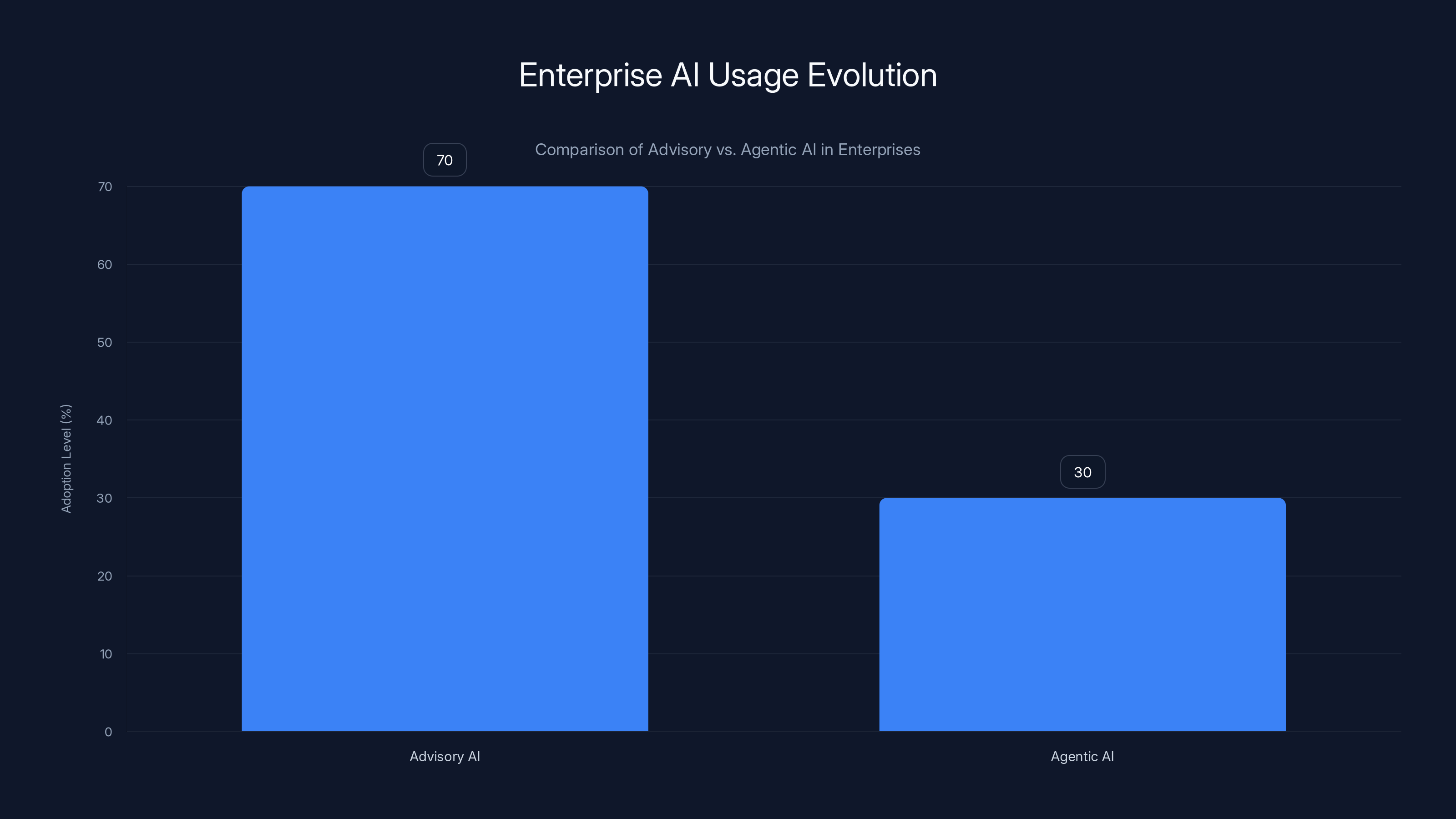

Agentic AI systems excel in execution, statefulness, decision making, and context maintenance compared to Advisory AI, which primarily provides recommendations. Estimated data based on typical AI system capabilities.

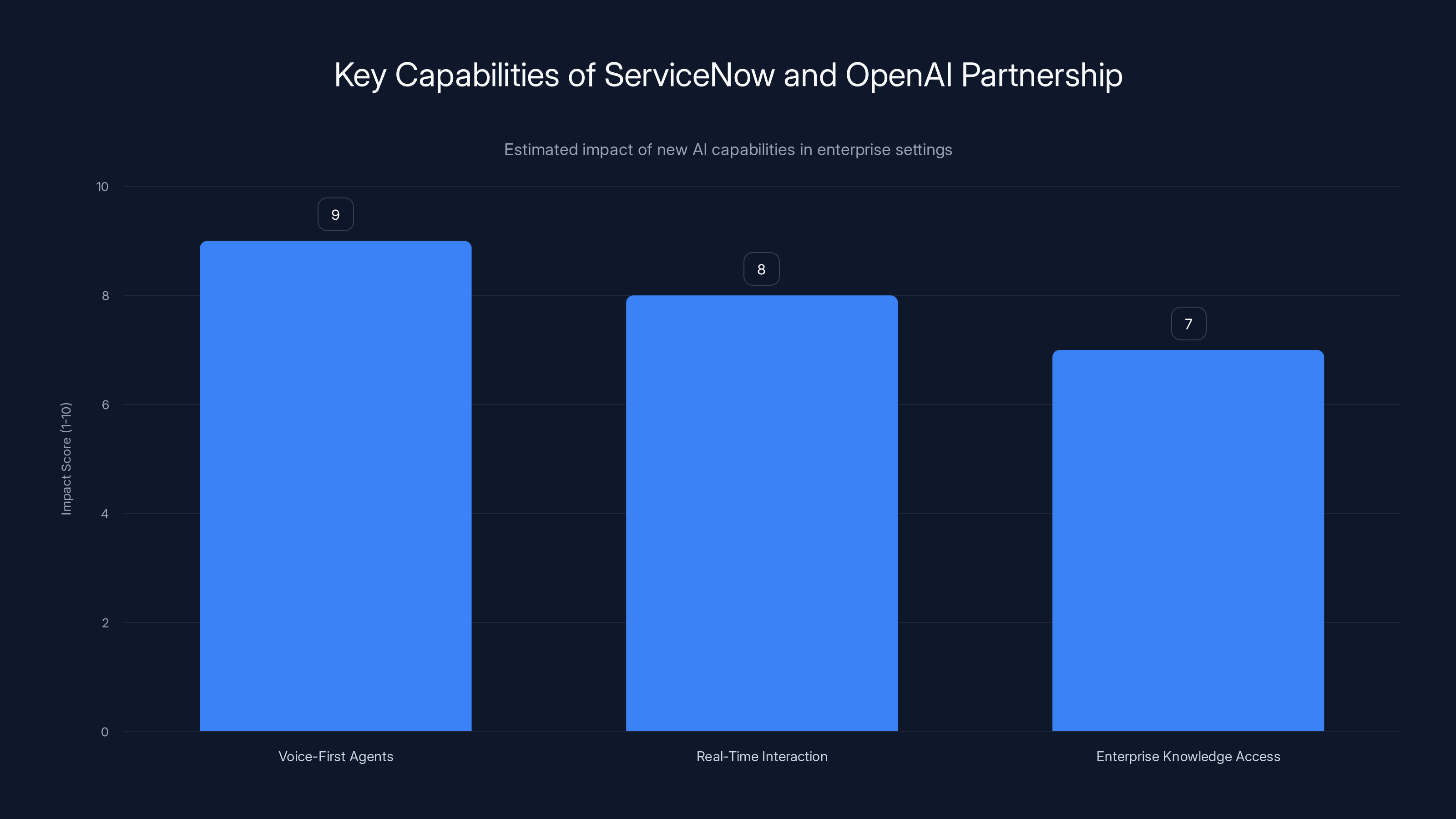

What ServiceNow and OpenAI Are Actually Building Together

Let's get specific about what this partnership unlocks, because the devil's in the details.

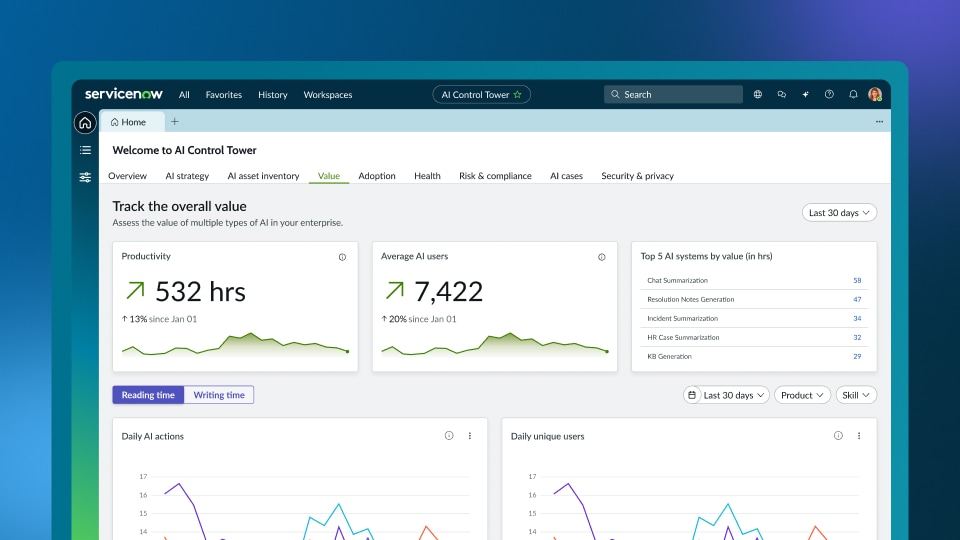

ServiceNow announced integration of GPT-5.2 into its AI Control Tower and Xanadu platform. But here's the key insight: they're not just plugging a model into an existing product. They're building three specific capabilities that signal where enterprise AI is actually going.

Voice-First Agents and Natural Interaction

The partnership includes building "real-time speech-to-speech AI agents that can listen, reason and respond naturally without text intermediation."

This is more significant than it sounds. Most enterprise AI today still runs through chat interfaces or APIs. You type a question, the system returns text. It's functional but clunky for actual business operations. An incident commander dealing with a production outage doesn't want to type prompts into a chat box. They want to talk, have the system understand context, ask clarifying questions, and return structured responses—all in real time.

Implementing this means solving hard problems: handling interruptions mid-speech, maintaining context across multiple dialogue turns, routing to the right knowledge base when needed, and doing it fast enough that latency doesn't feel unnatural. OpenAI's speech models and reasoning capabilities are purpose-built for exactly this kind of real-time interaction.

Enterprise Knowledge Access and Grounding

The second capability is what they're calling "Q&A grounded in enterprise data, with improved search and discovery."

Translation: the system can answer questions about your actual business data (incident history, service catalogs, runbooks, past resolutions) rather than hallucinating generic answers. This is where ServiceNow's domain advantage becomes obvious. They've spent decades building platforms that store all this enterprise context: incidents, change requests, customer interactions, asset inventory. That data is gold for training and grounding AI.

The challenge isn't getting the model to answer questions. It's ensuring the model pulls from the right knowledge, doesn't confuse similar incidents, and knows when to admit uncertainty. OpenAI's improved reasoning in GPT-5.2 helps with that grounding problem significantly.

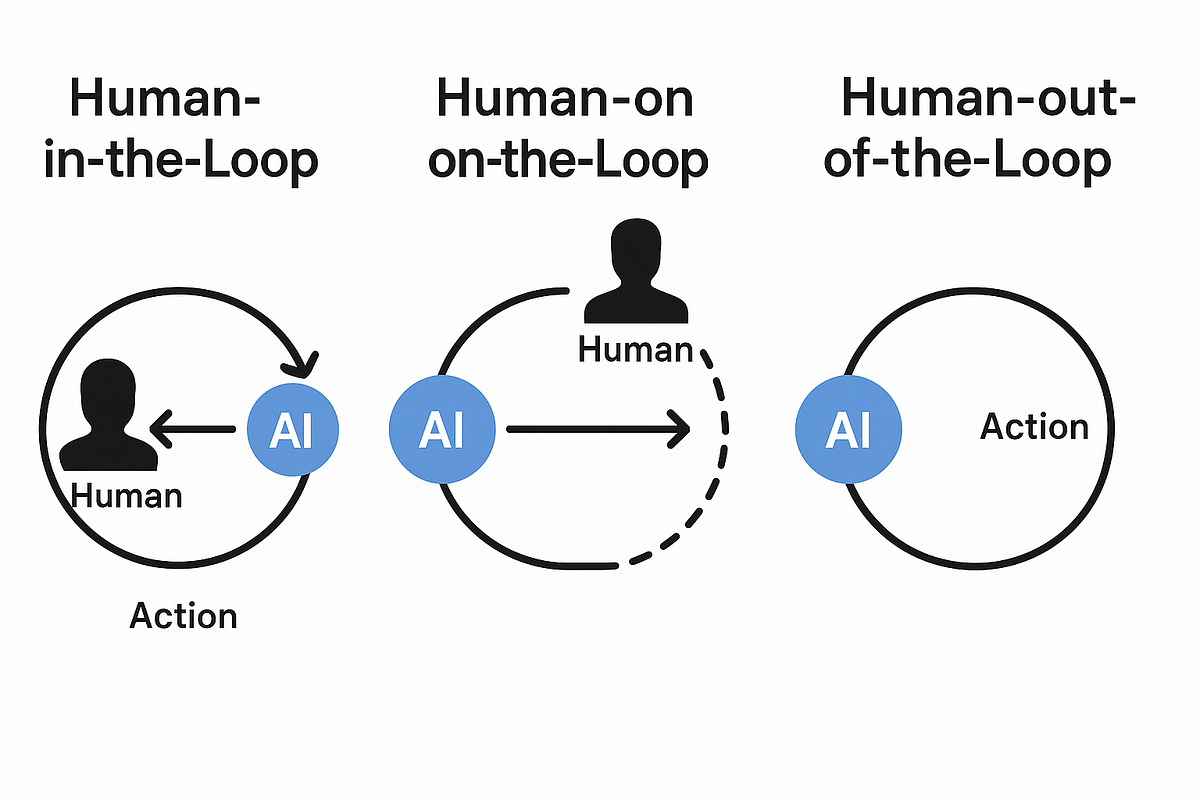

Operational Automation with Human Oversight

The third piece is operational automation: "Incident summarization and resolution support."

This is where the partnership gets genuinely interesting. Summarization is just the visible part. What they're actually describing is an AI system that can:

- Detect when an incident occurs

- Gather relevant context (logs, alerts, related incidents, assigned owner)

- Summarize the situation in plain language

- Suggest remediation steps

- Get human approval before executing

- Monitor the remediation and escalate if needed

That's a closed-loop automation workflow. It's not giving advice. It's actually doing work, with guardrails.
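To make the loop concrete, here is a minimal Python sketch of the steps listed above, starting from an incident that has already been detected. Everything in it is hypothetical: the `Incident` class, the `run_closed_loop` function, and the callback names are illustrative assumptions, not ServiceNow or OpenAI APIs. The point is only the shape: nothing executes until a human approves, and every step lands in an audit log.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Incident:
    """Hypothetical incident record; field names are illustrative."""
    id: str
    alert: str
    context: dict = field(default_factory=dict)
    audit_log: list = field(default_factory=list)
    status: str = "open"


def run_closed_loop(
    incident: Incident,
    gather_context: Callable[[Incident], dict],
    propose_fix: Callable[[Incident], str],
    approve: Callable[[Incident, str], bool],
    execute: Callable[[str], bool],
) -> Incident:
    """Gather context, propose a fix, wait for approval, execute, then check."""
    incident.context = gather_context(incident)
    incident.audit_log.append("context_gathered")

    fix = propose_fix(incident)
    incident.audit_log.append(f"proposed:{fix}")

    # Human approval gate: nothing runs without an explicit yes.
    if not approve(incident, fix):
        incident.status = "escalated"
        incident.audit_log.append("rejected_by_human")
        return incident

    # Execute, record the outcome, and escalate if the fix did not hold.
    succeeded = execute(fix)
    incident.audit_log.append(f"executed:{succeeded}")
    incident.status = "resolved" if succeeded else "escalated"
    return incident
```

In a real deployment each callback would wrap a platform integration (log search, a model call, an approval UI, a runbook executor); here they are plain functions so the control flow stays visible.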

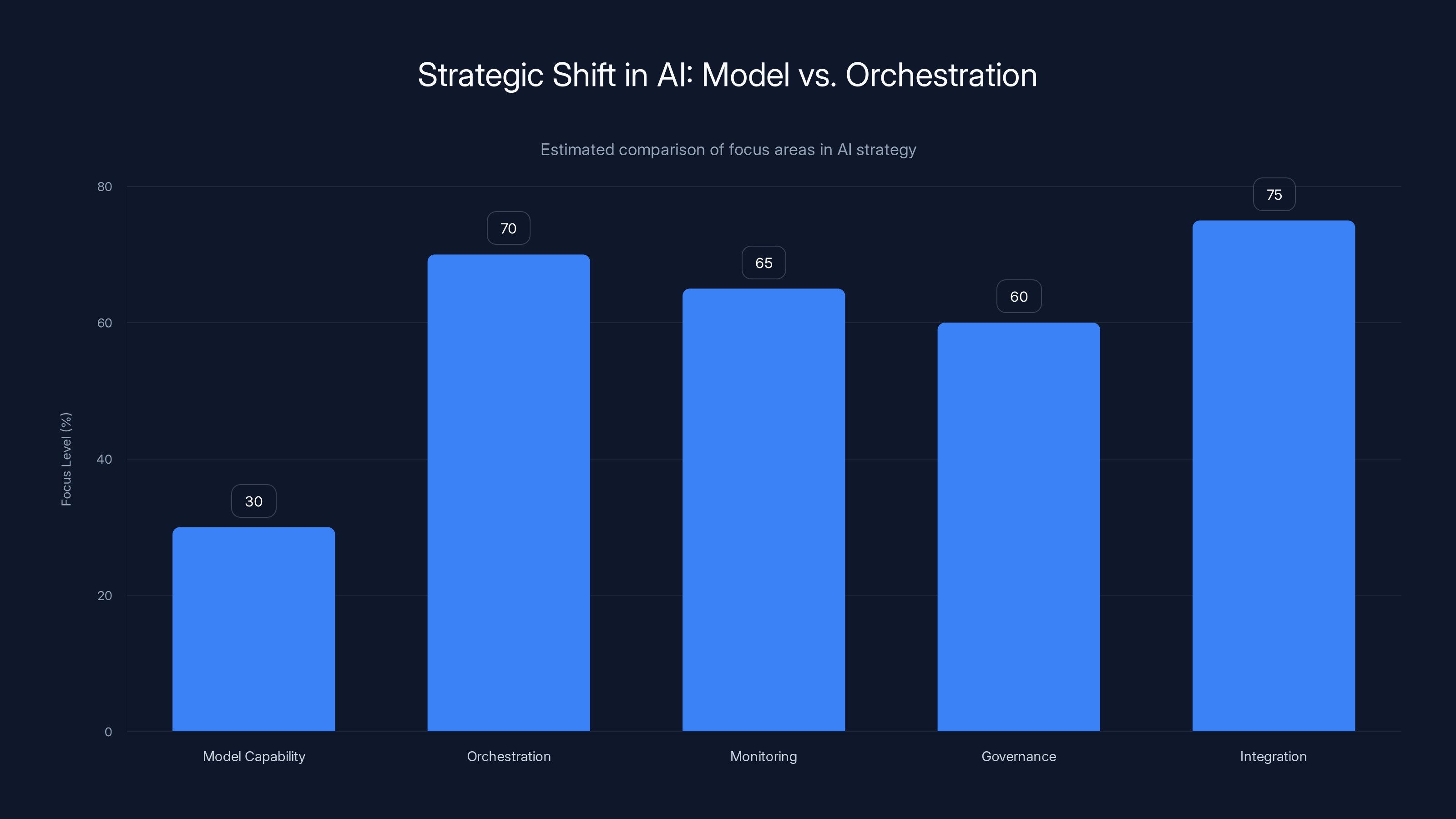

The Broader Strategic Shift: From Models to Orchestration

Here's where the partnership becomes a signal for the entire industry.

ServiceNow has made a conscious choice: they're not building their own frontier language model. They could have. They have the resources and customer base. Instead, they're saying: "We'll partner with whoever builds the best model for our use cases, and we'll build the platform that orchestrates how those models are used in production."

That's a strategic positioning decision, and it reveals something important about where competitive advantage actually lives in enterprise AI.

Why General-Purpose Models Are Becoming Commodities

Two years ago, having access to the best language model was a moat. OpenAI and Anthropic could charge premium prices because their models were meaningfully better than alternatives.

That's still true at the frontier. But here's what's changed: the gap between "best model" and "good enough model" is shrinking fast. Meta's open-source models are catching up in capability. Pricing for API access has compressed. You can now get reasonably good responses from models costing 95% less than the premium tier.

For most enterprise use cases, you don't need the absolute frontier model. You need a model that's reliable, can be monitored, works with your data infrastructure, and respects your compliance requirements. Those characteristics are becoming table stakes, not differentiators.

Where the Real Moat Lives: Orchestration and Guardrails

If models are commoditizing, what isn't?

Control layers that can:

- Coordinate multiple models and route requests based on cost/performance/safety requirements

- Monitor inference in real time and catch hallucinations, off-topic outputs, or safety violations

- Enforce compliance (PII redaction, data retention, audit trails) automatically

- Manage human oversight at scale—knowing when to escalate to humans, capturing their feedback, and improving from it

- Version and test AI applications with confidence, like you do with code

- Integrate with existing workflows so AI augments actual business processes rather than running in isolation

These are hard problems that require deep domain knowledge of enterprise IT. You need to understand incident management workflows to build incident resolution agents. You need to understand change management to catch when an AI system is suggesting a change that violates policy.

ServiceNow has spent two decades accumulating that knowledge. They're not giving that up. Instead, they're using it to build orchestration platforms that any model can plug into.

That's the strategic insight. The partnership with OpenAI is less about model access and more about validating that orchestration platforms are where the value lives.
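As one narrow illustration of the compliance item in the control-layer list above, here is a rough Python sketch of PII redaction applied to text before it reaches a model. The patterns are deliberately simplistic assumptions (a basic email pattern and the US `NNN-NN-NNNN` Social Security format); a production control layer would need far broader detection. The `redact` function and its return shape are hypothetical.

```python
import re

# Simplistic illustrative patterns, not production-grade PII detection.
EMAIL = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
SSN = re.compile(r"\b\d{3}-\d{2}-\d{4}\b")


def redact(text: str) -> tuple[str, dict[str, int]]:
    """Replace matches with placeholders and count them for the audit trail."""
    counts: dict[str, int] = {}
    text, counts["email"] = EMAIL.subn("[EMAIL]", text)
    text, counts["ssn"] = SSN.subn("[SSN]", text)
    return text, counts


clean, counts = redact("Contact jane@example.com, SSN 123-45-6789")
print(clean)   # Contact [EMAIL], SSN [SSN]
print(counts)  # {'email': 1, 'ssn': 1}
```

Returning the counts alongside the cleaned text lets the platform log that redaction happened without logging the sensitive values themselves.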

The strategic focus in AI is shifting from pure model capability to orchestration, monitoring, governance, and integration. Estimated data highlights this trend.

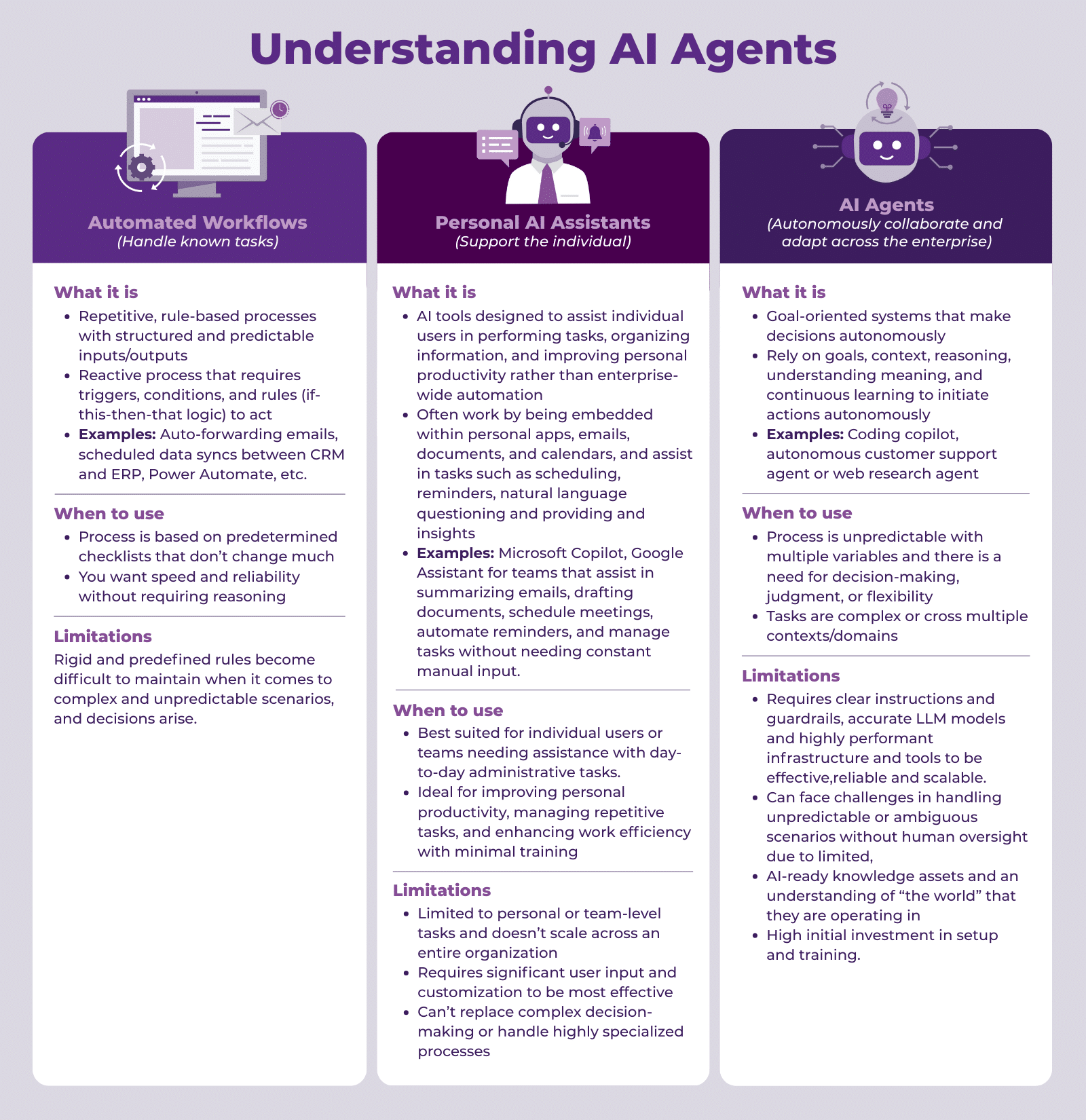

The Three Layers of Enterprise AI (And Where Each One Matters)

To understand why this partnership signals a shift, you need to understand the three-layer model of how enterprise AI works.

Layer 1: The Foundation Model

This is the LLM itself. OpenAI, Anthropic, Meta, Mistral: they're all competing on instruction-following, reasoning, context window, and inference speed.

Three years ago, this layer was everything. It's where all the innovation was happening and where companies differentiated.

Today, it's table stakes. You need a decent foundation model. But you don't need the absolute best. A B-tier model from 2024 can do the job just fine for most use cases.

Layer 2: Application Logic

This is the "prompt engineering" or "agent framework" layer. How do you structure prompts to get consistent outputs? How do you build chains of reasoning? How do you route to different models based on task type?

Tools like LangChain and OpenAI's agent frameworks exist here. This layer is where a lot of startups are innovating right now.

But—and this is critical—application logic is hard to monopolize. Once the patterns become clear, building frameworks becomes standard engineering work. It's valuable but not a defensible moat.

Layer 3: Orchestration and Governance (The Real Moat)

This is the control plane. It includes:

- Workflow orchestration: Connecting AI outputs to business processes

- Monitoring and observability: Tracking what the system is doing, catching failures

- Compliance and governance: Enforcing policies automatically

- Human-in-the-loop workflows: Managing when humans need to step in

- Cost optimization: Routing to cheaper models when appropriate

- Version control: Managing different versions of prompts/agents like code

This layer requires deep domain expertise. You need to understand incident workflows to automate incident resolution. You need to understand change management to build change automation safely. You need to understand security to build secure AI systems.

ServiceNow has spent decades accumulating this expertise. That's not easily replicated.

How Enterprises Actually Want to Use AI (And Why It Matters)

Let's get concrete about what enterprise customers are actually asking for, because that's where the partnership makes sense.

The Shift From Advice to Action

Two years ago, when enterprises first started using AI, they wanted it to answer questions. Give me a summary of this incident. Draft a response to this customer complaint. Suggest what might be wrong.

That's advisory AI. It augments human decision-making but doesn't change the actual workflow. A human still has to take the information and do the work.

What enterprises are realizing now is: why stop at advice? If the AI can suggest a fix, why can't it apply the fix (with human approval)?

This requires a different architecture entirely. Advisory systems are stateless. You ask, you get an answer, you're done. Agentic systems are stateful. They need to:

- Maintain context across multiple steps

- Make decisions about which action to take next

- Check whether actions succeeded

- Escalate if something goes wrong

- Maintain an audit trail of everything that happened

That's an orchestration problem, not a model problem.
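The difference between stateless and stateful can be sketched in a few lines of Python. This is an illustrative assumption about how such a loop might look, not a description of any vendor's agent runtime: `AgentRun` and `run_step` are hypothetical names. Each step is executed, verified, recorded, and escalated if it keeps failing, and its result is kept so later steps can use it.

```python
from dataclasses import dataclass, field
from typing import Any, Callable


@dataclass
class AgentRun:
    """State that must survive across steps (hypothetical structure)."""
    goal: str
    state: dict = field(default_factory=dict)
    audit: list = field(default_factory=list)
    status: str = "running"


def run_step(
    run: AgentRun,
    name: str,
    action: Callable[[dict], Any],
    succeeded: Callable[[Any], bool],
    max_attempts: int = 2,
) -> bool:
    """Run one action, verify it, and escalate after repeated failure."""
    for attempt in range(1, max_attempts + 1):
        result = action(run.state)
        ok = succeeded(result)
        # Every attempt is recorded, whether it worked or not.
        run.audit.append({"step": name, "attempt": attempt, "ok": ok})
        if ok:
            run.state[name] = result  # later steps can read this
            return True
    run.status = "escalated"
    return False


run = AgentRun(goal="restore checkout service")
run_step(run, "restart", lambda state: "healthy", lambda r: r == "healthy")
```

An advisory call would be a single function from question to answer; here the `AgentRun` object is what carries context, verification results, and the audit trail from one step to the next.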

The Incident Resolution Use Case

Let's walk through a real example: incident resolution, which is mentioned specifically in the partnership.

Here's how it works today (mostly manual):

- Alert fires at 2 AM

- On-call engineer wakes up, logs into the system

- Engineer searches through logs and documentation to understand the incident

- Engineer discusses with team (if it's complex) or directly executes fix

- Engineer updates the ticket, documents the resolution

Time to resolution: 30 minutes (for a simple issue) to hours (for complex issues).

Here's how it could work with agentic AI (what the partnership is enabling):

- Alert fires at 2 AM

- System automatically gathers context: recent deployments, related incidents, error logs, relevant runbook

- System summarizes the situation and routes it to the on-call engineer

- Engineer approves suggested remediation (or suggests alternatives)

- System executes the fix and monitors for success

- System documents everything automatically

Time to resolution: roughly 5-15 minutes in this scenario, with human effort reduced by an estimated 70%.

The second scenario requires orchestration. You need to:

- Know where to find context (incident database, deployment logs, monitoring)

- Know how to validate the proposed fix is safe

- Know how to execute it (API calls, configuration changes, service restarts)

- Know how to verify it worked

- Know how to escalate if it didn't

That knowledge lives in ServiceNow's platform. The model is just the reasoning engine that powers it.

Multi-Model Strategy: Why ServiceNow Won't Go Exclusive

Here's a critical detail from the partnership announcement that deserves more attention.

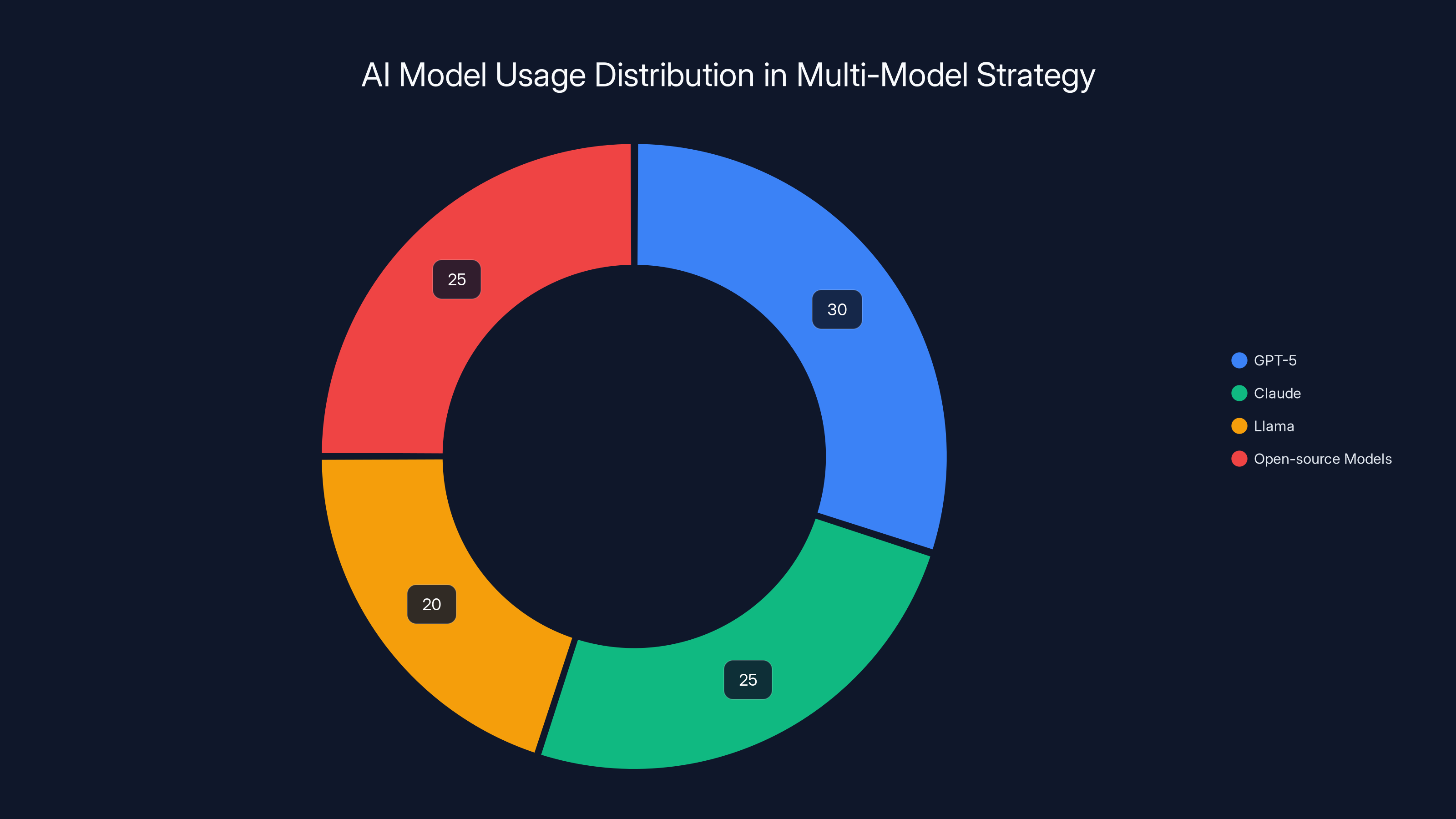

ServiceNow's SVP of product management made this explicit: "We will remain an open platform. ServiceNow will continue to support a hybrid, multi-model AI strategy where customers can bring any model to our AI platform."

Translation: We're not exclusive to OpenAI. We're building a platform that works with any model.

That's a strategic choice, and it's the opposite of what you might expect. Why not lock enterprises into OpenAI? It would be lucrative short-term.

Because enterprise customers hate being locked in, and ServiceNow knows it. Enterprise procurement teams have learned (often painfully) that single-vendor lock-in limits their negotiating leverage and creates risk if that vendor stumbles.

Here's what a multi-model strategy actually enables:

Cost Optimization Across Models

Different models have different cost profiles. OpenAI's models are optimized for reasoning and accuracy. Claude excels at nuanced writing. Llama 3 is cheap and open-source.

A smart orchestration platform would route different tasks to different models:

- Expensive reasoning → GPT-5 (when accuracy is critical)

- General Q&A → Claude or Llama (when cost matters more)

- Streaming text → Open-source models (when speed matters)

Done right, this could reduce AI infrastructure costs by 40-60% without sacrificing quality on tasks that matter.
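A routing rule of this kind can be sketched as "pick the cheapest model that clears the quality bar for the task." The Python below is a minimal illustration under made-up assumptions: the model names, prices, and 1-5 quality scores in `CATALOG` are placeholders, not real vendor pricing or benchmarks.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelOption:
    name: str
    cost_per_1k_tokens: float  # hypothetical price, not real vendor pricing
    quality: int               # hypothetical 1-5 score


CATALOG = [
    ModelOption("frontier-reasoning", 10.0, 5),
    ModelOption("mid-tier-general", 1.0, 3),
    ModelOption("small-open-model", 0.2, 2),
]


def route(min_quality: int, catalog=CATALOG) -> ModelOption:
    """Return the cheapest model whose quality meets the task's minimum."""
    eligible = [m for m in catalog if m.quality >= min_quality]
    if not eligible:
        raise ValueError(f"no model meets quality {min_quality}")
    return min(eligible, key=lambda m: m.cost_per_1k_tokens)


print(route(5).name)  # frontier-reasoning
print(route(3).name)  # mid-tier-general
print(route(1).name)  # small-open-model
```

Actual savings depend entirely on the task mix and real prices, so the sketch stops at the routing decision rather than claiming a percentage.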

Insurance Against Model Provider Risk

What if OpenAI has an API outage? What if pricing changes 10x? What if a competitor releases something dramatically better?

Enterprises need optionality. A platform locked to one model is taking on unnecessary risk.

Specialization for Different Domains

Different enterprise domains might need different models. Financial services might want models trained on compliance data. Healthcare might want models trained on medical literature.

A multi-model platform can support specialized models for specialized domains without forcing everything through a general-purpose system.

Estimated data shows a balanced distribution of tasks across different AI models, optimizing cost and performance.

The Guardrails Problem: Why It's Actually Harder Than Model Building

Here's something that rarely gets discussed: building guardrails and governance for enterprise AI is harder than building the models themselves.

Building a language model is hard. But the hard problems are well-understood: training data quality, optimization algorithms, inference efficiency. Companies like OpenAI, Anthropic, and Meta have cracked it.

Building guardrails for enterprise AI? That's a different beast entirely, and nobody's really solved it.

The Guardrails Challenge

Enterprises need to ensure AI systems:

- Don't leak sensitive data (PII, trade secrets, internal information)

- Don't make decisions that violate policies (compliance, risk, budgets)

- Can be audited and explained (regulators will demand it)

- Can be turned off immediately if they start misbehaving

- Respect cost budgets (don't accidentally spend $100K on API calls)

- Handle edge cases gracefully (what do you do if the AI is confident in a wrong answer?)

Solving these problems requires understanding the enterprise's actual workflows, compliance requirements, and risk tolerance. It's domain-specific and hard to generalize.

How Orchestration Platforms Address This

ServiceNow's approach to guardrails goes through their orchestration platform:

- Workflow guardrails: If AI suggests an action that would violate policy, the system catches it before it happens

- Monitoring guardrails: If AI output looks wrong (hallucination, off-topic), the system flags it for human review

- Audit guardrails: Everything the system does is logged with reasoning and evidence

- Cost guardrails: The system tracks API spend and can route to cheaper models when expensive ones aren't needed

- Escalation guardrails: If the system detects uncertainty or complexity, it routes to humans instead of guessing

Building these guardrails requires deep knowledge of enterprise IT. You need to know that change management systems have approval workflows for a reason. You need to understand why incident resolution isn't just about fixing the immediate problem but about learning why the problem happened.

That's where ServiceNow's decades of domain expertise become a defensible moat.
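Several of the guardrails listed above reduce to checks that run before an AI-proposed action is allowed to execute. The Python sketch below combines a workflow check (change freeze), a cost check, and an escalation check. The `ProposedAction` fields, the `evaluate` function, and the 0.8 confidence threshold are all hypothetical choices for illustration, not part of any real platform.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ProposedAction:
    kind: str            # e.g. "restart_service"
    target: str          # e.g. "payments-db"
    confidence: float    # model-reported confidence, 0.0 to 1.0
    est_cost_usd: float  # estimated API spend for this action


def evaluate(action, *, frozen_targets, budget_remaining_usd, min_confidence=0.8):
    """Return (decision, reason); decision is 'block', 'escalate', or 'allow'."""
    # Workflow guardrail: policy violations stop the action outright.
    if action.target in frozen_targets:
        return "block", "target is under a change freeze"
    # Cost guardrail: never exceed the remaining budget.
    if action.est_cost_usd > budget_remaining_usd:
        return "block", "estimated cost exceeds remaining budget"
    # Escalation guardrail: low confidence goes to a human instead of guessing.
    if action.confidence < min_confidence:
        return "escalate", "model confidence below threshold"
    return "allow", "all checks passed"


action = ProposedAction("restart_service", "payments-db", 0.65, 2.0)
print(evaluate(action, frozen_targets={"billing-api"}, budget_remaining_usd=50.0))
# ('escalate', 'model confidence below threshold')
```

Returning the reason alongside the decision is what makes the audit guardrail possible: the log can record not just what was blocked but why.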

What This Means for AI-Native Startups

If you're an AI startup, this partnership might worry you. If orchestration platforms are where the value is, and ServiceNow owns enterprise workflows, where do startups compete?

The answer: specialization and vertical focus.

The Role of Specialized AI Platforms

ServiceNow is general-purpose. They handle incident management, change management, asset management, HR workflows, and dozens of other use cases.

That generality is also a limitation. They can't go as deep on any single domain as a specialized platform can.

AI startups have a window to build specialized orchestration platforms for specific domains:

- Legal AI: Orchestration specifically for contract review, compliance checking, legal workflow automation

- Healthcare AI: Orchestration for patient workflows, clinical decision support, regulatory compliance

- Financial Services AI: Orchestration for trading, risk management, compliance monitoring

- Engineering AI: Orchestration for code review, testing, deployment workflows

The pattern is the same as ServiceNow but adapted to specific domains. Deep domain expertise + orchestration + multi-model support = defensible moat.

Where Startups Can Still Win

- Faster integration: Startups can move faster than ServiceNow to integrate new models and capabilities

- Better UX: Horizontal platforms serve everyone, which often means mediocre UX for everyone. Startups can optimize for specific workflows

- Community and ecosystem: Open platforms (like how ServiceNow supports multi-model) create opportunity for ecosystems of specialized tools

- Change velocity: Large enterprises move slower. Startups can iterate faster on feedback

The Competitive Response: What Other Platforms Will Do

This partnership is a signal to every enterprise platform vendor: orchestration and governance are now critical features, not nice-to-haves.

What Salesforce Has to Do

Salesforce has its own AI platform, Einstein. They need to announce similar orchestration capabilities—the ability to route between multiple models, enforce guardrails, monitor inference, and integrate with existing workflows.

They'll probably do it. Salesforce has the customer base and domain expertise to make it work.

What Microsoft Has to Do

Microsoft owns enterprise IT infrastructure through Azure. They have Copilot as their AI layer.

But they're playing a different game. Microsoft is betting on AI embedded directly in productivity tools (Office, Teams, Dynamics) rather than a separate orchestration layer.

That's a valid strategy but different from what ServiceNow is doing. Microsoft sees AI as something embedded in existing workflows. ServiceNow sees it as a separate agentic layer that coordinates across systems.

What New Entrants Could Do

There's still room for new platforms that are born AI-native and focus purely on orchestration and governance.

ServiceNow has to maintain their massive existing product suite. A pure-play orchestration platform could move faster, have better UX, and focus entirely on the agentic layer.

They'd have limited direct revenue (most value captured by the platform they're orchestrating on) but could become critical infrastructure that every enterprise deployment depends on.

Estimated data: The integration of GPT-5.2 into ServiceNow aims to enhance enterprise AI with voice-first agents, real-time interaction, and better knowledge access, with voice-first agents expected to have the highest impact.

Enterprise AI Execution: What Gets Better With This Partnership

Let's get concrete about what enterprises actually get from this partnership, because the business impact is real.

Faster Time to Value

Enterprises adopting AI today move slowly. Deploy an experiment, get some value, roll out to more teams, gradually scale.

A year ago, your timeline looked like:

- Months 0-3: Build proof of concept, test with a small team

- Months 3-6: Get approvals, integrate with existing systems

- Months 6-12: Deploy to broader team, monitor, iterate

- Year 2+: Scale and optimize

With ServiceNow's orchestration platform and OpenAI's models, that timeline compresses:

- Weeks 0-4: Build proof of concept using pre-built orchestration components

- Weeks 4-8: Integrate with existing ServiceNow workflows (easier integration)

- Weeks 8-16: Deploy to broader team with built-in monitoring and governance

Broad deployment, which used to land somewhere in the 6-12 month range, now fits within about 16 weeks (roughly 4 months). For enterprises, that's huge.

Reduced Integration Burden

Integration is where AI projects die. You build a great AI system, but getting it to work with your existing tools takes forever.

Orchestration platforms solve this because they're designed to be the integration layer. Instead of building custom integrations, you use the platform's built-in connectors and workflow builders.

ServiceNow already has deep integrations with the enterprise tools you're using (ticketing systems, CMDB, asset management, ITSM workflows). Plugging AI into those becomes straightforward.

Better Governance Out of the Box

Enterprises are terrified of uncontrolled AI deployments. Who audits the model? What if it makes a bad decision? How do we ensure compliance?

ServiceNow answers these by building governance directly into the platform. Every AI action is logged, approvals can be required before execution, and everything is auditable.

That's not something you get from just using OpenAI's API directly. You have to build it yourself or buy another governance layer.

The Vendor Lock-In Question: Is Enterprise Freedom Real?

Here's the skeptical take: ServiceNow says they support multi-model strategy and won't lock customers into OpenAI.

But they own the orchestration layer. If you build agents and automations on their platform, you're locked into their platform even if you can swap models underneath.

Is that a problem? Not really, and here's why.

Platform Lock-In vs. Vendor Lock-In

There's a difference between being locked into a platform and locked into a vendor.

Vendor lock-in is bad: you can't switch vendors without rewriting everything. This happens when features are proprietary to the vendor.

Platform lock-in is tolerable: you're using a platform because it solves your problem better than alternatives, but your investment isn't totally wasted if you switch. This happens when platforms embrace standards.

ServiceNow is creating platform lock-in but avoiding vendor lock-in by:

- Supporting multi-model (you can switch models without switching platforms)

- Using open standards for workflow definition (you could potentially export and migrate to another platform)

- Being transparent about capabilities (no hidden magic that only works on their infrastructure)

Enterprises are okay with platform lock-in because the platform solves a genuine problem and provides enough value that switching costs are rational.

The Broader Implications: What This Means for the Industry

The ServiceNow-OpenAI partnership isn't just about one deal. It signals a shift in how the entire industry thinks about enterprise AI.

Implication 1: Models Are Becoming Infrastructure

Five years ago, building a language model was a startup's founding mission and ongoing competitive advantage.

Today, language models are becoming infrastructure. You can grab a good one, plug it in, and move on.

The differentiation happens at higher layers: orchestration, domain specialization, integration depth, governance.

That's a fundamental shift in where AI startups will create value.

Implication 2: Vertical Integration Beats Best-of-Breed

Enterprise customers used to prefer best-of-breed tools from different vendors. CRM from Salesforce, ITSM from ServiceNow, data warehouse from Snowflake.

But when you add AI orchestration on top, integration friction explodes. A unified platform that handles CRM, ITSM, and AI orchestration all together becomes more valuable than the sum of parts.

That doesn't mean best-of-breed is dead. It means best-of-breed tools need to integrate deeply with orchestration platforms.

Implication 3: Compliance and Governance Are Now Features, Not Afterthoughts

Enterprise customers will not adopt AI at scale without strong governance.

Vendors that make governance a first-class feature (not a bolt-on) will win.

ServiceNow is embedding governance into their orchestration platform. Every workflow includes audit logging, approval gateways, and compliance tracking.

Other platforms will have to match this or lose deals.

Implication 4: Domain Expertise Becomes the Real Moat

If models are commoditizing (and they are), then domain expertise is what separates winners from losers.

ServiceNow has 20+ years of domain expertise in IT service management. Salesforce has domain expertise in sales and customer service. Workday has domain expertise in HR and finance.

Those moats matter more now than they did five years ago.

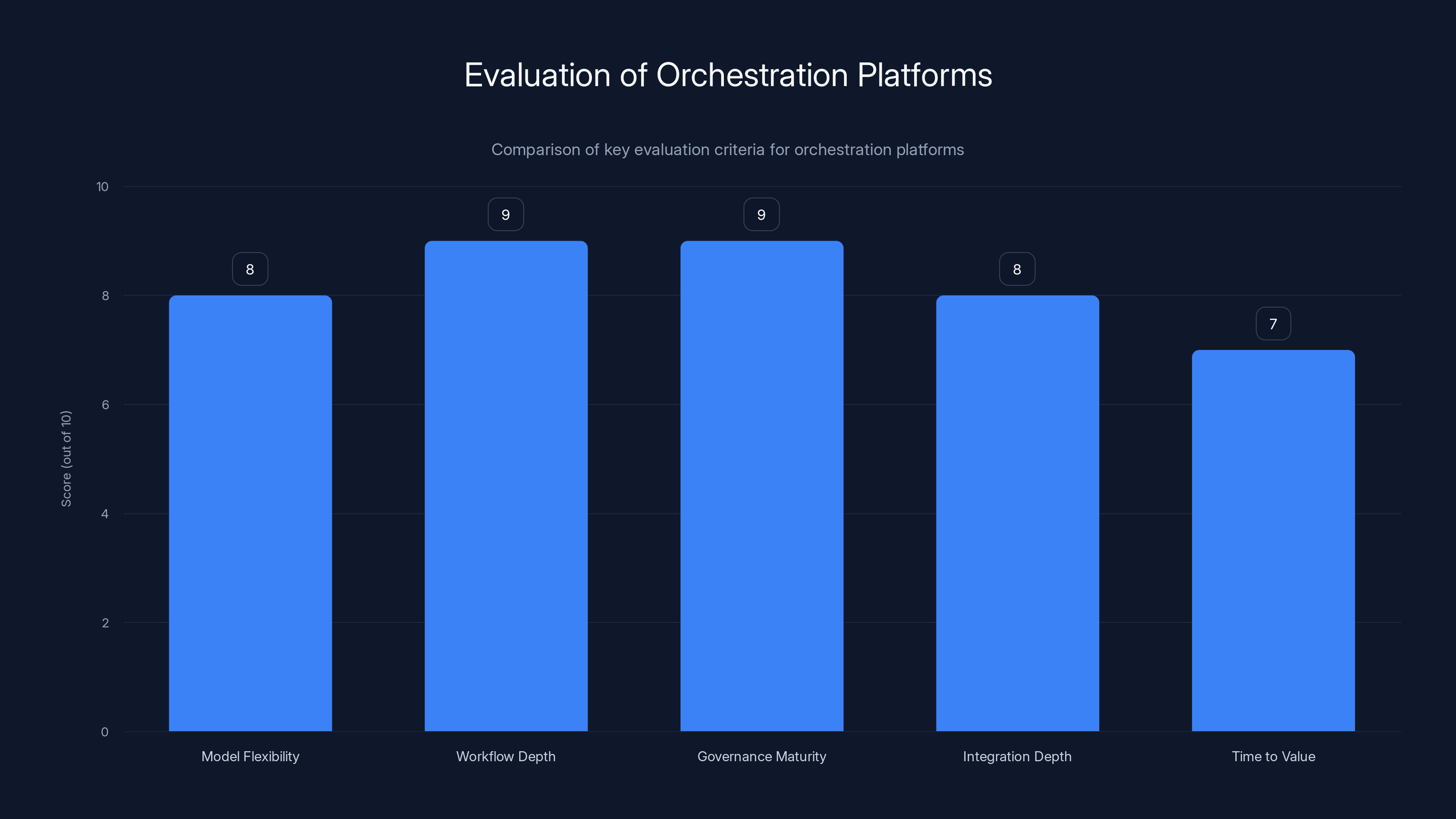

This bar chart illustrates the importance of various criteria when evaluating orchestration platforms. Workflow depth and governance maturity are rated highest, indicating their critical role in platform selection. (Estimated data)

Common Enterprise Objections and How Orchestration Addresses Them

Enterprises have legitimate concerns about deploying AI at scale. This partnership addresses several of them directly.

Objection 1: "We Can't Trust AI to Make Real Decisions Without Oversight"

Solution: Orchestration platforms enable human-in-the-loop automation.

AI suggests an action. Human approves or rejects. System executes with full audit trail. This isn't about removing human judgment; it's about AI accelerating the process.

Objection 2: "We Don't Know Where Our Data Goes When We Use AI"

Solution: Orchestration platforms can be deployed on-premises or in your own cloud.

You maintain control of data. The AI model runs where you say it runs. Sensitive data never leaves your infrastructure.

Objection 3: "AI Is a Black Box—We Can't Explain Decisions to Regulators"

Solution: Orchestration platforms create complete audit trails.

Every decision is logged with supporting evidence. You can explain exactly how the system arrived at a conclusion and show the human approvals that happened along the way.
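One way an audit trail can be made tamper-evident is to chain each entry to the hash of the one before it. The Python sketch below shows that idea using only the standard library. Hash chaining is an assumption introduced here for illustration, not something the partnership announcement describes, and `append_entry` and `verify` are hypothetical helper names.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder hash for the first entry


def _digest(body: dict) -> str:
    """Stable SHA-256 over the entry body (keys sorted for determinism)."""
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode()).hexdigest()


def append_entry(log: list, event: str, evidence: dict, approved_by=None) -> dict:
    """Append a decision with its evidence, linked to the previous entry."""
    body = {
        "event": event,
        "evidence": evidence,
        "approved_by": approved_by,
        "prev_hash": log[-1]["hash"] if log else GENESIS,
    }
    entry = {**body, "hash": _digest(body)}
    log.append(entry)
    return entry


def verify(log: list) -> bool:
    """Recompute every hash; any edited or reordered entry breaks the chain."""
    prev = GENESIS
    for entry in log:
        body = {k: v for k, v in entry.items() if k != "hash"}
        if entry["prev_hash"] != prev or _digest(body) != entry["hash"]:
            return False
        prev = entry["hash"]
    return True


log = []
append_entry(log, "proposed_restart", {"cpu": 97}, approved_by=None)
append_entry(log, "executed_restart", {"exit_code": 0}, approved_by="oncall")
print(verify(log))  # True
```

Each entry carries the evidence and the approver, which is what lets you show a regulator both the reasoning and the human sign-off.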

Objection 4: "AI Keeps Getting Cheaper. Will We Overspend?"

Solution: Multi-model orchestration enables cost optimization.

The platform routes expensive reasoning tasks to expensive models and cheap tasks to cheap models. Cost budgets can be enforced automatically.

Looking Forward: The Next 12-24 Months

If this partnership signals where the industry is heading, what should you expect to see?

Expect More Partnerships Like This

Salesforce will announce partnerships with model providers. Workday will do the same. Microsoft will deepen integration with OpenAI beyond what they've announced.

These aren't exclusive deals. They're partnerships that validate a model/platform combination and build reference customers.

Expect Governance Frameworks to Harden

Enterprises will demand standardized approaches to AI governance. Frameworks will emerge: approved workflows, guardrails, monitoring standards.

ServiceNow will likely publish governance frameworks and best practices, becoming a standard in the industry.

Expect Consolidation in the Orchestration Layer

Right now, there are dozens of small tools trying to be the "orchestration platform" for AI. Within two years, three to four clear leaders will emerge.

ServiceNow is likely one of them. Others will be built by Salesforce, Microsoft, or new entrants focused on this layer.

Smaller tools will either specialize in vertical domains or get acquired.

Expect Agentic AI to Move Beyond IT Operations

Incident resolution is just the starting point. Within 12 months, expect to see:

- Sales AI agents: Managing lead qualification, follow-ups, and deal workflows

- HR AI agents: Handling onboarding, benefit administration, and employee inquiries

- Finance AI agents: Processing invoices, expense reports, and payment workflows

- Customer service AI agents: Handling support tickets, escalations, and knowledge synthesis

Each will require domain-specific orchestration platforms.

How to Evaluate Orchestration Platforms (A Practical Framework)

If you're an enterprise evaluating platforms after this partnership, here's how to think about it.

Evaluation Criterion 1: Model Flexibility

Does the platform support multiple models? Can you switch models without rewriting your automations?

Poor answer: "We work with OpenAI and have custom integrations for other providers."

Good answer: "Any model accessible via API can be plugged in. Model switching is a configuration change."
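What "model switching is a configuration change" could look like in practice: the automation looks up its backend by name from config, so swapping providers touches no workflow code. This Python sketch is illustrative only. The `BACKENDS` registry, the `register` decorator, and the two stand-in providers are hypothetical, and the backends return tagged strings instead of calling any real API.

```python
from typing import Callable, Dict

Backend = Callable[[str], str]
BACKENDS: Dict[str, Backend] = {}


def register(name: str) -> Callable[[Backend], Backend]:
    """Decorator that adds a backend to the registry under `name`."""
    def wrap(fn: Backend) -> Backend:
        BACKENDS[name] = fn
        return fn
    return wrap


@register("provider_a")
def call_provider_a(prompt: str) -> str:
    return f"[provider_a] {prompt}"  # stand-in for a real API call


@register("provider_b")
def call_provider_b(prompt: str) -> str:
    return f"[provider_b] {prompt}"  # stand-in for a real API call


def summarize_incident(text: str, config: dict) -> str:
    """The automation never names a provider; it reads one from config."""
    backend = BACKENDS[config["model"]]
    return backend(f"Summarize: {text}")


print(summarize_incident("db down", {"model": "provider_a"}))
# [provider_a] Summarize: db down
print(summarize_incident("db down", {"model": "provider_b"}))
# [provider_b] Summarize: db down
```

When evaluating a platform, the question is whether your automations look like `summarize_incident` (provider-agnostic) or whether provider-specific calls are scattered through every workflow.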

Evaluation Criterion 2: Workflow Depth

How deep does the platform understand your actual workflows? Can it model your specific incident resolution process or only generic ones?

Poor answer: "We have pre-built incident templates."

Good answer: "We can model your exact incident resolution workflow, including all your custom steps and approval gates."

Evaluation Criterion 3: Governance Maturity

Does the platform make compliance and governance a first-class feature or an afterthought?

Poor answer: "We have audit logging."

Good answer: "Audit logging, approval workflows, cost controls, PII redaction, compliance reporting, and escalation rules are all built-in and configurable."

Evaluation Criterion 4: Integration Depth

How deep does the platform integrate with your existing tools?

Poor answer: "We have APIs for common enterprise tools."

Good answer: "We have native integrations with your specific tools (your CMDB, your ticketing system, your asset management) and understand their data models and workflows."

Evaluation Criterion 5: Time to Value

How quickly can you move from evaluation to production automation?

Poor answer: "3-6 months of implementation and integration."

Good answer: "4-8 weeks, with pre-built workflows for your industry and clear integration patterns."

Enterprises are shifting from Advisory AI, which currently holds a 70% adoption rate, to Agentic AI with a 30% adoption rate. This shift indicates a growing interest in AI systems that can execute actions, not just provide advice. (Estimated data)

Building Your Own Orchestration Layer: When and Why

Some large enterprises ask: why not build our own orchestration platform?

You could. But you probably shouldn't, and here's why.

The Build vs. Buy Analysis

Cost of building:

- Team of 10-15 engineers: $2-3M per year

- Infrastructure and tooling: $500K per year

- 18-24 months to production-quality MVP

- Total: roughly $5-7M over two full years ($2.5-3.5M per year)

Cost of buying:

- ServiceNow or similar: $50-200K per year depending on usage

- Implementation: $100-300K

- Total: $150-500K in first year

Even if you have a large team, the ROI calculation favors buying unless you have genuinely unique requirements that a vendor can't meet.
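If you want to sanity-check estimates like these, summing the line items explicitly is a quick exercise. The sketch below uses the article's line-item figures (in thousands of dollars); the helper names are made up for this example, and the two-year buy figure assumes implementation is a one-time cost, which the article does not state.

```python
def add_ranges(*ranges):
    """Sum (low, high) ranges endpoint by endpoint."""
    return (sum(r[0] for r in ranges), sum(r[1] for r in ranges))

def scale(rng, factor):
    """Multiply both endpoints of a (low, high) range."""
    return (rng[0] * factor, rng[1] * factor)

# All figures in thousands of US dollars, taken from the line items above.
team_per_year = (2000, 3000)
infra_per_year = (500, 500)
build_two_years = scale(add_ranges(team_per_year, infra_per_year), 2)

license_per_year = (50, 200)
implementation = (100, 300)
buy_first_year = add_ranges(license_per_year, implementation)
# Assumption: implementation is paid once, licensing is paid every year.
buy_two_years = add_ranges(scale(license_per_year, 2), implementation)

print(f"Build, two years: ${build_two_years[0]}K-${build_two_years[1]}K")
print(f"Buy, first year:  ${buy_first_year[0]}K-${buy_first_year[1]}K")
print(f"Buy, two years:   ${buy_two_years[0]}K-${buy_two_years[1]}K")
```

Comparing both options over the same two-year window keeps the comparison like for like.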

When to Build (Rare Cases)

You build if:

- Unique domain requirements: Your industry is so specialized that no vendor platform serves it (some financial trading firms, some manufacturing operations)

- Extreme scale: You're processing so many automations that vendor infrastructure costs become prohibitive

- Competitive moat: Your orchestration capability is itself a product you sell to customers

Otherwise, buy. Let vendors handle the orchestration layer complexity.

The Developer Experience Question: What's Missing

Here's something the partnership doesn't directly address but will become critical in the next year: developer experience.

Building AI agents and automations is still tedious. You have to wire up models, implement retries, handle edge cases, build monitoring, manage state.
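To give a sense of that tedium, here is a minimal sketch of just one item on the list: retrying a flaky model call with exponential backoff. `TransientError`, `call_with_retries`, and the `flaky_model_call` stub are hypothetical names for this example; a real integration would catch whatever exceptions its client library actually raises.

```python
import time

class TransientError(Exception):
    """Stand-in for a timeout or rate-limit error from a model API."""

def call_with_retries(fn, max_attempts=3, base_delay=0.5, sleep=time.sleep):
    """Call fn, retrying on TransientError with exponential backoff."""
    for attempt in range(1, max_attempts + 1):
        try:
            return fn()
        except TransientError:
            if attempt == max_attempts:
                raise  # out of attempts; let the caller decide what to do
            sleep(base_delay * 2 ** (attempt - 1))

# Demo: a stub that fails twice, then succeeds.
calls = {"count": 0}

def flaky_model_call():
    calls["count"] += 1
    if calls["count"] < 3:
        raise TransientError("temporary failure")
    return "ok"

delays = []  # record delays instead of actually sleeping
result = call_with_retries(flaky_model_call, sleep=delays.append)
```

Injecting `sleep` keeps the demo instant and makes the backoff schedule easy to inspect. Multiply this by state management, monitoring, and edge cases, and the appeal of a platform that handles it for you becomes clear.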

ServiceNow is probably abstracting a lot of this with visual workflows and pre-built patterns. But will it be as easy as writing code?

No, probably not. Low-code platforms never are for power users.

So we'll likely see:

- Low-code solutions for "common" automations (incident resolution, ticket routing)

- Code-based solutions for complex automations

- An increasingly fuzzy boundary between the two, as LLMs generate code and engineers tweak the result

The platforms that win will support both paradigms: visual for people who prefer it, code for people who need precision.

Competitive Positioning: How This Shifts the Landscape

Let's map out how this partnership affects various competitors.

Anthropic (Model Provider)

Impact: Moderate. They're still building great models. But they don't have the platform relationships OpenAI is building. They'll need their own platform partnerships with vendors like Salesforce or Workday.

Google (Model Provider + Platform)

Impact: Moderate. Google has models and cloud infrastructure but limited enterprise workflow platforms. They're not ServiceNow or Salesforce. They'll compete on cloud infrastructure and pricing but probably not win on orchestration.

Salesforce (Platform)

Impact: High. They need to match ServiceNow's orchestration capabilities and announce model partnerships. If they don't, they'll lose in enterprise sales to ServiceNow + OpenAI combinations.

Microsoft (Platform + Model Provider)

Impact: Moderate. Microsoft is well-positioned with both Azure infrastructure and its OpenAI partnership. But they're playing a different game: AI embedded in productivity tools rather than a separate orchestration layer. Both strategies can win.

Specialized AI Startups

Impact: Mixed. Startups building narrow orchestration solutions for specific domains (legal, healthcare, finance) can still win. Startups building horizontal orchestration platforms will struggle against ServiceNow and similar incumbents.

Lessons for AI Practitioners

If you're building AI systems or evaluating them, here's what to take away from this partnership.

Lesson 1: Domain Expertise > Model Quality

The best model doesn't win. The best orchestration of models for your specific domain wins.

Invest in understanding your domain's workflows, constraints, and requirements. Use that to guide which models to use and how to route between them.

Lesson 2: Governance Is Not a Feature—It's Table Stakes

Enterprises will not deploy AI without strong governance. Period.

If you're building AI systems, embed governance from the start: monitoring, audit trails, approval workflows, cost controls.
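Here is a small sketch of what embedding governance from the start might mean in code: every action passes through a gate that checks a spending budget, requires a named approver for high-risk actions, and writes an audit entry either way. The `Governor` class, its risk labels, and the dollar amounts are assumptions for illustration only.

```python
from dataclasses import dataclass, field

@dataclass
class Governor:
    budget_usd: float
    spent_usd: float = 0.0
    audit_trail: list = field(default_factory=list)

    def authorize(self, action, est_cost_usd, risk, approver=None):
        """Return True if the action may run; always record the decision."""
        if self.spent_usd + est_cost_usd > self.budget_usd:
            decision = "denied_over_budget"
        elif risk == "high" and approver is None:
            decision = "pending_approval"
        else:
            decision = "approved"
            self.spent_usd += est_cost_usd
        self.audit_trail.append(
            {"action": action, "risk": risk,
             "approver": approver, "decision": decision}
        )
        return decision == "approved"

gov = Governor(budget_usd=1.0)
ok_low = gov.authorize("restart web service", 0.4, risk="low")
ok_high_unapproved = gov.authorize("drop staging database", 0.1, risk="high")
ok_high_approved = gov.authorize("drop staging database", 0.1, risk="high",
                                 approver="alice")
ok_over_budget = gov.authorize("reindex everything", 0.9, risk="low")
```

Denied and pending decisions are logged just like approvals, since auditors tend to care about what the system tried to do as much as what it did.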

Lesson 3: Multi-Model Is Not a Luxury

Build systems that can work with multiple models from the ground up. Single-model lock-in will hurt you.
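A simple version of multi-model design is a router that picks the cheapest model good enough for the task. The catalog below is entirely made up (names, prices, and quality tiers are placeholders), and a real router would likely also weigh latency and safety requirements.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class ModelSpec:
    name: str
    cost_per_1k_tokens: float  # hypothetical price, in US dollars
    quality: int               # hypothetical tier, 1 (basic) to 5 (best)

CATALOG = [
    ModelSpec("small-fast", 0.10, 2),
    ModelSpec("mid-general", 0.50, 3),
    ModelSpec("large-reasoning", 2.00, 5),
]

def pick_model(min_quality, catalog=CATALOG):
    """Return the cheapest model whose quality tier meets the requirement."""
    eligible = [m for m in catalog if m.quality >= min_quality]
    if not eligible:
        raise LookupError(f"no model with quality >= {min_quality}")
    return min(eligible, key=lambda m: m.cost_per_1k_tokens)

ticket_routing = pick_model(min_quality=1)       # simple classification
incident_summary = pick_model(min_quality=3)     # moderate reasoning
root_cause_analysis = pick_model(min_quality=4)  # hardest task
```

Adding a new provider means appending to `CATALOG`, and none of the calling code has to change.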

Lesson 4: Platforms Beat Point Solutions

Point solutions ("We solve X with AI") are getting harder to defend against platforms ("We orchestrate all your AI needs") because integration is so valuable.

You can compete as a point solution if you have extremely deep domain expertise and your domain is large enough to support a standalone business. Otherwise, embed into platforms.

Lesson 5: Execution Beats Advice

Building systems that answer questions is table stakes. Building systems that actually execute workflows (with proper oversight) is where the value is.

Design your AI systems to close the loop and take action, not just provide information.
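"Closing the loop" can be sketched as act, verify, and escalate: take the action, check whether it actually worked, and hand off to a human if it did not. The function and the simulated service below are hypothetical and stand in for real remediation steps.

```python
def run_with_verification(act, verify, escalate, max_attempts=2):
    """Run an action, verify the result, and escalate if it never succeeds."""
    last = None
    for attempt in range(1, max_attempts + 1):
        last = act()
        if verify(last):
            return {"status": "done", "attempts": attempt, "result": last}
    escalate(last)  # human oversight takes over from here
    return {"status": "escalated", "attempts": max_attempts, "result": last}

# Demo: a simulated service that only comes back up on the second restart.
service = {"state": "down", "restarts": 0}

def restart_service():
    service["restarts"] += 1
    if service["restarts"] >= 2:
        service["state"] = "up"
    return service["state"]

escalations = []  # stand-in for paging an on-call engineer
outcome = run_with_verification(
    restart_service,
    verify=lambda state: state == "up",
    escalate=escalations.append,
)
```

An advisory system would stop at "try restarting the service." The verification and escalation steps are what make it safe to let the system act on its own.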

The Honest Assessment: What ServiceNow Gets Right and Wrong

Let me be straight about this: ServiceNow is well-positioned for this shift, but they're not perfect.

What They Get Right

- Deep domain expertise: 20+ years understanding enterprise IT operations. That's hard to replicate.

- Installed base: Millions of users already use their platform. Embedding AI is easier than winning new customers.

- Workflow understanding: They know how incidents actually get resolved, changes actually get approved, and assets actually get managed.

- Multi-model strategy: They're not betting everything on OpenAI, which is smart.

Where They Could Stumble

- Speed of iteration: Large companies move slower than startups. OpenAI ships fast; ServiceNow has more process overhead.

- User experience: Enterprise software has a reputation for clunky interfaces. If their AI orchestration platform feels as rigid as their main platform, it'll be hard to adopt.

- Developer experience: Building advanced automations might still require deep platform knowledge. If it's too hard, developers will build workarounds.

- Cost model: How they price AI orchestration will be critical. If they charge per API call or per automation, they could price themselves out of some use cases.

But these are execution challenges, not strategic ones. The strategy is sound.

FAQ

What is the ServiceNow and OpenAI partnership?

ServiceNow announced a multi-year strategic partnership with OpenAI to integrate GPT-5.2 into its AI Control Tower and Xanadu platform. The partnership enables voice-first AI agents, enterprise knowledge access grounded in customer data, and operational automation capabilities like incident summarization and resolution support. Importantly, ServiceNow is maintaining a multi-model strategy and will continue supporting other model providers, not creating an exclusive relationship.

How does agentic AI differ from advisory AI?

Advisory AI systems answer questions and provide recommendations but leave execution to humans. They're stateless: you ask, you get an answer, you're done. Agentic AI systems actively execute workflows, make decisions about next steps, take actions (with human oversight), and adapt based on results. Agentic systems are stateful and require maintaining context across multiple steps, checking whether actions succeeded, and escalating if something goes wrong. This requires orchestration platforms that understand workflows and can enforce guardrails.

Why is orchestration becoming more important than the AI models themselves?

Different models are converging in capability while diverging in cost and specialization. The gap between "best model" and "good enough model" is shrinking, making models increasingly commoditized. Meanwhile, orchestrating how models are used in production—routing between models for cost efficiency, enforcing compliance, monitoring for failures, integrating with existing workflows—remains domain-specific and hard to replicate. Platforms that excel at orchestration gain defensible moats even if underlying models are commodity.

What are enterprise guardrails and why do they matter?

Guardrails are controls that ensure AI systems operate safely and compliantly. They include monitoring systems for hallucinations or off-topic outputs, workflow policies that prevent AI from suggesting actions that violate compliance requirements, audit logging that tracks every decision with supporting evidence, cost controls that prevent overspending on API calls, and escalation rules that route uncertain situations to humans. Enterprises won't deploy AI at scale without strong guardrails because the business and regulatory risk is too high.

How does multi-model strategy reduce vendor lock-in?

Enterprises building on single-model systems face significant risk if that model provider has an outage, changes pricing dramatically, or loses technical advantage to competitors. A multi-model platform lets enterprises route different tasks to different models based on cost, performance, or safety requirements. You can start with one model provider, then integrate alternatives as they emerge. If your first provider stumbles, switching costs are lower because the orchestration platform remains constant and just connects to different models underneath.
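The outage scenario is the easiest one to picture in code. In this sketch, the orchestration layer tries providers in priority order and falls through to the next one on failure; `ProviderDown` and both provider stubs are invented for the example.

```python
class ProviderDown(Exception):
    """Stand-in for an outage or hard failure from a model provider."""

def call_with_failover(providers, prompt):
    """Try each (name, call) pair in order; return the first success."""
    errors = []
    for name, call in providers:
        try:
            return name, call(prompt)
        except ProviderDown as exc:
            errors.append(f"{name}: {exc}")
    raise RuntimeError("all providers failed: " + "; ".join(errors))

def primary(prompt):
    raise ProviderDown("simulated outage")

def secondary(prompt):
    return f"summary of: {prompt}"

used, answer = call_with_failover(
    [("primary", primary), ("secondary", secondary)],
    "incident 1234",
)
```

The calling workflow only sees an answer and which provider produced it, which is why switching costs stay low when the platform owns this logic.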

What does this partnership mean for AI startups?

The partnership signals that general-purpose orchestration platforms will likely consolidate around a few winners (probably existing enterprise platforms like ServiceNow, Salesforce, Workday). For startups, the opportunity is in vertical specialization: building orchestration platforms for specific industries (legal, healthcare, financial services) with deep domain expertise that general platforms can't match. Horizontal orchestration startups competing directly with ServiceNow will struggle unless they can differentiate on speed, cost, or user experience.

How quickly can enterprises deploy agentic AI with this partnership?

With pre-built orchestration components and deep platform integration, enterprises can move from proof of concept to production automation in 4-8 weeks for common use cases like incident resolution. Compare this to 6-12 months for custom-built solutions. The faster timeline comes from eliminating custom integration work (ServiceNow understands your existing workflows) and leveraging pre-built guardrails and monitoring. Complex automations requiring significant domain customization might take longer.

Should enterprises build or buy orchestration platforms?

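For most enterprises, buying is the right answer. Building a production-quality orchestration platform costs millions of dollars and takes 18-24 months, while buying typically costs a few hundred thousand dollars in the first year. Build only if you have unique domain requirements, operate at extreme scale, or sell orchestration as a product.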

What will happen to other enterprise platforms after this partnership?

Expect competitive responses from Salesforce, Microsoft, Workday, and other major platforms. Salesforce will likely announce similar orchestration capabilities and model partnerships. Microsoft will deepen Azure and Copilot integration. Workday will do the same for HR and finance workflows. This is not an exclusive partnership but rather a signal that orchestration and multi-model support are now table-stakes features that major platforms must offer. Within 2-3 years, expect every major platform to have comparable capabilities.

Conclusion

The ServiceNow and OpenAI partnership isn't just another enterprise software deal. It's a signal that the enterprise AI industry is maturing out of the "conversation" phase and into the "execution" phase.

For the past two years, we've been building AI systems that answer questions. Chat interfaces. Q&A systems. Assistants that help humans make decisions faster.

That's still valuable. But it's not the bottleneck anymore.

The bottleneck now is: how do we actually automate workflows? How do we have AI take actions (with proper oversight)? How do we integrate this into existing systems without massive re-architecture? How do we ensure it stays compliant and doesn't leak sensitive data?

These are orchestration problems. And they require deep domain expertise that's been accumulating for decades in platforms like ServiceNow.

That's why this partnership matters. It's not about OpenAI's models being great (they are) or ServiceNow's platform being comprehensive (it is). It's about the strategic positioning: models are becoming infrastructure, and the platforms that orchestrate how models are deployed are becoming the new moat.

If you're building enterprise AI, remember this. Domain expertise beats model access. Orchestration beats point solutions. Governance is table stakes, not an afterthought. And flexibility (the ability to swap models, integrate with multiple vendors, and adapt to changing requirements) is worth paying for.

The enterprises that win in the next few years won't be those with the most advanced AI models. They'll be the ones with the smartest orchestration layers managing those models.

The ServiceNow-OpenAI partnership is betting that ServiceNow knows how to be that orchestration layer. And honestly, they probably do.

Key Takeaways

- General-purpose AI models are commoditizing while orchestration platforms become the real competitive advantage

- Enterprise AI is shifting from advisory (answering questions) to agentic (executing workflows with oversight)

- Guardrails and governance are now table-stakes features enterprises require before deploying AI at scale

- Multi-model strategy beats single-model lock-in for enterprise flexibility and risk management

- Domain expertise accumulated over decades in workflow platforms creates defensible moats that model providers can't easily replicate


![ServiceNow and OpenAI: Enterprise AI Shifts From Advice to Execution [2025]](https://tryrunable.com/blog/servicenow-and-openai-enterprise-ai-shifts-from-advice-to-ex/image-1-1769017109609.png)