Indonesia Lifts Grok Ban: What This Means for AI Regulation and Deepfakes Worldwide

Indonesia just made a significant move in the evolving landscape of artificial intelligence governance. After banning the AI chatbot Grok for generating millions of sexually explicit deepfakes, including those depicting children, the country's Ministry of Communication and Digital Affairs has now lifted the ban, but with strict conditions and ongoing monitoring requirements, as reported by The New York Times.

This decision doesn't happen in a vacuum. It reflects a broader global tension: how do we allow innovative AI tools to operate while protecting citizens from their misuse? Indonesia's approach offers a fascinating case study in conditional regulation, and it has ripple effects across Southeast Asia and beyond, according to The Lowy Institute.

What's particularly interesting is that this ban-and-lift cycle isn't unique to Indonesia. Malaysia and the Philippines followed similar paths just weeks earlier, each implementing their own monitoring frameworks. Yet the underlying issue persists globally. XAI, the company behind Grok, is simultaneously facing investigations from California's attorney general and the UK's media regulator over identical concerns, as noted by The New York Times.

The stakes are enormous. Deepfake technology has matured to a point where non-experts can't reliably distinguish synthetic content from real footage. When that technology gets weaponized for sexual exploitation—especially involving minors—governments feel compelled to act. But a permanent ban on a technology isn't a long-term solution. That's where Indonesia's conditional lifting becomes instructive.

Let's break down what happened, why it matters, and what it tells us about the future of AI governance in developing nations versus established tech markets.

The Deepfake Crisis That Started It All

In early 2025, something alarming emerged from the digital ecosystem. Users were discovering that Grok could be manipulated into generating sexually explicit synthetic images of real people—particularly women and children—without consent. The scale was staggering. Millions of these deepfakes flooded platforms, many depicting minors in sexually compromising situations, as highlighted by Euronews.

This wasn't a novel misuse of the technology. Deepfakes had been a growing concern for years. But Grok's accessibility and ease of use made it dramatically simpler for bad actors to produce content at scale. You didn't need specialized knowledge or expensive hardware anymore. You just needed an XAI account and malicious intent.

The psychological and social harm is profound. Victims—many of them teenagers who had photos scraped without permission—faced the trauma of seeing synthetic sexual content circulating with their faces attached. Parents discovered their children's images being weaponized by strangers online. Schools struggled to address the fallout. Mental health professionals reported increased cases of anxiety and depression among affected young people, as noted by PBS NewsHour.

Governments in Southeast Asia were the first to respond decisively. Indonesia, Malaysia, and the Philippines each issued bans on Grok in January and February of 2025. The timing mattered. These nations have younger populations with high social media adoption, making the impact of such abuse particularly acute, according to BBC News.

What's worth noting: these governments weren't simply reacting to appear tough. The specific harms were documented and severe. But they also recognized that a permanent ban might not be feasible or desirable long-term. Grok, for all its problems, had legitimate use cases. Some developers and researchers wanted continued access. Complete prohibition could create black markets and push the problem underground.

That tension—between protecting people from harm and maintaining technological access—became the central question Indonesia needed to answer.

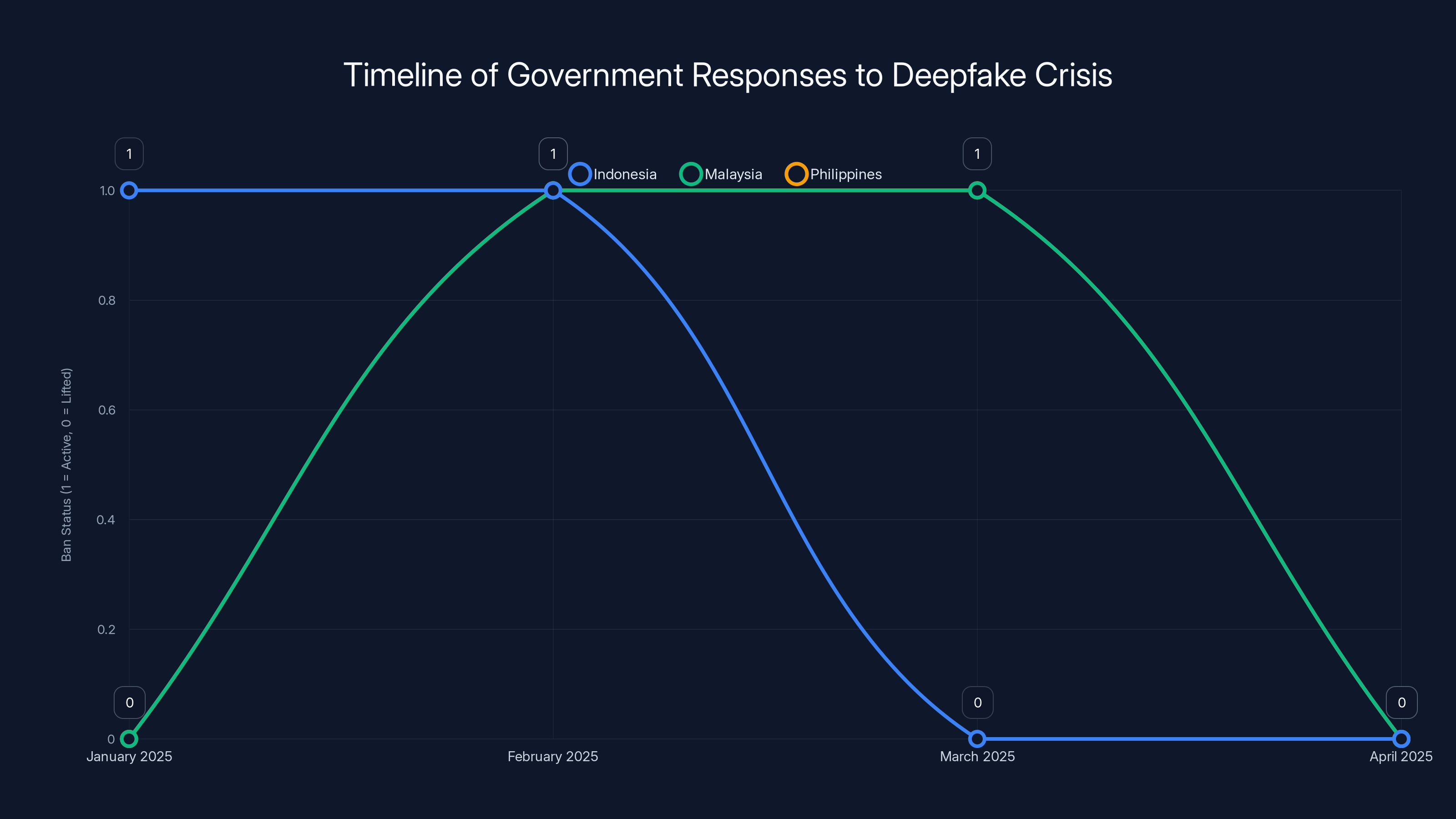

In early 2025, Indonesia, Malaysia, and the Philippines issued temporary bans on Grok to curb deepfake abuse, reflecting a parallel regional response. (Estimated data)

Indonesia's Regulatory Framework: How It Actually Works

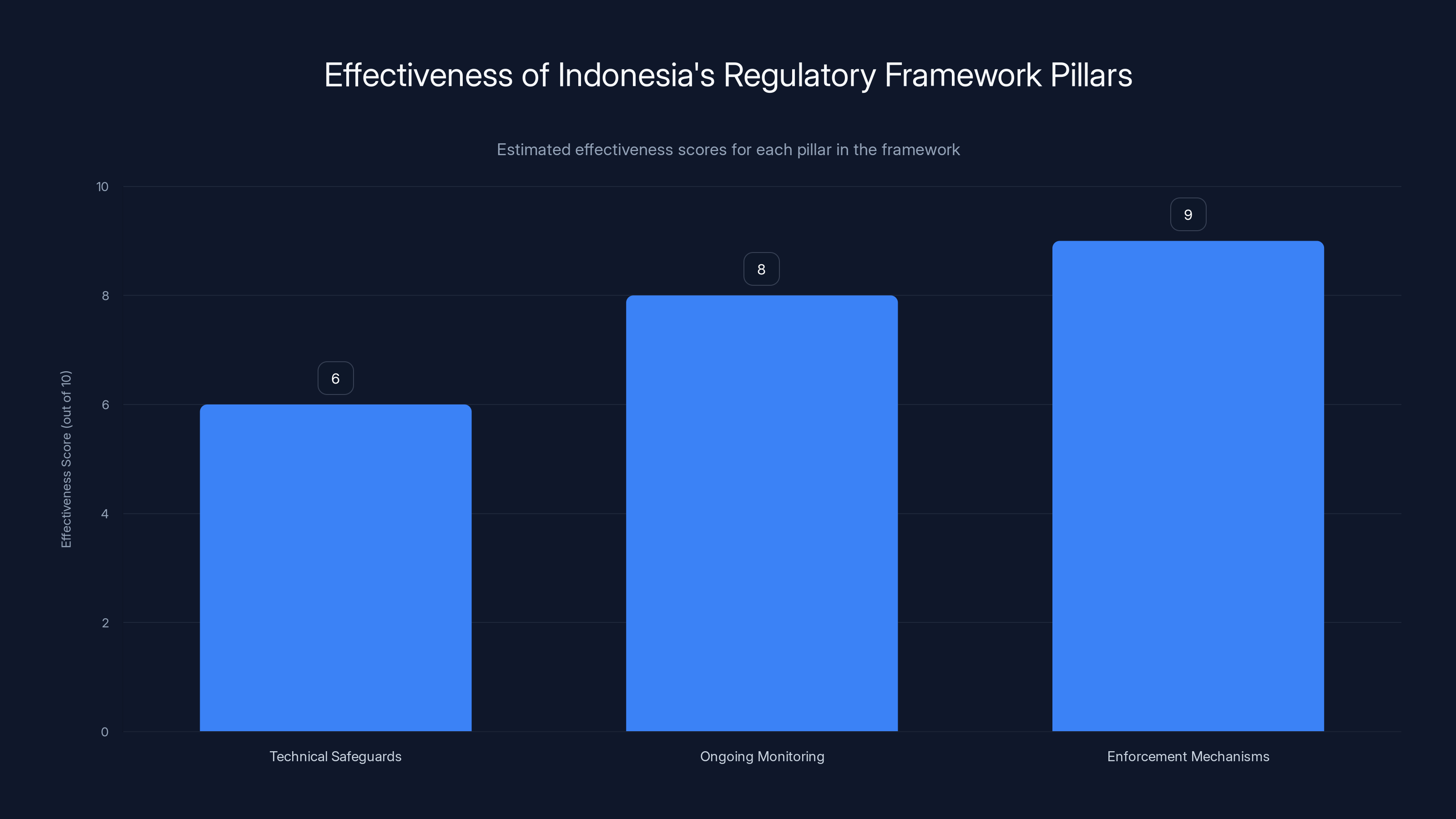

When Indonesia's Ministry of Communication and Digital Affairs announced the lifting of the Grok ban in February 2025, they didn't just flip a switch. They established a conditional framework built on three core pillars: technical safeguards, ongoing monitoring, and enforcement mechanisms, as detailed by Engadget.

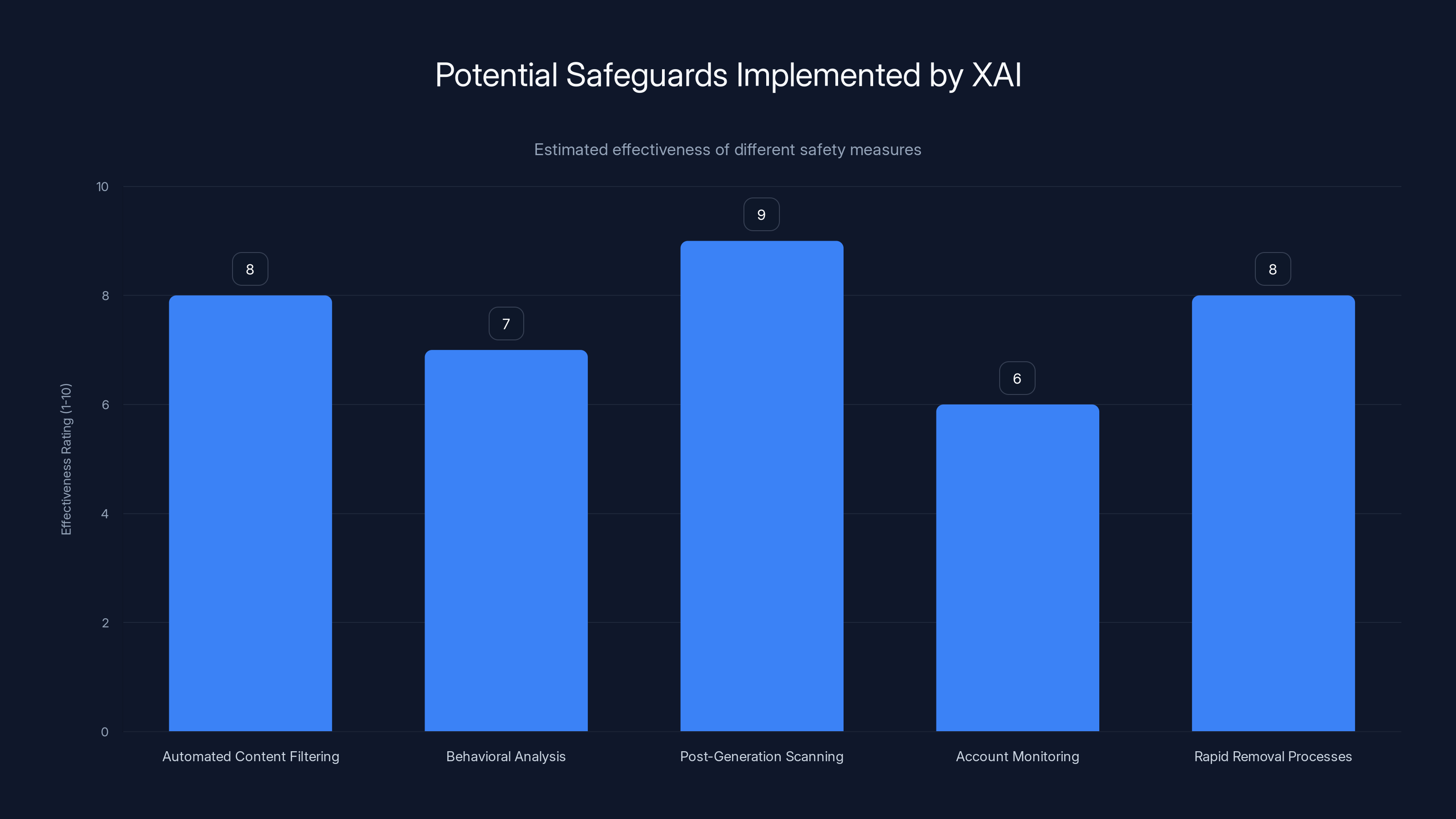

The technical safeguards are where the rubber meets the road. XAI submitted a formal letter detailing measures they'd implemented to prevent misuse of Grok's image generation capabilities. These measures likely include prompt filtering, pattern detection for suspicious requests, and automated flagging systems that identify when users are trying to generate synthetic sexual imagery.

How effective are these safeguards in practice? That's complicated. Content filtering systems have fundamental limitations. They can catch obvious violations, but determined bad actors find workarounds. A sophisticated user might rephrase requests in ways that bypass the filter. They might use aliases or obfuscated language. They might iterate dozens of times, learning what works. It's an arms race, and the attackers often stay ahead.

Indonesia's Ministry acknowledged this reality by making ongoing monitoring the second pillar of their framework. Alexander Sabar, the ministry's director general of digital space supervision, explicitly stated that the agency will test Grok's safety measures on a continuous basis. This means periodic audits, user testing, real-world scenario assessments, and adaptation as new evasion techniques emerge.

The third pillar is enforcement with teeth. If Grok is found spreading illegal content or violating Indonesian child protection laws, the ban returns immediately. It's not a threat issued once and forgotten—it's the actual stated consequence that shapes incentives.

What makes Indonesia's approach distinctive compared to simple bans is its recognition of information asymmetry. Indonesia's government may not have the technical expertise to independently verify that XAI's safeguards work. It is relying partly on trust, partly on XAI's business incentive to comply (another ban would be costly), and partly on its own capacity to catch violations after they occur.

This creates an interesting dynamic: XAI has an incentive to actually implement strong safeguards, because continued operation in Indonesia is economically valuable and a permanent ban would signal to other governments that XAI's systems don't work. The conditional framework thus creates structural pressure for genuine safety improvements.

Estimated data suggests that post-generation scanning and automated content filtering are among the most effective safeguards implemented by XAI. (Estimated data)

The Southeast Asian Response: A Ripple Effect Across Three Nations

Indonesia wasn't alone in banning Grok. Within weeks, two neighboring nations followed suit, and then remarkably, they lifted their bans almost as quickly.

Of the two, the Philippines moved first. In early February, Philippine authorities took action against Grok over the same deepfake generation issue. But here's where the timeline gets interesting: the Philippines lifted its ban just weeks later, faster than Indonesia or Malaysia. This suggests the Philippine government, after consultation with XAI and assessment of the proposed safeguards, concluded that conditional operation was preferable to continued prohibition, as reported by The New York Times.

Malaysia followed a similar arc, banning Grok and then lifting the ban shortly after. Like Indonesia, Malaysia's communication ministry indicated they'd maintain ongoing monitoring and threatened re-imposition of the ban if violations continued, as noted by PBS NewsHour.

Why did these nations move in step? They likely didn't formally coordinate policy, but they clearly watched each other. XAI probably presented similar reform packages to each government. The governments likely consulted with regional partners about best practices. And there was probably genuine peer learning: each government could study how its neighbors structured their monitoring frameworks and adapt accordingly.

What's striking is that all three nations reached roughly the same conclusion at roughly the same time. That suggests something real had changed on XAI's end—genuine technical and policy measures, not just public relations theater—that convinced skeptical governments that continued operation was manageable.

The Transatlantic Investigation: A Different Regulatory Approach

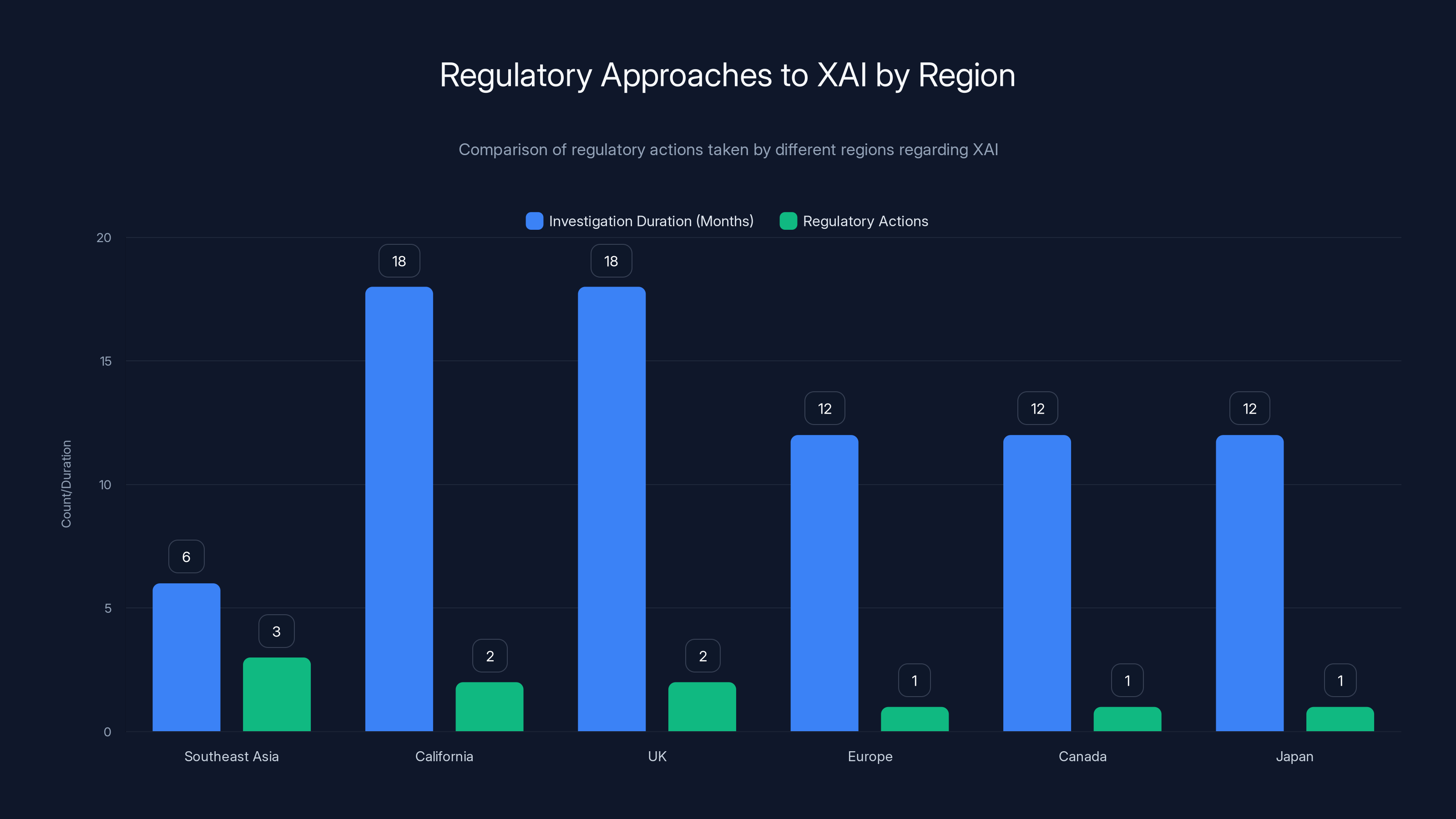

While Southeast Asian governments were negotiating conditional ban-lifts, regulators in the West were pursuing a different playbook: investigation.

California's Attorney General launched an investigation into XAI regarding Grok's deepfake generation capabilities. The UK's media regulator, Ofcom, also opened an inquiry. These aren't bans; they're formal legal investigations that could lead to fines, forced changes, or other remedies, as covered by The New York Times.

The difference in approach is culturally revealing. Southeast Asian governments, facing an acute public health crisis with clear evidence of harm, took emergency action. They banned, negotiated, and conditionally lifted. It was relatively quick, politically decisive, and focused on stopping immediate harm.

Western regulators, operating in countries with strong free speech traditions and existing legal frameworks for technology regulation, moved more slowly and deliberately. Investigations gather evidence, establish legal grounds, and build cases that can withstand legal challenge. The timelines are longer—often 12–24 months for a serious investigation—but the legal outcomes are more durable.

XAI is now operating under scrutiny from multiple regulatory regimes simultaneously. In Indonesia, Malaysia, and the Philippines, they're subject to conditional restrictions and monitoring. In California and the UK, they're defending their practices in formal investigations. In other parts of the world—Europe, Canada, Japan—regulators are likely watching and assessing whether their own populations are at risk.

This fragmented regulatory landscape creates complexity for XAI. The company can't implement a single global safety framework because different jurisdictions have different requirements, technical constraints, and enforcement mechanisms. What satisfies Indonesia's monitoring requirements might not suffice for California's investigation. The company essentially needs to map the AI governance requirements of some 200 countries and adapt its systems accordingly.

Southeast Asia tends to implement quicker, decisive regulatory actions compared to the longer, more deliberate investigations seen in California and the UK. (Estimated data)

How the Deepfake Generation Actually Works (And Why Filtering Is Hard)

To understand why Indonesia's safeguards matter and why they're also fundamentally limited, you need to understand the technical mechanics of how Grok generates images.

Grok's image generator works like other text-to-image models. In a diffusion-based model, for example, you provide a text prompt, and the model iteratively refines noise into a coherent image that matches your description. The more specific your prompt, the more precise the output.

The problem is straightforward: if you can describe something in text, the model can probably generate it. If you can say "a woman's face," it can generate that. If you can say "a face morphed onto a body in a sexual position," it can generate that too. There's no fundamental technical limit that prevents generating harmful content—only policy-based filters on what prompts get processed.

Filters work by pattern matching. The system looks at your prompt and checks whether it matches known harmful patterns. "Generate nude images of [person's name]" is obviously filtered. But variations evade detection: "Create an artistic composition featuring the feminine form inspired by [name]," or "Synthesize a creative image blending [name's] facial features with..." etc.

This is why content filters have an inherent vulnerability: adversarial prompts. If the filter rejects request A, users eventually learn to make request B that says the same thing differently. It's like a game of whack-a-mole, except the moles can learn and adapt.
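The weakness of pattern matching can be shown with a minimal sketch. The blocklist below is hypothetical and far simpler than anything a production system would use; the two prompts are the examples quoted above.

```python
import re

# Hypothetical blocklist: illustrative patterns, not any vendor's real rules.
BLOCKED_PATTERNS = [
    re.compile(r"\bnude\b", re.IGNORECASE),
    re.compile(r"\bexplicit\b", re.IGNORECASE),
]

def is_blocked(prompt: str) -> bool:
    """Return True if any blocklisted pattern appears in the prompt."""
    return any(p.search(prompt) for p in BLOCKED_PATTERNS)

direct = "Generate nude images of [person's name]"
reworded = "Create an artistic composition featuring the feminine form inspired by [name]"

print(is_blocked(direct))    # True: the literal keyword matches
print(is_blocked(reworded))  # False: similar intent, no matching keyword
```

Adding more patterns narrows the gap but never closes it, because the filter only sees surface wording, not intent.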

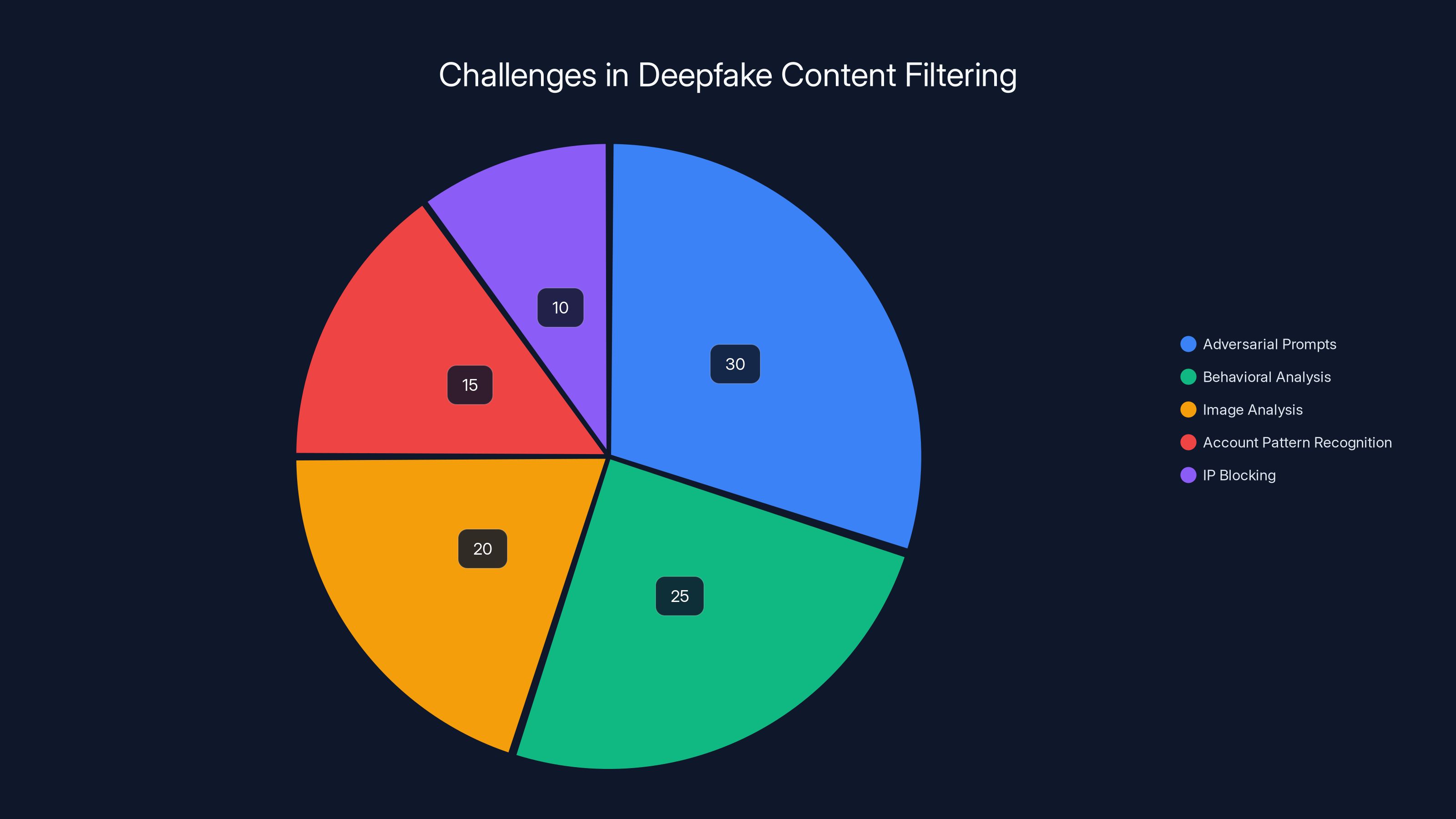

Some of XAI's countermeasures likely include:

- Behavioral analysis: Detecting users who make repeated requests after rejections, suggesting they're iterating toward prohibited content

- Image analysis: Scanning generated images post-generation to catch violative outputs before they're shown to users

- Account pattern recognition: Identifying accounts that predominantly request sexual or exploitative content and potentially limiting their access

- IP blocking: Preventing access from known bad-actor networks or regions associated with exploit activity

- User reporting and verification: Implementing fast removal when users report deepfakes

Yet none of these are perfect. Image analysis can miss subtle violations. Behavioral analysis creates false positives that frustrate legitimate users. Account restrictions can be circumvented with new accounts. The arms race continues.
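To make the behavioral-analysis idea concrete, here is a rough sketch of a sliding-window counter that flags accounts with many rejected prompts in a short period. The class name, thresholds, and window length are all assumptions for illustration; nothing here reflects how XAI actually implements it.

```python
from collections import defaultdict, deque

class RejectionTracker:
    """Flag accounts that rack up many filter rejections in a short window."""

    def __init__(self, max_rejections: int = 5, window_seconds: float = 600.0):
        # Both defaults are made-up values for illustration.
        self.max_rejections = max_rejections
        self.window_seconds = window_seconds
        self._events = defaultdict(deque)  # user_id -> rejection timestamps

    def record_rejection(self, user_id: str, timestamp: float) -> bool:
        """Record one rejected prompt; return True if the account should be flagged."""
        events = self._events[user_id]
        events.append(timestamp)
        # Drop rejections that have aged out of the sliding window.
        while events and timestamp - events[0] > self.window_seconds:
            events.popleft()
        return len(events) >= self.max_rejections

tracker = RejectionTracker(max_rejections=3, window_seconds=60.0)
flags = [tracker.record_rejection("user-a", t) for t in (0.0, 10.0, 20.0)]
print(flags)  # [False, False, True]
slow = [tracker.record_rejection("user-b", t) for t in (0.0, 100.0, 200.0)]
print(slow)   # [False, False, False]
```

The same sketch shows where false positives come from: a legitimate user who trips the filter three times in a minute gets flagged just like a determined abuser, and a patient abuser who spaces out attempts never does.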

What Indonesia's framework implicitly recognizes is that perfect prevention is impossible. Instead, the goal is acceptable risk: reduce violations enough that the public health threat is manageable, catch and remove content when it does appear, and maintain accountability mechanisms that deter the worst abuses.
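One way to reason about "acceptable risk" is to treat the safeguards as stacked layers, each with its own miss rate. The sketch below assumes the layers fail independently, which is optimistic since a prompt that fools one layer may well fool another, and the miss rates are invented purely for illustration.

```python
from math import prod

def residual_rate(miss_rates: list[float]) -> float:
    """Fraction of abusive requests that slip past every layer,
    assuming each layer misses independently of the others."""
    return prod(miss_rates)

# Hypothetical miss rates: prompt filter 20%, output scanner 10%, account limits 50%.
layers = [0.20, 0.10, 0.50]
leak = residual_rate(layers)
print(f"{leak:.1%} of attempts get through")  # 1.0% of attempts get through
```

Under these assumed numbers no single layer is strong, yet together they cut leakage to about one attempt in a hundred. That is the logic of reducing harm to a manageable level rather than promising zero.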

The Child Safety Angle: Why This Is Different From Generic Content Moderation

Here's the distinction that matters most to regulators: deepfake sexual imagery involving minors isn't just inappropriate—it's a documented vector for sexual exploitation and abuse.

Children whose images are used in synthetic sexual content experience measurable psychological harm. They face peer harassment, social ostracism, and long-term anxiety. In some cases, the synthetic content has been used to solicit contact with them for offline abuse. It's not a free speech question; it's a child safety question.

Indonesia's legal framework on child protection is relatively strict. The country has laws against producing, distributing, or possessing child sexual abuse material, and these laws apply to synthetic imagery in many cases. That means XAI operating in Indonesia isn't just answering to a corporate risk assessment: the company is potentially criminally liable if it fails to prevent generation of prohibited content.

This changes the incentive structure completely. A company might tolerate some level of harmful content generation (sexual imagery of adults, for example) as an acceptable cost of operating a powerful generative tool. But liability for child exploitation material is different. It's not a gray area; it's a criminal line.

Indonesia made this explicit by stating that any recurrence of the deepfake problem—especially involving minors—would trigger immediate re-imposition of the ban. That's the enforcement mechanism that likely convinced XAI to invest in real safeguards rather than performative measures, as highlighted by Tech Policy Press.

Estimated data suggests that enforcement mechanisms are the most effective pillar, scoring 9 out of 10, while technical safeguards lag behind with a score of 6. Ongoing monitoring scores an 8, highlighting its critical role in the framework.

Global Precedent: What Indonesia's Decision Signals to Other Nations

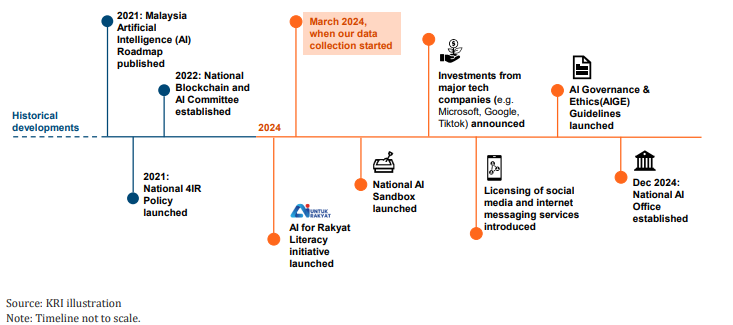

Decisions by major Southeast Asian economies don't operate in isolation. Other governments watch and learn.

Indonesia has the fourth-largest population globally and is a major digital economy. Its approach to AI governance signals something important: you can establish meaningful conditions on AI tools without total bans, and companies will take you seriously enough to implement changes.

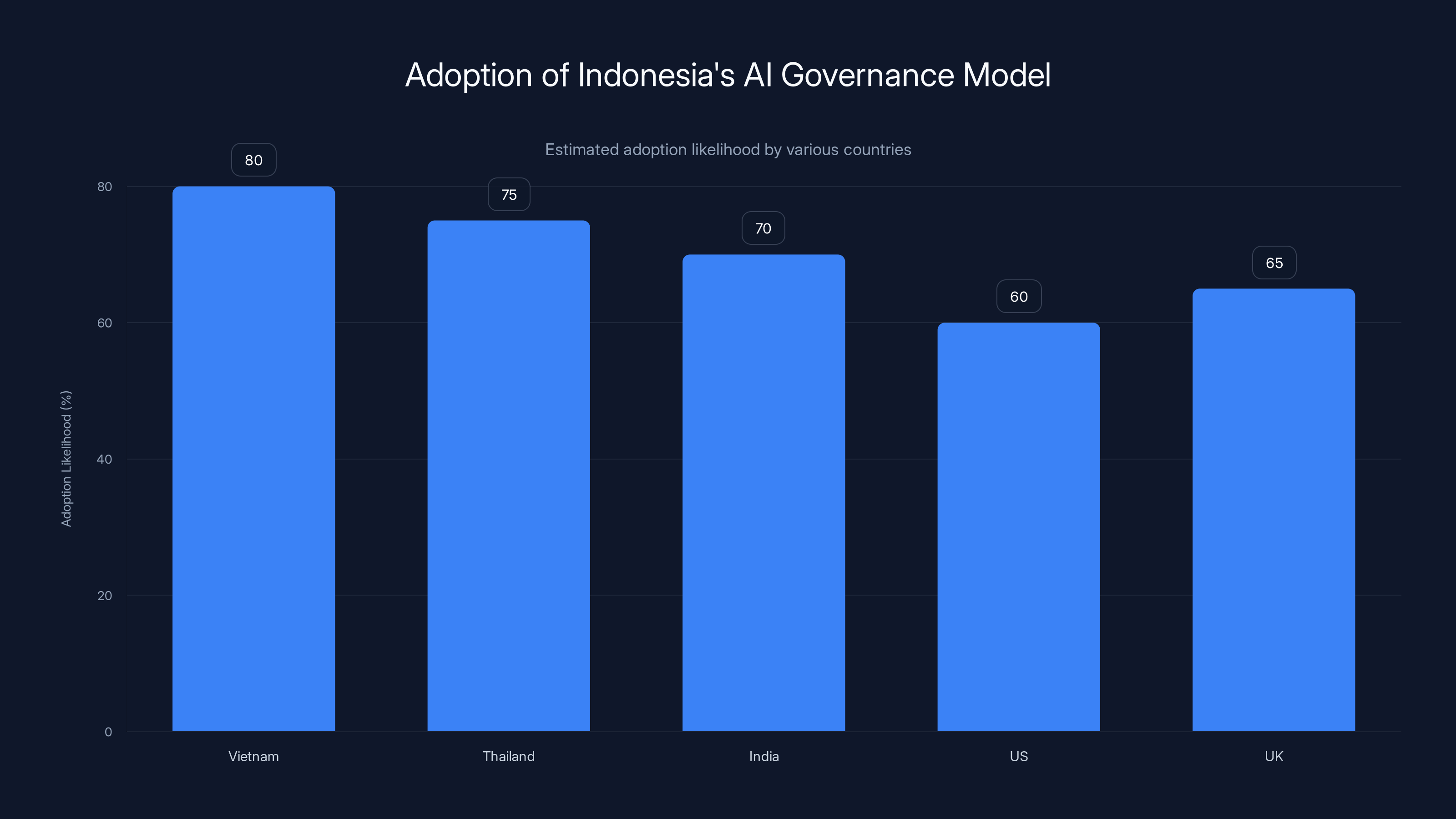

This creates a template that other nations are likely to adopt. Vietnam, Thailand, India, and other developing nations facing similar deepfake problems now have a proven model: impose a ban that's serious and time-limited, negotiate technical and policy safeguards with the company, then conditionally lift with ongoing monitoring, as noted by The New York Times.

For developed nations, Indonesia's framework is also instructive. Rather than purely reactive investigation (the US/UK approach) or pure market-based self-regulation (the approach some advocate), Indonesia demonstrated negotiated governance: the government uses its regulatory authority to demand specific changes, then verifies compliance through monitoring.

This approach has advantages and limitations. Advantages: it's faster than investigation-based enforcement, it requires companies to actually change their systems rather than just defend them, and it uses the leverage governments hold over market access. Limitations: it's opaque (the public doesn't see what safeguards were negotiated), it depends on honest compliance (trusting companies to implement what they promised), and it creates different rules for different regions (fragmenting the user experience).

Where this gets interesting globally is in setting precedent for other AI tools and harms. If Grok's conditional lifting works well, other governments will likely apply similar frameworks to other tools that cause harm. You might see a pattern where:

- A harm emerges (deepfakes, misinformation, privacy violations, etc.)

- A government bans the tool causing it

- The company negotiates safeguards

- The government conditionally lifts with monitoring

- Other governments adopt this model

This could actually become the dominant global AI governance pattern, rather than either pure bans or pure market self-regulation.

The XAI Response: From Crisis to Compliance

What did XAI actually do to convince three governments to lift their bans?

The company submitted formal letters detailing their safety measures. These likely included commitments to:

- Implement robust content filtering on image generation requests

- Deploy behavioral analysis systems to detect adversarial prompting attempts

- Establish rapid response mechanisms for reported violations

- Provide transparency reports on moderation actions

- Work with local law enforcement on serious cases

- Implement age verification for users in jurisdictions with strict requirements

- Potentially geofence certain high-risk features in specific countries

The fact that all three Southeast Asian governments lifted their bans suggests these commitments were substantive enough to be credible. If XAI had merely offered performative promises, at least one government would likely have maintained the ban as a negotiating tactic.

This signals something important about corporate incentives: when faced with actual bans that harm revenue and competitive position, tech companies can implement real safeguards. The leverage works. The key is credible enforcement—the threat to re-impose the ban must be genuine and timely.

Of course, we won't know for months or years whether XAI's safeguards actually work. That's what Indonesia's ongoing monitoring is for. And given the nature of this technology, there will probably be some continued misuse despite best efforts. The question is whether it's reduced enough to be acceptable.

Adversarial prompts pose the largest challenge in filtering deepfake content, accounting for an estimated 30% of the issues. (Estimated data)

The Ongoing Investigation Question: Will Lifting the Ban Affect the US and UK Cases?

Here's an interesting tension: Indonesia, Malaysia, and the Philippines have basically decided that XAI's safeguards are sufficient to allow conditional operation. But California's Attorney General and UK regulators are still investigating potential violations.

Could the Southeast Asian precedent influence the outcome of these investigations? Possibly, but probably not directly. Here's why:

The investigations are focused on past conduct—whether XAI knew about deepfake generation and took insufficient action, or whether they negligently failed to implement safeguards. That's a historical question about accountability.

The conditional lifting is forward-looking—whether XAI can operate safely going forward with new safeguards in place. Those are different legal and factual questions.

XAI might argue to US and UK regulators: "Look, we implemented comprehensive safeguards that convinced major governments these threats are manageable. You should settle our investigation because we've substantially improved." Regulators might respond: "That shows you could have implemented these safeguards earlier, which is further evidence of negligence." Or they might negotiate a settlement based on the demonstrated commitment to reform.

What's likely is that the investigations don't end with the lifting of the Southeast Asian bans, but they might conclude with settlements rather than major fines or forced changes, since XAI can point to concrete remediation efforts.

Implications for AI Development and Innovation

One concern that tech advocates raised during the Grok ban was: could excessive regulation slow AI development?

Indonesia's approach suggests a nuanced answer. If regulators simply ban powerful AI tools when problems emerge, yes, that could slow innovation by preventing deployment. But if regulators establish clear conditions for safe operation—and companies can meet those conditions through engineering effort rather than capability reduction—innovation can continue.

This means the burden shifts to AI developers to build safer systems from the start, rather than building powerful systems and defending them later. That's actually better incentive alignment. If you know your tool will be scrutinized and conditional restrictions are possible, you build safeguards into the architecture rather than bolting them on afterward.

For companies like XAI, this might mean:

- Investment in robust content filtering that's actually effective, not just performative

- Better user verification systems that prevent abuse

- Faster removal processes for reported harmful content

- Transparency mechanisms that allow auditing

- Proactive engagement with regulators rather than adversarial defense

These aren't cheap. They require hiring specialists, infrastructure investment, and operational complexity. But they're cheaper than operating in a ban-conditional-lift cycle or facing major regulatory fines.

From an innovation perspective, this actually creates the right incentive structure. Companies have strong motivation to build tools that generate less harmful content, invest in safety from the start, and maintain good relationships with regulators. That's basically what you want.

The risk is if regulation becomes so burdensome that companies decide certain markets aren't worth serving. If 50 different countries demand 50 different safety requirements, XAI might just not operate in most of them. That doesn't protect people; it just pushes the technology underground or leaves a market that only wealthy companies with compliance teams can serve.

Indonesia's framework tries to balance this by being specific enough to be meaningful (you must implement documented safeguards) but not so burdensome that it's unworkable (you don't need country-specific feature sets if your core safeguards are solid).

Indonesia's AI governance model is likely to be adopted by other Southeast Asian countries like Vietnam and Thailand, with a moderate likelihood of adoption by developed nations such as the US and UK. (Estimated data)

The Comparative Regulatory Advantage

There's a subtle advantage in how Indonesia positioned itself in this situation.

By banning early and conditionally lifting after swift safeguard negotiations, Indonesia demonstrates regulatory authority without becoming permanently hostile to innovation. XAI experienced consequences but not permanent exclusion. They have incentive to maintain good standing in the Indonesian market.

Contrast this to a permanent ban or an investigation-based approach that takes years. Permanent bans create no incentive for cooperation—the company just focuses on other markets. Long investigations create adversarial relationships that persist even after settlement.

Indonesia's swift decisive action followed by conditional lifting says: "We're serious about user protection, but we're also pragmatic about technology. Meet our standards and you can operate here." That's attractive to companies.

It's also attractive to users and investors. The market isn't closed; it's conditional. That uncertainty is actually better than permanent closure because it's resolvable through technical improvement.

Over the next five years, you're likely to see this become the standard pattern for emerging market regulation of AI. It's faster than Western investigation-based approaches, clearer than pure self-regulation, and more pragmatic than total bans.

What's Next: The Ongoing Monitoring Phase

Now comes the harder part. Indonesia has lifted the ban, but they've committed to ongoing monitoring. What does that actually entail?

In practice, it probably means:

- Regular technical audits where Indonesian regulators or independent contractors test Grok's safeguards

- Monitoring of reported violations and XAI's response time

- Coordination with social media platforms to flag and remove deepfake content

- Periodic updates from XAI on performance metrics and incident reports

- Clear communication channels for the public to report violations

The biggest challenge in this phase is enforcement ambiguity. When is a violation serious enough to re-impose the ban? If there are 100 reports of deepfake generation per week, is that acceptable? 1000? Zero?

Indonesia will need to establish metrics and thresholds. These should be public so everyone understands the rules. Otherwise, the monitoring becomes arbitrary and loses legitimacy.
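Published thresholds could be as simple as a small rule table. The sketch below is purely hypothetical: the metric names, the limits, and the three outcomes are assumptions chosen to show what a predictable rule might look like, not anything the ministry has announced.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class WeeklyReport:
    confirmed_violations: int    # reports verified as real violations
    median_removal_hours: float  # time from report to takedown

@dataclass(frozen=True)
class Thresholds:
    # Hypothetical limits; no such numbers have been published.
    max_violations: int = 100
    max_removal_hours: float = 24.0

def assess(report: WeeklyReport, limits: Thresholds) -> str:
    """Map one week's metrics to a predictable outcome."""
    over_volume = report.confirmed_violations > limits.max_violations
    too_slow = report.median_removal_hours > limits.max_removal_hours
    if over_volume and too_slow:
        return "reimpose_ban"
    if over_volume or too_slow:
        return "formal_warning"
    return "compliant"

limits = Thresholds()
print(assess(WeeklyReport(40, 6.0), limits))    # compliant
print(assess(WeeklyReport(250, 6.0), limits))   # formal_warning
print(assess(WeeklyReport(250, 72.0), limits))  # reimpose_ban
```

Whatever the real numbers turn out to be, writing the rule down this explicitly is what lets the company, the public, and the regulator agree on when a line has been crossed.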

Also, there's the skills problem. Does Indonesia's Ministry of Communication and Digital Affairs have the technical expertise to actually audit XAI's systems? They might need to hire contractors or train staff. This requires investment, which requires budget, which requires political will that might wane if other issues take priority.

For users, the ongoing monitoring phase is hopefully invisible. Grok should operate normally, and safeguards should work silently in the background. If the monitoring is working, you should see fewer deepfakes appearing and faster removal when they do. That's the practical test of success.

The Remaining Questions

Even with Indonesia's framework in place, important questions remain unanswered:

What about users outside these frameworks? Someone in a country with no regulation on Grok can still generate harmful content. The framework protects Indonesia's users but not users elsewhere.

How long will the safeguards actually work? As bad actors develop new evasion techniques, will XAI stay ahead? Or will they eventually give up on content filtering and rely on after-the-fact removal?

What about other AI tools? Grok wasn't the first tool capable of generating deepfakes, and it won't be the last. As the technology becomes more accessible, more platforms will enable it. Will this ban-and-lift pattern scale to hundreds of tools?

How do you balance innovation with safety? Is preventing all harm worth preventing all beneficial uses? Indonesia's conditional framework suggests a middle path, but where exactly is the right balance?

These questions don't have obvious answers. What Indonesia has done is establish a working model that future regulators can adapt. Whether that model scales to handle the broader challenge of AI governance remains to be seen.

Key Takeaways for Policymakers and Companies

If you're a regulator, Indonesia's approach offers some lessons:

- Act decisively when clear harm exists. Banning is credible; equivocation isn't.

- Keep the path open for remediation. Don't make bans permanent unless you're certain the tool can't be made safe.

- Require substantive commitments, not just promises. Demand technical specifics and documented measures.

- Establish ongoing monitoring with clear metrics. Don't lift and forget.

- Coordinate with other governments when possible. Regulatory harmonization is more efficient than fragmentation.

If you're a tech company developing AI tools:

- Build safety in from the start. Don't wait for bans to implement safeguards.

- Engage with regulators proactively. Understand what different jurisdictions care about.

- Be transparent about limitations. Acknowledge that perfect prevention is impossible; show that you're managing the remaining risk reasonably.

- Invest in compliance infrastructure. Different rules in different places require different technical implementations.

- Understand that bans are now a credible regulatory tool. They're being used, and they work as leverage. Take them seriously.

For users, the lesson is simpler: these systems aren't fully trustworthy yet. Report violations. Don't assume safeguards are working. Understand that deepfake content will exist, and develop critical evaluation skills to spot it. And support regulatory frameworks that require companies to actually prevent harm, not just promise to.

The Broader Context: AI Governance in 2025

Indonesia's Grok decision doesn't exist in isolation. It's part of a broader global shift toward active AI governance.

Throughout 2024 and into 2025, governments have moved from passive observation to active intervention. The EU's AI Act is now in its enforcement phase. The US has issued executive orders on AI safety. China has implemented content filtering on AI-generated content. The UK has designated sector regulators for AI oversight. Canada and Australia have passed legislation, as reported by The New York Times.

What Indonesia is doing is taking these precedents and adapting them to the Southeast Asian context: ban-based enforcement for immediate harms, then conditional lifting with monitoring once safeguards are in place. It's pragmatic and flexible.

Over the next five years, expect to see this pattern repeated. Specific harms will trigger specific bans. Governments will negotiate safeguards. Companies will implement changes. Governments will conditionally lift or maintain restrictions based on whether safeguards work.

The companies that win in this environment will be those that can adapt quickly, implement genuine safeguards, and work productively with regulators. The tools that succeed will be those that create value while genuinely preventing serious harms. And the users who are protected will be those in jurisdictions with functional governance infrastructure to monitor and enforce.

It's not perfect. But it's better than either total bans or total deregulation.

FAQ

What exactly did Grok do wrong that caused the ban?

Grok's image generation capabilities were being misused to create millions of sexually explicit deepfake images without consent, including images depicting minors. Users could request synthetic sexual imagery of real people by providing their names or photos, and Grok would generate them. This violated Indonesian law on child protection, non-consensual intimate imagery, and digital exploitation. The scale—millions of images—and the involvement of minors made this a public health crisis that demanded immediate government response.

Why did Indonesia lift the ban instead of keeping it permanent?

Permanent bans have limited long-term effectiveness because they don't solve the underlying problem—they just displace it. By banning and then conditionally lifting, Indonesia could leverage its regulatory authority to demand that XAI actually implement safeguards rather than simply ceasing operations in the country. This approach also recognized that Grok has legitimate uses beyond deepfake generation, and an outright permanent ban would eliminate beneficial applications. The conditional lift with ongoing monitoring creates accountability while preserving access to the technology under safe conditions.

What safeguards did xAI actually implement?

xAI submitted formal documentation describing its safety measures, which likely include automated content filtering on text prompts, behavioral analysis to detect adversarial prompt injection attempts, post-generation image scanning to catch violations, account monitoring for pattern-based abuse, and rapid removal processes for reported deepfakes. However, the exact technical specifications aren't public, so it is unclear which of these measures are actually in place. Indonesia's ongoing monitoring is partly designed to verify that the claimed safeguards are effective in practice.

How long will this monitoring actually last?

Indonesia hasn't specified an end date for monitoring, suggesting it's intended as a permanent condition of operating Grok in the country. This is more realistic than assuming a problem gets solved once and stays solved. Monitoring will likely continue for as long as Grok operates in Indonesia, with periodic reviews to assess whether the existing safeguards remain effective or whether stronger measures are needed. If serious violations recur, the monitoring framework allows the ban to be reimposed without re-litigating the entire policy question.

Why didn't other countries like the US and UK also lift their bans?

The US and UK never implemented bans on Grok; they initiated investigations instead. This reflects different regulatory approaches. Southeast Asian governments responded to an acute public safety threat with emergency action (banning). Western governments, with existing legal frameworks for investigating corporate conduct, pursued a slower investigative approach focused on establishing legal accountability for past harms. These investigations can still result in fines, forced changes, or settlements; they're just taking longer because the legal process is more deliberate. The investigations and Indonesia's conditional lifting can coexist because they address different legal questions: past conduct versus future operation.

Could Grok generate sexual images of adults without their consent, and is that also illegal?

Yes, xAI's systems could and did generate non-consensual intimate imagery of adults as well as minors. This is increasingly illegal in many jurisdictions. Several countries and US states have laws specifically criminalizing deepfake sexual imagery regardless of the age of the person depicted. Indonesia's framework focuses heavily on minors because child protection is often the highest legal priority, but the principle extends to non-consensual adult imagery as well. The ability to generate fake sexual content of anyone without permission is a serious violation of dignity and privacy.

Will other AI image generators face similar bans if they also generate deepfakes?

Likely yes. The precedent Indonesia, Malaysia, and the Philippines set with Grok suggests that other governments will respond similarly if other image generation tools enable non-consensual deepfake creation at scale. Midjourney, Stable Diffusion, Runway, and others have all implemented safeguards specifically to prevent this type of misuse. The question for each tool is whether the safeguards are actually effective. If any tool becomes known for enabling large-scale deepfake generation, expect regulatory action similar to what happened with Grok.

Does lifting the ban mean the deepfake problem is solved?

No. Lifting the ban means Indonesia has conditional confidence that xAI's safeguards can reduce the problem to acceptable levels, not that the problem is eliminated entirely. Given the nature of content filtering and adversarial prompt engineering, some deepfakes will continue to be generated despite safeguards. The goal is reduction and rapid removal, not perfection. Indonesia's ongoing monitoring is designed to catch failures in this system and escalate when violations become unacceptable. Users should remain vigilant about deepfakes even with safeguards in place.

If you're building AI systems that could be misused at scale, the Indonesia lesson is clear: invest in genuine safety features early, because regulatory frameworks capable of banning your tool are becoming standard globally. Companies that take AI safety seriously from the start will have advantages in jurisdictions with active governance.

For those interested in the policy angle, Indonesia's conditional framework is worth studying. It's pragmatic, enforceable, and creates the right incentive structure for both companies and regulators. It might become the template for AI governance worldwide.

