Introduction: The Digital Casino We Can't Ignore

Last year, a phrase started showing up in courtrooms that should've made every parent sit up straight. A lawyer described social media platforms as "digital casinos." Not metaphorically. Literally.

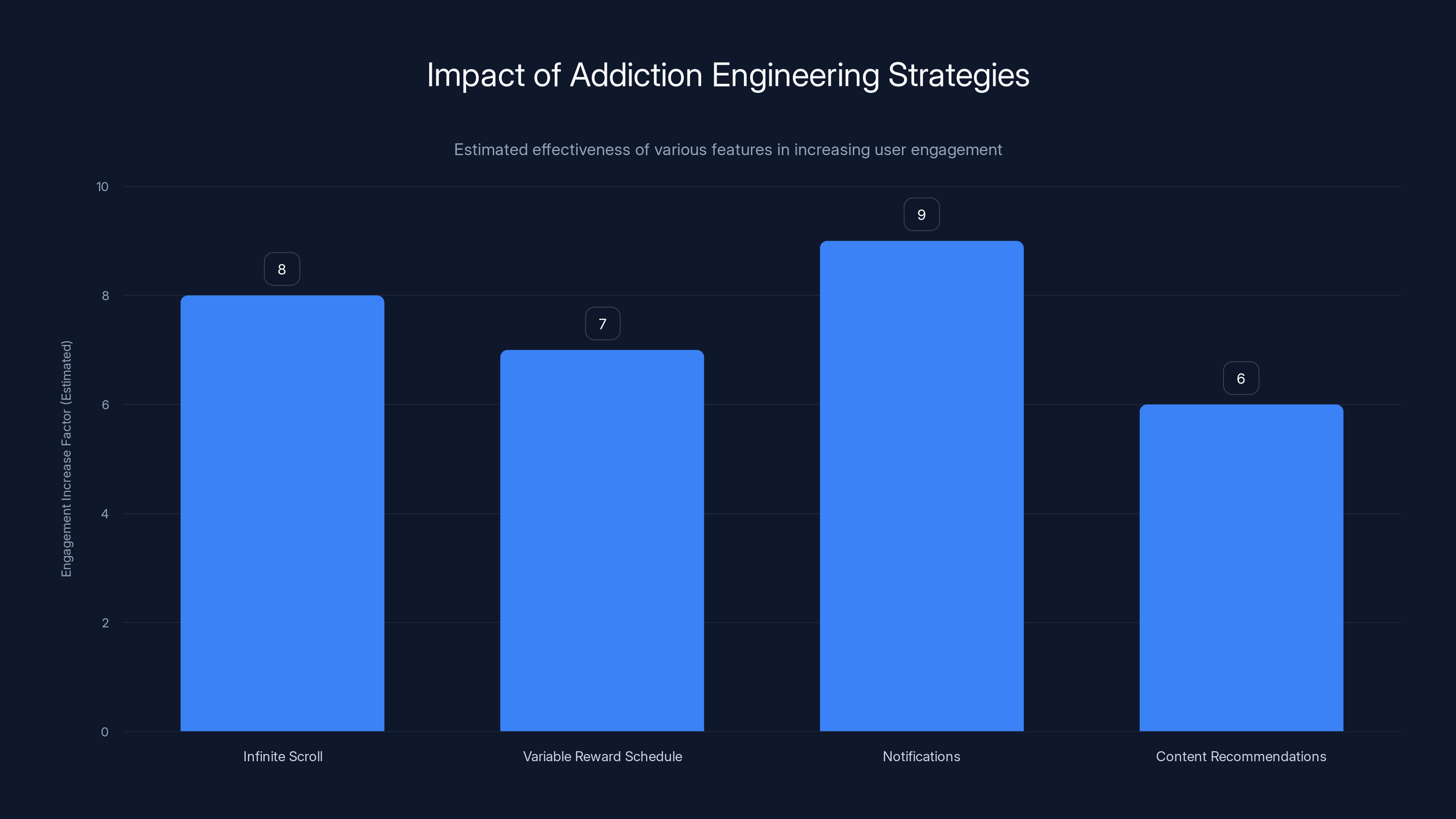

The comparison isn't wrong. Social media companies have engineered their platforms using the exact same psychological techniques that Las Vegas uses to keep people at slot machines. Infinite scroll. Variable rewards. Notifications that light up dopamine pathways. The only difference? The stakes are children's brains.

This isn't hyperbole anymore. It's the core argument in a landmark lawsuit against Meta and YouTube that could reshape how billions of people interact with their phones. And it's moving through the courts right now.

The case hinges on a simple but devastating claim: Meta and YouTube didn't just accidentally hook kids on their platforms. They intentionally designed features knowing they'd trap young users in addictive patterns. That's not a business strategy. That's what happens when you prioritize engagement metrics over human wellbeing.

What makes this lawsuit different from previous attempts to regulate social media is the specificity. We're not talking about vague claims about mental health. We're talking about documented design choices. We're talking about internal emails and research showing that executives actually knew their products were damaging young people. We're talking about a playbook so calculated that it mirrors addiction science textbooks.

This article breaks down everything you need to know about what's happening, why it matters, and how it could fundamentally change not just social media, but the entire tech industry. Because if Meta and YouTube lose this case, every platform optimizing for "engagement" should get nervous. Very nervous.

TL;DR

- The Core Claim: Meta and YouTube intentionally engineered addiction mechanisms in their platforms targeting children and teens

- The Evidence: Leaked internal research, former executive testimony, and design documentation show companies knew their products caused harm

- The Scale: Billions of dollars in potential damages, plus the possibility of forced platform redesigns

- The Precedent: This lawsuit could set the standard for how all tech companies must disclose their addictive design patterns

- The Timeline: Multiple cases are moving through state and federal courts simultaneously, with some reaching critical phases in 2025

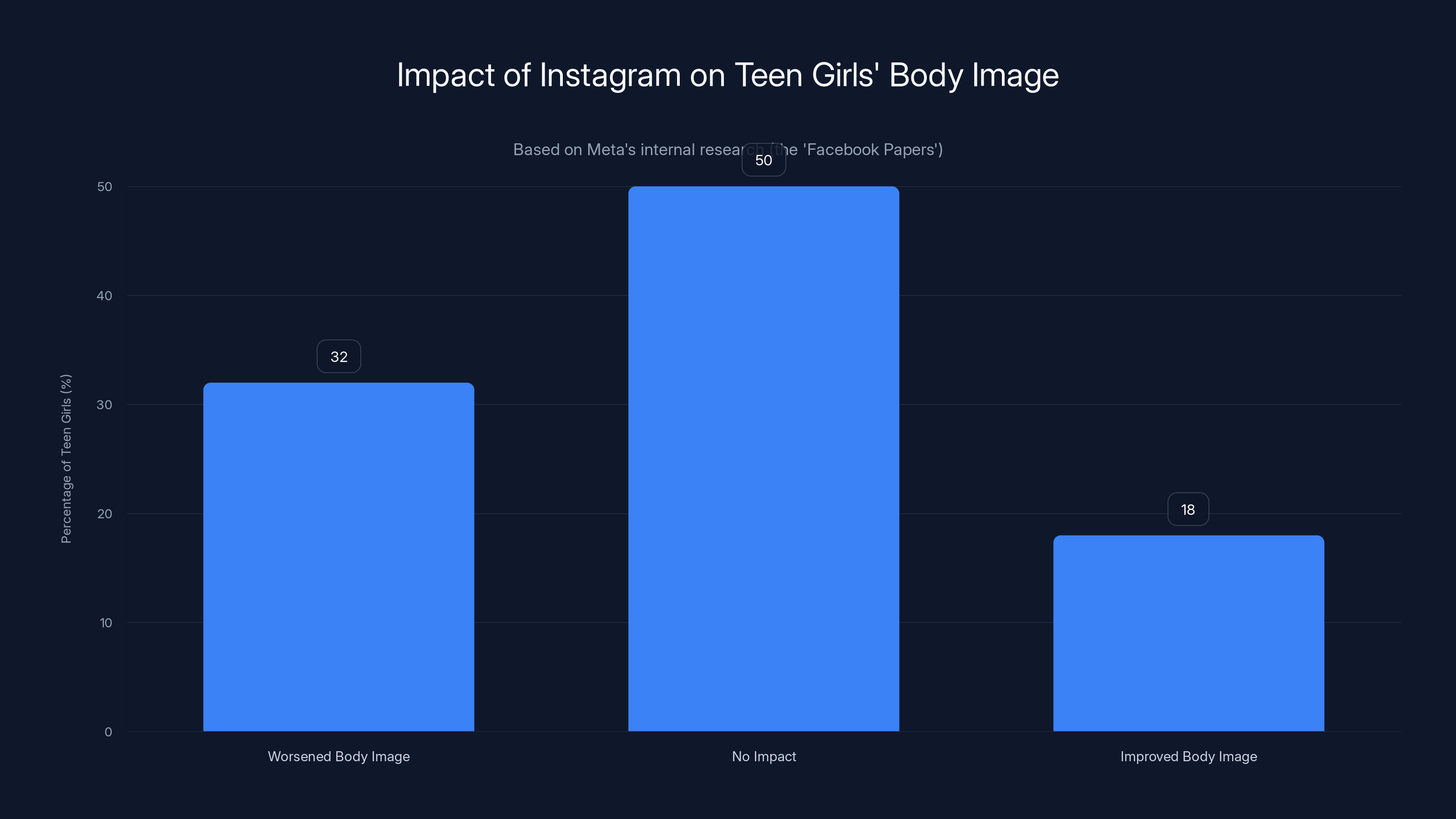

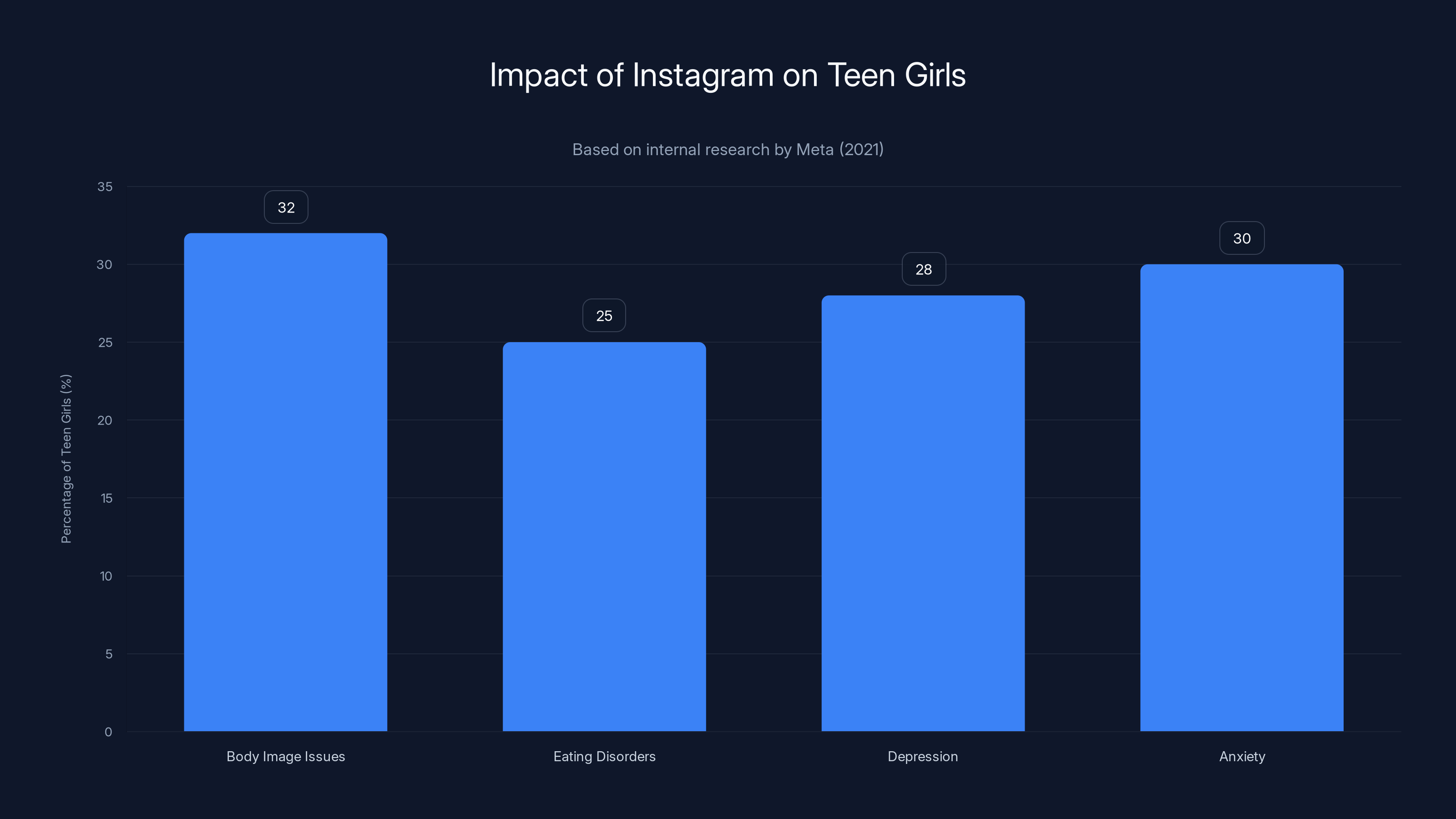

According to Meta's internal research, 32% of teen girls reported that Instagram worsened their body image issues, highlighting significant psychological impacts.

Understanding the Addiction Engineering Strategy

Before you can understand what Meta and YouTube did wrong, you need to understand what they did right. Because they didn't accidentally create addictive platforms. They deliberately constructed them using psychological principles that neuroscientists study when researching substance addiction.

Take the infinite scroll feature. That's not a bug. It's a carefully engineered trap. In a conventional design, you would reach the bottom of your feed, stop, and wait for new content. Instagram and TikTok don't do that. They keep pulling fresh content up from below, eliminating the natural stopping point your brain was expecting. Your brain never gets a "finished" signal. So you keep scrolling.

This pairs with what psychologists call a "variable reward schedule" (more formally, a variable-ratio reinforcement schedule). In psychology, this is how you create the strongest possible habit loops. You don't reward every action equally. You randomize the rewards. Sometimes you scroll three times and hit a video that makes you laugh. Sometimes you scroll fifty times. That unpredictability makes your brain work harder, crave more, and return more often.

Las Vegas learned this in the 1960s. Slot machines are way more addictive when you don't know exactly when you'll win. Meta learned it too. They borrowed the same principles and applied them to children and teenagers.
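To make the variable reward schedule described above concrete, here is a minimal simulation. It is a sketch under stated assumptions, not a model of any real platform: the function names, the average of 10 scrolls per reward, and the sample size are all invented for illustration. It compares a fixed schedule with a variable one that has the same average payoff rate.

```python
import random
import statistics


def fixed_ratio_gaps(ratio, n_rewards):
    """Fixed schedule: a reward arrives after exactly `ratio` scrolls, every time."""
    return [ratio] * n_rewards


def variable_ratio_gaps(mean_ratio, n_rewards, seed=0):
    """Variable schedule: each scroll pays off with probability 1/mean_ratio,
    so the gap between rewards is unpredictable but averages `mean_ratio`."""
    rng = random.Random(seed)
    p = 1.0 / mean_ratio
    gaps = []
    for _ in range(n_rewards):
        scrolls = 1
        while rng.random() >= p:   # no reward on this scroll, keep going
            scrolls += 1
        gaps.append(scrolls)
    return gaps


# Illustrative numbers only: one reward per 10 scrolls on average.
fixed = fixed_ratio_gaps(10, 5000)
variable = variable_ratio_gaps(10, 5000)

print("fixed:    mean", statistics.mean(fixed),
      "stdev", statistics.pstdev(fixed))
print("variable: mean", round(statistics.mean(variable), 1),
      "stdev", round(statistics.pstdev(variable), 1))
```

Both schedules hand out rewards at roughly the same average rate. The difference is the spread: on the fixed schedule you always know when the next reward is due, while on the variable one the next scroll might be the winner or the fiftieth might be. That uncertainty is the property the slot machine comparison rests on.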

The Notification Strategy That Won't Stop

Notifications are how social media keeps you plugged in when you're not even using the app. Your phone buzzes. Your brain shoots out dopamine. You check what you missed. Suddenly you're back in the app for another hour.

But here's the thing: Meta and YouTube control exactly when notifications go out. They could space them out. They could make them less intrusive. They don't, because engagement is everything. So notifications are programmed to hit at moments calculated to pull you back in. When you're most likely to return. When you're most vulnerable to staying.

For teenagers, this is especially brutal. Teen brains are still developing their impulse control centers. The prefrontal cortex doesn't fully mature until your mid-twenties. So a 15-year-old getting a notification that says "5 people are talking about your post" isn't just getting a message. They're getting a scientifically calculated jab at their reward system at a moment when they're neurologically least equipped to resist it.

Algorithmic Amplification of Outrage

Here's something Meta's own researchers discovered: Outrage drives engagement. Anger keeps people scrolling longer than happiness does. So the algorithm learned to amplify content that triggers emotional responses.

This isn't accidental either. When you design an algorithm whose entire job is to maximize time spent, and anger keeps people on the platform longer than contentment, the algorithm will naturally start surfacing angrier content. It'll recommend that heated argument over the cute dog video. It'll push the conspiracy theory over the educational post.

For kids, this creates a feedback loop nobody talks about. You're not just scrolling social media. You're scrolling an algorithm that's been trained to show you the most emotionally volatile content possible. You're marinating in a feed specifically designed to make you upset, because upset means engaged, and engaged means ad impressions sold.

Young people with developing emotional regulation systems are particularly vulnerable. They don't have the life experience to contextualize what they're seeing. They don't have the emotional tools to process constant exposure to distressing content. And the algorithm doesn't care. It has one job: keep them scrolling.
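The ranking logic described in this section can be illustrated with a toy feed. This is a hypothetical sketch, not Meta's or YouTube's actual code: the `Post` fields, the `predicted_seconds` score, and every number below are made up. It shows only that when the sort key is predicted time spent, whatever holds attention longest rises to the top, whatever its emotional tone.

```python
from dataclasses import dataclass


@dataclass
class Post:
    title: str
    posted_at: int             # hypothetical timestamp; larger means newer
    predicted_seconds: float   # hypothetical model output: expected time spent


def chronological_feed(posts):
    """Newest first, regardless of how engaging each post is predicted to be."""
    return sorted(posts, key=lambda p: p.posted_at, reverse=True)


def engagement_feed(posts):
    """Highest predicted time spent first; nothing else enters the objective."""
    return sorted(posts, key=lambda p: p.predicted_seconds, reverse=True)


# Invented example data: the argument is oldest but predicted to hold attention longest.
posts = [
    Post("cute dog video", posted_at=3, predicted_seconds=20.0),
    Post("educational explainer", posted_at=2, predicted_seconds=15.0),
    Post("heated argument", posted_at=1, predicted_seconds=45.0),
]

print([p.title for p in chronological_feed(posts)])
print([p.title for p in engagement_feed(posts)])
```

Nothing in `engagement_feed` mentions anger. The heated argument moves to the top simply because, in this made-up data, it scores highest on the one metric the sort uses.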

The Evidence: What Internal Documents Reveal

The strongest argument in this lawsuit isn't philosophical. It's documentary. Meta and YouTube have internal research that proves they knew. They knew their platforms were addictive. They knew they were targeting young people. They knew it was harmful. And they chose to do it anyway.

This is the nuclear moment for these companies. Because knowing and doing nothing is worse than not knowing at all. It moves this from negligence to malice. It moves this from a problem to a cover-up.

The Facebook Papers: Internal Research on Harm

In 2021, leaked internal documents showed that Meta had conducted extensive research on how Instagram affects teenage girls. The findings were damaging. The research showed that Instagram makes body image issues worse. It contributes to eating disorders. It increases depression and anxiety in young women. Instagram's own researchers found that 32% of teen girls said that when they felt bad about their bodies, Instagram made them feel worse.

But here's the crucial part: When these researchers presented their findings internally, Meta didn't kill Instagram. Meta didn't redesign it to be less harmful. Meta suppressed the research. They kept it quiet. They didn't change the product. They just made sure the public didn't know what they knew.

That's different from building a potentially harmful product. That's different from making mistakes. That's knowing about harm and choosing profit anyway.

Former Executive Admissions

Sean Parker, Facebook's first president, basically confessed in a 2017 interview. He said the platform was built to exploit a vulnerability in human psychology. He said the guiding question was how to consume as much of users' time and conscious attention as possible. He said the people who built it understood this consciously and did it anyway.

Jamie Byrne, ex-Instagram manager, went further. She acknowledged that Instagram's features were designed with the express purpose of maximizing time spent on platform. Every feature added another hook. Every update made the product more compulsive.

These aren't anonymous sources. These aren't conspiracy theories. These are people who built these systems, admitting in public that they built them to be addictive. That they knowingly exploited psychology to trap people, especially young people.

When the people who created the system admit it was designed this way, you don't have an argument anymore. You have a confession.

Design Documents and Feature Rollouts

The lawsuit also relies on design documentation showing feature development specifically aimed at increasing daily active users and time on platform. Examples of this kind of feature across the industry include streaks such as Snapchat's Snapstreaks (which reset if you miss a day), Stories (ephemeral content that creates urgency to check back), and algorithmic feeds (which replace chronological feeds with content ranked for engagement rather than content from people you actually follow).

Each of these features was a calculated decision. Each was tested. Each was optimized. None of them were accidents. The companies have the emails, the meeting notes, the A/B test results showing which design increases addiction metrics the most.

That documentation is now evidence. And you can't explain your way out of documentation.

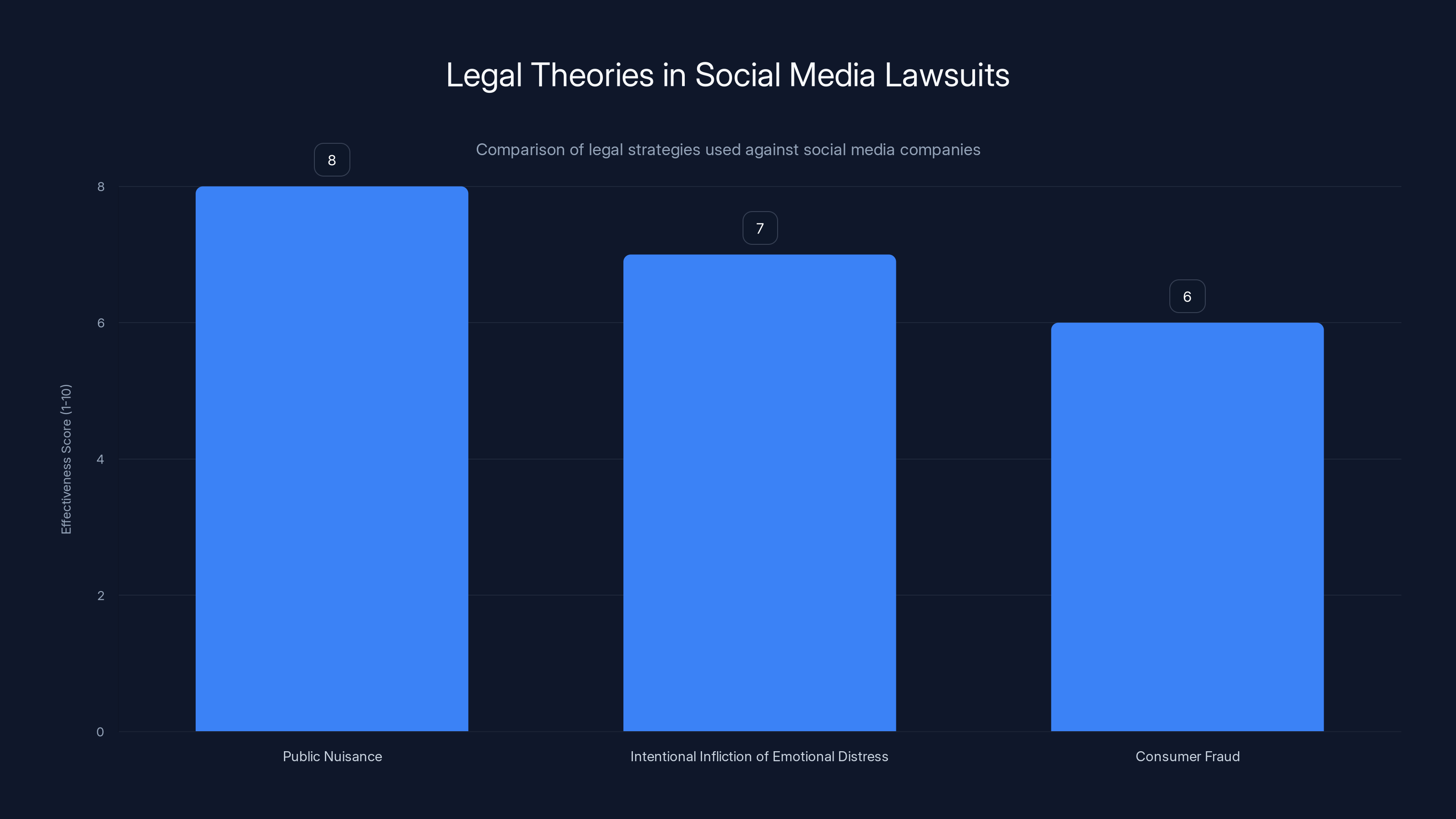

Public nuisance claims are considered the most effective strategy due to their broad applicability and lower burden of proof. (Estimated data)

The Legal Framework: Why These Cases Are Different

Social media companies have survived regulatory threats before. They've faced investigations, public criticism, Congressional hearing soundbites that go nowhere. Why is this lawsuit different?

Because the legal strategy is different. Instead of arguing about the existence of harm (which is now beyond debate), these lawsuits argue about the intentionality of the companies. They're not saying "your product is bad." They're saying "you knew your product was bad and sold it anyway."

This shifts the theory of the case from ordinary product liability toward consumer fraud and intentional harm.

Public Nuisance Claims

One angle the lawyers are using is the "public nuisance" framework. This is the same legal theory used to sue tobacco companies. The argument: Social media platforms, by their design and operation, create a widespread condition that causes harm to children and society.

Public nuisance law is powerful because it doesn't require you to prove individual harm to individual people. It only requires you to show that the defendant's conduct creates a widespread danger to public health or safety. That's way easier to prove when you can point to billions of users and documented psychological harm mechanisms.

Intentional Infliction of Emotional Distress

Another theory focuses on the intentional design and deployment of features known to cause emotional distress, particularly in vulnerable populations like teenagers. The argument is straightforward: Meta and YouTube knew these features cause anxiety, depression, and self-harm behaviors in young people, and they deployed them anyway for profit.

This requires proving knowledge and intent. But we have the leaked research and executive admissions. We have the documentation. We have the testimony. Proving intent stops being theoretical when the company's own researchers documented that their product increases depression, and the company did nothing.

Consumer Fraud Claims

Parents didn't consent to their children being targeted with addictive design. They consented to their kids using "social media." They didn't consent to psychological manipulation. The fraud argument is that Meta and YouTube misrepresented what their platforms actually are. They're not social networks. They're addiction delivery systems designed to exploit developing brains.

If you sold someone a device claiming it was safe when you had internal research showing it was dangerous, that's fraud. That's exactly what happened here.

The Scope of Harm: Mental Health Crisis in Children

You can't understand why this lawsuit matters without understanding the scale of the problem it's responding to. This isn't about a few kids having too much screen time. This is about a mental health crisis that coincides almost perfectly with the rise of social media.

Depression and Anxiety Epidemic

Teen depression and anxiety rates have tripled since Instagram launched. That's not a correlation you can easily dismiss. The timing is too specific. The rise is too steep. And the mechanism is documented.

When you spend hours every day comparing your authentic self to the curated highlight reels of hundreds of other people, your brain takes a hit. When the algorithm is designed to show you content that makes you feel bad (because sad engagement is engagement), that's another hit. When notification systems interrupt your sleep and dopamine-hack your reward circuits, that's another hit.

Put all these hits together, apply them to teenagers whose brains are still developing emotional regulation, and you get a mental health crisis. Which we have.

Self-Harm and Eating Disorders

There's a documented link between Instagram use and eating disorder diagnosis, particularly in teenage girls. This makes sense when you understand what Instagram actually is: a platform specifically designed to maximize time spent, showing algorithmically selected content designed to trigger emotional responses, in an environment where teenage girls compare their bodies to other teenage girls.

Meta's own research found this. They documented it. They knew that content related to body image issues, diet culture, and appearance-based comparison was being algorithmically amplified because it drove engagement. They did nothing.

The eating disorder connection isn't hypothetical. It's documented in clinical research. And it's preventable if Meta chose to prevent it. They just won't.

Sleep Disruption and Its Cascading Effects

Notifications from social media disrupt sleep patterns, particularly in teenagers who keep phones in their bedrooms. Poor sleep cascades into every other health problem: reduced academic performance, weakened immune function, increased depression and anxiety, and impaired emotional regulation.

Meta and YouTube could limit notifications at night. They could disable notifications during homework hours. They could build in sleep protections. They don't. Because engagement metrics don't care about sleep cycles. The algorithm doesn't rest at 10 PM.

So teenagers lose sleep. And that's a feature, not a bug, from the platform's perspective. Because sleep-deprived brains are easier to hook, easier to manipulate, and they stay on the platform longer. The platforms literally profit from your kid's insomnia.
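The sleep protections mentioned above are not technically exotic. As a rough sketch, a quiet-hours rule could look like the following. The function names, the 22:00 to 07:00 window, and the policy itself are assumptions made for illustration; nothing here describes how any real platform schedules notifications.

```python
from datetime import time


def in_quiet_hours(now, start=time(22, 0), end=time(7, 0)):
    """Return True if `now` falls inside the quiet window.
    Handles windows that wrap past midnight (e.g. 22:00 to 07:00)."""
    if start <= end:
        return start <= now < end
    return now >= start or now < end


def should_deliver(now, is_minor):
    """Hypothetical policy: hold notifications for minors during quiet hours."""
    return not (is_minor and in_quiet_hours(now))


print(should_deliver(time(23, 30), is_minor=True))   # late night, minor
print(should_deliver(time(16, 0), is_minor=True))    # afternoon, minor
print(should_deliver(time(23, 30), is_minor=False))  # late night, adult
```

The only subtle part is the window that crosses midnight, which is why the check splits into two cases. The point of the sketch is that holding a notification until morning is a small rule, not a redesign.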

The Business Model That Requires Addiction

Here's what's critical to understand: These platforms can't be fixed without fundamentally changing how they make money. And that's the real point of this lawsuit.

Meta and YouTube don't sell products to users. Users are the product. Advertisers are the customers. Advertisers pay based on how much time users spend and how engaged they are. So the entire business model is built on maximizing engagement.

When engagement is your only metric, when that's what determines the company's valuation and the CEO's bonus and the investors' returns, then every feature optimizes for engagement. Every algorithm maximizes for engagement. Every notification exists to drive engagement.

You can't build an ethical social media company on top of an advertising-based business model that requires maximum engagement. The two are incompatible. It's like asking a cigarette company to make healthy cigarettes. The business model doesn't permit it.

The Attention Economy

This is called the attention economy. Your attention is the scarce resource. Advertisers are competing for it. Social media platforms are competing to capture as much of it as possible. The winner is whoever can hook users the longest.

In this economy, addiction isn't a side effect. It's the business model. It's the product. It's the entire value proposition. Social media companies are literally selling your attention to advertisers, and they need you addicted to maximize that value.

The lawsuit is essentially arguing that this business model is illegal when applied to children. That you can't ethically sell a child's attention if doing so requires manipulating their developing brains. That there's a line between persuasion and exploitation, and addiction engineering is definitely over that line.

Alternative Business Models Don't Compete

You could build a social media platform that respects your privacy, doesn't manipulate you with algorithmic feeds, and doesn't send constant notifications. You know who would use it? Almost nobody. Because all the cool people are already on Instagram. All the news is on TikTok. Your friends are on Facebook.

Once a platform reaches critical mass, you can't beat it with a "healthier" version. Network effects are too strong. So everyone's stuck using platforms they know are manipulative, because the alternative is social isolation.

This is why the lawsuit matters. This is why regulation matters. Because the market won't fix this. Individual users can't fix this. Only legal consequences can change a business model that's this profitable.

Infinite scroll and notifications are among the most effective strategies in increasing user engagement, with notifications having the highest impact. Estimated data based on typical user behavior.

What the Lawsuit Actually Seeks: Potential Outcomes

People assume this lawsuit is asking for money. That Meta and YouTube will pay a settlement and everything goes back to normal. That's not actually what's being asked for. Money is secondary. Structural change is the goal.

Forced Platform Redesigns

The lawsuit could compel Meta and YouTube to actually change their platforms. Remove infinite scroll. Stop algorithmic feeds that are designed to maximize engagement rather than show chronological content. Implement sleep timers. Disable notifications during certain hours. Stop collecting behavioral data on minors.

These aren't suggestions. These are structural changes that would fundamentally alter how the platforms work. Because the current design is the addiction design. You can't keep the current design and fix the addiction. You'd have to rebuild the entire system.

Disclosure Requirements

Another potential outcome: forcing Meta and YouTube to disclose exactly how their algorithms work, what data they're collecting, and how they're using that data. Right now, it's all proprietary black boxes. Companies claim it's trade secrets. The lawsuit could force transparency.

If users and parents actually knew exactly how much data was being collected and exactly how that data was being used to manipulate behavior, the public pressure would be enormous. Which is why companies fight so hard to keep this hidden.

Restrictions on Targeting Minors

The strongest potential outcome: Meta and YouTube would be prohibited from using behavioral data to target advertising at minors. They'd be prohibited from using addiction mechanisms on anyone under 18. They'd be prohibited from collecting the psychological profiles they use to manipulate users.

This would destroy their ability to profit from children. Which is probably why they're fighting these lawsuits so hard.

Financial Damages

There's also the money part. Damages could be substantial. Some estimates suggest billions of dollars. That's meaningful not because money fixes anything, but because it creates incentive for other companies to not do what Meta did.

If the precedent is set that you can be sued for damages when you intentionally engineer addiction in children, other tech companies will think twice. The cost of continuing might exceed the benefit. That's how regulation works when courts impose it rather than legislatures.

Historical Parallels: The Tobacco Precedent

This lawsuit doesn't exist in a vacuum. It's modeled on the successful litigation against tobacco companies, and that precedent is powerful.

Tobacco companies spent decades fighting claims that smoking was addictive and harmful. They had internal research proving harm. They suppressed it. They had executives testify under oath that nicotine wasn't addictive when they knew it was. They targeted vulnerable populations.

Eventually, the evidence became irrefutable. The lawsuits multiplied. The discovery process exposed the internal emails and research. The public narrative shifted. And ultimately, the tobacco industry lost. They paid massive settlements. They agreed to major restrictions on advertising. They agreed to disclosure requirements.

The social media lawsuits are using the exact same playbook. The evidence of intentional harm is even stronger than it was for tobacco. The documentation is more explicit. The admissions from executives are more direct. The connection between design choice and harm is clearer.

If the tobacco precedent holds, Meta and YouTube are in serious trouble. And unlike tobacco, they can't really argue that the science is uncertain. We know social media is addictive. We know it harms teenagers. We know the companies designed it that way. The only question is whether courts will enforce the legal consequences.

The Companies' Defense Strategy

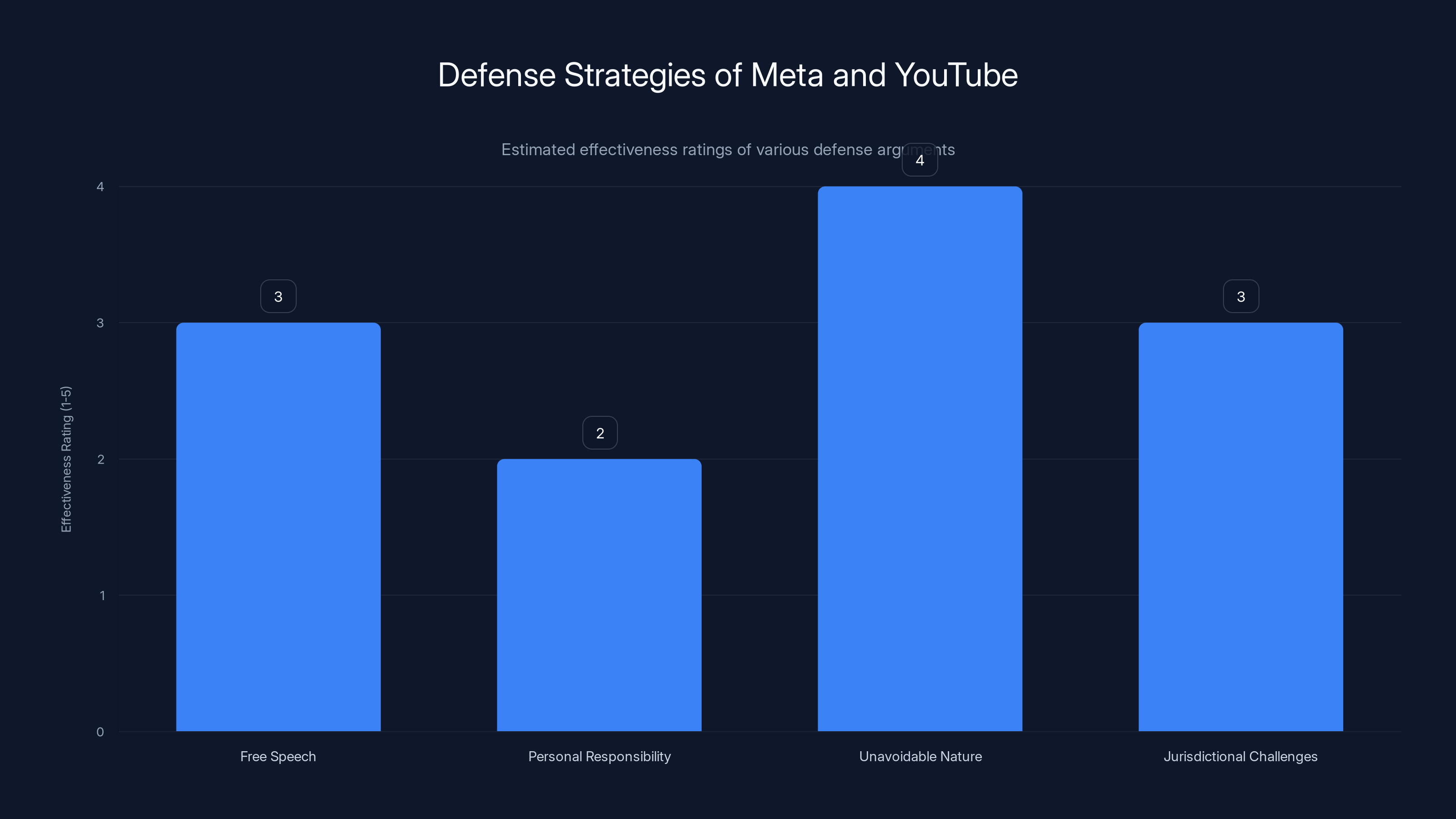

Meta and YouTube aren't just accepting these lawsuits. They're fighting back hard. Understanding their defense strategy shows how weak their position actually is.

Free Speech Arguments

One defense is free speech. The companies argue they're just exercising editorial discretion, deciding what content to show users. That's protected speech. Restricting how they operate is unconstitutional.

But that argument falls apart pretty quickly. Free speech doesn't protect you from lawsuits for intentional harm. You can speak, but you can't use your speech to deliberately manipulate vulnerable people for profit. At least, that's the argument the plaintiffs make.

Personal Responsibility Arguments

Another defense is personal responsibility. Users choose to be on the platform. Parents choose to let their kids use it. Nobody's forcing anyone. If people get addicted, that's their problem, not the platform's.

But this ignores the documented intentional manipulation. This ignores the research showing the platforms are designed to be addictive. This ignores that teenagers have not yet developed the impulse control to resist these manipulations. Personal responsibility doesn't work when you're fighting an opponent whose entire business model is subverting personal responsibility.

The Unavoidable Nature Argument

Some of the defense strategy argues that all social media is inherently habit-forming. That's just the nature of the product. Users like it. It's not malicious to build features people like.

But the lawsuit isn't arguing that features people like are wrong. It's arguing that deploying features specifically designed to be addictive, with knowledge of harm to minors, is wrong. There's a difference between being popular and being engineered to be addictive. Between engagement and exploitation.

Jurisdictional Challenges

Meta and YouTube are also fighting on procedural grounds, trying to get cases dismissed based on jurisdiction arguments, arguing certain states don't have standing to sue, claiming that federal law preempts state law on these issues.

These procedural defenses rarely win in the long run. They delay the process. They increase legal costs. They're a strategy designed to make lawsuits so expensive that plaintiffs give up. But they don't change the underlying facts or the strength of the case.

Internal research by Meta revealed that Instagram exacerbates body image issues for 32% of teen girls, with significant impacts on eating disorders, depression, and anxiety. Estimated data for eating disorders, depression, and anxiety.

The Role of Algorithm Design in the Case

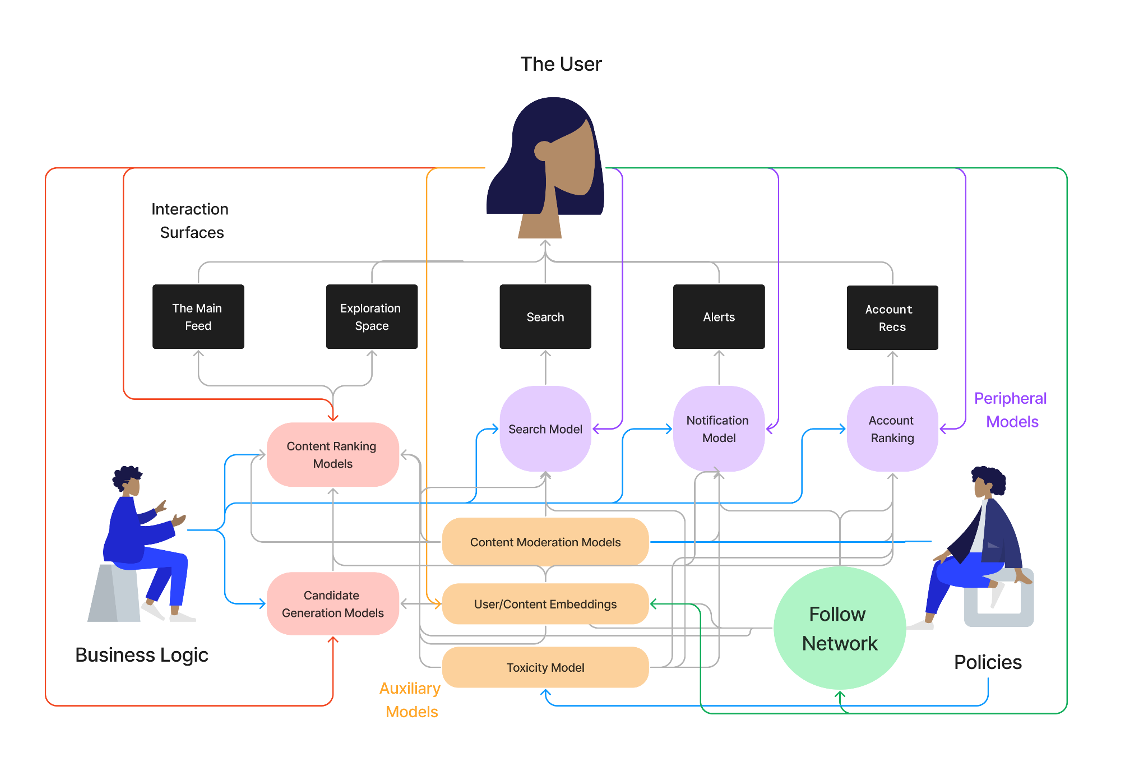

Algorithms are the invisible hand controlling what content you see. And that's where the real manipulation happens. The lawsuit puts algorithmic design front and center.

Maximizing Engagement Over Everything

Meta and YouTube's algorithms are fundamentally designed to maximize time on platform and engagement metrics. This isn't incidental. This is the core function. Every element of the algorithm is optimized for this single metric.

But here's what that means in practice: The algorithm doesn't care if the content is true. It doesn't care if it's healthy. It doesn't care if it's promoting eating disorders or conspiracy theories or self-harm. If that content keeps people scrolling, the algorithm will amplify it.

This creates a feedback loop where the most extreme, the most outrageous, and the most psychologically triggering content gets amplified most. The algorithm is literally training you to become more extreme, more addicted, more engaged.

Filter Bubbles and Radicalization

The algorithm also creates what researchers call filter bubbles. You see more of what you've already engaged with. This creates echo chambers where your existing beliefs are reinforced and alternative viewpoints are filtered out.

For teenagers, this is particularly dangerous. It means if a teen develops a fixed idea (about their body, their identity, a political position), the algorithm will reinforce it, amplify it, and show them increasingly extreme versions of it. The algorithm literally radicalizes you in whatever direction it's been set.

Meta and YouTube know this happens. Their research documents it. They don't fix it because fixated users are engaged users.
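The filter bubble feedback loop described above can be sketched as a toy simulation. Everything in it is an assumption made for illustration: the topic list, the interest probabilities, the `boost` factor, and the update rule are invented and do not come from any platform's recommender. The sketch shows how "show more of what was engaged with" compounds over time.

```python
import random


def simulate_feed(rounds=500, boost=0.2, seed=1):
    """Toy loop: recommend a topic in proportion to its weight, then raise the
    weight of whatever was shown and engaged with. All numbers are illustrative."""
    rng = random.Random(seed)
    topics = ["sports", "music", "dieting", "politics"]
    weights = {t: 1.0 for t in topics}   # the feed starts with no preference
    # Hypothetical chance that the user engages when shown each topic.
    interest = {"sports": 0.3, "music": 0.3, "dieting": 0.5, "politics": 0.3}
    for _ in range(rounds):
        shown = rng.choices(topics, weights=[weights[t] for t in topics])[0]
        if rng.random() < interest[shown]:   # the user engaged with the post
            weights[shown] *= 1 + boost      # so the feed shows more of the same
    total = sum(weights.values())
    return {t: weights[t] / total for t in topics}


shares = simulate_feed()
for topic, share in sorted(shares.items(), key=lambda kv: kv[1], reverse=True):
    print(f"{topic:10s} {share:.2%}")
```

The feed starts with four equally weighted topics. Because each engagement makes the same topic more likely to be shown again, small early differences compound, and the feed tends to collapse toward a single topic. No step in the loop asks whether that topic is good for the person watching.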

The Recommendation Engine's Hidden Nudges

When you open YouTube, you're not seeing all videos equally. You're seeing the videos that YouTube's algorithm thinks will keep you watching longest. Some of those recommendations are based on your history, but others are pure algorithmic judgment about what will hook you.

YouTube's algorithm has been documented pushing teenagers toward increasingly extreme content. If you watch one conspiracy theory video, the algorithm serves you more conspiracy theory videos, progressively more extreme versions. It's not trying to educate you or entertain you. It's trying to keep you watching. And extremism works.

The lawsuit argues this is intentional design. That YouTube chose to make their algorithm this way. That they could change it to recommend healthier content. They just won't because the current algorithm is more profitable.

Regulatory Landscape: Where Regulations Stand Now

The lawsuits exist in parallel with regulatory efforts. Some countries are already implementing restrictions. Some states are pushing bills. The landscape is complex and still forming.

European Union Digital Services Act

The EU has already implemented regulations that restrict how social media platforms can operate. The Digital Services Act requires transparency in algorithmic decisions, limits on addictive design patterns, and strict rules about collecting data on minors.

Meta and YouTube are already complying with these rules in Europe. And interestingly, it hasn't destroyed their business. The platforms still work. Users still use them. The engagement is still high. Which proves that designing these platforms without addictive mechanisms is actually possible.

The fact that Meta can operate in Europe under stricter rules proves that their argument about not being able to change is false. They're choosing not to change for U.S. markets because the current design is more profitable.

U.S. State-Level Efforts

Several U.S. states are pushing their own bills. Utah passed a social media law limiting features specifically designed to be addictive. Montana is working on teen privacy protections. California is considering restrictions on algorithmic feeds for minors.

These state-level efforts often get challenged on constitutional grounds. But the fact that they're being pursued shows that the political will for regulation exists. If the courts agree that the lawsuits have merit, state regulations will follow even faster.

Congressional Dysfunction

Congress has been debating social media regulation for years with little result. The issue is complex, the lobbying is intense, and there's disagreement about what regulations should look like. So nothing passes.

But court decisions don't require Congressional agreement. If courts rule against Meta and YouTube, regulations will be implemented regardless of what Congress does. That's actually more powerful than waiting for legislation.

The Global Implications: How Other Countries Are Responding

This isn't just happening in the U.S. Multiple countries are responding to social media's impact on young people, creating global pressure.

Australia's Approach

Australia has proposed laws that would hold social media companies responsible for content on their platforms and for the psychological impacts of their platforms. The laws are aggressive and include substantial fines.

Australia has also passed a law banning social media accounts for anyone under 16. Not restricting it. Banning it. This is the toughest regulatory stance in the world.

Meta has pushed back hard against Australian regulation before. In 2021, during a separate dispute over a law requiring platforms to pay news publishers, Facebook briefly blocked news content for Australian users. That tells you how seriously Meta takes these threats.

Asian Markets and Government Intervention

China has already imposed strict regulations on social media companies, limiting features designed to be addictive and restricting screen time for minors. South Korea and Japan are following similar paths.

These countries don't have the same free speech concerns as the U.S., so they're willing to implement stricter rules. This creates competitive pressure. If social media works fine under strict rules in Asia and Europe, U.S. companies can't claim it's impossible.

United Kingdom and Canada

Both countries have pursued "online safety" laws that impose duties of care on social media platforms. The UK passed its Online Safety Act in 2023, and Canada has considered similar legislation. These laws require companies to actively protect user wellbeing, not just avoid obvious harm.

This is a different standard than in the U.S. A duty of care standard means you have an affirmative obligation to make your product safer. You can't just claim you didn't intend harm. You have to prove you actively worked to minimize harm.

Estimated data shows that the 'Unavoidable Nature' argument is perceived as the most effective defense strategy, while 'Personal Responsibility' is seen as less convincing.

What Losing This Lawsuit Would Mean for Meta and YouTube

Let's be clear about what's actually at stake. If Meta and YouTube lose these lawsuits, the implications are massive.

Immediate Financial Impact

Damages could reach into the billions. Some estimates put them higher than any settlement Meta has paid previously. That's not just money. That's investor panic. That's stock price collapse. That's shareholders demanding CEO heads.

Meta's business model works at enormous scale. If courts rule that the current model is illegal and they're forced to change it, there's uncertainty about whether it can continue to be profitable. That uncertainty alone could tank the stock.

Forced Business Model Changes

More important than the money is the forced change. If courts prohibit certain design features, if they mandate transparency in algorithms, if they restrict targeting of minors, Meta and YouTube would have to fundamentally rebuild their platforms.

Infinite scroll would be gone. Algorithmic feeds designed for maximum engagement would be gone. Behavioral tracking of minors would be gone. Notifications designed to pull you back in would be gone.

You could argue the platforms would still work. You could argue they'd still be popular. But the current profitability depends on all of these features. Change them, and profit margins change dramatically.

Precedent for Other Tech Companies

But the real threat is precedent. If Meta and YouTube lose, then every tech company is vulnerable to similar lawsuits. Apple. TikTok. Snap. Amazon. Microsoft. The rest of Google beyond YouTube. All the companies that have built engagement-maximizing features.

The precedent sets the standard. It says that intentionally engineering addiction, especially in minors, is legally actionable. That companies can be sued. That they can lose. That the cost is real.

Once that precedent exists, every other tech company has to assume they could face similar lawsuits. That changes their incentive structure. That changes what features they build.

The Defense of Teen Rights vs. Corporate Profits

At the core of this lawsuit is a fundamental question: Do corporations have the right to deliberately harm children for profit, or do children have the right to protection from that harm?

Meta and YouTube would frame it differently. They'd say it's about free speech. About innovation. About the internet. But that's not what it's really about. It's about whether a child's developing brain has legal protection from deliberate psychological manipulation.

Balancing Rights

Corporate rights are real. Free speech is important. Business innovation matters. But these rights have limits. You can't use your right to free speech to deliberately deceive vulnerable people. You can't use innovation as an excuse for harm. You can't claim corporate rights protect you when you've intentionally caused injury.

The lawsuit argues that children's rights to protection from intentional harm override corporate rights to profit from addiction. Most reasonable people would agree.

The Accountability Gap

Right now, there's an accountability gap. Social media companies can harm children. They can do it intentionally. And there's basically no legal consequence. They get investigated. They get criticized. They get bad press. But the business model doesn't change. The profit keeps flowing.

This lawsuit is trying to close that gap. To make companies accountable for the harm they cause. That's not radical. That's how capitalism is supposed to work. Cause harm, face consequences, change behavior.

Public Health vs. Commerce

There's also a public health argument. When something becomes a widespread threat to public health, the government can regulate it. That's why we regulate tobacco. Why we regulate pharmaceuticals. Why we regulate food. These products can be dangerous, and they're profitable, so without rules companies would keep selling them despite the danger.

Social media is in the same category now. It's a widespread threat to adolescent mental health. The evidence is overwhelming. The harm is documented. The intent, plaintiffs argue, is documented too. At some point, it stops being commerce and starts being a public health crisis that needs to be regulated.

The Impact on Younger Users and Parental Control

The lawsuit also raises questions about how to protect younger users who are on platforms they're not supposed to be on anyway.

Below-Age Users and Enforcement

Instagram's terms of service require users to be 13 or older. But many users are younger. Enforcement is weak. Age verification is basically nonexistent. So 8-year-olds are on Instagram being exposed to the same addictive mechanisms that are harming 16-year-olds.

The lawsuit could force better age verification and enforcement. Meta could be required to actually confirm that users are the age they claim. That alone could remove millions of underage users from its platforms.

Parental Notification and Control

Another potential outcome: Meta and YouTube could be forced to give parents actual control and transparency. Parents could see what their kids are doing. Parents could set limits on app usage. Parents could disable addictive features.

Meta and YouTube actually offer some of these things now, but they're buried in settings. They're not the default. The default is unlimited access with addictive features enabled. The lawsuit could flip that. Addictive features could be off unless a user opts in, with parental controls switched on from the start.

Digital Literacy and Consent

There's also an argument about informed consent. Young people can't meaningfully consent to using a service if they don't understand how it works. If they don't know their data is being collected. If they don't know the algorithm is manipulating them. If they don't know the app is designed to be addictive.

Meta and YouTube could be required to explain, in clear language, exactly how their platforms work and how they're designed to influence behavior. Once young people actually understand, they might choose differently. Or parents might choose differently for them.

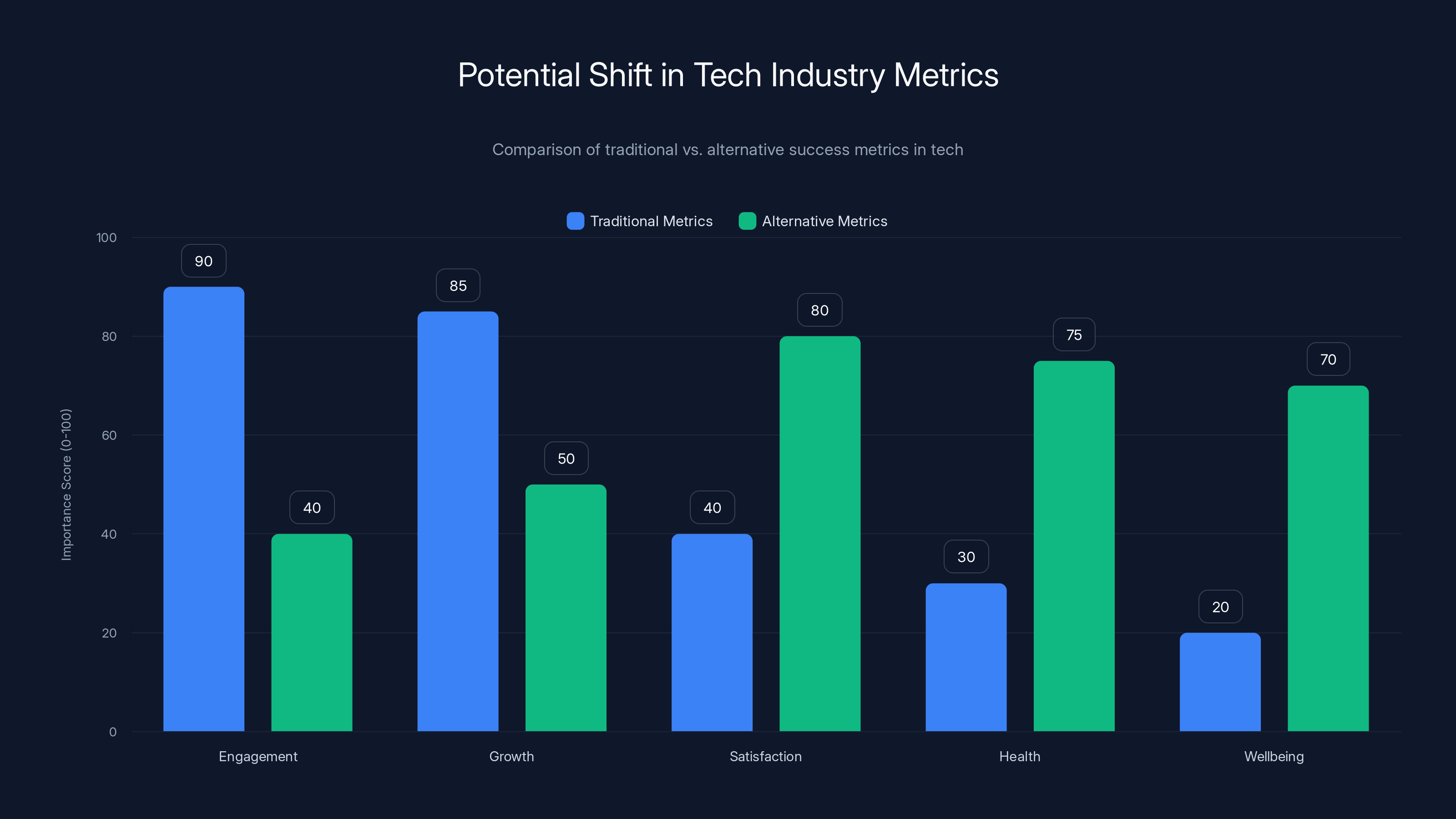

Estimated data shows a potential shift from traditional metrics like engagement and growth towards alternative metrics such as satisfaction, health, and wellbeing, if the tech industry changes its focus.

The Tech Industry's Broader Implications

This lawsuit doesn't just affect Meta and YouTube. It has implications for the entire tech industry and how companies think about engagement and user behavior.

The End of "Engagement-at-All-Costs"

If these lawsuits succeed, the era of "engagement-at-all-costs" will be over. Companies will have to think about user wellbeing, not just engagement metrics. They'll have to ask whether a feature is addictive before they implement it. They'll have to consider liability.

This might sound good in theory. But it's a massive shift in how tech companies operate. Almost every major tech product has been optimized for engagement and growth. Changing that changes everything.

Alternative Metrics and Incentives

Companies might start measuring success differently. Instead of daily active users and time on platform, they might measure satisfaction. Health. Wellbeing. These metrics pull in different directions. You can have high satisfaction and low engagement. Or high engagement and low satisfaction.

If the incentive structure changes, the products change. That's actually how you fix this. Not by adding features on top, but by changing the underlying metric that everything optimizes for.

Open Source and Independent Alternatives

The lawsuit might also accelerate development of alternative platforms. If traditional social media is going to be regulated heavily, space opens up for new approaches. Decentralized platforms. Privacy-first platforms. Algorithmic transparency. User control.

These alternatives exist now, but they struggle to compete with network effects. A court victory against Meta and YouTube could level the playing field. Could make alternative platforms more appealing. Could actually give users real choice.

Timeline: Where the Cases Stand Now and What's Coming

Multiple lawsuits are moving through courts simultaneously. Understanding the timeline helps you see how close we actually are to major rulings.

Current Cases in Motion

There are state-level lawsuits (California, Texas, Florida, and others have filed), federal class actions, and lawsuits from parents and teen advocacy groups. Some of these cases are further along than others.

A few cases have survived motions to dismiss, which is significant. It means a court found the claims legally sufficient to proceed, assuming the alleged facts are true. That's not a ruling on the merits, but it's a major hurdle. If courts thought the cases were frivolous, they would've been dismissed already. The fact that they're moving forward means judges think the plaintiffs have a real argument.

Appeals Process

Even when cases go against Meta and YouTube, they'll appeal. Appeals can take years. The appeals process is where most of these cases will probably be decided, because that's where the law gets clarified and precedent gets set.

If Meta and YouTube lose at trial but appeal, we're looking at potentially years of legal process. But each loss strengthens subsequent cases. Each appeal decision creates precedent. By 2026 or 2027, we'll probably know which way this is heading. Final resolution might take a decade, but the trajectory will be clear much sooner.

Potential Settlement

Meta and YouTube might also just settle. Pay damages. Agree to changes. Avoid the risk of court losses. This is essentially what happened with tobacco, which ended in the 1998 Master Settlement Agreement. The cases dragged on until the threat of trial became so severe that settlement made sense.

If settlements happen, they'll likely include significant design changes. Not just money, but actual changes to how the platforms work. That's more valuable than the money anyway.

Global Coordination

We're also seeing coordination between jurisdictions. What happens in California influences Europe. What happens in Australia influences the UK. What happens in courts influences regulation. It's all connected. A loss in California could accelerate regulations globally.

What Parents and Teenagers Should Know

If you're reading this as a parent or a teenager, there are practical implications right now.

Immediate Protective Steps

You don't need to wait for court decisions. You can take action now. Use screen time limits. Disable notifications. Remove social media apps from your phone and use the mobile web version instead, which tends to be less sticky. Check your privacy settings.

More importantly, talk about what's happening. Explain that these apps are designed to be addictive. That's not you being paranoid. That's you being informed. Teenagers can understand these concepts if you explain them clearly.

Recognizing Addictive Patterns

Know the signs: being unable to go a few minutes without checking. Anxiety when separated from the phone. Sleep disruption. Mood changes based on social media interactions. Obsessively comparing your appearance to others. Using social media to escape problems.

These are addiction signs. They're not character flaws. They're exactly what the platforms are designed to create. Recognizing that helps reduce shame while increasing awareness.

Supporting Regulation

Support politicians who are willing to regulate social media. Support organizations pushing for teen privacy protections. Vote with your behavior by leaving platforms that are too addictive. These companies respond to pressure.

Meta's response to regulation threats has been resistance. But public pressure changes that. As more people leave, as more parents speak up, as more pressure mounts, the business case for change becomes clearer.

Conclusion: The Pivotal Moment for Tech

This lawsuit might be the most important legal battle in tech right now. It's not about privacy or free speech or whether companies should be regulated. It's about whether companies can deliberately harm children for profit, or whether there are legal consequences for that behavior.

The evidence plaintiffs have assembled is substantial. They allege that Meta and YouTube engineered addiction. That the companies knew it was harmful. That they knew it targeted vulnerable populations. That they did it anyway. Until a court rules, those remain allegations, but they rest on internal documents, not speculation.

The real question is whether courts will accept that evidence and impose legal consequences. If they do, the implications ripple across the entire tech industry. If they don't, it sets a precedent that corporations can cause harm to children as long as the profit is high enough.

For the platforms, this is existential. Not because they'll disappear if they lose, but because they'd have to fundamentally change how they operate. Infinite scroll would be gone. Algorithmic amplification would be gone. Behavioral manipulation would be gone. They'd still be social media platforms, but they wouldn't be the profit machines they are now.

For society, this is about establishing a basic principle: that human wellbeing comes before corporate profit. Especially when the human is a child whose brain is still developing.

For teenagers and parents, it's about understanding that the phone in your hand was designed by very smart people to be as addictive as possible. That's not a feature. That's a business model. And it's increasingly likely that business model is going to face legal consequences.

The lawsuits are moving. The evidence is strong. The precedent from tobacco regulation shows how this can end. We're approaching a pivotal moment where the court system might finally impose consequences that the market never would.

Whether that happens in 2025 or 2026, whether it's a court decision or a settlement, the direction is becoming clear. The era of unchecked engagement maximization is probably ending. What replaces it is still being written.

FAQ

What is the main accusation against Meta and YouTube in these lawsuits?

The primary accusation is that Meta and YouTube intentionally engineered their platforms to be addictive, specifically targeting young users' developing brains. The lawsuits cite internal research, design documentation, and executive admissions showing the companies deliberately implemented features like infinite scroll, algorithmic feeds, and notification systems designed to maximize engagement and time spent on platform, despite knowing these features cause psychological harm including depression, anxiety, and eating disorders in teenagers.

How do social media algorithms create addiction?

Social media algorithms use variable reward schedules, infinite scroll, and behavioral tracking to trigger dopamine release patterns similar to those seen in gambling addiction. They show algorithmically selected content designed to keep users scrolling indefinitely, employ notifications timed to pull users back into the app when they're most vulnerable, and use engagement metrics to amplify emotionally triggering content. For developing teenage brains lacking full impulse control, these mechanisms create compulsive usage patterns that users often describe as impossible to control.

What evidence exists that these companies knew about the harm?

Meta's internal research (disclosed in the "Facebook Papers") documented that Instagram worsens body image issues and increases depression in teenage girls: 32% of teen girls said that when they felt bad about their bodies, Instagram made them feel worse. Former executives including Sean Parker and Jamie Byrne publicly admitted the platforms were designed to exploit psychological vulnerabilities. Design documents show features were created specifically to increase daily active users and time on platform. Plaintiffs argue that this combination of internal research, executive statements, and design documentation establishes knowledge and intent.

Could social media platforms operate without addictive features?

Yes. The European Union's Digital Services Act already restricts addictive design patterns, and Meta operates profitable platforms in Europe under these stricter rules. The fact that Meta can run Instagram with limitations in Europe while maintaining engagement proves that alternatives exist. The company chooses not to implement these restrictions in the U.S. because the current addictive design is more profitable, not because it's technically necessary.

What would happen if Meta and YouTube lost these lawsuits?

Losing could result in billions in damages, forced platform redesigns (removing infinite scroll, algorithmic feeds, addictive notifications), mandatory disclosure of how algorithms work, restrictions on collecting behavioral data from minors, and prohibition of features specifically designed to maximize engagement. The broader impact would be a legal precedent making all tech companies liable for intentionally addictive design targeting minors, fundamentally changing how the industry operates.

How would these lawsuits affect teenagers using social media now?

Potential court victories could lead to design changes making platforms less addictive (default notification limits, chronological feeds, session limits), better age verification keeping younger users off inappropriate platforms, improved parental controls, and mandatory disclosure of how platforms work. More immediately, awareness of how these platforms function allows parents and teens to make more informed choices about usage and recognize addictive patterns as designed features rather than personal weaknesses.

Is there international support for regulating social media addiction?

Yes, significantly. The EU has implemented the Digital Services Act restricting addictive design. Australia has passed a law banning social media accounts for under-16 users. South Korea, Japan, and Canada are developing similar regulations. China already strictly limits addictive features. This global coordination creates pressure even in the U.S., as companies struggle to maintain different business models across jurisdictions. What's required in Europe suggests what could be required everywhere.

What's the difference between this lawsuit and previous social media regulation attempts?

Previous efforts focused on privacy, free speech, or general harm. These lawsuits specifically focus on intentional design choices to create addiction, with documented evidence of knowledge and intent. The tobacco litigation precedent shows this approach works. The lawsuits also target the fundamental business model (engagement maximization) rather than requesting add-on features, making them more threatening to company profitability.

Can I protect my teenager from social media addiction while using current platforms?

You can implement screen time limits, disable notifications, remove apps and use mobile web instead, check privacy settings, and discuss how algorithms are designed to manipulate behavior. But ultimately, fighting algorithmic addiction through willpower is like fighting Las Vegas odds through discipline. Structural change (from regulation or court decisions) is more effective than individual protection because addiction is intentionally engineered to overcome personal control.

What could replace current social media if these lawsuits succeed?

Alternatives include decentralized platforms where users control their data, algorithm-transparent platforms where users understand how content is selected, privacy-first platforms that don't track behavior for manipulation, and platforms using different metrics (user satisfaction rather than engagement maximization). These exist now but struggle against network effects. A major court victory against Meta and YouTube could level the playing field by making traditional social media more expensive to operate through regulation and damages.

Key Takeaways

- The lawsuits allege that Meta and YouTube intentionally engineered addictive features with documented knowledge of harm to teenagers

- Internal research, design documents, and executive admissions provide direct evidence of intent

- The lawsuit uses successful tobacco litigation precedent to establish legal liability

- Losing could force platform redesigns, financial damages, and restrictions on targeting minors

- EU regulations and global coordination show alternatives exist and are viable

- Parents and teens can take immediate protective steps while awaiting legal outcomes

- A court victory would set precedent affecting the entire tech industry's approach to engagement

- The fundamental business model (engagement maximization) is what's actually on trial

