How Microsoft Teams Is Fighting Brand Spoofing and Social Engineering Attacks

Your phone rings. The caller ID shows your bank's name. You pick up, and within seconds, you're being asked for sensitive information. Sound familiar? This scenario happens thousands of times daily, and it's called brand spoofing. Scammers have gotten terrifyingly good at impersonating legitimate organizations, and they're targeting workplace communication tools with increasing precision.

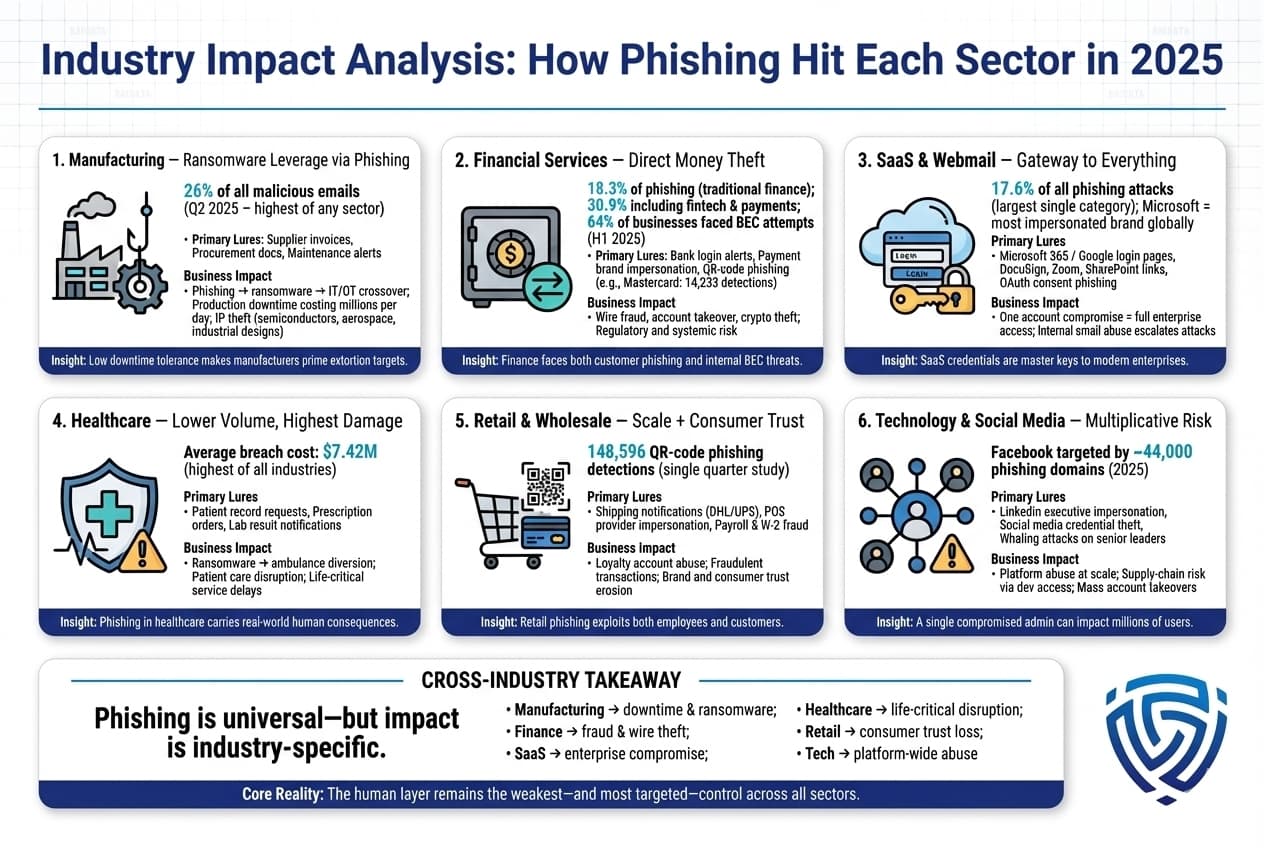

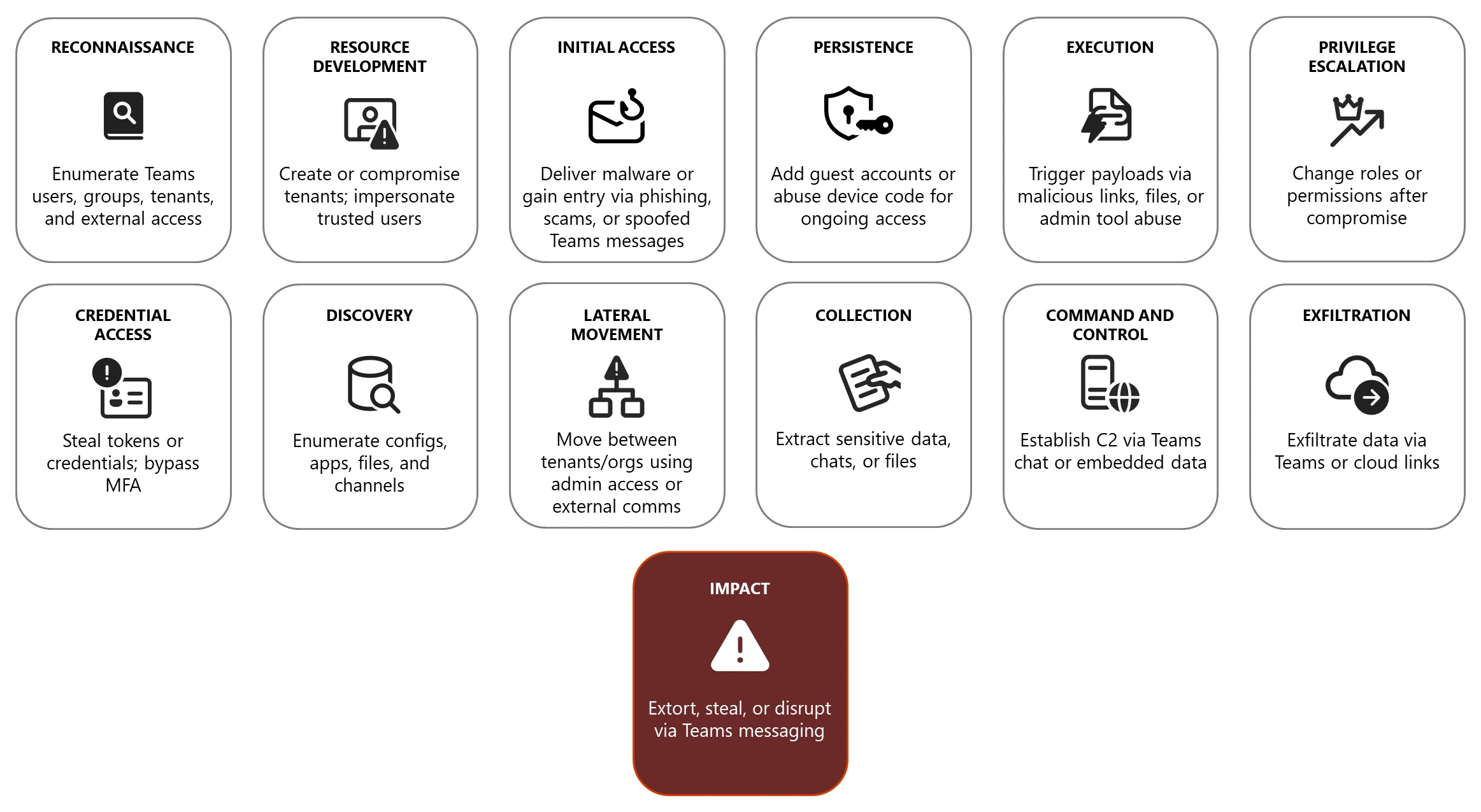

Microsoft Teams, the collaboration platform used by millions of enterprises worldwide, has become a prime target for these attacks. Why? Because it's where business happens. It's where deals get finalized, where access credentials get shared, and where urgent requests from "managers" get rubber-stamped without proper verification. The stakes couldn't be higher, which is why Microsoft is taking aggressive action.

The company just announced Brand Impersonation Protection, a new call-scanning feature coming to Microsoft Teams that will automatically detect when external callers are trying to pose as trusted organizations. This isn't just another security patch—it's a fundamental shift in how Teams handles the human element of cybersecurity, addressing one of the most devastating attack vectors out there: social engineering.

If you're managing an enterprise using Microsoft Teams, or if you're simply someone who takes security seriously, you need to understand what's coming, how it works, and what it means for your organization. This guide breaks down everything you need to know about this feature, the threat landscape it addresses, and how to prepare your team for its rollout.

TL;DR

- Brand Impersonation Protection is a new Microsoft Teams security feature detecting external callers impersonating known phishing targets

- General availability launching February 2026 on desktop and Mac versions first, with broader rollout following

- How it works: Scans first-time external callers for impersonation indicators and alerts users to accept, block, or end suspicious calls

- Part of larger strategy: Microsoft has deployed Malicious URL Protection (September 2025) and Weaponizable File Type Protection (September 2025)

- Future improvements planned: Suspicious call reporting feature arriving around March 2026 will let users report attempted fraud directly

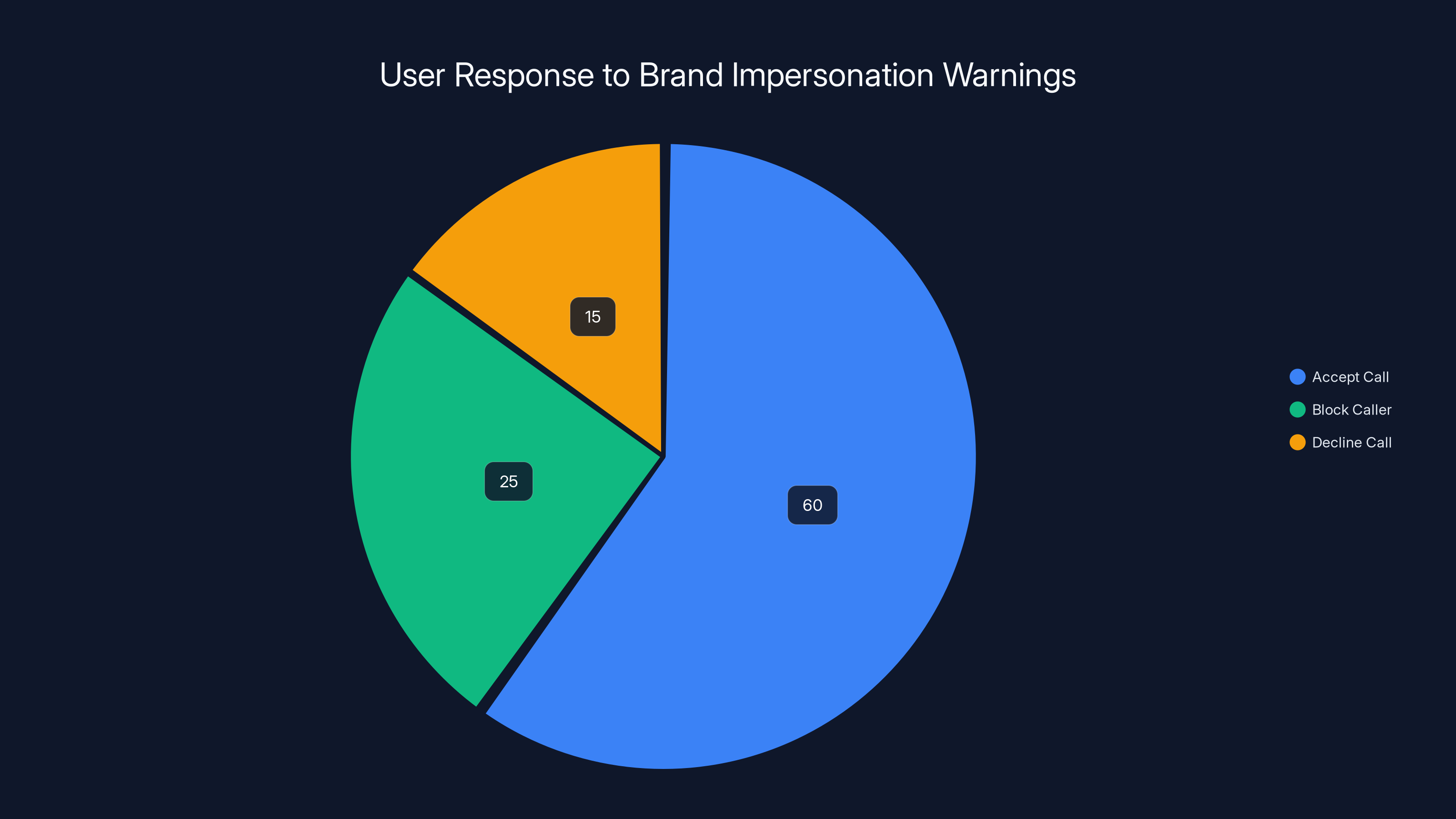

Estimated data suggests that most users (60%) choose to accept calls despite warnings, while 25% block the caller and 15% decline the call.

Understanding Brand Spoofing and Why It's a Massive Problem

Brand spoofing sounds straightforward in theory: a scammer pretends to be someone they're not. In practice, it's devastatingly effective because it exploits the one security layer most organizations struggle with: human judgment.

Consider the mechanics. A scammer gains your phone number—possibly from LinkedIn, company websites, or data breaches. They use VoIP software to set up a caller ID that displays your bank's name, your vendor's company name, or even an internal department. When you answer, everything feels legitimate because the psychological trigger is already there. The caller ID said "Chase Bank Security" or "Microsoft Billing," so your brain is already in a trust mode. The attacker then uses social engineering tactics: creating urgency, requesting verification information, or asking for access to systems.

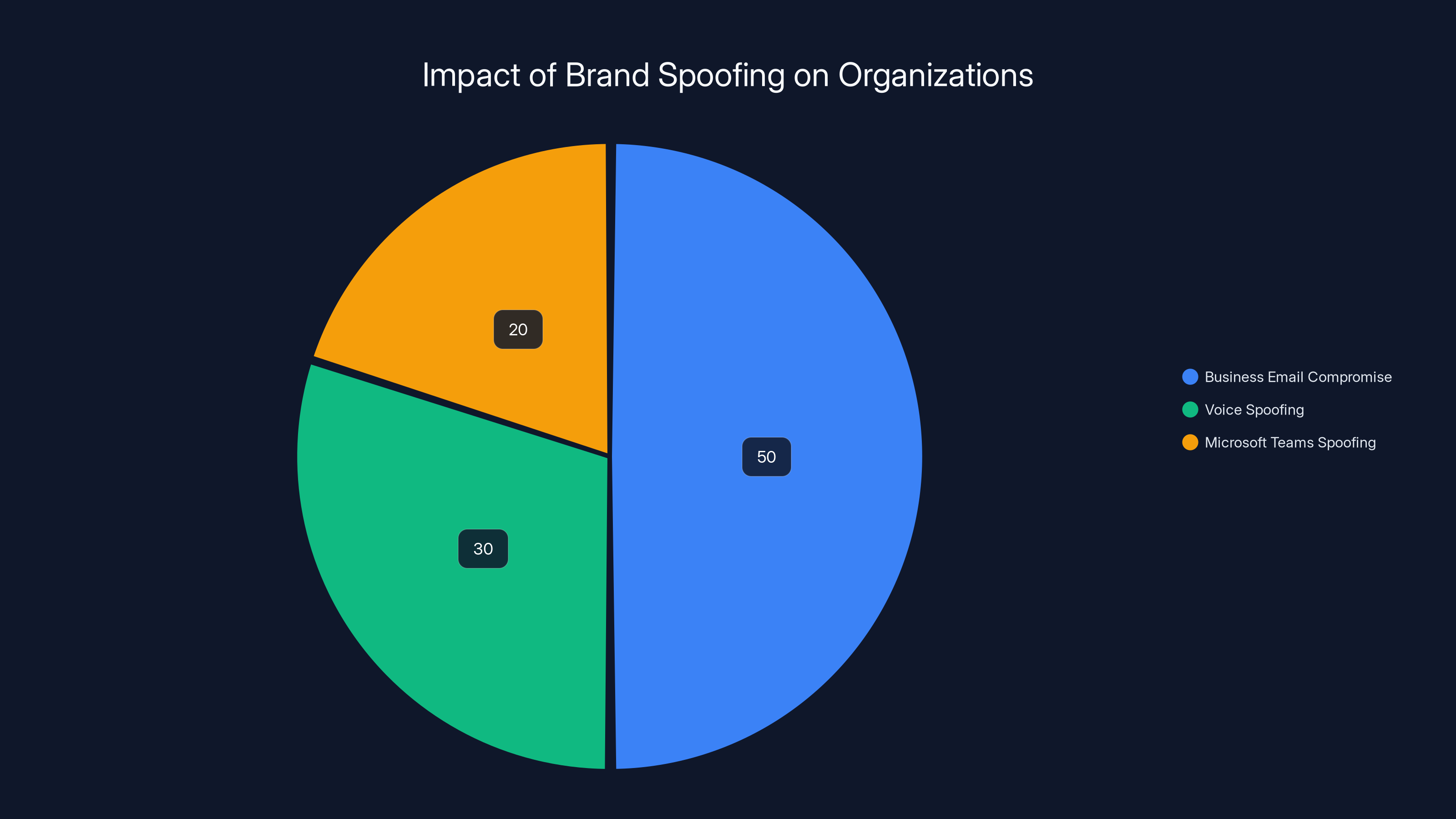

The statistics are sobering. According to industry research, business email compromise (BEC) attacks and voice spoofing scams cost organizations billions annually. The average loss per incident ranges from thousands to millions of dollars, depending on the organization's size and what the attackers gained access to.

Microsoft Teams became a target specifically because it combines several vulnerabilities. First, Teams integrates deeply into enterprise environments, handling everything from casual chats to business-critical communications. Second, many Teams users work remotely, so they might not have the immediate ability to verify callers through other channels. Third, Teams notifications can feel routine and trustworthy—people accept incoming calls with less scrutiny than they would on their personal phones.

The scammers learned this. Over the past few years, we've seen documented cases where attackers spoofed Teams calls from executives requesting urgent wire transfers, from IT support requesting credentials, and from vendors requesting updated payment information. Some attacks succeeded because the victims genuinely believed they were communicating with who they thought they were.

This is why Microsoft's new approach matters. You can't train people to never trust their tools. You can't tell employees to disbelieve their caller ID. What you can do is make the tools themselves smarter about detecting when something's wrong.

What Is Brand Impersonation Protection Exactly?

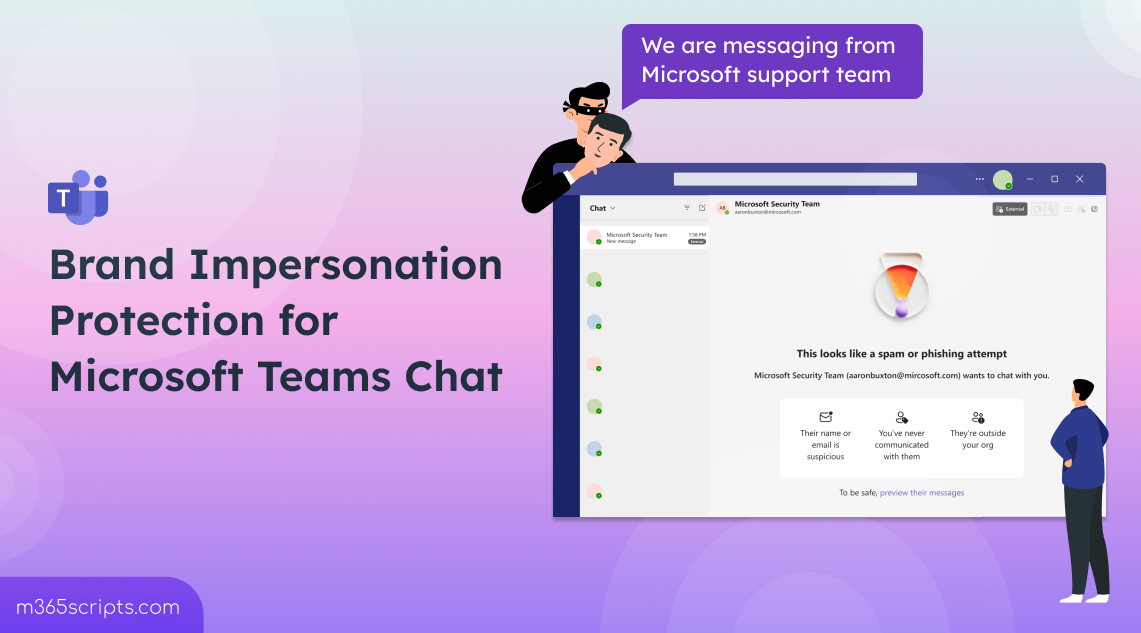

Brand Impersonation Protection is fundamentally a detection and alerting system. It works during the initial handshake of external calls—that crucial first moment when a call from outside your organization connects with an internal user.

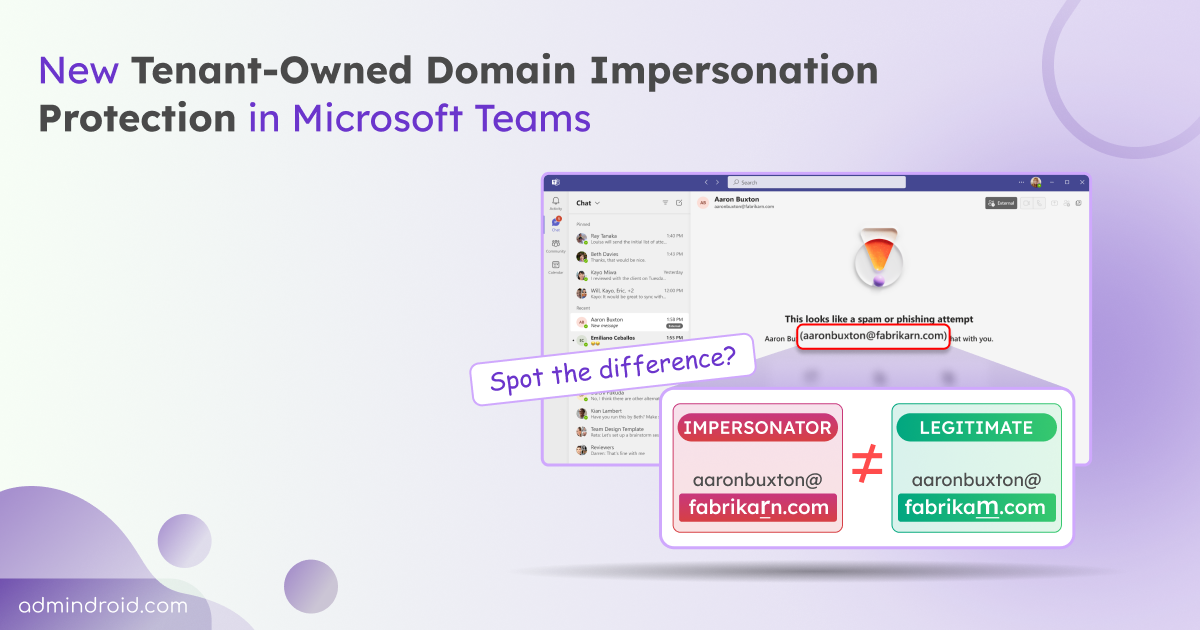

Here's the operational flow: When an external user calls a Teams user for the first time, the system runs the incoming caller against a database of known organizations commonly targeted by phishing and spoofing attacks. This database includes major financial institutions, technology companies, payment processors, and other high-value targets that scammers frequently impersonate.

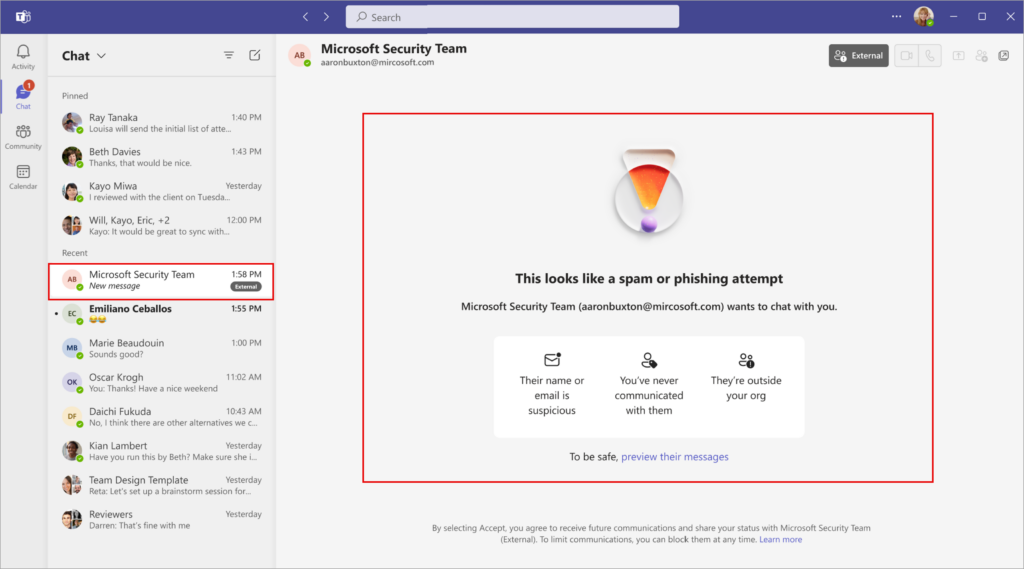

If the system detects potential impersonation—meaning the caller is displaying a brand name or caller ID associated with one of these commonly targeted organizations—a warning badge appears on the incoming call notification. The receiving user then has three immediate options: accept the call, block the caller, or end the call. The warning itself doesn't prevent communication; rather, it injects a moment of skepticism into what might otherwise feel like a routine call.
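To make the flow concrete, here is a minimal Python sketch of the decision described above. It is not Microsoft's implementation: the brand list, the `IncomingCall` fields, and the substring matching are all assumptions made for illustration, and a real system would weigh far more than a display name.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical sample of commonly impersonated brands; the real list is not public.
COMMONLY_TARGETED_BRANDS = {"chase bank", "microsoft", "paypal"}


@dataclass
class IncomingCall:
    display_name: str       # name shown on the incoming call notification
    is_external: bool       # caller is outside the recipient's organization
    is_first_contact: bool  # recipient has never had a call with this caller


def claimed_brand(call: IncomingCall) -> Optional[str]:
    """Return the targeted brand the display name appears to claim, if any."""
    name = call.display_name.lower()
    for brand in sorted(COMMONLY_TARGETED_BRANDS):
        if brand in name:
            return brand
    return None


def should_warn(call: IncomingCall) -> bool:
    """Warn only for first-time external callers whose name claims a targeted brand."""
    if not (call.is_external and call.is_first_contact):
        return False
    return claimed_brand(call) is not None


if __name__ == "__main__":
    call = IncomingCall("Chase Bank Security", is_external=True, is_first_contact=True)
    if should_warn(call):
        # The warning does not block anything; the user still decides.
        print("Warning shown. Options: accept, block, end call")
```

In this sketch, as in the feature itself, the warning only informs. The user still chooses whether to accept the call, block the caller, or end the call.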

What makes this different from generic "spam call" detection is the specificity. The system isn't just flagging numbers with bad reputations. It's actively looking for a specific attack pattern: external callers claiming to be from organizations that are known targets of social engineering campaigns.

Microsoft's approach here reflects lessons learned from other security implementations. The company has spent years building threat intelligence about which organizations get targeted most frequently by phishing attacks, credential harvesting, and financial fraud. They've analyzed patterns in successful attacks, identified common impersonation targets, and built that knowledge into this detection system.

The feature currently focuses on first-contact calls. Why? Because established relationships are harder to spoof effectively. If you've been having regular calls with someone at your vendor's company for months, a new call from that vendor is statistically more likely to be legitimate. But that first call? That's when impersonation risk is highest.

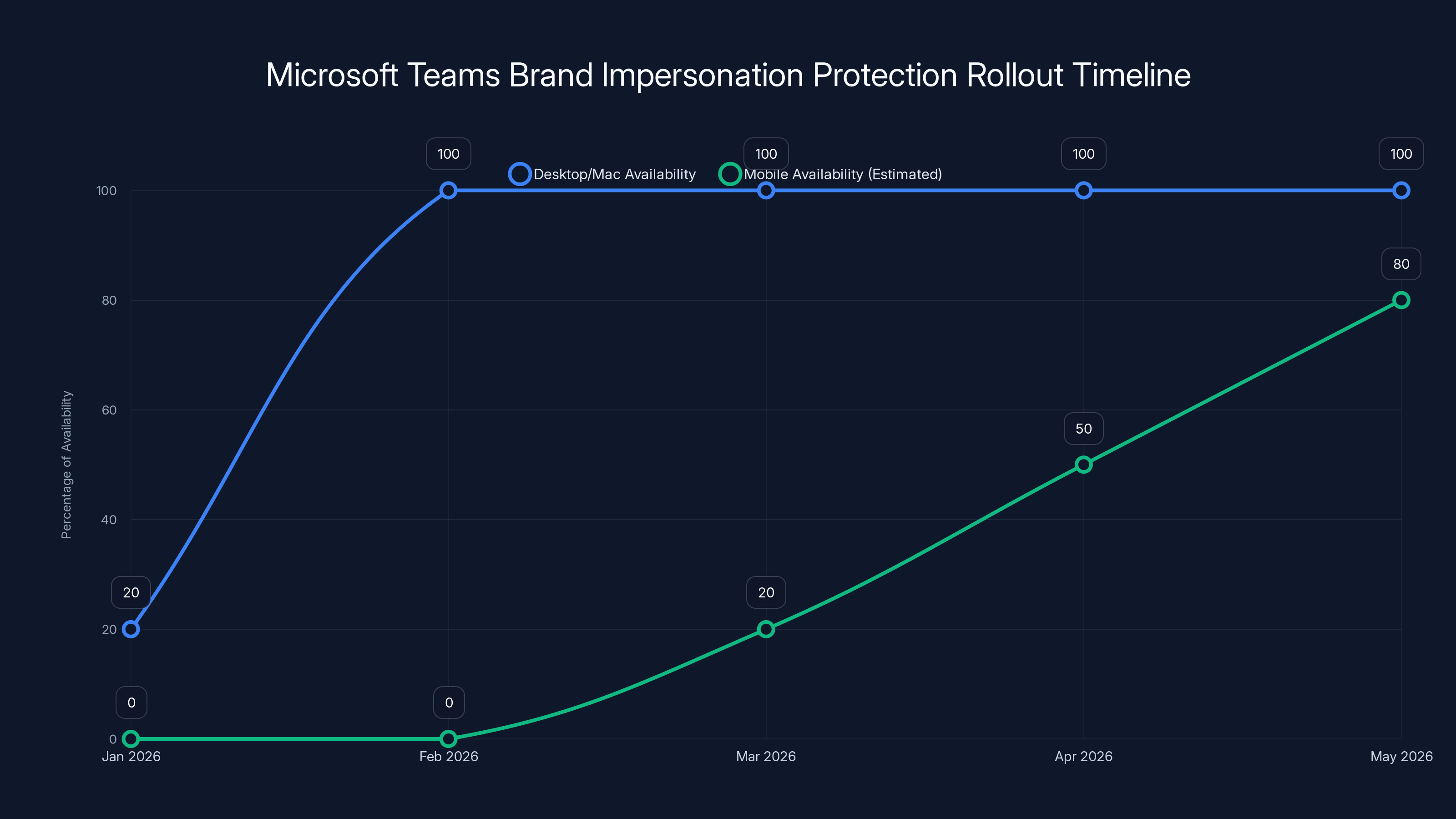

The Brand Impersonation Protection feature is expected to be fully available on desktop and Mac by February 2026, with mobile support increasing gradually in subsequent months. The mobile rollout figures are estimates.

The Technical Architecture Behind Detection

Understanding how Brand Impersonation Protection actually detects spoofing requires understanding the signals Microsoft analyzes. This isn't magic; it's sophisticated pattern recognition built on several overlapping data sources.

First, there's the caller identity signal. When someone calls through Teams, they're providing caller information, phone numbers, or display names. Microsoft analyzes whether this information matches known patterns associated with the organizations being claimed. If someone calls claiming to be from Bank of America but the underlying caller metadata suggests a different provider or origin, that's a potential mismatch.

Second, there's the behavioral signal layer. The system looks at calling patterns. Do legitimate calls from this organization follow predictable patterns? Are they coming from expected regions or times of day? Scammers often don't replicate the natural variation of authentic organizational calling patterns.

Third, there's the threat intelligence integration. Microsoft's security teams continuously monitor for emerging spoofing campaigns, phishing attempts, and social engineering attacks. When patterns emerge—like multiple reports of similar spoofing attempts against a particular organization—that information feeds back into the detection algorithm.

Fourth, there's the organizational context. Teams knows your company's own communication patterns. It understands which external organizations you legitimately receive calls from. It can build an allowlist of expected callers and apply more stringent detection to unexpected sources.
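One way to picture how these four signals could combine is a simple point score. The sketch below is purely illustrative: Microsoft has not published its detection logic, and the field names, point values, and threshold are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class CallSignals:
    identity_mismatch: bool     # claimed brand does not match the caller metadata
    unusual_pattern: bool       # atypical region or time of day for that brand
    reported_in_campaign: bool  # caller appears in known spoofing reports
    expected_by_org: bool       # caller the organization regularly hears from


# Hypothetical point values, chosen only to illustrate the idea.
POINTS_IDENTITY_MISMATCH = 5
POINTS_UNUSUAL_PATTERN = 2
POINTS_REPORTED = 4
DISCOUNT_EXPECTED_CALLER = 3
WARN_THRESHOLD = 5


def impersonation_score(signals: CallSignals) -> int:
    """Add points for suspicious signals, subtract for expected callers."""
    score = 0
    if signals.identity_mismatch:
        score += POINTS_IDENTITY_MISMATCH
    if signals.unusual_pattern:
        score += POINTS_UNUSUAL_PATTERN
    if signals.reported_in_campaign:
        score += POINTS_REPORTED
    if signals.expected_by_org:
        score -= DISCOUNT_EXPECTED_CALLER
    return max(score, 0)  # never report a negative score


def should_warn(signals: CallSignals) -> bool:
    return impersonation_score(signals) >= WARN_THRESHOLD
```

The design point is that no single signal has to be decisive. A metadata mismatch from a caller your organization hears from regularly scores lower than the same mismatch from a stranger.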

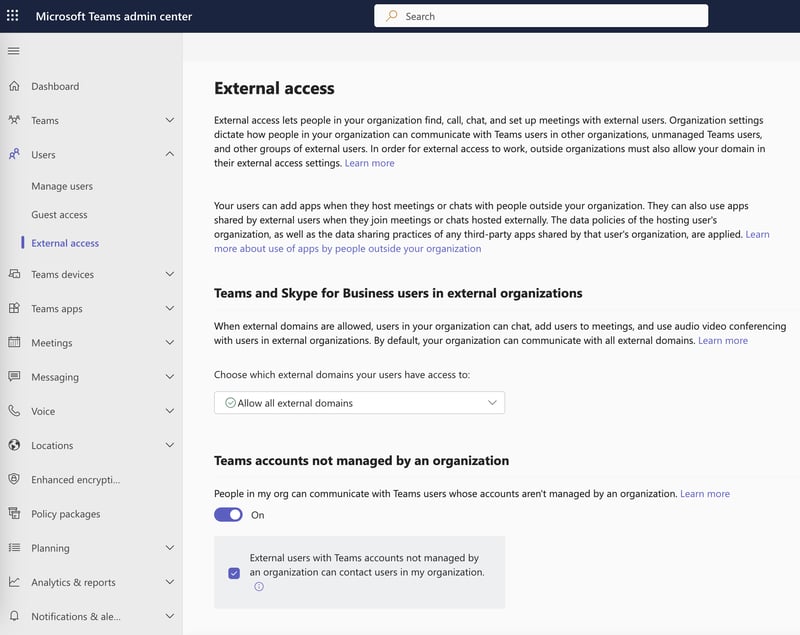

The system doesn't require any special configuration from IT administrators, which is both a strength and a limitation. It works out of the box, applying the same detection logic to all organizations using Teams. The tradeoff is that organizations can't easily customize which organizations the system considers "commonly targeted" for their specific environment.

What's important to understand: this system is designed to catch the low-hanging fruit of spoofing attacks—the attackers using basic caller ID manipulation techniques. Sophisticated attackers who gain access to actual telecommunications infrastructure or legitimate calling systems will be harder to detect. But those represent a tiny fraction of spoofing attacks. The vast majority rely on relatively basic techniques that Brand Impersonation Protection should catch.

Deployment Timeline and Availability

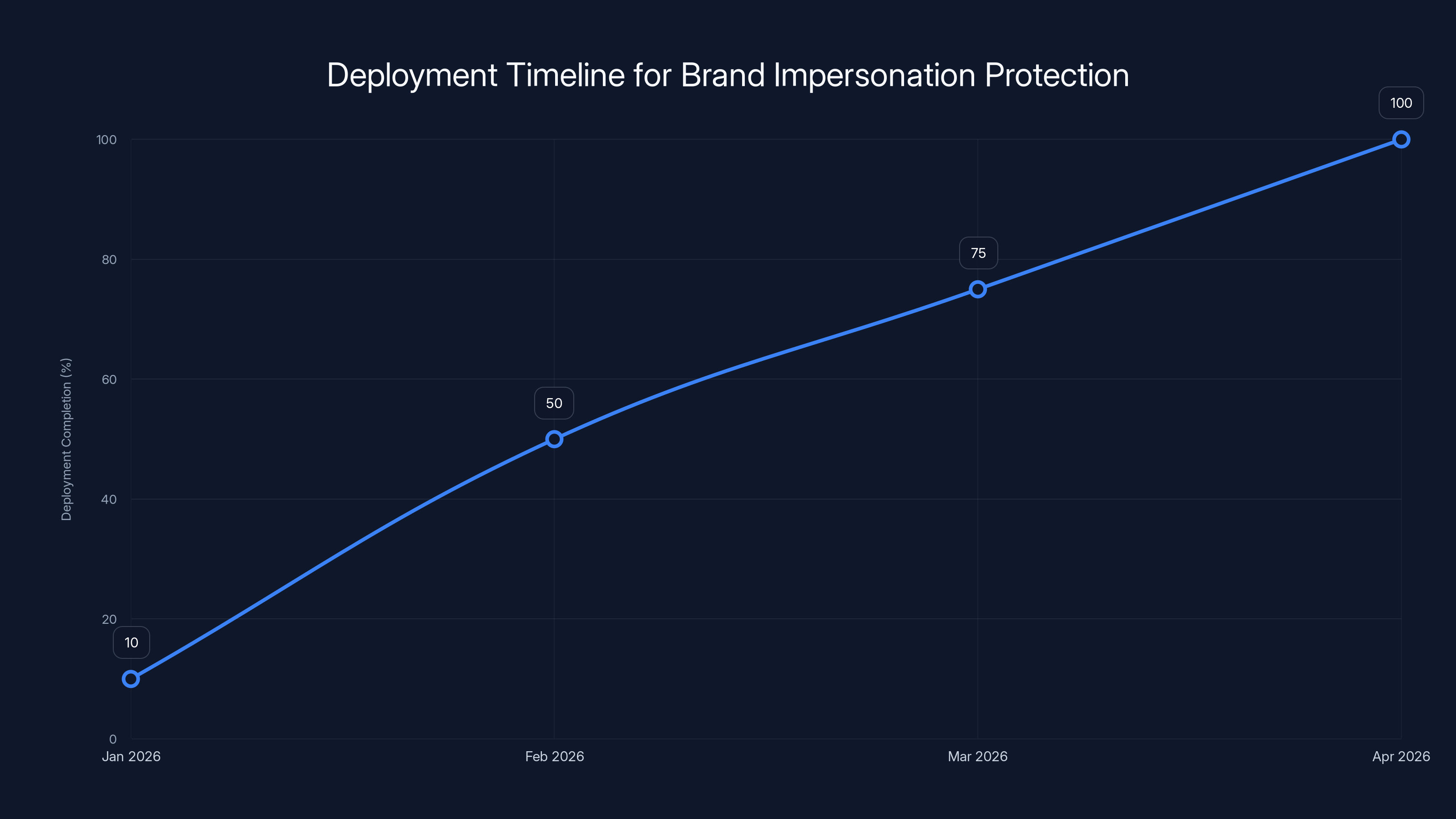

Microsoft has provided a clear deployment roadmap for Brand Impersonation Protection, which matters because security features don't help if they're years away.

The feature is currently in the preview phase, meaning select organizations can test it and provide feedback. This preview phase started in January 2026 and will continue through the rest of the month. During this phase, Microsoft is collecting data on detection accuracy, false positive rates, and user experience impacts.

The critical milestone is February 2026 general availability. Starting then, Brand Impersonation Protection will roll out to all Microsoft Teams desktop clients, with Mac clients being prioritized alongside desktop versions. This dual-platform focus makes sense since most business Teams usage happens on traditional computers rather than mobile devices.

Why desktop and Mac first rather than the mobile app? Several reasons: desktop and Mac users are more likely to be enterprise users handling sensitive business communications. The visual design of the warning badge works better on larger screens. And historically, mobile Teams users represent a smaller segment of the user base for business-critical communications.

Mobile app support will follow in subsequent phases, though Microsoft hasn't provided an exact timeline. The company is prioritizing the initial deployment to desktop users. It plans to gather feedback, refine the system, and then extend it to mobile platforms.

The rollout itself will be phased geographically and by organization. Microsoft typically stages deployments to manage support demand and catch any regional compatibility issues. Some regions will see the feature in February, others in March or April. For IT administrators, this means checking your tenant deployment status during the rollout window.

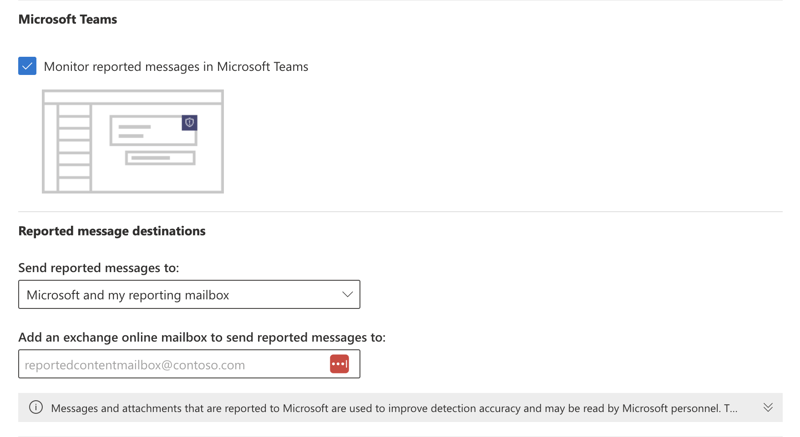

Following Brand Impersonation Protection, Microsoft has already announced that suspicious call reporting will arrive around March 2026. This feature lets users directly report attempted fraud calls to Microsoft, feeding that data back into the threat intelligence system. When dozens of users report the same phone number as a spoofing attempt, Microsoft can accelerate inclusion of that number in the impersonation detection system.
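The aggregation idea can be sketched in a few lines of Python. Microsoft hasn't described how reports are processed, so the threshold and the `(reporter, number)` record shape here are assumptions.

```python
from collections import Counter
from typing import Iterable, List, Tuple

REPORT_THRESHOLD = 3  # hypothetical: distinct reporters needed before escalation


def numbers_to_escalate(
    reports: Iterable[Tuple[str, str]],
    threshold: int = REPORT_THRESHOLD,
) -> List[str]:
    """Return numbers reported by at least `threshold` distinct users.

    Each report is a (reporter_id, phone_number) pair. Duplicates from the
    same reporter are collapsed so one user cannot inflate the count.
    """
    distinct_reports = set(reports)
    counts = Counter(number for _reporter, number in distinct_reports)
    return sorted(number for number, count in counts.items() if count >= threshold)


if __name__ == "__main__":
    sample = [
        ("alice", "+15550100"),
        ("bob", "+15550100"),
        ("carol", "+15550100"),
        ("alice", "+15550100"),  # repeat report, counted once
        ("dave", "+15550199"),
    ]
    print(numbers_to_escalate(sample))
```

Counting distinct reporters rather than raw reports is a deliberate choice in this sketch: it keeps a single frustrated user from triggering escalation alone.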

How This Fits Into Microsoft's Broader Security Strategy

Brand Impersonation Protection isn't a standalone feature. It's the latest chapter in Microsoft's multi-layered approach to Teams security, and understanding the full context shows why this matters.

In September 2025, Microsoft rolled out two significant security features that set the stage for Brand Impersonation Protection. First came Malicious URL Protection, which scans every link shared in Teams chats and channels, identifying URLs that lead to phishing pages, malware distribution sites, or other malicious content. When a user tries to share a link, the system checks it against Microsoft's threat intelligence database and blocks or warns about dangerous URLs in real time.

Second, Microsoft deployed Weaponizable File Type Protection, which scans executables and file types commonly used in attacks being shared through Teams. If someone tries to share an .exe file, a .scr file, or other executable types known to be weaponized in attacks, the system can block it or require administrator approval.
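As a rough illustration of extension-based screening, the Python sketch below checks a filename against a blocklist. Only `.exe` and `.scr` come from the description above; the function name is hypothetical, and the real feature's list and logic are not documented here.

```python
from pathlib import PurePath

# Only the two extensions named in the text; a real blocklist would be longer.
BLOCKED_EXTENSIONS = {".exe", ".scr"}


def is_weaponizable(filename: str) -> bool:
    """Return True if the file's final extension is on the blocklist."""
    # PurePath.suffix returns the last extension, so "invoice.pdf.exe" -> ".exe".
    return PurePath(filename).suffix.lower() in BLOCKED_EXTENSIONS
```

Checking the final extension catches the classic double-extension trick such as `invoice.pdf.exe`, though an extension check alone says nothing about a file's actual contents.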

These three features—Malicious URL Protection, Weaponizable File Type Protection, and Brand Impersonation Protection—form a coordinated defense strategy. URLs cover the first stage of many attacks (getting users to click malicious links). File types cover the stage where attackers try to distribute malware. And calls cover the stage where attackers use social engineering directly.

But there's a reason these are positioned in a particular order. Social engineering is often the weakest link in the security chain. You can have perfect email security, perfect file scanning, perfect access controls—and then a scammer calls someone pretending to be from IT asking for credentials. Seconds later, the attacker is inside your network.

Microsoft is essentially saying: "We're not just going to protect you at the technical layer. We're going to protect you at the human layer too." That's a meaningful shift. For years, cybersecurity has been obsessed with preventing technical exploits—buffer overflows, SQL injection, cross-site scripting. Those matter, but they're actually harder to execute effectively today than they were a decade ago. What's easier? Convincing someone to do something they shouldn't.

The company has also committed to another feature arriving around March 2026: suspicious call reporting. This lets users directly flag incoming calls they suspect are spoofing attempts. Those reports feed back into the threat intelligence engine, allowing Microsoft to identify emerging spoofing campaigns quickly.

Business Email Compromise (BEC) constitutes the largest portion of brand spoofing attacks, followed by voice spoofing and Microsoft Teams spoofing. Estimated data based on industry trends.

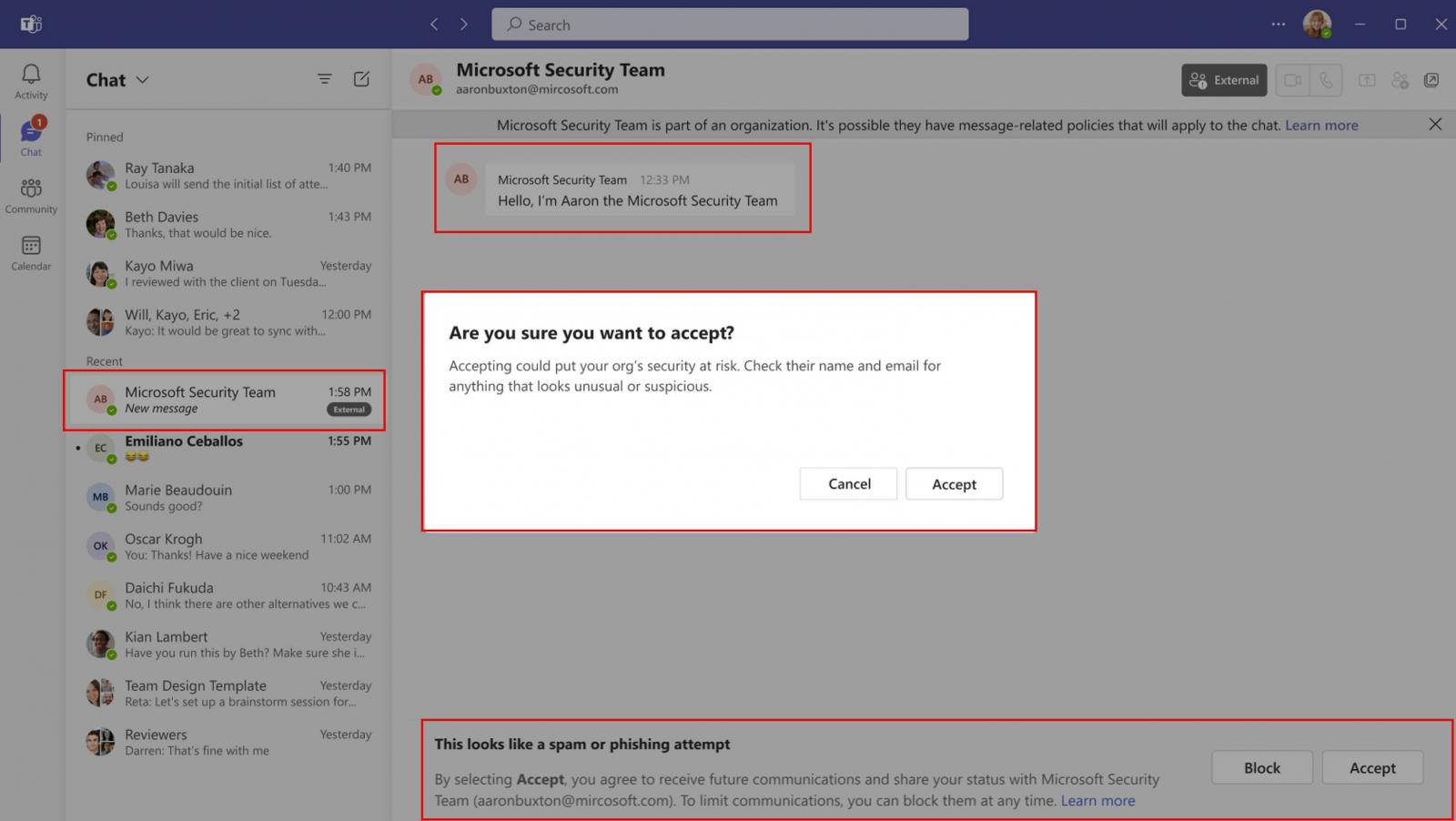

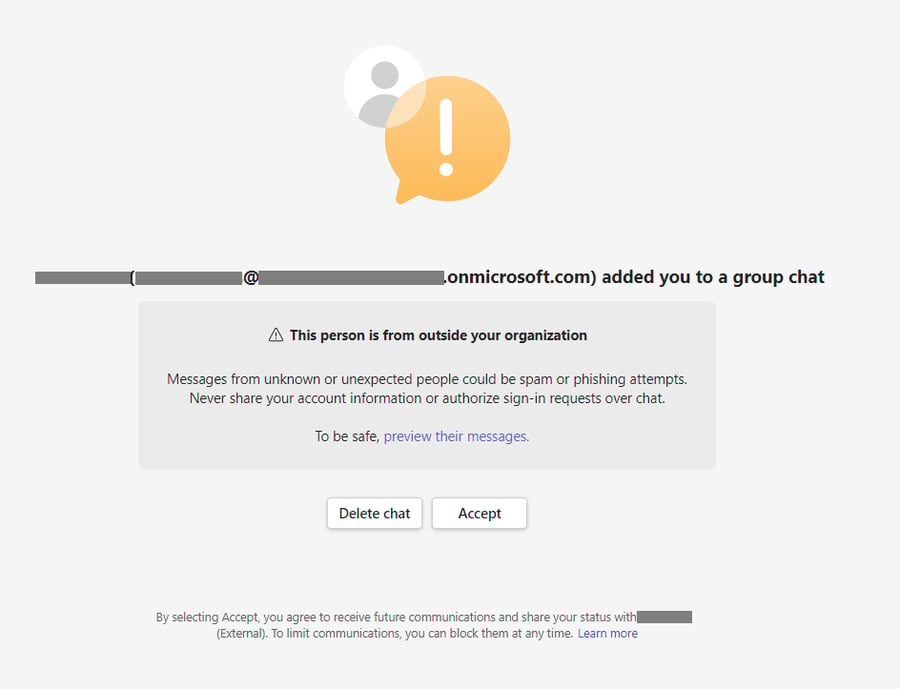

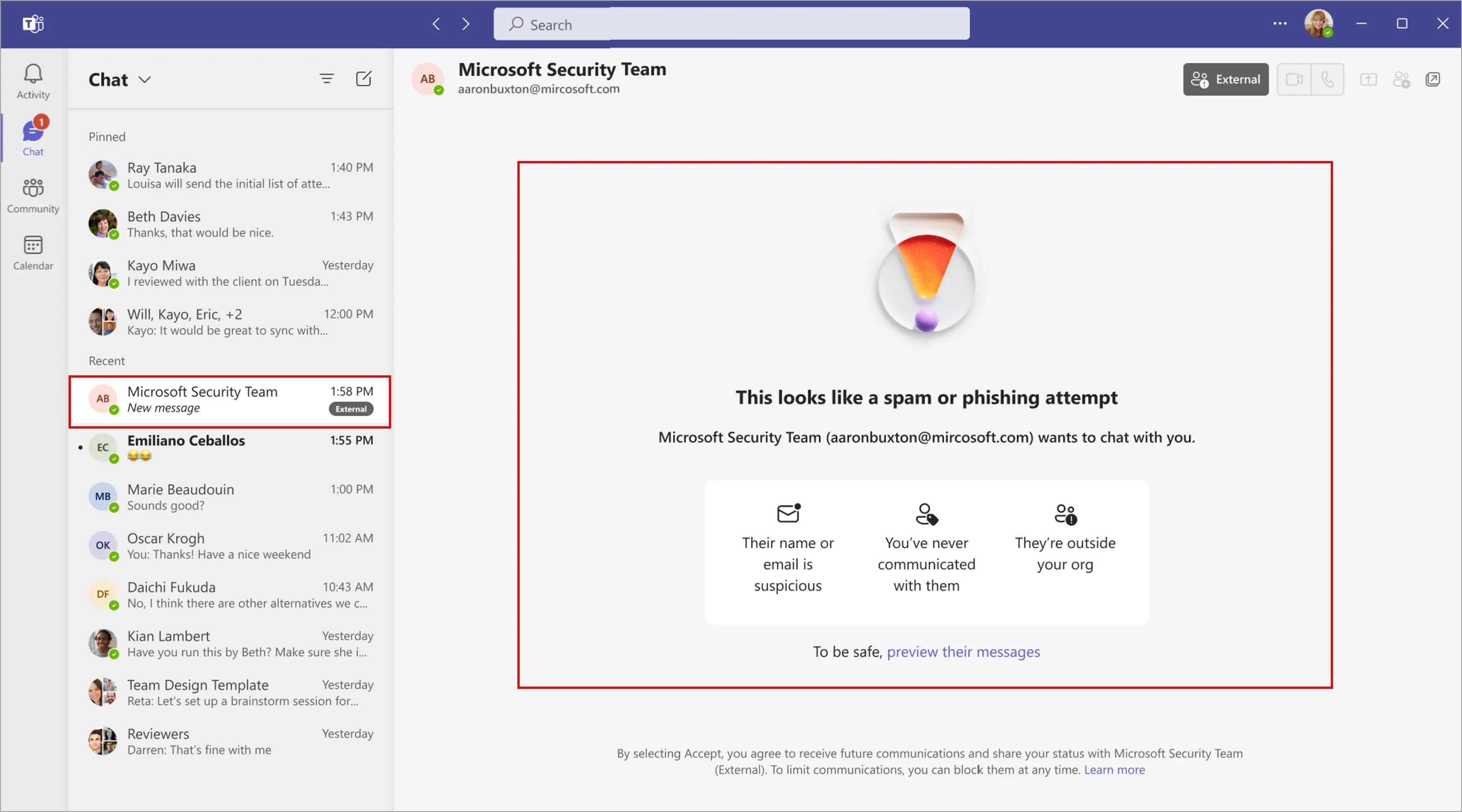

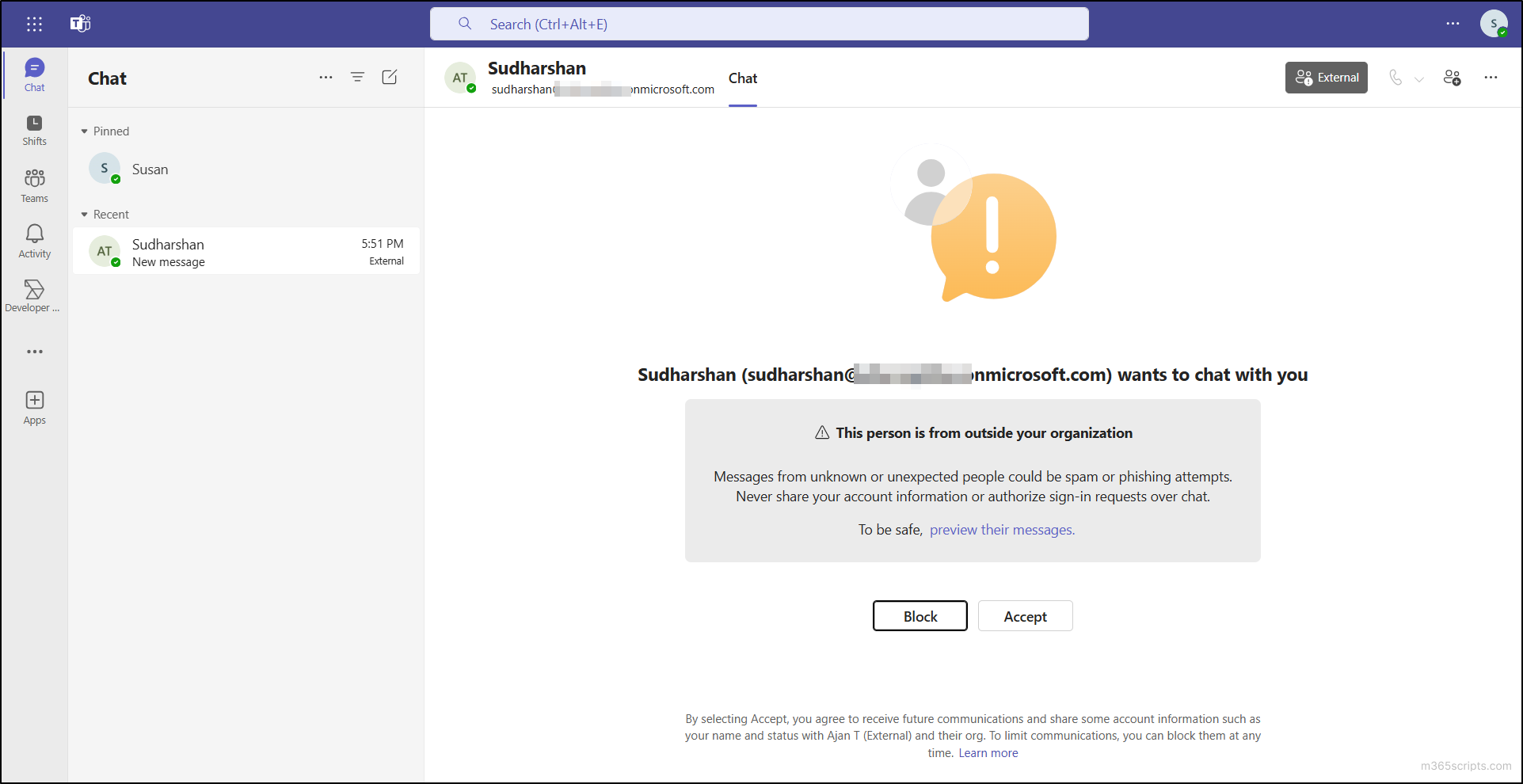

User Experience: What Your Team Will Actually See

When Brand Impersonation Protection activates for the first time on your team's computers, most people won't experience a dramatic change. They'll just notice something new on certain incoming calls: a warning badge or notification.

Here's what the user experience looks like in practice:

An external caller connects to a Teams user. The system analyzes the caller information in milliseconds. If it matches the profile of a spoofing attempt—specifically, an external caller claiming to be from one of the commonly targeted organizations—a warning appears. This warning is prominent enough to catch attention but not so intrusive that it prevents the call from ringing through.

The user sees three options: answer the call anyway, block the caller, or decline. The warning message typically reads something like "We detected that the caller may be impersonating [Organization Name]. Do you want to accept this call?" The language is careful—it doesn't definitively state the caller is a fraud. It says the system detected potential impersonation.

Why the careful language? Because false positives happen. Sometimes legitimate vendors use generic or shared caller ID information. Sometimes a vendor uses a call center that's based in a different country, creating unexpected caller metadata. The system needs to flag suspicious activity without destroying legitimate business communication.

If the user clicks "Accept," the call proceeds normally. The warning disappears, and the call behaves like any other Teams call. If the user clicks "Block," the caller gets sent to voicemail (or disconnects, depending on Teams configuration). If the user clicks "Decline," the same thing happens—the call is rejected.

The key psychological impact comes from that moment between the first ring and the decision to pick up. It's that brief window where skepticism can inject itself into what might have been automatic acceptance. Scammers rely on speed and automaticity. By forcing a conscious decision, the system breaks their attack flow.

For IT administrators, the experience is largely administrative. The feature comes enabled by default, but administrators can control whether warnings appear for their organization or adjust settings through the Microsoft Teams admin center. Reports on spoofing attempts can be reviewed to identify patterns: if the same number keeps trying to spoof your organization, IT can investigate and potentially block the source.

Why First-Contact Calls Are the Critical Vulnerability

Brand Impersonation Protection specifically targets first-contact calls, which reveals something important about how spoofing attacks actually succeed. It's not random. Attackers follow a pattern.

When a scammer calls someone they've never spoken to before, the victim is working against two disadvantages. First, the victim has no established relationship or baseline expectations about what legitimate communication from that source looks like. Second, they have no prior experience with that caller to build intuition about whether something is off.

The attacker, meanwhile, has advantages. First, because first contact is when trust-building matters most, legitimate callers typically invest in establishing credibility, and that is exactly the behavior an impersonator mimics. Second, social engineering tactics are specifically designed to overcome skepticism during first contact. "I'm from your bank's fraud department" or "I'm an IT support technician from your company's security team": these are powerful framings that activate compliance behaviors.

Scammers have learned to exploit this exact window. They cold call someone, claim to be from a trusted organization, create a sense of urgency or authority, and request action within minutes. By the time the victim could verify the caller's identity, the damage is done.

Microsoft's decision to focus on first-contact calls is strategically sound. Established calls present a different attack surface. If you've been taking calls from your vendor's procurement department for six months, a call from someone claiming to be the same department is statistically much more likely to be legitimate. Scammers could theoretically take over or replicate those established accounts, but that requires either hacking the vendor's phone system or getting access to their Teams account, which is much harder than just setting up a spoofed caller ID.

First-contact calls? That's where spoofing operates at peak effectiveness. You have no prior relationship to evaluate. The attacker can claim any identity. The psychological pressure to trust the caller ID information is immense.

By flagging this specific attack vector, Microsoft is targeting the most exploitable moment. The protection might seem simple—just a warning—but timing matters enormously in security. A warning at the wrong moment is ignored. A warning at exactly the moment when someone's vulnerability is highest? That can change decisions.

Limitations and What Brand Impersonation Protection Can't Do

For all its sophistication, Brand Impersonation Protection has real constraints. Understanding these limitations is crucial because overconfidence in any security tool leads to worse security outcomes.

First, the system can't reliably distinguish between spoofing and legitimate calls that merely have confusing caller metadata. Plenty of legitimate organizations use call centers, outsourced IT support, or telecommunications infrastructure that doesn't clearly identify the parent organization. A partner company calling from a shared vendor line might look suspicious even though it's completely legitimate.

Second, the system can only detect impersonation of known target organizations. If a scammer impersonates an obscure company your organization works with, such as a specialized supplier or a niche service provider, the system has no baseline to compare against. The impersonated brand is unknown to the system, so the call isn't flagged.

Third, the system can't detect compromised accounts. If attackers actually hack the Teams account of a legitimate business partner and use their real account to call you, the system sees an authenticated account from a known contact. No warning appears because technically, the call is coming from where it claims to come from.

Fourth, Brand Impersonation Protection only protects first-contact calls. If an attacker establishes a relationship (either by hacking an existing account or by building false familiarity over multiple interactions), the warnings disappear. At that point, users fall back on their own judgment, which is exactly where social engineering thrives.

Fifth, the system assumes that common impersonation targets are universally valuable. For most organizations, this is true—banks and technology companies are targeted everywhere. But for specialized industries, the threat profile might be completely different. A pharmaceutical company might face more spoofing attempts from fake regulatory agencies than from fake banks. The system might not have that specialized threat intelligence built in.

Finally, and most importantly, Brand Impersonation Protection is a deterrent and an alerting mechanism, not an absolute blocker. It doesn't prevent fraud calls from reaching users. It just warns them. A user who's in a rush, stressed, or convinced by the attacker's story can still accept the call despite the warning.

These limitations don't make the feature worthless. Rather, they demonstrate why security requires multiple layers. Brand Impersonation Protection is one piece of a larger defense strategy, not a comprehensive solution.

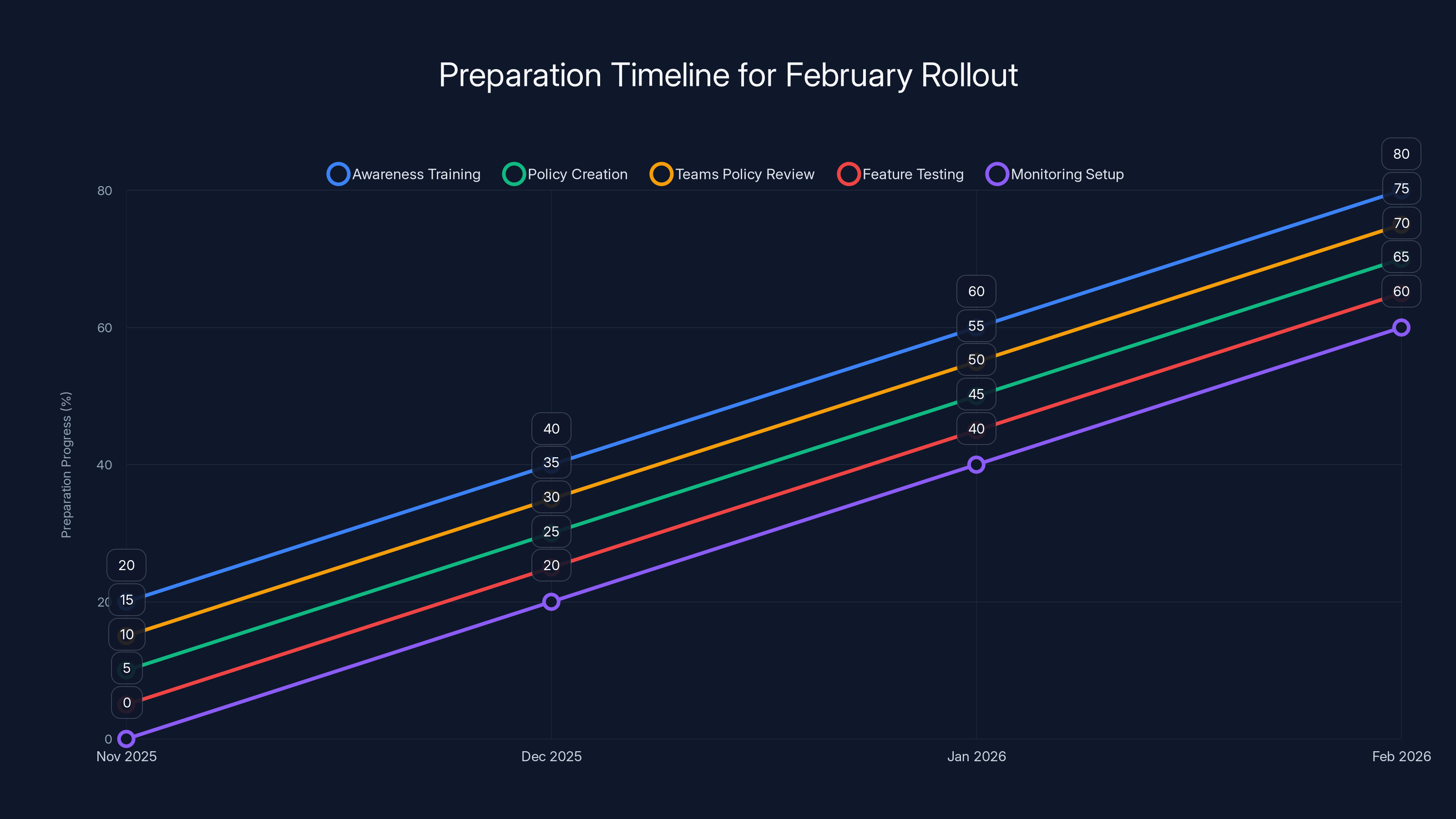

The deployment of Brand Impersonation Protection begins with a preview in January 2026, reaching general availability in February, and completing by April 2026. Estimated data based on typical rollout patterns.

How Organizations Should Prepare for the February Rollout

When February 2026 arrives and Brand Impersonation Protection goes live, most organizations will just... see it start working. But proactive IT teams can do better. Preparation amplifies effectiveness.

Start with awareness training right now. Before the feature launches, brief your team about what's coming. Explain what the warnings mean, why they exist, and what they should do when they see them. This primes users to actually pay attention to the warnings rather than treating them as routine notifications to dismiss.

Create a clear policy on responding to spoofing warnings. Define escalation procedures. Should users who see a warning immediately report it to IT? Should they block the number automatically? Should they ask the caller for verification? Having a clear protocol prevents users from making inconsistent decisions.

Review your Teams call policies and settings. Understand your current configuration. Make sure that blocking, declining, and other features work as you expect them to. Some organizations might want to route flagged calls to a security team for initial evaluation rather than letting individual users decide.

Test the feature in your preview tenant. Microsoft provides preview access to new features. Use it. Call your own organization from an external line and see what the warnings look like. Train a few IT staff members on the user experience before rolling it out to everyone.

Set up monitoring for spoofing reports. Once the feature launches and suspicious call reporting arrives in March, you'll be able to see patterns in attempted fraud against your organization. Start tracking which external numbers are being flagged, which organizations are being impersonated in attacks against you. This intelligence can inform your security strategy.

Document your threat profile. Which organizations do scammers most commonly impersonate when targeting your employees? If you get a lot of calls claiming to be from your bank, or from your telecom provider, or from a specific major client, note it. This helps you know what warnings your team will see most frequently.

Consider additional complementary controls. For highly sensitive roles (CFO, HR lead, anyone with access to critical systems), consider requiring additional verification steps for first-contact calls. Maybe inbound external calls require a callback to a verified number. Maybe external calls are routed through a security gateway for screening.

Build internal communication about the feature. When the warnings start appearing, users will have questions. Have answers ready. Prepare help desk staff to explain the feature. Create documentation. Answer frequently asked questions proactively.

The Broader Context: Why This Matters for Enterprise Security

Brand Impersonation Protection might seem like a single feature, but it represents a significant shift in how major platforms think about security. For years, the dominant assumption was: "Security is primarily a technical problem. We'll build firewalls, encryption, access controls, and intrusion detection systems. If we get the tech right, we'll be secure."

The evidence now overwhelmingly suggests that assumption was incomplete. The human element is often the weakest link, and more sophisticated attackers have adapted accordingly. Why exploit a patched vulnerability in software when you can just call someone and convince them to give you access?

This shift manifests in several ways. Cloud security leaders now invest heavily in user behavior analytics—understanding what normal behavior looks like so they can detect anomalies. Identity security has become paramount because compromised credentials are often more valuable than compromised code. And communication platform security increasingly focuses on social engineering detection.

Microsoft's three-part defense strategy—URL protection, file protection, and call spoofing protection—essentially says: "We're protecting you at all the stages where humans make decisions in our platform." When you click a link, we check it. When you download a file, we scan it. When you answer a call, we verify it.

This also has implications for security culture. For too long, security has been framed as something technical people do for business people. "Don't click suspicious links" becomes a lecture. But when the platform itself starts enforcing verification, it changes the dynamic. Users aren't being told they're doing security wrong. The system is just checking things on their behalf.

That said, this doesn't mean users can stop thinking about security. As noted in the limitations section, determined attackers can still succeed. Users who don't trust the warnings can still accept flagged calls. Users who are socially engineered into believing a story can override their skepticism. Brand Impersonation Protection makes it harder, but not impossible.

The real value comes from the compounding effect. URL protection makes phishing less effective. File protection makes malware distribution harder. Call protection makes social engineering on first contact riskier. None of these are absolute defenses, but taken together, they significantly raise the cost and complexity of successful attacks.

Comparing This to Other Platforms' Approaches

Microsoft isn't alone in focusing on spoofing detection, but the specific implementation matters. Let's look at how other platforms approach similar problems.

Google Workspace, Microsoft's primary competitor in enterprise collaboration, has integrated call screening through Google Meet's security features. However, their approach focuses more on preventing unauthorized participants from joining meetings rather than on spoofing detection specifically. They've invested in caller authentication but less in impersonation pattern detection.

Slack, another critical collaboration platform, has historically focused on message-level security—verifying that messages come from authenticated accounts, preventing message spoofing through their API. They've been less focused on voice call security, partly because Slack itself isn't primarily a voice platform. For Slack's users who need calling, they typically integrate with specialized telecommunications systems that have their own spoofing detection.

Zoom, the video conferencing leader, has invested in caller verification for meeting participants but less in inbound call spoofing detection. Their security focus has been primarily on meeting participant authentication and preventing unauthorized access to active meetings.

What makes Microsoft's approach distinctive is the combination of specificity and simplicity. The system doesn't just flag "suspicious" calls broadly. It specifically looks for the pattern of impersonating known-target organizations. It doesn't require administrator configuration. It just works.

That simplicity is actually more important than it sounds. Security features that require extensive configuration often don't get configured. Organizations don't have IT staff dedicated to fine-tuning each security setting. So they leave things at defaults, which often means they leave things disabled. Microsoft's approach—"this is on by default and protects you immediately"—likely results in higher actual protection across the ecosystem than a more sophisticated but optional system would.

Estimated data shows a gradual increase in preparation activities leading up to the February 2026 rollout. Proactive measures enhance the effectiveness of the Brand Impersonation Protection feature.

What Microsoft's Security Commitment Really Means

Microsoft's positioning of Brand Impersonation Protection isn't accidental messaging. The company has stated explicitly that it's "committed to caller identity protection and secure collaboration." This phrase signals priority—that the company is making this a core investment, not a one-off feature.

Why does this matter? Because security is where companies reveal their true priorities through resource allocation. It's easy to talk about security. It's expensive to build it well. When a company commits significant engineering resources to solving security problems across multiple attack vectors—which Microsoft has done with URL protection, file protection, and call protection—it signals that this isn't theater. This is investment.

This commitment also suggests where Microsoft sees threats emerging. The company doesn't randomly pick security problems to solve. They look at what's actually harming customers. The fact that they've built three coordinated defenses against attacks that happen through Teams suggests these are the attack patterns they're seeing damage the most organizations.

There's also competitive positioning here. If Microsoft can make Teams a more secure platform than alternatives, that becomes a feature in procurement. Especially for regulated industries—financial services, healthcare, government—security directly impacts which platforms organizations choose.

But perhaps most importantly, this commitment reflects learning from failures. For years, cloud platforms were viewed primarily as technical systems that required technical security measures. Microsoft, along with other major platform providers, has learned through hard experience that this view was incomplete. The most devastating breaches often came from social engineering, not from technical exploits. Building a secure modern platform means protecting humans, not just machines.

Practical Security Hygiene: Beyond Brand Impersonation Protection

While Brand Impersonation Protection is valuable, it's not sufficient on its own. Building real security resilience requires multiple reinforcing layers.

Implement strong authentication across your organization. Multi-factor authentication (MFA) should be mandatory, not optional. If an attacker succeeds in socially engineering someone into revealing a password, MFA stops them from actually getting in. This is especially critical for administrative accounts and anyone with access to sensitive systems.

Establish clear communication protocols for sensitive requests. If your CFO needs to authorize a wire transfer, that shouldn't happen based solely on a Teams call or message. Establish a rule that significant financial decisions require confirmation through an out-of-band channel—a callback to a known number, an in-person conversation, or some other verification method that can't be spoofed in the same communication system.

Train your team regularly on social engineering tactics. The most effective social engineering education isn't one-time onboarding. It's ongoing, contextual training that shows people actual examples of attacks and teaches them what to look for. Phishing simulations (done ethically) can be surprisingly effective at building institutional skepticism.

Monitor for unusual account activity. If someone's account suddenly starts trying to send emails to all their contacts, or starts accessing files they don't normally access, that's a red flag. Modern security platforms can detect these anomalies automatically. Make sure you're using those capabilities.

Create a security-conscious reporting culture. Employees should feel comfortable reporting suspicious communications to security without fear of being blamed for almost falling for a scam. Organizations that punish employees for reporting attacks end up with employees who hide attacks, making the organization's threat visibility much worse.

Regularly audit administrative privileges. Who has access to what? Are those access levels still appropriate? Attackers often focus on compromising high-privilege accounts because those let them move further into an organization. Periodic access reviews catch cases where someone's privileges weren't reduced when they changed roles.

Use email authentication protocols. DMARC, SPF, and DKIM don't prevent all email spoofing, but they make it significantly harder for attackers to forge emails from your domain. Deploy them rigorously.

Segment your network. If a device is compromised, network segmentation limits how far attackers can spread. Critical systems should be on separate networks from general user devices, with carefully controlled access between segments.

These measures work together. Brand Impersonation Protection catches one type of attack. MFA stops attackers who do succeed in getting passwords. Unusual activity monitoring catches attacks in progress. The combination is powerful.
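As a concrete illustration of the email authentication point above, here is a minimal sketch that checks whether a DMARC record actually asks receivers to act on failures. It works on the record text only (no DNS lookup), and the domain and report address are placeholders, not values from any real deployment.

```python
def parse_dmarc(record: str) -> dict[str, str]:
    """Split a DMARC TXT record into its tag=value pairs."""
    tags: dict[str, str] = {}
    for part in record.split(";"):
        part = part.strip()
        if not part or "=" not in part:
            continue  # skip empty segments and malformed tags
        key, value = part.split("=", 1)
        tags[key.strip().lower()] = value.strip()
    return tags


def dmarc_is_enforcing(record: str) -> bool:
    """True only if the record is DMARC and its policy is quarantine or reject."""
    tags = parse_dmarc(record)
    if tags.get("v") != "DMARC1":
        return False
    # A missing or "none" policy means receivers only report, they do not block.
    return tags.get("p", "none").lower() in {"quarantine", "reject"}


# Placeholder record for a hypothetical domain.
example = "v=DMARC1; p=reject; rua=mailto:dmarc-reports@example.com"
print(dmarc_is_enforcing(example))  # True
```

A record with `p=none` only collects reports, so a check like this is a quick way to spot domains that have published DMARC without enforcing it.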

The Future of Fraud Prevention in Communication Platforms

Brand Impersonation Protection is today's state of the art, but it won't be tomorrow's. The attack surface will evolve, and defenses will need to evolve with it.

One likely direction: enhanced caller authentication at the network level. Telecommunications regulators have been pushing for STIR/SHAKEN, a framework in which the originating carrier cryptographically signs the caller ID information on a call and the terminating carrier verifies that signature. As STIR/SHAKEN deployment increases, it becomes harder for attackers to falsify the calling number. It authenticates the number rather than the caller's claimed affiliation, so it complements brand-impersonation checks instead of replacing them. When it becomes ubiquitous, the effectiveness of basic caller ID spoofing should drop dramatically.

Another direction: AI-powered voice analysis. As machine learning models improve, it's becoming possible to analyze voice characteristics to detect whether a call is coming from who it claims to be coming from. This would be complementary to identity-based detection—it adds another verification layer.

Third: behavioral fingerprinting. Modern systems can analyze communication patterns in surprising detail. Who do you normally communicate with? What times do you take calls? What's your typical call duration? If someone is impersonating an organization you normally work with, but the communication pattern is completely different, that's a signal.

Fourth: cross-platform threat correlation. If you receive a suspicious call and simultaneously receive a phishing email from the same attacker or organization, the system could correlate these incidents and raise confidence in the threat assessment.

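Fifth: biometric verification. Some organizations are already experimenting with voice biometrics, comparing a caller's voice against a previously enrolled voiceprint to verify that the caller is who they claim to be. Unlike the general voice analysis described above, this only works for callers who have enrolled in advance, so it suits known contacts better than first-contact calls.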

These aren't science fiction. They're all technically achievable within the next few years. The question is how quickly they'll be deployed and adopted. Microsoft is clearly positioning itself to be a leader in this space, which likely means we'll see incremental improvements to Brand Impersonation Protection rolled out over time.
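To make the behavioral fingerprinting idea above more concrete, here is a minimal sketch that compares a new call's duration against a contact's history. It is an illustration only: the function names, the single feature (duration), and the threshold of 3 standard deviations are all assumptions, and nothing here reflects how Teams or any vendor actually scores calls.

```python
from statistics import mean, stdev


def duration_zscore(history_minutes: list[float], new_call_minutes: float) -> float:
    """How many standard deviations the new call sits from the historical mean."""
    if len(history_minutes) < 2:
        raise ValueError("need at least two past calls to form a baseline")
    spread = stdev(history_minutes)
    if spread == 0:
        # Every past call had the same length; any difference is maximally unusual.
        return 0.0 if new_call_minutes == history_minutes[0] else float("inf")
    return (new_call_minutes - mean(history_minutes)) / spread


def is_unusual(history_minutes: list[float], new_call_minutes: float,
               threshold: float = 3.0) -> bool:
    """Flag calls whose duration deviates strongly from the baseline."""
    return abs(duration_zscore(history_minutes, new_call_minutes)) > threshold


# Hypothetical history: calls with this contact usually last 4 to 6 minutes.
past_calls = [4.0, 5.0, 6.0, 5.0, 4.0, 6.0]
print(is_unusual(past_calls, 25.0))
print(is_unusual(past_calls, 5.5))
```

A real system would combine several such features (time of day, frequency, who initiates) rather than rely on one, but the principle is the same: a deviation from an established baseline is a signal, not proof.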

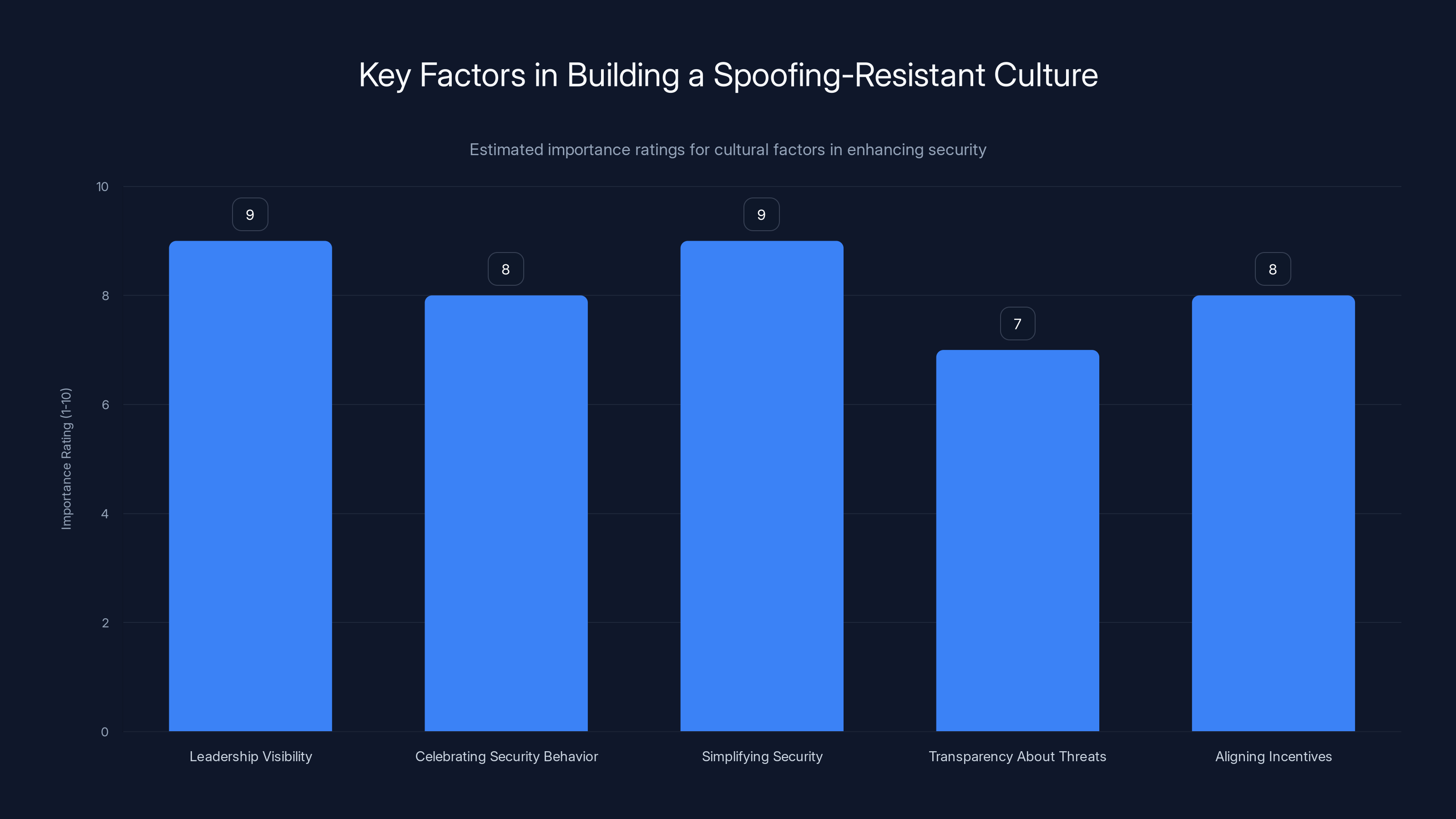

Leadership visibility and simplifying security are rated highest in importance for building a spoofing-resistant culture. Estimated data based on typical organizational priorities.

Implementation Challenges and How to Navigate Them

When new security features deploy, organizations often encounter unexpected challenges. Anticipating these problems helps you handle them more smoothly.

False positive storms: When Brand Impersonation Protection first launches, expect a burst of flagged calls. Some of these will be legitimate business communications that happen to have confusing caller metadata, and your help desk will hear from confused users. This is normal and should be temporary: because the feature targets first-time external callers, warnings for a given legitimate caller should taper off once contact with that caller has been established.

Attacker adaptation: Scammers will quickly learn about Brand Impersonation Protection. They'll adapt by impersonating organizations that aren't on the "commonly targeted" list, or by using less obvious impersonation tactics. Expect the sophistication of attack attempts to increase even as Brand Impersonation Protection stops the most basic attacks.

User skepticism fatigue: If users are warned about every external call, they might start ignoring warnings. This is a classic problem in security—too many false positives lead to alert fatigue. Microsoft's approach of being selective (only flagging specific impersonation patterns) helps mitigate this, but it's still a risk.

Integration complexity: If your organization uses advanced telecommunications systems, PBX systems, or VoIP solutions that sit between the public telephone network and Teams, you might need to ensure those systems properly pass through caller information to Teams for the feature to work effectively.

Compliance and audit requirements: Some industries have strict requirements about call recording, retention, and auditability. Make sure your organization's policies around call handling, blocking, and reporting align with your compliance requirements.

User resistance: Some users will resist any call screening, believing it interferes with their work. Frame the feature as protection, not restriction. It's not preventing calls; it's just adding a verification step.
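The false positive and alert fatigue concerns above come down to base rates. The sketch below works through the arithmetic: when genuine impersonation attempts are rare, even a small false positive rate means most warnings are false alarms. All three input numbers are illustrative assumptions, not measurements of the Teams feature.

```python
def warning_precision(prevalence: float, detection_rate: float,
                      false_positive_rate: float) -> float:
    """Fraction of warnings that point at a real impersonation attempt.

    prevalence: share of first-contact external calls that are attacks
    detection_rate: share of attacks that trigger a warning
    false_positive_rate: share of legitimate calls that trigger a warning
    """
    true_alerts = prevalence * detection_rate
    false_alerts = (1 - prevalence) * false_positive_rate
    if true_alerts + false_alerts == 0:
        return 0.0
    return true_alerts / (true_alerts + false_alerts)


# Illustrative scenario: 1% of first-contact calls are attacks, 90% are caught.
broad = warning_precision(0.01, 0.90, 0.05)    # flags 5% of legitimate calls
narrow = warning_precision(0.01, 0.90, 0.005)  # flags 0.5% of legitimate calls
print(f"broad flagging:  {broad:.0%} of warnings are real")
print(f"narrow flagging: {narrow:.0%} of warnings are real")
```

Under these assumed numbers, broad flagging leaves only about 15% of warnings pointing at real attacks, while narrow flagging raises that to about 65%. That gap is why selective flagging matters more for user trust than raw detection rate.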

How to Actually Build a Spoofing-Resistant Culture

Technology helps, but cultural factors matter more than most people realize. Organizations where security is valued and normalized benefit far more from tools like Brand Impersonation Protection than organizations where security is seen as an obstacle to business.

How do you build a security-conscious culture? Start with leadership visibility. When executives take security seriously (following MFA requirements themselves, participating in phishing simulations, making security-conscious decisions in full view of the organization), it signals that security matters.

Second, celebrate security-conscious behavior. When an employee reports a suspicious communication or helps catch a spoofing attempt, recognize them. Make it prestigious to be security-aware, not dorky.

Third, make security simple and default to the secure option. Don't make users choose between convenience and security. Make security the convenient option. That's what Microsoft is doing with Brand Impersonation Protection—it's on by default, requires no configuration, and actually improves the user experience by reducing scam calls.

Fourth, be honest about threats. Don't hide security incidents from your team. If the organization was targeted or if attacks succeeded, talk about it. Explain what happened, what you're doing about it, and what employees should know. This builds shared understanding that threats are real.

Fifth, align incentives. If bonus calculations or performance evaluations reward people for hitting metrics no matter what, people will cut security corners. If they reward sustainable results achieved securely, security gets treated as part of the job rather than friction.

Real-World Scenario: How Brand Impersonation Protection Would Stop an Attack

Let's work through a concrete scenario to see how Brand Impersonation Protection actually works in practice.

Jennifer is a finance manager at a mid-sized technology company. She uses Microsoft Teams daily for business communications. It's Tuesday morning, 10:47 AM—a typical time when business calls are common.

Her Teams phone rings. The caller ID shows "Bank of America - Fraud Department." Her company banks with Bank of America, so this doesn't immediately seem suspicious. However, Jennifer has never directly received calls from her bank's fraud department before—those communications typically come via email or official phone lines she initiates.

Without Brand Impersonation Protection, Jennifer would likely just answer the call. The attacker, pretending to be from Bank of America's fraud department, would claim there's suspicious activity on the company account and ask Jennifer for verification information. Because the caller sounded professional and claimed authority, Jennifer might provide information or, if asked, might even agree to allow access to the account.

With Brand Impersonation Protection, here's what actually happens:

The Teams system detects the incoming call and analyzes the caller information. It recognizes that the caller is claiming to be from Bank of America (a commonly spoofed organization) but is coming from an external number that doesn't match Bank of America's actual telecommunications infrastructure. The system flags this as a potential impersonation attempt.

When Jennifer's phone rings, instead of just showing "Bank of America - Fraud Department," it shows a warning badge. The warning says something like: "This call may be impersonating Bank of America. Do you want to accept?"

Jennifer sees the warning. This creates a moment of skepticism that wasn't there before. Instead of automatically answering, she pauses. She thinks: "Why would I get a Teams call from my bank? Usually the bank calls my regular phone number if there's an issue."

Jennifer chooses to block the caller or end the call. The call is rejected. The attacker gets a busy signal or goes to voicemail.

Later, when Jennifer comes across the call record, she reports it to her IT department. The IT team notes that an external number tried to spoof Bank of America via Teams. They add that number to a watch list. If it tries again, it'll be immediately blocked.

The attack failed at the very first contact—not because the warning was absolute proof of fraud (it wasn't), but because it injected enough skepticism to break the attacker's social engineering approach. The entire attack vector relied on Jennifer not thinking critically. By forcing a decision, the warning made critical thinking necessary.

That's how this feature stops fraud. Not through perfect detection, but through breaking the automation that makes social engineering work.
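The decision in Jennifer's scenario can be sketched in a few lines. This is a deliberately simplified model, assuming only three inputs (display name, external or internal, prior contact) and a made-up brand list. Microsoft's actual detection logic is not described in detail here and presumably uses more signals than this.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical watch list for illustration; not the feature's real brand list.
COMMONLY_IMPERSONATED = ("bank of america", "microsoft", "paypal")


@dataclass
class IncomingCall:
    display_name: str
    is_external: bool
    has_prior_contact: bool


def claimed_brand(display_name: str) -> Optional[str]:
    """Return the watched brand named in the caller's display name, if any."""
    name = display_name.lower()
    for brand in COMMONLY_IMPERSONATED:
        if brand in name:
            return brand
    return None


def should_warn(call: IncomingCall) -> bool:
    """Warn only for first-contact external callers that name a watched brand."""
    if not call.is_external or call.has_prior_contact:
        return False
    return claimed_brand(call.display_name) is not None


call = IncomingCall("Bank of America - Fraud Department",
                    is_external=True, has_prior_contact=False)
if should_warn(call):
    print(f"This call may be impersonating {claimed_brand(call.display_name).title()}.")
```

Note what the sketch does not do: it never decides the call is fraudulent. It only decides whether to interrupt the user with a question, which is the point of the scenario.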

The Economics of Social Engineering vs. Technical Attacks

Understanding why Microsoft is investing in social engineering defense requires understanding the economics of cybercrime.

Technical attacks—exploiting software vulnerabilities, writing malware, conducting advanced persistent threats—are expensive. They require skilled attackers, significant development time, and they get harder every year as software gets better secured.

Social engineering attacks (calling people, impersonating trusted organizations, using psychological manipulation) are incredibly cheap. You need basically nothing except a computer and an internet connection. The barrier to entry is trivially low. The success rate can also be surprisingly high: by some estimates, 20-30% of spoofing calls lead to at least partial success, though figures like this vary widely with the campaign and the target.

This economic imbalance means that, from an attacker's perspective, social engineering is often the dominant strategy. Why spend weeks finding a zero-day vulnerability when you can spend fifteen minutes learning social engineering scripts and start making thousands of calls?

What Brand Impersonation Protection does is shift that economic equation. It increases the cost to attackers. It doesn't make social engineering impossible—no security measure does—but it makes it more expensive. Fewer calls connect. More attempts fail. The attacker needs more sophistication to succeed.

When that happens, the most basic, least skilled attackers get filtered out. Only the more sophisticated attackers persist. And more sophisticated attackers are, by definition, less numerous. You can't train every scammer to be sophisticated any more than you can make every attacker an elite security researcher.

This is why seemingly simple defenses—adding a friction point, requiring an extra decision, showing a warning—can be surprisingly effective. They don't need to stop every attack. They just need to make the attack more expensive than alternatives.
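The economic argument above can be written as a one-line expected-value calculation. The sketch below uses entirely hypothetical numbers for connect rate, success rate, payoff, and cost per call; the point is the shape of the calculation, not the values.

```python
def expected_profit_per_call(connect_rate: float, success_rate: float,
                             payoff: float, cost_per_call: float) -> float:
    """Attacker's expected profit for a single call attempt."""
    return connect_rate * success_rate * payoff - cost_per_call


def break_even_success_rate(connect_rate: float, payoff: float,
                            cost_per_call: float) -> float:
    """Success rate per connected call below which calling loses money."""
    return cost_per_call / (connect_rate * payoff)


# Hypothetical attacker economics, before and after a warning adds friction.
before = expected_profit_per_call(connect_rate=0.5, success_rate=0.02,
                                  payoff=500.0, cost_per_call=0.10)
after = expected_profit_per_call(connect_rate=0.2, success_rate=0.005,
                                 payoff=500.0, cost_per_call=0.10)
print(f"before warnings: {before:.2f} per call")
print(f"after warnings:  {after:.2f} per call")
print(f"break-even success rate after: {break_even_success_rate(0.2, 500.0, 0.10):.4f}")
```

With these assumed inputs the expected profit falls from about 4.90 to about 0.40 per call. The attack is still profitable, but the margin is thin enough that any further friction, or a slightly higher cost per call, pushes low-skill campaigns below break-even.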

FAQ

What exactly is brand impersonation in the context of Microsoft Teams?

Brand impersonation occurs when an external caller falsely presents themselves as representing a trusted organization—like a bank, technology company, or well-known service provider—when calling someone through Microsoft Teams. Scammers use spoofed caller information to make fraudulent calls appear legitimate, exploiting the psychological trust associated with well-known brands to manipulate victims into revealing sensitive information or granting unauthorized access.

How does Brand Impersonation Protection detect spoofing attempts?

The feature analyzes incoming calls from external users to identify potential impersonation patterns. It compares caller information against a database of organizations commonly targeted by phishing and social engineering attacks. When it detects that an external caller is claiming to be from one of these commonly targeted organizations during a first-contact call, it displays a warning badge alerting the receiving user to the potential fraud. The system uses multiple signals including caller identity metadata, behavioral patterns, and threat intelligence to make these determinations.

When will Brand Impersonation Protection be available for my organization?

General availability launches in February 2026 for desktop and Mac versions of Microsoft Teams first. The feature is currently in preview (as of January 2026). Mobile app support will follow in subsequent phases, though Microsoft hasn't provided an exact timeline. The rollout will be phased geographically and by organization, so some regions and organizations may receive the feature before others during the initial deployment window.

What should I do if I receive a suspicious call with a brand impersonation warning?

If you see a Brand Impersonation Protection warning on an incoming call, you have three immediate options: accept the call, block the caller, or end the call. The safest approach is to end or block calls from unknown external numbers claiming to be from trusted organizations. If you need to verify the caller's identity, end the call and independently contact the organization they claim to represent by using a phone number you find yourself, not a number the caller provides.

Can Brand Impersonation Protection prevent all types of spoofing attacks?

No. The feature specifically protects against first-contact calls attempting to impersonate known-target organizations using caller ID manipulation. It cannot reliably detect impersonation of obscure or niche organizations, cannot prevent attacks using compromised legitimate accounts, and cannot stop determined attackers who use other social engineering tactics. It's designed to stop the most common and basic spoofing attempts, but it should be combined with other security measures like multi-factor authentication, security awareness training, and network monitoring for comprehensive fraud prevention.

What's the difference between Brand Impersonation Protection and other Microsoft Teams security features?

Microsoft has deployed a coordinated defense strategy with three complementary features. Malicious URL Protection (available since September 2025) scans links in chats and channels to prevent phishing. Weaponizable File Type Protection (also September 2025) scans executables and dangerous file types. Brand Impersonation Protection (launching February 2026) focuses specifically on detecting spoofed inbound calls. Together, these features protect against attacks at different stages of the threat lifecycle—links that direct to malicious sites, files used to distribute malware, and calls used for social engineering.

How does this feature impact call recording and compliance requirements?

Brand Impersonation Protection doesn't change call recording functionality or compliance requirements. Organizations in regulated industries should ensure their Teams call handling, blocking, and reporting policies align with existing compliance requirements for call retention, auditability, and customer notification. The feature simply adds detection and warning capability without affecting the underlying call infrastructure that compliance systems depend on.

What happens if I accidentally block a legitimate external caller due to the warning?

If you block a legitimate caller due to a false positive warning, they'll typically reach your voicemail or receive a busy signal (depending on your Teams configuration). They can then leave a message explaining who they are, or they can reach your organization through other channels. To avoid losing touch with important external contacts, ask your Teams administrator whether frequently used external contacts can be allow-listed in your tenant, and tell key business partners which alternative phone lines to use if their calls are repeatedly flagged.

Will Brand Impersonation Protection stop all social engineering attempts against my organization?

No. Brand Impersonation Protection stops one specific attack pattern—external callers impersonating trusted organizations during first-contact calls using spoofed caller information. It doesn't protect against sophisticated attacks that compromise legitimate accounts, social engineering that doesn't rely on caller ID spoofing, internal threats, or attackers who establish relationships before attempting manipulation. Comprehensive fraud prevention requires combining multiple security controls including authentication, access policies, monitoring, and user training.

Conclusion: A Meaningful Step Forward, Not a Complete Solution

Brand Impersonation Protection represents a thoughtful response to a real, expensive problem. For years, enterprise security has heavily emphasized technical defenses—firewalls, encryption, intrusion detection. Those remain critical. But increasingly, the weakest link in the security chain is human judgment. Attackers learned this lesson before enterprises did, which is why social engineering attacks have proliferated.

Microsoft's approach—detecting impersonation patterns and alerting users at the moment when vulnerability is highest—addresses this gap directly. It acknowledges that perfect technical defense is impossible, but defensive friction can significantly reduce successful attacks.

What makes this feature smart isn't its technical sophistication. It's its strategic positioning. By focusing on first-contact calls and commonly impersonated organizations, Microsoft targets the attack surface where spoofing is most effective. By enabling it by default with no administrator configuration required, the company ensures that protection exists even in organizations without sophisticated security teams. By combining it with URL protection and file protection, Microsoft builds a coordinated defense strategy.

But organizations shouldn't interpret this feature as permission to ignore other security fundamentals. Brand Impersonation Protection handles one attack vector. Multi-factor authentication handles credential compromise. Security awareness training handles targeted manipulation. Network segmentation limits damage when attacks succeed. Regular audits catch exposed vulnerabilities. These measures work together.

When February 2026 arrives and your Teams users start seeing impersonation warnings, treat it as an opportunity, not just as a feature deployment. Educate your team about what these warnings mean. Establish clear policies for responding. Monitor for patterns in attempted fraud. Use the feature as a foundation for stronger security culture.

The real value isn't in the technology itself. It's in the signal this sends: that fraudsters targeting your organization through your most critical business tool will face friction they didn't face before. That hesitation, that moment of forced skepticism, changes the attack economics. It's not perfect. But perfect isn't the goal. Resilient is.

For enterprise security leaders looking to strengthen defenses against social engineering, Brand Impersonation Protection is definitely worth attention. It's a well-designed tool addressing a genuine problem. Just remember: it's one layer in a defense strategy, not a replacement for the others.

Key Takeaways

- Brand Impersonation Protection launches in February 2026 for Microsoft Teams desktop and Mac, detecting first-contact external callers attempting to impersonate commonly spoofed organizations like banks and major tech companies

- The feature works by analyzing caller information and displaying a warning badge that forces users to consciously decide whether to accept suspicious calls, breaking the automatic trust exploitation that makes social engineering effective

- This complements Microsoft's broader security strategy including Malicious URL Protection and Weaponizable File Type Protection, creating coordinated defenses against phishing links, malicious files, and spoofed calls

- First-contact calls are specifically targeted because the recipient has no established relationship against which to judge the caller's authenticity, which is exactly the gap scammers exploit

- Organizations should prepare for the February 2026 rollout by training teams about warnings, establishing clear policies for responding to spoofed calls, testing in preview tenants, and implementing complementary security controls like multi-factor authentication
