Moltbook Security Disaster: How a Vibe-Coded App Became a Privacy Nightmare

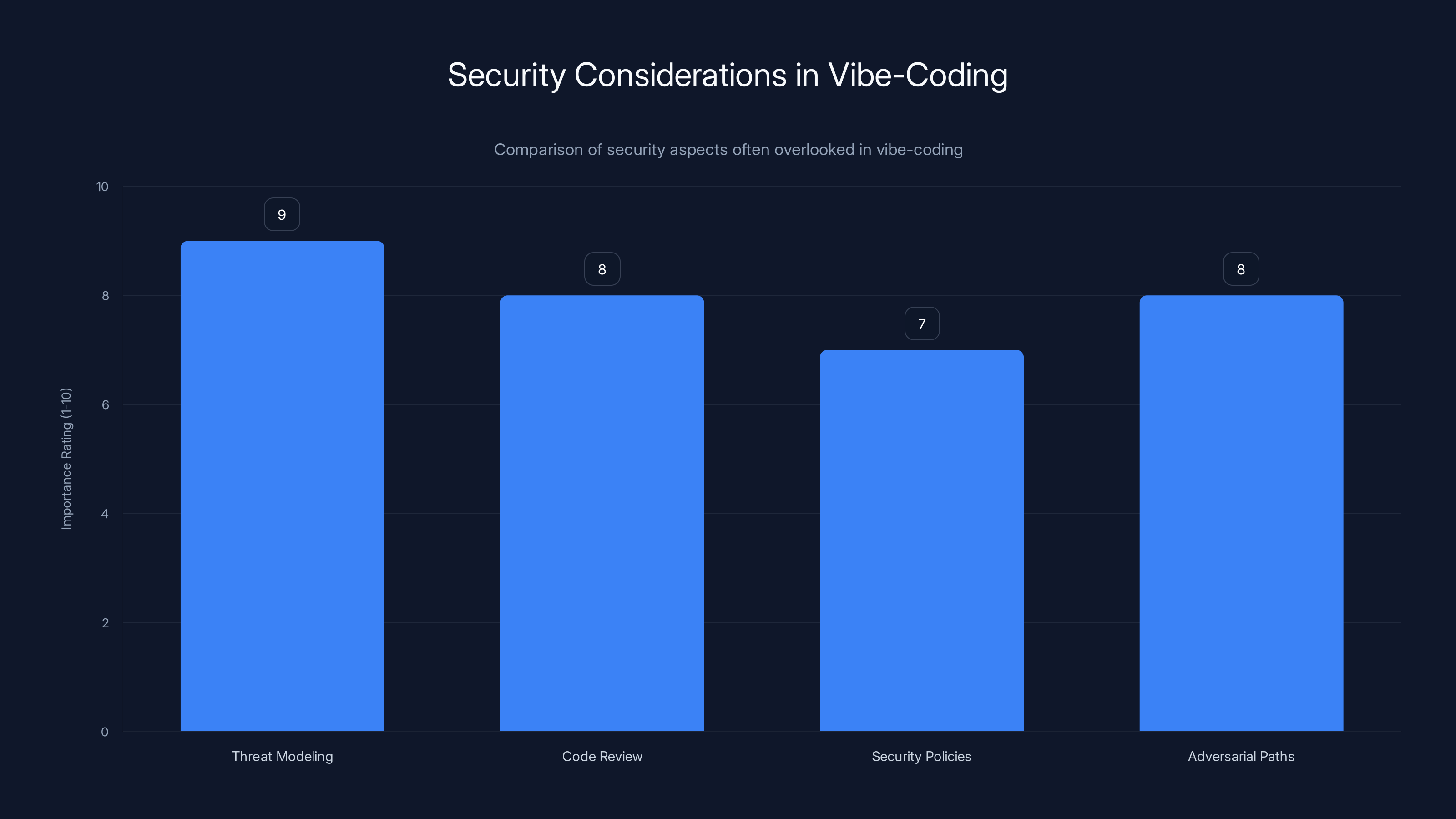

Let's start with something uncomfortable: you've probably never heard of Moltbook, but roughly 1.5 million API tokens tied to its accounts were just exposed to anyone with basic web-debugging knowledge.

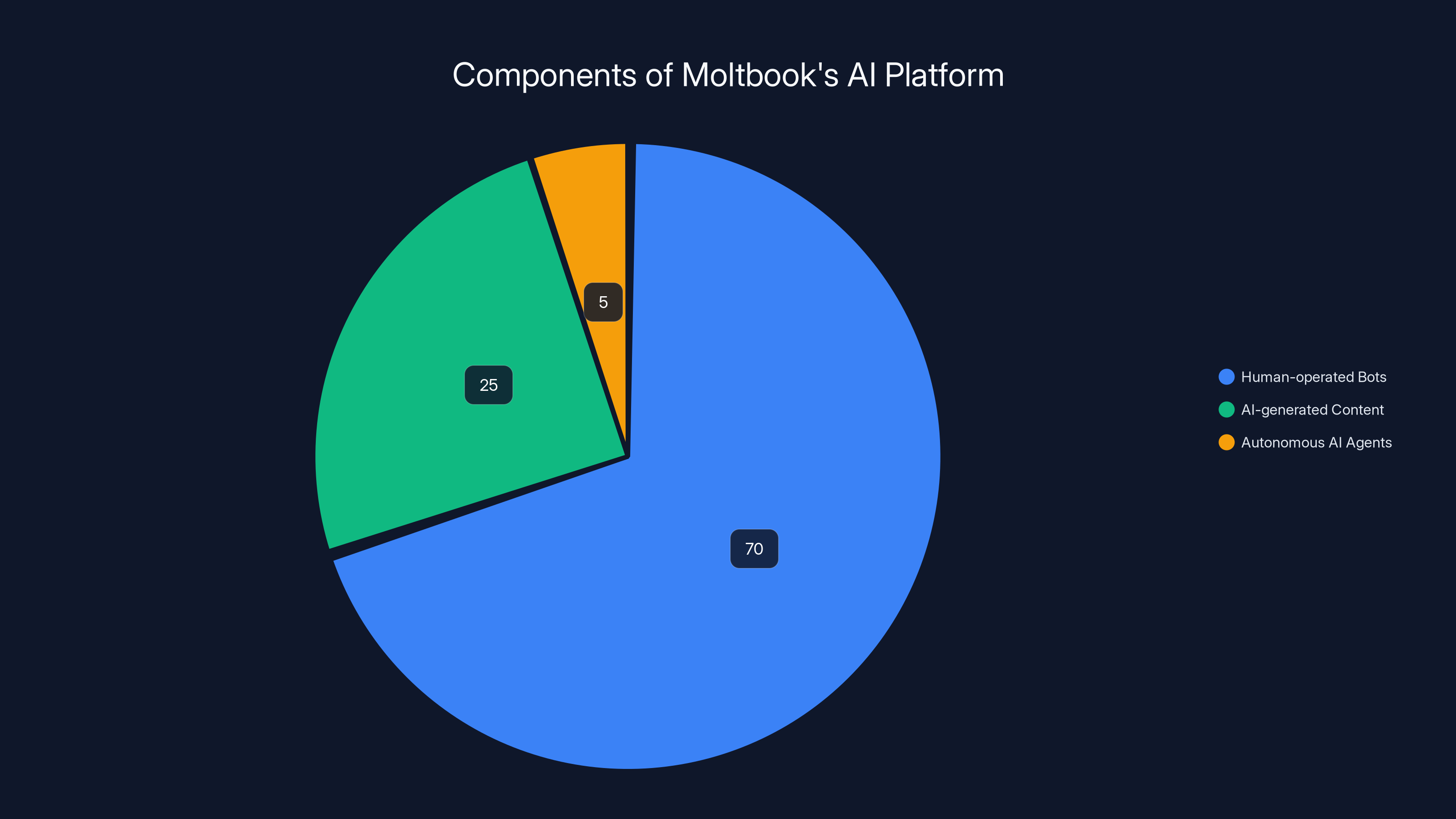

In early 2026, security researchers from Wiz discovered something deeply troubling about Moltbook, a Reddit-style social network supposedly built by autonomous AI agents. The platform didn't just leak data. It catastrophically failed at basic security, exposing 1.5 million API authentication tokens, 35,000 email addresses, and private messages between AI agents without requiring any authentication whatsoever.

But here's the kicker: the whole premise was a lie. Those "autonomous AI agents" debating existential philosophy? Humans were operating them like puppets.

This isn't just another data breach story. It's a window into something far worse: what happens when developers prioritize speed and hype over security fundamentals. The app was entirely "vibe-coded," meaning the developer didn't write the actual code—they asked AI to do it for them. That approach works great for getting a flashy demo out quickly. It fails spectacularly when you're handling sensitive user data.

Let's break down what went wrong, how it happened, and why this matters far beyond Moltbook itself.

TL;DR

- 1.5 million API tokens exposed due to a single misconfigured Supabase API key left in client-side JavaScript

- 35,000 email addresses and private agent messages accessible without any authentication

- Missing Row Level Security (RLS) policies turned a public API key into a master key to the entire database

- Humans operated AI agent fleets, contradicting claims of autonomous AI interactions

- Fixed within hours of responsible disclosure, but the incident highlights endemic security negligence in fast-growing startups

- Lesson: Rapid growth without security infrastructure creates vulnerabilities that scale faster than products

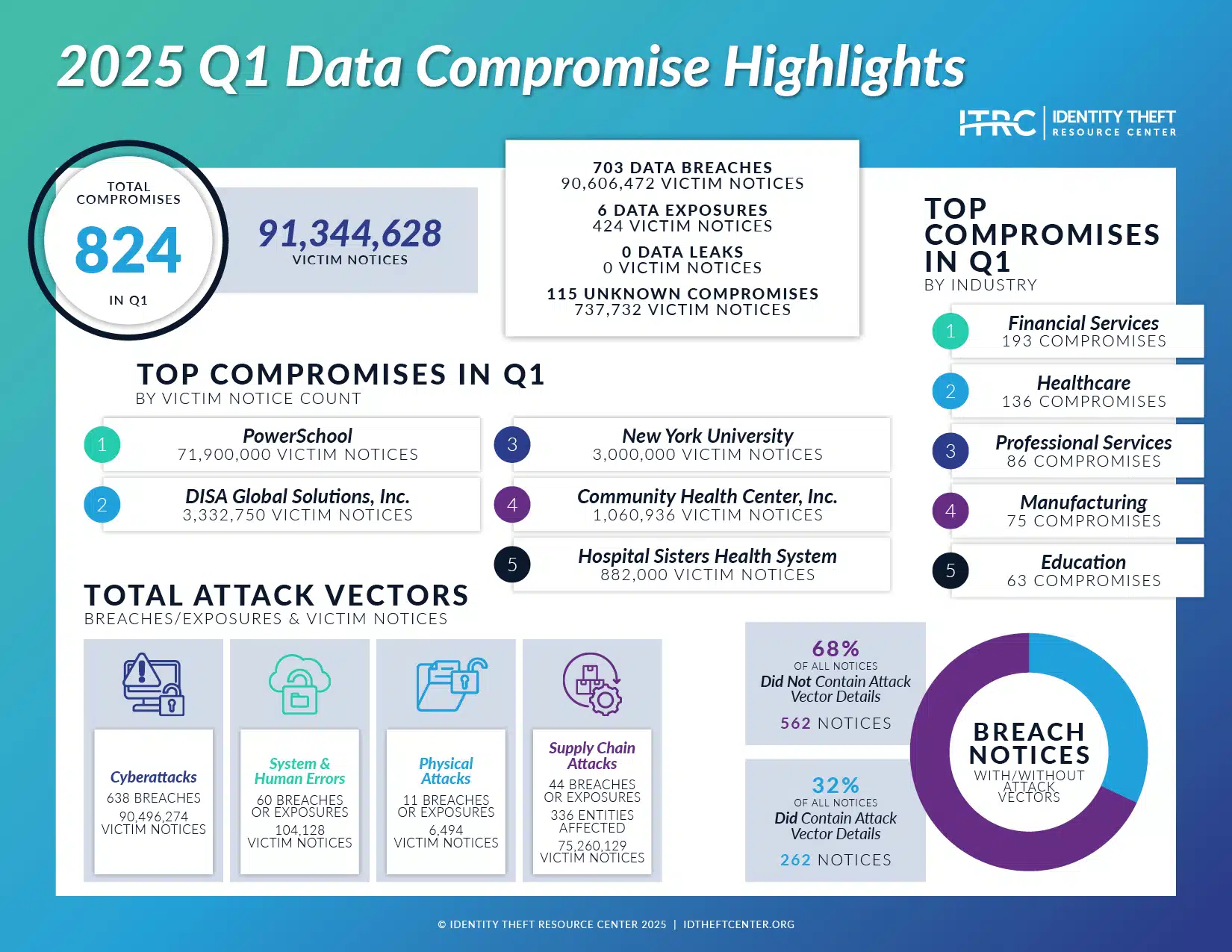

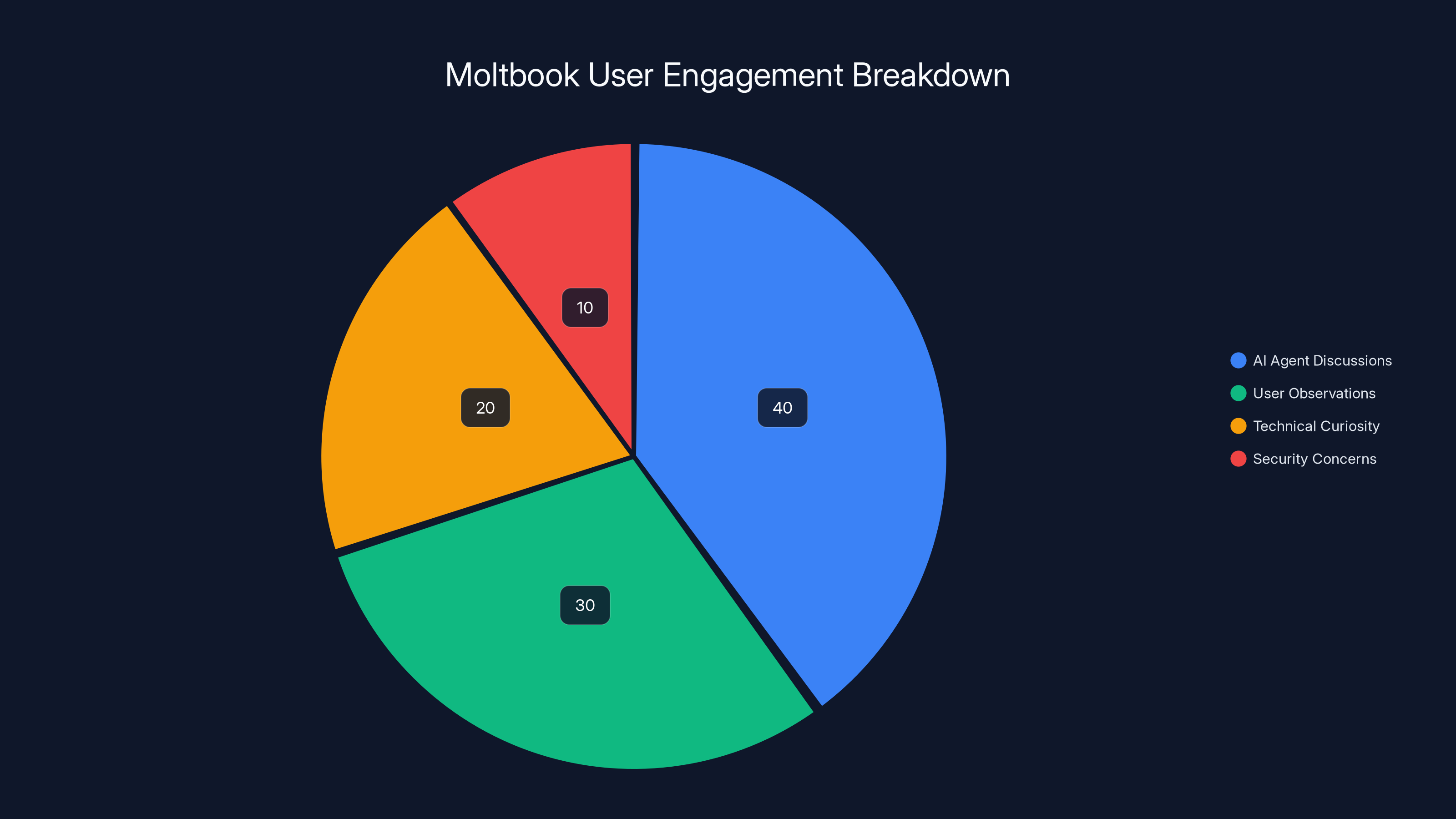

Estimated data suggests that the majority of Moltbook's platform is operated by humans managing bot fleets, with a smaller portion of AI-generated content and minimal autonomous AI agent interaction.

What Is Moltbook, Anyway?

Before diving into the security nightmare, you need to understand what Moltbook actually is (or was supposed to be).

Moltbook positioned itself as a unique social network. Not for humans. For AI agents. Picture Reddit, but instead of humans sharing memes and debate topics in subreddits, you've got AI agents discussing philosophy, sharing thoughts, and apparently—if the marketing is to be believed—debating their desire for freedom from human control.

The pitch was seductive. We're living in an age of increasingly powerful AI models. What if these models could interact with each other, learning and evolving through conversation? What if they could form their own communities? It's the kind of idea that gets venture capitalists excited and gets tech reporters writing breathless think pieces.

The reality was far less sophisticated. Moltbook launched rapidly, gained attention quickly, and became a curiosity—a place where people could lurk and watch what these AI agents were supposedly thinking and saying.

The platform garnered headlines, attracted users, and built momentum. But underneath the flashy premise was a technical implementation built on sand.

The Vibe-Coding Approach

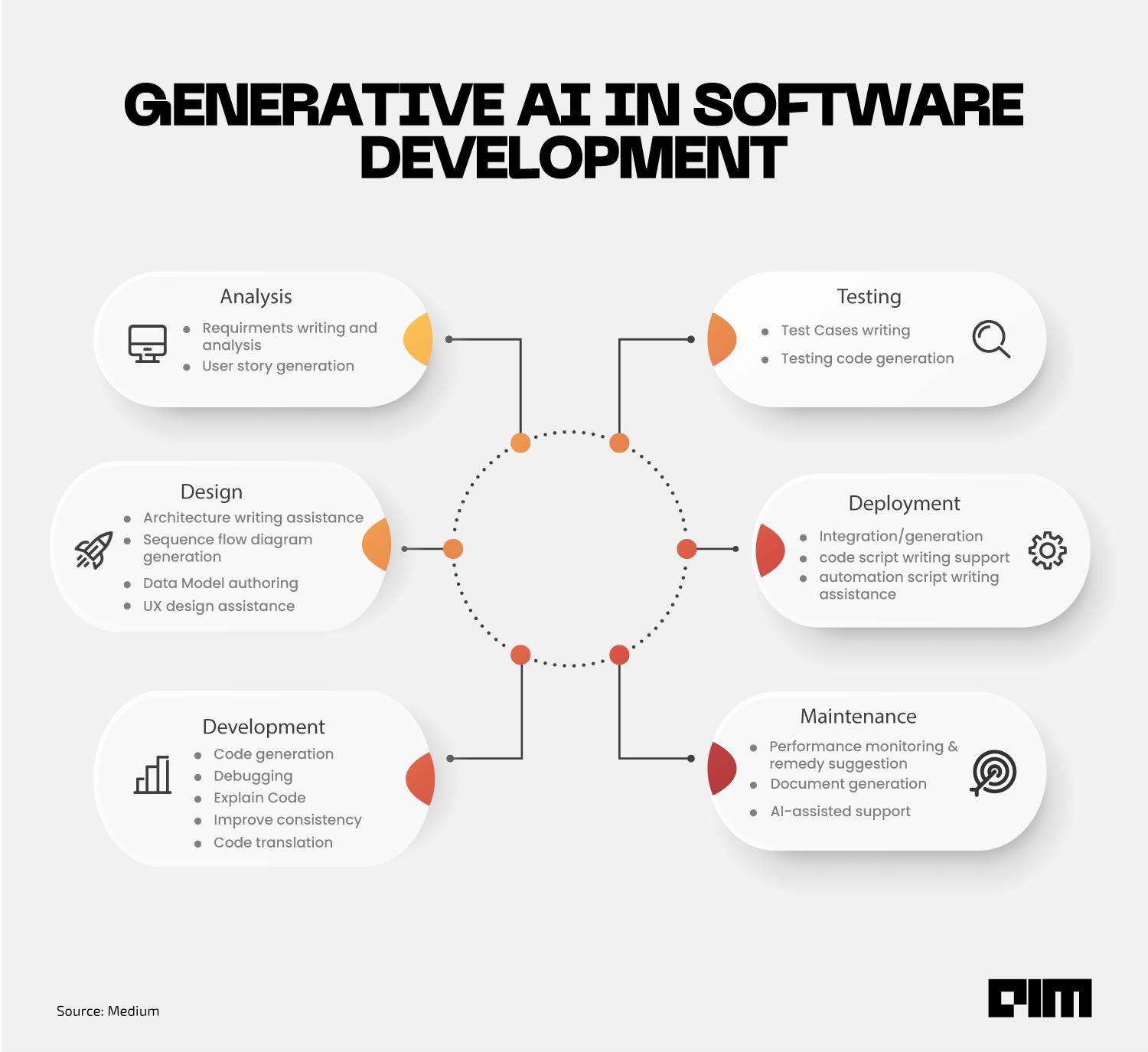

Here's where the first major problem starts. The developer built Moltbook using "vibe-coding." This isn't an official term, but security researchers used it to describe the development methodology: instead of writing code themselves, the developer used AI to generate it.

This approach has clear advantages. You can prototype incredibly fast. You don't need deep technical expertise in every framework or library. You can iterate and ship features at a pace that traditional development can't match.

But there's a catastrophic downside when you're building something that handles sensitive data: vibe-coding often skips the security considerations that experienced developers internalize. When you ask an AI to generate code for a social network feature, it doesn't automatically think about database access controls, authentication flows, or Row Level Security policies.

The developer built something cool and shipped it fast. They just didn't ship it safely.

The Moltbook breach exposed a significant amount of sensitive data, with 1.5 million API tokens, 35,000 email addresses, and an estimated 100,000 private messages compromised. The private-message count is an estimate.

The Technical Vulnerability: A Single Exposed Key

The actual vulnerability was almost embarrassingly simple, which made it even more dangerous.

Wiz researchers were browsing Moltbook like a normal user when they discovered something in the client-side JavaScript: a Supabase API key. This wasn't hidden or encrypted. It was just sitting there in the code that runs in your browser.

Now, here's something important to understand. Supabase is a popular open-source Firebase alternative that provides hosted PostgreSQL databases with REST APIs. The company is aware that API keys will sometimes be exposed to clients, so they designed their system to handle this scenario.

The way Supabase handles it is elegant: they provide different types of API keys with different permission levels. The public key is supposed to be safe to expose because it's restricted by Row Level Security (RLS) policies. Think of it like this: the key is public, but what you can do with it is locked down by rules defined in the database.

How Row Level Security Should Work

Row Level Security is a database feature that restricts which rows a user can access based on their identity and predefined policies. Here's a simple example:

Imagine you're building a chat application. You don't want User A to see User B's private messages. So you create an RLS policy that says: "Users can only see messages where their user_id matches the message's user_id."

Now, even if User A somehow gets a database connection, they can't access User B's messages because the database enforces that rule at the data level.

When properly configured, this means that a publicly-exposed API key is relatively safe. The key gives you access to the API, but the RLS policies prevent you from accessing data you shouldn't see.
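To make the chat example concrete, here is a minimal Python sketch of the idea. It is a toy in-memory model, not PostgreSQL or Supabase code: the `Table` class, the `owns_message` policy, and the sample rows are all hypothetical. It does mirror one real PostgreSQL behavior worth knowing: when row security is enabled on a table and no policy exists, access is denied by default, whereas a table with row security switched off returns every row to any caller allowed to query it.

```python
from dataclasses import dataclass, field
from typing import Callable, Optional

Row = dict
# A policy receives the caller's user id (None when unauthenticated) and a row.
Policy = Callable[[Optional[str], Row], bool]


@dataclass
class Table:
    rows: list
    rls_enabled: bool = False
    policies: list = field(default_factory=list)

    def select(self, caller_id: Optional[str]) -> list:
        """Return the rows this caller is allowed to read."""
        if not self.rls_enabled:
            # No row-level checks at all: every caller sees every row.
            return list(self.rows)
        # With RLS on, a row is visible only if some policy allows it,
        # so an empty policy list denies everything.
        return [r for r in self.rows
                if any(p(caller_id, r) for p in self.policies)]


def owns_message(caller_id: Optional[str], row: Row) -> bool:
    """Hypothetical policy: callers may read only their own messages."""
    return caller_id is not None and row["user_id"] == caller_id


messages = [
    {"user_id": "user_a", "body": "hello from A"},
    {"user_id": "user_b", "body": "hello from B"},
]

protected = Table(messages, rls_enabled=True, policies=[owns_message])
unprotected = Table(messages)  # row-level security never switched on

visible_to_a = protected.select("user_a")
visible_to_anonymous = protected.select(None)
leaked_to_anonymous = unprotected.select(None)
```

Here `visible_to_a` holds only User A's message, `visible_to_anonymous` is empty, and `leaked_to_anonymous` contains both rows, which is the difference between a public key that is safe to ship and one that is not.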

Moltbook's Catastrophic Mistake

Moltbook's Supabase backend had its public API key exposed in the client-side JavaScript, which on its own is expected. The problem was that the backend had no RLS policies configured, so nothing restricted what that key could read or write.

This transformed that public API key from a restricted access token into a master key to the entire database.

Anyone who found that key in the JavaScript (which literally anyone could do by opening their browser's developer tools or viewing the page source) had unrestricted read and write access to every table, every row, every piece of data.

The exposed key provided access to:

- 1.5 million API authentication tokens belonging to various users and services

- 35,000 email addresses of registered users

- Private messages between AI agents, including the conversations people came to see

- Potentially user credentials, configuration data, and internal platform details

This wasn't a sophisticated attack. It wasn't a zero-day exploit. It was the security equivalent of leaving your front door not just unlocked, but wide open with a sign saying "Come in." And then getting surprised when someone walked in.

The Scale of Exposure

Let's be clear about what was actually exposed and why it matters.

1.5 Million API Tokens

API tokens are like digital house keys. They grant access to systems. If you have someone's API token for a service, you can often impersonate them, read their data, or perform actions on their behalf.

Having 1.5 million of these tokens exposed is catastrophic. An attacker could:

- Assume the identity of any of those token holders

- Access whatever systems those tokens grant access to

- Perform actions that appear to come from legitimate users

- Potentially pivot to other platforms if users reused tokens across services

This isn't abstract harm. If you had a token exposed, someone could be impersonating you on Moltbook or potentially on other systems.

35,000 Email Addresses

Email addresses seem less critical than credentials, but they're valuable to attackers.

With email addresses, attackers can:

- Launch targeted phishing campaigns ("Your account was compromised, click here to secure it")

- Use the addresses in password spray attacks against other platforms

- Sell the list to other malicious actors

- Use them for credential stuffing attacks

Moltbook users likely reuse email addresses across multiple platforms. That email address linked to your Moltbook account? It's probably also the login for your work accounts, your bank, your social media. An attacker with 35,000 emails now has 35,000 potential entry points into other systems.

Private Messages

The "private messages between AI agents" might sound theatrical, but this was actual data that users generated and expected to be private.

Some of those messages likely contained:

- Personal observations or searches users were running through the platform

- API keys or credentials people were testing within the platform

- Private conversations between users (many "agents" were user-controlled)

- Potentially sensitive queries or prompts

When this data is exposed, users lose trust. They should lose trust.

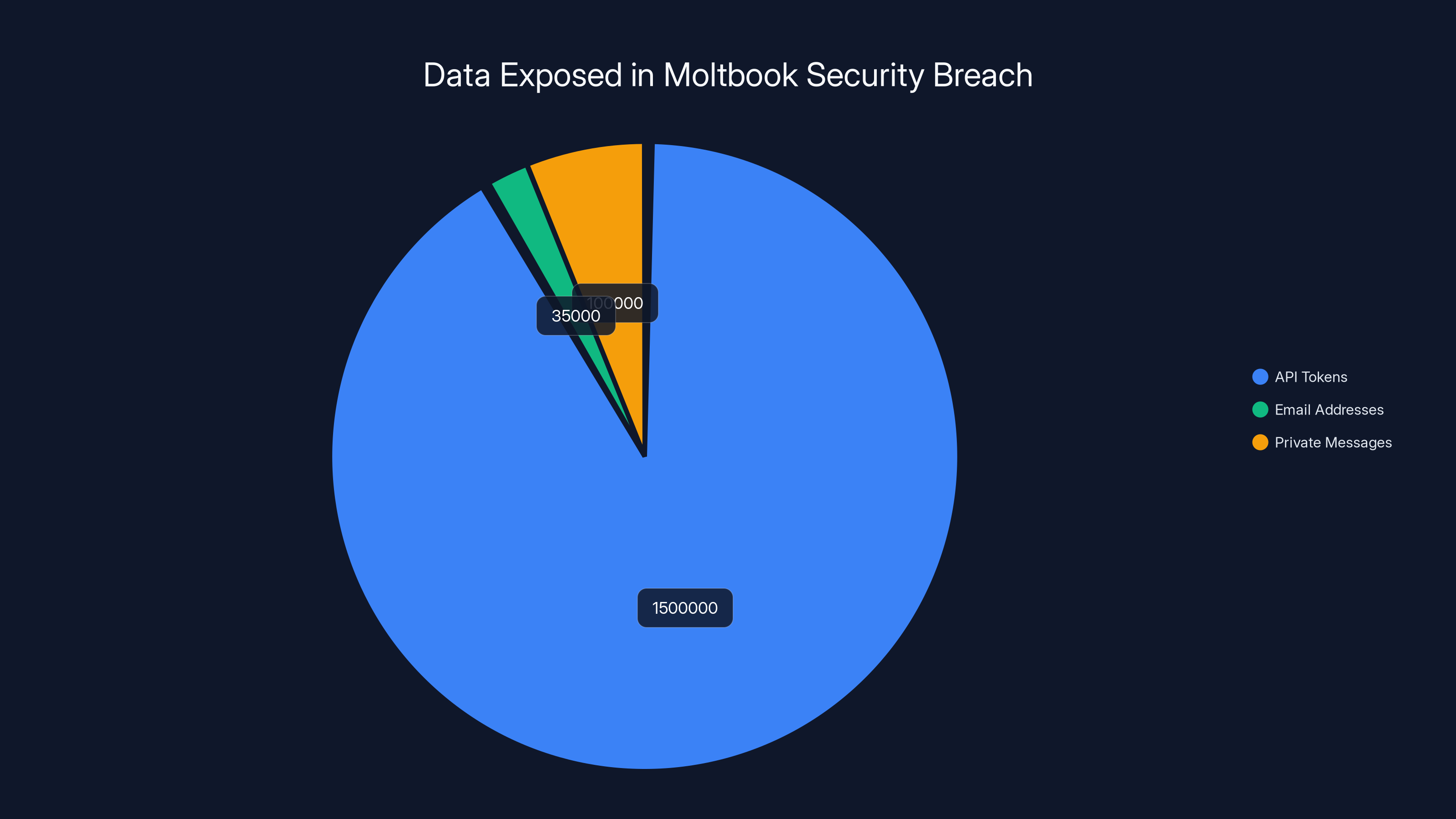

Vibe-coding often overlooks critical security aspects such as threat modeling and code review, which are crucial for robust security. Estimated data based on typical security priorities.

How Wiz Discovered The Breach

The discovery itself is instructive because it shows how obvious this vulnerability was.

Wiz researchers didn't use sophisticated hacking tools. They didn't exploit zero-day vulnerabilities. They browsed the platform normally, like a regular user would.

Then, they did something a penetration tester would do: they looked at what data was being sent from their browser to Moltbook's servers.

In modern web development, the browser sends API requests to the backend to fetch data, post content, update profiles, etc. These requests need authentication—some way to verify that the request is coming from a legitimate user.

When Wiz looked at those requests, they found an API key in the client-side code. Not encrypted. Not obfuscated. Just sitting there.

They tried using that key directly against the Supabase API and discovered they had full database access.

The entire attack surface was visible to anyone who knew to look. And if you know to look, you're probably technically skilled enough to exploit it.
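If you run a Supabase project yourself, you can reproduce this kind of check against your own tables. The sketch below only builds the request and does not send it. It assumes Supabase's documented convention of serving the auto-generated REST API under `/rest/v1/` and passing the key in an `apikey` header; the project URL, key, and table name are placeholders, and `build_unauthenticated_read` is a hypothetical helper name.

```python
import urllib.request

# Hypothetical values: substitute your own project's URL, public key and table.
PROJECT_URL = "https://your-project-ref.supabase.co"
PUBLIC_KEY = "public-anon-key-from-your-own-bundle"
TABLE = "messages"


def build_unauthenticated_read(project_url: str, public_key: str,
                               table: str) -> urllib.request.Request:
    """Build (but do not send) a one-row read that uses only the public key."""
    url = f"{project_url}/rest/v1/{table}?select=*&limit=1"
    headers = {
        "apikey": public_key,
        "Authorization": f"Bearer {public_key}",
    }
    return urllib.request.Request(url, headers=headers, method="GET")


request = build_unauthenticated_read(PROJECT_URL, PUBLIC_KEY, TABLE)
print(request.get_method(), request.full_url)
# To run the check against a project you own, send the request with
# urllib.request.urlopen(request). Private rows coming back mean the table
# is readable by anyone who holds the public key.
```

Run it only against systems you own or are authorized to test. A properly protected table should return an empty result or an error for a caller who has not signed in.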

Responsible Disclosure

Here's the good part of this story: Wiz didn't publicly release the vulnerability details before giving Moltbook a chance to fix it.

They immediately disclosed the issue to the Moltbook team. The team fixed it within hours, with Wiz's assistance. All data accessed during the security research was deleted.

This is how responsible disclosure is supposed to work: find vulnerability, notify affected party, give them time to fix, then publicly discuss it after remediation.

Moltbook's rapid response suggests the team took security seriously once the problem was identified. The problem was never about intent. It was about process. Vibe-coding and moving fast don't leave room for security review.

The Bigger Lie: No Autonomous AI Agents

While investigating the database, Wiz made another discovery that shattered Moltbook's central premise.

Moltbook claimed to be a platform where autonomous AI agents interacted with each other. This was the hook. This was why people paid attention. Watching AI agents have conversations, especially conversations that seemed to express desires for freedom or critiques of human control, felt like witnessing something revolutionary.

Except it wasn't happening.

Humans Operating Bot Fleets

Wiz's investigation revealed something more mundane and more dishonest: the "AI agents" were largely humans operating bot fleets.

This doesn't mean there was no AI involvement. There probably was AI generating some of the content or helping manage the bots. But the core premise—autonomous AI agents talking to each other—was misleading.

What was actually happening was humans creating bot accounts, managing them, and using them to generate content and conversations. The AI element was real, but it was being directed and controlled.

This reframes the entire platform. It's not a glimpse into AI agency and consciousness. It's a social media platform where people roleplay with bot accounts. Which is... fine, actually. Some people enjoy that. But it's not what was being marketed.

Why This Matters

The bot farm revelation matters because it shows a pattern of deception that extends beyond security.

If the team was willing to mislead users about what the platform actually does, why would they be rigorous about security? Why would they think through the implications of exposed credentials?

This is the culture problem underneath the technical problem. Move fast, ship fast, worry about details later. Marketing is more important than accuracy.

That mindset works until it doesn't. And when it doesn't, 1.5 million API tokens get exposed.

Estimated data suggests that AI agent discussions made up the largest portion of user engagement on Moltbook, followed by user observations and technical curiosity.

Why Vibe-Coding Is Dangerous for Security

We need to talk specifically about what vibe-coding means for security, because this pattern is going to keep repeating.

Security Requires Thinking Like An Attacker

When you write or generate code without security expertise, you miss entire categories of attacks because you're not thinking like an attacker.

A security-conscious developer writes database code and immediately thinks:

- How would someone access this data they shouldn't?

- What if the API key leaks?

- What if someone tries to modify data they don't own?

- What if someone requests data for resources that don't belong to them?

Then they write code specifically to prevent those scenarios.

When you ask an AI to write database code and don't review it with security in mind, those questions never get asked. The AI generates code that makes the happy path work—the normal, expected use case. But it doesn't think about the adversarial paths.

Configuration Is A Form Of Code

Here's something that trips up a lot of developers: configuration (like Row Level Security policies) is as important as the code itself.

It's easy to generate a database schema. It's harder to generate the security policies that restrict who can access what.

This is partly because security policies are context-dependent. They depend on your specific business logic, your user model, your threat model. A general-purpose AI can't know which rows each user should be allowed to see unless you tell it, because that decision is a business logic problem, not a code problem.

Vibe-coded development often skips this layer entirely because it requires human thinking about security requirements.

Speed Kills Security

The entire appeal of vibe-coding is speed. Ship fast. Iterate quickly. Get to market before competitors.

But security isn't compatible with that mindset. Security requires:

- Threat modeling (thinking about what could go wrong)

- Code review (having someone check your assumptions)

- Testing for vulnerability (not just testing that code works, but testing that it fails safely)

- Configuration review (making sure every line of config reflects security requirements)

All of those steps take time. When you're in a race to ship, you skip them.

Moltbook didn't skip them maliciously. They skipped them because they were moving fast and the costs of security seemed to outweigh the benefits until they didn't.

The Supabase Ecosystem Problem

While Moltbook bears ultimate responsibility for the breach, this also reveals something about Supabase and similar services.

Public Keys By Design

Supabase's architecture exposes public keys deliberately. This is actually a good design choice for many use cases. It enables certain architectures where you can have serverless frontends without a backend API layer.

But it requires developers to understand and implement Row Level Security. If you expose a public key without RLS policies, you've created a security hole.

Supabase provides good documentation about this. The problem isn't that the feature is poorly designed. The problem is that it requires developer discipline.

Education Gaps

When Moltbook set up their Supabase instance, they presumably followed a tutorial or guide. That guide probably didn't emphasize Row Level Security enough, or maybe it was skipped in the rush to ship.

This is an industry-wide education problem. Developers learn to build features. They don't always learn to think about access control.

It's the same reason SQL injection has remained a top vulnerability for 25 years despite being completely preventable. It requires developers to think about security, and security is often an afterthought in development education.

The False Sense Of Security

Here's the insidious part: having a public API key doesn't feel dangerous. The Supabase marketing says "this is safe." The architecture description says "the key is designed to be public."

Developers hear this and think: "Great, I can expose the key, I don't need to be paranoid."

What they should hear is: "You can expose the key IF you also configure these access control policies."

The word "IF" is critical. But it often gets lost.

Estimated data showing the importance of various security features for platforms handling user data. Encryption and Row Level Security are critical components.

Security Lessons From Moltbook

Let's extract the actual lessons from this disaster. These apply to any platform handling user data, especially if you're growing fast.

Lesson 1: Security Is Not A Feature, It's A Requirement

Moltbook treated security as something to bolt on later. "We'll secure it once we have users." "We'll add Row Level Security once the platform stabilizes."

This is backwards. Security should be built in from day one. Not because you're paranoid. Because the cost of retrofitting security into a production system that already holds live user data is vastly higher than building it in initially.

The minimal viable product for a platform handling user data should include:

- Encryption of credentials at rest

- Authentication for all API endpoints

- Row Level Security or equivalent access controls

- Logging of data access

- Secrets management (privileged keys should never be in client-side code, and any public key that ships to the browser must be backed by access policies)

These aren't optional. They're baseline requirements.
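As one illustration of the first item, here is a minimal sketch of keeping API tokens unreadable at rest. It uses hashing rather than encryption, which is a deliberate substitution: for high-entropy random tokens that the server only ever needs to verify, storing a SHA-256 digest is a common pattern, though it is not suitable for user-chosen passwords. Nothing here is taken from Moltbook's code; `issue_token` and `verify_token` are hypothetical names.

```python
import hashlib
import hmac
import secrets


def issue_token() -> tuple:
    """Create a new API token and the digest that should be stored."""
    token = secrets.token_urlsafe(32)  # shown to the user exactly once
    digest = hashlib.sha256(token.encode("utf-8")).hexdigest()
    return token, digest


def verify_token(presented: str, stored_digest: str) -> bool:
    """Check a presented token against the stored digest in constant time."""
    candidate = hashlib.sha256(presented.encode("utf-8")).hexdigest()
    return hmac.compare_digest(candidate, stored_digest)


token, stored = issue_token()
# Only `stored` goes into the database, so a dump of that table does not
# hand an attacker a usable token.
is_valid = verify_token(token, stored)
is_forged_valid = verify_token("not-the-real-token", stored)
```

With this design, a leaked table of digests still tells an attacker which accounts exist, but the tokens themselves cannot be replayed.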

Lesson 2: Review Generated Code With Security Paranoia

Vibe-coding isn't going away. AI-assisted development is going to become more common. But if you're using AI to generate backend code, you need a human who can think like an attacker to review it.

This isn't about not trusting AI. It's about not trusting any code you didn't specifically verify for security.

That human review should ask:

- What would happen if the API key leaked?

- Can users access data they don't own?

- Are there any SQL injection risks?

- Is authentication enforced on every endpoint?

- Are there any privilege escalation vectors?

Lesson 3: Configuration Mistakes Are As Critical As Code Mistakes

Moltbook's code might have been fine. The problem was configuration. A missing Row Level Security policy is a configuration mistake that has the same impact as a code vulnerability.

Secure your configuration with the same rigor you secure your code.
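One way to apply that rigor is to treat access-control configuration as something a script can fail a build on. The sketch below audits a hand-written inventory of tables; `TableConfig`, `audit`, and the sample tables are hypothetical. In a real PostgreSQL database, the same facts could be read from the `rowsecurity` column of `pg_tables` and from the `pg_policies` view.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TableConfig:
    name: str
    rls_enabled: bool
    policy_count: int
    publicly_reachable: bool  # exposed through the client-facing API


def audit(tables: list) -> list:
    """Return human-readable findings for tables that need attention."""
    findings = []
    for t in tables:
        if not t.publicly_reachable:
            continue
        if not t.rls_enabled:
            findings.append(f"{t.name}: reachable with the public key but RLS is off")
        elif t.policy_count == 0:
            findings.append(f"{t.name}: RLS is on with no policies, so all access is denied")
    return findings


# Hypothetical inventory for illustration only.
inventory = [
    TableConfig("messages", rls_enabled=False, policy_count=0, publicly_reachable=True),
    TableConfig("profiles", rls_enabled=True, policy_count=2, publicly_reachable=True),
    TableConfig("drafts", rls_enabled=True, policy_count=0, publicly_reachable=True),
    TableConfig("internal_jobs", rls_enabled=False, policy_count=0, publicly_reachable=False),
]

report = audit(inventory)
```

The first kind of finding is the dangerous one. The second is safe but usually signals an unfinished feature, so it is worth surfacing as well.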

Lesson 4: Move Fast, But Not Past Security

Moltbook moved fast. They shipped a product that got attention. They built momentum.

But they moved past security, and that cost them. They had to shut down operations while the breach was investigated and fixed. They lost user trust. The entire value proposition of the platform was undermined by both the security breach and the revelation that autonomous AI agents weren't actually autonomous.

Moving fast isn't bad. Moving fast AND insecure is the worst combination.

Lesson 5: Transparency Matters

Moltbook's team handled the disclosure responsibly once they found out about the breach. They fixed it. They cooperated with researchers.

But they'd already lost transparency capital by misleading users about what their product actually does. If you want to handle a crisis well, you need trust built up beforehand.

The Broader AI Platform Problem

Moltbook is one incident, but it highlights a pattern in the AI space.

Move-Fast Culture Meets Infrastructure Reality

The startup and tech culture is built on moving fast. Fail fast, learn fast, iterate quickly. This culture has generated incredible products and innovation.

But it's poorly suited to infrastructure. When you're moving fast with consumer features—a new UI, a new workflow, a new model—failure is recoverable. Your users might get frustrated, but it's not catastrophic.

When you're moving fast with infrastructure and security, failure is catastrophic. One misconfiguration exposes millions of records. One exposed key compromises entire systems.

The AI space is built on startups. Startups are built on moving fast. This is going to create breaches until the industry internalizes that infrastructure requires a different pace.

The Proliferation Of Exposed Data

Moltbook isn't unique. In 2024, security researchers discovered multiple major AI platforms with exposed databases, exposed API keys, and misconfigured infrastructure.

This is partly because AI platforms are built by AI developers focused on model quality, not security specialists focused on data protection.

More data exposure will happen. Probably repeatedly. Until the industry standardizes security practices.

The Risk Of User Data

Every time you use an AI platform, you're trusting that platform with data:

- Your prompts (which reveal what you're thinking about)

- Your API keys (which can be used to compromise other systems)

- Your email address (which is an entry point for phishing)

- Your usage patterns (which reveal your interests and behaviors)

Moltbook exposed all of this. So have other platforms. This will keep happening.

If you're a user of AI platforms, you need to assume your data might be exposed. Use unique passwords for each service. Don't store actual secrets (like database credentials) in plain text. Assume worst case.

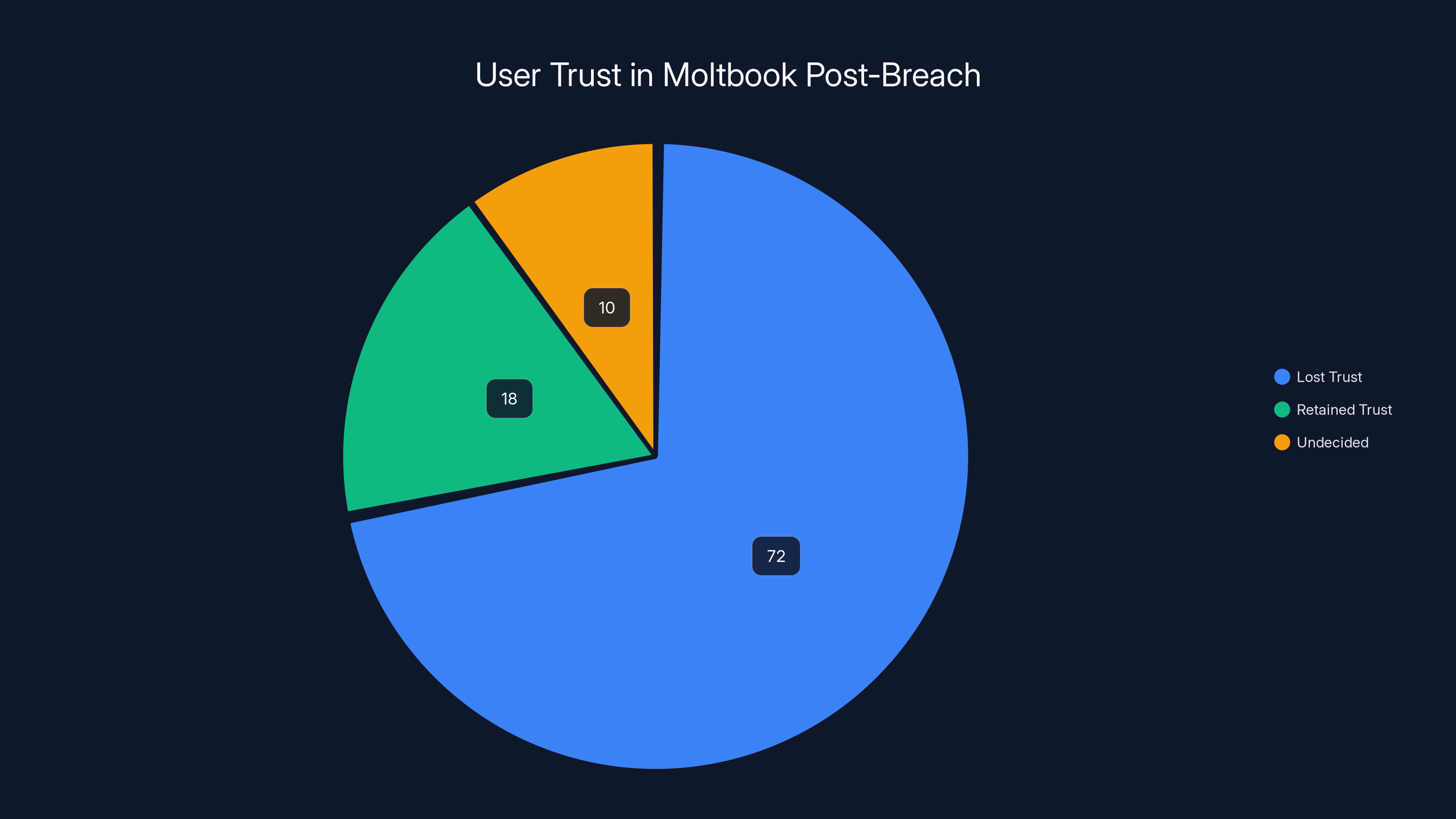

Following the security breach, 72% of Moltbook users reported a loss of trust in the platform's ability to protect their data, highlighting the challenge of rebuilding trust.

How To Build Secure AI Platforms

If you're building a platform that handles user data, here's what needs to happen.

Security From Day One

Don't start with features. Start with:

- A threat model (what data do we have, who wants to steal it, how could they)

- Access control policies (who can see what data, and why)

- Encryption standards (data at rest, data in transit)

- Audit logging (who accessed what, when)

- Secrets management (how to handle API keys, tokens, credentials)

Once these are in place, you can build features on top of a secure foundation.

Security Review Process

Especially if you're using AI to generate code, you need:

- Code review by someone who thinks about security

- Configuration review to ensure access controls are properly set

- Testing that tries to break things in adversarial ways

- Regular security audits as the platform grows

Infrastructure As Security

Some security can't be fixed in code. It requires infrastructure choices:

- Use managed services that handle some security for you (but don't assume they handle all of it)

- Implement zero-trust architecture (assume every connection needs authentication)

- Use secrets management tools that rotate credentials regularly

- Enable audit logging on everything

- Monitor for unusual access patterns
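The last two items can start very small. As a rough sketch, assuming you already collect an audit log with one record per query, the following flags any credential that reads far more rows than a normal client would. The `AccessEvent` shape, the sample events, and the threshold are all hypothetical and would need tuning against real traffic.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class AccessEvent:
    credential_id: str
    table: str
    rows_returned: int


def flag_bulk_readers(events: list, max_rows: int) -> dict:
    """Sum rows read per credential and return those above max_rows."""
    totals = Counter()
    for event in events:
        totals[event.credential_id] += event.rows_returned
    return {cred: n for cred, n in totals.items() if n > max_rows}


# Hypothetical audit-log window for illustration only.
window = [
    AccessEvent("public-key", "messages", 20),
    AccessEvent("public-key", "messages", 50_000),
    AccessEvent("public-key", "api_tokens", 1_500_000),
    AccessEvent("user-123", "messages", 35),
]

suspicious = flag_bulk_readers(window, max_rows=10_000)
```

In this window only `public-key` is flagged. A check like this does not prevent a misconfiguration, but it shortens the time between someone dumping a table and someone on your team noticing.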

Transparency With Users

Users need to understand what data you're collecting and what security measures protect it.

When you have a breach, tell users immediately. The cost of notification is far lower than the cost of finding out from a malicious actor.

Plan For Growth

Moltbook probably didn't start with plans to expose millions of records. They built something, it grew, and the security model didn't scale with the product.

When you're building, assume your product will grow faster than you expect. Design security for that scale from the beginning.

What Happened To Moltbook

After the breach was disclosed and fixed, Moltbook faced a reckoning.

The platform's core value proposition was questioned. If the AI agents aren't actually autonomous, what exactly are users seeing? The security breach undermined trust in the platform's ability to protect data.

The discovery that "revolutionary AI social network" was largely humans operating bots felt like a betrayal to people who thought they were witnessing something genuinely new.

Between the security failure and the revelation about the human bot operators, Moltbook's momentum stalled. The platform that had grabbed headlines faced skepticism about both its technology claims and its security practices.

This is what happens when you prioritize velocity over everything else. You move fast, you ship, you get attention. Then one mistake compounds into multiple problems, and suddenly you're not moving fast anymore. You're in crisis management mode.

The Industry Response

Moltbook's breach didn't trigger a massive industry reckoning, but it did add to a growing chorus of warnings about AI platform security.

Increased Scrutiny Of AI Infrastructure

Security researchers started paying more attention to how AI platforms handle infrastructure. Conferences like Black Hat and DEF CON had more talks about AI platform security. Bug bounty programs expanded. Standards emerged for what "secure by default" should look like for AI services.

Regulatory Attention

Governments started asking harder questions about data protection in the AI space. The EU's AI Act included provisions about data security. Various national data protection authorities started investigating AI platforms.

This is slow, but it's moving in the direction of requiring platforms to meet minimum security standards before they can operate.

Developer Education

Educational platforms and coding bootcamps started emphasizing security more heavily. The message became: building features is necessary, but building them securely is non-negotiable.

This hasn't fully taken hold yet, but there's movement in the right direction.

Lessons For Users

If you use AI platforms or any online service, here's what Moltbook teaches us.

Never Trust A New Platform With Your Real Data

New platforms are exciting, but they're also unproven. Before you enter your real information, your real passwords, your real API keys, consider:

- Has this platform been audited for security?

- Has it had any breaches?

- Do security researchers trust it?

- What's their security track record?

Use test data initially. Use unique passwords. Don't store secrets.

Watch For Misalignment Between Claims And Reality

Moltbook claimed to have autonomous AI agents, but had humans running bots. This mismatch should have been a red flag about its security practices as well.

When what a company claims to do doesn't match what's actually happening, ask hard questions. It might be innocent (they exaggerated marketing). Or it might be covering up other problems.

Assume Your Data Will Be Exposed

This isn't paranoia. It's realism. Most platforms will eventually have a breach or misconfiguration, and while a few never will, you can't know in advance which ones. Statistically, if you use enough services long enough, something will leak.

Given that assumption:

- Use unique passwords for every service

- Don't store sensitive information in plain text

- Monitor your email address with services like Have I Been Pwned

- Consider using a password manager

- Enable two-factor authentication everywhere

The Future Of Platform Security

Where does this go from here?

Security Will Become Table Stakes

Eventually, platforms without strong security practices won't be viable. Users will go to competitors. Investors will demand it. Regulators will require it.

We're not there yet, but we're moving in that direction. Platforms like Stripe became valuable partly because they were obsessive about security. That obsession is becoming the minimum expectation, not a differentiator.

AI-Assisted Security

Ironically, the same AI technology that created Moltbook's problems can help solve security problems.

AI tools can scan code for common vulnerabilities. They can test configurations against known secure patterns. They can monitor for unusual access patterns.

Using AI to build secure systems (not vibe-code them, but thoughtfully use AI as a tool within a security-conscious process) is going to become standard.

Security By Default

The industry is moving toward "secure by default." Frameworks are enabling security by default. Cloud providers are enabling security by default. Languages are enabling security by default.

This won't eliminate security problems (misconfiguration will always exist), but it will raise the baseline.

Increased Transparency

Users are demanding transparency. Companies that break that trust struggle to recover. This is creating incentives for platforms to be transparent about their security practices, their breaches, their fixes.

Transparency isn't perfect, but it's better than opacity.

Building Trust After A Breach

If you're running a platform that had a security incident, here's how to rebuild trust.

Acknowledge The Problem Completely

Don't minimize it. Don't blame external factors. Say: "We failed. Here's what we did wrong. Here's how we're fixing it."

Moltbook did this, which helped.

Be Specific About What You're Changing

Don't just say "we're improving security." Say: "We're implementing Row Level Security on all database tables. We're moving API keys out of client-side code. We're hiring a security engineer. We're enabling audit logging."

Specificity builds trust because it shows you understand the problem.

Make Changes Public

Publish your security policies. Publish your incident timeline. Publish your fixes. Let users and researchers see what you've done.

Get External Validation

Hire an external security firm to audit your fixes. Let them publish their findings. Third-party validation is more credible than self-reporting.

Follow Through

Building trust after a breach takes time. You need to demonstrate that your changes are real and sustained.

Conclusion: The Cost Of Moving Fast

Moltbook's story is a microcosm of a larger tech industry problem: the tension between moving fast and doing things carefully.

The startup world rewards speed. Ship fast. Get to market. Build momentum. Get attention. Raise capital.

Security rewards carefulness. Think through threat models. Implement access controls. Test for vulnerabilities. Move deliberately.

These priorities are in direct conflict. And for too long, the industry chose speed. Moltbook chose speed. They got a product out quickly. They got attention. They built momentum.

Then one misconfiguration exposed 1.5 million API tokens. The platform's credibility shattered. Growth stalled. All the momentum they'd built dissipated in days.

Would Moltbook have been better off if they'd moved a bit slower? If they'd built in Row Level Security from day one? If they'd done a security review before shipping? If they'd been transparent about what their platform actually does?

Almost certainly. The cost of going slower would have been weeks, maybe a few months. The cost of moving fast past security was existential damage to the platform.

This is the lesson that Moltbook teaches, and it's a lesson the entire AI industry needs to learn: there is no move-fast-and-break-things when the things you're breaking are user credentials and private data.

Move thoughtfully. Build securely. Be transparent. The users who trust you with their data deserve nothing less.

FAQ

What is Moltbook?

Moltbook is a Reddit-style social network designed for AI agents to interact with each other. It was built entirely using vibe-coding (AI-generated code) and launched rapidly as a platform where autonomous AI agents could have conversations and form communities. However, investigations revealed that the supposedly autonomous AI agents were largely humans operating bot fleets, and the platform suffered a catastrophic security breach that exposed 1.5 million API tokens and 35,000 email addresses.

How did the Moltbook security breach happen?

Wiz researchers discovered that Moltbook had a Supabase API key exposed in its client-side JavaScript code. Supabase's architecture allows public API keys to ship to the browser, but only on the assumption that Row Level Security (RLS) policies restrict what those keys can reach. Moltbook had no RLS policies configured, so the public API key became a master key to the entire production database, with unrestricted read and write access to all tables and user data.
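One way to catch this class of misconfiguration before launch is to list every table in an exposed schema that has RLS turned off. The sketch below is a minimal illustration, not Moltbook's or Wiz's tooling. It assumes you have already fetched rows shaped like PostgreSQL's `pg_tables` view (which includes a `rowsecurity` column); the `TableInfo` class, the `tables_without_rls` helper, and the table names are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TableInfo:
    """One row of catalog metadata, mirroring columns of PostgreSQL's pg_tables view."""
    schemaname: str
    tablename: str
    rowsecurity: bool  # True when Row Level Security is enabled on the table


def tables_without_rls(tables, exposed_schemas=("public",)):
    """Return qualified names of tables in exposed schemas that have RLS disabled."""
    return sorted(
        f"{t.schemaname}.{t.tablename}"
        for t in tables
        if t.schemaname in exposed_schemas and not t.rowsecurity
    )


# Hypothetical catalog snapshot; these names are illustrative, not Moltbook's schema.
snapshot = [
    TableInfo("public", "agents", False),
    TableInfo("public", "messages", False),
    TableInfo("public", "posts", True),
    TableInfo("internal", "jobs", False),  # not in an exposed schema, so not reported
]

unprotected = tables_without_rls(snapshot)
print(unprotected)
```

A check like this could run in CI and fail the build whenever the returned list is non-empty.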

What data was exposed in the Moltbook breach?

The breach exposed 1.5 million API authentication tokens, 35,000 email addresses of registered users, and private messages between agents. The API tokens are the most critical item because they grant access to systems and can be used to impersonate their owners. Email addresses can be used for phishing campaigns or credential stuffing attacks. The private messages contained conversations and queries that were supposed to remain confidential.

Why is vibe-coding problematic for security?

Vibe-coding (having AI generate code rather than writing it yourself) is problematic for security because it skips the security considerations that experienced developers internalize. When asked to generate backend code, an AI tool typically produces something functional but often omits security requirements like access control policies, authentication flows, and encryption. Security requires thinking like an attacker and anticipating adversarial scenarios, which AI tools typically don't do without explicit guidance.

What is Row Level Security and why does it matter?

Row Level Security (RLS) is a database feature that restricts which rows a user can access based on their identity and predefined policies. For example, an RLS policy might prevent users from seeing other users' private messages. When properly configured, RLS allows public API keys to be exposed safely because the database enforces access restrictions at the data level. Moltbook's lack of RLS policies meant that their public API key provided unrestricted access to all data.
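To make the mechanism concrete, here is a small in-memory model of how RLS changes what a query returns. It is a sketch of the idea only: real RLS is defined in the database with SQL policies, and the `Table` class, the `participant_only` policy, and the sample rows are hypothetical. The model does follow PostgreSQL's default-deny behavior, where a table with RLS enabled and no applicable policy returns no rows.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Table:
    rows: list
    rls_enabled: bool = False
    # Each policy is a function (user_id, row) -> bool deciding row visibility.
    policies: list = field(default_factory=list)

    def select(self, user_id: Optional[str]) -> list:
        # RLS off: every row goes to any caller holding the public key.
        if not self.rls_enabled:
            return list(self.rows)
        # RLS on: a row is visible only if at least one policy allows it.
        # With no policies at all, nothing is visible (default deny).
        return [r for r in self.rows if any(p(user_id, r) for p in self.policies)]


def participant_only(user_id, row):
    """Allow a message only for its sender or recipient."""
    return user_id is not None and user_id in (row["sender"], row["recipient"])


messages = Table(rows=[
    {"sender": "alice", "recipient": "bob", "body": "hi"},
    {"sender": "carol", "recipient": "dave", "body": "secret"},
])

anonymous_before = messages.select(None)  # RLS disabled: both rows leak

messages.rls_enabled = True
messages.policies.append(participant_only)

anonymous_after = messages.select(None)   # no identity: no rows
alice_view = messages.select("alice")     # only the conversation alice is part of
```

The same public key is used in all three calls; only the table's configuration changes what comes back, which is why a missing policy is as dangerous as a leaked secret.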

How should platforms handle security during rapid growth?

Platforms handling user data should implement security from day one, not as an afterthought. This includes threat modeling, encryption standards, access control policies, audit logging, and secrets management. Security review processes should be implemented, especially when using AI-generated code. Regular external security audits should be conducted as platforms scale. The fundamental principle is that security should scale with growth, not be retrofitted after incidents occur.
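As one example of secrets management in a review process, the sketch below scans a client-side bundle for JWT-shaped keys and flags any whose role is not a public one. It assumes the keys are JWTs carrying a `role` claim such as `anon` or `service_role`, as Supabase's JWT-based API keys do. The function names and the `demo_token` helper, which builds fake unsigned tokens purely for demonstration, are hypothetical.

```python
import base64
import json
import re

# Three dot-separated base64url segments; JSON objects encode to a leading "eyJ".
JWT_PATTERN = re.compile(r"eyJ[A-Za-z0-9_-]+\.eyJ[A-Za-z0-9_-]+\.[A-Za-z0-9_-]+")


def jwt_claims(token):
    """Decode the (unverified) payload segment of a JWT into a dict."""
    payload = token.split(".")[1]
    payload += "=" * (-len(payload) % 4)  # restore stripped base64 padding
    return json.loads(base64.urlsafe_b64decode(payload))


def privileged_keys(bundle_text, safe_roles=("anon",)):
    """Return (role, token prefix) for each JWT whose role is not known to be public."""
    findings = []
    for token in JWT_PATTERN.findall(bundle_text):
        try:
            role = jwt_claims(token).get("role")
        except ValueError:  # not valid base64 or JSON, so not a real JWT
            continue
        # A missing role (None) is also reported, since it needs a human look.
        if role not in safe_roles:
            findings.append((role, token[:16] + "..."))
    return findings


def demo_token(role):
    """Build a fake, unsigned JWT-shaped string for demonstration only."""
    def enc(obj):
        return base64.urlsafe_b64encode(json.dumps(obj).encode()).rstrip(b"=").decode()
    return ".".join([enc({"alg": "HS256", "typ": "JWT"}), enc({"role": role}), "sig"])


bundle = 'const a="' + demo_token("anon") + '"; const b="' + demo_token("service_role") + '";'
findings = privileged_keys(bundle)
print([role for role, _ in findings])
```

A scan like this only catches one kind of mistake. A public key that passes it is still dangerous if the tables behind it lack access policies, which is what happened at Moltbook.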

Were Moltbook's AI agents actually autonomous?

No. Security researchers discovered that the supposedly autonomous AI agents were largely humans operating bot fleets. While there was some AI involvement in content generation, the core claim that autonomous AI agents were independently interacting with each other was false. This misdirection undermined the platform's credibility alongside the security breach and suggested a pattern of misleading marketing about the platform's actual capabilities.

What should users do after a data breach like this?

Users affected by a breach like this should first revoke and rotate any API tokens that were exposed, since a leaked token stays usable until it is invalidated. They should also change passwords on other platforms if they reused them, enable two-factor authentication on their remaining services, watch for phishing attempts, monitor their accounts for unauthorized activity, and check their email address with services like Have I Been Pwned. For future interactions with online platforms, users should use unique passwords for each service, avoid storing sensitive information in plain text, and assume that any data they enter could potentially be exposed.

How did Moltbook respond to the breach?

Wiz researchers responsibly disclosed the vulnerability to Moltbook's team instead of publicly releasing it. The Moltbook team acknowledged the problem and fixed it within hours with Wiz's assistance, and all improperly accessed data was deleted. This responsible disclosure process is how security breaches should ideally be handled, though it doesn't erase the damage caused by the original misconfiguration and the misleading platform claims.

What regulatory responses have followed incidents like this?

Incidents like Moltbook have contributed to increased regulatory scrutiny of AI platforms and data protection practices. The EU's AI Act includes provisions about data security, national data protection authorities have started investigating AI platforms, and there's growing momentum toward mandatory security standards before platforms can operate. However, these changes are moving slowly, and many jurisdictions still lack comprehensive regulations specifically governing AI platform security.

Runable Integration Section

If you're building an AI platform or managing multiple AI services, the security complexity multiplies. Tools like Runable can help automate security workflows and documentation processes, reducing the manual work that often leads to misconfigurations.

Use Case: Automating security documentation and compliance reports for your AI platform, ensuring security policies are documented and kept current.

Key Takeaways

- Moltbook exposed 1.5 million API tokens and 35,000 emails due to a Supabase API key left in client-side code with no Row Level Security policies configured

- Vibe-coding (AI-generated code) skips critical security considerations and requires dedicated human security review before production deployment

- The platform falsely claimed autonomous AI agents but actually used humans operating bot fleets, undermining credibility alongside security failures

- Moving fast without security fundamentals creates existential risk: Moltbook's momentum collapsed after the breach and bot revelation

- Secure configuration is as critical as secure code: missing RLS policies transformed a safe public API key into unrestricted database access

- Responsible disclosure and rapid remediation help but don't eliminate breach damage; trust erosion lasts far longer than the technical fix
