Understanding Shadow AI: The Hidden AI Crisis in Your Organization

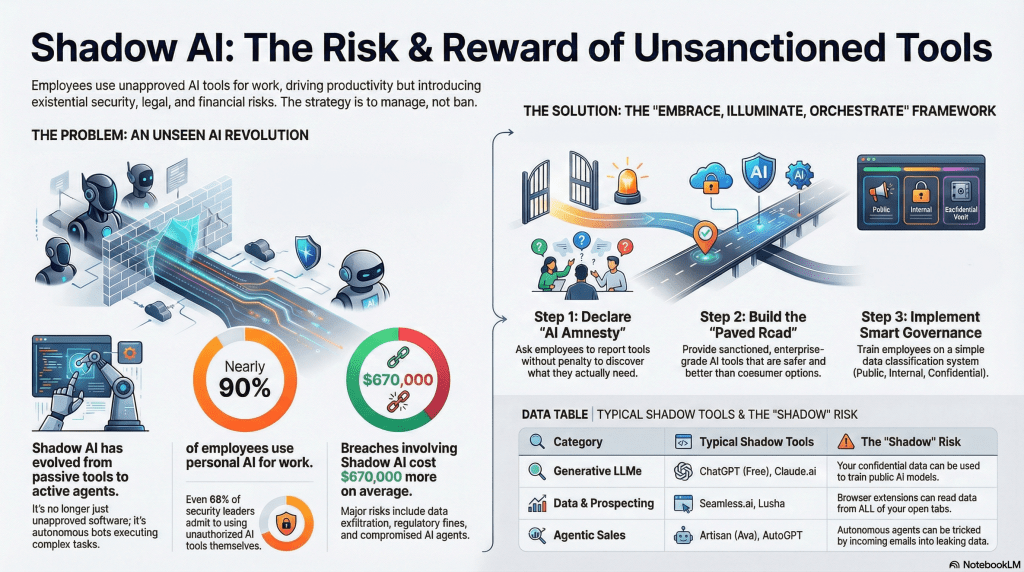

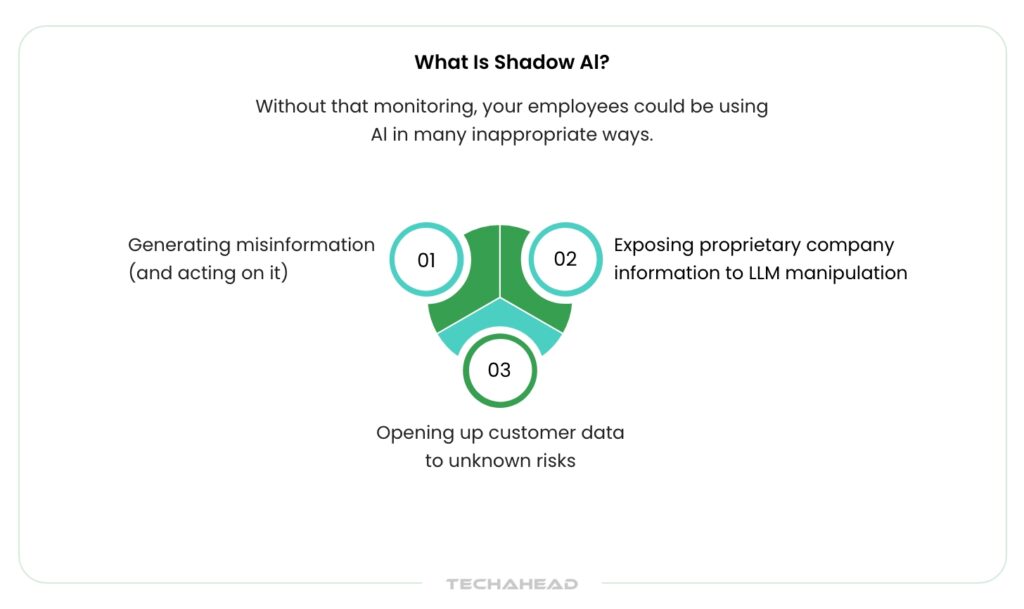

Your employees are using AI tools you don't know about. Right now. Maybe dozens of them. They're pasting confidential data into ChatGPT, uploading proprietary research to free cloud services, and asking unapproved AI assistants to help with sensitive financial calculations. And your IT team? They're completely in the dark.

This is shadow AI. And it's not some theoretical problem for security conferences anymore. It's happening in your organization today, affecting everything from data privacy to compliance to competitive advantage.

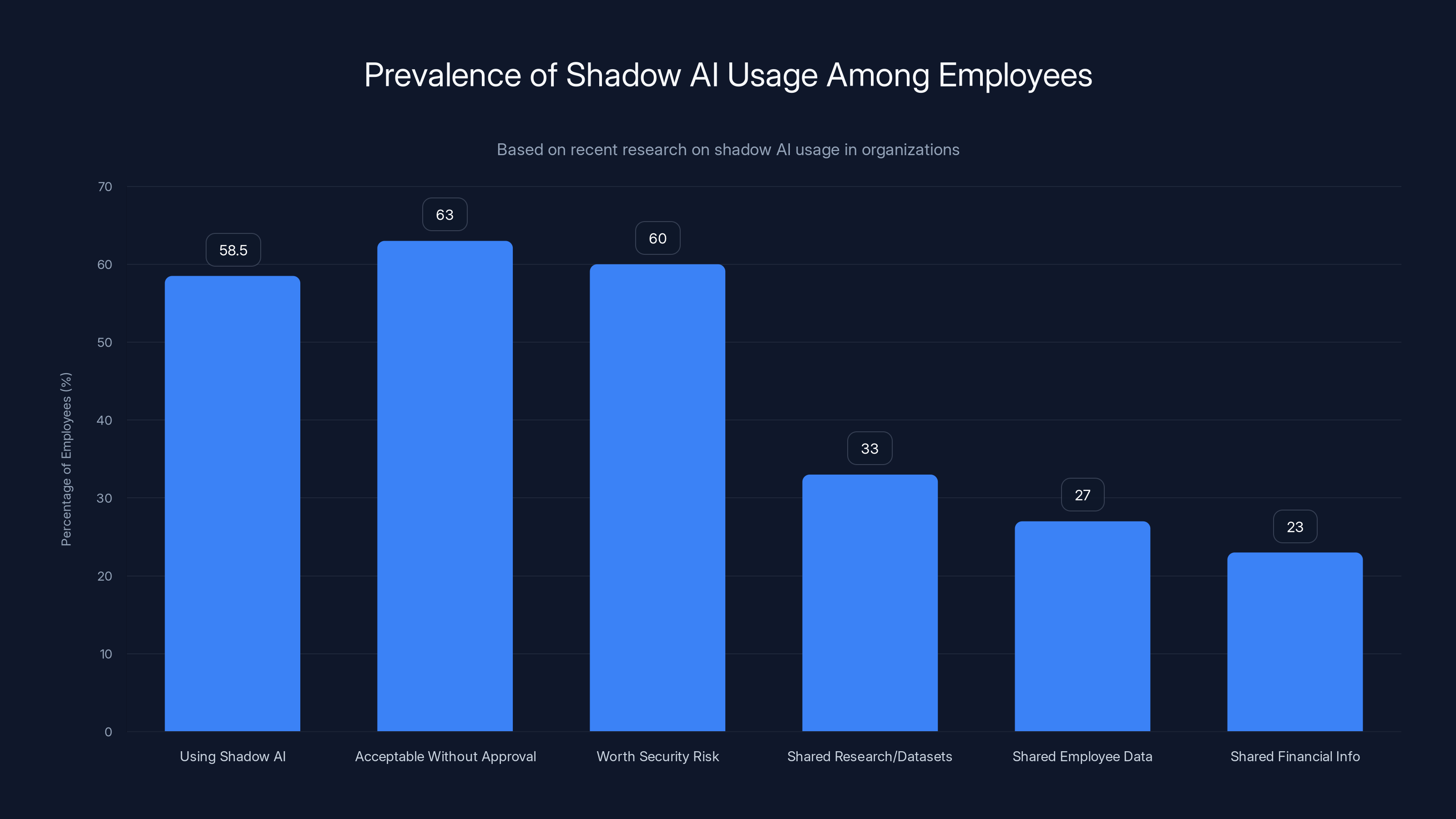

The statistics are genuinely alarming. Recent research shows that 58-59% of workers admit to using shadow AI at work, despite having company-approved alternatives available. Even more troubling, 63% of employees believe it's acceptable to use AI without IT approval, and 60% agree that unapproved AI tools are worth the security risk if they help meet deadlines. These aren't rogue actors deliberately circumventing security. These are normal, well-intentioned employees making daily decisions that expose your organization to massive risk.

Here's what makes this particularly dangerous: when workers use shadow AI, they're not just breaking IT policy. They're potentially exposing sensitive datasets, employee names and payroll information, financial records, and sales data to unvetted third-party services. 33% of workers have shared research or datasets with unapproved AI tools. 27% have shared employee data like names, payroll, or performance metrics. 23% have shared financial or sales information. Think about what that means for your organization's intellectual property, regulatory compliance, and customer trust.

The problem becomes even messier when you realize that senior leaders are often the worst offenders. Executives and C-suite leaders are more likely than junior staff to believe that speed outweighs privacy and security concerns. When employees see their managers using unapproved AI without consequences, they get the green light to do the same. Research suggests that 57% of workers' direct managers support the use of unapproved AI tools, creating what security researchers call "a gray zone where employees feel encouraged to use AI, but companies lose oversight of how and where sensitive information is being shared."

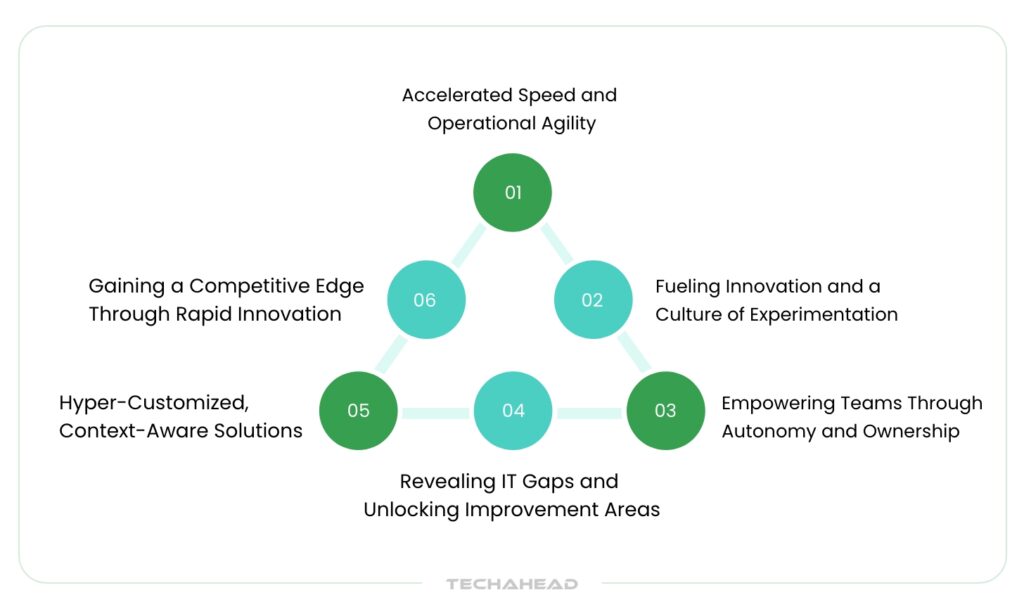

But here's the paradox that makes this crisis so difficult to solve: shadow AI isn't primarily a problem of negligence or malice. It's a problem of mismatch. Employees are using shadow AI because they perceive that company-approved tools don't meet their actual needs. The AI they've been given isn't suitable for what they're trying to accomplish. So they go rogue, not out of spite, but out of pragmatism. They need to get work done, and the tools they've been given don't cut it. Understanding this mismatch is key to actually solving the problem, rather than just cracking down harder on employee behavior.

The Real-World Impact: What Shadow AI Actually Costs Your Organization

Shadow AI isn't just a compliance headache. It has genuine financial, operational, and strategic consequences that affect your bottom line.

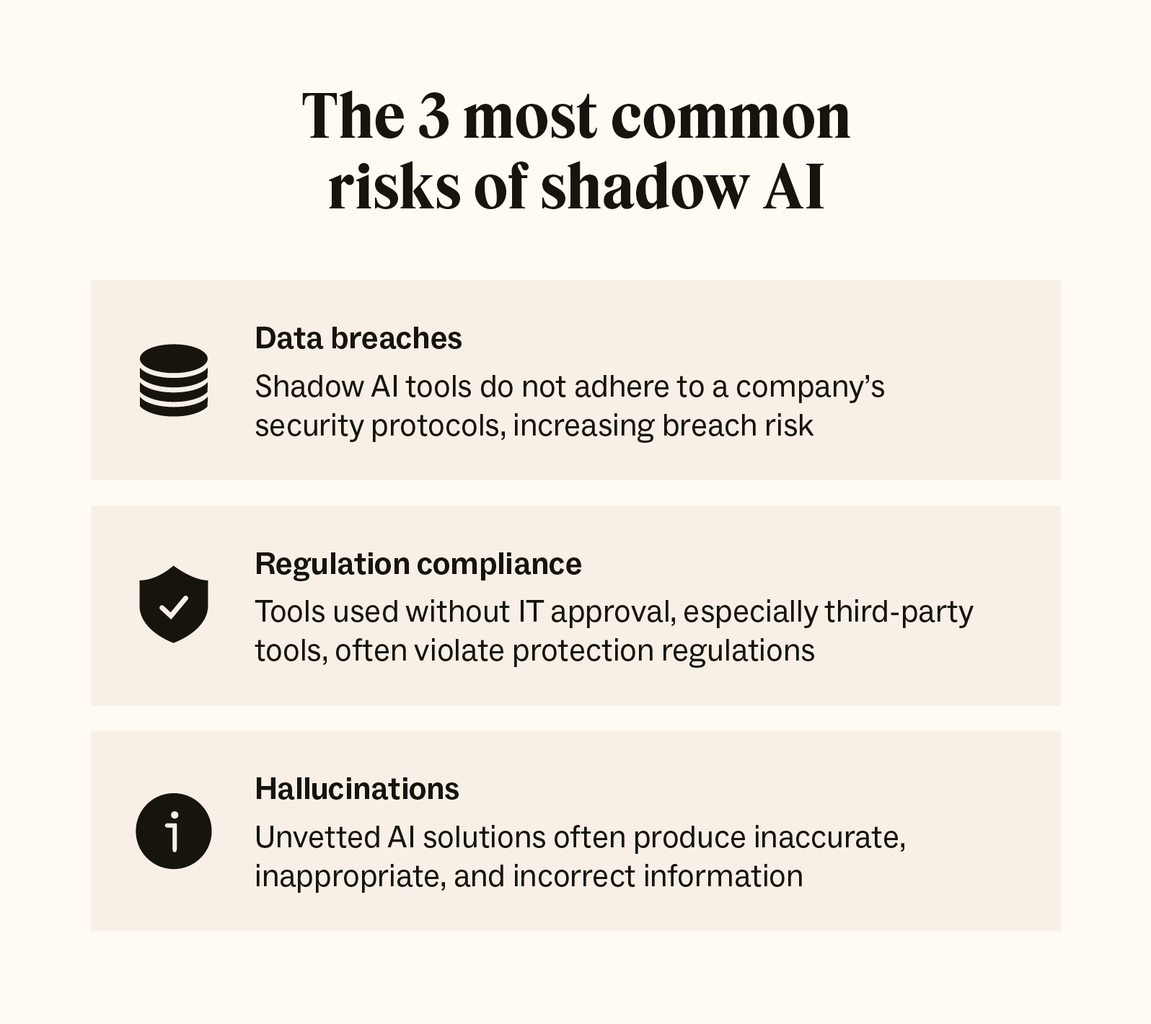

Start with data breaches. When employees share proprietary information with consumer-grade AI services, they're uploading that data to third-party servers whose security controls you have never vetted. Companies like OpenAI, Google, and Anthropic have stated that they don't train on inputs from their business and enterprise offerings by default, but free consumer versions? Different story. Many free AI tools explicitly reserve the right to use conversation data for training, marketing, or improvement. Your employee may have just handed your manufacturing secrets to a model that your competitors can also query.

Consider a scenario: a product manager uploads your Q3 roadmap to ChatGPT to get help organizing it. Two weeks later, your competitor launches a nearly identical feature. Coincidence? Probably, over so short a window. But you can't rule out a leak either, because you have no visibility into how that roadmap is retained or used once it leaves your environment. The damage isn't always immediate or traceable, which makes it more dangerous.

Then there's regulatory and compliance risk. If you operate in regulated industries—financial services, healthcare, legal, government—you have specific data handling requirements. HIPAA, GDPR, PCI-DSS, SOX. When employees upload customer or patient data to unapproved AI tools, you're creating compliance violations that could result in fines ranging from thousands to millions of dollars. The average GDPR fine? €14.6 million for large companies. Healthcare breaches? $10.93 million average cost per breach. One careless upload could tank your budget.

There's also the intellectual property angle. Your algorithms, your training datasets, your proprietary methodologies. When shadow AI is involved, you lose control of where that information goes and who has access. An employee using an unapproved AI tool might improve it with your proprietary knowledge, essentially giving away competitive advantage.

And then there's the operational chaos. When different teams use different shadow AI tools, you end up with fragmented workflows, incompatible outputs, and knowledge silos. One team's using Claude, another's using ChatGPT, a third is using some cutting-edge open-source model from GitHub. There's no consistency, no governance, no way to audit or improve processes. Scaling becomes nearly impossible.

The security implications extend beyond data. When you don't know what tools your employees are using, you can't assess the security posture of those tools. Is that free AI tool using encrypted transmission? Does it have a privacy policy? Is it backdoored by a hostile nation-state? You have no idea. You're essentially hoping nothing bad happens, which isn't a security strategy.

There's also the insider threat angle. Disgruntled employees or contractors can use shadow AI to exfiltrate data more easily than ever before. Instead of copying and pasting into email or downloading to USB, they can upload to ChatGPT with a few clicks, and it's gone. No audit trail, no detection, no recourse.

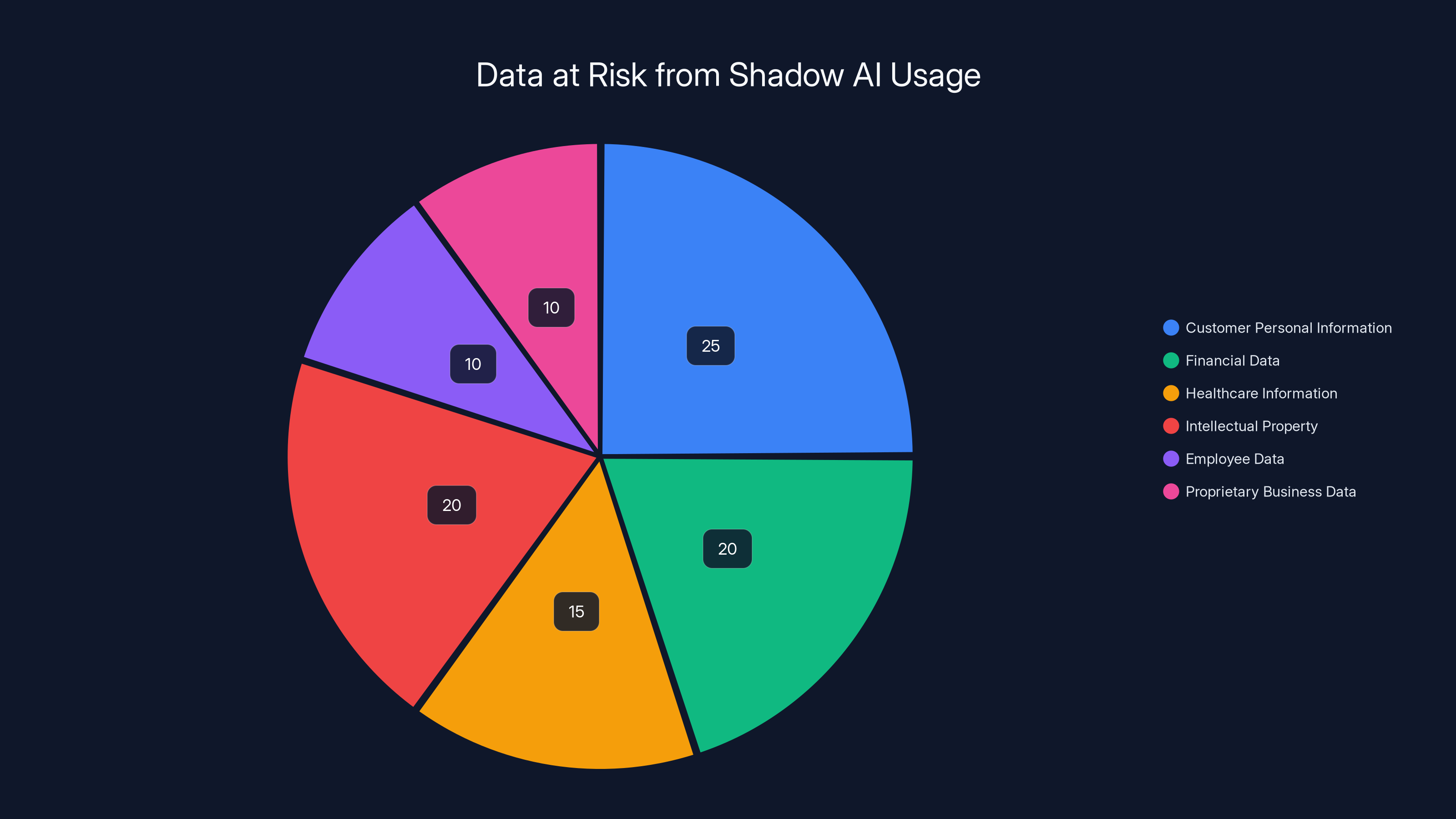

Customer personal information and intellectual property are the most at-risk data types when employees use shadow AI tools. Estimated data.

Why Employees Love Shadow AI (And Why They'll Never Stop)

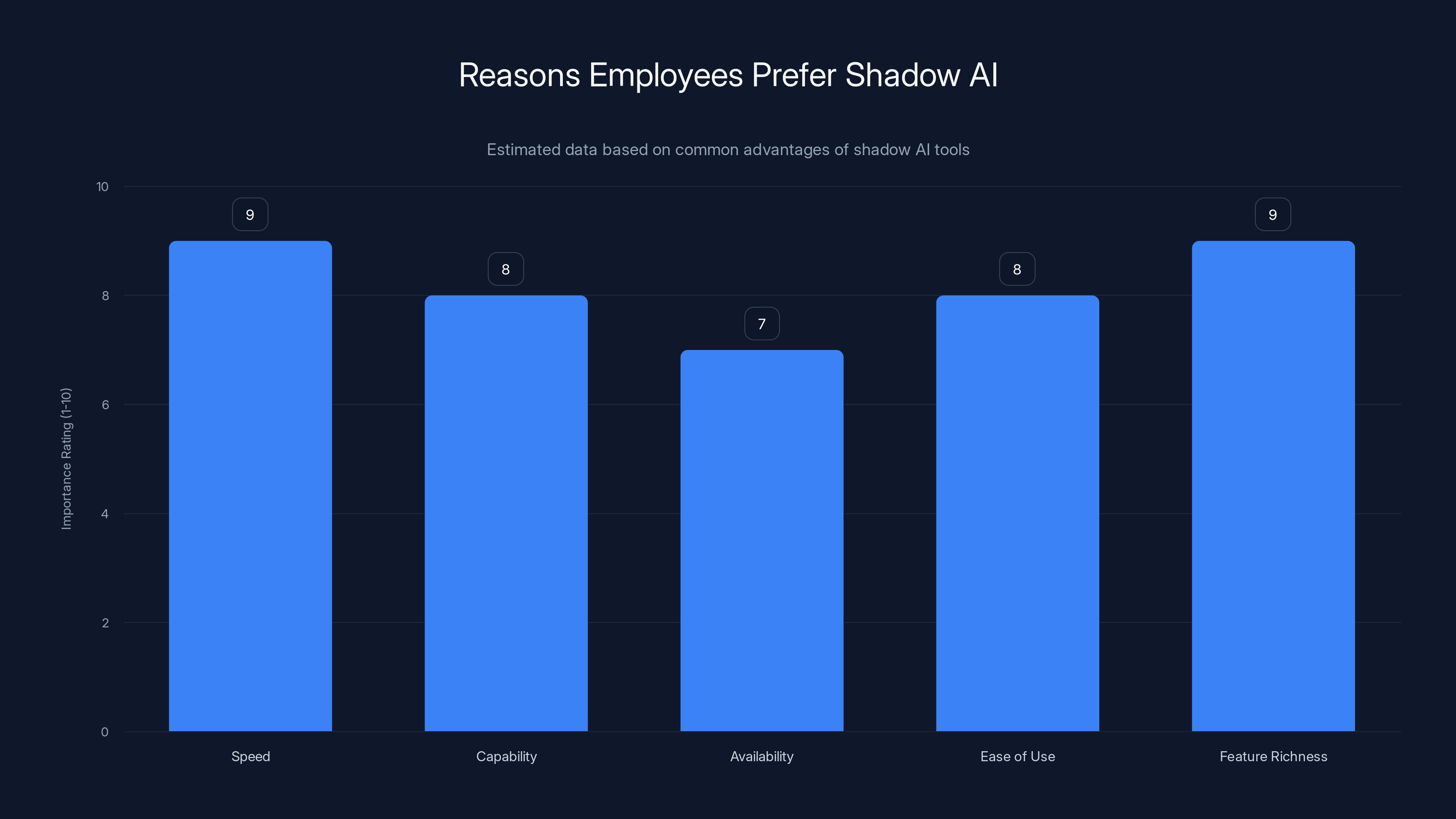

If you want to actually solve the shadow AI problem, you need to understand why it's so appealing in the first place. The answer isn't "employees are irresponsible." It's that shadow AI delivers genuine value and convenience.

First, speed. Shadow AI tools are usually faster to access than company-approved alternatives. No VPN, no authentication manager, no multi-factor authentication. Just open the browser and start typing. In a world where deadlines are tight and efficiency is valued, this friction matters. An employee who needs help brainstorming in the next 15 minutes will grab the fastest tool available, not the most secure one.

Second, capability. Many workers feel that approved AI tools are inferior to what's available publicly. Maybe your company standardized on an older model because of cost or compatibility reasons. But the latest version of GPT is available for free on the web. A junior analyst who knows the latest model can solve problems faster. Why should they be forced to use a worse tool just because it's approved?

Third, availability. Company-approved tools often have limited usage quotas, seat licenses, or access restrictions. Not everyone can use them. But shadow AI tools? Unlimited access. Available on any device. No permission needed. This is particularly appealing to contractors, consultants, or employees who work across multiple departments.

Fourth, ease of use. Shadow AI tools are designed for consumers. They're intuitive, simple, no learning curve. Company-approved tools are often designed around compliance and security first, usability second. This creates a huge UX gap that users instinctively close by going rogue.

Fifth, feature richness. Free ChatGPT has image generation, web search, file upload, and plugin capabilities. Your approved tool might have half those features. Users naturally gravitate toward the more capable option.

Sixth, plausible deniability. Using a shadow AI tool feels less risky because it's so common. Everyone's doing it. The tool is made by a major company like OpenAI or Google. It can't be that bad, right? This perceived legitimacy makes the risk feel manageable, even when it objectively isn't.

The key insight: shadow AI isn't primarily a compliance problem, it's a user experience problem. If your approved tools were faster, more capable, more convenient, and more powerful than shadow alternatives, users would have less incentive to go rogue. But they're not. So employees make a rational decision to use better tools, understanding the risks in a fuzzy way but not internalizing them as catastrophic.

A significant portion of employees engage in shadow AI activities, with 58.5% using unapproved AI tools, and 63% believing it's acceptable without IT approval. This highlights a critical need for better AI governance.

The Generational Divide: Why Your Executives Are Your Biggest Security Threat

Here's something that should concern every CISO and CTO: the shadow AI problem is worse at the executive level than at the entry level.

Research consistently shows that senior leaders and C-suite executives are more likely than junior staff to use unapproved AI tools and believe that speed outweighs security and privacy considerations. This creates a cascading problem where organizational culture shifts toward risk-taking and corner-cutting.

Why does this happen? Senior leaders are often evaluated on quarterly results and speed to market. They're not evaluated on security incidents that haven't happened yet (and may never happen). They face extreme time pressure. An executive trying to present a board update next week will use whatever tool gets the job done fastest, approved or not. The security risk feels abstract and distant. The deadline feels immediate and concrete.

Moreover, executives often don't understand the security implications of their actions. They think ChatGPT is made by OpenAI, a major company, so it must be secure. They don't realize that free-tier conversations might be used for training. They don't read the terms of service. They don't understand regulatory implications. They're running on intuition and urgency, not security knowledge.

This creates a huge problem: when executives use unapproved AI, they implicitly signal to everyone below them that it's acceptable. A director sees her VP using ChatGPT for confidential analysis. That signal is: "This is fine." The director tells her manager, "The VP does it, so it's fine." Pretty soon, 80% of the organization is using shadow AI and everyone thinks it's endorsed from above.

The solution isn't to restrict executives more. That creates resentment and drives the problem underground. The solution is to give executives what they actually want: tools that are both approved and fast. Enterprise AI tools that have the capability and UX of consumer tools, but with security and governance built in. Tools that make compliance automatic, not something executives have to think about.

The Data Breach Cascade: Understanding the Attack Surface

When shadow AI is involved, the attack surface explodes in ways most security teams don't fully appreciate.

Think about what happens when an employee uses ChatGPT to help with a confidential analysis. The employee types their question into the interface. That data travels over the internet to OpenAI's servers. It's stored somewhere, used for whatever purposes their terms of service permit, and then the response comes back. Your employee sees the result and uses it to make a business decision.

Now multiply this across your entire organization. Hundreds of employees, thousands of prompt submissions per day. Each one a potential data leak. Each one a potential vector for industrial espionage, competitive intelligence, or regulatory violation.

But it goes deeper. If an employee uses shadow AI tools on a company-managed device, that device might have malware or vulnerabilities. If they use it on personal devices connected to your network via VPN, they've created a hybrid trust boundary. If they use it on public Wi-Fi at an airport or coffee shop, their communication is vulnerable to interception.

And here's the part that keeps CISOs awake at night: you can't detect what you don't know exists. If you don't have visibility into shadow AI usage, you can't identify when sensitive data is being leaked. You can't correlate unusual data exfiltration patterns with shadow AI usage. You can't respond to incidents because you don't even know they happened.

The detection problem is real. Traditional network security tools might flag ChatGPT as "unsanctioned" and block it, but employees work around these blocks using VPNs, mobile hotspots, or unmanaged devices. You're playing whack-a-mole against your own workforce.

Then there's the supply chain angle. Many shadow AI tools integrate with third-party services. ChatGPT can access the web, integrate with plugins, access files from cloud storage. Each integration is a potential vulnerability. If a plugin is compromised, your employees' data flows through the compromised tool automatically, without their knowledge.

The regulatory angle is equally serious. GDPR requires you to know where personal data goes. If you can't tell regulators exactly which AI tools your employees use, you're in violation. Same with HIPAA, PCI-DSS, SOX. These frameworks assume you have visibility and control over data flows. Shadow AI breaks that assumption.

GDPR violations can cost up to 4% of global turnover, while HIPAA fines range up to

Compliance Risk: The Regulatory Minefield

Let's talk about the regulatory angle, because this is where shadow AI becomes genuinely catastrophic.

GDPR is the obvious starting point. If you operate in or serve the European Union, you have specific obligations about where personal data is processed. Uploading customer data to ChatGPT (where it may be processed in the US) might violate GDPR if you don't have proper data processing agreements. The fines? Up to 4% of annual global turnover for serious violations. For a mid-sized company, that's millions.
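To make the ceiling concrete: under GDPR Article 83(5), the maximum fine for the most serious infringements is the higher of a fixed EUR 20 million or 4% of worldwide annual turnover. The sketch below computes that upper bound only. The function name and the turnover figures are hypothetical, and actual fines are set case by case and are usually well below the ceiling.

```python
GDPR_FIXED_CAP_EUR = 20_000_000  # Fixed ceiling for the most serious infringements
GDPR_TURNOVER_PERCENT = 4        # Share of worldwide annual turnover, in percent


def gdpr_max_fine_eur(annual_global_turnover_eur: int) -> int:
    """Return the upper bound of a fine: the higher of the fixed cap or 4% of turnover."""
    # Integer arithmetic keeps the result exact for whole-euro inputs.
    turnover_based = annual_global_turnover_eur * GDPR_TURNOVER_PERCENT // 100
    return max(GDPR_FIXED_CAP_EUR, turnover_based)


# Hypothetical turnover figures, in euros.
for turnover in (250_000_000, 2_000_000_000):
    print(f"Turnover {turnover:,} -> maximum fine {gdpr_max_fine_eur(turnover):,}")
```

For the smaller company the fixed cap is the binding number, which is why "4% of turnover" understates the theoretical exposure of mid-sized firms.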

But GDPR is just one framework. HIPAA applies if you're in healthcare. A nurse using ChatGPT to discuss an identifiable patient's symptoms is likely creating a HIPAA violation. HIPAA fines range from

PCI-DSS applies if you handle payment card data. If an employee uses shadow AI to troubleshoot a payment processing issue and mentions a customer's credit card number in the prompt, you're in violation. Fines are less standardized, but the reputational damage is enormous.

SOX applies if you're a publicly traded company. Using shadow AI to help with financial analysis or forecasting creates audit trail problems. When your auditors ask where analysis came from, you can't explain it because you didn't know it was being done.

The pattern is consistent: every regulatory framework assumes you have visibility and control over how sensitive data is processed. Shadow AI breaks that assumption. You lose visibility. You lose control. You fall out of compliance.

What makes this worse is that many organizations don't even realize they're in violation. An employee uploads some customer names to ChatGPT to get help organizing a mailing list. Seems innocent. In reality, that's a potential GDPR violation. No one reported it. No one will ever know. But the violation happened.

The proactive solution is to implement data loss prevention tools that detect shadow AI usage and either block it or alert security teams. But these tools are expensive, create friction, and employees work around them. It's a game that never ends unless you address the underlying cause: employees feel they need shadow AI because approved tools don't meet their needs.

The Intellectual Property Angle: Losing Competitive Advantage

One of the most insidious aspects of shadow AI is how it erodes your intellectual property and competitive advantage.

Imagine your R&D team has spent 18 months developing a proprietary algorithm for customer churn prediction. It's a real differentiator. Your sales team uses it to win deals. Your retention improves. You gain competitive advantage.

Now imagine a junior analyst on the team uses ChatGPT to help document how the algorithm works. "Explain this approach to customer churn prediction..." Maybe they redact some details. Maybe they don't. Either way, they've uploaded your methodology to a third-party service.

What happens next? OpenAI's training data pipeline might incorporate it. Future versions of GPT might learn from it. Eventually, your proprietary algorithm could become part of the broader knowledge available to competitors. The competitive advantage you spent millions developing risks becoming common knowledge.

This isn't hypothetical. Companies like Google, Meta, and Microsoft have reportedly discovered employees uploading proprietary code to public GitHub repositories or using it in shadow AI tools. Each discovery represents a potential leak of R&D investment that can run into the billions.

The IP angle also extends to your data. Your proprietary datasets are competitive advantages. A machine learning model trained on your data is powerful because of the data quality and specificity. But if an employee uses shadow AI to analyze your dataset, they might be feeding your data into someone else's model. Your competitive edge becomes commoditized.

Here's the subtle part: it's hard to quantify how much IP you've lost through shadow AI because the loss is diffuse and delayed. You don't know if your competitor's sudden innovation came from your leaked IP or independent research. You don't know if your market share erosion is connected to shadow AI usage. But statistically, if 58% of your employees are using shadow AI, and some percentage of them work with IP-sensitive materials, you're almost certainly leaking IP. The question is how much and at what cost.

The solution requires treating shadow AI as an IP risk, not just a security risk. You need controls, monitoring, and employee training that emphasize the competitive advantage implications, not just security and compliance.

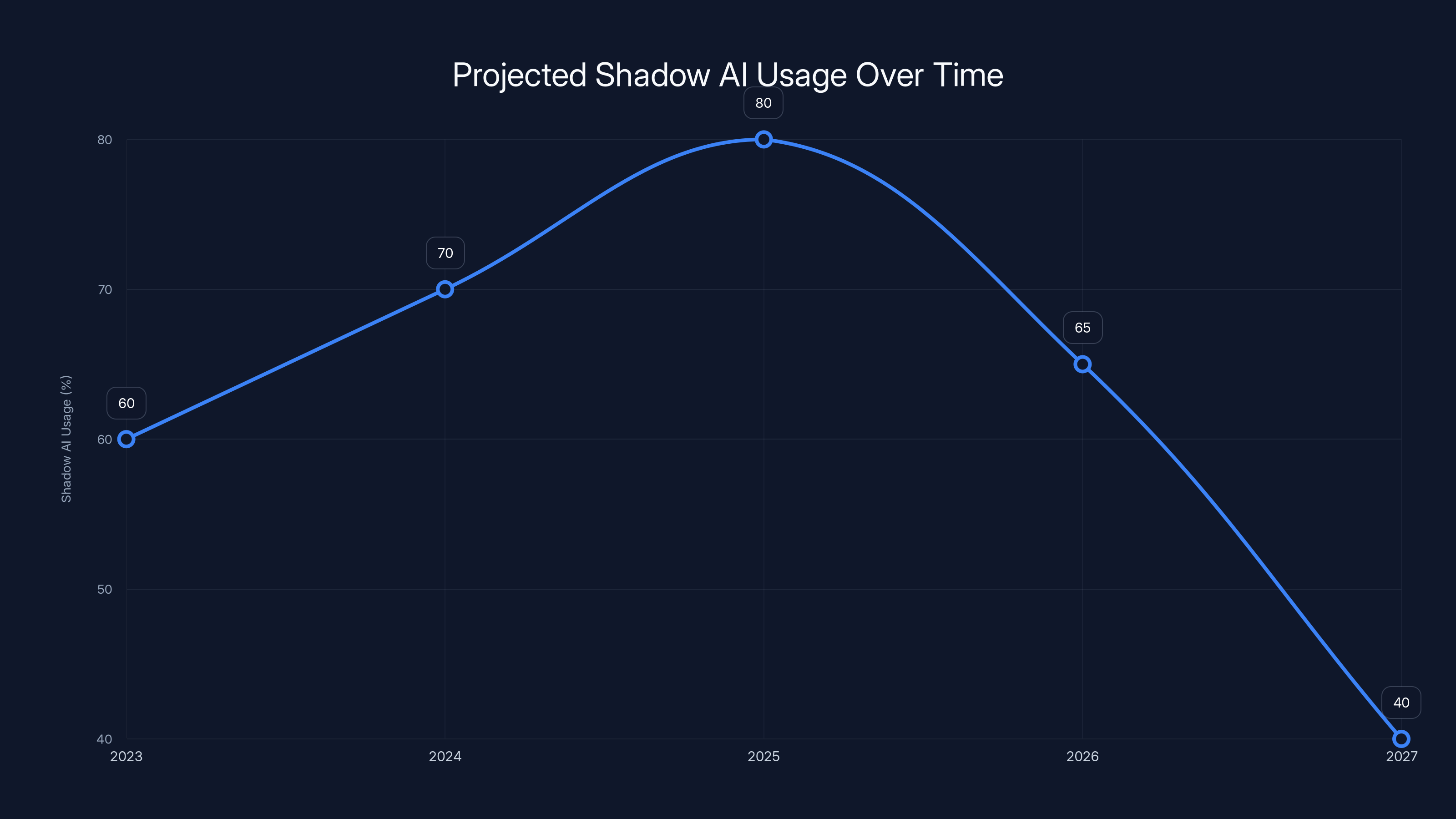

Shadow AI usage is expected to peak around 2025 due to increased accessibility and lower costs, before declining as regulations and enterprise solutions improve. (Estimated data)

Detection and Monitoring: The Visibility Challenge

You can't solve a problem you can't see. And shadow AI is designed to be hard to see.

Traditional network monitoring tools can identify ChatGPT traffic by IP address or domain, but users bypass these easily with VPNs, mobile hotspots, or unmanaged devices. You can block the domain, but then employees use the mobile app or access it through a proxy. It's an endless game.

Application-level monitoring is slightly better. Endpoint detection and response (EDR) tools can monitor what applications are running on devices and what data they're accessing. But employees access shadow AI through web browsers, which are legitimate business tools. Blocking all browser-based AI usage isn't practical. Detecting specific AI usage requires analyzing browser activity in real time, which is resource-intensive and raises privacy concerns.

Data loss prevention (DLP) tools look for sensitive data in network traffic and can block uploads containing credit card numbers, social security numbers, or keywords like "confidential." But employees are creative about evading DLP. They paraphrase sensitive data. They use abbreviations or code names. They split data across multiple prompts. A sophisticated employee can defeat most DLP rules.
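A minimal sketch of the kind of pattern matching a DLP rule performs may help show both what it catches and why it is easy to evade. The patterns, keyword list, and function names here are hypothetical simplifications, not the rule set of any particular DLP product.

```python
import re

# Hypothetical patterns for illustration; commercial DLP uses far richer detection.
CARD_RE = re.compile(r"\b(?:\d[ -]?){13,19}\b")
SSN_RE = re.compile(r"\b\d{3}-\d{2}-\d{4}\b")
KEYWORDS = ("confidential", "internal only", "restricted")


def luhn_valid(number: str) -> bool:
    """Return True if the digits pass the Luhn checksum used by payment cards."""
    digits = [int(ch) for ch in number if ch.isdigit()]
    if not 13 <= len(digits) <= 19:
        return False
    total = 0
    # Double every second digit, counting from the right.
    for index, digit in enumerate(reversed(digits)):
        if index % 2 == 1:
            digit *= 2
            if digit > 9:
                digit -= 9
        total += digit
    return total % 10 == 0


def scan_prompt(text: str) -> list[str]:
    """Return labels for sensitive content found in an outbound prompt."""
    findings = []
    if any(luhn_valid(match.group()) for match in CARD_RE.finditer(text)):
        findings.append("payment_card")
    if SSN_RE.search(text):
        findings.append("ssn")
    lowered = text.lower()
    if any(keyword in lowered for keyword in KEYWORDS):
        findings.append("keyword")
    return findings
```

A prompt containing a well-formed card number is flagged, but the same number spelled out in words, or split across two prompts, returns no findings. That gap is the evasion problem described above.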

User behavior analytics can identify unusual patterns. If an employee suddenly spends two hours in ChatGPT when they usually don't use it, that's a signal. If they upload unusually large amounts of data, that's worth investigating. But this requires baseline data and statistical analysis, which takes time and resources.
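One simple way to express "unusual compared with baseline" is a z-score: how many standard deviations today's usage sits above the employee's historical mean. The sketch below is an illustration only. The function name, the threshold of 3, and the use of minutes per day as the metric are assumptions, and real analytics products use more robust statistics.

```python
from statistics import mean, stdev


def is_anomalous(baseline_minutes: list[float], today_minutes: float,
                 threshold: float = 3.0) -> bool:
    """Flag usage more than `threshold` standard deviations above the baseline mean."""
    if len(baseline_minutes) < 2:
        return False  # Not enough history to establish a baseline.
    average = mean(baseline_minutes)
    spread = stdev(baseline_minutes)
    if spread == 0:
        # Perfectly flat history: any increase counts as a deviation.
        return today_minutes > average
    return (today_minutes - average) / spread > threshold


# Hypothetical history: a user who normally spends 0 to 10 minutes a day in an AI tool.
history = [5, 0, 10, 5, 0, 10, 5]
print(is_anomalous(history, 120))  # A two-hour session against that baseline
print(is_anomalous(history, 8))    # An ordinary day
```

The early return for short histories reflects the point above: without baseline data, there is nothing to compare against.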

The fundamental problem is that shadow AI tools are built by sophisticated companies with massive budgets to be user-friendly and frictionless. They aren't designed to evade detection, but they run over ordinary encrypted web traffic in the same browsers employees use for legitimate work, so point solutions struggle to tell them apart. You're fighting an uphill battle.

The better approach is to assume some level of shadow AI usage is inevitable and focus on detection, response, and reduction rather than prevention and elimination. Implement monitoring that catches egregious violations. Respond quickly to detected incidents. Reduce incentives for shadow AI usage by improving approved tools. This is more realistic than trying to prevent all shadow AI usage.

Some organizations are starting to use AI-powered security tools to detect shadow AI. The irony is delicious. You use machine learning to detect unauthorized machine learning usage. These tools analyze communication patterns, data flows, and user behavior to identify likely shadow AI usage without requiring explicit detection rules.

Industry-Specific Vulnerabilities: Where Shadow AI Is Most Dangerous

Shadow AI risk isn't evenly distributed. Some industries have much more exposure than others.

Healthcare and Pharmaceuticals: This is the highest-risk industry. HIPAA regulations are strict, penalties are severe, and sensitive data is extremely valuable. A clinician using ChatGPT to discuss patient symptoms risks an immediate regulatory violation. A researcher using shadow AI to analyze clinical trial data is potentially invalidating the entire trial. The risk profile is catastrophic.

Financial Services: Banks, insurance companies, and investment firms deal with highly regulated data. PCI-DSS, SOX, AML regulations all create strict requirements about data handling. An analyst using shadow AI to create a financial model that will be used for customer decisions is creating compliance exposure. Shadow AI in finance isn't just a security problem, it's an audit and governance nightmare.

Government and Defense: If you have a security clearance or work on government contracts, using shadow AI is often explicitly prohibited. Data can't be sent to foreign companies. Yet contractors and government employees use shadow AI regularly, sometimes unknowingly violating laws. The penalties can include loss of clearance, legal prosecution, and contract cancellation.

Legal Services: Attorneys have strict confidentiality obligations under attorney-client privilege. A lawyer using ChatGPT to help draft a motion is potentially waiving privilege and violating professional ethics rules. Some bar associations have explicitly warned against using shadow AI for confidential work.

Consulting and Professional Services: Consultants are hired for their expertise and proprietary methodologies. If they use shadow AI and the AI's responses become part of work product, you're essentially hiring AI instead of the consultant. Clients discover this, trust erodes, contracts are terminated.

Technology and SaaS: Tech companies are the least regulated and probably have more shadow AI usage than any industry. Engineers use shadow AI for code review, debugging, and algorithm development. This is actually somewhat rational because most code is less sensitive than, say, patient data. But there's still IP and competitive risk.

Each industry needs a different shadow AI strategy. A compliance-heavy healthcare company needs strict controls and detection. A tech company might benefit from a "controlled shadow AI" approach where developers use ChatGPT for certain low-risk tasks.

Shadow AI can lead to significant financial losses, with GDPR fines averaging €14.6 million and healthcare breaches costing $10.93 million. Estimated data for compliance violations and IP loss suggests additional substantial risks.

Strategy 1: Meet Employees Where They Are

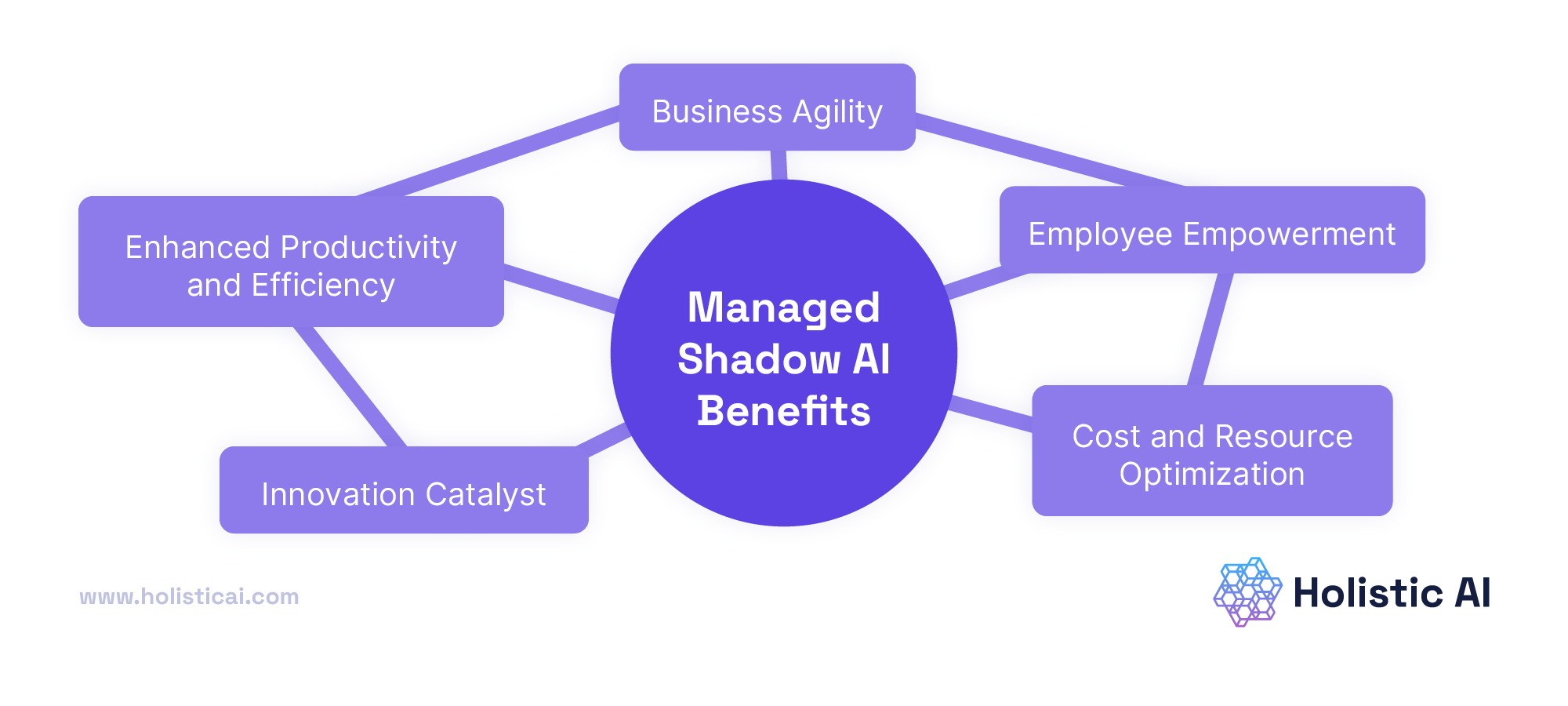

The most effective shadow AI mitigation strategy isn't to eliminate shadow AI. It's to provide enterprise-grade alternatives that employees actually want to use.

Start by understanding what employees are using shadow AI for. Conduct anonymous surveys. Analyze network logs (within privacy guidelines). Interview team leads. Create a catalog of shadow AI usage patterns. This tells you what employees actually need.

Common patterns include:

- Brainstorming and ideation: Employees want to bounce ideas off AI to refine thinking

- Writing and editing: People want help drafting emails, documents, proposals

- Code review and debugging: Engineers want AI to explain code and spot bugs

- Data analysis and visualization: Analysts want help creating insights from data

- Research and synthesis: Knowledge workers want AI to summarize research and find patterns

- Learning and skill development: Employees want AI tutors for learning new skills

Once you understand the use cases, you can evaluate approved tools against these needs. Do you have a brainstorming tool? A writing assistant? A code review tool? A data analysis platform? If the answer is no for any major use case, you have a gap. Employees will fill that gap with shadow AI.

The next step is to invest in enterprise-grade AI tools that cover the gaps while providing security, governance, and compliance features that consumer tools don't. This is where platforms like Runable become valuable. Tools designed for teams and enterprises that need AI capabilities (presentations, documents, reports, images, videos) without the security and compliance baggage of consumer AI tools are worth exploring. Starting at $9/month, enterprise AI tools can provide comparable or better functionality than shadow alternatives while keeping data within your control.

When implementing approved tools, focus on user experience. Make them faster to access than shadow alternatives. Make them more capable. Make them easier to use. If you can clear these bars, adoption will follow naturally.

Also, involve employees in the selection process. If they feel heard and their needs are taken seriously, they're more likely to adopt approved tools even if they're not perfect. This builds organizational buy-in.

Strategy 2: Clear Communication and Culture Change

No technology solution eliminates shadow AI. You also need cultural change and clear communication about why shadow AI is risky.

The problem is that most security awareness training is ineffective. Employees hear abstract warnings about "data security" and "compliance" and tune out. These concepts don't feel real to them. What feels real is missing a deadline because they had to wait for IT approval.

Effective shadow AI communication is concrete, specific, and tied to employee interests:

For engineers: "If you use shadow AI to debug proprietary code, that code might be incorporated into the AI's training data. Your competitive advantage becomes a training dataset for your competitors."

For analysts: "If you upload our customer analysis to free AI tools, the model learns our approach. Next year, every competitor has the same analysis approach we invented."

For healthcare workers: "If you use ChatGPT to discuss patient care, you're violating HIPAA. The fine is

For executives: "Shadow AI on company devices creates audit trail problems. When auditors ask where analysis came from, you can't explain it. Unexplained analysis creates audit failures."

Be specific about consequences. Not abstract risks, but concrete outcomes. People respond to specificity.

Also, acknowledge the legitimate appeal of shadow AI. Don't just say "don't use it." Say "here's why our approved tool is better, and here's how we made it better based on feedback." Show that you're listening and improving.

Involve leadership visibly. If executives publicly commit to using approved tools and avoiding shadow AI, that cultural signal matters. Employees notice who walks the walk.

Create clear policies with graduated consequences. First offense: warning and training. Second offense: documented conversation with manager. Third offense: disciplinary action. This creates accountability without being punitive.

Estimated data suggests that speed and feature richness are the top reasons employees prefer shadow AI tools over company-approved alternatives.

Strategy 3: Technical Controls and Governance

Cultural change and approved tools are necessary but not sufficient. You also need technical controls to prevent the worst scenarios.

Implement a data classification framework where data is labeled as public, internal, confidential, or restricted. Restricted data should never go to shadow AI tools. Confidential data requires extra scrutiny. This gives employees a simple rule: "Don't upload anything labeled confidential or restricted to unapproved tools."
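The four labels and the upload rule can be written down as a small policy check. This is a sketch under assumptions: the class and function names are hypothetical, and the handling of approved tools (everything below restricted is allowed) is an assumption that the text above does not specify.

```python
from enum import IntEnum


class Classification(IntEnum):
    """Sensitivity labels, ordered from least to most sensitive."""
    PUBLIC = 0
    INTERNAL = 1
    CONFIDENTIAL = 2
    RESTRICTED = 3


def may_upload(label: Classification, tool_is_approved: bool) -> bool:
    """Apply the rule: nothing confidential or restricted goes to unapproved tools."""
    if tool_is_approved:
        # Assumption: approved tools are cleared for everything below RESTRICTED.
        return label < Classification.RESTRICTED
    return label <= Classification.INTERNAL


print(may_upload(Classification.INTERNAL, tool_is_approved=False))
print(may_upload(Classification.CONFIDENTIAL, tool_is_approved=False))
```

Using an ordered enum keeps the rule to a single comparison, which matches the goal of giving employees one simple test to apply.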

Deploy data loss prevention (DLP) tools that monitor network and email traffic for sensitive data. When DLP detects an employee trying to send restricted data to a shadow AI tool, it blocks the action and alerts security. This catches egregious violations.

Implement conditional access policies that restrict shadow AI usage on certain devices or networks. For example, employees connecting from a cafe Wi-Fi network can't access shadow AI tools. Employees on company VPN with full security posture can. This creates incentives for secure behavior.

Use application control to whitelist approved AI tools and blacklist known shadow alternatives. This isn't bulletproof, but it creates friction that prevents casual shadow AI usage.
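At its simplest, the allow and block lists reduce to a hostname lookup on each outbound request. The sketch below assumes a proxy or browser extension that can see the destination URL. All domain names are placeholders, and the `classify_request` function is hypothetical.

```python
from urllib.parse import urlsplit

# Placeholder domains; the real lists would come from your own policy source.
APPROVED_AI_DOMAINS = {"ai.corp.example.com"}
BLOCKED_AI_DOMAINS = {"chat.example-ai.com", "example-llm.io"}


def _matches(host: str, domains: set[str]) -> bool:
    """True if host equals a listed domain or is a subdomain of one."""
    return any(host == domain or host.endswith("." + domain) for domain in domains)


def classify_request(url: str) -> str:
    """Return 'allow', 'block', or 'unlisted' for an outbound URL."""
    host = (urlsplit(url).hostname or "").lower()
    if _matches(host, APPROVED_AI_DOMAINS):
        return "allow"
    if _matches(host, BLOCKED_AI_DOMAINS):
        return "block"
    return "unlisted"
```

The "unlisted" result is where the approach stops being bulletproof: a new AI tool that nobody has catalogued yet passes straight through until someone adds it to a list.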

Implement browser monitoring that detects shadow AI access even if employees use VPNs. Many commercial security tools can do this. When detected, the organization can block access or alert the user's manager.

Deploy user behavior analytics that establishes baseline usage patterns for each employee. When someone suddenly spends hours in ChatGPT or uploads large volumes of data, the system flags it. This catches unusual activity early.

Implement API controls that prevent integration between approved tools and shadow AI services. If an employee tries to connect ChatGPT to your data platform, the integration fails.

Use tokenization and encryption for sensitive data. Even if data is uploaded to shadow AI, it's gibberish without decryption keys. This protects IP and PII.
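As an illustration of the tokenization half of this control, the sketch below swaps email addresses for random tokens and keeps the mapping in an in-memory vault that never leaves the organization. The `TokenVault` class, the token format, and the choice of email addresses as the protected field are all assumptions for the example. A production system would persist and access-control the vault, and would cover more data types.

```python
import re
import secrets

EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")


class TokenVault:
    """Keeps the token-to-value mapping inside the organization."""

    def __init__(self) -> None:
        self._token_to_value: dict[str, str] = {}
        self._value_to_token: dict[str, str] = {}

    def tokenize(self, text: str) -> str:
        """Replace each email address with a stable random token."""
        def replace(match: re.Match) -> str:
            value = match.group()
            token = self._value_to_token.get(value)
            if token is None:
                token = f"<TOKEN_{secrets.token_hex(4)}>"
                self._value_to_token[value] = token
                self._token_to_value[token] = value
            return token

        return EMAIL_RE.sub(replace, text)

    def detokenize(self, text: str) -> str:
        """Restore original values; only possible with access to the vault."""
        for token, value in self._token_to_value.items():
            text = text.replace(token, value)
        return text
```

Anything pasted into an external tool after `tokenize` contains only the tokens, so the third party sees structure but not the underlying values.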

The key is to layer controls so that shadow AI usage requires significant effort. Make it technically difficult without making approved tools technically difficult. This creates the right incentive structure.

Strategy 4: Monitoring, Detection, and Response

Assume some shadow AI usage will always exist. Build a monitoring and response program to catch the worst violations.

Implement continuous monitoring of shadow AI usage. Use EDR tools, network monitoring, and behavioral analytics to detect activity. The goal isn't to catch every instance, but to identify patterns and high-risk usage.

When shadow AI usage is detected, implement a tiered response:

- Monitoring only for low-risk usage (employee using ChatGPT to draft an email). No action, just tracking.

- Alert and audit for medium-risk usage (employee uploading non-sensitive analysis). Alert the employee and their manager, audit what was uploaded.

- Block and investigate for high-risk usage (employee uploading restricted data). Block the action, investigate the incident, escalate to leadership.
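The three tiers above could be encoded as a lookup from risk level to actions. In this sketch the action names and the scoring rule in `assess_risk` are assumptions chosen to mirror the examples in the list. A real program would score risk on more signals than a data label and an upload flag.

```python
from enum import Enum


class Risk(Enum):
    LOW = "low"
    MEDIUM = "medium"
    HIGH = "high"


# Actions per tier, following the three tiers described above.
RESPONSES = {
    Risk.LOW: ["track"],
    Risk.MEDIUM: ["track", "alert_employee", "alert_manager", "audit_upload"],
    Risk.HIGH: ["track", "block", "investigate", "escalate_to_leadership"],
}


def assess_risk(data_label: str, is_upload: bool) -> Risk:
    """Assumed scoring rule: restricted data is high risk, any other upload is medium."""
    if data_label == "restricted":
        return Risk.HIGH
    if is_upload:
        return Risk.MEDIUM
    return Risk.LOW


def respond(data_label: str, is_upload: bool) -> list[str]:
    """Return the list of actions for a detected shadow AI event."""
    return RESPONSES[assess_risk(data_label, is_upload)]
```

Keeping the tier-to-action mapping in one table makes it easy to review with legal and HR, and to change without touching the detection logic.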

Implement a shadow AI reporting mechanism where employees or security teams can report suspicious activity. Make it easy to report without creating fear of punishment.

Conduct monthly audits of detected shadow AI usage. Look for patterns. Which teams are most affected? Which data types? Which tools? Use this data to improve mitigation strategies.
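A monthly audit of this kind is mostly counting. Assuming detected events are exported as records with `team`, `tool`, and `data_type` fields (hypothetical field names), a short aggregation answers the three questions above.

```python
from collections import Counter


def summarize_events(events: list[dict[str, str]]) -> dict[str, list[tuple[str, int]]]:
    """Count detected events per team, tool, and data type, most frequent first."""
    summary = {}
    for field in ("team", "tool", "data_type"):
        counts = Counter(event[field] for event in events)
        summary[field] = counts.most_common()
    return summary


# Hypothetical sample of one month's detections.
events = [
    {"team": "finance", "tool": "ChatGPT", "data_type": "financial"},
    {"team": "finance", "tool": "ChatGPT", "data_type": "employee"},
    {"team": "engineering", "tool": "Claude", "data_type": "source_code"},
]
for field, ranking in summarize_events(events).items():
    print(field, ranking)
```

Tracking the same summary month over month shows whether a given mitigation is actually reducing usage in the teams it targets.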

Create incident response playbooks for different shadow AI scenarios. If someone uploads customer data to ChatGPT, what happens? Who gets notified? How quickly do we respond? Having clear processes reduces response time and improves outcomes.

Invest in threat hunting for shadow AI. Assign someone to actively look for suspicious patterns: unusual logins from unexpected countries, large data downloads followed by shadow AI uploads, etc. Proactive hunting catches things automated monitoring misses.

The Cost-Benefit Analysis: Is Strict Prevention Worth It?

Here's an uncomfortable question: is it worth spending massive resources to prevent all shadow AI usage?

The answer depends on your organization's risk profile. For a healthcare company handling HIPAA data, strict prevention is worth the cost. The regulatory fines and reputational damage from a breach justify significant investment in prevention.

For a tech company, a more permissive approach might be rational. Tech companies already understand AI. Their data is typically less heavily regulated than healthcare data. Developers using ChatGPT for code review might actually be more productive than without it. The cost of prevention might exceed the cost of risk.

Here's a framework for thinking about it:

Cost of Prevention = (tools + technology + staff + lost productivity)

Cost of Risk = (probability of breach) × (damage if breach occurs)

If Cost of Prevention > Cost of Risk, your mitigation is probably too aggressive. If Cost of Prevention < Cost of Risk, you need stronger mitigation.
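To make the comparison concrete, here's a small worked sketch. All dollar figures and the breach probability are invented for illustration; plug in estimates from your own risk assessment.

```python
def cost_of_prevention(tools, technology, staff, lost_productivity):
    """Annual cost of prevention: the sum of the four components."""
    return tools + technology + staff + lost_productivity

def cost_of_risk(breach_probability, breach_damage):
    """Expected annual loss: probability of a breach times its damage."""
    return breach_probability * breach_damage

def assess(prevention, risk):
    """Apply the comparison rule from the framework."""
    if prevention > risk:
        return "mitigation is probably too aggressive"
    if prevention < risk:
        return "stronger mitigation is needed"
    return "costs are balanced"

# Hypothetical inputs: $500,000 spent on prevention,
# a 12.5% annual breach probability, and $2,000,000 in damage per breach
prevention = cost_of_prevention(100_000, 50_000, 200_000, 150_000)
risk = cost_of_risk(0.125, 2_000_000)
verdict = assess(prevention, risk)  # prevention 500,000 vs. risk 250,000
```

With these made-up inputs, prevention costs twice the expected loss, so the framework says the program is probably too aggressive. Raise the damage estimate to $8,000,000 and the conclusion flips.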

For most mid-sized organizations, the optimal strategy is somewhere in the middle:

- Provide excellent approved alternatives so users don't feel pressured to go rogue

- Implement monitoring that catches high-risk usage

- Enforce consequences for violations

- Create culture that respects both productivity and security

- Regularly reassess risk profile and adjust strategy

This balanced approach reduces shadow AI usage significantly without being so restrictive that productivity suffers.

Future Trends: The Shadow AI Problem Will Get Worse (Then Better)

Shadow AI is going to get more prevalent before it gets less prevalent. Here's why.

AI capabilities are improving and becoming more accessible. ChatGPT is more capable than GPT-3. Claude is competitive with ChatGPT. Open-source models are approaching commercial quality. As capabilities improve, shadow AI becomes more appealing because the risk-reward ratio shifts toward more reward.

Consumer AI pricing is trending down. OpenAI charges

Regulation is coming. As shadow AI becomes widespread, regulators will face pressure to act. New regulations will likely require shadow AI disclosure. Some industries might ban certain types of shadow AI usage. These regulations will create compliance incentives for approved tools, reducing shadow AI usage in regulated industries.

But here's the counterintuitive part: this regulatory pressure might actually make the problem worse short-term. As regulations become stricter, more organizations will crack down on shadow AI. Employees, frustrated by restrictions, will find more creative ways to bypass controls. Shadow AI goes from visible to invisible. The last 40% of shadow AI usage is the hardest to detect.

Long-term, I expect shadow AI to be solved by legitimacy. Enterprise AI tools will become so good, so secure, and so cheap that shadow alternatives become unnecessary. An organization can give every employee access to an enterprise ChatGPT equivalent for $5/month. No reason to use public ChatGPT. The tool is approved, governance is automated, security is built in.

We're not there yet. We're in the phase where shadow AI is endemic and growing. But the trajectory is clear: eventually, approved tools will dominate because they'll be better, cheaper, and more trustworthy than shadow alternatives.

Building Your Shadow AI Mitigation Program: A Roadmap

If you're starting from zero, here's a pragmatic roadmap for building an effective shadow AI mitigation program.

Month 1-2: Assessment and Visibility

Conduct surveys of employees about shadow AI usage. Review network logs for AI tool access. Interview IT and security teams about what they're seeing. Create a baseline of current shadow AI adoption and risk profile.

Month 2-3: Approval Review

Audit your current approved AI tools. Do they cover common use cases? Are they easy to use? Are they secure? Where are the gaps? Get feedback from employees about which tools they actually want to use.

Month 3-4: Gap Filling

Invest in new approved tools that address the biggest gaps. If employees are using shadow AI for data analysis because you don't have an approved tool, fix that. If they're using it for writing because your approved tool is terrible, fix that.

Month 4-5: Communication and Training

Launch a communication campaign about shadow AI risks. Make it specific and relevant to your organization's risk profile. Train employees on approved tools. Involve leadership visibly.

Month 5-6: Technical Controls

Implement DLP, EDR, monitoring, and other technical controls. Start with low-friction controls (detection and alerting) before moving to high-friction controls (blocking).

Month 6-12: Iteration and Improvement

Monitor adoption. Iterate on approved tools based on feedback. Adjust communication and training based on detection data. Gradually increase enforcement as awareness improves.

The timeline is 6-12 months for a basic program, 12-24 months for a comprehensive program.

Conclusion: Shadow AI Is Solvable

Shadow AI is a real problem. The statistics are alarming. The risks are genuine. The consequences of inaction can be catastrophic.

But it's also solvable. It's not like ransomware, where an organization is either infected or not. Shadow AI exists on a spectrum. Your goal isn't to eliminate it completely. Your goal is to move it from "endemic and uncontrolled" to "monitored and managed" to "rare and caught immediately."

The key insight is that shadow AI isn't primarily a security problem. It's a user experience problem. Employees aren't using unapproved AI tools out of malice. They're using them because they perceive that approved tools don't meet their actual needs. Fix the user experience problem and you fix most of the shadow AI problem.

That means:

- Understand what employees actually need: Conduct surveys, analyze logs, talk to users

- Provide approved alternatives that are better: Faster, more capable, easier to use

- Communicate clearly about risks: Be specific and tie risks to employee interests

- Implement technical controls: Detect violations, prevent high-risk scenarios

- Iterate and improve: Track metrics, gather feedback, refine strategy

Organizations that follow this approach can see a significant reduction in shadow AI usage within 6-12 months. Not elimination, but a meaningful drop. More importantly, they maintain visibility over remaining shadow AI usage so they can respond to high-risk incidents quickly.

The organizations that struggle are those that try to prevent all shadow AI through technical controls alone. They end up with invisible shadow AI that's harder to detect and more dangerous.

The future state isn't "no shadow AI." It's "shadow AI is rare, detected quickly, and our approved tools are so good that employees prefer them to alternatives." That's achievable with the right strategy, commitment, and resources.

Start with assessment and visibility. Build from there. Your organization's data security, regulatory compliance, and competitive advantage depend on it.

FAQ

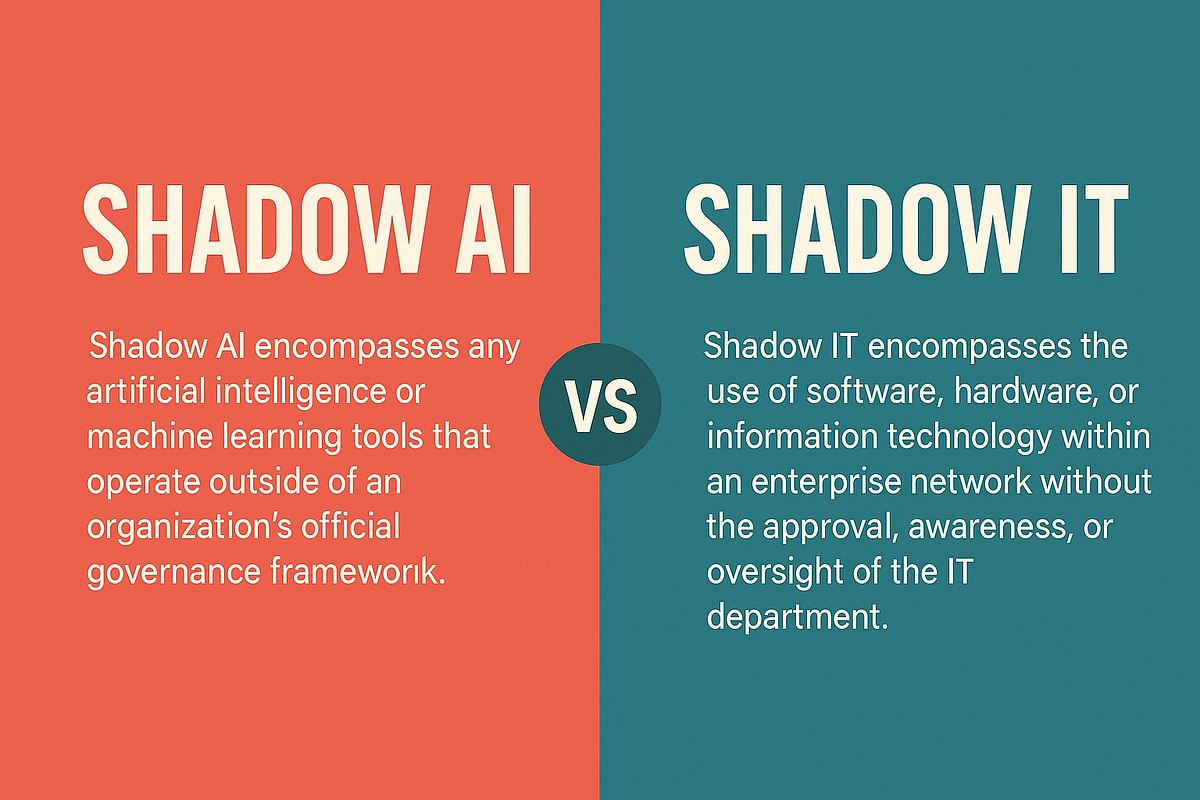

What exactly is shadow AI and how is it different from shadow IT?

Shadow AI refers specifically to unauthorized artificial intelligence tools and services that employees use without IT approval. Shadow IT is the broader category of any unauthorized technology. Shadow AI is a subset of shadow IT, but it's accelerating because AI tools are incredibly easy to access, free or cheap, and immediately useful. An employee using an unapproved AI chatbot is shadow AI. An employee installing unauthorized software is shadow IT. Most organizations today focus on shadow IT controls, but shadow AI is becoming the more pressing problem because AI adoption is outpacing IT governance.

Why don't companies just block access to shadow AI tools like ChatGPT?

Blocking is possible but ineffective at scale. Employees use VPNs to bypass network blocks. They access tools from personal devices on personal networks. They use mobile apps instead of web interfaces. They route traffic through proxies. For every control you implement, sophisticated users find a workaround. The more restrictive you become, the more covert shadow AI usage becomes, which can be worse than visible usage because you lose monitoring and detection capability. The most effective approach is to provide better approved alternatives combined with detection and graduated consequences for violations.

What data is most at risk when employees use shadow AI tools?

The most at-risk data includes customer personal information (names, email, phone, addresses), financial data (account numbers, transaction history, pricing), healthcare information (patient names, diagnoses, treatment plans), intellectual property (algorithms, code, research), employee data (payroll, performance reviews, personal information), and proprietary business data (strategies, competitor analysis, financial forecasts). Any of this data uploaded to a shadow AI tool could be used for training, incorporated into competing products, sold to third parties, or accessed by unauthorized actors if the AI service is compromised.

What are the regulatory consequences of shadow AI usage in my organization?

Regulatory consequences depend on your industry and the data involved. GDPR violations carry fines up to 4% of global annual revenue. HIPAA violations are

How can I detect shadow AI usage in my organization if employees are trying to hide it?

Detection is challenging but possible. Use EDR tools to monitor for ChatGPT, Claude, and other AI tools in browser history and network traffic. Implement DLP tools that flag when data is uploaded to external AI services. Use network proxies to analyze encrypted traffic patterns (ChatGPT has characteristic traffic signatures). Deploy behavioral analytics that identify unusual cloud upload patterns. Monitor for VPN usage combined with AI tool access. Conduct anonymous user surveys about tool usage. Review security logs for attempts to bypass controls. No single method catches everything, but combining multiple approaches gives reasonable visibility.

What's the best approved AI tool for our organization to standardize on?

The best tool depends on your organization's specific use cases, security requirements, and budget. Consider tools that offer enterprise features like user management, data governance, audit logs, and compliance certifications. Evaluate based on: Does it meet the use cases employees need? Is the UX competitive with shadow alternatives? Does it have strong security and compliance controls? Is the pricing sustainable? Platforms like Runable offer AI-powered capabilities for presentations, documents, reports, images, and videos starting at $9/month, which can provide good value for organizations seeking approved alternatives. Whatever tool you choose, involve employees in the selection process so they feel heard and are more likely to adopt it.

How do I convince my organization's executives to care about shadow AI when they don't see it as a problem?

Tie shadow AI to business metrics that executives care about: revenue risk (IP leaks affecting competitive advantage), compliance risk (regulatory fines and audit failures), operational efficiency (standardized tools vs. fragmented shadow AI), and security risk (data breach costs). Quantify as much as possible, using figures from your own risk assessment. Statements of the following form tend to land (the numbers here are placeholders, not real data): "We have a 23% risk of a major data breach in the next three years if shadow AI remains uncontrolled." "Our IP exposure through shadow AI is worth approximately $50 million in lost competitive advantage." "We have compliance violations in 47% of our organization." Executives respond to numbers. Also, involve the board or audit committee if appropriate. External pressure accelerates internal action.

What's the difference between blocking shadow AI and detecting it?

Blocking prevents shadow AI usage by restricting access at the network or device level. When an employee tries to reach ChatGPT, the connection is refused. Blocking is more secure but creates friction, and users find workarounds. Detecting identifies shadow AI usage after the fact through logs, monitoring, and analysis. When an employee uses ChatGPT, the organization detects it and responds. Detecting is less secure (the bad thing already happened) but causes less friction. Most effective programs use a combination: detect by default, block for high-risk scenarios (restricted data), and allow with monitoring for low-risk usage. Pure blocking drives shadow AI underground. Pure detection lacks preventive capability. Hybrid is best.

How can we make approved AI tools appealing enough that employees stop using shadow AI?

Make approved tools faster, more capable, and easier to use than shadow alternatives. Involve employees in tool selection and iteration. Incorporate feedback immediately. Ensure approved tools have the same UX polish as consumer tools (which have massive budgets for UX design). Offer training and support so employees know how to use tools effectively. Create communities of practice around approved tools where power users share tips. Make approved tools visibly endorsed by leadership. Reward teams that achieve high approved-tool adoption. Recognize that adoption isn't technical, it's behavioral and cultural. If employees prefer approved tools because they're better, they'll use them. If they feel forced to use them, they'll go rogue.

What's the ROI of investing in shadow AI mitigation?

ROI is calculated as (benefits - costs) / costs. Benefits include: avoided compliance fines (highly material), prevented data breaches (averages

Key Takeaways

- Shadow AI adoption is endemic: 58-59% of workers use unapproved AI tools despite company alternatives being available

- High-risk behavior is normalized: 63% of employees believe it's acceptable to use AI without IT approval, creating cultural blind spots

- Data exposure is massive: 33% have shared research, 27% have shared employee data, 23% have shared financial information with unapproved tools

- Executive behavior enables shadow AI: C-suite and managers are more likely than junior staff to use unauthorized tools and support others doing so

- Mitigation requires balance: Pure prevention drives shadow AI underground; the most effective approach combines approved tools, detection, and cultural change

- Regulatory risk is material: GDPR fines reach up to 4% of global annual revenue, HIPAA penalties can reach $50K per violation, creating compliance urgency

- Meet employees where they are: Shadow AI thrives because approved tools don't meet actual needs—providing better alternatives is more effective than restrictions
