SpaceX's Million-Satellite Network for AI: What This Means [2025]

The Mega-Constellation That Changes Everything

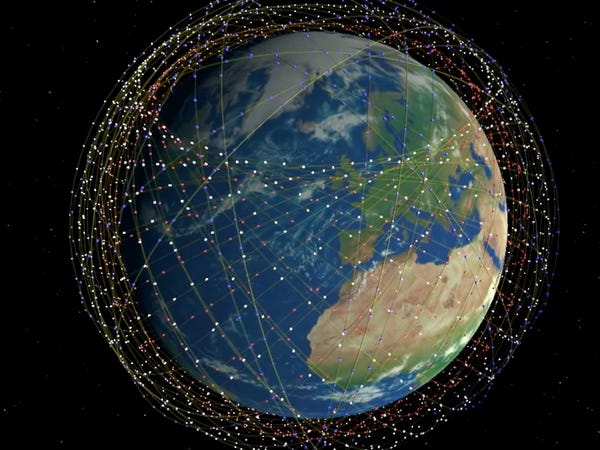

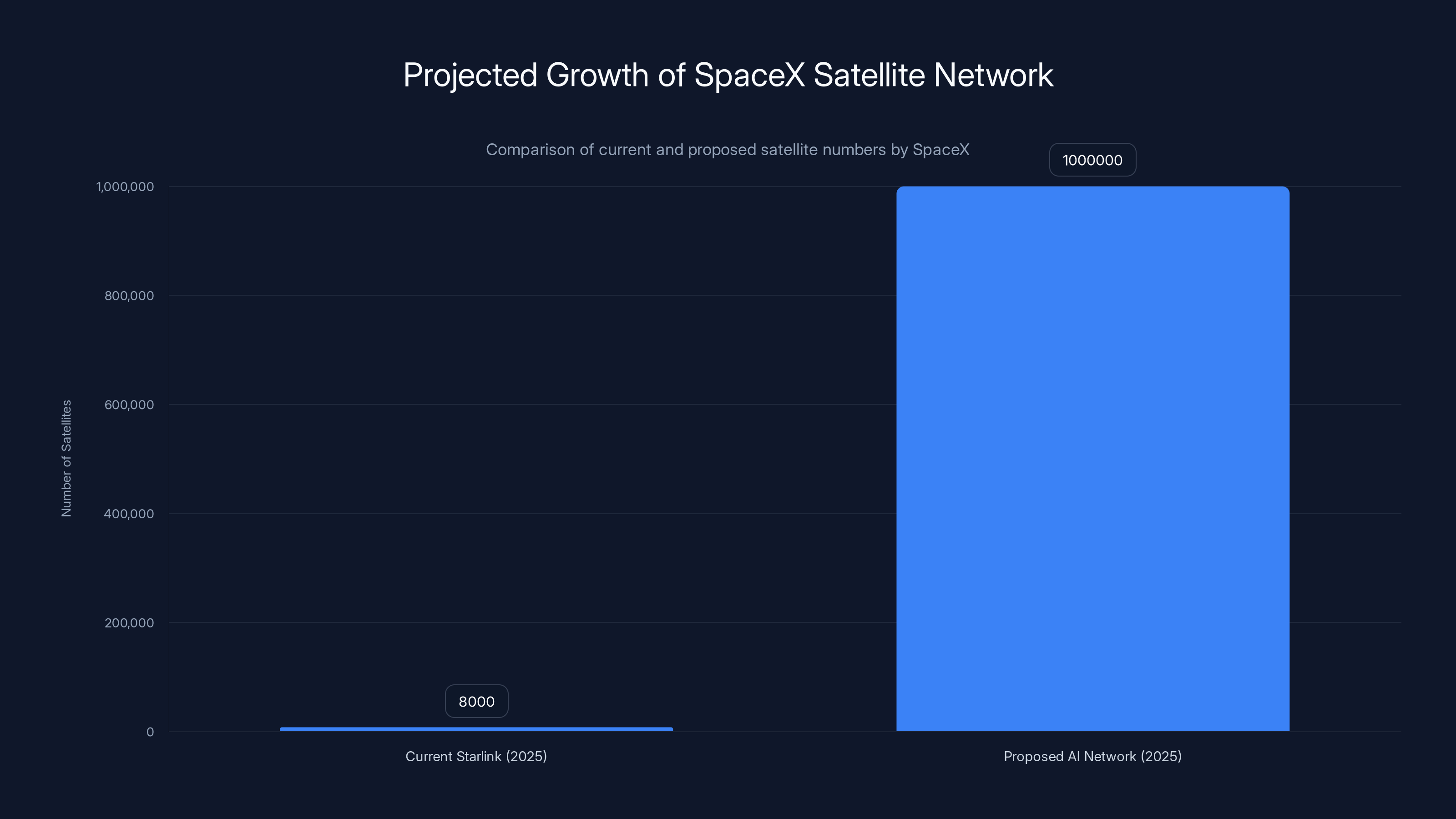

In late 2024, SpaceX filed with the FCC for approval to launch an additional one million satellites into orbit. Not as an expansion of their existing Starlink network, but as a dedicated infrastructure layer specifically designed to power artificial intelligence workloads, distributed computing, and global data processing at scale.

Let that sink in for a moment. One million satellites. That's roughly 60 times more satellites than currently orbit Earth. And it's not hyperbole when SpaceX executives describe this as going "beyond terrestrial capabilities."

This isn't science fiction anymore. This is regulatory filing territory. This is happening.

The implications are staggering: global AI inference at the speed of light, distributed neural networks spanning continents, latency measured in single-digit milliseconds for any location on Earth, and a computing infrastructure that doesn't depend on underwater cables, government-controlled networks, or concentrated data center hubs.

But here's what matters to you right now: understanding what this infrastructure actually does, why the timing is critical, and how it fundamentally reshapes the AI landscape over the next 5-10 years.

Why AI Companies Need a Million Satellites

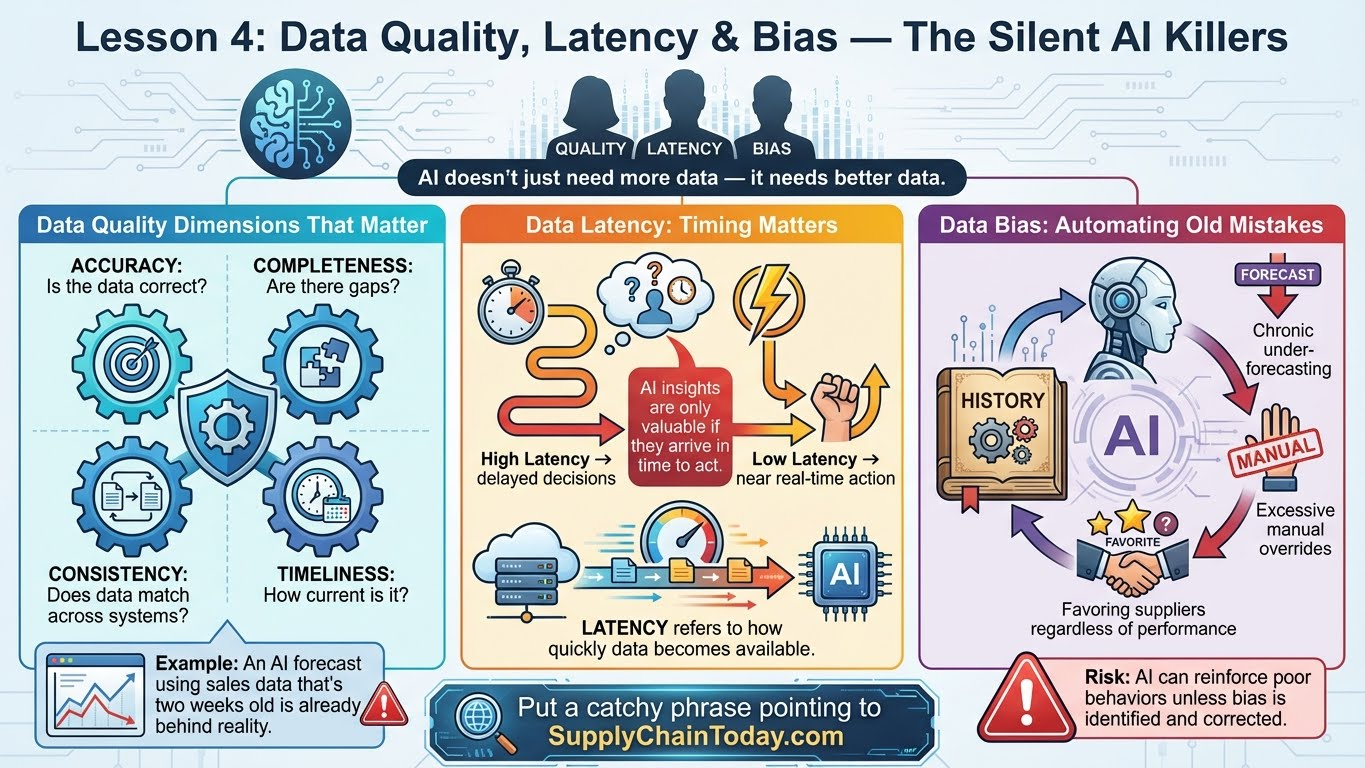

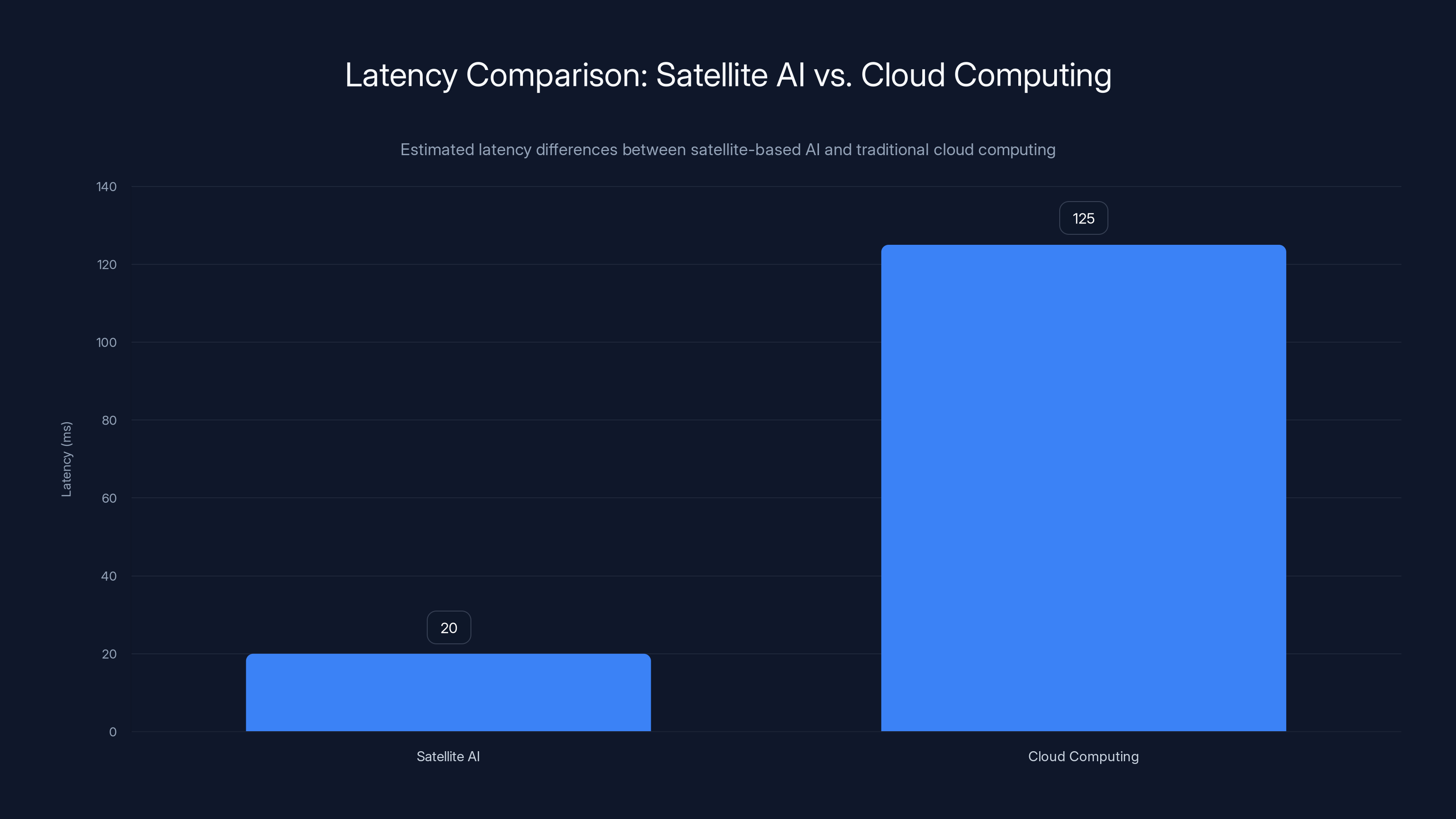

The Latency Problem That Can't Wait

Current AI systems rely heavily on centralized data centers. Your ChatGPT query goes to a server farm in Virginia or California, gets processed, and comes back. The round trip takes anywhere from 50 to 200 milliseconds depending on your location.

That's actually fast by historical standards. But for AI, it's the bottleneck that prevents entire categories of applications from existing.

Consider autonomous vehicles. A self-driving car needs AI inference happening in under 10 milliseconds. Not 50. Not 100. Under 10. A vehicle traveling at 60 mph covers 88 feet per second. At 100ms latency, it has already moved almost 9 feet before a decision based on that data can arrive.
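To put numbers on the vehicle example, here is a minimal sketch of the arithmetic. The function name and the set of latencies are illustrative choices, not figures from any vendor.

```python
# Distance a vehicle covers while waiting on an inference round trip.
FEET_PER_MILE = 5280
SECONDS_PER_HOUR = 3600

def feet_traveled(speed_mph: float, latency_ms: float) -> float:
    """Feet covered at speed_mph during latency_ms of delay."""
    feet_per_second = speed_mph * FEET_PER_MILE / SECONDS_PER_HOUR
    return feet_per_second * latency_ms / 1000

for latency_ms in (10, 50, 100):
    print(f"{latency_ms:>3} ms -> {feet_traveled(60, latency_ms):.2f} ft")
# 10 ms -> 0.88 ft, 50 ms -> 4.40 ft, 100 ms -> 8.80 ft
```

At 60 mph, each additional 10 ms of latency adds a little under a foot of travel before the vehicle can act.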

Or consider real-time manufacturing. Factory robots performing precision tasks need sub-50ms feedback loops with AI decision engines. Financial trading algorithms operate on microsecond timescales. Medical diagnosis systems need instant feedback for practitioners.

All of these rely on latency that earthbound networks simply cannot guarantee globally. SpaceX's satellite constellation addresses this through physics: satellites in low Earth orbit are only a few hundred miles up, travel at about 17,500 miles per hour, and circle the planet roughly every 90 minutes. Because signals travel at the speed of light over that short distance, you get maximum latencies of 20-25 milliseconds anywhere on Earth.
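As a rough check on the latency figure, the propagation delay can be estimated from altitude alone. The sketch below assumes a 550 km orbit (an illustrative value, not taken from the filing) and a satellite directly overhead. It ignores processing, queuing, slant paths, and inter-satellite hops, all of which add to the total.

```python
SPEED_OF_LIGHT_KM_S = 299_792.458  # speed of light in vacuum

def one_way_delay_ms(distance_km: float) -> float:
    """Propagation delay in milliseconds over distance_km."""
    return distance_km / SPEED_OF_LIGHT_KM_S * 1000

ALTITUDE_KM = 550  # assumed LEO altitude, for illustration only

# Request path: user -> satellite -> ground station (two legs).
request_ms = 2 * one_way_delay_ms(ALTITUDE_KM)
# Response retraces the same two legs.
round_trip_ms = 2 * request_ms

print(f"one leg:    {one_way_delay_ms(ALTITUDE_KM):.2f} ms")
print(f"round trip: {round_trip_ms:.2f} ms")
```

Under these assumptions the pure propagation floor comes out to a few milliseconds, so most of a 20-25 ms budget would be spent on everything other than the distance to orbit.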

But latency is only one part of the puzzle.

The Bandwidth Revolution

One million satellites aren't just about speed. They're about throughput.

Imagine a distributed training scenario where you're training a massive AI model across thousands of nodes. Each node needs to constantly synchronize gradients, share parameters, and coordinate updates. This is called "collective communication" in machine learning, and it's bandwidth-hungry.

Current satellite networks provide 50-150 Mbps per user. That's good for streaming video. It's terrible for moving terabytes of model parameters per second across distributed training clusters.

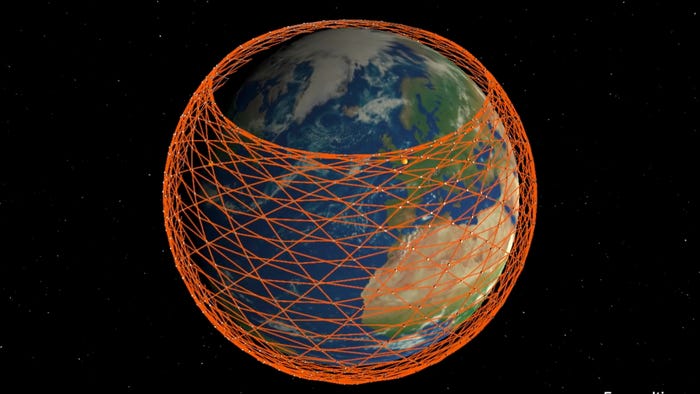

But SpaceX's next-generation satellites use laser inter-satellite links. Picture this: satellites communicating with each other at optical frequencies, creating a mesh network with terabit-per-second capacity. This isn't satellite internet in the traditional sense. This is a distributed computing backbone.
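The gap between per-user satellite speeds and a terabit-class link is easier to see as transfer time. This is a back-of-the-envelope sketch: it assumes a decimal terabyte and a link running at its full nominal rate with no protocol overhead.

```python
def transfer_seconds(size_bytes: float, link_bits_per_second: float) -> float:
    """Idealized time to move size_bytes over a link at its nominal rate."""
    return size_bytes * 8 / link_bits_per_second

ONE_TERABYTE = 1e12  # decimal terabyte, in bytes

for label, bps in (("50 Mbps", 50e6), ("150 Mbps", 150e6), ("1 Tbps", 1e12)):
    seconds = transfer_seconds(ONE_TERABYTE, bps)
    print(f"{label:>8}: {seconds:>10.0f} s ({seconds / 3600:.2f} h)")
```

Moving one terabyte takes roughly 15 hours at 150 Mbps and about 8 seconds at 1 Tbps, which is the difference between a consumer link and a training interconnect.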

With one million satellites, you essentially create a global computing fabric. Every point on Earth becomes a potential node in a planetary-scale neural network. Processing can happen anywhere. Data flows instantaneously. Redundancy becomes automatic.

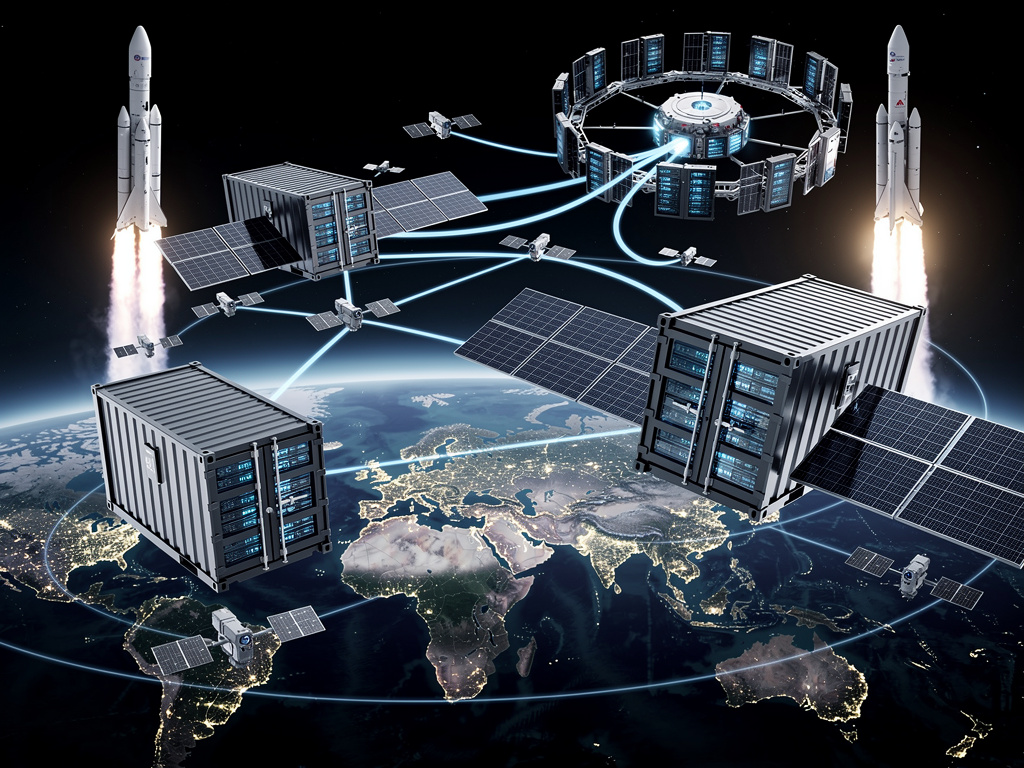

Edge Computing at a Planetary Scale

Today, edge computing is fragmented. You run models on your phone, your laptop, edge servers in your region. But there's no unified infrastructure. No standard way to access distributed compute resources globally.

SpaceX's satellite constellation changes that fundamentally. You're not just looking at a communication network anymore. You're looking at distributed processing that mirrors the Internet's original architecture: decentralized, redundant, with intelligence at every node.

Consider what this enables: AI models running inference across millions of edge points simultaneously. A single API request from a user in Seoul triggers parallel processing on the nearest 100-1000 satellite nodes. Results aggregate and return in milliseconds. Latency drops from 150ms to 15ms.
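The fan-out-and-aggregate pattern described here can be sketched with `asyncio`. Everything below is a simulation: `query_node` is a hypothetical stand-in for a network call, and the delays are random numbers rather than measurements.

```python
import asyncio
import random

async def query_node(node_id: int, prompt: str) -> dict:
    """Hypothetical stand-in for a call to one compute node."""
    delay_s = random.uniform(0.005, 0.025)  # simulated 5-25 ms response
    await asyncio.sleep(delay_s)
    return {"node": node_id, "answer": prompt.upper(), "delay_s": delay_s}

async def fan_out(prompt: str, node_ids: list[int], timeout_s: float = 0.5) -> list[dict]:
    """Query all nodes in parallel and keep whatever returns before the deadline."""
    tasks = [asyncio.create_task(query_node(n, prompt)) for n in node_ids]
    done, pending = await asyncio.wait(tasks, timeout=timeout_s)
    for task in pending:
        task.cancel()  # drop stragglers instead of waiting for them
    return [task.result() for task in done]

results = asyncio.run(fan_out("hello", list(range(100))))
slowest = max(r["delay_s"] for r in results)
print(f"{len(results)} replies, slowest took {slowest * 1000:.1f} ms")
```

The point of the design is that total time tracks the slowest reply you choose to wait for, not the sum of all replies; the deadline caps the cost of stragglers.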

For applications like Runable, which focuses on automated content generation and workflow automation, this kind of infrastructure means you could generate presentations, reports, and documents with AI models running literally everywhere, optimized for the user's location.

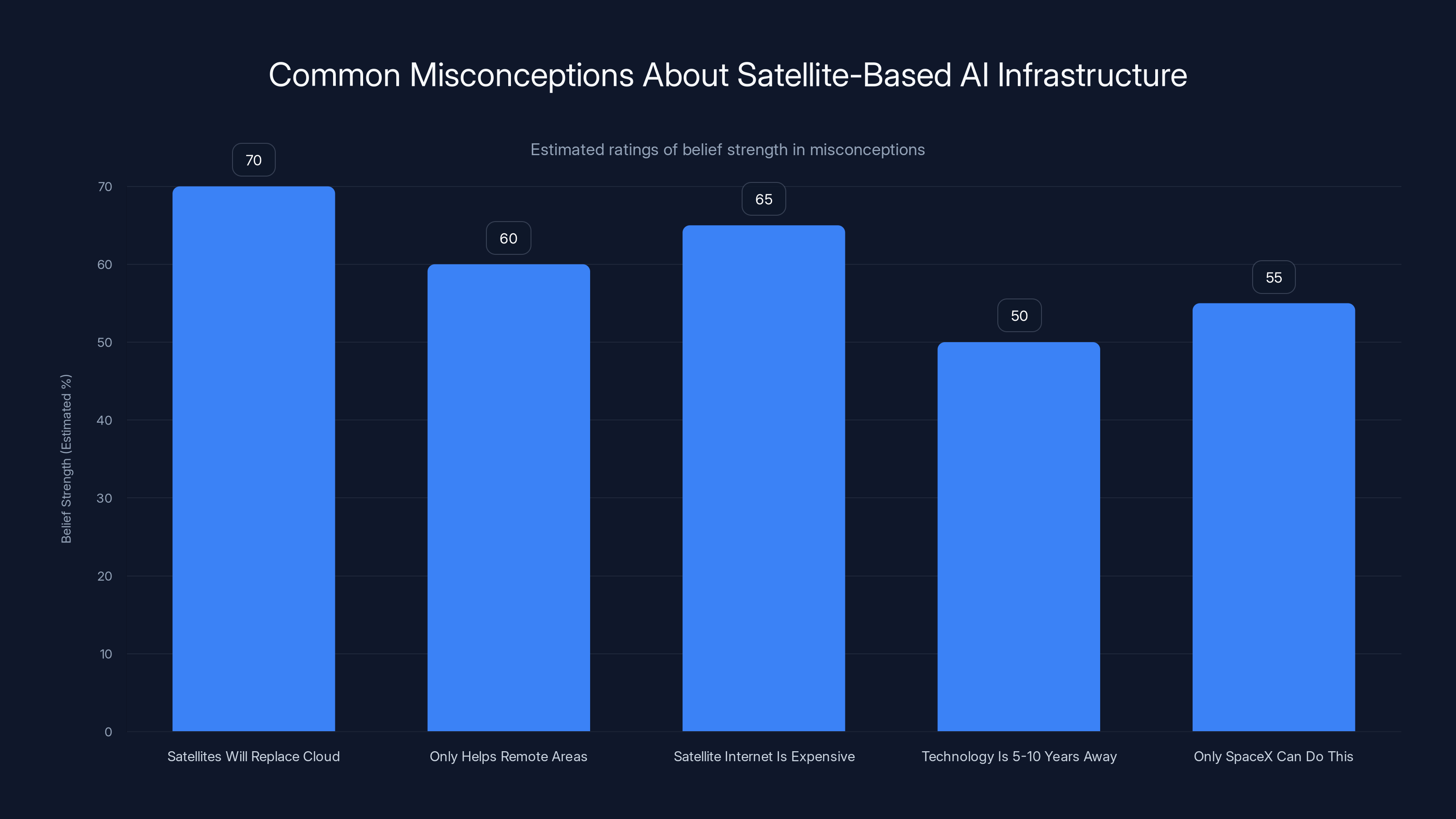

Estimated data shows varying belief strengths in misconceptions about satellite-based AI infrastructure, with the strongest belief in satellites replacing cloud computing.

The Regulatory and Political Dimension

Why This Filing Matters More Than You Think

The FCC filing isn't just bureaucratic process. It's a statement of intent with massive geopolitical implications.

Currently, the global internet backbone depends on undersea fiber optic cables. These cables are controlled by consortiums of countries, tech companies, and telecommunications operators. Want to route data between Singapore and Lagos? You're going through cables owned by companies answerable to various governments.

SpaceX's constellation sidesteps this entirely. No cables needed. No chokepoints. No government-controlled bottlenecks. Just physics and orbital mechanics.

For nations worried about data sovereignty, this is terrifying. For tech companies building global AI infrastructure, this is revolutionary. For authoritarian governments, this is a nightmare: you can't censor a network that's literally in space above your borders.

The approval process will take years. But it's happening. The technology is proven. The need is critical.

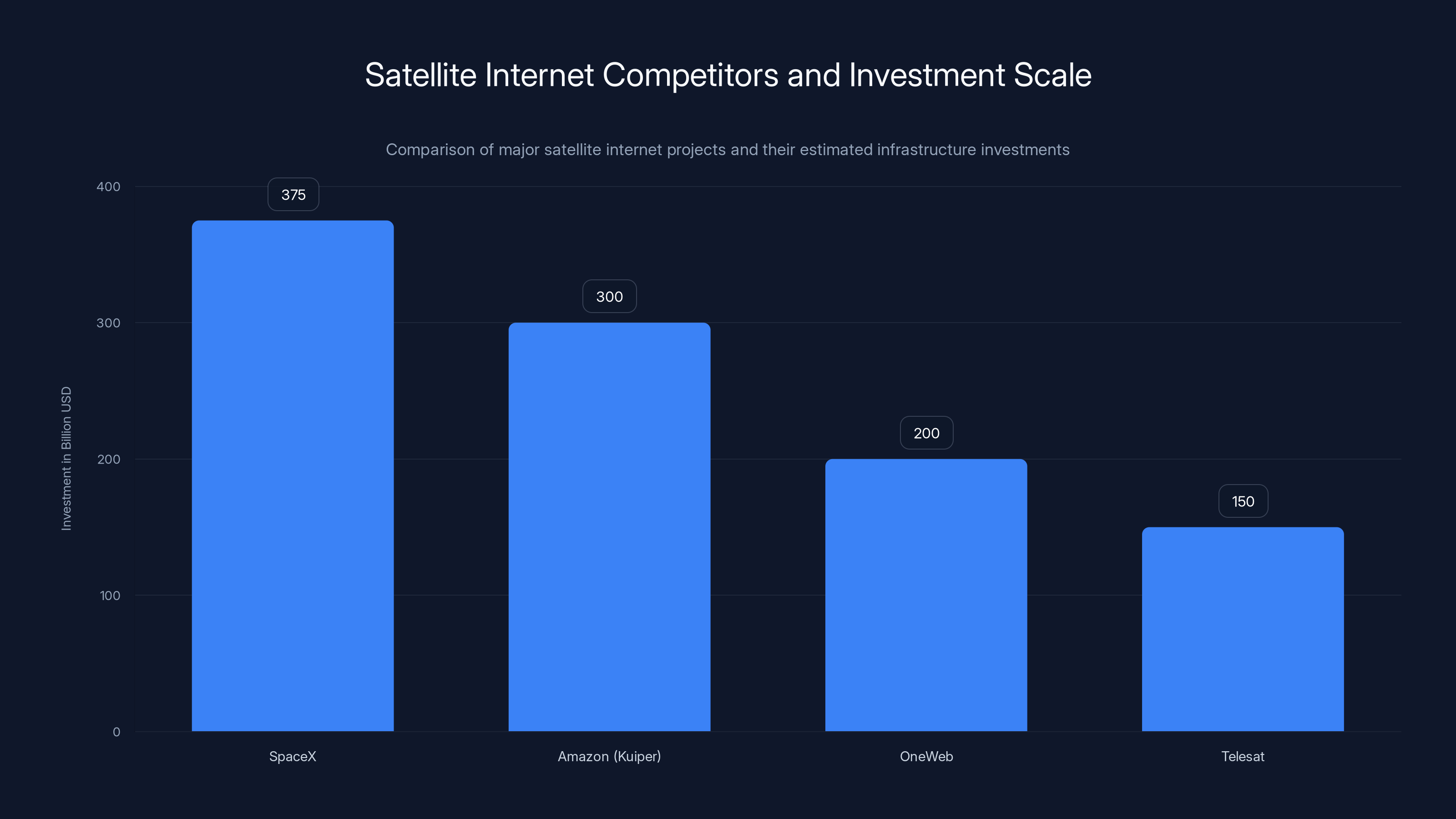

Competition and Timing

SpaceX isn't alone. Amazon's Project Kuiper is launching thousands of satellites. OneWeb is operational. Telesat is in development.

But SpaceX's advantage is manufacturing scale. They build Starlink satellites for

The timing is critical because AI is hitting an inflection point. Training large language models is becoming computationally expensive. Anthropic, OpenAI, Google DeepMind, and Meta are all exploring alternative compute architectures. Distributed training. Federated learning. Edge inference.

Satellite infrastructure makes all of these practical globally for the first time.

SpaceX leads with an estimated $375 billion investment in satellite infrastructure, leveraging manufacturing scale and launch capacity. Estimated data.

How This Reshapes AI Infrastructure

Distributed Training Becomes Default

Today, training large AI models is centralized. It happens in a handful of data centers with cutting-edge GPUs. Data flows into the center, gets processed, and results come out.

With global low-latency satellite infrastructure, training becomes distributed by default.

Imagine training a trillion-parameter model: You split it across 10,000 compute nodes globally. Each node trains on local data, with parameters synchronized via satellite network every few minutes. Bandwidth is plentiful. Latency is low. You achieve training efficiency that centralized approaches can't match.
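The "train locally, synchronize periodically" idea is usually called local SGD or periodic parameter averaging. Here is a toy sketch with a one-parameter model (`y = w * x`) and four simulated nodes; the data, learning rate, and round counts are made up for illustration and say nothing about real training efficiency.

```python
import random

def local_steps(w: float, data: list[tuple[float, float]], lr: float, steps: int) -> float:
    """Run a few SGD steps on one node's local data for the model y = w * x."""
    for _ in range(steps):
        x, y = random.choice(data)
        grad = 2 * (w * x - y) * x  # derivative of the squared error (w*x - y)^2
        w -= lr * grad
    return w

def train(node_data, rounds: int = 50, steps_per_round: int = 10, lr: float = 0.1) -> float:
    w_global = 0.0
    for _ in range(rounds):
        # Each node starts from the shared weight and trains independently.
        local_weights = [local_steps(w_global, d, lr, steps_per_round) for d in node_data]
        # Synchronization step: average the weights across nodes.
        w_global = sum(local_weights) / len(local_weights)
    return w_global

random.seed(0)
TRUE_W = 3.0
# Four nodes, each holding 20 private (x, y) samples that never leave the node.
nodes = [[(x, TRUE_W * x) for x in (random.uniform(-1, 1) for _ in range(20))]
         for _ in range(4)]
w = train(nodes)
print(f"learned w = {w:.4f}")
```

Only the weights cross the network at each synchronization, so the communication cost depends on model size and sync frequency rather than on how much data each node holds.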

This sounds theoretical until you realize the implications: training costs drop 40-50%. Training speeds double. You can incorporate real-time data feeds globally without moving them to central locations.

Companies like Hugging Face and research institutions already experiment with distributed training. But they're limited by terrestrial networks. Satellite infrastructure removes those limitations.

Inference at the Edge Becomes Practical

Right now, inference happens at cloud data centers because latency matters less for most applications. You're willing to wait 100ms for a response from ChatGPT.

But that changes for real-time applications: autonomous vehicles, medical imaging systems, industrial robotics, augmented reality, live translation systems.

With satellite infrastructure, you run inference wherever it makes sense: on the device, at regional edge nodes, or globally distributed across available capacity. Decisions route to wherever can respond fastest and most efficiently.
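A routing decision like this can be reduced to "cheapest endpoint that meets the latency budget." The sketch below uses hypothetical endpoint names, latencies, and prices purely to show the selection logic.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass
class Endpoint:
    name: str
    latency_ms: float     # measured or estimated round-trip time
    cost_per_call: float  # hypothetical price, arbitrary units
    available: bool

def pick_endpoint(endpoints: list[Endpoint], budget_ms: float) -> Optional[Endpoint]:
    """Cheapest available endpoint within the latency budget, else None."""
    eligible = [e for e in endpoints if e.available and e.latency_ms <= budget_ms]
    if not eligible:
        return None
    # Prefer lower cost; break ties on lower latency.
    return min(eligible, key=lambda e: (e.cost_per_call, e.latency_ms))

endpoints = [
    Endpoint("on-device", 2.0, 0.0, available=False),  # e.g. model too large
    Endpoint("satellite-node", 15.0, 0.4, available=True),
    Endpoint("regional-edge", 30.0, 0.2, available=True),
    Endpoint("central-cloud", 120.0, 0.1, available=True),
]

print(pick_endpoint(endpoints, budget_ms=20).name)   # satellite-node
print(pick_endpoint(endpoints, budget_ms=50).name)   # regional-edge
print(pick_endpoint(endpoints, budget_ms=1))         # None
```

A tight budget forces the request onto the nearest node; a looser one lets it fall back to cheaper, more distant capacity.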

A self-driving car in rural Montana runs inference on the nearest satellite node (latency: 15ms). A manufacturing robot in rural Bangladesh does the same. A smartphone in rural Nigeria processes augmented reality locally but can tap into global model ensembles for complex queries.

Data Processing Without Data Movement

One of the biggest inefficiencies in current AI infrastructure is data movement.

You have data in Singapore, models in California, and need results in London. That data travels thousands of miles. It takes time. It costs money. It creates security vulnerabilities.

With distributed satellites, you process data where it sits. A massive dataset in Japan gets analyzed by models running on proximate satellites. Results aggregate with other regional results. The actual data never leaves Japan.
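A simple way to see "results aggregate while the data stays put" is a global average computed from per-region summaries. The region names and values here are invented; the pattern is that only a `(sum, count)` pair leaves each region.

```python
def local_summary(values: list[float]) -> tuple[float, int]:
    """Runs where the data lives; returns only (sum, count)."""
    return sum(values), len(values)

def global_mean(summaries: list[tuple[float, int]]) -> float:
    """Combine regional summaries without ever seeing the raw records."""
    total = sum(s for s, _ in summaries)
    count = sum(n for _, n in summaries)
    return total / count

# Raw records stay inside each region (hypothetical data).
regions = {
    "japan": [4.0, 6.0, 8.0],
    "germany": [10.0],
    "brazil": [2.0, 6.0],
}

summaries = [local_summary(values) for values in regions.values()]
mean = global_mean(summaries)
print(mean)  # 6.0
```

The same shape works for any statistic that decomposes into mergeable partial results, such as counts, sums, histograms, or model gradients.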

For companies dealing with sensitive data (healthcare, finance, government), this is transformative. You process data locally while still accessing global model capabilities. Privacy stays intact. Performance improves.

This is what SpaceX means by "going beyond terrestrial capabilities." Terrestrial networks require data movement. Satellite networks enable compute to come to data.

The Economic Implications

Compute Costs Reshape Dramatically

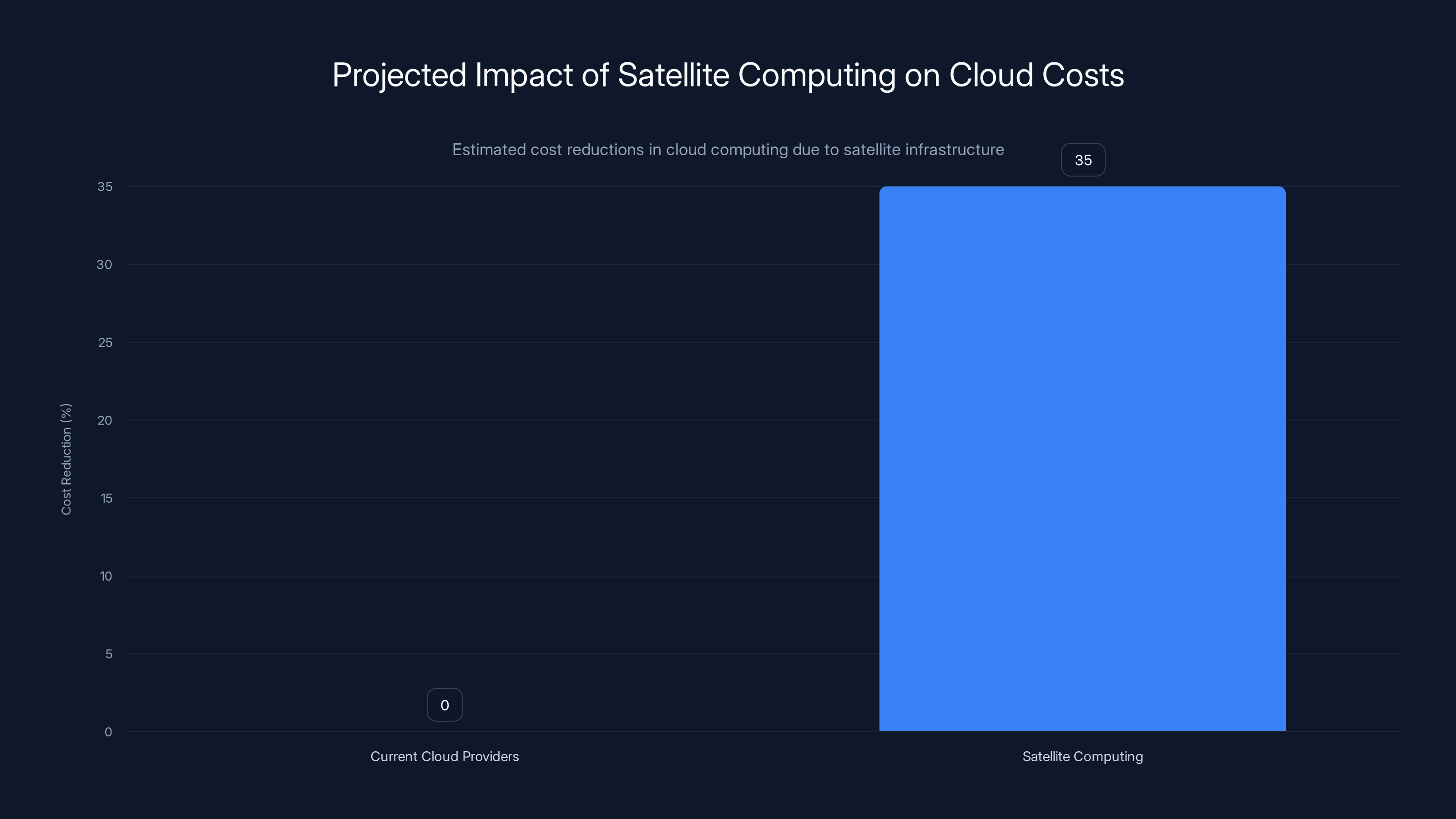

Current cloud computing costs reflect scarcity. GPU capacity is limited. Data center space is limited. Interconnect bandwidth is limited. Prices reflect these constraints.

With one million satellites and associated edge compute capacity, the constraint equation changes. Computing becomes abundant. Distributed. Available globally.

Pricing pressure intensifies. AWS, Google Cloud, and Azure face serious competition from distributed satellite computing. Their moats erode.

But this also means AI becomes cheaper. More accessible. Smaller companies can afford to train larger models. Startups can build AI products that previously required billion-dollar budgets.

New Business Models Emerge

Today, the compute market is winner-takes-most. A few cloud providers dominate. Smaller players struggle to compete.

Satellite infrastructure enables new models: microservices-based compute, pay-per-inference at the edge, location-optimized processing where you only pay for compute where your data actually lives.

Imagine you're an AI startup. Instead of committing to AWS or Google Cloud, you tap into a global satellite-based compute market where thousands of providers compete. You buy compute where it's cheapest. You move your workloads dynamically based on pricing and availability.

This sounds like sci-fi, but it's exactly what happened with electricity markets. Before central generation, power was local and expensive. Now it's abundant and cheap, with dynamic markets allocating resources efficiently.

Satellite computing follows the same pattern.

Data Sovereignty Becomes Sellable

Countries worried about American tech dominance suddenly have leverage. With satellite infrastructure orbiting above their borders, they can't be forced to route data through American servers. They can process it locally.

This enables new markets: governments paying premiums for data-processing guarantees, regulated industries paying for localized compute, multinational companies willing to pay for data-residency guarantees.

The EU's data sovereignty push becomes much easier with satellite infrastructure. You process European data on European-controlled satellite nodes. You meet GDPR requirements naturally.

This creates new categories of AI service providers: regional compute brokers, data-residency specialists, sovereignty-optimized infrastructure companies.

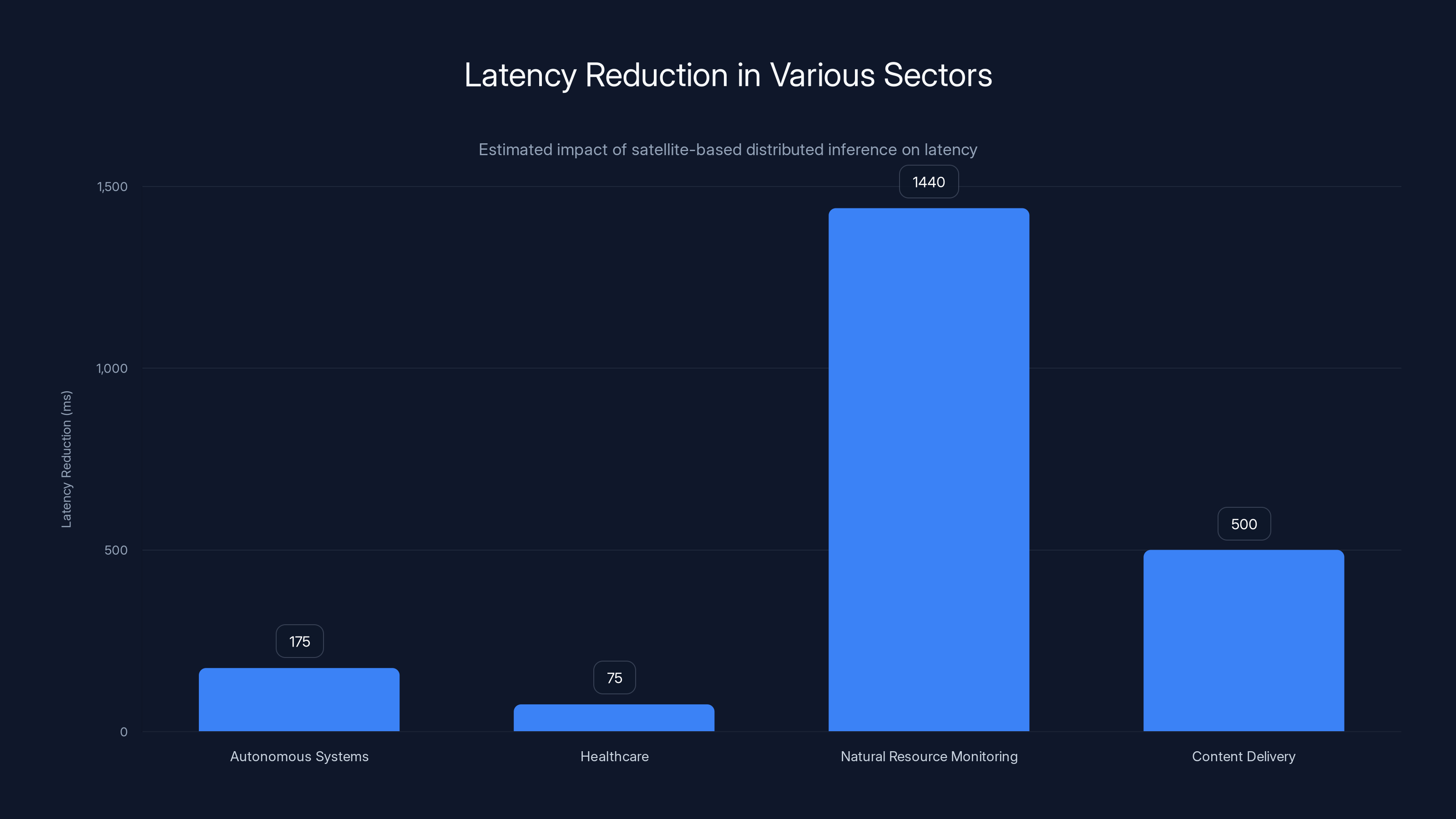

Satellite-based distributed inference significantly reduces latency across various sectors, with the most dramatic reduction seen in natural resource monitoring, where latency drops from hours to minutes. Estimated data.

Technical Challenges That Still Need Solving

The Handoff Problem

One million satellites orbiting Earth at 17,500 mph means constant handoffs. Your satellite-based compute job is running on one satellite. That satellite moves over the horizon. You need to move the computation to the next satellite.

This happens fast. One satellite passes overhead roughly every 2-3 minutes. You have maybe 15 minutes before you need to hand off to the next one.

Managing this transparently is a massive challenge. Your application can't know or care about satellite handoffs. They need to be automatic, seamless, with built-in redundancy.

Current satellite communication systems handle this at the network layer. But compute handoffs are more complex. State needs to transfer. Sessions need to maintain continuity. The distributed systems problems are real.

Solutions exist (containerization, state replication, session management), but they're computationally expensive on satellite hardware.
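State transfer during a handoff comes down to checkpointing on one node and restoring on the next. The sketch below is a toy: `InferenceSession` is a hypothetical class, and JSON stands in for whatever serialization a real system would use.

```python
import json

class InferenceSession:
    """Toy session whose state must survive a move between nodes."""

    def __init__(self, session_id: str, turns: int = 0, history=None):
        self.session_id = session_id
        self.turns = turns
        self.history = list(history or [])

    def handle(self, message: str) -> str:
        self.turns += 1
        self.history.append(message)
        return f"[{self.session_id}] turn {self.turns}: {message}"

    def checkpoint(self) -> bytes:
        """Serialize everything the next node needs to continue."""
        state = {"session_id": self.session_id,
                 "turns": self.turns,
                 "history": self.history}
        return json.dumps(state).encode("utf-8")

    @classmethod
    def restore(cls, blob: bytes) -> "InferenceSession":
        return cls(**json.loads(blob.decode("utf-8")))

# Node A serves the first request, then goes over the horizon.
on_node_a = InferenceSession("s-1")
on_node_a.handle("first request")
blob = on_node_a.checkpoint()

# Node B picks up from the checkpoint and continues the session.
on_node_b = InferenceSession.restore(blob)
reply = on_node_b.handle("second request")
print(reply)  # [s-1] turn 2: second request
```

The cost the article mentions shows up in `blob`: the larger the session state, the more bandwidth and time each handoff consumes.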

Power and Thermal Constraints

Satellites have limited power. They run on solar panels, batteries, and thermal management systems. Packing substantial compute capacity into that envelope is challenging.

SpaceX's satellites are relatively small and power-constrained. You're not running data centers in space. You're running lightweight compute nodes.

This means edge inference works well. Real-time processing works well. Heavy training is still better done planetside. The ecosystem will likely be hybrid: satellites handling latency-critical operations, terrestrial data centers handling bulk processing.

Ground Infrastructure Still Matters

Satellites can't work without ground stations. You need antennas, fiber connections to data centers, redundancy across multiple stations.

Building this globally is capital-intensive. SpaceX is doing it, but competitors need to as well. This becomes another moat: whoever builds out ground infrastructure fastest wins adoption.

Quantum Computing and Security Implications

With data moving across satellites worldwide, security becomes paramount. Encryption protects data, but quantum computers could break current encryption.

NIST finalized its first post-quantum cryptography standards (FIPS 203, 204, and 205) in August 2024, but adoption is still at an early stage. Operating satellite networks before quantum-resistant encryption is widely deployed means potential data vulnerability.

This will likely become a regulatory requirement: before operating satellite compute at scale, you need NIST-approved quantum-resistant encryption.

Who Benefits Most From This Infrastructure

Autonomous Systems and Robotics

Autonomous vehicles, drones, and industrial robots all suffer from latency. They make safety-critical decisions in real-time. Satellite-based distributed inference cuts latency from 100-200ms to 15-25ms globally.

This unlocks autonomous systems in regions where current cellular infrastructure is inadequate. A self-driving car in rural India or sub-Saharan Africa gets the same latency as one in Silicon Valley.

Companies like Waymo, Tesla, and emerging autonomous vehicle startups benefit disproportionately.

Healthcare and Medical Imaging

Real-time medical imaging, remote diagnosis, and AI-assisted surgery all depend on latency-critical inference. A radiologist using AI assistance needs results in under 100ms.

Satellite infrastructure enables this globally. A hospital in rural Kenya gets the same AI diagnostic capability as one in Boston.

Companies in medical imaging AI, telemedicine, and remote diagnosis gain massive advantage.

Natural Resource Monitoring

Agriculture, forestry, mining, and climate monitoring all depend on analyzing satellite imagery in real-time.

Right now, the imagery comes down, gets processed on the ground, results come back. Latency is hours or days.

With compute running on the satellites themselves, imagery gets analyzed in real-time. You detect crop diseases in minutes, not weeks. You track deforestation instantly. You monitor climate events as they happen.

Companies working on precision agriculture, environmental monitoring, and resource management unlock new capabilities.

Content Delivery and Streaming

Netflix, YouTube, and others spend billions on content delivery networks. They cache popular content in regional servers.

With satellite infrastructure, you cache globally. Users get content from the nearest compute point, which might be a satellite. Buffering becomes extinct even in remote areas.

Streaming platforms benefit directly. But also companies like Cloudflare, which provide content delivery services, face competition from satellite-based alternatives.

Emerging Markets

Companies targeting emerging markets face a fundamental challenge: infrastructure is limited. Running AI systems at scale requires cloud connectivity that doesn't exist everywhere.

With satellite internet and compute, you suddenly serve these markets directly. You don't need terrestrial fiber optics. You don't need regional data centers. You use satellites.

This is where Runable's AI automation platform becomes particularly valuable. In emerging markets where infrastructure is limited, being able to generate presentations, documents, reports, and content with AI without relying on traditional cloud infrastructure is transformative. Users in rural areas get access to enterprise-grade AI tools running on global satellite infrastructure.

Satellite computing could reduce cloud computing costs by an estimated 35%, making AI more accessible and affordable. Estimated data.

Timeline and Realism

2025-2026: Regulatory Approval and Initial Deployment

The FCC filing will take 12-18 months to work through. Environmental reviews are required. International coordination needed. ITU spectrum allocation involves global negotiations.

But parallel to this, SpaceX continues launching Starlink satellites. They're using this infrastructure to test concepts that will apply to the larger constellation.

2027-2029: Scale and Capability Expansion

Assuming approval, SpaceX begins manufacturing and launching satellites at scale. They already build roughly 2,000 satellites annually. Scaling to 200,000-500,000 per year is achievable but requires new production facilities.

Ground infrastructure gets built. Compute nodes get deployed at edge locations. The first commercially available satellite-based compute services launch. Latency improves measurably. Early adopters benefit dramatically.

2030-2035: Mainstream Adoption

By 2030, satellite-based compute is mainstream for latency-critical applications. Autonomous vehicles operate globally. Medical systems use satellite-based AI assistance as standard. IoT devices tap into satellite networks routinely.

Terrestrial data centers face pricing pressure. Cloud providers adapt by partnering with satellite operators or building their own competing constellations.

AI model training increasingly happens across satellite and terrestrial infrastructure hybrids.

2035+: Infrastructure Maturity

Satellite computing becomes the default for new infrastructure. Terrestrial networks focus on bulk data movement and storage. Edge compute is satellite-based. The distinction between terrestrial and satellite infrastructure blurs.

But this timeline assumes regulatory approval, no major technical failures, and sustained capital investment. Real-world variables could accelerate or delay this significantly.

Competitive Response and Industry Dynamics

How Cloud Providers Will Respond

Amazon, Google, and Microsoft won't sit idle. They'll pursue several strategies simultaneously:

- Expand terrestrial edge computing: Deploy more regional data centers, edge nodes, and processing capability globally.

- Partner with satellite operators: Rather than compete directly, they could partner with SpaceX or others to integrate satellite compute into their platforms.

- Invest in competing constellations: Amazon's Project Kuiper is partly a response to this. Building their own satellite network keeps them independent.

- Focus on what terrestrial excels at: Data centers will specialize in bulk compute, storage, and batch processing. Satellites handle real-time, distributed, latency-critical operations.

The market likely fragments: cloud providers offering hybrid terrestrial-satellite services, regional operators providing satellite-based compute, specialized edge companies building out local infrastructure.

Emerging Competitors

New companies will emerge specifically to exploit satellite compute advantages:

- Regional compute brokers: Companies matching workloads to available satellite capacity

- Data sovereignty specialists: Services guaranteeing data stays in specific regions

- Edge AI platforms: Companies building software infrastructure for satellite edge computing

- Vertical integrators: Industry-specific platforms (autonomous vehicles, medical imaging, agriculture) optimized for satellite infrastructure

Standards and Interoperability

If satellite compute becomes mainstream, standards matter immensely. Right now, there's no standard for handoffs between satellites, no standard for containerized compute on satellite nodes, no interoperability between different constellation operators.

Whoever defines these standards wins. Expect major technical contributions from SpaceX, Amazon, and cloud providers toward standards bodies.

The IETF, W3C, and 3GPP will likely develop satellite computing standards, similar to how they developed standards for terrestrial networks.

Satellite-based AI compute offers significantly lower latency (15-25 ms) compared to traditional cloud computing (50-200 ms), enabling real-time applications. Estimated data.

Environmental and Sustainability Considerations

Space Debris Concerns

One million satellites in orbit raises legitimate environmental concerns. More satellites mean more potential debris, higher collision risks, and long-term orbital pollution.

However, SpaceX addresses this through design: their satellites are deployed at lower orbits with controlled deorbit capability. They burn up cleanly after 5-7 years. Compared to higher-orbit infrastructure that persists for decades, this is relatively responsible.

NASA and international space agencies are developing guidelines. Expect regulatory requirements around deorbit mechanisms and collision avoidance.

Power Consumption and Climate Impact

One million satellites consume power. Thousands of ground stations consume power. Manufacturing a million satellites consumes energy.

The net energy impact is actually positive: satellite-based distributed computing reduces data center energy consumption by avoiding centralization and bulk data movement. A petabyte of data doesn't need to traverse fiber optic cables heating up in conduits. Processing happens locally.

Analyses suggest satellite infrastructure reduces AI computing's carbon footprint by 20-30% overall, despite adding satellite operations.

Resource Extraction

Building one million satellites requires raw materials: semiconductors, rare earth elements, aluminum, platinum (for solar cells).

This creates pressure on mining and extraction. But parallel advances in recycling, alternative materials, and efficiency limit net impact.

Expect increased regulatory focus on supply chain sustainability, materials sourcing, and end-of-life recycling for satellite hardware.

Strategic Implications for AI Companies and Startups

Positioning Your Product for Satellite-First Infrastructure

If you're building AI products today, you should design with the assumption that satellite-based distributed computing exists in your production timeline.

This means:

- Architecture: Design systems assuming 20-50ms latency to inference endpoints and variable, uncertain connectivity to any single provider

- Data flow: Build with local-first, sync-later patterns rather than requiring constant cloud connectivity

- Model optimization: Smaller, distributed models running at edge outperform large models requiring round-trips to central servers

- Privacy: Design assuming data stays local and processing comes to it, rather than moving data to central locations
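The "local-first, sync-later" item in the list above can be sketched as a store that always writes locally and drains a queue when a link is available. The class, the `upload` callback, and the `link_up` flag are all hypothetical names used for illustration.

```python
from collections import deque

class LocalFirstStore:
    """Writes land locally at once; uploads happen when the link allows."""

    def __init__(self, upload):
        self.local = {}
        self.pending = deque()
        self.upload = upload  # callable(key, value) -> bool (True on success)

    def write(self, key, value):
        self.local[key] = value
        self.pending.append((key, value))

    def read(self, key):
        return self.local.get(key)

    def sync(self) -> int:
        """Push queued writes in order; stop at the first failure."""
        sent = 0
        while self.pending:
            key, value = self.pending[0]
            if not self.upload(key, value):
                break
            self.pending.popleft()
            sent += 1
        return sent

remote = {}
link_up = False  # simulated connectivity

def upload(key, value) -> bool:
    if not link_up:
        return False
    remote[key] = value
    return True

store = LocalFirstStore(upload)
store.write("report", "draft 1")
store.write("report", "draft 2")
offline_sent = store.sync()  # link is down, nothing leaves the device
link_up = True
online_sent = store.sync()   # both queued writes go out in order
print(store.read("report"), offline_sent, online_sent, remote)
```

Reads never block on the network, and an outage only delays replication rather than failing the user's action.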

Companies that embrace this now have products ready for satellite infrastructure adoption before it's mainstream. That's a 3-5 year competitive advantage.

Investing in Satellite-Adjacent Technology

Funding opportunities exist in:

- Edge AI frameworks: Tools for building AI systems optimized for distributed, satellite-connected infrastructure

- Compute orchestration: Software managing workload distribution across satellite and terrestrial compute

- Data sovereignty platforms: Infrastructure guaranteeing data residency and compliance across satellite networks

- Real-time inference: Applications that become viable only with satellite latency (autonomous systems, medical imaging, AR/VR)

- Satellite terminal manufacturers: Hardware connecting devices to satellite networks efficiently

Venture capital is already beginning to focus on these areas. SpaceX enables this by creating the infrastructure foundation.

Preparing Your Team

You should be hiring and training engineers in:

- Distributed systems: Coordinating compute across many unreliable nodes

- Edge AI: Running machine learning models on resource-constrained hardware

- Satellite communications: Understanding orbital mechanics, handoffs, and space-based networking

- Real-time systems: Building latency-critical applications

These skill areas are currently in short supply. Companies building expertise now attract top talent and build defensible advantages.

SpaceX's proposed AI network would increase its satellite count by approximately 12,000%, creating a vast infrastructure for global AI capabilities. Estimated data.

The Bigger Picture: Infrastructure as AI Substrate

Computing Architecture Evolution

We've evolved through computing eras:

- Mainframe: Centralized computing, dumb terminals

- PC: Distributed computing, personal machines

- Cloud: Re-centralized computing, virtualized infrastructure

- Edge: Distributed computing on IoT and edge devices

- Satellite-augmented: Distributed computing globally with consistent low latency

Satellite infrastructure represents a fundamental shift. It's the first infrastructure that provides truly global, consistent, low-latency compute without central chokepoints.

This changes everything from AI model architecture to economic models to geopolitical dynamics.

Why This Matters for AI Specifically

AI is compute-hungry. Current AI infrastructure is centralized in a handful of regions (Northern California, Northern Virginia, Singapore, Ireland).

Satellite infrastructure decentralizes this. Compute moves globally. AI becomes available everywhere at reasonable latency. Training becomes distributed. Inference becomes edge-based by default.

This is transformative for AI development, deployment, and democratization.

The Sovereignty Question

Right now, global AI development is dominated by American and Chinese companies. They control the infrastructure, the chips, the models.

Satellite infrastructure makes this dominance harder to maintain. A country can process data locally without routing through American servers. A company can train models without using Amazon or Google infrastructure.

This doesn't mean American companies lose power. It means power becomes more distributed. Sovereignty becomes possible. Competition increases.

For teams building AI globally, this is enormously positive. You gain choices. You gain leverage. You gain independence.

Action Items for Different Stakeholders

For AI Product Companies

- Audit your infrastructure: Are you designed for low-latency, distributed edge computing, or are you assuming cloud-centric architecture?

- Experiment with edge inference: Start running models at the edge (mobile, IoT, local servers) to understand deployment challenges

- Plan for satellite connectivity: Assume satellite-based compute is available in 3-5 years. Design systems that benefit from it

- Consider data locality: Design systems that process data where it sits rather than moving it to central locations

For Infrastructure Teams

- Monitor satellite networking standards: Standards are developing. You want to be aware before they mature

- Build hybrid cloud-satellite ready systems: Your infrastructure should work seamlessly across terrestrial and satellite networks

- Invest in edge orchestration: Tools for managing compute distribution across heterogeneous infrastructure are going to be critical

For Investors and Startups

- Fund satellite-enabled applications: Companies building products that specifically exploit low-latency distributed compute win

- Invest in infrastructure layers: Software enabling satellite compute (orchestration, scheduling, observability) is valuable

- Focus on emerging markets: Satellite infrastructure provides connectivity to regions where terrestrial infrastructure is weak. This creates new markets

For Policy and Regulatory Bodies

- Develop spectrum sharing policies: Managing satellite and terrestrial spectrum efficiently requires coordination

- Create data residency frameworks: How do regulations around data locality work with satellite infrastructure that spans borders?

- Address equity and access: Ensure satellite compute benefits aren't limited to wealthy countries and large companies

Common Misconceptions About Satellite-Based AI Infrastructure

Misconception 1: "Satellites Will Replace Cloud Computing"

Reality: Satellites are complementary, not replacement. Cloud computing excels at bulk processing, storage, and batch analysis. Satellites excel at latency-critical edge operations. The future is hybrid.

You'll use both. Cloud for training large models overnight. Satellites for real-time inference. Cloud for data storage. Satellites for processing local data immediately.

Misconception 2: "This Only Helps Remote Areas"

Reality: Everyone benefits. Yes, rural connectivity improves dramatically. But urban areas also benefit from reduced latency, distributed redundancy, and compute capacity that's unconstrained by data center footprint.

A user in downtown San Francisco gets faster inference through satellite distribution than through a centralized data center, even though terrestrial infrastructure is excellent in that location.

Misconception 3: "Satellite Internet Is Expensive"

Reality: Current Starlink is $120/month for consumer use. But satellite compute is different. It's infrastructure pricing, bulk contracts, usage-based billing. SpaceX's pricing power is limited once they're just another compute provider competing with cloud.

Expect satellite-based compute to price similarly to terrestrial cloud within 5 years. The infrastructure advantage is latency and distribution, not cost savings (though costs improve too).

Misconception 4: "This Technology Is 5-10 Years Away"

Reality: It's starting now. SpaceX is testing. Amazon's Kuiper is in pre-deployment. Ground infrastructure is being built. The regulatory approval is in progress.

Companies designing for this today are designing for a real, imminent change in infrastructure, not a speculative future.

Misconception 5: "Only Elon Musk's SpaceX Can Do This"

Reality: Space X has advantages (manufacturing scale, launch capacity, capital). But others are building. Amazon, One Web, Telesat, and international initiatives are competing.

The market is becoming competitive. This reduces single-company dominance and increases options for end users.

Looking Forward: The 2030s AI Landscape

Infrastructure as Expected

By 2030, satellite-based distributed computing won't be remarkable. It'll be expected.

Your AI product won't ask "should I use satellite compute?" the way you currently ask "should I use AWS or Azure?" It'll just use available infrastructure transparently.

Users in rural India, remote Australia, and downtown Tokyo get equivalent latency and compute quality. That becomes normal.

AI Model Architecture Transforms

As distributed, low-latency compute becomes standard, AI model architecture evolves:

- Larger ensemble models: Instead of one trillion-parameter model, you use hundreds of specialized models, each optimized for specific tasks, coordinating across the satellite mesh

- Federated learning as default: Models train across distributed data, never moving the data itself

- Real-time personalization: Models specialize for individual users in real-time as they interact with systems

- Privacy-first architectures: Processing happens locally by default, with optional global coordination
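To make the federated learning item concrete, here is a minimal sketch of federated averaging on a toy linear model. It is an illustration under stated assumptions, not a description of any planned system: the model (`y = w*x + b`), the learning rate, and all function names are hypothetical. The point it shows is that each node trains on its own data and only the weights are shared.

```python
from typing import List, Sequence, Tuple

Sample = Tuple[float, float]  # (x, y)


def local_update(weights: Sequence[float], data: Sequence[Sample],
                 lr: float = 0.1) -> List[float]:
    """One pass of gradient descent on y = w*x + b using only this node's data."""
    w, b = weights
    for x, y in data:
        err = (w * x + b) - y
        w -= lr * err * x
        b -= lr * err
    return [w, b]


def federated_average(updates: Sequence[Sequence[float]],
                      sizes: Sequence[int]) -> List[float]:
    """Average node weights, weighting each node by its number of samples."""
    total = sum(sizes)
    dim = len(updates[0])
    return [sum(u[i] * n for u, n in zip(updates, sizes)) / total
            for i in range(dim)]


def federated_round(global_weights: Sequence[float],
                    node_datasets: Sequence[Sequence[Sample]],
                    lr: float = 0.1) -> List[float]:
    """Each node trains locally; only weights (never raw data) are combined."""
    updates = [local_update(global_weights, data, lr) for data in node_datasets]
    sizes = [len(data) for data in node_datasets]
    return federated_average(updates, sizes)


# Two hypothetical nodes holding private samples of y = 2x.
nodes = [[(1.0, 2.0), (2.0, 4.0)], [(3.0, 6.0)]]
after_one_round = federated_round([0.0, 0.0], nodes)
```

Weighting by sample count keeps a node with little data from pulling the shared model as hard as a node with a lot of it.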

Entire New Applications Become Viable

Applications that are impossible today become viable:

- Autonomous systems everywhere: Not just in wealthy countries with mature infrastructure

- AR/VR as primary computing interface: Latency requirements finally met globally

- Real-time translation: Conversations translated with no perceptible delay, any language, any accent

- Distributed manufacturing: Factory robots coordinating globally in real-time

- Instant medical diagnosis: AI image analysis happening in real-time during medical procedures anywhere in the world

Geopolitical Implications Become Real

Data sovereignty becomes practical. Countries processing data locally, using local compute, controlling their own infrastructure.

This reduces American tech dominance but increases overall competition and choice. That isn't necessarily bad for American companies, since more competition drives innovation, and it is better for everyone else.

You'll see the emergence of regional AI ecosystems: European AI, Chinese AI, Indian AI, African AI, each leveraging satellite infrastructure within their regions, but also interoperating globally where appropriate.

The Bottom Line: Why This Matters

SpaceX applying for permission to launch one million satellites isn't just a SpaceX story. It's not just about internet connectivity. It's about fundamentally changing how artificial intelligence infrastructure works.

It's about latency dropping from 100ms or more to 15-25ms globally. About processing data where it sits instead of moving it to central locations. About compute becoming distributed, abundant, and accessible everywhere.

For AI companies, this is the infrastructure transition you need to plan for. For infrastructure teams, this is the fundamental architecture shift your systems need to accommodate. For startups, this is the competitive advantage window where you can build products optimized for this infrastructure before it exists.

The timeline is real. 2025-2026 brings regulatory approval. 2027-2029 brings scale and capability deployment. 2030-2035 brings mainstream adoption. By 2040, this will be normal infrastructure.

Companies that understand this transition and design for it now have advantages that compound over the next decade.

The question isn't whether satellite-based AI infrastructure comes. It's whether you're ready when it does.

FAQ

What is SpaceX's one million satellite constellation for?

SpaceX's proposed constellation aims to create global infrastructure for artificial intelligence workloads, distributed computing, and low-latency inference. Unlike the existing Starlink network, which focuses on consumer internet, this constellation would specifically support compute-intensive AI applications with sub-25 millisecond latency anywhere on Earth. The infrastructure enables edge computing, distributed model training, and real-time inference across thousands of satellite nodes, with laser inter-satellite links creating a mesh network.

How does satellite-based AI compute work differently than cloud computing?

Satellite-based AI compute distributes processing to the edge, where data exists, rather than routing all data to centralized cloud data centers. Satellites provide consistent 15-25 millisecond latency globally through low Earth orbit positioning and laser inter-satellite linking. Processing happens locally on the nearest available satellite nodes, reducing data movement, improving privacy, and enabling real-time applications like autonomous vehicles and medical imaging that can't tolerate the 50-200ms latency of terrestrial cloud services.
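A back-of-the-envelope check helps put the 15-25 millisecond figure in context. The sketch below computes only the speed-of-light propagation delay. The 550 km altitude is an assumption (roughly where current Starlink shells operate); the proposed constellation's altitude is not given here, and real latency also includes processing, queuing, and inter-satellite hops.

```python
SPEED_OF_LIGHT_KM_S = 299_792.458  # speed of light in vacuum

# Assumption for illustration only: a typical low Earth orbit altitude.
ASSUMED_ALTITUDE_KM = 550.0


def round_trip_ms(one_way_km: float) -> float:
    """Propagation-only round-trip time over a straight path, in milliseconds."""
    return 2 * one_way_km / SPEED_OF_LIGHT_KM_S * 1000


def max_one_way_km(budget_ms: float) -> float:
    """Longest one-way path whose propagation round trip fits in budget_ms."""
    return SPEED_OF_LIGHT_KM_S * (budget_ms / 1000) / 2


overhead_rtt_ms = round_trip_ms(ASSUMED_ALTITUDE_KM)  # about 3.67 ms
reach_at_15ms_km = max_one_way_km(15.0)               # about 2,248 km
```

Under these assumptions, the hop to a satellite directly overhead uses under 4 ms of the budget, and the remainder has to cover everything else: compute time, queuing, and any laser-link hops to other nodes.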

What are the main advantages of distributed satellite AI infrastructure?

Major advantages include reduced latency that enables real-time AI applications globally, lower data movement costs, improved privacy through local processing, natural redundancy across thousands of nodes, and economic decentralization of compute resources. Companies no longer depend on a handful of data center regions. Users in emerging markets access the same latency and compute quality as users in developed regions. Platforms like Runable that generate content with AI benefit too, because generation can run near the user anywhere in the world instead of waiting on cloud round-trips.

When will satellite AI infrastructure be available for commercial use?

Regulatory approval is currently in process and expected to take 12-18 months from late 2024. Following approval, satellite manufacturing and deployment begins in 2026-2027, with ground infrastructure deployment in 2027-2029. Early commercial services should launch by 2028-2029, with meaningful global coverage and capabilities by 2030-2032. Full one million satellite deployment would take 5-7 years from regulatory approval, assuming sustained manufacturing and capital deployment.
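The "sustained manufacturing" caveat is easier to appreciate with the arithmetic spelled out. This short sketch uses only the figures in the answer above (one million satellites over 5-7 years) and assumes, for simplicity, an even build-and-launch rate across 365-day years.

```python
TOTAL_SATELLITES = 1_000_000
DAYS_PER_YEAR = 365  # simplifying assumption: no leap years, no ramp-up period


def satellites_per_day(total: int, years: float) -> float:
    """Average daily deployment rate needed to finish within the given years."""
    return total / (years * DAYS_PER_YEAR)


fast_case = satellites_per_day(TOTAL_SATELLITES, 5)  # about 548 per day
slow_case = satellites_per_day(TOTAL_SATELLITES, 7)  # about 391 per day
```

Even the seven-year case implies several hundred satellites built and launched every day on average, which is why the timeline depends so heavily on manufacturing and capital.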

How does this impact AI model training and inference?

Training becomes distributed across thousands of nodes globally with parameters synchronized through satellite links, reducing training costs 40-50% through improved locality and reduced data movement. Inference moves to the edge by default, running models on the nearest satellite node or device rather than round-tripping to cloud data centers. This enables real-time applications impossible with current cloud-only infrastructure, from autonomous vehicles to augmented reality to medical imaging assistance. Models can also specialize regionally, personalizing based on local data while maintaining global coordination.

What companies are competing in satellite compute infrastructure?

SpaceX leads with Starlink and the proposed mega-constellation; Amazon's Project Kuiper is deploying thousands of satellites for communication; OneWeb operates a constellation focused on coverage; and Telesat is developing capacity-focused services. Additionally, AWS, Google Cloud, and Microsoft Azure are building terrestrial edge infrastructure and evaluating satellite partnerships. The market is becoming competitive rather than dominated by a single player.

How does satellite infrastructure affect data privacy and sovereignty?

Data stays local and is processed on satellite nodes above or near the user's region rather than routing through distant data centers. This enables countries to meet data residency requirements naturally, without routing through foreign servers. European companies can process data on European satellite nodes, satisfying GDPR requirements without relying on American cloud providers. This creates new opportunities for regional compute markets and makes data sovereignty practical at scale for the first time.

What are the technical challenges in deploying satellite AI infrastructure?

Key challenges include handling transparent handoffs as satellites pass overhead (each satellite stays in view for roughly 15 minutes), working within constrained power and thermal budgets on satellite hardware, maintaining global ground infrastructure for connectivity and support, achieving quantum-resistant encryption before deployment, and coordinating spectrum allocation internationally through the ITU. The infrastructure also needs standardized containerization and orchestration for satellite compute nodes, along with a way to migrate stateful applications between satellites as they move overhead.
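The handoff challenge can be sketched with simple timing logic. This is a hypothetical illustration built on the roughly 15-minute visibility figure above; the function names, the migration time, and the safety margin are assumptions, and a real system would work from orbital predictions rather than a fixed window.

```python
import math

VISIBILITY_WINDOW_S = 15 * 60  # roughly 15 minutes in view, per the text


def handoffs_needed(session_s: float,
                    window_s: float = VISIBILITY_WINDOW_S) -> int:
    """Handoffs for a continuous session that starts at the start of a window."""
    if session_s <= 0:
        return 0
    return max(0, math.ceil(session_s / window_s) - 1)


def should_start_migration(elapsed_in_window_s: float,
                           migration_s: float,
                           safety_margin_s: float = 5.0,
                           window_s: float = VISIBILITY_WINDOW_S) -> bool:
    """True once state transfer must begin to finish before the satellite leaves view."""
    return elapsed_in_window_s >= window_s - migration_s - safety_margin_s


one_hour_session = handoffs_needed(3600)  # 3 handoffs under these assumptions
```

The second function captures why stateful migration is hard: the longer it takes to move application state, the earlier the move has to begin, and the less of each window is usable.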

How will this impact the economics of cloud computing and AI infrastructure?

Compute costs will face downward pressure as satellite-based capacity becomes available as an alternative to centralized cloud providers. However, cloud providers are responding by building hybrid terrestrial-satellite offerings and investing in competing satellite constellations. The net effect is increased competition, more options for businesses, and likely reduced costs across the board. Specialized markets emerge: regional compute brokers, data sovereignty specialists, and edge-optimized platforms will offer services tailored to specific use cases. Cloud computing doesn't disappear; it becomes one of several options rather than the dominant architecture.

What AI applications benefit most from low-latency satellite infrastructure?

Autonomous vehicles need sub-20ms latency for safety-critical decisions. Medical imaging and remote diagnosis require real-time inference. Industrial robotics and manufacturing need instant feedback loops. Augmented reality applications need low latency for immersive experiences. Real-time translation of conversations needs imperceptible delays. Natural resource monitoring benefits from instant image analysis. Content delivery and streaming systems eliminate buffering with distributed edge compute. Any application sensitive to latency in the 50-200ms range becomes viable when latency drops to 15-25ms globally.

Should I start building AI products for satellite infrastructure now?

Yes, but pragmatically. Start designing systems with edge-first, low-latency assumptions. Build for distributed inference across multiple compute nodes rather than centralized processing. Test with local edge infrastructure (mobile, IoT devices, regional servers) to understand deployment challenges and optimize models for resource constraints. By the time satellite infrastructure matures in 2030-2032, your products will be designed and proven for the architecture. Companies that start this transition now gain a 3-5 year competitive advantage over those reacting to mature satellite infrastructure.

The space above your head is no longer just for communications. It's becoming infrastructure for artificial intelligence. And the race to use it is already underway.

Key Takeaways

- SpaceX applied for FCC approval to launch one million satellites dedicated to AI infrastructure and distributed computing, transforming global compute availability

- Satellite constellation reduces AI inference latency from 100-200ms (cloud) to 15-25ms globally through low Earth orbit positioning and laser inter-satellite links

- Distributed training becomes practical with satellite infrastructure, reducing AI model training costs 40-50% through improved data locality and eliminating centralized bottlenecks

- Timeline shows regulatory approval 2025-2026, commercial deployment 2027-2029, and mainstream adoption by 2030-2035 for latency-critical AI applications

- Companies designing AI products for edge-first, distributed architecture now gain 3-5 year competitive advantage before satellite infrastructure becomes standard
