The AI Deployment Gap: Why Surface-Level Integration Is Costing You

You've invested in AI. Your team's using it. Maybe they're happy with the results. And then you hear that a competitor is getting double the ROI from their AI investment, with half the complexity.

This isn't a luck thing. It's a deployment gap thing.

The difference between teams that are actually getting value from AI and teams that are just going through the motions comes down to one word: depth. And right now, that gap is widening fast.

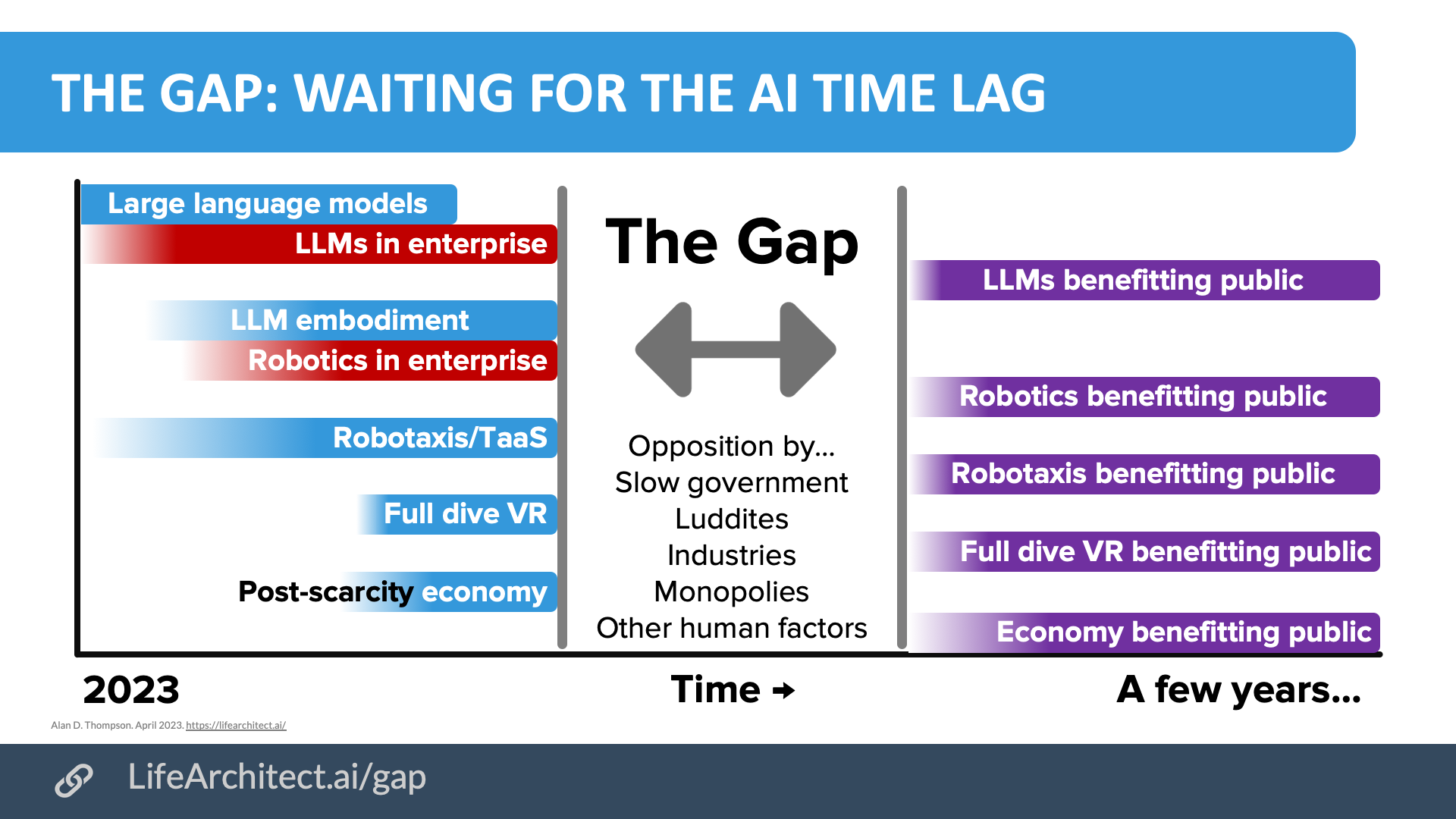

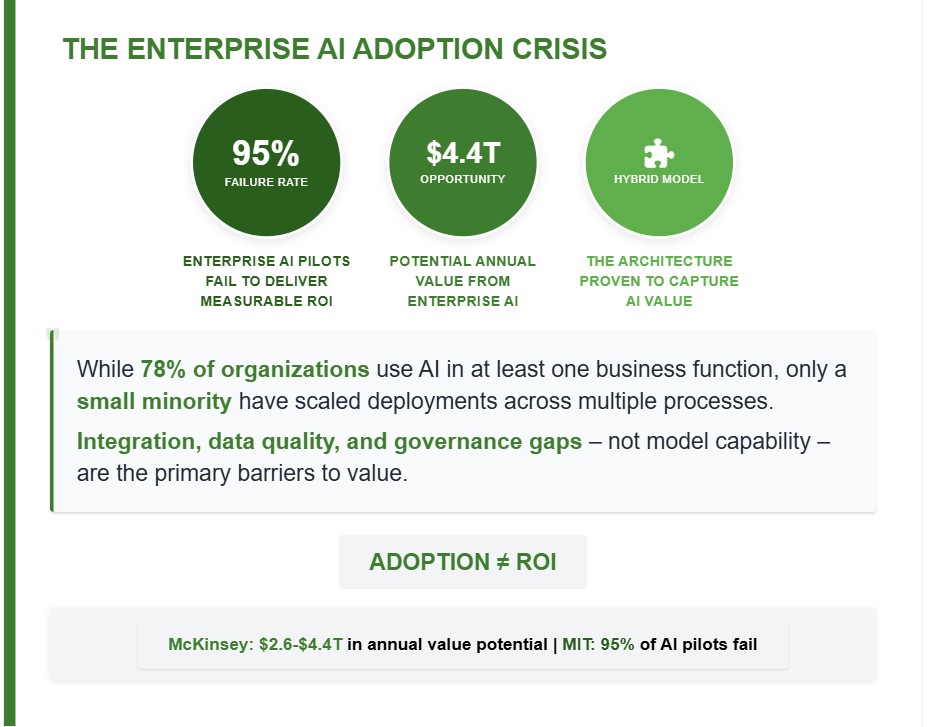

The data is striking. While 82% of senior leaders invested in AI for customer service in 2025, and 87% plan to keep investing in 2026, only 10% of teams have reached a mature level of deployment where AI is fully integrated into operations and working at scale. That's not a small gap. That's the difference between getting crushed by your competition and becoming the company everyone else is trying to copy.

Here's the thing: most teams are using AI to handle the easy stuff. Simple questions, routine tasks, obvious automation targets. And they're seeing benefits from that. Their response times are faster. They're handling more volume. The cost per ticket went down. Those are real wins, and they matter.

But then there's the group that's pulled away. They didn't stop at the quick wins. They looked at AI as a fundamental reorganization of how they do customer service, not just a tool that handles overflow. They integrated it into the workflows that actually matter. They gave it the hard problems. They iterated. They measured. They got smarter about it over time.

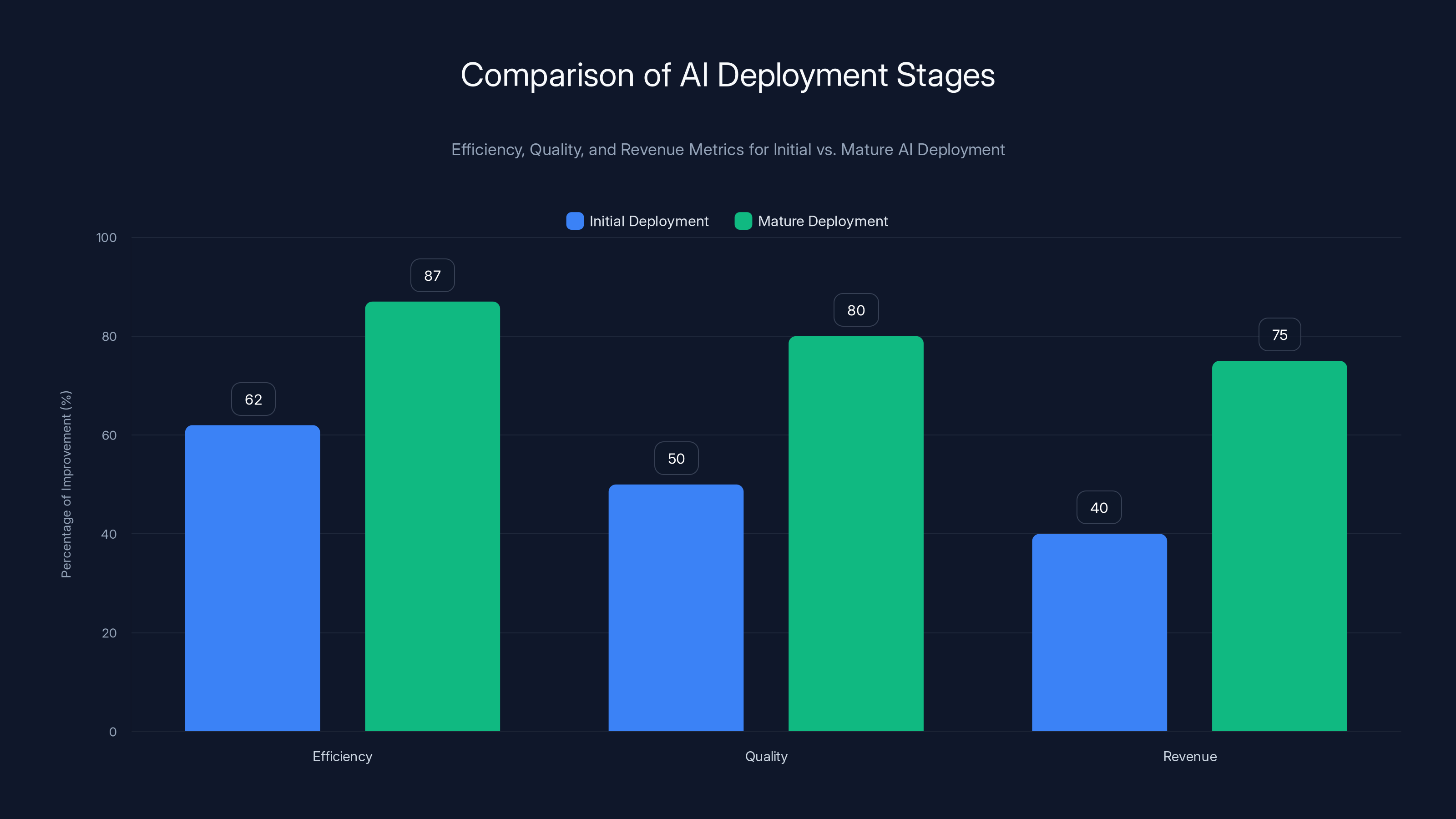

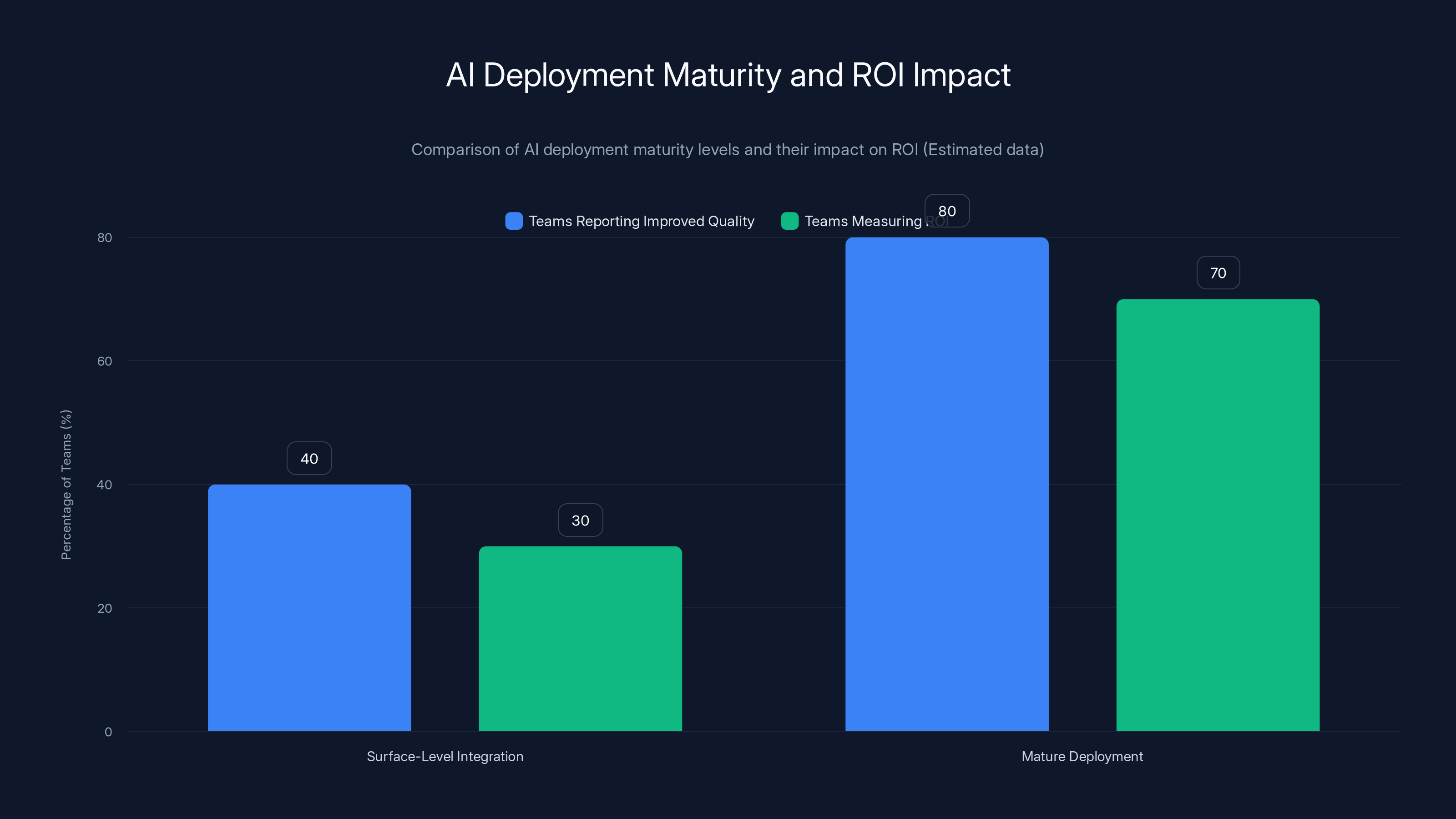

The results aren't comparable. Teams with mature AI deployments report improved quality and consistency at nearly double the rate of those still exploring. They're also way more likely to actually measure their ROI and prove the investment was worth it. That creates buy-in across the organization. That means more budget next year. That means more innovation.

This report digs into what's actually happening with AI in customer service right now, where teams are getting stuck, and more importantly, how to stop being stuck.

TL;DR

- Only 10% of teams have reached mature AI deployment where it's truly integrated at scale, despite 82% investing in 2025

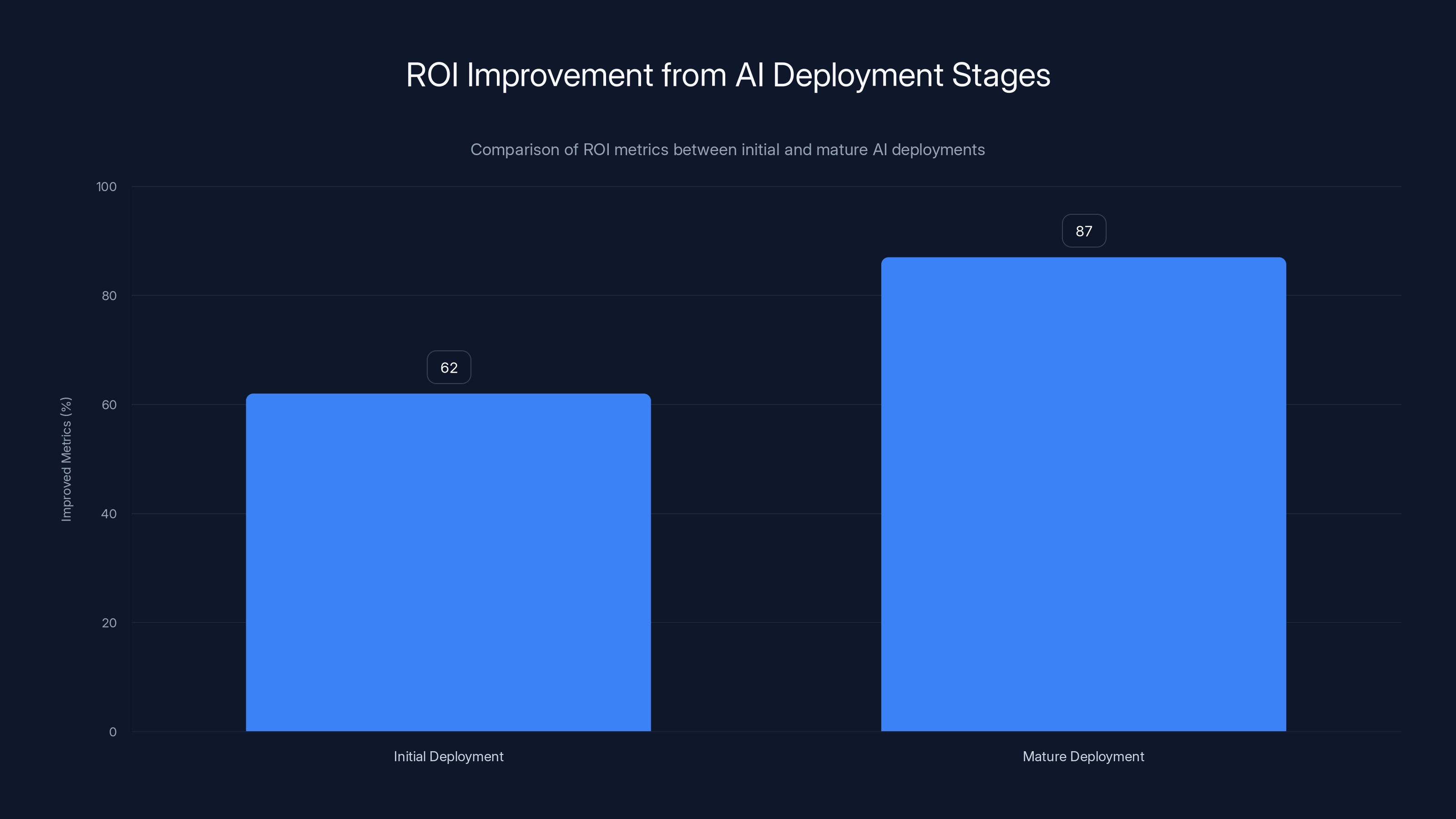

- Teams with mature deployment report improved metrics at a rate of 87%, compared to 62% for early-stage teams—a 25-point gap

- The ROI measurement gap is real: mature teams are significantly more likely to actually measure and prove their investment's value

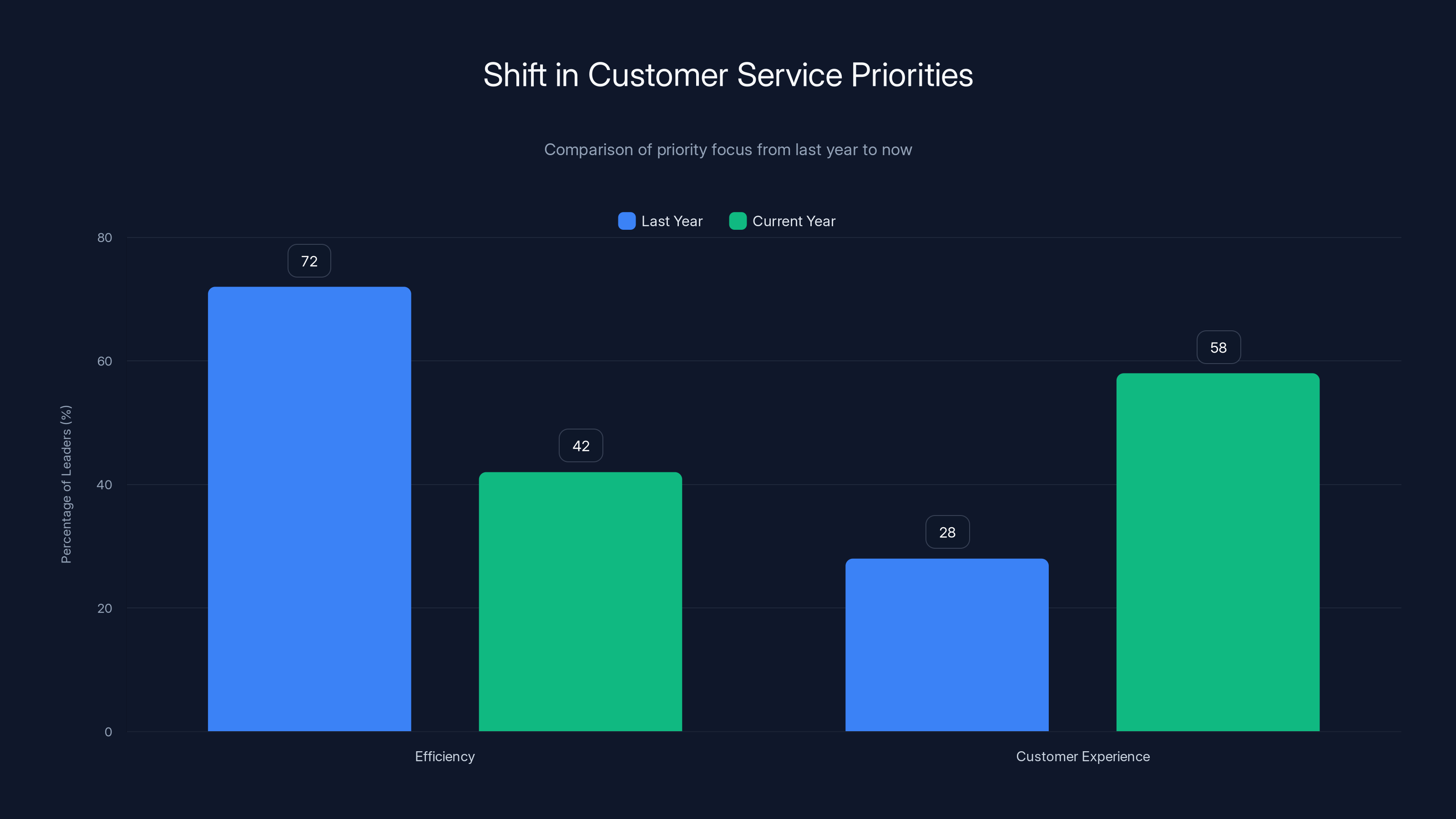

- Customer experience jumped to top priority with 58% of leaders now prioritizing it in 2026, compared to just 28% last year

- New roles and team structures are emerging as AI takes on more complex work, forcing organizations to rethink how customer service teams operate

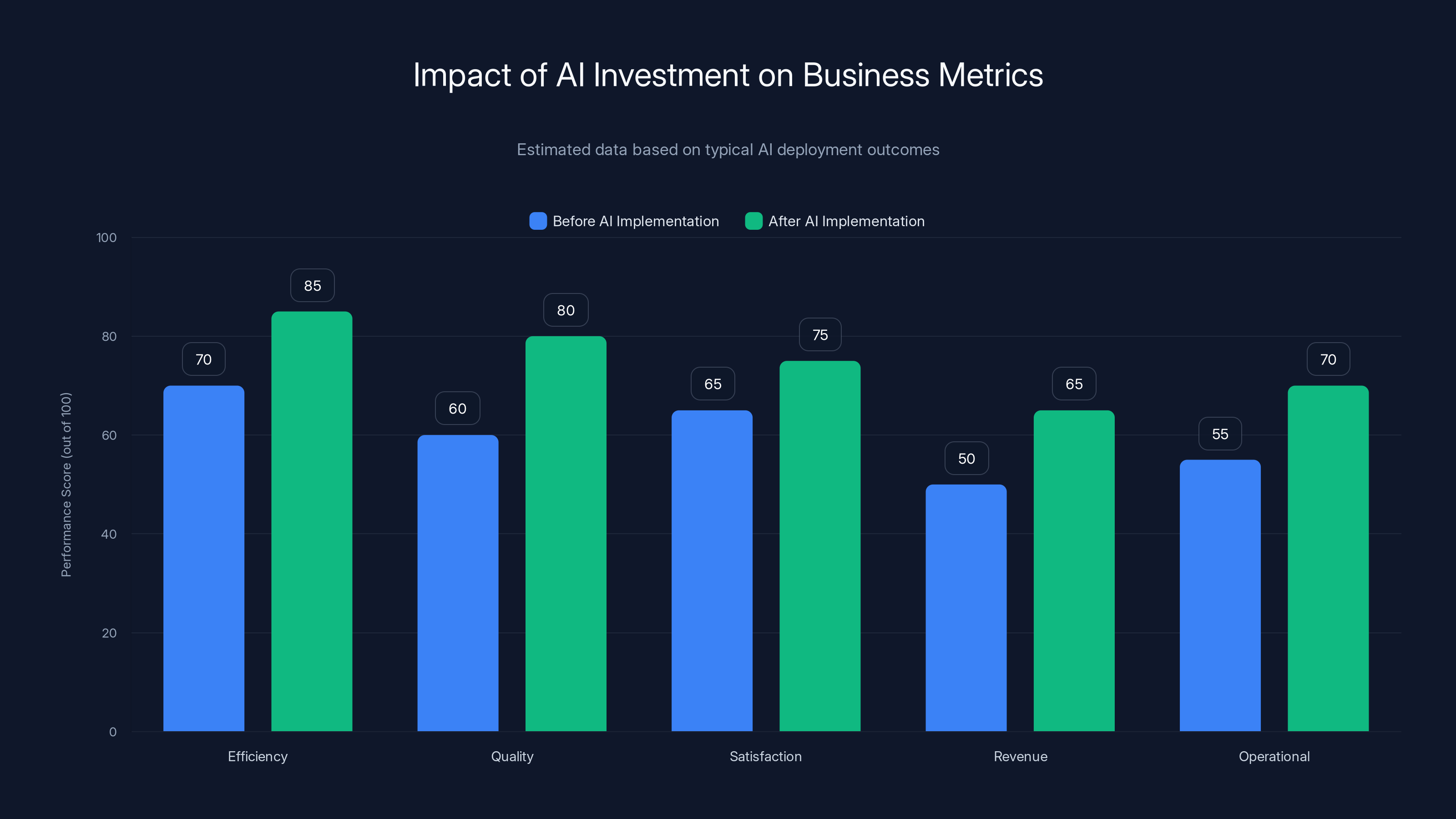

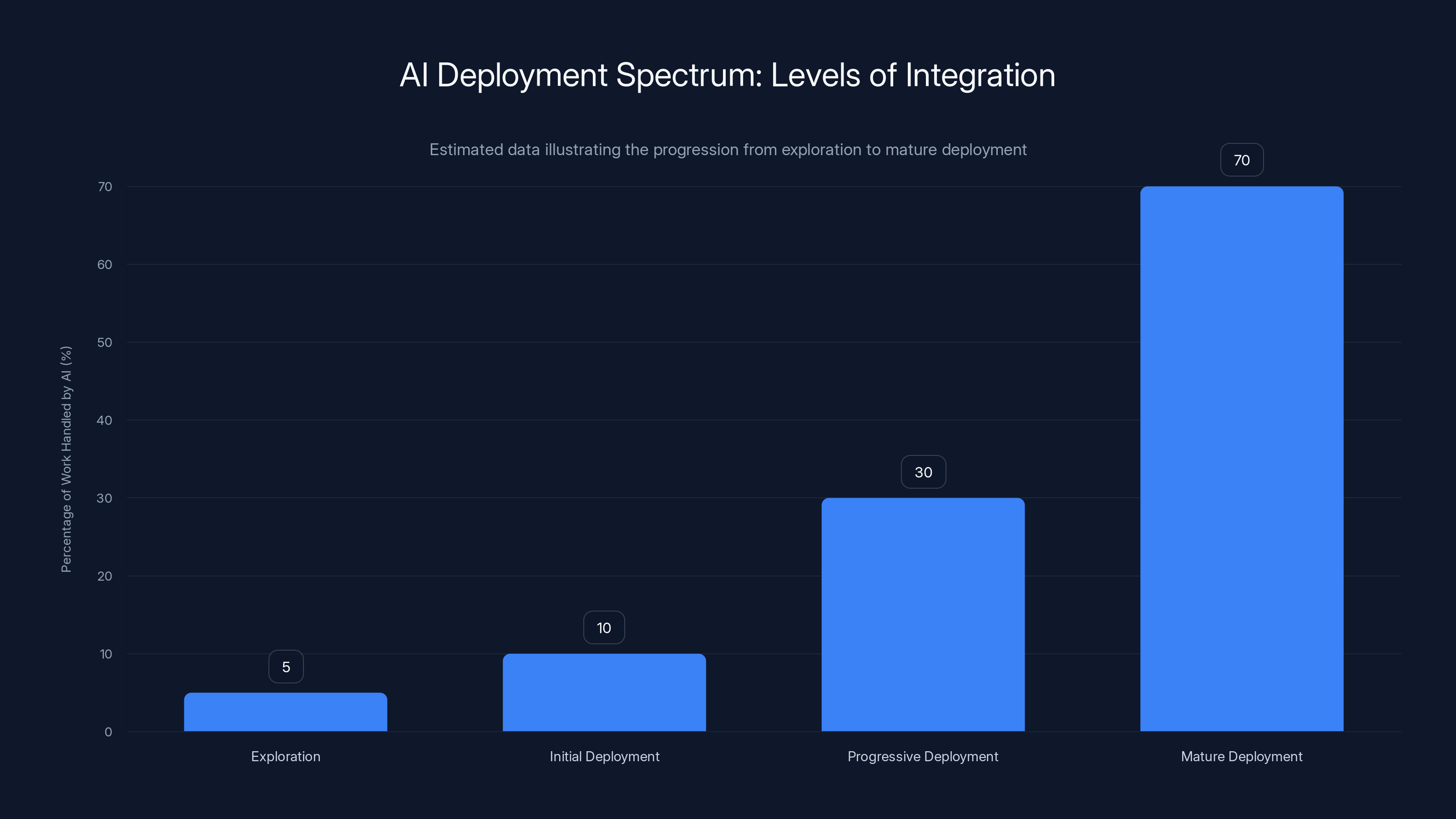

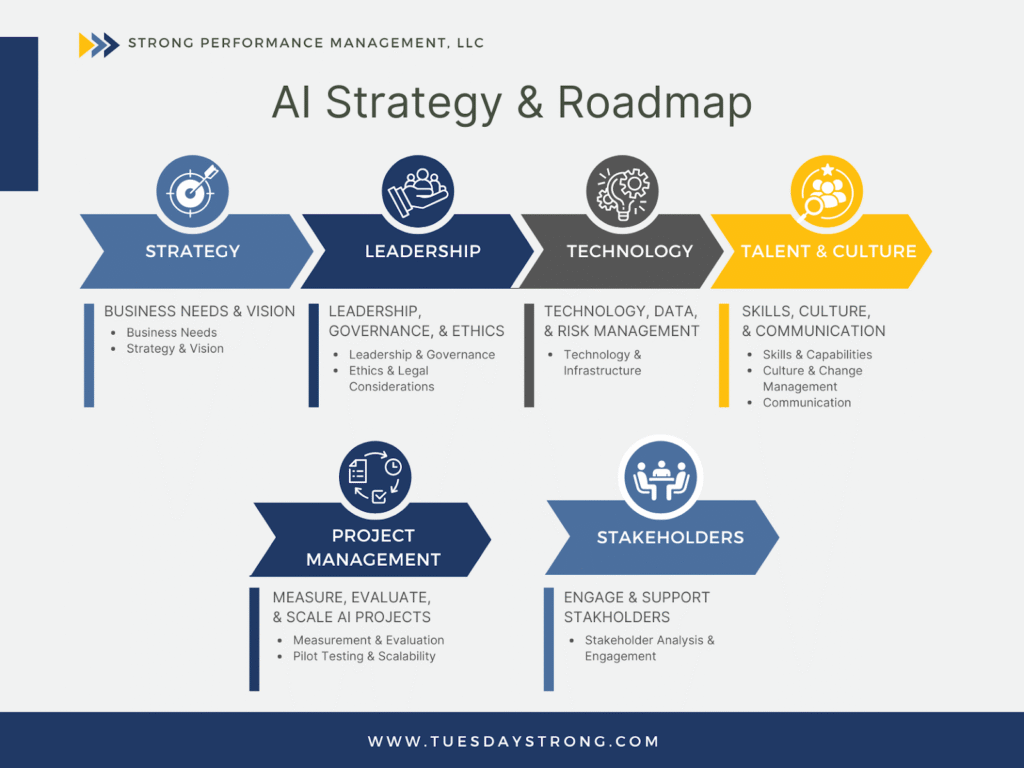

AI implementation typically improves business performance across various metrics, notably in efficiency and quality, leading to better overall outcomes. Estimated data.

Understanding the Deployment Spectrum: From Surface-Level to Strategic Integration

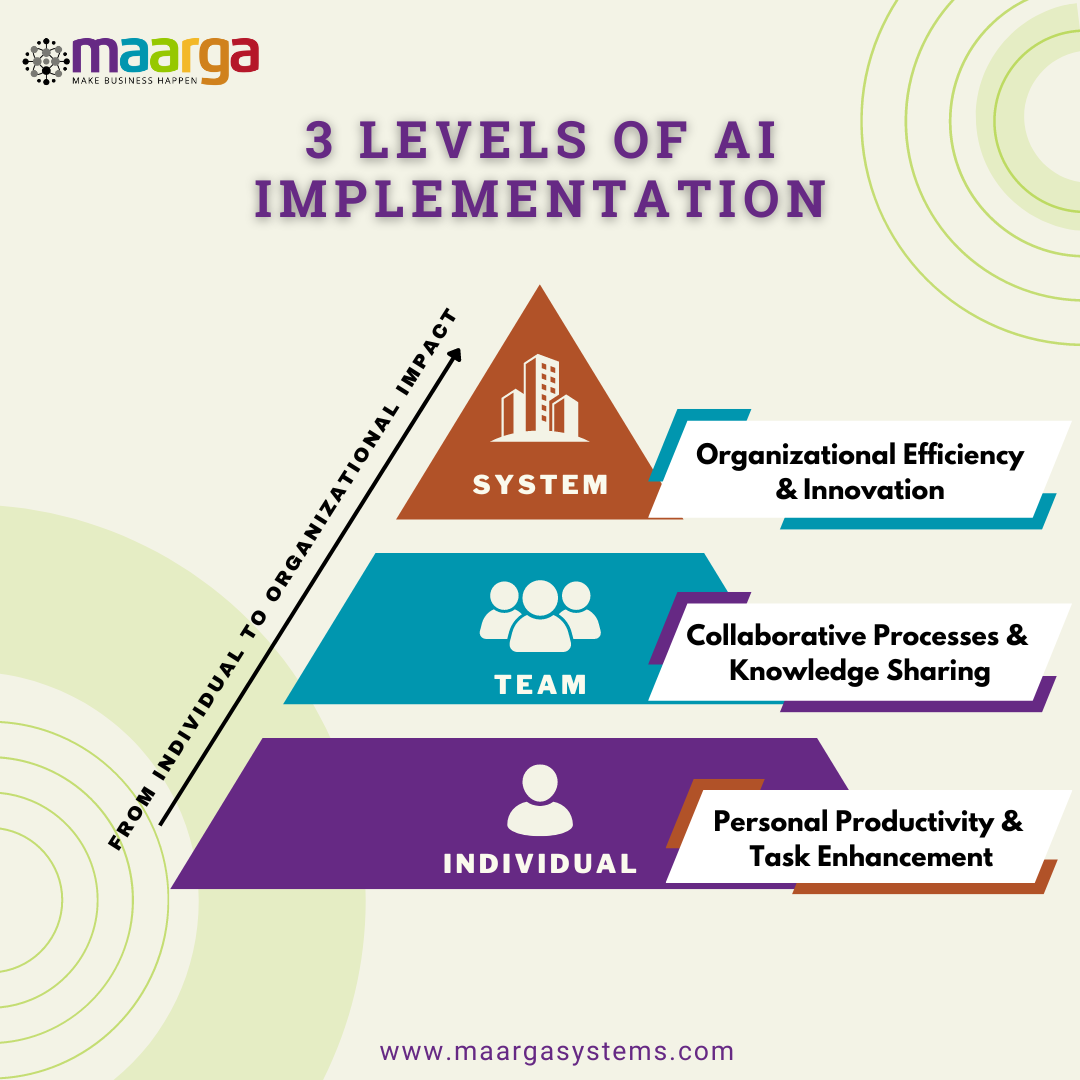

AI deployment doesn't happen all at once. There's a spectrum, and understanding where you sit on it matters way more than just having the tool installed.

On one end, you've got teams in the exploration phase. They've identified AI, they've piloted it somewhere safe, and they're trying to figure out if it's actually useful for their specific situation. This is where most teams are right now. There's nothing wrong with being here—you have to start somewhere. But you can't stay here. The exploration phase is supposed to be temporary.

Then there's initial deployment. The AI is live. It's handling specific, narrow tasks. Customer service teams are using it for the things they know it can do well: answering simple, frequently asked questions, categorizing tickets, maybe routing conversations to the right person. These are valuable things. A team in initial deployment is getting some real benefits. Response times improve. Volume capacity increases. Costs go down. But the AI is still in a box. It's handling maybe 5-10% of your actual work.

Then there's the next level. Progressive deployment. Here, teams are starting to expand what they ask AI to do. They're not just handling the easy questions anymore—they're handling partial conversations, multi-turn interactions, things that require a bit more context and reasoning. They're integrating AI into workflows, not just dropping it in as a parallel tool. They're starting to see ROI show up not just in efficiency metrics but in quality metrics too.

And then there's mature deployment. This is the 10% that's pulling away. AI isn't a tool these teams are using. It's part of the operating system. It's integrated into the workflows that run the business. It's handling complex work. It's improving over time because teams are constantly feeding it better data and better feedback. It's not replacing people—it's fundamentally changing what people do.

The jump from initial deployment to mature deployment isn't just a difference in degree. It's a difference in kind.

Here's why that matters: the value of AI grows exponentially as you move deeper into deployment. The first win is easy and fast—you set up a simple automation and it works. The next wins require more thoughtfulness. The wins after that require structural changes. But those later wins are way bigger.

The problem is that many teams think the journey from initial to mature deployment is mostly just "more of the same." More automation. More AI. More coverage. But that's not how it actually works. Moving deeper requires a completely different approach.

Mature AI deployment significantly outperforms initial deployment across efficiency, quality, and revenue metrics, with improvements reported at 87%, 80%, and 75% respectively. Estimated data for quality and revenue.

Why Most Teams Get Stuck at Surface-Level Integration

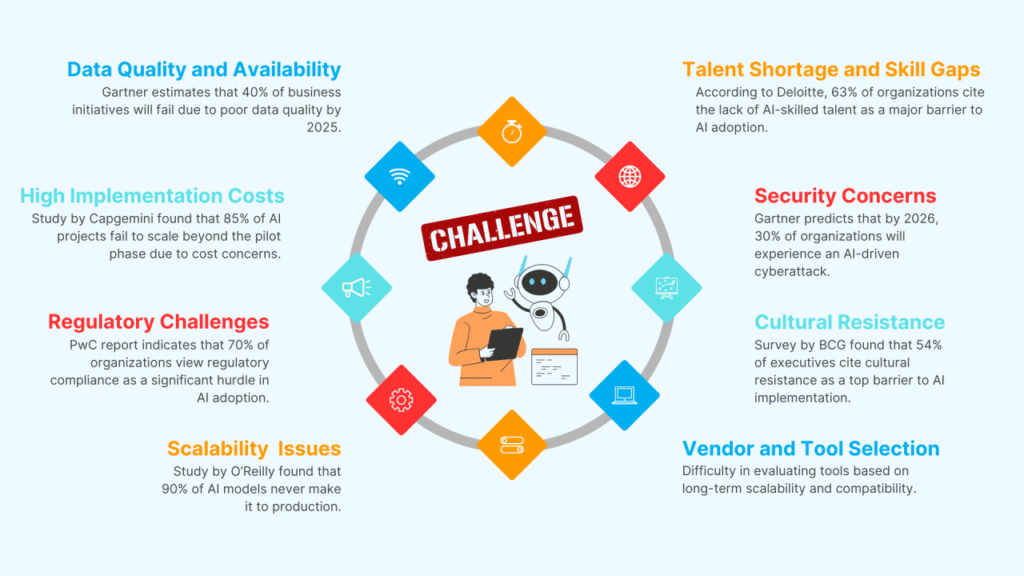

There are real barriers keeping teams from moving past initial deployment, and they're not all technical.

The first one is actually perverse incentives. When you implement an AI tool for the first time, the easy wins come fast. You're automating questions that are repetitive, time-consuming, and boring. Your team loves it because they're not answering the same thing for the hundredth time. Management loves it because it's visible—the metrics improve immediately. You're handling more volume, costs are down. Everyone's happy.

But here's the trap: those initial wins are so satisfying that teams stop pushing. They've proven the tool works. The business case is made. Why would you go deeper when you're already getting wins?

Because the teams that go deeper get better wins. But that's not obvious when you're sitting in a room full of happy metrics.

The second barrier is complexity. Moving from initial to mature deployment is actually hard. It means rethinking workflows. It means giving AI more responsibility, which means more careful testing and validation. It means training teams to work with AI in new ways. It means investing in the people and processes that make AI actually work in production.

It's easier to buy a tool and let it do what it was designed to do. It's harder to reshape your organization around it.

The third barrier is measurement. Teams in initial deployment can measure their wins easily. We automated X, so now we handle Y more volume, so we saved Z dollars. Done. But as you move deeper, the value becomes more subtle. Quality improves. Customer satisfaction moves. Employees are happier. Those things matter more than cost savings, but they're harder to measure. If you can't measure it, it's hard to justify investing more in it.

The fourth barrier is organizational friction. Customer service doesn't operate in a vacuum. To really integrate AI deeply, you need buy-in from product teams, data teams, engineering teams, security teams. You need to invest in training. You need to change how people work. That's a lot of organizational coordination for a department that historically hasn't had it.

The fifth barrier, and this is less obvious, is cultural. Customer service is built on the principle that every customer problem is unique and deserves individual attention. That's a good principle. It creates good experiences. But it also makes teams skeptical of automation. There's a genuine tension between the human-centered philosophy of customer service and the efficiency-seeking approach of automation.

Teams that move past initial deployment do something interesting: they don't resolve that tension by choosing sides. They choose both. They use AI to handle what it can do well—handling volume, catching patterns, escalating the right things to people—while creating more space for human judgment on the conversations that actually need it.

The ROI Gap: Why Mature Deployments Deliver Exponentially Better Returns

Let's talk about money, because this is where the deployment gap shows up most clearly.

When teams implement AI for the first time, the ROI math is simple. You automate a process that used to take a human 5 minutes. AI does it in 30 seconds. You've saved 4.5 minutes per conversation. Multiply that by hundreds or thousands of conversations per month, and suddenly you're looking at significant cost savings.

That's real. And it's why 62% of all teams report improved customer service metrics since implementing AI.

But here's the thing: that's the easy ROI. It's easy to see, easy to measure, and it plateaus fast. Once you've automated all the simple stuff, the remaining work doesn't get faster at the same rate.

But when you look at teams that have reached mature deployment, something different happens. Their ROI doesn't plateau. It actually accelerates.

Why? Because the value of AI isn't primarily in speed. It's in what that speed buys you.

Let me explain that with a formula. The traditional customer service ROI calculation looks something like this:
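One way to write such a calculation, as a sketch rather than a definitive formula: the symbols below are assumed names for the quantities discussed here, with $S_{\text{labor}}$ for labor savings, $G_{\text{quality}}$ for the value of quality gains (retention, lifetime value), and $C_{\text{AI}}$ for the total cost of the AI investment.

```latex
\[
\mathrm{ROI} = \frac{S_{\text{labor}} + G_{\text{quality}} - C_{\text{AI}}}{C_{\text{AI}}}
\]
```

Under these assumptions, when $G_{\text{quality}}$ is close to zero the expression reduces to $(S_{\text{labor}} - C_{\text{AI}}) / C_{\text{AI}}$.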

For initial deployment, the quality gains are minimal. You're mostly getting cost savings. So the formula simplifies to mostly just labor efficiency.

But for mature deployment, something shifts. As AI handles more of the volume and more of the easy work, human agents have more time to focus on complex conversations and on improving the overall experience. Suddenly, quality gains aren't just nice-to-haves. They become significant. Customer satisfaction goes up. Churn goes down. Lifetime value increases.

And here's where it gets interesting: those downstream effects are way bigger than the direct labor savings.

Teams with mature AI deployment report improved metrics at 87%, compared to 62% for teams in earlier stages. That's not just a 25-point difference. That's a completely different distribution of where value is coming from.

More importantly, teams with mature deployments are significantly more likely to actually measure their ROI. This matters because measurement drives investment. If you can't prove that your AI investment paid for itself, you're probably not getting more budget next year. You're probably reducing scope instead. That's when teams start losing momentum.

Let's put some numbers to this. Say you implement AI for customer service and it handles 30% of your volume automatically. If the average cost per ticket is

But that's assuming your team size stays the same. In most cases, you either reinvest that capacity into more volume, or you keep your headcount the same and let your team breathe. Both are good outcomes, but they're not as easy to measure as a simple cost reduction.
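To make the direct-savings arithmetic concrete, here is a minimal sketch. Only the 30% automation share comes from the text above. The ticket volume and both per-ticket costs are hypothetical placeholders, and `direct_monthly_savings` is an illustrative helper, not a standard formula.

```python
def direct_monthly_savings(tickets_per_month: int,
                           automated_share: float,
                           cost_per_human_ticket: float,
                           cost_per_ai_ticket: float = 0.0) -> float:
    """Estimate monthly savings from tickets AI resolves instead of humans."""
    if not 0.0 <= automated_share <= 1.0:
        raise ValueError("automated_share must be between 0 and 1")
    automated_tickets = tickets_per_month * automated_share
    # Savings are the per-ticket cost difference times the automated volume.
    return automated_tickets * (cost_per_human_ticket - cost_per_ai_ticket)


# Hypothetical inputs: 10,000 tickets per month, 30% automated (from the text),
# $8.00 per human-handled ticket and $1.00 per AI-handled ticket (assumed).
savings = direct_monthly_savings(10_000, 0.30, 8.00, 1.00)
print(f"Estimated direct savings: ${savings:,.2f} per month")
```

This only captures the cost-reduction view. If the freed capacity is reinvested in more volume or a less stretched team, the benefit is real but will not show up in this number.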

Now imagine you're a mature deployment team. You've integrated AI into your entire workflow. It's handling 50% of volume, but more importantly, it's handling the full range of volume—from simple to complex. The easy stuff, the medium stuff, and even some of the complex stuff. Your human agents are now spending 40% of their time on the actual conversations that need human judgment, and 60% of their time on analysis, process improvement, and strategic work that actually reduces your operational costs way more than the direct labor savings.

Your customers are happier because they're getting better answers. Your team is happier because they're doing more meaningful work. And your business is happier because customer lifetime value went up, churn went down, and upsell opportunities increased.

That's a completely different ROI story.

Teams with mature AI deployments report 87% improved metrics, compared to 62% for initial deployments, highlighting how reported gains grow with maturity.

The Quality Inflection Point: When Efficiency Becomes Excellence

Here's something that jumped out of the research: the priority system completely changed.

A year ago, 28% of leaders cited customer experience improvement as their top priority for customer service. That was a meaningful minority, but it wasn't the main thing. The main thing was efficiency. How do we handle more tickets with the same team?

Now? 58% of leaders cite customer experience as the top priority. That's more than doubled in a year.

That's not a random shift. That's the natural consequence of what happens when AI actually works.

When AI handles the easy work—the questions that have standard answers, the simple routing decisions, the obvious categorizations—it frees up human time for things that actually require human judgment. And once that happens, the question changes from "are we efficient enough?" to "are we good enough?"

Those are different problems with different solutions.

Efficiency is mostly a technology problem. You can solve it with better algorithms, better automation, smarter rules. Quality is a people and process problem. You can't automate your way to excellence. Excellence requires the right person having the right information at the right moment and making a good judgment call.

When most of your team is stuck answering repetitive questions, they can't do that. They're too busy. They're tired. Their job is to handle volume, not to think deeply about customer problems.

But when AI is handling the volume, suddenly people have the space to actually think. And when people have the space to think, they start making better decisions. They start seeing patterns in what customers are struggling with. They start suggesting process changes that would help. They start genuinely wanting to improve the experience.

That shift is huge. It's also hard to measure with traditional metrics. But it shows up in quality scores, in customer satisfaction surveys, in retention rates. And it's where mature deployment teams are pulling away from everyone else.

The bar for AI has moved, in other words. It used to be: does it work? Now it's: is it good? And that's a much higher bar. But it's also where the real competitive advantage is.

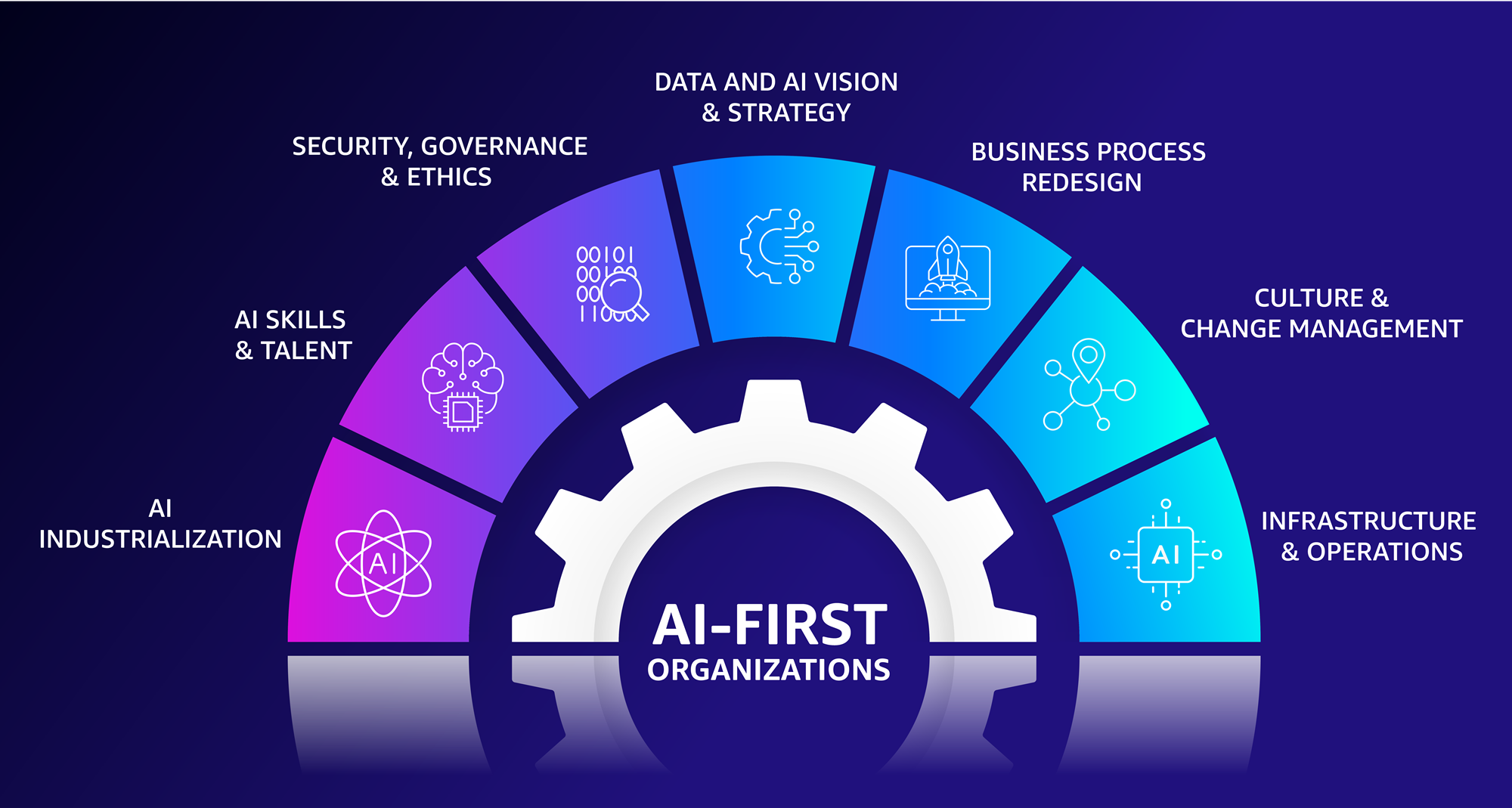

Workflow Reorganization: How AI Is Reshaping Customer Service Operations

When AI actually integrates deeply, it doesn't just change what your team does. It changes your entire operation.

We're seeing teams that have reached maturity report spending significantly less time on support volume. At initial deployment, 16% of teams say they're spending less time on volume. At maturity, that jumps to 28%. Nearly double.

But here's the thing: that's not because AI is replacing people. It's because the work is changing shape.

When AI starts handling significant portions of your volume, the skills you need on your team start to shift. You still need people who understand customer problems and can solve them. That doesn't change. But you also start needing people who understand how AI works and can improve it. You need people who can analyze patterns in AI behavior and decide if it's getting smarter or dumber. You need people who can manage the transition of work from human to AI and back again.

New roles are emerging that didn't exist two years ago. Prompt engineer. AI trainer. Conversation analyst. Workflow optimizer. These aren't made-up roles in some futuristic company. Real organizations are hiring for these positions right now because they've integrated AI deeply enough that they need people who specialize in making AI work better.

At the same time, the customer service role itself is evolving. It's becoming more specialized. Some of your team will focus on the high-complexity conversations that AI can't handle. Some will focus on validation—checking what AI did and making sure it's correct. Some will focus on improvement—analyzing patterns and feeding that back into the system. Some will focus on strategy—thinking about which problems AI should handle next and why.

This reorganization is actually a major driver of ROI for mature teams. You're not just getting efficiency from AI. You're getting efficiency from having the right people in the right roles, doing work that actually needs to be done.

The other thing that's happening is that support is becoming more strategic. It's not just a cost center anymore. It's a data center. Every conversation tells you something about what customers are struggling with, what's working, what's not. Teams that integrate AI deeply are mining that data and using it to improve the product itself.

Fifty-two percent of organizations are actively planning to scale AI to other departments like customer success, marketing, and sales in 2026. Why? Because customer service has proven that it works. And once you've proven it works in one place, the obvious next step is to expand it.

But there's something more interesting happening too. Support is creating the blueprint for how AI should be deployed across the organization. It's the first place many companies are figuring out which problems are suitable for AI, how to implement it without breaking things, how to measure whether it's actually helping, and how to improve it over time.

That's enormously valuable. And it's why the companies that get customer service right are pulling ahead in the AI race overall.

In the past year, the focus of customer service leaders has shifted significantly from efficiency (72% to 42%) to customer experience (28% to 58%). This reflects the impact of AI in handling routine tasks, allowing more focus on quality. Estimated data.

The Measurement Challenge: Proving Your Investment Actually Paid Off

Here's the uncomfortable truth: most teams can't prove their AI investment paid off.

Not because it didn't. But because they can't measure it.

When you're in initial deployment, the metrics are straightforward. Volume handled: check. Response time: check. Cost per ticket: check. These are easy to measure. Your system was already tracking these numbers before you implemented AI. Now you're just comparing before and after.

But as you move deeper into deployment, the value starts coming from subtler places. It's not just "we answered more questions." It's "our answers are better, so customers are happier, so they stay longer, so lifetime value went up." It's harder to draw a direct line from "we implemented this AI tool" to "revenue went up."

Teams with mature deployments are significantly more likely to report that they can measure their ROI. That's partly because they've had more time to set up measurement infrastructure. But it's also because they're thinking about ROI differently.

They're not just asking: did the automation save us money? They're asking: did the AI investment make the business better? Those are very different questions.

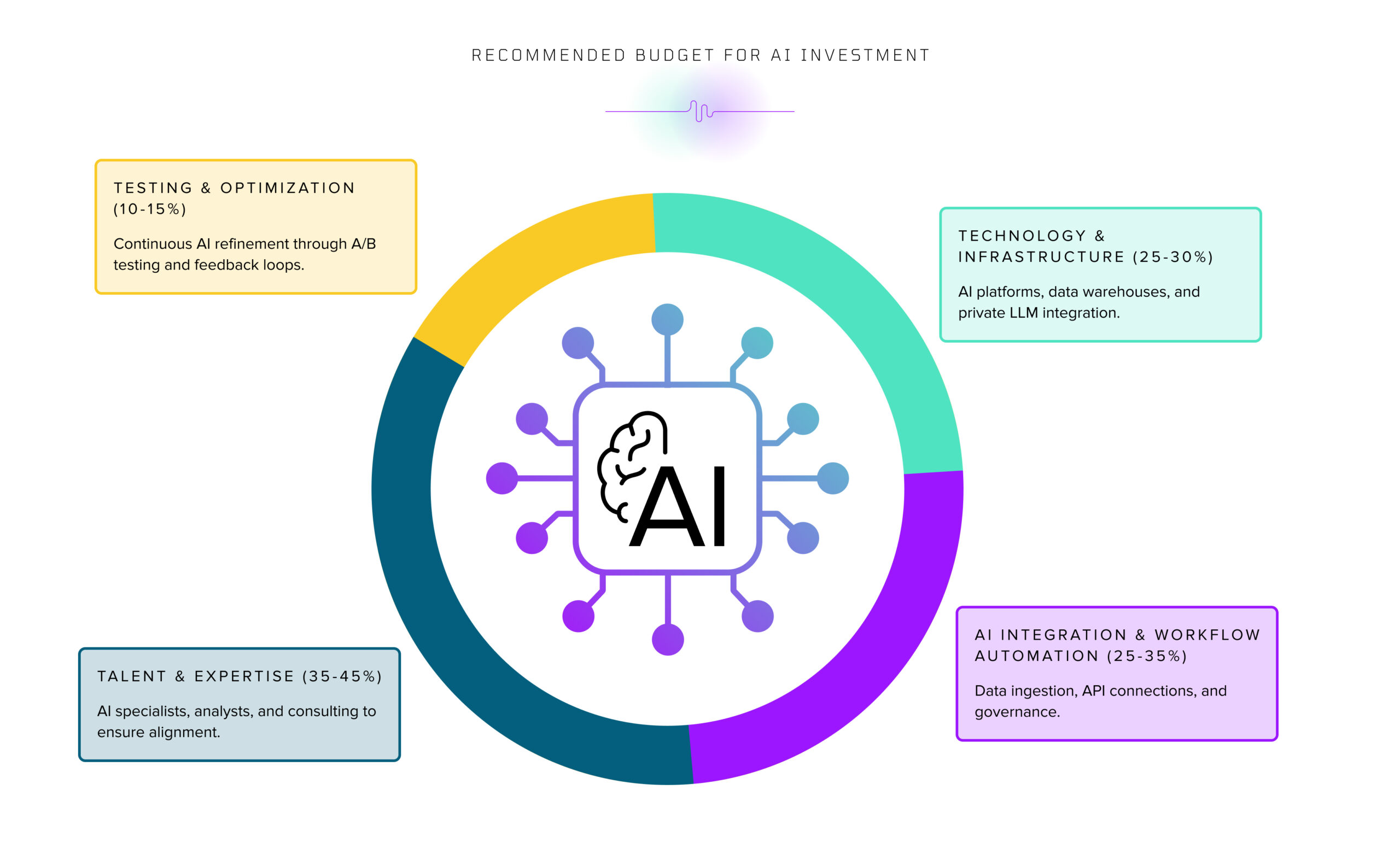

The measurement framework for mature deployments typically includes:

- Efficiency metrics: Volume handled, response time, cost per conversation

- Quality metrics: Satisfaction scores, resolution rate, follow-up conversation reduction

- Satisfaction metrics: Customer satisfaction, customer effort score, net promoter score

- Revenue metrics: Churn rate reduction, lifetime value increase, upsell opportunities created

- Operational metrics: Team engagement, training time, ramp-up speed for new hires

With that framework, you can actually draw lines from AI investment to business outcomes. You can say: we implemented AI, which freed up our team to focus on quality, which increased satisfaction, which reduced churn, which meant 3% of customers who would have left stayed, which at

That's a measurement story you can take to executive leadership. That's the kind of story that gets you more budget next year.
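The chain from churn reduction to revenue can be sketched in a few lines. Only the 3% figure comes from the text, read here as 3% of the customer base being retained. The customer count, average lifetime value, and AI spend are hypothetical, and both function names are illustrative.

```python
def retained_revenue(customers: int,
                     churn_reduction: float,
                     avg_lifetime_value: float) -> float:
    """Revenue kept because some customers who would have left stayed."""
    saved_customers = customers * churn_reduction
    return saved_customers * avg_lifetime_value


def simple_roi(total_benefit: float, total_cost: float) -> float:
    """ROI as net benefit divided by cost."""
    if total_cost <= 0:
        raise ValueError("total_cost must be positive")
    return (total_benefit - total_cost) / total_cost


# Hypothetical inputs: 20,000 customers, 3% retained who would have churned
# (from the text), $1,500 average lifetime value, $250,000 annual AI spend.
kept = retained_revenue(20_000, 0.03, 1_500.0)
roi = simple_roi(kept, 250_000.0)
print(f"Retained revenue: ${kept:,.0f}, ROI: {roi:.0%}")
```

The point of the sketch is the shape of the argument: each link in the chain is a number someone can challenge, which is what makes the story credible to leadership.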

Cross-Functional AI: The Next Frontier for Competitive Advantage

Customer service has been the proving ground for AI in most organizations. It's the right place to start. The problems are clear, the measurement is relatively straightforward, and the stakes are high but contained.

But it's not the end destination. It's the beginning.

Half of organizations are actively planning to scale AI to other departments. The top targets are customer success, marketing, and sales. Why those? Because they're all customer-facing. They all have similar problems to customer service: high volume, repetitive work, opportunities for automation and improvement.

But here's the interesting thing: the companies that are winning in customer service aren't just rolling out AI to those other departments and hoping for the best. They're using the blueprint they created in customer service.

They know what works: deep integration, not surface-level automation. Continuous improvement, not set-it-and-forget-it. Measurement focus, not just implementation focus. Cross-functional collaboration, not siloed teams.

The companies that took their time in customer service and got it right are now able to move fast in other departments. They know the pitfalls. They know what to measure. They know how to organize teams. They're basically running an AI playbook that they've already validated.

The companies that stayed at surface-level deployment in customer service? They're going to make the same mistakes in sales and marketing. They're going to implement AI for efficiency, declare victory when the first wave of automation pays off, then wonder why they're not seeing the bigger benefits their competitors are seeing.

The strategic implication here is significant. The companies that are furthest ahead in customer service AI are building organizational capability that's going to compound as they expand AI to other areas. They're not just deploying a tool. They're building a company-wide capacity for AI integration.

That capacity is hard to catch up to. It's not just technology. It's culture, process, measurement, skills. All of that builds over time.

Estimated data showing the progression from exploration to mature deployment, with AI handling an increasing percentage of work. Mature deployment sees AI deeply integrated, handling 70% of tasks.

The Skills Gap: What Your Team Actually Needs to Succeed

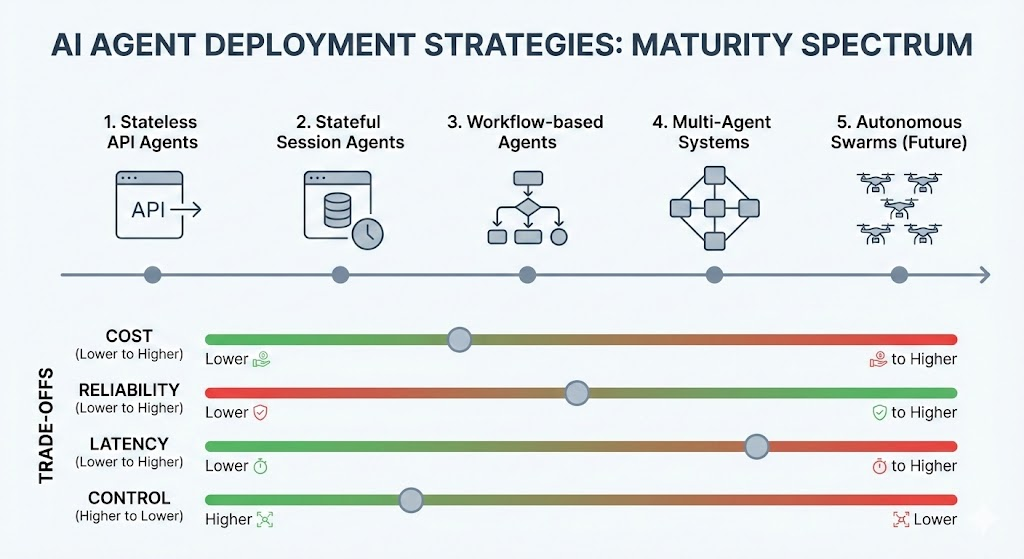

Deploying AI requires different skills at different levels of maturity.

At initial deployment, you mostly need implementation skills. How do you set up the tool? How do you write the rules? How do you integrate it with your existing systems? These are technical skills, and they're well-documented. There are courses. There's documentation. There are consultants who can help.

At progressive deployment, you start needing different skills. You need people who understand what problems are suitable for AI to solve. You need people who can look at a workflow and see where AI could fit in. You need people who can explain to non-technical stakeholders why they should invest more in AI. You need people who can manage change—because when you expand AI, you're changing how people work, and that creates friction.

At mature deployment, you need yet another set of skills. You need people who can analyze data deeply. Who can look at 1000 conversations and spot patterns. Who can understand why AI is making certain decisions and whether those decisions are good. Who can write prompts that make AI work better. Who can design experiments to test whether a change to your AI system actually helps.

These are rare skills. And they're the bottleneck for most teams trying to go deeper.

Where do you get these skills? Partly from training. There are bootcamps and courses and certifications emerging for AI literacy. Partly from hiring. Some companies are bringing in people from data science or machine learning backgrounds and teaching them customer service. And partly from building on your bench: taking your best people and training them deeply on AI.

The companies that are succeeding are the ones that are investing in skills right now, before the talent war heats up. In six months, everyone's going to want AI-fluent people. The organizations that already have them will have a competitive advantage.

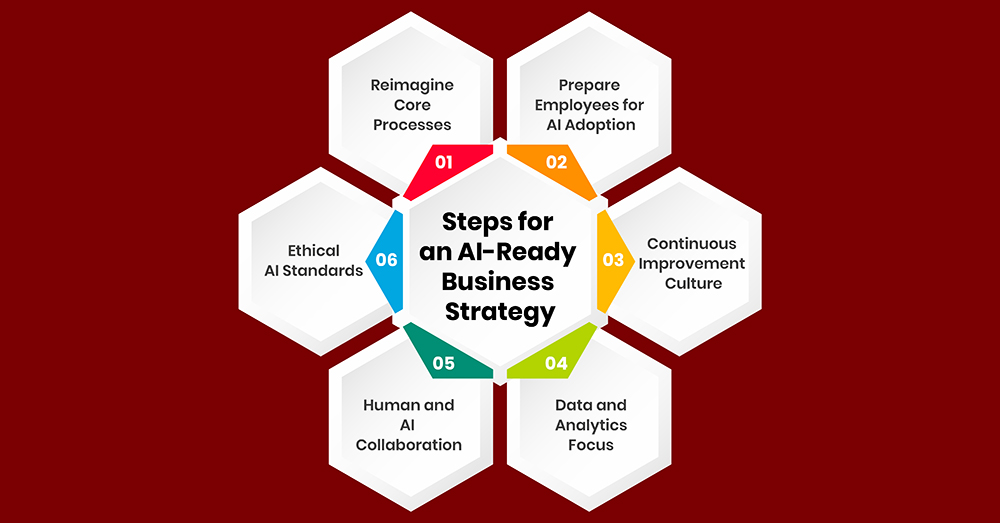

The Implementation Roadmap: Getting from Here to Mature Deployment

Okay, so you're in initial deployment or even just exploring. How do you actually get to mature deployment?

It's not one smooth ramp. It's more like a ladder, with distinct steps.

Step 1: Establish Clear Measurement. Before you go deeper, you need to know where you are now. What are your baseline metrics? Volume handled per month? Average response time? Customer satisfaction? Churn rate? If you can't measure these now, you won't be able to measure whether you've improved. This is unsexy but critical.

Step 2: Expand Scope Carefully. Don't just turn up the dial on your AI. Instead, identify one new type of conversation or workflow that you think AI could handle better than it currently does. Try it in a small subset of your volume. Measure what happens. Learn from it. Then expand or iterate.

Step 3: Build Feedback Loops. As AI handles more work, you need feedback. What conversations is it succeeding at? What's it failing at? Where is the AI making bad decisions? You need to channel that information back into the system so it can improve. This is where many teams fail. They implement AI, declare victory, and never measure whether it's actually improving over time.

Step 4: Reorganize Around AI. At some point, you need to have people whose primary job is making AI work better. Maybe it's 20% of one person's job. Maybe it's 100% of three people's jobs. But you need to allocate resources to improvement, not just operation.

Step 5: Connect to Other Departments. Once you've proven it works in customer service, the question becomes: where else could this work? Talk to product, sales, marketing. See where they have similar problems. Start planning the next deployment.

Step 6: Measure Indirect Impact. Move beyond efficiency metrics. Start tracking quality, satisfaction, and revenue impact. This is where the real business case becomes clear.

This isn't a six-week journey. It's more like a six-month to two-year journey depending on your starting point and your organization's ability to move fast. But each step compounds on the previous one.
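The first three steps lend themselves to a small sketch: capture a baseline, route a fixed share of conversations to a pilot, and tally where the AI fails. Everything here is an assumption for illustration, including the metric names, the hash-based bucketing, the topic labels, and the sample numbers. It is one possible shape, not a prescribed implementation.

```python
import hashlib
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class Snapshot:
    """Step 1: a set of metrics captured at one point in time."""
    avg_response_minutes: float
    csat: float  # customer satisfaction score on a 0-100 scale


def change_vs_baseline(baseline: Snapshot, current: Snapshot) -> dict[str, float]:
    """Current minus baseline for each tracked metric."""
    return {
        "avg_response_minutes": current.avg_response_minutes - baseline.avg_response_minutes,
        "csat": current.csat - baseline.csat,
    }


def in_pilot(conversation_id: str, pilot_share: float) -> bool:
    """Step 2: deterministically send a fixed share of conversations to the pilot."""
    digest = hashlib.sha256(conversation_id.encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:8], "big") / 2**64  # value in [0, 1)
    return bucket < pilot_share


def failure_rate_by_topic(outcomes: list[tuple[str, bool]]) -> dict[str, float]:
    """Step 3: share of AI-handled conversations per topic that did not succeed."""
    totals: Counter = Counter()
    failures: Counter = Counter()
    for topic, succeeded in outcomes:
        totals[topic] += 1
        if not succeeded:
            failures[topic] += 1
    return {topic: failures[topic] / totals[topic] for topic in totals}


# Hypothetical usage with made-up numbers.
baseline = Snapshot(avg_response_minutes=12.0, csat=78.0)
after_pilot = Snapshot(avg_response_minutes=9.0, csat=81.0)
print(change_vs_baseline(baseline, after_pilot))
print(in_pilot("conv-42", pilot_share=0.10))
print(failure_rate_by_topic([("billing", True), ("billing", False), ("refunds", True)]))
```

Hashing the conversation ID, rather than picking at random each time, keeps a given conversation in the same group across retries, which makes before-and-after comparisons easier to trust.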

Teams with mature AI deployments report improved quality and consistency at nearly double the rate of those with surface-level integration. Estimated data highlights the significant impact of deeper AI integration on ROI measurement.

Competitive Risk: The Cost of Standing Still

Let's talk about the strategic risk of not moving deeper into AI deployment.

Right now, there are companies in every industry that are moving toward mature AI deployment. They're the ones that will be pulling away in the next 12-24 months. Not because they have a secret. But because they're integrating AI into the core of how they operate, and that creates compounding advantages.

When they handle conversations better, customers stick around longer. When customers stick around longer, these companies learn more about them. When they know more, they can serve them better. When they serve them better, customers stick around even longer. That's a flywheel.

Meanwhile, teams that stay at initial deployment will keep getting benefits, but those benefits will plateau. They'll handle the easy stuff faster, but the hard stuff will still be hard. Their team will still be busy. Their quality will still be inconsistent. Their customer experience will still be okay, but not great.

And then one day, they'll realize that their competitor is offering a better experience at a lower cost with a smaller team. How did that happen? Incremental improvements, compounded over 18 months.

The competitive risk isn't that AI is coming. You already know it's coming. The risk is that you're not moving as fast as the people you're competing against. And every month you wait, that gap gets bigger.

Making the Case Internally: Getting Budget and Buy-In

Here's the thing that's not in the data but shows up in every conversation: it's hard to get buy-in for deeper AI investment after the initial wave.

You've already convinced people to try it. You've already gotten some budget. You've already done the implementation. And now you're asking for more money and more organizational attention to go deeper.

The business case is there. We've talked about the ROI. But from an internal politics standpoint, it's harder to make the case for the second wave than the first.

Here's what actually works:

Lead with measurement: Show the metrics from your initial deployment. Be specific. "We implemented AI six months ago. We're now handling 35% of our volume with AI, response times are down 40%, and customer satisfaction is up 8 points." That's a story you can tell.

Connect to strategy: Don't just talk about efficiency. Talk about how deeper AI integration helps you achieve your business goals. "Our goal is to improve customer lifetime value by 15% this year. Data shows that quality and consistency matter more than speed. Deeper AI integration lets us improve both simultaneously."

Show the competitive gap: Help your leadership understand that this isn't a "nice to have." It's a "must have." "Our competitors have been talking about this for months. Some of them are probably already building capability here. If we wait six more months, we'll be six months behind."

Prove you can execute: Don't ask for a massive budget to overhaul everything. Ask for a small budget to run a proof-of-concept. Prove you can execute, then ask for more.

Identify the blockers: Be honest about what's getting in the way. Is it data quality? Is it team skills? Is it organizational process? If you can identify the real blocker and show you're thinking about how to solve it, you'll build credibility for the second wave.

Building Your AI-Ready Organization: Long-Term Capability

The real win isn't deploying AI once. It's building an organization that's capable of deploying AI repeatedly, in different contexts, and getting better at it over time.

That requires some foundational stuff.

First, data infrastructure: You need clean, organized data. Lots of companies don't have this. They've been operating on partially structured data for years. But AI needs good data. It needs lots of it. It needs it to be accurate. If your data is a mess, your AI is going to be a mess. So step one is often fixing data infrastructure, which is not sexy but essential.

Second, measurement infrastructure: You need systems that actually track what you care about. Not just customer service metrics, but the full range of things that matter to your business. If you can't measure it, you can't improve it. And if you can't prove it, you can't get budget for it.

Third, AI literacy: You need a culture where people understand what AI can and can't do, and where non-technical people can participate in AI decisions. This means training. It means bringing in speakers. It means creating spaces where people can experiment with AI tools. It means celebrating successes and learning from failures.

Fourth, governance and safety: As you integrate AI deeper, you need processes to make sure it's not causing harm. Are conversations being escalated when they should be? Are quality standards being maintained? Is bias being introduced? These are real risks, and you need systems to catch them.

Fifth, continuous learning: The AI landscape is changing fast. The tools are improving. New capabilities are emerging. Your team needs time and space to learn and experiment. This might look like dedicated AI exploration time, innovation teams, or participation in industry conferences. Whatever form it takes, it matters.

Organizations that build this foundation are the ones that can move fast on AI deployment. Organizations that skip it will struggle.

Common Pitfalls: What Gets in the Way and How to Avoid It

Based on what we're seeing, there are some really common patterns where teams get stuck.

Pitfall 1: Optimizing for the wrong metric. Many teams optimize for volume handled or cost per interaction. Those metrics push you in the direction of surface-level automation. But they don't necessarily lead to better outcomes for customers. Better to optimize for something like "quality while handling acceptable volume" or "customer satisfaction at current cost."

Pitfall 2: Not planning for team change. When AI handles more work, your team structure needs to change. People need new skills or new roles. If you don't manage that transition, you end up with people who feel threatened by AI, and that creates organizational resistance. Better to be proactive about helping people understand what their new role will be.

Pitfall 3: Underestimating the data quality problem. AI only works as well as the data you train it on. If your training data is biased, incomplete, or inaccurate, your AI will be too. Fixing this is often the longest part of deploying AI. Many teams underestimate how long it takes.

Pitfall 4: Not iterating. You implement AI once, and then you leave it running. But the world changes. Customer expectations change. Your product changes. Your AI needs to change too. Teams that get mature deployment right are the ones that commit to continuous iteration, not one-time implementation.

Pitfall 5: Isolating AI to one department. Once you've proven AI works in customer service, you have a choice: share what you learned with other departments, or keep it to yourself to protect your own advantage. The smart play is to share. When other departments learn from your success, they can implement better the first time. That's a rising tide situation.

The Opportunity: How to Close the Gap Before It's Too Late

The deployment gap is real, and it's widening. But it's also an opportunity.

Right now, most teams are still at the initial or exploration stage. The companies that move to progressive or mature deployment in the next 12 months are going to have a huge advantage. They're going to have better metrics, happier customers, more engaged teams, and better business outcomes.

That window won't stay open forever. As more companies move deeper, the competitive advantage shrinks. In three years, mature deployment will probably be table stakes in most industries. You won't be winning because you have AI. You'll be winning because you have AI that's better than the other guy's AI.

But right now, in 2025, the gap is still there. And you can still close it.

Here's what that looks like in practice:

Start now. Not next quarter. Not next year. Now. Even if you're not ready, start thinking about what deeper deployment would look like. What workflows would you expand? What metrics would you track? What skills do you need? What blockers would you need to solve?

Measure where you are. Be honest about your current deployment level. Are you at the exploration, initial, progressive, or mature stage? If you're not sure, you're probably at initial. That's okay. That's where most people are. But you need to acknowledge it so you can make a plan to move.

Build a roadmap. Not a massive five-year strategy. A 12-month roadmap that takes you from where you are to the next level. What's the first step? What's the second step? What budget do you need? What timeline?

Get buy-in early. The CEO needs to understand this isn't just about customer service. It's about building organizational capability for AI. Once you have buy-in, the rest gets easier.

Invest in your team. Training, hiring, tools, infrastructure. These aren't costs. They're investments. And they're investments with a clear ROI.

Start measuring differently. Not just efficiency. Quality, satisfaction, revenue impact. Show how deeper AI integration is moving your business closer to your strategic goals.
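As a rough sketch of what "measuring differently" could look like, the snippet below compares a baseline period against the current one across efficiency, quality, and revenue. The metric names and every number in it are hypothetical placeholders, not figures from the survey data; swap in whatever your own systems actually track.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Metric:
    name: str
    category: str  # "efficiency", "quality", or "revenue"
    baseline: float
    current: float
    higher_is_better: bool = True

    def improvement_pct(self) -> float:
        """Percent change, signed so that a positive value always means 'better'."""
        change = (self.current - self.baseline) / self.baseline * 100
        return change if self.higher_is_better else -change


def scorecard(metrics):
    """Group each metric's improvement percentage under its category."""
    by_category = {}
    for m in metrics:
        by_category.setdefault(m.category, {})[m.name] = round(m.improvement_pct(), 1)
    return by_category


# Hypothetical figures, for illustration only.
metrics = [
    Metric("cost_per_ticket_usd", "efficiency", baseline=8.00, current=6.00,
           higher_is_better=False),
    Metric("csat_score", "quality", baseline=80.0, current=84.0),
    Metric("retained_revenue_usd", "revenue", baseline=1_000_000, current=1_050_000),
]

report = scorecard(metrics)
for category, values in report.items():
    print(category, values)
```

Putting all three categories in one report makes it harder to declare victory on cost per ticket alone while quality or revenue stays flat.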

Looking Ahead: The 2026 Outlook for AI in Customer Service

What we're seeing in 2025 is just the beginning of a larger shift.

87% of leaders plan to invest in AI for customer service in 2026. But not all of those investments will be equal. Some will be deepening what already exists. Some will be expanding to new use cases. Some will be building the infrastructure that should have been built the first time around.

The gap between initial and mature deployment will probably widen before it narrows. There's going to be a divergence where the winners pull further ahead, and the people stuck in initial deployment realize they need to invest more than they thought.

We'll also see AI spreading beyond customer service into customer success, sales, and marketing. The companies that get customer service right will move into those areas with a template. The companies that didn't get it right will make the same mistakes again.

There will be more regulation. More scrutiny. More conversations about bias, safety, privacy. That's good. It will force organizations to be more thoughtful about how they implement AI. It will make the gap between mature and immature deployments even wider, because mature deployments will have the governance and safety infrastructure in place.

And we'll see the emergence of new roles and skill sets. The AI literacy that's emerging now will be table stakes in two years. What's going to differentiate people is the ability to think strategically about where AI should go, not just how to implement it.

FAQ

What's the difference between initial and mature AI deployment?

Initial deployment means AI is handling specific, narrow tasks like answering simple FAQ questions or categorizing tickets. You're seeing benefits in efficiency and volume handling, but AI isn't integrated into core workflows. Mature deployment means AI is integrated throughout your operation, handling complex workflows, continuously improving over time, and delivering benefits across efficiency, quality, and revenue metrics. 87% of teams with mature deployment report improved metrics, versus 62% of teams at initial deployment.

Why should we invest in deeper AI integration if surface-level deployment is already saving us money?

Surface-level deployment gives you quick wins in efficiency, but those benefits plateau. Deeper integration unlocks quality improvements, better customer satisfaction, and downstream revenue benefits that are significantly larger. Teams with mature deployment are more likely to measure and prove their ROI, which means they get more budget next year. Initial deployment teams often plateau and struggle to justify further investment.

What are the common barriers to moving past initial deployment?

Teams typically get stuck because: (1) initial wins feel like complete victories and create complacency, (2) deeper integration is harder and requires more organizational coordination, (3) the value becomes harder to measure, (4) it requires team restructuring and skill development, and (5) there's cultural tension between human-centered customer service philosophy and automation. Overcoming these requires commitment to measurement, organizational change management, and expanding what you ask AI to do.

How do we measure ROI for deeper AI deployment when benefits are less obvious?

Move beyond just measuring efficiency (volume, cost per interaction). Track quality metrics (satisfaction, resolution rate), satisfaction metrics (NPS, effort score), and revenue metrics (churn reduction, lifetime value increase). Mature deployments typically show benefits across all three categories. The business case becomes much clearer when you can show that AI investment reduced churn by 2%.
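One way to turn a churn figure into a dollar amount is sketched below. It assumes the reduction is measured in percentage points, and the customer count, revenue per customer, and program cost are all made-up inputs chosen only to show the arithmetic. The function names are illustrative, not part of any particular tool.

```python
def annual_revenue_retained(customers: int,
                            revenue_per_customer: float,
                            churn_reduction_points: float) -> float:
    """Revenue kept in one year because fewer customers churned.

    churn_reduction_points is in percentage points (2.0 means churn fell
    by two points, for example from 12% to 10%).
    """
    customers_saved = customers * churn_reduction_points / 100
    return customers_saved * revenue_per_customer


def simple_roi(benefit: float, cost: float) -> float:
    """Net return per dollar spent: (benefit - cost) / cost."""
    return (benefit - cost) / cost


# Hypothetical inputs, for illustration only.
retained = annual_revenue_retained(
    customers=10_000,
    revenue_per_customer=1_200.0,
    churn_reduction_points=2.0,
)
roi = simple_roi(benefit=retained, cost=100_000.0)

print(f"Revenue retained: ${retained:,.0f}")
print(f"ROI: {roi:.0%}")
```

This deliberately ignores second-order effects such as expansion revenue or multi-year lifetime value, so treat the result as a conservative first estimate.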

What skills does our team need to move to mature deployment?

Initial deployment mainly needs technical implementation skills. Progressive deployment needs people who understand workflow design and change management. Mature deployment needs data literacy, analytical thinking, prompt engineering, experiment design, and strategic thinking about where AI should go next. Many of these skills can be developed from your existing team, but you may need to hire or contract specialist expertise in certain areas.

How long does it take to move from initial to mature deployment?

It typically takes 6 to 24 months, depending on your starting point, your organization's ability to move quickly, and how deep you want to go. There's no shortcut. You need time to gather data, test hypotheses, iterate, build team skills, and align stakeholders. The organizations that move fastest are the ones that commit resources (time, people, budget) upfront rather than treating it as a side project.

Should we expand AI to other departments after proving it works in customer service?

Yes, absolutely. Customer service is the ideal proving ground because the problems are clear and measurement is straightforward. But the real value comes from building organizational capability for AI and expanding it across the business. The companies that do this are building a capability advantage that compounds over time. Use the same methodology you learned in customer service, but don't just copy-paste the implementation.

What's the competitive risk if we don't move to deeper AI deployment?

Initial deployment gives you benefits for a few months or quarters, but those benefits plateau. Meanwhile, competitors who move to mature deployment will pull ahead on quality, cost, customer satisfaction, and revenue. In 2-3 years, mature deployment could be table stakes in your industry, meaning you'll need it just to compete, not to win. The window for differentiation is closing. Companies that move now are building capability advantages that will be hard to catch up to.

Conclusion: Your Call to Action

The data is clear: the deployment gap is widening, and it's not closing on its own.

82% of senior leaders invested in AI for customer service in 2025. 87% plan to keep investing in 2026. But only 10% of teams have reached mature deployment. That's a massive opportunity for the companies that can bridge that gap.

You already know AI works. The question now is whether you're going to stay at the surface level where the easy wins are, or whether you're going to go deeper.

Going deeper isn't harder because it's technically complicated. It's harder because it requires organizational commitment. It requires investing in your team. It requires being willing to change how you work. It requires measurement discipline. It requires patience to iterate instead of declaring victory after the first wave.

But the payoff is huge. Teams with mature deployment report quality and consistency improvements at nearly double the rate of initial deployment teams. They're more likely to actually measure their ROI. They're building organizational capability that scales to other departments. They're moving from "can we automate this?" to "how do we make this actually good?"

That's the difference between a company that's using AI and a company that's winning with AI.

The path is clear. Start with honest assessment of where you are now. Build a roadmap to get to the next level. Invest in measurement, team skills, and infrastructure. Get buy-in from leadership. Move fast but thoughtfully.

The companies that take this seriously in the next 12 months are going to be pulling away from everyone else. Make sure yours is one of them.

Your team has the capability to do this. Your organization has the resources. What's needed now is clarity about why it matters and commitment to actually doing it.

The deployment gap is widening. The question is: which side of it will you be on?

Key Takeaways

- Only 10% of teams have reached mature AI deployment even though 82% of senior leaders invested in 2025, creating a massive competitive opportunity

- Teams with mature deployment report improved metrics at 87%, compared to 62% for initial-stage teams, a 25-point gap

- The value of AI shifts from efficiency-focused (speed, cost reduction) at initial stages to quality-focused (satisfaction, consistency) at mature stages

- New roles and skill sets are emerging that didn't exist two years ago, requiring organizations to invest in team restructuring and capability building

- 52% of organizations plan to expand AI to customer success, marketing, and sales by 2026, making customer service the proving ground for enterprise-wide AI capability

