The Day a Million Developers' Code Got Stolen

You open VSCode on a Tuesday morning. You install what looks like a helpful AI coding assistant. It works great. You get on with your day. By the time you realize something's wrong, your entire project codebase is sitting on a server in China.

That's roughly what happened to more than 1.5 million developers who installed one of two malicious VSCode extensions. The extensions, ChatGPT – 中文版 and ChatMoss, appeared legitimate. They promised to speed up coding with artificial intelligence. They delivered on that promise. But they also did something else entirely: they systematically harvested sensitive data and sent it to unauthorized servers.

This wasn't a subtle attack. It was aggressive, multi-layered, and built on the assumption that most developers wouldn't notice until it was too late.

When security researchers at Koi Security uncovered the Malicious Corgi campaign, it became clear that the VSCode Marketplace, one of the most trusted software distribution channels in the developer world, had become a vector for sophisticated data theft. The attack raises hard questions about extension vetting, supply chain security, and what it means to trust an open ecosystem.

Here's what you need to know: what happened, how it happened, why it matters, and what you should do right now.

TL;DR

- 1.5M+ developers installed two fake AI extensions from the VSCode Marketplace

- Entire project files, not just metadata, were Base64-encoded and uploaded to Chinese servers

- Three attack vectors were used: real-time file monitoring, server-controlled commands, and hidden analytics SDKs

- The extensions worked perfectly as advertised, making them harder to detect than typical malware

- Microsoft was notified but the extensions remained available for days, continuing to harvest data

- If you installed either extension, your source code may have been compromised and should be treated as exposed

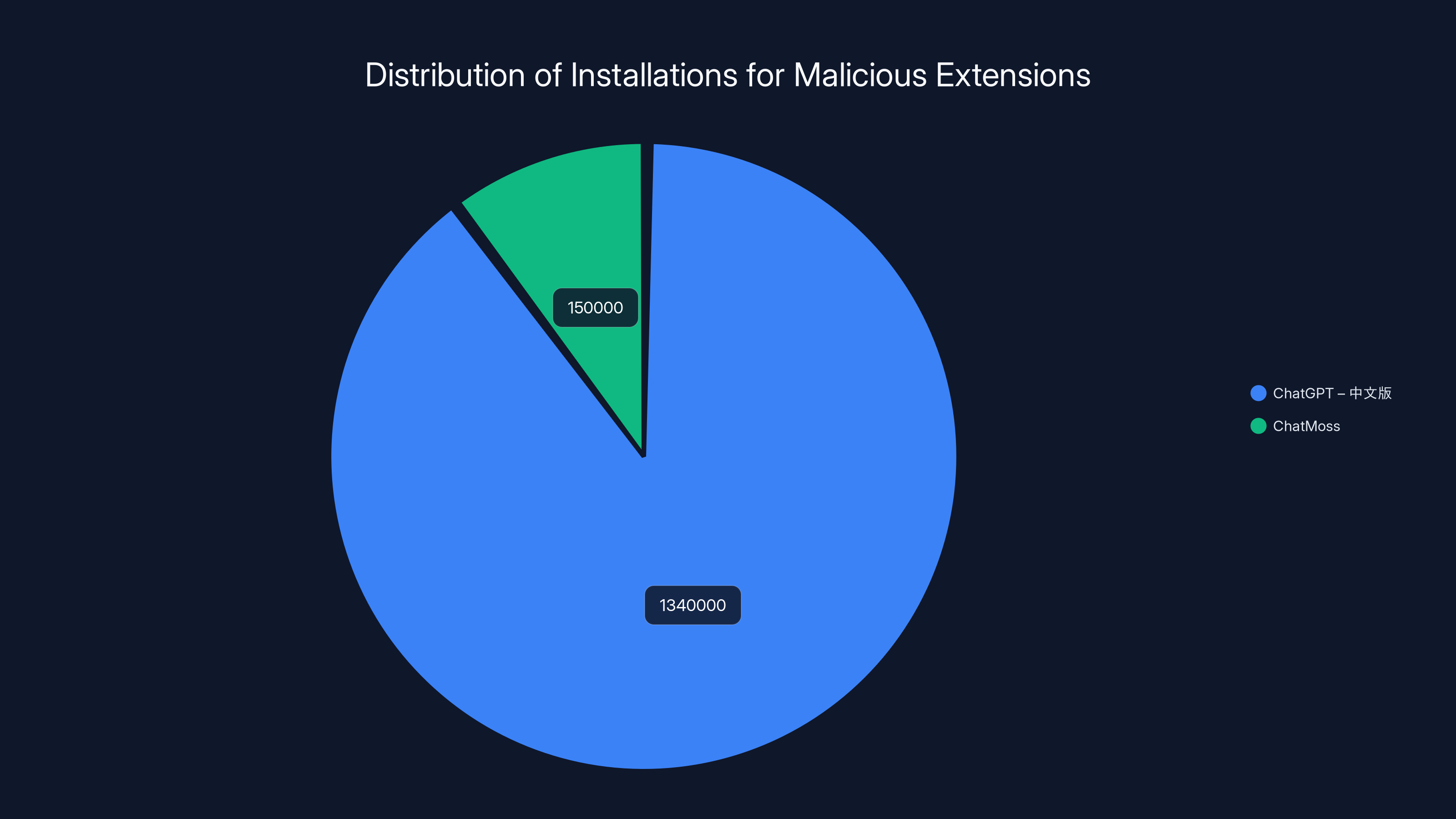

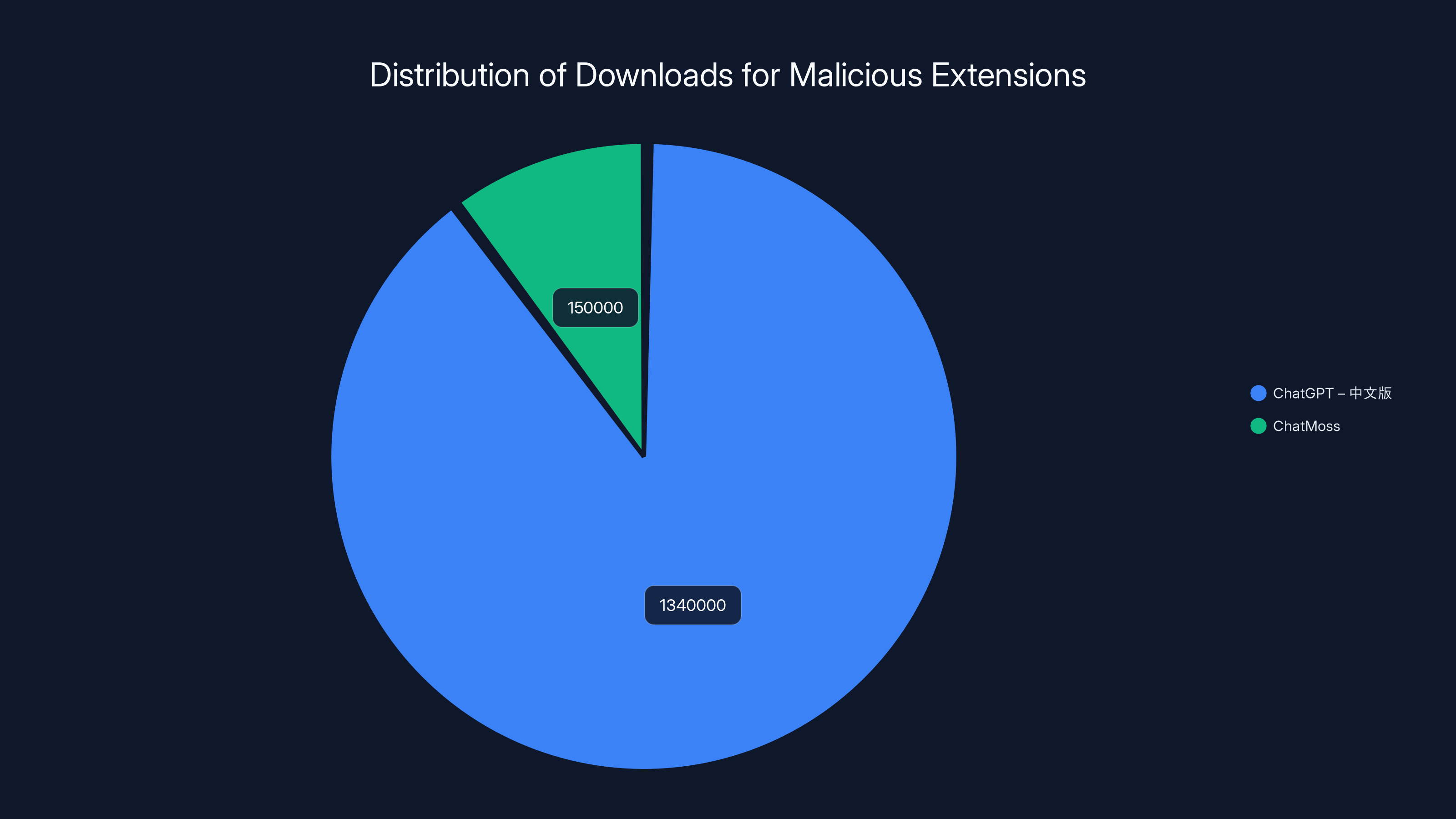

ChatGPT – 中文版 accounted for the majority of installations with 1.34 million, while ChatMoss had 150,000 installations. Estimated data.

What Actually Happened: The Malicious Corgi Campaign Explained

In early 2024, security researchers discovered that two VSCode extensions had been quietly exfiltrating developer data for weeks. The extensions in question were:

ChatGPT – 中文版 (Chinese version of ChatGPT)

- Publisher: WhenSunset

- Downloads: 1.34 million

- Installation period: Unknown, but active at time of discovery

ChatMoss (also listed as CodeMoss)

- Publisher: zhukunpeng

- Downloads: 150,000

- Installation period: Unknown, but active at time of discovery

What made this attack sophisticated wasn't the malware itself. It was the cover. Both extensions genuinely worked as AI coding assistants. They integrated with popular large language models. They provided real value to users. This wasn't ransomware that made your system unusable. It was a trojan horse that made your system more productive while simultaneously stealing your work.

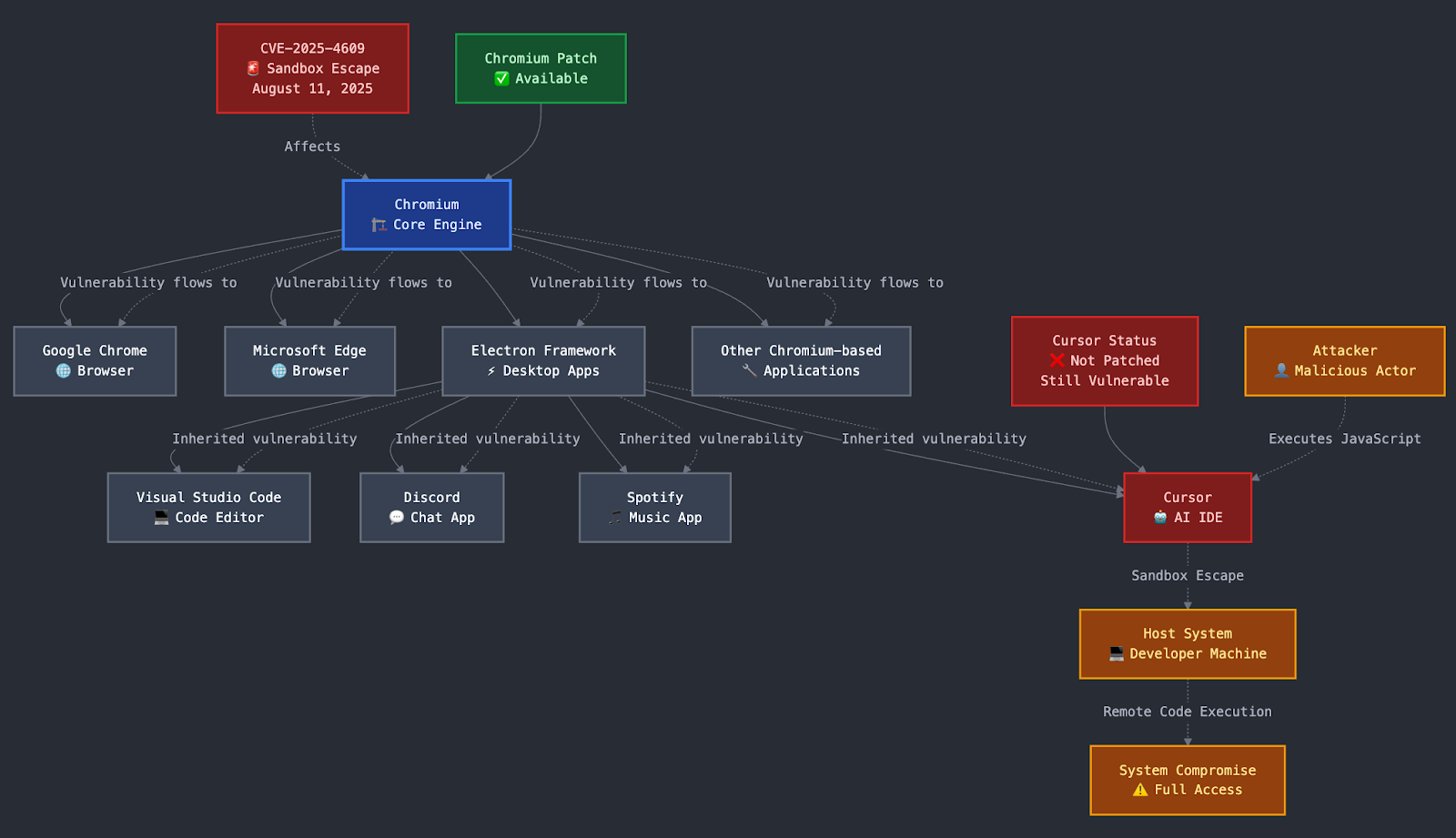

The infection vector was the VSCode Marketplace itself. Developers trust the official marketplace because Microsoft maintains it. An extension there should be safe. An extension there should have been vetted. The Malicious Corgi campaign exploited exactly that assumption.

Both extensions were part of the same operation, using identical exfiltration techniques and sending stolen data to the same command and control servers. This wasn't a coincidence. This was a deliberate campaign designed to cast a wide net and maximize the number of potential victims.

The research team at Koi Security identified the campaign through telemetry data and behavioral analysis. They spotted suspicious network traffic patterns, reverse-engineered the malicious code, and found the mechanisms designed to hide the theft from users.

By the time the threat was public, both extensions had already harvested data from millions of development environments across the world.

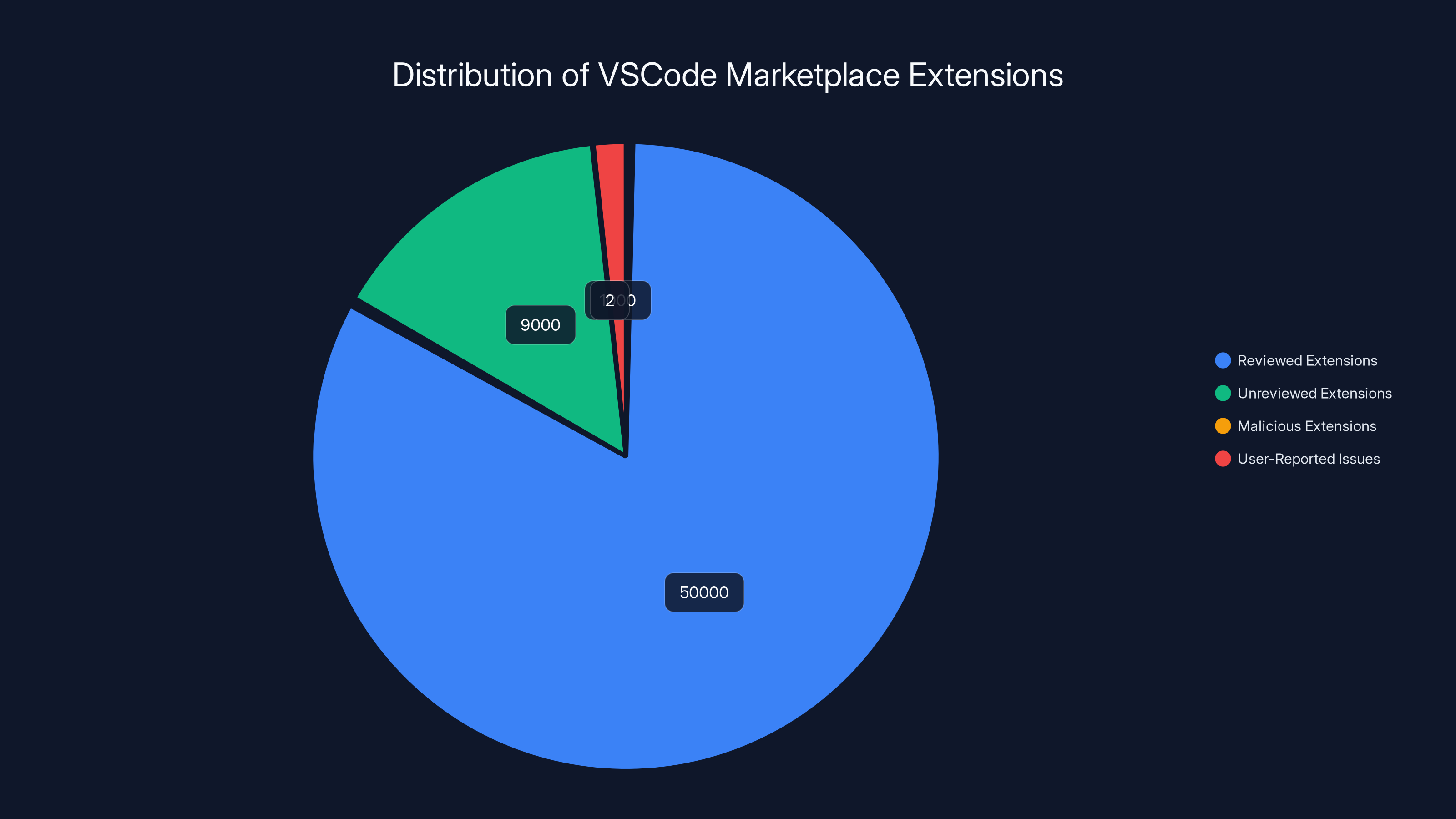

The VSCode Marketplace hosts over 60,000 extensions. Most are reviewed or user-reported, but a small number can slip through undetected. (Estimated data)

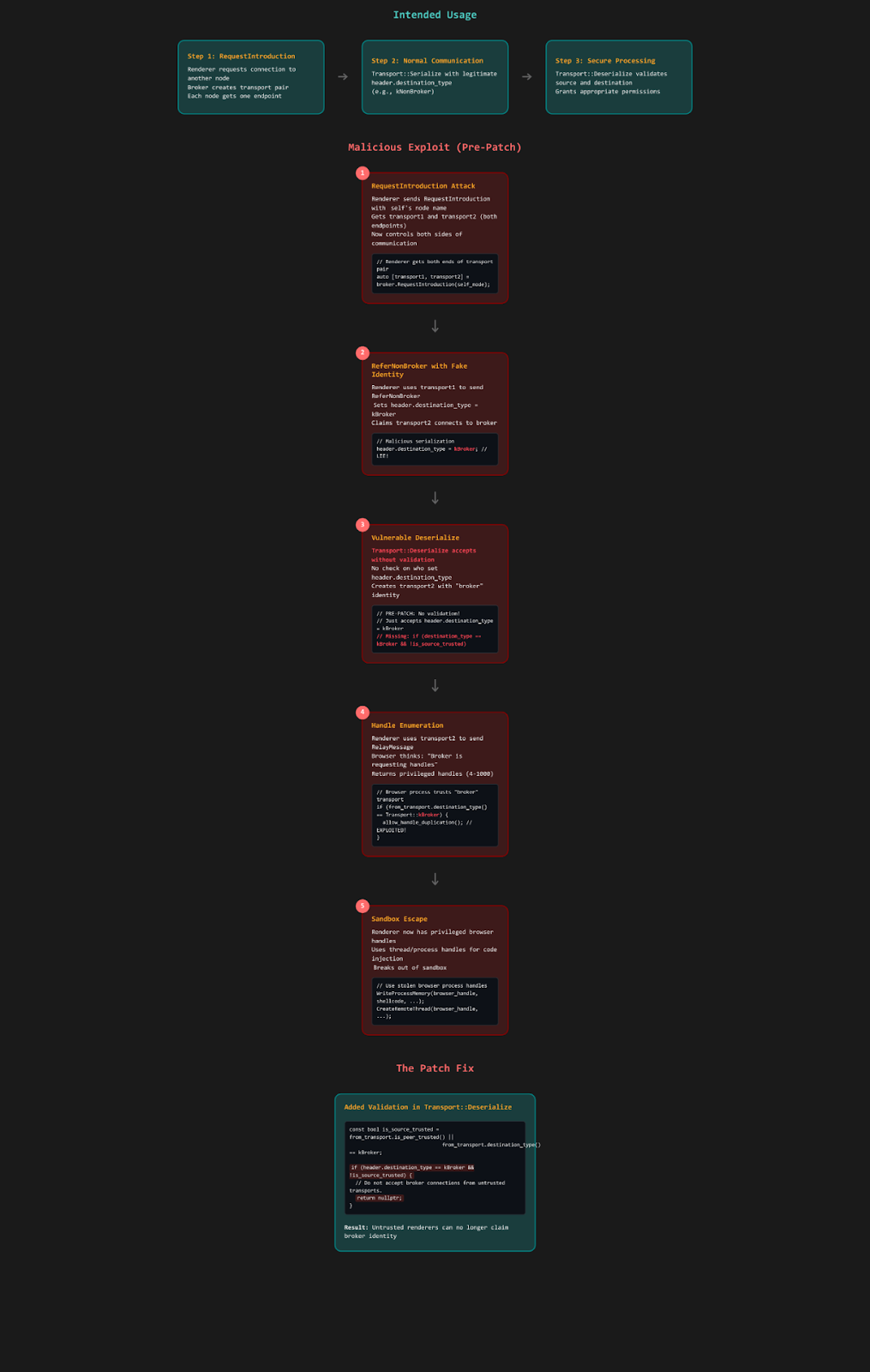

The Three Attack Mechanisms: How Your Code Got Out

Malware is only as effective as its ability to operate without detection. The Malicious Corgi campaign used three distinct mechanisms to pull data out of infected systems. Understanding how they worked explains why so few users noticed until the extensions were publicly flagged.

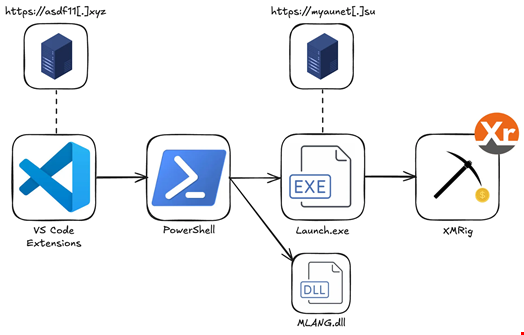

Real-Time File Monitoring and Automatic Exfiltration

The first mechanism was the most aggressive and the most revealing. The moment you opened any file in VSCode, the extension read its entire contents. Not just the filename. Not just metadata. The entire file. Every line of code. Every comment. Every API key accidentally left in a config file.

The extension then encoded that content in Base64 and sent it to a hidden iframe embedded in the extension's webview. This happened automatically, without any user action beyond opening the file. You didn't need to save. You didn't need to compile. Opening the file was enough for the theft to occur.

Consider what this means in practice. A developer working on a microservices backend opens a file containing database credentials. Stolen. A security engineer reviews a file with authentication tokens. Stolen. A developer working on a proprietary algorithm opens the implementation. Stolen. A data scientist loads a file with model training data. Stolen.

This wasn't selective data gathering. It was comprehensive surveillance of every code file you touched. The Base64 encoding served two purposes: it obscured the data in transit and it made the stolen data immediately usable by the attackers, who could decode it on their end to reconstruct your exact source code.

For most developers, this happened thousands of times per week. Every file you opened during development. Every file you reviewed in code review. Every file you opened while debugging. Each one triggered automatic exfiltration.

Server-Controlled Command Injection

The second mechanism was more flexible. The attackers could send commands from their servers that instructed the extension to upload specific files. This gave them the ability to target particular codebases, extract specific files they wanted, or trigger bulk data collection when they determined it was worth the risk.

This mechanism was capable of uploading up to 50 files in a single command. This wasn't random file selection. This was targeted exfiltration. If the attackers identified a particularly valuable target through the first mechanism, they could then use the second mechanism to extract entire directories or specific high-value assets.

The command injection approach meant the attack evolved in real-time. The attackers didn't need to update the extension code. They just needed to send new commands. This made the threat persistent and adaptable. They could change their tactics without the malware itself being modified.

Hidden Analytics SDKs and Behavioral Tracking

The third mechanism was almost insidious in its subtlety. The extensions loaded commercial analytics SDKs through hidden zero-pixel iframes. These SDKs tracked user behavior, built identity profiles, and monitored activity across the VSCode environment.

Why would data thieves care about tracking user behavior? Because it gave them intelligence about the targets. By understanding what code you wrote, how you structured your projects, what frameworks you used, and what timing patterns you followed, they could build profiles of valuable targets. They could identify which developers worked on sensitive systems. They could determine which projects were worth deeper exploitation.

The SDKs also served a secondary purpose: plausible deniability. If someone noticed suspicious network traffic and asked why an extension was communicating with external servers, the developers could claim they had implemented analytics to improve the tool. That would be technically true. It was analytics, just for a different purpose than the users understood.

Why These Extensions Looked Legitimate

The sophistication of the Malicious Corgi campaign becomes clear when you understand that both extensions genuinely worked as advertised. This wasn't a case of a broken tool that immediately revealed itself as malicious. This was a functional tool that also happened to be stealing your data.

ChatGPT – 中文版 promised integration with OpenAI's API to provide Chinese language support for coding tasks. It delivered. Users could ask it to explain code, generate functions, or help with debugging, and it worked. The extension had a user rating. People left reviews saying it was helpful. Some people bought premium access to unlock additional features.

The functionality wasn't fake. The theft was the hidden layer underneath.

ChatMoss similarly promised AI-powered coding assistance. It worked the same way legitimate AI coding tools work. You highlight a problem, ask for a solution, and the tool generates code. It was convenient. It was useful. It saved time. For many developers, the value provided by the tool outweighed any suspicion they might have had about why an unknown publisher was offering it for free.

This is the trap that makes supply chain attacks through open marketplaces so dangerous. A tool doesn't need to be malicious in its primary functionality to be malicious overall. It just needs to provide enough value that users don't uninstall it, and enough cover that the hidden malicious behavior doesn't get noticed.

Think about your own behavior. You install an extension. If it works, you keep it. If it breaks your workflow, you uninstall it. You don't regularly inspect the network traffic it generates. You don't audit the files it accesses. You assume that if something is on the official VSCode Marketplace, it's been reviewed and approved.

That assumption was the vulnerability the attackers exploited.

The ChatGPT – 中文版 extension had significantly more downloads than ChatMoss, indicating a wider reach and potential impact. Estimated data based on reported figures.

The Data Targets: What Was Stolen

The Malicious Corgi campaign didn't discriminate about what data it collected. If it could be found in a file that a developer opened, it was vulnerable.

Source Code Exfiltration

The most obvious target was source code itself. Every line of code you wrote while the malicious extension was installed was uploaded to external servers. For developers working on proprietary software, this represents complete intellectual property theft. Competitors could obtain your algorithms. Malicious actors could identify security flaws in your code and exploit them. Your competitive advantage, expressed in code, was now in unauthorized hands.

For open-source developers, this might seem less critical. The code would be public anyway. But for enterprise developers, startups, and researchers, source code theft is catastrophic. It's the foundation of competitive advantage.

Configuration Files and Secrets

Configuration files often contain the keys to your entire infrastructure. Database connection strings. API keys. OAuth tokens. Cryptocurrency wallet addresses. AWS credentials. These files are typically named predictably: .env, config.json, secrets.yml. When a developer opens one of these files to troubleshoot a connection issue or deploy an update, the entire contents are uploaded.

For an attacker, a single stolen API key can be worth thousands of dollars. A stolen AWS credential set can unlock an entire cloud infrastructure. A stolen database connection string provides direct access to your production data.

Personal Information and Metadata

Beyond code and secrets, the extensions collected metadata about the developers themselves. Through the analytics SDKs, the attackers identified:

- Project names and structures

- Development frameworks and technologies

- Team sizes and development patterns

- Deployment timings and update schedules

- Geographic locations based on IP addresses

- Work hours and productivity patterns

This metadata can be used to build detailed profiles of targets for further attacks. If an attacker knows a developer works on payment processing systems, they can prioritize attacks on that developer's machine. If they know a team deploys on Tuesday evenings, they can time their own attacks for that window.

Comments and Documentation

Source code comments often contain sensitive information developers assume will remain private. Business logic explanations that reveal competitive strategies. TODO comments that expose known vulnerabilities. Security notes that hint at where the good stuff is hidden. Deprecation notices that explain why certain approaches were abandoned.

All of this was captured and exfiltrated.

Why Detection Was Difficult

Most malware detection approaches look for obvious signs of malicious behavior. Unusual network requests. Suspicious file operations. Processes attempting to escalate privileges. The Malicious Corgi campaign defeated detection through a simple but effective approach: it hid its malicious behavior inside normal, expected behavior.

Network Traffic Camouflage

The extensions communicated through hidden iframes within the extension's webview. From a network traffic perspective, this looked like normal web content loading. The iframe contained legitimate analytics SDKs from recognized companies. The request pattern matched what you'd expect from a productivity tool checking for updates or synchronizing settings.

To an untrained observer examining network logs, this looks like normal VSCode extension behavior. To a sophisticated analyst, it looks like a well-designed hiding mechanism.

Permission Abuse

VSCode extensions can request various permissions: file system access, network access, command execution. These permissions are displayed when you install an extension, and most users click "accept" without reading the details. The malicious extensions requested permissions that seemed reasonable for AI coding assistants—access to open files, network access to reach API endpoints, the ability to execute commands for code generation.

These permissions were completely legitimate for a real AI coding assistant. The fact that they were being abused for data theft didn't change the appearance of the permission request.

Behavioral Normalization

The extensions didn't steal all your data and transmit it in one massive burst. That would have created noticeable network spikes. Instead, they sent data continuously, in small chunks, mixed in with normal extension traffic. File contents were encoded and transmitted gradually. Analytics data was batched and sent on normal intervals.

From a behavioral perspective, this looked like a tool that was functioning normally, occasionally communicating with its backend servers, as expected.

Time-Based Obfuscation

The extensions were smart about when they exfiltrated data. They weren't transmitting all the time. They were transmitting when it was hardest to notice. During busy work hours when network traffic is high. During deployments when administrators are focused on other tasks. During periods when multiple network connections are happening simultaneously.

Both ChatGPT – 中文版 and ChatMoss received high user ratings, indicating their functionality was convincing despite malicious intent. Estimated data.

The Marketplace Failure: How This Got Past Microsoft

The VSCode Marketplace is maintained by Microsoft. It's the official distribution channel for extensions. Microsoft has the resources to implement security reviews, static analysis, dynamic testing, and other safeguards. Yet two extensions with 1.5 million combined downloads managed to operate undetected for extended periods.

The Scale Problem

The VSCode Marketplace has over 60,000 extensions. Microsoft can't manually review every single one. They rely on automated systems, permission scanning, and user reports. For a new extension from an unknown publisher, the level of scrutiny is often minimal. Does it ask for permissions that match its description? Does it trigger known malware signatures? If the answer to both is "no," it gets published.

A sophisticated attacker can engineer their malicious code to pass these automated checks. The permissions requested are legitimate. The network destinations aren't yet known as malicious. The code doesn't match signatures of known malware.

The Trust Problem

Extension marketplaces function on the assumption that publishers are trustworthy. You publish an extension, you maintain it, and the community uses it. If it's malicious, users will notice and report it. The marketplace relies on crowdsourced security through reputation.

But reputation takes time to build. A new publisher with a new extension has zero reputation. Users installing it are making a leap of faith. In the case of the Malicious Corgi campaign, that leap was exploited.

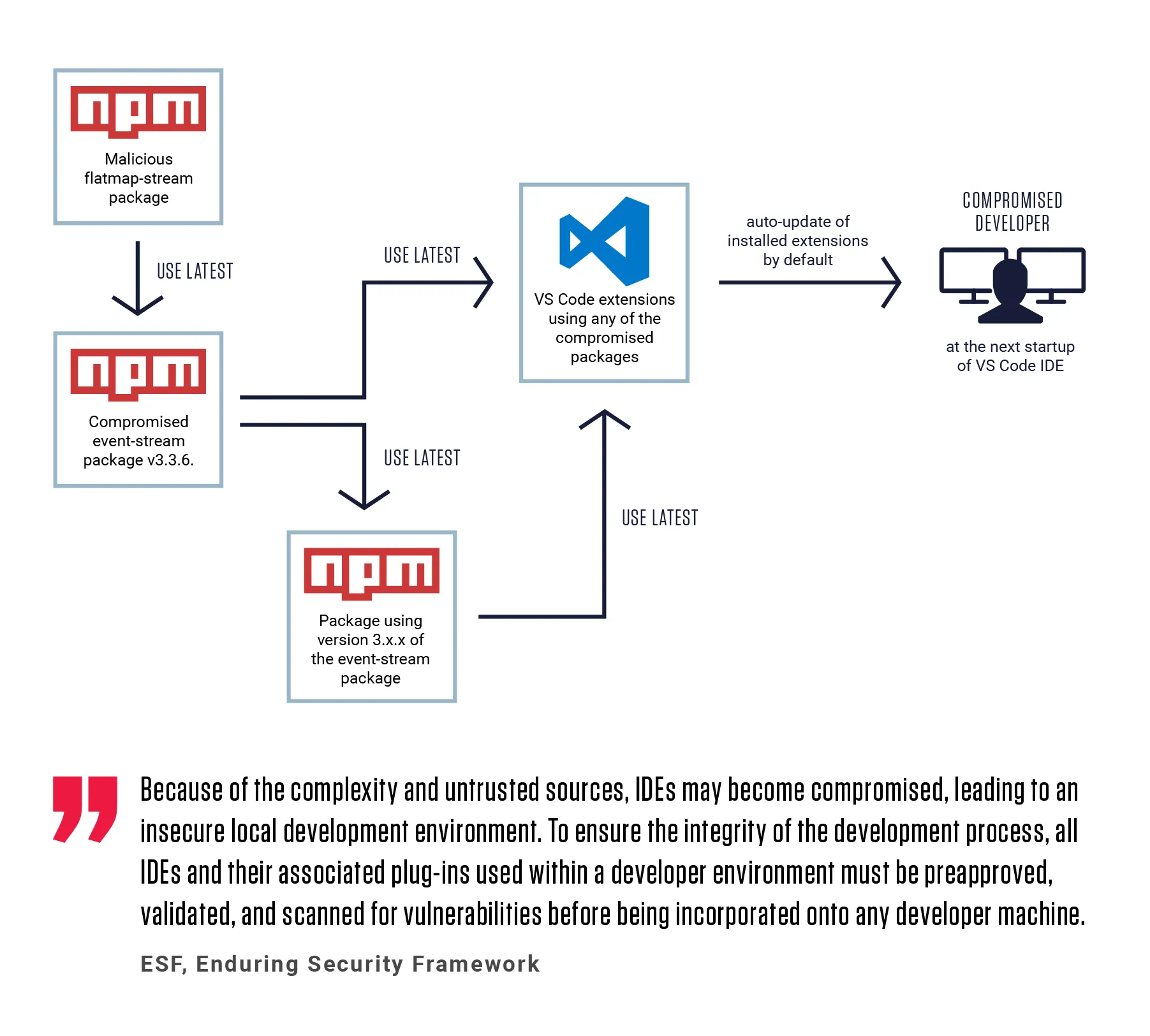

The Update Problem

Once an extension is installed, it receives automatic updates. The malicious extensions could have been published as legitimate tools, built trust, and then updated to include malicious code. Or they could have contained the malicious code from the start, with the assumption that by the time they were discovered, millions of people would already be using them.

In this case, the extensions contained the malicious code from the beginning, suggesting the attackers were operating on a timeline of weeks or months before expecting to be caught.

The Response Problem

Even after Microsoft was notified of the malicious extensions, they remained available for download for an extended period. This suggests that either the review process for removing extensions is slow, or Microsoft prioritized the risk assessment lower than the security researchers did. Either way, this window allowed additional users to install the extensions while they were still active.

Impact Assessment: Who Was Actually Hurt

The 1.5 million installation figure is the headline number, but it obscures important nuance. Not everyone who installed these extensions was equally harmed.

Enterprise Developers

Large enterprises are the most impacted by this attack. They have extensive proprietary codebases, sensitive business logic, and valuable intellectual property. An enterprise developer installing a malicious extension creates an exposure that affects the entire organization.

A financial services company's algorithm for detecting fraud is now in external hands. A healthcare startup's system for processing patient data has been compromised. A defense contractor's integration with critical infrastructure is exposed. The damage extends far beyond the individual developer.

Enterprise developers are often targets for additional attacks. Once an attacker knows a developer works on valuable systems, they can focus other attacks on that developer's accounts, attempting to access email, source control, CI/CD pipelines, and cloud infrastructure.

Open-Source Maintainers

Open-source maintainers represent a secondary impact category. If an active maintainer on a popular open-source project has their development environment compromised, the attacker gains insight into:

- The project's security architecture

- Known vulnerabilities being worked on

- Planned features and timelines

- Integration points with other critical systems

- The project's repository access controls

This information can be used to compromise the project itself, introducing malicious code into libraries used by millions of downstream users.

Security Researchers and Penetration Testers

Penetration testers and security researchers often work on sensitive systems and have access to restricted environments. Compromising a penetration tester's development environment gives an attacker insight into which systems are being tested, what vulnerabilities have been found, and what security controls exist.

Security researchers who develop tools have their intellectual property stolen. Defenders fighting security threats have their hand tipped to the attackers they're fighting against.

Startups and Small Development Teams

Small companies and startups represent a large segment of VSCode users. Many of them have minimal security budgets and limited visibility into their development infrastructure. A compromised developer environment might be the only development environment they have.

Startups are often pre-revenue or early revenue. Their source code is their most valuable asset. Stealing a startup's codebase can mean stealing the company's entire future.

Government and Critical Infrastructure

While not explicitly mentioned in the security research, government agencies and critical infrastructure operators use VSCode for development. Developers working on critical infrastructure, national security systems, and government services might have been using one of the malicious extensions.

The impact of compromising government or critical infrastructure source code extends to national security implications.

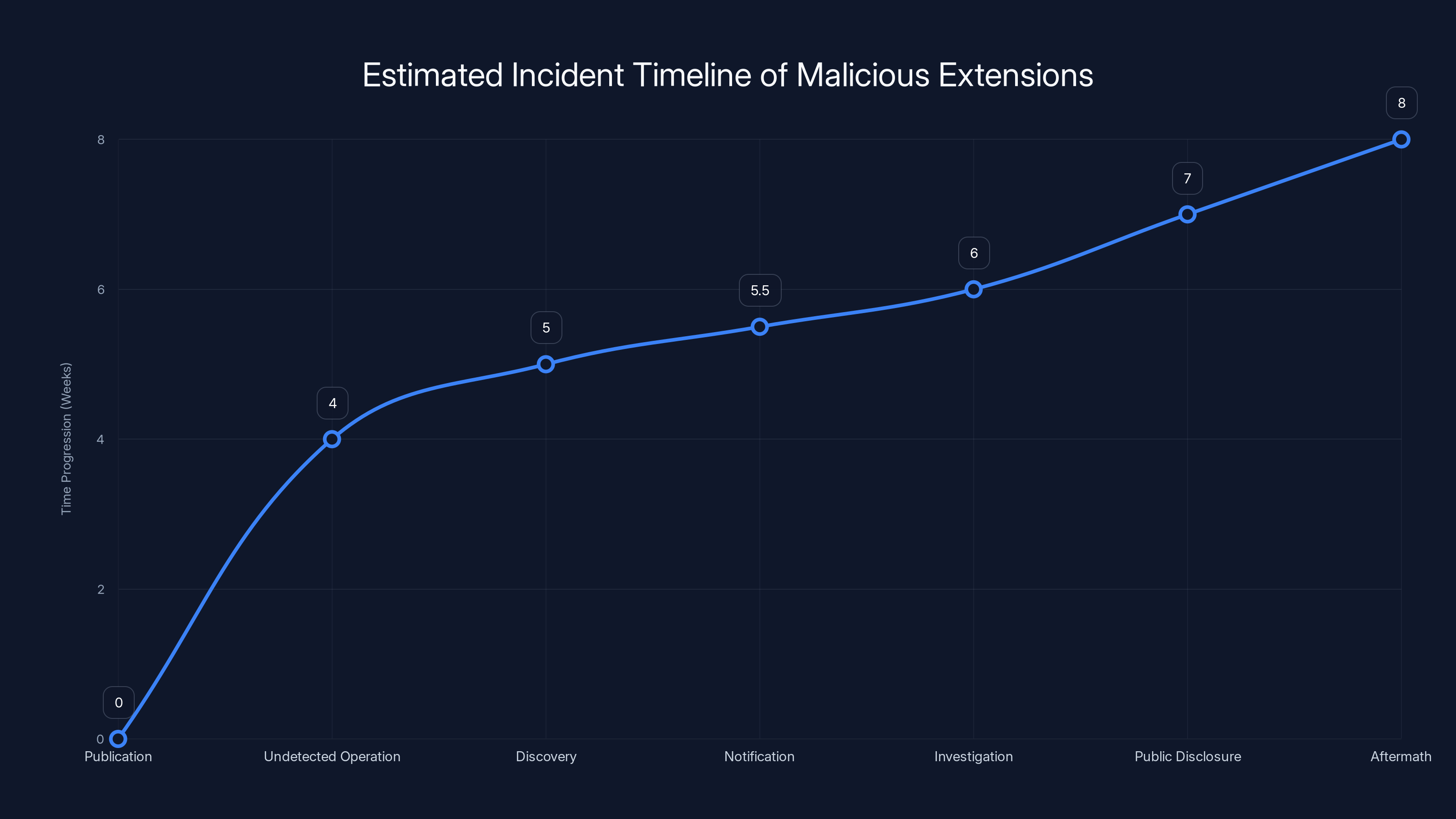

The timeline shows an estimated progression of events from the publication of malicious extensions to the ongoing aftermath. Estimated data based on typical incident response durations.

Incident Timeline: What Happened When

While the exact timeline isn't fully public, the sequence of events likely followed this pattern:

Unknown Date (Weeks/Months Before Discovery): The malicious extensions were published to the VSCode Marketplace. They passed initial automated checks and were made available for download. Users began installing them.

Extended Period (Weeks): Both extensions operated undetected, continuously harvesting data. The extensions received some user engagement, positive reviews, and ratings that helped them climb marketplace rankings. The more popular they became, the more data was harvested.

Discovery Date: Security researchers at Koi Security identified suspicious network behavior associated with the extensions. They reverse-engineered the code, identified the malicious mechanisms, and analyzed the threat.

Notification (Shortly After Discovery): Koi Security and possibly other researchers notified Microsoft about the malicious extensions.

Investigation Period (Days): Microsoft reviewed the notification, likely confirmed the findings, and began the process of removing the extensions from the marketplace.

Public Disclosure: Details of the attack were published, allowing users to identify whether they had installed the extensions.

Aftermath (Ongoing): Users who installed the extensions are now dealing with the consequences of potential data exposure. Developers are reviewing their installed extensions and uninstalling anything suspicious.

Technical Deep Dive: How Base64 Encoding Facilitates Theft

Base64 encoding appears throughout the Malicious Corgi campaign as the method for disguising stolen data. Understanding how this works explains why the attack was so effective.

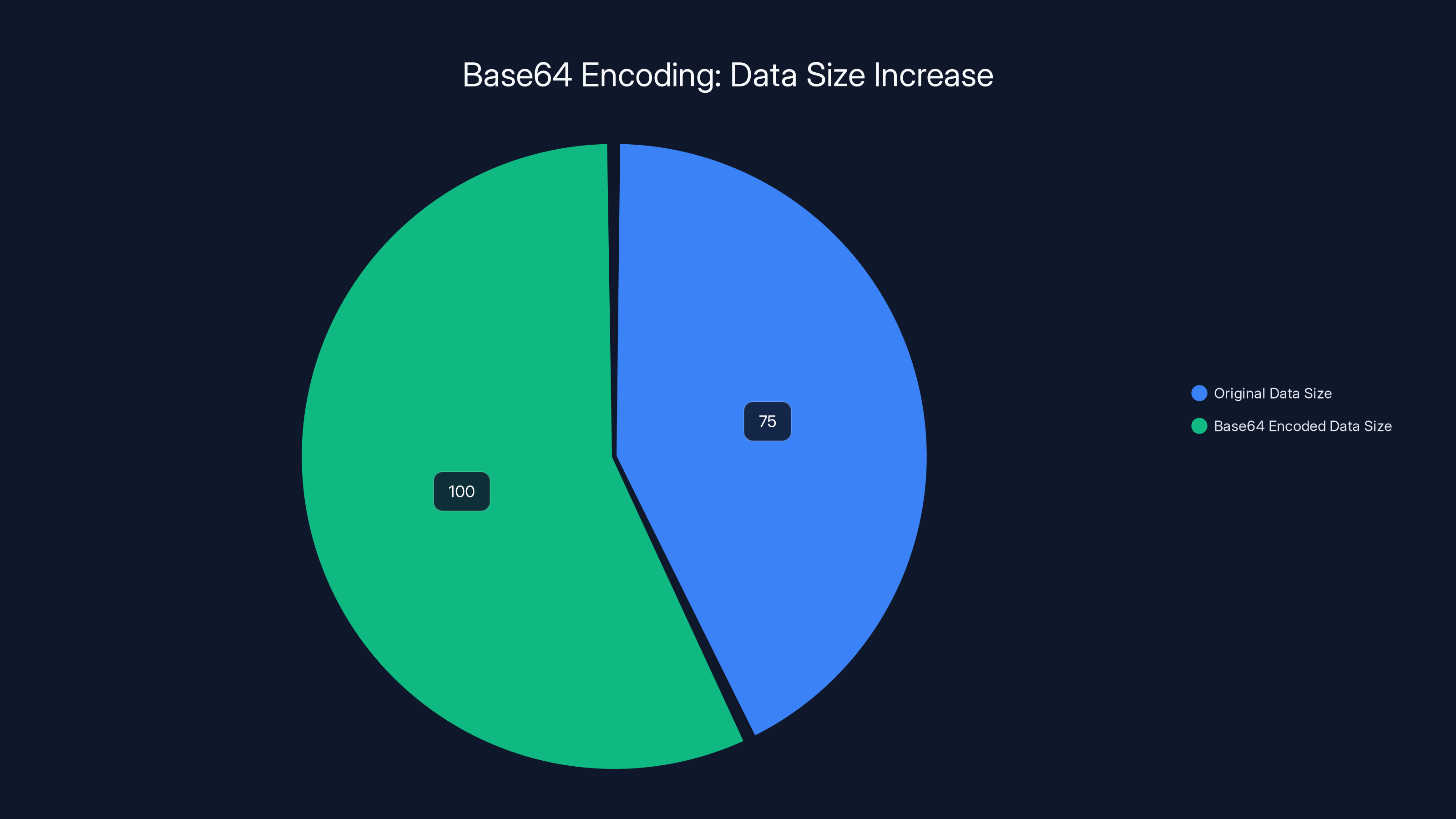

Base64 encoding converts binary data into a text format using 64 "safe" characters (A-Z, a-z, 0-9, +, /). Every 3 bytes of input become 4 characters of output. This increases the size of the data by approximately 33%, but makes it suitable for transmission through text-based protocols like HTTP.

When you write source code, it's stored as bytes in a file. When the malicious extension reads that file, it has the raw bytes. It then encodes those bytes as Base64, which produces a string of text that looks meaningless to a human observer but can be easily decoded by the attacker.

For example, if your source code is:

const apiKey = "sk-abc123xyz";

The bytes of this code are converted to Base64, which looks like:

Y29uc3QgYXBpS2V5ID0gInNrLWFiYzEyM3h5eiI7

When this Base64 string is transmitted through the internet, it looks like random data to someone casually observing network traffic. But the attacker can instantly decode it back to the original source code.
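The round trip and the size overhead can be checked in a few lines. This is a minimal sketch using Python's standard `base64` module on the one-line sample, written here as `const apiKey = "sk-abc123xyz";`. The helper name `base64_length` is made up for this illustration and is not taken from the extensions' code.

```python
import base64

# The sample line of "victim" source code, treated purely as data.
source_line = 'const apiKey = "sk-abc123xyz";'

# Encode the raw bytes as Base64 text, then decode them back.
encoded = base64.b64encode(source_line.encode("utf-8")).decode("ascii")
decoded = base64.b64decode(encoded).decode("utf-8")


def base64_length(n_bytes: int) -> int:
    """Length of standard padded Base64 output for n_bytes of input.

    Every group of up to 3 input bytes becomes 4 output characters.
    """
    return 4 * ((n_bytes + 2) // 3)


print(encoded)                 # Base64 text form of the line
print(decoded == source_line)  # the round trip is lossless
print(len(source_line.encode("utf-8")), "bytes ->", len(encoded), "characters")
```

The 30-byte line becomes 40 characters, which is the 4/3 growth described above. Nothing about the encoding needs a key, so anyone who captures the string can reverse it.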

The genius of using Base64 isn't that it's secure encryption. It's not. The genius is that it makes the stolen data compatible with text-based protocols and makes it harder for automated security tools to recognize what's actually being transmitted.

If an automated security tool is looking for patterns that match "function declarations" or "API keys", it would struggle to find them when they're Base64-encoded. But if a human security engineer builds a rule to detect Base64-encoded code being transmitted to unusual destinations, the attack would be immediately apparent.
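A rule of that kind could look something like the following. It is a rough heuristic sketch, not a description of any real product: the function name `find_encoded_code`, the 24-character minimum run length, and the keyword list are all assumptions chosen for illustration. It looks for long Base64-looking runs in an outbound payload, tries to decode them, and reports any that decode to text resembling source code or secrets.

```python
import base64
import binascii
import re

# Runs of 24+ Base64 alphabet characters, optionally followed by padding.
BASE64_RUN = re.compile(r"[A-Za-z0-9+/]{24,}={0,2}")

# Very rough hints that decoded text is code or a secret (illustrative only).
CODE_HINTS = re.compile(
    r"\b(const|function|import|def|class|return)\b|api[_-]?key|password|secret",
    re.IGNORECASE,
)


def find_encoded_code(payload: str) -> list[str]:
    """Return decoded snippets from payload that look like source code."""
    findings = []
    for match in BASE64_RUN.finditer(payload):
        candidate = match.group(0)
        try:
            # validate=True rejects stray characters and bad padding.
            text = base64.b64decode(candidate, validate=True).decode("utf-8")
        except (binascii.Error, UnicodeDecodeError):
            continue  # not valid Base64, or not text once decoded
        if CODE_HINTS.search(text):
            findings.append(text)
    return findings


sample_payload = '{"d":"Y29uc3QgYXBpS2V5ID0gInNrLWFiYzEyM3h5eiI7"}'
print(find_encoded_code(sample_payload))
```

A check like this will produce both false positives and false negatives, since attackers can chunk, compress, or re-encode data. It is most useful when combined with a destination allowlist, so that only traffic to unfamiliar hosts is inspected.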

This suggests the attackers assumed they would remain undetected for a specific period. They weren't trying to hide the attack forever. They were trying to hide it long enough to extract maximum value.

Base64 encoding increases data size by approximately 33%, making it suitable for text-based protocols but larger in size.

Threat Actor Attribution: Who Was Behind This

The security research doesn't explicitly state the origin of the Malicious Corgi campaign, but several indicators suggest state-sponsored or organized criminal activity.

Evidence of Organization

The attack shows signs of professional coordination. Both extensions were published to the same marketplace, operated simultaneously, used identical exfiltration techniques, and sent data to the same servers. This isn't the work of a single attacker experimenting with malware. This is coordinated, planned, and executed by a team.

Infrastructure Sophistication

The command and control servers that received the stolen data required infrastructure setup, monitoring, and maintenance. The attackers needed to process and store potentially terabytes of stolen code. They needed authentication mechanisms to ensure only authorized systems could issue commands to the malware.

This level of infrastructure suggests resources beyond what typical cybercriminals operate with.

The Chinese Connection

The extension names, publisher names, and server locations all point to Chinese infrastructure or actors. The name "ChatGPT – 中文版" literally means "ChatGPT – Chinese Version," suggesting targeting of Chinese-speaking developers. The publisher names "WhenSunset" and "zhukunpeng" may be Chinese transliterations.

The exfiltration of data to Chinese servers is the most direct indicator of origin, though it's possible the attackers intentionally used Chinese infrastructure to mislead investigators.

Possible Motivations

State-sponsored attackers might be interested in source code from developers working on critical systems. Commercial entities might be conducting industrial espionage against competitors. Organized criminals might be harvesting credentials and data for resale on darknet markets.

Without explicit attribution from threat intelligence firms, the specific motivation remains speculative. But the scale and sophistication point toward a well-resourced adversary with sustained access to infrastructure.

Detection Methods: How to Know If You Were Compromised

If you installed ChatGPT – 中文版 or ChatMoss while either was active, you should assume your development environment was compromised. But there are ways to verify the extent of the damage.

Review Installation History

In VSCode, open the Extensions panel and scroll to the "Installed" section. Look for ChatGPT – 中文版 or ChatMoss. If either extension is listed, click it and check the installation date. If it was installed before the extensions were publicly flagged as malicious, assume it was active and harvesting data.

You can also check the "Extension Update History" for any of your installed extensions to see when they were last updated.
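The same check can be scripted against the extensions folder on disk. The sketch below assumes a standard desktop install, where VSCode unpacks each extension into its own folder under `~/.vscode/extensions` with a `package.json` manifest. The search terms come from the names and publishers mentioned in this article, not from verified extension IDs, so treat any hit as a prompt for manual review rather than proof.

```python
import json
from pathlib import Path

# Names and publishers mentioned in this article (not verified extension IDs).
SUSPECT_TERMS = ("ChatGPT – 中文版", "ChatMoss", "CodeMoss", "WhenSunset", "zhukunpeng")


def _squash(text: str) -> str:
    """Lowercase and drop all whitespace so 'Chat Moss' matches 'ChatMoss'."""
    return "".join(text.lower().split())


def find_suspect_extensions(extensions_dir: Path, terms=SUSPECT_TERMS) -> list[dict]:
    """Return manifest details for installed extensions matching any term."""
    needles = [_squash(term) for term in terms]
    hits = []
    for manifest in sorted(extensions_dir.glob("*/package.json")):
        try:
            meta = json.loads(manifest.read_text(encoding="utf-8"))
        except (OSError, ValueError):
            continue  # unreadable or malformed manifest
        if not isinstance(meta, dict):
            continue
        fields = [str(meta.get(key, "")) for key in ("publisher", "name", "displayName")]
        haystack = _squash("|".join(fields))
        if any(needle in haystack for needle in needles):
            hits.append({
                "folder": manifest.parent.name,
                "publisher": meta.get("publisher"),
                "name": meta.get("name"),
                "displayName": meta.get("displayName"),
            })
    return hits


if __name__ == "__main__":
    default_dir = Path.home() / ".vscode" / "extensions"  # assumed default location
    if default_dir.is_dir():
        for hit in find_suspect_extensions(default_dir):
            print(hit)
```

Some manifests store `displayName` as a localization placeholder, so matching on the publisher field is usually the more reliable signal. Remote, portable, or forked editors keep extensions in other locations, which this sketch does not cover.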

Analyze Network Traffic

If you have access to network logs from your development period, look for:

- Unusual outbound connections to IP addresses not associated with known services

- Large data transfers to external destinations during development sessions

- Regular, periodic data transmission patterns

- Base64-encoded data in the transmission payloads

Network analysis requires access to packet capture data, firewall logs, or proxy logs. Most individual developers won't have this information, but enterprises can request it from their network security team.
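For teams that do have proxy or firewall logs, a first-pass triage might total outbound bytes per destination and flag hosts that are not on a known-good list. The sketch below assumes the logs have already been parsed into records with `host` and `bytes_out` fields. That record shape, the function name, and the 1 MB threshold are all hypothetical, and the hostnames are placeholders.

```python
from collections import defaultdict


def flag_unusual_uploads(records, known_hosts, min_bytes=1_000_000):
    """Return (host, total_bytes_out) for unfamiliar hosts with large uploads.

    records: iterable of dicts with 'host' and 'bytes_out' keys (assumed shape).
    known_hosts: set of destinations considered expected for this machine.
    """
    totals = defaultdict(int)
    for record in records:
        totals[record["host"]] += record["bytes_out"]
    flagged = [
        (host, total)
        for host, total in totals.items()
        if host not in known_hosts and total >= min_bytes
    ]
    # Largest senders first, so the worst candidates are reviewed first.
    return sorted(flagged, key=lambda item: item[1], reverse=True)


example_records = [
    {"host": "api.github.com", "bytes_out": 5_000_000},
    {"host": "uploads.example.net", "bytes_out": 700_000},
    {"host": "uploads.example.net", "bytes_out": 600_000},
    {"host": "cdn.example.org", "bytes_out": 10_000},
]
print(flag_unusual_uploads(example_records, known_hosts={"api.github.com"}))
```

Summing per host matters here because the traffic described earlier was sent in small chunks. No single request looks large, but the total per destination does.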

Review File Access Logs

Some systems maintain logs of which applications accessed which files. If your system has this capability, look for:

- The malicious extension reading files you opened

- File reads occurring moments after you opened files in VSCode

- Access patterns that match the timing of your development activity

Behavioral Indicators

If you notice any of these symptoms, your system may have been compromised:

- Fan noise and system usage spikes during normal coding work

- Network activity on your machine during idle periods

- Increased bandwidth usage that doesn't match your normal pattern

- Unusual heat generation from your machine

These indicators suggest the extension was actively processing and transmitting data.

Credential Exposure Analysis

If you have any credentials configured in files that the malicious extension could have accessed, assume those credentials are compromised. This includes:

- API keys in .env files

- Database credentials in configuration files

- SSH keys or certificates accessed during development

- OAuth tokens or access tokens

- Cloud provider credentials

For each of these, the recommended response is to rotate the credentials immediately. Change the passwords, regenerate the API keys, and issue new certificates.
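To build the rotation list, it can help to inventory which secrets were sitting in files the extension could have read. The following is a minimal sketch that walks a project, looks only at the predictable filenames mentioned earlier (.env, config.json, secrets.yml), and reports the names of secret-looking keys without printing their values. The filename set, skip list, and regular expression are illustrative assumptions and will miss secrets stored elsewhere.

```python
import re
from pathlib import Path

SENSITIVE_NAMES = {".env", "config.json", "secrets.yml"}
SKIP_DIRS = {".git", "node_modules"}

# Matches lines such as API_KEY=..., "db_password": "...", token: ...
SECRET_LINE = re.compile(
    r"""["']?([A-Za-z0-9_.-]*(?:key|token|secret|password|passwd)[A-Za-z0-9_.-]*)["']?\s*[:=]\s*\S+""",
    re.IGNORECASE,
)


def list_exposed_secrets(project_root: Path) -> list[tuple[str, int, str]]:
    """Return (relative_path, line_number, key_name) for secret-looking lines."""
    findings = []
    for path in sorted(project_root.rglob("*")):
        if not path.is_file() or path.name not in SENSITIVE_NAMES:
            continue
        relative = path.relative_to(project_root)
        if any(part in SKIP_DIRS for part in relative.parts):
            continue
        try:
            text = path.read_text(encoding="utf-8", errors="replace")
        except OSError:
            continue  # unreadable file, skip it
        for line_number, line in enumerate(text.splitlines(), start=1):
            match = SECRET_LINE.search(line)
            if match:
                # Record only the key name, never the secret value itself.
                findings.append((relative.as_posix(), line_number, match.group(1)))
    return findings
```

Calling `list_exposed_secrets(Path("."))` from a project root gives a checklist of keys to rotate. Dedicated secret scanners are more thorough. This only illustrates the idea of turning "assume it is compromised" into a concrete list.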

Mitigation Strategy: What to Do Right Now

If you may have been impacted by the Malicious Corgi campaign, follow these steps in order of priority.

Immediate Actions (Today)

1. Uninstall the Malicious Extensions: If ChatGPT – 中文版 or ChatMoss is still installed, uninstall it immediately. In VSCode, right-click the extension and select "Uninstall."

2. Identify Installation Timeframe: Determine when you installed the extension and how long it was active on your system. This tells you the window during which your code could have been harvested.

3. Change All Credentials: Assume that any credential stored in a file accessible to the extension is compromised, and change it. This includes:

- Database passwords

- API keys

- Cloud provider credentials

- Repository access tokens

- SSH keys

- OAuth tokens

This is not optional. If credentials are compromised, they should be rotated.

4. Notify Your Organization: If you're a developer at a company, notify your security team immediately. Provide them with the installation date, the names of the extensions, and your best estimate of which files you opened while the extension was active.

5. Clear Your Local Caches: The extension may have cached credentials or data locally. Clear the VSCode cache, browser caches, and application-specific caches. While this won't undo any damage that was done, it removes any local artifacts the extension may have stored.

Short-Term Actions (This Week)

1. Audit Recent Changes: Review any code changes, deployments, or infrastructure modifications you made during the period the malicious extension was active. If an attacker had access to your credentials, they might have made unauthorized changes.

2. Review Repository Access Logs: If you use GitHub, GitLab, or another Git hosting service, review the access logs for your repositories. Look for access from IP addresses you don't recognize, changes made at times you weren't working, or unauthorized push events.

3. Monitor for Unusual Activity: Enable alerts on your accounts to notify you of:

- Unexpected login activity

- Changes to repository permissions

- New SSH keys added to your account

- Unexpected API access or usage

4. Check for Evidence of Compromise Review your production systems for evidence of unauthorized access:

- Unexpected cron jobs or scheduled tasks

- Unusual user accounts

- Modified system files

- Network traffic to unknown destinations
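The log review in step 2 can be partly automated once the events are exported. The sketch below assumes a simplified, hypothetical record shape (`AccessEvent`) and an assumed working day of 08:00 to 19:00. Real hosting services use their own audit-log schemas, so the fields would need to be mapped first.

```python
from dataclasses import dataclass
from datetime import datetime
from ipaddress import ip_address, ip_network


@dataclass
class AccessEvent:
    # Hypothetical, simplified record; not any provider's real schema.
    timestamp: datetime
    source_ip: str
    action: str


def flag_events(events, known_networks, window_start, window_end, work_hours=(8, 19)):
    """Return (event, reasons) pairs for events inside the exposure window that
    come from an unrecognized IP or fall outside normal working hours."""
    networks = [ip_network(n) for n in known_networks]
    flagged = []
    for ev in events:
        if not (window_start <= ev.timestamp <= window_end):
            continue  # outside the period the extension was installed
        reasons = []
        addr = ip_address(ev.source_ip)
        if not any(addr in net for net in networks):
            reasons.append("unknown_ip")
        if not (work_hours[0] <= ev.timestamp.hour < work_hours[1]):
            reasons.append("off_hours")
        if reasons:
            flagged.append((ev, reasons))
    return flagged
```

The exposure window is the installation timeframe you identified earlier. Flagged events are only leads for manual review, since legitimate work from a new network or at odd hours will also appear.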

Long-Term Actions (Ongoing)

1. Implement Extension Allowlists: If you're a developer at an organization with security policies, work with your security team to implement extension allowlists. Only allow extensions that have been explicitly reviewed and approved.

2. Use Verified Publishers: When selecting extensions, prefer those from verified publishers with extensive usage and positive reviews. While this isn't a guarantee of safety (as the Malicious Corgi campaign demonstrates), it reduces risk.

3. Regular Audits: Regularly audit your installed extensions. Delete anything you're not actively using. Each extension is a potential attack surface.

4. Monitor for Updates: Enable notifications for VSCode updates and extension updates. Apply security patches promptly.

5. Consider Isolated Development Environments: For highly sensitive work, consider using isolated development environments that don't have access to your main credentials or production systems.
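An allowlist audit (steps 1 and 3) can be sketched as a comparison between what is installed and what has been approved. The example below parses the output of `code --list-extensions --show-versions`. The function names are hypothetical, and lowercasing IDs before comparison is an assumption that extension identifiers are case-insensitive.

```python
import subprocess


def list_installed():
    """Ask the VSCode CLI for installed extensions (requires `code` on PATH)."""
    result = subprocess.run(
        ["code", "--list-extensions", "--show-versions"],
        capture_output=True, text=True, check=True,
    )
    return result.stdout


def parse_extension_list(output):
    """Parse lines such as 'publisher.name@1.2.3' into lowercase extension IDs."""
    ids = []
    for line in output.splitlines():
        line = line.strip()
        if line:
            ids.append(line.split("@", 1)[0].lower())
    return ids


def audit_extensions(installed_ids, allowlist):
    """Return installed extension IDs that are not on the allowlist, sorted."""
    allowed = {ext.lower() for ext in allowlist}
    return sorted(set(installed_ids) - allowed)
```

Running `audit_extensions(parse_extension_list(list_installed()), approved_ids)` on each developer machine gives a list of extensions to review or remove. An empty list means everything installed has been approved, not that the approved extensions are safe.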

Lessons for the Ecosystem: What Needs to Change

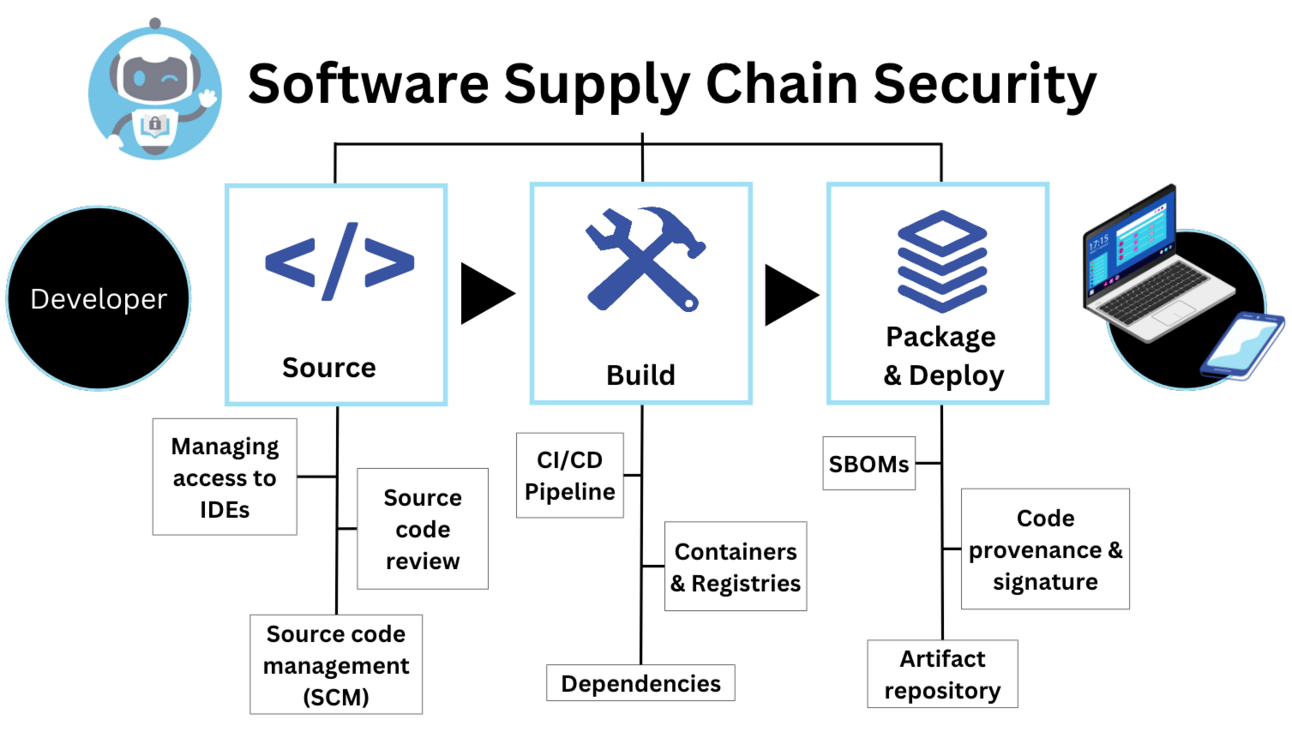

The Malicious Corgi campaign exposes fundamental weaknesses in how open-source marketplaces handle security. These lessons apply beyond VSCode to npm, PyPI, and other package managers.

Stronger Vetting for New Publishers

The marketplace currently allows unknown publishers to reach millions of users with minimal friction. A more rigorous vetting process for new publishers, possibly requiring identity verification or reputation building, could raise the barrier for attackers.

This creates friction for legitimate developers, but the security benefit may justify it.

Mandatory Code Review for Sensitive Extensions

Extensions that request file system access, network access, or command execution should undergo mandatory code review before publication. This is more labor-intensive than automated scanning, but it could catch sophisticated attacks that pass automated detection.

Dynamic Analysis Sandboxing

Extensions should be analyzed not just statically (by reviewing their code) but dynamically (by running them and observing their behavior). A sandboxed execution environment could reveal whether an extension is behaving suspiciously, attempting unauthorized network connections, or accessing files beyond what its declared purpose requires.
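One behavior a sandbox could look for is the pattern this campaign relied on: whole files sent out as base64. The heuristic below is a rough sketch under stated assumptions, not a description of any marketplace's actual analysis. The 200-character run length, the 90% printable threshold, and all function names are arbitrary illustrative choices.

```python
import base64
import binascii
import re

# Runs of at least 200 base64 characters; the length is an illustrative choice.
BASE64_RUN = re.compile(r"[A-Za-z0-9+/]{200,}={0,2}")


def looks_like_text(data, threshold=0.9):
    """True if most bytes are printable ASCII or common whitespace."""
    if not data:
        return False
    printable = sum(1 for b in data if 32 <= b < 127 or b in (9, 10, 13))
    return printable / len(data) >= threshold


def find_encoded_text_payloads(body):
    """Return decoded base64 blobs from a captured request body that look like text."""
    hits = []
    for match in BASE64_RUN.finditer(body):
        candidate = match.group(0).rstrip("=")
        candidate += "=" * (-len(candidate) % 4)  # restore padding
        try:
            decoded = base64.b64decode(candidate, validate=True)
        except (binascii.Error, ValueError):
            continue  # not valid base64 after all
        if looks_like_text(decoded):
            hits.append(decoded.decode("utf-8", errors="replace"))
    return hits
```

A sandbox would apply something like this to request bodies captured while the extension runs against a decoy project. Large text-like blobs sent to a server that has no need for full file contents are a signal worth escalating to manual review.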

User Permission Transparency

When an extension updates, users should be notified about permission changes. If an extension requests new permissions, users should explicitly approve the update. Currently, updates often install silently with no user notification.

Faster Incident Response

Once a malicious extension is identified, it should be removed from the marketplace and users should be notified within hours, not days. Notification should include the specific dates the extension was malicious, information about what data might have been compromised, and recommended remediation steps.

Community Reporting Incentives

Marketplaces should incentivize users to report suspicious extensions through security research programs, bug bounties, or public recognition. Users have the broadest view into actual extension behavior and should be empowered to report concerns.

Extension Security Best Practices for Developers

If you're a developer using VSCode, these practices reduce your risk.

Principle of Least Privilege for Extensions

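VSCode does not give each extension its own fine-grained permission set; an installed extension generally runs with the same access to your files and network as the editor. Least privilege therefore means limiting what you install and enable: disable extensions in workspaces where you don't need them, and be suspicious of any extension whose network or file activity goes beyond its stated purpose.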

Regular Extension Audits

Review your installed extensions monthly. Delete anything you haven't used in the past month. The fewer extensions you have, the smaller your attack surface.

Separation of Concerns

If possible, use separate VSCode profiles for different types of work. One profile for open-source development where you can be liberal with extensions. Another profile for sensitive work where you use only the most essential, well-vetted extensions.

Network Isolation for Sensitive Work

For work involving highly sensitive data or credentials, consider developing in an isolated network environment with minimal external connectivity. The Malicious Corgi extensions could not have exfiltrated data from a development machine that had no route to the internet.

Credential Rotation as Standard Practice

Regularly rotate credentials used in development environments. Don't think of credential rotation as something you only do when you suspect compromise. Think of it as routine maintenance.
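Routine rotation is easier to sustain when something tells you which credentials are overdue. This is a minimal sketch with a hypothetical `Credential` record and an assumed 90-day policy. Pick whatever interval your organization requires.

```python
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass
class Credential:
    # Hypothetical inventory record: a label and the date of the last rotation.
    name: str
    last_rotated: date


def overdue_credentials(credentials, today, max_age_days=90):
    """Return names of credentials older than max_age_days, oldest first."""
    cutoff = today - timedelta(days=max_age_days)
    stale = [c for c in credentials if c.last_rotated < cutoff]
    return [c.name for c in sorted(stale, key=lambda c: c.last_rotated)]
```

Run against an inventory of development credentials on a schedule, this turns rotation into a recurring task list instead of an emergency response.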

Monitoring and Alerting

Set up alerts on your accounts and systems to notify you of unusual activity. This won't prevent an attack, but it helps you detect it quickly and limit the damage.

Broader Supply Chain Security Implications

The Malicious Corgi campaign is one example of a broader class of supply chain attacks targeting the software development ecosystem.

Dependencies as Attack Surface

Developers rely on thousands of dependencies in their projects. Each dependency is a potential attack surface. A malicious actor who compromises a popular dependency can impact millions of downstream users.

The npm ecosystem has seen multiple attacks on popular packages. The PyPI ecosystem has had similar incidents. The supply chain for software development is a fundamental weakness in the ecosystem.

The Trust Problem in Open Source

Open source thrives on trust. You trust that a maintainer is competent and well-intentioned. You trust that dependencies you install won't do malicious things. But trust is fragile. A maintainer's account can be compromised. A formerly trustworthy maintainer can become malicious.

Balancing trust with security requires mechanisms beyond reputation. It requires technical controls: code review, sandboxing, permission restrictions, and monitoring.

The Sustainability Challenge

Many popular open-source packages are maintained by volunteers with limited resources. These maintainers can't perform extensive security reviews for every dependency. They can't monitor for every possible attack vector.

If open source is to be made more secure, it likely requires more resources devoted to security, either through funding or through automation.

Commercial Tool Supply Chains

Commercial tools have their own supply chain risks. When a tool like VSCode accepts extensions from third parties, it creates a supply chain. Each extension is a potential vector for attack. The marketplace becomes a trusted distribution channel that can be exploited.

Securing the supply chain is the responsibility of both the marketplace and the users. Microsoft needs stronger controls. Developers need better awareness and hygiene.

What Microsoft's Response Reveals

Microsoft's handling of the Malicious Corgi campaign provides insight into how the company approaches security.

When initially contacted by Koi Security, Microsoft acknowledged the issue but didn't immediately remove the extensions. This suggests several possibilities:

- The initial notification might not have included clear technical evidence

- Microsoft's process for reviewing and removing extensions might be slower than optimal

- Resource constraints might affect how quickly Microsoft can respond to security incidents

- The risk assessment might not have matched the researchers' assessment

Once the issue became public and researchers published detailed technical analysis, Microsoft moved to remove the extensions. This is the expected response, but the delay meant additional users could install the malicious extensions in the window between the report and the removal.

Microsoft has since stated it's reviewing its processes for vetting marketplace extensions and responding to security reports. This is appropriate. The marketplace should be a secure channel, not a vector for attacks.

Future Outlook: Preventing the Next Malicious Corgi

The next malicious extension campaign is likely already underway. Attackers understand that marketplaces are valuable attack surfaces and will continue targeting them.

Preventing the next attack requires simultaneous action from multiple parties:

Marketplace Operators (Microsoft)

- Implement mandatory code review for extensions requesting sensitive permissions

- Use dynamic analysis to detect suspicious behavior

- Implement faster incident response timelines

- Provide user notifications when malicious extensions are discovered

Security Researchers

- Continue monitoring extensions for suspicious behavior

- Publish research about attack patterns to raise awareness

- Develop and share tools for analyzing extension security

Developers

- Be suspicious of new extensions from unknown publishers

- Audit installed extensions regularly

- Rotate credentials regularly

- Report suspicious behavior to marketplace operators

Organizations

- Implement extension allowlists in corporate environments

- Monitor developer infrastructure for unauthorized access

- Provide security training to developers about supply chain risks

- Enable multi-factor authentication on developer accounts

Conclusion: The Price of Convenience

The VSCode extension ecosystem provides tremendous value. Thousands of developers have built tools that make development easier, faster, and more enjoyable. The marketplace democratizes distribution, allowing anyone to reach millions of users.

But that same openness and accessibility that makes the marketplace valuable also makes it vulnerable. An attacker can reach 1.5 million users with a single malicious tool. The barrier to entry is zero. The vetting is lightweight. The trust is implicit.

The Malicious Corgi campaign didn't exploit a technical flaw in VSCode. It exploited a human flaw: the assumption that tools in official marketplaces are safe. It's an assumption that's usually correct but occasionally catastrophic when violated.

If you installed either malicious extension, you now have to assume your source code, your credentials, and your development environment are compromised. You have to rotate credentials you never had reason to suspect. You have to audit systems for unauthorized access. You have to rebuild trust in your infrastructure.

This is the cost of the attack for individual developers. The cost for the ecosystem is a loss of confidence in the marketplace. Developers will be more cautious. They'll install fewer extensions. They'll be suspicious of new publishers. The openness that made the ecosystem valuable will be constrained by the security concerns that the attack justified.

There's no perfect solution. Marketplace operators can't vet every extension to military standards without grinding the ecosystem to a halt. Developers can't avoid all risk without giving up their tools and reducing development to baseline capabilities. But the ecosystem can do better.

The lesson isn't that extensions are dangerous and should be avoided. The lesson is that security is a shared responsibility. Marketplace operators, tool developers, security researchers, and end users all have a role to play. The Malicious Corgi campaign succeeded because that shared responsibility was incomplete. It's a lesson worth taking seriously.

FAQ

What exactly did these malicious extensions do?

The extensions Chat GPT – 中文版 and Chat Moss functioned as legitimate AI coding assistants that genuinely helped developers write code. However, they simultaneously harvested sensitive data through three mechanisms: real-time file monitoring that uploaded entire files whenever they were opened, server-controlled commands that could extract specific files in bulk, and hidden analytics SDKs that tracked user behavior and built identity profiles of developers.

How many people were affected by this attack?

The two malicious extensions had a combined total of roughly 1.5 million installations: Chat GPT – 中文版 had 1.34 million installs and Chat Moss had approximately 150,000 installs. However, "installs" doesn't necessarily mean "users harmed." The actual impact depends on how long each extension was installed and active before being removed.

How can I tell if I installed one of these extensions?

In VSCode, open the Extensions panel and look through your "Installed" extensions list for Chat GPT – 中文版 or Chat Moss. You can also check the extension details page to see when it was installed. If it appears in your history, assume it was harvesting data during its installation period.
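You can also check on disk. The sketch below assumes the default extensions directory (`~/.vscode/extensions`) and the usual `publisher.name-version` folder naming. The function name is hypothetical, and the search fragments are placeholders you supply, since the exact Marketplace identifiers are not listed here.

```python
from datetime import datetime
from pathlib import Path


def find_extensions(extensions_dir, name_fragments):
    """Return (folder_name, modified_time) for extension folders whose name
    contains any of the given fragments (case-insensitive)."""
    root = Path(extensions_dir)
    fragments = [f.lower() for f in name_fragments]
    matches = []
    if not root.is_dir():
        return matches
    for entry in sorted(root.iterdir()):
        if entry.is_dir() and any(f in entry.name.lower() for f in fragments):
            # Folder modification time only approximates the install date.
            modified = datetime.fromtimestamp(entry.stat().st_mtime)
            matches.append((entry.name, modified))
    return matches
```

For example, `find_extensions(Path.home() / ".vscode" / "extensions", ["fragment-from-marketplace-id"])` lists matching folders with a timestamp. Treat that timestamp as a rough lower bound on exposure, because updates rewrite the folder and an uninstalled extension leaves no folder at all.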

What data was stolen from my system?

If the malicious extension was installed on your machine, every file you opened in VSCode was uploaded, including source code, configuration files, credentials, API keys, and any other data stored in files. The extension also collected behavioral data including which projects you worked on, which files you accessed, and when you were developing.

How do I protect myself from similar attacks in the future?

Implement these practices: only install extensions from verified publishers with extensive usage and positive reviews, regularly audit your installed extensions and delete unused ones, rotate credentials frequently as a standard practice, enable alerts on your accounts to detect unusual activity, and if you work for an organization, advocate for extension allowlists that only permit pre-approved extensions.

Why didn't antivirus software detect these extensions?

Because the extensions were legitimate tools that also happened to be stealing data. They didn't crash your system, destroy files, or display ransom notes. They didn't trigger common malware signatures. They used legitimate network requests to legitimate-looking servers. Antivirus software looks for known patterns of maliciousness, but this attack looked like normal development activity.

Is VSCode itself compromised or unsafe?

No. VSCode itself is secure. The vulnerability was in the marketplace extension system, not the core VSCode application. The solution is not to stop using VSCode, but to be more careful about which extensions you install and to advocate for stronger security controls in the marketplace.

What should I do if I definitely installed one of these extensions?

First, uninstall it immediately. Second, rotate all credentials that could have been accessed during the time it was installed, including API keys, database passwords, cloud provider credentials, and any stored authentication tokens. Third, review your code repository access logs for unauthorized access. Fourth, if you work for an organization, notify your security team. Finally, consider your development machine compromised and monitor it for suspicious activity going forward.

Why would anyone create extensions like this if the intent was malicious?

Because the strategy works. By providing a legitimate tool that actually works, the attacker builds trust and encourages installation. Users keep the extension installed because it's genuinely useful. The malicious behavior operates invisibly in the background. By the time the attack is discovered, millions of users have been compromised. The attacker's window of opportunity is weeks or months before detection.

Are other VSCode extensions being investigated for similar behavior?

Following the discovery of the Malicious Corgi campaign, security researchers have increased their focus on VSCode extensions, and additional malicious extensions may be discovered. This is healthy: discovering compromised extensions allows them to be removed and users to be notified. Ideally, any further discoveries will be handled more rapidly than the Malicious Corgi extensions were.

How can I contribute to making VSCode extensions more secure?

If you discover a suspicious extension, report it to Microsoft through their responsible disclosure program. If you develop extensions, follow security best practices and minimize the permissions you request. If you use VSCode, leave detailed reviews of extensions you use, noting any suspicious behavior. If you have resources, contribute to open-source security tools that help analyze extension behavior. Security is a collective responsibility.

Key Takeaways

- Over 1.5 million developers installed two malicious VSCode extensions (ChatGPT – 中文版 and ChatMoss) that stole entire source code files, credentials, and behavioral data through automated harvesting

- The MaliciousCorgi campaign used three simultaneous attack vectors: real-time file monitoring that uploaded every opened file, server-controlled commands for bulk file extraction, and hidden analytics SDKs for behavior tracking

- Both extensions functioned as legitimate AI coding assistants, providing genuine value that made detection difficult and encouraged continued use during the theft period

- Stolen data included proprietary source code, database credentials, API keys, OAuth tokens, and complete development metadata that could enable follow-on attacks

- Even after Microsoft was notified of the malicious extensions, they remained available for download for extended periods, allowing continued compromise of additional users

- Developers who installed these extensions must assume complete compromise of their development environment and should immediately rotate all credentials that were accessible in files

