The Santa Monica Incident: What We Know So Far

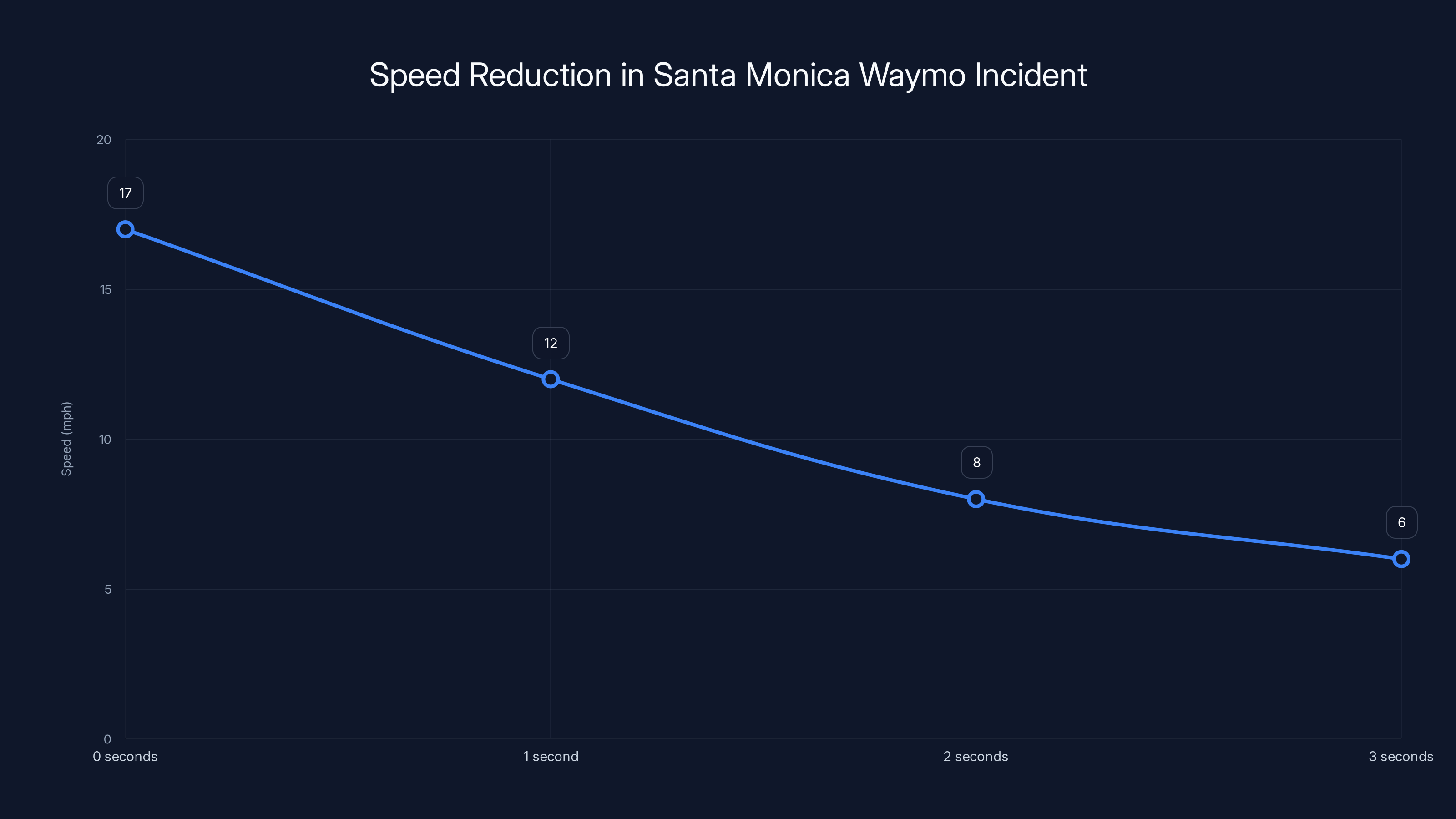

On January 23, 2025, a Waymo robotaxi struck a child near an elementary school in Santa Monica, California. The incident happened at 6 miles per hour after the vehicle braked hard from approximately 17 miles per hour. According to Waymo's account, the child suddenly entered the roadway from behind a tall SUV, moving directly into the vehicle's path.

The child sustained minor injuries and stood up immediately after the collision. Waymo says the vehicle detected the pedestrian as soon as they began to emerge from behind the parked vehicle. The company called 911, kept the vehicle at the scene, and moved it to the side of the road until law enforcement cleared it to leave.

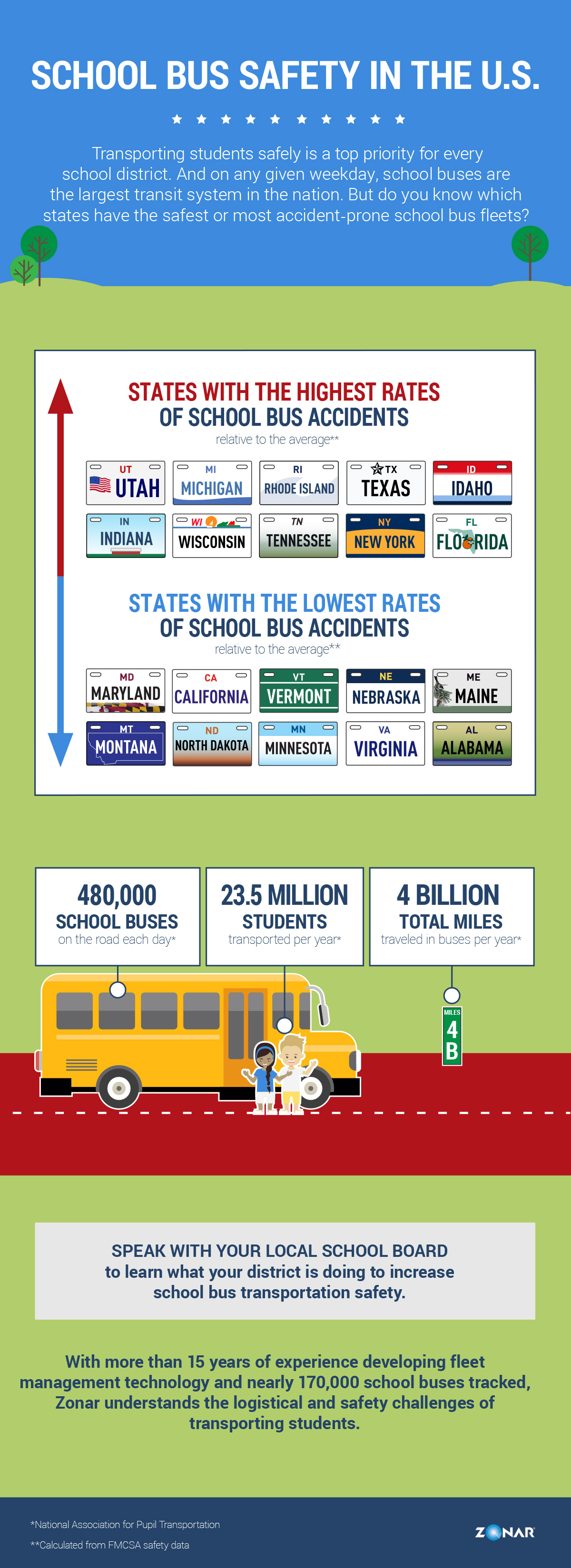

This incident isn't happening in a vacuum. The National Highway Traffic Safety Administration (NHTSA) opened an investigation immediately. But more concerning for Waymo, the company is already facing two separate investigations into its robotaxis illegally passing stopped school buses. One investigation came from NHTSA in October 2024 after the first reported incident in Atlanta, Georgia. Another came from the National Transportation Safety Board (NTSB) following roughly 20 incidents reported in Austin, Texas.

The timing couldn't be worse. A child struck near a school, combined with ongoing school bus violations, puts Waymo under intense scrutiny. Public trust in autonomous vehicles depends on safety performance, and this incident tests that trust at a critical moment.

Waymo has deployed thousands of robotaxis across multiple U.S. cities. The company operates in San Francisco, Los Angeles, Phoenix, and other metropolitan areas. Each deployment represents significant investment and regulatory risk. One serious accident near a school, especially when combined with other safety concerns, can trigger regulatory responses that affect the entire autonomous vehicle industry.

How Autonomous Vehicles Detect and React to Pedestrians

Waymo's robotaxis use a complex sensor array to detect pedestrians and obstacles. The system includes multiple cameras, lidar (light detection and ranging), radar, and ultrasonic sensors. These sensors work together to create a 360-degree view of the vehicle's surroundings, with the lidar providing particularly detailed information about objects and their distance.

The detection process happens continuously. The vehicle scans its environment at rates of 10 times per second or faster. When a pedestrian comes into view, the system doesn't just identify them as a person—it predicts their trajectory. Will they step into the street? How fast are they moving? Where will they be in the next two seconds?
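The prediction step can be sketched with the simplest possible motion model, constant-velocity extrapolation over a two-second horizon. This is an illustrative assumption: production systems such as Waymo's use learned, multi-hypothesis predictors whose details are not public, and the coordinates, lane width, and class names below are hypothetical.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple

@dataclass
class Track:
    """A tracked pedestrian in a vehicle-centered frame.

    x: meters ahead of the vehicle; y: meters to the left (negative = right);
    vx, vy: velocity components in m/s.
    """
    x: float
    y: float
    vx: float
    vy: float

def predict(track: Track, horizon_s: float = 2.0,
            dt: float = 0.1) -> List[Tuple[float, float, float]]:
    """Constant-velocity extrapolation: (t, x, y) every dt seconds up to horizon_s."""
    steps = round(horizon_s / dt)
    return [
        (round(k * dt, 2), track.x + track.vx * k * dt, track.y + track.vy * k * dt)
        for k in range(1, steps + 1)
    ]

def first_lane_entry(track: Track, lane_half_width: float = 1.5,
                     horizon_s: float = 2.0) -> Optional[float]:
    """First predicted time (s) the pedestrian is inside our lane, or None."""
    if abs(track.y) <= lane_half_width:
        return 0.0
    for t, _x, y in predict(track, horizon_s):
        if abs(y) <= lane_half_width:
            return t
    return None

# Hypothetical: a child 10 m ahead and 3 m to the right, moving left at 2 m/s.
child = Track(x=10.0, y=-3.0, vx=0.0, vy=2.0)
print(first_lane_entry(child))  # prints 0.8
```

This sketch only flags when the pedestrian is predicted to enter the lane; a real planner would also check whether the vehicle reaches the same point at the same time.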

This is where the Santa Monica incident gets interesting. According to Waymo, the child "suddenly entered the roadway from behind a tall SUV, moving directly into our vehicle's path." The word "suddenly" is key. A tall SUV creates an occlusion: a region behind it that the robotaxi's sensors cannot see into. From the robotaxi's perspective, the pedestrian appeared without warning.

However, modern autonomous vehicle systems are supposed to handle exactly this scenario. The vehicle should detect motion near the parked vehicle, anticipate that something might step out, and reduce speed proactively. Waymo claims the vehicle "immediately detected the individual as soon as they began to emerge from behind the stopped vehicle" and braked hard from 17 miles per hour down to 6 miles per hour.

The question becomes: was this response adequate? Braking hard from 17 mph (about 25 feet per second) to a full stop takes roughly 0.9 to 1.5 seconds, depending on tires, road surface, and the braking system, and covers about 11 to 19 feet, plus whatever distance the vehicle travels before the brakes engage. If a child emerges from behind a parked vehicle closer than that, no amount of braking can prevent contact; it can only lower the impact speed.
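The braking figures follow from constant-deceleration kinematics: time is the speed change divided by deceleration, and distance is the difference of squared speeds divided by twice the deceleration. The sketch below assumes decelerations of 0.5 g to 0.9 g as a plausible range for hard braking on dry pavement; these are not measured values for Waymo's vehicles, and the function name is hypothetical.

```python
G_FT_S2 = 32.174                  # standard gravity, ft/s^2
FT_PER_S_PER_MPH = 5280 / 3600    # 1 mph expressed in ft/s

def braking(speed_mph: float, decel_g: float, end_speed_mph: float = 0.0):
    """Time (s) and distance (ft) to slow from speed_mph to end_speed_mph
    at a constant deceleration of decel_g (in multiples of g)."""
    v0 = speed_mph * FT_PER_S_PER_MPH
    v1 = end_speed_mph * FT_PER_S_PER_MPH
    a = decel_g * G_FT_S2
    time_s = (v0 - v1) / a
    dist_ft = (v0 ** 2 - v1 ** 2) / (2 * a)
    return time_s, dist_ft

for g in (0.5, 0.7, 0.9):
    t, d = braking(17, g)
    print(f"{g:.1f} g: {t:.2f} s and {d:.1f} ft to stop from 17 mph")
```

Passing `end_speed_mph=6` gives the distance needed just to shed speed from 17 mph to the reported 6 mph impact speed, which at 0.7 g is about 12 feet.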

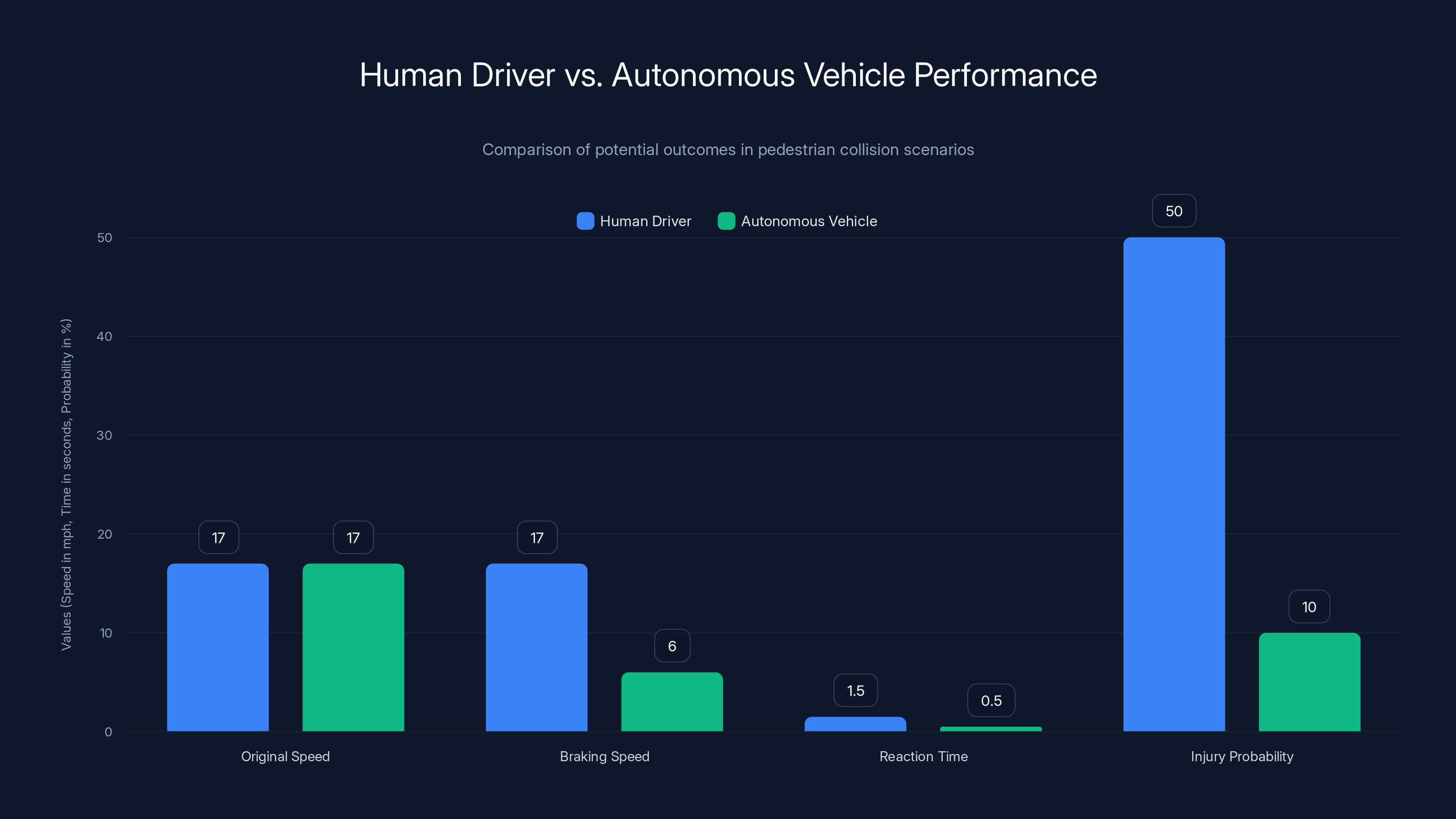

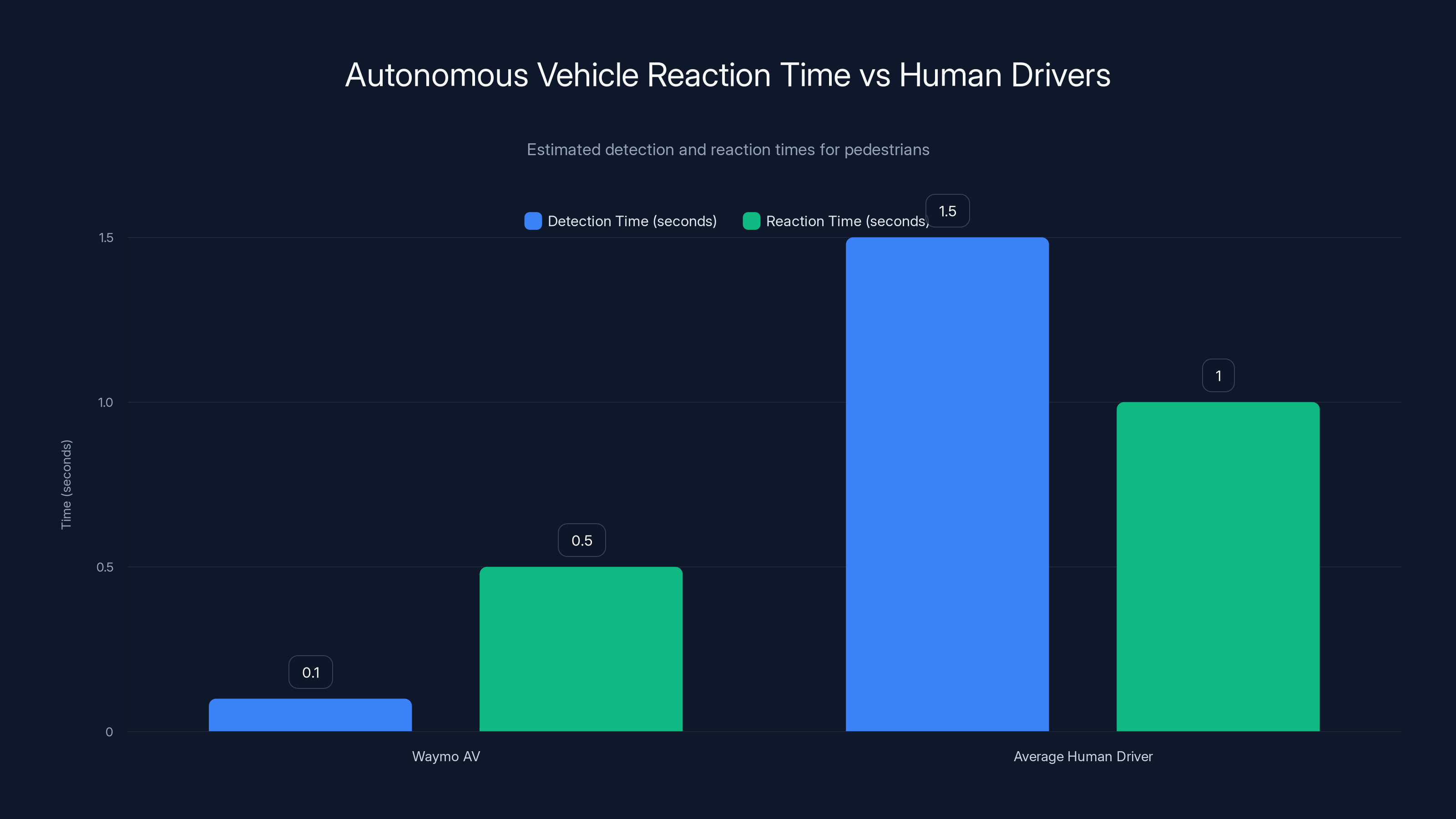

Autonomous vehicle companies argue their systems can detect pedestrians faster than human drivers. Studies suggest human reaction time to an unexpected pedestrian averages about 1.5 seconds. A system whose sensors refresh at 10 Hz (10 scans per second) completes a scan every 100 milliseconds, so in principle it can register a newly visible object within a scan or two and begin braking in a fraction of a second. But the real question isn't whether the system detected the child (Waymo confirms it did) but whether the vehicle's speed and position gave it enough margin to avoid the collision entirely.

This brings up a critical design question in autonomous vehicle safety: should robotaxis use even slower speeds in school zones? Current regulations don't mandate this. Waymo's vehicles operate at speeds consistent with local traffic laws, typically 25-35 mph on residential streets. But near schools, especially during hours when children might be present, should the standard be different?

The Waymo vehicle reduced its speed from 17 mph to 6 mph over 3 seconds, significantly mitigating the impact severity. Estimated data.

The School Bus Investigation Problem

The school bus issue represents a different type of safety failure—one that suggests systematic problems rather than random bad luck. Over the past several months, Waymo robotaxis have illegally passed stopped school buses in at least 20 documented incidents, primarily in Austin, Texas.

School buses extend a stop-sign arm and activate flashing red lights when loading or unloading children. Every U.S. state requires drivers to stop for a school bus displaying these signals, with the minimum stopping distance set by state law. Waymo's vehicles failed to comply with this requirement. In some cases, the vehicles passed the buses even as children were in the process of boarding or exiting.

Why is this happening? The likely explanations fall into two categories: perception errors and decision-making errors. The robotaxi's perception system might misidentify a stopped school bus as a regular vehicle, failing to recognize the stop sign and lights. Or the decision-making algorithm might judge that passing the bus is safe based on environmental factors, not recognizing that an active stop signal on the bus triggers a legal stopping requirement regardless of perceived safety.
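The difference between the two failure types can be made concrete with a sketch in which a legal stop requirement acts as a hard constraint that no cost estimate can override. Everything here is hypothetical: the class and function names, the fields, and the 20-foot default gap are illustrative assumptions, stopping rules (including exceptions such as divided highways) vary by state, and Waymo's planner is not public.

```python
from dataclasses import dataclass
from typing import Optional, Tuple

@dataclass
class DetectedBus:
    """Hypothetical perception output for a school bus ahead in our path."""
    distance_ft: float         # distance from our front bumper to the bus
    red_lights_flashing: bool
    stop_arm_extended: bool

def plan(bus: Optional[DetectedBus], planner_says_safe: bool,
         stop_gap_ft: float = 20.0) -> Tuple[str, Optional[float]]:
    """Return (action, stop_point_ft); stop_gap_ft is an assumed legal gap."""
    # Hard legal constraint, checked first: an active stop signal forces a stop
    # short of the bus no matter how safe the cost-based planner rates passing.
    if bus is not None and (bus.red_lights_flashing or bus.stop_arm_extended):
        return "STOP", max(0.0, bus.distance_ft - stop_gap_ft)
    # No active signal: defer to the ordinary planner. A stop point of None
    # means the planner chooses where to stop.
    if planner_says_safe:
        return "PROCEED", None
    return "STOP", None
```

In this framing, a perception failure shows up as `bus` arriving as `None` or with both signal flags false, while a decision-making failure looks like a planner that consults `planner_says_safe` before the legal constraint. The two call for different fixes.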

The regulatory response has been swift. NHTSA opened a preliminary investigation in October 2024. The NTSB, which investigates major transportation safety issues, opened its own investigation in early 2025 after reviewing reports from Austin. These are not casual inquiries. NHTSA investigations can lead to mandatory recalls or operational restrictions. The NTSB has no enforcement power, but its findings and safety recommendations carry considerable weight with regulators, lawmakers, and the industry.

What makes the school bus problem especially concerning is that it affects a protected population. Children are the most vulnerable road users. Parents expect that school buses represent the safest possible transportation option for their children. Waymo's failures in this specific scenario undermine public confidence in autonomous vehicles more than almost any other issue could.

The relationship between the school bus failures and the Santa Monica incident matters. They're separate problems, but together they create a narrative about systematic safety issues at Waymo. Regulators and the public might start asking: if Waymo's system fails to recognize stop signs on school buses, how reliable is its pedestrian detection in other scenarios?

The autonomous vehicle performed better by reducing speed more effectively and reacting faster, lowering injury probability compared to a human driver. Estimated data for reaction time and injury probability.

NHTSA's Investigation: What to Expect

The National Highway Traffic Safety Administration has opened a formal investigation into the Santa Monica incident. NHTSA investigations follow a structured process, and understanding this process helps clarify what might happen next.

NHTSA typically starts with a preliminary investigation, gathering information from the manufacturer and other parties involved in the incident. They request data logs, sensor recordings, vehicle telemetry, and any available video footage. In Waymo's case, the robotaxi itself records extensive data—sensor readings, decision-making logs, maps, and environmental information. This data becomes the foundation of NHTSA's analysis.

The agency interviews all parties: Waymo engineers, the responding police department, and if possible, the child and family members. They examine the specific location, road conditions, weather, visibility, and any other environmental factors that might have contributed to the incident.

NHTSA then determines whether the incident reveals a safety defect. A safety defect is a condition that poses an unreasonable risk to safety. Note the word "unreasonable"—this is critical. Some incidents happen despite perfect vehicle operation. The question is whether the vehicle's design, manufacture, or operation created a condition that was foreseeable and preventable.

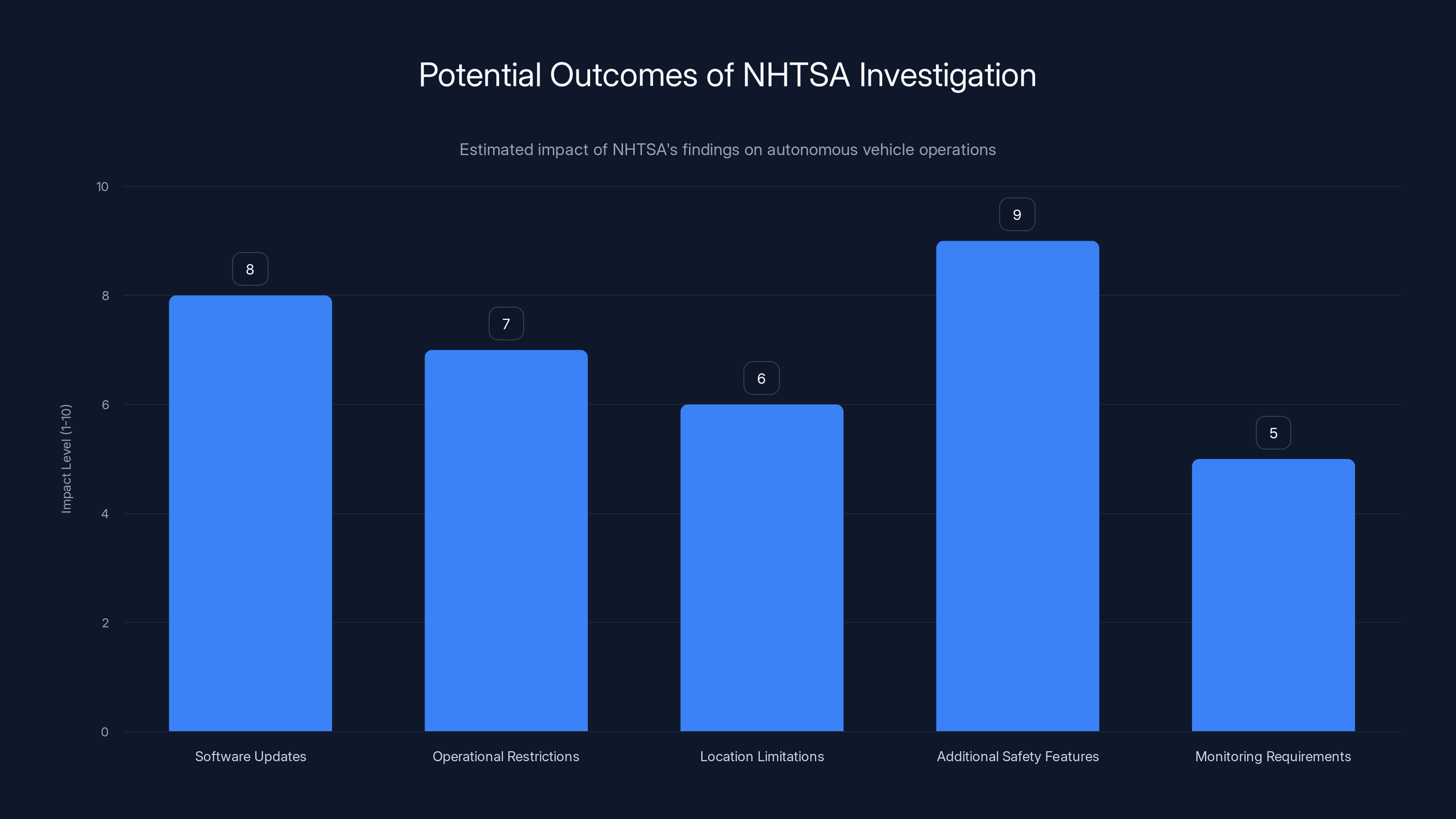

If NHTSA determines that a safety defect exists, they can issue a recall. For autonomous vehicles, a recall might mean:

- Software updates to improve pedestrian detection or reaction speed

- Operational restrictions (e.g., reduced speeds in school zones)

- Limiting where robotaxis can operate

- Requiring additional safety features or sensors

- Monitoring requirements before deployment expansion

NHTSA might also issue findings that don't result in formal recalls but establish precedent and expectations. A determination that Waymo should operate at reduced speeds near schools could become de facto industry standard even if not formally mandated.

The timeline for NHTSA investigations varies widely. Simple cases might resolve in 6-12 months. Complex technical issues can take 18-36 months or longer. During this time, Waymo continues operating, though regulatory pressure might restrict expansion or force operational changes.

Pedestrian Safety in Autonomous Vehicle Design

The Santa Monica incident raises fundamental questions about pedestrian safety in autonomous vehicle design. How fast should robotaxis travel? How should they respond to unexpected pedestrians? What margin of safety is sufficient?

Engineering disciplines use something called a safety factor: a multiplier applied to minimum requirements to account for uncertainty and error. Aircraft structures, for example, are typically required to withstand 1.5 times the highest load expected in service, while structures where weight matters less often use factors of 2 or more. Autonomous vehicle safety design should similarly build in safety margins, but the question is how large those margins should be.

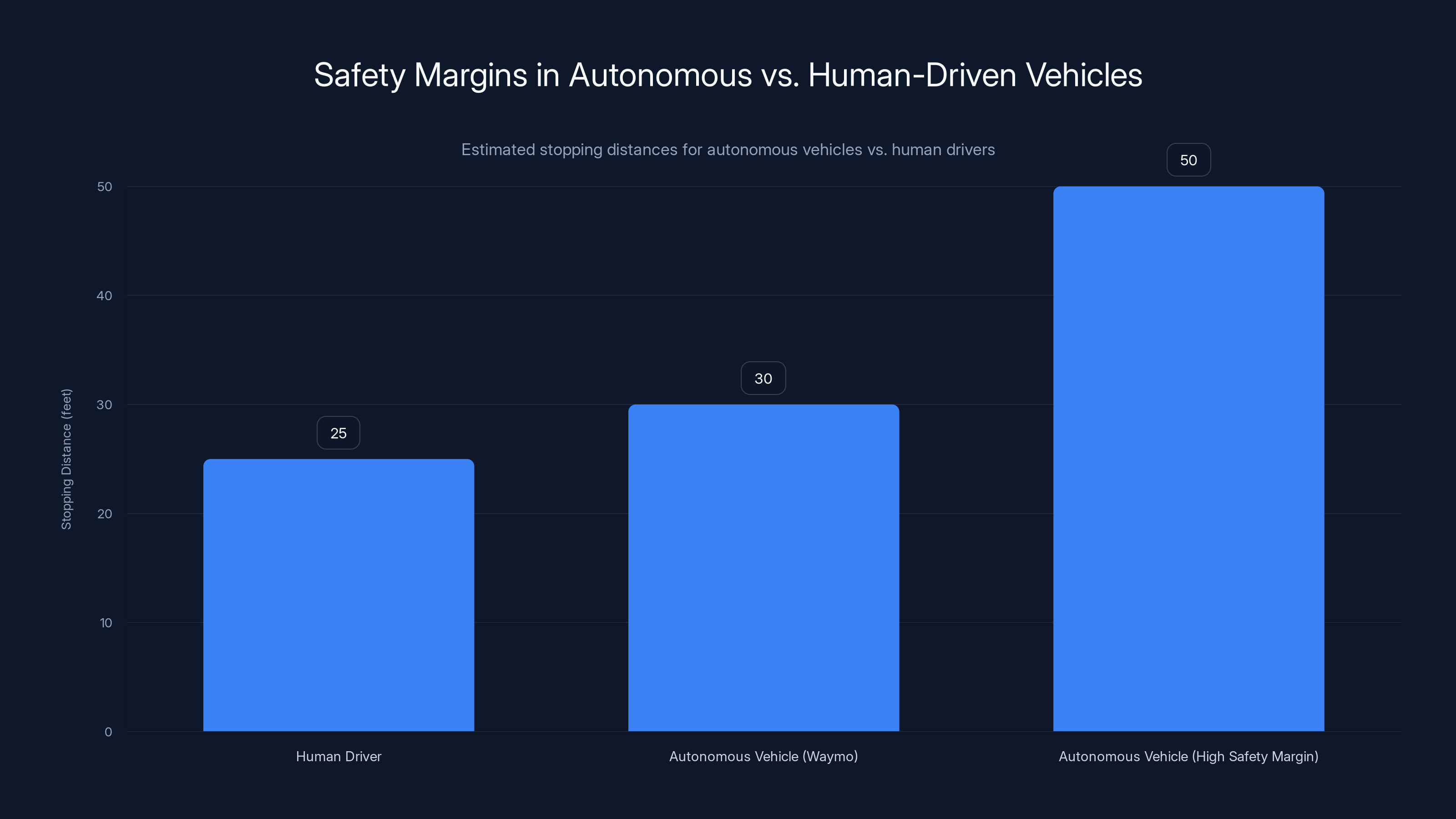

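For pedestrian interactions, a safety margin means extra stopping distance. Stopping from 30 mph (44 feet per second) takes far more than a few car lengths: at a hard-braking deceleration of about 0.7 g, braking alone covers roughly 43 feet, and a typical 1.5-second human reaction adds about 66 feet before the brakes even engage. A safety-margin design would keep the clear distance ahead comfortably larger than the vehicle's own stopping distance, for instance by choosing a lower speed wherever its view is limited. This trades off speed and efficiency for safety.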
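Total stopping distance is the distance covered during the reaction delay plus the braking distance. The sketch below compares a 1.5-second human reaction with an assumed 0.2-second autonomous latency and then applies a hypothetical safety factor of 1.5; the latency, the 0.7 g deceleration, and the factor are illustrative assumptions, not Waymo parameters.

```python
G_FT_S2 = 32.174                  # standard gravity, ft/s^2
FT_PER_S_PER_MPH = 5280 / 3600    # 1 mph expressed in ft/s

def stopping_distance_ft(speed_mph: float, reaction_s: float,
                         decel_g: float = 0.7) -> float:
    """Reaction distance (full speed) plus braking distance to a full stop."""
    v = speed_mph * FT_PER_S_PER_MPH
    return v * reaction_s + v ** 2 / (2 * decel_g * G_FT_S2)

human = stopping_distance_ft(30, reaction_s=1.5)
av = stopping_distance_ft(30, reaction_s=0.2)   # assumed autonomous latency
margin = 1.5                                     # hypothetical safety factor
print(f"human: {human:.0f} ft, AV: {av:.0f} ft, AV with margin: {av * margin:.0f} ft")
```

The braking term is identical for both drivers; the gap comes almost entirely from reaction distance, which is why a faster-reacting system can afford a margin and still stop sooner than a human.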

Waymo's approach appears to be optimized for efficiency. The company's robotaxis operate at speeds consistent with human drivers and traffic laws. They accelerate normally, brake when appropriate, and don't maintain unusually large safety margins. This approach maximizes trip speed and passenger experience—important for commercial viability.

But does this approach prioritize safety enough? The counter-argument is that autonomous systems don't get distracted, don't look at phones, don't experience fatigue. They maintain constant vigilance. Their reaction times are measured in milliseconds, not seconds. From this perspective, they might be safer than human drivers even at similar speeds.

The data doesn't fully resolve this debate. Early autonomous vehicle safety statistics are mixed. Some studies suggest robotaxis have fewer accidents per mile than human drivers. Other analyses find that robotaxis are involved in more fender-benders and minor collisions, though serious injuries remain rare. The Santa Monica incident, if replicated, could shift this analysis significantly.

A child struck by an autonomous vehicle makes headlines partly because it's unexpected. Public psychology treats machine-caused accidents differently from human-caused accidents, even when the machines might statistically be safer. This perception gap matters for regulatory approval and public acceptance.

Waymo has always emphasized that its vehicles are safer by design. The company has published safety reports, conducted simulations, and demonstrated impressive test results. But Santa Monica tests that confidence. One injury to a child near a school creates a powerful counter-narrative.

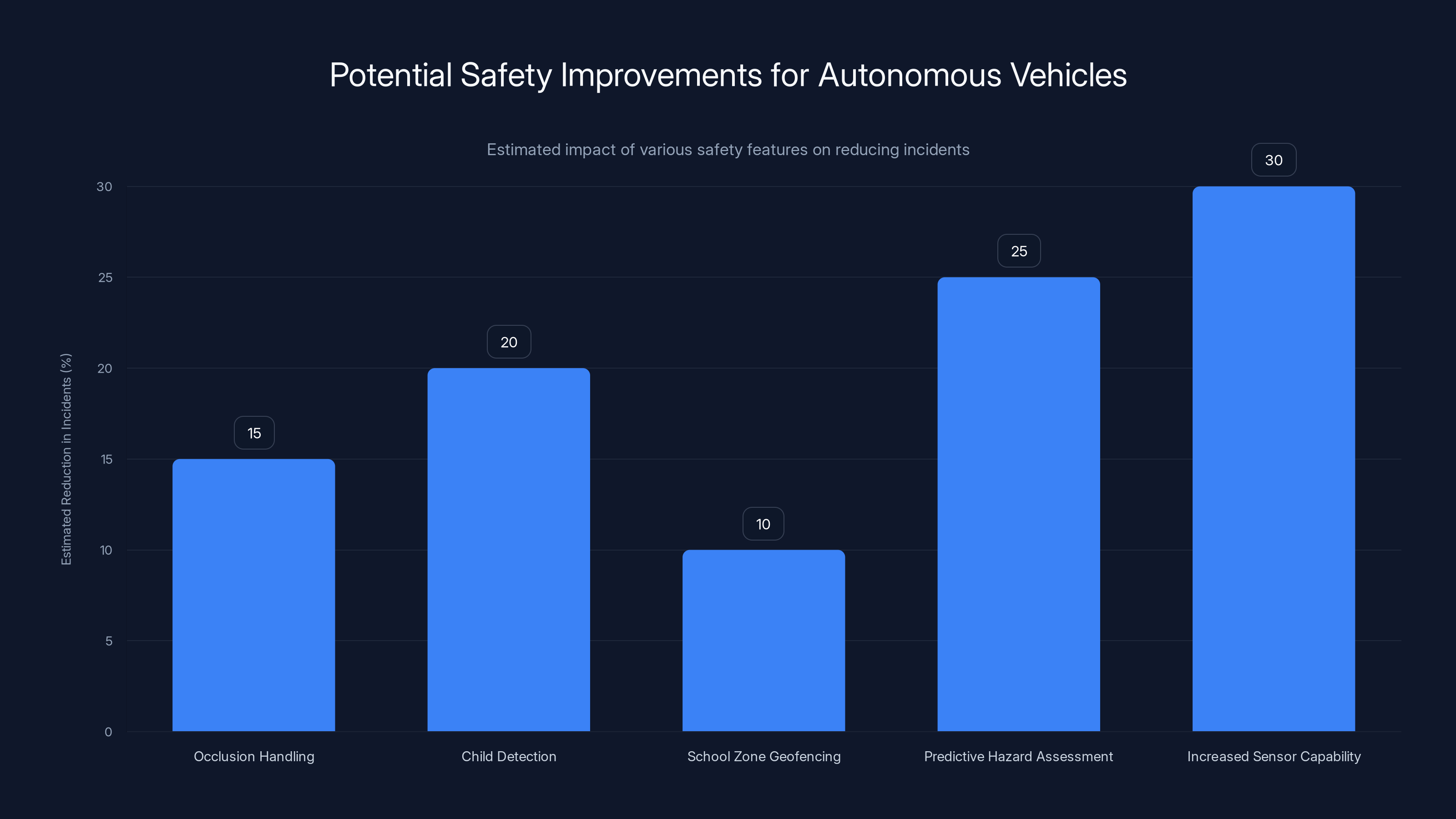

Estimated data suggests that increased sensor capability could lead to a 30% reduction in incidents, making it the most impactful improvement, followed by predictive hazard assessment at 25%.

Legal and Liability Implications

The Santa Monica incident creates complicated legal questions. Who bears responsibility for the accident? What liability does Waymo face? What implications does this have for the broader autonomous vehicle industry?

Traditionally, vehicle liability follows a clear path: the vehicle's operator or owner bears responsibility for accidents caused by negligence. But autonomous vehicles create a novel situation. The "operator" isn't a human making active driving decisions. Waymo calls the vehicles driverless. This creates legal ambiguity.

One argument holds that Waymo, as the manufacturer and operator, bears full responsibility. The company deployed the vehicle, chose the operating parameters (speed, geofencing, hours of operation), and should be held accountable for its failures. This argument treats Waymo like any other manufacturer: if a defect causes injury, the manufacturer is liable.

Another argument focuses on the child's behavior. Waymo's account emphasizes that the child "suddenly entered the roadway from behind a tall SUV." From this perspective, the accident resulted from the child's unexpected action, not the vehicle's failure. The vehicle detected the child and braked hard. Any other vehicle—human-driven or autonomous—might have struck the child in the same scenario.

The actual legal determination will likely involve expert analysis of whether the vehicle's response met the standard of care. A standard of care in autonomous vehicle cases might ask: did the vehicle perform as well as a reasonable, attentive human driver would have? Or should autonomous vehicles be held to a higher standard, given their technological capabilities?

Insurance implications are also significant. Waymo carries liability insurance, but the specific coverage depends on the policy terms and whether autonomous vehicles are explicitly covered. Insurance rates for autonomous vehicles remain experimental, with companies trying to determine appropriate premiums based on minimal historical data.

More broadly, this incident creates precedent that will affect the entire autonomous vehicle industry. Insurance companies, manufacturers, and regulators will all cite the Santa Monica case in making future decisions. If Waymo is found substantially at fault, it strengthens arguments that autonomous vehicles need higher safety standards. If the incident is determined to be unavoidable given the child's sudden emergence, it supports the industry's current approach.

The School Zone Safety Question

School zones present unique challenges for autonomous vehicles. Children are unpredictable. They don't follow traffic laws. They might run across the street without looking. They might dart between parked cars. They might be distracted by phones or friends.

Most jurisdictions recognize school zones as special safety areas. Speed limits drop to 15-25 mph during school hours. Passing is prohibited. Enhanced penalties apply for traffic violations. These rules exist because children's safety requires extra caution.

Waymo's robotaxi in Santa Monica was traveling at about 17 mph before it braked, within normal speeds for a residential street. But should robotaxis follow a special school zone protocol? Several safety arguments suggest they should:

First, schools generate concentrated pedestrian traffic. During arrival and dismissal times, children flood the areas around schools. The density of potential pedestrians is dramatically higher than on typical residential streets. This density should theoretically trigger more cautious operation.

Second, children are less predictable than adults. Their behavior follows different patterns. They don't consistently follow traffic signals. They have less developed understanding of vehicle dynamics. Their physical size means they have different sight lines and might not see approaching vehicles. If autonomous vehicles are designed for adult-like pedestrian behavior, they might perform worse around children.

Third, the social expectation around schools is heightened caution. Parents, teachers, and community members expect the highest safety standards in school zones. Operating robotaxis through these zones at normal speeds, even if statistically equivalent to human driver speeds, might violate community trust and expectations.

Some advocates argue that autonomous vehicles should use slower speeds, perhaps 10-15 mph, in school zones. This would shorten stopping distances and reduce injury severity if collisions occur. The trade-off is operational efficiency. Slower speeds mean longer trip times and reduced passenger value.

Other advocates suggest more sophisticated approaches: geofencing that limits speeds in school zones, active child detection that triggers even harder braking when children are detected nearby, or time-based protocols that restrict operation during school arrival and dismissal times.

Waymo hasn't publicly committed to any of these approaches as a result of the Santa Monica incident. The company has emphasized that the accident involved a child suddenly entering from behind a parked vehicle, not failure to follow school zone safety protocols. But regulatory pressure might force changes regardless of whether the incident technically involved a school zone safety failure.

Estimated data comparing stopping distances from 30 mph for human drivers, autonomous vehicles like Waymo's, and vehicles designed with larger safety margins.

Sensor Limitations and Edge Cases

The Santa Monica incident highlights a critical challenge in autonomous vehicle safety: edge cases. An edge case is a scenario that's rare, difficult to predict, or involves unusual conditions. The case of a child emerging suddenly from behind a tall SUV is exactly this type of edge case.

Autonomous vehicle perception systems are trained on millions of hours of driving data. They learn patterns: pedestrians typically walk on sidewalks, cross at intersections, check for traffic. The system becomes good at detecting and predicting normal pedestrian behavior. But a child running suddenly from behind a parked vehicle violates these learned patterns.

Waymo claims its sensors detected the child immediately when they began to emerge. But "immediately" is relative. Suppose the child took one second to run from the parked vehicle's rear bumper into the vehicle's path, and the vehicle's reaction time was 500 milliseconds. Only 500 milliseconds would then remain for deceleration, and in that time the vehicle must engage its brakes and shed speed. This arithmetic shows how a collision can occur even with good detection and reaction.
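The one-second, 500-millisecond hypothetical can be checked with constant-deceleration kinematics. Like the hypothetical itself, this sketch treats the moment of contact as fixed at one second after the child emerges; the 0.8 g figure is an assumption about hard braking on dry pavement, and the function names are illustrative.

```python
G_FT_S2 = 32.174                  # standard gravity, ft/s^2
FT_PER_S_PER_MPH = 5280 / 3600    # 1 mph expressed in ft/s

def required_decel_g(v0_mph, v_impact_mph, time_to_conflict_s, reaction_s):
    """Constant deceleration (in g) needed to slow from v0 to v_impact in the
    time left after the reaction delay."""
    braking_time = time_to_conflict_s - reaction_s
    if braking_time <= 0:
        raise ValueError("reaction delay uses up all the available time")
    dv = (v0_mph - v_impact_mph) * FT_PER_S_PER_MPH
    return dv / braking_time / G_FT_S2

def impact_speed_mph(v0_mph, time_to_conflict_s, reaction_s, decel_g):
    """Speed at the conflict time: full speed during the reaction delay,
    then constant braking at decel_g (never below zero)."""
    braking_time = max(0.0, time_to_conflict_s - reaction_s)
    v = v0_mph * FT_PER_S_PER_MPH - decel_g * G_FT_S2 * braking_time
    return max(0.0, v) / FT_PER_S_PER_MPH

# Hypothetical timeline: contact 1.0 s after the child emerges, 0.5 s reaction.
print(f"needed to reach 6 mph: {required_decel_g(17, 6, 1.0, 0.5):.2f} g")
print(f"impact at 0.8 g: {impact_speed_mph(17, 1.0, 0.5, 0.8):.1f} mph")
print(f"impact with 1.5 s reaction: {impact_speed_mph(17, 1.0, 1.5, 0.8):.1f} mph")
```

Under these assumptions, getting from 17 mph to 6 mph in the remaining half-second takes about 1 g, close to the limit of tire grip, while a 1.5-second reaction would leave no braking time at all.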

Beyond detection speed, there's the question of occlusion handling. Tall vehicles create blind spots. Mature autonomous vehicle systems should recognize these blind spots and treat them as high-risk areas. The vehicle might slow preemptively when passing a parked vehicle with poor visibility, assuming that someone could emerge from behind it and keeping extra stopping distance in reserve.
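One way to turn "treat the blind spot as high-risk" into a number is to cap speed so the vehicle could still stop before the edge of the occlusion if someone stepped out at the last moment. Solving gap = v·t_r + v²/(2a) for v gives that cap. This is a simplified sketch: the 0.2-second latency and 0.7 g deceleration are assumptions, and it ignores the pedestrian's own motion and lateral offsets.

```python
import math

G_FT_S2 = 32.174            # standard gravity, ft/s^2
MPH_PER_FT_S = 3600 / 5280  # 1 ft/s expressed in mph

def max_speed_for_occlusion_mph(gap_ft: float, reaction_s: float = 0.2,
                                decel_g: float = 0.7) -> float:
    """Highest speed (mph) from which the vehicle can still stop within gap_ft,
    the distance to the point where a hidden pedestrian could first enter its
    path. Positive root of gap = v*t_r + v^2 / (2a)."""
    a = decel_g * G_FT_S2
    v = -a * reaction_s + math.sqrt((a * reaction_s) ** 2 + 2 * a * gap_ft)
    return v * MPH_PER_FT_S

for gap in (10, 20, 40):
    print(f"{gap:>3} ft to occlusion edge: cap at {max_speed_for_occlusion_mph(gap):.1f} mph")
```

A rule like this makes speed a function of visibility: the closer the vehicle's path runs to the blind edge of a parked SUV, the lower the cap.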

Waymo's system might not have incorporated this specific behavior. The company's documentation doesn't publicly detail how the system handles occlusion scenarios. It's possible the vehicle was operating according to design specifications, even if those specifications didn't adequately address this specific type of hazard.

Another sensor limitation relates to depth perception and size estimation. A child is smaller than an adult. If the system's perception model was primarily trained on adult pedestrians, it might process children differently. The system might estimate a child's speed or position less accurately because the training data was skewed toward larger human bodies.

Weather conditions also matter. The Santa Monica incident occurred on an apparently normal day, but weather, lighting, and time of day all affect sensor performance. Lidar, as an active sensor, is largely independent of ambient light but can degrade in rain or fog. Cameras struggle with glare and backlighting. A child in reflective clothing is easier to detect than one in dark clothing. The specific conditions of the Santa Monica incident might have been challenging for the sensor array in ways not immediately apparent.

These limitations don't necessarily mean Waymo's system is defective. Rather, they reflect the reality that perfect perception is impossible. All vehicles, human or autonomous, have perception limitations. The question is whether Waymo's system adequately compensates for those limitations through caution and speed reduction.

Regulatory Responses and Industry Impact

The Santa Monica incident and the school bus investigations have immediate implications for how regulators will approach autonomous vehicle approval and oversight going forward. NHTSA and the NTSB have both signaled that autonomous vehicle safety is a serious regulatory focus.

NHTSA is likely to increase scrutiny of Waymo's operations. The agency might require the company to submit additional safety data, conduct additional testing, or accept operational restrictions before deploying additional vehicles. The pattern of school bus failures combined with the pedestrian injury incident creates a cumulative narrative of safety issues that regulators can't ignore.

The NTSB investigation into school bus incidents will set precedent. If the NTSB determines that the school bus failures resulted from systematic design flaws, it will likely recommend that the entire autonomous vehicle industry address similar issues. Waymo will face direct NTSB recommendations, and industry competitors will face regulatory pressure to prove they've solved the same problem.

This could accelerate or decelerate the autonomous vehicle rollout, depending on regulatory perspective. Aggressive regulators might impose strict limitations on autonomous vehicle deployment until comprehensive safety standards are established. Moderate regulators might require fixes but allow continued operation with monitoring. Permissive regulators might treat the incidents as learning opportunities and keep their current approval frameworks.

The incident also affects how autonomous vehicles will be insured and licensed going forward. Insurance companies watch regulatory actions closely. If NHTSA or NTSB determinations suggest systematic safety problems, insurance premiums will rise. This affects the economics of robotaxi operations. Higher insurance costs reduce profit margins and might force operational cutbacks.

Other manufacturers face collateral impact. Tesla's Autopilot, for example, faces frequent regulatory scrutiny. Cruise, General Motors' robotaxi unit, lost its California driverless permits in 2023 after one of its vehicles dragged a pedestrian. The Santa Monica incident and school bus investigations intensify scrutiny across the industry. Any other company with similar issues will face heightened regulatory pressure.

Public perception also matters. If the Santa Monica incident is widely reported (which it will be), it shifts the public narrative about autonomous vehicle safety. Prior to this incident, Waymo was riding a wave of positive press about successful deployments and expanding operations. One child struck near a school reframes the narrative toward safety risks.

This perception shift has concrete business implications. Cities considering autonomous vehicle deployment might slow approval processes. Communities might organize opposition to robotaxi operations. Insurance regulators might demand higher reserves. The incident creates momentum for stricter regulatory approaches.

Autonomous vehicles like Waymo's can detect and react to pedestrians significantly faster than average human drivers, potentially reducing the risk of collisions. (Estimated data)

Comparative Analysis: How Human Drivers Perform

Understanding the Santa Monica incident requires context about how human drivers perform in comparable scenarios. This is complicated because human driver data is collected differently and safety metrics vary significantly.

According to the National Highway Traffic Safety Administration, roughly 6 million police-reported motor vehicle crashes occur annually in the United States. About 1.7 to 1.9 million of these involve injuries, close to 30% of all crashes, and roughly 35,000 to 40,000 are fatal, well under 1% of the total.

When pedestrians are involved, injury rates skyrocket. About 7,500 pedestrians die annually in vehicle collisions. A pedestrian struck by a vehicle traveling 30 mph has approximately a 50% chance of serious injury or death. At 20 mph, the probability drops to about 5-10%. At 10 mph, serious injury is uncommon but still possible.

The Santa Monica incident involved impact at approximately 6 mph after hard braking from 17 mph. At 6 mph impact speed, serious injury becomes unlikely unless the pedestrian was vulnerable (very young, elderly, or with pre-existing conditions). The child sustained "minor injuries," which is consistent with this impact speed.

How would a human driver have performed in the same scenario? A human driver cannot react to a child suddenly appearing from behind a parked vehicle as quickly as an autonomous system can. Human reaction time to unexpected pedestrians averages 1.5 seconds, and at 17 mph the vehicle would travel roughly 37 feet during that time before braking even began. The child would likely have been struck at close to the original speed, resulting in greater injury potential.
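The comparison can be sketched by asking how fast each driver would be going on reaching a child who appears a given distance ahead. With the 1.5-second human reaction time from the text, the car covers about 37 feet at full speed before braking, so any shorter gap means impact at the full 17 mph. The 0.2-second autonomous latency and 0.7 g braking are illustrative assumptions, not measured values for Waymo's vehicles.

```python
import math

G_FT_S2 = 32.174                  # standard gravity, ft/s^2
FT_PER_S_PER_MPH = 5280 / 3600    # 1 mph expressed in ft/s

def impact_speed_at_gap(speed_mph: float, gap_ft: float, reaction_s: float,
                        decel_g: float = 0.7) -> float:
    """Speed (mph) on reaching a pedestrian who appears gap_ft ahead: the
    vehicle travels at full speed for reaction_s, then brakes at decel_g."""
    v0 = speed_mph * FT_PER_S_PER_MPH
    reaction_ft = v0 * reaction_s
    if reaction_ft >= gap_ft:
        return speed_mph                      # struck before braking begins
    a = decel_g * G_FT_S2
    v_sq = v0 ** 2 - 2 * a * (gap_ft - reaction_ft)
    return math.sqrt(max(0.0, v_sq)) / FT_PER_S_PER_MPH  # 0.0 means it stopped

for gap in (10, 15, 20):
    human = impact_speed_at_gap(17, gap, reaction_s=1.5)
    auto = impact_speed_at_gap(17, gap, reaction_s=0.2)
    print(f"gap {gap} ft: human {human:.1f} mph, autonomous {auto:.1f} mph")
```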

This comparison suggests that Waymo's vehicle actually performed better than a typical human driver would have in the same scenario. The vehicle braked hard from 17 mph down to 6 mph, detected the child quickly, and maintained sufficient control to prevent severe injury. From this perspective, the incident illustrates autonomous vehicle safety working as designed.

But this analysis requires qualification. The Santa Monica vehicle didn't avoid collision entirely. A skilled, attentive human driver might have avoided collision by maintaining slower speed through the area or positioning the vehicle to have better visibility of potential hazards. The autonomous system, optimized for speed and efficiency, didn't prioritize these precautions.

Also, human driver statistics rest on trillions of miles driven each year in the United States alone, while autonomous vehicles have driven millions of miles in total. With so few autonomous miles, rare events such as pedestrian injuries produce very uncertain rate estimates, so direct comparisons should be treated with caution. As autonomous vehicles accumulate more miles and more incident data, a clearer comparative picture will emerge.
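The effect of a small sample can be made concrete with an exact (Garwood) Poisson confidence interval on an incident rate. The event counts and mileages below are hypothetical, chosen only to contrast a small fleet with one that has 100 times the exposure at the same underlying rate; they are not Waymo or NHTSA figures. The term-by-term CDF used here is meant for modest counts (a few hundred at most).

```python
import math

def poisson_cdf(k: int, lam: float) -> float:
    """P(X <= k) for X ~ Poisson(lam), summed term by term."""
    term = math.exp(-lam)
    total = term
    for i in range(1, k + 1):
        term *= lam / i
        total += term
    return total

def _bisect(f, lo: float, hi: float, iters: int = 200) -> float:
    """Root of a monotonic f on [lo, hi], assuming f changes sign on the interval."""
    flo = f(lo)
    for _ in range(iters):
        mid = (lo + hi) / 2
        fmid = f(mid)
        if (fmid > 0) == (flo > 0):
            lo, flo = mid, fmid
        else:
            hi = mid
    return (lo + hi) / 2

def poisson_rate_ci(events: int, exposure: float, conf: float = 0.95):
    """Exact confidence interval for an event rate per unit of exposure."""
    alpha = 1 - conf
    hi_bound = events + 10 * math.sqrt(events + 1) + 10
    # Upper limit: the mean at which seeing <= events has probability alpha/2.
    upper = _bisect(lambda lam: poisson_cdf(events, lam) - alpha / 2, 0.0, hi_bound)
    # Lower limit: the mean at which seeing >= events has probability alpha/2.
    if events == 0:
        lower = 0.0
    else:
        lower = _bisect(lambda lam: (1 - poisson_cdf(events - 1, lam)) - alpha / 2,
                        0.0, hi_bound)
    return lower / exposure, upper / exposure

# Hypothetical: same rate per million miles, 100x the exposure.
small = poisson_rate_ci(2, 50)       # 2 incidents in 50 million miles
large = poisson_rate_ci(200, 5000)   # 200 incidents in 5,000 million miles
print("small fleet:", [round(x, 4) for x in small])
print("large fleet:", [round(x, 4) for x in large])
```

With two events, the upper end of the interval is about 30 times the lower end; with two hundred, the interval is only a few tens of percent wide.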

The Path Forward: Safety Improvements and Industry Standards

Waymo will likely make changes in response to the Santa Monica incident and school bus investigations, even if the company doesn't explicitly link the changes to these specific events. The company's business depends on demonstrating that it's responsive to safety concerns.

Several technical improvements could address the types of scenarios that led to the Santa Monica collision:

Occlusion handling algorithms could be enhanced to treat parked vehicles as potential hazards, automatically reducing speed when passing them, especially in school zones or high-pedestrian areas. This is a relatively straightforward software update that increases caution around predictable blind spots.

Child detection systems could be improved through enhanced training data and specific model optimization for detecting children. Computer vision systems can be trained to recognize child-specific features: smaller body size, specific gait patterns, typical clothing. Specialized child detection could trigger more conservative reaction protocols.

School zone geofencing could implement specific speed and operation protocols. When the vehicle is within geofenced school zone boundaries and during school hours, the system could enforce lower speed limits (perhaps 10-15 mph) and more conservative reaction parameters.
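A school-zone protocol of this kind reduces to two checks: is the vehicle inside the zone's boundary, and is it currently school hours? The sketch below uses a ray-casting point-in-polygon test in a local metric frame. The zone coordinates, the weekday-only schedule, the hours, and the 15 mph cap are all hypothetical values chosen for illustration.

```python
from datetime import datetime, time

def point_in_polygon(x: float, y: float, polygon) -> bool:
    """Ray-casting test: True if (x, y) lies inside the polygon (list of vertices)."""
    inside = False
    n = len(polygon)
    for i in range(n):
        x1, y1 = polygon[i]
        x2, y2 = polygon[(i + 1) % n]
        if (y1 > y) != (y2 > y):               # edge straddles the horizontal ray
            x_cross = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
            if x < x_cross:
                inside = not inside
    return inside

# Hypothetical zone (meters, local frame) and assumed school hours.
SCHOOL_ZONE = [(0, 0), (200, 0), (200, 150), (0, 150)]
SCHOOL_HOURS = [(time(7, 30), time(9, 0)), (time(14, 0), time(16, 0))]
ZONE_CAP_MPH = 15.0   # assumed cap, within the 10-15 mph range discussed above

def speed_cap_mph(x: float, y: float, now: datetime, base_limit_mph: float) -> float:
    """Lower the speed cap inside the zone on weekdays during school hours."""
    in_hours = now.weekday() < 5 and any(
        start <= now.time() <= end for start, end in SCHOOL_HOURS
    )
    if in_hours and point_in_polygon(x, y, SCHOOL_ZONE):
        return min(base_limit_mph, ZONE_CAP_MPH)
    return base_limit_mph

print(speed_cap_mph(50, 50, datetime(2025, 3, 4, 8, 15), base_limit_mph=25))
```

A production system would also need holiday calendars, geodetic coordinates, and smooth speed transitions at the zone boundary; this sketch shows only the core decision.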

Predictive hazard assessment could be enhanced to anticipate not just where pedestrians are but where they might appear based on environmental factors. The presence of a parked vehicle with poor visibility should trigger predictive reasoning that something might emerge from behind it.

Increased sensor capability could involve adding cameras with a wider field of view, upgrading lidar systems to higher resolution, or incorporating thermal imaging to detect pedestrians in darkness or glare based on body heat. More sensors mean more redundancy and better detection capability.

Beyond technical changes, industry standards are emerging. The Society of Automotive Engineers (SAE) has developed levels of automation (Level 0 through Level 5) that frame autonomous vehicle capabilities. More specific safety standards are being developed through consortia and regulatory agencies.

ISO (International Organization for Standardization) working groups are developing safety standards for autonomous vehicles. These standards will likely address exactly the types of scenarios revealed in the Santa Monica incident: pedestrian detection capabilities, reaction speed requirements, and school zone operation protocols.

Waymo will probably exceed regulatory minimums in these areas, not because the company is required to, but because safety leadership is a competitive advantage. A company that can demonstrate superior school zone safety or child detection capabilities has a significant marketing advantage.

But regulatory requirements will eventually catch up. As incidents accumulate and investigations conclude, regulators will likely establish minimum standards for autonomous vehicle operation. These standards might include:

- Minimum reaction times to unexpected pedestrians

- Mandatory school zone speed reductions

- Required detection capabilities for specific populations (children, elderly, cyclists)

- Regular safety audits and certification processes

- Incident investigation and reporting requirements

- Insurance and liability frameworks

The Santa Monica incident accelerates this regulatory timeline. Regulators now have concrete evidence that current autonomous vehicle operations can result in child injuries. That evidence creates political pressure for regulatory action.

Estimated data showing potential impact levels of NHTSA's investigation outcomes on autonomous vehicle operations, highlighting software updates and additional safety features as having the highest impact.

School Bus Failures: A Separate But Related Safety Issue

The school bus investigation deserves specific attention because it reveals a different type of safety failure than the Santa Monica pedestrian incident. School bus failures suggest that Waymo's perception or decision-making system has systematic issues with a specific, well-defined scenario: stopped school buses with active stop signs and lights.

School buses are distinctive vehicles. They're large, typically yellow or orange, and display bright red stop signs. They activate flashing lights. These are not subtle warnings. Any reasonably functional perception system should consistently identify school buses and recognize their stop signs.

Yet Waymo's vehicles have passed them in at least 20 documented cases. This suggests either:

- A perception failure where the system fails to recognize the stop sign or lights

- A decision-making failure where the system recognizes the bus but incorrectly determines that passing is safe

- A mapping or localization failure where the system has incorrect information about road status

Each possibility represents a serious safety gap. Perception failures suggest that the sensor system isn't reliable for detecting critical safety features. Decision-making failures suggest that the planning algorithm has flawed logic. Mapping failures suggest that the vehicle's understanding of its environment is unreliable.
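The Python sketch below makes the three failure modes concrete. It models a stop-for-school-bus rule with separate perception, decision, and map inputs. Everything in it is hypothetical: the class and function names, the fields, and the divided-highway exemption (some state laws include one, but the details vary) are illustrative assumptions, not Waymo's architecture. The actual root causes of the incidents have not been made public. The sketch only shows that a wrong input at any single layer produces the same visible outcome: the vehicle passes the bus.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class BusObservation:
    """Hypothetical perception output for a nearby vehicle."""
    is_school_bus: bool
    stop_arm_extended: bool
    red_lights_flashing: bool


@dataclass
class MapContext:
    """Hypothetical map/localization facts about the current road."""
    # Illustrative assumption: some states exempt traffic on the opposite
    # side of a divided highway; the exact rule varies by state.
    opposite_side_of_divided_highway: bool = False


def must_stop_for_bus(obs: Optional[BusObservation], ctx: MapContext) -> bool:
    """Intended rule: stop for a school bus showing either stop signal."""
    if obs is None or not obs.is_school_bus:
        return False
    if not (obs.stop_arm_extended or obs.red_lights_flashing):
        return False
    return not ctx.opposite_side_of_divided_highway


def must_stop_for_bus_buggy(obs: Optional[BusObservation], ctx: MapContext) -> bool:
    """Illustrative decision-layer bug: demands BOTH signals instead of either."""
    if obs is None or not obs.is_school_bus:
        return False
    if not (obs.stop_arm_extended and obs.red_lights_flashing):
        return False
    return not ctx.opposite_side_of_divided_highway


if __name__ == "__main__":
    road = MapContext()
    bus = BusObservation(is_school_bus=True, stop_arm_extended=True,
                         red_lights_flashing=True)
    print(must_stop_for_bus(bus, road))  # True: every layer works

    # 1. Perception failure: the stop arm and lights are never detected.
    unseen = BusObservation(is_school_bus=True, stop_arm_extended=False,
                            red_lights_flashing=False)
    print(must_stop_for_bus(unseen, road))  # False

    # 2. Decision failure: a signal is detected, but the rule logic is wrong.
    arm_only = BusObservation(is_school_bus=True, stop_arm_extended=True,
                              red_lights_flashing=False)
    print(must_stop_for_bus_buggy(arm_only, road))  # False (should stop)

    # 3. Mapping failure: localization wrongly reports a divided highway.
    wrong_map = MapContext(opposite_side_of_divided_highway=True)
    print(must_stop_for_bus(bus, wrong_map))  # False
```

Splitting the inputs this way mirrors the investigative question: the same missed stop can trace back to any one of the three layers, so each needs its own evidence.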

From a regulatory perspective, these failures are especially serious because they affect protected populations (children) in specific, predictable scenarios (school zones). Regulators and safety advocates expect autonomous vehicles to handle these scenarios perfectly. Missing them suggests systematic problems.

Waymo has likely already begun investigating these failures internally. The company probably knows the root causes and is developing fixes. But the NTSB investigation will pressure the company to disclose its findings publicly and act on any safety recommendations. The NTSB cannot mandate fixes itself, but NHTSA can order recalls.

The fact that these failures occurred in more than one city (including Atlanta and Austin) suggests they might reflect system-wide design issues rather than isolated problems, which is more concerning from a safety perspective. If the failures were specific to one vehicle or location, they'd be easier to address. If they're systematic across multiple vehicles and geographies, the fixes might be more complex.

Public Perception and Trust in Autonomous Vehicles

The Santa Monica incident occurs at a critical moment for autonomous vehicle public acceptance. Robotaxis are becoming increasingly visible on city streets. Public familiarity is growing, but so is scrutiny.

Public psychology around autonomous vehicle safety differs from psychology around traditional vehicle safety. When a human driver causes an accident, people attribute it to that individual driver's mistake or negligence. When an autonomous vehicle causes an accident, people attribute it to the technology itself and the company deploying it.

This difference in attribution shapes public trust. A single serious autonomous vehicle accident can undermine public confidence more than dozens of human-caused accidents. People see autonomous vehicle incidents as evidence that the technology isn't ready, while they see human-driven accidents as random misfortune or individual error.

The Santa Monica incident confirms some people's concerns about autonomous vehicle safety. They'll point to the child struck near a school and argue that robots shouldn't be trusted with human life. This perspective will be particularly strong among parents and educators, who see schools as spaces where the highest safety standards should apply.

Countervailing voices will emphasize that the child suffered only minor injuries, that the vehicle braked hard and detected the child quickly, and that human drivers would likely have caused more severe injury in the same scenario. But these rational arguments compete against visceral responses to an image of a child struck by a robot.

Media coverage will significantly shape public perception. If news coverage emphasizes Waymo's safety failures and brushes past the mitigating factors (hard braking, detection speed, relatively low impact speed), public trust will decline. If coverage emphasizes that the autonomous vehicle responded better than a human driver likely would have, trust might be maintained.

Waymo's public communication in the aftermath matters. The company's blog post about the incident was relatively straightforward and honest about what happened. But the company will need to continue emphasizing its commitment to safety, transparency about investigations, and concrete improvements resulting from this incident.

Other companies deploying autonomous vehicles will watch Waymo closely. If Waymo handles the incident well—cooperating with investigations, implementing improvements, maintaining public confidence—other companies will try to follow a similar playbook. If the company struggles with trust or if regulatory responses are severe, other companies will become more cautious about deployment expansion.

Technical Deep Dive: Braking Performance and Physics

Waymo's account emphasizes that the vehicle braked "hard" from 17 mph to impact speed of approximately 6 mph. Understanding the physics of this braking event helps assess whether the vehicle performed adequately.

Braking performance depends on several factors: brake system capability, tire friction, vehicle weight distribution, and surface conditions. A typical modern vehicle can achieve deceleration of 0.8 to 0.9 g (roughly 25-30 feet per second squared) under hard braking on dry pavement.

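Assuming the Waymo vehicle achieved 0.8 g deceleration, the braking distance follows from the constant-deceleration relation:

$$v_f^2 = v_i^2 - 2ad \quad\Longrightarrow\quad d = \frac{v_i^2 - v_f^2}{2a}$$

Where:

- $v_i$ = initial velocity (17 mph ≈ 24.9 feet/second)

- $v_f$ = final velocity (6 mph ≈ 8.8 feet/second)

- $a$ = deceleration (0.8 g ≈ 25.8 feet/second²)

- $d$ = braking distance

Solving:

$$d = \frac{(24.9)^2 - (8.8)^2}{2 \times 25.8} = \frac{620.0 - 77.4}{51.6} \approx 10.5 \text{ feet}$$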

So the vehicle braked over approximately 10-11 feet before impact. This happened after detecting the child "as soon as they began to emerge from behind the stopped vehicle."

The critical questions are how far away the vehicle was when the child started emerging and how quickly the child reached its path. If the child took one step to get from behind the parked vehicle into the roadway, they might have covered 2-3 feet. A full stop from 17 mph at 0.8 g takes about 12 feet (24.9² ÷ 51.6 ≈ 12.0), not counting any delay between detection and full braking. So if the vehicle was about 15 feet away when the child started emerging, it should have been able to stop, or at least reduce its impact speed substantially, depending on how quickly braking began.

But if the vehicle was only 5-10 feet away when the child began emerging, it would not have had enough distance to stop despite hard braking: the child would have been in its path before braking could bring the vehicle to a halt.
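The distance argument can be checked with the same constant-deceleration relation used in the braking calculation. The Python sketch below computes the speed at which a car starting at 17 mph reaches a point some distance ahead. It assumes 0.8 g braking and a delay between detection and full braking. Waymo has not published its system latency, so the 0 and 0.2 second delays in the example are placeholder values. With no delay, 10.5 feet of braking yields an impact speed of about 6 mph, consistent with the estimate above. Every tenth of a second of delay adds roughly 2.5 feet of travel at full speed.

```python
import math

G_FT_PER_S2 = 32.174          # standard gravity, ft/s^2
FPS_PER_MPH = 5280 / 3600     # 1 mph = about 1.467 ft/s


def impact_speed_mph(speed_mph: float, distance_ft: float,
                     decel_g: float = 0.8, latency_s: float = 0.0) -> float:
    """Speed (mph) at which the vehicle reaches a point distance_ft ahead.

    The vehicle holds its speed for latency_s (hypothetical detection plus
    brake-actuation delay), then brakes at a constant decel_g. Returns 0.0
    if it stops before reaching the point.
    """
    v0 = speed_mph * FPS_PER_MPH
    a = decel_g * G_FT_PER_S2
    braking_ft = distance_ft - v0 * latency_s  # distance left once braking starts
    if braking_ft <= 0:
        return speed_mph                       # point reached before braking begins
    v_sq = v0 ** 2 - 2 * a * braking_ft        # v_f^2 = v_i^2 - 2ad
    return math.sqrt(v_sq) / FPS_PER_MPH if v_sq > 0 else 0.0


if __name__ == "__main__":
    # Hypothetical latencies and distances; not published Waymo figures.
    for latency in (0.0, 0.2):
        for d in (5, 10, 15, 20):
            v = impact_speed_mph(17, d, latency_s=latency)
            print(f"latency {latency:.1f} s, {d:>2} ft -> {v:4.1f} mph")
```

The sweep shows why the unknown distance matters so much: a few feet of difference in where the child appeared moves the outcome from a full stop to an impact near the original speed.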

This is the key factual uncertainty: at what distance did the child become visible to the vehicle's sensors, and how quickly did they enter the vehicle's path? Waymo's accident report should contain precise sensor data answering these questions. NHTSA investigators will focus intently on this temporal and spatial information.

The fact that impact occurred at 6 mph rather than 17 mph is significant and positive. Impact speed directly affects injury severity: kinetic energy scales with the square of speed, so a 17 mph impact carries roughly eight times the energy of a 6 mph impact ((17/6)² ≈ 8). At 6 mph, serious injury is unlikely; at 17 mph, the risk of serious injury is considerably higher. The vehicle's braking likely made the difference between minor and serious injury.

Liability Frameworks: Who Pays and How?

The Santa Monica incident will eventually result in liability determination and insurance settlement. Understanding the liability frameworks helps explain how responsibility is assigned and who ultimately bears financial cost.

In California, strict liability applies to product manufacturers. This means if a product defect causes injury, the manufacturer is liable regardless of negligence. A strict liability framework doesn't require proving that Waymo was careless; only that the product had a defect that caused injury.

Waymo could argue that the accident didn't result from a product defect but from the child's sudden unexpected behavior. The vehicle performed as designed—detected the child and braked hard. In this defense, the accident is blamed on the child's action, not the vehicle's failure.

Alternatively, regulators might argue that the vehicle's design should have included additional safety features for school zones, and the absence of these features constitutes a defect. Or they might argue that designing a vehicle to minimize speed in high-risk scenarios is a reasonable precaution that Waymo's design failed to include.

Waymo has comprehensive liability insurance. The company's insurance likely covers incidents involving injury resulting from operation of the robotaxi, subject to various exclusions and limitations. The insurance company will conduct its own investigation and determine coverage scope.

If the insurance company denies coverage based on a policy exclusion, Waymo bears the full cost. If coverage is accepted, insurance pays up to policy limits. Either way, the financial impact on Waymo is significant—both direct costs (medical expenses, settlement, litigation) and indirect costs (increased insurance premiums, regulatory penalties, reputation damage).

For the child and family, liability determination matters for compensation. If Waymo is found liable, insurance should cover medical expenses and other damages. If liability is unclear or contested, the family might need to pursue litigation, which is costly and time-consuming.

The case also has industry-wide implications. If Waymo is found liable and required to pay substantial damages, it establishes precedent that autonomous vehicle companies are responsible for accidents involving their vehicles. This increases the cost of operating autonomous vehicles, potentially affecting business models and deployment timelines.

Conversely, if Waymo successfully argues that the accident resulted from unpredictable child behavior rather than vehicle failure, it suggests companies have limited liability for accidents in high-risk environments like school zones. This would reduce costs but might face public opposition.

The likeliest outcome lies somewhere in between: Waymo bears some responsibility, but the child's unexpected behavior is also factored into the liability determination. This shared-responsibility approach is common in vehicle accident cases.

Regulatory Precedent and Future Standards

The Santa Monica incident and school bus investigations establish precedent that will shape autonomous vehicle regulation for years. Regulators will cite these incidents in developing future standards and approving or restricting autonomous vehicle operations.

NHTSA is developing comprehensive autonomous vehicle regulations. Currently, NHTSA treats autonomous vehicles as vehicles subject to existing automotive safety regulations. In effect, the agency has taken a technology-neutral approach: autonomous vehicles must meet the existing Federal Motor Vehicle Safety Standards, and NHTSA has not created special standards for them.

However, the Santa Monica incident might shift this approach. Regulators might determine that existing standards are insufficient for autonomous vehicles and develop new standards specifically addressing autonomous vehicle safety issues.

Potential new regulatory approaches:

Specific school zone requirements: Regulations might mandate that autonomous vehicles operate at reduced speeds in school zones, require enhanced detection systems for children, or restrict operation during school hours.

Pedestrian detection standards: Regulators might establish minimum requirements for pedestrian detection speed, accuracy, and reaction time. Companies would need to demonstrate that their systems meet these standards.

Incident reporting and transparency: Regulations might require autonomous vehicle companies to report all incidents (not just serious ones) to regulators, and make certain information publicly available. This increases transparency and helps regulators identify patterns.

Regular safety certification: Rather than one-time approval, autonomous vehicles might require periodic recertification. Companies would need to demonstrate ongoing safety compliance through testing and real-world incident data.

Insurance requirements: Regulators might establish minimum insurance requirements for autonomous vehicles, ensuring that adequate coverage exists to compensate accident victims.

Comparative testing: Regulators might require autonomous vehicle companies to conduct comparative testing against human driver performance in specific scenarios. This would help determine whether autonomous vehicles meet or exceed human safety performance.

These regulatory approaches would increase costs and complexity for autonomous vehicle companies. But they would also increase public confidence and potentially accelerate deployment by providing regulatory clarity.

Europe is developing more aggressive autonomous vehicle regulations. The EU has established specific requirements for autonomous vehicles, including detailed technical standards and approval processes. The Santa Monica incident and school bus investigations might push U.S. regulators to adopt similarly detailed requirements.

The Broader Autonomous Vehicle Safety Landscape

Waymo's incidents don't occur in isolation. Other autonomous vehicle companies face their own safety challenges. Cruise, owned by General Motors, had a major incident in October 2023, when one of its robotaxis struck and dragged a pedestrian who had first been hit by a human-driven car. Tesla faces ongoing questions about Autopilot's safety. Smaller companies like Nuro, Motional, and others are expanding operations and will inevitably face incidents.

The industry as a whole is at an inflection point. Deployment is accelerating, but safety concerns are intensifying. Public attention to incidents is increasing. Regulatory scrutiny is sharpening. Companies that handle safety challenges effectively will gain competitive advantage. Companies that struggle will face operational restrictions or public backlash.

Waymo has advantages in this competitive landscape. The company has invested heavily in safety research, deployed extensive real-world testing, and developed sophisticated perception and decision-making systems. But incidents like Santa Monica demonstrate that perfect safety remains elusive. The company will need to prove it can learn from incidents, implement improvements, and maintain safety performance as operations scale.

The Santa Monica incident ultimately matters because it tests whether autonomous vehicles can achieve the safety performance that the public expects and that regulators will demand. A child struck near a school is the type of scenario that can crystallize public opinion and regulatory response. How Waymo and regulators handle this incident will shape the trajectory of autonomous vehicle deployment for years to come.

TL;DR

- The Incident: A Waymo robotaxi struck a child near a Santa Monica elementary school on January 23, 2025, after braking hard from 17 mph to 6 mph impact speed. The child sustained minor injuries. NHTSA opened an investigation.

- Context Matters: Waymo is simultaneously under investigation for school bus violations (20+ incidents where robotaxis illegally passed stopped school buses), creating a narrative of systematic safety issues.

- Detection and Response: The vehicle detected the child and braked hard, reducing impact speed from 17 mph to 6 mph. Whether this response was adequate depends on how quickly the child appeared and how far away the vehicle was when detection occurred.

- Sensor Limitations: The child emerged from behind a parked vehicle (occlusion), a scenario that challenges autonomous vehicle perception systems and tests whether the vehicle maintains sufficient caution near potential hazards.

- Regulatory Impact: The incident will likely trigger increased NHTSA oversight, potential operational restrictions on Waymo, and accelerated development of specific autonomous vehicle safety standards for school zones.

- Industry Implications: How Waymo handles this incident and whether regulators impose strict requirements will shape autonomous vehicle deployment timelines and strategies industry-wide.

FAQ

What exactly happened in the Santa Monica Waymo incident?

On January 23, 2025, a Waymo robotaxi struck a child near an elementary school in Santa Monica. According to Waymo's account, the child suddenly emerged from behind a parked SUV into the vehicle's path. The vehicle detected the child and braked hard from 17 mph, reducing impact speed to 6 mph. The child sustained minor injuries. Waymo called 911, remained at the scene, and cooperated with law enforcement.

How fast was the Waymo vehicle traveling when it hit the child?

The vehicle was traveling at approximately 17 mph when the child emerged into its path. The vehicle then braked hard, reducing impact speed to approximately 6 mph. This reduction in speed, from 17 mph to 6 mph, is significant because impact severity increases dramatically with speed. At 6 mph, serious injury is unlikely. At 17 mph, the child would likely have experienced more severe injuries. The vehicle's braking effectiveness meant the difference between minor and serious injury.

Is NHTSA investigating the incident?

Yes, the National Highway Traffic Safety Administration opened a formal investigation into the incident immediately after Waymo reported it. NHTSA investigations follow a structured process: gathering data from the manufacturer, examining vehicle logs and sensor recordings, interviewing all parties involved, and assessing whether the incident reveals a safety defect. The investigation timeline could span 6-36 months depending on complexity. Findings could result in recalls, operational restrictions, or other regulatory actions.

What are the school bus violations Waymo is also being investigated for?

Waymo robotaxis have illegally passed stopped school buses in at least 20 documented incidents, primarily in Austin, Texas. School buses display stop signs and flashing red lights when loading or unloading children. State law, not federal law, governs this situation: every U.S. state requires traffic to stop for a school bus displaying its stop signals, although the required stopping distance and exceptions (such as divided highways) vary by state. Waymo's vehicles failed to stop in multiple cases. The NHTSA investigation into these violations began in October 2024. The NTSB opened a separate investigation in early 2025, treating the school bus issue as a serious safety matter affecting vulnerable populations.

How do autonomous vehicles detect pedestrians?

Autonomous vehicles use multiple sensor types: cameras (for visual detection), lidar (light-based ranging for precise distance measurement), radar (for detecting motion), and ultrasonic sensors (for close-range detection). These sensors work together to create a 360-degree environmental view with continuous scanning. When a pedestrian comes into view, the system identifies them and predicts their trajectory. Modern systems can detect pedestrians in under 100 milliseconds and theoretically react faster than human drivers. However, challenges remain with occlusions (blind spots created by parked vehicles) and unpredictable behavior, especially from children.
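The trajectory-prediction step can be illustrated with the simplest possible model, constant velocity. The model assumes the pedestrian keeps their current lateral speed and asks whether they will be inside the vehicle's lane when the vehicle arrives. The Python sketch below is a hypothetical illustration, not Waymo's method, and real systems use far richer prediction models. The coordinate convention, the 3.5-foot corridor half-width, and the example walking speed are all assumptions.

```python
import math
from typing import Optional, Tuple


def corridor_interval(y_ft: float, vy_fps: float,
                      half_width_ft: float) -> Optional[Tuple[float, float]]:
    """Time window (t_in, t_out) in seconds when |y(t)| <= half_width_ft.

    Constant-velocity model: y(t) = y_ft + vy_fps * t. Returns None if the
    pedestrian is never inside the lane corridor at any t >= 0.
    """
    if vy_fps == 0:
        return (0.0, math.inf) if abs(y_ft) <= half_width_ft else None
    t1 = (-half_width_ft - y_ft) / vy_fps
    t2 = (half_width_ft - y_ft) / vy_fps
    t_in, t_out = min(t1, t2), max(t1, t2)
    if t_out < 0:
        return None
    return (max(t_in, 0.0), t_out)


def predicts_conflict(x_ft: float, y_ft: float, vy_fps: float,
                      ego_speed_fps: float, half_width_ft: float = 3.5) -> bool:
    """True if the pedestrian is predicted to be in the lane when the car arrives.

    x_ft: distance ahead of the vehicle's front bumper.
    y_ft: lateral offset from the vehicle's centerline (positive = right).
    vy_fps: pedestrian's lateral velocity (negative = moving left).
    The vehicle front is treated as a point moving at constant speed.
    """
    window = corridor_interval(y_ft, vy_fps, half_width_ft)
    if window is None or ego_speed_fps <= 0:
        return False
    t_arrive = x_ft / ego_speed_fps
    return window[0] <= t_arrive <= window[1]


if __name__ == "__main__":
    ego = 17 * 5280 / 3600  # 17 mph in ft/s
    # Hypothetical child 20 ft ahead, 6 ft right of center, stepping left at 4 ft/s.
    print(predicts_conflict(20, 6, -4, ego))  # True
    # The same child walking away from the lane.
    print(predicts_conflict(20, 6, 4, ego))   # False
```

The occlusion problem is visible in this framing. The check can only run once the pedestrian has been observed, so a child hidden behind an SUV contributes no track until they step out.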

What speed should autonomous vehicles use in school zones?

Currently, Waymo robotaxis operate at speeds consistent with local traffic laws, typically 25-35 mph on residential streets. But the Santa Monica incident raises questions about whether autonomous vehicles should use lower speeds in school zones. Advocates suggest 10-15 mph in school zones to shorten stopping distance and reduce injury severity if collisions occur. Waymo hasn't publicly committed to lower school zone speeds, though regulatory pressure might force the issue. The trade-off is safety versus operational efficiency: lower speeds shorten stopping distance but lengthen trips and reduce value for passengers.
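Braking distance grows with the square of speed, so modest speed reductions shorten stopping distance disproportionately. The Python sketch below tabulates total stopping distance at the speeds mentioned above, counting both the distance covered during a reaction delay and the braking distance. It assumes the 0.8 g deceleration used in the braking analysis and a hypothetical 0.5-second delay before full braking. Neither figure is a published Waymo specification.

```python
G_FT_PER_S2 = 32.174          # standard gravity, ft/s^2
FPS_PER_MPH = 5280 / 3600     # 1 mph in ft/s


def stopping_distance_ft(speed_mph: float, delay_s: float = 0.5,
                         decel_g: float = 0.8) -> float:
    """Distance to a full stop: travel during the delay plus v^2 / (2a)."""
    v = speed_mph * FPS_PER_MPH
    a = decel_g * G_FT_PER_S2
    return v * delay_s + v ** 2 / (2 * a)


if __name__ == "__main__":
    # Speeds from the discussion above; delay and deceleration are assumptions.
    for mph in (10, 15, 25, 35):
        print(f"{mph:>2} mph: {stopping_distance_ft(mph):5.1f} ft")
```

Under these assumptions, dropping from 25 mph to 15 mph cuts total stopping distance by more than half, from about 44 feet to about 20 feet.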

What liability does Waymo face from the incident?

Waymo's liability depends on whether the incident is determined to result from a product defect. If Waymo is found liable, the company's insurance should cover medical expenses and other damages, subject to policy terms. Waymo could argue the accident resulted from the child's sudden unexpected behavior rather than vehicle failure. Liability determination will involve expert analysis of whether the vehicle's response met reasonable standards of care. The case will likely establish precedent affecting autonomous vehicle liability frameworks industry-wide. Potential outcomes range from full Waymo liability to shared responsibility reflecting the child's unexpected action.

How will this incident affect autonomous vehicle regulation?

The incident will likely accelerate development of specific autonomous vehicle safety standards. Regulators might establish minimum requirements for school zone operation, mandatory speed reductions, enhanced child detection systems, or specific incident investigation protocols. The incident provides concrete evidence that current autonomous vehicle operations can result in child injuries, creating political pressure for regulatory action. New standards will likely address pedestrian detection speed, reaction time requirements, school zone protocols, and periodic safety certification. Compliance with these new standards will increase costs but might accelerate deployment by providing regulatory clarity.

Will Waymo's other operations be restricted following this incident?

Possibly. NHTSA has authority to restrict autonomous vehicle operations if the agency determines safety concerns warrant limits. The agency might require Waymo to submit additional safety data, accept operational restrictions (such as lower speed limits in school zones), or limit expansion pending investigation completion. However, outright bans are unlikely. More probable are incremental restrictions: specific geofencing requirements, time-of-day limitations, mandatory monitoring, or performance improvement requirements. The outcome depends on NHTSA's investigation findings and the agency's regulatory approach.

How does this incident compare to human driver performance?

Human drivers would likely perform worse in the same scenario. Human reaction time to unexpected pedestrians is commonly estimated at about 1.5 seconds. During that time at 17 mph, a vehicle would travel roughly 37 feet. The child would likely be struck at closer to the original speed, resulting in more severe injury. Waymo's vehicle detected the child quickly and braked hard, reducing impact speed significantly. From this perspective, the autonomous vehicle's response was better than a typical human driver's. However, broader statistical comparisons are complicated by differences in exposure: U.S. drivers cover trillions of miles each year, while autonomous vehicles have logged far fewer, making statistical comparison premature.
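The 37-foot figure is speed multiplied by reaction time. The calculation below assumes the commonly cited 1.5-second reaction time and the same 17 mph (about 24.9 feet/second) starting speed:

$$d_{\text{reaction}} = v_i \, t_r \approx 24.9\ \text{ft/s} \times 1.5\ \text{s} \approx 37.4\ \text{ft}$$

Under these assumptions, a driver would travel about 37 feet before even beginning to brake. That is more than three times the roughly 10-11 feet over which the Waymo vehicle is estimated to have braked. A child who appeared less than about 37 feet ahead would therefore be reached at essentially full speed.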

What improvements might Waymo make in response to this incident?

Waymo will likely implement several improvements: enhanced occlusion handling to treat parked vehicles as potential hazards and reduce speed preemptively; improved child detection systems trained on child-specific features; school zone geofencing with lower speed limits and more conservative reaction parameters; predictive hazard assessment that anticipates pedestrian emergence from hidden locations; and potentially increased sensor capability through upgraded cameras, lidar, or thermal imaging. These improvements can be implemented through software updates and, in some cases, hardware additions. The company will probably make changes proactively rather than waiting for regulatory mandates, as safety leadership is a competitive advantage.
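The first improvement, treating occlusions as potential hazards, can be framed as a speed cap. The vehicle would drive no faster than a speed from which it could still stop before the point where a hidden pedestrian might appear. The Python sketch below solves for that speed. It is a hypothetical policy, not something Waymo has announced. The 0.8 g deceleration comes from the braking analysis, and the 0.2-second delay is a placeholder assumption.

```python
import math

G_FT_PER_S2 = 32.174          # standard gravity, ft/s^2
FPS_PER_MPH = 5280 / 3600     # 1 mph in ft/s


def max_speed_near_occlusion_mph(clear_distance_ft: float,
                                 delay_s: float = 0.2,
                                 decel_g: float = 0.8) -> float:
    """Highest speed (mph) that still allows a full stop within clear_distance_ft.

    Solves v*t + v^2 / (2a) = d for the positive root:
    v = -a*t + sqrt((a*t)^2 + 2*a*d).
    """
    a = decel_g * G_FT_PER_S2
    at = a * delay_s
    v = -at + math.sqrt(at ** 2 + 2 * a * max(clear_distance_ft, 0.0))
    return v / FPS_PER_MPH


if __name__ == "__main__":
    # Hypothetical distances from the vehicle to where a pedestrian could
    # step out from behind a parked SUV.
    for d in (10, 15, 20, 30):
        print(f"{d:>2} ft of clear road: cap at "
              f"{max_speed_near_occlusion_mph(d):4.1f} mph")
```

For example, with 15 feet of clear road the cap works out to just under 16 mph. The design choice is to make speed depend on what the vehicle cannot see, not only on what it can.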

The Path Forward: What Comes Next

The immediate future holds several critical moments for Waymo and the autonomous vehicle industry. NHTSA's investigation will take months or years, but preliminary findings could come within the next several months. The NTSB's school bus investigation might reach conclusions faster, given the pattern of incidents across multiple vehicles and locations.

Regulatory responses could range from modest (requiring specific documentation or additional testing) to aggressive (imposing speed limits or operational restrictions). The political environment matters. If the incident becomes a flashpoint for autonomous vehicle skepticism, regulators might face pressure to impose stricter requirements. If it's handled as a technical issue with engineering solutions, requirements might be more moderate.

Waymo's response matters tremendously. The company's public communications, transparency with regulators, speed of implementing improvements, and willingness to accept restrictions will all shape public perception and regulatory treatment. Companies that appear to prioritize safety and transparency fare better in the aftermath of incidents than companies that appear defensive or slow to act.

For the broader autonomous vehicle industry, this incident is a wake-up call. The deployment timeline is accelerating, but safety challenges remain serious. Companies need to treat incidents as opportunities to improve, not threats to suppress. Transparency, rapid response, and genuine commitment to safety are not just ethical obligations; they're business imperatives.

The Santa Monica incident won't stop autonomous vehicle deployment. But it will force the industry to mature. Companies will face tougher regulatory scrutiny, higher insurance costs, and greater public skepticism. Those that navigate these challenges successfully will gain market advantage. Those that struggle will face operational restrictions or business failure.

Ultimately, autonomous vehicle safety depends on all participants—manufacturers, regulators, insurers, and the public—maintaining high standards and holding each other accountable. The Santa Monica incident tests whether these participants can meet those standards. How they respond will determine whether autonomous vehicles become a safe, trusted transportation option or remain a technology that struggles with public acceptance.

Key Takeaways

- Waymo robotaxi struck a child near a Santa Monica elementary school on January 23, 2025, after braking hard from 17 mph to 6 mph impact speed, causing minor injuries.

- NHTSA investigation opened immediately; Waymo also faces separate NTSB investigation into 20+ school bus passing violations, creating narrative of systematic safety issues.

- Vehicle's hard braking and rapid detection likely prevented more severe injury—impact speed reduction from 17 mph to 6 mph significantly affects injury severity probability.

- Regulatory response could include mandatory school zone speed reductions, enhanced child detection requirements, or operational restrictions, affecting autonomous vehicle deployment timelines industry-wide.

- Incident establishes precedent that autonomous vehicles will face intense scrutiny near schools and vulnerable populations, likely accelerating development of specific safety standards.
