How Waymo Is Using Genie 3 to Master Impossible Driving Scenarios

Imagine your autonomous vehicle encountering an elephant blocking the highway. Or navigating snow-covered streets in San Francisco. Or safely driving through a thunderstorm you've never seen before.

These aren't hypothetical problems anymore. They're the exact scenarios Waymo is preparing for with a new tool called the Waymo World Model, built on top of Google DeepMind's Genie 3 technology. And this shift represents something fundamental: the autonomous vehicle industry is moving away from relying purely on real-world training data and toward synthetically generated data.

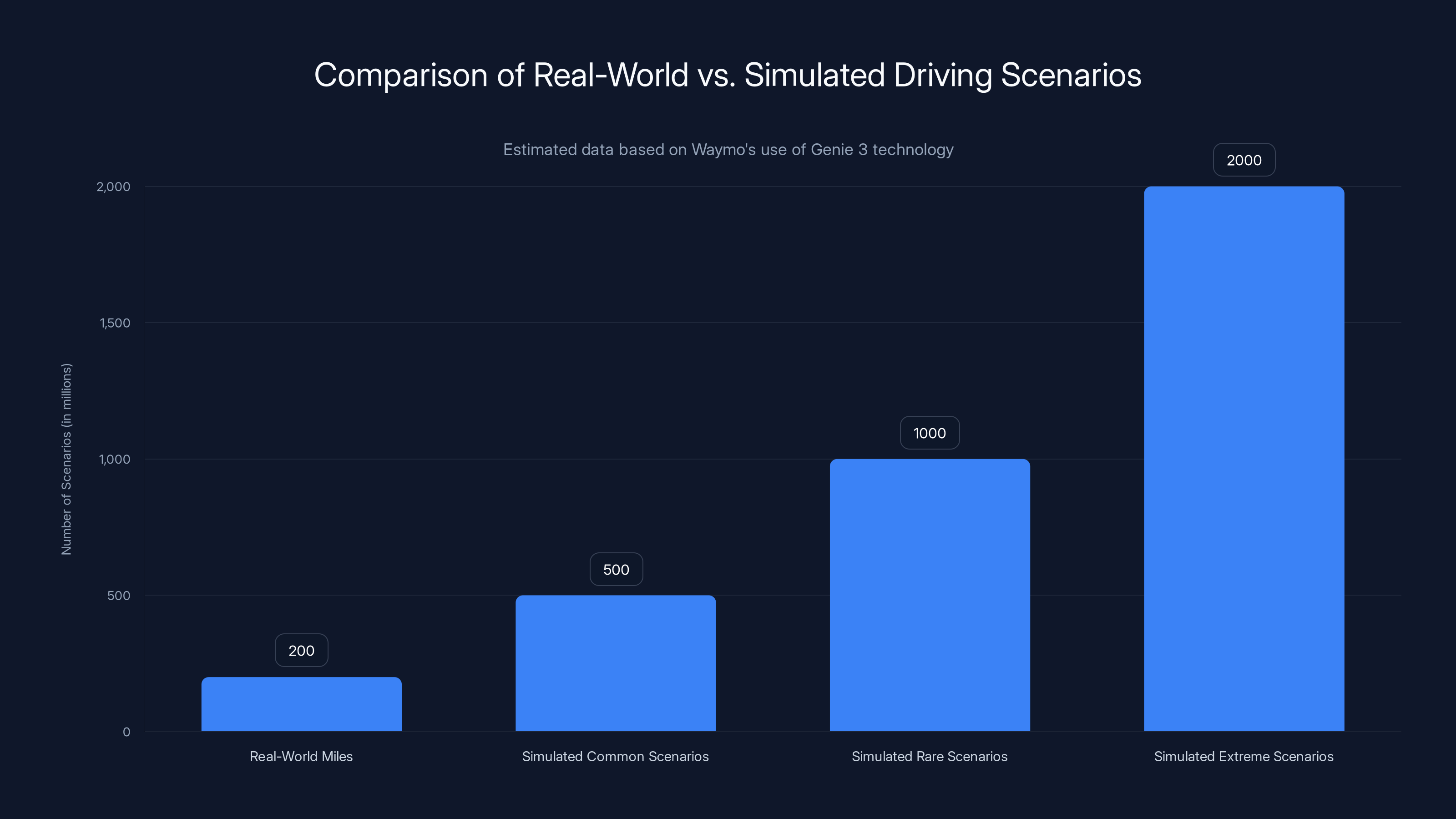

Waymo has driven over 200 million miles on real roads. But here's the thing that keeps autonomous driving engineers up at night: the rarest, most dangerous situations are statistically underrepresented in that data. A snow-covered intersection in New England happens maybe once per year for most vehicles. A pedestrian acting unpredictably happens... well, more often than we'd like. But the AI needs to see tens of thousands of variations to truly understand edge cases.

The solution? Stop waiting for rare events to happen. Instead, generate billions of simulated driving scenarios that cover everything from common conditions to events that practically never occur in the real world. That's where Genie 3 changes the game.

In this comprehensive guide, we're breaking down how Waymo is using world models to train the next generation of autonomous vehicles, what makes Genie 3 different from previous attempts, and why this technology could accelerate self-driving cars reaching your city far sooner than anyone expected.

Why Real-World Training Data Hits a Wall

Traditionally, autonomous vehicle companies relied on a straightforward approach: collect driving data, lots of it, and train AI systems to recognize patterns. Waymo's 200 million real-world miles represent an enormous dataset. But scale alone doesn't solve the rare-event problem.

Consider weather conditions. Phoenix, where Waymo started operating extensively, averages 300 sunny days per year. Boston, one of Waymo's newer markets, has snow for nearly four months annually. If your training data comes exclusively from Phoenix, your model has seen maybe 20 snow-driving events across millions of miles. But Boston drivers encounter snow regularly. That distribution mismatch creates vulnerability.

The mathematics here are unforgiving. If you want your AI to handle situation X with 99.9% competence, you typically need to train it on thousands of examples of situation X. Real-world data collection is prohibitively expensive at that scale. Waymo would need to deploy thousands of vehicles to thousands of locations across multiple years just to capture the statistical variance needed for true robustness.
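To make the scale concrete, here is a back-of-the-envelope sketch. The event rate of one occurrence per 10 million miles is a purely hypothetical figure chosen for illustration, not a Waymo statistic; only the 200 million mile total comes from the article.

```python
def miles_needed(target_examples: int, miles_per_event: int) -> int:
    """Expected miles of driving needed to observe `target_examples` events."""
    if target_examples < 0 or miles_per_event <= 0:
        raise ValueError("target must be >= 0 and miles_per_event must be > 0")
    return target_examples * miles_per_event


def expected_events(fleet_miles: int, miles_per_event: int) -> float:
    """Expected number of events observed over `fleet_miles` of driving."""
    if miles_per_event <= 0:
        raise ValueError("miles_per_event must be > 0")
    return fleet_miles / miles_per_event


# HYPOTHETICAL rate: one qualifying rare event every 10 million miles.
MILES_PER_EVENT = 10_000_000
FLEET_MILES = 200_000_000  # real-world total cited in the article

seen = expected_events(FLEET_MILES, MILES_PER_EVENT)
needed = miles_needed(5_000, MILES_PER_EVENT)
print(f"Expected events in fleet data: {seen:.0f}")
print(f"Miles needed for 5,000 examples: {needed:,}")
```

Under that assumed rate, 200 million miles would yield about 20 examples, while collecting 5,000 examples would take 50 billion miles. The exact numbers are illustrative, but the gap between them is the point.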

There's another problem: truly dangerous scenarios are rare because human drivers and existing safety systems avoid them. You won't have much training data on what happens when a vehicle's brakes partially fail, because modern vehicles are engineered to prevent that failure. You won't see extensive data on multi-vehicle collisions, because traffic laws and infrastructure are designed to prevent them. Yet autonomous vehicles still need to respond correctly if they occur.

This is why synthetic data generation has become essential. You can't wait for nature to provide every edge case. You need to create them.

Understanding World Models: The New Foundation of AV Training

Before diving into Genie 3 specifically, let's establish what world models actually are, because the term gets thrown around loosely.

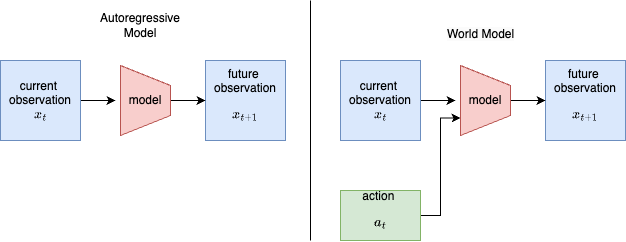

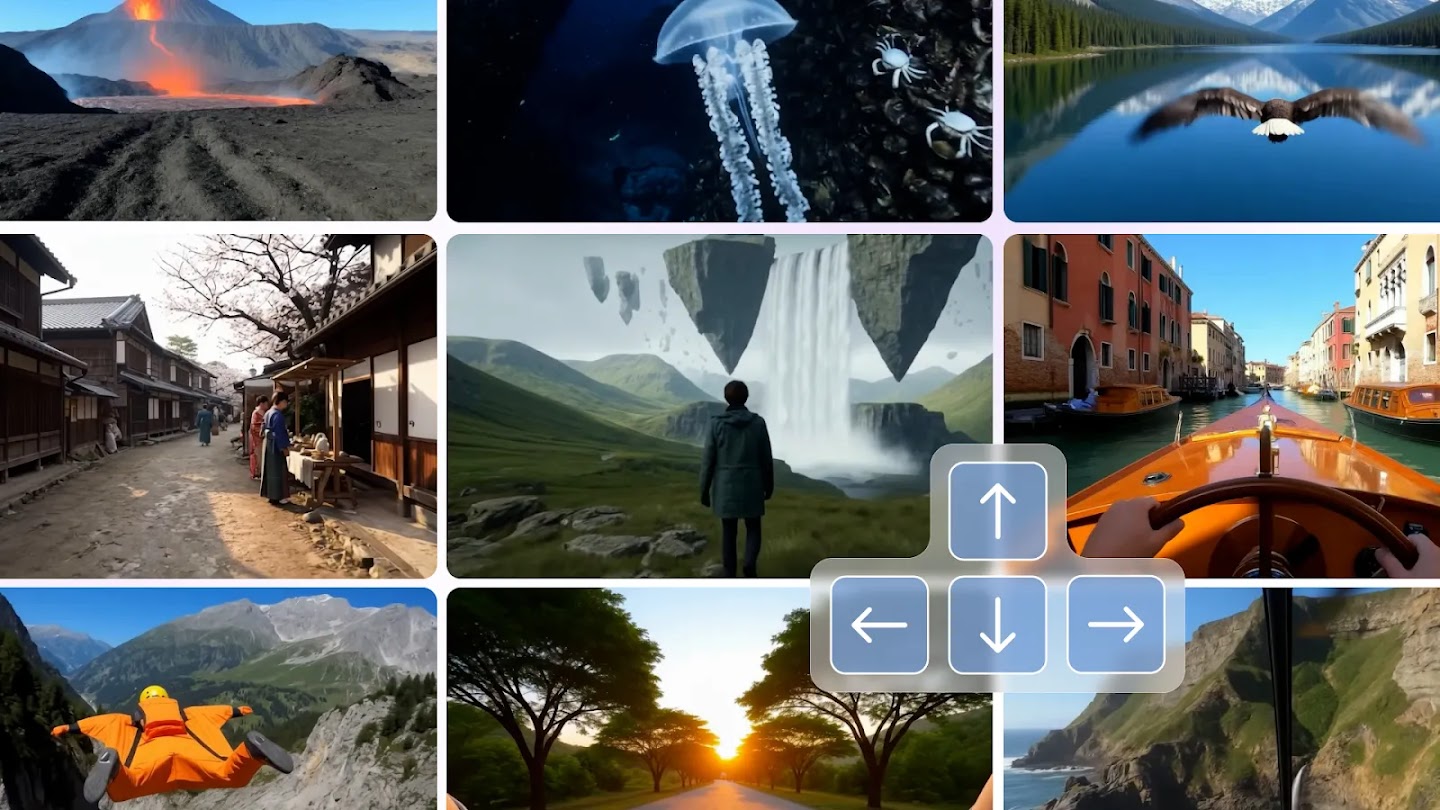

A world model is an AI system trained to understand how the physical world works based on visual data. Feed it a video of a scene, and it can predict what the next frames will look like. Feed it instructions like "move the camera left" or "turn the car right," and it can generate realistic video showing what would be visible from that new viewpoint.

The critical insight: world models don't build explicit 3D maps or physics engines. Instead, they learn statistical patterns in video data and generate new video frames that feel authentic to a viewer. They're autoregressive models, meaning they predict the next frame based on previous frames, then use that predicted frame to predict the next one, and so on.
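The autoregressive loop can be sketched in a few lines. This is a toy illustration only: `Frame` is a short list of numbers standing in for an image, and `toy_predictor` is a hypothetical stand-in that simply continues linear motion. A real world model would be a large neural network, and nothing here reflects Genie 3's actual interface.

```python
from typing import Callable, List, Sequence

Frame = List[float]  # stand-in for an image; a real frame would be a pixel array


def rollout(
    context: Sequence[Frame],
    predict_next: Callable[[Sequence[Frame]], Frame],
    steps: int,
    window: int = 4,
) -> List[Frame]:
    """Generate `steps` frames, feeding each prediction back in as input."""
    frames = list(context)
    for _ in range(steps):
        # The model only sees the most recent `window` frames,
        # including frames it generated itself on earlier iterations.
        frames.append(predict_next(frames[-window:]))
    return frames[len(context):]


def toy_predictor(recent: Sequence[Frame]) -> Frame:
    """Hypothetical predictor: extrapolate the change between the last two frames."""
    last, prev = recent[-1], recent[-2]
    return [2 * a - b for a, b in zip(last, prev)]


generated = rollout([[0.0, 0.0], [1.0, 0.5]], toy_predictor, steps=3)
print(generated)
```

Because every generated frame becomes input for the next prediction, any error made early is carried forward, which is one way to think about the drift problems described later in this section.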

This might sound like a parlor trick, but the implications are profound. If a system can generate photorealistic video of any driving scenario you describe, you've essentially created a synthetic data generation engine with infinite capacity.

Previous world models had critical limitations. Early systems would generate video reasonably well for 5 to 10 seconds, but then quality would degrade sharply. Long-term consistency was terrible. If the AI generated a car in frame one and you looked away for a few seconds, that car might have inexplicably changed shape or color by the time you looked back. It's as if the model forgot what it had generated a moment ago.

This "short-term memory" problem made world models useless for most practical applications. You couldn't generate a coherent 30-second clip of highway driving. The world would drift and contradict itself.

The Long-Horizon Memory Breakthrough

Genie 3 solves this with what Google DeepMind calls long-horizon memory. The model maintains awareness of objects and details for several minutes of generated video, not just a few seconds. This is a technical achievement that required entirely new architectures and training approaches.

Why does this matter for autonomous driving? Because now Waymo can generate coherent driving scenarios that last long enough to be useful. A five-minute clip of highway driving. A fifteen-minute simulation of navigating a city. These generated scenarios maintain visual consistency and physical plausibility throughout.

Long-horizon memory also means the generated world responds consistently. If Genie 3 generates a specific vehicle in the scene, that vehicle maintains its appearance, size, and behavior across the entire generated sequence. If it generates road markings, they remain consistent. If it places a pedestrian at a crosswalk, that pedestrian's position and movements follow natural physics.

For training autonomous vehicles, this consistency is essential. The AI needs to see driving scenarios where the world behaves according to consistent rules, not hallucinations that change moment-to-moment.

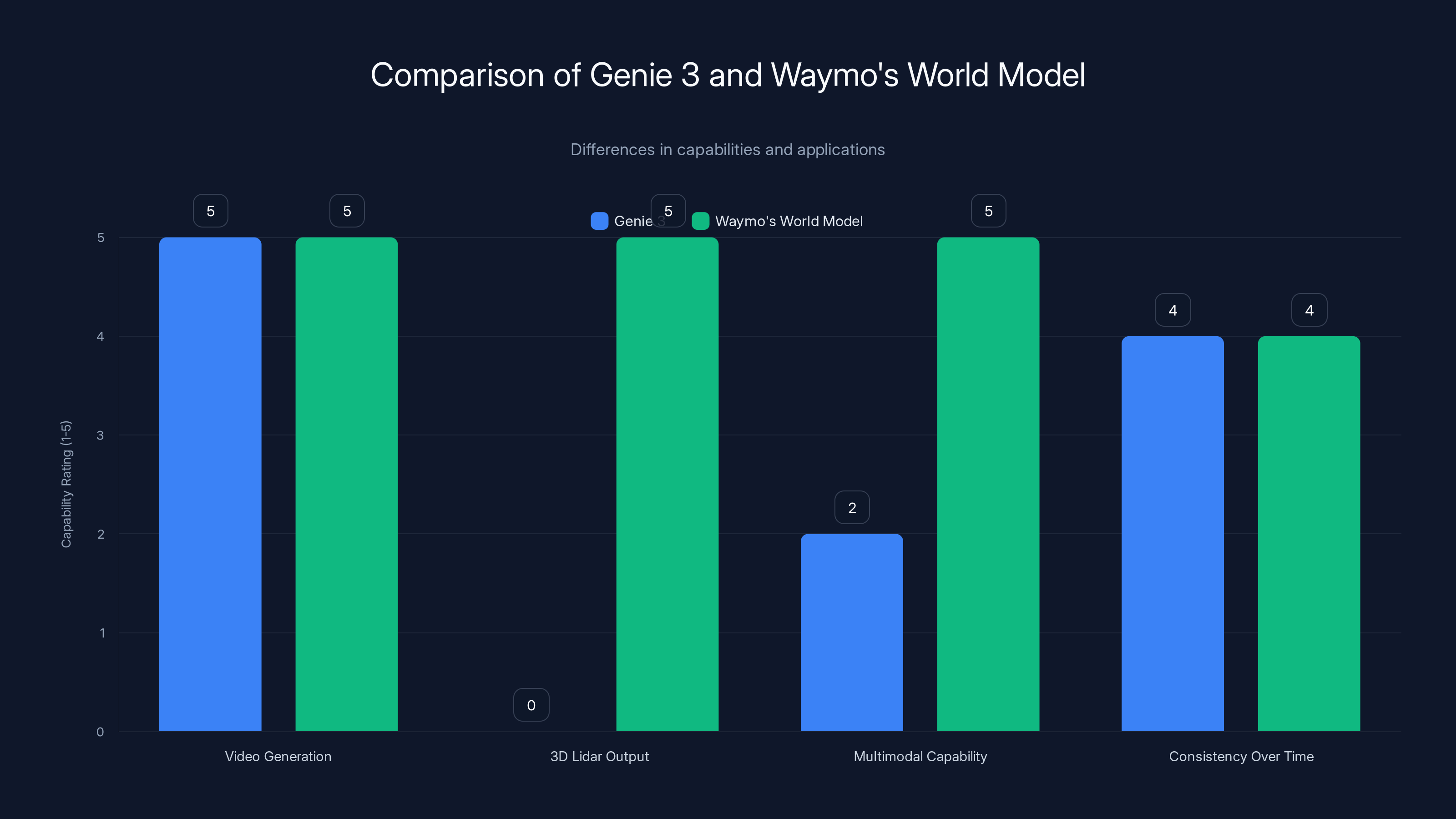

Waymo's World Model extends Genie 3 by adding 3D lidar output and enhanced multimodal capability, crucial for autonomous vehicles. Estimated data based on described features.

Waymo's Specialized Implementation: The World Model Architecture

Here's where the story gets more interesting. Waymo didn't just plug dashcam footage into Genie 3 and call it a day. That wouldn't work because Genie 3, in its standard form, was trained primarily on internet video data. It's optimized for general visual coherence, not the specific sensor requirements of autonomous vehicles.

Waymo vehicles don't just have cameras. They have lidar sensors that create 3D point clouds of the environment. They have radar. They have IMU sensors measuring acceleration and rotation. This multimodal sensor data is critical for autonomous driving because it provides depth information and can detect objects that cameras alone miss, for example in darkness or glare.

Standard Genie 3 generates 2D video. That's insufficient for training Waymo's autonomous systems.

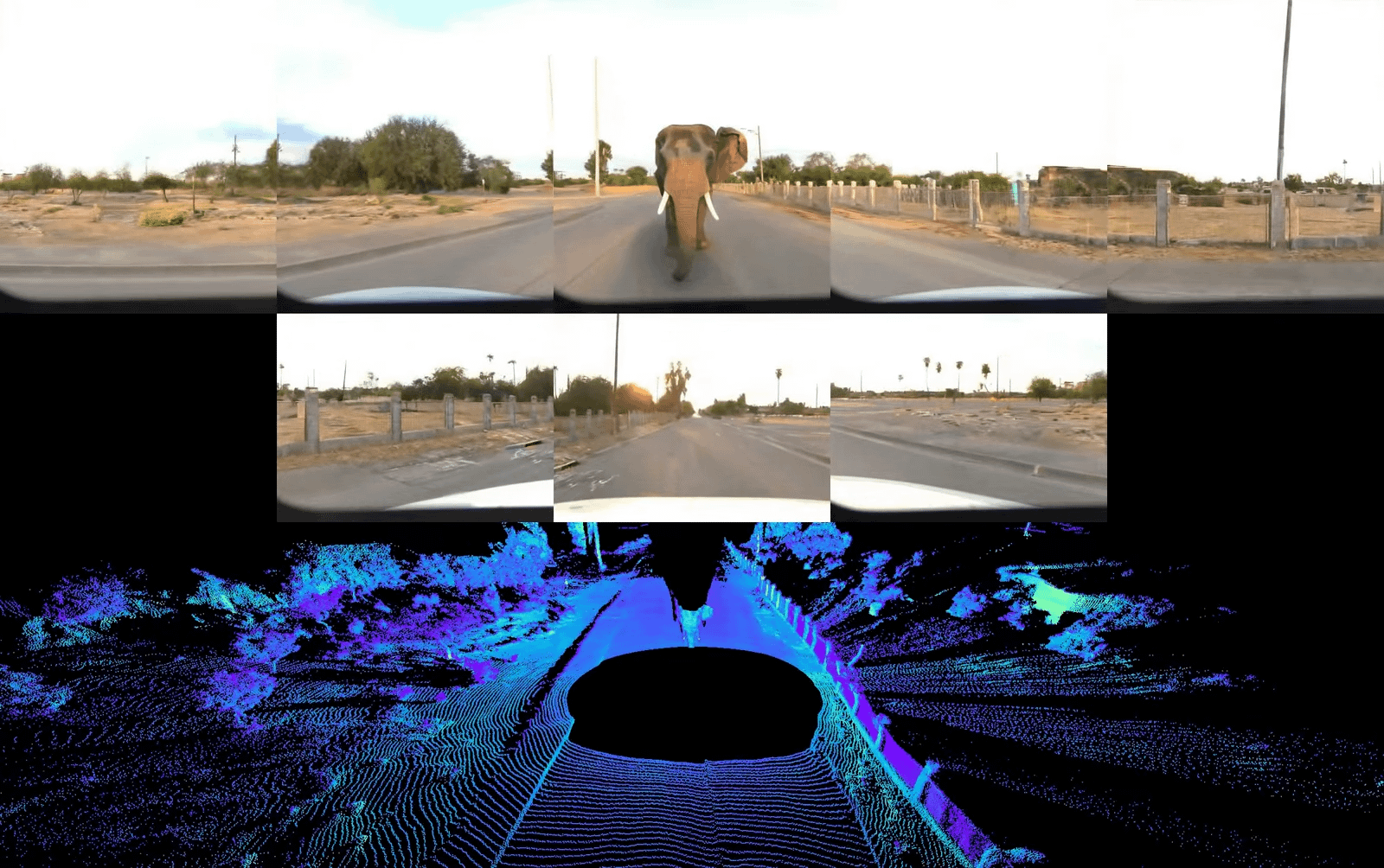

So Waymo and Google DeepMind undertook specialized post-training to make Genie 3 generate both 2D video and matching 3D lidar outputs. This is technically non-trivial. The model had to learn to generate realistic video frames while simultaneously generating corresponding lidar point clouds that align spatially and temporally with the video. The lidar output must show consistent depth information, occlusion handling, and object geometry that matches what the video shows.

The trained Waymo World Model can take a video sequence and, through a single forward pass, generate photorealistic dashcam footage paired with realistic lidar readings. This is what they call the multimodal world model.
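One way to picture the temporal side of that alignment is a nearest-timestamp pairing check between camera frames and lidar sweeps. The sketch below is a generic illustration, not Waymo's data format: the `CameraFrame` and `LidarSweep` types, the millisecond timestamps, and the 50 ms tolerance are all assumptions made for the example.

```python
from dataclasses import dataclass
from typing import List, Sequence, Tuple


@dataclass(frozen=True)
class CameraFrame:
    t_ms: int  # capture time in milliseconds (hypothetical field)


@dataclass(frozen=True)
class LidarSweep:
    t_ms: int        # sweep time in milliseconds (hypothetical field)
    num_points: int  # size of the point cloud


def pair_by_timestamp(
    frames: Sequence[CameraFrame],
    sweeps: Sequence[LidarSweep],
    tolerance_ms: int = 50,
) -> List[Tuple[CameraFrame, LidarSweep]]:
    """Pair each frame with its nearest sweep, dropping frames with no sweep in tolerance."""
    pairs = []
    for frame in frames:
        nearest = min(sweeps, key=lambda s: abs(s.t_ms - frame.t_ms), default=None)
        if nearest is not None and abs(nearest.t_ms - frame.t_ms) <= tolerance_ms:
            pairs.append((frame, nearest))
    return pairs


frames = [CameraFrame(0), CameraFrame(100), CameraFrame(200)]
sweeps = [LidarSweep(10, 120_000), LidarSweep(95, 118_500), LidarSweep(400, 121_000)]
pairs = pair_by_timestamp(frames, sweeps)
print([(f.t_ms, s.t_ms) for f, s in pairs])
```

A generated sample whose video and lidar streams fail a check like this would not be usable as a multimodal training example, however realistic each stream looks on its own.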

Driving Action Control: Prompt-Based Scenario Manipulation

Once Waymo has this world model, they unlock a capability they call driving action control. This is where the real power emerges.

Take a video of a real drive through downtown Phoenix. The video shows a specific route, specific weather, specific traffic. Now, instead of just replaying that video, use prompts to change what happens. "Make it snowy." "Shift the vehicle to take a left turn at the next intersection." "Add a pedestrian crossing unexpectedly."

The Waymo World Model regenerates the footage, accounting for these changes. The video now shows what would have happened if the vehicle had taken that left turn. The lidar output changes accordingly. The weather overlay renders accurately. The background vehicles adjust their positions.

This is phenomenally useful for training. You take real driving footage and generate unlimited variations by changing route, weather, traffic patterns, or hazard conditions. You're not inventing completely synthetic scenarios from scratch. You're mutating real scenarios systematically.

The quality advantage is significant. Previous simulation methods relied on reconstructive approaches: take a real video, extract the 3D structure, build a 3D model, then render it under different conditions. These reconstructive methods struggle with fine detail and often produce artifacts. The world model approach generates video end-to-end, which tends to preserve photorealism better.

Waymo uses Genie 3 to simulate billions of scenarios, far surpassing the 200 million real-world miles driven. Estimated data shows the potential scale of simulated scenarios.

From Dashcams to World Models: Expanding Training Data Exponentially

Here's a practical scenario that illustrates the value chain: Waymo discovers publicly available dashcam footage from a city they're expanding into. The footage is high-quality but comes from regular cameras, not Waymo's multimodal sensors.

Previously, this footage was essentially unusable for training. Waymo's autonomous systems need lidar data they can learn from. Dashcam-only footage doesn't provide it.

Now, Waymo can drop that footage into the World Model. The system generates matching lidar data, inferring what the lidar would have sensed based on the visual scene. Suddenly, that external dashcam footage becomes usable training data.

Multiply this across thousands of hours of available dashcam footage from cities worldwide. The potential training dataset expands by orders of magnitude. Waymo can incorporate dashcam footage from taxi companies, delivery services, insurance companies, and the public—all converted into multimodal training data through the World Model.

This also solves a critical data scarcity problem. Certain dangerous or rare scenarios have minimal real-world dashcam footage. But they might have some. The World Model can take that scarce real footage and generate variations. A single video of a vehicle hydroplaning on wet roads becomes five hundred variations showing hydroplaning under different speeds, angles, road conditions, and traffic situations.

The economics are compelling. Rather than spending millions deploying vehicles to gather rare-event data, Waymo can capture limited real examples and extrapolate synthetically.

Hyper-Realism Meets Edge Case Synthesis

The term "hyper-realistic" appears frequently in Waymo's announcements about the World Model. What does that actually mean in practice?

First, it means the generated video doesn't look obviously fake. Early world models produced that uncanny valley quality where something feels off even if you can't immediately pinpoint why. Genie 3, through scale and training sophistication, generates footage that appears photographically real. Objects have correct occlusion. Shadows fall naturally. Reflections in windows show appropriate content. Motion blur appears where expected.

Second, it means physical consistency. If the world model generates a vehicle with specific proportions and color, that vehicle maintains those properties throughout the generated sequence. If it generates a pedestrian walking, the gait follows biomechanical rules—limbs bend naturally, weight shifts realistically.

Third, and most important for autonomous driving, it means the generated scenarios obey traffic and environmental rules that the autonomous vehicle needs to learn. Vehicles don't clip through each other. Pedestrians don't walk backwards. Rain accumulation on windshields follows physical properties.

But here's the nuance: hyper-realism doesn't mean perfect accuracy. Tests of Genie 3 show it occasionally produces subtle errors. Vehicle positions might be slightly off. Lighting might not perfectly match camera angle. These errors are increasingly rare, but they exist.

For Waymo's purposes, perfect accuracy isn't necessary. The autonomous vehicle needs to see millions of scenarios, not to see each scenario rendered perfectly. If the World Model is 95% accurate, the AI still learns massive amounts about how to navigate varied conditions. The small percentage of inaccuracies acts like natural noise in training data, potentially even improving robustness through a form of regularization.

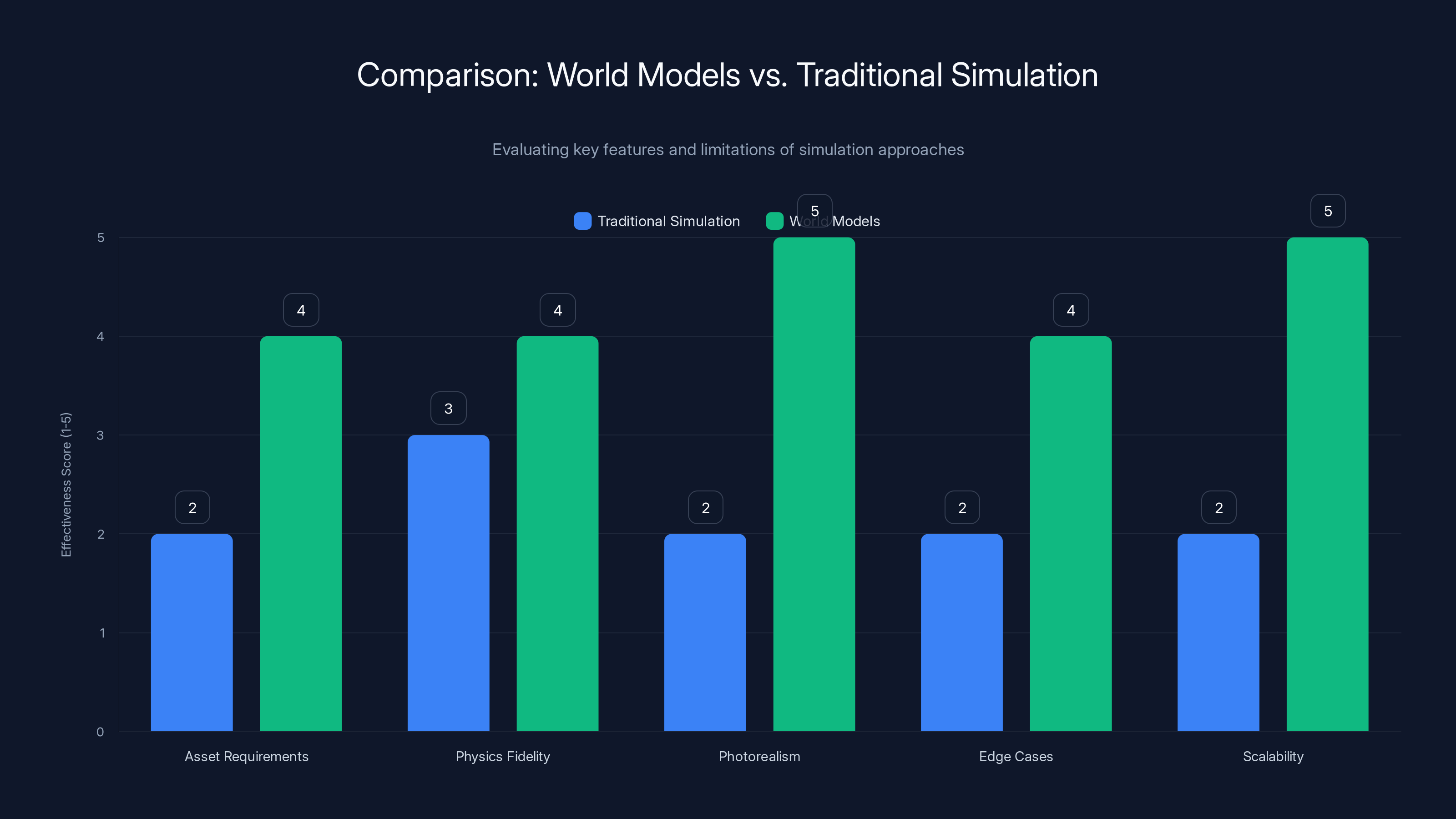

World Models outperform Traditional Simulation in scalability and photorealism, offering inherent realism and reduced manual effort. Estimated data based on qualitative analysis.

Scaling Into New Markets: Boston, Washington, D.C., and Beyond

Waymo's expansion into new cities created an acute problem that directly motivated this technology investment.

Phoenix and other early markets were strategically chosen. Consistently clear weather. Predictable road conditions. Minimal weather variability. This made initial training and validation straightforward but created an obvious blind spot: the model was optimized for perfect conditions that don't represent much of the United States.

Now Waymo is expanding into Boston and Washington, D.C. These markets have:

- Winter snow and ice that requires different braking dynamics, traction control awareness, and visibility management

- Heavy rain that affects sensor performance and requires adapted driving behavior

- Fog and low-visibility conditions that challenge perception systems

- Seasonal variation where the same road looks and behaves completely differently across the year

- Old infrastructure with degraded road markings and inconsistent signage

Without the World Model approach, Waymo would need to wait 1-2 years in each new market, collecting seasonal data, before deploying confidently. That's a massive business constraint. Each new market launch requires substantial preparation time.

With the World Model, Waymo can take its existing real-world data from Boston winters, generate hundreds of scenario variations using prompts, and train its autonomous systems on the full diversity of conditions before even deploying many vehicles.

The scenario variations Waymo can generate include:

- Different levels of snow accumulation (light dusting to heavy accumulation)

- Various ice conditions (black ice, partially melted roads, refrozen patches)

- Rain intensity and duration variations

- Fog density and visibility ranges

- Road salt and residue effects on markings

- Pedestrian and vehicle behavior variations in poor weather

Each variation is sampled thousands of times with different traffic patterns, vehicle types, and hazard combinations. The training dataset becomes immensely more diverse without requiring proportional real-world data collection.
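The combinatorics behind that diversity can be sketched as a grid of conditions with random traffic sampled on top. The axis names and values below are hypothetical, loosely following the list above, and the output is a set of scenario descriptions rather than anything a world model would consume directly.

```python
import itertools
import random
from typing import Dict, List

# HYPOTHETICAL condition axes for illustration.
AXES: Dict[str, List[str]] = {
    "snow": ["light dusting", "moderate", "heavy accumulation"],
    "ice": ["none", "black ice", "refrozen patches"],
    "fog": ["clear", "light fog", "dense fog"],
}
TRAFFIC = ["sparse", "moderate", "dense"]
VEHICLES = ["sedan", "bus", "delivery truck"]


def condition_grid() -> List[Dict[str, str]]:
    """Every combination of the condition axes (Cartesian product)."""
    keys = list(AXES)
    return [dict(zip(keys, combo)) for combo in itertools.product(*AXES.values())]


def sample_variations(conditions: Dict[str, str], n: int, seed: int) -> List[Dict[str, str]]:
    """Draw `n` random traffic setups for one fixed set of conditions."""
    rng = random.Random(seed)  # seeded so a run can be reproduced
    return [
        {**conditions, "traffic": rng.choice(TRAFFIC), "lead_vehicle": rng.choice(VEHICLES)}
        for _ in range(n)
    ]


grid = condition_grid()
variations = sample_variations(grid[0], n=5, seed=42)
print(len(grid), "condition combinations")
print(variations[0])
```

Three axes with three values each already give 27 combinations before any traffic sampling, so adding axes or values grows the scenario space multiplicatively.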

Comparison: World Models vs. Traditional Simulation

Waymo's previous approach to training on rare scenarios relied on traditional simulation. This means building explicit 3D models of environments, physics engines that simulate vehicle dynamics, and rendering systems that generate images.

Products like CARLA, an open-source autonomous driving simulator, represent this traditional approach. CARLA lets you build 3D environments, define vehicle physics, place traffic, and render footage. It's powerful and flexible, but it has fundamental limitations:

Traditional Simulation Limitations:

- Asset requirements: Building 3D models of environments is labor-intensive and expensive. Every unique location needs to be manually modeled with accurate geometry and textures.

- Physics fidelity: Accurately simulating real-world physics for all possible scenarios is computationally expensive and difficult. Weather effects, material interactions, and vehicle dynamics require constant calibration.

- Photorealism challenges: Rendering photorealistic images from 3D models is computationally intensive. Most traditional simulators sacrifice visual fidelity for speed.

- Edge cases: Some scenarios (like specific weather effects, material interactions, or rare events) are difficult to parameterize and simulate accurately.

World Model Advantages:

- Data input: Use real video footage. No need to build explicit 3D models.

- Learned realism: The model inherently learns realistic physics because it's trained on real data. No manual physics tuning needed.

- Photorealism by default: The generated footage is inherently photorealistic because the model is trained to reconstruct real video properties.

- Scalability: Add more training data, improve quality. No geometric modeling bottleneck.

The tradeoff is that world models are new and still being refined. They offer less control over generated scenarios than traditional simulators, where you can explicitly specify parameters. World models are steered through prompting, which is less precise than setting explicit parameters.

But for Waymo's use case—generating diverse training scenarios from real driving data—world models have clear advantages.

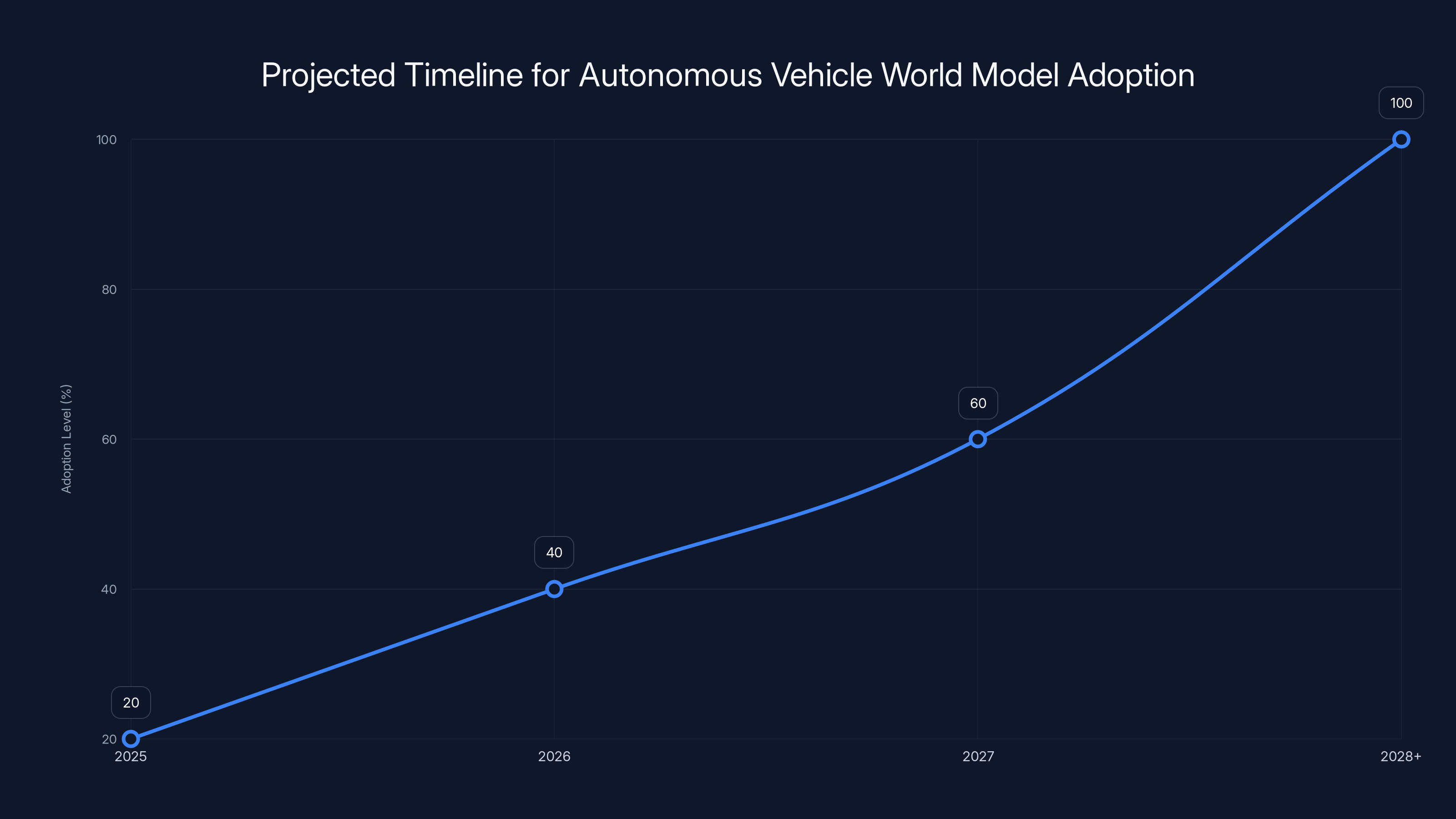

Estimated data suggests that by 2028, world models will be standard infrastructure in autonomous vehicle training, contingent on successful real-world validation in 2025.

The Technical Foundation: How Genie 3 Works

Understanding why Genie 3 solves problems that defeated previous world models requires understanding the technical innovation.

Genie 3 uses a transformer-based architecture with video compression. Here's the simplified version: it breaks incoming video into small temporal chunks, compresses each chunk, then processes them through transformer layers that can attend to distant parts of the sequence.
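The chunk-then-compress idea can be illustrated with a toy example. This is only a conceptual sketch: frames are short lists of numbers, and averaging a chunk is a crude stand-in for a learned video encoder. It makes no claim about how Genie 3 is actually implemented.

```python
from typing import List, Sequence

Frame = List[float]  # stand-in for an image


def temporal_chunks(frames: Sequence, chunk_size: int) -> List[list]:
    """Split a frame sequence into consecutive chunks; the last may be shorter."""
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    return [list(frames[i:i + chunk_size]) for i in range(0, len(frames), chunk_size)]


def compress_chunk(chunk: Sequence[Frame]) -> Frame:
    """Toy compression: average the chunk's frames into one summary vector."""
    if not chunk:
        raise ValueError("chunk must not be empty")
    return [sum(values) / len(chunk) for values in zip(*chunk)]


video = [[0.0, 2.0], [2.0, 4.0], [4.0, 6.0], [6.0, 8.0], [8.0, 10.0]]
tokens = [compress_chunk(c) for c in temporal_chunks(video, chunk_size=2)]
print(tokens)
```

The downstream model then works on the shorter sequence of summaries rather than on every raw frame, which is what makes attending over long durations more affordable.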

The key innovation is something called "spatiotemporal token merging." Without getting too deep into the weeds, this means the model efficiently tracks which parts of the scene matter for predicting future frames. It doesn't maintain equal detail for everything—it focuses computational resources on areas that are changing or important to the driving task.

For autonomous driving specifically, this is ideal. When you're driving, certain elements are critical to tracking (other vehicles, pedestrians, traffic signals) while others are less critical (clouds, distant buildings). The model learns this naturally from training data.

The long-horizon memory comes from several architectural choices:

- Efficient attention mechanisms that scale better across long sequences

- Temporal position embeddings that help the model track time consistently

- State management that preserves key information across long sequences

- Training techniques that specifically optimize for coherence over extended durations

Google trained Genie 3 on massive amounts of video data from the internet, which already gave it a strong prior about how visual worlds work. Then Waymo applied specialized post-training on dashcam footage to adapt it specifically for autonomous driving scenarios.

This transfer learning approach is powerful. Waymo didn't need to train a world model from scratch—they leveraged Google's foundational work and specialized it.

Practical Implementation: The Workflow

So how does Waymo actually use this in practice? Here's the concrete workflow:

Step 1: Data Collection and Preparation

Waymo's vehicles collect dashcam footage continuously. This footage is stored, organized by location, weather conditions, and scenario type.

Step 2: Scenario Selection

Engineers identify gaps in their training data. Maybe they need more examples of dense traffic in rain. Or snow-covered intersections with pedestrians. Or unusual vehicle placements.

Step 3: Source Video Selection

They select real dashcam footage that comes closest to the desired scenario. This could be footage from Waymo vehicles or external dashcam sources.

Step 4: Prompt Engineering

Engineers write prompts describing the variation they want: "Make it snow heavily. Add three additional vehicles in unexpected locations. Extend the scenario 30 seconds."

Step 5: World Model Generation

The Waymo World Model processes the source video and prompts, generating new 2D dashcam footage and corresponding 3D lidar data.

Step 6: Quality Validation

The generated footage is reviewed for consistency and realism. Some is rejected if the World Model made obvious errors. Most is accepted for training.

Step 7: Training Data Integration

The synthetic scenarios are mixed with real collected data in a training pipeline that feeds the autonomous driving neural networks.

Step 8: Performance Evaluation

Waymo tests whether the trained model actually performs better on real-world test routes, especially in scenarios that match the synthetic variations.

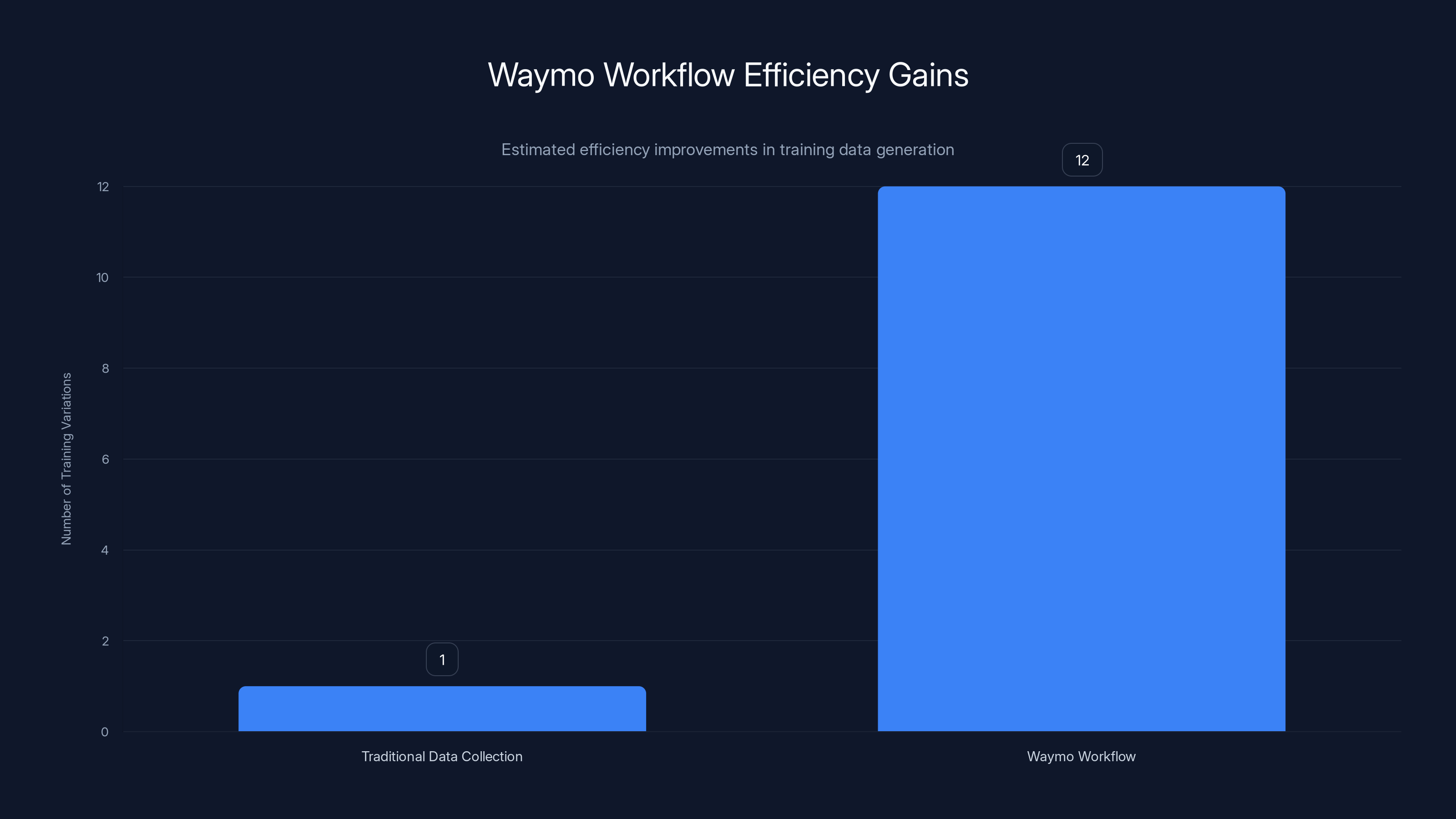

This workflow is efficient compared to traditional data collection. Each real footage clip can be transformed into dozens of training variations, multiplying the effective size of the training dataset.
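The generation, validation, and integration steps can be sketched as a small pipeline. Everything named here is hypothetical: the `Clip` type, the `quality` score, the 0.8 threshold, and the `scorer` callback that stands in for both the world model and the review step. It is meant to show the shape of the data flow, not Waymo's actual tooling.

```python
from dataclasses import dataclass
from typing import Callable, List, Sequence


@dataclass(frozen=True)
class Clip:
    clip_id: str
    prompt: str       # empty string for unmodified real footage
    synthetic: bool
    quality: float    # HYPOTHETICAL review score in [0, 1]


def generate(source: Clip, prompts: Sequence[str], scorer: Callable[[str], float]) -> List[Clip]:
    """Stand-in for the generation step: one synthetic clip per prompt."""
    return [
        Clip(f"{source.clip_id}-v{i}", prompt, True, scorer(prompt))
        for i, prompt in enumerate(prompts)
    ]


def validate(clips: Sequence[Clip], min_quality: float = 0.8) -> List[Clip]:
    """Quality gate: keep only clips at or above the threshold."""
    return [c for c in clips if c.quality >= min_quality]


def build_training_set(
    real: Sequence[Clip], prompts: Sequence[str], scorer: Callable[[str], float]
) -> List[Clip]:
    """Mix real clips with the synthetic variations that pass validation."""
    accepted: List[Clip] = []
    for source in real:
        accepted.extend(validate(generate(source, prompts, scorer)))
    return list(real) + accepted


real_clips = [Clip("phx-001", "", False, 1.0)]
prompts = ["make it snow heavily", "add three vehicles in unexpected locations"]
# Toy scorer: pretend snow prompts render well and the other prompt does not.
training_set = build_training_set(real_clips, prompts, lambda p: 0.9 if "snow" in p else 0.5)
print([c.clip_id for c in training_set])
```

Keeping the real clips in the output alongside the accepted variations mirrors the integration step, where synthetic scenarios supplement collected data rather than replace it.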

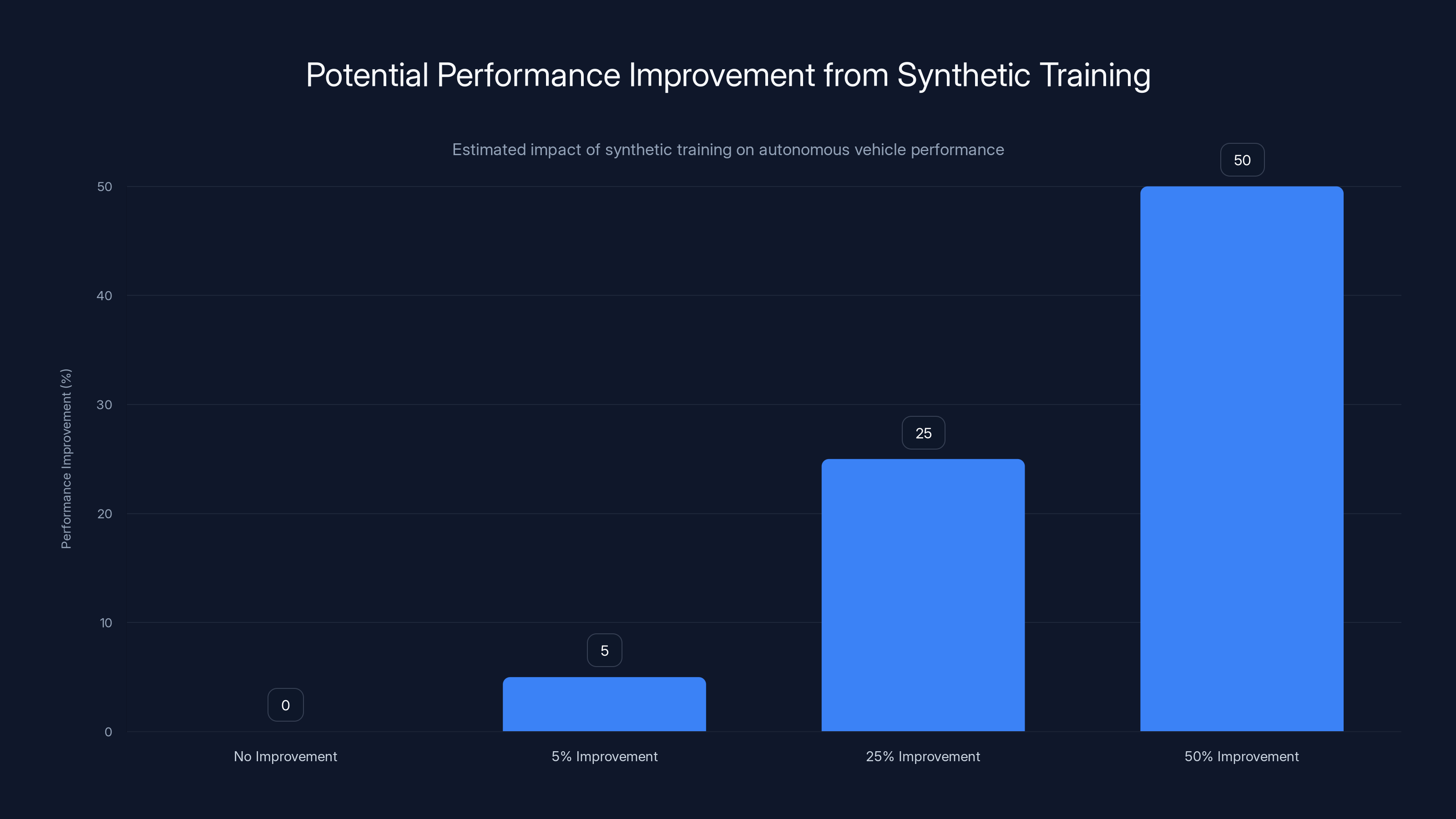

Estimated data: If synthetic training yields a 50% improvement, it could revolutionize the industry, whereas a 5% improvement is beneficial but less impactful.

Edge Cases That Change Everything

Let's get concrete about why this matters. Consider an elephant on the road. Waymo's blog post mentioned this, and it's worth taking seriously.

Waymo's vehicles will never encounter an elephant on a Boston road. Probably never encounter one anywhere in normal operations. But there might be a traffic incident involving an elephant at a zoo parking lot, or a circus setting up, or an escaped animal. The autonomous vehicle needs to handle it.

With traditional training, Waymo would need footage of this scenario. That footage doesn't exist in sufficient quantity. You might find one or two examples of an elephant on a road. That's not enough training data.

With the World Model, Waymo can take a real scenario of a cow on a road, and through prompting, generate variations where it's other large animals. Or take a scenario with an elephant and generate variations with different positions, traffic patterns, and pedestrian responses.

Is the World Model's elephant photorealistic? Probably. Will it be anatomically perfect? Maybe not. But that doesn't matter. The autonomous driving system doesn't need to learn "what elephants look like." It needs to learn "if there's a large, unpredictable object in my path, maintain distance, slow down, and consider alternative routes."

Other edge cases Waymo can now synthesize:

- Vehicle breakdowns: A car stopped unexpectedly in the middle of a lane

- Debris: Construction debris, fallen branches, or accident aftermath on the road

- Extreme weather: Hail, heavy snow, intense rain, fog that reduces visibility to 20 feet

- Unusual pedestrian behavior: People running into traffic, cycling in unexpected patterns, or disregarding traffic signals

- Infrastructure failures: Missing traffic signs, crossed-out lane markings, or malfunctioning traffic lights

- Rare vehicle types: Emergency vehicles with unusual lighting, oversized trucks, or motorcycle groups

The World Model can generate hundreds of variations for each, with different times of day, weather conditions, and surrounding traffic.

Quality Concerns: Can We Actually Trust Synthetic Data?

Here's where we need to be honest. Genie 3 isn't perfect. Videos published showing the technology's capabilities run the gamut from impressive to obviously flawed. Artifacts appear, especially in long sequences. Physics violations happen occasionally. Hands disappear from arms. Objects duplicate or vanish without explanation.

Waymo acknowledges this. The World Model has improved significantly from earlier versions, but it's not flawless. The question is: does imperfect synthetic data still help training?

Research suggests yes, but with important caveats. Synthetic data with minor errors can still teach useful features to neural networks. A slightly misplaced vehicle still teaches the autonomous system to maintain lateral distance. An artifact in the corner of the frame doesn't corrupt learning about the primary driving task.

But systematic errors are problematic. If the World Model consistently makes a specific mistake—like rendering rain particles through vehicle windows—this error might teach the autonomous system an incorrect behavior.

Waymo addresses this by mixing synthetic data with real data. The training pipeline doesn't rely exclusively on generated footage. Real data grounds the learning process and prevents systematic artifacts from dominating the training signal.
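A fixed-ratio batch sampler is one simple way to picture that mixing. The 25% synthetic fraction below is an arbitrary illustrative value, not a figure Waymo has published, and the function is a generic sketch rather than a description of their training pipeline.

```python
import random
from typing import List, Sequence


def mixed_batch(
    real: Sequence[str],
    synthetic: Sequence[str],
    batch_size: int,
    synthetic_fraction: float,
    seed: int = 0,
) -> List[str]:
    """Sample a batch containing a fixed share of synthetic examples."""
    if not 0.0 <= synthetic_fraction <= 1.0:
        raise ValueError("synthetic_fraction must be between 0 and 1")
    rng = random.Random(seed)
    n_synthetic = int(batch_size * synthetic_fraction)  # rounds down
    n_real = batch_size - n_synthetic
    # Sampling with replacement, so small pools can still fill a batch.
    batch = rng.choices(synthetic, k=n_synthetic) + rng.choices(real, k=n_real)
    rng.shuffle(batch)
    return batch


batch = mixed_batch(["real-1", "real-2"], ["syn-1"], batch_size=8, synthetic_fraction=0.25)
print(batch)
```

Capping the synthetic share per batch means a systematic artifact in generated footage can only ever influence a bounded portion of each training step.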

There's also ongoing quality assessment. Waymo tests whether synthetic training actually improves real-world performance. If generated data isn't helping—or worse, is degrading performance—they adjust the mix or fine-tune the generation process.

The honest assessment: Waymo is trading some degree of synthetic imperfection for massive increases in training data diversity. The math still works out in their favor because diversity typically outweighs minor quality issues in neural network training.

Waymo's workflow can transform a single real footage clip into approximately 12 training variations, significantly enhancing the training dataset size. (Estimated data)

Competitive Implications: The Industry Responds

Waymo isn't the only autonomous vehicle company facing the rare-scenario training problem. Competitors like Cruise, Wayve, and others have proposed different solutions.

Some companies emphasize collecting more real data. Tesla's approach involves crowdsourcing real-world edge cases from millions of vehicles. Cruise has similarly emphasized real-world data collection at scale.

Others develop proprietary simulators. Some invest in synthetic data generation through different methods.

Waymo's adoption of Genie 3 represents a particular bet: that world models trained on real video are more efficient than either pure real-world data collection or traditional simulation. This bet positions them to scale faster into new markets without waiting years for real-world data accumulation.

If the approach works at the level Waymo claims, it's a significant competitive advantage. It's not just about having more data—it's about having data that covers the specific scenarios your system struggles with, generated efficiently without real-world data collection delays.

This might be why Google was willing to expand access to Genie 3 technology. The potential applications extend beyond Waymo to the entire autonomous vehicle industry, plus robotics, VR, and other sectors that benefit from synthetic world generation.

Future Directions: World Models Beyond Driving

The implications extend beyond autonomous vehicles. Genie 3 and similar world models represent a fundamental shift in how AI systems can be trained.

For robotics, world models could accelerate training of manipulation tasks. A robot arm learning to grasp objects could practice millions of variations in simulation, improving real-world performance.

For content creation, world models could enable game designers and filmmakers to generate large portions of visual content from high-level descriptions.

For scientific simulation, world models could predict complex physical systems more efficiently than traditional physics engines.

But each application requires specialized adaptation, like Waymo's modification to generate multimodal sensor data. This means the next few years will likely see numerous specialized versions of Genie 3 or similar architectures, each tuned for specific domains.

The broader trend is toward leveraging learned models of the world instead of handcrafted simulators. This is computationally attractive and often produces more realistic results. But it requires careful validation, especially for safety-critical applications like autonomous driving.

The Timeline: When Does This Matter?

Waymo is already deploying this technology. The Waymo World Model isn't vaporware—it's integrated into their current training pipelines. But what's the realistic timeline for impact?

2025: Waymo uses synthetic training to accelerate expansion into cold-weather markets. Real-world performance in Boston and D.C. will be the key test. If vehicles deployed in 2025 operate safely despite minimal real-world training data, that's evidence the approach works.

2026-2027: If successful, other companies adopt similar approaches. Competition drives improvements to world model quality and specialized applications.

2028+: World models become standard infrastructure for autonomous vehicle training. The industry shifts from data-collection-limited to synthetic-generation-limited. The constraint becomes quality of world models and computational efficiency, not availability of real-world footage.

This timeline depends on the real-world validation happening in 2025. If Waymo's vehicles perform poorly in Boston despite synthetic training, that suggests world models aren't ready for deployment-critical training. That would be important information and would slow adoption industry-wide.

But if they perform well, we're looking at a significant acceleration in autonomous vehicle deployment timelines across the industry.

Practical Limitations Worth Considering

Let's not oversell this technology. There are real limitations worth acknowledging.

Generalization failures: World models trained on video data from specific locations sometimes struggle to generalize to very different environments. A model trained on US urban streets might not perform well on European streets or developing-world roads. Each new geographic region might require retraining or fine-tuning.

Sensor domain shift: Genie 3 was trained on standard camera footage. Adapting it to work with lidar, radar, and other specialized sensors required additional development. This creates a technical barrier that competitors must overcome.

Computational cost: Generating high-quality synthetic scenarios still requires significant computation. While cheaper than collecting real data, it's not free. Running a comprehensive training pipeline with thousands of generated scenarios is computationally expensive.

Validation complexity: Testing that synthetic-trained models perform correctly in the real world is non-trivial. You can't just assume that good synthetic performance translates to real-world safety. Extensive testing is still required.

Regulatory uncertainty: Regulators haven't explicitly endorsed using synthetic-data-trained models for autonomous vehicles. There might be future requirements for a minimum percentage of real-world training data, which would limit how aggressively companies can use synthetic approaches.

These limitations don't make the World Model approach worthless. But they mean it's not a silver bullet. Real-world validation and careful testing are still essential.

The Bigger Picture: AI Is Learning to Learn From Itself

The Waymo World Model represents a broader trend in AI: systems learning not just from human-labeled data or real-world observations, but from simulated data generated by other AI systems.

This is conceptually interesting because it creates a feedback loop. AI models trained on real data generate synthetic data. New AI models train on this synthetic data and perform better on real-world tasks. This improved performance could feed back into generating even better synthetic data.

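In theory, this could create a virtuous cycle where AI capability accelerates without requiring proportional increases in real-world data collection. In practice, there are limits. Systematic errors in the generator get baked into each new round of training, and models trained repeatedly on generated data can lose diversity over time (often called model collapse), so the loop cannot improve indefinitely on its own.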
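The limit on this loop can be illustrated with a toy simulation. This is a deliberately simplified sketch, not a model of Genie 3 or of Waymo's pipeline: the "model" is just a Gaussian that is refit each generation to samples drawn mostly from its own previous version. The function name and all parameters are assumptions chosen for illustration.

```python
import random
from statistics import mean, pstdev

def simulate_self_training(generations=30, sample_size=20, real_fraction=0.0, seed=0):
    """Refit a Gaussian each generation to a mix of its own samples and real samples.

    Returns the fitted standard deviation after each generation (index 0 is the start).
    """
    rng = random.Random(seed)
    mu, sigma = 0.0, 1.0  # the "real" distribution is N(0, 1)
    history = [sigma]
    for _ in range(generations):
        n_real = round(sample_size * real_fraction)
        n_synth = sample_size - n_real
        # Synthetic samples come from the current model, real ones from N(0, 1).
        samples = [rng.gauss(mu, sigma) for _ in range(n_synth)]
        samples += [rng.gauss(0.0, 1.0) for _ in range(n_real)]
        mu, sigma = mean(samples), pstdev(samples)
        history.append(sigma)
    return history

synthetic_only = simulate_self_training(real_fraction=0.0)
with_real_data = simulate_self_training(real_fraction=0.5)
print(synthetic_only[-1], with_real_data[-1])
```

With purely self-generated samples, the fitted spread tends to shrink over many generations because each refit slightly underestimates the variance and the errors accumulate. Mixing in fresh real samples anchors the estimate, which is one reason real-world data remains part of the loop.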

But for autonomous driving specifically, the loop seems beneficial. World models trained on real dashcam footage generate driving scenarios that train better autonomous agents, which presumably would produce better driving behavior in the real world, creating better training data for world models.

This is why companies are investing heavily in foundation models like Genie 3. They're not just tools for specific applications. They're potentially the foundation for exponential improvements in AI capability across multiple domains.

What We Still Don't Know

Despite impressive announcements, critical questions remain unanswered. Waymo hasn't published peer-reviewed papers with detailed performance metrics. We don't know precisely how much synthetic training improves real-world performance, or in what specific domains.

We don't know whether Waymo's synthetic-trained models perform as well as models trained exclusively on real data. This is the crucial test. If synthetic training provides a 5% performance improvement, it's valuable but not transformative. If it provides a 50% improvement, it changes the industry.

We don't know how the technology scales. Genie 3 was trained on large amounts of internet video data. Will it work as effectively for more specialized domains with less training data available? Will performance degrade for rare or completely novel scenarios?

We don't know the failure modes. What specific mistakes does the World Model make repeatedly? Are these mistakes teaching bad behaviors to the autonomous driving system?

These questions will be answered over the next 2-3 years as Waymo deploys vehicles in new markets and competitors evaluate similar approaches. Real-world safety data will be the ultimate arbiter.

Why This Matters for the Future of Autonomous Driving

The Waymo World Model represents the shift from supervised learning (learning from human-labeled data) to self-supervised learning (learning from unlabeled data) and synthetic supervised learning (learning from AI-generated data).

This shift enables autonomous vehicle companies to decouple from real-world data collection constraints. Instead of needing to wait years to accumulate data for new markets, they can generate it synthetically.

The business implications are substantial. If Waymo can deploy safely in Boston in 2025 despite minimal real-world training time, that's a massive competitive advantage. Expansion into new markets becomes faster and cheaper. Market share and profitability could shift accordingly.

The safety implications are the flip side. If the industry accelerates deployment before fully validating synthetic-trained models, that's a risk. Autonomous vehicles are high-stakes: failures can cause injuries or deaths. Rushing deployment because synthetic training looks promising could have tragic consequences.

Waymo seems aware of this. They're testing extensively. But the pressure to scale and capture market share is real. There will be temptation to accelerate timelines.

The technology itself is impressive. The idea of using world models trained on video to generate unlimited diverse training scenarios is elegant and potentially transformative. But execution matters. And real-world validation is non-negotiable.

As of early 2025, the field is watching closely to see how Waymo's expansion into cold-weather markets goes. Success will validate the approach and accelerate adoption. Unexpected failures or safety concerns would force more caution and slower deployment.

Either way, Genie 3 and synthetic training represent the future direction of autonomous vehicle development. The question is how quickly and how safely we transition to it.

FAQ

What exactly is Genie 3?

Genie 3 is a generative world model developed by Google DeepMind that creates photorealistic video sequences. It's trained on large amounts of real video data and can generate extended sequences of realistic video based on prompts and user input. The key breakthrough is long-horizon memory, meaning it can maintain consistency across several minutes of generated video, whereas earlier world models would lose coherence after just a few seconds.

How does Waymo's World Model differ from standard Genie 3?

Waymo modified Genie 3 through specialized post-training to output both 2D video and 3D lidar data simultaneously. This multimodal capability is crucial because autonomous vehicles rely on lidar for depth information and object detection, not just cameras. Standard Genie 3 generates video only, so Waymo adapted it to the specific sensor requirements of their vehicles.

Why can't Waymo just use traditional simulation software?

Traditional simulators like CARLA require extensive manual effort to build 3D environments, tune physics engines, and create photorealistic graphics. They're labor-intensive to scale and difficult to parameterize accurately for edge cases. World models learn realism from real data, avoiding this manual bottleneck. However, traditional simulators offer more explicit control, which is valuable for some testing scenarios. Waymo likely uses both approaches.

Can synthetic training data actually improve autonomous vehicle performance?

Yes, but with important caveats. Diverse synthetic data can teach useful features even if it contains minor errors. Waymo mixes synthetic and real data in training, so systematic errors don't dominate the learning signal. Real-world testing in new markets will ultimately determine how much synthetic training helps. Early results from Boston and Washington D.C. deployments in 2025 will be crucial data points.
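As a rough illustration of what mixing synthetic and real data can look like, the sketch below builds training batches with a fixed share of synthetic examples. The 25% share, the function name, and the tuple-based examples are assumptions for illustration; the article does not state the ratio or the mechanism Waymo actually uses.

```python
import random

def mixed_batches(real, synthetic, batch_size=8, synthetic_fraction=0.25, seed=0):
    """Yield shuffled batches containing a fixed share of synthetic examples."""
    rng = random.Random(seed)
    n_synth = round(batch_size * synthetic_fraction)
    n_real = batch_size - n_synth
    while True:
        # Sample without replacement inside a batch, then shuffle so the
        # synthetic examples are not always at the end.
        batch = rng.sample(real, n_real) + rng.sample(synthetic, n_synth)
        rng.shuffle(batch)
        yield batch

# Placeholder examples tagged by origin.
real_examples = [("real", i) for i in range(100)]
synthetic_examples = [("synthetic", i) for i in range(100)]

batch = next(mixed_batches(real_examples, synthetic_examples))
n_synthetic_in_batch = sum(1 for origin, _ in batch if origin == "synthetic")
print(len(batch), n_synthetic_in_batch)
```

Capping the synthetic share per batch is one simple way to keep real examples as the majority of the learning signal, so a systematic flaw in the generated data cannot dominate any single update.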

What happens if the World Model generates unrealistic scenarios?

Waymo's neural networks are robust enough to handle minor imperfections in synthetic data, similar to how noise and errors in real-world data don't completely break learning. However, systematic errors could teach incorrect behaviors. Waymo mitigates this through quality validation, mixing synthetic with real data, and testing extensively before deployment. If generated data produces worse real-world performance, it gets adjusted or removed from training.
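The "adjusted or removed" step can be sketched as a simple gate: a synthetic subset stays in the training mix only if adding it does not lower a score measured on held-out real data. Everything here is hypothetical, including the subset names, the `evaluate` callback (a stand-in for "train, then score on real validation drives"), and the toy numbers.

```python
def select_synthetic_subsets(candidates, evaluate, min_gain=0.0):
    """Greedily keep subsets whose addition does not reduce the real-data validation score."""
    kept = []
    best = evaluate(kept)
    for name in candidates:
        score = evaluate(kept + [name])
        if score - best >= min_gain:
            kept.append(name)
            best = score
    return kept, best

# Toy stand-in: each subset shifts the real-world validation score by a fixed amount.
EFFECT = {"snow": 0.04, "fog": 0.01, "glitchy_lidar": -0.03}

def toy_evaluate(subsets):
    return 0.80 + sum(EFFECT[s] for s in subsets)

kept, score = select_synthetic_subsets(list(EFFECT), toy_evaluate)
print(kept)
```

In a real pipeline each call to `evaluate` would be an expensive training and validation run, so a production system would likely use cheaper proxies or batch several subsets per run. The greedy loop is only meant to show the decision rule.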

Could other companies build their own world models instead of using Genie 3?

In theory yes, but it requires massive computational resources and large training datasets. Building a world model from scratch would take years and billions in investment. That's why companies are focusing on adapting existing models like Genie 3. Google's willingness to expand Genie 3 access to partners like Waymo makes adoption practical for others rather than forcing them to develop independently.

When will synthetic-trained autonomous vehicles be widely deployed?

Waymo is already testing them in Boston and Washington D.C. in 2025. If real-world performance meets expectations, we'll likely see rapid expansion into other markets throughout 2025-2027. Full industry adoption would take longer, probably 2028 or later, pending regulatory approval and competitor development of similar capabilities. The timeline depends heavily on how well synthetic-trained vehicles perform in practice during 2025 deployments.

Does Waymo need to collect less real-world data now?

Yes, but they still need real data, just not as much. The World Model approach reduces the data-collection burden but doesn't eliminate it. Waymo still needs real footage to train the world model itself and to validate that synthetic-trained autonomous systems work correctly. The advantage is that Waymo can amplify limited real-world data through synthetic generation, accelerating expansion timelines.

Conclusion: The New Frontier of Autonomous Vehicle Training

Waymo's adoption of Genie 3 represents a fundamental shift in how autonomous vehicles are trained. After years of struggling with the chicken-and-egg problem (needing extensive real-world data to train safely, but needing safe training to justify extensive real-world deployment), the industry has found a promising workaround: generate synthetic training data at scale.

The implications are massive. Instead of spending years collecting data in each new market, Waymo can now generate diverse training scenarios synthetically and deploy more quickly. This could accelerate autonomous vehicle adoption significantly, bringing safe self-driving to more cities faster than anyone expected.

But this comes with important caveats. Synthetic data, no matter how sophisticated, requires careful validation. Systematic errors in generated footage could teach autonomous systems incorrect behaviors. Deploying too aggressively before real-world validation could create safety risks.

The technology itself is impressive. Genie 3's long-horizon memory breakthrough solves real technical problems that defeated earlier world models. Waymo's specialization to multimodal sensor output shows thoughtful engineering. The practical workflow makes sense: take real data, generate variations addressing specific gaps, mix with real data, train, and validate.

But the proof is in the pudding. When Waymo's synthetic-trained vehicles hit the snowy streets of Boston and navigate the chaotic intersections of Washington D.C., we'll learn whether this approach actually works at scale. That's the real test.

If it works, expect rapid industry adoption. Competitors will race to develop similar capabilities. The constraint on autonomous vehicle deployment will shift from data collection to synthetic data quality. Within 5 years, this approach could be standard across the industry.

If it doesn't work, meaning synthetic-trained vehicles perform worse than expected or encounter unexpected failure modes, that sends a different signal. It suggests that authentic real-world data remains irreplaceable, and scaling autonomous vehicles requires continued patient data collection rather than shortcuts through synthetic generation.

Either way, Genie 3 and world models represent the future of how AI systems will be trained. The question isn't whether this approach will be adopted, but how quickly and carefully the adoption happens. The stakes are too high to rush, but the potential is too significant to ignore. Waymo and the autonomous vehicle industry are walking that line carefully, and the next 12-24 months will tell us whether they're walking it successfully.

Key Takeaways

- Waymo uses Genie 3 world models to generate synthetic driving scenarios that cover rare and impossible conditions, multiplying training data without extensive real-world collection

- The Waymo World Model generates both 2D video and 3D lidar data simultaneously, enabling multimodal sensor training that traditional simulators can't match

- Genie 3's long-horizon memory maintains visual consistency across several minutes of generated video, solving coherence problems that defeated earlier world models

- Synthetic training accelerates expansion into new markets by reducing real-world data collection requirements and enabling rapid adaptation to diverse weather and road conditions

- 2025 deployments in Boston and Washington D.C. will provide crucial real-world validation of synthetic-trained autonomous vehicles, determining industry-wide adoption trajectory


![Waymo's Genie 3 World Model Transforms Autonomous Driving [2025]](https://tryrunable.com/blog/waymo-s-genie-3-world-model-transforms-autonomous-driving-20/image-1-1770412098384.jpg)