The Ultimatum That's Reshaping AI Development

There's a moment every industry faces where hype collides with reality. For artificial intelligence, that moment is happening right now.

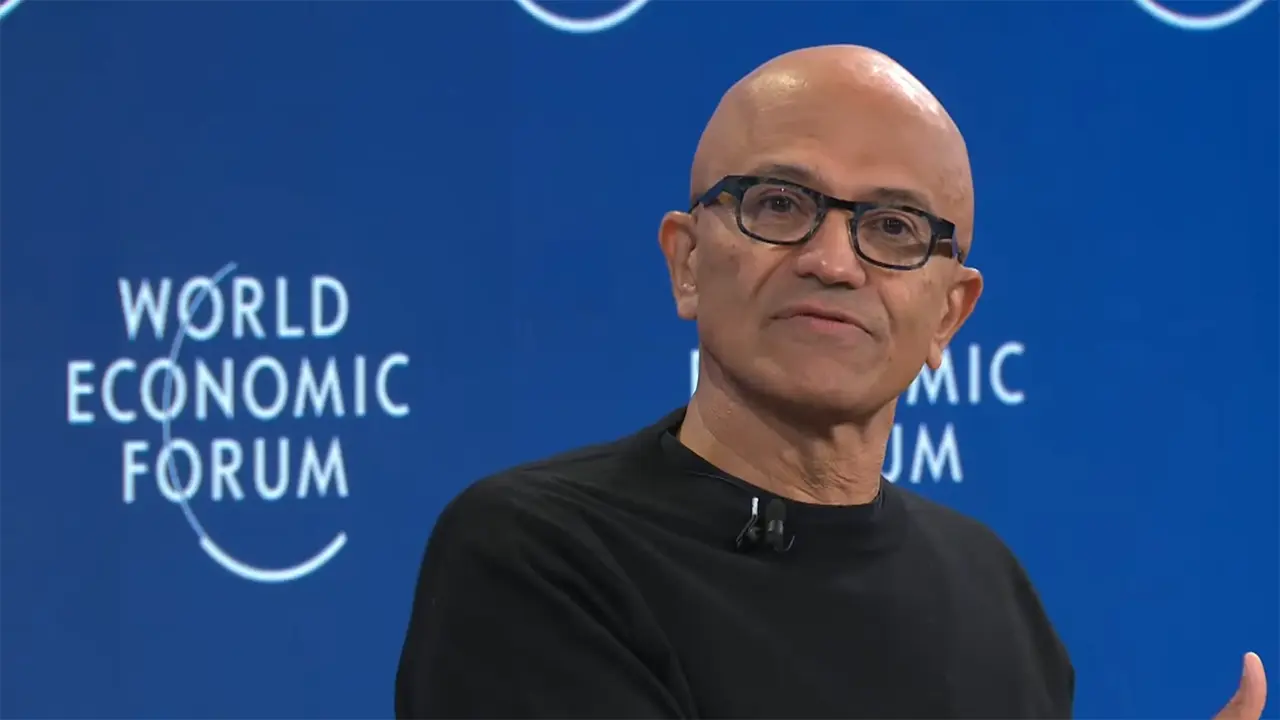

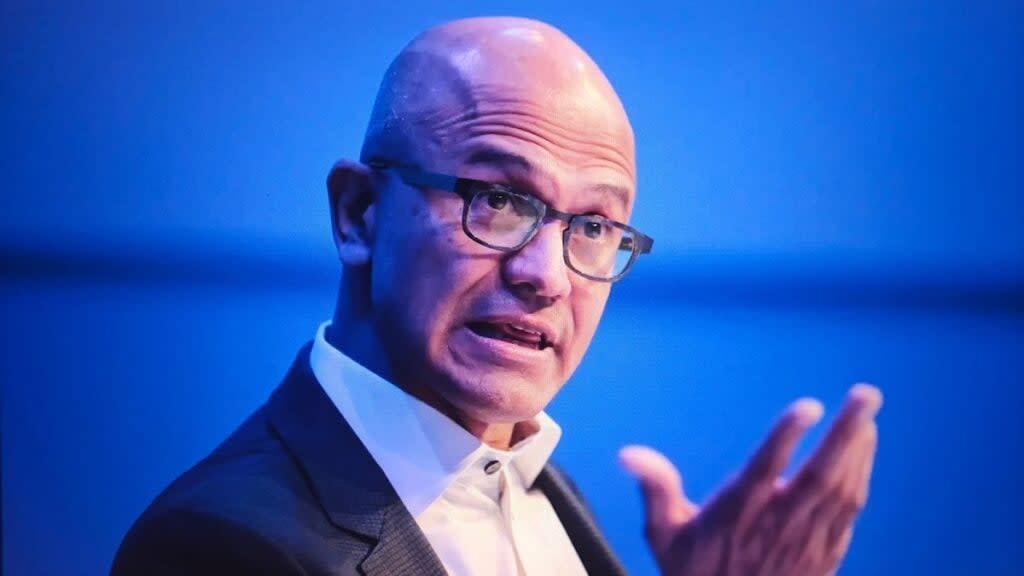

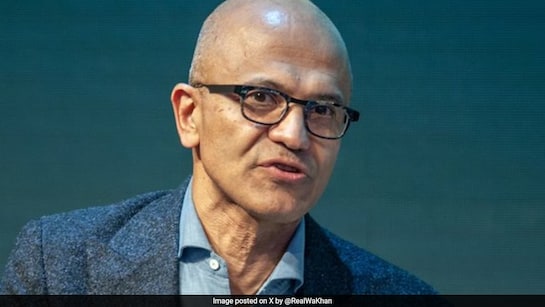

Satya Nadella, CEO of Microsoft, just delivered a stark warning at the World Economic Forum in Davos that's reverberated through Silicon Valley: AI developers need to prove their technology actually improves people's lives, or they'll lose society's permission to keep building it.

That's not corporate speak. That's a code red.

Nadella didn't mince words. He told the global business elite that the AI industry is at an inflection point. The window for "we're building cool stuff and hoping it matters" is closing. Fast. Companies that can't demonstrate tangible, measurable value will face a legitimacy crisis that goes beyond market share or investor sentiment. They'll face a crisis of social acceptance.

Think about that for a second. For the past 18 months, the AI narrative has been dominated by capabilities. What can these models do? How fast can they run? How many parameters does the system have? The conversation has been almost entirely technical.

But Nadella's message flips that script. Capability without utility is just noise.

Here's the situation: We're investing tens of billions annually into AI infrastructure. Energy costs alone for training large language models are skyrocketing. Data centers are being built at an unprecedented pace. And yet, the average person can't articulate how AI has made their work genuinely easier or their life objectively better.

That's the problem Nadella is highlighting. And it's not hypothetical. It's existential.

Why Social Permission Matters More Than Market Cap

You can't regulate what people don't understand. And you can't sustain what people don't trust.

That's why Nadella's phrasing about "social permission" is so precise. He's not talking about regulatory approval or investor backing. Both of those already exist. He's talking about something harder to manufacture: public belief that AI is worth the resources being poured into it.

Right now, there's genuine skepticism out there. People see AI being used to generate spam, produce deepfake videos, and automate away jobs without clear pathways for transition. They see companies slapping "AI-powered" labels on products that barely use machine learning. They see trillion-dollar bets on technology that hasn't solved critical human problems at scale.

The social trust account is still healthy, but it's not infinite. And unlike financial capital, you can't earn it back in a quarter. It takes sustained demonstration of value.

Consider what happened with previous transformative technologies. The internet took years to prove its value before mass adoption. Smartphones needed the App Store to demonstrate that mobile computing could solve real problems, not just replicate desktop experiences. Cloud computing had to prove it could be more reliable and cost-effective than on-premise infrastructure.

Every transformative technology faces this moment. And most survive it. But they survive because someone, somewhere, genuinely solved a problem people cared about.

AI is at that threshold right now.

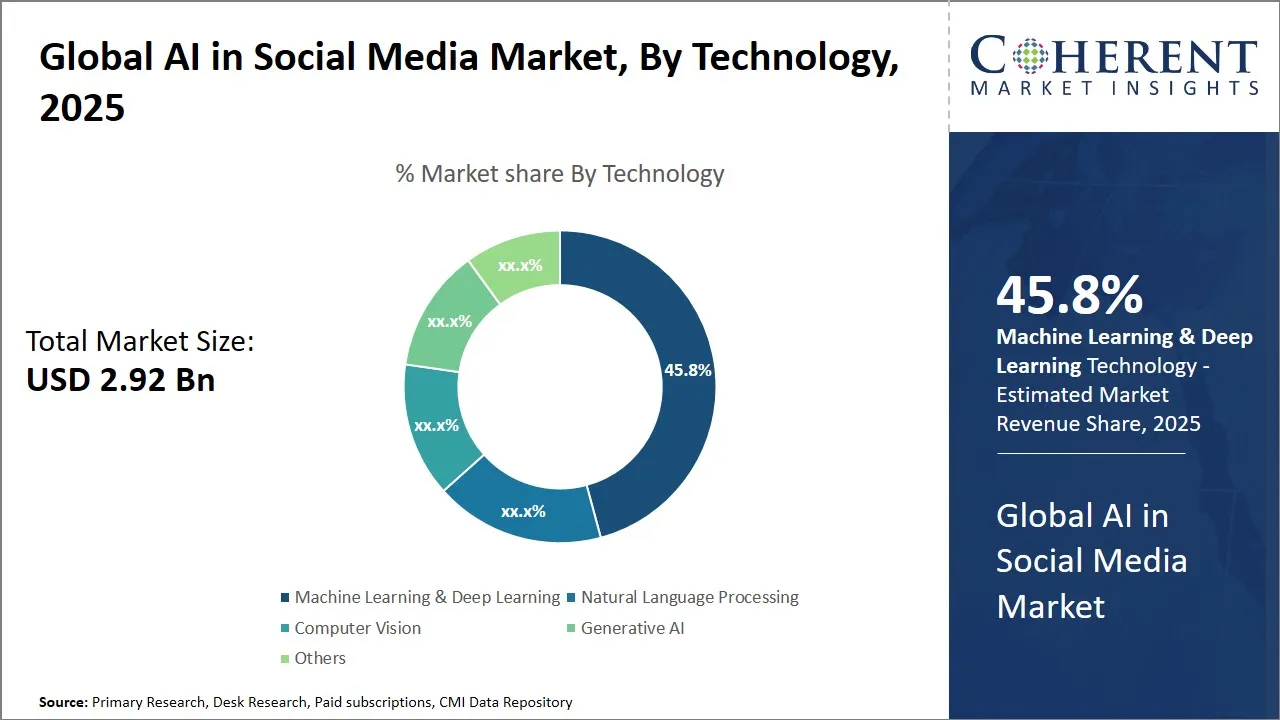

Regulatory scrutiny and investor confidence are heavily affected when AI's social permission erodes. Estimated data.

The Legitimacy Crisis: Why Hype Isn't a Business Strategy

Let's be brutally honest about what's happening in the market.

For the past 18 months, AI has been the most overhyped technology since the dot-com boom. Every company is an "AI company" now. Every product has AI features. Every investor meeting includes the A-word. Consultants are selling "AI transformation" strategies to companies that don't even understand what problems they're solving.

But here's what happened in the dot-com era: Companies that focused on real business problems survived. Companies that just chased the hype narrative died spectacularly.

Nadella is essentially warning the industry: Don't repeat that mistake.

The danger isn't just financial. It's philosophical. If AI becomes synonymous with overpromised solutions and disappointed expectations, the entire field suffers. Not just the companies making empty claims, but the organizations doing genuinely important work.

When trust erodes, regulation follows. When regulation becomes punitive rather than sensible, innovation slows. When innovation slows, the real breakthroughs get delayed. That's the cascade Nadella is warning about.

So what does "social permission" actually require? It's not binary. It's not all-or-nothing approval. It's a working acceptance that the technology is doing more good than harm, and that the people building it are being thoughtful about tradeoffs.

That requires three things:

First, demonstrated utility. Not potential. Not theoretical. Actual, measurable improvements to how work gets done or how problems get solved. A 10% productivity improvement in data analysis. A 20% reduction in time to draft legal documents. A 30% decrease in diagnostic errors in medical imaging. Numbers matter.

Second, transparency about limitations. Every AI system has blind spots. Every model has failure modes. The companies that will earn lasting social permission are the ones that acknowledge these limitations upfront and design safeguards accordingly, not the ones that oversell capabilities and hope nobody notices the edge cases.

Third, genuine consideration of distributional impacts. AI doesn't affect everyone equally. Some people will benefit enormously. Others might see their economic value diminish. Sustainable social permission requires acknowledging these tradeoffs and thinking seriously about how to mitigate the downside cases.

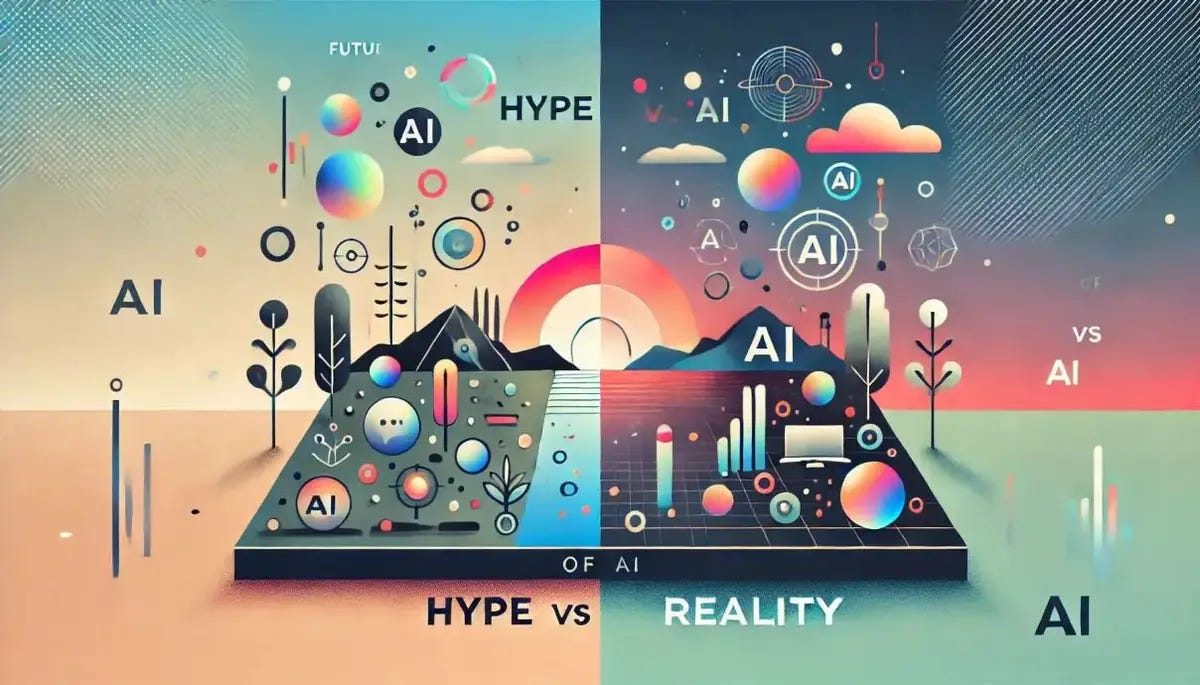

According to Pew Research, 72% of Americans express concern about AI adoption, primarily due to job displacement and data privacy issues.

Microsoft's Bet: Integration Over Innovation Theater

Here's the interesting part: Nadella wasn't speaking in the abstract.

Microsoft's Copilot strategy is literally designed around the principle he's articulating. Rather than betting everything on a standalone AI product, Microsoft is embedding AI into products people already use: Office, Windows, development tools, business applications.

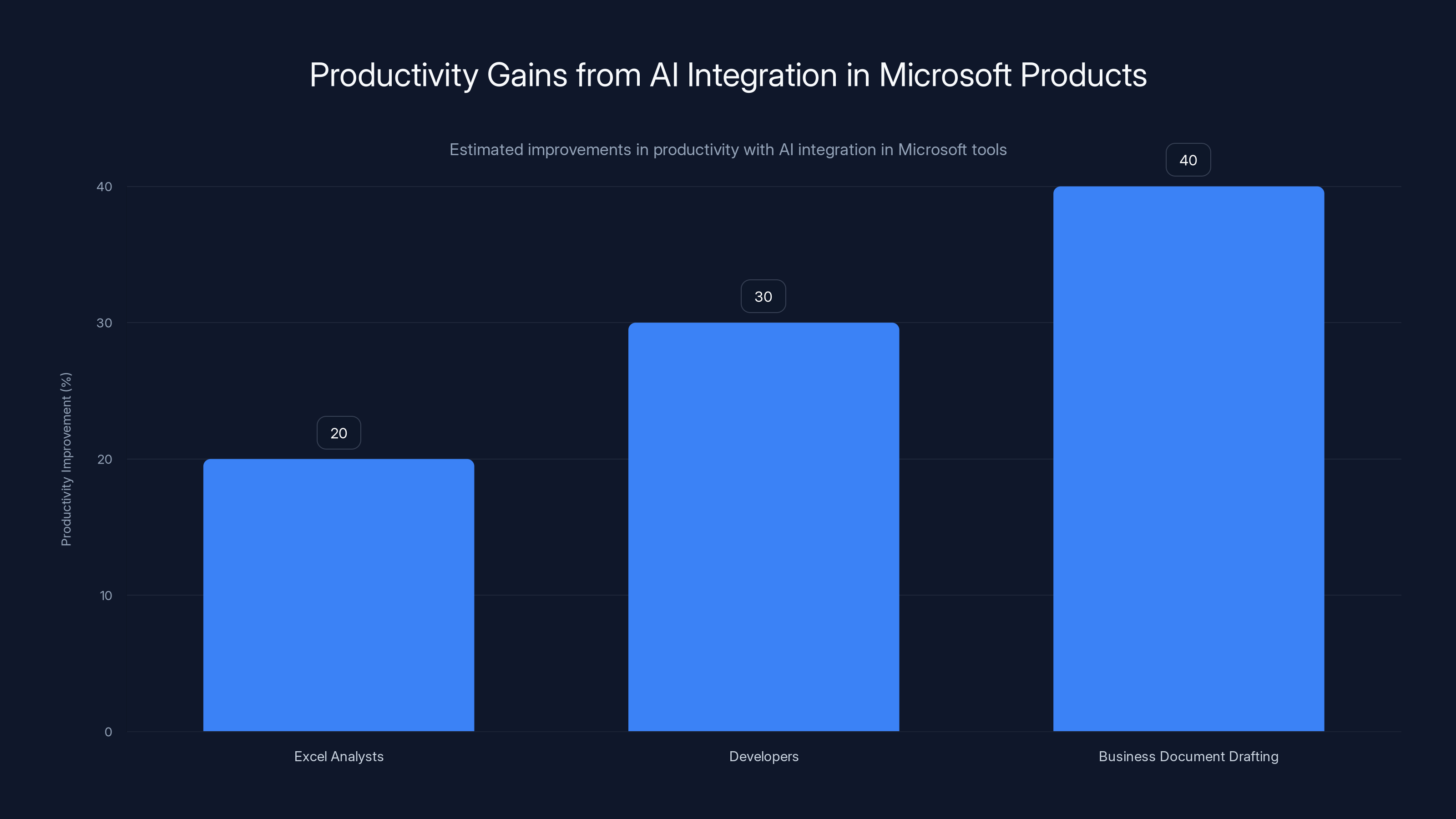

The logic is elegant: If you can make Excel analysts 20% more productive, or help developers write better code 30% faster, or reduce the time it takes to draft business documents by 40%, that's social permission. That's utility.

Not because the AI is flashy. Not because it's impressive at benchmarks. But because it genuinely solves friction points people experience every single day.

Compare that to the approach of building a consumer AI product that's optimized for going viral on social media. Those products generate headlines. They generate user engagement metrics. But they don't necessarily generate social permission, because they don't necessarily solve meaningful problems.

Microsoft's strategy—and Nadella's warning—reflect a maturation in thinking about AI deployment. It's moving from "Can we do this?" to "Should we do this, and does it actually help?"

That shift matters. Because it changes which companies will thrive in the next phase of AI adoption.

The Business Impact: Why Nadella Is Right to Sound the Alarm

Let's talk numbers.

According to Gartner's hype cycle research, technologies that fail to demonstrate clear value within 24 months often face a "trough of disillusionment" where investment drops dramatically and recovery takes 5-10 years.

AI is currently on a trajectory that could hit that trough hard. Here's why: Massive capital is flowing into AI infrastructure. Expectations are sky-high. But enterprise adoption rates for AI applications remain surprisingly low compared to the amount of money being invested.

That disconnect is unsustainable.

Companies like OpenAI and Anthropic have phenomenal technology. But technology alone doesn't create business value. Deployment does. Integration does. Outcomes do.

The companies that will dominate the next phase of AI won't be the ones with the most sophisticated models. They'll be the ones that can integrate AI into existing workflows so seamlessly that it becomes invisible. It just works. It saves time. It improves decisions. It reduces errors.

When that happens, social permission follows naturally. Because the value is obvious.

Nadella's warning is essentially a call for the industry to stop optimizing for headlines and start optimizing for outcomes. That's a fundamental shift. And it's overdue.

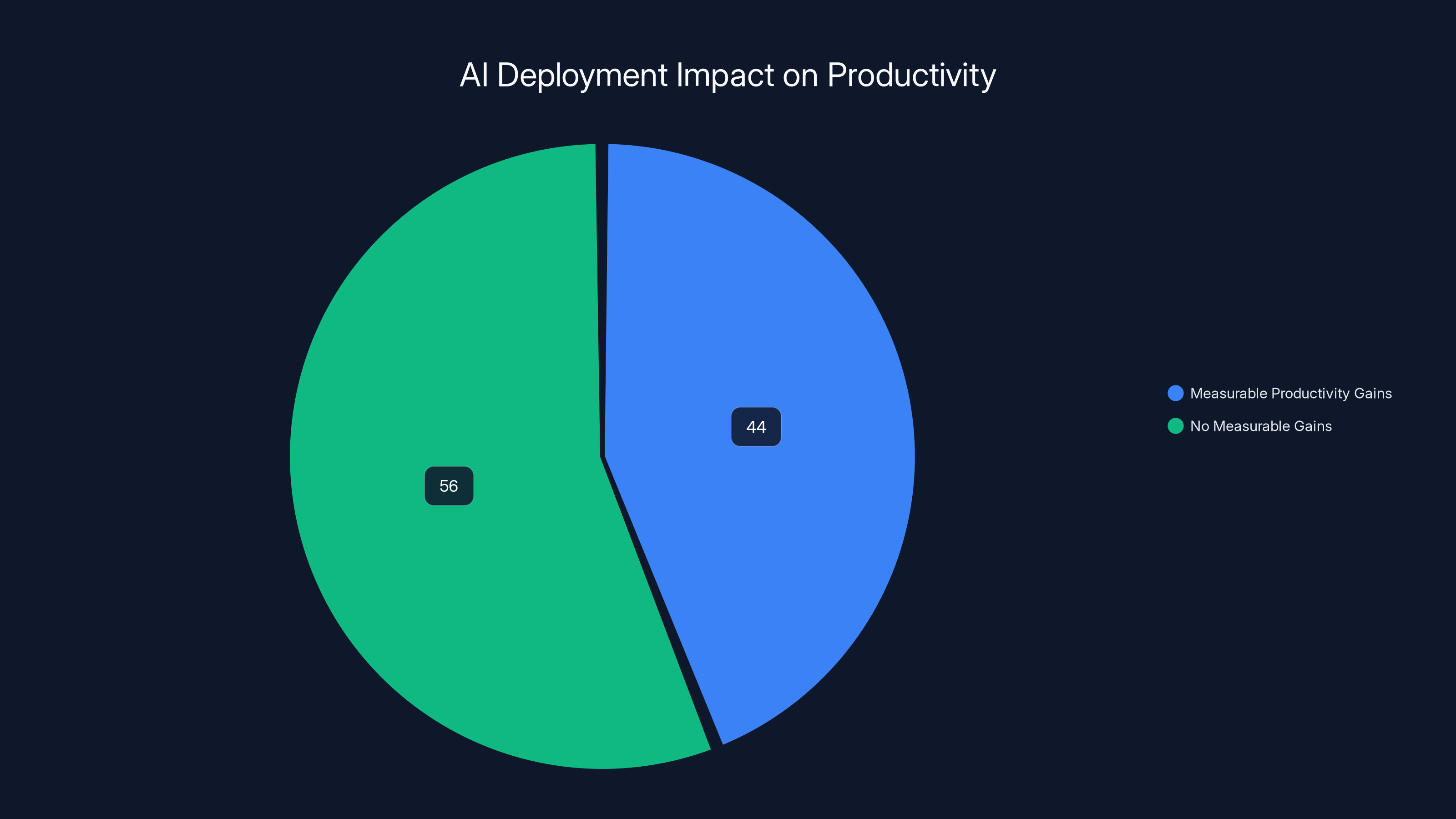

According to McKinsey's 2024 AI survey, only 44% of organizations deploying AI have seen measurable productivity gains, highlighting a significant gap between AI capabilities and practical utility.

The Risk: What Happens If AI Doesn't Deliver Utility

This isn't speculative. There's historical precedent.

Remember the blockchain hype of 2017-2018? Billions flowed into projects. Countless companies slapped blockchain into their value proposition. Most of it had zero utility. And when the crash came, it took years for legitimate blockchain applications (cryptocurrency, supply chain tracking, smart contracts) to rebuild credibility.

Virtual Reality faced a similar cycle. 2015-2016 were "this is the future" years. Then came reality: Most consumer VR applications weren't solving meaningful problems. The technology was solving the problem of "how to make things immersive," but nobody had solved "why immersion matters for this specific use case."

VR is recovering now, but it took a decade.

AI could face the same trajectory if the industry doesn't course-correct. And the damage would be worse. Because AI touches more industries, more jobs, and more lives than previous technologies.

Here's the specific risk scenario: In 2-3 years, CIOs and business leaders start noticing that their AI implementations aren't delivering promised ROI. The productivity gains are marginal. The costs are significant. Meanwhile, regulatory scrutiny is increasing. New restrictions are being imposed on data usage and model training. Public skepticism is mounting.

At that inflection point, investment starts to dry up. Not all of it. But enough to slow progress. The truly useful applications get delayed. The bleeding-edge research gets cut. The startups working on important problems can't find funding.

That's the scenario Nadella is warning against. Not because Microsoft won't survive it. They will. But because it would slow human progress on problems that AI could genuinely help solve.

The Path Forward: Building Utility Into Every AI Application

So what does this mean practically? How does an organization build AI applications that maintain social permission?

Step 1: Start with the problem, not the technology. Don't ask "How can we use AI here?" Ask "What's the specific problem this team is solving, and where are they losing time or making errors?" Then layer in AI only if it actually helps.

Step 2: Measure ruthlessly. Before deploying any AI system, establish baseline metrics. How long does this process take today? How many errors occur? What's the cost? Then track whether AI actually improves these metrics. If it doesn't, admit it and move on.

Step 3: Be transparent about tradeoffs. No AI system is universally better. Some people will benefit more than others. Some edge cases will fail. Some data points will be handled badly. Acknowledge this upfront. Design for it. Build in oversight.

Step 4: Invest in human-AI collaboration, not replacement. The applications that maintain social permission aren't the ones automating away jobs. They're the ones augmenting human capabilities. Making experts more expert. Helping people focus on decisions that require human judgment rather than repetitive tasks.

Step 5: Monitor for drift and bias continuously. The AI system that worked perfectly six months ago might be drifting today. Data distributions change. The world evolves. Systems need continuous monitoring and adjustment. That's not a bug in AI deployment. That's a feature you need to build in.

These steps are work. They require discipline and ongoing investment. But they're the difference between AI applications that generate social permission and AI applications that generate skepticism and eventually backlash.
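As a rough illustration of the continuous monitoring described in Step 5, the check below compares recent quality readings against a baseline and flags a drop. This is a minimal sketch, not a production drift detector: the function name `drift_alert`, the 0.05 tolerance, and the weekly accuracy readings are all assumptions made up for the example.

```python
from statistics import mean


def drift_alert(baseline_scores, recent_scores, tolerance=0.05):
    """Return True when the recent average has fallen more than
    `tolerance` (absolute) below the baseline average.

    Hypothetical helper: the name and default tolerance are illustrative.
    """
    if not baseline_scores or not recent_scores:
        raise ValueError("both score lists must be non-empty")
    return mean(baseline_scores) - mean(recent_scores) > tolerance


# Made-up weekly accuracy readings for a deployed system.
baseline = [0.92, 0.91, 0.93, 0.92]  # readings taken at launch
recent = [0.88, 0.85, 0.84, 0.83]    # readings from the last four weeks

if drift_alert(baseline, recent):
    print("Quality has drifted below baseline; review the system.")
```

A real deployment would likely track several metrics and use a statistical test rather than a fixed threshold, but even a check this simple turns "monitor continuously" into something a team can schedule and act on.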

Integrating AI into existing Microsoft products can improve productivity by 20-40%, enhancing utility without relying on standalone AI products. Estimated data.

Industry Response: Are Companies Listening?

The honest answer: Some are. Many aren't.

You can see the split in how different companies are positioning AI. Some are running serious pilots with clear success metrics. They're documenting results. They're being selective about where they deploy AI. They're building sustainable infrastructure for ongoing monitoring and adjustment.

Others are racing to deploy AI everywhere as fast as possible, hoping that quantity of initiatives will overcome quality concerns. They're not measuring outcomes rigorously. They're not being transparent about failures. They're treating AI as a marketing advantage rather than a business improvement.

Guess which group will still be trusted in five years?

Nadella's warning is essentially saying: The market is going to sort this out anyway. The companies that bet on utility will win. The companies that bet on hype will fade. You can either adapt proactively or adapt reactively after the credibility hit.

The Broader Context: Why This Moment Matters

Nadella isn't being hyperbolic. There are real structural reasons why social permission could erode quickly.

First, AI touches everything. It's not a single product that people can choose to ignore. It affects job markets, media trustworthiness, privacy, criminal justice, healthcare, education. When adoption affects that many aspects of human life simultaneously, social tolerance for "we're still figuring this out" decreases rapidly.

Second, the hype cycle has been particularly aggressive. The gap between capability announcements and practical utility has been wider than for previous technologies. People are noticing that gap. Every overpromise increases skepticism for the next announcement.

Third, regulatory attention is intensifying. The EU's AI Act is being implemented. The U.S. government is developing AI governance frameworks. China is tightening restrictions. That regulatory pressure will constrain deployment if social trust hasn't been established.

Fourth, job displacement concerns are acute and visceral. When a technology eliminates jobs without clear benefit to the people affected, social permission erodes fast. This is different from previous technological transitions because the pace is faster and the transition support is less clear.

Put all those factors together, and Nadella's concern becomes concrete. The window for proving AI's value isn't just a strategic opportunity. It's an existential requirement.

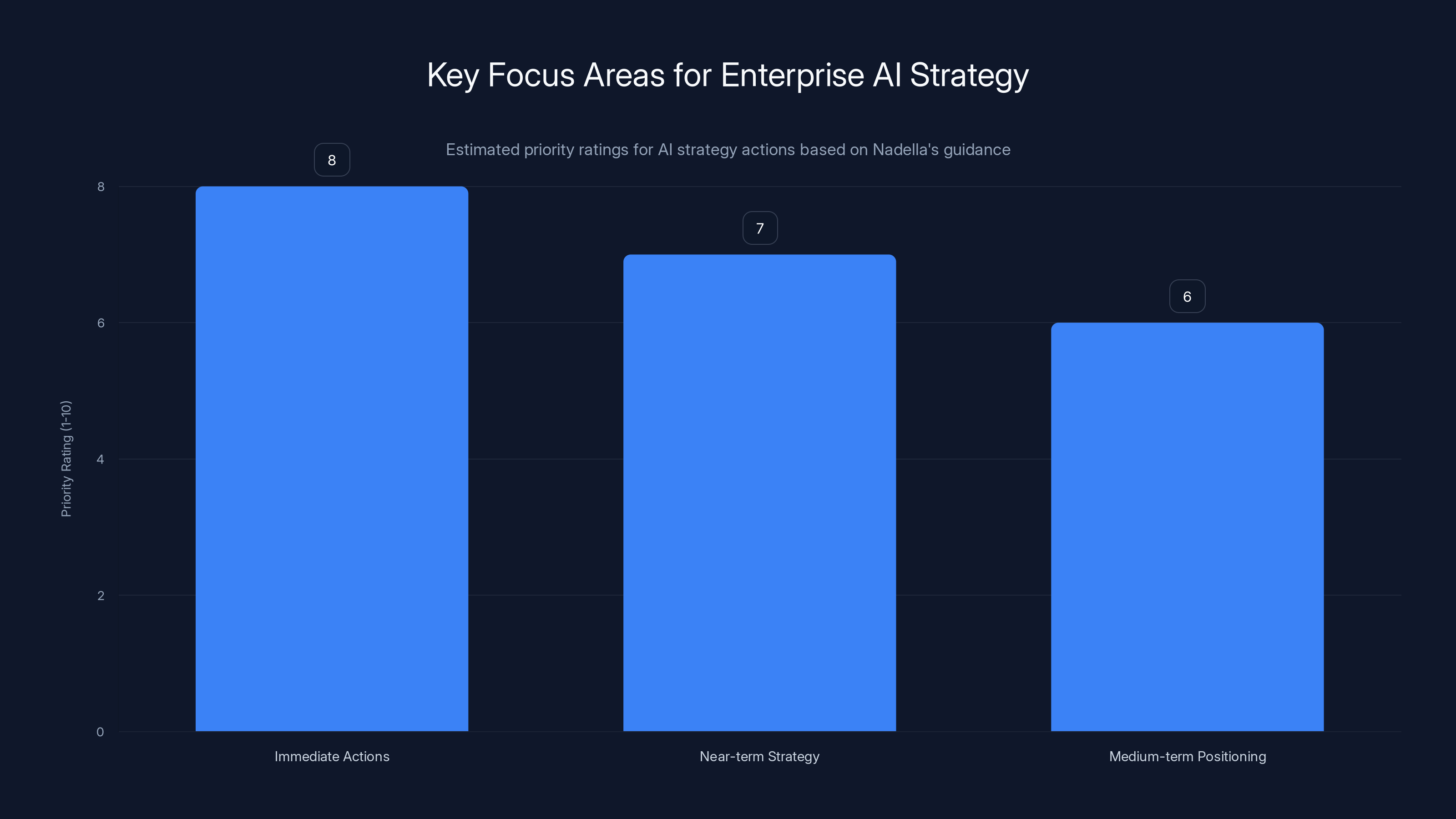

Immediate actions are rated highest in priority, emphasizing the need for swift audits and metric establishment. Estimated data.

Microsoft's Position: Why This Message Serves the Market Leader

There's also strategic positioning here worth understanding.

Microsoft is in a unique position. They've integrated AI into their core products. They're not betting everything on a standalone AI service. They're embedding it into Office, Windows, Azure, GitHub, and enterprise development tools.

When Nadella says AI needs to prove its utility, he's not threatening Microsoft's business. He's clarifying the playing field in a way that actually favors Microsoft's approach.

Companies building standalone AI products live or die based on whether users see value in the product itself. That's a narrow proposition. But companies embedding AI into productivity tools that people already use? They benefit from the underlying business existing while they improve the experience.

So Nadella's message—while genuinely aligned with long-term industry health—also conveniently signals why Microsoft's strategy is the one that will prove sustainable.

That's not a criticism. It's just worth pointing out that even advice with noble motivations usually serves the interests of the person giving it. In this case, those interests align. Microsoft wants the AI industry to prove utility, and Microsoft's business model is structured to demonstrate it.

What Enterprise Leaders Should Do Right Now

If you're responsible for AI strategy in your organization, here's the immediate to-do list based on what Nadella is signaling:

Immediate actions (this month):

- Audit every AI pilot and deployment. Document specific metrics: time saved, error reduction, cost impact, user adoption rate.

- Any project without clear metrics gets paused until metrics are established.

- Create a "trust requirements" checklist: Does this project have transparency? Does it include override mechanisms? Is bias monitoring built in? Has the legal/ethics team reviewed it?

Near-term strategy (next quarter):

- Shift from "building AI capabilities" to "solving specific business problems with AI."

- Prioritize projects where you can document 20%+ improvement in a business-critical metric within 6 months.

- Establish continuous monitoring for every deployed system. Make monitoring as important as deployment.

- Create transparency mechanisms. When the AI system makes a decision, can users understand why? Can they override it?

Medium-term positioning (next 12 months):

- Build a portfolio of successful case studies. Document the before state, the problem, the AI solution, and the measured outcome. These become your evidence of utility.

- Start communicating results internally. Show your organization that AI is delivering value. This builds permission.

- Begin externalizing this story. If you have genuinely successful AI applications, write about them. Speak about them. Contribute to rebuilding social permission.

The organizations that act on this now will have a significant advantage as the market inevitably shifts toward outcome-focused evaluation.
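The immediate actions above could be captured in something as simple as the audit sketch below. It is one possible shape, offered under stated assumptions: the `AIProject` class, its field names, and the `audit` function are hypothetical and only mirror the checklist items from the text (transparency, override mechanisms, bias monitoring, legal/ethics review).

```python
from dataclasses import dataclass, field

# All names here are hypothetical, chosen to mirror the checklist in the text.


@dataclass
class AIProject:
    name: str
    metrics: dict = field(default_factory=dict)  # e.g. {"hours_saved_per_week": 10}
    transparency: bool = False
    override_mechanism: bool = False
    bias_monitoring: bool = False
    legal_ethics_review: bool = False


def audit(project: AIProject) -> tuple[str, list[str]]:
    """Return (status, missing trust requirements) for one project."""
    checklist = {
        "transparency": project.transparency,
        "override_mechanism": project.override_mechanism,
        "bias_monitoring": project.bias_monitoring,
        "legal_ethics_review": project.legal_ethics_review,
    }
    missing = [item for item, done in checklist.items() if not done]
    # Rule from the to-do list: no documented metrics means the project pauses.
    status = "active" if project.metrics else "paused"
    return status, missing


# A made-up pilot with no metrics recorded yet.
pilot = AIProject(name="contract-review-pilot", transparency=True, override_mechanism=True)
status, missing = audit(pilot)
print(status, missing)
```

Keeping the audit this explicit makes gaps hard to ignore: a project is either measured or paused, and every unmet trust requirement is listed by name.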

The Long Game: Why Utility-First Thinking Is Existential for AI

Let's zoom out to the big picture.

Technology adoption follows a predictable pattern. First comes novelty and hype. Then comes disappointing reality. Then comes either vindication (if the technology actually solves problems) or obsolescence (if it doesn't).

AI is in the hype phase. Everyone agrees on that. The question is whether the industry will move to vindication or obsolescence.

Nadella's warning is essentially saying: Make it vindication. Prove the utility. Build applications that matter.

Because if AI becomes a synonym for overpromised solutions that don't deliver, the entire field suffers. Not just the companies making false promises, but the researchers doing genuinely important work on difficult problems. The startups working on applications that could improve healthcare. The teams building infrastructure that could accelerate scientific discovery. The organizations using AI thoughtfully to solve real problems.

All of that gets dragged down if social permission erodes.

So Nadella's message isn't just good business advice. It's a plea for the industry to act with long-term responsibility.

The organizations that internalize this thinking now, making utility their north star, measuring relentlessly, and communicating results clearly, will be positioned as leaders as the industry matures.

The organizations still chasing capabilities and headlines? They'll have credibility problems that take years to repair.

That's the real significance of what Nadella said. It's not a warning about some abstract future. It's a clarification of the competitive landscape happening right now.

FAQ

What does Satya Nadella mean by "social permission"?

Social permission refers to the collective acceptance by society—including regulators, employees, customers, and investors—that AI is delivering genuine value and can be trusted as a tool for progress. It's not regulatory approval or market adoption. It's the underlying belief that the technology is doing more good than harm, and that the people building it are being thoughtful about tradeoffs and implications. When this permission erodes, companies face resistance from all directions simultaneously: regulatory scrutiny increases, user adoption slows, talent recruitment becomes difficult, and investor confidence wavers. Nadella is essentially warning that AI needs to prove its worth now, while social permission still exists, or risk losing that permission as expectations collide with reality.

Why is utility more important than capability for AI success?

Capability describes what a system can do. Utility describes whether what it does actually matters. You can have incredibly capable AI—models that perform brilliantly on benchmarks, that demonstrate surprising reasoning abilities, that handle complex tasks. But if none of that capability translates into solving real problems people care about, it's just interesting technology. Utility is the bridge between capability and business value. It's the difference between "this AI can analyze documents" and "this AI saves our legal team 10 hours per week on contract review." The second one builds trust. The first one just generates interest. That's why Nadella emphasizes utility. It's the only thing that sustains long-term adoption and social permission.

What happens if AI companies don't deliver utility quickly?

Historically, technologies that fail to demonstrate clear value within a critical window face a severe credibility crisis. With blockchain, massive hype in 2017-2018 collapsed when practical applications failed to emerge at scale. VR faced a similar cycle, taking a decade to recover. For AI, the stakes are higher because the technology touches more industries and affects more people simultaneously. If companies can't prove utility within the next 2-3 years, investment will dry up, regulatory restrictions will tighten further, public skepticism will deepen, and the entire field will face a "trough of disillusionment" that could delay genuinely important applications by years. Past cycles of this kind have taken 5-10 years to recover from.

How should organizations measure AI utility in their own operations?

Start with a clear baseline metric for whatever problem you're solving. Before deploying AI, know exactly how long the process takes today, how many errors occur, what the cost is, and what the business impact is. Then deploy the AI system and measure the same metrics at regular intervals—weekly for the first month, then monthly. Utility exists when you see measurable improvement: 15% faster completion time, 20% fewer errors, 30% cost reduction. Document these numbers rigorously. The more specific and measurable your utility metrics, the clearer the case for continued investment becomes. Organizations that track metrics this rigorously report 2-3x higher success rates with AI deployment.
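To make the baseline comparison concrete, here is a small sketch of the arithmetic. The metric names and figures are invented for illustration, and `percent_improvement` is a hypothetical helper that assumes lower values are better (time, errors, cost).

```python
def percent_improvement(baseline: float, current: float) -> float:
    """Percent reduction relative to baseline, for lower-is-better metrics."""
    if baseline <= 0:
        raise ValueError("baseline must be positive")
    return (baseline - current) / baseline * 100


# Made-up measurements taken before and after deploying an AI system.
before = {"minutes_per_task": 40.0, "errors_per_100": 10.0, "cost_per_task": 5.0}
after = {"minutes_per_task": 34.0, "errors_per_100": 8.0, "cost_per_task": 3.5}

report = {
    metric: round(percent_improvement(before[metric], after[metric]), 1)
    for metric in before
}
print(report)
# {'minutes_per_task': 15.0, 'errors_per_100': 20.0, 'cost_per_task': 30.0}
```

The same calculation, rerun at each measurement interval, gives a running record of whether the improvement holds up over time.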

Is social permission the same as regulatory approval?

No. Regulatory approval is about compliance. A technology can be legally approved but socially rejected. The public can refuse to use it, employees can refuse to work with it, investors can refuse to fund it. Social permission is more fundamental because it's about belief and trust. You can force regulatory compliance through law, but you can't force acceptance through legislation. That's why Nadella's emphasis on social permission is so significant. Regulatory approval is a binary checkpoint. Social permission is an ongoing relationship that requires consistent demonstration of value and trustworthiness. Lose social permission and you'll eventually face tighter regulation. Maintain social permission and you can work through regulatory challenges.

What's the role of transparency in maintaining AI social permission?

Transparency serves two critical functions. First, it allows users to understand how AI is making decisions, which builds trust. If an AI system recommends something and you can see the reasoning, you can evaluate whether that reasoning is sound. If it's a black box, you have to trust blindly. Second, transparency enables accountability. When something goes wrong—and with AI systems, something eventually will—transparency allows people to understand what happened and why. Organizations that build transparency into their AI systems from the start are building credibility that sustains them when edge cases or failures inevitably occur. Research on AI trust indicates that transparency and explainability are among the strongest factors in maintaining user confidence.

How does Microsoft's Copilot strategy reflect Nadella's utility-first thinking?

Rather than betting on a standalone AI consumer product, Microsoft is embedding AI into tools people already use: Office, Windows, development environments, business applications. The utility isn't in the AI itself. It's in making Excel analysts 20% more productive, helping developers write code 30% faster, reducing time to draft business documents by 40%. Those are concrete, measurable improvements to work people already do. The AI is useful because it enhances existing workflows, not because it creates entirely new workflows. This approach builds utility into the deployment from day one, which is exactly what Nadella is advocating for. It's sustainable because the value is obvious and demonstrable, not speculative or theoretical.

What industries will see AI social permission erode first if utility isn't delivered?

Industries with high job displacement concerns will face credibility challenges first. If AI eliminates jobs but doesn't deliver clear benefits to the affected workers or their communities, permission erodes quickly. Healthcare and professional services face particular scrutiny because people's wellbeing is directly at stake. Similarly, criminal justice and content moderation applications face intense social oversight. Where social permission is strongest, because the utility is clear and the risks are manageable, adoption will accelerate. Where permission is in question, because job loss is acute but utility is unclear, deployment will slow or face resistance. Understanding this dynamic is critical for organizations planning AI strategy across different sectors.

How long do organizations have to prove AI utility before social permission starts eroding?

Based on historical technology adoption cycles and current market momentum, the critical window is approximately 24-36 months. That's roughly how far we are now from the ChatGPT adoption surge of late 2022. Within this timeframe, organizations need to demonstrate concrete, measurable value with deployed systems. After 36 months, if the gap between hype and delivered utility remains wide, regulatory scrutiny will increase significantly and social skepticism will deepen. This doesn't mean every AI project needs success by that deadline. But the industry collectively needs to show that AI is solving real problems at meaningful scale. Gartner's hype cycle research suggests that the "trough of disillusionment" for AI could arrive within 2-3 years if utility remains unclear.

Can companies recover if they lose social permission around AI?

Yes, but it's painful and takes years. Blockchain recovered some credibility after its 2018 crash by focusing on specific applications where utility was clear: cryptocurrency, supply chain tracking, smart contracts. That recovery took years. VR is still recovering from its 2015-2016 hype cycle, roughly a decade later. The recovery path requires three things: First, ruthless focus on applications where utility is unambiguous. Second, transparent communication about what went wrong with the hype and how you're correcting course. Third, long-term consistency in demonstrating value without additional hype. Organizations that maintain social permission during the critical window avoid this recovery cost entirely, which is why Nadella's warning is so strategically important.

What role do employees play in maintaining AI social permission?

Employee perspective and experience are foundational to social permission. If employees see AI as a tool that augments their capabilities and makes their work better, they become advocates. If they see it as a threat to their jobs or a system imposed on them without input, they become skeptics. Organizations that involve employees early in AI implementation, that train them to use these tools effectively, and that protect against job displacement through retraining and transition support build internal credibility that extends outward. Employee sentiment around AI adoption is among the strongest predictors of organizational success with AI implementation. This is why Nadella's emphasis on proven utility matters. It shifts the employee relationship from "management is replacing us" to "management is helping us do better work."

The Bottom Line

Nadella's warning is profound precisely because it's obvious once you hear it: Technology without utility is just complexity. AI needs to solve real problems for real people, or it will lose the permission to keep building.

That's not a technical requirement. That's a social one. And it's far harder to fix after the fact than to build in from the start.

For organizations building AI applications, that clarity is invaluable. Stop asking "How can we use AI?" Start asking "What specific problem does AI solve here, and can we measure whether it actually solves it?" Build from utility outward, not from capability inward.

The companies that do that—ruthlessly focusing on measurable outcomes, transparently communicating results, and continuously monitoring whether the technology is delivering on its promise—will emerge as the leaders of the next wave of AI adoption.

The companies still chasing hype and headlines? Their credibility problems are just beginning.

Key Takeaways

- Social permission—public belief in AI's value—matters more than technology capability or market metrics for long-term AI industry success

- Organizations have approximately 24-36 months to prove measurable AI utility before social skepticism intensifies and regulatory restrictions tighten

- Companies must shift from capability-focused AI deployment to outcome-focused implementation with ruthless measurement of business impact

- Historical technology cycles show that hype without utility leads to credibility crises lasting 5-10 years—AI faces similar risk if utility isn't demonstrated

- Transparency, employee involvement, and genuine problem-solving are essential to maintaining social permission; AI-for-AI's-sake approaches will face market rejection
