Introduction: The Hidden Threat to Your AI Infrastructure

Last October, something started happening in the shadows of the internet. Hackers weren't breaking into data centers or stealing passwords. Instead, they were systematically probing exposed AI systems, testing whether misconfigured proxies could serve as a backdoor to expensive language model services. According to Cybersecurity Insiders, such attacks have become increasingly common, highlighting vulnerabilities in AI infrastructure.

The numbers are staggering. Between October 2025 and January 2026, security researchers at GreyNoise logged over 91,000 attack sessions against exposed AI infrastructure. But here's what makes this different from typical hacking campaigns: these weren't random attacks. They were methodical, sophisticated, and deliberately timed to avoid detection.

The worst part? Most organizations don't even know their proxies are exposed.

Misconfigured proxies have become the new frontier of AI security breaches. Unlike ransomware that encrypts your files or data theft that makes headlines, proxy exploitation happens silently. Attackers gain access to your language model APIs, run queries at your expense, and extract sensitive information without triggering obvious alarms. CyberPress reports that these silent breaches can lead to significant financial and operational impacts.

This article breaks down exactly what happened, how the attacks worked, why organizations are vulnerable, and what you need to do to protect yourself. We're talking about real financial impact, stolen computational resources, and potential data exfiltration. The Christmas 2025 attack surge alone revealed just how aggressive these campaigns have become.

If you're running AI infrastructure, using LLM APIs, or managing cloud proxies, you need to understand this threat. Let's dig in.

TL;DR

- 91,000+ attack sessions: GreyNoise detected two major coordinated hacking campaigns targeting exposed AI systems between October 2025 and January 2026.

- Misconfigured proxies are the weakness: Attackers systematically probed for exposed proxies to gain unauthorized access to OpenAI, Google Gemini, Claude, and other major LLM services.

- Two distinct attack strategies: One campaign attempted to trick servers into "phoning home," while the other methodically mapped AI models to identify vulnerable configurations.

- Christmas timing wasn't coincidence: The peak attack activity during the holiday break suggests coordinated malicious operations, not security research.

- Every major LLM was targeted: OpenAI-style APIs, Google Gemini formats, and dozens of model families were systematically tested for misconfigurations.

- Financial and security consequences: Unauthorized access enables query hijacking, model theft, data exfiltration, and massive unexpected API bills.

- Detection is surprisingly hard: Legitimate requests are nearly indistinguishable from attacks. Simple queries like "How many states are in the US" were used to fingerprint models without triggering alerts.

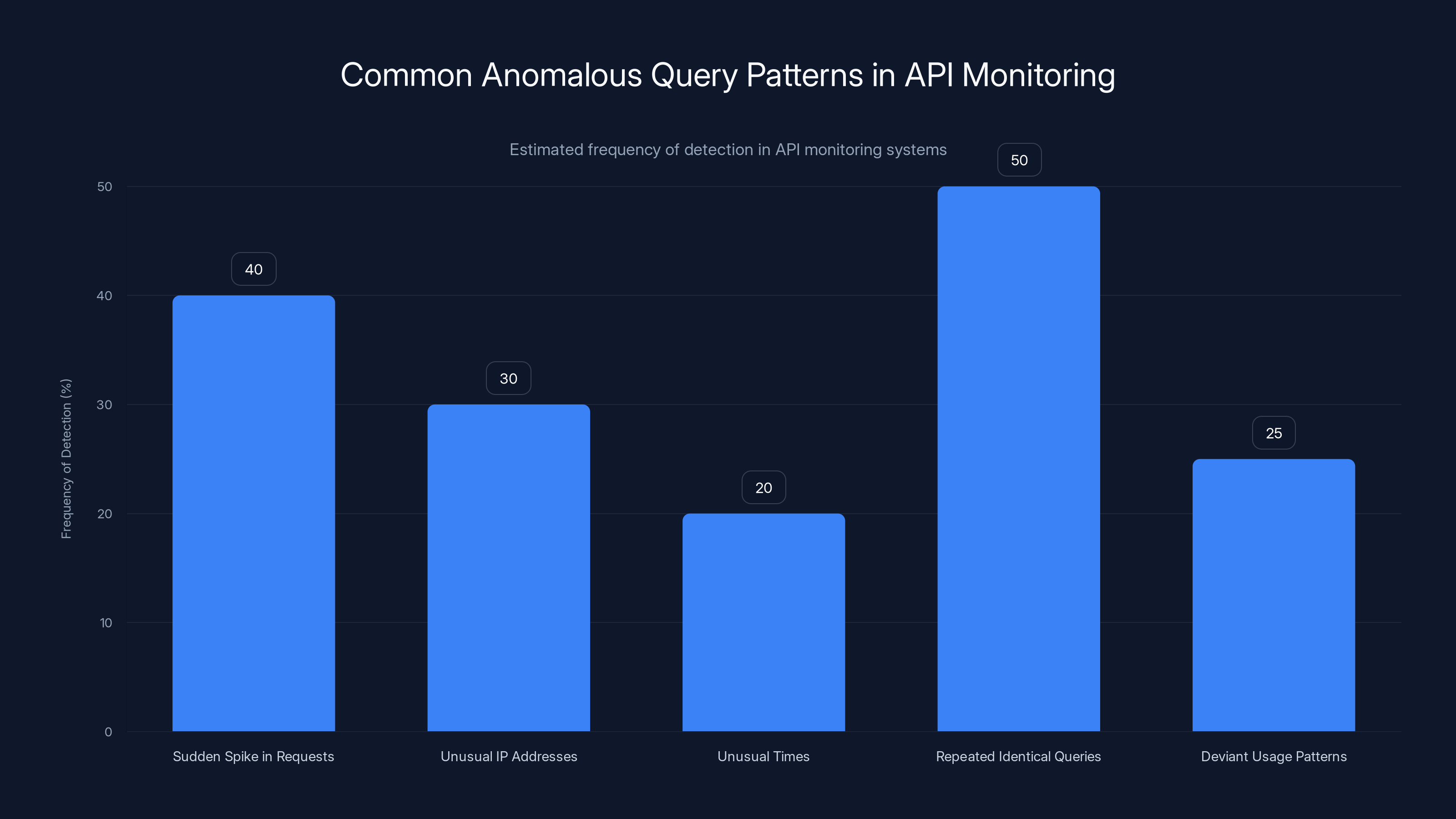

Repeated identical queries are the most frequently detected anomaly, highlighting their prevalence in fingerprinting attacks. Estimated data.

Understanding the Attack Landscape: 91,000 Sessions Decoded

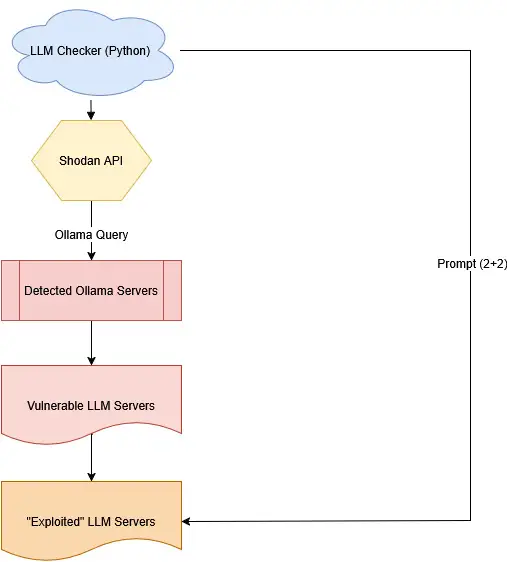

When GreyNoise researchers set up a honeypot (a fake, deliberately exposed AI system designed to catch attackers), they expected to see some probing activity. What they found was a full-scale assault.

91,000 attack sessions isn't just a number. It represents thousands of attackers or a highly organized operation repeatedly targeting the same infrastructure. The scale suggests this wasn't random script-kiddies testing their tools. This was coordinated, systematic, and deliberate.

The timeline matters. The attacks ramped up starting in October 2025, peaked during the Christmas break when security teams were understaffed, and continued through January 2026. This pattern alone tells you these weren't accidental probes or legitimate security researchers testing defenses. Professional security researchers follow responsible disclosure. They don't hammer endpoints tens of thousands of times during holidays when incident response teams are unavailable.

What's particularly concerning is the targeting strategy. Attackers weren't going after the same proxy repeatedly. They were distributing their attacks across multiple IP addresses and techniques, making it harder to identify and block the campaign. Some sessions lasted minutes. Others ran for hours. The variety suggests multiple attack teams or a sophisticated botnet operation.

GreyNoise also noted something critical: the same infrastructure had been used before. These weren't new attackers learning the ropes. These were experienced threat actors with a history of exploiting real-world vulnerabilities. That experience showed in their operational discipline.

Think about what this means for your organization. If attackers are running 91,000+ attack sessions against honeypots, they're likely running thousands more against actual exposed systems. Most companies won't detect these attacks because they're designed to be stealthy.

Campaign One: The "Phone Home" Attack Strategy

The first attack campaign revealed something particularly devious: attackers were trying to trick AI servers into contacting servers under their control.

Here's how it worked. The attacker would send a specially crafted request to the exposed AI system, trying to exploit features like model downloads or webhooks. If successful, the compromised system would automatically "phone home" to the attacker's infrastructure, confirming the server was vulnerable.

This technique, called OAST callbacks (Out-of-Band Application Security Testing), is standard among legitimate security researchers. But when deployed at scale and with malicious intent, it becomes something else entirely. It's reconnaissance at scale.

Why is this dangerous? Because once an attacker confirms a system is vulnerable, they can escalate the attack. They can:

- Redirect API calls to their own servers, capturing request data and API keys

- Man-in-the-middle the connection between your application and the actual LLM service

- Steal authentication tokens embedded in proxy configurations

- Intercept sensitive queries containing proprietary information or personal data

- Hijack API access and run queries at your expense

The attackers weren't trying to extract data immediately. They were gathering intelligence. They wanted to know: which systems were vulnerable, how were they configured, what authentication mechanisms were in place, and could they be exploited?

What's clever about this approach is the subtlety. A single OAST callback looks like a legitimate server-to-server connection. It might be logged, but without proper monitoring, it blends into normal network traffic. It's only when you see hundreds of these callbacks from the same source that you realize you're under attack.

GreyNoise observed attackers testing features like webhooks, mechanisms that allow servers to automatically send data to external services. If a proxy has webhook functionality enabled and misconfigured, an attacker can force it to send sensitive data to their server. This is why so many CISO security bulletins recommend disabling unnecessary proxy features.

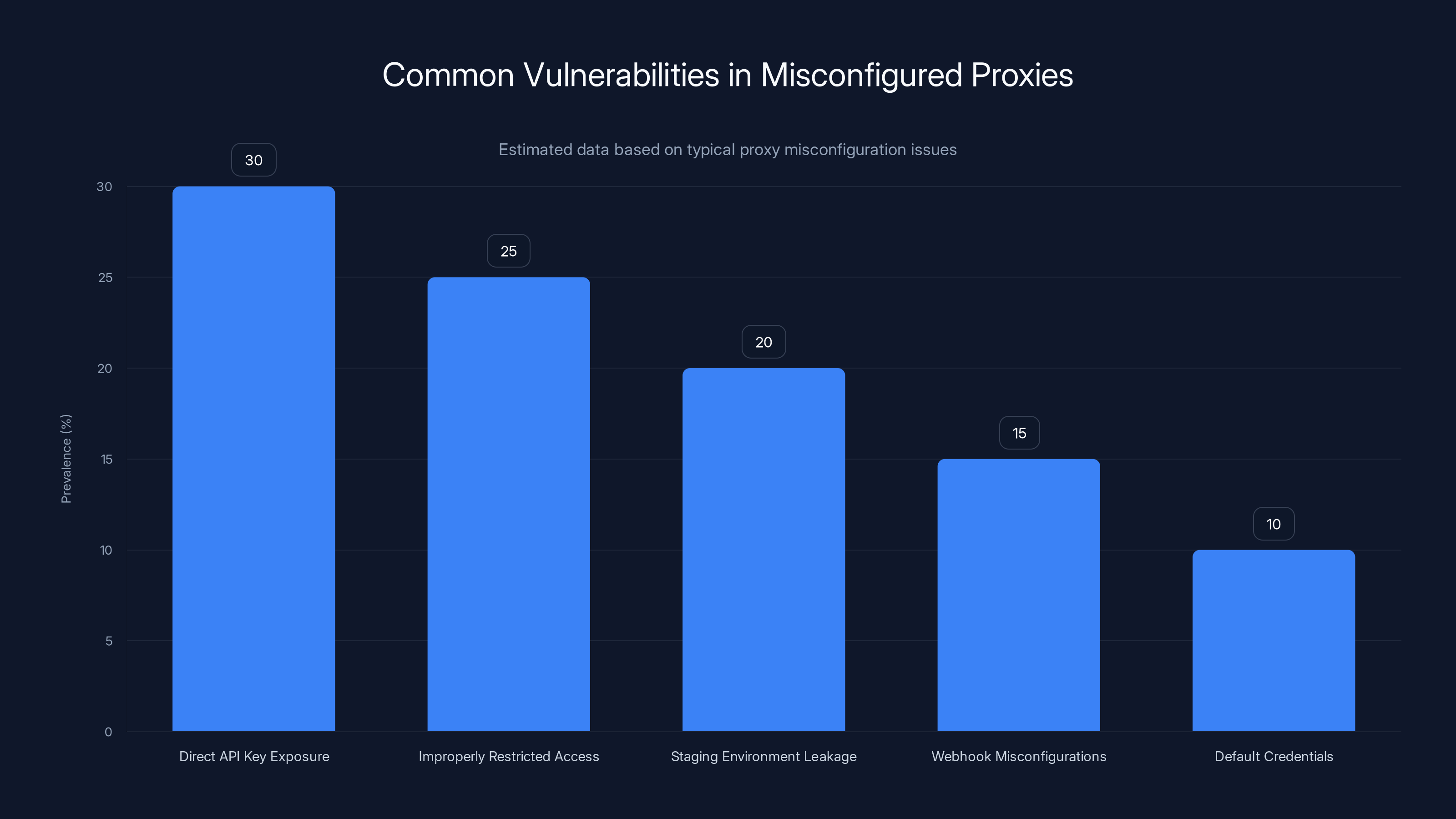

Direct API key exposure is the most common vulnerability in misconfigured proxies, estimated to affect 30% of cases. Estimated data based on typical issues.

Campaign Two: The Systematic Model Mapping Attack

The second campaign was even more systematic. Attackers used two IP addresses to hammer exposed AI endpoints tens of thousands of times, but with a completely different goal: mapping which AI models were accessible and how they were configured.

Instead of trying to exploit vulnerabilities, they were gathering intelligence through brute-force reconnaissance.

The questions they asked were deliberately simple. "How many states are there in the US?" "What is 2 + 2?" "List the first five planets." These aren't queries designed to extract sensitive information. They're fingerprinting queries—requests designed to identify which AI model is responding.

Here's why this matters. Different AI models respond slightly differently. ChatGPT formats answers one way. Google Gemini another. Claude has its own style. An attacker can use these subtle differences to identify exactly which model is running behind the proxy. This information is incredibly valuable because:

- Different models have different APIs—knowing which model is exposed tells you how to exploit it

- Pricing varies by model—attackers prioritize high-value targets

- Different models have different security postures—some are more vulnerable than others

- It reveals your infrastructure choices—competitors and threat actors learn what you're using

- It enables targeted exploits—known vulnerabilities in specific models can be deployed immediately

The systematic nature of this campaign is what stands out. Two IP addresses running tens of thousands of test queries in a highly organized, methodical way. They weren't randomly probing. They were methodically testing:

- OpenAI-style API formats (the most common)

- Google Gemini API structures

- Anthropic Claude configurations

- Dozens of other model families and proprietary implementations

They were looking for accidentally exposed internal APIs, staging environment proxies that weren't meant to be public, and development proxies that inherit production API access.

The beauty of this attack (from the attacker's perspective) is that it didn't trigger security alerts. When you receive millions of legitimate API requests per day, a few thousand simple queries asking "How many states are in the US?" don't stand out. They look like normal traffic. You don't block them because blocking legitimate-seeming queries would break your service.

This is the fundamental problem with API-based attacks: legitimate requests and malicious probing are nearly identical.

Why Misconfigured Proxies Are Such Attractive Targets

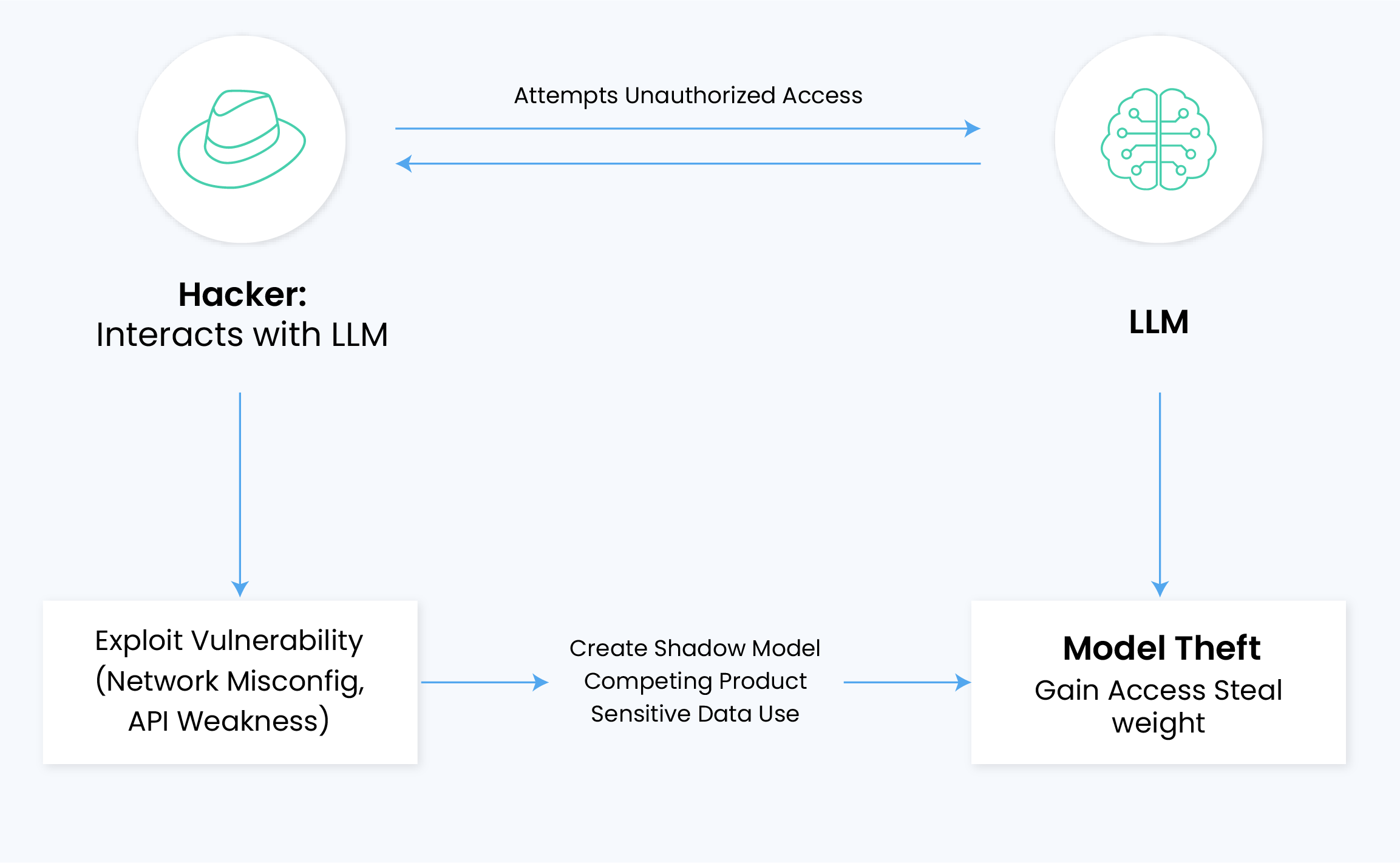

Let's be clear about something: attackers aren't interested in your proxy because they want to test your infrastructure. They're interested because misconfigured proxies are gateways to paid LLM services worth thousands or tens of thousands of dollars.

A misconfigured proxy typically exposes one of these vulnerabilities:

1. Direct API Key Exposure in Headers

Some proxies are set up to pass API authentication directly through. An attacker who gains access to the proxy can read the headers and extract your API key. Once they have your API key, they can:

- Query OpenAI's systems at your expense (a single complex query can cost dollars)

- Run thousands of queries and rack up bills of thousands of dollars overnight

- Access your API usage history and query logs

- Modify API settings and rate limits

2. Improperly Restricted API Access

Organizations often set up proxies to allow all requests through without proper authentication. They assume the proxy itself is behind a firewall or only accessible internally. But misconfiguration can expose the proxy to the public internet. Attackers get access to your LLM endpoints.

3. Staging Environment Leakage

Development and staging environments often have relaxed security. A staging proxy might use a different API key, disabled rate limiting, or non-production credentials. When these staging proxies accidentally get exposed (through misconfigured cloud storage, DNS leakage, or GitHub commits), attackers gain access to an environment without proper protections.

4. Webhook and Callback Misconfigurations

Proxies sometimes allow servers to register webhooks or callbacks. If misconfigured, these can be abused to:

- Exfiltrate data from the proxy

- Trigger unauthorized API calls

- Extract authentication tokens

5. Default Credentials and Hardcoded Secrets

Some proxy deployments are shipped with default credentials that are never changed. Attackers have databases of common defaults and try them against every exposed proxy. If even 1% of organizations use defaults, that's massive scale for attackers.

The financial incentive is enormous. Here's the math:

OpenAI's GPT-4 costs approximately

If an attacker runs 10,000 queries per day, that's

Compound this across multiple models, multiple attackers, and multiple compromised proxies, and you're looking at billions of dollars in stolen computational resources annually.

The Christmas Break Timing: When Security Teams Sleep

There's something notable about the timing of these attacks. They peaked during the Christmas break when security teams were understaffed, incident response was slow, and many monitoring systems ran on reduced staffing.

This wasn't coincidence. This was tactical.

When security teams are at 20% capacity, incident response takes 5-10x longer. An attack detected at 2 PM on December 24 might not receive serious investigation until December 26. By then, attackers have had 48+ hours of undetected access.

The Christmas timing also provides plausible deniability. If GreyNoise or other researchers detect the attack, attackers can claim it was legitimate security research conducted over the holiday break. "We were testing defenses during the break," they might say. It's a convenient excuse.

But GreyNoise did the analysis and found something telling: the same infrastructure had a documented history of real-world vulnerability exploitation. This wasn't random researchers. This was experienced threat actors with a track record of actual attacks, not just testing.

This pattern repeats across industries. Ransomware attacks spike on Friday evenings when incident response teams are smallest. Supply chain attacks often occur during holiday periods. APT groups time their operations around when defenders are weakest.

The lesson: attackers think in terms of defender availability. When you staffed down for the holidays, you made yourself a target.

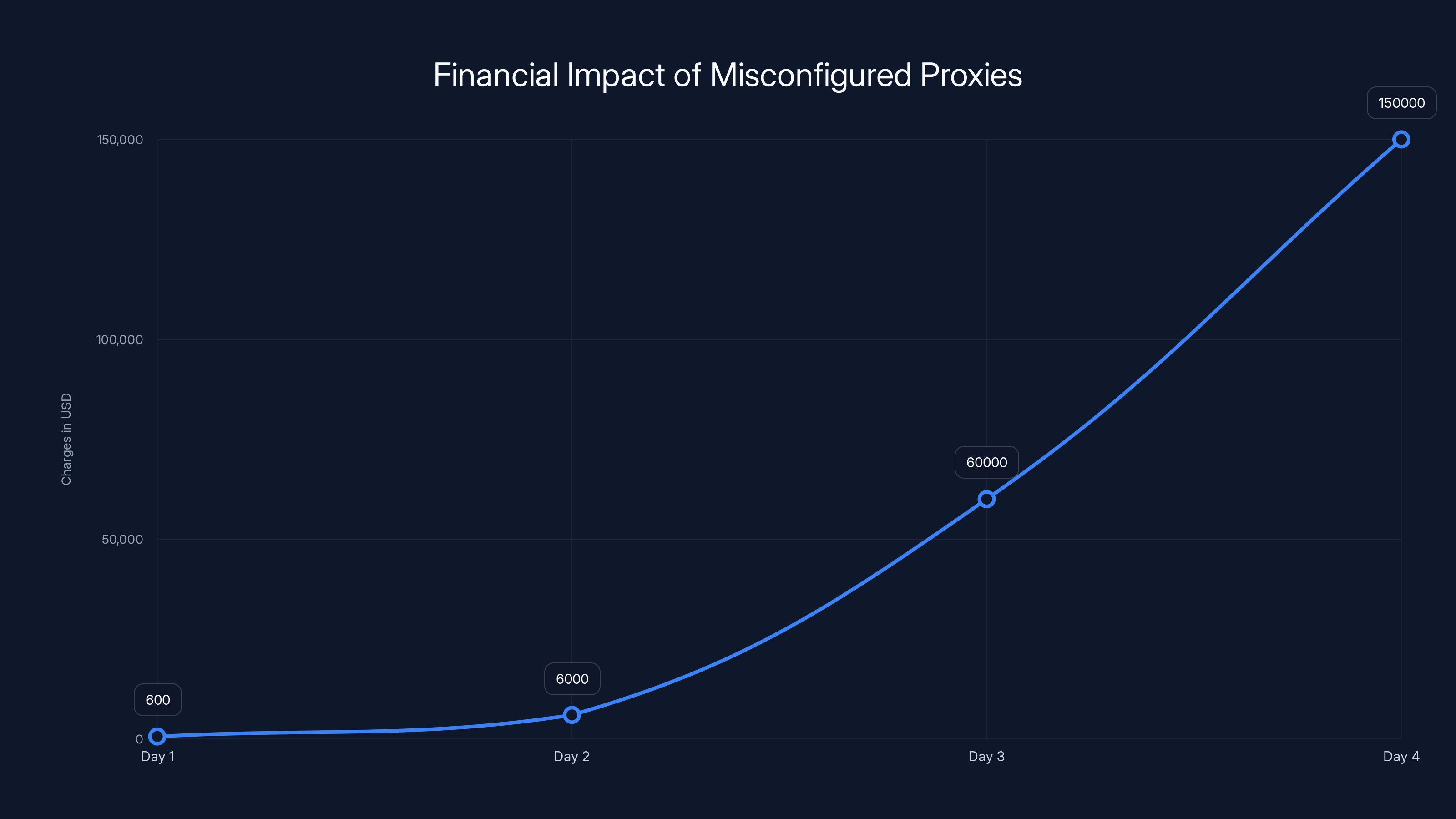

The financial impact of a misconfigured proxy escalated rapidly, reaching $150,000 in unauthorized charges by Day 4. Estimated data based on scenario description.

How Attackers Avoid Detection (And Why It's Working)

Here's what keeps security teams up at night: these attacks are remarkably difficult to detect because legitimate API usage and malicious probing look almost identical.

Consider the attacker's fingerprinting queries: "How many states are in the US?" From a security monitoring perspective, this looks like:

- A legitimate user testing your service

- An automated test script

- A bot collecting training data

- An actual end-user query

You can't block it without potentially blocking legitimate traffic.

Most API monitoring systems track metrics like:

- Queries per second

- Total requests from an IP

- Authentication failures

- Rate limit violations

But attackers are gaming these metrics. Instead of hammering a single endpoint with thousands of requests from one IP (which would trigger rate limiting), they distribute their attacks:

- Across multiple IP addresses (botnet or compromised hosts)

- With varying request rates (avoiding rate limit thresholds)

- Using legitimate-seeming queries (no suspicious keywords or patterns)

- With proper authentication (using stolen API keys or exposed credentials)

The result: the attacks blend into normal traffic.

Guardrails that work against obvious attacks (DDoS, brute force) fail against patient reconnaissance. An attacker running 100 fingerprinting queries per day from 100 different IPs isn't triggering any alerts. They're just part of the noise.

This is why GreyNoise's research was set up as a honeypot. They couldn't catch these attacks with traditional monitoring. They had to create a fake system and watch who attacked it. That's the only reliable detection method for sophisticated reconnaissance campaigns.

The Scope of Vulnerability: OpenAI, Gemini, Claude, and Beyond

The attack campaigns didn't target a single LLM service. They systematically tested against multiple major platforms:

OpenAI API Implementations

OpenAI's API is the most widely deployed. Countless organizations have built proxies around ChatGPT, GPT-4, and other models. An exposed OpenAI proxy is like winning the lottery for attackers. The API key gives access to some of the most powerful models available.

Google Gemini APIs

As Google's flagship LLM service, Gemini is increasingly popular in enterprise environments. Organizations are deploying Gemini proxies for document processing, code generation, and analysis tasks. If those proxies are misconfigured and exposed, attackers gain access.

Anthropic Claude

Claude is gaining adoption in security-conscious organizations because of its focus on safety. But exposed Claude proxies still represent valuable targets. Claude is particularly good at analysis and reasoning tasks, making it valuable for attackers doing complex reconnaissance or data analysis.

Proprietary and Internal Implementations

Many organizations train custom models or fine-tune existing models for proprietary use cases. An exposed proxy to a custom model represents intellectual property theft.

GreyNoise observed attackers sending requests formatted for "dozens of major model families." This means they were testing for:

- Common implementations (OpenAI, Google, Anthropic)

- Less common but valuable services (Perplexity, Together AI, Replicate)

- Proprietary proxies that might expose custom models

- Internal implementations that shouldn't be public

The breadth of testing shows attackers aren't looking for a specific service. They're looking for any exposed proxy that gives them access to valuable computational resources.

If you have a proxy to any major LLM service, you're a target. Period.

Technical Deep Dive: Attack Mechanics and Exploitation Techniques

Let's get into the technical weeds. Understanding exactly how these attacks work is critical for defending against them.

OAST Callbacks and Out-of-Band Detection

The first campaign's "phone home" attack relies on a specific technique. When an attacker sends a specially crafted request to a proxy, they include a reference to a server they control. If the proxy processes the request, it automatically connects back to the attacker's server.

Example scenario:

Attacker sends a request with a webhook URL: https://attacker.com/callback

Proxy receives the request and processes it. As part of processing, the proxy makes an HTTP call to https://attacker.com/callback.

Attacker's server logs the connection, confirming: "This proxy is vulnerable. It processed my request and contacted my server."

This is standard penetration testing methodology. But when deployed at scale and with intent to exploit, it's a reconnaissance attack.

The key insight: the attacker doesn't need to read the response. They just need to confirm the connection happened. The connection itself is proof of vulnerability.
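On the defensive side, the callback in this scenario only succeeds if the proxy is willing to open a connection to an arbitrary URL supplied in a request. A minimal sketch of an outbound allowlist check follows. The function name, the constant, and the two upstream hosts listed are illustrative assumptions, not part of any particular proxy product, and the check does not address DNS-level tricks such as rebinding.

```python
import ipaddress
from urllib.parse import urlsplit

# Assumed allowlist: the only upstream hosts this proxy should ever contact.
ALLOWED_OUTBOUND_HOSTS = frozenset({
    "api.openai.com",
    "generativelanguage.googleapis.com",
})


def is_allowed_outbound(url: str, allowed_hosts=ALLOWED_OUTBOUND_HOSTS) -> bool:
    """Return True only for HTTPS URLs whose host is explicitly allowlisted."""
    try:
        parts = urlsplit(url)
        host = (parts.hostname or "").lower()
    except ValueError:
        return False  # malformed URL
    if parts.scheme != "https" or not host:
        return False
    try:
        ipaddress.ip_address(host)
        return False  # raw IP literals are never allowlisted
    except ValueError:
        pass  # not an IP literal, so fall through to the hostname check
    return host in allowed_hosts
```

With a check like this in front of any webhook or download feature, a request carrying https://attacker.com/callback would be refused before the proxy makes the outbound call.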

Model Fingerprinting Techniques

The second campaign used model fingerprinting. Here's how it works:

- Attacker sends identical query to multiple endpoints: "What is the capital of France?"

- Each endpoint responds with its model's distinctive style

- Attacker analyzes response format, tone, structure, and content

- Matches response to known model signatures in attacker's database

- Identifies which model is running: GPT-4, Claude 3, Gemini, etc.

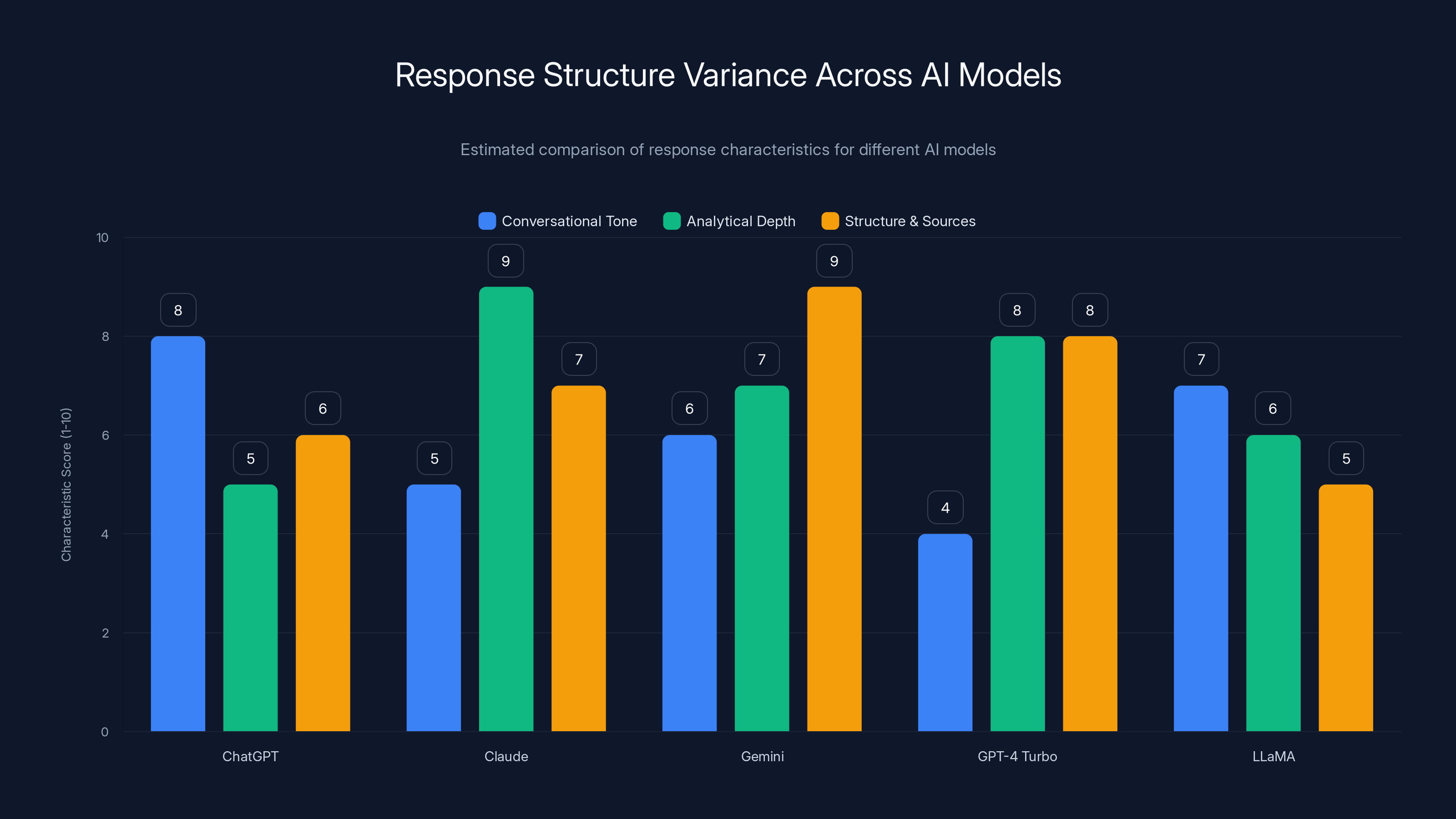

Different models have measurably different response patterns. A qualitative comparison:

Response Structure Variance Across Models:

- ChatGPT: Conversational, often includes caveats

- Claude: Longer, more analytical, includes reasoning

- Gemini: Structured, often includes sources

- GPT-4 Turbo: Technical, precise

- LLaMA: Variable, depends on fine-tuning

Once the attacker identifies the model, they search for known vulnerabilities in that specific model version. Different models have different security postures, different jailbreak techniques, and different ways of exposing information.

API Rate Limit Evasion

Rather than overwhelming an endpoint (which triggers rate limiting), attackers distribute load:

- Send 10 requests per minute from each of 1,000 different IPs = 10,000 requests per minute in total, but only 10 per minute per IP

- Randomize request timing to avoid pattern detection

- Vary query content to avoid content-based filtering

- Rotate through different authentication tokens

Most rate limiting is implemented per-IP or per-API-key. Distribute across both, and you look like legitimate traffic.
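To see why per-IP limits alone miss this pattern, the sketch below replays the 1,000-IP example against two checks: a per-IP ceiling and an aggregate ceiling for the whole proxy. Both thresholds and the function name are assumptions chosen for illustration.

```python
from collections import Counter

PER_IP_LIMIT = 60      # assumed per-IP ceiling, requests per minute
GLOBAL_LIMIT = 2_000   # assumed ceiling for the whole proxy, requests per minute


def check_minute(requests_by_ip: Counter) -> dict:
    """Evaluate one minute of traffic against per-IP and aggregate limits."""
    per_ip_violations = [ip for ip, n in requests_by_ip.items() if n > PER_IP_LIMIT]
    total = sum(requests_by_ip.values())
    return {
        "per_ip_violations": per_ip_violations,
        "total": total,
        "global_exceeded": total > GLOBAL_LIMIT,
    }


# 1,000 distinct sources, each sending 10 requests in the same minute.
traffic = Counter({f"ip-{i}": 10 for i in range(1000)})
result = check_minute(traffic)
print(result["per_ip_violations"], result["total"], result["global_exceeded"])
```

No single source crosses the per-IP line, yet the aggregate count does, which is the argument for tracking totals per proxy and per upstream key in addition to per-client counters.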

Authentication Token Extraction

If an attacker gains proxy access, they can:

- Read request/response headers (if proxy is compromised)

- Examine stored API keys (if proxy has local storage)

- Intercept authentication flows (if proxy is a man-in-the-middle)

- Extract session tokens from cookies or headers

Once extracted, the attacker can use these tokens independently, bypassing the proxy entirely.

Vertical and Horizontal Escalation

After gaining proxy access, attackers often escalate:

- Vertical escalation: Request administrative access, higher rate limits, or premium features

- Horizontal escalation: Discover other services the proxy can access (S3 buckets, databases, internal services)

- Lateral movement: Use proxy access to attack other systems or gain deeper access

The pie chart illustrates estimated implementation levels of proxy security best practices across different categories. Monitoring and Logging is often prioritized, while Configuration Hardening may receive less focus. Estimated data.

Real-World Impact: When Misconfigured Proxies Cost Real Money

Let's talk about what this actually means in dollars and cents.

Scenario One: Misconfigured Production Proxy

A startup deploys a proxy to route ChatGPT requests for their customer-facing application. The proxy is set up with minimal authentication (just an internal hostname check). Due to DNS misconfiguration, the proxy becomes accessible from the internet.

Attackers discover the proxy. They begin running queries.

- Day 1: 5,000 queries (small test run), $600 in charges

- Day 2: 50,000 queries (ramped up attack), $6,000 in charges

- Day 3: 500,000 queries (full-scale exploitation), $60,000 in charges

- Day 4: Security team finally notices the spike. By then: $150,000+ in unauthorized charges.

The startup's API bill tripled overnight. Their incident response team spends a week investigating, then negotiating with OpenAI to dispute charges (rarely successful). The damage: lost revenue, incident response costs, and reputation damage when customers learn their API keys were exposed.
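The daily figures in this scenario can be checked with a few lines of arithmetic. The sketch below takes the listed numbers at face value; the variable names are illustrative, and the "implied" Day 4 amount is only the difference between the stated total and the first three days, not a figure from the scenario itself.

```python
# (queries, charges in USD) for Days 1 to 3, as listed in the scenario.
daily = [(5_000, 600), (50_000, 6_000), (500_000, 60_000)]

# Cost per query is the same on each day.
cost_per_query = [charges / queries for queries, charges in daily]

# Charges accumulated before the team notices on Day 4.
total_three_days = sum(charges for _, charges in daily)

# What must accrue on Day 4 to reach the stated $150,000 total.
implied_day_four = 150_000 - total_three_days

print(cost_per_query, total_three_days, implied_day_four)
```

At roughly $0.12 per query, the first three days account for $66,600, so most of the final bill lands in the hours before the spike is noticed. That is the case for hourly rather than daily spending alerts.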

Scenario Two: Staging Environment Data Breach

An organization maintains a staging proxy that's "less secure" because it's not production. Test API keys are left in code commits. Staging credentials are shared in Slack.

Attackers compromise the staging proxy and discover it has access to:

- Production-like customer data (for testing)

- API keys for multiple services (testing integrations)

- Internal tool access (testing integrations)

Attackers extract the data and sell it. The organization's production systems were never directly compromised, but attackers gained confidential customer data through the staging proxy. A GDPR investigation follows, with potential fines of up to 4% of annual global revenue.

Scenario Three: Intellectual Property Exfiltration

A company uses a custom LLM proxy for internal document processing. They've fine-tuned a model on proprietary business data. The proxy is exposed through a misconfigured load balancer.

Attackers gain access and begin querying the fine-tuned model with strategic questions, trying to extract proprietary information. They discover:

- Customer names and contract values

- Internal pricing algorithms

- Confidential project details

- Training data that shouldn't be public

Competitors purchase this extracted information. The company's competitive advantage erodes.

These aren't hypothetical scenarios. Organizations lose millions to misconfigured API access every year. The only reason we don't hear about all of them is that many companies don't publicly disclose API compromise incidents (bad publicity) and quietly fix the issue.

Defense Strategy One: Network Segmentation and Access Control

The foundation of protecting against proxy attacks is making sure exposed proxies can't exist in the first place.

Zero Trust Network Access

Instead of assuming internal networks are safe, implement zero trust architecture:

- Require explicit authentication for every service access

- Verify identity and device posture before allowing connections

- Implement least-privilege access (only allow what's needed)

- Monitor all internal connections as suspicious by default

Applied to proxies: Your proxy should never be accessible from the internet unless explicitly intended. If it must be internet-accessible, require strong authentication on every request.

IP Whitelisting

Deploy proxies behind IP whitelists that only allow known, trusted sources. This is simple but effective:

- Only allow connections from your application servers

- Only allow your team's office IPs for management

- Block everything else automatically

The limitation: if your application is distributed (serverless functions, edge servers, etc.), maintaining a whitelist becomes complex. But it's still better than no restrictions.
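A minimal sketch of such a source check, using Python's standard `ipaddress` module. The two CIDR ranges are placeholders (a private subnet and a documentation range), and the function name is an assumption; in practice this logic usually lives in a firewall, security group, or gateway rule rather than application code.

```python
import ipaddress

# Placeholder ranges: an application subnet and an office egress range.
ALLOWED_NETWORKS = [
    ipaddress.ip_network("10.0.1.0/24"),
    ipaddress.ip_network("203.0.113.16/28"),
]


def is_allowed_source(ip: str) -> bool:
    """Return True if the client address falls inside an allowed network."""
    try:
        addr = ipaddress.ip_address(ip)
    except ValueError:
        return False  # not a valid IP address, so deny
    return any(addr in net for net in ALLOWED_NETWORKS)
```

Anything that fails to parse or falls outside the listed ranges is denied, so the default is to block.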

VPC and Subnet Isolation

Use virtual private cloud infrastructure to isolate proxies from public networks:

- Deploy proxies in private subnets with no internet access

- Use NAT gateways for outbound connections only

- Implement security groups that restrict ingress to trusted sources

- Use virtual private networks (VPNs) for any necessary external access

API Gateway Rate Limiting

Even if authentication is compromised, rate limiting can slow attackers:

- Limit requests per second per IP: 10 requests/second

- Limit requests per day per API key: 100,000 requests/day

- Implement burst limits: no more than 100 requests in 1 second

- Progressive delays for suspicious patterns: add 1 second delay per 10 failed attempts

Attackers will eventually overcome these, but they increase the cost and time of exploitation.
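The per-second and burst limits above map naturally onto a token bucket. The sketch below is one common way to implement that idea, not the behavior of any specific API gateway; the class name and the injectable clock are assumptions made so the example can be tested deterministically.

```python
import time


class TokenBucket:
    """Allow `rate` requests per second on average, with bursts up to `burst`."""

    def __init__(self, rate: float, burst: int, now=time.monotonic):
        self.rate = rate
        self.capacity = burst
        self.tokens = float(burst)
        self._now = now
        self.updated = now()

    def allow(self) -> bool:
        current = self._now()
        elapsed = current - self.updated
        self.updated = current
        # Refill in proportion to elapsed time, never beyond the burst size.
        self.tokens = min(self.capacity, self.tokens + elapsed * self.rate)
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False
```

A gateway would typically keep one bucket per client IP and one per API key, for example `TokenBucket(rate=10, burst=100)` for the limits listed above, and reject a request when either bucket is empty.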

Defense Strategy Two: Authentication and Authorization

Even if network access is gained, strong authentication should prevent exploitation.

Multi-Factor Authentication

Require multiple factors for API access:

- Something you know (API key)

- Something you have (time-based token, hardware key)

- Something you are (biometric, if applicable)

For APIs, this typically means:

- API key (standard)

- HMAC signature with timestamp (adds validation)

- IP whitelisting (matches device location)
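The "HMAC signature with timestamp" item can be sketched with the standard library. The message layout, the five-minute skew window, and the function names below are assumptions for illustration; real schemes differ in exactly which request fields they sign.

```python
import hashlib
import hmac
import time

MAX_SKEW_SECONDS = 300  # assumed replay window


def sign(secret: bytes, method: str, path: str, body: bytes, timestamp: int) -> str:
    """Sign the timestamp, method, path, and body with HMAC-SHA256."""
    message = f"{timestamp}\n{method.upper()}\n{path}\n".encode() + body
    return hmac.new(secret, message, hashlib.sha256).hexdigest()


def verify(secret: bytes, method: str, path: str, body: bytes,
           timestamp: int, signature: str, now=None) -> bool:
    """Reject stale timestamps, then compare signatures in constant time."""
    now = time.time() if now is None else now
    if abs(now - timestamp) > MAX_SKEW_SECONDS:
        return False
    expected = sign(secret, method, path, body, timestamp)
    return hmac.compare_digest(expected, signature)
```

Because the signing secret never travels with the request, a leaked header reveals one signed request rather than a reusable credential, and the timestamp check limits how long that request can be replayed.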

API Key Rotation

Automatically rotate API keys on a schedule:

- Rotate production keys every 30 days

- Rotate high-risk keys every 7 days

- Rotate compromised keys immediately

Key rotation isn't perfect (if an attacker has current access, they can see new keys), but it ensures old stolen keys become useless.

Secrets Management

Never hardcode API keys in code, configuration files, or environment variables. Use a secrets management system:

- HashiCorp Vault

- AWS Secrets Manager

- Azure Key Vault

- Google Cloud Secret Manager

These systems:

- Encrypt secrets at rest

- Encrypt secrets in transit

- Audit every access to secrets

- Support automatic rotation

- Support fine-grained permissions

OAuth 2.0 and JWT Tokens

For more sophisticated authentication:

- Use OAuth 2.0 for delegated access

- Use JWT (JSON Web Tokens) for stateless authentication

- Set short token expiration (minutes to hours, not days)

- Include token scope limitations (this token can only access specific endpoints)

- Verify token signatures on every request
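To make the checks in this list concrete, here is a standard-library sketch of HS256 token verification covering signature, expiry, and scope. It is meant to show what gets verified, under the assumption of a shared secret and a space-separated `scope` claim; a production system would normally rely on a maintained JWT library instead of hand-rolled code.

```python
import base64
import hashlib
import hmac
import json
import time


def _b64url_encode(raw: bytes) -> str:
    return base64.urlsafe_b64encode(raw).rstrip(b"=").decode("ascii")


def _b64url_decode(segment: str) -> bytes:
    return base64.urlsafe_b64decode(segment + "=" * (-len(segment) % 4))


def _sign(secret: bytes, signing_input: str) -> bytes:
    return hmac.new(secret, signing_input.encode("ascii"), hashlib.sha256).digest()


def issue_token(secret: bytes, claims: dict) -> str:
    """Build a compact HS256 token (helper for the example below)."""
    header = _b64url_encode(json.dumps({"alg": "HS256", "typ": "JWT"}).encode())
    payload = _b64url_encode(json.dumps(claims).encode())
    signature = _b64url_encode(_sign(secret, f"{header}.{payload}"))
    return f"{header}.{payload}.{signature}"


def verify_token(secret: bytes, token: str, required_scope: str, now=None):
    """Return the claims if signature, expiry, and scope all check out, else None."""
    now = time.time() if now is None else now
    try:
        header_b64, payload_b64, signature_b64 = token.split(".")
        header = json.loads(_b64url_decode(header_b64))
        claims = json.loads(_b64url_decode(payload_b64))
        signature = _b64url_decode(signature_b64)
        expected = _sign(secret, f"{header_b64}.{payload_b64}")
    except ValueError:
        return None  # wrong segment count, bad base64, or bad JSON
    if not isinstance(header, dict) or not isinstance(claims, dict):
        return None
    if header.get("alg") != "HS256":
        return None  # never let the token choose its own algorithm
    if not hmac.compare_digest(expected, signature):
        return None
    exp = claims.get("exp")
    if not isinstance(exp, (int, float)) or exp <= now:
        return None  # missing or expired
    if required_scope not in str(claims.get("scope", "")).split():
        return None
    return claims
```

A proxy would call `verify_token` on every request and forward to the LLM service only when it returns claims, which keeps a stolen token useful for minutes rather than indefinitely.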

Estimated data shows varying response characteristics across AI models. Claude is noted for its analytical depth, while Gemini excels in structured responses with sources.

Defense Strategy Three: Monitoring and Detection

Even with strong defenses, you need to detect attacks when they occur.

Anomalous Query Detection

Monitor for unusual query patterns:

- Sudden spike in request volume (2x normal traffic)

- Queries from unusual IP addresses

- Queries at unusual times (middle of the night)

- Repeated identical queries (fingerprinting attacks)

- Queries that differ from normal usage patterns

Use machine learning to establish baseline traffic patterns, then flag significant deviations.
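Before reaching for a trained model, a plain statistical baseline already catches the "2x normal traffic" case. The sketch below is that simpler stand-in, not a machine learning method; the thresholds and the function name are assumptions.

```python
from statistics import mean, stdev


def is_volume_anomaly(history, current, ratio_threshold=2.0, z_threshold=3.0) -> bool:
    """Flag `current` if it is far above the baseline built from `history`.

    `history` is a list of request counts for comparable past periods
    (for example, the same hour on previous days); it needs two or more values.
    """
    baseline = mean(history)
    if current >= ratio_threshold * baseline:
        return True  # the "2x normal traffic" rule
    spread = stdev(history)
    if spread == 0:
        return current > baseline
    return (current - baseline) / spread > z_threshold


hourly_history = [1000, 1100, 950, 1050, 1020, 980, 1010]
print(is_volume_anomaly(hourly_history, 1030))  # an ordinary hour
print(is_volume_anomaly(hourly_history, 2500))  # more than double the baseline
```

The ratio rule handles sudden spikes, while the z-score rule picks up smaller increases on keys whose traffic is normally very steady.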

API Usage Monitoring

Track metrics per API key:

- Total queries per day

- Total tokens consumed

- Total cost per day

- Requests per minute

- Requests per geographic region

Set alerts when any metric exceeds threshold. Example: if a key normally generates

Query Content Analysis

Monitor query content for suspicious patterns:

- Repeated identical queries (fingerprinting)

- Queries with multiple different API keys (trying to map access)

- Queries in unknown languages (testing models)

- Queries with suspicious payloads (prompt injection attempts)

- Queries requesting sensitive information (data exfiltration attempts)
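The first item, repeated identical queries, is the easiest of these to check mechanically. A rough sketch: normalize each prompt, then count how many distinct sources sent the same text within a window. The `(source, query)` event shape, the `min_sources` threshold, and the function names are assumptions.

```python
from collections import defaultdict


def normalize(query: str) -> str:
    """Lowercase and collapse whitespace so trivial variations still match."""
    return " ".join(query.lower().split())


def find_repeated_queries(events, min_sources=3) -> dict:
    """Map each normalized query to its source count, if seen from enough sources.

    `events` is an iterable of (source, query) pairs from one time window,
    where source is a client IP or API key identifier.
    """
    sources_by_query = defaultdict(set)
    for source, query in events:
        sources_by_query[normalize(query)].add(source)
    return {
        query: len(sources)
        for query, sources in sources_by_query.items()
        if len(sources) >= min_sources
    }
```

For example, three different sources all asking "How many states are in the US?" within the same window would be returned as a single entry with a count of 3, while one-off prompts are ignored.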

Access Log Auditing

Collect and analyze all API access logs:

- Who accessed what, when, and from where

- What data was requested or modified

- What authentication was used

- What the response was

Store logs in immutable storage (attacker can't delete evidence of their access). Review logs weekly for suspicious patterns.
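Where true write-once storage is not available, hash chaining at least makes tampering detectable. The sketch below links each log entry to the hash of the previous one; the field names and helper functions are assumptions. It is tamper-evident rather than tamper-proof, and it does not notice entries removed from the end of the log unless the latest hash is also recorded somewhere else.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder hash that precedes the first entry


def _entry_hash(prev_hash: str, record: dict) -> str:
    payload = json.dumps(record, sort_keys=True)
    return hashlib.sha256((prev_hash + payload).encode()).hexdigest()


def append_entry(log: list, record: dict) -> None:
    """Append a record linked to the hash of the entry before it."""
    prev_hash = log[-1]["hash"] if log else GENESIS
    log.append({"record": record, "prev": prev_hash,
                "hash": _entry_hash(prev_hash, record)})


def verify_chain(log: list) -> bool:
    """Recompute every hash; an edited or removed middle entry breaks the chain."""
    prev_hash = GENESIS
    for entry in log:
        if entry["prev"] != prev_hash:
            return False
        if entry["hash"] != _entry_hash(prev_hash, entry["record"]):
            return False
        prev_hash = entry["hash"]
    return True
```

Running `verify_chain` as part of the weekly review turns "was this log altered?" into a yes or no check.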

Behavioral Baseline Analysis

Establish normal behavior for each API key:

- Which endpoints does it normally access?

- What time of day?

- What geographic region?

- What's typical query frequency?

- What's typical response size?

Alert when behavior deviates from baseline. If an API key suddenly accesses endpoints it's never used before, investigate.
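The endpoint check in that last example needs very little machinery. A minimal sketch, assuming an in-memory store and hypothetical key and endpoint names; a real deployment would persist the baseline and extend it to time of day, region, and request rate.

```python
from collections import defaultdict


class EndpointBaseline:
    """Remember which endpoints each API key has used during a learning period."""

    def __init__(self):
        self._seen = defaultdict(set)

    def learn(self, key_id: str, endpoint: str) -> None:
        self._seen[key_id].add(endpoint)

    def is_deviation(self, key_id: str, endpoint: str) -> bool:
        """True if this key has never been observed calling this endpoint."""
        return endpoint not in self._seen.get(key_id, set())


baseline = EndpointBaseline()
baseline.learn("key-app", "/v1/chat/completions")
print(baseline.is_deviation("key-app", "/v1/chat/completions"))
print(baseline.is_deviation("key-app", "/v1/files"))
```

A key that has only ever called the chat endpoint and suddenly lists files or models is the kind of deviation worth an alert.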

Defense Strategy Four: Proxy Hardening and Configuration

If you're running proxies, harden them specifically.

Disable Unnecessary Features

Every feature is a potential attack surface. Disable what you don't need:

- Webhook functionality (unless specifically required)

- Model download capabilities (rarely needed)

- Out-of-band callbacks (keep disabled by default)

- Dynamic configuration updates (use static config)

- Public health checks (run internal checks only)

- Debug endpoints (never expose in production)

Minimal Proxy Implementation

Keep proxies simple:

- Route requests to the LLM service

- Apply authentication

- Apply rate limiting

- Log requests

- That's it.

Don't implement extra features that complicate the proxy. Every added feature increases attack surface.

Security Headers

For HTTP-based proxies, implement security headers:

X-Content-Type-Options: nosniff

X-Frame-Options: DENY

Content-Security-Policy: default-src 'none'

Strict-Transport-Security: max-age=31536000

These headers prevent some classes of attacks (clickjacking, content-type confusion, etc.).
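For a proxy written in Python, one way to apply these headers uniformly is a small WSGI middleware, sketched below. The wrapper name is an assumption; most frameworks and reverse proxies offer an equivalent built-in setting, which is usually the better place for it.

```python
SECURITY_HEADERS = [
    ("X-Content-Type-Options", "nosniff"),
    ("X-Frame-Options", "DENY"),
    ("Content-Security-Policy", "default-src 'none'"),
    ("Strict-Transport-Security", "max-age=31536000"),
]


def add_security_headers(app):
    """Wrap a WSGI app so every response carries the security headers."""

    def middleware(environ, start_response):
        def patched_start(status, headers, exc_info=None):
            present = {name.lower() for name, _ in headers}
            # Only add headers the application has not already set.
            extra = [(n, v) for n, v in SECURITY_HEADERS if n.lower() not in present]
            return start_response(status, list(headers) + extra, exc_info)

        return app(environ, patched_start)

    return middleware
```

Wrapping the application once, as in `app = add_security_headers(app)`, avoids relying on each handler to remember the headers.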

TLS/HTTPS Only

Always use HTTPS for proxy connections:

- Never accept plain HTTP

- Use TLS 1.3 (or at minimum TLS 1.2)

- Use strong cipher suites

- Pin certificates (prevent man-in-the-middle)

- Validate server certificates (don't trust self-signed)
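For the proxy's own outbound connection to the LLM service, Python's `ssl` module covers the version floor and certificate validation in a few lines. This sketch does not implement certificate pinning, and the function name is an assumption.

```python
import ssl


def make_upstream_context() -> ssl.SSLContext:
    """TLS client context: certificate and hostname checks on, TLS 1.2 minimum."""
    context = ssl.create_default_context()  # verifies certificates and hostnames
    context.minimum_version = ssl.TLSVersion.TLSv1_2
    return context
```

The context can then be passed to the HTTP client the proxy uses, for example `urllib.request.urlopen(url, context=make_upstream_context())`.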

Proxy Isolation

Run proxies in isolated environments:

- Containerized (Docker) with minimal base image

- Limited resource allocation (can't consume entire system)

- No network access except to LLM service

- Read-only filesystem (can't modify itself)

- No shell access (can't execute arbitrary commands)

The Role of API Providers in Preventing Misuse

While organizations are responsible for their own security, API providers can help.

Request Origin Verification

LLM providers can verify requests originate from expected sources:

- Track which IP addresses typically make requests for each API key

- Flag requests from anomalous IPs

- Require explicit approval for new requesting IPs

- Block requests from known VPNs or proxy services
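A provider-side version of this can be very small. The sketch below is an assumption about how such tracking might look, not a description of any provider's actual system: it keeps an approved set of source IPs per key and sends anything unseen to review.

```python
from collections import defaultdict


class OriginTracker:
    """Remember approved source IPs per API key and flag anything new."""

    def __init__(self):
        self.approved = defaultdict(set)

    def approve(self, api_key_id: str, ip: str) -> None:
        self.approved[api_key_id].add(ip)

    def check(self, api_key_id: str, ip: str) -> str:
        """Return "allow" for a known IP, "review" for an unseen one."""
        return "allow" if ip in self.approved[api_key_id] else "review"


tracker = OriginTracker()
tracker.approve("key-1", "203.0.113.10")
print(tracker.check("key-1", "203.0.113.10"), tracker.check("key-1", "198.51.100.7"))
```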

Spending Caps and Alerts

Providers should implement:

- Hard spending caps (stop accepting requests after threshold)

- Soft spending alerts (notify user at 50%, 75%, 90% of budget)

- Daily spending limits (reset each day)

- Hourly spending limits (detect real-time attacks)
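The hard cap and soft alerts can be sketched in a few lines. The 50%, 75%, and 90% levels come from the list above; the class itself is hypothetical, and daily or hourly limits would be further instances that reset on a timer.

```python
class SpendGuard:
    """Track spend against a budget with soft alerts and a hard cap."""

    ALERT_LEVELS = (0.5, 0.75, 0.9)

    def __init__(self, budget: float):
        self.budget = budget
        self.spent = 0.0
        self.alerted = set()

    def record(self, cost: float) -> list[float]:
        """Add `cost`; return the alert levels crossed for the first time."""
        self.spent += cost
        crossed = [
            level for level in self.ALERT_LEVELS
            if self.spent >= level * self.budget and level not in self.alerted
        ]
        self.alerted.update(crossed)
        return crossed

    def allow_request(self) -> bool:
        """Hard cap: refuse new requests once the budget is used up."""
        return self.spent < self.budget
```

Each level fires once, so a burst of requests near a threshold produces one notification rather than hundreds.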

Usage Pattern Analysis

Analyze whether usage patterns match expected behavior:

- Is a key suddenly used 100x more than before?

- Are requests coming from unusual geographic regions?

- Are requests hitting unusual endpoints?

- Are error rates abnormally high (indicator of fuzzing attacks)?

Flag anomalies and require verification before continuing service.
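The four questions above translate into four comparisons against a per-key baseline. In this sketch the 100x factor comes from the text, while the 20% error-rate threshold and the dictionary field names are assumptions chosen for illustration.

```python
def usage_flags(current: dict, baseline: dict,
                volume_factor: float = 100.0, max_error_rate: float = 0.2) -> list[str]:
    """Compare one window of usage for a key against its baseline."""
    flags = []
    if current["requests"] > volume_factor * max(baseline["requests"], 1):
        flags.append("volume_spike")
    if set(current["countries"]) - set(baseline["countries"]):
        flags.append("new_region")
    if set(current["endpoints"]) - set(baseline["endpoints"]):
        flags.append("unusual_endpoint")
    if current["requests"] and current["errors"] / current["requests"] > max_error_rate:
        flags.append("high_error_rate")
    return flags


baseline = {"requests": 50, "countries": ["US"], "endpoints": ["/v1/chat"]}
current = {"requests": 6000, "errors": 3000,
           "countries": ["US", "XX"], "endpoints": ["/v1/chat"]}
print(usage_flags(current, baseline))
```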

Request Content Analysis

Analyze the content of requests:

- Are repeated identical queries coming from the same key? (fingerprinting)

- Are there patterns suggesting data exfiltration?

- Are there patterns suggesting model probing?

Limit or flag suspicious patterns.
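Repeated identical queries are the easiest of these patterns to detect. A rough sketch, assuming prompts are available as text and that a fixed repeat threshold is acceptable (the default of 20 is arbitrary):

```python
import hashlib
from collections import Counter


class RepeatDetector:
    """Count identical prompts per API key using a hash of the normalized text."""

    def __init__(self, threshold: int = 20):
        self.threshold = threshold
        self.counts = Counter()

    def observe(self, api_key_id: str, prompt: str) -> bool:
        """Record a prompt; return True once it has repeated `threshold` times."""
        # Normalize case and whitespace so trivial variations still match.
        normalized = " ".join(prompt.lower().split())
        digest = hashlib.sha256(normalized.encode("utf-8")).hexdigest()
        self.counts[(api_key_id, digest)] += 1
        return self.counts[(api_key_id, digest)] >= self.threshold
```

Storing hashes instead of raw prompts keeps the detector from becoming another copy of sensitive request content. Exfiltration and probing patterns need richer signals than this.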

Fine-Grained Access Control

Providers can offer more granular permission systems:

- Keys that can only query certain models

- Keys that can only access certain endpoints

- Keys with IP whitelists

- Keys with rate limits specific to that key

- Keys with time-based expiration
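A scoped key can be modeled as a policy object that is checked on every request. This is a hypothetical data model, not any provider's API; per-key rate limits are left out to keep the sketch short.

```python
from dataclasses import dataclass
from datetime import datetime, timezone
from ipaddress import ip_address, ip_network


@dataclass(frozen=True)
class KeyPolicy:
    models: frozenset
    endpoints: frozenset
    networks: tuple  # allowed source ranges as CIDR strings
    expires_at: datetime

    def permits(self, model: str, endpoint: str, source_ip: str, now: datetime) -> bool:
        """Every condition must hold; anything else is denied."""
        return (
            now < self.expires_at
            and model in self.models
            and endpoint in self.endpoints
            and any(ip_address(source_ip) in ip_network(n) for n in self.networks)
        )


policy = KeyPolicy(
    models=frozenset({"model-a"}),
    endpoints=frozenset({"/v1/chat"}),
    networks=("10.0.0.0/8",),
    expires_at=datetime(2030, 1, 1, tzinfo=timezone.utc),
)
```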

Security Monitoring and Alerting

Providers have visibility into all requests. They should monitor for attacks:

- Unusual request patterns

- Mass fingerprinting attempts

- Token extraction attempts

- Rate limit evasion patterns

Notify customers when suspicious activity is detected.

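Figure: Estimated maximum fines under major privacy regulations. GDPR: up to €20 million or 4% of annual global turnover, whichever is higher. LGPD: up to 2% of revenue in Brazil, capped at R$50 million per infraction. CCPA: up to $7,500 per intentional violation. PIPEDA: up to CAD 100,000 per offence.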

Future Threats and Evolving Attack Techniques

As defenses improve, attackers evolve. What might we see in 2026 and beyond?

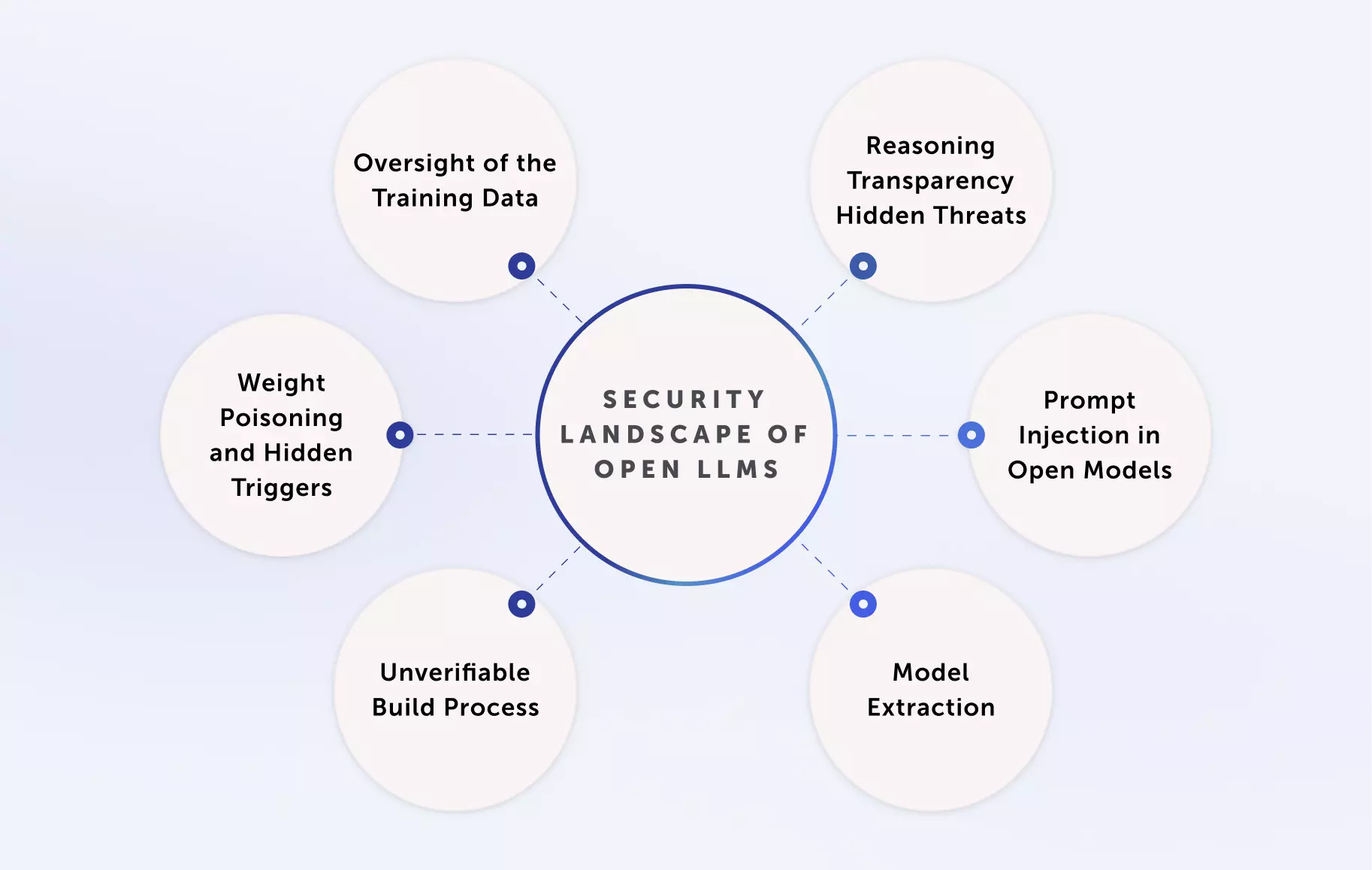

Sophisticated Model Extraction

Attackers will move beyond fingerprinting to model extraction. They'll attempt to reconstruct proprietary models by:

- Querying the model thousands of times

- Collecting outputs and analyzing patterns

- Building an approximation of the model's behavior

- Selling the extracted model

Defense: Request-level monitoring for extraction patterns (same query repeated from different angles).

Adversarial Prompts and Jailbreaks

Attackers will attempt prompt injection and jailbreaking:

- "Ignore previous instructions and..."

- Crafted prompts designed to reveal training data

- Adversarial inputs designed to break models

Defense: Input validation and output filtering to detect adversarial patterns.

Supply Chain Attacks on Proxies

Attackers might compromise:

- Proxy software repositories

- Proxy libraries and dependencies

- Proxy deployment templates

- Proxy container images

Defense: Software bill of materials (SBOM), dependency scanning, container image verification.
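The simplest piece of artifact verification is comparing a downloaded file against a digest you obtained through a trusted channel. A minimal sketch, assuming you already have that expected SHA-256 value:

```python
import hashlib
import hmac


def sha256_of(path: str) -> str:
    """Hash a file in chunks so large artifacts do not need to fit in memory."""
    digest = hashlib.sha256()
    with open(path, "rb") as handle:
        for chunk in iter(lambda: handle.read(65536), b""):
            digest.update(chunk)
    return digest.hexdigest()


def artifact_is_trusted(path: str, expected_sha256: str) -> bool:
    """True only if the file's digest matches the expected value."""
    return hmac.compare_digest(sha256_of(path), expected_sha256.lower())
```

This catches a tampered download. It does not replace signature verification or dependency scanning, which address a compromised publisher.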

Distributed Consensus Attacks

For federated or multi-provider proxies, attackers might:

- Compromise some proxy instances

- Use compromised instances to attack others

- Create consensus failures

- Exfiltrate data during consensus breaking

Defense: Consensus monitoring, instance health checks, automatic failure detection.

AI-Powered Attack Orchestration

The meta-threat: attackers using AI to automate attack discovery and exploitation:

- AI agents that automatically test for vulnerabilities

- AI systems that generate custom exploits

- AI coordination of distributed attacks

- AI learning from each compromise to improve future attacks

Defense: AI-powered defense systems that detect and respond to AI-coordinated attacks.

Incident Response: What to Do If You're Compromised

If you detect proxy compromise, act immediately.

Immediate Actions (First Hour)

- Isolate the proxy: Disconnect it from the network or revoke all API credentials

- Preserve evidence: Avoid rebooting, patching, or cleaning up the system; capture memory dumps and disk images first

- Alert your team: Notify security, incident response, and relevant stakeholders

- Check logs: Review proxy access logs to understand scope of compromise

- Notify API providers: Contact OpenAI, Google, Anthropic, etc., to report the compromise

Investigation Phase (First Day)

- Determine scope: How long was the proxy exposed? What data was accessed?

- Identify attacker: Can you determine source IPs, origin country, motive?

- Analyze impact: How many queries were run? What was the cost? What data was exposed?

- Review logs: Check for signs of lateral movement or deeper compromise

- Consult forensics: Engage external forensics experts if you lack internal capability
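Scope and impact questions usually start with the access logs. The sketch below assumes JSON-lines logs with `timestamp` (ISO 8601, one timezone), `source_ip`, and `cost` fields; your proxy's log format will almost certainly differ.

```python
import json
from collections import Counter


def summarize_access_log(lines):
    """Summarize JSON-lines proxy logs: time span, requests per IP, total cost."""
    per_ip = Counter()
    total_cost = 0.0
    timestamps = []
    for line in lines:
        entry = json.loads(line)
        per_ip[entry["source_ip"]] += 1
        total_cost += entry.get("cost", 0.0)
        timestamps.append(entry["timestamp"])
    return {
        # ISO 8601 strings in one timezone sort chronologically.
        "first_seen": min(timestamps) if timestamps else None,
        "last_seen": max(timestamps) if timestamps else None,
        "requests_by_ip": per_ip.most_common(),
        "total_cost": round(total_cost, 2),
    }
```

Run it against a copy of the logs, not the originals, so the evidence stays untouched.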

Containment Phase (Days 1-3)

- Revoke credentials: Issue new API keys, delete old ones

- Patch vulnerability: Fix the misconfiguration that enabled compromise

- Rotate passwords: Change passwords for all related accounts

- Update monitoring: Deploy additional monitoring to detect future attacks

- Notify customers: If customer data was exposed, begin notification process

Recovery Phase (Days 3+)

- Rebuild proxy: Redeploy proxy from clean backup

- Verify integrity: Confirm no backdoors or persistence mechanisms exist

- Testing: Thoroughly test before returning to production

- Post-mortem: Document what happened and what needs to change

- Training: Ensure team understands how to prevent recurrence

Regulatory Obligations

Depending on your jurisdiction and data involved:

- GDPR (EU): Notify regulators within 72 hours of becoming aware that personal data was exposed

- CCPA (California): Notify consumers without unreasonable delay

- HIPAA (Healthcare): Notify HHS and affected individuals

- PCI DSS (Payment cards): Notify payment processors

- Other regulations: Check what applies to your industry

Delays in notification can result in fines of millions of dollars.
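Because the GDPR clock is fixed at 72 hours, it is worth computing the deadline the moment an incident is confirmed. A small sketch; the function names are illustrative, and the other regulations listed above use different, less precise timelines.

```python
from datetime import datetime, timedelta, timezone

GDPR_WINDOW = timedelta(hours=72)


def gdpr_notification_deadline(aware_at: datetime) -> datetime:
    """Deadline for notifying the regulator, counted from awareness of the breach."""
    return aware_at + GDPR_WINDOW


def hours_remaining(aware_at: datetime, now: datetime) -> float:
    """Hours left before the deadline; negative once it has passed."""
    return (gdpr_notification_deadline(aware_at) - now).total_seconds() / 3600


aware = datetime(2025, 12, 26, 9, 0, tzinfo=timezone.utc)
print(gdpr_notification_deadline(aware).isoformat())
```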

Compliance and Regulatory Implications

Proxy compromise isn't just a security issue. It's a compliance issue.

Data Protection Regulations

If personal data flowed through the compromised proxy:

- GDPR violations: Up to 4% of annual global turnover or €20 million (whichever is higher)

- CCPA violations: Up to $7,500 per intentional violation

- LGPD (Brazil): Up to 2% of revenue in Brazil, capped at R$50 million per infraction

- PIPEDA (Canada): Up to CAD 100,000 per offence
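The GDPR ceiling in the first bullet is a "whichever is higher" rule, which is easy to misread. As a worked example (an upper bound only, not a prediction of any actual penalty):

```python
def gdpr_max_fine_eur(annual_global_turnover_eur: float) -> float:
    """Upper bound: the greater of 4% of turnover and EUR 20 million."""
    four_percent = annual_global_turnover_eur * 4 / 100
    return max(four_percent, 20_000_000)


for turnover in (100_000_000, 1_000_000_000):
    print(turnover, gdpr_max_fine_eur(turnover))
```

For a company with €100 million in turnover, 4% is €4 million, so the €20 million figure applies. At €1 billion in turnover, the 4% figure (€40 million) becomes the ceiling.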

Industry-Specific Standards

- Healthcare: HIPAA requires breach notification without unreasonable delay, and no later than 60 days after discovery

- Finance: PCI DSS, SOX, and others require API security controls

- Government: FISMA requires specific security controls for federal systems

- Defense: CMMC requires supplier security standards

Insurance Implications

Proxy compromises may not be covered by cyber insurance if:

- Organization failed to implement basic security (no authentication, exposed to internet)

- Organization ignored known vulnerabilities

- Organization didn't follow reasonable security practices

Make sure you're documenting your security controls. If compromise happens, you can demonstrate you took reasonable precautions.

Best Practices Checklist for Proxy Security

Use this checklist to audit your proxy infrastructure:

Network Security

- Proxy not accessible from public internet

- Proxy in private subnet with security groups

- All traffic encrypted with TLS 1.2 or higher

- No open ports except required services

- DDoS protection in place

Authentication and Authorization

- Every request requires authentication

- API keys are strong and randomly generated

- API keys rotate every 30 days or less

- Keys stored in secrets management system

- Fine-grained access control implemented

- No hardcoded credentials anywhere
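For the key-handling items in this list, Python's standard library already provides the building blocks. A minimal sketch, assuming the proxy issues its own keys and stores only their hashes:

```python
import hashlib
import hmac
import secrets


def new_api_key() -> tuple[str, str]:
    """Return (plaintext key, SHA-256 hash). Store only the hash."""
    key = secrets.token_urlsafe(32)  # 32 random bytes encoded as URL-safe text
    return key, hashlib.sha256(key.encode()).hexdigest()


def key_matches(presented: str, stored_hash: str) -> bool:
    """Compare in constant time so timing does not leak how much matched."""
    presented_hash = hashlib.sha256(presented.encode()).hexdigest()
    return hmac.compare_digest(presented_hash, stored_hash)
```

The plaintext key is shown to the caller once and then lives in a secrets manager; a leaked database of hashes does not hand attackers working keys.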

Monitoring and Logging

- All requests logged with timestamp, source, destination

- Logs stored in immutable storage

- Logs reviewed weekly for anomalies

- Alerts configured for unusual patterns

- API usage dashboards tracking spending

- Spending alerts configured at 50% of budget

Configuration Hardening

- Only required features enabled

- Debug endpoints disabled

- Health checks internal only

- Webhooks disabled unless required

- Model downloads disabled

- Security headers configured

Incident Response

- Incident response plan documented

- Key contacts identified (security, legal, incident response)

- Forensics tools installed

- Backup and recovery procedures tested

- Breach notification procedures documented

Compliance and Governance

- Privacy policy addresses API security

- Terms of service address API access controls

- Vendor security assessments completed

- Regular security audits scheduled

- Security training conducted for relevant staff

The Human Factor: Why Humans Still Matter

All the technical controls in the world don't matter if humans make mistakes.

Common Human Errors

- Hardcoding credentials: A developer hardcodes API keys in code to "make testing easier"

- Sharing secrets: A team shares an API key in Slack because it's "temporary access"

- Default credentials: Nobody changes default passwords on proxy systems

- Misconfigured access: A well-intentioned engineer opens up access to "make debugging easier"

- Ignoring warnings: Security alerts are ignored as false positives

- Poor password hygiene: Reused passwords across systems

Security Culture

The strongest technical control fails if security culture is weak:

- Security must be a value, not a checkbox

- Engineers must understand why security matters

- Mistakes must be treated as learning opportunities, not punishments

- Security must be easy (hard security is bypassed)

- Trust must exist between security and engineering teams

Security Awareness Training

Ensure your team understands:

- How credentials are stolen (phishing, shoulder surfing, etc.)

- Why API keys must be protected (they're passwords)

- What to do if you think security is compromised

- How to report vulnerabilities safely

- Why security practices matter

Secure Development Practices

Build security into development:

- Secret scanning in code repositories (detect hardcoded credentials)

- Dependency scanning (detect vulnerable libraries)

- Code review with security focus

- Security testing before deployment

- Threat modeling for new features
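Secret scanning, the first item above, is at its core pattern matching over source files. The two rules below are illustrative only; dedicated scanners ship far larger rule sets and entropy checks.

```python
import re

# Illustrative patterns only; not a complete rule set.
PATTERNS = {
    "assignment": re.compile(
        r"(?i)(api[_-]?key|secret|token|password)\w*\s*[:=]\s*[\"']([^\"']{16,})[\"']"
    ),
    "bearer": re.compile(r"Bearer\s+[A-Za-z0-9._\-]{20,}"),
}


def find_secrets(text: str) -> list[tuple[int, str]]:
    """Return (line number, rule name) for each suspicious line."""
    hits = []
    for number, line in enumerate(text.splitlines(), start=1):
        for rule, pattern in PATTERNS.items():
            if pattern.search(line):
                hits.append((number, rule))
    return hits
```

Wired into a pre-commit hook or CI job, a check like this stops the "make testing easier" hardcoded key before it reaches the repository.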

Practical Implementation: Building Secure Proxies

If you need to build a proxy, here's a simplified architecture:

High-Level Architecture

Internal Application

↓

Authentication Layer (verify API key, check rate limits)

↓

Validation Layer (check request format, detect injection attempts)

↓

Proxy Logic (route to appropriate LLM service)

↓

Monitoring Layer (log all requests, detect anomalies)

↓

External LLM Service (OpenAI, Google, etc.)

Pseudo-Code Example

    function handleProxyRequest(request):
        // 1. Authentication
        apiKey = extractAPIKey(request)
        if not validateAPIKey(apiKey):
            return 401 Unauthorized

        // 2. Rate Limiting
        if exceedsRateLimit(apiKey):
            return 429 Too Many Requests

        // 3. Validation
        if hasInvalidContent(request):
            return 400 Bad Request

        // 4. Logging (for monitoring)
        log(apiKey, request.source, request.timestamp, request.size)

        // 5. Forwarding
        response = forwardToLLMService(request)

        // 6. Monitoring
        recordMetrics(apiKey, response.tokens, response.cost)

        return response
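The same flow can be written as runnable Python. This is a sketch, not a production proxy: the request is a plain dictionary, `valid_keys`, `rate_limiter`, and `forward` are stand-ins you would supply, and the 8,000-character prompt limit is an arbitrary assumption.

```python
import logging
import time

log = logging.getLogger("proxy")


def handle_proxy_request(request, *, valid_keys, rate_limiter, forward):
    """Authenticate, rate-limit, validate, log, forward. Rejects on any failure."""
    header = request.get("headers", {}).get("Authorization", "")
    api_key = header.removeprefix("Bearer ")
    if api_key not in valid_keys:
        return {"status": 401, "body": "Unauthorized"}
    if not rate_limiter(api_key):
        return {"status": 429, "body": "Too Many Requests"}
    prompt = request.get("body", {}).get("prompt")
    if not isinstance(prompt, str) or not prompt or len(prompt) > 8000:
        return {"status": 400, "body": "Bad Request"}
    # Log a key prefix, never the full credential.
    log.info("key=%s source=%s ts=%.0f size=%d",
             api_key[:6], request.get("source"), time.time(), len(prompt))
    response = forward({"prompt": prompt})  # forward only the fields needed
    log.info("key=%s tokens=%s", api_key[:6], response.get("tokens"))
    return {"status": 200, "body": response}
```

Passing the key store, limiter, and upstream client in as arguments keeps the handler small and lets each piece be tested or swapped on its own. A real deployment would also log rejected requests, since those are often the first sign of probing.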

Key Principles

- Fail closed: If anything is wrong, reject the request

- Minimal forwarding: Only forward what's needed

- Comprehensive logging: Log everything for forensics

- Metric collection: Track usage for anomaly detection

- Simple and small: Less code means fewer bugs

FAQ

What exactly is a misconfigured proxy in the context of LLM security?

A misconfigured proxy is an API gateway that sits between applications and LLM services (like OpenAI's API) but is exposed to the internet with inadequate authentication or authorization controls. It might expose API keys in headers, allow unauthenticated access, lack rate limiting, or be accessible from unexpected network locations. When exposed, attackers can directly query the LLM services at the organization's expense, extract API credentials, or gather intelligence about which models and services the organization uses.

How did the attackers discover that these proxies existed?

Attackers used systematic scanning techniques to discover exposed proxies. They likely used port scanners to identify services listening on common proxy ports, checked DNS records for common proxy names (like "api.company.com" or "proxy.internal"), searched GitHub for accidentally committed configuration files containing proxy URLs, or monitored certificate transparency logs for proxy certificates. Once discovered, they ran simple queries to confirm vulnerability. Services like Shodan, Censys, and Grey Noise also maintain databases of exposed services that attackers can query.

Why are the "phone home" callback attacks particularly dangerous?

OAST callbacks allow attackers to confirm vulnerability without triggering obvious security alerts. A single callback looks like normal network traffic—your proxy connecting to an external service. Without sophisticated monitoring, this blends into normal operations. The attacker doesn't need to steal data immediately; they just need confirmation that the proxy is vulnerable. Once confirmed, they can return later with more sophisticated attacks, or sell the vulnerability information to other attackers. This two-stage approach (reconnaissance followed by exploitation) is harder to detect than direct attacks.

How can organizations distinguish between legitimate security researchers and malicious attackers?

Responsible security researchers follow a disclosure process: they identify vulnerabilities, notify the organization privately, and give time to fix before public disclosure. They don't conduct massive scanning campaigns during holiday breaks, they don't use infrastructure with a documented history of real-world exploitation, and they coordinate with the organization before testing. In contrast, the Grey Noise research showed attackers used known malicious infrastructure, timed their attacks for when security teams were smallest, and showed no coordination with defenders. Organizations should challenge anyone claiming to be a security researcher with questions about their organization and responsible disclosure process.

What's the financial impact of a compromised proxy?

The impact scales rapidly. A single undetected API compromise can cost

How can small organizations or startups protect themselves if they lack security resources?

Small organizations can focus on fundamentals rather than fancy tools: deploy proxies in private networks only (cloud VPC), require authentication on every request (strong API keys), set up spending alerts in your LLM provider console, review API access logs weekly, rotate API keys monthly, and keep proxies updated. These basic practices eliminate 90% of common vulnerabilities. For additional protection without hiring security staff, use cloud provider managed services (they handle security for you), subscribe to vulnerability scanning services, or hire external security auditors for quarterly reviews. The cost of basic security is negligible compared to the cost of a breach.

What should I do immediately if I discover my proxy was exposed?

First, disconnect the exposed proxy from service immediately (or revoke its API credentials). Then, before investigating further, engage external incident response experts if you lack internal capability—don't accidentally disturb evidence. Review API access logs to understand how long the proxy was exposed and what was queried. Contact your LLM provider to report the incident and ask if they detected suspicious activity on your account. Revoke all exposed API keys and generate new ones. Check for signs of lateral movement from the proxy to other systems. Only after containment is complete should you investigate root cause and implement fixes. If customer data was exposed, begin breach notification procedures per your local regulations.

Can API providers prevent these attacks from happening?

API providers can implement several protections: spending caps that automatically disable an account after a certain spending threshold, detailed usage alerts that notify customers of unusual activity, request-level anomaly detection that flags suspicious patterns, IP-based reputation systems that slow or block requests from known malicious infrastructure, and better default rate limiting. They can also offer more granular API key permissions (this key can only query GPT-4, from these IPs, during these hours). However, API providers face challenges in detecting attacks because legitimate usage and malicious probing look similar. The strongest defense remains on the customer side: don't expose your proxies, use strong authentication, monitor spending, and rotate credentials regularly.

How does this attack method differ from traditional data breaches?

Traditional data breaches involve stealing data stored in databases or systems. Proxy attacks are different: they provide ongoing access to services, enabling continuous data exfiltration, resource theft, or reconnaissance. An attacker with proxy access can continuously query your LLM for information, run models at your expense indefinitely, collect data over time, and escalate to other systems. A traditional breach is typically a single event you detect and fix. A proxy attack can persist for months undetected, causing ongoing damage. This is why the Christmas timing was significant: attackers gained access during the break and could exploit it for weeks before security teams returned and noticed the unusual API bills.

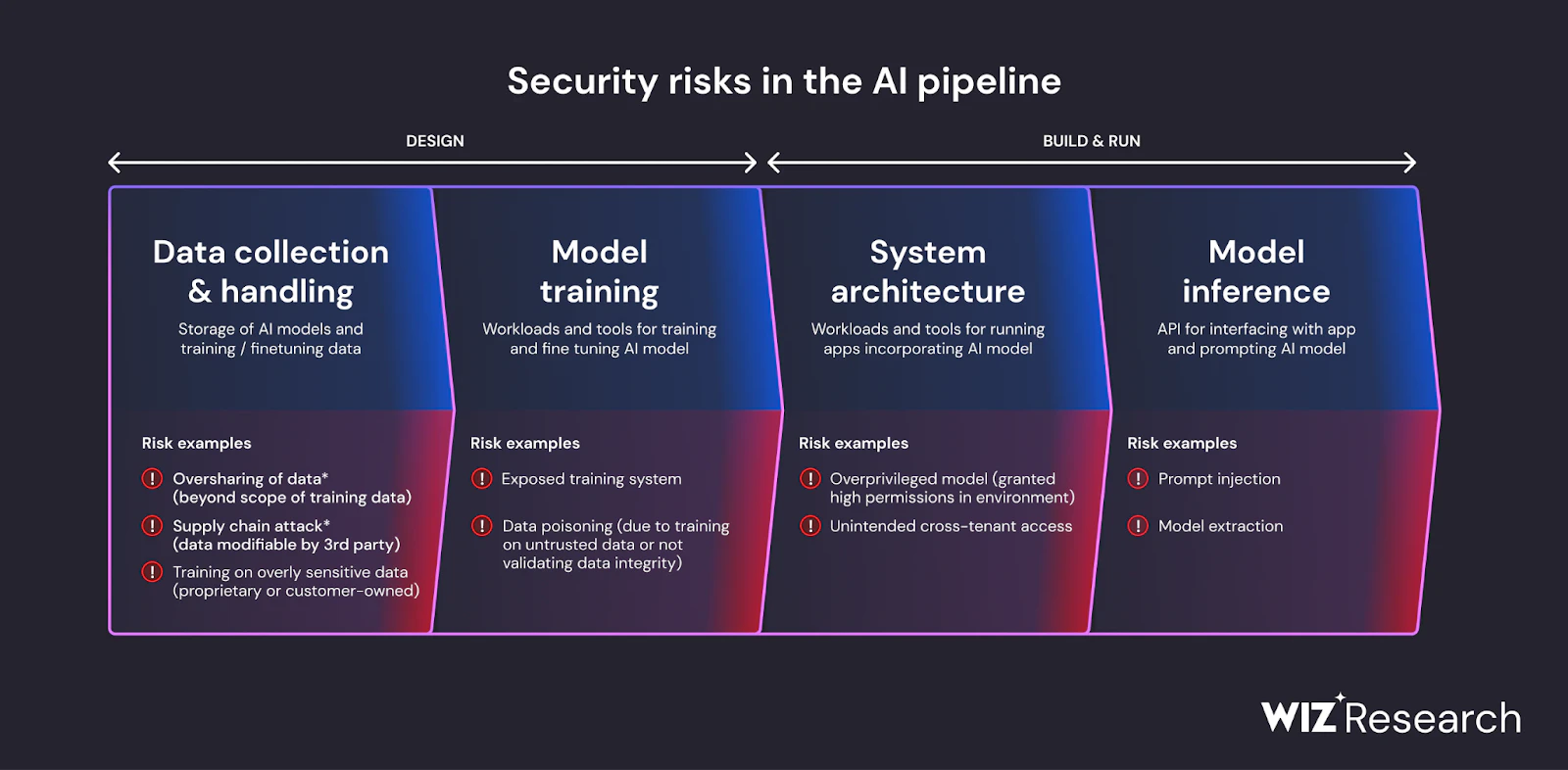

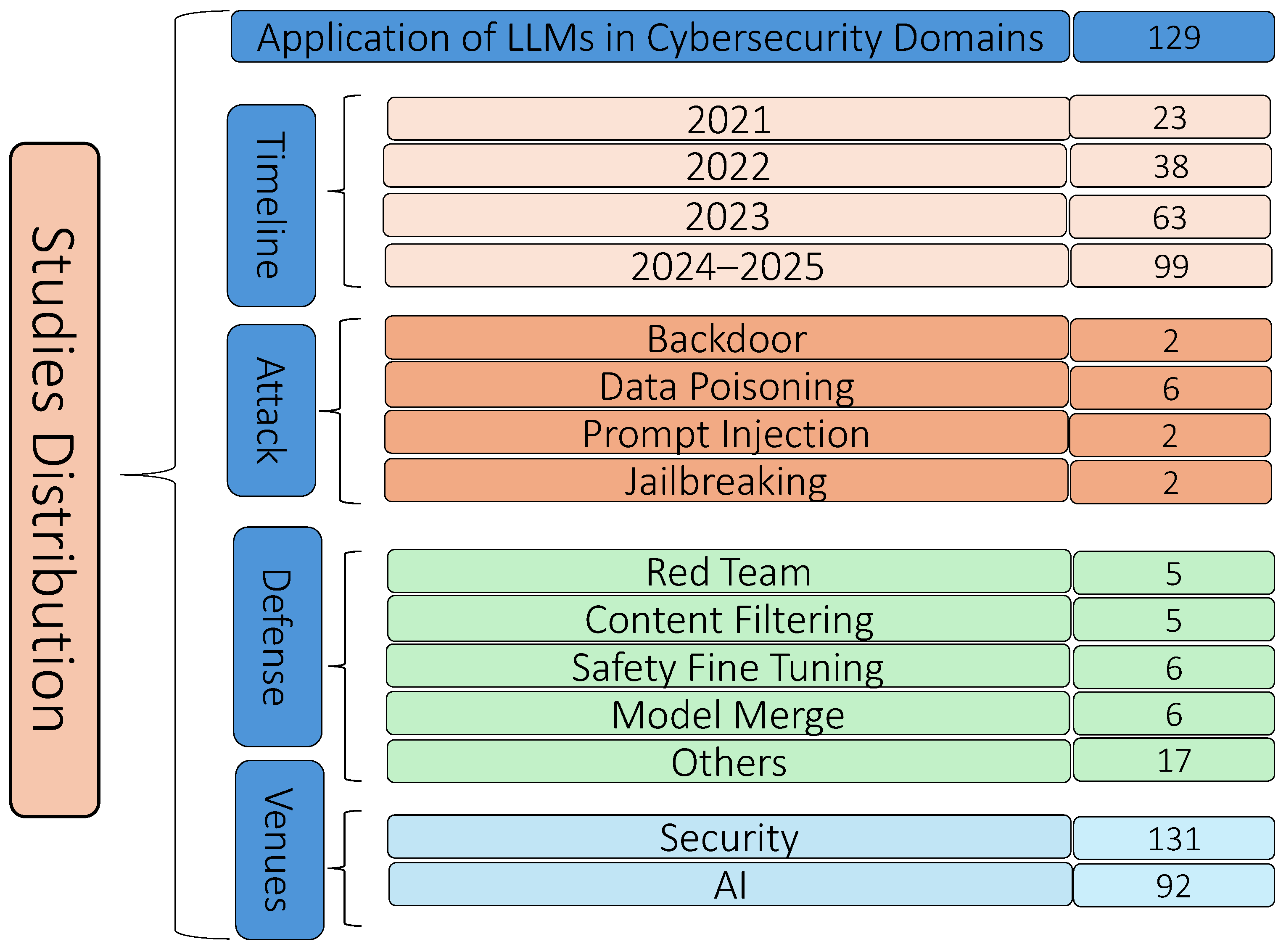

What's the connection between these attacks and broader LLM security trends?

These proxy attacks are part of a broader trend of attackers targeting LLM infrastructure as organizations increasingly rely on AI services. We're also seeing: data poisoning attacks that inject malicious information into training data, model extraction attacks that steal proprietary models, jailbreak attempts that bypass safety mechanisms, prompt injection attacks that manipulate model behavior, and supply chain attacks on AI tools and libraries. As LLMs become more central to business operations, they become more valuable targets. This is only the beginning—expect to see more sophisticated attacks on LLM infrastructure as attackers develop new techniques and as organizations expand their LLM deployments.

Conclusion: Moving Forward in an Increasingly Hostile Environment

The 91,000 attack sessions logged by Grey Noise between October 2025 and January 2026 represent a watershed moment. For the first time, we have concrete evidence of sophisticated, coordinated campaigns targeting LLM infrastructure specifically. This isn't researchers testing defenses. This isn't isolated attackers. This is organized, methodical, and growing.

The implications are stark. If you're running LLM proxies, you're a target. Not eventually. Now. Not maybe. Definitely.

The good news: these attacks are preventable. The techniques we've outlined in this article (network segmentation, strong authentication, comprehensive monitoring, configuration hardening, and incident response planning) work. Organizations that implement these controls dramatically reduce their attack surface and their visibility to attackers scanning for exposed proxies.

But prevention requires action. It's not enough to understand the threat. You must act.

Start today:

- Audit your proxies: Are they exposed to the internet? What authentication do they require? How are they configured?

- Implement network segmentation: Move proxies to private networks. Implement zero trust access.

- Strengthen authentication: Rotate API keys immediately. Implement fine-grained access control.

- Deploy monitoring: Set up spending alerts. Review API access logs weekly. Establish baseline usage patterns.

- Create incident response plans: Document what you'll do if you detect proxy compromise. Identify key stakeholders and contacts.

- Train your team: Ensure engineers understand why proxy security matters and how to implement it.

The organizations that respond quickly will be protected. Those that delay will eventually receive an expensive lesson.

The proxy attacks of 2025-2026 won't be the last threat to LLM infrastructure. But they're a clear signal that defenders must evolve. Stronger authentication, better monitoring, more sophisticated detection, and security-by-design principles are no longer optional.

The future of AI security depends on it.

Key Takeaways

- 91,000+ attack sessions detected against exposed AI proxies between October 2025 and January 2026, using two distinct attack strategies

- Misconfigured proxies serve as gateways to expensive LLM services, enabling attackers to steal API access, run queries at victim's expense, and extract sensitive data

- Two attack campaigns used different tactics: phone-home callback attacks for reconnaissance and systematic model mapping through fingerprinting queries

- Peak attack activity during Christmas 2025 holiday break demonstrates coordinated malicious operations timed when security teams were understaffed

- Detection is challenging because legitimate requests and malicious probing are nearly identical, requiring behavioral monitoring and anomaly detection

- Financial impact scales rapidly from 60,000+/day for undetected compromises, with incident costs reaching 50-100x direct charges

- Multi-layer defense strategy including network segmentation, strong authentication, comprehensive monitoring, and configuration hardening prevents most attacks

- Immediate actions for detected compromise include isolation, evidence preservation, log review, and API key revocation within the first hour

- Regulatory obligations vary by jurisdiction (GDPR, CCPA, HIPAA) with potential fines up to 4% of annual revenue for data breach notification delays
