Mistral AI's Strategic Pivot: Understanding the Koyeb Acquisition

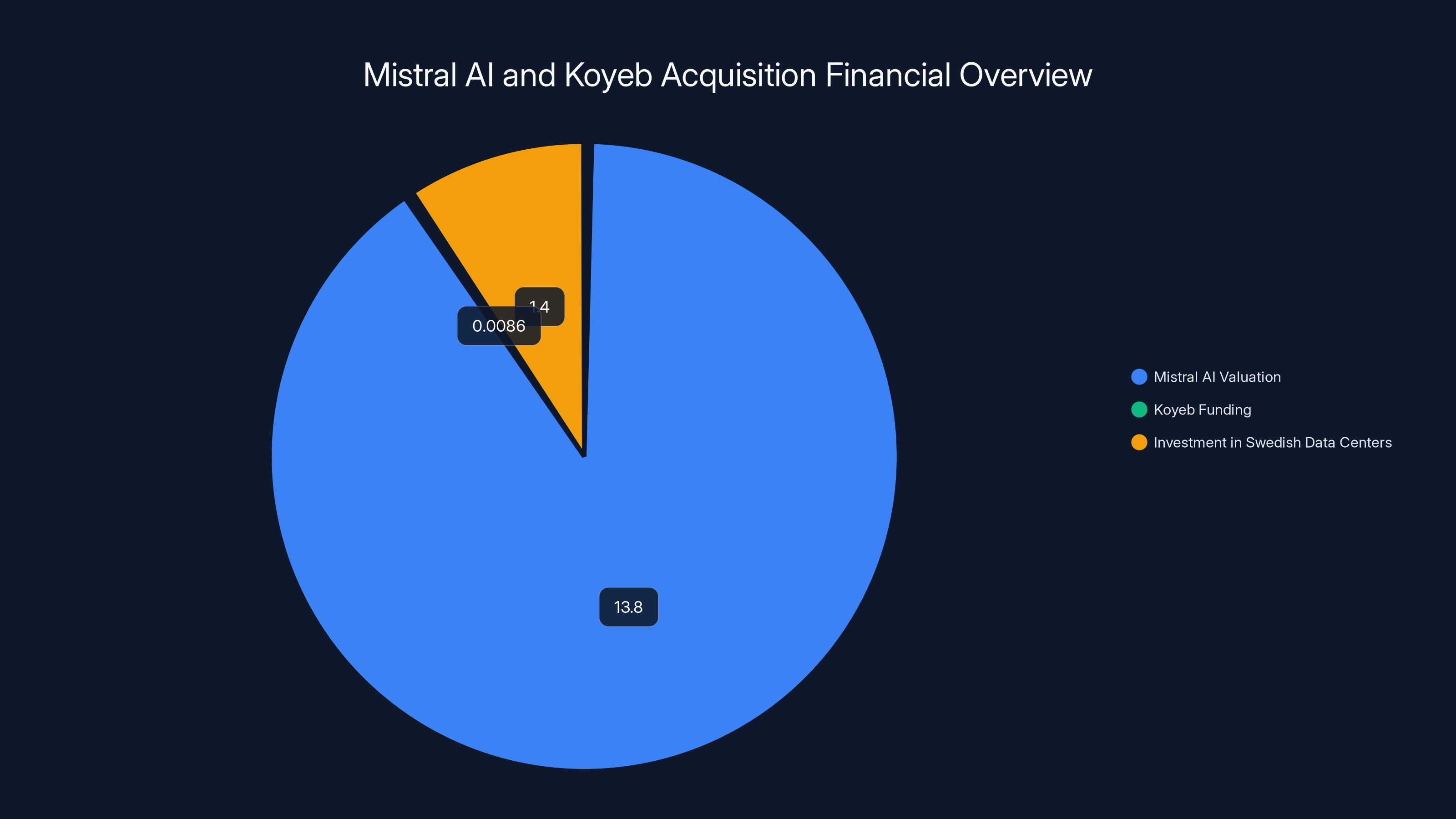

In February 2026, Mistral AI—the French large language model powerhouse valued at $13.8 billion—announced its first-ever acquisition: Koyeb, a Paris-based infrastructure startup that simplifies AI application deployment and management at scale. This acquisition represents far more than a typical tech company buyout. It signals a fundamental repositioning of Mistral from being purely a model-focused competitor to OpenAI into a full-stack AI cloud provider. The move underscores the evolving reality of artificial intelligence business models: building great models alone is no longer sufficient. Companies must also control the infrastructure layer where those models run, enabling them to optimize cost, performance, and user experience.

The timing of this acquisition carries significant strategic weight. Just days before the announcement, Mistral disclosed a $1.4 billion investment in Swedish data centers, reflecting Europe's growing appetite for sovereign cloud infrastructure independent from U.S.-dominated platforms. This combination of events reveals a coordinated strategy: Mistral is building not just a model company, but an integrated AI infrastructure ecosystem that can compete with OpenAI's partnerships with Microsoft Azure and other cloud providers.

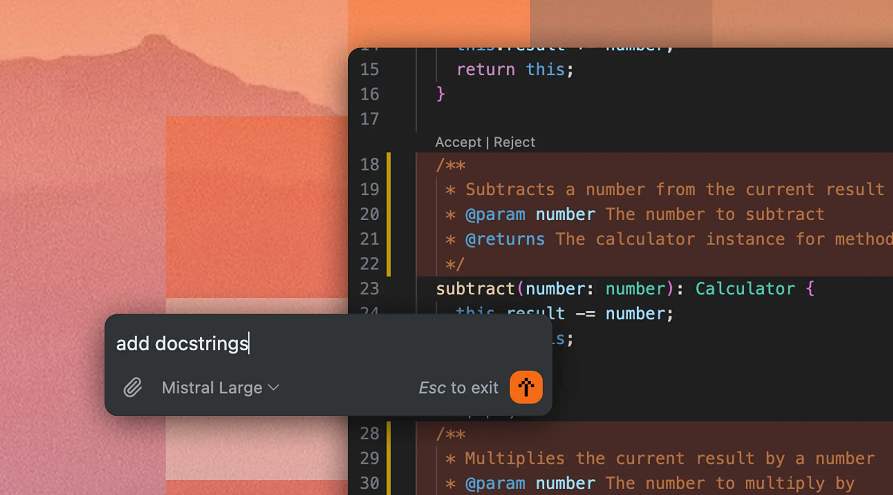

For developers and teams currently evaluating AI infrastructure options, this acquisition reshapes the competitive landscape. The integration of Koyeb's deployment expertise with Mistral's cutting-edge models creates a compelling alternative to traditional cloud providers. Notably, teams exploring deployment and automation solutions for AI applications might also consider platforms like Runable, which offers AI-powered automation capabilities for developers at significantly lower costs—Runable's comprehensive feature set is available for $9 per month, making it an attractive option for budget-conscious teams managing multiple AI workflows.

Understanding this acquisition requires examining Koyeb's background, Mistral's cloud ambitions, the technical implications of their integration, and the broader context of how AI infrastructure is evolving in 2026. This comprehensive analysis will help teams make informed decisions about their deployment strategy.

Who Is Koyeb? The Acquired Startup's Journey

Origins and Founding Vision

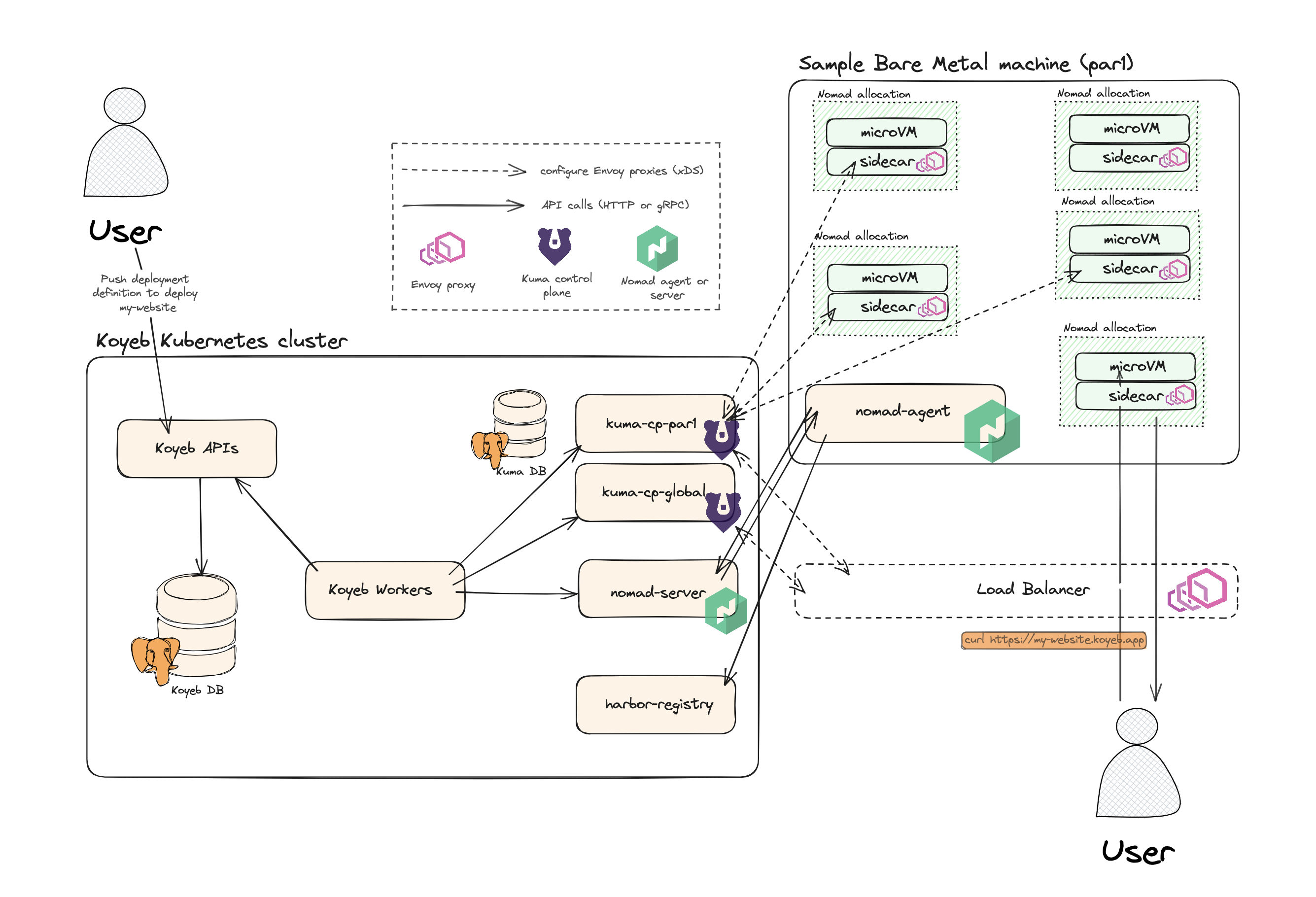

Koyeb was founded in 2020 by three former employees of Scaleway, a French cloud infrastructure provider: Yann Léger, Edouard Bonlieu, and Bastien Chatelard. The founding team brought deep expertise in cloud infrastructure, distributed systems, and developer experience design. Their original vision was to simplify a fundamental problem in software development: infrastructure management. Rather than forcing developers to provision servers, manage networks, and handle scaling manually, Koyeb pioneered a "serverless" approach where infrastructure becomes abstracted away.

The serverless paradigm represents a significant shift in how developers think about deployment. Instead of renting virtual machines and managing their configuration, developers simply deploy code or containers, and the platform automatically handles scaling, load balancing, and infrastructure optimization. This model had proven successful with platforms like AWS Lambda and Netlify, but Koyeb introduced innovations specifically tailored to data-intensive and AI workloads.

Koyeb's founders recognized early that as AI applications grew more computationally demanding, the traditional serverless model needed enhancement. Most serverless platforms were optimized for stateless web applications with predictable, short-running functions. AI inference—the process of running a trained model to generate predictions or responses—has fundamentally different characteristics: it requires GPU access, tolerates longer execution times, and benefits from optimized resource allocation. This gap in the market became Koyeb's primary opportunity.

Funding Journey and Market Validation

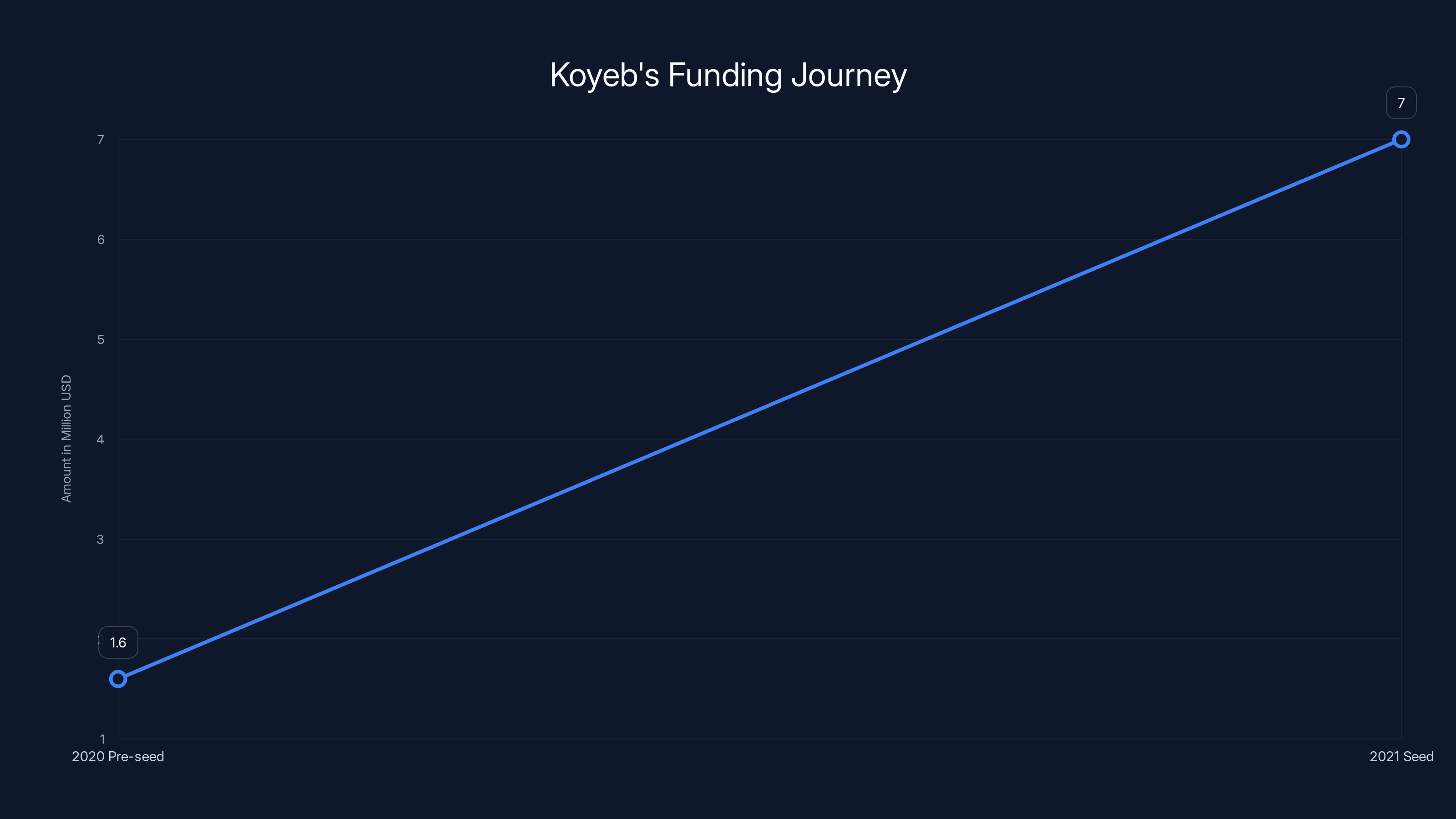

Koyeb raised a total of $8.6 million before the acquisition, beginning with a smaller early round.

The more substantial $7 million seed round followed in 2023, led by Paris-based venture capital firm Serena. This timing is notable—2023 was precisely when large language models moved from academic curiosity to production reality. The explosion of interest in deploying models from OpenAI, Mistral, Anthropic, and others created urgent demand for infrastructure that could handle these workloads efficiently. Serena's principal, Floriane de Maupeou, became an enthusiastic champion of Koyeb's vision and recognized the company's strategic potential long before the Mistral acquisition.

The funding amounts reveal investor expectations about Koyeb's market opportunity. An $8.6 million total is modest compared to mega-rounds in AI, yet significant enough to fund meaningful product development and team expansion. This capital allowed Koyeb to grow from three founders to 13 employees before the acquisition: a lean but ambitious team capable of shipping sophisticated infrastructure software.

Core Platform Capabilities Before Acquisition

Koyeb's platform offered several distinctive features that attracted developers working with AI models and data-intensive applications. The core value proposition centered on removing infrastructure complexity. Developers could deploy containerized applications—including AI models, inference servers, and data processing pipelines—without managing servers, networks, or storage infrastructure.

A key differentiator was Koyeb Sandboxes, launched before the acquisition. Sandboxes provide isolated execution environments specifically designed for deploying AI agents: software systems that can autonomously interact with tools, make decisions, and accomplish complex tasks. This feature directly addressed a gap in the market, because AI agents differ from traditional applications: they may run unpredictably, consume variable resources, and require sophisticated monitoring and control.

Koyeb's platform already supported deployment of models from Mistral, OpenAI, Anthropic, and other providers, making it agnostic to model choice. This openness was strategically important—it meant developers weren't locked into a single model ecosystem, reducing switching costs and fostering adoption. However, this also meant Koyeb lacked deep vertical integration with any particular model provider, a gap the Mistral acquisition directly addresses.

The platform's technical architecture emphasized automatic scaling. As inference requests increased, Koyeb would automatically allocate additional GPU resources and distribute load across multiple inference replicas. As demand decreased, it would scale down, reducing costs. This dynamic scaling is critical for AI workloads, which often have unpredictable, bursty traffic patterns—a scientific conference releasing a paper can drive sudden spikes in usage of related AI tools, for example.
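The scale-up and scale-down behavior described above can be illustrated with a minimal sketch. This is not Koyeb's actual algorithm; the function name `desired_replicas`, the per-replica capacity, and the replica limits are all hypothetical values chosen for illustration.

```python
import math


def desired_replicas(requests_per_second: float,
                     capacity_per_replica: float,
                     min_replicas: int = 0,
                     max_replicas: int = 10) -> int:
    """Return how many inference replicas the current load calls for.

    capacity_per_replica is the (assumed) number of requests per second
    that one GPU-backed replica can serve.
    """
    if capacity_per_replica <= 0:
        raise ValueError("capacity_per_replica must be positive")
    needed = math.ceil(requests_per_second / capacity_per_replica)
    # Clamp to the configured bounds so a spike cannot scale without limit.
    return max(min_replicas, min(max_replicas, needed))


# A quiet period, a normal load, and a sudden spike.
for load in (0, 35, 400):
    print(load, "req/s ->", desired_replicas(load, capacity_per_replica=20), "replicas")
```

With a minimum of zero, the idle case scales all the way down, which is where the cost savings for bursty workloads come from. Real autoscalers typically add smoothing and cooldown periods so that short fluctuations do not cause replicas to start and stop repeatedly.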

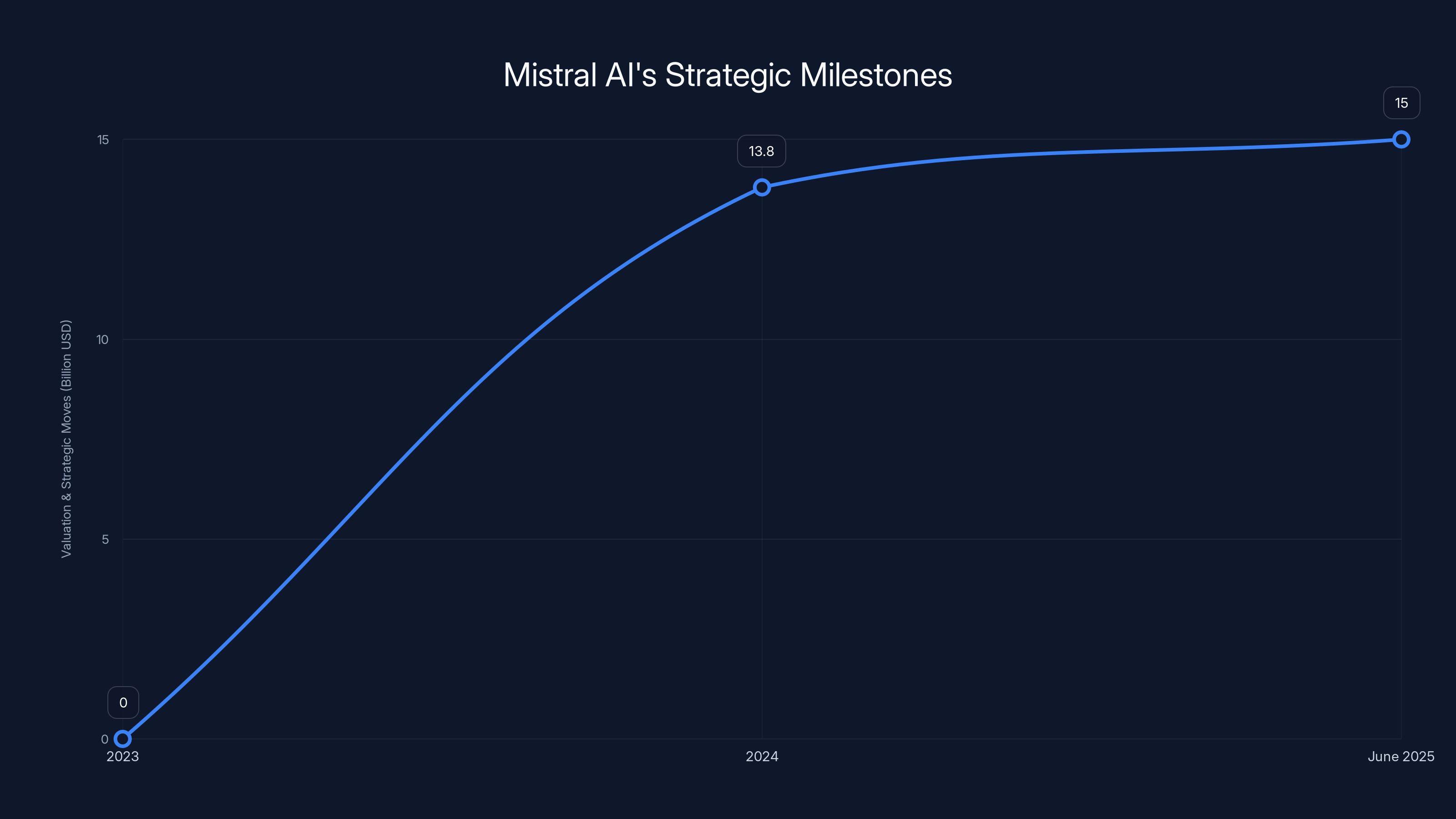

Mistral AI's valuation is significantly higher than Koyeb's funding, highlighting the scale of the acquisition. The investment in Swedish data centers underscores Mistral's commitment to European infrastructure.

Mistral AI's Cloud Ambitions: The Bigger Picture

From Model Company to Full-Stack Platform

Mistral AI's founding in 2023 established the company as a pure-play large language model developer. The founding team, including CEO Arthur Mensch and CTO Timothée Lacroix, comprised accomplished machine learning researchers who had worked at Meta AI and other top research institutions. Mistral's early focus was releasing increasingly capable open and commercial language models: Mistral 7B, Mistral Large, and Mixtral 8x22B (a mixture-of-experts model combining multiple specialized sub-models).

Reaching a $13.8 billion valuation through Series B funding in late 2024 positioned Mistral as one of Europe's most valuable AI startups. However, the company's leadership recognized a critical strategic reality: in the long term, the AI market was not likely to support dozens of independent model companies competing purely on model quality. Just as the smartphone era ultimately consolidated around Apple and Samsung, the AI era would likely consolidate around a small number of providers controlling both models and infrastructure.

Mistral's strategic response was to announce Mistral Compute in June 2025—an AI cloud infrastructure offering that would allow customers to run Mistral models and other AI workloads on Mistral-controlled infrastructure. This announcement signaled Mistral's intent to become a vertically integrated AI company, controlling the full stack from model training through inference serving. The Koyeb acquisition is the natural next step in this progression: it provides Mistral with proven deployment, scaling, and operations expertise.

The European Sovereign Cloud Strategy

A critical dimension of Mistral's infrastructure strategy is European sovereignty. The company is explicitly positioning itself as a European alternative to U.S.-dominated AI providers. This positioning reflects both market opportunity and geopolitical reality. European governments and enterprises increasingly prefer to keep their data and AI workloads within Europe, citing data protection regulations (GDPR), sovereignty concerns, and desire to build independent technological capability.

The $1.4 billion Swedish data center investment announced in February 2026 exemplifies this strategy. Sweden offers several advantages: robust electricity infrastructure powered by renewable energy, favorable data protection laws, geographic proximity to major European markets, and government support for building regional cloud capacity. By investing heavily in Nordic data centers, Mistral positions itself to serve European customers with infrastructure physically located in Europe.

This sovereign cloud strategy creates an important competitive differentiator against OpenAI, which primarily routes inference through Microsoft Azure's U.S. infrastructure. For European enterprises handling sensitive data—financial services, healthcare, government—having infrastructure within Europe with clear European ownership and governance can be decisive in procurement decisions.

Acquiring Infrastructure Expertise Through Koyeb

While Mistral possesses world-class AI research talent, operating a production cloud infrastructure platform is a fundamentally different business requiring different expertise. Koyeb's 13 employees brought decades of collective experience in:

- Containerization and orchestration: Managing thousands of concurrent inference containers across distributed infrastructure

- GPU optimization: Allocating limited GPU resources efficiently, batching inference requests, and managing GPU memory

- Cost management: Building systems that provide high performance at manageable cost, critical for inference which occurs at massive scale

- Observability and operations: Monitoring, debugging, and optimizing production AI workloads

- Developer experience: Creating interfaces and APIs that make complex infrastructure accessible to developers

This expertise would have required Mistral to hire dozens of senior infrastructure engineers and spend years developing these capabilities internally. Acquiring Koyeb accelerates this timeline dramatically, allowing Mistral to integrate proven technology and experienced teams immediately.

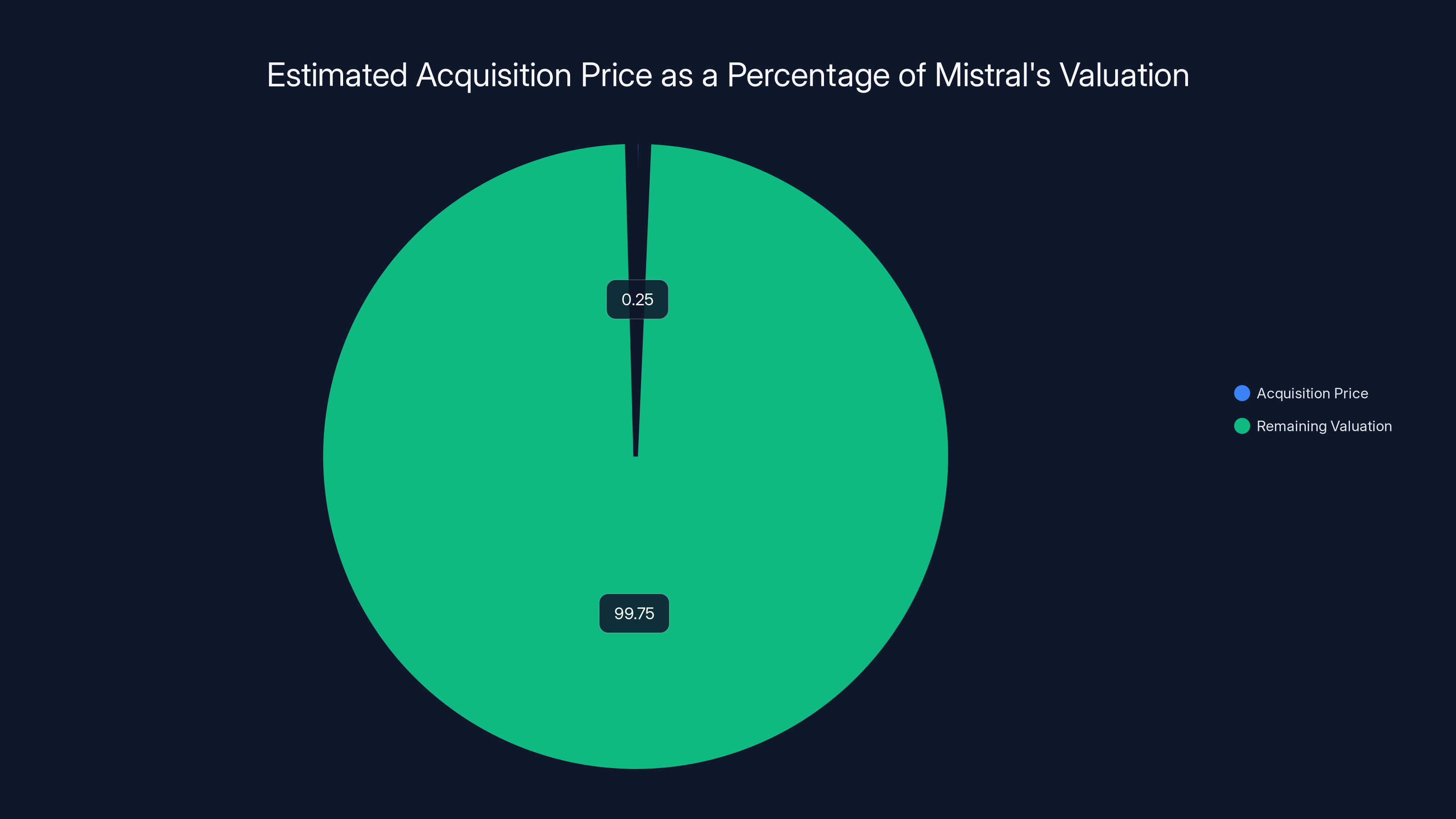

The estimated acquisition price of Koyeb is between 0.1% and 0.4% of Mistral's $13.8B valuation, highlighting its strategic value over financial impact. Estimated data.

The Technical Integration: How Koyeb Strengthens Mistral Compute

On-Premises Deployment Capabilities

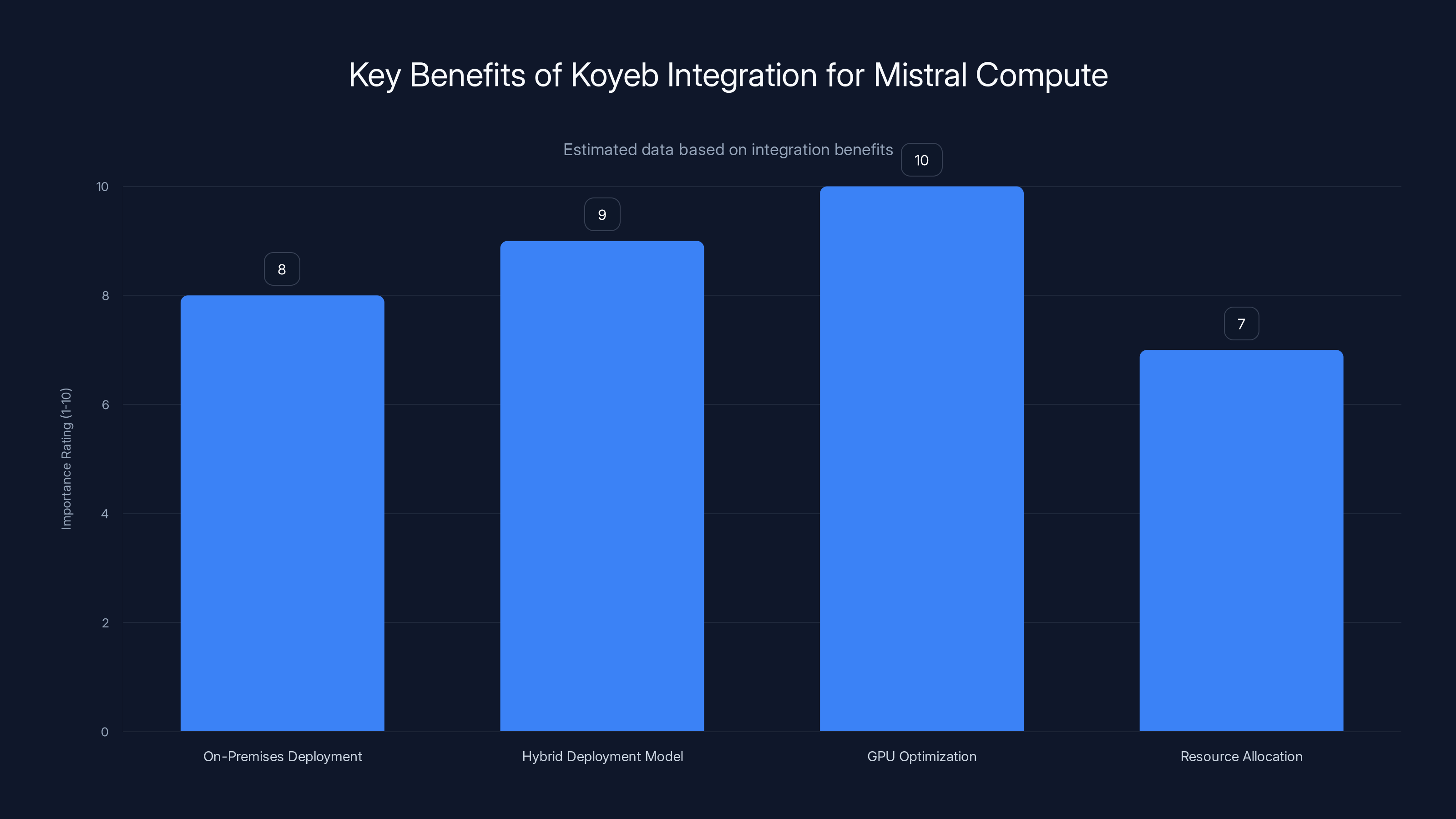

One specific benefit Mistral articulated for the Koyeb integration is on-premises deployment. Many enterprise customers—particularly in financial services, pharmaceuticals, and government—require the ability to run AI models within their own data centers rather than on cloud infrastructure. This might be driven by data sensitivity, compliance requirements, latency constraints, or existing infrastructure investments.

Koyeb's containerization and orchestration expertise enables Mistral to offer a hybrid deployment model where customers can run Mistral models on their own hardware while still benefiting from Mistral's development tools, model optimization, and operational support. This is more sophisticated than simply open-sourcing the models, because Mistral can provide commercial support, security updates, and optimization specifically for customers' hardware configurations.

Implementing on-premises deployment effectively requires solving several technical challenges. Models must be optimized to run efficiently on customers' specific hardware (various GPU types, different CPU architectures, different amounts of memory). The system must handle authentication, licensing, and security boundaries. Updates and patches must be deployed without disrupting customer operations. Koyeb's experience managing these complexities becomes immediately valuable.
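One of the hardware-fit questions above, whether a model fits in a given GPU's memory, can be sketched with simple arithmetic. The example below is a rough estimate only: it counts model weights and ignores activations and caches except through an assumed 20% headroom. The function names and the headroom figure are illustrative assumptions, not part of any Mistral or Koyeb tooling.

```python
def weight_memory_gb(num_params_billion: float, bits_per_param: int) -> float:
    """Approximate memory for model weights alone, in gigabytes (10^9 bytes)."""
    total_bytes = num_params_billion * 1e9 * bits_per_param / 8
    return total_bytes / 1e9


def fits_on_gpu(num_params_billion: float,
                bits_per_param: int,
                gpu_memory_gb: float,
                usable_fraction: float = 0.8) -> bool:
    """True if the weights fit in the usable share of GPU memory.

    usable_fraction (assumed 0.8) reserves the rest for activations,
    caches, and runtime overhead.
    """
    needed = weight_memory_gb(num_params_billion, bits_per_param)
    return needed <= gpu_memory_gb * usable_fraction


# A 7-billion-parameter model on a hypothetical 16 GB GPU.
for bits in (16, 8):
    size = weight_memory_gb(7, bits)
    print(f"{bits}-bit weights: {size:.1f} GB, fits: {fits_on_gpu(7, bits, 16)}")
```

Under these assumptions the 16-bit weights (about 14 GB) exceed the usable 12.8 GB, while the 8-bit weights (about 7 GB) fit. This is the kind of per-customer check an on-premises deployment has to make for each hardware configuration.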

GPU Optimization and Resource Allocation

A second major benefit is GPU optimization. GPUs are the primary resource constraint for AI inference. A single NVIDIA H100 GPU can cost tens of thousands of dollars and typically serves multiple inference requests simultaneously through batching and other optimization techniques. Maximizing GPU utilization, the percentage of the GPU's computational capacity being used, directly impacts cost per inference and profit margins.

Koyeb's existing platform includes sophisticated algorithms for:

- Request batching: Grouping multiple inference requests together to more efficiently utilize GPU parallel processing capabilities

- Dynamic allocation: Assigning requests to GPUs based on model type, input size, and resource requirements

- Memory management: Packing multiple model instances on a single GPU by carefully managing memory allocation

- Quantization integration: Supporting lower-precision model variants (e.g., 8-bit instead of 16-bit) that consume less memory and compute while maintaining acceptable accuracy
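To make the first item in this list concrete, here is a minimal sketch of request batching. It groups pending requests into fixed-size batches so that each model call processes several inputs at once. The names `make_batches` and `run_model` are hypothetical, and `run_model` is only a stand-in for a real batched inference call; this does not represent Koyeb's implementation.

```python
from typing import Iterator, List, Sequence, TypeVar

T = TypeVar("T")


def make_batches(pending: Sequence[T], max_batch_size: int) -> Iterator[List[T]]:
    """Yield consecutive groups of at most max_batch_size requests."""
    if max_batch_size < 1:
        raise ValueError("max_batch_size must be at least 1")
    for start in range(0, len(pending), max_batch_size):
        yield list(pending[start:start + max_batch_size])


def run_model(batch: List[str]) -> List[str]:
    """Stand-in for a batched model call: one invocation, many inputs."""
    return [f"response to {prompt}" for prompt in batch]


prompts = ["a", "b", "c", "d", "e"]
for batch in make_batches(prompts, max_batch_size=2):
    # Five requests become three model invocations instead of five.
    print(run_model(batch))
```

Production inference servers usually batch on a time window as well as a size limit, so that a lone request is not held indefinitely waiting for a batch to fill.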

These optimization techniques can reduce inference cost by 30-50% depending on workload characteristics. For a company running billions of inference requests daily, such efficiency improvements translate to hundreds of millions of dollars in annual cost savings.
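A back-of-the-envelope calculation shows what the savings claim above implies. The per-request cost of $0.002 is purely an assumed figure for illustration, as is the round number of one billion requests per day. Neither comes from Mistral or Koyeb.

```python
def annual_savings(requests_per_day: float,
                   cost_per_request: float,
                   reduction: float) -> float:
    """Yearly savings in dollars for a given fractional cost reduction."""
    if not 0 <= reduction <= 1:
        raise ValueError("reduction must be between 0 and 1")
    return requests_per_day * 365 * cost_per_request * reduction


REQUESTS_PER_DAY = 1e9     # assumption: one billion requests per day
COST_PER_REQUEST = 0.002   # assumption: dollars per request before optimization

for reduction in (0.30, 0.50):
    saved = annual_savings(REQUESTS_PER_DAY, COST_PER_REQUEST, reduction)
    print(f"{reduction:.0%} reduction -> ${saved:,.0f} per year")
```

Under these assumptions the savings land in the low hundreds of millions of dollars per year. The result scales linearly with the per-request cost, so the claim depends heavily on what a request actually costs to serve.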

Scaling AI Inference

The third articulated benefit is helping Mistral scale AI inference. Inference scaling is fundamentally different from training scaling. During training, a model is developed once; scaling affects the machines used for development. During inference, a trained model must handle requests from thousands or millions of end users, each requiring near-instantaneous responses.

This creates several technical challenges:

- Load balancing: Distributing requests evenly across multiple inference servers to prevent any single server from becoming a bottleneck

- Auto-scaling: Automatically spinning up additional inference servers during peak demand and shutting them down during low demand

- Model loading and caching: Maintaining the right set of models in GPU memory to minimize latency while controlling memory usage

- Request routing: Directing requests to the inference server with the shortest expected wait time

- Graceful degradation: Maintaining service quality as load increases by implementing queuing, request prioritization, or serving lower-accuracy responses
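The request-routing item in this list can be sketched in a few lines. The example estimates each server's wait as queue length times average service time and picks the minimum. The `Server` class, its fields, and the server names are hypothetical, and a real router would use live measurements rather than static numbers.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass
class Server:
    name: str
    queued_requests: int
    avg_seconds_per_request: float

    def expected_wait(self) -> float:
        """Rough wait estimate: everything already queued must finish first."""
        return self.queued_requests * self.avg_seconds_per_request


def pick_server(servers: Sequence[Server]) -> Server:
    """Return the server with the shortest expected wait."""
    if not servers:
        raise ValueError("no servers available")
    return min(servers, key=lambda s: s.expected_wait())


fleet = [
    Server("gpu-a", queued_requests=4, avg_seconds_per_request=0.5),   # 2.0 s
    Server("gpu-b", queued_requests=10, avg_seconds_per_request=0.1),  # 1.0 s
    Server("gpu-c", queued_requests=1, avg_seconds_per_request=3.0),   # 3.0 s
]
print(pick_server(fleet).name)  # gpu-b
```

Note that the server with the longest queue wins here because it processes requests fastest. This is why routing on expected wait tends to behave better than routing on queue length alone.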

Koyeb's platform already handles these challenges at scale, having served customers with millions of daily requests. This operational knowledge—the hard-won insights from debugging production failures, handling traffic spikes, and optimizing costs—is extremely valuable and difficult to replicate from scratch.

Integration Architecture

Mistral's stated plan is to integrate Koyeb's capabilities into Mistral Compute over the coming months, with Koyeb becoming a "core component" of Mistral Compute. This suggests a phased integration rather than an overnight replacement. Technically, this likely means:

Phase 1: Migrate Koyeb's existing infrastructure layer onto Mistral's own data centers, including its Nordic facilities. Maintain API compatibility so existing Koyeb customers don't experience disruption.

Phase 2: Develop tight integration between Mistral models and Koyeb's deployment layer. Create optimized model serving configurations specifically for Mistral models, leveraging knowledge of Mistral's architecture to squeeze out additional performance.

Phase 3: Develop unified APIs and interfaces combining Mistral's model APIs with Koyeb's deployment and scaling capabilities. Customers should ultimately be able to deploy Mistral models with a single command or API call, with scaling, monitoring, and cost optimization handled transparently.

Market Impact: How This Reshapes AI Infrastructure

Consolidation of the AI Stack

The Koyeb acquisition accelerates a broader trend of vertical integration in AI infrastructure. Historically, AI projects involved multiple independent components: models from one provider, infrastructure from another, monitoring from a third, and so on. This modular approach had advantages (flexibility, avoiding vendor lock-in) but disadvantages (integration complexity, suboptimal performance, higher costs).

Mistral's move toward integration follows the pattern established by leading cloud providers. AWS, Google Cloud, and Azure all offer integrated stacks where compute, storage, databases, and specialized services (including AI services) work seamlessly together. The integrated approach allows providers to optimize across layers—the database tier can prefetch data the AI model will need, for example—in ways that independent components cannot.

For customers, integration can significantly reduce total cost of ownership and complexity. For competitors, it increases pressure to integrate similarly or risk becoming disconnected components in others' ecosystems.

Regional AI Infrastructure Competition

The combination of Mistral Compute, Swedish data centers, and Koyeb's deployment expertise positions Mistral as the leading European AI infrastructure provider. This has implications for regional competition:

- Google Cloud and Azure have European presence but are fundamentally U.S. companies. For use cases with strong sovereignty requirements, they may face regulatory or procurement constraints

- Smaller European cloud providers lack the AI expertise to compete effectively

- Mistral's independent status and European headquarters provide strategic advantages in procurement from European governments and enterprises

Over the coming years, Mistral may capture a significant share of European AI workloads, while U.S. AI workloads concentrate in the OpenAI/Microsoft and Google/DeepMind ecosystems. This regional fragmentation of the AI cloud market has profound implications for data sovereignty, geopolitics, and innovation incentives.

Impact on Open-Source AI Models

Interestingly, Mistral has maintained an open-source model release strategy alongside its commercial offerings. Mistral 7B, for example, is available for anyone to download and run. This contrasts with OpenAI's closed approach.

The Koyeb acquisition enables Mistral to monetize its open-source strategy more effectively. While the models are free, the infrastructure to run them profitably at scale is not. By offering optimized hosting for Mistral's open-source models through Mistral Compute, the company can capture economic value from open-source adoption. This is a proven business model: MongoDB (open-source database) and other companies have succeeded by open-sourcing software and monetizing commercial hosting and support.


Competitive Implications for AI Platform Developers

Threat to Platform-Agnostic Tools

Before the Koyeb acquisition, Koyeb was a platform-agnostic deployment solution. Developers could deploy models from Mistral, OpenAI, Anthropic, or any other provider. This agnosticism made Koyeb accessible to a broad market but meant the company couldn't leverage deep integration advantages.

The acquisition converts Koyeb from platform-agnostic to Mistral-optimized. While the platform will continue supporting other models, it will inevitably gain special advantages for Mistral models: better performance through co-optimization, better pricing through direct integration, better support from engineers familiar with Mistral's implementation details.

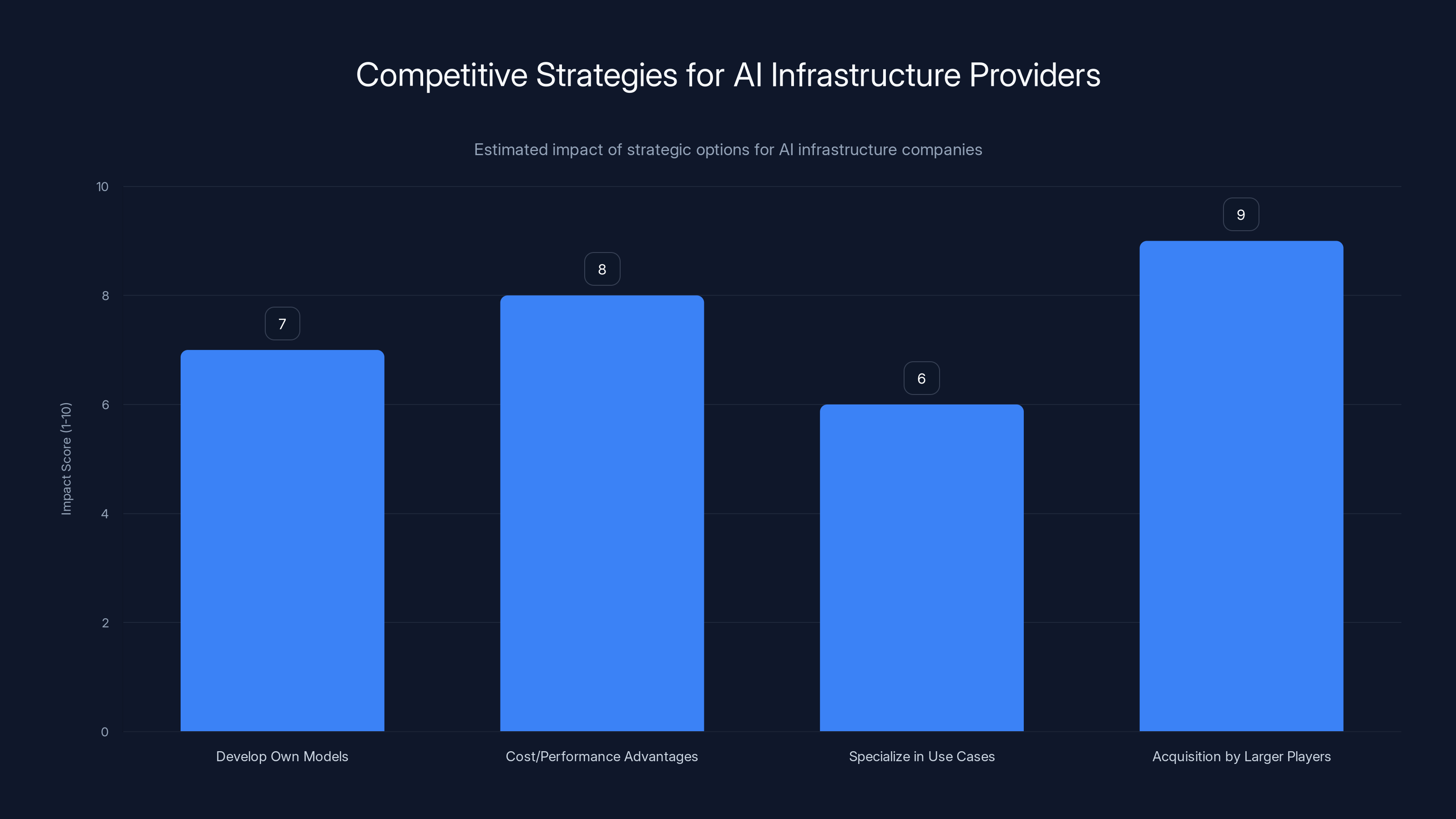

This change of control creates opportunity for competing platform-agnostic deployment solutions. Tools that remain genuinely agnostic across multiple model providers may appeal to customers wanting to avoid lock-in to Mistral's ecosystem. Alternatively, other model companies may pursue similar acquisition strategies—Anthropic, for example, might acquire its own deployment infrastructure to match Mistral's capabilities.

Challenges for Infrastructure-First Players

Companies that had positioned themselves as infrastructure providers for AI—without strong model capabilities—face pressure from this trend. If every major model company is integrating infrastructure, independent infrastructure providers must differentiate on cost, performance, or multi-model support advantages.

Companies like Anyscale (built around the Ray distributed computing framework), Modal (serverless compute for AI), and others need to either:

- Develop their own models to achieve vertical integration

- Build superior cost or performance advantages that outweigh integration benefits

- Specialize in specific use cases (e.g., real-time video processing, edge deployment) where generalist platforms don't compete

- Be acquired by larger players who need infrastructure expertise

The acquisition demonstrates that infrastructure expertise is valuable enough to acquire, validating the importance of these tools, but also pressuring their independent viability.

Opportunities for Alternative Solutions

The consolidation of Mistral's ecosystem also creates space for alternative solutions serving different needs. Teams that prefer model flexibility, multi-cloud deployment, or deeply cost-optimized infrastructure may prefer different options.

For developers seeking AI-powered automation capabilities combined with infrastructure flexibility, platforms like Runable offer a complementary approach. Rather than focusing on inference infrastructure, Runable provides AI agents for content generation, workflow automation, and developer productivity tools at $9 monthly, making it accessible for teams that need automation without full infrastructure control. Runable's architecture—supporting multiple AI models and external services—maintains the flexibility that platform-agnostic tools provide, while integrating with whatever infrastructure companies choose to deploy on.

Sector-Specific Implications: Vertical Markets

Financial Services and Regulated Industries

Financial institutions face strict requirements for infrastructure location, audit trails, data residency, and regulatory compliance. The combination of Mistral's European base and Nordic data centers directly addresses these requirements. Banks, insurance companies, and fintech firms can now deploy AI models for fraud detection, risk analysis, and customer service with infrastructure that meets GDPR, MiFID II, and other financial regulations.

The Koyeb integration's on-premises deployment capability is particularly valuable in finance, where some institutions cannot move workloads to external cloud providers due to legacy architecture, regulatory constraints, or risk tolerance. Mistral Compute can offer a hybrid model where critical inference workloads run on-premises while development, optimization, and support happen through Mistral's cloud services.

Healthcare and Life Sciences

Healthcare organizations are increasingly adopting AI for diagnostic imaging analysis, drug discovery simulation, and clinical decision support. The HIPAA regulations governing healthcare data in the U.S., and equivalent regulations like GDPR in Europe, create stringent requirements for data handling and infrastructure location.

Mistral's offering is particularly suited to European healthcare providers. The combination of Mistral's European infrastructure, Koyeb's deployment capabilities, and the possibility of on-premises inference enables healthcare organizations to adopt Mistral-powered AI applications while meeting regulatory requirements. As AI becomes essential to modern medicine, with applications like AI-powered pathology increasingly becoming a standard of care, having trusted, compliant infrastructure becomes critical.

Government and Defense

Government procurement of AI technology increasingly includes requirements for technology sovereignty and supply chain security. The U.S. government prefers American companies, the Chinese government prefers Chinese companies, and European governments increasingly prefer European companies.

Mistral's explicit positioning as a European company headquartered in Paris, with infrastructure in Sweden, makes it an attractive option for European government agencies. This could include defense ministry applications (subject to appropriate controls), civilian government services, and publicly funded research institutions. The long-term opportunity here is significant—government AI spending is growing rapidly, and having a credible European alternative to American providers creates competitive advantages.


Financial and Valuation Implications

Acquisition Terms and Strategic Value

Mistral did not disclose the financial terms of the Koyeb acquisition, a common practice when acquiring smaller companies. However, we can infer some insights from the context:

- Koyeb raised $8.6 million in total, which suggests an acquisition price in the $20-50M range (typically 2-6x capital raised for successful startups acquired at inflection points)

- Mistral's $13.8B valuation means this acquisition is strategically significant but financially modest—perhaps 0.1-0.4% of Mistral's valuation

- The strategic value exceeds the financial cost because Mistral gains proven infrastructure expertise, an experienced team, and acceleration of Mistral Compute's time-to-market
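The estimate in this list can be reproduced with a short calculation. It uses only figures cited in this article: the $8.6 million Koyeb raised in total, the 2-6x multiple, and Mistral's $13.8 billion valuation. The actual price was not disclosed, so the output is an inferred range, not a reported number.

```python
CAPITAL_RAISED = 8.6e6        # total raised by Koyeb, per the article
MISTRAL_VALUATION = 13.8e9    # Mistral's valuation, per the article


def price_range(capital: float, low_multiple: float = 2, high_multiple: float = 6):
    """Estimated acquisition price range as multiples of capital raised."""
    return capital * low_multiple, capital * high_multiple


def share_of_valuation(price: float, valuation: float) -> float:
    """Price as a percentage of the acquirer's valuation."""
    return price / valuation * 100


low, high = price_range(CAPITAL_RAISED)
print(f"Estimated price: ${low / 1e6:.1f}M to ${high / 1e6:.1f}M")
print(f"Share of valuation: {share_of_valuation(low, MISTRAL_VALUATION):.2f}% "
      f"to {share_of_valuation(high, MISTRAL_VALUATION):.2f}%")
```

The multiples give roughly $17M to $52M, which rounds to the $20-50M range and corresponds to about 0.1% to 0.4% of Mistral's valuation.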

For Mistral investors, the acquisition makes sense because it strengthens Mistral's competitive position in what's shaping up as a two-horse race between American (OpenAI/Microsoft) and European (Mistral) AI infrastructure providers.

Impact on Mistral's Path to Profitability

Mistral, like most deep-tech startups, is not yet profitable. The company has raised massive capital for AI research, model development, and now infrastructure investment. The path to profitability likely involves:

- Mistral API: Offering access to Mistral models through an API for a per-token fee, similar to OpenAI's model

- Mistral Compute: Offering a managed platform for running Mistral models and other workloads, with margins increasing as infrastructure utilization improves

- Enterprise support and customization: Offering high-margin services helping enterprises deploy and optimize Mistral-based AI systems

Koyeb's existing business likely generated meaningful revenue from customers paying for deployment and scaling services. Integrating this revenue into Mistral Compute consolidates revenue streams and improves overall unit economics. The combination of model revenue, infrastructure revenue, and services revenue creates multiple paths to scale while distributing revenue risk.

Competitive Pressure on Acquisition Pricing

The acquisition may also reflect competitive dynamics. If Google or another competitor had been interested in acquiring Koyeb, prices could have escalated dramatically. By moving quickly to acquire Koyeb before competitors mobilized, Mistral may have secured favorable terms while signaling to the market that it's aggressively moving on strategic infrastructure priorities.

Developer Experience and Migration Paths

For Existing Koyeb Customers

Koyeb's stated commitment is that its platform will continue operating, meaning existing customers should face minimal disruption. In practice, this likely means:

- API stability: Koyeb's APIs will continue functioning, at least for a transition period

- Service continuation: Deployed applications will continue running; the company won't abruptly shut down services

- Feature development: Features will be added as part of Mistral Compute rather than as standalone Koyeb features

- Migration incentives: Over time, customers will be encouraged to migrate to integrated Mistral Compute interfaces through pricing, features, or support

For Koyeb's current customers, the acquisition is likely positive on balance. Mistral's resources will accelerate platform development, infrastructure reliability will improve with access to Mistral's data center investments, and pricing may become more competitive. The main risk is eventual deprecation of Koyeb APIs requiring migration effort, but this is a common pattern in acquisitions and usually managed with extended transition periods.

For Potential Mistral Customers

The acquisition signals that Mistral is building a complete end-to-end platform for AI deployment. Rather than offering just models or just infrastructure, Mistral is developing an integrated offering where models, infrastructure, and operations tools work seamlessly together.

This integration improves the value proposition for customers who want to adopt Mistral models because it eliminates the need to integrate multiple third-party tools. A team can move its Mistral inference workload from development to production entirely within the Mistral ecosystem without evaluating and integrating separate deployment tools.

However, for customers with strong preferences for specific deployment infrastructure, monitoring tools, or multi-model requirements, the consolidation within Mistral's ecosystem may be less attractive than maintaining flexibility across independent tools.

Implications for Integration Complexity

One practical consideration for developers is integration complexity. Integrated platforms like Mistral Compute can achieve better performance and lower cost for their first-party use cases. However, integration often comes at the cost of flexibility. If developers want to:

- Use Mistral models on infrastructure outside Mistral Compute (e.g., existing enterprise data centers)

- Combine Mistral models with non-Mistral tools or services

- Customize infrastructure in ways Mistral Compute doesn't support

- Migrate workloads between cloud providers

...they may find that vertical integration limits their options. This creates an opportunity for platforms that remain deliberately agnostic. For teams with multi-cloud strategies or strong infrastructure customization needs, alternatives like Runable, which provides workflow automation and integration capabilities without locking teams into specific infrastructure, retain strategic value by preserving that flexibility.


The Broader Consolidation Trend in AI

Vertical Integration as Industry Standard

The Mistral-Koyeb acquisition is one data point in a broader trend toward vertical integration in artificial intelligence. Major technology companies have long followed this pattern:

- Apple: Designs its own chips (A-series), software (iOS), services, and hardware as one vertically integrated stack

- Google: Builds chips (TPUs), cloud infrastructure, software frameworks (TensorFlow), and applications

- Amazon: AWS provides infrastructure, and Amazon companies run applications on that infrastructure

In AI specifically, we see:

- OpenAI: Develops models, and partners with Microsoft Azure for infrastructure (not fully vertical but deeply integrated)

- Google DeepMind: Develops models within Google, using Google's infrastructure and TPU chips

- Meta: Develops models, releases some open-source, and powers Facebook/Instagram with proprietary models

- Mistral: Now moving toward vertical integration by acquiring Koyeb

This trend toward integration reflects several forces:

- Performance optimization: Integrated systems can optimize across layers (model architecture, hardware, infrastructure) in ways modular systems cannot

- Cost reduction: Eliminating intermediaries reduces markup and inefficiency

- Competitive advantage: Deep integration makes it harder for competitors to win customers away

- Network effects: As the customer base grows, the value of the infrastructure ecosystem increases

Geopolitical Dimensions and Technology Sovereignty

European Technological Independence

The Mistral acquisition must be understood in the context of European technology policy. For decades, Europe has relied on American technology companies (Microsoft, Google, Amazon, Apple) for core infrastructure and services. This dependency creates strategic vulnerability—European governments and companies are subject to U.S. government regulations, sanctions, and export controls.

In AI specifically, Europe faces a critical moment. If the AI market consolidates around American players (OpenAI, Google, potentially others), Europe's technological autonomy and its ability to set its own AI governance policies diminish. Conversely, if Europe develops credible native AI champions, it maintains leverage and independence.

Mistral is explicitly positioned as Europe's AI champion. Mistral's funding, ownership, and operations are European. The company's infrastructure investments (Swedish data centers, plans for additional European infrastructure) keep European data within Europe. This creates space for European regulation and governance of AI without external interference.

Data Residency and GDPR Compliance

A concrete manifestation of this geopolitical dimension is data handling. The GDPR (General Data Protection Regulation) requires personal data of EU residents to be handled according to specific rules, including restrictions on transferring that data outside the European Economic Area and oversight by data protection authorities. For companies using OpenAI APIs, data may be transmitted to the United States, which can complicate GDPR compliance.

Mistral Compute operating within European infrastructure lets European companies use state-of-the-art AI models while keeping data in Europe, which simplifies GDPR compliance. This is strategically important for European businesses that need AI capabilities but face regulatory or competitive pressure to avoid U.S. data transfers.
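A team that wants to enforce this kind of residency policy in its own deployment tooling could encode it as a simple configuration check. The Python sketch below is illustrative only: the region identifiers, the `Endpoint` record, and `residency_violations` are hypothetical, and passing the check shows only that a deployment matches the stated policy, not that it is legally compliant.

```python
from dataclasses import dataclass
from typing import Iterable, List

# Assumption: these region identifiers are invented examples, not real provider regions.
ALLOWED_REGIONS = frozenset({"eu-north", "eu-west", "eu-central"})


@dataclass(frozen=True)
class Endpoint:
    name: str
    region: str
    handles_personal_data: bool


def residency_violations(endpoints: Iterable[Endpoint]) -> List[str]:
    """Names of endpoints that process personal data outside the allowed regions."""
    return [
        e.name
        for e in endpoints
        if e.handles_personal_data and e.region not in ALLOWED_REGIONS
    ]


deployment = [
    Endpoint("chat-inference", "eu-north", handles_personal_data=True),
    Endpoint("analytics-batch", "us-east", handles_personal_data=True),
    Endpoint("public-docs-search", "us-east", handles_personal_data=False),
]

print(residency_violations(deployment))  # only the personal-data endpoint outside the EU set
```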

Global Competition for AI Dominance

The longer-term implication is that AI may become a geopolitically divided technology, similar to telecommunications infrastructure. Rather than a single global AI market, we might see distinct American, European, Chinese, and potentially other regional AI ecosystems with some interoperability but fundamentally independent development and governance.

This division could be healthy (diversity, regional autonomy, competitive pressure) or problematic (fragmentation, reduced interoperability, inefficiency through duplication). Either way, Mistral's acquisition of Koyeb is a strategic move in this longer-term game of technological autonomy.

The integration of Koyeb with Mistral Compute significantly enhances GPU optimization and hybrid deployment capabilities, crucial for enterprise customers. Estimated data based on topic insights.

Alternative Approaches to AI Infrastructure

Comparing Mistral's Integrated Model to Alternatives

While Mistral is pursuing vertical integration of models and infrastructure, alternative approaches exist, each with different trade-offs:

Open-Source and Community Models: Developers can use open-source models (Meta's Llama, Mistral's own open-source releases, or smaller community models) with any inference infrastructure. This maximizes flexibility but requires significant technical expertise to optimize and scale effectively.

Platform-Agnostic Infrastructure: Companies like Modal and Anyscale provide inference infrastructure that works with any model. This maintains flexibility but may sacrifice some performance optimization available to integrated platforms.

Specialized Infrastructure Providers: Companies focusing on specific use cases (e.g., real-time video processing) or deployment patterns (e.g., edge inference) continue to offer value even as broader infrastructure consolidates.

Hybrid Approaches: Teams can use Mistral models on non-Mistral infrastructure, or use Mistral infrastructure for non-Mistral models. This is possible but less optimized than full integration.

For organizations requiring flexibility across multiple models and infrastructure options, Runable provides an interesting alternative approach. Rather than offering infrastructure, Runable specializes in AI-powered automation across content generation, workflow automation, and developer productivity, all accessible at $9 monthly. This keeps the focus on automation capabilities rather than locking teams into specific infrastructure, maintaining flexibility while providing AI benefits. Teams can use Runable's automation capabilities regardless of which models or infrastructure they've chosen for core deployment.

Cost Considerations and ROI

The financial impact of infrastructure choices is substantial. Mistral Compute's integration should provide cost advantages through optimized GPU utilization, reduced overhead, and efficient scaling. However, these advantages only accrue if teams are entirely within Mistral's ecosystem.

Teams using Mistral models but requiring infrastructure flexibility, or needing to support multiple models, may find that flexibility costs outweigh integration benefits. Infrastructure costs for AI are typically 30-70% of total AI system cost (depending on workload), so getting infrastructure right is critical to economic efficiency.
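A back-of-the-envelope calculation shows why the infrastructure share matters. If infrastructure is a fraction s of total cost and an optimization cuts infrastructure cost by a fraction r, total cost falls by s × r. The Python sketch below applies that identity to the 30-70% range quoted above; the 40% infrastructure saving is an illustrative assumption, not a figure from Mistral or Koyeb.

```python
def total_cost_reduction(infra_share: float, infra_saving: float) -> float:
    """Fraction by which total cost falls when only the infrastructure part gets cheaper.

    infra_share:  infrastructure cost as a fraction of total cost (0..1)
    infra_saving: fractional reduction in infrastructure cost (0..1)
    """
    if not (0.0 <= infra_share <= 1.0 and 0.0 <= infra_saving <= 1.0):
        raise ValueError("both arguments must be between 0 and 1")
    return infra_share * infra_saving


# Illustrative assumption: a 40% cut in infrastructure cost.
for share in (0.30, 0.50, 0.70):
    reduction = total_cost_reduction(share, 0.40)
    print(f"infra share {share:.0%} -> total cost falls by {reduction:.0%}")
```

Under that assumption, the same optimization is worth roughly 12% of total cost for a workload at the low end of the range and roughly 28% at the high end. This is why infrastructure-heavy workloads gain the most from an integrated platform.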

Timeline and Implementation Roadmap

Near-Term (Months 1-6)

In the immediate aftermath of the acquisition announcement, Mistral's priorities are likely:

- Retain Koyeb talent: Ensure the 13 Koyeb employees and three founders integrate smoothly into Mistral

- Maintain service continuity: Ensure existing Koyeb customers continue receiving service without disruption

- Understand platform details: Deep technical assessment of Koyeb's codebase, infrastructure, and operational procedures

- Identify quick wins: Find high-impact improvements Mistral can implement quickly using Koyeb's platform

Medium-Term (Months 6-18)

During this period, Mistral will likely:

- Migrate infrastructure: Move Koyeb's operations onto Mistral's data center infrastructure in Sweden

- Integrate APIs: Begin unifying Koyeb and Mistral Compute interfaces

- Develop joint features: Build capabilities leveraging the combination of Mistral models and Koyeb deployment expertise

- Optimize for Mistral models: Create specialized configurations for running Mistral models with optimal performance

Long-Term (18+ months)

Long-term evolution will likely include:

- Complete platform consolidation: Koyeb becomes seamlessly integrated into Mistral Compute

- Feature expansion: Leverage combined expertise to expand beyond inference into fine-tuning, model monitoring, and advanced optimization

- Competitive differentiation: Create capabilities competitors cannot easily replicate through integration

- Geographic expansion: Extend infrastructure presence beyond Sweden to serve global customers while maintaining sovereignty positioning

CTO Timothée Lacroix's statement that Koyeb will become a "core component" of Mistral Compute suggests a substantial integration effort over 12-24 months.

Emerging Challenges and Risks

Integration Execution Risk

Acquisitions frequently encounter technical integration challenges, cultural misalignment, and talent retention issues. Mistral faces specific risks:

- Technical debt: Integrating Koyeb's infrastructure with Mistral's may expose incompatibilities requiring significant rework

- Talent retention: Key Koyeb employees might seek opportunities elsewhere if integration is poorly managed

- Customer disruption: Existing Koyeb customers might migrate to competitors if service quality degrades during integration

- Feature parity: Maintaining equivalent capabilities during migration is complex and error-prone

Because this is Mistral's first acquisition, there is no track record by which to judge its integration capabilities. Larger AI companies have integrated acquired startups before (OpenAI acquired the database company Rockset in 2024, for instance), so the general process is well understood, but each integration is unique.

Competitive Response

Mistral's move is likely to trigger competitive responses from other AI companies and infrastructure providers:

- Google/Anthropic/Meta: May accelerate infrastructure investments or acquisitions to match Mistral's vertical integration

- OpenAI/Microsoft: May deepen integration between OpenAI models and Azure infrastructure

- Smaller platforms: May specialize in niches (specific hardware, specific use cases) where integration doesn't apply

These responses could include pricing pressure, accelerated feature development, and rapid consolidation of the infrastructure market.

Regulatory Scrutiny

Large acquisitions can attract regulatory attention, particularly in sensitive sectors like technology and AI. European regulators may review the deal with several questions in mind:

- Competition concerns: Is the combination of Mistral models and Koyeb infrastructure anticompetitive?

- Data protection: Does the integration create new data protection risks?

- AI governance: Does the combination strengthen or weaken the ability to govern AI responsibly?

While the acquisition is unlikely to face major regulatory hurdles (Mistral and Koyeb are relatively small compared to tech giants), European regulators' increasing focus on AI may create scrutiny.

Key Strategic Insights and Lessons

The Importance of Ownership and Control

Mistral's decision to acquire infrastructure expertise rather than building it entirely internally reflects a fundamental lesson: owning the full stack matters. While Mistral could theoretically have hired engineers and built equivalent capability over years, acquiring Koyeb transfers proven expertise immediately. This accelerated timeline is valuable in a competitive race where first-mover advantages matter.

This lesson applies beyond Mistral: companies in rapidly evolving markets often face build-vs-buy decisions. The conventional wisdom, that building internally gives more control and understanding, must be weighed against speed and reduced risk. Mistral's acquisition strategy suggests it prioritizes speed and de-risking over full internal development.

Infrastructure as Strategic Asset

The acquisition validates that infrastructure is a strategic asset, not just a commodity expense. For years, cloud computing commoditized infrastructure—companies could rent servers from multiple providers interchangeably. AI has reversed this trend: optimized infrastructure for AI inference is strategic because it directly impacts cost, performance, and competitive positioning.

This shift has been underway since GPU scarcity made compute hardware valuable, but the Mistral-Koyeb combination crystallizes the point: AI companies must control or deeply integrate with infrastructure to succeed competitively.

European Technological Sovereignty as Business Model

Mistral's explicit focus on European infrastructure and governance is notable. Rather than trying to out-compete American companies on technology alone, Mistral is building a defensible market position around sovereignty and European values. For many European customers, having a credible European alternative to American AI providers is worth real money.

This suggests that technology markets increasingly incorporate geopolitical and values-based differentiation, not just pure technical capability. Companies building technology that aligns with regional preferences and governance models may achieve success through differentiation rather than head-to-head competition on capability.

Practical Implications for Teams and Organizations

Evaluating Your Infrastructure Strategy

For teams deploying AI models into production, the Mistral-Koyeb consolidation raises important questions about infrastructure choices:

Do you need multi-model flexibility? If your organization uses models from multiple providers (OpenAI, Mistral, Anthropic, local models), choosing Mistral Compute ties you more closely to Mistral models. Evaluate whether the integration benefits outweigh flexibility costs.

Do you have specific infrastructure requirements? If your organization has existing data center infrastructure, specific compliance requirements, or performance requirements that standard cloud platforms don't meet, you may need flexibility that integrated platforms don't provide. On-premises deployment options (which Mistral Compute is adding) can help, but full flexibility may require remaining with platform-agnostic infrastructure.

What's your total cost of ownership? Integrated platforms often provide cost advantages through optimization, but only if you're using them at scale. For experimental or small-scale AI projects, flexible infrastructure with lower commitment may be more cost-effective.
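The scale argument can be made concrete with a simple break-even model. The Python sketch below assumes a hypothetical integrated plan with a fixed monthly commitment and a lower per-request price, and a hypothetical pay-as-you-go plan with no commitment. All prices are invented for illustration and do not reflect any vendor's pricing.

```python
import math


def monthly_cost(requests: int, fixed_fee: float, price_per_1k: float) -> float:
    """Monthly cost for a plan with a fixed fee plus a per-1,000-request price."""
    return fixed_fee + price_per_1k * requests / 1000


def break_even_requests(fixed_a: float, price_a: float,
                        fixed_b: float, price_b: float) -> float:
    """Request volume at which plan A and plan B cost the same.

    Returns math.inf if the per-request prices are equal (the cost lines never cross).
    A negative result means one plan is cheaper at every volume.
    """
    if price_a == price_b:
        return math.inf
    return 1000 * (fixed_b - fixed_a) / (price_a - price_b)


# Hypothetical plans (assumed numbers, not real pricing):
#   integrated: 2,000 fixed per month, 0.50 per 1k requests
#   flexible:   no fixed fee,          1.50 per 1k requests
volume = break_even_requests(2000.0, 0.50, 0.0, 1.50)
print(f"break-even at {volume:,.0f} requests per month")

for requests in (500_000, 5_000_000):
    integrated = monthly_cost(requests, 2000.0, 0.50)
    flexible = monthly_cost(requests, 0.0, 1.50)
    cheaper = "integrated" if integrated < flexible else "flexible"
    print(f"{requests:>9,} requests: integrated={integrated:,.0f} flexible={flexible:,.0f} -> {cheaper}")
```

Below the break-even volume the commitment dominates and the flexible plan is cheaper. Above it, the lower unit price of the integrated plan wins.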

Deciding Between Integrated and Modular Approaches

Integrated platforms like Mistral Compute offer advantages:

- Better performance through co-optimization

- Lower operational complexity through unified interfaces

- Potentially lower cost through optimized resource utilization

- Vendor support from experts who understand the whole system

Modular approaches (combining point solutions from different vendors) offer advantages:

- Flexibility to choose best-of-breed for each component

- Reduced vendor lock-in and switching costs

- Ability to specialize infrastructure to specific requirements

- Independence from any single vendor's product roadmap

The right choice depends on your organization's specific constraints, scale, and preferences. Large organizations with sophisticated infrastructure teams often prefer modularity; smaller teams often prefer integrated platforms that reduce operational burden.
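The criteria above can be turned into a rough first-pass checklist. The Python sketch below is a deliberately simple heuristic: the `TeamProfile` fields, the equal weighting of signals, and the `suggest_approach` function are assumptions made for illustration, not an established evaluation method.

```python
from dataclasses import dataclass


@dataclass
class TeamProfile:
    uses_multiple_model_providers: bool
    has_custom_infrastructure_needs: bool
    has_dedicated_infrastructure_team: bool
    wants_single_vendor_support: bool


def suggest_approach(profile: TeamProfile) -> str:
    """Count signals for each approach and return 'modular', 'integrated', or 'either'."""
    modular_signals = sum([
        profile.uses_multiple_model_providers,
        profile.has_custom_infrastructure_needs,
        profile.has_dedicated_infrastructure_team,
    ])
    integrated_signals = sum([
        not profile.uses_multiple_model_providers,
        not profile.has_dedicated_infrastructure_team,
        profile.wants_single_vendor_support,
    ])
    if modular_signals > integrated_signals:
        return "modular"
    if integrated_signals > modular_signals:
        return "integrated"
    return "either"


small_team = TeamProfile(False, False, False, True)
large_org = TeamProfile(True, True, True, False)

print(suggest_approach(small_team))
print(suggest_approach(large_org))
```

A real evaluation would weight these factors by cost and risk rather than counting them equally, but even a crude checklist makes the assumptions behind a platform decision explicit.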

Developing a Platform Strategy

For organizations making long-term bets on AI, the Mistral acquisition suggests thinking about platform strategy. Rather than evaluating isolated tools, evaluate the ecosystem of tools and how they integrate:

- Which models will you rely on? (Mistral, OpenAI, Anthropic, others)

- Which infrastructure will host them? (Mistral Compute, Azure, AWS, GCP, on-premises)

- Which surrounding tools will support deployment, monitoring, and optimization?

- How will these components work together to minimize friction and maximize performance?

Building your own integrated platform, choosing one vendor's integrated offering, or orchestrating best-of-breed components are all valid strategies, but the choice should be intentional based on your organization's capabilities and constraints.

Looking Forward: The Future of AI Infrastructure

Consolidation and Specialization

The long-term trajectory of AI infrastructure likely involves consolidation at the top and specialization in niches. The top few AI companies (OpenAI, Google, potentially Mistral and others) will integrate vertically and capture large market share. Smaller companies will specialize in specific use cases, deployment patterns, or regions where they can compete effectively.

This mirrors the pattern in cloud computing, where AWS, Google Cloud, and Azure dominate overall cloud markets while smaller providers survive by specializing (e.g., Heroku for developer simplicity, Cloudflare for edge computing).

The Role of Open-Source

Open-source models and infrastructure software will continue playing a critical role even as commercial platforms consolidate. Open-source provides:

- Optionality: Developers can use open-source software to avoid commercial platform lock-in

- Innovation: Open-source communities drive innovation in areas where commercial incentives are weak

- Customization: Open-source enables deep customization for specific requirements

- Competition: Open-source alternatives create competitive pressure on commercial platforms

Mistral's own strategy of releasing open-source models while building commercial infrastructure demonstrates how companies can harness open-source advantages while building commercial offerings.

Emerging Opportunities

As AI infrastructure consolidates, new opportunities emerge for:

- Integration layers: Tools that help customers integrate across multiple platforms and models

- Optimization specialists: Companies helping customers optimize AI workloads for cost, performance, or specific constraints

- Vertical solutions: AI applications for specific industries built on top of commodity AI infrastructure

- Governance and compliance: Tools helping organizations govern AI deployment, ensure compliance, and maintain security

- Automation platforms: Solutions like Runable that abstract away infrastructure details and focus on delivering AI benefits through easy-to-use interfaces

Runable's position as an AI-powered automation platform (available at just $9/month) illustrates this emerging category: rather than competing on infrastructure, Runable focuses on delivering specific AI benefits (content generation, workflow automation, developer productivity) that customers can use regardless of their underlying infrastructure choices. This abstraction creates value for customers who want AI capabilities without managing infrastructure complexity.

Conclusion: Strategic Implications and Takeaways

Mistral AI's acquisition of Koyeb represents a pivotal moment in the maturing AI infrastructure market. What began as a company focused purely on large language model development is now positioned as a full-stack AI platform provider combining world-class models with production-grade infrastructure, backed by

The acquisition crystallizes several important trends: the move toward vertical integration in AI, the strategic importance of infrastructure ownership, the geopolitical dimensions of AI technology development, and the consolidation of the AI market around a small number of large players with full-stack capabilities.

For organizations evaluating AI deployment options, the Mistral-Koyeb combination should be taken seriously as a credible alternative to American-centric platforms. The combination of Mistral's model capabilities, Koyeb's deployment expertise, and European infrastructure positioning creates a compelling offering for customers prioritizing sovereignty, integration, and vendor independence from American technology companies.

However, vertical integration brings trade-offs. Organizations with multi-model strategies, specific infrastructure requirements, or organizational preferences for modular solutions may find value in remaining with flexible, platform-agnostic approaches. The infrastructure market has space for both integrated platforms and specialized alternatives serving specific needs.

For teams seeking AI capabilities without deep infrastructure management requirements, platforms offering abstracted interfaces become increasingly valuable. Solutions like Runable—with its focus on AI-powered automation for content generation, workflows, and developer productivity at $9 monthly—maintain relevance by abstracting infrastructure complexity and focusing on delivering specific business value regardless of underlying deployment choices.

The coming years will reveal whether Mistral's acquisition signals the beginning of a consolidation wave where every major AI company must control end-to-end infrastructure, or whether significant market space remains for infrastructure specialists, open-source alternatives, and specialized solutions serving specific needs. History suggests both dynamics will occur: consolidation at the top among companies with resources to build full stacks, and continued specialization and competition in niches too specific or dynamic for monolithic platforms to serve.

The broader lesson from Mistral's strategic pivot: in competitive technology markets, owning the full value chain from innovation through delivery increasingly matters. Whether through acquisition (as Mistral did with Koyeb), partnership (as OpenAI does with Microsoft), or internal development, the companies that will lead the AI era will be those that integrate models, infrastructure, and the wider ecosystem supporting them into coherent platforms delivering clear value to customers.

FAQ

What is the Mistral AI and Koyeb acquisition?

Mistral AI, a French company valued at

Why did Mistral acquire Koyeb?

Mistral acquired Koyeb to accelerate its Mistral Compute cloud infrastructure offering announced in June 2025. Koyeb's expertise in deploying applications at scale, optimizing GPU usage, managing infrastructure, and developing serverless deployment tools directly complements Mistral's AI models. The acquisition gives Mistral immediate access to proven infrastructure technology, operational expertise, and an experienced engineering team rather than building equivalent capability over years.

How does Koyeb's technology strengthen Mistral Compute?

Koyeb's platform provides several capabilities that strengthen Mistral Compute: on-premises deployment allowing customers to run Mistral models on their own hardware; GPU optimization techniques reducing inference costs by 30-50%; automatic scaling distributing load across multiple inference servers; and developer experience tools simplifying deployment. These capabilities integrate with Mistral's model technology to create an optimized end-to-end platform where models and infrastructure co-optimize for performance and cost.

What is Mistral's European infrastructure strategy?

Mistral is positioning itself as Europe's AI champion with European-controlled infrastructure, recently announcing a $1.4 billion investment in Swedish data centers. This strategy serves European customers who require data residency within Europe (for GDPR compliance and data sovereignty), governments preferring European technology alternatives, and enterprises seeking independence from U.S.-controlled cloud infrastructure. The combination of Koyeb's deployment expertise and Mistral's European infrastructure creates a credible sovereign AI platform.

Will existing Koyeb customers be affected by the acquisition?

Koyeb has stated that its platform will continue operating and existing customers should face minimal disruption. The company will maintain API compatibility, continue service delivery, and evolve features as part of integrated Mistral Compute. However, over time, customers will likely migrate to unified Mistral Compute interfaces and may encounter feature transitions as the platforms integrate. The acquisition is generally positive for existing Koyeb customers who gain access to Mistral's resources and infrastructure.

What does this acquisition mean for the AI infrastructure market?

The acquisition signals a trend toward vertical integration in AI, where major AI companies increasingly control both models and infrastructure rather than relying on third-party platforms. This consolidation improves performance and cost for integrated users but reduces flexibility and increases switching costs. The market will likely divide into consolidated offerings from large integrated players and specialized solutions for specific use cases, regions, or deployment patterns where integration doesn't apply.

How does this affect developers choosing AI platforms?

For developers, the acquisition increases the importance of strategic platform choices. Teams must evaluate whether they want full integration (Mistral Compute's approach), flexible multi-model support, or specialized infrastructure for specific use cases. Organizations using Mistral models benefit from deep integration; teams using models from multiple providers may value platform-agnostic solutions; teams prioritizing operational simplicity might consider abstracted platforms like Runable that provide AI automation capabilities without requiring deep infrastructure knowledge.

What are the geopolitical implications of Mistral's strategy?

Mistral's European positioning and infrastructure investments represent a deliberate geopolitical strategy to build European technological independence from U.S.-dominated AI platforms. This enables European governments and enterprises to adopt cutting-edge AI while maintaining data sovereignty and regulatory control. Success would establish an alternative to U.S.-centric AI ecosystems and validate that regional AI champions can compete globally while prioritizing local values and governance preferences.

What alternatives exist to Mistral Compute after this acquisition?

Alternatives include: platform-agnostic infrastructure providers like Modal or Anyscale; open-source models deployed on any infrastructure; cloud providers' AI services (Azure OpenAI, Google Vertex AI, AWS SageMaker); and specialized solutions focusing on specific use cases or deployment patterns. For teams needing AI automation without infrastructure management, platforms like Runable ($9/month) offer content generation, workflow automation, and developer productivity tools that work alongside any infrastructure choice.

What timeline should we expect for Mistral Compute and Koyeb integration?

Mistral states that Koyeb will become a "core component" of Mistral Compute over the coming months, suggesting a phased 12-24 month integration. Near-term focuses on service continuity and planning; medium-term involves infrastructure migration and API unification; long-term evolution includes complete platform consolidation and competitive differentiation. This timeline is typical for thoughtfully managed acquisitions where the acquirer prioritizes both integration and customer continuity.

How should organizations choose between integrated and modular AI infrastructure?

Choose an integrated platform (like Mistral Compute) if you primarily use one model provider, want simplified operations, value cost optimization, and prefer vendor support. Choose a modular approach if you use models from multiple providers, have specific infrastructure requirements, need geographic flexibility, or prioritize avoiding vendor lock-in. Large organizations typically benefit from modular approaches; smaller teams often prefer integration because it reduces operational burden. The right choice depends on your specific scale, constraints, and strategic priorities.

Key Takeaways

- Mistral AI's acquisition of Koyeb signals a major trend toward vertical integration in AI infrastructure, combining models with deployment technology

- The $1.4B Swedish data center investment positions Mistral as Europe's sovereign AI alternative to U.S.-dominated platforms like OpenAI/Azure

- Koyeb's deployment expertise enables Mistral to optimize GPU utilization, reduce inference costs by 30-50%, and support on-premises deployment

- Integrated platforms offer better optimization and a lower operational burden but sacrifice flexibility; modular approaches preserve choice at the cost of complexity

- European governments and enterprises increasingly demand AI infrastructure outside U.S. control, creating strategic market advantage for Mistral's positioning

- Consolidation through vertical integration will concentrate market share among the largest players, while specialized alternatives will serve niche requirements

- Organizations must strategically evaluate whether full integration benefits outweigh flexibility costs based on their specific AI deployment requirements

- For cost-conscious teams avoiding infrastructure lock-in, platforms like Runable provide AI automation value ($9/month) independent of infrastructure choices
