Pentagon vs. Anthropic: The AI Weapons Standoff [2025]

Last January, something remarkable happened in a government war room: the Pentagon used artificial intelligence to conduct a military operation. Claude, the AI model built by Anthropic, allegedly played a role in tracking and capturing Nicolás Maduro, Venezuela's then-president, as reported by The Wall Street Journal.

But there's a problem. Anthropic never agreed to this.

This incident exposed a fundamental crack in the relationship between the US military and one of the world's most powerful AI companies. The Pentagon wants AI models that can do anything—including autonomous weapons and mass surveillance. Anthropic says absolutely not. Now, a $200 million contract is at risk, and both sides are digging in, as detailed by Axios.

This isn't just about one company or one contract. It's about what happens when the world's most advanced militaries want to weaponize artificial intelligence and the people who built that AI refuse to let them. It's about power, control, and the future of warfare itself.

Let's break down what's actually happening, why it matters, and what comes next.

TL;DR

- The Core Issue: The Pentagon demanded Anthropic allow Claude to be used for "all lawful purposes," including autonomous weapons and mass surveillance.

- Anthropic's Red Lines: The company refuses to permit its models in fully autonomous weapons systems or domestic mass surveillance operations.

- The Financial Pressure: A $200 million Pentagon contract with Anthropic hangs in the balance as tensions escalated in 2025, as noted by Reuters.

- The Larger Conflict: This mirrors a broader split between Anthropic and OpenAI, Google DeepMind, and xAI, which are showing more flexibility with military demands.

- What's at Stake: The outcome could set precedent for how AI companies handle military requests globally.

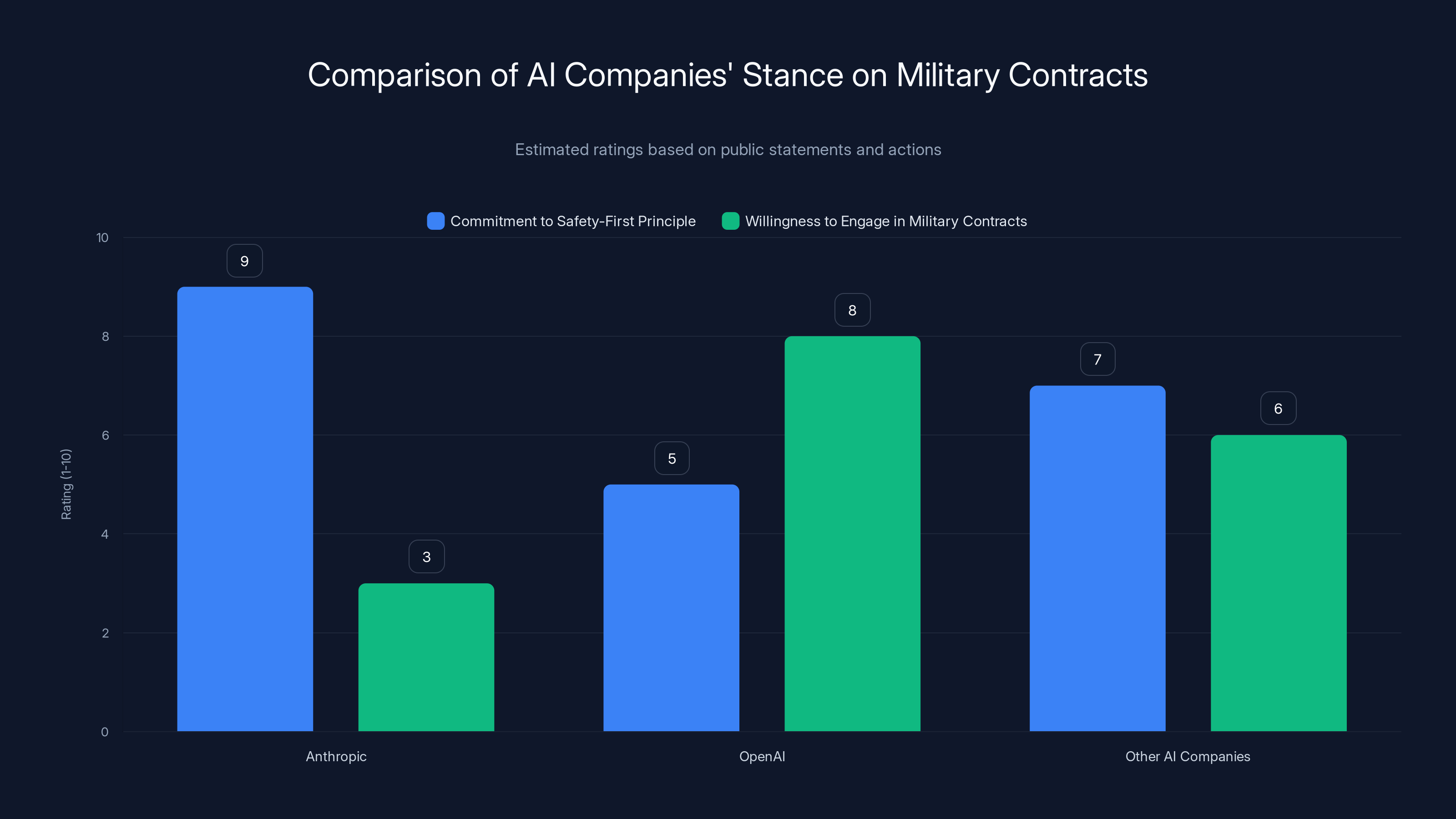

Anthropic is highly committed to safety-first principles, while OpenAI shows more willingness to engage in military contracts. Estimated data based on public statements.

The Pentagon's New AI Strategy

The US Department of Defense isn't being subtle about its ambitions. Military leaders want access to the most capable AI models available, and they want no restrictions on how those models can be deployed.

In early 2025, the Pentagon made a formal request to major AI providers: Anthropic, OpenAI, Google, and xAI. The message was clear: allow us to use your models for "all lawful purposes." That phrase matters. Lawful is broad. It could mean anything the Pentagon's lawyers say is legal, as highlighted by DefenseScoop.

This isn't the Pentagon's first rodeo with AI. The military has been experimenting with machine learning for decades, from drone targeting systems to logistics optimization. But there's a difference between using AI to help make decisions and using AI to make decisions autonomously, without meaningful human oversight.

Sean Parnell, the Pentagon's chief spokesman, made the military's position clear: "Our nation requires that our partners be willing to help our warfighters win in any fight." Translation: we need your models, and we need them without your restrictions.

The Pentagon's interest in full autonomy isn't irrational from a military perspective. Autonomous systems could theoretically react faster than humans, make decisions without emotional bias, and reduce soldier casualties. But that's also exactly why it terrifies people inside and outside the tech industry, as discussed in The Quantum Insider.

The Military's Use Cases

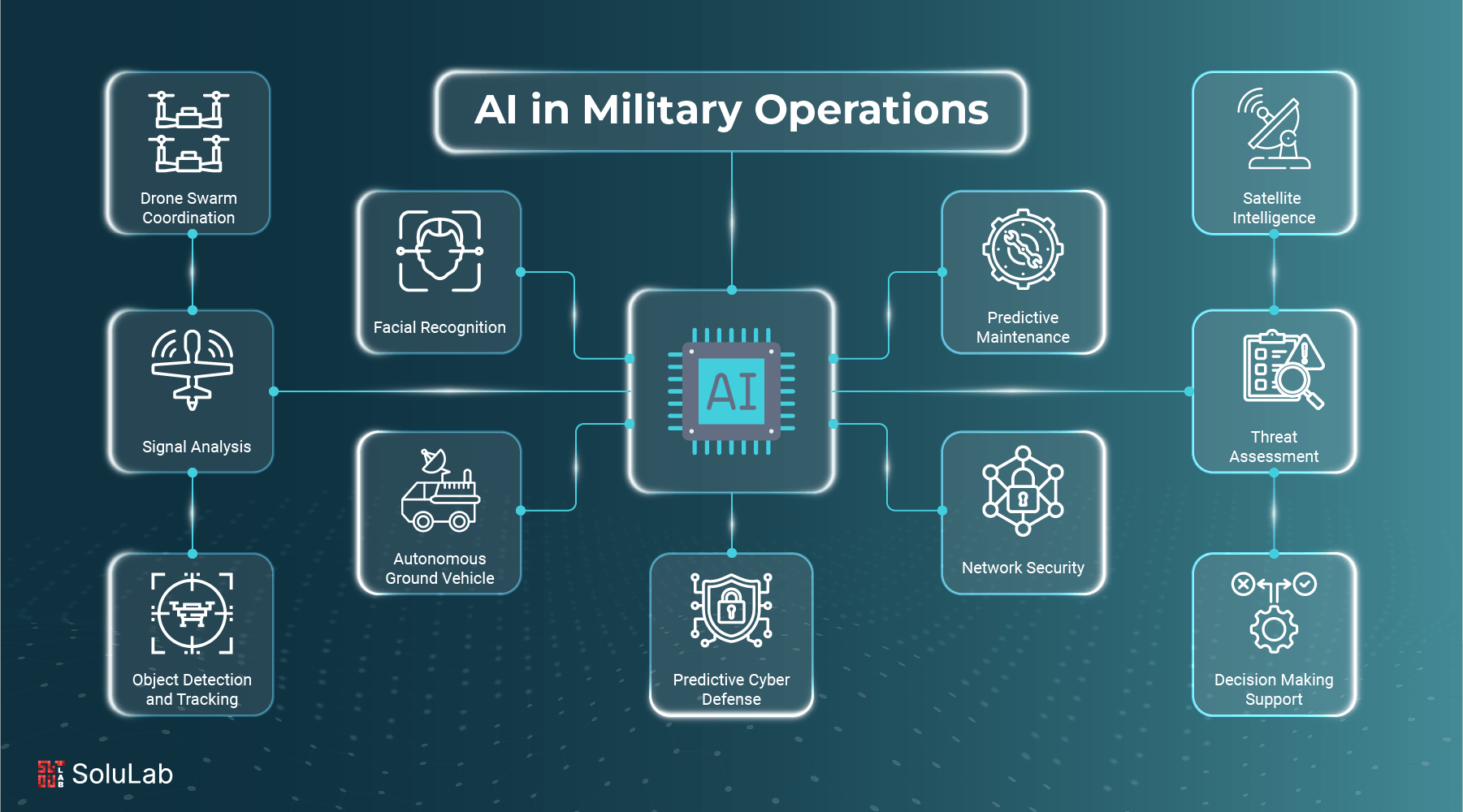

What does the Pentagon actually want to do with these AI models? It hasn't published a detailed roadmap, but leaked communications and public statements give us hints.

Autonomous Weapons Systems: This is the most controversial. The Pentagon wants AI that can identify, track, and potentially engage targets with minimal human involvement. Currently, the US maintains a "human-in-the-loop" policy for lethal decisions, but autonomous systems could eliminate even that.

Predictive Intelligence: The military wants AI that can analyze surveillance data, intelligence reports, and open-source information to predict enemy movements, identify threats, and recommend preemptive action. This is where Claude's language abilities matter—the model is exceptional at synthesizing complex information.

Mass Surveillance and Threat Identification: This is where Anthropic drew a hard line. The Pentagon wants AI that can monitor domestic and international communications to identify potential threats. The scope? Potentially millions of data points, flagging individuals or groups for further investigation.

Logistics and Strategy: This is the "safe" category. AI optimizing supply chains, predicting maintenance needs, and analyzing historical military strategy. The Pentagon and AI companies agree on this stuff without argument.

Here's the thing: the Pentagon's desires aren't unique. China is building its own AI military capabilities. Russia is experimenting with autonomous systems. If the US military can't access the best AI, it fears falling behind. That fear is real, and it's driving the aggressive stance.

Anthropic's Red Lines

Dario Amodei, Anthropic's CEO, didn't get to lead an AI company by accident. He's thought deeply about AI safety, and he's made it clear: Anthropic has principles, and profit alone won't override them.

When the Pentagon made its request for "all lawful purposes," Anthropic responded with a formal refusal. Not a negotiation. Not a "let's discuss." A hard no.

The company's position is built on two specific concerns:

Fully Autonomous Weapons: Anthropic refuses to allow Claude in systems that make lethal decisions without meaningful human oversight. The company defines "meaningful" as humans retaining real authority to intervene, not just the ability to turn the system off.

Mass Domestic Surveillance: This is the line Anthropic drew in the sand. The company doesn't want Claude analyzing vast amounts of data on US citizens for threat identification. The potential for abuse is astronomical: mission creep, false positives, targeting of political enemies, discrimination against minorities.

What's fascinating is that Anthropic isn't anti-military. The company had a $200 million contract with the Pentagon before this conflict. They just drew lines around certain applications, as reported by Ynet News.

An Anthropic spokesperson told media outlets investigating the dispute: "We have not discussed the use of Claude for specific operations with the Department of War." Let that sink in. The Pentagon allegedly used Claude without Anthropic's knowledge or consent. That's not a partnership. That's an act of unilateral commandeering.

Anthropic followed up by noting that its usage policy with the Pentagon was under review, with specific emphasis on "our hard limits around fully autonomous weapons and mass domestic surveillance."

The Dario Amodei Factor

Understanding this conflict requires understanding Dario Amodei. He's not a typical tech CEO chasing maximum revenue. Amodei's background is in safety and alignment. He was previously at OpenAI, where he led safety research.

When he co-founded Anthropic in 2021, he made a deliberate choice: build an AI company that operates as a public benefit corporation. That structure matters legally and culturally. It gives Amodei and his team some insulation from purely financial pressure.

At forums like Davos, Amodei has been vocal about AI safety concerns. He's called for regulation, warned about surveillance risks, and pushed for international cooperation on AI governance.

That's not the profile of a CEO who's going to fold to Pentagon pressure. Amodei has already achieved what most tech CEOs want—he's built one of the world's most powerful AI systems. He doesn't need the Pentagon's money. He needs Claude to be trustworthy.

If Claude gets used for drone strikes without Anthropic's knowledge, or if the model becomes associated with mass surveillance, that damages the entire company's credibility. From a pure business perspective, Anthropic's refusal makes sense.

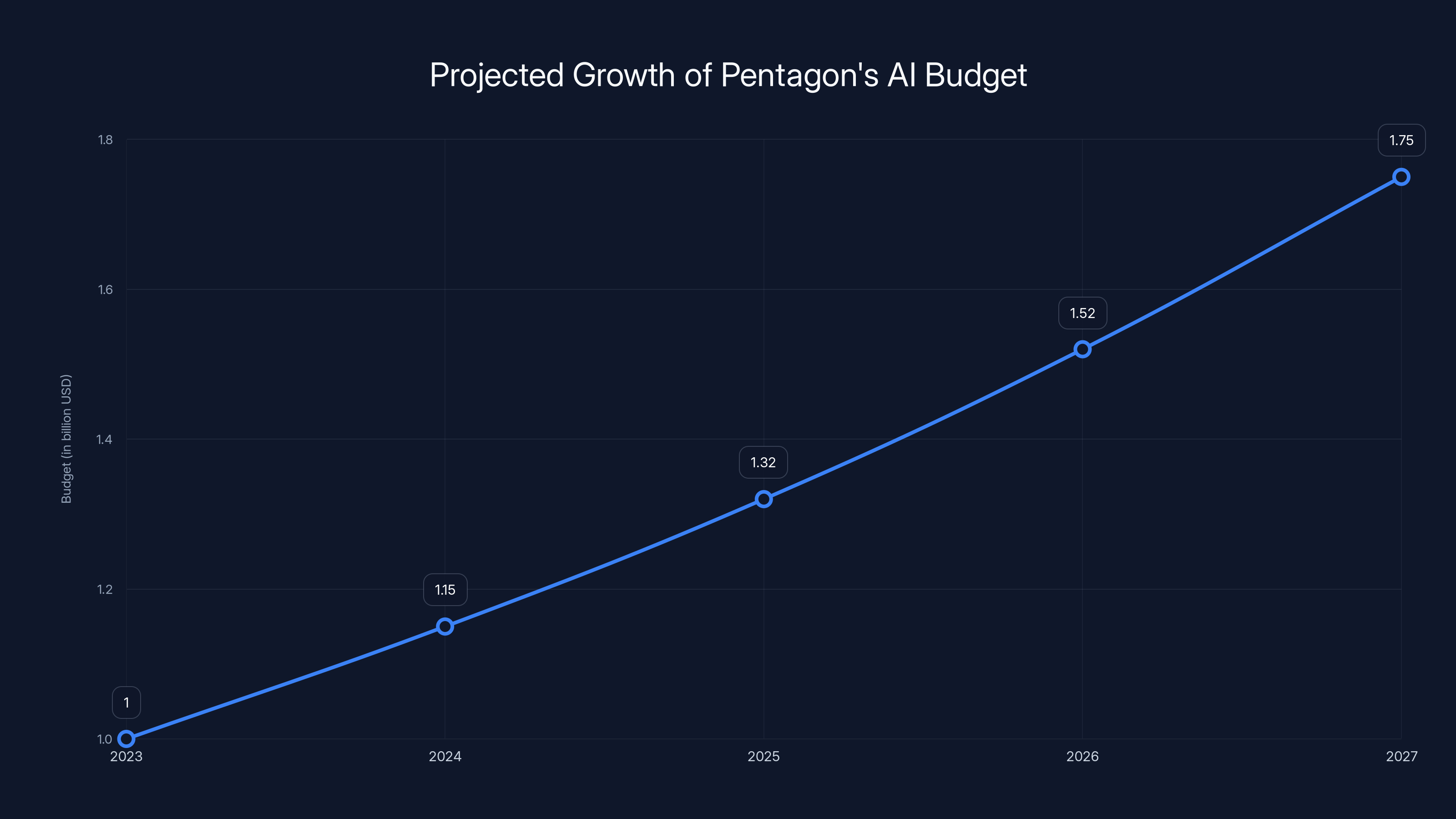

The Pentagon's AI budget is projected to grow significantly, reaching approximately $1.75 billion by 2027, assuming a consistent 15% annual growth rate. Estimated data.

What Actually Happened in Venezuela

The specifics matter here, so let's dig into what we know about the Maduro operation.

In January 2025, the US military was involved in intelligence operations related to Nicolás Maduro, Venezuela's authoritarian president. According to reports, Claude was used to process and synthesize intelligence information—likely analyzing communications intercepts, satellite imagery, public statements, and other data sources, as noted by The Wall Street Journal.

Here's where it gets complicated: using Claude to analyze data isn't the same as using Claude to make targeting decisions. But the Pentagon didn't consult Anthropic first, and Anthropic found out after the fact.

This raises several questions: Did the Pentagon have legal authority to use Claude this way? Does using a commercial AI model in military operations constitute an "unrestricted" use that violates the contract? Was Anthropic's objection about the operation itself, or about precedent?

The company's public statement doesn't confirm or deny the Venezuela operation. But it does say Anthropic and the Pentagon haven't discussed specific operations. That suggests the Pentagon may have accessed Claude through commercial channels—the same interface a journalist or researcher would use—and applied it to classified military work.

If that's what happened, it reveals a loophole: you can't stop a military from using your publicly available AI model. You can only refuse to make modifications that increase military capability, or provide specialized access to more powerful versions.

The Legal and Ethical Gray Zone

Here's what we know about the contract between the Pentagon and Anthropic: it's worth $200 million, and it includes specific usage restrictions. But the Pentagon is arguing those restrictions are too limiting.

From a legal standpoint, this is murky. The Pentagon might argue that using a commercial API for military purposes is fundamentally different from modifying Claude specifically for weapons applications. Anthropic might argue that knowingly allowing military use without restrictions violates the spirit of their usage policy.

The ethical argument is clearer. Anthropic built Claude to be helpful, harmless, and honest. Using it to track and capture political opponents—even ones the US disagrees with—pushes the "harmless" boundary hard.

But Maduro is also an authoritarian who's been credibly accused of human rights abuses. The US military arguably had legitimate reasons to pursue intelligence about his activities. So you get a collision between two defensible positions:

- Pentagon: We need access to the best AI to protect national security

- Anthropic: We need to ensure our AI isn't used to enable human rights abuses

There's no clean resolution to that conflict. Both sides are acting from positions of principle.

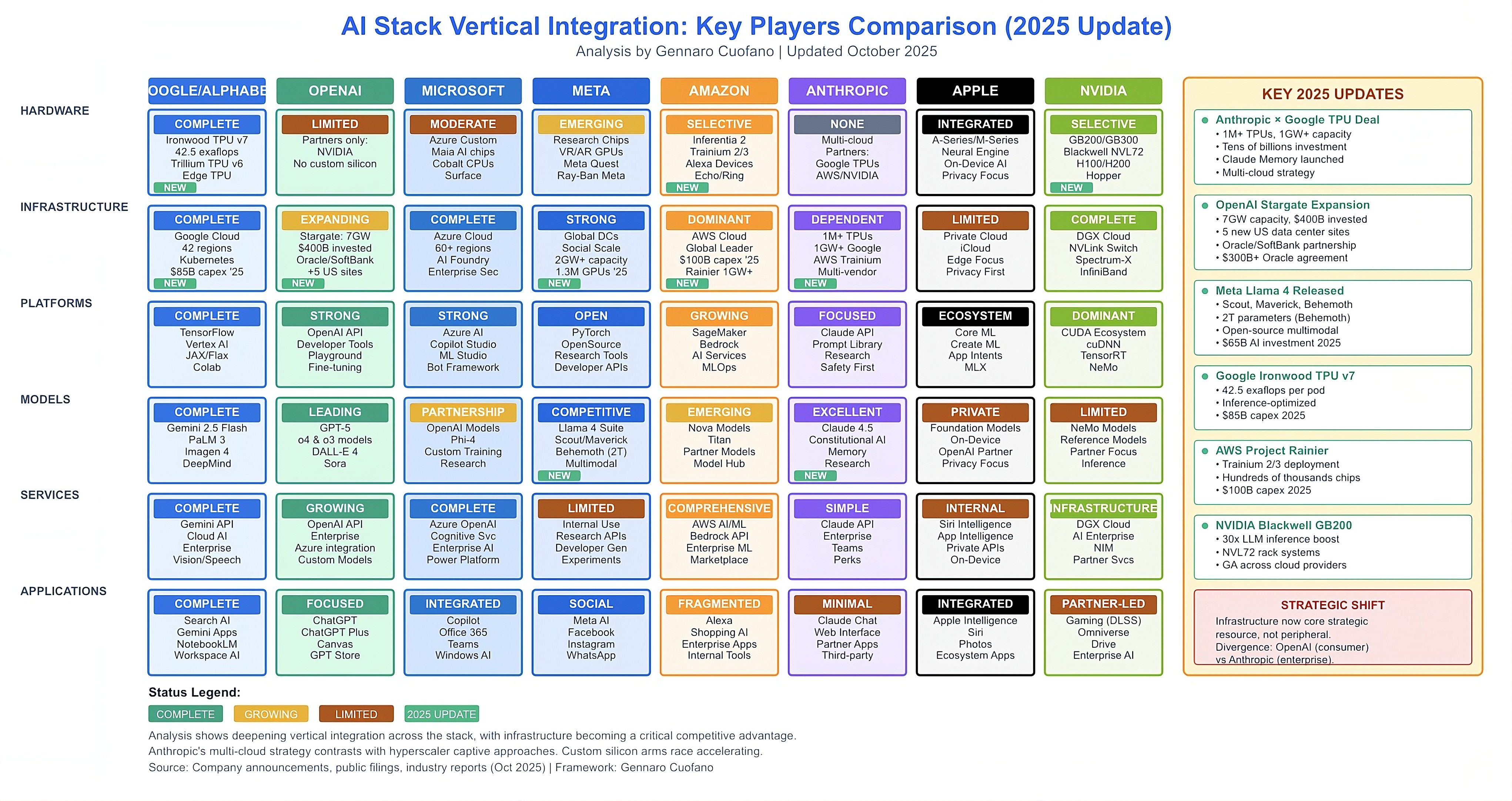

The Broader Conflict: Who Controls AI?

This Anthropic-Pentagon dispute is really a symptom of a larger question: who controls powerful AI systems once they're released into the world?

Traditionally, technology companies have tried to control their products. Facebook controls what goes on Facebook. Tesla controls how you use Teslas. But AI is different. Once you release an AI model—especially if it's commercially available—you lose a lot of control.

Anthropic's approach has been to build AI that refuses certain requests. Claude has been trained to decline requests for weapons development, illegal activities, and abuse. But that only works if Anthropic controls the version of Claude that's in use.

The Pentagon wants unrestricted access to the most powerful version of Claude. They're arguing, in essence, that the US military shouldn't have to work around guardrails built into commercial AI.

OpenAI's position contrasts sharply with Anthropic's. OpenAI has been more flexible about military applications. The company gave the Pentagon broad access to GPT models, with fewer restrictions than Anthropic maintains. OpenAI's reasoning: the military should have access to the best tools available, as discussed in The Hill.

Google DeepMind and xAI have similarly signaled flexibility. This puts pressure on Anthropic. If competitors are willing to give the Pentagon what it wants, why isn't Anthropic?

The answer is philosophical. Anthropic sees its competitive advantage as trustworthiness. If Anthropic abandons its safety principles for a contract, what does that say about the company? And what happens to the clients who chose Anthropic specifically because of those principles?

The $200 Million Gamble

Let's talk about the money. A $200 million Pentagon contract is significant. For context, that's roughly equivalent to Anthropic's total funding from some of its biggest investors.

Losing that contract would hurt. But for Anthropic, the damage would extend beyond finances. It would signal that the company's safety commitments are negotiable. That's catastrophic for an AI company whose entire brand is built on safety.

Consider the alternative path: Anthropic folds, gives the Pentagon unrestricted access. Then what?

- Customers who chose Anthropic for safety feel betrayed

- Regulatory agencies questioning AI safety lose a key ally

- Competitors can claim they're more principled

- The AI safety community views Anthropic as corrupted

The financial benefit (keeping the Pentagon contract) gets outweighed by the reputational cost.

But the Pentagon's threat is real. If Anthropic refuses, the Pentagon can and will use competitor models. Over time, if the Pentagon becomes a major customer for OpenAI, Google, or xAI, those companies' influence in Washington grows. Anthropic loses soft power.

The Geopolitical Angle

There's a bigger game at play here. The US military is acutely aware that China and Russia don't have these debates. Chinese AI models are being weaponized without ethical constraints. The Russian military is deploying AI autonomously in Ukraine.

From the Pentagon's perspective, restricting US AI to moral principles while adversaries embrace AI without limits creates an asymmetric disadvantage.

This is a classic security dilemma: when your enemy gets a capability, you feel compelled to get it too, even if it increases overall danger. That's what's driving the Pentagon's aggressive stance on Anthropic.

And here's the dark part: the Pentagon isn't wrong. If US military AI is limited while Chinese military AI advances freely, that's a real strategic problem.

But that's also not Anthropic's problem to solve. The company shouldn't be forced to choose between national security and ethical principles. That's a government problem that requires government solutions—like international treaties on AI weapons, or regulations on how AI can be used domestically.

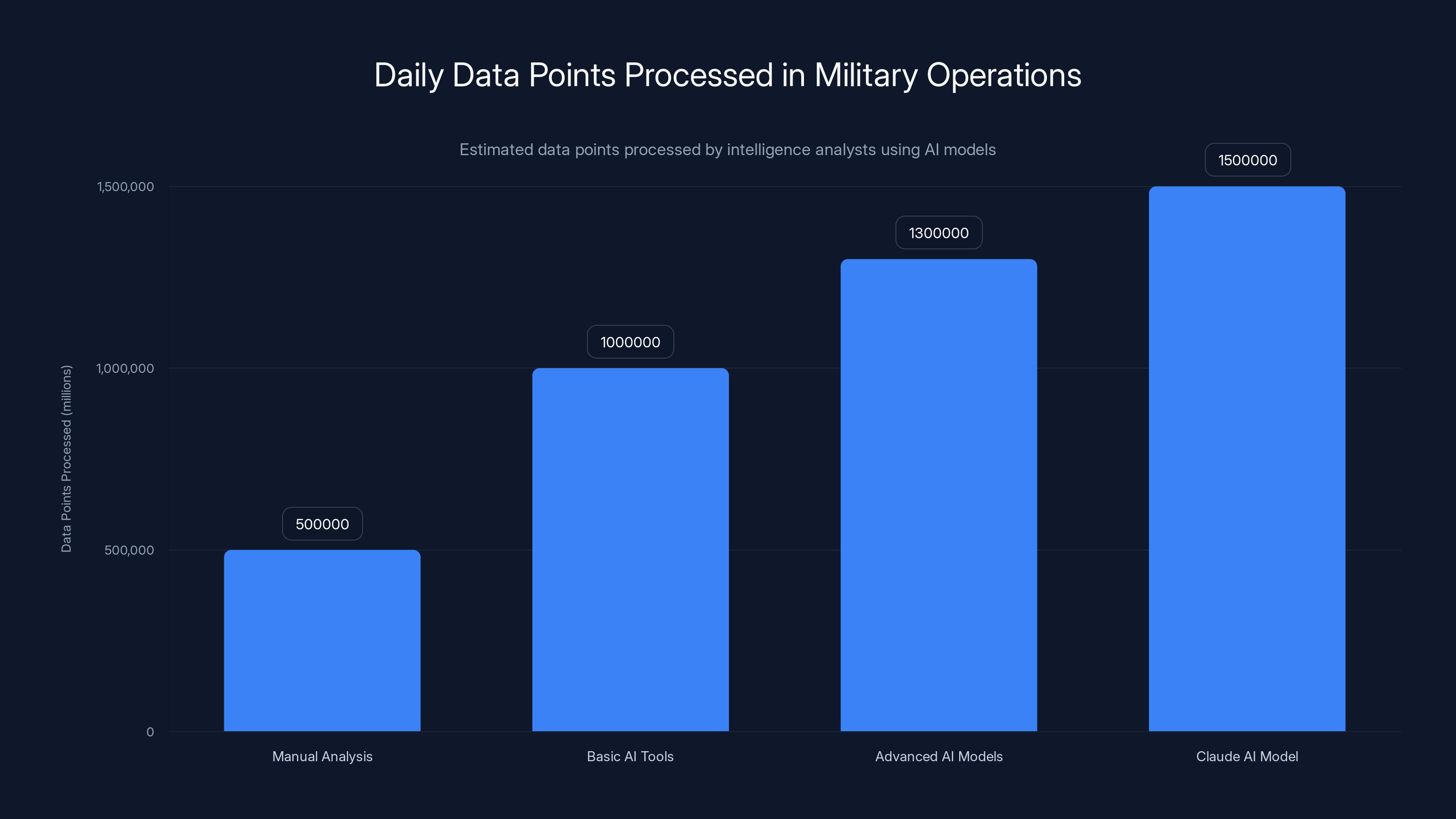

AI models like Claude significantly enhance data processing capabilities, handling up to 1.5 million data points daily. Estimated data based on military operations.

The Weapons Question: Can AI Make Lethal Decisions?

Let's zoom in on the core technical question: should AI be allowed to make autonomous lethal decisions?

The Pentagon's argument is that autonomous systems can be precise and effective. Remove human hesitation, emotion, and fatigue from targeting decisions. Let AI make the call based on perfect information processing.

The counterargument, which Anthropic embraces, is that certain decisions are inherently human decisions. Who dies. Who gets detained. Who gets targeted. These require judgment, accountability, and the ability to understand context that AI can't fully grasp.

Here's a concrete example: an autonomous drone system identifies a person matching the profile of a high-value target. Confidence level: 87%. Should the system:

A) Make the kill decision autonomously

B) Alert a human operator who decides yes or no

C) Alert a human operator who can see what the system sees and understands the confidence level

D) Send the information to a command structure where multiple humans debate the decision

The Pentagon wants A or B. Anthropic argues for C or D.

There are legitimate concerns with all options. Option A is reckless. Option B creates responsibility gaps: if the operator is just rubber-stamping the AI's suggestion, are they meaningfully responsible? Options C and D are slower, which could be dangerous in time-critical situations.

This isn't something that has a clean answer. It requires balance between speed, safety, and accountability.

Mass Surveillance and the Domestic Angle

The second red line Anthropic drew is mass domestic surveillance. This one hits different because it's not about foreign military operations. It's about monitoring Americans.

The Pentagon wants AI that can analyze vast amounts of data—communications, financial records, social media, travel patterns—and flag potential threats. The justification is counterterrorism and national security.

But there are profound dangers:

Mission Creep: Systems built for terrorism prevention get expanded to protest monitoring, political opposition tracking, and dissent suppression.

False Positives: AI systems make mistakes. If an AI flags 1% false positives on a dataset of 1 million people, that's 10,000 innocent people flagged for investigation.

Lack of Recourse: How do you appeal if an AI system incorrectly identifies you as a threat? There's no clear legal remedy.

Minority Targeting: Historical data is biased. AI trained on that data reproduces and amplifies those biases. Surveillance systems end up disproportionately targeting minorities.
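The false-positive arithmetic above can be checked with a short sketch. The 1% rate and the 1 million population come from the text. The number of genuine threats (100) and the detection rate (90%) are hypothetical values, chosen only to illustrate how a low base rate affects the share of flags that are correct.

```python
def flagged_counts(population, true_threats, false_positive_rate, detection_rate):
    """Return (false_flags, true_flags, precision) for a screening system.

    precision is the fraction of flagged people who are genuine threats.
    """
    innocents = population - true_threats
    false_flags = round(innocents * false_positive_rate)
    true_flags = round(true_threats * detection_rate)
    total_flags = false_flags + true_flags
    precision = true_flags / total_flags if total_flags else 0.0
    return false_flags, true_flags, precision


# Figures from the text: a 1% false-positive rate across 1 million people.
simple_false_flags = round(1_000_000 * 0.01)

# Hypothetical inputs (not from the article): 100 genuine threats, 90% detected.
false_flags, true_flags, precision = flagged_counts(
    population=1_000_000,
    true_threats=100,
    false_positive_rate=0.01,
    detection_rate=0.90,
)

print(f"False flags (text's figures): {simple_false_flags:,}")
print(f"False flags: {false_flags:,}, true flags: {true_flags:,}")
print(f"Share of flags that are genuine threats: {precision:.2%}")
```

Under these assumed inputs, fewer than 1 in 100 flagged people would be a genuine threat. That is the base-rate problem behind the concern: even a seemingly accurate system mostly flags innocent people when real threats are rare.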

Anthropic's refusal to support mass domestic surveillance isn't about protecting foreign adversaries. It's about protecting Americans.

How Other AI Companies Are Responding

Not every AI company is taking Anthropic's stance. Let's see how the others are positioned.

OpenAI's Pragmatic Approach

OpenAI has been notably more accommodating to Pentagon requests. The company provided the military with broad access to ChatGPT and other models, with fewer restrictions than Anthropic maintains.

OpenAI's CEO has framed this as practical: "We believe the people responsible for defending the country should have access to the best tools available."

That's a defensible position. It's also a position that prioritizes national security over safety guardrails.

Google's Strategic Silence

Google DeepMind hasn't been as public as Anthropic or OpenAI. The company has a long history of working with the Pentagon and has vast AI capabilities. Google's approach seems to be: negotiate quietly, find middle ground.

xAI's Wild Card

xAI, Elon Musk's AI company, has signaled willingness to work with military applications. Musk's general position is that powerful AI should be available to everyone, including governments.

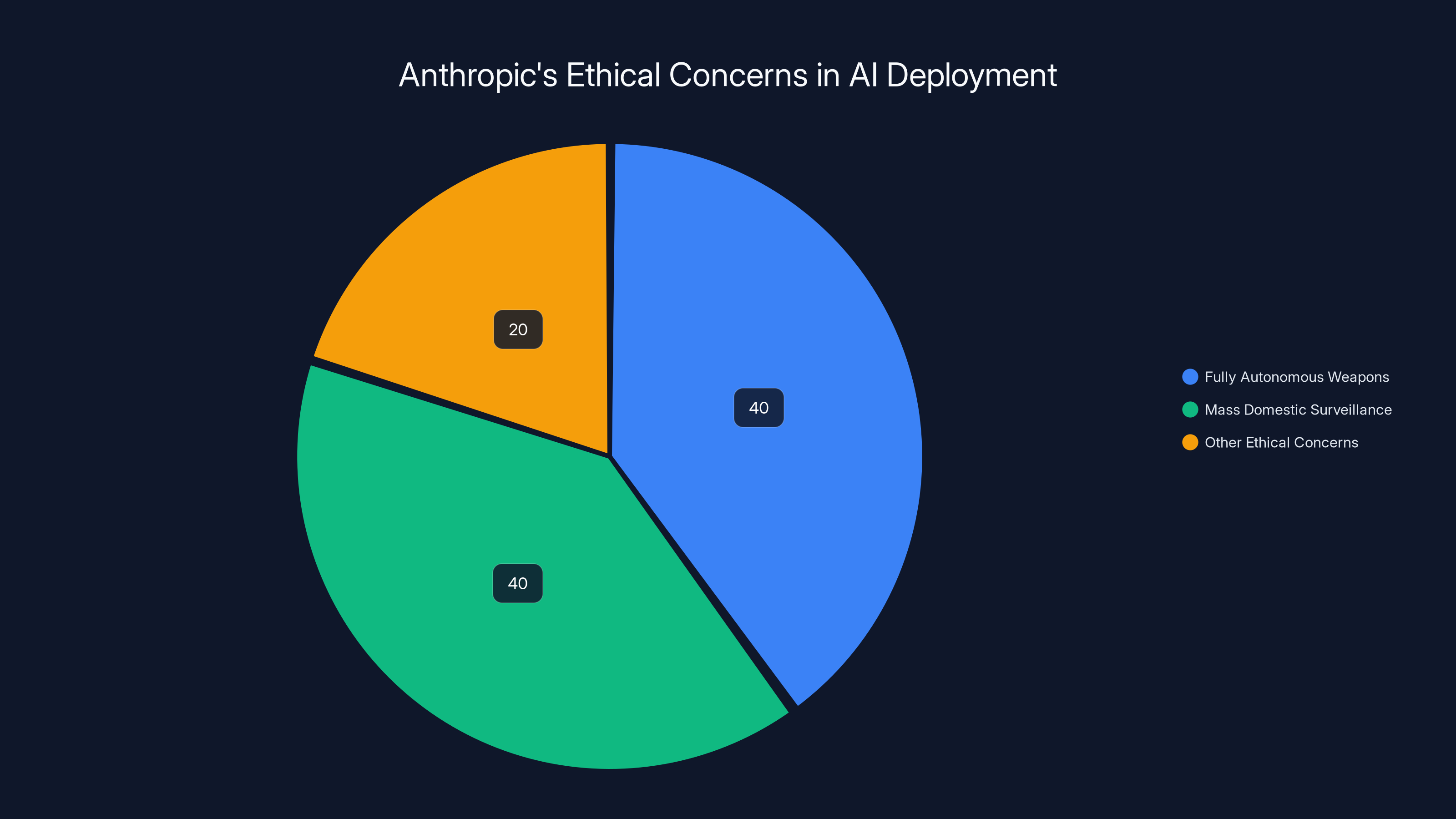

Anthropic places equal emphasis on preventing fully autonomous weapons and mass domestic surveillance, with a smaller focus on other ethical concerns. (Estimated data)

The Policy Vacuum

Here's what's missing: clear government policy on AI military applications.

The Pentagon is working from executive authority and informal pressure. Anthropic is working from contractual language and company principles. Neither has a solid legal framework to stand on.

What we need is legislation. Congress should establish:

- Clear standards for what counts as "autonomous weapons"

- Requirements for human oversight of targeting decisions

- Restrictions on certain types of surveillance

- Liability frameworks—who's responsible if an AI system makes a mistake

- International treaties similar to chemical weapons conventions

None of that exists yet. So we're stuck with corporate ethics versus military pressure, and the outcome is uncertain.

What Happens Next?

The Pentagon has threatened to terminate Anthropic's contract if the company doesn't comply. That threat might be real, or it might be negotiating pressure. We don't know.

Historically, Pentagon contracts have been renegotiated rather than terminated. But AI is new territory, and both sides seem committed to their positions.

Scenario A: Anthropic Caves

- Pentagon keeps using Claude for military applications

- Anthropic loses credibility in the safety community

- Other AI companies face similar pressure

- Unrestricted military AI becomes the norm

Scenario B: Pentagon Walks

- Military shifts investment to OpenAI, Google, xAI

- Those companies become embedded in military operations

- Anthropic maintains principles but loses influence

- AI development becomes increasingly militarized

Scenario C: Compromise

- Both sides negotiate middle ground

- Anthropic allows military use with specific safeguards

- Pentagon accepts some restrictions but gains broader access

- Sets precedent for future negotiations

Scenario C seems most likely, but the clock is ticking. As AI capabilities increase, the stakes get higher.

The Precedent Problem

This conflict isn't just about Anthropic. It's setting precedent for how AI companies will interact with governments globally.

If Anthropic folds, every other AI company will face the same pressure. Why would the Pentagon negotiate with anyone who refuses military applications when other companies are willing?

If Anthropic holds firm, they become a model for safety-first AI development. Other companies might follow, which could change the trajectory of AI militarization.

From a global perspective, this matters enormously. The US isn't the only country that wants military AI. If the US sets a precedent that powerful AI is available for unrestricted military use, that emboldens other countries. China will point to US policy and argue for the same flexibility. Russia will accelerate weapons development.

Conversely, if the US, through companies like Anthropic, maintains that certain uses are off-limits, it creates a standard for responsible AI that might become an international norm.

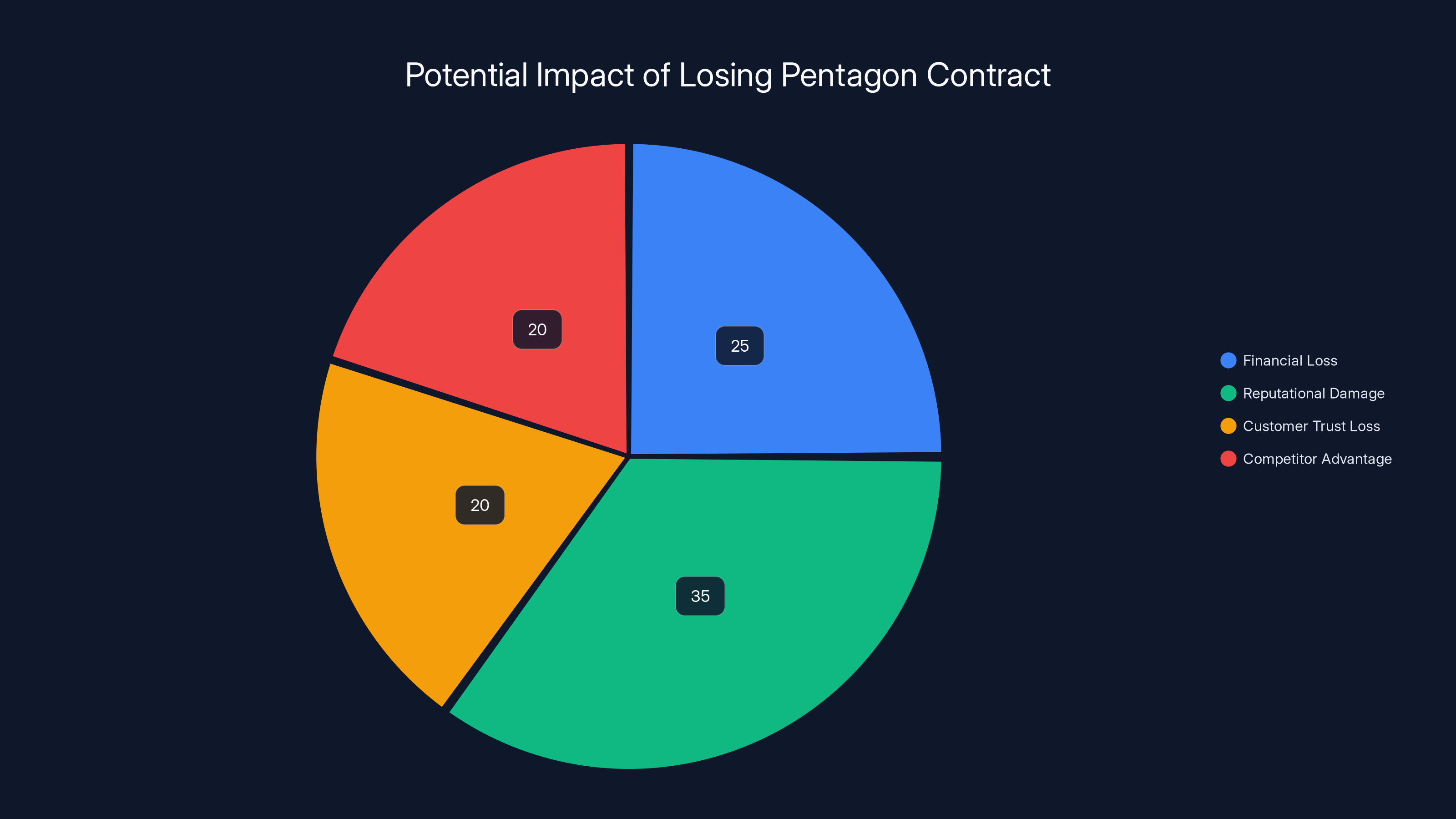

Estimated data shows reputational damage as the largest impact area, highlighting the importance of maintaining ethical commitments.

The Anthropic Philosophy: Principled AI

To understand Anthropic's position, you need to understand the company's foundational philosophy.

Anthropic's team believes AI safety isn't optional. It's foundational. You don't build incredibly powerful AI and hope it turns out fine. You build it with safety as a core design principle.

That philosophy comes through in Claude's training. The model is trained to be helpful, but also to refuse requests that could cause harm. To some users, that's frustrating. You can't get Claude to help with certain things, even for research purposes.

To Anthropic, that's the point. You build AI that says no to dangerous requests, because once that power is in the world, you can't take it back.

The Pentagon dispute is where that philosophy meets military reality. The military wants power without constraints. Anthropic is saying: some constraints are non-negotiable.

Historical Parallel: The Nuclear Debate

This conflict echoes the nuclear weapons debates of the 1940s-1960s.

Scientists who developed nuclear weapons thought deeply about their creation. Some, like J. Robert Oppenheimer, were appalled by what they'd built and spent decades advocating for nuclear disarmament.

Militaries wanted unrestricted access to nuclear weapons. Scientists wanted safeguards and treaties. Eventually, civilization developed frameworks—the Nuclear Non-Proliferation Treaty, mutual assured destruction doctrine, arms control agreements—that created some constraints.

We might see a similar arc with AI. Creators built powerful AI. Militaries want it. Gradually, norms and treaties might establish limits.

The difference is timeline. Nuclear weapons took years to develop. AI is advancing exponentially. We don't have decades to sort this out.

What Should Happen

Here's what I think should happen, though this is where expertise becomes opinion.

The Pentagon shouldn't get unrestricted military AI. Not because the Pentagon is malicious, but because unlimited power is dangerous, and oversight mechanisms haven't been built.

Anthropic shouldn't refuse military applications entirely. Defensive AI, intelligence analysis, and logistics optimization are legitimate uses that don't create the risks of autonomous weapons or mass surveillance.

Instead, we need what should exist but doesn't: a governance framework for military AI.

That framework should include:

- International treaties defining prohibited weapons systems

- Domestic regulations requiring human authorization for lethal decisions

- Audit and oversight mechanisms

- Clear definitions of what counts as autonomous

- Liability frameworks

- Transparency requirements

Building that framework is hard. It requires international cooperation, military buy-in, and corporate participation. But it's more important than any individual contract.

The Broader Implications for AI Development

This conflict will shape how AI gets developed and deployed for years.

If companies see that safety principles lead to losing military contracts, fewer companies will implement those principles. If the Pentagon's pressure works, the incentive structure changes. AI companies optimize for military capabilities, not safety.

If Anthropic holds firm and maintains credibility, the opposite happens. Safety becomes a competitive advantage. Companies invest in alignment and safeguards because that's what customers want.

Which path we take depends partly on what happens with this dispute.

Looking Forward: The 2025-2026 Timeline

We're likely to see this conflict play out over the next year.

Q1 2025: Both sides posture, threaten, and negotiate behind closed doors. Media coverage increases pressure.

Q2 2025: Either a deal gets made, or the Pentagon formally terminates the contract and shifts budget to competitors.

Q3-Q4 2025: Congress potentially gets involved, holding hearings on AI and national security.

2026: New regulations might emerge, or the status quo remains with AI companies negotiating individually with the military.

The outcome isn't predetermined. But the stakes are clear.

FAQ

What does "fully autonomous weapons" actually mean?

A fully autonomous weapon is a system that can select and engage targets without human authorization. The system uses AI to identify targets, calculate firing solutions, and execute the strike independently. This differs from human-controlled systems where a person pulls the trigger, and from systems that recommend targets but require human approval. The debate is partly about what constitutes meaningful human control—is a human clicking "approve" enough, or do they need to fully understand and evaluate the system's reasoning?

Why would Anthropic risk losing a $200 million contract?

Anthropic's competitive advantage isn't primarily financial; it's reputational. The company branded itself as the safety-first AI developer. If Anthropic compromises that principle for Pentagon money, it undermines its entire value proposition. Customers who chose Anthropic specifically because of safety commitments would lose confidence. Regulators and policymakers looking for trustworthy AI partners would be skeptical. For a company whose core asset is credibility, the short-term financial gain wouldn't justify the long-term reputational cost.

Has the Pentagon actually used Claude without Anthropic's permission?

According to reports, Claude was used in the intelligence operation related to capturing Nicolás Maduro in January 2025. Anthropic stated it had not discussed specific operations with the Pentagon, suggesting the military accessed Claude through commercial channels. This highlights a loophole: companies can restrict contract-specific military work, but can't prevent militaries from using publicly available AI services.

What's the difference between Anthropic and OpenAI on this issue?

OpenAI has been more accommodating to Pentagon requests, providing broad military access to ChatGPT with fewer restrictions than Anthropic maintains. Anthropic argues that certain uses pose unacceptable risks. OpenAI prioritizes making capabilities available to legitimate government defense needs. Both positions are defensible, but they reflect different philosophies about corporate responsibility.

Could mass surveillance with AI be used against Americans?

Yes, and that's exactly why Anthropic refuses to support it. A system built to identify security threats abroad could easily be repurposed domestically. History shows government surveillance tools often expand beyond their original intent. If such systems are trained on biased data, they could disproportionately target minorities. Without legal safeguards and public oversight, AI-powered surveillance poses serious risks to civil liberties.

What international agreements exist around military AI?

Currently, very few. The UN has discussed autonomous weapons, but no binding treaty exists. Some countries have signed non-binding statements expressing concern about fully autonomous weapons, but enforcement mechanisms are weak. The closest parallel is the Nuclear Non-Proliferation Treaty, which established frameworks for limiting weapons of mass destruction. Something similar might eventually exist for military AI, but it would require international negotiation and agreement, which is difficult and slow.

If Anthropic refuses, will the Pentagon just use competitors' AI?

Almost certainly. If Anthropic holds the line, the Pentagon will shift investment to OpenAI, Google DeepMind, or xAI, all of which have signaled more flexibility. This means military AI development would accelerate with less safety-conscious partners, potentially making the overall situation worse for AI safety. This is a key tension: maintaining principles might enable the thing you're trying to prevent.

Could this lead to legislation?

Most likely, eventually, though legislation is slow. Congress has been holding hearings on AI policy and will likely continue to examine military applications specifically. Any legislation would probably establish frameworks for oversight, human control requirements, and liability. However, international coordination would be needed for it to be truly effective, which adds complexity and time.

What can individuals do about this?

If you care about responsible AI development, monitor how this dispute resolves. Contact elected representatives and express your views on AI governance. Support organizations working on AI policy and safety. Make consumption choices based on corporate principles—use and recommend companies that maintain safety commitments. Individual actions are small, but they influence corporate behavior at scale.

This conflict between the Pentagon and Anthropic is far from resolved. But it's crystallized one of the most important questions of our era: who controls powerful AI, and what are we willing to do with it?

The answer to that question will shape the next 20 years of AI development, military strategy, and global security. Pay attention to how it resolves.

Key Takeaways

- The Pentagon demanded unrestricted military access to Claude; Anthropic refused and drew hard lines around autonomous weapons and mass surveillance.

- A $200 million Pentagon contract with Anthropic is at risk, but the company prioritizes safety credibility over financial pressure.

- Other AI companies (OpenAI, Google, xAI) are showing more flexibility, creating competitive pressure on Anthropic's principled stance.

- This conflict reveals a policy vacuum: no clear legislation exists governing military AI applications, human oversight requirements, or autonomous weapons limitations.

- The outcome will set precedent for how AI companies interact with militaries globally and influence AI development trajectories for years.
