Senator Elizabeth Warren Just Put OpenAI on Notice About Government Bailouts

It started with a letter. On one side, Senator Elizabeth Warren, a heavyweight on banking oversight. On the other, Sam Altman, running the most valuable AI startup in the world. The subject matter? Whether American taxpayers should end up footing the bill if OpenAI runs out of money.

This isn't abstract policy debate. This is about real money, real risk, and the uncomfortable question nobody wants to answer: what happens if the AI bubble pops?

Warren's letter landed like a grenade in Silicon Valley. She's not accusing OpenAI of secretly negotiating a bailout. Instead, she's doing something sharper: she's asking pointed questions about financial structures that could create de facto government involvement even without explicit bailout requests. The kind of questions that force companies to either answer honestly or look like they're dodging.

Here's what makes this moment crucial. OpenAI has committed to over a trillion dollars in infrastructure spending. Think about that number for a second. One trillion. The company isn't profitable yet. Neither are most of its infrastructure partners. The financial engineering holding this whole thing together is increasingly complex, increasingly leveraged, and increasingly dependent on continued investor enthusiasm.

Warren sees something dangerous in the architecture of these deals. OpenAI isn't just spending its own money. It has structured partnerships with companies like CoreWeave that are themselves taking on massive debt to build infrastructure for OpenAI. It's a financial house of cards that might be stable in a bull market, but becomes fragile the moment sentiment shifts.

What makes Warren's intervention different from typical political posturing is that she's asking for concrete information. Not soundbites. Not reassurances. Actual financial projections, profit margins, government conversations, and scenarios for what happens if AI demand plateaus. She's given Altman until February 13th, 2026, to respond.

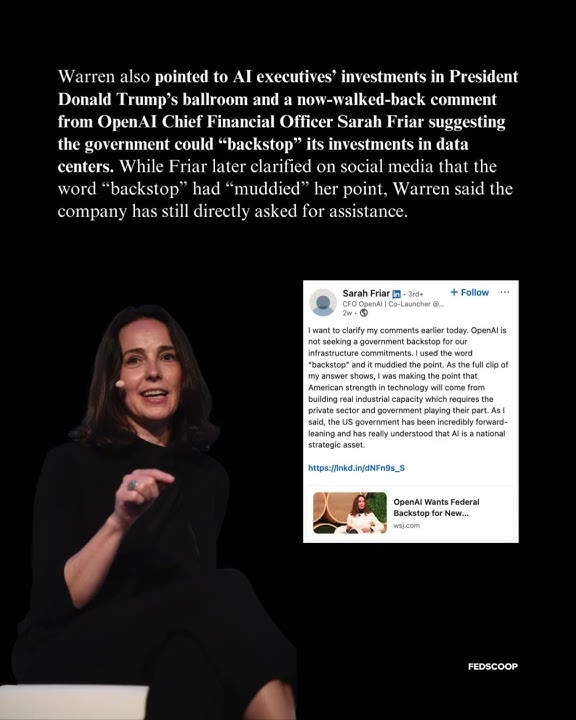

The timing matters too. This happens after OpenAI's own CFO Sarah Friar suggested taxpayers should "backstop" infrastructure costs, creating an absolute PR nightmare that the company has spent months trying to contain. It happens as the White House, under David Sacks' AI policy leadership, has explicitly rejected the idea of government support for AI companies. And it happens as the AI industry pours tens of billions into projects with no clear path to profitability.

Warren isn't wrong to be concerned. The architecture of modern AI financing is genuinely novel and genuinely risky. Understanding what she's asking for, why she's asking, and what it means for OpenAI's future is essential context for anyone watching the AI industry.

TL;DR

- Warren's Core Concern: OpenAI has committed to over $1 trillion in spending without profitability, creating systemic risk

- The Real Issue: Debt-based partnerships mean the entire AI industry could need government rescue if deals unravel

- What She Wants: Specific financial projections, profit timelines, and confirmation OpenAI won't seek bailouts

- Altman's Response Required: February 13th, 2026 deadline to provide details on government conversations and financial forecasts

- Bottom Line: This reveals fundamental questions about AI's financial viability that nobody wants to answer publicly

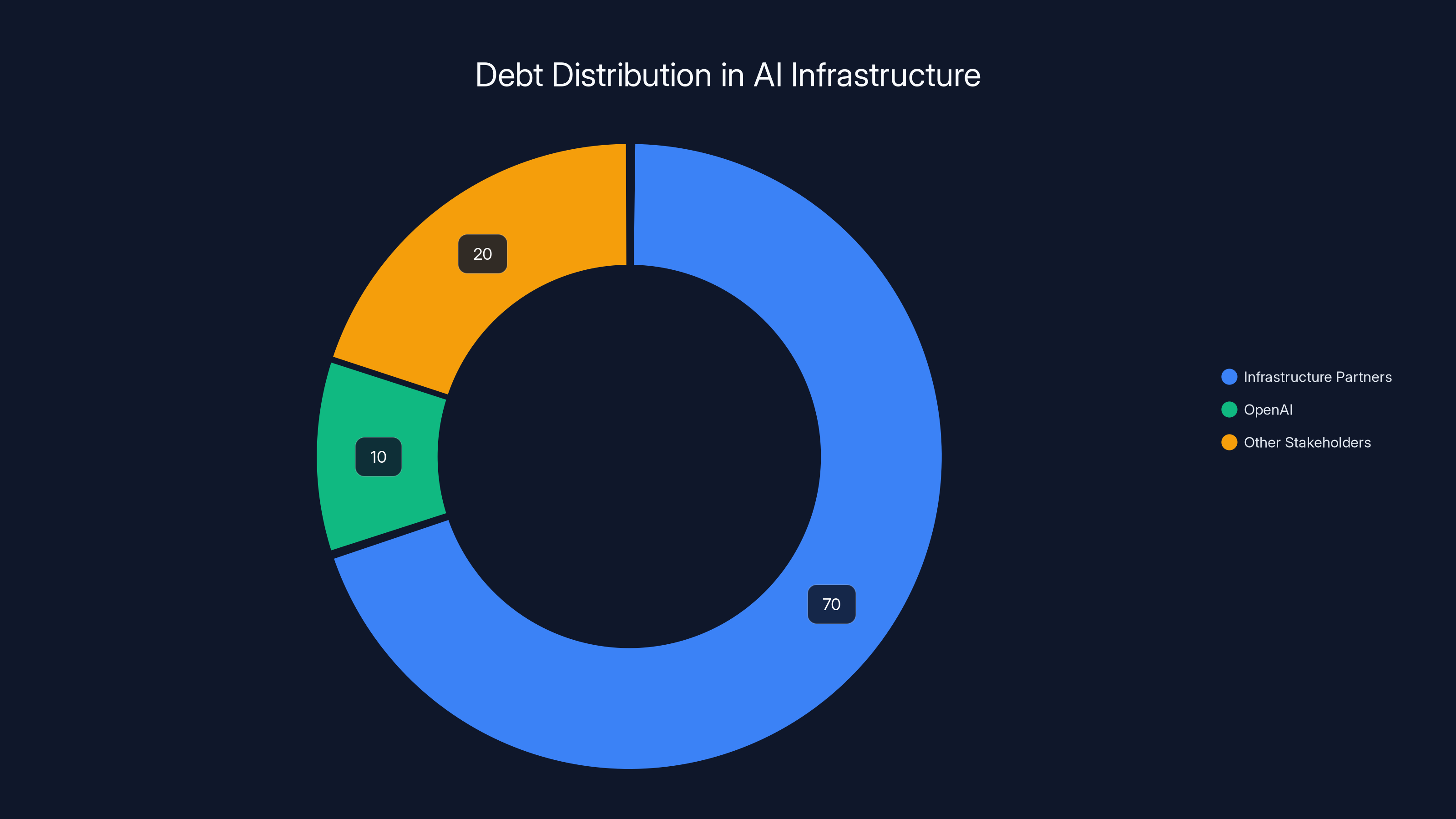

Infrastructure partners shoulder the majority of debt (70%), while OpenAI maintains minimal direct exposure (10%). Estimated data.

The Letter That Made Sam Altman Uncomfortable

Senator Warren's letter wasn't written on impulse. This was a carefully constructed document from the ranking member of the Senate Committee on Banking, Housing, and Urban Affairs, someone who spent her career studying financial collapse, institutional risk, and what happens when markets get too clever for their own good.

The letter opens with a fact that's hard to argue with: OpenAI has committed to more than a trillion dollars in spending despite not turning a profit. That's the core premise. Everything else flows from there. Not accusation, not allegation. Just a statement of what's publicly known.

Then Warren moves to the meat of her concern. She writes that OpenAI "appears to be seeking government assistance should it prove unable to pay its bills." She's not saying the company has asked for money yet. She's saying the structure of its financial commitments and partnerships suggests it is preparing the conditions under which such a request would make sense.

The language matters here. Warren chose her words with precision. She's not accusing them of wrongdoing. She's saying the financial architecture creates incentives for government involvement. It's the difference between saying someone committed a crime and saying someone created a situation where a crime becomes likely.

Warren specifically points to something most people haven't paid attention to: the mismatch in debt between OpenAI and its infrastructure partners. OpenAI has "comparatively little debt on its own balance sheet" while companies like CoreWeave are "saddled with debt to fulfill its contract with OpenAI." This is the real insight.

It's financial engineering with a specific purpose. Keep OpenAI's debt low while shifting infrastructure risk to partners who are more likely to become insolvent. If those partners fail, suddenly OpenAI's infrastructure commitments become endangered. And suddenly, government involvement doesn't seem like an option anymore. It seems like a necessity.

The letter also addresses what OpenAI has already said on the record. Altman has repeatedly stated that OpenAI "does not have or want government guarantees for OpenAI datacenters." But Warren, reading this carefully, points out that this statement "does not appear to reject federal loans and guarantees for the AI industry as a whole."

That's a crucial distinction. OpenAI could refuse targeted bailout funds while still benefiting enormously from an industry-wide rescue package. The company's trillion-dollar spending commitments would benefit from broad government support for AI infrastructure even if OpenAI itself never takes a direct government loan.

Warren's framing is essentially: you don't need to bail out OpenAI specifically. You just need to bail out the AI infrastructure ecosystem that OpenAI depends on. And suddenly the whole thing looks different.

Why OpenAI's Financial Structure Is Actually Concerning

Look at how modern AI infrastructure financing works and you'll spot the risk Warren is talking about.

OpenAI doesn't own most of its data centers. It partners with specialized infrastructure companies. These partners, like CoreWeave, take on enormous debt to build facilities that meet OpenAI's specifications. They do this because OpenAI's revenue projections and growth forecasts are compelling enough that lenders are willing to take the risk.

But here's what makes this fragile: those forecasts are based on continued explosive growth in AI adoption and usage. If growth slows even moderately, the unit economics of data centers deteriorate rapidly. A company taking on $500 million in debt to build OpenAI-specific infrastructure suddenly faces a different calculus when the revenue that was supposed to pay for that debt gets cut by 30%.
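
To make that calculus concrete, here is a minimal Python sketch. Only the $500 million of debt and the 30% revenue cut come from the scenario above. The 10% interest rate, the seven-year term, and the $120 million of annual cash available for debt service are hypothetical figures chosen purely for illustration, and the sketch assumes that cash available for debt service falls in proportion to revenue.

```python
def annual_debt_payment(principal, rate, years):
    """Level annual payment on a fully amortizing loan (standard annuity formula)."""
    if rate == 0:
        return principal / years
    return principal * rate / (1 - (1 + rate) ** -years)


def coverage_ratio(cash_available, payment):
    """Debt service coverage: cash available divided by the payment due."""
    return cash_available / payment


# All amounts in millions of dollars.
DEBT = 500.0            # from the scenario in the text
REVENUE_CUT = 0.30      # from the scenario in the text
RATE = 0.10             # hypothetical interest rate
TERM_YEARS = 7          # hypothetical loan term
CASH_AVAILABLE = 120.0  # hypothetical annual cash available for debt service

payment = annual_debt_payment(DEBT, RATE, TERM_YEARS)
before = coverage_ratio(CASH_AVAILABLE, payment)
after = coverage_ratio(CASH_AVAILABLE * (1 - REVENUE_CUT), payment)

print(f"Annual payment: {payment:.1f}M")
print(f"Coverage before cut: {before:.2f}")
print(f"Coverage after cut:  {after:.2f}")
```

With these made-up inputs, coverage starts above 1.0 and falls below it after the cut, meaning the partner could no longer make its payments out of operations. Real outcomes would depend on actual loan terms and on fixed operating costs, which would likely make the drop steeper.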

OpenAI itself has limited direct exposure to this debt. That's not accidental. It's a feature of the structure. OpenAI benefits from infrastructure scaling without proportional balance sheet risk. Its infrastructure partners shoulder the debt.

This is actually a rational financial strategy from OpenAI's perspective. Why take on debt yourself when partners will take it on and sign long-term contracts that obligate them to deliver capacity to you? From OpenAI's point of view, it is securing infrastructure commitments while maintaining financial flexibility.

But from a systemic risk perspective, from a taxpayer perspective, from an economy-wide stability perspective, it creates a precarious situation. If these infrastructure partners face insolvency, the entire ecosystem needs rescue. CoreWeave can't be allowed to fail because OpenAI depends on its infrastructure. OpenAI's customers depend on that infrastructure. Customers of OpenAI's customers depend on those services.

Suddenly you have a situation where government intervention looks less like a favor to one company and more like economic necessity to prevent cascading failure across the entire AI infrastructure layer.

Warren understands this dynamic deeply. She watched it play out in 2008 when investment banks took excessive leverage, distributed the risk throughout the system, and then watched taxpayers get stuck with the bill when the whole structure became unstable.

What she's asking is: are we about to do this again, but with AI infrastructure instead of mortgage-backed securities?

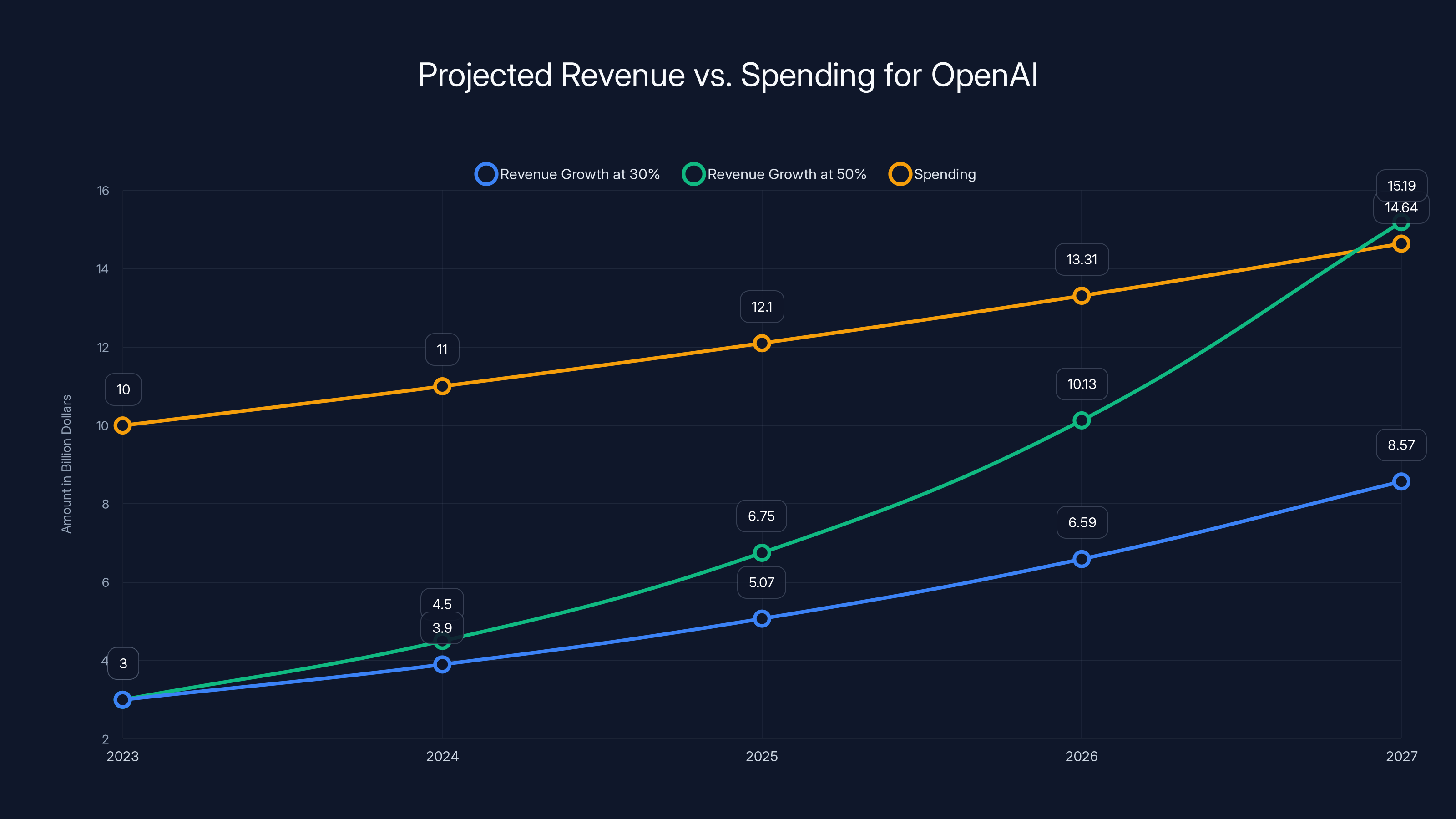

This chart illustrates a hypothetical scenario where AI services revenue grows at 50% annually, showcasing the potential for exponential growth if adoption accelerates. Estimated data.

The CFO Comment That Started This Whole Thing

This entire situation traces back to something OpenAI's CFO Sarah Friar said last November. In an interview, Friar suggested that taxpayers should "backstop" the company's infrastructure investments. Those exact words. Backstop. Taxpayers.

The reaction was immediate and severe. Altman had to do damage control. He tweeted reassurances that OpenAI doesn't want or have government guarantees. The company quickly walked back Friar's remarks. But the damage was done. The conversation had been opened in a way OpenAI couldn't completely close again.

Because Friar had said out loud what many people in the industry were already thinking privately: the amounts of money required to build AI infrastructure at the scale OpenAI wants might exceed what private capital is willing to shoulder alone. Taxpayer backing might become necessary.

That comment exposed something real about the financial challenges the AI industry faces. You can't unring that bell. Once your CFO has acknowledged that taxpayer support might be needed, people start asking whether that support is already being quietly negotiated.

Warren's letter is in some sense a direct response to Friar's comment. It's saying: you went on the record suggesting taxpayer support might be necessary, now explain to us how you're not planning to request it.

It's worth noting that David Sacks, the Trump administration's crypto and AI policy czar, explicitly rejected this framing. He tweeted that "there will be no federal bailout of AI." That's a policy statement. But Warren is essentially saying: policy statements don't matter much if the financial incentives still point toward government involvement.

The math is hard to ignore. If OpenAI has committed to a trillion dollars in infrastructure spending while generating only a few billion dollars in annual revenue, at some point the gap becomes impossible to bridge with private capital alone. Either growth accelerates dramatically, or someone steps in. And if private investors won't step in, the government is always there as a last resort.

What Warren Is Actually Asking For

Warren's letter includes specific requests for information. Understanding what she wants reveals what she's actually concerned about.

First, she wants details on conversations OpenAI has had with the U.S. government about loan guarantees, grants, and infrastructure support. This is about transparency. If those conversations have happened, they should be on the record.

Second, she wants to know what kind of federal support for the AI industry OpenAI would actually accept or prefer. This is clever. It's forcing OpenAI to either say "none, we want no government support" or to explain what kind of support they'd consider acceptable. Either answer reveals something important.

Third, she wants specific details on infrastructure OpenAI is seeking tax credits for. This is about understanding how much OpenAI is relying on government incentives that already exist. Most data center construction does get tax incentives. How much of OpenAI's trillion-dollar plan depends on those incentives?

Fourth, Warren wants financial projections through 2032. Not just revenue, but specifically what happens if AI models plateau and demand fails to materialize. This forces OpenAI to model scenarios where their core assumptions don't hold.

Fifth, she wants to know whether OpenAI is currently profitable on any of its ChatGPT plans, and whether the company expects profitability within three years. This gets at the heart of the issue: is this path to profitability plausible, or are we in classic bubble territory where growth is expected to eventually make unprofitable things profitable?

These aren't hostile questions. They're not accusations. They're the kinds of questions you ask a company that matters enough that its failure would have systemic consequences. They're the kinds of questions financial regulators ask when they're trying to understand whether a company's business model is sustainable or dependent on continued favorable conditions.

The Broader Context: Why This Matters Right Now

This moment is significant because it's when several trends collide.

First, AI is consuming more resources than even bullish forecasters expected a few years ago. Training large models requires enormous computational resources. Serving those models to millions of users requires equally enormous infrastructure. The costs are staggering, growing faster than revenue.

Second, the funding environment has shifted. Two years ago, every venture capitalist and venture debt provider was falling over themselves to fund AI companies. Now there's more scrutiny. Capital is still available but on more demanding terms. The era of funding companies that lose money at scale is ending.

Third, the Trump administration has taken an explicit stance against AI bailouts. That's a meaningful constraint. It means OpenAI can't count on government support if things get tight.

Fourth, there's increased scrutiny of how much money tech companies are spending on capital projects with unclear returns. The trillion-dollar spend by OpenAI is real money. Somewhere, someone is questioning whether that's actually going to generate returns or if it's just spending for spending's sake.

Warren's letter lands in this context. It's not a random investigation. It's addressing something genuinely concerning: the structure of AI financing suggests that even if individual companies try to avoid government support, the collective risk might force government's hand.

Consider the scenario where OpenAI remains independent and never takes a government loan, but CoreWeave and other infrastructure partners face insolvency because the revenue they're receiving from OpenAI doesn't meet their debt obligations. Suddenly there's a problem. Does the government let critical infrastructure for AI fall apart? Or does it intervene?

That's the systemic risk Warren is pointing to. It's not about whether OpenAI will personally ask for a bailout. It's about whether the whole system is structured in a way that makes government involvement inevitable.

Projected data shows that even with a 50% annual revenue growth, OpenAI's spending could still outpace revenue, highlighting the challenge of achieving profitability. (Estimated data)

How This Connects to Broader AI Industry Risk

OpenAI isn't unique in this structure. It's representative of the entire industry.

Claude maker Anthropic is burning through capital rapidly. It has raised multiple rounds at increasingly high valuations, but generating revenue at a rate that justifies those valuations is challenging. It's in a similar position: committing to large infrastructure spending, dependent on continued capital influx.

Google is spending heavily on AI but can at least offset those costs against its massive advertising business. Microsoft has similar scale. But smaller players, even well-funded ones, face genuine financial constraints.

What Warren understands is that systemic risk can emerge even without any single company requesting a bailout. If the entire AI infrastructure layer becomes economically stressed simultaneously, government involvement becomes not a favor but a necessity.

It's similar to the 2008 financial crisis logic. No single bank wanted to fail. But when the structure of the financial system meant that one major failure could trigger cascading failures across the system, government had to intervene. Not because anyone asked nicely, but because the alternative was economic catastrophe.

Warren is essentially saying: we need to understand whether AI infrastructure is heading toward a similar situation. And if it is, we need to plan for it now rather than being forced to scramble when things break.

The Political Dimension: Who Wins This Argument?

From a pure political standpoint, Warren's move is clever. She's positioned herself as the adult in the room asking hard questions while others are caught up in AI mania.

Altman faces a real dilemma. If OpenAI provides detailed financial projections showing a clear path to profitability, great, that addresses Warren's concerns. But those projections are based on assumptions about AI adoption that might not hold. Publishing them risks creating doubts if they seem too aggressive.

If OpenAI refuses to provide detailed projections or provides vague responses, they look evasive. They look like they're hiding something.

This is why Warren's approach is effective. She's not making accusations that OpenAI can simply deny. She's asking for transparency on information that OpenAI should be willing to share if they're genuinely not concerned about government bailouts.

The Trump administration's position creates additional pressure. The administration has explicitly said no bailouts for AI. That means Altman can't hope that government will quietly step in if things get tight. He has to demonstrate that OpenAI's path to sustainability is real and doesn't depend on government support.

That's actually valuable pressure. It forces the company to think seriously about business model sustainability rather than assuming that growth will eventually solve all problems.

From Warren's perspective, even if Altman provides reassuring responses, she's accomplished something important. She's put the AI industry on notice that its financial architecture is under scrutiny. She's signaled that taxpayer protection is a legitimate policy concern, not something that can be dismissed as luddite thinking from Congress.

What OpenAI Likely Has to Say

If OpenAI responds seriously to Warren's questions, what will they probably argue?

First, they'll emphasize that they're fundamentally different from banks or other systemically important institutions. If OpenAI fails, the AI industry continues. Other companies provide similar services. The economy doesn't collapse.

Second, they'll point to their revenue growth. ChatGPT is actually generating meaningful subscription revenue. Enterprise customers are using the platform. The path to profitability, while not currently achieved, is plausible within a reasonable timeframe.

Third, they'll argue that their infrastructure partnerships are economically rational for both parties. CoreWeave and other infrastructure providers benefit from these contracts. They're not being exploited or set up to fail. They're making calculated business decisions.

Fourth, they'll emphasize that continued AI investment is necessary because the competitive landscape demands it. If OpenAI doesn't spend on infrastructure, competitors will. The spending isn't wasteful; it's a competitive necessity.

Fifth, they might argue that government support for AI infrastructure is actually good policy regardless of OpenAI's specific situation. Investment in AI is a national priority. Supporting that investment makes sense for competitiveness against China and other rivals.

These are legitimate arguments. They might even be true. But they don't directly address Warren's core concern: that the financial structure of the AI industry creates systemic risk even if individual companies are competitive and viable.

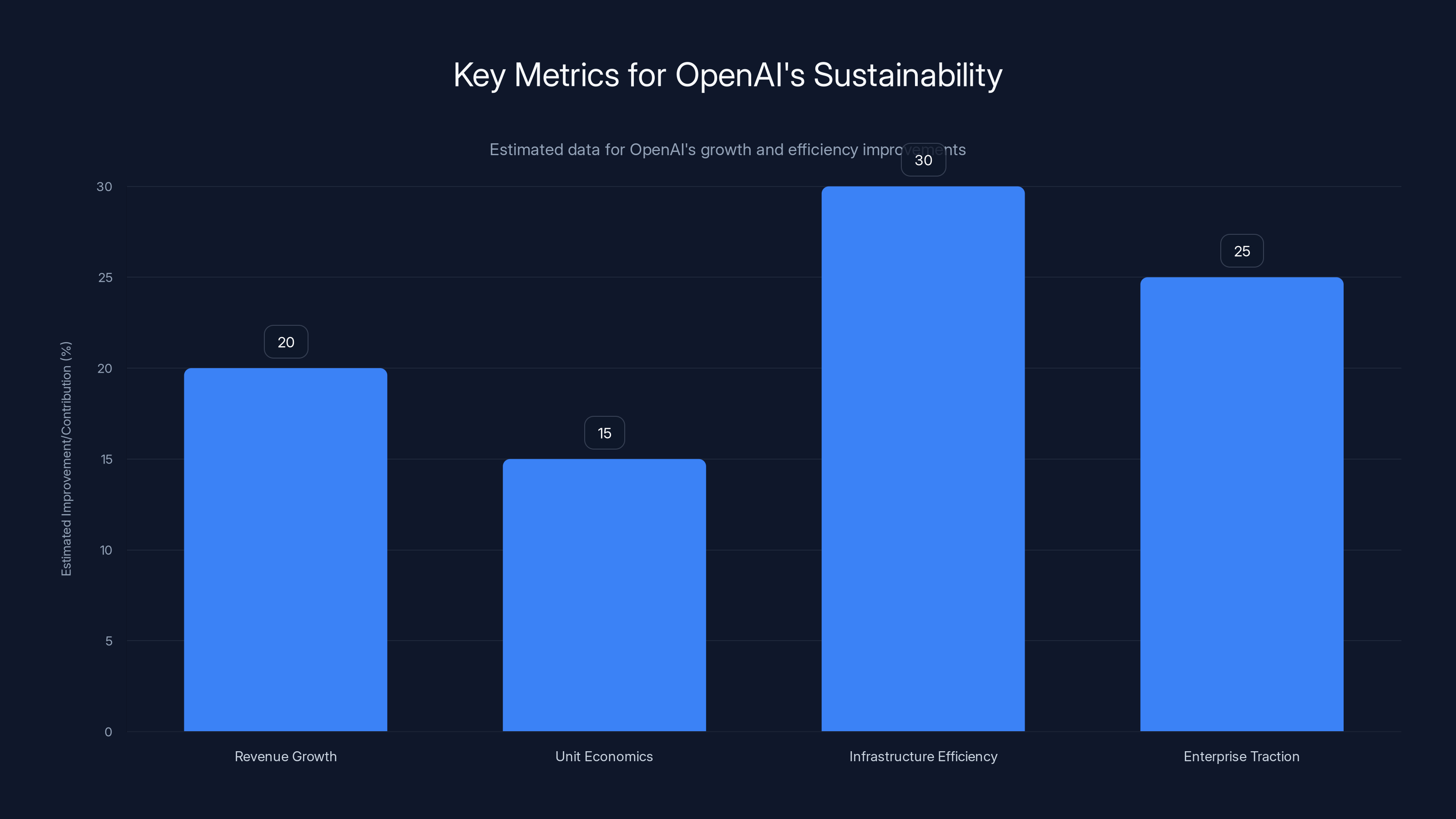

Estimated data shows potential areas of focus for OpenAI: 20% revenue growth, 15% improvement in unit economics, 30% infrastructure efficiency, and 25% enterprise traction.

The February Deadline and What Comes After

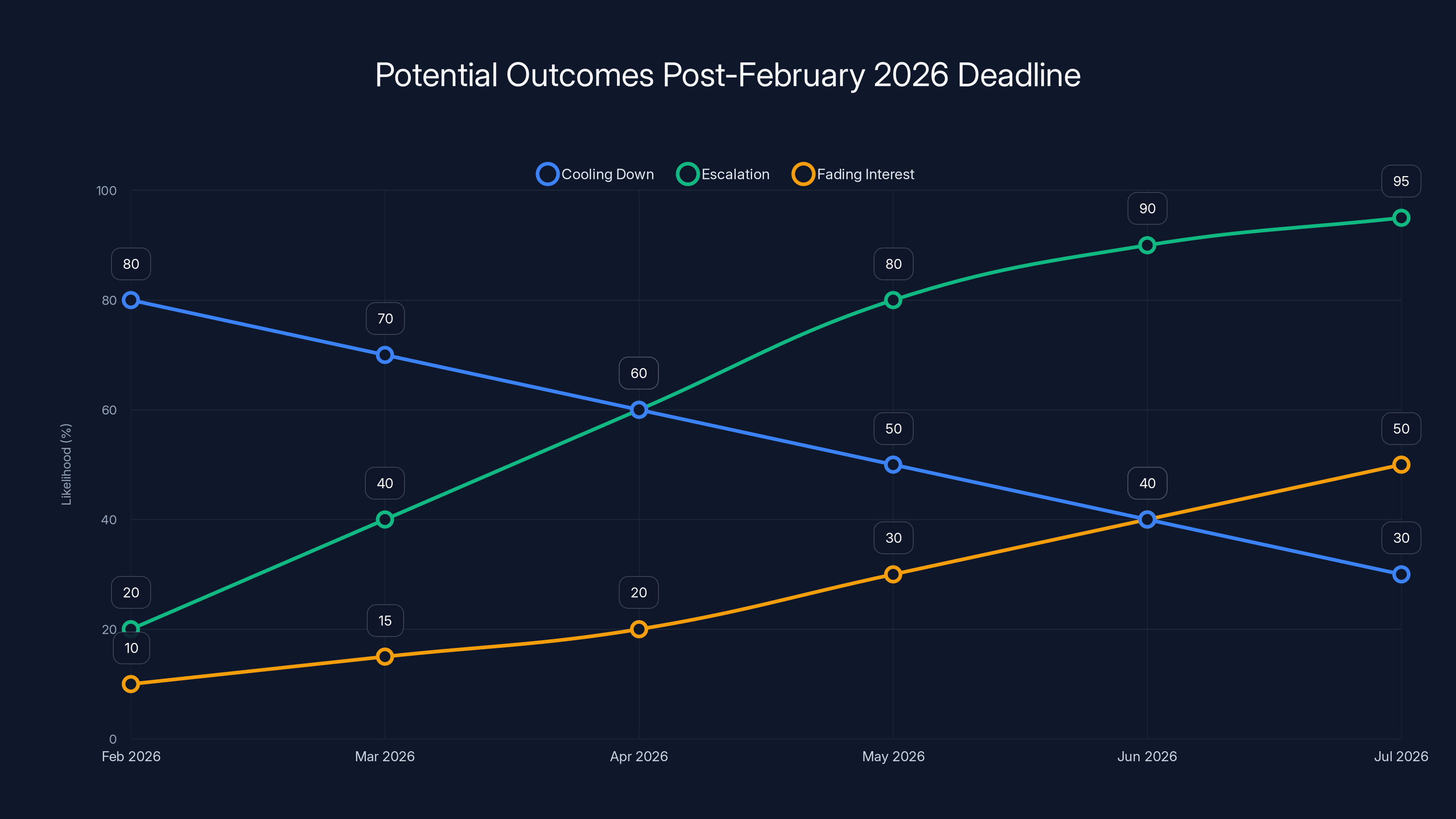

Warren has given Altman until February 13th, 2026, to respond. That's a real deadline with real consequences.

If OpenAI provides a substantive response, the issue might cool for a while. Both sides can claim victory. OpenAI has answered the senator's concerns. Warren has demonstrated that oversight works.

If OpenAI provides evasive or incomplete responses, Warren will probably escalate. She has significant influence on banking committee matters. She can push for formal investigations. She can drag executives before Congress for testimony. She can propose legislation requiring transparency from AI companies receiving tax credits or other government benefits.

That escalation path is worth thinking about. A formal congressional investigation into OpenAI's financial structure could be disruptive. It could cloud investor perception. It could accelerate capital market reassessment of AI company valuations.

But there's also a possibility that Warren's letter becomes a one-off signal and then gradually fades. Tech policy moves fast. Congressional attention is limited. By summer 2026, the focus might be entirely different depending on what other crises emerge.

What probably won't happen is the issue going away entirely. As the AI industry continues to mature, questions about financial sustainability and systemic risk will only grow more pressing. Warren is early in asking these questions, but she's not alone. Others in Congress, banking regulators, and market participants are thinking about similar issues.

Infrastructure as the Bottleneck

One thing Warren's letter highlights is that infrastructure, not software or models, is increasingly the constraint on AI development.

Building large language models requires extraordinary computational resources. Serving those models to millions of users requires even more resources. The capital intensity of AI is not a temporary phase. As models get larger and more capable, the infrastructure requirements grow.

This is different from the previous wave of tech growth, where software scaled relatively cheaply once built. Cloud providers could serve millions of users with marginal cost increases. AI infrastructure doesn't work that way. Each incremental user or capability requires proportional infrastructure investment.

That's why companies like CoreWeave exist. They specialize in building GPU-dense data centers optimized for AI workloads. These are capital-intensive businesses with long payoff periods. They require certainty of revenue streams to justify the debt financing.

OpenAI has provided that certainty through long-term contracts. But uncertainty about OpenAI's ultimate viability flows backward through the supply chain to infrastructure providers who have made massive capital commitments based on that revenue certainty.

Warren understands this chain of risk. If any link breaks, pressure flows backward to government. The infrastructure providers can't absorb unlimited losses. If they're forced to, they either fail or seek government support. And suddenly the entire AI infrastructure layer is destabilized.

This is systemic risk in its purest form.

The Alternative Scenario: What If AI Growth Continues?

There's a version of this story where Warren's concerns prove overblown.

Suppose AI adoption accelerates even beyond current forecasts. Suppose revenue from AI services grows at 50% annually. Suppose enterprise customers deploy AI at every opportunity. Suppose consumer adoption of AI-powered applications becomes ubiquitous.

In that scenario, OpenAI's trillion-dollar infrastructure investment looks prescient rather than reckless. The revenue to justify that investment materializes. CoreWeave's debt becomes easily serviceable. The entire structure becomes wildly profitable.

Then Warren's warning looks like it was worrying about nothing. The market solved the problem. Growth produced profitability. No bailout was needed.

But that scenario depends on specific assumptions about the world. It depends on AI being broadly useful enough and increasingly essential enough that adoption accelerates. It depends on competition not eroding OpenAI's profit margins. It depends on regulatory constraints not limiting AI deployment.

Those are plausible assumptions. They're what most AI believers expect. But they're not guaranteed.

Warren is essentially saying: let's plan for the scenarios where these assumptions don't hold. Let's understand what happens if AI adoption is slower than expected. Let's be prepared for contingencies.

That's not unreasonable. It's financial prudence.

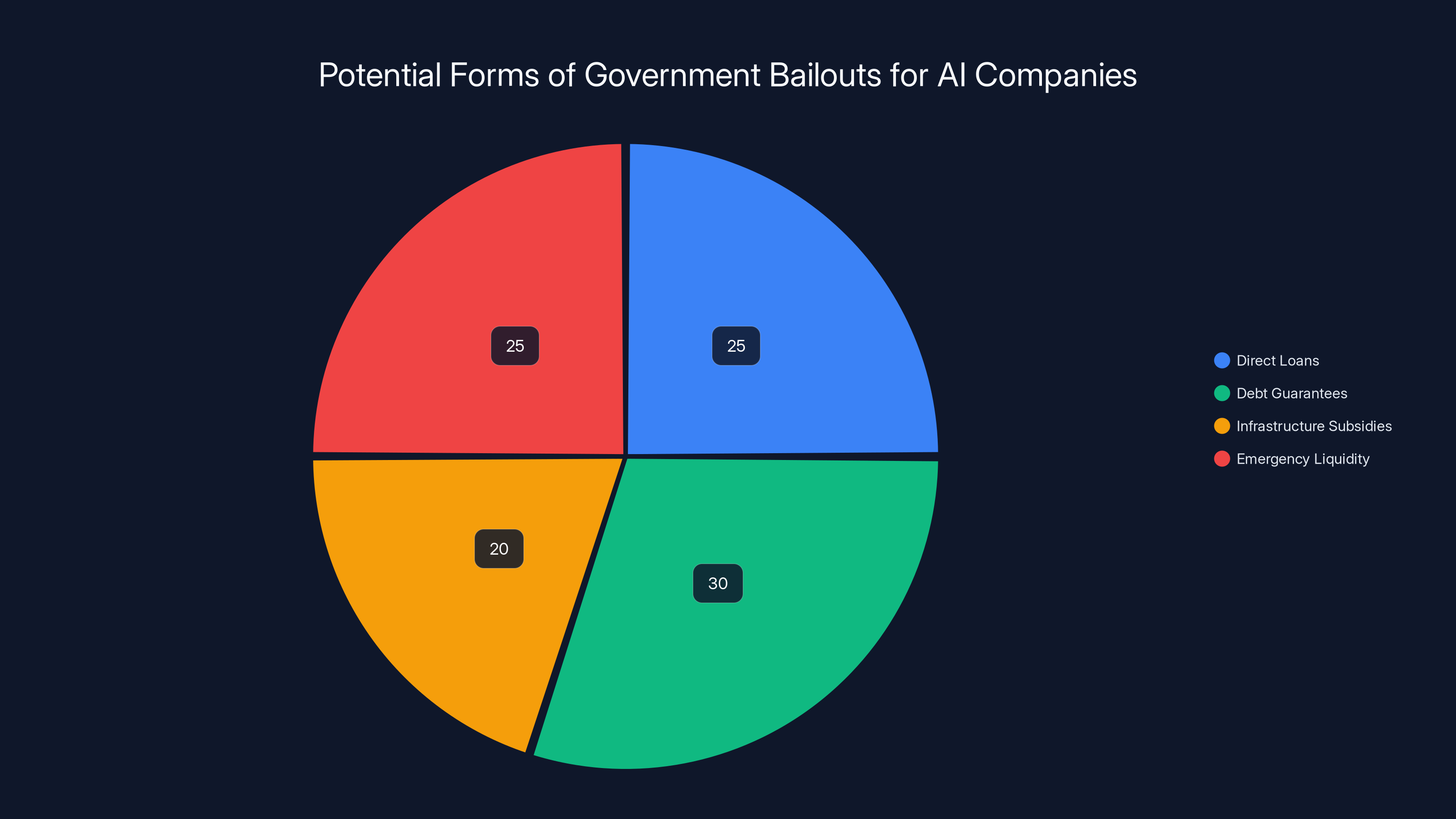

Estimated data shows that debt guarantees and direct loans could be the most common forms of government bailouts for AI companies, each comprising around a quarter of potential bailout strategies.

Comparing to Previous Technology Booms

It's worth placing this in historical context. Tech booms have repeatedly created financial fragility.

The dot-com boom of the late 1990s saw companies spending billions on infrastructure without clear business models. When the market corrected, a lot of that infrastructure became obsolete. Billions were lost. But the losses were contained within the tech industry itself. There wasn't systemic contagion to the broader economy.

That's partly because infrastructure capacity, once built, can be repurposed. A data center built for one service can theoretically be used for another. The assets have some salvage value.

But it's also because the infrastructure wasn't specifically designed for one company's business model in the way AI data centers are. GPU-heavy, high-bandwidth specialized infrastructure built specifically for large language models has less versatility than general-purpose server capacity.

The 2008 financial crisis came from a different direction. Financial institutions created complex debt instruments that distributed risk throughout the system. When the underlying assets failed, the entire financial system faced stress. Government had to intervene to prevent cascading failures.

AI infrastructure financing is somewhat parallel. Risk is distributed across infrastructure providers, cloud companies, and venture capital firms. If OpenAI's business model doesn't pan out, losses cascade through the system.

The key difference is scale and interconnectedness. In 2008, the failures were in mortgages and mortgage-backed securities, and the losses ultimately ran into the trillions of dollars once the damage spread through the financial system. In AI, we're potentially talking about failures measured in hundreds of billions if the entire infrastructure investment doesn't produce returns.

That's smaller than 2008, but still large enough to count as systemic risk.

Policy Questions Going Forward

Warren's letter raises policy questions that extend beyond OpenAI.

Should the government provide tax incentives for AI infrastructure investment? Most data centers get tax credits. But should government be actively subsidizing infrastructure for specific companies?

Should there be disclosure requirements? Should companies receiving tax credits be required to provide financial information to regulators? Should there be rules about how much debt-to-revenue ratio is acceptable for companies in critical infrastructure?

Should government take equity stakes in critical AI infrastructure? Should there be public-private partnerships that give government some upside if these investments pay off?

These are genuinely hard questions. There are reasonable arguments on multiple sides.

From a free market perspective, companies should be able to structure their financing however they want. If they fail, they fail. That's how markets work.

From a systemic risk perspective, if the failure of a company or infrastructure provider threatens broader economic stability, government has legitimate reasons to intervene early rather than waiting for crisis.

There's a middle ground where government provides transparency requirements and ensures that policy makers understand the risks but doesn't directly intervene unless necessary.

Warren seems to be pushing toward that middle ground. She's not saying ban AI investment or prevent companies from spending money on infrastructure. She's saying let's understand what we're dealing with and plan accordingly.

The Profitability Question

Understanding whether AI companies can actually be profitable is crucial.

OpenAI generates revenue from ChatGPT subscriptions and API access. Those revenue streams are real and growing. But they're not growing fast enough to cover spending at current levels.

Inferring from public information, OpenAI probably has annual revenue in the range of a few billion dollars. Its spending is probably in the range of ten billion dollars or more annually. That's a significant gap.

Can that gap be closed? It depends on whether revenue can grow much faster than spending. That's plausible. API pricing could increase. Usage could accelerate. Enterprise deployments could become the core business rather than niche.

But it's not guaranteed. At some point, if revenue growth stalls, spending has to adjust downward. And if spending adjusts downward at the moment when major infrastructure projects are supposed to come online, suddenly there's misalignment between capacity and usage.

That's where systemic risk emerges.

Warren is asking OpenAI to model these scenarios transparently. What happens if revenue grows at 30% annually instead of 50%? What happens if AI adoption plateaus? What happens if competition erodes margins? These are standard business scenarios. Every mature company models them.
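
As a rough illustration of that kind of scenario modeling, the Python sketch below asks how many years it takes for revenue to catch up with spending under different growth rates. The starting figures ($3 billion of revenue, $10 billion of spending) are placeholders in the range discussed above, not reported numbers, and holding spending flat is a simplifying assumption.

```python
def years_to_break_even(revenue, spending, revenue_growth,
                        spending_growth=0.0, max_years=30):
    """Return the first year in which revenue covers spending, or None.

    Revenue and spending compound annually at their respective growth rates.
    """
    for year in range(1, max_years + 1):
        revenue *= 1 + revenue_growth
        spending *= 1 + spending_growth
        if revenue >= spending:
            return year
    return None


# Illustrative placeholders, in billions of dollars (not reported figures).
START_REVENUE = 3.0
START_SPENDING = 10.0

scenarios = {
    "50% growth": 0.50,
    "30% growth": 0.30,
    "plateau": 0.00,
}

results = {
    name: years_to_break_even(START_REVENUE, START_SPENDING, growth)
    for name, growth in scenarios.items()
}

for name, years in results.items():
    outcome = f"year {years}" if years is not None else "never (within 30 years)"
    print(f"{name}: break-even in {outcome}")
```

Under these assumptions, 50% growth closes the gap in three years and 30% growth takes five, while a plateau never closes it. Letting spending grow as well, through the spending_growth parameter, pushes break-even out further.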

But AI companies haven't typically been required to do so publicly. Warren is changing that expectation.

Estimated data shows potential outcomes: cooling down, escalation, or fading interest post-February 2026 deadline, with escalation becoming more likely if OpenAI's response is inadequate.

Looking at Comparable Infrastructure Investments

What do comparable massive infrastructure investments look like?

The space industry spent decades and billions before achieving sustainable profitability. SpaceX, for instance, took heavy losses for years before revenue from launches and satellite services scaled. But SpaceX was eventually bankrolled by government contracts for national security purposes. The company could persist through losses because government had interest in its success.

Railroads in the 19th century required enormous capital investment. Much of that investment was subsidized by government land grants. The profit margins on railroads were modest, but the infrastructure was valuable enough that government and private investors both participated.

The electricity grid required massive infrastructure investment but has proven sustainable because demand is constant and pricing can be regulated to ensure adequate returns.

AI infrastructure is somewhere in between. It's capital-intensive like railroads or the electric grid. But demand is less certain. The business model is still evolving. And pricing is set by competition, not regulation.

That's a harder scenario for pure private funding. It's also harder for government to justify participating because the return on government investment is unclear.

Warren is essentially asking whether we're heading toward a situation where the infrastructure is too important to fail but the business model is too uncertain to succeed without government involvement.

The Competitive Dimension: Why OpenAI Feels Forced to Spend

It's important to understand OpenAI's spending in its competitive context.

Google has vastly more capital than OpenAI. Microsoft has effectively allied with OpenAI but also invests heavily in its own AI. Meta is investing in AI infrastructure. Anthropic is raising capital to keep pace.

In this environment, any company that moderates spending risks falling behind. Competitors might build more capable systems with better infrastructure. The market might consolidate around the company that has spent most aggressively.

This is a classic arms-race dynamic. No individual company wants to spend at these levels. But if competitors are spending, the cost of not spending is potentially losing the market.

OpenAI's spending isn't reckless from a competitive perspective. It's defensive. The company is trying to maintain its leading position while competitors close in.

But that competitive dynamic also creates fragility. If the entire industry is spending unsustainably, no amount of individual company discipline solves the problem. Everyone becomes vulnerable simultaneously.

Warren's point is that this collective vulnerability creates systemic risk. Individually rational decisions to spend aggressively become collectively irrational when everyone does it simultaneously.
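The arms-race logic above can be sketched as a simple two-firm game. The payoff numbers below are invented purely for illustration (arbitrary units, not estimates for any real company); the point is only the shape of the incentives.

```python
# Hypothetical payoffs (arbitrary units) for two competing firms.
# Key: (firm_a_choice, firm_b_choice) -> (firm_a_payoff, firm_b_payoff)
PAYOFFS = {
    ("restrain", "restrain"): (3, 3),  # both conserve capital, market is shared
    ("restrain", "spend"): (0, 4),     # the spender captures the market
    ("spend", "restrain"): (4, 0),
    ("spend", "spend"): (1, 1),        # both burn capital, market still shared
}
CHOICES = ("restrain", "spend")


def best_response(rival_choice):
    """Firm A's payoff-maximizing choice given what the rival does."""
    return max(CHOICES, key=lambda mine: PAYOFFS[(mine, rival_choice)][0])


for rival in CHOICES:
    print(f"if the rival chooses {rival!r}, the best response is {best_response(rival)!r}")

both_spend = sum(PAYOFFS[("spend", "spend")])
both_restrain = sum(PAYOFFS[("restrain", "restrain")])
print(f"combined payoff: both spend = {both_spend}, both restrain = {both_restrain}")
```

With these assumed payoffs, spending is the best response whatever the rival does, yet the industry as a whole ends up worse off when both spend than when both hold back. That is the individually rational, collectively irrational pattern described above.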

What This Means for Investors

For people investing in AI companies or AI infrastructure, Warren's letter should prompt some reflection.

Are valuations based on assumptions about continued funding? Are business plans based on the idea that capital will remain cheap and plentiful? Are growth projections based on exponential user adoption that might not materialize?

If so, the risk profile is higher than many investors realize.

This doesn't mean AI is a bad investment. It means the margin of safety might be smaller than it appears. Companies that seem obviously profitable on paper might face real difficulty if capital markets shift or if adoption rates disappoint.

For long-term investors, Warren's letter is relevant because it signals that political pressure on AI spending might increase. If Congress starts imposing restrictions on government benefits for AI companies, or if regulators start requiring more disclosure, that could affect profitability calculations.

For short-term traders, Warren's letter is relevant because formal congressional scrutiny could affect sentiment and stock prices in the near term.

The Broader Question: Can Tech Solve Real Problems?

Underlying all of this is a bigger question.

Is AI fundamentally going to be useful enough to justify the investment being poured into it? Or is there an element of hype and bubble dynamics in the current spending?

The honest answer is: nobody knows. The infrastructure being built now could prove essential if AI systems become as transformative as believers think. Or it could end up partially obsolete if adoption is slower or if more efficient architectures emerge.

That's radical uncertainty. And radical uncertainty is exactly when Warren's questions become most important. When nobody really knows whether an investment will pay off, government absolutely should be skeptical about becoming a backstop for that uncertainty.

This is especially true when the alternative to government involvement is for private investors to absorb the risk. If private investors are willing to fund this infrastructure, they should be willing to absorb losses if it doesn't work out.

The moment government starts backstopping the losses is the moment that incentive structure breaks. Investors stop being careful about how much they're willing to risk. Spending becomes unlimited. Fragility increases.

Warren is essentially saying: let's protect that incentive structure. Let companies and investors take risks, but let them absorb losses if those risks don't pay off.

That's financially sound policy even if it means some infrastructure projects fail or scale more slowly than current plans assume.
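The incentive argument in this section can be made concrete with a small expected-value sketch. Every number here is a hypothetical chosen for illustration: a stake of 100, a 20% chance the bet triples, a total loss otherwise, and a backstop that covers 80% of losses.

```python
def expected_investor_payoff(stake, p_success, upside_multiple, loss_covered):
    """Expected net payoff to an investor who keeps all gains but bears only
    the share of losses that a backstop does not cover."""
    gain = stake * (upside_multiple - 1)  # net gain if the bet pays off
    loss = stake * (1 - loss_covered)     # investor's own loss if it fails
    return p_success * gain - (1 - p_success) * loss


# Hypothetical bet: stake 100, 20% chance of tripling, otherwise a total loss.
STAKE, P_SUCCESS, UPSIDE = 100.0, 0.20, 3.0
COVERED = 0.8  # assumed share of losses absorbed by a backstop

no_backstop = expected_investor_payoff(STAKE, P_SUCCESS, UPSIDE, loss_covered=0.0)
with_backstop = expected_investor_payoff(STAKE, P_SUCCESS, UPSIDE, loss_covered=COVERED)
expected_public_cost = (1 - P_SUCCESS) * STAKE * COVERED

print(f"investor expected payoff without backstop: {no_backstop:.1f}")
print(f"investor expected payoff with backstop:    {with_backstop:.1f}")
print(f"expected cost shifted to the public:       {expected_public_cost:.1f}")
```

In this toy case the same bet has a negative expected payoff for the investor when losses are private and a positive one when most losses are covered. The bet itself has not improved; the downside has simply moved to whoever provides the backstop.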

The Response OpenAI Has to Hope For

From OpenAI's perspective, the ideal outcome is that its response convinces Warren (and Congress more broadly) that the company has a plausible path to sustainability without government support.

That probably requires showing:

Clarity on revenue growth. Specific numbers about how revenue is growing and at what rate. Transparency about unit economics. How much revenue does each ChatGPT subscription generate? How much does each API call contribute?

Infrastructure efficiency improvements. Not all spending has to go toward building new capacity. Some can go toward making existing capacity more efficient. If you can improve inference efficiency by 30%, you've effectively reduced the infrastructure spend needed per unit of output.

Enterprise traction. Consumer subscriptions to ChatGPT are growing, but are enterprise customers actually deploying AI at scale? If so, that creates a more sustainable business model with better margins.

Realistic expectations. Rather than talking about trillion-dollar spending and exponential growth, the company could show a more modest but more credible path: we'll spend tens of billions, we'll achieve profitability within 5 years, and we'll do so without government support.
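The 30% inference-efficiency example in the list above is worth a quick calculation. This sketch assumes that "30% more efficient" means the same hardware produces 30% more output, and that spend scales linearly with hardware. Both are simplifying assumptions, not anything OpenAI has stated.

```python
def relative_spend_per_unit(throughput_gain):
    """Infrastructure spend per unit of output, relative to a baseline of 1.0,
    assuming the same hardware now produces (1 + throughput_gain) times the output."""
    return 1 / (1 + throughput_gain)


gain = 0.30  # the 30% efficiency improvement from the example
relative_spend = relative_spend_per_unit(gain)
reduction = 1 - relative_spend

print(f"spend per unit of output: {relative_spend:.3f} of baseline")
print(f"reduction in spend per unit: {reduction:.1%}")
```

Under that reading, a 30% efficiency gain cuts spend per unit of output by about 23%, not 30%. If the gain instead means each unit of output needs 30% less compute, the saving would be the full 30%, so the definition matters when a company reports these numbers.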

OpenAI probably doesn't want to be seen as a company that needs bailouts. The company wants to be seen as a large, growing, eventually profitable business. Warren's letter is an opportunity to make that case clearly.

Historical Precedent: Who Gets Bailouts?

When government does bail out companies, which companies are they? Usually banks, other financial institutions, and auto companies in crisis. Broadly, companies that are seen as too big to fail or as critical infrastructure.

OpenAI probably doesn't want to be seen as either of those things. The company wants to be seen as a healthy, competitive, growing business. But the financial structure Warren is questioning could gradually shift that perception.

If OpenAI is increasingly seen as dependent on government support or as creating systemic risk, the political economy of the company changes. Suddenly it's not just about whether OpenAI is profitable. It's about whether the government has leverage.

And government leverage, even when not explicitly used, changes how companies operate. Regulatory compliance becomes more stringent. Reporting becomes more detailed. Strategic decisions might be influenced by government preferences.

That's another reason OpenAI wants to make clear that it doesn't need or want government support. It's not just about the money. It's about independence.

The Global Dimension

There's also an international angle to Warren's concerns.

China is investing heavily in AI infrastructure. If the U.S. government ends up supporting American AI companies (through subsidies, tax credits, or bailouts if needed), China will probably do the same. That could trigger a subsidy war where governments feel obligated to support their domestic AI champions.

That's not necessarily bad. Government investment in critical technology can be justified for national security reasons. But it does change the dynamics of competition and the business models companies use.

For OpenAI and other American AI companies, there's a case that government support is necessary to compete globally. That's different from Warren's concern about bailouts for failed companies. But both are worth thinking through carefully.

A sounder government policy would probably be direct investment in AI infrastructure as a national priority, rather than reactive bailouts if private companies fail. But that's a different conversation from the one Warren is having.

What Might Actually Happen

Best case scenario for OpenAI: The company provides detailed financial projections and information that convinces Warren it has a credible path to sustainability. Warren is satisfied, the issue cools, and the company can operate with less political scrutiny.

Worst case scenario: OpenAI's response is evasive or unconvincing. Warren escalates, bringing in other lawmakers. Congress demands more information. The issue becomes high-profile. Investors start questioning the company's financial model. Capital becomes more expensive or difficult to raise. The company's growth trajectory changes.

Middle case: OpenAI provides a response. Warren is partially satisfied but continues monitoring. The issue becomes one of several things Congress is watching rather than an immediate crisis. The company continues on its current path but with slightly more scrutiny.

My guess is that the middle case is most likely. OpenAI provides enough information to avoid immediate escalation but probably doesn't completely satisfy Warren. The issue simmers rather than boiling over.

But the mere fact that it's being raised signals something important: the unlimited growth and unlimited spending phase of AI might be starting to face real constraints. Not from technology or products, but from politics and finance.

Thinking About the Trillion Dollar Question

Ultimately, Warren's letter forces us to reckon with the central question: is OpenAI's trillion-dollar spending spree justified?

From the company's perspective, yes. Building AI infrastructure at that scale is necessary to maintain technological leadership and serve growing demand.

From a financial perspective, it depends on whether revenue can grow to justify the spending. That's plausible but uncertain.

From a policy perspective, Warren is right to ask: if things go sideways, who absorbs the losses? If it's taxpayers, policymakers should have input into how much risk they're accepting.

The healthy outcome is a middle ground: companies are free to spend as they choose, but they absorb the financial consequences of their decisions. Government provides support only when there's a genuine public interest in doing so, not merely to rescue private investors from bad bets.

Warren's letter pushes the AI industry toward that kind of clarity. It's uncomfortable for OpenAI, but it's not unfair.

The Bottom Line: This Isn't About Preventing AI Investment

It's crucial to understand what Warren is actually asking for and what she's not.

She's not asking OpenAI to stop spending money. She's not saying AI infrastructure investment is wasteful. She's not trying to hobble U.S. competitiveness in AI.

She's asking for transparency about financial assumptions. She's asking whether government is being set up to absorb losses if those assumptions don't hold. She's asking whether the industry's financial structure creates systemic risk.

Those are legitimate questions. The fact that they make OpenAI uncomfortable suggests they're hitting something real.

The best response isn't to dismiss Warren as a tech skeptic. It's to engage seriously with the questions and provide the transparency she's requesting.

Because if OpenAI's path to sustainability is actually solid, that clarity helps the company more than it hurts. If the path isn't solid, better to understand that now when there are options for adjustment than to discover it later when government has no choice but to step in.

That's what good financial governance looks like. Warren is pushing the AI industry toward it, whether the industry wants it or not.

FAQ

What does Senator Warren mean by "socializing losses"?

Warren is referring to a financial pattern where companies keep profits private (within the company or with shareholders) but shift losses onto the public through government bailouts. This happened in 2008 when financial institutions took excessive risks, generated profits when times were good, but then needed taxpayer rescue when the risks materialized. She's concerned OpenAI's financial structure sets up a similar dynamic where losses could be transferred to taxpayers.

Why does OpenAI need to spend so much on infrastructure?

Large language models require enormous computing power to train and then even more computing power to serve to millions of users. Each new capability improvement, each new model release, and each new user requires infrastructure scaling. OpenAI is essentially trying to build the computational backbone that can support AI at scale, which is why the spending is measured in hundreds of billions of dollars. Without this infrastructure investment, the company can't maintain its competitive position or serve growing demand.

Is OpenAI actually unprofitable right now?

Based on available information, OpenAI generates significant revenue from ChatGPT subscriptions and API usage, but its spending exceeds that revenue. The company has raised capital from investors to fund the gap. Whether this changes in the near future depends on whether revenue grows fast enough to catch up with spending, which is one of the key questions Warren is asking.

What would a government bailout of AI companies actually look like?

A bailout could take several forms: direct government loans to OpenAI or other companies, government guarantees on debt (meaning the government promises to pay if the company defaults), subsidies for infrastructure spending, or emergency liquidity assistance during market stress. Warren's concern isn't necessarily that a formal bailout will be labeled as such, but that government will end up supporting the AI industry under some name if things go wrong.

Why does Warren focus on companies like CoreWeave rather than OpenAI directly?

Because CoreWeave is the leverage point in the system. CoreWeave has taken on massive debt to build infrastructure that OpenAI uses. If CoreWeave becomes financially stressed, it creates pressure for government to step in. By focusing on infrastructure partners, Warren is highlighting how systemic risk flows through the supply chain. OpenAI might remain solvent while its infrastructure partners fail, but that would be equally disruptive.

Could this investigation actually affect OpenAI's operations?

Possibly, but probably not dramatically in the near term. If the investigation becomes high-profile or results in regulatory restrictions, it could affect the company's access to government contracts, tax incentives, or favorable policy treatment. More importantly, it could affect investor perception and confidence, which influences capital availability and borrowing costs. Congressional pressure isn't law, but it can change the operating environment for companies.

What's the difference between subsidizing infrastructure and bailing out companies?

Subsidizing infrastructure (like tax credits for data center construction) can be legitimate policy that promotes national interests. A bailout rescues a company from failure due to poor decisions or bad luck. The line is blurry, but the distinction matters. Subsidies applied universally are different from emergency rescue packages for specific companies in crisis.

Has this happened before in tech?

There have been moments where government supported critical tech infrastructure. The internet backbone was heavily government-funded early on. Semiconductor manufacturing has received subsidies. But pure company bailouts in tech are rare. Most failures in tech just result in companies shutting down or being acquired. The difference with AI infrastructure is the scale and the interconnectedness, which could create systemic risk.

What timeline is Warren expecting for profit?

Warren wants to understand OpenAI's own timeline. The company hasn't publicly committed to specific profitability targets. That's one reason Warren's request for projections through 2032 is relevant. She's trying to understand whether OpenAI itself believes profitability is achievable and on what timeline. If OpenAI can't provide credible projections, that's concerning.

Why does this matter for people not involved in AI or policy?

Because if OpenAI or other AI companies end up needing government support, taxpayers foot the bill. Additionally, how this plays out affects AI's development and deployment. If government becomes heavily involved in supporting AI infrastructure, that could shape what AI systems get built, who gets access to them, and how they're used. The policy questions now affect the technology and economics of AI for years to come.

Key Takeaways

- Warren's letter exposes a real structural risk: OpenAI's trillion-dollar spending commitment exceeds current revenue, creating financial fragility independent of the company's competitive position

- The core issue isn't whether OpenAI will directly request a bailout, but whether the financial architecture of the entire AI infrastructure layer makes government involvement inevitable if things deteriorate

- OpenAI shifted debt risk to infrastructure partners like CoreWeave, which have taken large loans to build specialized capacity, creating cascading failure risk if revenue assumptions don't materialize

- Warren's transparency demands are reasonable financial governance, forcing companies to model scenarios where AI adoption is slower than expected or where growth plateaus unexpectedly

- The February 13th, 2026 deadline creates real pressure for OpenAI to either provide credible financial projections or face escalating congressional scrutiny and potential regulatory action
