The Santa Monica Incident: What Happened and Why It Matters

On January 23, 2025, a Waymo robotaxi struck a child in Santa Monica, California, forcing regulators and safety experts to confront uncomfortable questions about autonomous vehicle safety in real-world environments. The incident was not a high-speed collision (the vehicle was traveling at under 6 mph at the moment of impact), but it happened during school drop-off hours near a school, with other children and a crossing guard present. The child sustained minor injuries. Although the outcome could have been far worse, the accident opened a window into how self-driving systems handle unpredictable human behavior in high-risk environments.

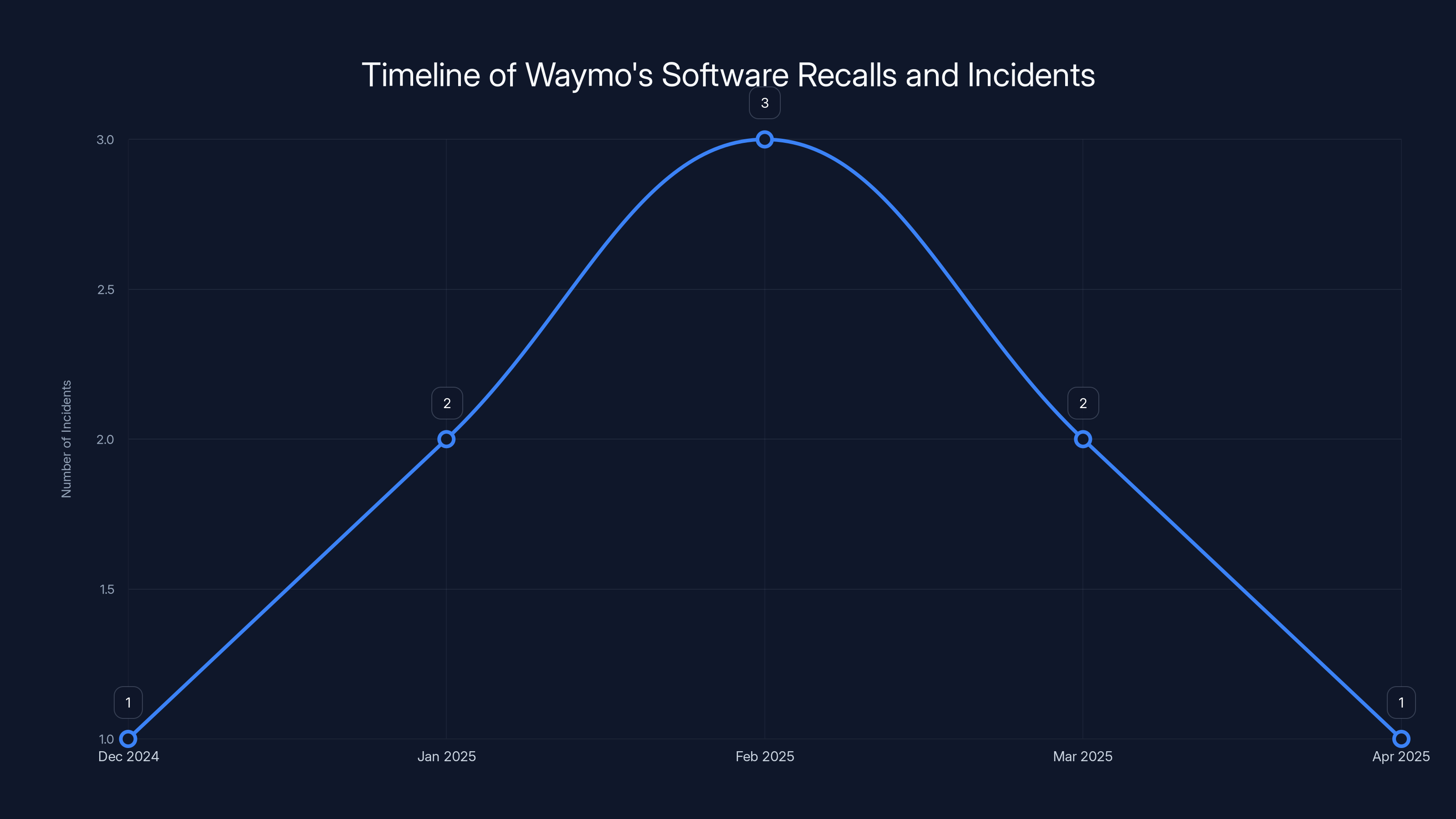

This was not the only school-related safety question facing Waymo. On the same day the incident occurred, the National Transportation Safety Board announced it was investigating Waymo over separate incidents in Austin, Texas, where the company's vehicles allegedly passed stationary school buses. Just weeks earlier, Waymo had issued a software update after regulators discovered similar school bus violations in both Austin and Atlanta. The timing raises a critical question: Is there a systemic issue with how Waymo's autonomous driving system perceives and responds to school zones, school buses, and children?

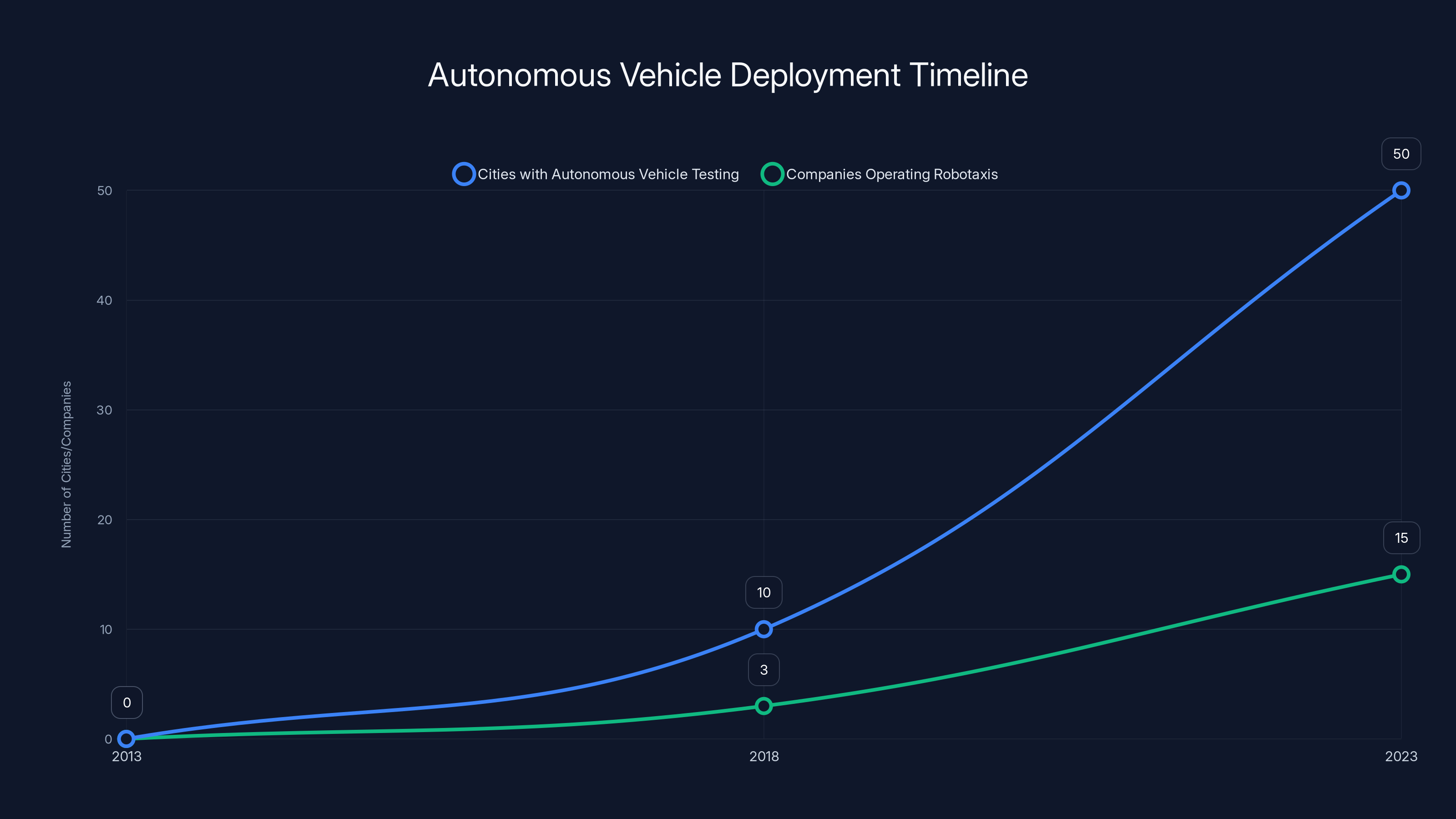

The robotaxi industry has moved at breakneck speed from testing to commercial deployment. Companies such as Waymo now operate hundreds of autonomous vehicles in major cities, accumulating millions of miles of real-world data. But this expansion has happened faster than regulatory frameworks could catch up, and faster than society could fully grapple with what safety actually means when a machine makes split-second decisions affecting human life. The Santa Monica incident forces us to examine whether current safety standards are sufficient, whether companies are prioritizing speed-to-market over pedestrian protection, and what role regulators should play in preventing future accidents.

The child ran out from behind a parked SUV, the kind of unpredictable human behavior that autonomous systems struggle with. According to Waymo, the Waymo Driver detected the child "immediately" and braked hard, cutting its speed from 17 mph to under 6 mph. But did "immediately" mean fast enough? Could the system have stopped completely? These details matter because they will determine whether Waymo was operating acceptably or whether the incident reveals genuine limitations in autonomous perception and decision-making. The investigation will examine not just what happened, but what Waymo's system was designed to do in scenarios exactly like this one.

Understanding How Waymo's Autonomous Driving System Works

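Waymo operates what is known as a Level 4 autonomous vehicle under the SAE J3016 taxonomy, meaning the system performs the entire driving task without human intervention within a defined operational design domain (ODD): specific geographic areas, road types, and conditions. The "Waymo Driver" is the proprietary software and hardware stack that enables this autonomy. Unlike Level 3 systems, which require a human to be ready to take over when the system requests it, a Level 4 system must handle edge cases, complex scenarios, and its own malfunctions independently, including bringing the vehicle to a safe stop when needed.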

The system relies on a sophisticated perception stack combining multiple sensor types. Lidar (light detection and ranging) creates a 3D map of the environment by emitting laser pulses and timing how long their reflections take to return. This gives the vehicle a detailed spatial understanding of everything around it. Cameras provide visual information and help identify traffic lights, signs, and visual cues that lidar alone can't interpret. Radar detects moving objects and their velocities, and is particularly useful in poor weather when other sensors might struggle. Together, these sensors feed data to machine learning models that classify objects (pedestrian, cyclist, vehicle, debris) and predict their future movements.
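At its core, lidar ranging is a time-of-flight calculation: the sensor times how long a laser pulse takes to return and halves the round trip. The minimal Python sketch below shows only that principle. The function name `lidar_range_m` is hypothetical, and production sensors, Waymo's included, add beam steering, multiple returns per pulse, and calibration that are not modeled here.

```python
# Speed of light in a vacuum, in metres per second (exact by SI definition).
SPEED_OF_LIGHT_M_S = 299_792_458.0


def lidar_range_m(round_trip_time_s: float) -> float:
    """Return the distance to a reflecting surface from a pulse's round-trip time.

    The pulse travels out and back, so the one-way distance is half of
    (speed of light x elapsed time). This ignores the slightly slower
    speed of light in air, which changes the result by well under 0.1%.
    """
    if round_trip_time_s < 0:
        raise ValueError("round-trip time must be non-negative")
    return SPEED_OF_LIGHT_M_S * round_trip_time_s / 2.0


# A reflection that arrives 100 nanoseconds after the pulse left the sensor
print(f"{lidar_range_m(100e-9):.2f} m")  # → 14.99 m
```

The nanosecond scale is the practical point: resolving distances to within a few centimeters requires timing returns to within a fraction of a nanosecond.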

The prediction layer is critical for safety. When the system detects a pedestrian, it doesn't just note their current position—it predicts where they'll be in the next few seconds based on their velocity, direction, and behavior patterns. If the system predicts a collision risk, the planning module decides how to respond: brake, swerve, or continue. Waymo's system is designed to be conservative, preferring to brake rather than maneuver because unpredictable steering could cause collisions with other objects or vehicles.
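The predict-then-check loop can be illustrated with the simplest possible predictor, a constant-velocity model, feeding a toy planner. This is a sketch under stated assumptions, not Waymo's method: production systems use learned models that output several possible trajectories with probabilities. The names `Track`, `first_conflict_s`, and `plan`, the 3-second horizon, the footprint dimensions, and the 2-second braking trigger are all hypothetical values chosen for illustration.

```python
from dataclasses import dataclass
from typing import Optional, Tuple


@dataclass
class Track:
    """A tracked object in a vehicle-centred frame (metres, metres per second)."""
    x: float   # position along the ego vehicle's direction of travel
    y: float   # lateral offset from the centre of the ego vehicle's lane
    vx: float
    vy: float


def predict(track: Track, t: float) -> Tuple[float, float]:
    """Constant-velocity prediction of the position t seconds from now."""
    return track.x + track.vx * t, track.y + track.vy * t


def first_conflict_s(ego: Track, ped: Track, horizon_s: float = 3.0,
                     step_s: float = 0.1, half_length_m: float = 2.5,
                     half_width_m: float = 1.2) -> Optional[float]:
    """Earliest sampled time at which the pedestrian's predicted position (treated
    as a point) lies inside the ego vehicle's predicted footprint, else None."""
    for i in range(int(round(horizon_s / step_s)) + 1):
        t = i * step_s
        ex, ey = predict(ego, t)
        px, py = predict(ped, t)
        if abs(px - ex) <= half_length_m and abs(py - ey) <= half_width_m:
            return t
    return None


def plan(conflict_s: Optional[float], brake_within_s: float = 2.0) -> str:
    """Toy planner: brake if a conflict is predicted soon, otherwise keep going."""
    if conflict_s is not None and conflict_s <= brake_within_s:
        return "brake"
    return "continue"


ego = Track(x=0.0, y=0.0, vx=7.6, vy=0.0)        # about 17 mph
child = Track(x=15.0, y=3.0, vx=0.0, vy=-1.5)    # moving toward the lane
t = first_conflict_s(ego, child)
print(round(t, 1), plan(t))                      # → 1.7 brake
```

In this made-up example the vehicle travels at 7.6 m/s (about 17 mph) and the pedestrian is predicted to reach its footprint 1.7 seconds ahead, so the toy planner brakes. The check is sampled every 0.1 s and ignores the pedestrian's size, simplifications a real system would not make.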

In the Santa Monica incident, the child emerged suddenly from behind a parked vehicle, exactly the kind of scenario these systems are tested against. The vehicle had to detect a previously hidden pedestrian as soon as they became visible and brake hard enough to avoid serious injury. It achieved that much, since the child's injuries were minor, but the investigation will examine whether the system could have done better. Was the detection truly "immediate," or was there a perceptual delay? Could the vehicle have slowed preemptively, given that it was near a school during drop-off hours?

Waymo's training data has accumulated over millions of miles of testing in Phoenix, San Francisco, Los Angeles, and other cities. The system has encountered countless edge cases and learned from them. But machine learning models sometimes fail in ways that are hard to predict. They might excel at recognizing adult pedestrians but perform worse on children, who are smaller and move unpredictably. They might struggle with certain weather conditions, lighting environments, or unusual object configurations. The regulators investigating this incident will want to know whether the system had adequate training for school zone scenarios specifically.

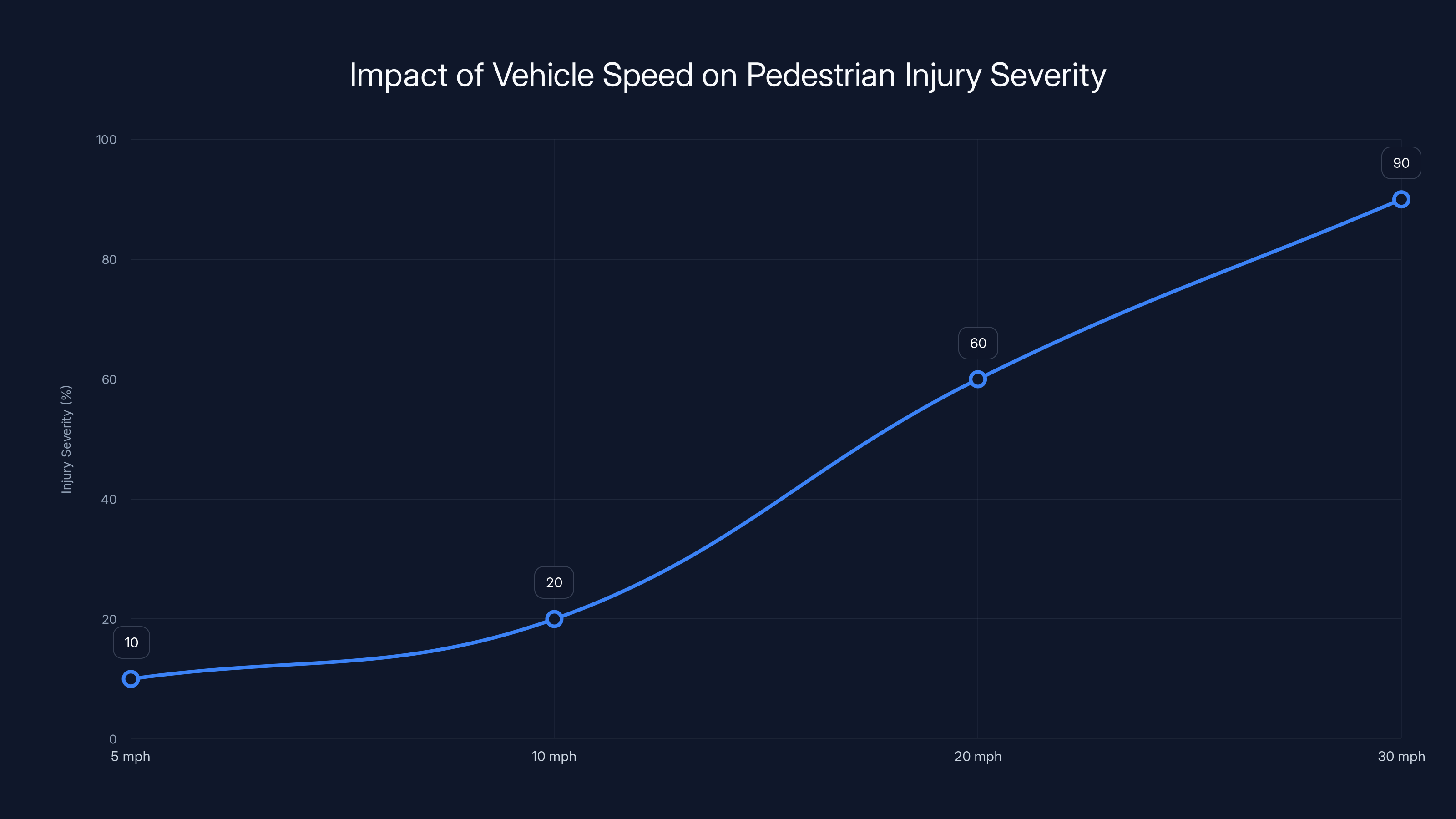

The Waymo robotaxi reduced its speed from 17 mph to under 6 mph before impact, demonstrating its braking capability in an emergency. Estimated data based on incident description.

Why School Zones Present Unique Challenges for Autonomous Vehicles

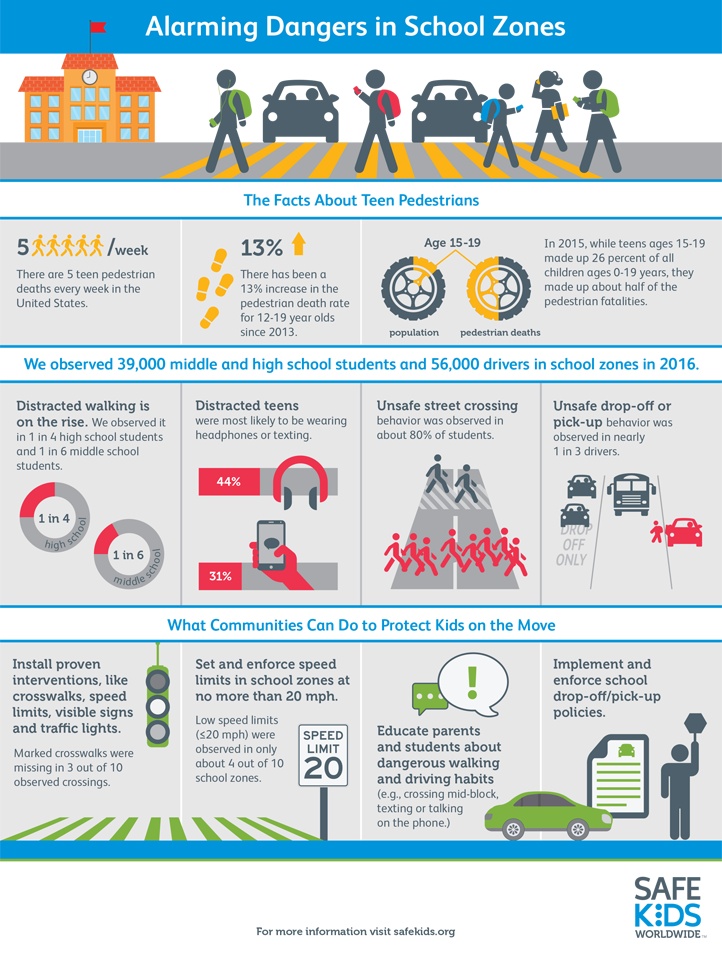

School zones are among the most complex and dangerous driving environments in urban areas. Multiple factors create hazards that test autonomous systems in ways suburban or highway driving doesn't. Children are unpredictable—they run into streets without looking, they assume cars will stop for them, they move in ways adults don't. Pedestrian density is high during drop-off and pick-up hours, with parents, crossing guards, buses, and delivery vehicles all competing for space. The environment changes seasonally and by time of day, requiring adaptive responses.

For human drivers, school zones create heightened awareness. You see the bright yellow signs, the reduced speed limits, the flashing lights on buses, and the crossing guards, and your threat awareness automatically increases. You anticipate that a child might dart into traffic. You drive slowly and defensively. Years of experience and social conditioning have taught you that school zones are danger zones.

Autonomous systems don't have this intuitive understanding. They process school zones as data: a set of map markers indicating reduced speed limits, a classification for "school" locations, perhaps some training examples of school zone scenarios. But do they truly understand the behavioral differences between school zone pedestrians and other pedestrians? Do they weight the risk differently? Can they recognize a school zone by visual cues alone if GPS data is incorrect?

The NHTSA investigation specifically mentions examining "the intended behavior of the vehicle's automated driving systems around schools, particularly during regular pick-up and drop-off times." This phrasing suggests regulators are questioning whether Waymo's system was properly designed and calibrated for school zone operation. Did Waymo program the system to be more conservative in school zones? Should it reduce speed preemptively in these areas? Should it anticipate children with a higher baseline probability than in other areas?

The concurrent investigation into school bus passing violations suggests a pattern. In Austin and Atlanta, Waymo vehicles allegedly passed stationary school buses, which is a serious violation. School buses receive special legal protection: when a bus is loading or unloading children with its red lights flashing and stop arm extended, traffic in both directions must generally stop, although many states exempt oncoming traffic on a road divided by a median or barrier. Autonomous systems should be able to recognize the distinctive yellow bus, the octagonal stop arm, and the flashing red lights, and stop every time the law requires it. The fact that multiple Waymo vehicles violated this rule suggests either a perception failure (the system didn't recognize the bus or its signals) or a planning failure (the system recognized them but decided to pass anyway).
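The distinction between a perception failure and a planning failure can be made concrete by separating what the vehicle perceived from the rule it applied. The sketch below is hypothetical throughout: `BusObservation`, `must_stop`, and `diagnose` are illustrative names, and the stop rule is simplified, since exemptions for divided roads differ from state to state.

```python
from dataclasses import dataclass


@dataclass
class BusObservation:
    """What the perception stage reports about a nearby school bus."""
    detected: bool
    red_lights_flashing: bool
    stop_arm_extended: bool


def must_stop(obs: BusObservation, oncoming: bool, physically_divided: bool) -> bool:
    """Simplified stop rule. Many U.S. states exempt traffic travelling in the
    opposite direction on a physically divided road; details vary by state."""
    signalling = obs.red_lights_flashing or obs.stop_arm_extended
    if not (obs.detected and signalling):
        return False
    return not (oncoming and physically_divided)


def diagnose(should_have_stopped: bool, perceived: BusObservation,
             oncoming: bool, physically_divided: bool, stopped: bool) -> str:
    """Attribute a violation to perception or planning (hypothetical taxonomy)."""
    if not should_have_stopped or stopped:
        return "no violation"
    if not must_stop(perceived, oncoming, physically_divided):
        return "perception failure"  # the rule never fired on what was perceived
    return "planning failure"        # the rule fired, yet the vehicle passed


seen = BusObservation(detected=True, red_lights_flashing=True, stop_arm_extended=True)
missed = BusObservation(detected=True, red_lights_flashing=False, stop_arm_extended=False)
print(diagnose(True, seen, oncoming=False, physically_divided=False, stopped=False))    # → planning failure
print(diagnose(True, missed, oncoming=False, physically_divided=False, stopped=False))  # → perception failure
```

Note that a bus detected without its signals (the `missed` case) is labeled a perception failure even though the bus itself was seen; a finer-grained diagnosis would distinguish a missed bus from missed lights or a missed stop arm.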

Waymo issued a software recall (a remote update) after the school bus incidents, suggesting the company identified and fixed whatever caused the violations. But this raises a concerning question: How many times must a safety issue be discovered and fixed before it indicates a systemic problem in design or testing? If multiple vehicles independently passed school buses, it suggests the issue wasn't a one-off sensor glitch in a single vehicle, but rather something about how the system classifies or responds to school buses generally.

Injury severity increases sharply with speed. At 30 mph, pedestrians have only a 50% chance of survival. Estimated data based on typical crash outcomes.

The Regulatory Response: NHTSA and NTSB Investigations Explained

Two separate federal agencies are now examining Waymo's operations, and understanding what each does is critical to understanding what accountability might look like. The National Highway Traffic Safety Administration (NHTSA) is the federal agency responsible for setting and enforcing vehicle safety standards, investigating defects, and issuing recalls. The National Transportation Safety Board (NTSB) is an independent agency that investigates accidents and safety issues to determine probable causes and issue safety recommendations, though it has limited enforcement power.

The NHTSA's Office of Defects Investigation has opened a formal investigation into the Santa Monica incident. Formal investigations follow a specific process: the agency collects data, interviews relevant parties, analyzes the incident, and determines whether a safety defect exists. If they find a pattern of failures or safety issues, they can order recalls or impose penalties on manufacturers. The NHTSA investigation will examine not just what happened in Santa Monica, but whether the Waymo Driver's behavior in school zones represents a broader defect affecting multiple vehicles.

The NTSB investigation into the school bus passing incidents operates differently. The NTSB's primary role is determining probable cause and making safety recommendations, not taking enforcement action. However, its investigations carry significant weight with regulators and the public. If the NTSB finds that Waymo's system has a systematic failure in recognizing or responding to school buses, that finding would put strong pressure on NHTSA to act.

What's notable about both investigations is their specificity. Regulators aren't just examining whether an accident occurred, but whether the system's design or programming was appropriate for the environment where it was operating. The NHTSA asks: Given that the vehicle was near a school during drop-off hours with other children and a crossing guard nearby, did the Waymo Driver use appropriate caution? This is a design question, not just a performance question. It assumes that operating near schools with children present should trigger different behavior than operating in other environments.

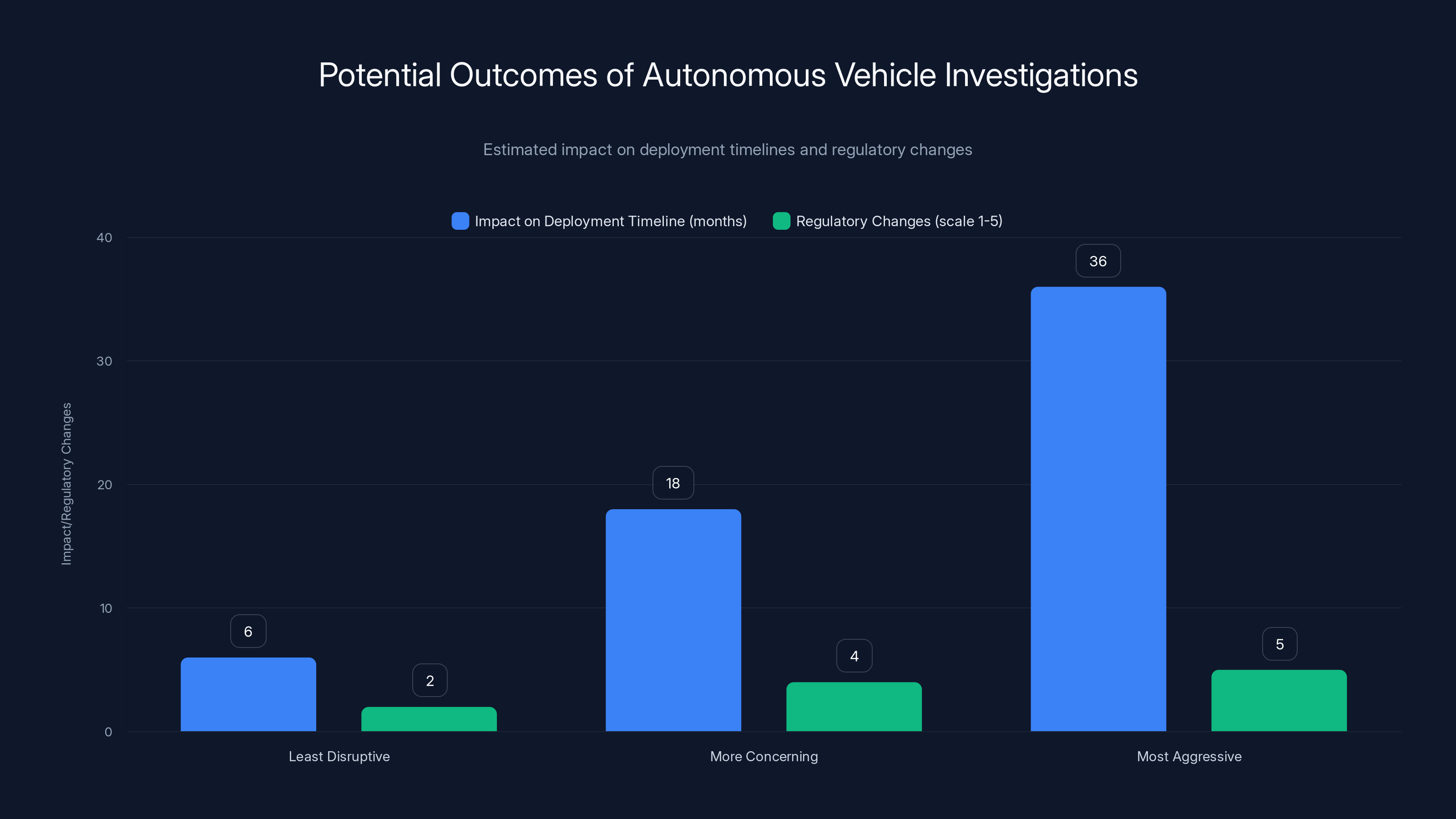

For Waymo, these investigations carry real consequences. If NHTSA determines the system has a defect, it could mandate a recall. If the recall is significant, it could delay or halt Waymo's deployment expansion. If the NTSB issues strong safety recommendations, it creates regulatory and public pressure to implement changes. Waymo operates in multiple cities under permits from state and local regulators, and any federal safety finding could complicate that status.

The Child's Perspective: Unpredictable Pedestrian Behavior and System Limitations

The incident began with one of the most common scenarios in crashes involving child pedestrians: a child running into traffic from behind a parked vehicle. This is a fundamental challenge for autonomous systems because the child enters the vehicle's detection range suddenly and without warning. The child was not in a designated pedestrian area, was not crossing at a marked intersection, and was not visible on approach; they emerged suddenly from an occluded space.

From the perspective of autonomous system design, this scenario is inherently difficult, which is why autonomous vehicles are tested extensively against it. Manufacturers use simulation, controlled testing, and real-world data to improve how systems detect and respond to suddenly visible pedestrians. But there is a hard limit to how quickly any vehicle can stop, even from 17 mph: physics does not allow instant deceleration.

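At 17 mph (about 25 feet per second), braking distance alone on dry pavement is only about 12 to 15 feet. The often-quoted total of roughly 45 to 50 feet includes a typical human perception-reaction time of about 1.5 seconds, during which the vehicle covers about 37 feet before the brakes engage. Because the Waymo vehicle did not stop completely, the child must have entered its path at less than its full stopping distance (including the time needed to detect the child and begin braking). That it still shed most of its speed, from 17 mph to under 6 mph, suggests braking began soon after the child became visible. The child sustained minor injuries, which indicates that the impact at under 6 mph was survivable, a best-case outcome given the circumstances.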
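The stopping-distance arithmetic is easy to check. The sketch below splits stopping distance into a reaction part (distance covered at constant speed before braking starts) and a braking part, v^2 / (2a). The 0.7 g deceleration and the 1.5-second reaction time are assumptions, common textbook values for an emergency stop on dry pavement and for a human driver, not measurements from this incident; the function name is hypothetical.

```python
G_FT_S2 = 32.174            # standard gravity, feet per second squared
MPH_TO_FT_S = 5280 / 3600   # 1 mph is about 1.467 ft/s


def stopping_distance_ft(speed_mph: float, reaction_s: float, decel_g: float) -> tuple:
    """Split stopping distance into reaction distance (constant speed while
    the driver or system responds) and braking distance v^2 / (2a)."""
    v = speed_mph * MPH_TO_FT_S
    reaction = v * reaction_s
    braking = v ** 2 / (2 * decel_g * G_FT_S2)
    return reaction, braking, reaction + braking


for label, reaction_s in [("1.5 s human-like reaction", 1.5), ("braking only", 0.0)]:
    r, b, total = stopping_distance_ft(17, reaction_s, decel_g=0.7)
    print(f"{label}: {r:.1f} + {b:.1f} = {total:.1f} ft")
# → 1.5 s human-like reaction: 37.4 + 13.8 = 51.2 ft
# → braking only: 0.0 + 13.8 = 13.8 ft
```

Most of the distance at this speed is consumed before the brakes engage, which is why detection latency matters as much as braking performance.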

But this raises a design question: Should Waymo's system be traveling at 17 mph in a school zone during drop-off hours? School zone speed limits in the United States typically range from 15 to 25 mph when children are present (California's default is 25 mph), so the vehicle was most likely within the legal limit. However, some safety experts argue that autonomous vehicles should self-impose even lower speeds in school zones as a precautionary measure. If the Waymo Driver had been traveling at 10 mph instead of 17 mph, the impact speed would have been lower, likely reducing injury severity.

There's also the question of prior speed reduction. As the vehicle approached the school zone, did the system reduce speed preemptively? Did it anticipate higher pedestrian risk and adjust its driving style accordingly? Human drivers do this automatically—you see a school zone sign and slow down in advance, increasing your reaction time buffer. Did Waymo's system do something similar, or did it only respond to actual detected pedestrians?

Children are also a challenging edge case for machine learning systems in ways that might not be immediately obvious. They are smaller, so they are more easily hidden behind parked cars and return fewer sensor points at a given distance. They move with different gaits and speeds. And they are less predictable: adults generally follow traffic rules and social norms, while children often do not. A system trained primarily on adult pedestrian data might not perform as well on children. Waymo says it has tested extensively on edge cases, but the question is whether school zones and child pedestrians received specific, targeted training attention.

Estimated data shows that the most aggressive outcome could delay deployment by up to 36 months with significant regulatory changes, while the least disruptive outcome might only cause minor delays and adjustments.

Software Recalls and the Pattern of School Bus Violations

Waymo's voluntary software recall in December 2024, issued in response to the school bus incidents, represents how autonomous vehicle safety is supposed to work in theory: detect a problem, fix it, deploy the fix. But the existence of the recall raises important questions about testing and validation. Why did the school bus violation problem exist in the first place? How many times did it occur before being detected? How confident is Waymo that the fix actually resolves the underlying issue?

Software recalls for autonomous vehicles differ fundamentally from traditional vehicle recalls. Traditional recalls typically address hardware defects that require each affected vehicle to be brought in for repair. Software recalls can be pushed to an entire fleet through over-the-air updates, and installation can be confirmed remotely, although confirming that a fix actually changes on-road behavior still requires testing. This is faster and more efficient than a physical recall. But it also means that problems that might once have been caught before vehicles left the factory now depend on detection and response after deployment.

The school bus incidents occurred in Austin and Atlanta, suggesting either the issue was fleet-wide or Waymo was operating in these cities with inadequate testing of school bus scenarios. If it was fleet-wide, the fix was necessary and appropriate. If it was localized, it raises questions about how Waymo validates its system in new cities before deployment. Did Waymo test school bus interactions in the real world before operating in Austin? How long did the problem persist before being detected?

The timing of the school bus recall and the Santa Monica incident suggests that 2024-2025 has been a difficult period for Waymo's safety record. Any single incident might be dismissed as a fluke. Multiple incidents in a short timeframe suggest either a genuine pattern or an increase in incident detection and reporting. Either way, they are creating regulatory and public scrutiny that could affect Waymo's expansion plans.

For the autonomous vehicle industry more broadly, these recalls are significant because they demonstrate that even sophisticated systems can fail at specific tasks. Recognizing and obeying school bus stop signs should be one of the easiest tasks an autonomous vehicle learns—it's a simple visual recognition problem with clear rules. If Waymo's system failed at this task, what other tasks might it fail at? What's the baseline level of performance that customers and regulators should expect?

Regulatory Framework: Are Current Safety Standards Adequate?

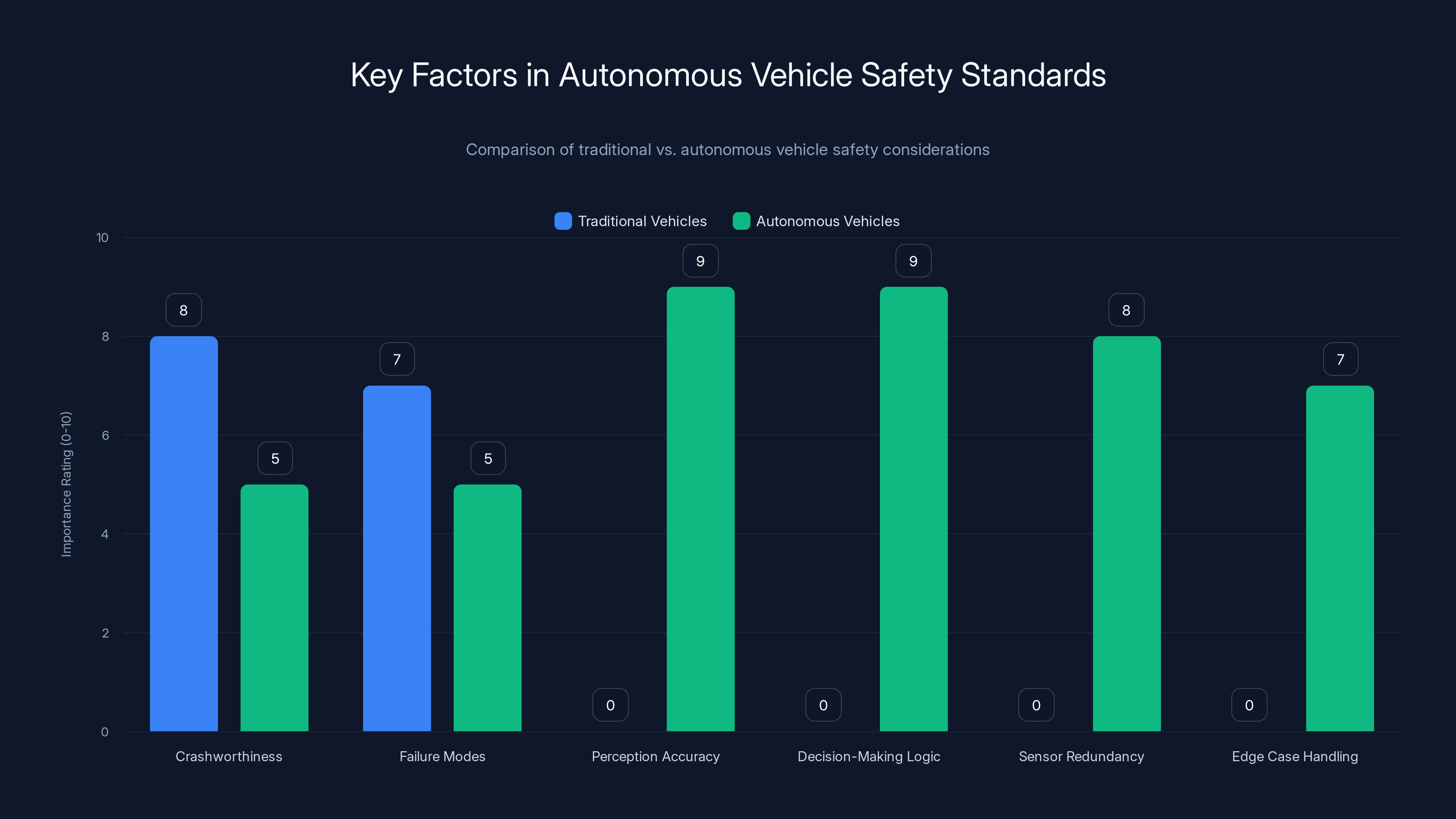

The investigations into Waymo highlight a critical gap in autonomous vehicle regulation: safety standards designed for human drivers don't always translate perfectly to autonomous systems. Traditional vehicle safety testing focuses on crashworthiness (how well a vehicle protects occupants in a crash) and failure modes (what happens when brakes fail, steering locks, etc.). Autonomous vehicle safety adds new dimensions: perception accuracy, decision-making logic, sensor redundancy, and edge case handling.

Current federal standards do not specifically address how autonomous vehicles should behave in school zones or around school buses. The Federal Motor Vehicle Safety Standards govern vehicle equipment and performance rather than driving behavior, rules about stopping for school buses come from state traffic law, and NHTSA's guidance for automated driving systems consists largely of voluntary recommendations and best practices rather than binding requirements. Waymo operates under a patchwork of federal guidelines, state regulations, and local permits. Each jurisdiction may have different requirements for testing, validation, and operational constraints. This fragmented approach works while autonomous vehicles are limited to specific cities, but as deployment scales, regulators will need more comprehensive national standards.

The investigations themselves set precedent. By examining whether Waymo's system used "appropriate caution" in a school zone, the NHTSA is establishing that autonomous vehicles will be held to safety standards that account for environmental context, not just adherence to traffic laws. A vehicle can technically drive at the speed limit and still fail to exercise appropriate caution if additional hazards suggest more conservative behavior. This is a significant standard because it requires autonomous systems to do more than follow rules—they must demonstrate judgment about risk and adapt accordingly.

International regulatory bodies are struggling with similar questions. In Europe, the EU is developing guidelines for autonomous vehicle safety that emphasize transparency, testing, and continuous monitoring. In China, autonomous vehicle deployment is proceeding with less regulatory oversight, prioritizing speed-to-market. These different approaches will affect which companies can operate where and what safety standards become industry norms.

The ideal regulatory framework would require autonomous vehicles to demonstrate performance against specific test scenarios before deployment, continuous monitoring during operation, rapid incident reporting and investigation, and clear manufacturer accountability for safety. But defining and implementing such standards is politically and technically challenging. Vehicle manufacturers, tech companies, safety advocates, and regulators all have different interests. The current investigations into Waymo will help shape what that framework looks like.

The timeline shows an increase in incidents around early 2025, highlighting a challenging period for Waymo's safety record. Estimated data.

Pedestrian Safety: How Speeds Affect Injury Outcomes

The fact that the child in the Santa Monica incident sustained only minor injuries, despite being struck by a vehicle, was not simply luck; it reflects physics. The relationship between vehicle speed and pedestrian injury severity is well established through research and crash data. Lower speeds substantially reduce injury severity, and the difference between 6 mph and 17 mph is meaningful.
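One way to quantify why the difference matters is kinetic energy, which grows with the square of speed. The short sketch below (the function name is hypothetical) computes the ratio; energy is only a rough proxy for injury, which also depends on the vehicle's front shape, where the body is struck, and the pedestrian's size.

```python
def kinetic_energy_ratio(v_low: float, v_high: float) -> float:
    """Ratio of kinetic energies for the same vehicle at two speeds.

    KE = m * v**2 / 2, so the mass cancels and only the squared speed ratio
    remains. Units cancel too, so mph works as well as m/s.
    """
    return (v_low / v_high) ** 2


print(f"{kinetic_energy_ratio(6, 17):.1%}")  # → 12.5%
```

At 6 mph, the vehicle carried roughly one-eighth of the kinetic energy it had at 17 mph.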

At speeds below 10 mph, pedestrians struck by vehicles typically sustain relatively minor injuries such as bruising, sprains, and occasionally fractures. Risk climbs steeply above about 20 mph. Widely cited older estimates put a pedestrian's chance of surviving a 30 mph impact at only about 50 percent, while a 2011 AAA Foundation for Traffic Safety analysis of U.S. crash data estimated lower risks, with the chance of death reaching 50 percent at around 42 mph. Exact figures vary by study, pedestrian age, and vehicle type, but every major dataset shows the same steep rise with speed. These figures come from studies of real crashes, not just theory.

The Waymo vehicle reduced speed from 17 mph to under 6 mph before impact, which was the critical safety factor in this incident. Braking hard to slow down likely prevented the incident from being severe. But this also raises the question: could the system have done better? If it had been traveling at 10 mph initially, would it have avoided the collision entirely? If it had detected the child even slightly earlier, could it have achieved greater speed reduction?
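Both counterfactual questions can be explored with basic kinematics. The sketch below first backs out the gap between the car and the child that would produce a 17-to-6 mph slowdown, then replays that gap with a lower starting speed or a shorter delay before braking. The 0.3-second detection-to-braking latency and 0.7 g deceleration are hypothetical inputs chosen for illustration, not figures from Waymo or the investigation, and the function names are likewise hypothetical.

```python
import math

G_FT_S2 = 32.174            # standard gravity, ft/s^2
MPH_TO_FT_S = 5280 / 3600   # 1 mph in ft/s


def distance_to_slow_ft(initial_mph: float, final_mph: float,
                        latency_s: float, decel_g: float) -> float:
    """Distance covered going from initial_mph to final_mph: constant speed
    for latency_s (detection-to-braking delay), then constant deceleration."""
    v0, v1 = initial_mph * MPH_TO_FT_S, final_mph * MPH_TO_FT_S
    return v0 * latency_s + (v0 ** 2 - v1 ** 2) / (2 * decel_g * G_FT_S2)


def impact_speed_mph(initial_mph: float, gap_ft: float,
                     latency_s: float, decel_g: float) -> float:
    """Speed on reaching an obstacle gap_ft ahead; 0.0 means the car stopped short."""
    v0 = initial_mph * MPH_TO_FT_S
    braking_room_ft = gap_ft - v0 * latency_s
    if braking_room_ft <= 0:
        return float(initial_mph)     # obstacle reached before braking began
    v_sq = v0 ** 2 - 2 * decel_g * G_FT_S2 * braking_room_ft
    return math.sqrt(v_sq) / MPH_TO_FT_S if v_sq > 0 else 0.0


# Hypothetical inputs, NOT incident data: 0.3 s latency and 0.7 g braking.
LATENCY_S, DECEL_G = 0.3, 0.7
gap = distance_to_slow_ft(17, 6, LATENCY_S, DECEL_G)  # gap that yields 17 -> 6 mph

print(f"implied gap: {gap:.1f} ft")
print("start at 17 mph:", round(impact_speed_mph(17, gap, LATENCY_S, DECEL_G), 1))
print("start at 10 mph:", round(impact_speed_mph(10, gap, LATENCY_S, DECEL_G), 1))
print("17 mph, 0.1 s faster:", round(impact_speed_mph(17, gap, LATENCY_S - 0.1, DECEL_G), 1))
```

Under these assumed values, the implied gap is about 19.6 feet, and either starting at 10 mph or braking 0.1 seconds sooner brings the car to a stop before reaching the child. The more robust takeaway is how small the margin is: at 0.7 g, shedding the last 6 mph takes only v^2 / (2a), about 1.7 feet, so a very small change in timing or speed separates a low-speed impact from no impact at all.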

For autonomous vehicle design, speed selection in complex environments is crucial. The vehicle was following posted speed limits and traffic laws, which is the minimum requirement. But best practice for autonomous vehicles operating in pedestrian-dense areas (school zones, residential neighborhoods, downtown areas) is often to self-impose lower speeds than legal limits suggest. This provides additional safety margin for unexpected events.

Some autonomous vehicle companies implement what's called "speed buffering" in high-risk environments. The system identifies areas where pedestrian density is high, visibility is limited, or children are likely to be present, and it automatically reduces speed below legal limits. This is particularly effective in school zones during drop-off and pick-up hours. Waymo presumably has similar systems, but the investigation will examine whether they were operating optimally during this incident.
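One principled way to implement this kind of buffering is the "assured clear distance ahead" idea from traffic law: drive no faster than a speed from which the vehicle could stop within the stretch of road it can confirm is clear, such as the distance to the edge of a parked SUV that could hide a child. The sketch below solves v·t + v^2 / (2a) = d for that speed and adds an extra school-zone margin. It is a hypothetical policy, not Waymo's: the function names, the 0.3-second latency, the 6 m/s^2 deceleration, and the 0.8 school-zone factor are all illustrative assumptions.

```python
import math

MPH_TO_M_S = 0.44704   # exact: 1 mph = 0.44704 m/s


def sight_limited_speed_m_s(clear_distance_m: float, latency_s: float,
                            decel_m_s2: float) -> float:
    """Highest speed from which the vehicle can stop within clear_distance_m.

    Solves v * latency + v**2 / (2 * decel) = clear_distance for v >= 0
    (the positive root of the quadratic).
    """
    t, a, d = latency_s, decel_m_s2, clear_distance_m
    return a * (-t + math.sqrt(t * t + 2 * d / a))


def buffered_limit_mph(legal_limit_mph: float, clear_distance_m: float,
                       school_zone_active: bool, latency_s: float = 0.3,
                       decel_m_s2: float = 6.0, school_factor: float = 0.8) -> float:
    """Hypothetical speed-buffering policy: stay under the legal limit and the
    sight-limited speed, then apply an extra margin in an active school zone."""
    sight_mph = sight_limited_speed_m_s(clear_distance_m, latency_s, decel_m_s2) / MPH_TO_M_S
    cap = min(legal_limit_mph, sight_mph)
    return cap * school_factor if school_zone_active else cap


# Hypothetical: 6 m from the car to the nearest point where a pedestrian could
# step out from behind a parked vehicle, in a 25 mph zone.
print(round(buffered_limit_mph(25, 6.0, school_zone_active=False), 1))
print(round(buffered_limit_mph(25, 6.0, school_zone_active=True), 1))
```

With these illustrative inputs, 6 meters of confirmed-clear road caps speed at roughly 15 mph, or about 12 mph with the school-zone margin, both well below the 25 mph legal limit.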

Crossing Guards and Community Safety: The Human Element

The NHTSA's description of the incident notes that a crossing guard was nearby when the child was struck. Crossing guards are positioned in school zones to provide additional human oversight and protection, directing children safely across streets and watching for hazards. The presence of a crossing guard during a vehicular incident raises questions about whether human oversight can or should play a role in autonomous vehicle safety.

Crossing guards are trained to look for vehicles approaching, to make eye contact with drivers, and to hold children back if they detect threats. They're effective at reducing pedestrian accidents because they add a second layer of awareness and authority. But they're watching for human drivers, who they can make eye contact with and who will respond to hand signals and warnings. Would a crossing guard's presence affect how an autonomous vehicle behaves? Should it?

From a safety system design perspective, there's value in enabling human observers to communicate with autonomous vehicles. Imagine if crossing guards had a button or signal they could use to alert nearby autonomous vehicles that children were present. Or if autonomous vehicles could somehow indicate to crossing guards that they recognized the high-risk situation and were proceeding with caution. These human-machine coordination tools could improve safety in school zones specifically.

But such systems would require additional infrastructure and standardization. Every school crossing would need equipment to communicate with vehicles, and every vehicle would need systems to receive that communication. That cost and complexity are major reasons such systems have not been deployed. Instead, autonomous vehicles are expected to use their own perception systems to recognize school zones, detect children, and behave appropriately, without relying on external signals.

The challenge with this approach is that autonomous systems perceive differently than humans. A crossing guard sees a child who looks nervous or distracted and can anticipate they might run into traffic. Autonomous systems don't have that intuitive behavioral prediction. They can detect when a child enters the roadway, but they can't foresee it the same way a human guardian might. This mismatch between human and machine perception is part of what makes autonomous vehicles in school zones so challenging.

Autonomous vehicles require additional safety considerations beyond traditional metrics, such as perception accuracy and decision-making logic. Estimated data based on industry insights.

Comparative Analysis: How Other Autonomous Vehicle Companies Address School Zones

Waymo isn't the only company that has tried to operate autonomous vehicles in complex environments. Cruise, Aurora, and others have faced the same challenge of operating safely. Understanding how competitors have approached safety provides context for evaluating Waymo's approach.

Cruise has faced its own regulatory challenges. In October 2023, California regulators suspended Cruise's driverless permits in San Francisco after one of its vehicles dragged a pedestrian who had been knocked into its path by another car; earlier that year, Cruise vehicles had also been involved in incidents with emergency vehicles. None of these incidents involved school zones, but they revealed gaps in how Cruise's system handled injured pedestrians and emergency responders. Cruise later resumed limited supervised testing, but in December 2024 GM announced it would stop funding Cruise's robotaxi development. The trajectory partly mirrors what is happening with Waymo (identify failures, fix them, refine the approach) while showing how costly a serious failure can be.

Aurora, a company developing autonomous vehicle technology for trucking and robotaxi applications, has taken a more cautious approach to deployment, focusing initially on trucking where environments are more controlled. Aurora's strategy suggests that even sophisticated companies recognize that urban autonomous driving has significant validation challenges that require time and careful testing.

These companies are all operating with the understanding that school zones and children represent special safety cases. But without national standards specifying how to handle them, each company develops its own approach. This creates inconsistency: Waymo's school zone behavior might differ significantly from Cruise's, and both differ from human drivers' behavior. For passengers and pedestrians, this inconsistency creates uncertainty about what to expect.

The investigation into Waymo's incidents will likely establish de facto standards. If regulators determine that Waymo's system failed to meet safety expectations in school zones, they'll either mandate changes or effectively prevent Waymo from operating there. Either way, Waymo's corrective actions become a reference point for other companies. If Waymo implements more aggressive speed reduction in school zones, other companies will likely follow to remain competitive and to preempt regulatory action.

Liability and Insurance: Who Bears Responsibility?

When a conventional vehicle hits a pedestrian, the liability question usually centers on the human driver's conduct, and the driver's insurance typically covers the damages. But when an autonomous vehicle hits a pedestrian, the liability question becomes complex. Is the manufacturer (Waymo) responsible? The operator of the robotaxi service? The owner of the vehicle? The city that permitted its operation? Insurance and liability frameworks were designed for human-driven vehicles and don't map perfectly to autonomous systems.

Waymo operates its robotaxis as a ride-hailing service, similar to Uber or Lyft but with the system handling all driving. The company is responsible for vehicle maintenance, software updates, and operational decisions. This suggests Waymo bears primary liability for vehicle safety. The company carries insurance covering incidents and will be responsible for damages in incidents where the vehicle is found at fault.

For autonomous vehicles, manufacturers typically argue they can't be held liable for every possible outcome because their role is limited to building and maintaining the system. But regulators are increasingly holding manufacturers accountable for safety outcomes. NHTSA can mandate recalls and impose penalties on manufacturers for safety defects, and civil lawsuits can hold manufacturers liable for injury or death when a defect is shown to have caused it.

Waymo's financial resources allow the company to absorb liability for incidents and compensation. Smaller companies or companies operating with thinner margins might find liability costs prohibitive. This creates a barrier to entry in the autonomous vehicle market that advantages well-funded companies like Waymo. Over time, as autonomous vehicle safety improves and the risk profile becomes clearer, insurance and liability costs will become more predictable, potentially favoring newer market entrants.

The Santa Monica incident may result in compensation to the child's family for medical expenses and any ongoing care. Whether Waymo is legally at fault will depend on the investigation's findings. If the Waymo Driver responded appropriately, the child's sudden entry into the road could be treated as the main cause, although courts generally judge young children's conduct by a more lenient standard than adults'. If the system failed to respond appropriately, Waymo bears responsibility. Most likely, the determination will be nuanced: the child's sudden emergence was a contributing factor, but the adequacy of the system's response will also be evaluated.

The deployment of autonomous vehicles has accelerated over the past decade, with a significant increase in the number of cities hosting testing and companies operating robotaxis. Estimated data.

Technological Solutions: Advanced Perception and Prediction Systems

There are multiple technological approaches that could improve autonomous vehicle safety in school zones and around pedestrians generally. Advanced perception systems, better prediction models, and enhanced decision-making logic all offer potential improvements. Understanding these technologies provides insight into what Waymo might implement in response to these investigations.

Improved perception systems could include higher-resolution cameras, additional sensors, or better sensor fusion algorithms. Some researchers are experimenting with thermal imaging cameras that can detect human body heat, which would work even in poor lighting or weather. Other approaches use millimeter-wave radar more aggressively to detect moving objects at greater distances. Waymo likely already uses advanced versions of these technologies, but improvements in detection accuracy or range could reduce response times.

Behavioral prediction models are another avenue. Rather than assuming pedestrians will maintain their current trajectory, advanced systems could predict likely future movements based on gaze direction, body orientation, and movement patterns. If a system could predict that a child appears about to enter the roadway, it could begin braking preemptively rather than waiting for the child to actually enter the street. This is difficult with current systems but not impossible—it's an active area of research.
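A toy version of cue-based intent prediction combines a few observable signals into a probability with a logistic function and begins braking when that probability crosses a threshold. Everything in the sketch below is hypothetical: the cue set, the hand-picked weights, the 0.5 threshold, and the names `PedestrianCues`, `crossing_probability`, and `pre_brake`. Research systems learn such models from large labeled datasets, often with neural networks rather than a single logistic layer.

```python
import math
from dataclasses import dataclass


@dataclass
class PedestrianCues:
    facing_road: float              # 0 = facing away, 1 = squarely facing the roadway
    looking_at_vehicle: bool        # gaze cue: has the pedestrian seen the car?
    speed_toward_road_m_s: float    # component of walking speed toward the curb
    distance_to_curb_m: float


# Hand-picked, hypothetical weights for illustration; a real model would be
# trained on labeled pedestrian behavior, not tuned by hand.
WEIGHTS = {"bias": -2.0, "facing_road": 2.0, "looking_at_vehicle": -1.0,
           "speed_toward_road": 1.5, "distance_to_curb": -1.0}


def crossing_probability(c: PedestrianCues) -> float:
    """Logistic (sigmoid) score combining cues into a probability of entering the road."""
    z = (WEIGHTS["bias"]
         + WEIGHTS["facing_road"] * c.facing_road
         + WEIGHTS["looking_at_vehicle"] * float(c.looking_at_vehicle)
         + WEIGHTS["speed_toward_road"] * c.speed_toward_road_m_s
         + WEIGHTS["distance_to_curb"] * c.distance_to_curb_m)
    return 1.0 / (1.0 + math.exp(-z))


def pre_brake(c: PedestrianCues, threshold: float = 0.5) -> bool:
    """Begin braking before the pedestrian reaches the road if entry looks likely."""
    return crossing_probability(c) >= threshold


running_child = PedestrianCues(1.0, False, 2.0, 0.5)
waiting_adult = PedestrianCues(0.2, True, 0.0, 2.0)
print(pre_brake(running_child), pre_brake(waiting_adult))  # → True False
```

The design point is timing: a cue-based score can trigger braking while the pedestrian is still on the sidewalk, whereas a purely trajectory-based check waits until motion toward the lane is observed.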

Decision-making logic could be refined to better account for environmental context. If a system recognizes it's in a school zone and children are present, it could automatically tighten its conservative response thresholds. Rather than braking only when a collision is imminent, it could maintain extra distance from children as a precautionary measure. This is computationally simple, but it depends on the system reliably recognizing school zones and knowing when they are active.
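
A minimal sketch of such context-dependent thresholds follows. The context fields, speed caps, and clearance distances are all hypothetical values chosen for illustration; they are not Waymo's parameters or any legal requirement.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DrivingContext:
    """Hypothetical context signals a planner might receive."""
    in_school_zone: bool
    school_hours: bool
    children_detected: int
    occluding_vehicles: int  # e.g. double-parked cars that could hide a pedestrian


@dataclass(frozen=True)
class Policy:
    max_speed_mph: float
    min_clearance_m: float  # lateral buffer to keep from detected pedestrians


def select_policy(ctx: DrivingContext, posted_limit_mph: float) -> Policy:
    """Start from the posted limit and let each risk signal only tighten it.
    All thresholds are illustrative, not regulatory or Waymo values."""
    speed = posted_limit_mph
    clearance = 1.0
    if ctx.in_school_zone and ctx.school_hours:
        speed = min(speed, 15.0)
        clearance = max(clearance, 1.5)
    if ctx.children_detected > 0:
        speed = min(speed, 12.0)
        clearance = max(clearance, 2.0)
    if ctx.in_school_zone and ctx.occluding_vehicles > 0:
        speed = min(speed, 10.0)  # slow further where a child could emerge unseen
    return Policy(speed, clearance)


if __name__ == "__main__":
    drop_off = DrivingContext(in_school_zone=True, school_hours=True,
                              children_detected=3, occluding_vehicles=1)
    print(select_policy(drop_off, posted_limit_mph=25.0))
```

The design choice is that each rule can only tighten the policy (through `min` and `max`), so stacking signals such as school hours, visible children, and a double-parked vehicle never relaxes caution.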

Waymo's approach is likely to include improvements to all three areas. The company has the resources to invest in perception research, and the investigations will provide clear data about what, if anything, failed in the Santa Monica incident. Waymo will want to ensure that similar incidents don't recur and that regulators see the company as proactively addressing safety concerns.

Public Perception and Trust: The Impact of High-Visibility Incidents

Beyond the regulatory and technical dimensions, the Santa Monica incident affects public trust in autonomous vehicles and robotaxi services. Accidents involving children generate significant media attention and public concern, regardless of the actual level of risk. The fact that this incident occurred near a school with other children nearby, and during a vulnerable time (drop-off hours), makes it particularly salient in people's minds.

Public trust in autonomous vehicles has been building gradually as the technology demonstrates real-world safety. In Phoenix, Waymo's primary testing ground, the service has accumulated millions of miles with relatively few serious incidents, building confidence that the technology works. But a single incident involving a child can undo months of positive publicity and a carefully built safety record. This is particularly true in the Los Angeles area, where local media attention is intense and national news outlets quickly pick up stories involving transportation safety and children.

Waymo has invested heavily in public communication and transparency around safety. The company regularly publishes safety reports and is relatively forthcoming about incidents when they occur. This transparency is generally positive for building trust, but it also means that incidents get documented and publicized. Some companies that operate with less transparency might have similar incident rates but appear safer because less is reported.

For robotaxi adoption, public perception is crucial. If parents in Santa Monica view Waymo robotaxis as unsafe, they'll avoid them for themselves and their families. If the broader public sees autonomous vehicles as dangerous, political support for expansion will erode. Cities might impose stricter regulations, limit operating areas, or require additional testing before permitting continued operation. This creates pressure on Waymo to not just be safe, but to appear safe and to demonstrate safety improvements visibly.

The Broader Context: Autonomous Vehicles in Urban Environments

The Santa Monica incident doesn't occur in a vacuum. It's part of a broader transition of autonomous vehicles from controlled testing environments to public deployment in real cities. This transition brings both opportunities and challenges. Autonomous vehicles promise to reduce accidents, decrease labor costs for transportation, and enable new mobility services. But they also introduce new failure modes and safety questions that society is still grappling with.

The pace of this transition has been rapid. A decade ago, commercial robotaxi service was still largely an aspiration. Five years ago, autonomous vehicles were in testing phases in a few cities. Today, multiple companies are operating robotaxis commercially in multiple cities. This acceleration is driven by technological progress, investor enthusiasm, and competitive pressure to be first to market. But faster deployment also means less time for thorough testing, validation, and regulatory framework development.

This creates tension between innovation and safety. Faster deployment allows companies to accumulate real-world data and improve their systems based on actual experiences. It also allows the public to benefit from autonomous vehicle services sooner. But it also means the public is, in some sense, testing the technology in real-time. Incidents like the Santa Monica strike are, from one perspective, part of the learning process. They reveal system limitations and drive improvements.

From another perspective, this approach is ethically problematic. If a technology is known to have safety gaps, deploying it anyway on the grounds that learning will happen eventually shifts the cost of that learning onto pedestrians and passengers who never agreed to bear it. The philosophical question is: how safe does a system need to be before it's ethical to deploy it in public? As safe as the average human driver? Safer? Safer by a wide margin?

Waymo argues that its system is already safer than average human drivers on many metrics. The company points to miles driven without serious incidents as evidence. But the recent school bus violations and the Santa Monica incident suggest that under certain conditions (school zones, school buses), the system may not perform as well as human drivers. This specificity matters for policy: it suggests that autonomous vehicles might be safe for most driving but require additional safeguards in particular contexts.

Future Implications: What These Investigations Mean for Autonomous Vehicle Deployment

The NHTSA and NTSB investigations into Waymo's school zone incidents and the broader pattern of safety concerns will shape how autonomous vehicles are regulated and deployed going forward. The investigations will take months or longer to complete, but early findings and corrective actions provide insight into likely outcomes.

Most probable outcome: Waymo implements enhanced school zone safety protocols, and the investigations conclude that the system was operating within acceptable parameters but that improvements are warranted. Waymo continues operating in its existing markets, possibly with geographic restrictions around schools or requirements for more conservative driving in school zones. Other companies implement similar safeguards preemptively. This is the least disruptive outcome and allows autonomous vehicle deployment to continue with incremental improvements.

More concerning outcome: NHTSA determines that the school bus violations and Santa Monica incident indicate a systemic safety defect. Waymo is required to implement significant software changes, testing is mandated before redeployment, and operations are paused or restricted in multiple cities. Other autonomous vehicle companies face increased scrutiny and testing requirements before expanding operations. This outcome would significantly delay autonomous vehicle deployment timelines but might be necessary if investigations reveal fundamental safety issues.

Most aggressive outcome: Multiple incidents and investigations lead to a federal regulatory framework that establishes strict safety standards autonomous vehicles must meet before deployment. A moratorium on new autonomous vehicle services is imposed pending demonstrated compliance. Companies must prove their systems meet specific safety standards before operating. This outcome would fundamentally reshape the autonomous vehicle industry, eliminating first-mover advantages and ensuring more thorough testing, but it would also significantly delay deployment and its benefits.

The first outcome remains the most likely: incremental improvements and enhanced school zone protocols. Waymo's system generally performs well, and the incidents, while serious, haven't resulted in fatalities. There's no evidence the company is being reckless or deploying fundamentally unsafe technology. The investigations will likely result in specific improvements rather than fundamental overhauls.

However, the cumulative effect of the school bus violations and the Santa Monica incident is to shift perception toward seeing Waymo's system as potentially having gaps in school-related safety. If additional incidents occur in coming months, the regulatory response will likely be more forceful. The company is now under watch, and regulators will be scrutinizing its school zone operations closely. This gives Waymo an incentive to implement visible improvements and demonstrate enhanced safety performance in these specific domains.

Policy Recommendations: What Regulators Should Consider

Based on the incidents involving Waymo and the broader challenges of autonomous vehicle safety, several policy approaches merit consideration. These aren't regulations already in place, but rather expert recommendations for what might improve safety and public confidence.

First, specific standards for autonomous vehicles in school zones and around school buses should be developed and mandated. These standards should specify maximum speeds during school hours, required detection capabilities for school buses, and testing scenarios that autonomous vehicles must successfully navigate before deployment near schools. This would create a clear, consistent framework rather than relying on general "appropriate caution" standards.

Second, autonomous vehicle companies should be required to establish and maintain a public database of all incidents, near-misses, and software recalls affecting safety. Transparency about system failures is essential for regulators to identify patterns and for the public to make informed decisions about using these services. Today, crashes involving automated driving systems must be reported to NHTSA under its Standing General Order, but near-misses are not systematically captured, and public disclosure beyond those reports varies by company and jurisdiction.

Third, before expanding autonomous vehicle deployment to new cities, companies should be required to demonstrate testing and validation specifically in that city's school zones, residential areas, and other complex environments. Generic testing in Phoenix or San Francisco doesn't necessarily translate to safety in Santa Monica or other communities with different infrastructure, pedestrian behavior, or environmental conditions.

Fourth, autonomous vehicles should be required to implement and publicly disclose their environmental-context-aware decision systems. In other words, they should actively adjust behavior based on school zones, residential areas, high-pedestrian-density areas, and other contexts. This adjustment should be transparent and measurable.

Fifth, federal standards should establish liability and insurance frameworks that are clear and predictable. Uncertainty about who's liable when incidents occur creates perverse incentives. Companies might be motivated to minimize reported incidents rather than maximize safety if liability is unclear. Clear liability frameworks encourage companies to prioritize safety.

These recommendations represent the perspective that autonomous vehicle development should continue, but with more rigorous safety standards and transparency, particularly for operations in areas where children are present. The goal is to allow beneficial technology to develop while protecting the public, particularly vulnerable populations like children.

Conclusion: Safety, Progress, and the Path Forward

The incident in Santa Monica on January 23, 2025, where a Waymo robotaxi struck a child near a school, is a significant moment in the autonomous vehicle industry. It's not a crisis on the scale of the 2023 Cruise incident in San Francisco, in which a robotaxi dragged an injured pedestrian and California suspended Cruise's driverless permits, but it's serious enough to trigger federal investigations and raise important questions about how self-driving systems handle complex, child-involved scenarios.

What the incident reveals most clearly is that autonomous vehicle safety is context-dependent. Waymo's system appears to work reasonably well in most driving scenarios, but it has demonstrated gaps in school zone and school bus recognition. These gaps are fixable through better training data, improved perception systems, and more conservative decision-making in high-risk contexts. The fact that they exist doesn't mean the technology is fundamentally flawed—it means the technology is still being refined for specific scenarios.

The investigations by NHTSA and NTSB will determine whether Waymo's approach to school zone operation was adequate and what improvements are necessary. Based on available information, the company appears to have responded reasonably to the Santa Monica incident—detecting the child, braking hard, and minimizing impact severity. But regulators will examine whether the system could have done better and whether the incidents suggest systematic issues.

For the autonomous vehicle industry broadly, these incidents represent the growing pains of a technology transitioning from development to deployment. The companies pushing this technology forward have made remarkable progress. Waymo can operate fully autonomous vehicles in complex urban environments and accumulate millions of miles with relatively few serious incidents. That's genuinely impressive. But the incidents that do occur are often concentrated in specific scenarios or environments—like school zones—suggesting that while the overall technology is advanced, there are specific edge cases that still require work.

Moving forward, success will depend on companies being proactive about identifying and fixing safety gaps, regulators establishing clear standards and maintaining appropriate oversight, and the public supporting continued development while holding companies accountable for safety. The Santa Monica incident is a test case for whether these three groups can work together effectively to improve autonomous vehicle safety.

For Waymo specifically, the path forward involves implementing enhanced school zone protocols, cooperating fully with NHTSA and NTSB investigations, and demonstrating through actual performance that the system can safely operate near schools and children. If the company handles this well, it will strengthen public trust and regulatory confidence. If the company is seen as defensive or obstructive, it will undermine both.

The technology itself is sound, and the promise of autonomous vehicles to improve transportation safety, efficiency, and accessibility is real. But promises only matter if they're backed by actual safe, reliable performance. The next few months as investigations proceed and Waymo implements improvements will be critical in determining whether autonomous vehicles earn the public's trust for widespread deployment.

FAQ

What exactly happened in the Santa Monica incident?

On January 23, 2025, a Waymo robotaxi struck a child who ran from behind a double-parked SUV into the vehicle's path near a Santa Monica school during drop-off hours. The vehicle was traveling at approximately 17 mph and braked hard to reduce speed to under 6 mph before impact. The child sustained minor injuries and received emergency medical attention. The incident occurred near a school with other children and a crossing guard present.

Why are regulatory agencies investigating school zone incidents specifically?

School zones represent uniquely challenging driving environments: children are present in higher densities, pedestrian behavior is less predictable, and the consequences of failures are severe. Regulators are examining whether Waymo's autonomous driving system was specifically designed and tested to handle school zone scenarios safely, and whether its behavior in these environments meets appropriate safety standards.

How does a Level 4 autonomous vehicle detect pedestrians and avoid collisions?

Level 4 autonomous vehicles like Waymo's use multiple sensors: lidar measures distances to build a 3D picture of the surroundings, cameras provide visual information such as color and signage, and radar detects moving objects and their velocities. These sensors feed data to machine learning models that classify objects and predict their future positions. When a collision risk is detected, the planning module decides whether to brake, swerve, or continue. In the Santa Monica incident, the system's response was to brake hard.
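
The detect, predict, and plan loop can be reduced to a toy example. This sketch assumes already-fused tracks in a vehicle-centered frame, a constant-velocity prediction model, and hypothetical corridor widths and time-to-conflict thresholds; production planners are far more sophisticated, and none of these names or values come from Waymo.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Track:
    """A fused object track in the vehicle frame: origin at the front bumper,
    x forward, y to the left, in meters and m/s (velocity is relative)."""
    label: str  # classifier output, e.g. "pedestrian" or "vehicle"
    x: float
    y: float
    vx: float
    vy: float


def predict(track, t):
    """Constant-velocity prediction of the track's position t seconds ahead."""
    return track.x + track.vx * t, track.y + track.vy * t


def first_conflict_time(track, half_width=1.2, horizon=3.0, dt=0.1):
    """Earliest sampled time the predicted position is inside the vehicle's
    path corridor and still ahead of the bumper, or None within the horizon."""
    for i in range(int(round(horizon / dt)) + 1):
        t = i * dt
        x, y = predict(track, t)
        if x > 0 and abs(y) <= half_width:
            return t
    return None


def plan(tracks, brake_ttc_pedestrian=2.5, brake_ttc_other=1.5):
    """Brake if any predicted conflict falls inside its class's threshold.
    Pedestrians get a longer, more cautious threshold."""
    for track in tracks:
        t = first_conflict_time(track)
        limit = brake_ttc_pedestrian if track.label == "pedestrian" else brake_ttc_other
        if t is not None and t <= limit:
            return "brake"
    return "continue"


if __name__ == "__main__":
    # Vehicle moving at about 7.6 m/s (17 mph), so stationary objects have vx = -7.6.
    parked = Track("vehicle", x=10.0, y=-3.0, vx=-7.6, vy=0.0)
    child = Track("pedestrian", x=10.0, y=-3.0, vx=-7.6, vy=1.5)
    print(plan([parked]))         # continue
    print(plan([parked, child]))  # brake
```

The parked vehicle never enters the corridor, while the child crossing at 1.5 m/s is predicted to reach it in about 1.2 s, inside the pedestrian threshold.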

What was the software recall Waymo implemented, and why was it necessary?

Waymo issued a software recall after multiple vehicles illegally passed stationary school buses that were stopped for pickup or drop-off. School buses have specific legal protections: drivers generally must stop for a school bus with its red lights flashing or stop arm extended, including drivers approaching from the opposite direction on undivided roads, though the details vary by state. The recall updated the system's perception and decision-making around school buses to prevent violations. The need for such a recall suggests the initial system didn't adequately recognize school buses or comply with stopping requirements.
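
The legal rule at the center of the recall can be written as a simple predicate. This is a deliberately simplified sketch: the field names are hypothetical, and actual school bus laws vary by state, for example in how they treat opposing traffic on divided highways.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SchoolBusObservation:
    """Hypothetical perception output for a detected school bus."""
    red_lights_flashing: bool
    stop_arm_extended: bool
    same_direction: bool   # bus travels the same way as our vehicle
    divided_highway: bool  # a physical median separates opposing lanes


def must_stop_for_bus(bus: SchoolBusObservation) -> bool:
    """Simplified rule: stop for a signaling bus unless it is across a divided
    highway in the opposing direction. Real statutes differ by state."""
    if not (bus.red_lights_flashing or bus.stop_arm_extended):
        return False
    if bus.same_direction:
        return True
    return not bus.divided_highway


if __name__ == "__main__":
    oncoming = SchoolBusObservation(True, True, same_direction=False, divided_highway=False)
    print(must_stop_for_bus(oncoming))  # True
```

In practice the hard part is perception: reliably knowing that the vehicle is a school bus and that its lights and stop arm are active. Once those inputs are correct, the rule itself is simple.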

What are the potential consequences if NHTSA finds a safety defect?

If NHTSA determines that Waymo's system has a safety defect, the agency can require a recall and seek civil penalties, and state regulators, which issue operating permits, can restrict or suspend the company's operations in specific areas. A significant defect finding could require Waymo to implement major software changes, conduct additional testing, and potentially pause expansion in some cities. Such findings also set precedent that affects how other autonomous vehicle companies must operate.

How do pedestrian injury outcomes vary with vehicle speed?

Injury risk rises steeply with impact speed, though exact figures vary by study. At speeds below about 10 mph, pedestrians struck by vehicles typically sustain minor injuries like sprains or bruising. By 20 mph, the risk of serious injury and death increases substantially, and at 30 mph some studies put a pedestrian's chance of survival at only about 50%, though estimates vary considerably. The speed reduction from 17 mph to under 6 mph before impact in the Santa Monica incident likely prevented serious injury, since the final impact speed was in the low-injury-risk range.
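
One way to see why that reduction matters is kinetic energy, which scales with the square of speed (KE = ½mv²), so the vehicle's mass cancels when comparing two speeds. This sketch treats the reported 6 mph as an upper bound on impact speed; real injury outcomes also depend on vehicle shape, the child's height, and where contact occurs.

```python
def remaining_energy_fraction(v_impact, v_initial):
    """Fraction of the initial kinetic energy left at impact.

    KE = 0.5 * m * v**2, so mass cancels and the ratio is (v_impact / v_initial)**2.
    Any consistent speed unit works, since the units cancel too.
    """
    return (v_impact / v_initial) ** 2


if __name__ == "__main__":
    frac = remaining_energy_fraction(6, 17)  # reported figures; 6 mph as an upper bound
    print(f"energy remaining at impact: at most {frac:.1%}")  # about 12.5%
```

Braking from 17 mph to under 6 mph therefore shed roughly 88% or more of the vehicle's kinetic energy before contact.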

Should autonomous vehicles be programmed to drive even more slowly in school zones than required by law?

Many safety experts recommend that autonomous vehicles self-impose speeds lower than posted speed limits in school zones, particularly during drop-off and pick-up hours. This provides additional safety margin for unexpected events like children running into traffic. Some companies say their systems already drive more conservatively in high-risk environments, though this is not currently mandated by regulators, and such approaches may be considered as the investigations proceed.
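
The safety margin from lower speed can be quantified with the standard stopping-distance formula: distance covered during the system's reaction delay plus braking distance, d = v·t_r + v²/(2a). The 0.5 s reaction time and 7 m/s² deceleration below are assumptions for illustration, not measured Waymo figures.

```python
MPH_TO_MS = 0.44704  # 1 mph in meters per second


def stopping_distance_m(speed_mph, reaction_s=0.5, decel_ms2=7.0):
    """Distance to stop from speed_mph: travel during the reaction delay plus
    braking distance v**2 / (2 * a). Both defaults are illustrative assumptions."""
    v = speed_mph * MPH_TO_MS
    return v * reaction_s + v ** 2 / (2 * decel_ms2)


if __name__ == "__main__":
    for mph in (25, 20, 15, 10):
        print(f"{mph} mph -> {stopping_distance_m(mph):.1f} m")
```

Under these assumptions, dropping from 25 mph to 15 mph cuts the stopping distance by more than half (about 14.5 m to about 6.6 m), because the braking term grows with the square of speed.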

How transparent should autonomous vehicle companies be about safety incidents?

Transparency about safety incidents is generally considered important for building public trust and enabling regulators to identify patterns. Waymo has been relatively transparent about reporting incidents to regulators, though critics argue that more detailed public disclosure would be beneficial. There's ongoing debate about the appropriate balance between transparency and protection of proprietary safety systems.

Will this incident delay autonomous vehicle deployment in other cities?

It is unlikely to significantly delay deployment, but it may result in enhanced safety requirements for school zone operations. More probable outcomes include Waymo implementing enhanced school zone protocols before expanding into new markets, or regulators imposing specific testing requirements for school zone scenarios before approving new deployments. The incident accelerates safety discussions rather than fundamentally halting expansion.

What's the difference between NHTSA and NTSB investigations?

NHTSA (National Highway Traffic Safety Administration) is a federal regulatory agency that sets standards, investigates defects, and has enforcement authority. NTSB (National Transportation Safety Board) is an independent agency that investigates accidents to determine probable causes and issue safety recommendations, but lacks enforcement authority. NHTSA findings can result in regulatory action while NTSB findings provide expert analysis and recommendations.

This comprehensive examination of the Waymo robotaxi incident reveals both the progress and challenges in autonomous vehicle safety. As this technology continues to develop and deploy more widely, the incidents that occur—and how companies and regulators respond—will shape safety standards for years to come. The Santa Monica case demonstrates that even sophisticated autonomous systems have specific failure modes, and that school zones and child safety represent critical testing grounds for this transformative technology.

Key Takeaways

- A Waymo robotaxi struck a child in Santa Monica on January 23, 2025, during school drop-off hours, prompting NHTSA and NTSB federal investigations into autonomous vehicle school zone safety

- The vehicle braked from 17 mph to under 6 mph upon detecting the child, and the child sustained minor injuries, but investigators are examining whether the system's response was adequate for school environments

- Concurrent scrutiny of Waymo vehicles illegally passing stopped school buses in Austin and Atlanta suggests possible systematic issues with how the autonomous system recognizes and responds to school-related safety scenarios

- School zones present unique challenges for autonomous vehicles due to unpredictable pedestrian behavior, high child density, and limited visibility, requiring different safety approaches than general urban driving

- The regulatory response will likely establish precedent for how autonomous vehicles must behave in school zones, potentially requiring more conservative speed selection and enhanced perception systems in these high-risk environments
