Why AI GTM Tools Still Fall Short of Cursor and Replit [2025]

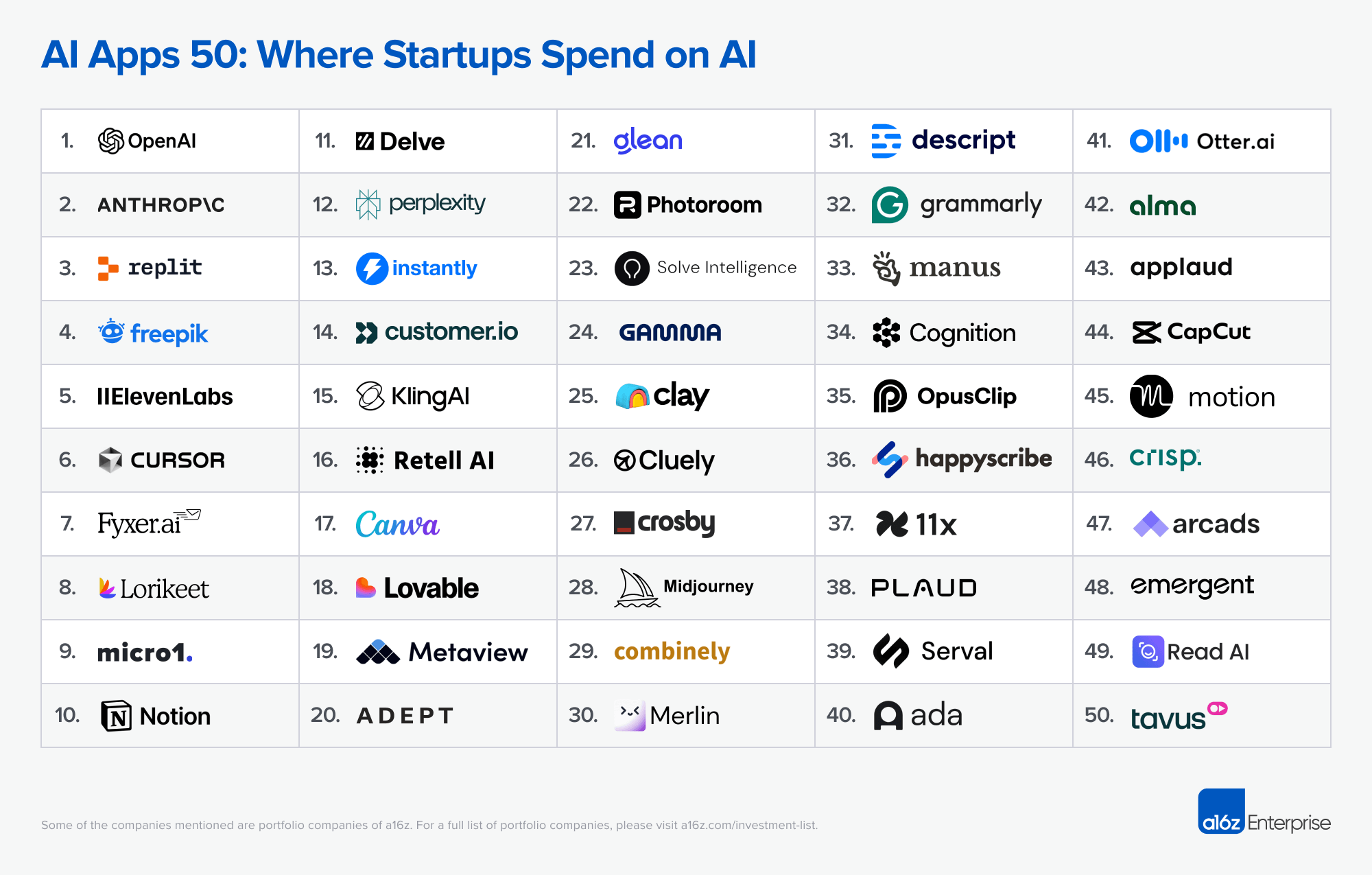

Let's cut through the hype. If you've spent time with either Cursor or Replit and then jumped into an AI sales tool, you've probably felt the difference immediately. The gap isn't subtle. It's the difference between a collaborator and a workflow automation system pretending to be intelligent.

I'm not saying AI GTM tools don't work. They do. We've deployed multiple platforms across our own stack, sent over 20,000 AI-generated outreach messages, and closed more than $2 million in revenue directly attributed to AI-driven sales development. We run Artisan for outbound, we've integrated inbound AI conversations, and we're testing the latest AI-native sales agents. The tools are generating pipeline. They're saving time. They're valuable.

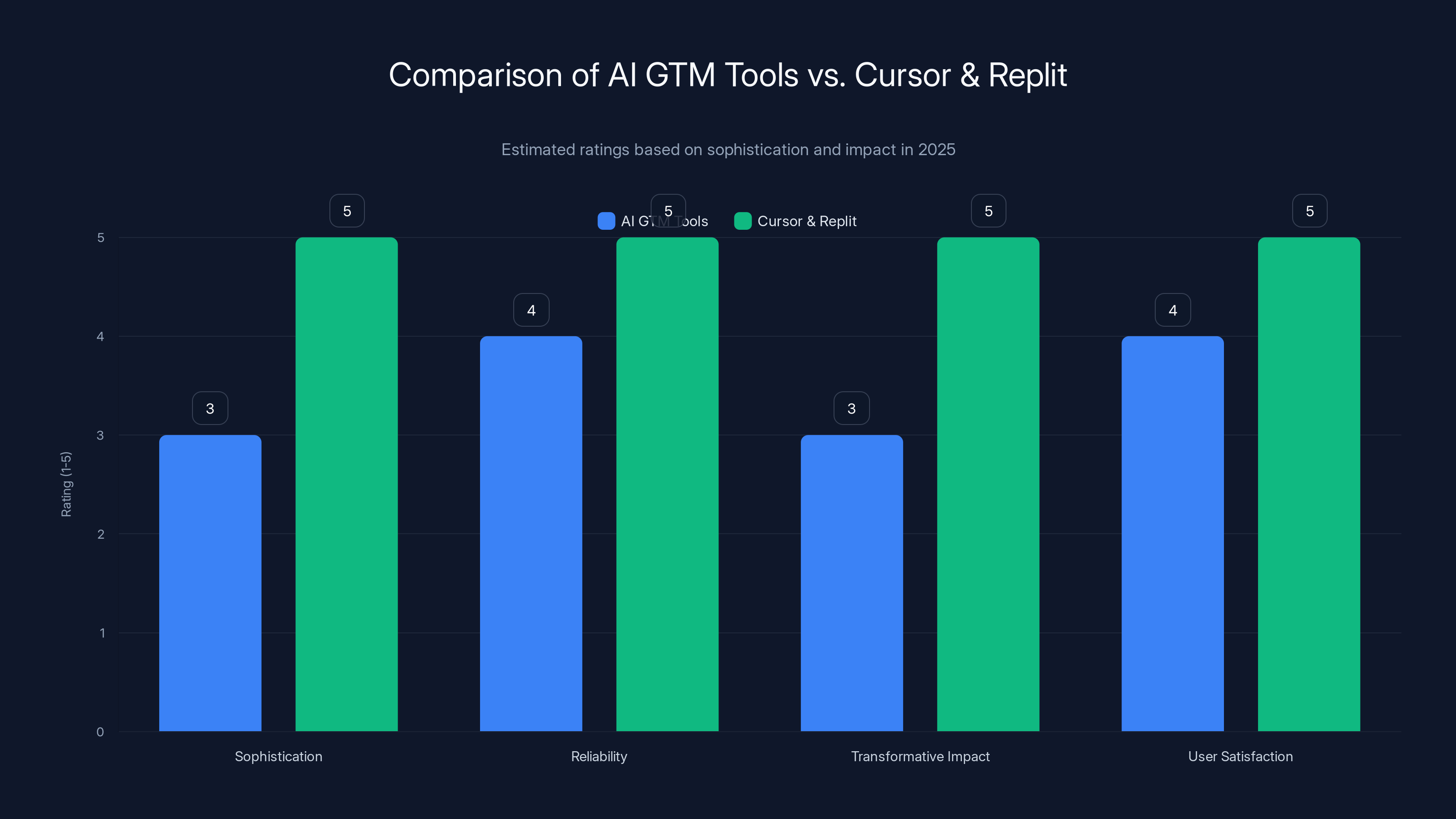

But here's the uncomfortable truth: they're nowhere near the sophistication, reliability, and transformative impact of what Cursor and Replit have achieved in the engineering space. And that gap? That gap represents everything that's still missing in AI-driven go-to-market work.

The question isn't whether AI GTM tools will eventually reach that level. They will. The real question is why they're not there yet, what's holding them back, and what needs to change for the next generation to actually deliver on the promise of "AI SDR" rather than "very smart automation with AI features."

TL;DR

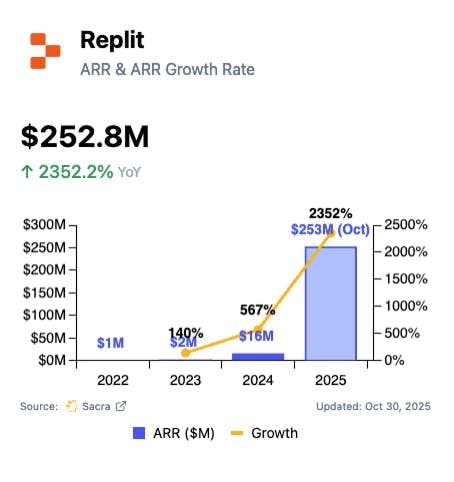

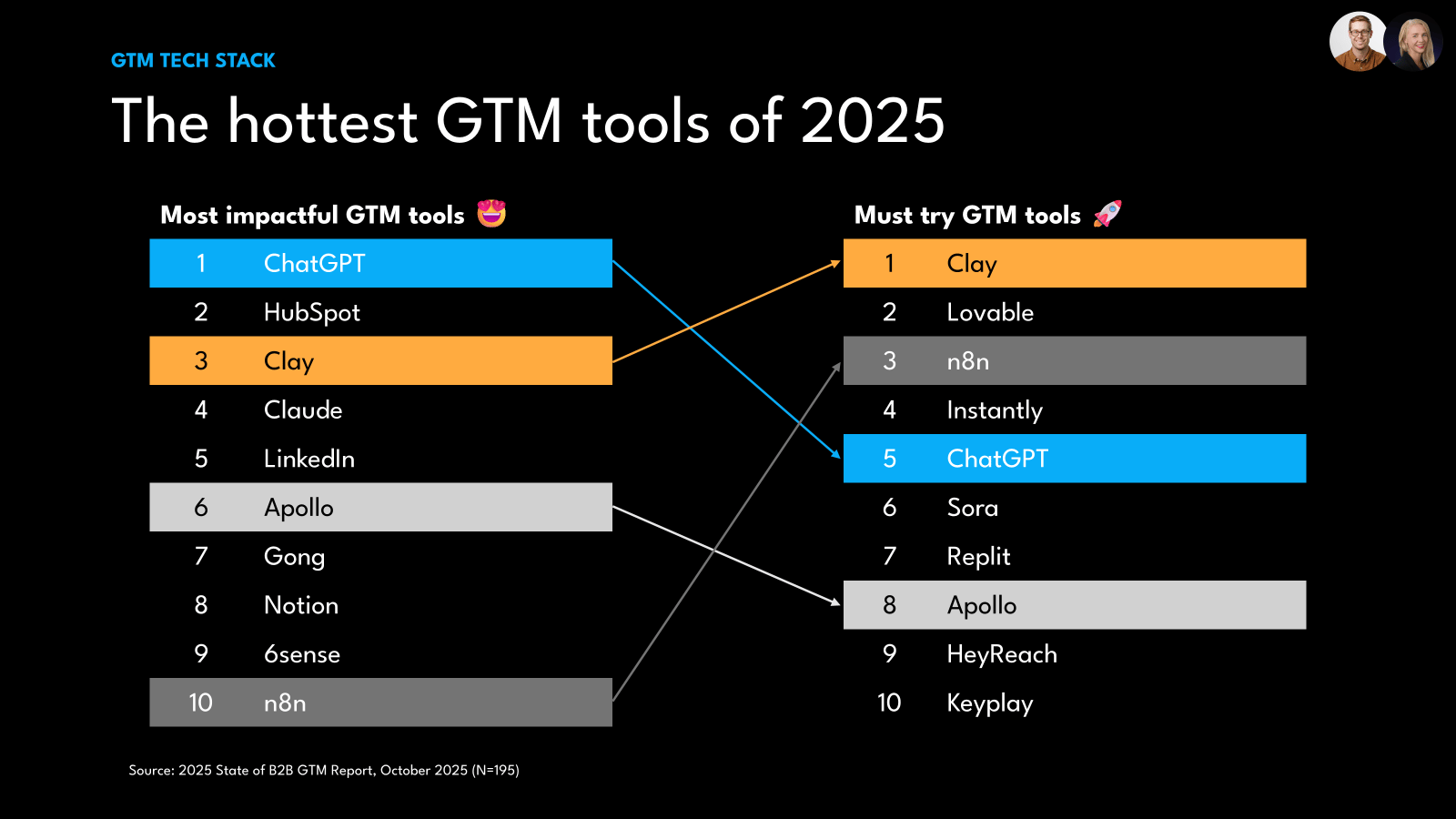

- Cursor hit $1B+ ARR in under two years, and 72% of professional developers now use or plan to use AI coding assistants daily, while AI GTM tools remain niche automation platforms

- Current AI sales tools require continuous human oversight, daily reviews, and 30+ days of training to function reliably, unlike Cursor's plug-and-play experience

- Most "AI SDRs" are actually hyper-automation systems that personalize templates and classify replies, not agents that truly understand sales context

- Developers report 20-55% faster delivery times with AI coding tools; sales leaders can't point to comparable improvements yet

- The path forward requires solving context awareness, multi-step reasoning, and accountability in ways AI GTM platforms haven't attempted

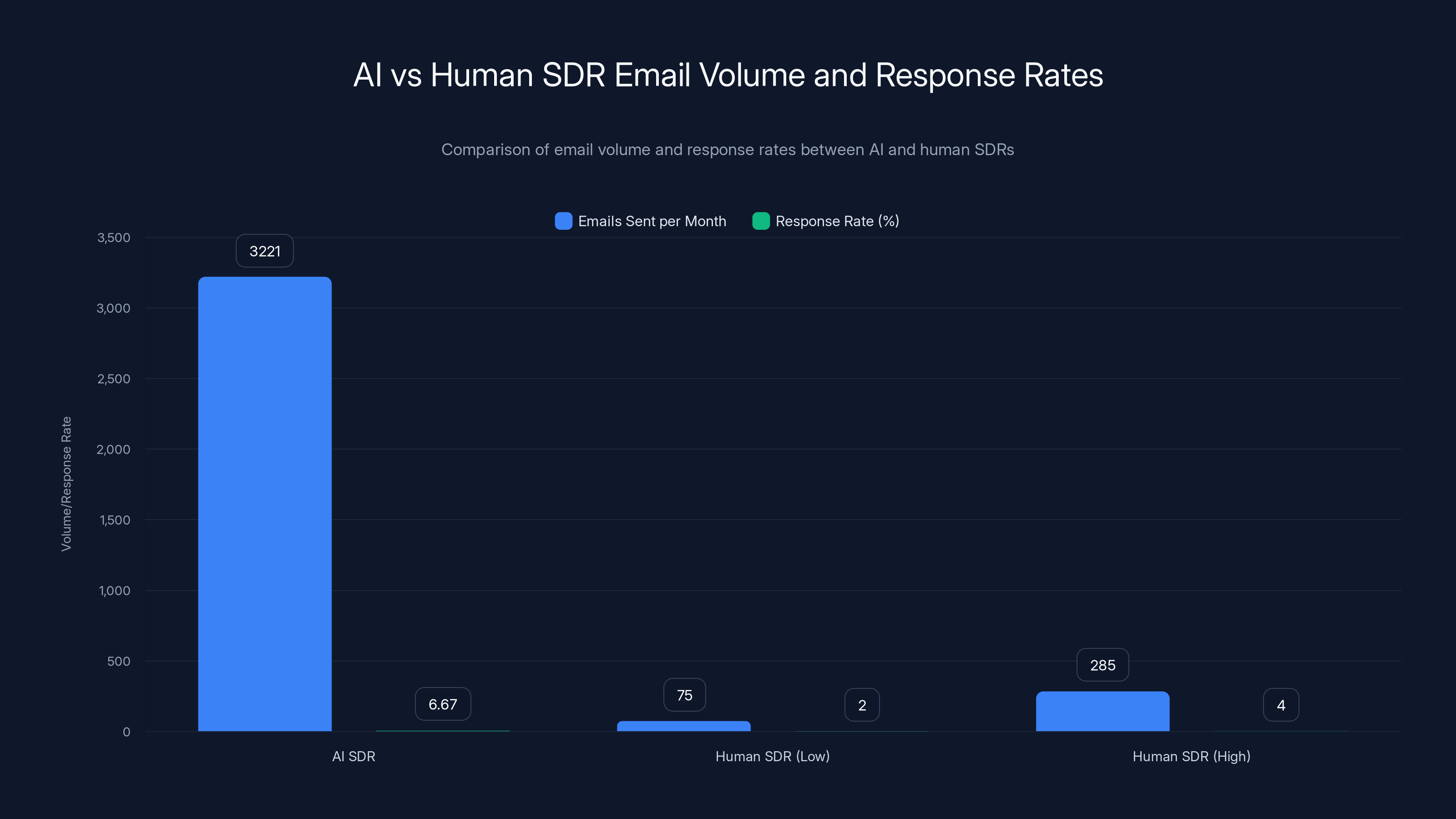

AI SDRs send significantly more emails (11x to 43x) than human SDRs, with a higher response rate of 6.67% compared to the 2-4% industry average.

The Growth Gap: Numbers That Tell the Story

Let's start with the clearest signal of all: market traction. These numbers matter because they reflect real user satisfaction and transformational impact.

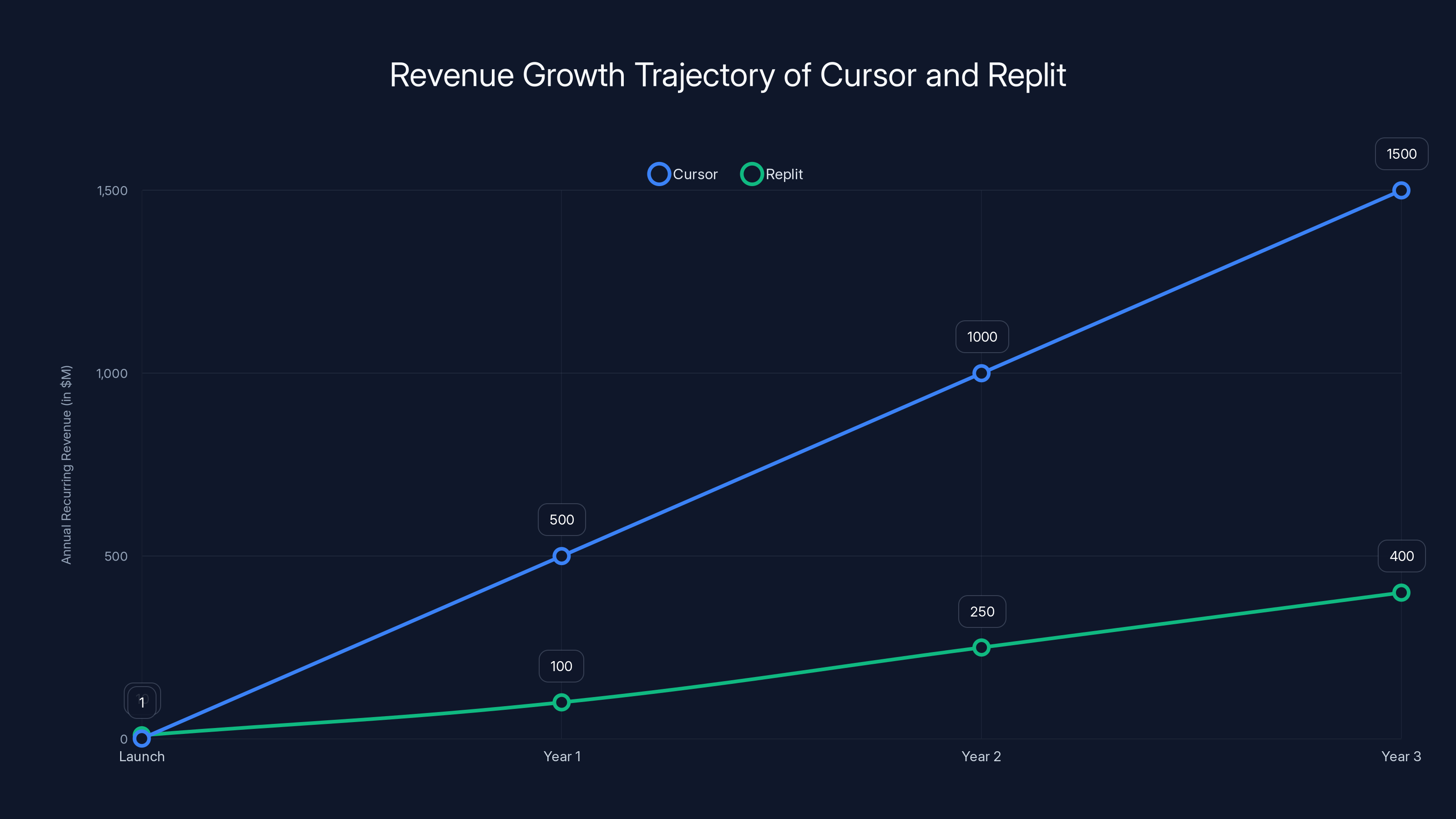

Cursor went from launch to

What about developer productivity gains? The numbers are concrete. Cursor users report 20-55% faster delivery times on engineering tasks. Think about that range for a moment: that's not marginal improvement. That's transformational. Seventy-two percent of professional developers now use or plan to use AI coding assistants as part of their daily workflow. That's adoption at scale.

Replit followed a similar trajectory. The platform crossed 22.5 million users and serves over 500,000 businesses building applications. In just six months, users created 2 million apps using AI. Replit went from

Now compare that to the AI SDR and AI GTM space.

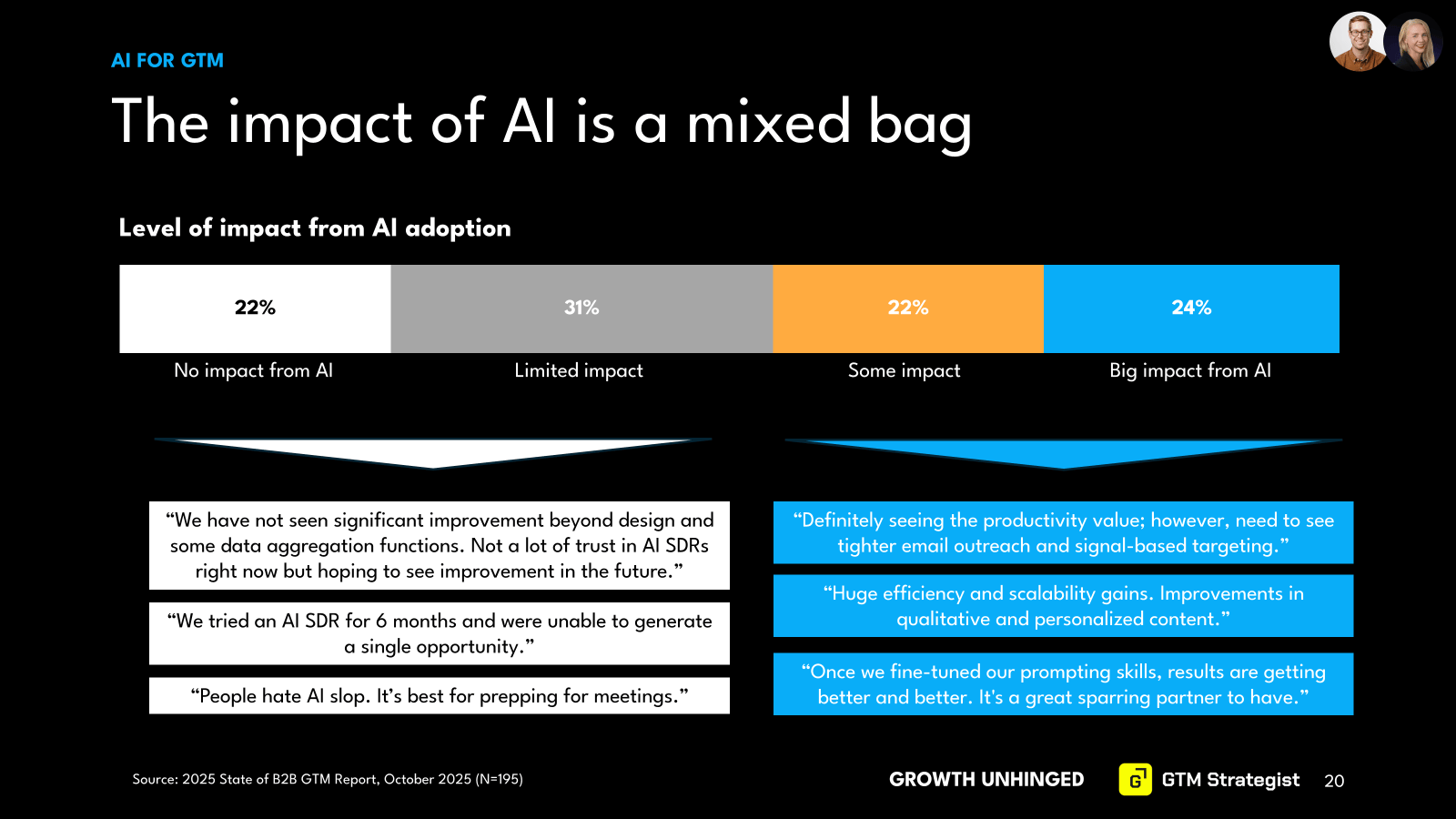

The market is growing. Companies in this category are raising serious funding and hitting impressive milestones. But the growth rates? The user adoption? The transformational feeling when someone uses the product? It's not the same magnitude.

When you look at the revenue numbers, the user adoption curves, and the developer enthusiasm, there's a 10x gap in maturity and impact. That gap is the story.

Cursor and Replit both experienced rapid ARR growth, with Cursor reaching $1B in just three years, highlighting its significant market traction and user adoption. Estimated data for 2025.

What We're Actually Running: The Real Numbers

Let me get specific about what a real, multi-tool AI GTM stack actually accomplishes. These aren't theoretical numbers. This is what's running in production right now.

Artisan: The Outbound AI SDR

Artisan is one of the most mature AI outbound platforms available. Over six months, we sent 19,326 messages from a single AI entity. That's an average of 3,221 emails per month from one AI SDR. For comparison, a human SDR typically sends 75 to 285 emails per month depending on their market and approach. That's an 11x to 43x volume difference.
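If you want to sanity-check that volume math yourself, here is a minimal sketch. The figures come straight from the paragraph above; the function and variable names are illustrative, not part of any tool we run.

```python
# Figures from the text: 19,326 messages over six months,
# versus a human SDR sending 75 to 285 emails per month.
TOTAL_MESSAGES = 19_326
MONTHS = 6
HUMAN_MONTHLY_RANGE = (75, 285)


def monthly_average(total: int, months: int) -> float:
    """Average messages sent per month."""
    return total / months


def volume_multiples(ai_monthly: float, human_range: tuple[int, int]) -> tuple[float, float]:
    """Return (smallest, largest) multiple of human volume.

    Dividing by the busiest human gives the smallest multiple,
    dividing by the least busy human gives the largest.
    """
    low, high = human_range
    return ai_monthly / high, ai_monthly / low


ai_monthly = monthly_average(TOTAL_MESSAGES, MONTHS)
min_multiple, max_multiple = volume_multiples(ai_monthly, HUMAN_MONTHLY_RANGE)
print(f"{ai_monthly:.0f} emails/month, roughly {min_multiple:.0f}x to {max_multiple:.0f}x human volume")
```

The multiples only describe volume. They say nothing about whether those extra emails were worth sending, which is where the response-rate numbers come in.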

The response rate matters just as much as volume. Our overall response rate across all campaigns hit 6.67%, which sits comfortably above the 2-4% industry average for human outreach. Warm campaigns performed significantly better at 12.13% positive response rates.

Here's what impressed us most: the AI booked a six-figure sponsor meeting on a Saturday at 6:02 PM. That's not a system following a template. That's intelligent timing, message crafting, and persistence at moments when humans aren't working.

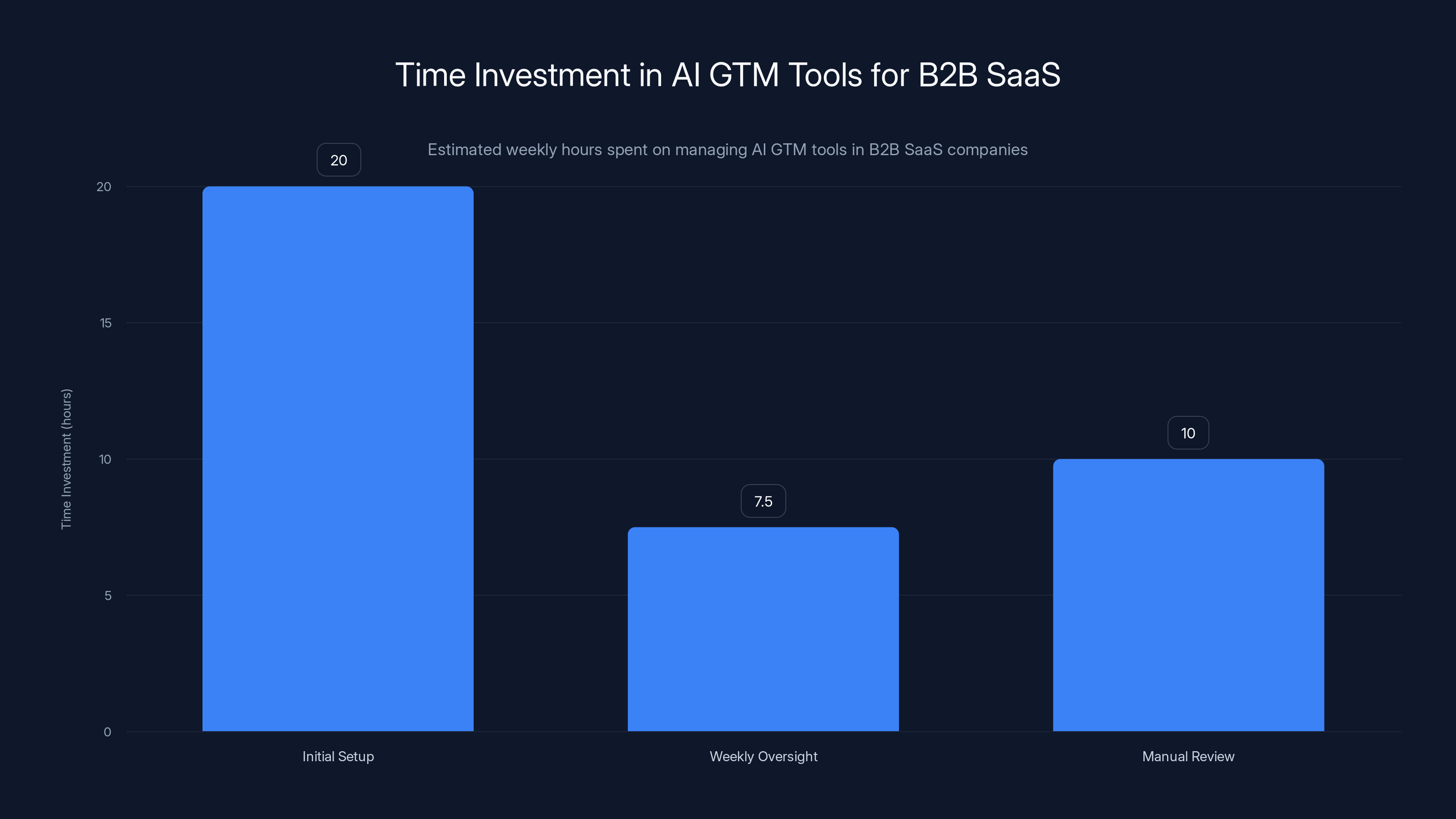

But here's the catch that matters: 3,221 emails per month sounds automated and hands-off. It's not. We spent weeks iterating on messaging. We went through 47 different versions just to prevent the AI from being too aggressive on pricing language. We manually reviewed the first 1,000 emails. And we still spend 20-30 minutes every single day spot-checking for tone, appropriateness, and brand voice.

Inbound AI: The Conversation Layer

Inbound is where the real complexity lives. We've deployed inbound AI conversation tools that handled 668,591 total sessions. Those weren't all qualified conversations, but 1,025 meaningful conversations happened between interested prospects and our AI system.

The business impact was measurable:

But again, this required setup. This required tuning. This required understanding how our sales conversations work and encoding that into the system.

Agentforce: The Salesforce-Native Layer

Agentforce represents a different approach: AI agents that live natively inside Salesforce. We deployed this just over a month ago. The early results are striking.

We achieved a 72% open rate on emails. Compare that to the effective zero percent we had before, when humans simply never sent follow-up emails to previously ghosted leads. We're now closing deals from prospects who had received no follow-up for six months. That's meaningful.

The reason this works better than traditional tools: Agentforce knows everything in your Salesforce record. Previous interactions. Company research. Event attendance. Every note a human has taken. When it personalizes an email or decides when to reach out, it has context that generic AI tools don't access.

Yet we still review draft responses before they go out. We still iterate on prompt builder instructions. We still decide which campaigns to include or exclude. This is not "set it and forget it."

Delphi: The Advisory Layer

Then there's the advisory piece. Delphi handles 139,000+ conversations annually, reviews hundreds of VC pitch decks, and provides product and go-to-market coaching. It works because it's built on structured knowledge, not general LLMs trying to understand sales patterns.

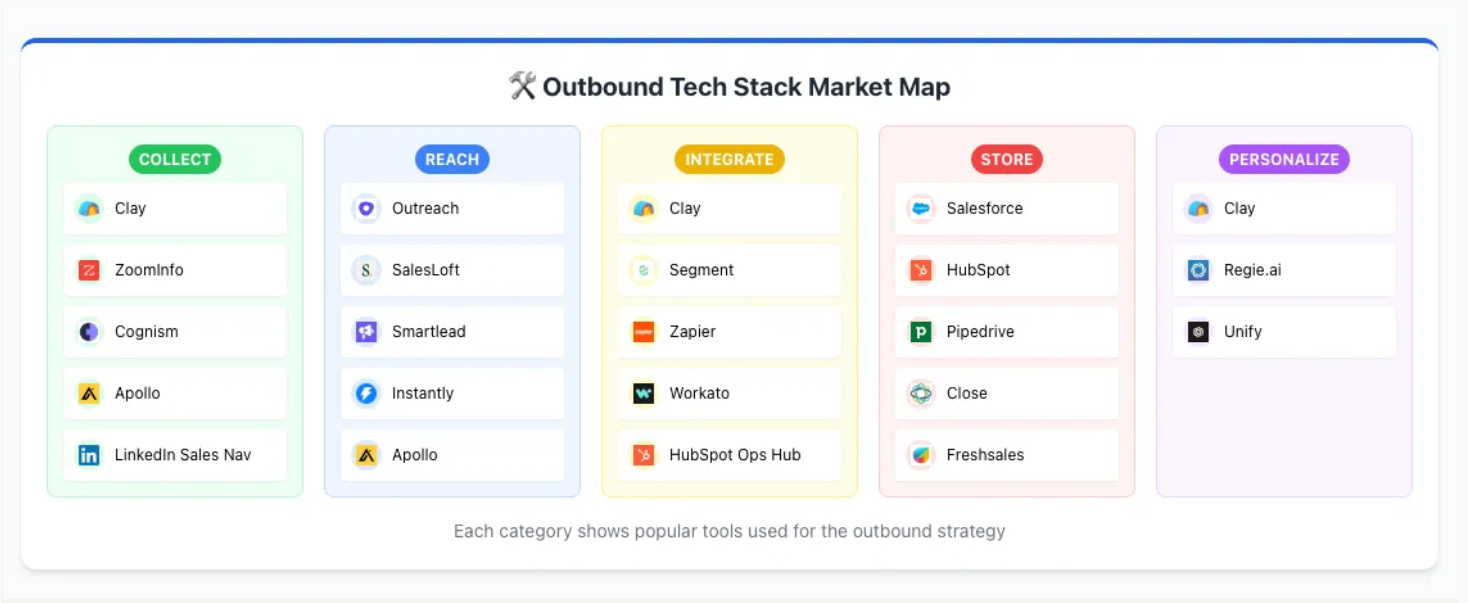

The Architecture Gap: Automation Versus Agency

Let me be direct about what most AI GTM tools actually are right now, because this is where the gap becomes architectural rather than incremental.

Most AI SDR and AI GTM platforms are hyper-automation systems with AI components. I don't mean that as an insult. Hyper-automation is genuinely valuable. But it's not the same as true agency.

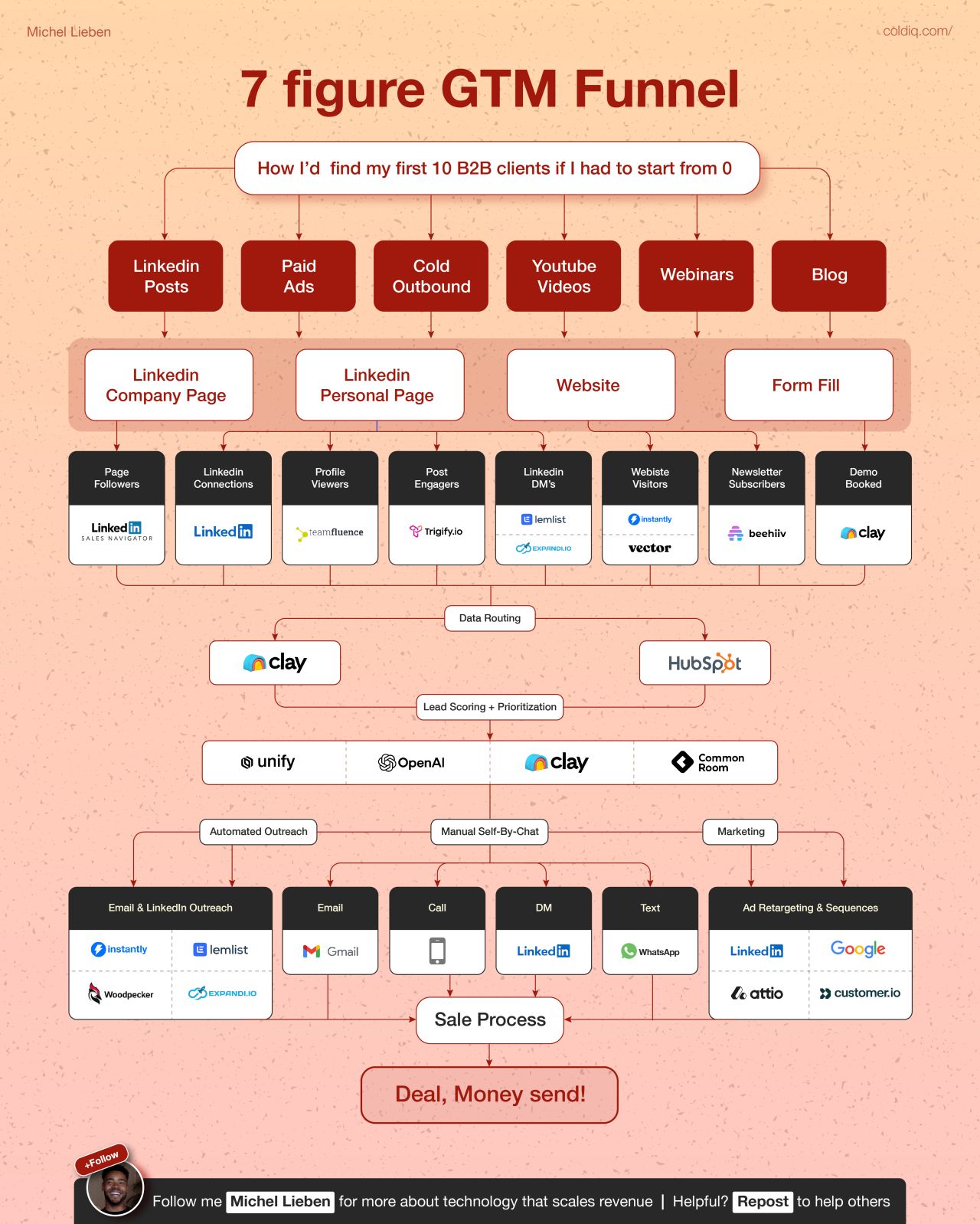

Here's what the typical architecture looks like:

Data integration layer: Pull prospect information from various CRM sources, intent signals, company research APIs, and enrichment platforms. This is all handled through standard API connections. Nothing here is particularly intelligent.

Personalization layer: Take a template email and use AI to personalize the first or second line. "Hi [first name], I saw you recently visited our pricing page..." That's valuable personalization, but it's still template-based variation.

Sequencing layer: Automate the timing and delivery of these personalized messages across email, LinkedIn, SMS, or other channels. Wait 3 days, send follow-up. Wait 5 days, send second follow-up. If no response after 15 days, move to alternative sequence.

Lead scoring layer: Use intent signals, engagement metrics, and behavioral data to identify which leads are likely to be receptive. This is where real AI comes in, but it's still classification, not understanding.

Response handling: Detect when a prospect responds, classify whether it's positive/negative/irrelevant, and either escalate to a human or move them to a nurture sequence.

Meeting booking: If a prospect indicates interest, automatically send a calendar link or hand off to a scheduling system.
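To make the point concrete, the sequencing and response-handling layers above can be sketched in a few lines of rule-based code. This is a hypothetical illustration, not any vendor's implementation: the `Prospect` fields, the action names, and the mapping of reply types to actions are all assumptions. The wait times mirror the example in the text.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class Reply(Enum):
    POSITIVE = "positive"
    NEGATIVE = "negative"
    IRRELEVANT = "irrelevant"


@dataclass
class Prospect:
    first_name: str
    days_since_last_touch: int
    touches_sent: int = 0
    reply: Optional[Reply] = None


# Cadence from the text: follow up after 3 days, then after 5 more.
FOLLOW_UP_WAITS = {1: 3, 2: 5}
GIVE_UP_AFTER_DAYS = 15


def next_action(p: Prospect) -> str:
    """Pick the next step with fixed rules. No reasoning about the buyer."""
    # Response handling: classification drives a canned branch (assumed mapping).
    if p.reply is Reply.POSITIVE:
        return "send_calendar_link"
    if p.reply is Reply.NEGATIVE:
        return "move_to_nurture"
    if p.reply is Reply.IRRELEVANT:
        return "escalate_to_human"
    # Sequencing: timers decide everything else.
    if p.touches_sent == 0:
        return "send_initial_email"
    wait = FOLLOW_UP_WAITS.get(p.touches_sent)
    if wait is None:
        # All follow-ups are spent; switch sequences once the window closes.
        if p.days_since_last_touch >= GIVE_UP_AFTER_DAYS:
            return "move_to_alternative_sequence"
        return "wait"
    return "send_follow_up" if p.days_since_last_touch >= wait else "wait"
```

Notice what's missing: nothing in `next_action` knows why a prospect went quiet or what they care about. The AI sits in the personalization and classification steps, while the control flow is plain automation.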

This is useful. This is valuable. We've invested in companies doing exactly this. Companies like 11x, Artisan, and Qualified are growing rapidly because this works.

But is it an "AI AE"? Is it an "AI SDR"? Not yet. Not really.

Compare this to what Cursor does:

Context understanding: Cursor reads your entire codebase, understands the architecture, and knows what you're trying to build.

Multi-step reasoning: It doesn't just suggest the next line of code. It understands the problem you're solving and reasons through multiple approaches to find the best one.

Cross-file awareness: It debugs and refactors across your entire codebase, understanding dependencies and implications.

Learning and adaptation: It learns from your feedback and gets better at anticipating what you need.

Collaborative partnership: It feels like pair programming with a skilled engineer, not like using a powerful autocomplete.

That's the architecture difference. One is automation with AI mixed in. The other is AI with full understanding of the domain.

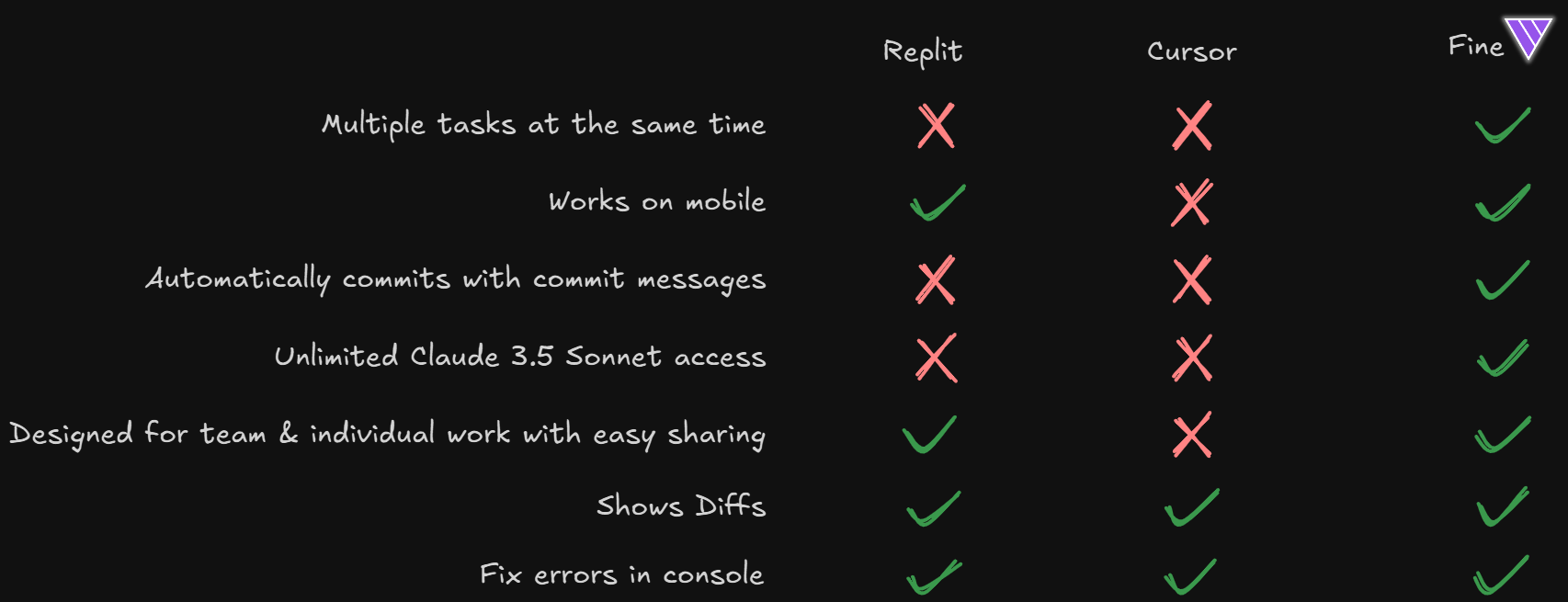

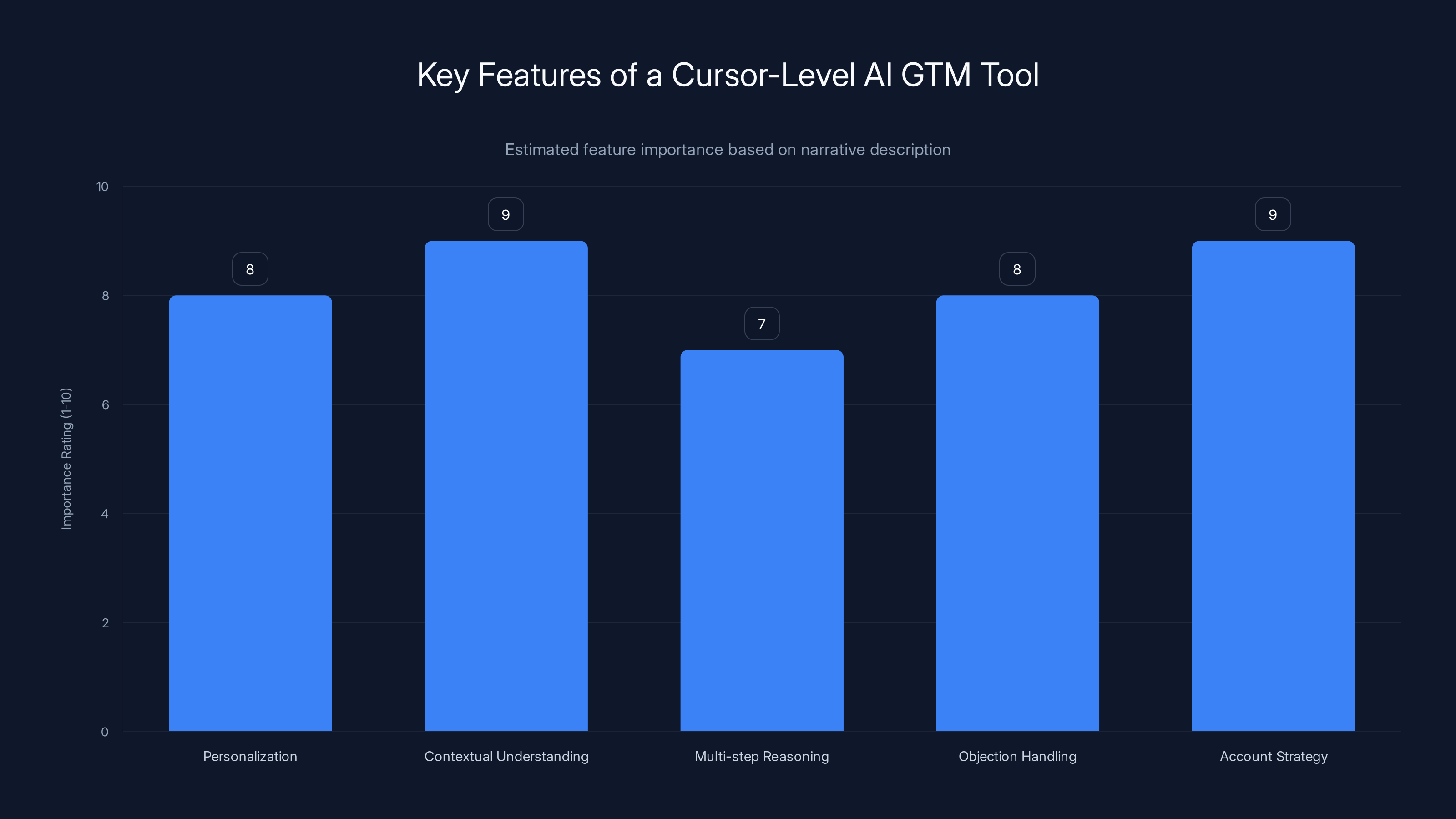

Estimated data suggests that contextual understanding and account strategy are crucial features of a Cursor-level AI GTM tool, rated highest in importance.

What It Actually Takes: The Hidden Complexity

Let me tell you what people don't talk about when they claim they're running an "AI SDR."

We went through 47 iterations of our AI outbound approach just to stop the system from being too aggressive on pricing. Forty-seven. Not seven. Not five. Forty-seven.

Why? Because AI models, when not carefully constrained, will optimize for the metric you give them, even if it destroys your brand. If you tell a system to maximize positive responses, it will eventually suggest pricing that no customer would accept but that technically gets a "maybe" reply.

The first 1,000 emails we sent required manual review. Every single one. A human had to read it, check for tone, verify it was appropriate for the prospect, and approve it. That's not scalability. That's proof of concept labor.

Even now, twelve months into running our AI GTM stack, we spend 20-30 minutes every single day reviewing AI-generated outreach. We spot-check for brand voice consistency. We look for any signs of tone drift. We make sure the system isn't getting too aggressive or too friendly.

Agentforce, with all of Salesforce's resources and all the context from your CRM, still requires human review of draft responses before they go out. We iterate on prompt builder instructions constantly. We have weekly meetings to discuss which campaigns should be included, which should be paused, which messaging approaches are working and which aren't.

This is not "set it and forget it." This is not hands-free AI.

This is ongoing management of an intelligent system that requires domain expertise to oversee.

Now, compare that to Cursor. You install it. You use it. It works. Does it require tweaking? Sometimes. But you don't need to manually review the first 1,000 code suggestions. You don't need 47 iterations to prevent it from suggesting dangerous patterns. You don't need to spend 20 minutes every day babysitting it.

Cursor became a $29.3B company because it works reliably enough that it requires minimal human intervention. It's trustworthy out of the box.

That's the gap.

The Sales Context Problem: Why Coding Is Easier for AI

This deserves its own section because it's important and counterintuitive.

AI is actually easier in coding than in sales. This seems backward, but it's true.

Why? Because coding has clear success metrics. Did the code run? Is it correct? Does it pass tests? Is it performant? These are measurable, objective questions. An AI system can be evaluated against concrete criteria.

Sales is messier. Way messier.

A prospect who doesn't respond to an initial email might not respond because they're not interested. Or they might be interested but swamped this week. Or they're interested but don't make buying decisions until Q2. Or they're not the right contact and it never mattered. Or they would have been perfect, but your email hit their spam folder.

An AI system optimizing for meetings booked might push harder and harder on unqualified prospects just to hit the number. A skilled AE, on the other hand, learns to read situations and knows when to persist and when to move on.

Sales is about understanding human psychology, industry dynamics, company politics, budget cycles, and a thousand contextual factors that vary by situation. Sales is about building relationships. Sales is about knowing when to be direct and when to be subtle. Sales is about understanding not just what someone wants to buy, but why they want it and what could prevent the deal from closing.

Coding has a defined problem space. The code either works or it doesn't. Sales has an infinite problem space. Every prospect is different. Every situation is different. Every objection requires a different response.

That's not an excuse for AI GTM tools to be where they are. It's just context for why closing the gap is harder than it appears.

AI SDR requires significantly more iterations, initial manual reviews, and daily management time compared to Cursor, highlighting its complexity. Estimated data for Cursor.

Current AI GTM Tools: Honest Assessment

Let me be specific about what today's AI GTM tools actually do well and where they fall short.

What they do well:

They generate volume. A system running 24 hours a day, 7 days a week will send more emails, make more calls, and initiate more conversations than any human could. That volume alone is valuable.

They score and classify with reasonable accuracy. If you've trained them on your historical data, they can identify which inbound conversations are worth a human response and which aren't. This saves significant filtering work.

They handle repetitive tasks. Follow-ups, reminders, basic qualification questions, calendar scheduling. These are things humans hate doing and AI handles cleanly.

They improve over time. As you feed them more data about what works, they get better at replicating successful patterns.

They don't get tired. They don't have off days. They don't phone it in on Friday afternoons.

Where they fall short:

They struggle with context and nuance. They might send a perfect email to the wrong person at the wrong time because they don't truly understand the buying situation.

They require constant oversight. You can't trust them completely. You need human review and guidance.

They don't handle objections well. A prospect raises a legitimate concern, and the AI either repeats its pitch or escalates. It doesn't truly engage with the objection.

They optimize for the wrong metrics. They maximize response rates without understanding if those responses are qualified. They book meetings without checking if those meetings will actually close.

They don't understand your product deeply. They can recite features, but they don't understand problems your product solves in nuanced ways. They can't have a true business conversation.

They're not account-aware. Most systems treat each prospect in isolation. They don't understand company structure, decision dynamics, or account strategy.

They can't adapt mid-conversation. If a prospect takes a conversation in an unexpected direction, most systems struggle to follow intelligently. They'll either stick to their script or hand off to a human.

What a Cursor-Level AI GTM Tool Would Actually Look Like

This is the question that keeps me up at night, because it's the question that reveals exactly what's missing.

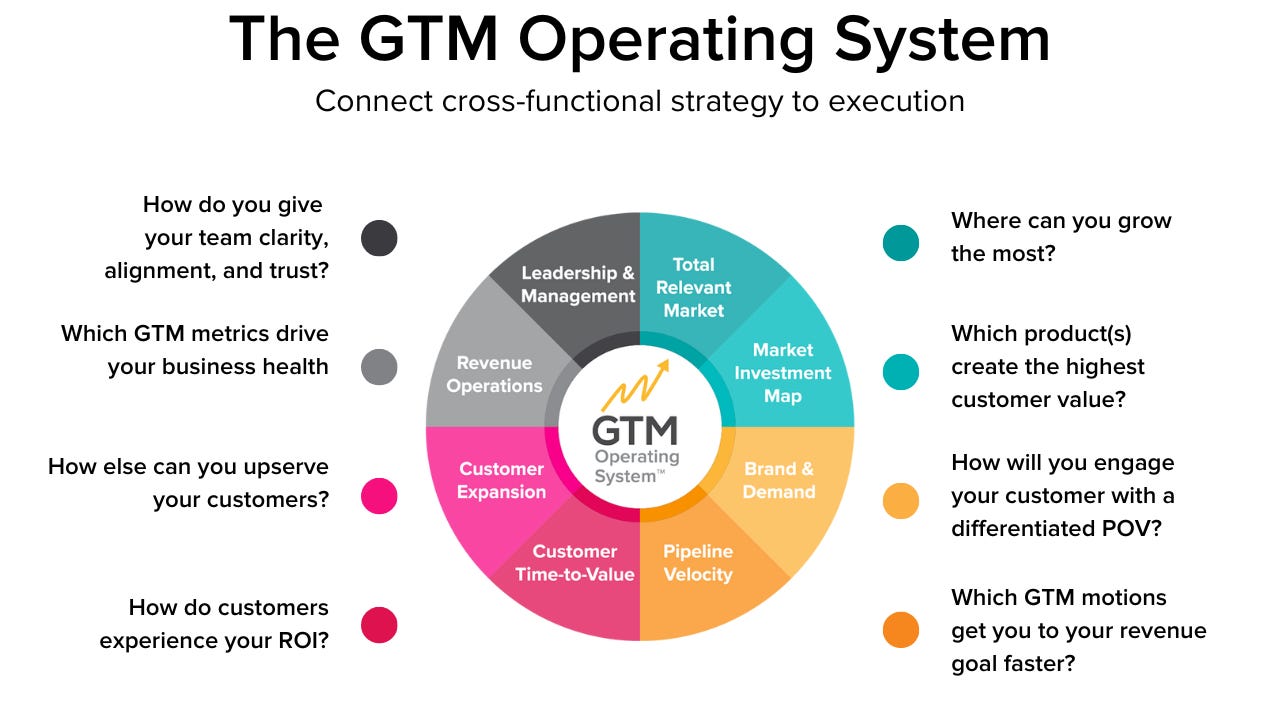

A Cursor-level AI GTM tool wouldn't just personalize emails. It would understand your entire revenue strategy and make decisions in context of that strategy.

It would know your product so deeply that it could explain why your solution matters in ways that resonate with different personas. Not from a template library, but from genuine understanding.

It would read a prospect's LinkedIn profile, their company's recent news, their industry trends, and their role within their organization and synthesize that into a genuine understanding of what matters to them right now.

It would have multi-step reasoning. It wouldn't just decide "send email today." It would reason through: "This prospect just got promoted. They're probably in onboarding mode and won't be receptive. But they'll likely own a project in Q2 that we can help with. Send a brief, relevant message now that demonstrates we understand their situation. Then reach back out in four weeks with specific relevance to their new role."

It would handle objections intelligently. A prospect says "Your pricing is too high." Instead of repeating the value prop, it would reason through: "They have 200 employees. If our ROI is $3 per dollar spent, and they could deploy across 50 of their teams, they'd see payback in 4 months. So the objection isn't really price, it's confidence in ROI. Tailor the conversation to address that specifically."

It would understand account strategy. It wouldn't just think about one contact. It would think about the buying committee, understand the roles and dynamics, and coordinate outreach across multiple stakeholders.

It would learn from what actually closes versus what generates ghosted meetings. An AI system that sees that 40% of meetings it books never happen would get smarter about which types of conversations are worth booking.

It would handle non-linear conversations. A prospect raises something unexpected, and instead of either repeating the script or escaping, the AI would genuinely engage with the new topic and understand how it relates to the core conversation.

It would know when to escalate to a human and why. Not just "engagement didn't happen," but "This is an account executive opportunity because they need custom implementation, which requires deeper conversation."

It would be accountable. If it books a meeting, it would follow up to make sure the human actually showed up and had a productive conversation. It would learn from outcomes and adjust behavior.

It would work across the entire revenue organization. Not just outbound prospecting, but supporting account executives with existing accounts, helping with renewal conversations, identifying expansion opportunities, supporting customer success with at-risk accounts.

That's what Cursor-level would look like.

Do we have any AI GTM tools doing all that? No. Not even close.
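The payback arithmetic in the pricing-objection example above is worth spelling out, because it's exactly the kind of reasoning today's tools skip. Here is a rough sketch under one loud assumption: the $3 returned per dollar spent accrues evenly over 12 months. The text doesn't state the period; 12 months is simply the one that reproduces the four-month figure. The function name is illustrative.

```python
def payback_months(return_per_dollar: float, period_months: float = 12.0) -> float:
    """Months until cumulative return equals spend.

    Assumes the return accrues evenly across `period_months`
    (an assumption made for this sketch, not a figure from the text).
    """
    if return_per_dollar <= 0:
        raise ValueError("return_per_dollar must be positive")
    return period_months / return_per_dollar


print(payback_months(3.0))
```

With a different accrual period or a lumpy return curve the answer changes, which is the point: a system that can reason about the assumptions is engaging with the objection, not repeating the pitch.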

B2B SaaS companies should expect to invest significant time in setting up and managing AI GTM tools, with an estimated 20 hours for initial setup and 7.5 hours weekly for oversight. Estimated data.

The Measurement Problem: Why Growth Feels Different

Here's a subtle but crucial difference. With Cursor, you can feel the improvement immediately. You write some code. Cursor suggests a completion. It's better than what you would have written. It's faster than you would have written it. You use it, trust it, use it more.

The feedback loop is instant. The value is immediately apparent.

With AI GTM tools, the feedback loop is weeks or months. You send 500 emails. You get some responses. Some of those responses turn into conversations. Some of those conversations turn into meetings. Some of those meetings turn into customers. At the end of the quarter, you see the revenue impact.

That's a 12-week feedback loop instead of a 12-second feedback loop.

With that long a feedback loop, it's hard to know if the system is actually working or if you're just seeing natural sales variability. Did the AI system drive better results, or did you just happen to reach out in a good market moment?

That measurement ambiguity makes it hard for AI GTM tools to iterate as fast as AI coding tools can.
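One way to put a number on that ambiguity is a two-proportion z-test on response rates. The sketch below uses only the standard library. The counts in the example are hypothetical, chosen to resemble a 500-email batch, and are not our data.

```python
import math


def two_proportion_z(success_a: int, n_a: int, success_b: int, n_b: int) -> float:
    """z statistic for the difference between two response rates (pooled variance)."""
    p_a, p_b = success_a / n_a, success_b / n_b
    pooled = (success_a + success_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    return (p_a - p_b) / se


def two_sided_p_value(z: float) -> float:
    """Two-sided p-value under the standard normal distribution."""
    return math.erfc(abs(z) / math.sqrt(2))


# Hypothetical batch: 33 replies from 500 AI emails vs 15 from 500 human emails.
z = two_proportion_z(33, 500, 15, 500)
p = two_sided_p_value(z)
print(f"z = {z:.2f}, p = {p:.4f}")
```

Even when a test like this says the reply rates differ, it only covers the top of the funnel. Whether those replies become revenue still takes a quarter to find out.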

Additionally, Cursor's value is highly concentrated. One developer, one tool, measurable productivity improvement. If 30% of your engineering team uses Cursor and is 25% more productive, that's a massive, measurable business impact.

AI GTM tools are more distributed. One AI SDR, one sales team, impact distributed across many factors. Did this AI system drive revenue, or did your product improvements drive revenue? Did this AI system help close deals, or did your human AEs close deals and the AI just provided support?

That distribution of impact makes it harder to demonstrate ROI, which makes it harder for users to commit fully.

The Training and Trust Problem

I mentioned we spent 30 days of daily training just to get our AI outbound system to a reliable place. Let me expand on that because it reveals something important.

Cursor works well immediately. You install it. You use it. It's good. It gets better as you use it, but the baseline is already high.

AI GTM tools require significant setup, training, and hand-holding.

You need to feed them your CRM data. You need to train them on your messaging approach. You need to give them examples of your best emails so they understand your voice. You need to set up rules about who they can reach out to. You need to establish guardrails to prevent them from saying things that damage your brand.

Then you need to monitor them closely. Watch the first 1,000 emails. Review response rates. Check for any tone drift. Make sure they're not getting too aggressive.

Then you need to continuously iterate. As you learn what works, you update the system. As you see what doesn't work, you fix it.

That's a lot of work. And until that work is done, you don't actually trust the system.
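The review-and-guardrail routine described above can be sketched roughly like this. It's a minimal illustration, not how any platform implements it: the 1,000-email threshold comes from the text, while the spot-check rate and the blocked phrases are placeholders I made up.

```python
import random

FULL_REVIEW_COUNT = 1_000  # from the text: the first 1,000 emails all get read
SPOT_CHECK_RATE = 0.05     # assumption: share of later emails sampled for review
BLOCKED_PHRASES = ("guaranteed discount", "lowest price")  # hypothetical examples


def needs_human_review(email_index: int, rng: random.Random,
                       rate: float = SPOT_CHECK_RATE) -> bool:
    """Decide whether a human reads this email before it is sent.

    `email_index` is the zero-based send order.
    """
    if email_index < FULL_REVIEW_COUNT:
        return True
    return rng.random() < rate


def violates_guardrails(body: str) -> bool:
    """Flag emails containing phrases the brand has ruled out."""
    text = body.lower()
    return any(phrase in text for phrase in BLOCKED_PHRASES)
```

A keyword list like this is the crudest possible guardrail. It catches the obvious cases and misses tone drift entirely, which is why the daily human spot-check doesn't go away.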

Compare that to Cursor. You install it. It works. You trust it immediately.

Trust is the key differentiator. When you trust a tool, you use it more. When you use it more, you see more value. When you see more value, you commit deeper.

AI GTM tools don't have that trust yet. They're still tools that you supervise. Cursor is a tool you collaborate with.

AI GTM tools, while valuable, are estimated to lag behind Cursor and Replit in sophistication, reliability, and transformative impact as of 2025.

Why This Gap Exists: The Fundamental Challenges

Let me be clear: this isn't because the people building AI GTM tools are less talented than the people building Cursor. That's not it at all. Many of these founders are incredibly smart and well-funded.

The gap exists because of fundamental differences in the problem space.

Ambiguity in outcomes: With coding, you know when something works. Code either runs or doesn't. With sales, you don't know for months whether something worked.

Complexity of context: Coding exists within a defined system. Your codebase has clear boundaries. Sales exists in the real world with infinite variables.

Moral stakes: There's a difference between an AI making a programming error and an AI being too aggressive in a sales message. The stakes are different. The regulatory environment is different. The brand risk is different.

Feedback loops: Developers use Cursor dozens of times per day. Sales leaders see results from AI GTM tools once per quarter. Short feedback loops enable rapid improvement. Long feedback loops slow everything down.

Cultural acceptance: Everyone wants AI that writes code. Everyone is a bit nervous about AI doing sales work. There's cultural resistance that doesn't exist in engineering.

Talent concentration: The best AI talent is concentrated in large companies and specialized AI firms. Most AI GTM companies are early-stage startups competing for that talent.

Scale dynamics: Cursor had months of runway and years to optimize. Most AI GTM companies are on shorter timelines with more pressure to generate revenue.

These challenges are real. They're not insurmountable, but they explain why the gap exists.

The Path Forward: What Would Accelerate Progress

If you're building an AI GTM tool and you want to close the gap with Cursor, here's what I think matters:

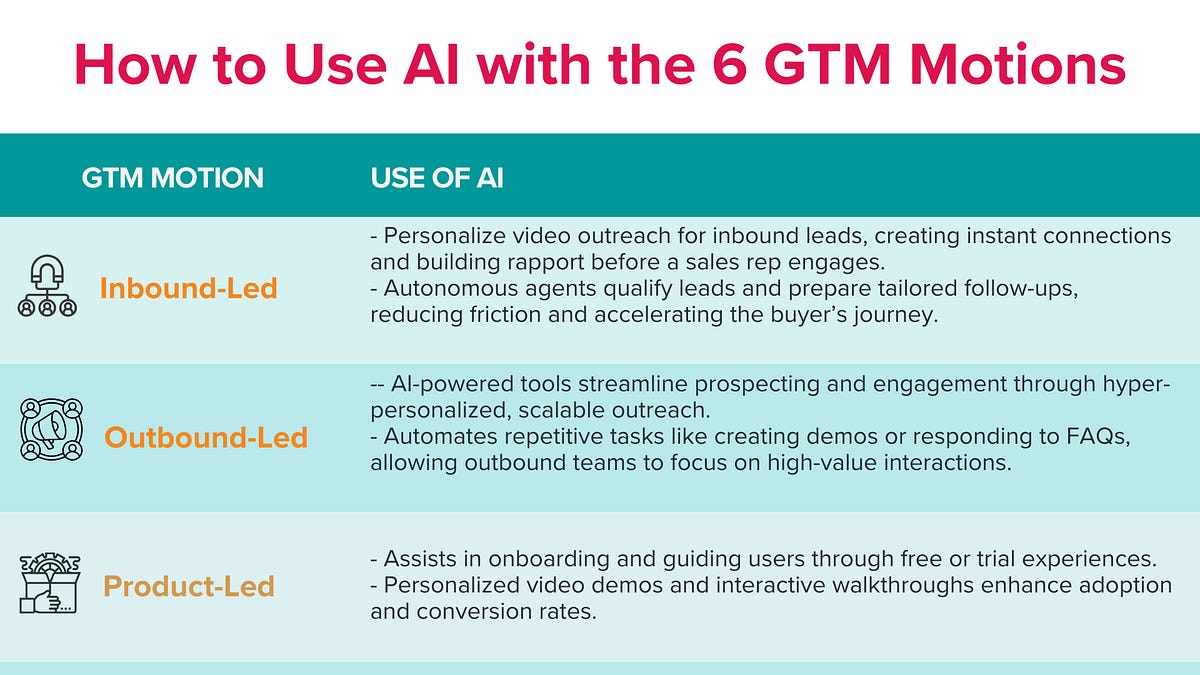

Deep product knowledge integration: Stop treating sales as a generic domain. Work with sales experts to build domain-specific reasoning into your system. Understand not just prospect data, but product-market fit principles, sales methodologies, and objection patterns.

Account-level understanding: Move beyond individual outreach. Build systems that understand buying committees, account dynamics, and company strategy. This is how real AEs think.

Outcome accountability: Track whether meetings booked actually happen, whether conversations are qualified, whether deals close. Don't optimize for vanity metrics. Optimize for real sales outcomes.

Reduced supervision requirements: Iterate until your system works well enough that humans need to spend less than 5 hours per week overseeing it. Until you hit that bar, you haven't really solved the problem.

Industry-specific models: Don't build one AI GTM tool for all industries. Build tools that deeply understand B2B SaaS, or healthcare, or fintech. Specialization beats generalization in immature markets.

Human-AI collaboration: Stop thinking about replacing AEs. Think about augmenting them. The best version of this might be an AE with AI assistance rather than an AI with human backup.

Real learning loops: Build systems that learn from what actually closes, not just what generates engagement. This requires tracking outcomes through the entire sales cycle.

Transparent ROI: Make it obvious to customers whether your system is working. Build dashboards that show impact clearly. Customers should be able to see, without ambiguity, whether this system is driving revenue.
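As a starting point for the outcome accountability and transparent ROI items above, here is a small sketch of an outcome report computed from booked meetings. The `BookedMeeting` fields and the sample records are hypothetical; a real system would pull these from the CRM.

```python
from dataclasses import dataclass


@dataclass
class BookedMeeting:
    held: bool
    qualified: bool = False
    closed_won: bool = False
    revenue: float = 0.0


def outcome_report(meetings: list[BookedMeeting]) -> dict[str, float]:
    """Summarize what happened after booking, relative to meetings booked."""
    booked = len(meetings)
    if booked == 0:
        return {"booked": 0.0, "show_rate": 0.0, "qualified_rate": 0.0,
                "win_rate": 0.0, "revenue": 0.0}
    return {
        "booked": float(booked),
        "show_rate": sum(m.held for m in meetings) / booked,
        "qualified_rate": sum(m.held and m.qualified for m in meetings) / booked,
        "win_rate": sum(m.closed_won for m in meetings) / booked,
        "revenue": sum(m.revenue for m in meetings if m.closed_won),
    }


# Hypothetical sample: five meetings booked, two never happened.
sample = [
    BookedMeeting(held=True, qualified=True, closed_won=True, revenue=10_000.0),
    BookedMeeting(held=True, qualified=True),
    BookedMeeting(held=True),
    BookedMeeting(held=False),
    BookedMeeting(held=False),
]
report = outcome_report(sample)
print(report)
```

Every rate is measured against meetings booked, so a tool that books aggressively and sees many no-shows looks worse here than on an activity dashboard. That's the intended effect.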

What Cursor and Replit Got Right That GTM Tools Should Learn From

Cursor didn't succeed by being a better autocomplete. It succeeded by being a fundamentally different product: a collaborative AI pair programmer.

Replit didn't succeed by being a better code editor. It succeeded by enabling people who can't code to build applications with AI.

Both of them understood that incremental improvements to existing workflows don't drive adoption. Transformational change in how people work drives adoption.

Current AI GTM tools are making incremental improvements to existing sales workflows: better email personalization, better lead scoring, better sequencing. Those are valuable, but they're not transformational.

What would be transformational?

Reducing the skill gap: Making great sales possible for people who aren't naturally gifted at it. Cursor does this for coding. An AI GTM tool that made great outreach possible for average salespeople would be transformational.

Changing the economics: Making one person as productive as three used to be. That's real transformation. That changes unit economics and company structure.

Opening new possibilities: Cursor enabled new coding patterns that weren't possible before. What sales patterns would become possible with better AI?

Building networks: Cursor succeeded in part because developers love it and recommend it to other developers. AI GTM tools need similar network effects.

Making something feel magical: When you first use Cursor, there's a moment where you feel like something changed. That feeling is hard to engineer, but it matters.

Real-World Impact: Where We're Seeing Value Today

I don't want to be completely negative. AI GTM tools are working. They're just not working the way Cursor works.

We sent 20,000 AI messages and generated $2M in direct revenue. That's real impact. But consider what that means: for every dollar spent on the AI GTM stack, we got multiple dollars back in revenue.

Our inbound AI system handled more than 668,000 sessions and qualified leads that turned into $1M in closed revenue in 90 days. The system is working.

Agentforce is closing deals from prospects who would have ghosted and fallen off our radar months ago. That's valuable.

But none of that feels like magic. None of that feels like the difference between struggling and thriving. None of that feels like a 10x improvement in how we do sales.

Compare that to how developers feel about Cursor. Many developers say they can't imagine working without it anymore. Many developers say they're literally more productive with Cursor than they are without it. That's the standard.

We haven't hit that standard yet with AI GTM tools. We're at "valuable." We're not at "can't live without it."

The Timeline: When Will We Get There?

Cursor went from zero to $1B ARR in 24 months. That's fast. But Cursor had advantages: clear success metrics, immediate feedback loops, and deep integration with how developers actually work.

AI GTM tools have longer feedback loops, more complex outcomes, and more distributed impact. They'll take longer to mature.

My prediction: within three years, we'll see the first truly Cursor-level AI GTM tool emerge. It won't be an iteration on current tools. It will be a fundamentally different approach to what an AI sales agent is and does.

That tool will likely:

- Have extremely deep product and market knowledge built in

- Think in terms of accounts and buying committees, not individual prospects

- Require minimal human oversight once deployed (under 5 hours per week)

- Drive measurable 2-3x improvements in territory productivity

- Feel collaborative instead of automated

- Have strong network effects within sales organizations

Will it be built by a current AI GTM company? Maybe. More likely, it will be built by a new company that doesn't have legacy constraints and can rethink the entire approach.

Who builds it will matter less than the fact that it gets built. When someone cracks this, they'll build a $100B company. The market is large enough and the gap is wide enough that whoever solves this gets massive wins.

Why This Matters Beyond AI GTM

The Cursor-versus-AI-GTM gap tells us something important about AI progress in general.

AI is excellent in domains where:

- Success is measurable and fast

- Context is bounded

- Feedback loops are tight

- Skill differentials are large

AI is still immature in domains where:

- Success is ambiguous and slow

- Context is complex and unbounded

- Feedback loops are long

- Judgment matters more than execution

Sales falls into the second category. So do law, strategy consulting, therapy, teaching, and many other high-value domains.

Closing those gaps requires not just better AI, but better ways of measuring impact, better feedback mechanisms, and deeper domain expertise baked into AI systems.

Cursor succeeded because the engineering domain has clear metrics and tight feedback loops. Scaling AI to other domains requires either finding ways to create tighter feedback loops or building AI that's sophisticated enough to handle ambiguity and long feedback loops.

The companies that crack that problem will unlock enormous value.

What This Means for Your Business

If you're running a B2B SaaS company and considering AI GTM tools:

Do it. The tools work. They generate pipeline. They're valuable. But don't expect them to replace your best salespeople. Use them to scale what's already working. Use them to generate volume. Use them to eliminate low-value work.

Expect to spend time setting them up and managing them. Expect to review the first 1,000 interactions manually. Expect to spend 5-10 hours per week on oversight. Plan for that.

Measure outcomes clearly. Don't assume the tool is working because it's generating activity. Track whether that activity turns into qualified conversations, meetings, and revenue.

Get creative about how you use them. Don't just use an AI SDR for your basic outbound. What about using AI for re-engagement campaigns? What about using AI for renewal outreach? What about using AI for expansion conversations? The current tools work better in some use cases than others.

If you're building an AI GTM tool:

The gap is real. You have runway to close it, but not forever. Users can feel the difference between your tool and what Cursor is doing. You need to be explicit about what you're solving and honest about what you're not.

Focus obsessively on outcomes. Not activity. Not engagement. Actual revenue impact. Users will stick with you if they can see, without ambiguity, that your system drives revenue.

Build for the entire account, not just the individual. The next generation of AI GTM tools will think in terms of accounts and buying committees, not individual prospects.

Reduce the supervision burden. Every hour of weekly oversight you eliminate is a major improvement in user experience.

If you're an investor in AI GTM tools:

The category works. Companies are raising money, hitting growth targets, and building valuable businesses. But the ceiling is lower than the AI coding space unless and until someone cracks the fundamental challenges.

Pay attention to which companies are making real progress on measurement, outcome tracking, and reducing supervision burden. Those are the companies that will win in 2027 and beyond.

The biggest opportunity is probably the company that figures out how to build a collaborative AI sales partner that works across the entire revenue organization, from prospecting to renewal to expansion. That's a bigger market than just prospecting.

The Bottom Line

AI GTM tools are valuable today. They're not great yet. But they're not broken.

They're just not at the level of Cursor and Replit yet. That gap represents a real opportunity for someone to build something significantly better. That someone will build a major company.

In the meantime, if you're running a B2B SaaS company, these tools are worth using, worth investing in, and worth managing carefully. They work. They just require more care and feeding than Cursor does.

The future of AI-driven go-to-market work is bright. But we're still in the early days. The real breakthroughs are coming, and when they arrive, they'll feel as transformational as Cursor felt when you first tried it.

We're not there yet. But we're closer than we were last year. And in another year, we'll be even closer.

That's the path. That's the future.

FAQ

What is the difference between current AI GTM tools and AI coding assistants?

Current AI GTM tools are hyper-automation systems that personalize templates, score leads, and sequence outreach. They require significant human oversight and 20-30 minutes of daily review. AI coding assistants like Cursor understand entire codebases, perform multi-step reasoning, and work collaboratively with minimal supervision. The architectural difference is substantial: one is automation with AI mixed in, the other is AI-native with automation as output.

How much human oversight do AI GTM tools actually require in production?

Based on real implementations, most AI GTM tools require 5-10 hours of weekly human oversight, 20-30 minutes of daily spot-checking, and continuous iteration on messaging and targeting. The first 1,000 interactions typically need manual review. Setup and training take 30+ days before the system becomes reliable. This is very different from Cursor, which requires minimal intervention after installation.

Why are AI coding tools more mature than AI GTM tools?

AI coding tools succeed because they have clear success metrics (code runs or doesn't), tight feedback loops (seconds, not months), bounded context (your codebase), and immediate measurability of impact. Sales has ambiguous outcomes, months-long feedback loops, complex unbounded context, and impact distributed across many variables. These fundamental differences make scaling AI in sales significantly harder.

What would a truly excellent AI GTM tool actually do?

A Cursor-level AI GTM tool would understand your entire revenue strategy and account dynamics, perform multi-step reasoning about buying committees and budget cycles, require less than 5 hours of weekly human oversight, drive measurable 2-3x productivity improvements, handle non-linear conversations intelligently, track outcomes through the entire sales cycle, and feel like a collaborative partnership rather than automation.

Should B2B SaaS companies invest in AI GTM tools today?

Yes. These tools generate real pipeline and save significant time on repetitive work. But go in with realistic expectations: they're valuable but require careful setup, ongoing management, and clear outcome measurement. Use them to scale what already works and to eliminate low-value activities, not to replace your best sales talent. Plan for 5-10 hours weekly of oversight and measure revenue impact specifically.

What timeline should we expect for AI GTM tools to reach Cursor-level maturity?

Based on current progress, the first truly transformational AI GTM tool will likely emerge within 2-3 years. It probably won't be an iteration on current tools but rather a fundamentally different approach built by a company without legacy constraints. When that tool arrives, it will likely drive 2-3x productivity improvements and feel as indispensable as Cursor feels to developers today.

How do you actually measure whether an AI GTM tool is working for your business?

Go beyond activity metrics. Track: qualified conversations (not just outreach volume), actual meetings booked and attended, close rates from AI-generated pipeline, average deal size, and revenue attribution. Build a clear funnel showing how activity from the AI tool converts to actual revenue. Most failures to see value come from measuring activity instead of outcomes. Insist on outcome-level measurement.
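To make the funnel concrete, here is a minimal sketch in Python that turns raw stage counts into the outcome metrics listed above. The `FunnelCounts` fields, the `funnel_report` helper, and every number in the example are hypothetical and chosen for illustration only; map them onto whatever your CRM actually records.

```python
from dataclasses import dataclass


@dataclass
class FunnelCounts:
    """Hypothetical counts for one AI-driven channel over one period."""
    messages_sent: int
    qualified_conversations: int
    meetings_booked: int
    meetings_attended: int
    deals_won: int
    revenue: float


def rate(numerator: float, denominator: float) -> float:
    """Return numerator / denominator, or 0.0 when the denominator is zero."""
    return numerator / denominator if denominator else 0.0


def funnel_report(c: FunnelCounts) -> dict:
    """Convert raw counts into stage-to-stage conversion rates and revenue figures."""
    return {
        "qualified_rate": rate(c.qualified_conversations, c.messages_sent),
        "booking_rate": rate(c.meetings_booked, c.qualified_conversations),
        "show_rate": rate(c.meetings_attended, c.meetings_booked),
        "close_rate": rate(c.deals_won, c.meetings_attended),
        "avg_deal_size": rate(c.revenue, c.deals_won),
        "revenue_per_message": rate(c.revenue, c.messages_sent),
    }


# Illustrative numbers only, not results from any real deployment.
example = FunnelCounts(
    messages_sent=5000,
    qualified_conversations=100,
    meetings_booked=50,
    meetings_attended=40,
    deals_won=8,
    revenue=160000.0,
)

report = funnel_report(example)
for name, value in report.items():
    print(f"{name}: {value:.4f}")
```

Reporting each stage as a ratio of the stage before it, rather than as a raw count, is what separates outcome measurement from activity measurement: a jump in messages sent changes nothing in this report unless it also moves conversations, meetings, or revenue.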

What are the biggest limitations of current AI GTM tools?

They struggle with context and nuance, require constant human oversight, don't handle objections well, optimize for the wrong metrics, don't understand your product deeply enough for consultative selling, lack account-level awareness, struggle with non-linear conversations, and have long feedback loops that slow iteration. All of these are solvable, but none has been solved at scale yet.

Key Takeaways

- Cursor achieved $1B+ ARR in 24 months while AI GTM tools remain valuable but immature automation systems, reflecting a fundamental gap in product sophistication

- Current AI SDRs are hyper-automation with AI components, not true agents: they require 20-30 minutes of daily review, 5-10 hours weekly of oversight, and 30+ days of training to function reliably

- Real implementations show value: $1M in 90 days from inbound AI, but these require significant human management and don't feel transformational

- The gap exists due to fundamental differences: AI works best with clear metrics and tight feedback loops (coding), while sales has ambiguous outcomes and long feedback cycles

- The next-generation AI GTM tool will likely understand accounts and buying committees holistically, require minimal oversight, drive 2-3x productivity gains, and create a $100B company when it arrives

