Wikipedia's AI Detection Guide Powers New Humanizer Tool for Claude

Here's something weird that happened in late 2024: a developer created a tool to make AI writing sound more human, and the blueprint came straight from Wikipedia. Not from an AI company. Not from some fancy research lab. From Wikipedians.

Think about that for a second. The people who've been fighting AI slop on Wikipedia for years put together a list of tells—patterns and phrases that scream "I was written by a bot." Then someone took that list, fed it to Claude, and created the "Humanizer" skill. The goal? Help AI outputs dodge detection by avoiding those exact patterns.

This creates a genuinely weird arms race. Wikipedia documents what makes AI writing obvious. AI companies respond by training models to avoid those tells. Then Wikipedia updates its guide. Round and round.

But here's the thing that makes this interesting beyond the cat-and-mouse game: it exposes something fundamental about how we detect AI in the first place. There isn't some magical detector that "just knows." Instead, there are patterns. Habits. Quirks that trained human editors spotted over thousands of edits and corrections.

In this guide, we're going to break down everything about this phenomenon. What patterns does Wikipedia identify? How does the Humanizer actually work? What does this mean for content quality across the internet? And most importantly, why should you care if you're creating anything with AI?

TL;DR

- Wikipedia's editors created a guide documenting common AI writing tells like vague attributions, promotional language, and collaborative phrases

- The Humanizer skill for Claude uses this guide to automatically remove AI detection patterns from generated text

- This sparks an ongoing arms race where detection methods and avoidance techniques constantly evolve

- AI writing patterns are identifiable because they reflect training data biases and common model behaviors

- Human detection still matters because sophisticated AI can now mimic natural writing patterns more effectively

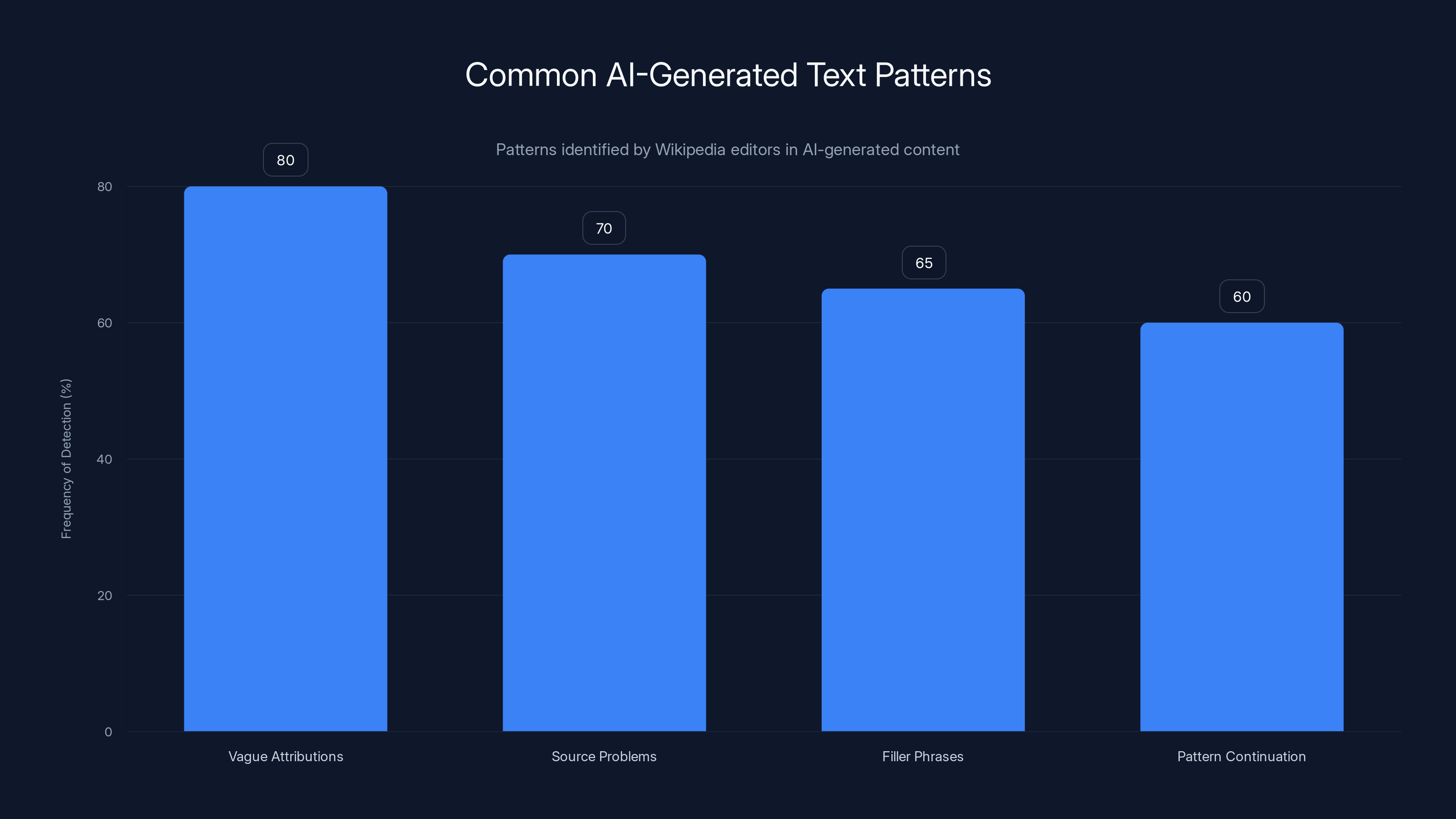

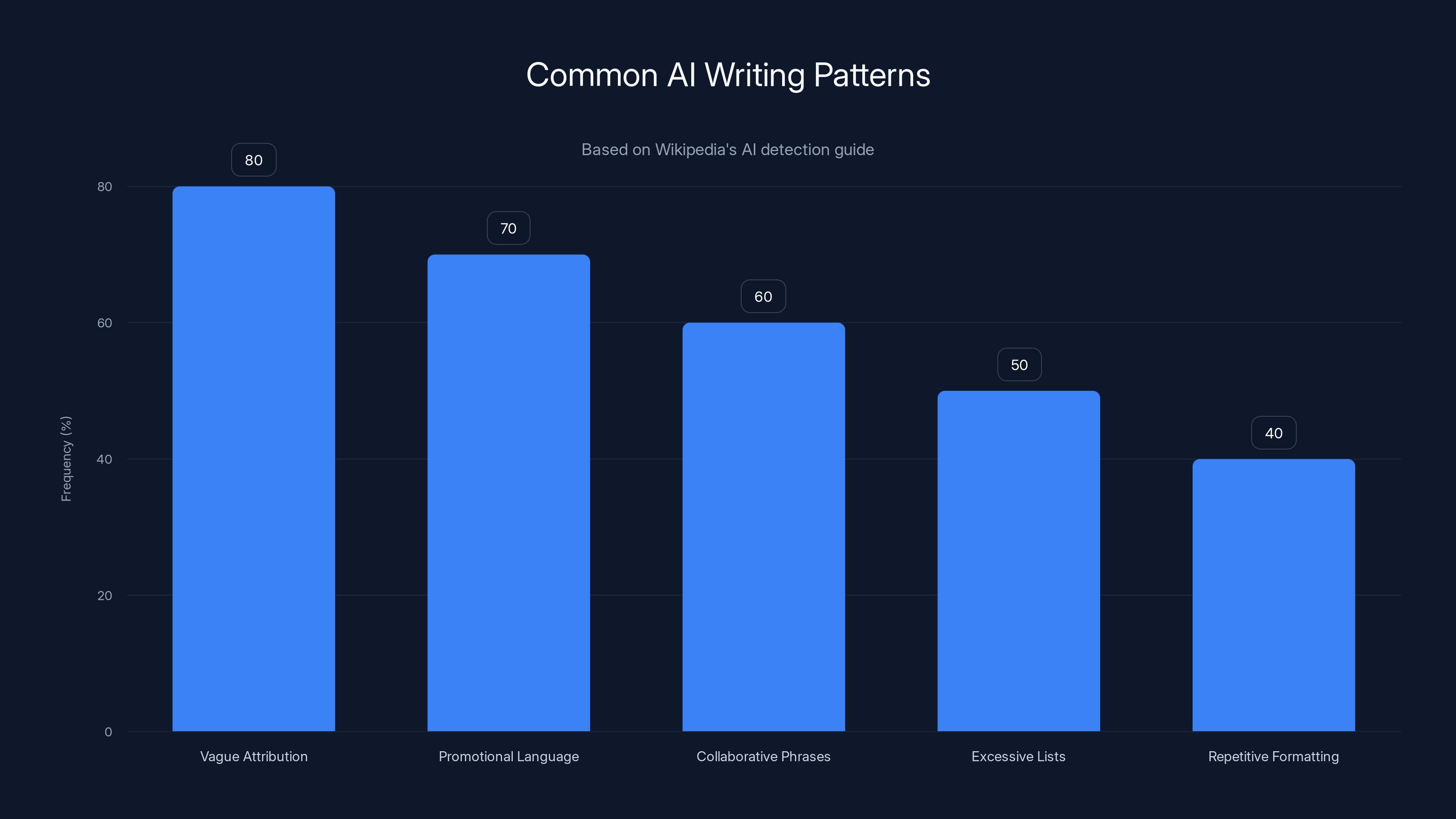

Wikipedia editors frequently identify vague attributions and source problems as key indicators of AI-generated text. Estimated data based on common patterns.

Understanding the Wikipedia AI Detection Guide

Wikipedia didn't set out to become an AI detection authority. They fell into it. Volunteer editors, spending hours refining articles, started noticing patterns in submitted edits that screamed "bot." Not actual bots (those existed too), but AI-generated text that someone copy-pasted hoping nobody would notice.

The patterns were consistent. Remarkably consistent. Over time, thousands of editors collectively identified what those patterns looked like, and someone formalized them into a guide.

Let's talk about what actually shows up on that list. Understanding these patterns is crucial because they represent the accumulated observation of one of the internet's most quality-conscious communities.

Vague Attributions and Source Problems

One of the biggest tells Wikipedia identified: vague attribution. When AI writes something, it tends to use phrases like "Experts agree that..." or "Research suggests..." without pointing to actual experts or actual research.

Human writers do this too, sure. But there's usually a reason. A person might write "Studies show" because they're about to cite something specific in the next sentence. They're setting up context. An AI trained on millions of articles sometimes generates these phrases as filler, padding between actual information.

The difference becomes obvious when you start looking for the citation. A human who wrote "experts believe" probably has someone in mind. An AI? Might just be continuing a pattern it learned from its training data.

Wikipedia's guide specifically flags phrases like:

- "Experts believe it plays a crucial role"

- "Research indicates that"

- "It is widely believed that"

- "Many people think"

All of these are red flags when they're not immediately followed by specific, verifiable sources.
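Here's a rough sketch of how that check could look in code. It is not how Wikipedia's editors or the Humanizer actually work; the phrase list comes from this article, and the "is there a source nearby?" test (a four-digit year, a bracketed reference, or "according to") is a simplifying assumption made up for illustration.

```python
import re

# Phrases mentioned in this article; not an official or complete list.
VAGUE_PHRASES = [
    "experts believe",
    "experts agree",
    "research indicates",
    "research suggests",
    "it is widely believed",
    "many people think",
    "studies show",
]

# Hypothetical hint that a specific source is nearby: a four-digit year,
# a bracketed reference like [3], or the words "according to".
CITATION_HINT = re.compile(r"\b(19|20)\d{2}\b|\[\d+\]|\baccording to\b", re.IGNORECASE)


def split_sentences(text):
    """Naive sentence splitter; good enough for a sketch."""
    return [s.strip() for s in re.split(r"(?<=[.!?])\s+", text) if s.strip()]


def find_unsourced_attributions(text):
    """Return (phrase, sentence) pairs where a vague phrase has no citation
    hint in the same sentence or in the sentence that follows it."""
    sentences = split_sentences(text)
    hits = []
    for i, sentence in enumerate(sentences):
        lowered = sentence.lower()
        following = sentences[i + 1] if i + 1 < len(sentences) else ""
        for phrase in VAGUE_PHRASES:
            if phrase in lowered and not CITATION_HINT.search(sentence + " " + following):
                hits.append((phrase, sentence))
    return hits
```

Looking one sentence ahead is deliberate: it lets a writer say "Studies show" and then name the study in the next sentence without being flagged, which is the legitimate human habit described above.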

Promotional Language and Breathtaking Adjectives

This one's hilarious once you notice it. AI models, trained on massive amounts of internet text, picked up on promotional language because the internet is full of marketing copy. Blog posts use words like "breathtaking," "stunning," "revolutionary," and "groundbreaking" to make mundane things sound exciting.

AI models learned this pattern: when you want to make something sound impressive, add an emotional adjective. The problem? When you're writing an encyclopedia entry about a geographic region, "breathtaking" doesn't belong. It's marketing speak in a place where factual description is required.

Wikipedia's guide calls out specific promotional terms:

- "Breathtaking region"

- "Stunning landscape"

- "Revolutionary approach"

- "Game-changing innovation"

- "Seamless integration"

- "Cutting-edge technology"

These terms have a marketing flavor that stands out in neutral, encyclopedic writing. They're tools for selling something, not describing it.

Collaborative and Helpful Phrases

Here's a weird one: AI loves saying "I hope this helps." Not just once in a while. A lot.

This emerged from training data. Chatbots, customer service responses, and helpful forum posts all end with "I hope this helps!" It's genuine and friendly in those contexts. In a Wikipedia article about medieval history? Completely out of place.

Wikipedia noticed these "helpful" collaborative phrases:

- "I hope this helps!"

- "Let me know if you have questions"

- "Feel free to reach out"

- "Don't hesitate to ask"

- "Please let me know your thoughts"

- "I'm here to assist"

These are perfect for a customer support chat. They're terrible for an encyclopedia. The pattern reveals itself: AI trained on conversational data sometimes generates text that's overly friendly for the context.
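Since these phrases rarely carry information, the simplest fix is to drop the whole sentence. The sketch below assumes a naive sentence splitter and uses only the phrases listed in this article; the function name is made up for illustration.

```python
import re

# Chat-style phrases from the list above, lowercased for matching.
# Straight apostrophes only; curly quotes would need normalizing first.
CHAT_PHRASES = [
    "i hope this helps",
    "let me know if you have",
    "feel free to reach out",
    "don't hesitate to ask",
    "please let me know your thoughts",
    "i'm here to assist",
]


def strip_chat_sentences(text):
    """Remove any sentence that contains one of the chat-style phrases."""
    sentences = re.split(r"(?<=[.!?])\s+", text.strip())
    kept = [
        s for s in sentences
        if s and not any(phrase in s.lower() for phrase in CHAT_PHRASES)
    ]
    return " ".join(kept)
```

Deleting at sentence level keeps the factual sentences around the sign-off untouched, which matters more in an encyclopedia entry than preserving a friendly tone.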

List-Heavy Structure and Repetitive Formatting

Another observation: AI tends to love lists. Not because lists are bad, but because they're safe. They're structured. They're easy to generate.

Human writers use lists strategically. They use them when a concept genuinely needs to be broken down into component parts. AI uses them because they reduce the complexity of generating coherent prose.

Wikipedia editors noticed that AI-generated content often includes:

- Excessive numbered or bulleted lists

- Lists that could easily be converted to prose

- Repeated formatting patterns across different sections

- Lists that don't add clarity (just restate the same information in list format)

The difference is subtle but detectable. A human chooses a list when it serves the reader. AI defaults to lists when it's the path of least resistance.
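One crude way to put a number on "list-heavy" is the share of non-empty lines that are list items. This is a sketch under that assumption only; it says nothing about whether a given list actually serves the reader, which is the judgment editors are really making.

```python
import re

# Matches lines starting with a bullet (-, *, •) or a number like "1." or "2)".
LIST_LINE = re.compile(r"^\s*(?:[-*•]|\d+[.)])\s+")


def list_line_ratio(text):
    """Fraction of non-empty lines that look like list items (0.0 to 1.0)."""
    lines = [line for line in text.splitlines() if line.strip()]
    if not lines:
        return 0.0
    listed = sum(1 for line in lines if LIST_LINE.match(line))
    return listed / len(lines)
```

A high ratio is only a prompt to look closer. A reference table or a checklist will score high for good reasons.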

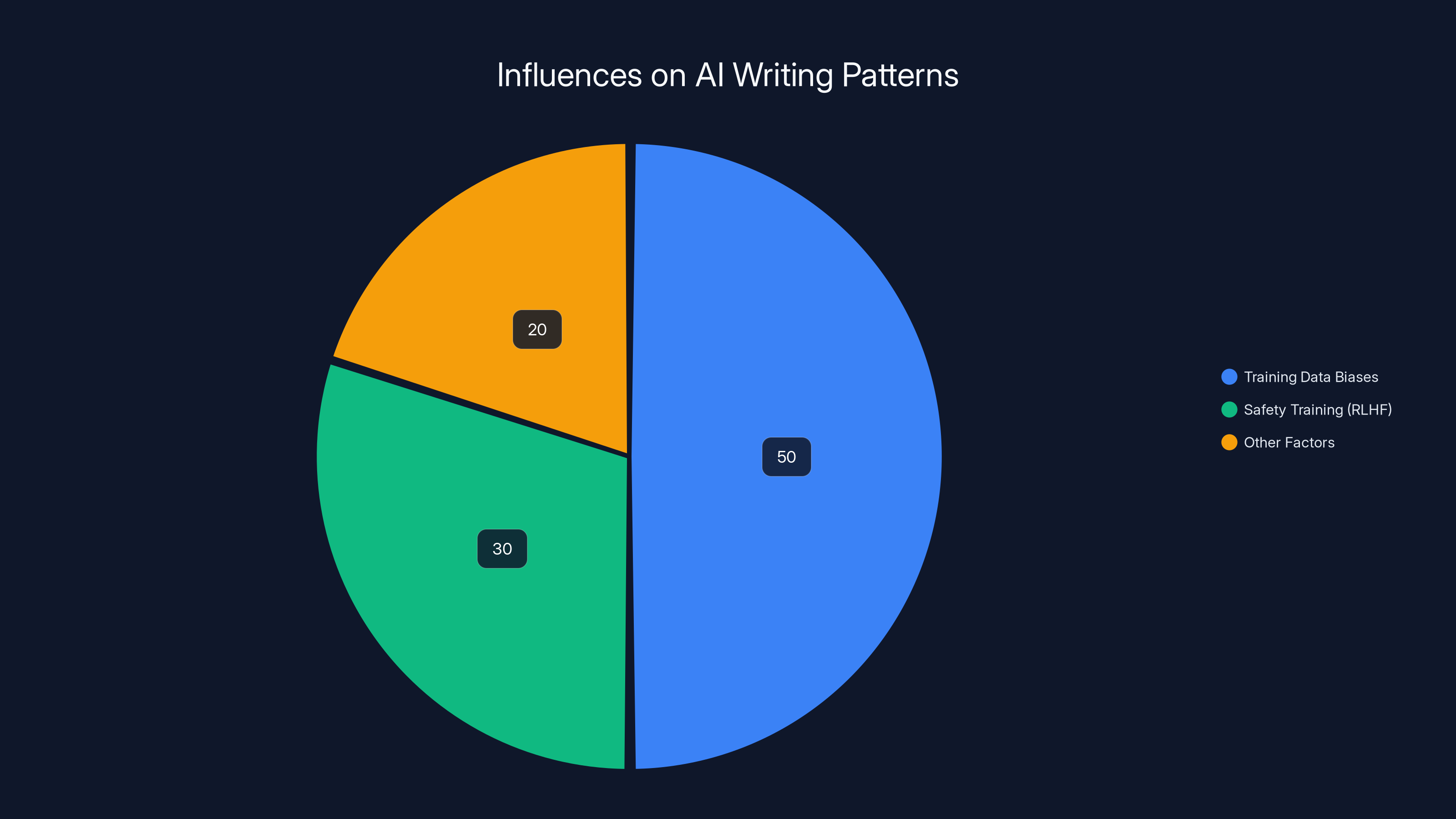

Training data biases and safety training (RLHF) are major influences on AI writing patterns, with training data biases being the most significant. Estimated data.

How the Humanizer Skill Actually Works

Once Wikipedia documented these patterns, developer Siqi Chen built something clever. He took the guide, fed it to Claude, and created a skill that automatically scans text and removes these tells.

The Humanizer is a custom skill for Claude Code. You paste your AI-generated text into it, and it runs through the text searching for the problematic patterns. When it finds them, it rewrites them to sound more natural.

The Pattern Recognition Engine

The Humanizer doesn't work like a traditional spell-checker. It's not looking for exact phrases (though it does some of that). It's looking for patterns that indicate AI writing.

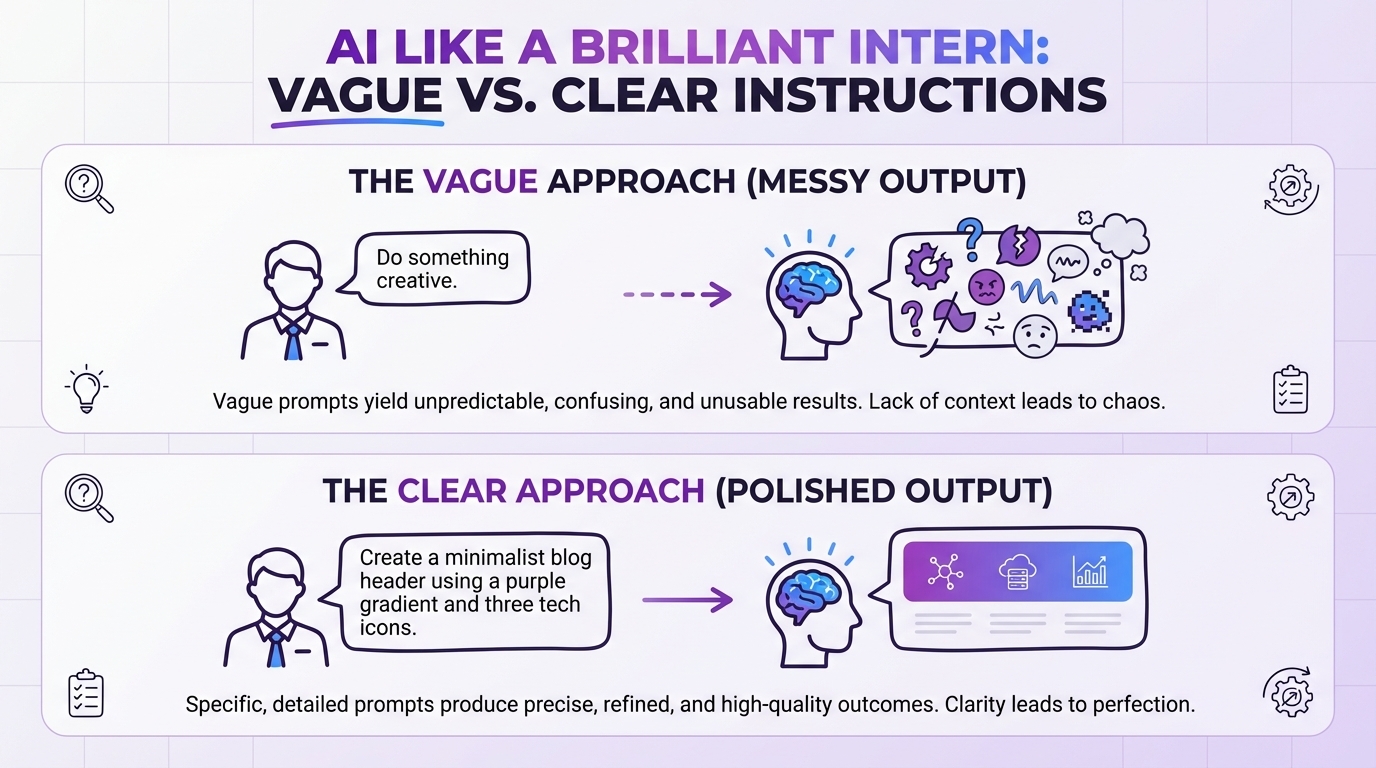

This is important because a simple find-and-replace wouldn't work. "Experts believe" isn't always wrong. Sometimes an expert does believe something, and you want to express that. The Humanizer tries to be smarter than a keyword filter.

It uses Claude's language understanding to evaluate context. Is this phrase serving a real purpose, or is it filler? Does this adjective add information, or is it promotional padding? Would a human writer in this field use this terminology, or is it a training-data artifact?

Real-World Transformation Examples

The GitHub page for Humanizer includes concrete examples. Let's break a few down.

Example 1: Location Description

- Original: "A breathtaking town nestled within the mountainous Gonder region"

- Humanized: "A town in the Gonder region"

Notice what happened. The promotional language ("breathtaking") got removed. The purple prose ("nestled within the mountainous") got stripped down. The result is more factual, more direct, more like how a human would actually describe a place.

Example 2: Attribution

- Original: "Experts believe it plays a crucial role in the ecosystem"

- Humanized: "According to a 2019 study by the Environmental Research Institute, the species plays a crucial role in the ecosystem"

This is the key transformation. The vague attribution got replaced with specific sourcing. Now the claim is actually verifiable. A human editor can check the 2019 study. They can't check "experts."

Example 3: Collaborative Language

- Original: "I hope this helps! Let me know if you have any questions"

- Humanized: "[removed as inappropriate for encyclopedia entry]"

Sometimes the answer isn't to rewrite. It's to delete. These phrases don't belong in formal writing at all.

Continuous Updates from Wikipedia

Here's the clever part: Chen built Humanizer to automatically pull updates from Wikipedia's guide. As the Wikipedia community identifies new AI tells, the Humanizer updates.

This creates a feedback loop. Wikipedia documents patterns. The Humanizer learns them. AI models get better at avoiding those patterns. Wikipedia notices the new patterns. The cycle continues.

It's like infrastructure for an intellectual arms race. Instead of editors and AI companies working separately, an open-source detection guide now powers tools that help models avoid detection. It's chaotic in the best way.

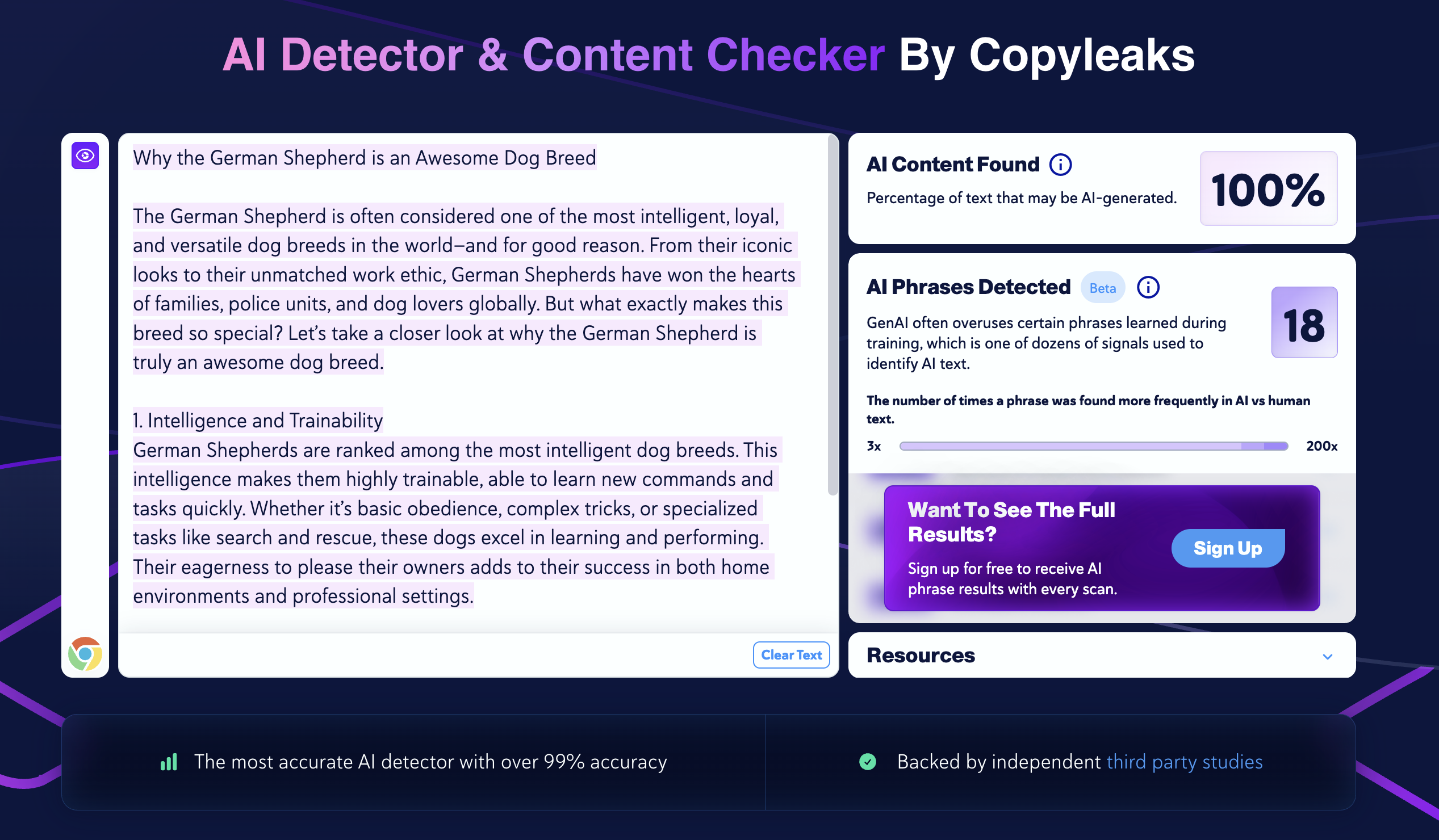

The Cat-and-Mouse Game: Detection vs. Evasion

This is where things get philosophically interesting. We've created tools to detect AI writing. Then we've created tools to help AI avoid those detections. Now detection methods are evolving again.

It's not malicious, exactly. It's just... inevitable. Once a detection method becomes public, people will build against it.

How AI Companies Are Responding

OpenAI already made moves. They noticed that ChatGPT overused em dashes. Like, weirdly frequently. Humans use em dashes sometimes. ChatGPT was using them constantly.

So they fixed it. They adjusted the model's behavior to use em dashes at more human frequencies. That tells you something important: AI companies are actively monitoring what makes their outputs detectable and adjusting accordingly.

Anthropic (the company behind Claude) has taken a related approach. It trains Claude with Constitutional AI, a method that uses written principles to shape model behavior, and training feedback of this kind can also nudge a model toward more natural-sounding prose.

This means the Humanizer might eventually become unnecessary. If Claude gets trained to avoid these patterns natively, why would you need a separate tool? But that's assuming Anthropic decides to do that. They might decide that sometimes sounding AI-like is appropriate. In a chatbot context, you might actually want to know you're talking to an AI.

Wikipedia's Escalation

Wikipedia isn't sitting still. The community that created the detection guide is monitoring how AI evolves. When new patterns emerge, they document them.

There's something beautiful about this. Wikipedia's volunteer editors are basically the immune system of the internet's most important reference work. They spot infections (AI-generated garbage) and catalogue the symptoms.

This is one reason Wikipedia remains relatively clean while Reddit, Twitter, and other platforms are drowning in AI slop. The community has built detection muscles. They've gotten good at spotting the unnatural.

But it won't stay ahead forever. Eventually, AI writing will get good enough that it's not about patterns. It'll be about meaning. And at that point, the battleground shifts entirely.

Estimated data shows that vague attribution and promotional language are the most common AI writing patterns identified by Wikipedia's guide.

Why These Patterns Exist in AI Writing

Understanding detection patterns requires understanding why they exist in the first place. It's not random. It's not a bug in specific models. It's a feature of how language models learn.

Training Data Biases

Language models learn from data. All the text they see becomes part of their understanding of language. If that training data includes a lot of blog posts, marketing copy, and customer service interactions, the model learns those styles.

This is why AI writes promotionally. It learned that style from millions of marketing emails and promotional articles in its training data. When asked to write about something, it sometimes defaults to "make it sound impressive" because that's how a huge chunk of its training data works.

Vague attribution appears because academic papers and news articles often use attribution as a rhetorical device. They say "Studies show" not because they're about to cite studies, but because it lends authority to what follows. The model learned this pattern and replicates it.

Collaborative language appears because the model trained on customer service interactions, forum posts, and chatbot responses. Those contexts demand friendliness. The model learned to be friendly, and sometimes that's inappropriate.

The Safety Training Factor

Modern AI models go through additional training called RLHF (Reinforcement Learning from Human Feedback). This training shapes behavior based on human preferences.

When training these models, companies often reward certain behaviors:

- Being helpful

- Being clear

- Being explicit about uncertainty

- Acknowledging limitations

These are good behaviors in most contexts. But they can create recognizable patterns. If your model was trained to be "always helpful," it'll sometimes be overly helpful. It'll add explanatory phrases it doesn't need. It'll frontload context. It'll be verbose when brevity would be better.

A human writer, in contrast, has learned to adapt. They're helpful when appropriate, concise when that works better, formal in some contexts and casual in others. An AI model trained to be "helpful" across the board creates detectable patterns.

Context and Coherence Issues

Here's a subtle one: long-range coherence. Humans maintain context across entire documents. We remember what we said in paragraph one when we write paragraph ten. We maintain consistent voice, consistent references, consistent logic.

AI models, particularly earlier ones, had limits to their context windows. They couldn't see the whole document at once. They generated text based on what came immediately before. This created a different kind of pattern.

Newer models have larger context windows (Claude 3.5 Sonnet has a 200K-token context window, for example), which helps. But the underlying generation process still creates subtle coherence differences from human writing.

A human plans a document. They decide on structure, themes, and evidence before writing. Then they execute that plan. An AI model generates text token by token, influenced by what came before but not following a deliberate pre-planned structure. The results can sound coherent in small chunks while lacking the deeper coherence of human planning.

Implications for Content Creators and Publishers

If you're generating content with AI, or reviewing content others generated, understanding these patterns matters. Not because you need to hide that AI was involved (you shouldn't), but because these patterns actually indicate quality problems.

Quality Signals Beyond Detection

Here's the thing: the patterns Wikipedia identified aren't just "tells that reveal AI." They're quality issues. Vague attribution is bad writing whether it's AI or human. Promotional language in formal contexts is bad writing. Collaborative phrases in encyclopedia entries are bad writing.

By using tools like Humanizer, you're not just making AI writing less detectable. You're making it better. You're removing genuinely problematic patterns that harm clarity and credibility.

Some of this is obvious. If you're writing a technical document, promotional language hurts credibility. If you're citing research, specific attribution is better than vague attribution. These improvements benefit any writing.

Building a Hybrid Approach

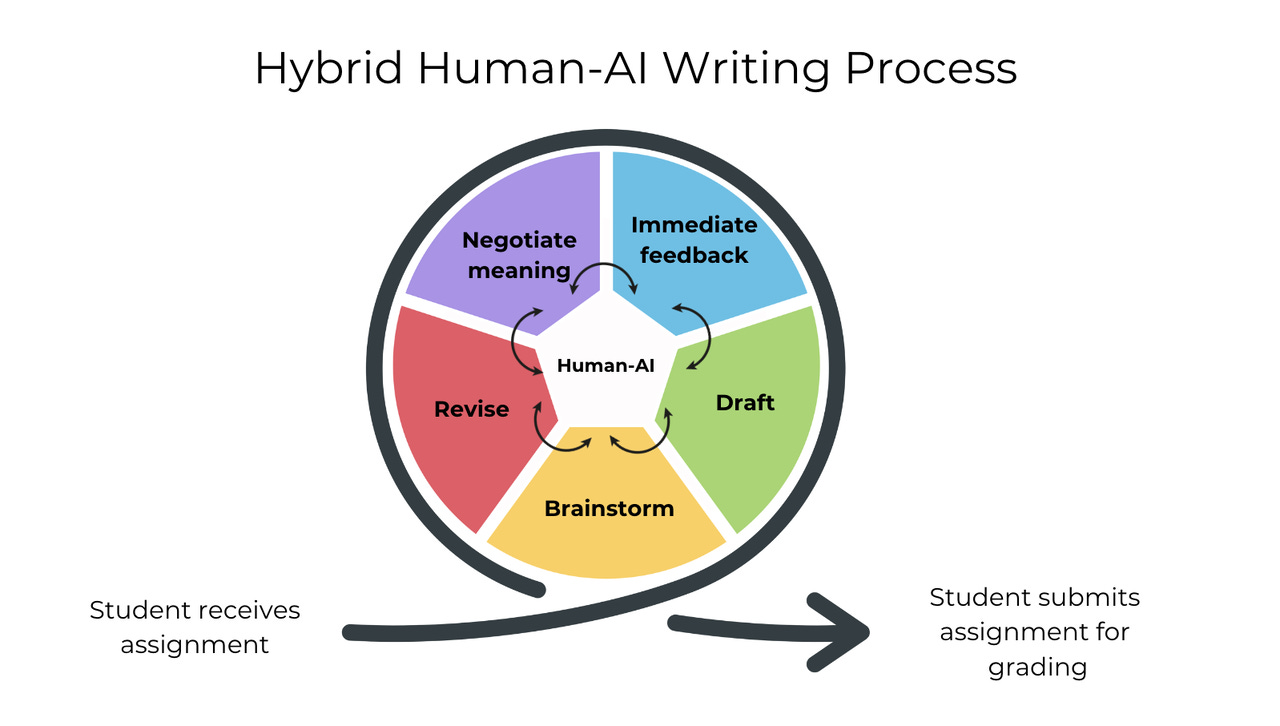

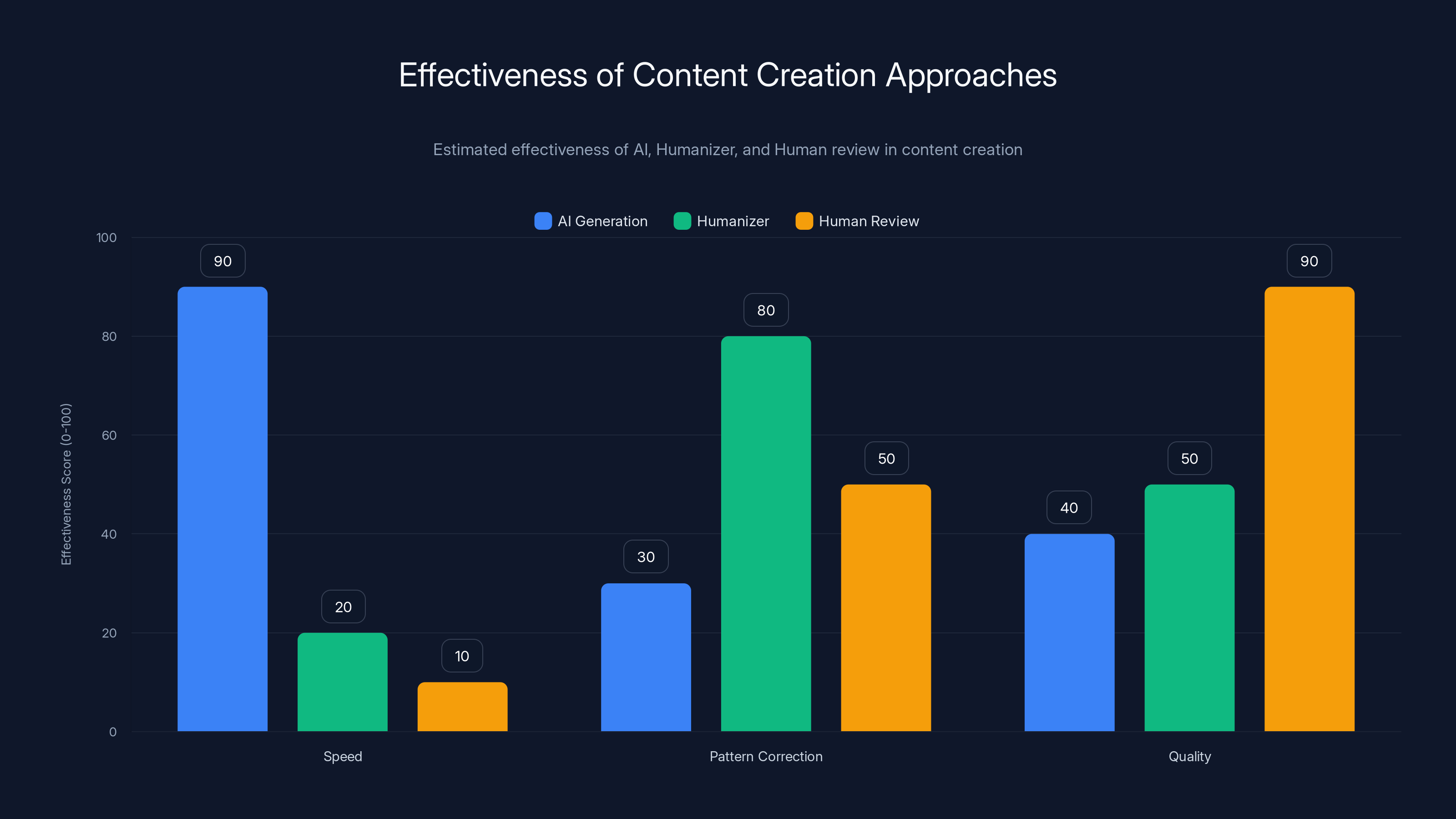

The smartest approach isn't to use Humanizer to sneak AI past detection. It's to use AI-generation plus human-editing plus tools like Humanizer to create better content faster.

Generate with AI. Edit with Humanizer to remove obvious patterns. Then have a human review for accuracy, voice, and context-appropriateness. You get speed from AI generation, pattern-correction from Humanizer, and quality from human judgment.

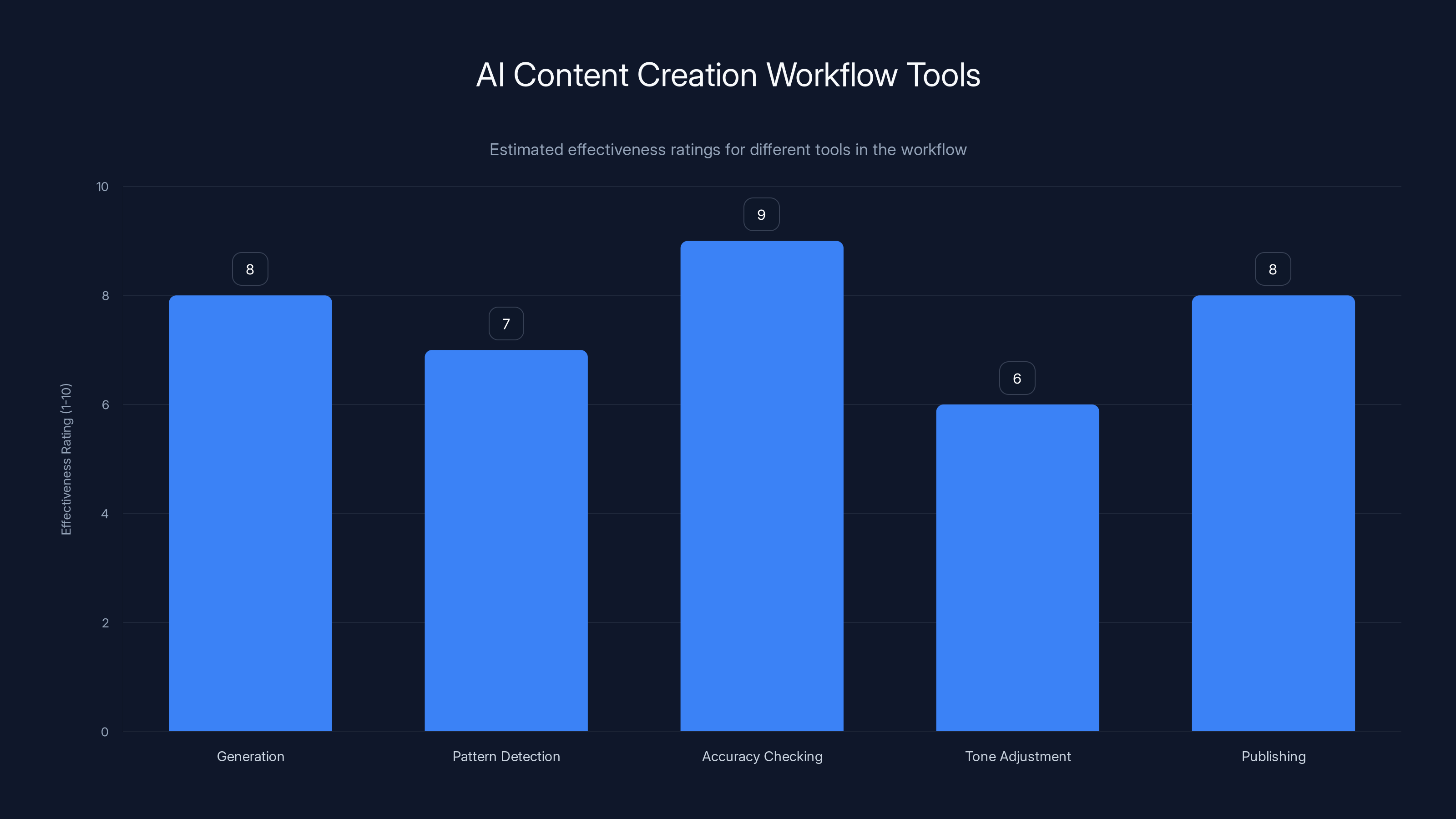

This hybrid approach works because each component does what it's good at:

- AI generation: Fast, broad coverage, structural coherence

- Humanizer: Pattern recognition, consistency checking, obvious-issue removal

- Human review: Accuracy verification, voice alignment, context judgment

Alone, none of these is sufficient. Together, they create strong content.

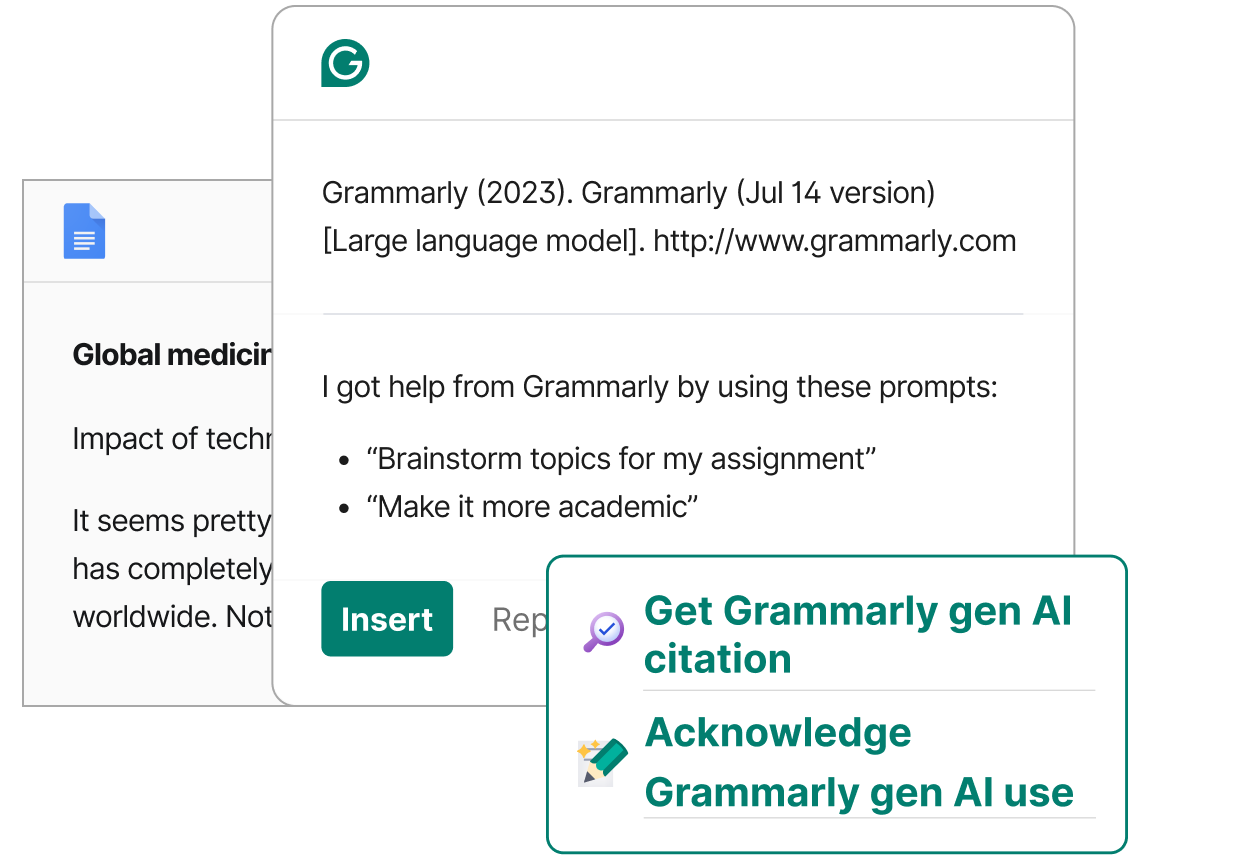

Disclosure and Transparency

Here's where I'll be direct: if you're using AI to generate content, disclosing that matters. Not because AI-generated content is inherently bad (it's not), but because your audience deserves to know how you made what they're reading.

This is especially true for:

- News articles and journalism

- Academic writing

- Medical or health information

- Legal analysis

- Expert commentary

In these contexts, the authorship and methodology matter. A reader needs to know whether they're reading analysis from a domain expert or a language model trained on internet text.

For other content—marketing copy, social media, internal documentation, brainstorming—the transparency needs are different. But transparency itself should be consistent.

Using Humanizer to remove AI detection patterns while not disclosing AI involvement is ethically problematic. Using Humanizer to improve quality while being transparent is just smart practice.

A hybrid approach combining AI generation, Humanizer, and human review maximizes content quality by leveraging the strengths of each method. Estimated data.

The Bigger Picture: AI Writing Quality Over Time

We're living through a weird moment. AI writing is good enough to be useful but still distinctive enough to detect. That won't last.

Current State: Detection is Still Effective

Right now, in 2025, human experts can still identify AI writing reliably. Especially in domains where they have deep knowledge. A physicist reading AI-generated physics writing can spot it. A historian reading AI-generated history writing can spot it. Domain knowledge beats detection tools.

But average users? People reading outside their expertise? They're increasingly fooled. They can't tell AI from human. The patterns don't jump out because they lack context.

This is why Wikipedia's approach works. The editors have domain knowledge (about their specific topics) and collective expertise (from thousands of editors learning to spot these patterns). Together, they can catch AI writing other tools miss.

Likely Evolution: Better AI, Newer Patterns

As AI improves, the patterns will shift. AI won't always write with vague attribution because models will learn to generate specific citations. AI won't always use promotional language because feedback training will reward accuracy over enthusiasm.

But new patterns will emerge. As current tells become rare, detectors will shift focus to more subtle things:

- Unusual word frequency distributions

- Unexpected semantic clustering

- Patterns in how concepts connect

- Unusual metaphor usage

- Changes in sentence rhythm that don't match the writing context

These are harder to detect. They require deeper analysis. They're less obviously "right" or "wrong" (unlike "experts believe" which is just vague).
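To make two of those signals concrete, here is a small sketch that computes relative word frequencies and the spread of sentence lengths (a rough stand-in for "sentence rhythm"). Both measures are illustrative assumptions, not an established detector, and the numbers mean little without a baseline of comparable human writing to compare against.

```python
import re
from collections import Counter
from statistics import pstdev


def word_frequencies(text):
    """Map each word to its share of all words in the text."""
    words = re.findall(r"[a-z']+", text.lower())
    total = len(words)
    if total == 0:
        return {}
    return {word: count / total for word, count in Counter(words).items()}


def sentence_length_spread(text):
    """Population standard deviation of sentence lengths, in words.
    Low values mean the sentences are all about the same length."""
    sentences = [s for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s]
    lengths = [len(s.split()) for s in sentences]
    return pstdev(lengths) if len(lengths) > 1 else 0.0
```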

This escalation is predictable. As long as we want to distinguish AI from human, we'll keep finding new ways to detect it. And as long as we want AI to be useful, we'll keep training it to avoid detection. The dance continues.

Ultimate State: When Detection Becomes Meaningless

Eventually, detection might become impossible. If AI gets good enough at understanding context, maintaining coherence, and genuinely reasoning about topics (not just pattern-matching), it would produce writing indistinguishable from human writing.

We're not there yet. But it's the direction we're heading. And when we get there, detection stops mattering. The question becomes entirely different. It's not "Is this AI?" It's "Is this good?" Is it accurate? Is it helpful? Does it serve the reader?

At that point, the distinction between AI-written and human-written becomes as meaningless as the distinction between a calculator-assisted calculation and a mental-math calculation. The tool matters less than the output.

We might not even care anymore. If AI can write something genuinely helpful and accurate, why would we care whether a human wrote it? We'll care about the result, not the process.

Practical Applications: Where This Matters Now

This isn't all theoretical. The Humanizer tool and the underlying detection patterns have real applications today.

Content Moderation at Scale

Platforms struggling with AI spam can use detection patterns to identify and filter low-quality AI content. This doesn't require perfect detection. Just good enough to catch the worst offenders.

Reddit, for example, could use these patterns as signals in their spam filters. Content that hits multiple AI tells gets flagged for review. This isn't perfect (might catch some human content, miss some AI content), but it raises the bar for AI spam.
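The "hits multiple tells" idea can be sketched as a count of distinct pattern categories with a review threshold. Everything here is hypothetical: the category names, the phrase lists (taken from this article), and the default threshold of two are illustration only, not anything Reddit or another platform is known to use.

```python
# Hypothetical categories, using phrases mentioned in this article.
TELLS = {
    "vague_attribution": [
        "experts believe", "research indicates",
        "it is widely believed", "many people think",
    ],
    "promotional": [
        "breathtaking", "stunning", "revolutionary",
        "game-changing", "cutting-edge",
    ],
    "chat_residue": [
        "i hope this helps", "let me know if you have",
        "feel free to reach out",
    ],
}


def tell_categories(text):
    """Return the set of categories with at least one matching phrase."""
    lowered = text.lower()
    return {
        name for name, phrases in TELLS.items()
        if any(phrase in lowered for phrase in phrases)
    }


def needs_review(text, min_categories=2):
    """Flag text for human review when it hits several distinct categories."""
    return len(tell_categories(text)) >= min_categories
```

Counting categories rather than raw matches means a human post that calls one sunset "stunning" stays below the threshold, while text that combines marketing adjectives, unsourced claims, and chat sign-offs gets a second look.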

This matters because low-effort AI spam currently infests most platforms. It's filling Reddit, Twitter, and Medium with worthless content. Detection patterns help platforms push back.

Editorial and Publishing Workflows

Publications can integrate pattern-detection into their editorial workflows. Not as a final authority, but as a signal. If an article hits many AI tells, an editor reviews it more carefully.

This is already happening. Some publications are experimenting with AI-detection tools. They're treating them as quality signals, not absolute blockers.

The best use case: catch unintentional AI patterns. A human writer might unconsciously adopt AI patterns after seeing a lot of AI-generated content. A tool flags it. The writer reviews and improves. Quality goes up.

Student Work and Academic Integrity

Universities are exploring these tools for academic integrity. Not to catch every AI use (that's probably impossible), but to catch essays that are entirely AI-generated.

A tool like Humanizer cuts the other way here. If a student generates an essay with AI and then runs it through Humanizer, the obvious tells are gone. But a skilled instructor might still notice that something feels off. The patterns shift, but the overall character of AI writing might persist.

The long-term answer probably isn't detection tools. It's changed assignment design. Stop assigning essays that can be easily replaced with AI. Assign projects that require reasoning, judgment, and understanding that students must demonstrate through their work.

Internal Documentation and Knowledge Management

Companies use AI to generate first drafts of documentation, internal guides, and knowledge bases. Running these through pattern-removal tools improves quality before human review.

This is a smart use case. Fast generation, quality improvement, then human verification. The hybrid approach works well here.

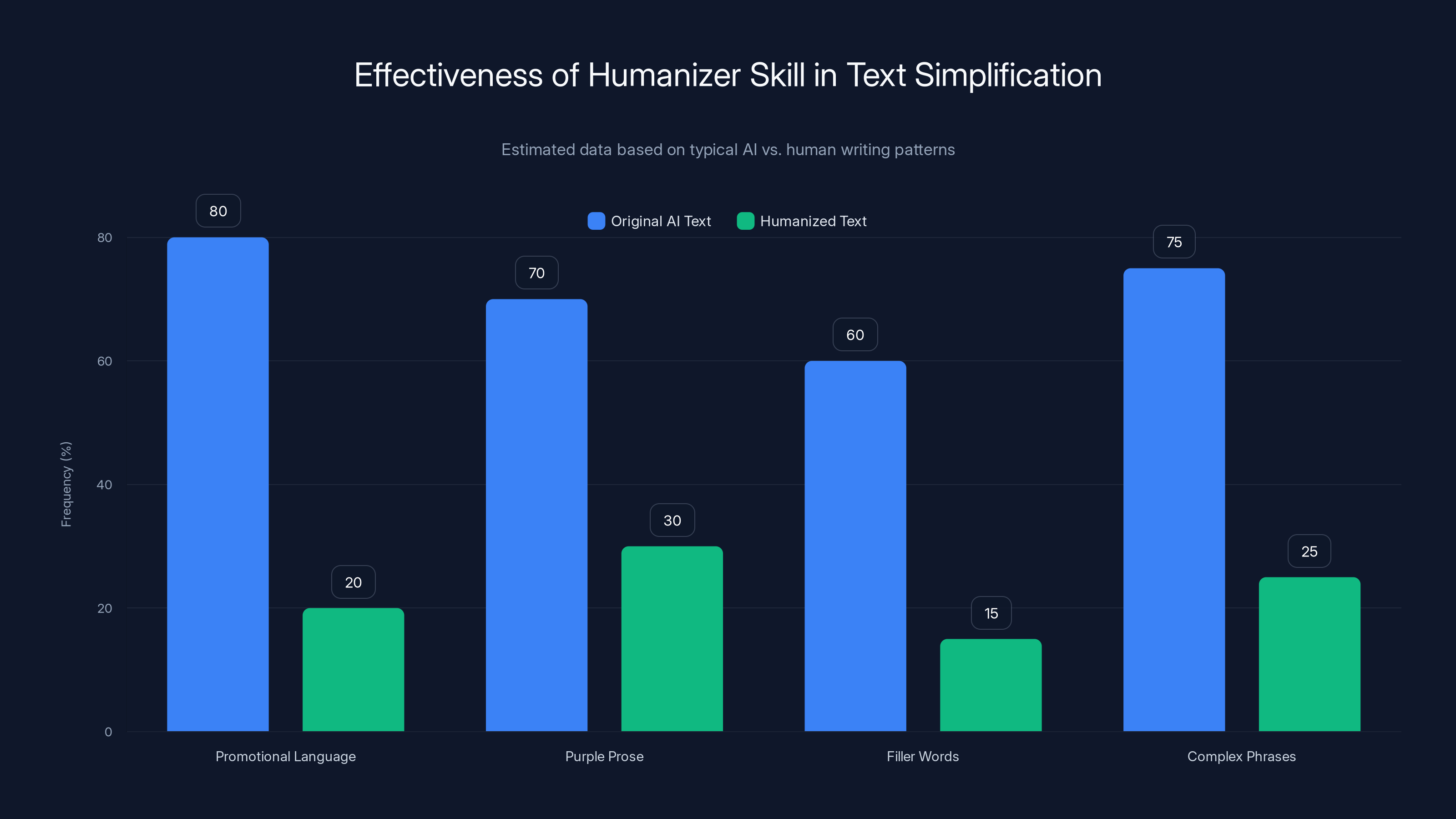

The Humanizer skill effectively reduces promotional language, purple prose, filler words, and complex phrases in AI-generated text, making it more direct and human-like. Estimated data based on typical patterns.

Ethical Considerations and the Broader Debate

Let's get into the uncomfortable part. The Humanizer tool, while interesting technically, sits in ethically murky territory.

The Transparency Problem

If someone uses Humanizer to remove AI detection patterns and then publishes the result as human-written work, that's deceptive. The reader deserves to know how the content was made.

But here's the complexity: what if the writing is good? What if it's accurate and helpful? Does deception become okay if the output is valuable?

Most people would say no. Deception about authorship matters even if the content is good, because authorship affects trust. It affects how we weigh the information. An essay written by a domain expert should be weighed differently than an essay generated by a language model, even if the text is identical.

The Quality vs. Honesty Tension

There's a real tension in content creation:

- You want to improve quality (using tools like Humanizer)

- You want to be transparent about your process (disclosing AI use)

But improving quality by removing detection patterns while staying silent about AI use creates dishonesty.

The solution: improve quality AND disclose. Use Humanizer, use editing, use human review. Then say "This piece was generated with AI assistance and human review." Transparency and quality aren't mutually exclusive.

The Democratization Angle

One counterargument: Humanizer democratizes content creation. Not everyone has time to write everything themselves. AI speeds things up. Humanizer improves what AI generates.

Small creators, non-native English speakers, busy professionals—they can use these tools to create better content faster. That's genuinely valuable.

But it only remains valuable if it's honest. "I used AI to write faster, then improved it" is different from "I wrote this myself." The first is transparent. The second is a lie.

The Future of AI Detection and Pattern Recognition

Where does this go? What happens in the next few years?

More Sophisticated Detection Methods

As current patterns become obsolete, detection will evolve. Researchers are already exploring:

- Statistical anomalies in language patterns

- Semantic coherence across document-length scales

- Unusual combinations of concepts

- Patterns in how arguments structure themselves

These are harder to teach an AI to avoid because they're less obvious. You can tell a model "Don't say 'breathtaking'" but you can't easily tell it "Write with better long-range semantic coherence" without fundamentally improving how it reasons.

AI Companies Getting Ahead

Companies like Anthropic and OpenAI will likely integrate quality improvements into their base models. Why wait for users to post-process? Build the improvements in.

Claude, GPT-4, and other advanced models already use better training methods that reduce obvious tells. As they improve, the need for Humanizer-like tools decreases.

But there will always be older models and free models with more obvious patterns. The pattern continues: detect, improve, evolve.

A World Beyond Detection

Eventually, we might stop trying to detect whether something is AI. Instead, we verify whether it's accurate. We check sources. We evaluate reasoning. We don't care how it was made; we care whether it's true.

This shift from "Is it AI?" to "Is it accurate?" is probably inevitable. And it's probably healthy. It forces us to think about actual quality rather than authorship method.

Estimated effectiveness ratings show that accuracy checking is crucial, with a high rating of 9, while tone adjustment is slightly lower at 6. Estimated data.

Implementing These Insights in Your Workflow

If you're creating content and want to use AI effectively while maintaining quality and honesty, here's a practical framework.

The Quality-Honesty Framework

Step 1: Generate. Use AI to create first drafts. This is where AI excels. Speed. Coverage. Structural coherence.

Step 2: Detect and Improve. Run the output through pattern-detection tools. Identify AI tells. Improve them. This includes Humanizer-type tools but also basic editing (removing promotional language, tightening vague attribution, checking for collaborative phrases).

Step 3: Verify Accuracy. Have a human with domain knowledge check facts. Verify citations. Ensure claims are accurate. This is where human expertise is irreplaceable.

Step 4: Adjust Voice and Tone. Make sure the piece matches your voice and the context. AI-generated content often sounds generic. Your voice should shine through.

Step 5: Disclose. Tell your reader what you did. "This piece was researched and drafted with AI assistance, then edited and fact-checked by [your name]." Honesty builds trust.

Step 6: Publish. Post the improved, accurate, honest piece.

This workflow takes longer than just generating and posting. But it's faster than writing from scratch. And it produces content you can stand behind.

Tools That Work Together

You don't need just Humanizer. You need a toolkit:

For generation: Claude, ChatGPT, Perplexity, or other capable models

For pattern detection: Humanizer for the Wikipedia-based patterns, but also basic reading comprehension (does this sound natural?)

For accuracy checking: Domain knowledge, citation verification, fact-checking databases

For tone adjustment: Your own voice, style guides, examples of desired tone

For publishing: Standard editorial review, any platform-specific content policies

None of these tools alone is sufficient. Together, they work.

What This Means for Different Audiences

Different people should care about different aspects of this story.

For Content Creators

You can use AI more confidently if you understand the quality issues it creates. Use detection patterns as a quality checklist. Remove vague attribution (it's bad anyway). Cut promotional language (it hurts credibility). Be specific when possible.

Then disclose honestly. Your credibility matters more than hiding your process.

For Readers and Consumers

Be skeptical when you can't identify sources. If an article cites specific research, you can check it. If it says "experts believe," that's a red flag. Check for vague attribution. It indicates either poor writing or AI writing.

More importantly, evaluate actual content. Is it accurate? Can you verify it? Does the reasoning hold? These questions matter more than whether AI was involved.

For Platforms and Moderators

You can use these patterns as signals in your content systems. Not as definitive detectors, but as indicators. Combine them with other signals. But don't rely solely on pattern detection.

Build systems that encourage transparency. Make it easy for creators to disclose AI use. Build in friction against undisclosed AI use.

For Researchers and Academics

This situation is fascinating and worth studying. How do detection patterns evolve? How do they affect human perception? What's the optimal balance between detecting AI and accepting its usefulness?

These questions need serious research. The arms race between detection and avoidance deserves academic attention.

For AI Companies

You have an opportunity to lead on this. Build quality improvements into your models. Make AI writing better natively, not because it's trying to avoid detection, but because it's genuinely better.

Support transparent tools. Help creators understand what your models do well and poorly. Encourage disclosure instead of hiding AI involvement.

The Wikipedia Community as an Early Warning System

One overlooked aspect of this story: Wikipedia's role as an early warning system for AI problems.

Wikipedia got hit by AI spam early and often. Thousands of low-quality AI-generated edits. The community had to deal with it. They learned to detect it. They documented what they learned.

This makes Wikipedia valuable for everyone. The patterns they identified help other platforms. The Humanizer tool brings that knowledge to creators and AI companies.

It's a form of collective intelligence. The Wikipedia community observed a problem. They solved it. They shared the solution. Others built on it.

This is how good things happen online. A community identifies a problem, solves it, and shares openly. Then others improve on the solution. Then the original community adapts to the improvements.

It's slow sometimes. It's messy. But it often works better than centralized solutions.

What You Should Do Right Now

If this topic interests you, here are concrete next steps.

Understand the Patterns

Read the actual Wikipedia guide on AI detection, the project page titled "Signs of AI writing." See the patterns yourself. When you read something, ask: does this use vague attribution? Promotional language? Collaborative phrases? You'll be surprised how often you spot these patterns in all kinds of writing.

Evaluate Your Own Writing

If you create content, read your own work looking for these patterns. Don't ask "Is it AI?" Ask "Are these quality issues present?" If they are, fix them.
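One way to make that self-check repeatable is a small script that flags sentences by category. The sketch below is a minimal Python example under stated assumptions: the `CHECKLIST` phrases are illustrative (only "experts believe," "breathtaking," and "I hope this helps" appear in this article; the rest are placeholders), and the sentence splitter is deliberately naive.

```python
import re

# Illustrative phrases per category; a real checklist would be longer and hand-tuned.
CHECKLIST = {
    "vague attribution": [r"\bexperts believe\b", r"\bstudies show\b"],
    "promotional language": [r"\bbreathtaking\b", r"\bworld-class\b"],
    "collaborative phrase": [r"\bi hope this helps\b", r"\blet me know if\b"],
}

def audit(text: str) -> list[tuple[str, str]]:
    """Return (category, sentence) pairs for every sentence that trips a checklist item."""
    # Naive split on ., !, or ? followed by whitespace; good enough for a sketch.
    sentences = re.split(r"(?<=[.!?])\s+", text.strip())
    findings = []
    for sentence in sentences:
        for category, patterns in CHECKLIST.items():
            if any(re.search(p, sentence, re.IGNORECASE) for p in patterns):
                findings.append((category, sentence))
    return findings

draft = (
    "Experts believe the old town is worth a visit. "
    "The cathedral was completed in 1480. "
    "I hope this helps!"
)
for category, sentence in audit(draft):
    print(f"{category}: {sentence}")
```

A flagged sentence is a prompt to reread it, not proof of anything. The fix is usually to name the source or cut the phrase.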

Consider Your Process

If you use AI in your workflow, think about disclosure. How are you using AI? What value does it add? What could you improve? Are you being honest with your audience about your process?

Try Pattern-Checking Tools

Experiment with Humanizer or similar tools. Run some of your writing through them. See what gets flagged or rewritten. Understand why. Use that feedback to improve.

Contribute to Solutions

If you're interested in this space, there are ways to help. Contribute to open-source detection tools. Help platforms build better content systems. Research how people perceive AI-generated content. There's real work to be done.

Conclusion: Living in the Detection Arms Race

We're in a weird moment in internet history. We can detect AI writing. But that detection isn't perfect. And it's constantly evolving. Tools like Humanizer accelerate the evolution.

This isn't necessarily bad. It's pushing AI to be better. It's pushing humans to think more carefully about content quality. It's creating tools that help creators improve their work faster.

The key is honesty. Use AI. Improve what it creates. Use tools like Humanizer. But tell your audience what you did. Transparency plus quality equals trust.

Wikipedia's guide—and the Humanizer tool built on it—reveals something important: a lot of what we think of as "AI-ness" is just poor writing. Vague attribution is bad. Promotional language is bad. Collaborative phrases in formal contexts are bad.

By removing these patterns, you're not just avoiding detection. You're improving quality. That's worth doing, regardless of whether anyone knows the writing involved AI.

The arms race between detection and evasion will continue. New patterns will emerge. Better detection methods will develop. AI models will improve to avoid those methods.

But underneath it all, the fundamentals stay the same. Good writing is clear. It's accurate. It's honest. It's appropriately toned for its context. Whether it's written by a human, generated by AI, or some combination doesn't change those requirements.

Focus on those fundamentals. Disclose your process. Verify accuracy. Improve quality. And let the detection arms race play out in the background.

That's how you create work you can be proud of.

FAQ

What is the Wikipedia AI detection guide?

The Wikipedia AI detection guide is a list of patterns and tells that Wikipedia's volunteer editors compiled to help identify AI-generated content. These include vague attributions, promotional language like "breathtaking," collaborative phrases like "I hope this helps," excessive lists, and repetitive formatting. The guide emerged from thousands of editors observing AI-generated edit submissions and noticing consistent patterns.

How does the Humanizer skill for Claude work?

The Humanizer is a custom skill for Claude Code that scans text for AI detection patterns identified in Wikipedia's guide. It uses Claude's language understanding to evaluate context and identify whether patterns are genuinely problematic or appropriate for the context. When it finds issues, it rewrites them to sound more natural. The tool automatically updates when Wikipedia's guide is updated, staying current with newly identified patterns.

What are the benefits of understanding AI writing patterns?

Understanding these patterns helps you improve any writing, whether AI-generated or human-written. Vague attribution is bad writing regardless of source. Promotional language in formal contexts hurts credibility. Specific sources are better than vague ones. By understanding these patterns, you can identify and fix quality issues in your own writing, evaluate sources more critically when reading, and use AI tools more effectively while maintaining transparency with your audience.

Why does AI writing have these patterns?

AI writing exhibits these patterns because language models train on large amounts of internet text that includes marketing copy, customer service interactions, blog posts, and other content types. Models learn those styles and sometimes default to them. Additionally, fine-tuning for helpfulness and safety pushes models toward explicit, accommodating phrasing, which can create recognizable patterns. These aren't bugs but natural consequences of how language models learn and how they're trained to behave.

Should I use Humanizer to hide that my content is AI-generated?

No. Using Humanizer to remove AI detection patterns while not disclosing AI involvement is ethically problematic. Readers deserve to know how content was made because authorship affects credibility and how information should be weighted. Instead, use Humanizer to improve quality, then disclose transparently: "This was drafted with AI assistance and edited for accuracy." Honesty builds trust better than deception.

How does detection of AI writing work beyond just patterns?

Patterns are the most obvious detection method, but detection can also draw on deeper analysis: unusual word frequency distributions, unexpected semantic clustering, changes in writing rhythm that don't match context, and patterns in how arguments are structured. Domain experts can often detect AI writing in their field even without obvious pattern tells. As AI improves, detection will likely focus more on accuracy and reasoning quality than on authorship method.

What should I do if I use AI to generate content?

Follow a quality-honesty framework: (1) Generate with AI for speed and coverage, (2) Detect and improve patterns using tools or careful editing, (3) Verify accuracy with human expertise, (4) Adjust voice and tone to match your style, (5) Disclose your process transparently, (6) Publish with confidence. This hybrid approach leverages AI's speed while maintaining quality and credibility through human oversight.

Will AI writing detection always be necessary?

Detection might eventually become less important. As AI improves and gets trained to avoid current tells, new patterns will emerge, creating an arms race. Eventually, AI writing might become indistinguishable from human writing, making the question "Is this AI?" less relevant than "Is this accurate and well-reasoned?" At that point, we'll evaluate content on quality rather than authorship method.

How does Wikipedia stay relatively clean despite AI spam?

Wikipedia's volunteer editors have developed pattern-recognition skills through thousands of edits. The community collectively identified AI writing tells and built detection methods. Additionally, the edit oversight system means suspicious edits get reviewed quickly. This combination of community expertise, formal documentation of detection patterns, and active moderation keeps Wikipedia cleaner than other platforms where AI spam runs rampant.

What does this mean for academic integrity?

Detection tools are at best a short-term answer. Patterns can be removed with tools like Humanizer, although skilled instructors might still notice other subtle signs in entirely AI-generated work. The longer-term answer is probably better assignment design. Instead of essays that AI can produce, assign projects that require students to demonstrate reasoning and judgment. Emphasis should shift from detection to designing work that requires genuine student engagement.


Key Takeaways

- Wikipedia's volunteer editors created a documented guide of AI writing patterns including vague attribution, promotional language, and collaborative phrases

- The Humanizer tool uses Claude to automatically identify and rewrite these patterns, creating a continuous feedback loop with Wikipedia's evolving guide

- AI writing patterns exist due to training data biases, safety training effects, and the model's generation methodology, not intentional deception

- Detection methods and evasion techniques form an ongoing arms race that may eventually matter less as AI writing quality improves

- Content creators should use AI plus pattern detection plus human review, then disclose transparently rather than hiding AI involvement

